Smoke Object Segmentation and the Dynamic Growth Feature Model for Video-Based Smoke Detection Systems

Abstract

1. Introduction

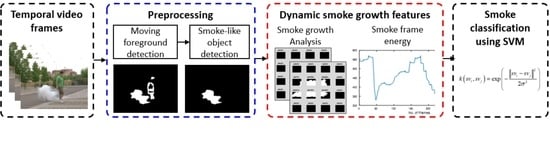

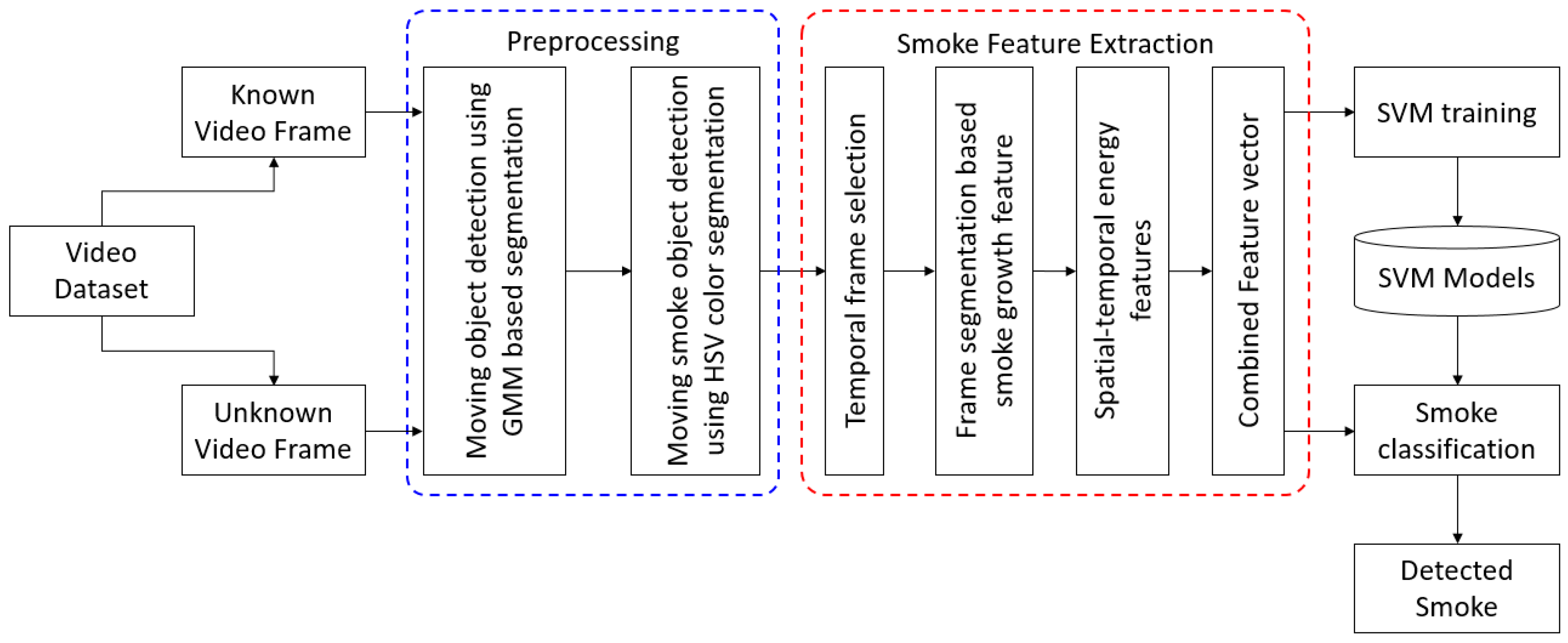

2. Proposed Model

2.1. Preprocessing and Hybrid Segmentation

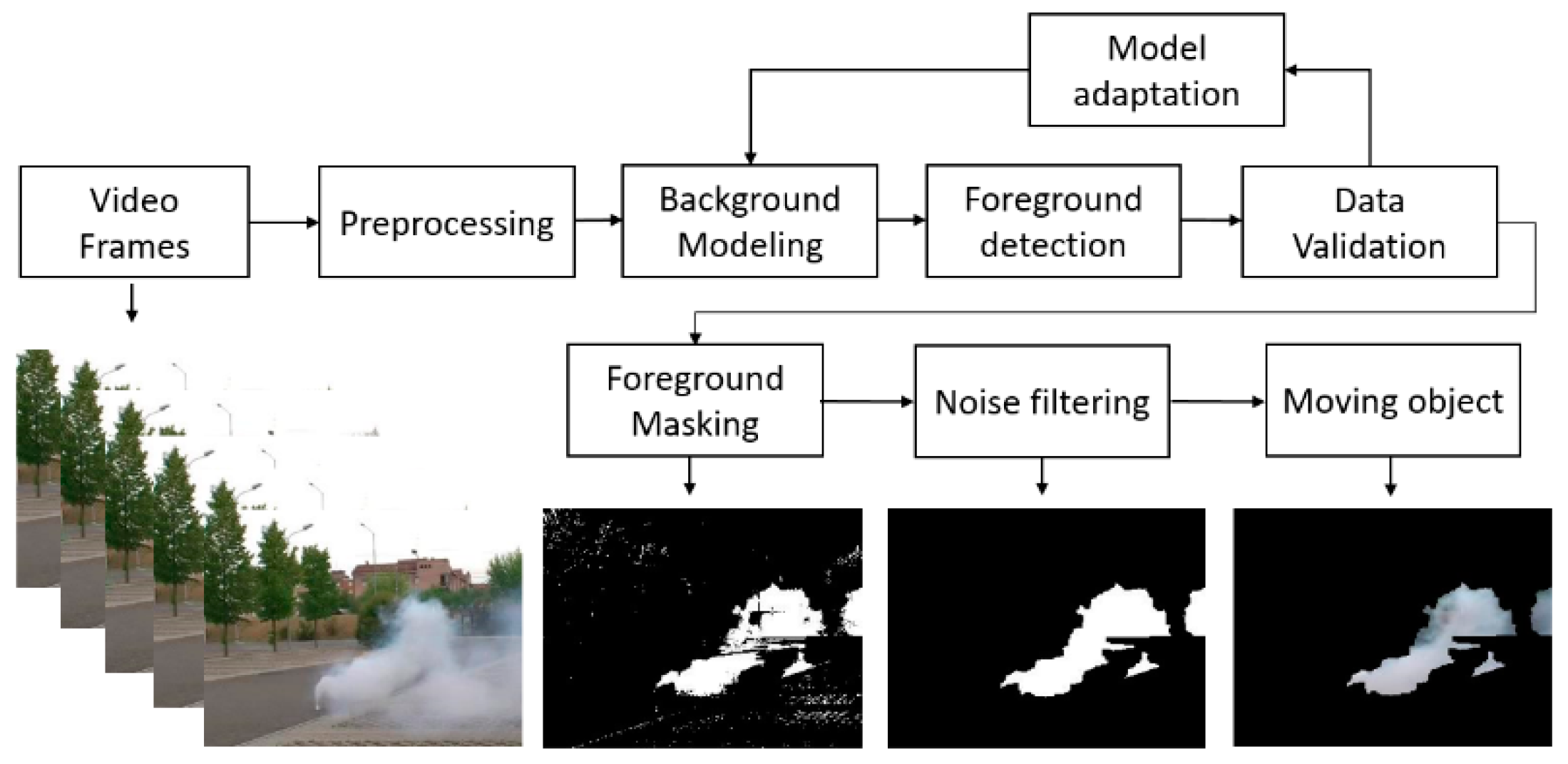

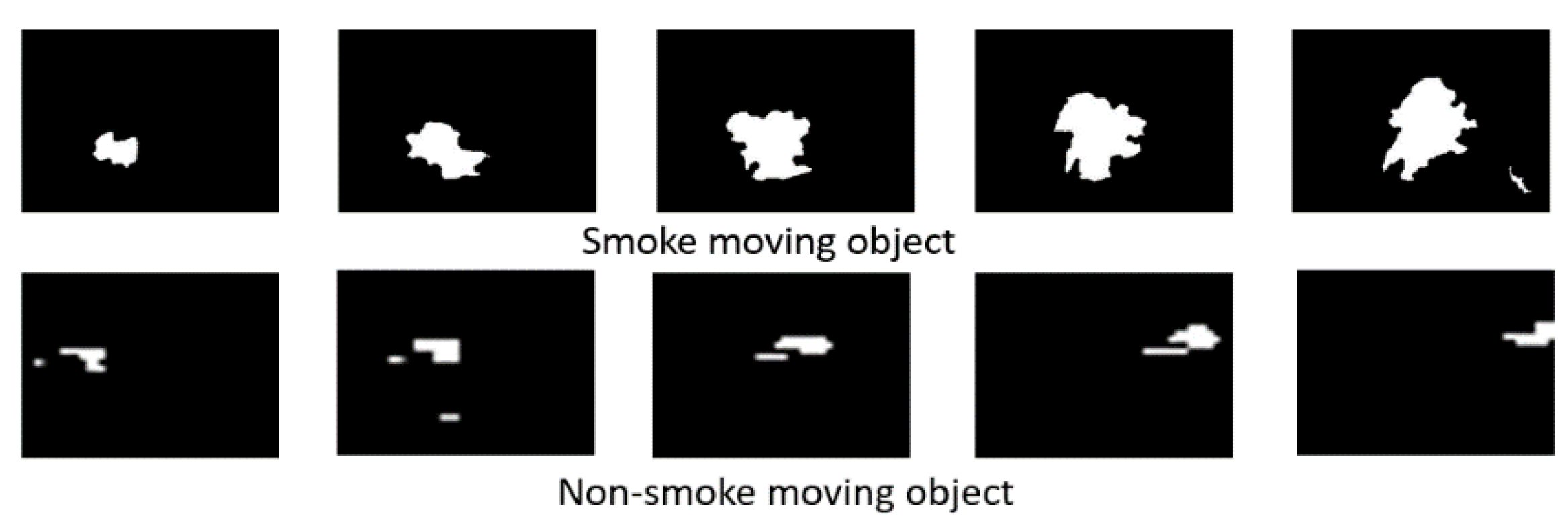

2.1.1. Moving Foreground Detection Using GMM Segmentation

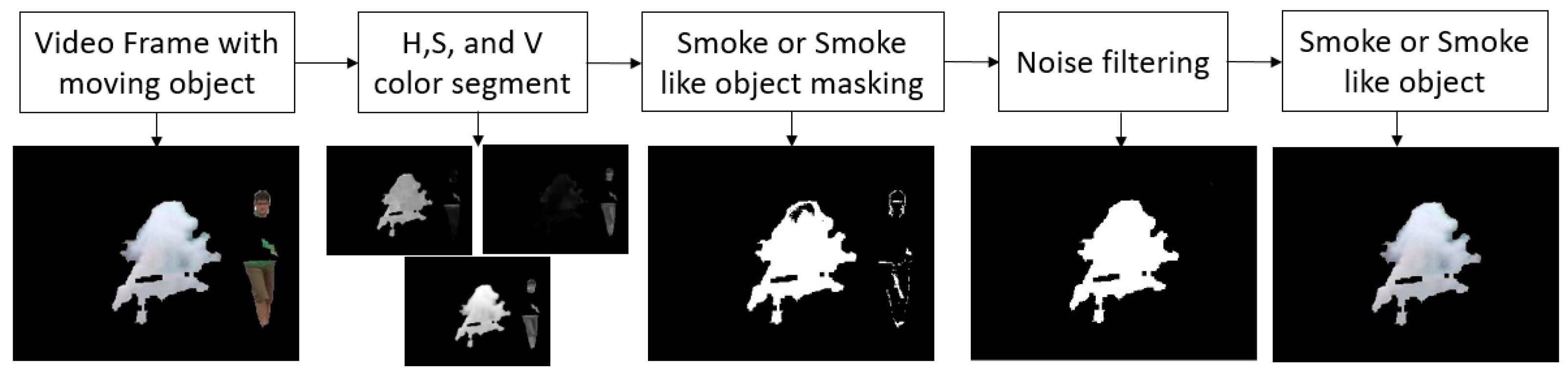

2.1.2. Smoke-Like Moving Object Separation Using HSV Color Segmentation

2.2. Smoke Feature Extraction

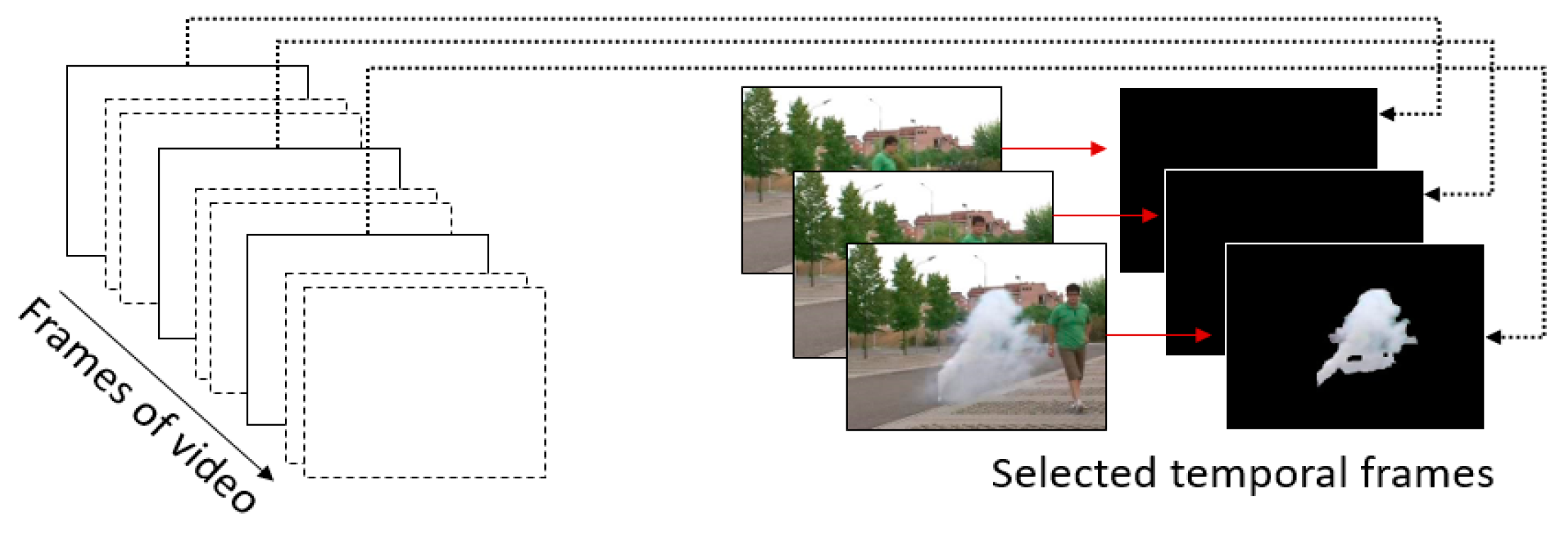

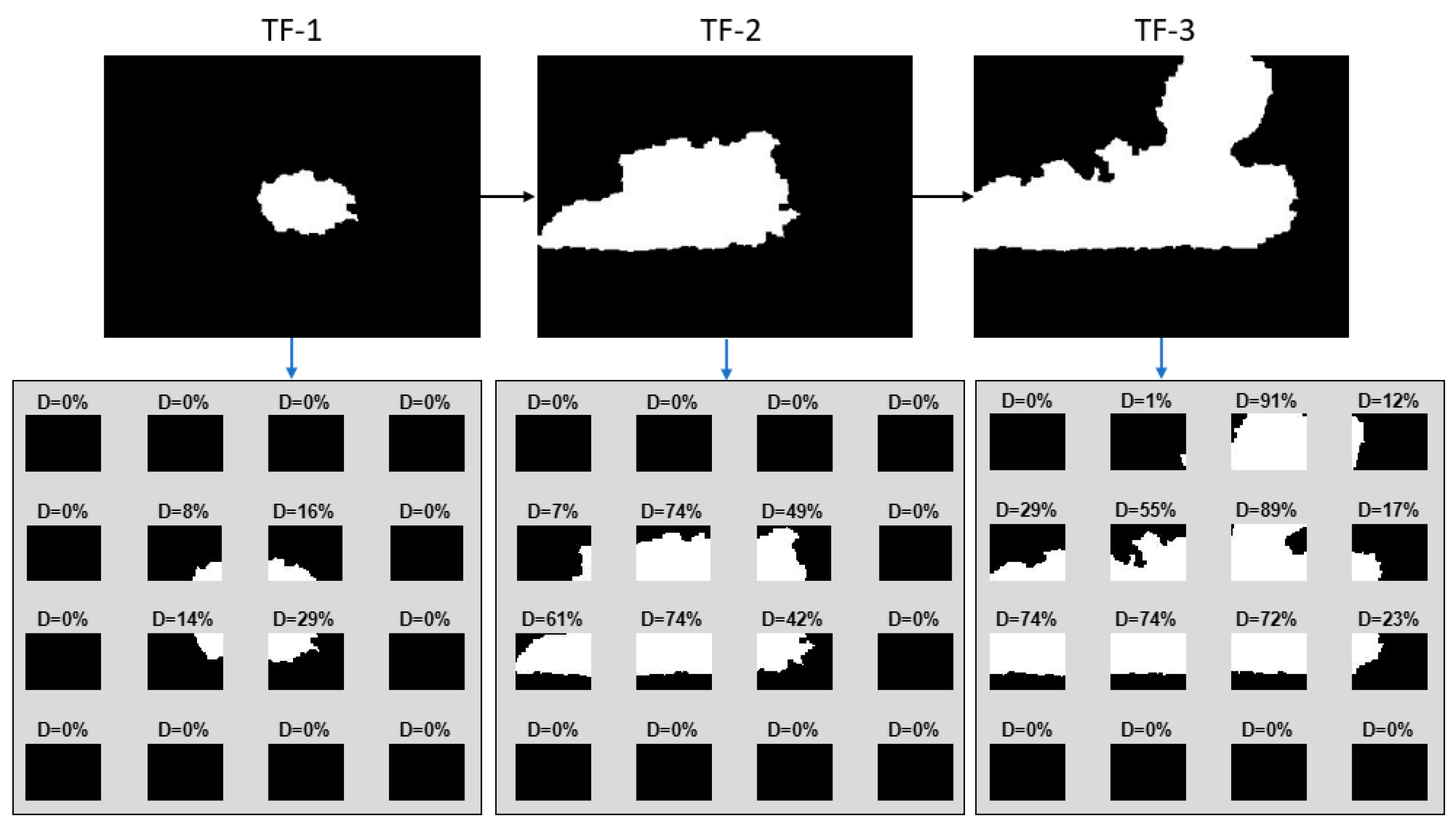

2.2.1. Temporal Frame Selection

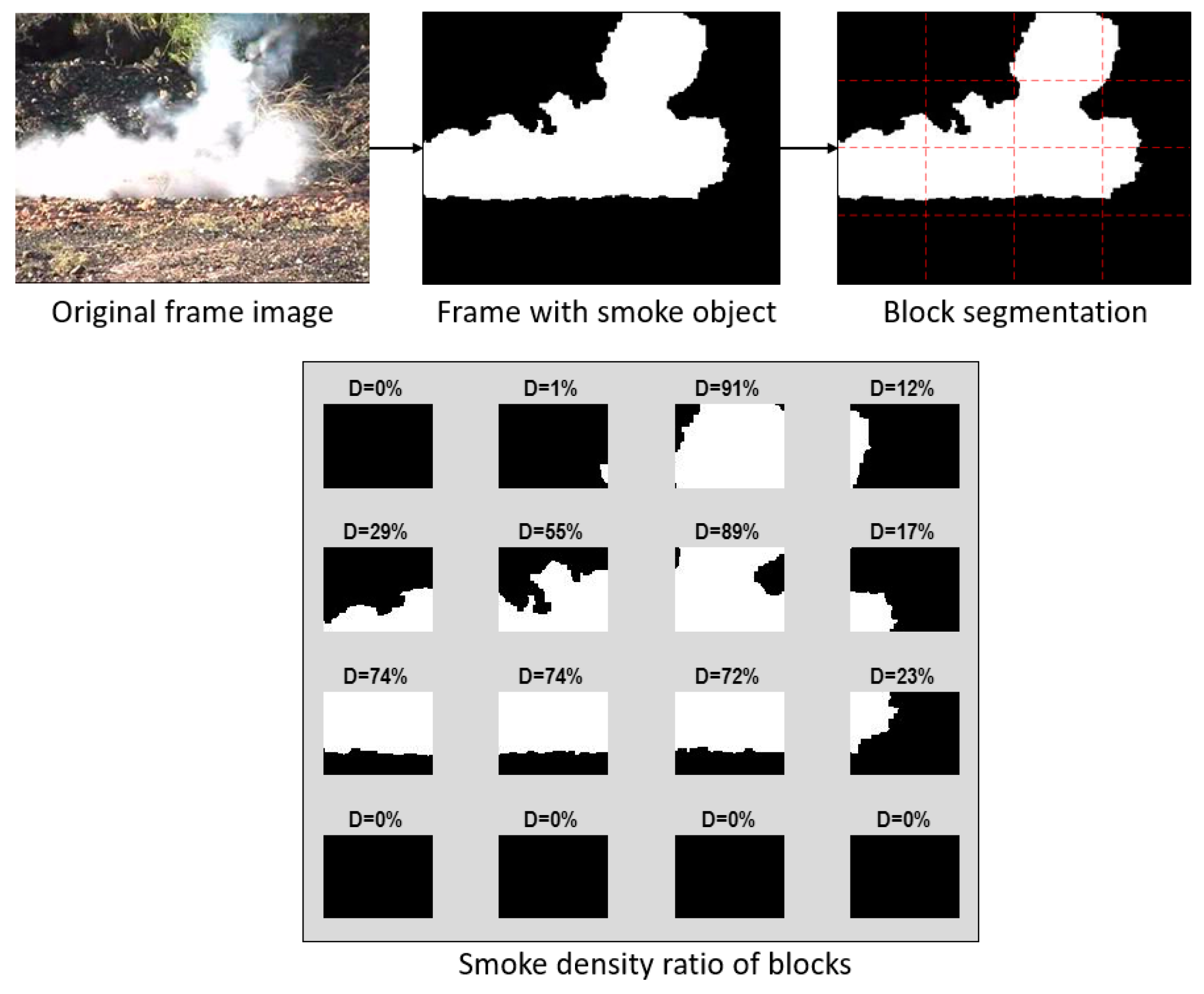

2.2.2. Frame Blocks Segmentation

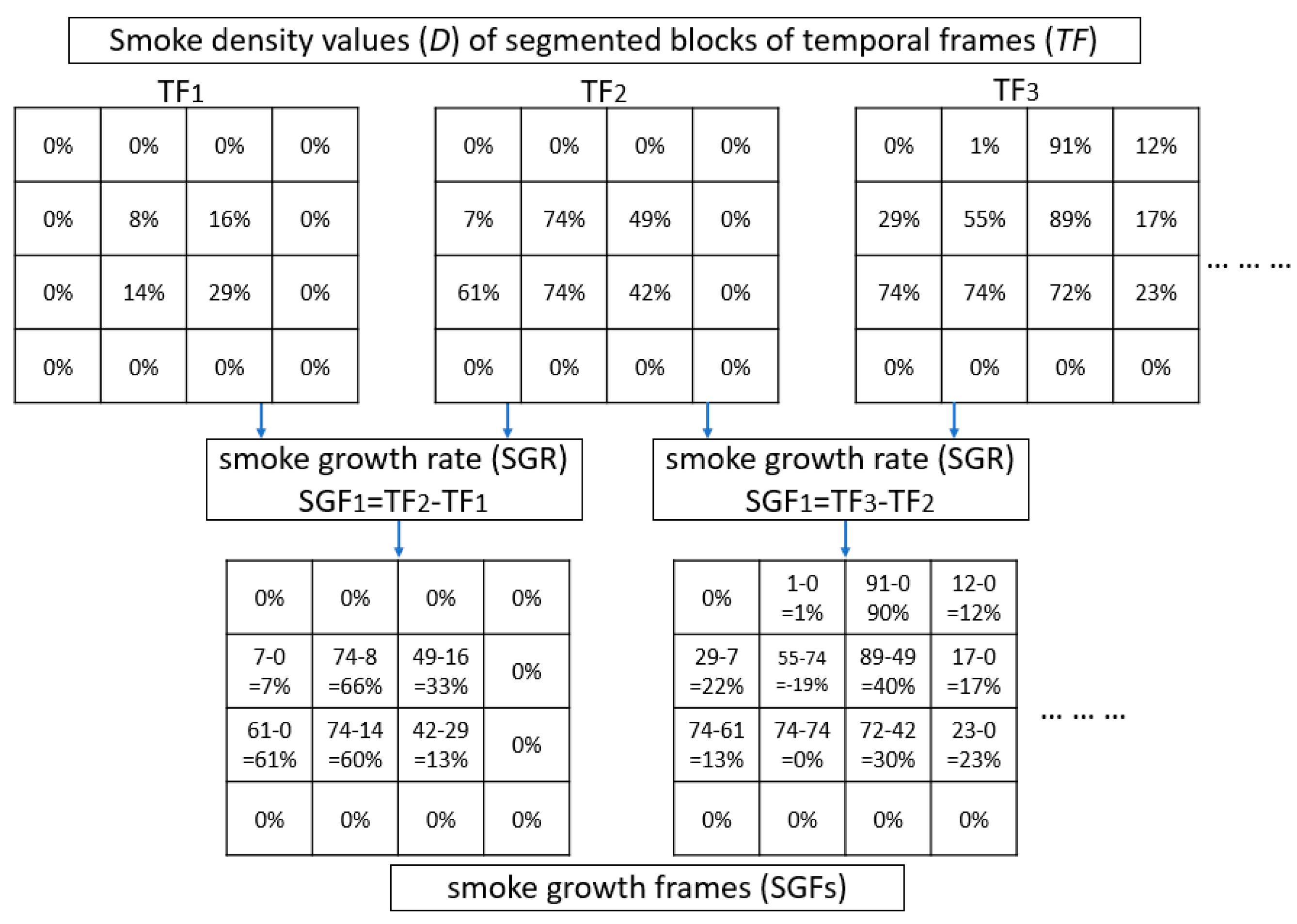

2.2.3. Smoke Growth Segmented Frame Block

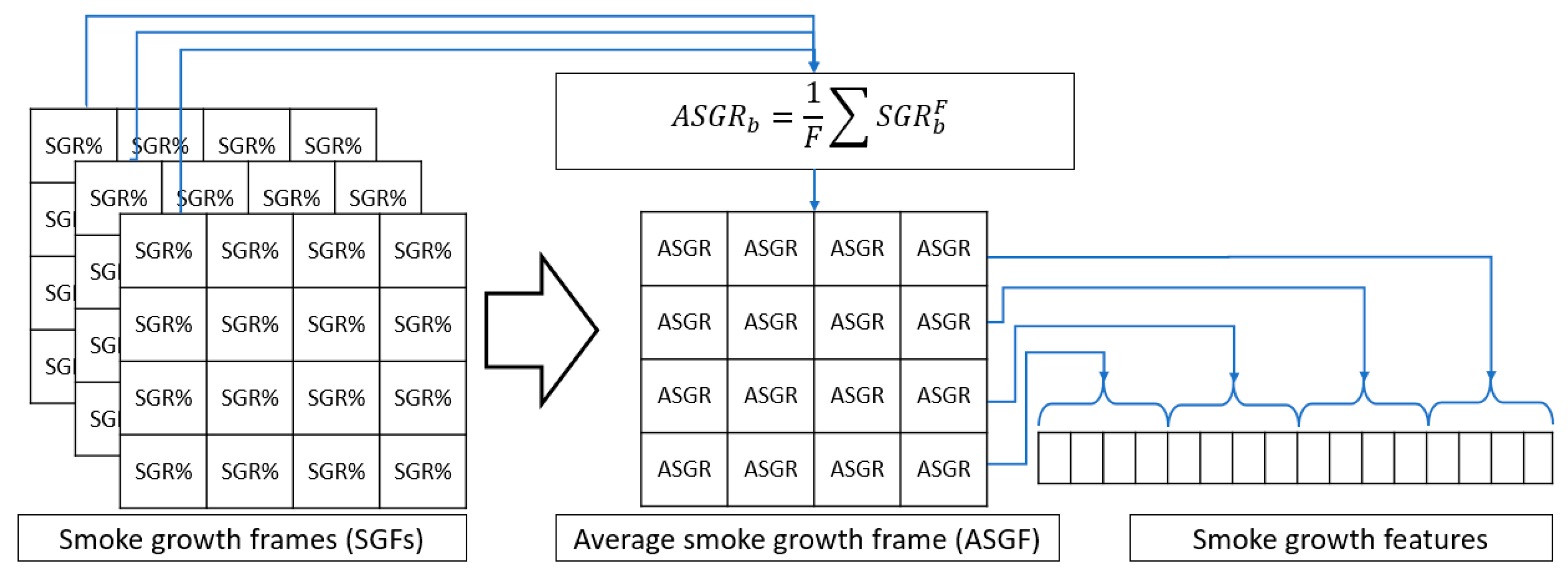

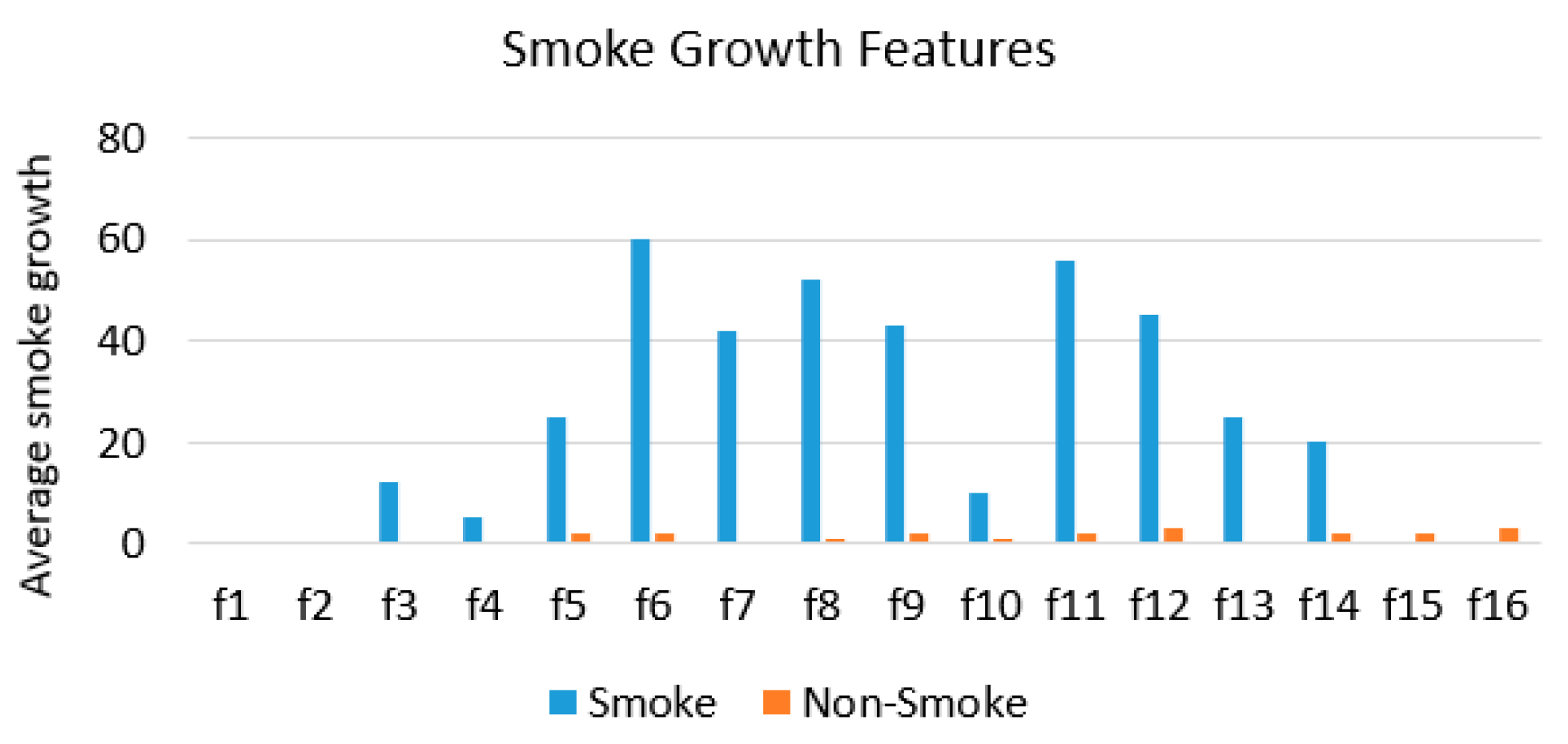

2.2.4. Smoke Growth Features

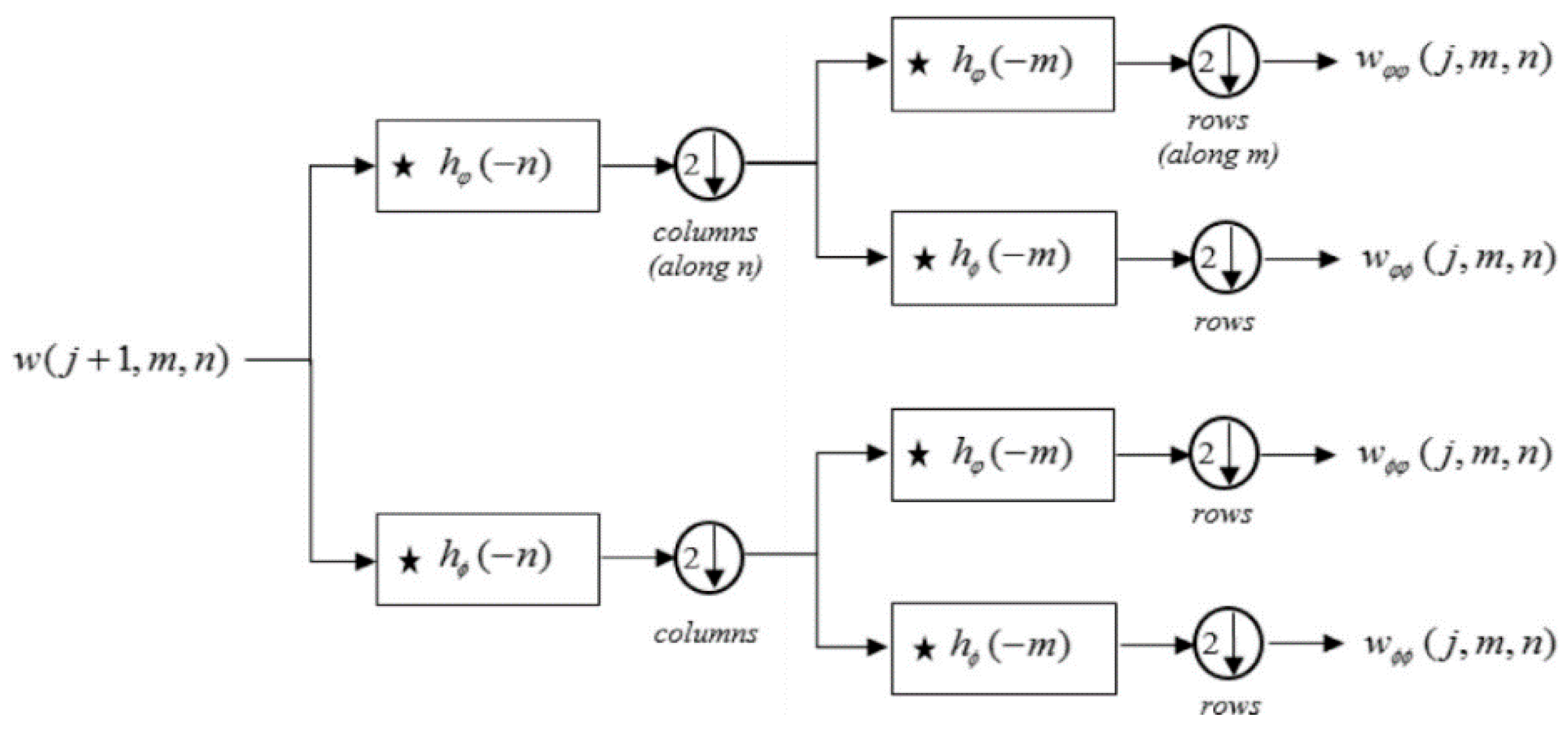

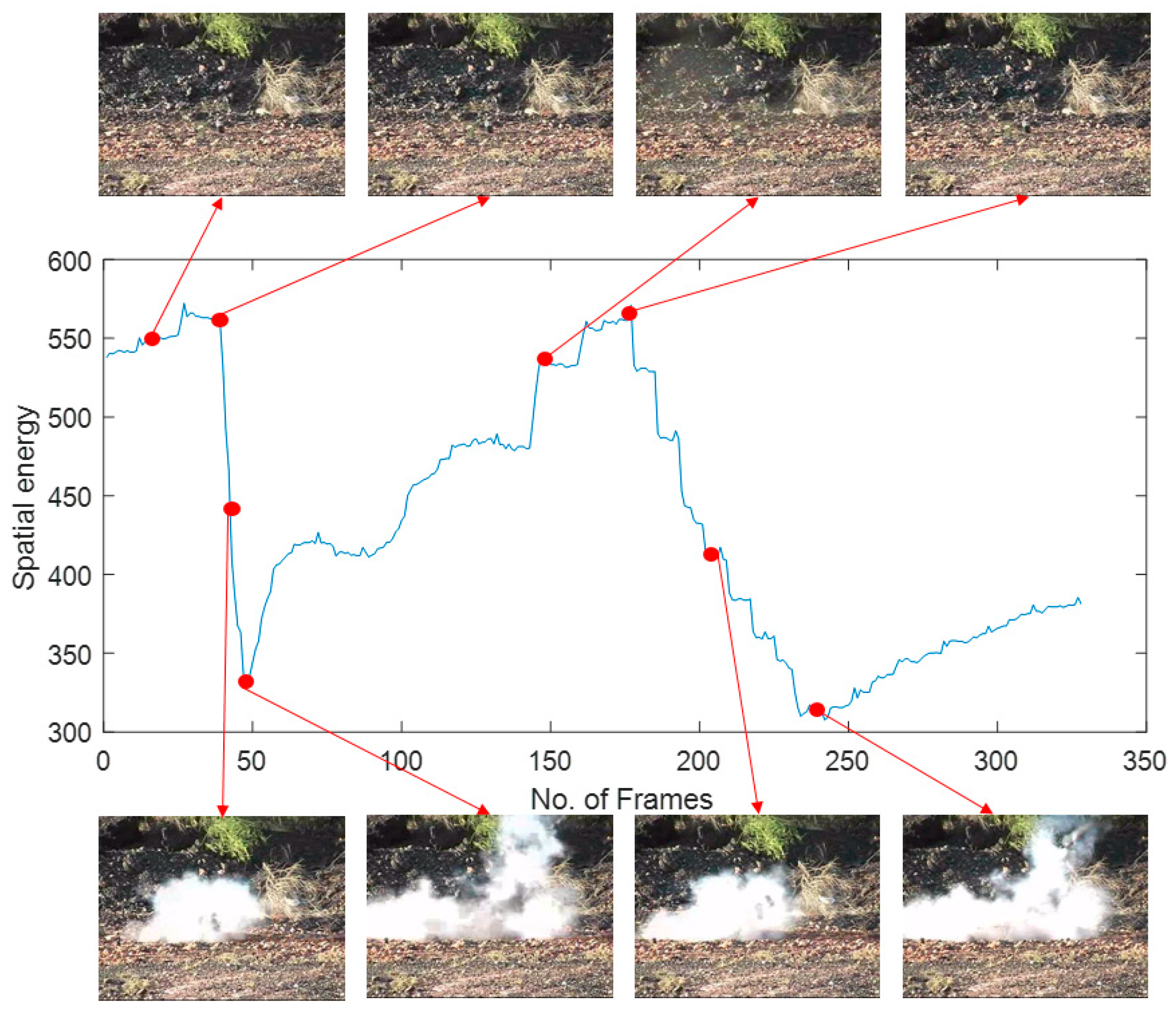

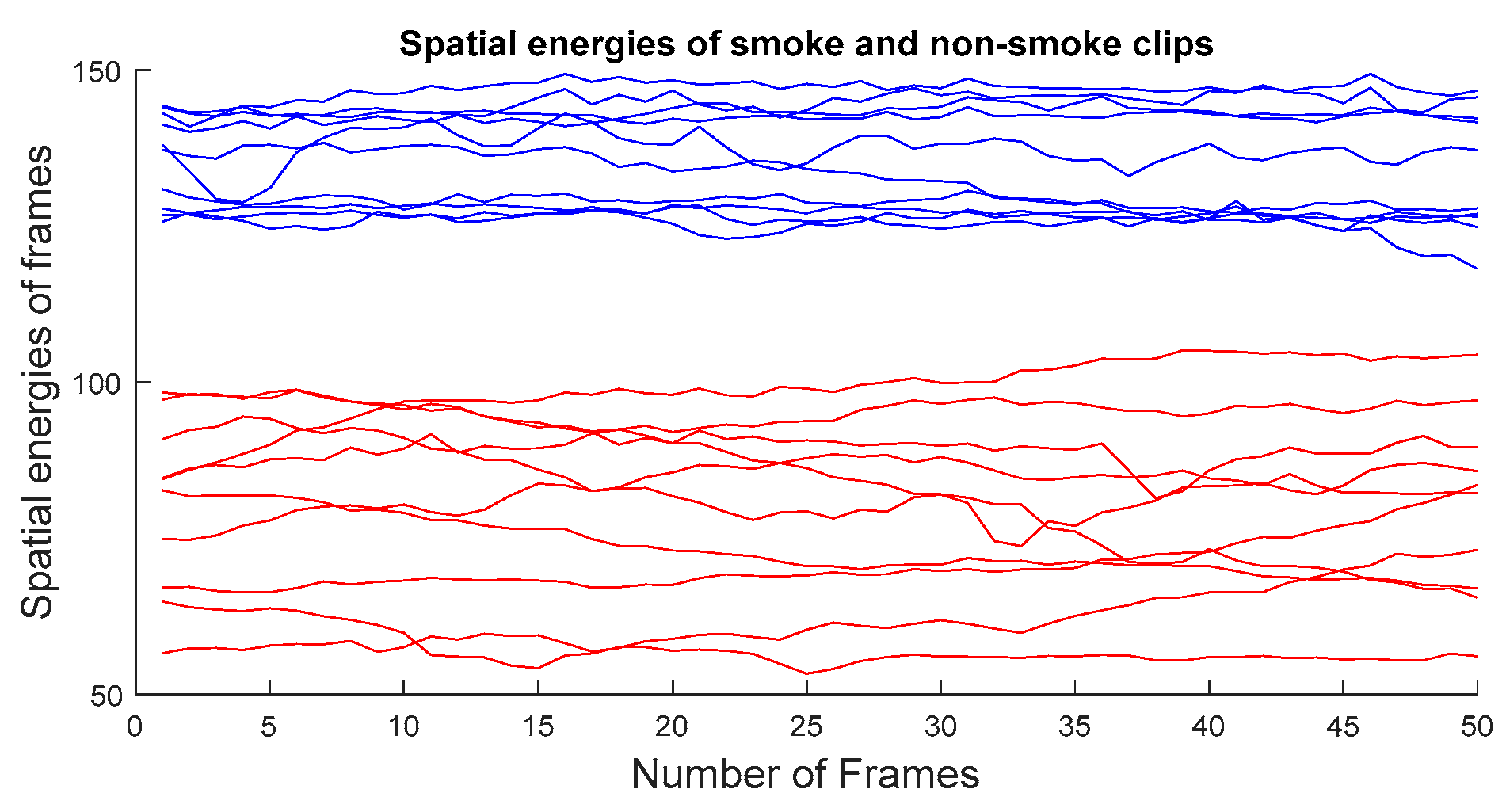

2.2.5. Spatial-Temporal Energy Features

2.2.6. Final Feature Vector

2.3. Smoke Identification Using SVM

3. Experimental Result and Evaluation

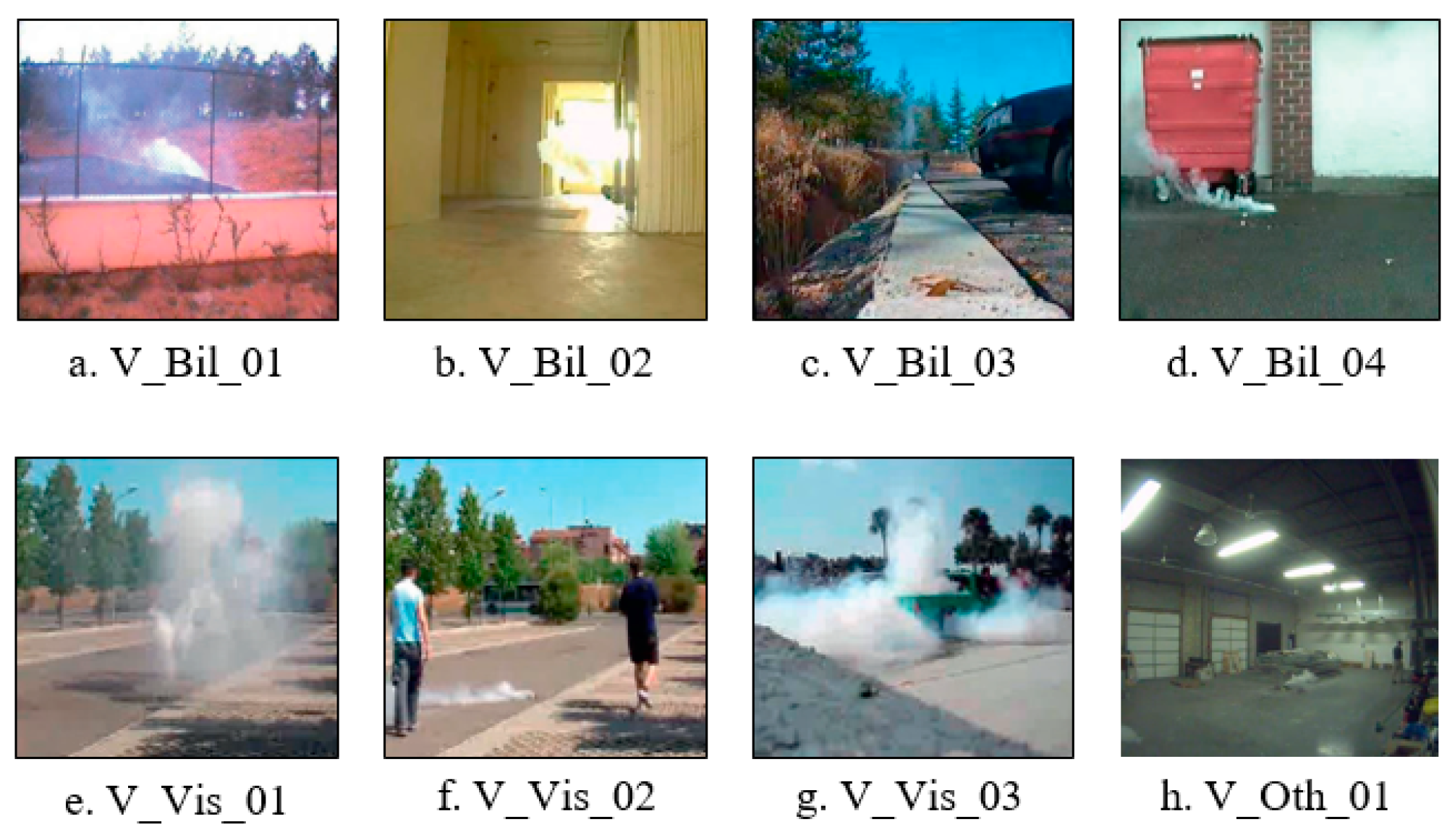

3.1. Experimental Setup and Video Dataset

3.2. Experimental Process

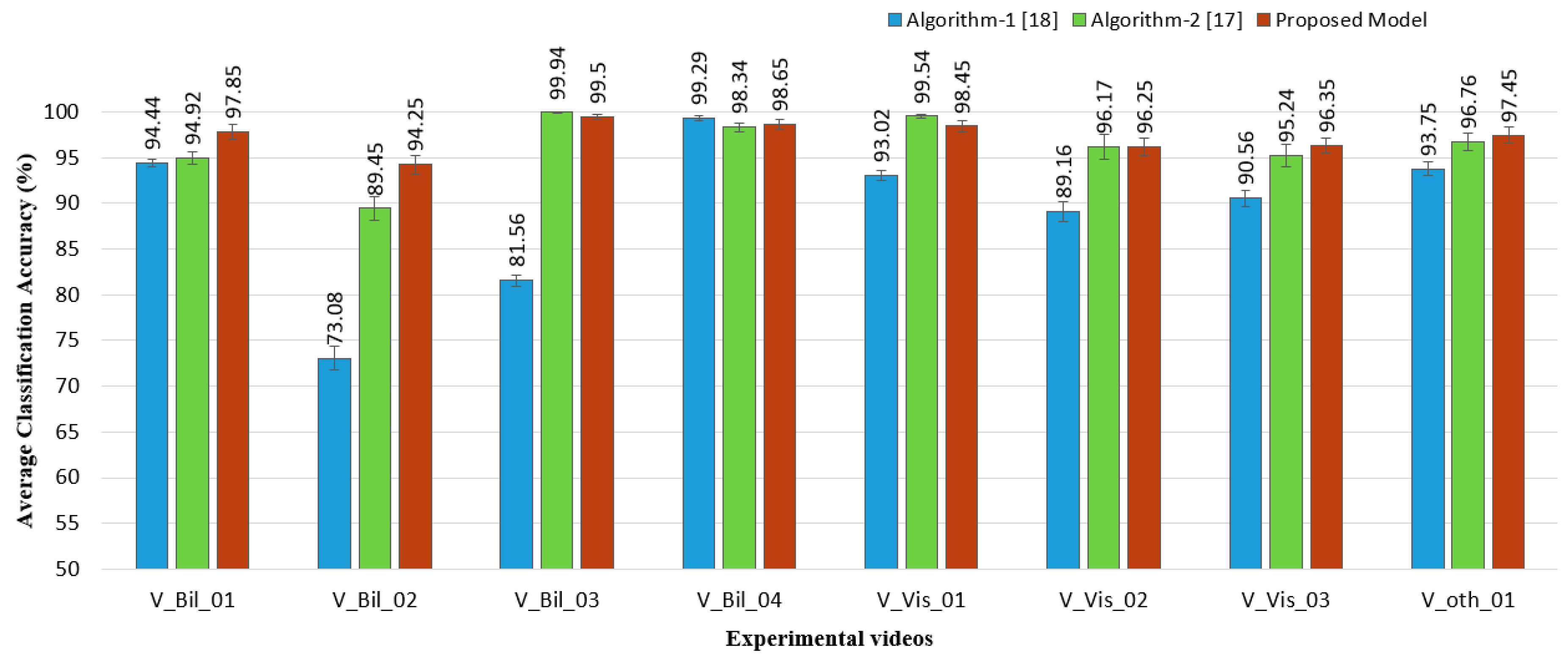

3.3. Experimental Result and Evaluation

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Xiong, Z.; Caballero, R.; Wang, H.; Finn, A.M.; Lelic, M.A.; Peng, P.-Y. Video-based smoke detection: Possibilities, techniques, and challenges. In Proceedings of the IFPA, Fire Suppression and Detection Research and Applications: A Technical Working Conference (SUPDET), Orlando, FL, USA, 5–8 March 2007.
2. Zikria, Y.B.; Afzal, M.K.; Kim, S.W. Internet of Multimedia Things (IoMT): Opportunities, Challenges and Solutions. Sensors 2020, 20, 2334.
3. Fujiwara, N.; Terada, K. Extraction of a smoke region using fractal coding. In Proceedings of the IEEE International Symposium on Communications and Information Technology (ISCIT), Sapporo, Japan, 26–29 October 2004; Volume 2, pp. 659–662.
4. Ojo, J.A.; Oladosu, J.A. Application of panoramic annular lens for motion analysis tasks: Surveillance and smoke detection. In Proceedings of the 15th International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000; Volume 4, pp. 714–717.
5. Catrakis, H.J.; Aguirre, R.C.; Ruiz-Plancarte, J.; Thayne, R.D. Shape complexity of whole-field three-dimensional space-time fluid interfaces in turbulence. Phys. Fluids 2002, 14, 3891–3898.
6. Cappellini, Y.; Mattii, L.; Mecocci, A. An intelligent system for automatic fire detection in forests. In Proceedings of the IEEE 3rd International Conference on Image Processing and its Applications, Warwick, UK, 18–20 July 2002; pp. 563–570.
7. Yamagishi, H.; Yamaguchi, I. Fire flame detection algorithm using a color camera. In Proceedings of the 1999 International Symposium on Micromechanics and Human Science, Nagoya, Japan, 23–26 November 2002; pp. 255–260.
8. Foggia, P.; Saggese, A.; Vento, M. Real-time fire detection for video-surveillance applications using a combination of experts based on color, shape, and motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.
9. Kolesov, I.; Karasev, P.; Tannenbaum, A.; Haber, E. Fire and smoke detection in video with optimal mass transport based optical flow and neural networks. In Proceedings of the IEEE International Conference Image Process, Hong Kong, 26–29 September 2010; pp. 761–764.
10. Toreyin, B.U.; Dedeoglu, Y.; Cetin, A.E. Wavelet based real-time smoke detection in video. In Proceedings of the 13th European Signal Processing Conference, Antalya, Turkey, 4–8 September 2005; pp. 1–4.
11. Muller, M.; Karasev, P.; Tannenbaum, A.; Kolesov, I. Optical flow estimation for flame detection in videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.
12. Stauffer, C.; Grimson, W.E.L. Adaptive background mixture models for real-time tracking. In Proceedings of the IEEE Conference Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999; pp. 246–252.
13. Healey, G.; Slater, D.; Lin, T.; Drda, B.; Goedeke, A.D. A system for real-time fire detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 15–17 June 1993; pp. 605–606.
14. Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.
15. Chunyu, Y.; Jun, F.; Jinjun, W.; Yongming, Z. Video fire smoke detection using motion and color features. Fire Technol. 2010, 46, 651–666.
16. Vicente, J.; Guillemant, P. An image processing technique for automatically detecting forest fire. Int. J. Therm. Sci. 2002, 41, 1113–1120.
17. Appana, D.K.; Islam, M.R.; Kim, J.M. Smoke detection approach using optical flow characteristics for alarm systems. Inf. Sci. 2017, 2017, 418–419.
18. Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Smoke detection using spatio-temporal analysis, motion modeling and dynamic texture recognition. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 1078–1082.
19. Uddin, M.S.; Islam, M.R.; Khan, S.A.; Kim, J.; Kim, J.-M.; Sohn, S.-M.; Choi, B. Distance and density similarity based enhanced k-NN classifier for improving fault diagnosis performance of bearings. Shock Vib. 2016, 2016, 1–11.
20. Rahim, M.A.; Islam, M.R.; Shin, J. Non-touch sign word recognition based on dynamic hand gesture using hybrid segmentation and CNN feature fusion. Appl. Sci. 2019, 9, 3790.
21. Islam, M.R.; Uddin, J.; Kim, J.-M. Acoustic emission sensor network based fault diagnosis of induction motors using a gabor filter and multiclass Support Vector Machines. Ad. Hoc. Sens. Wirel. Net. (AHSWN) 2016, 34, 273–287.

| Ref. | Aim of the Research | Method | Pros | Cons |
|---|---|---|---|---|
| [8] | Fire detection based on video analysis from surveillance cameras | Foreground masking, background subtraction, and optical flow analysis using color evaluation, shape variation, and movement evaluation. | A hybrid combination of color evaluation, shape variation, and movement evaluation for optical flow gives effective results. | Background subtraction and foreground masking based on frame differencing are vulnerable to a dynamically changing environment. |
| [9] | Fire and smoke detection using video | Optimal Mass Transportation (OMT) for extracting the optical flow descriptor of RGB video frames, and neural networks for classifying smoke/fire. | OMT is useful for detecting smoke or fire against a similarly colored background. | No background subtraction process is introduced, and smoke-colored moving objects might mislead the detection process. |
| [14] | Flame modeling for wildfire detection using a video signal | Background subtraction using a non-parametric model; spatio-temporal features such as color probability, flickering, and spatial and spatio-temporal energy; and dynamic texture analysis for wildfire detection. | A codebook combining various spatio-temporal features with dynamic texture analysis constructs a strong feature vector for classifying fire using SVM. | This model targets flame and fire detection and needs to be enhanced for smoke detection. Also, feature extraction using several flame movement descriptors demands high computation power. |
| [17] | Smoke detection using optical flow characteristics for fire alarm systems | Combined features from the Gabor filter-based edge orientation and the smoke energy components of spatial-temporal frequencies. SVM is used for smoke classification. | HSV color segmentation is effective for detecting a smoke object. Gabor filter-based edge orientation of frame differencing and spatial-temporal energy of the frame show good smoke classification results. | Background segmentation on a static frame is suboptimal for a dynamically changing environment. A smoke descriptor based on temporal frame differencing might produce some false alarms. |
| [18] | Motion modeling and dynamic texture recognition for smoke detection | HSV color segmentation for candidate smoke region detection, spatio-temporal energy analysis, and histograms of oriented gradients and optical flows (HOGHOFs). | Spatio-temporal energy analysis and HOGHOFs are effective for moving smoke detection. | HOGHOF descriptors for smoke motion modeling are suboptimal for smoke-like moving objects. |

| Video # | Video Name | Frame Rate (f/s) | Duration | No. of Frames |
|---|---|---|---|---|
| V_Bil_01 | Bilkent/sBehindtheFance | 10.00 | 1 min 3 s | 630 |
| V_Bil_02 | Bilkent/sEmptyR1 | 16.67 | 28 s | 466 |
| V_Bil_03 | Bilkent/sParkingLot | 25.00 | 1 min 9 s | 1725 |
| V_Bil_04 | Bilkent/sWasteBasket | 10.00 | 1 min 30 s | 900 |
| V_Vis_01 | Visor/movie13 | 25.00 | 1 min 20 s | 2000 |
| V_Vis_02 | Visor/movie14 | 25.00 | 1 min 26 s | 2150 |
| V_Vis_03 | Visor/burnout | 25.00 | 1 min 28 s | 2200 |
| V_oth_01 | other/IndoorVideo | 14.99 | 1 min 20 s | 1199 |
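The frame counts in the table can be cross-checked against frame rate times duration, and the same frame rates determine which frames a temporal selection step would pick. The sketch below is illustrative only: it assumes the listed frame rates are rounded (so the product is truncated), and it assumes evenly spaced sampling for the temporal frame selection, using n = 2 selected frames per second and N = 4 total frames from the parameter table. The helper names `expected_frames` and `select_frame_indices` are hypothetical and are not taken from the paper.

```python
# (video id, frames per second, duration in seconds, frame count) from the table
VIDEOS = [
    ("V_Bil_01", 10.00, 63, 630),
    ("V_Bil_02", 16.67, 28, 466),
    ("V_Bil_03", 25.00, 69, 1725),
    ("V_Bil_04", 10.00, 90, 900),
    ("V_Vis_01", 25.00, 80, 2000),
    ("V_Vis_02", 25.00, 86, 2150),
    ("V_Vis_03", 25.00, 88, 2200),
    ("V_oth_01", 14.99, 80, 1199),
]


def expected_frames(fps, seconds):
    """Frame count implied by rate and duration (truncated: an assumption)."""
    return int(fps * seconds)


def select_frame_indices(fps, n=2, total=4, start=0):
    """Evenly spaced frame indices: n frames per second, `total` frames overall.

    Even spacing is an assumption; the paper's exact selection rule is not
    reproduced here.
    """
    return [start + int(k * fps / n) for k in range(total)]


# Rows whose listed frame count disagrees with fps * duration.
mismatches = [
    (name, frames, expected_frames(fps, seconds))
    for name, fps, seconds, frames in VIDEOS
    if expected_frames(fps, seconds) != frames
]

for name, fps, _, _ in VIDEOS:
    print(name, select_frame_indices(fps))
```

Under these assumptions every row of the table is self-consistent, and a 25 f/s video contributes one frame roughly every 12.5 frames over a 2 s window.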

| Parameter Name | Notation | Value |
|---|---|---|
| HSV color segmentation | | |
| Min threshold of hue (H) | hlow | 0 |
| Max threshold of hue (H) | hhigh | 1 |
| Min threshold of saturation (S) | slow | 0 |
| Max threshold of saturation (S) | shigh | 0.28 |
| Min threshold of value (V) | vlow | 0.38 |
| Max threshold of value (V) | vhigh | 0.985 |
| Temporal frame selection | | |
| Frames per second | f/s | Based on video |
| Selected frames per second | n | 2 |
| Total number of considered frames | N | 4 |
| Total time duration | T | 2 |
| Frame block segmentation | | |
| Segmented density block | SBij | 16, i = 4, j = 4 |
| Spatial-temporal energy | | |
| Level of wavelet transformation | | 3 |
| Feature vector | | |
| Total number of features | Nfeature | 20 |
| Total number of classes | Nclass | 2 |
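To make the HSV thresholds and the 4 × 4 block grid in the table concrete, the following minimal sketch applies them to a toy frame. It assumes HSV channels scaled to [0, 1] (which matches the threshold ranges listed), and it reads "segmented density block" as the fraction of smoke-colored pixels per block; that reading, the helper names, and the toy frame are illustrative assumptions, not the authors' implementation.

```python
import colorsys

# Thresholds copied from the parameter table (HSV channels scaled to [0, 1]).
H_LOW, H_HIGH = 0.0, 1.0
S_LOW, S_HIGH = 0.0, 0.28
V_LOW, V_HIGH = 0.38, 0.985
GRID_ROWS, GRID_COLS = 4, 4  # SB_ij with i = 4, j = 4, i.e. 16 blocks


def is_smoke_colored(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the HSV thresholds."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return (H_LOW <= h <= H_HIGH
            and S_LOW <= s <= S_HIGH
            and V_LOW <= v <= V_HIGH)


def smoke_mask(frame):
    """frame: list of rows, each row a list of (r, g, b) tuples."""
    return [[is_smoke_colored(*px) for px in row] for row in frame]


def block_densities(mask, rows=GRID_ROWS, cols=GRID_COLS):
    """Fraction of smoke-colored pixels in each block of a rows x cols grid.

    Assumes the frame height and width are divisible by rows and cols.
    """
    height, width = len(mask), len(mask[0])
    bh, bw = height // rows, width // cols
    densities = []
    for i in range(rows):
        row_vals = []
        for j in range(cols):
            hits = sum(mask[y][x]
                       for y in range(i * bh, (i + 1) * bh)
                       for x in range(j * bw, (j + 1) * bw))
            row_vals.append(hits / (bh * bw))
        densities.append(row_vals)
    return densities


# Toy 8x8 frame: left half light gray (smoke-like), right half saturated red.
frame = [[(200, 200, 200)] * 4 + [(220, 20, 20)] * 4 for _ in range(8)]
densities = block_densities(smoke_mask(frame))
print(densities[0])
```

With these thresholds, low-saturation pixels of medium-to-high brightness pass the mask, while saturated colors, very dark pixels, and near-white pixels (V above 0.985) are rejected.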

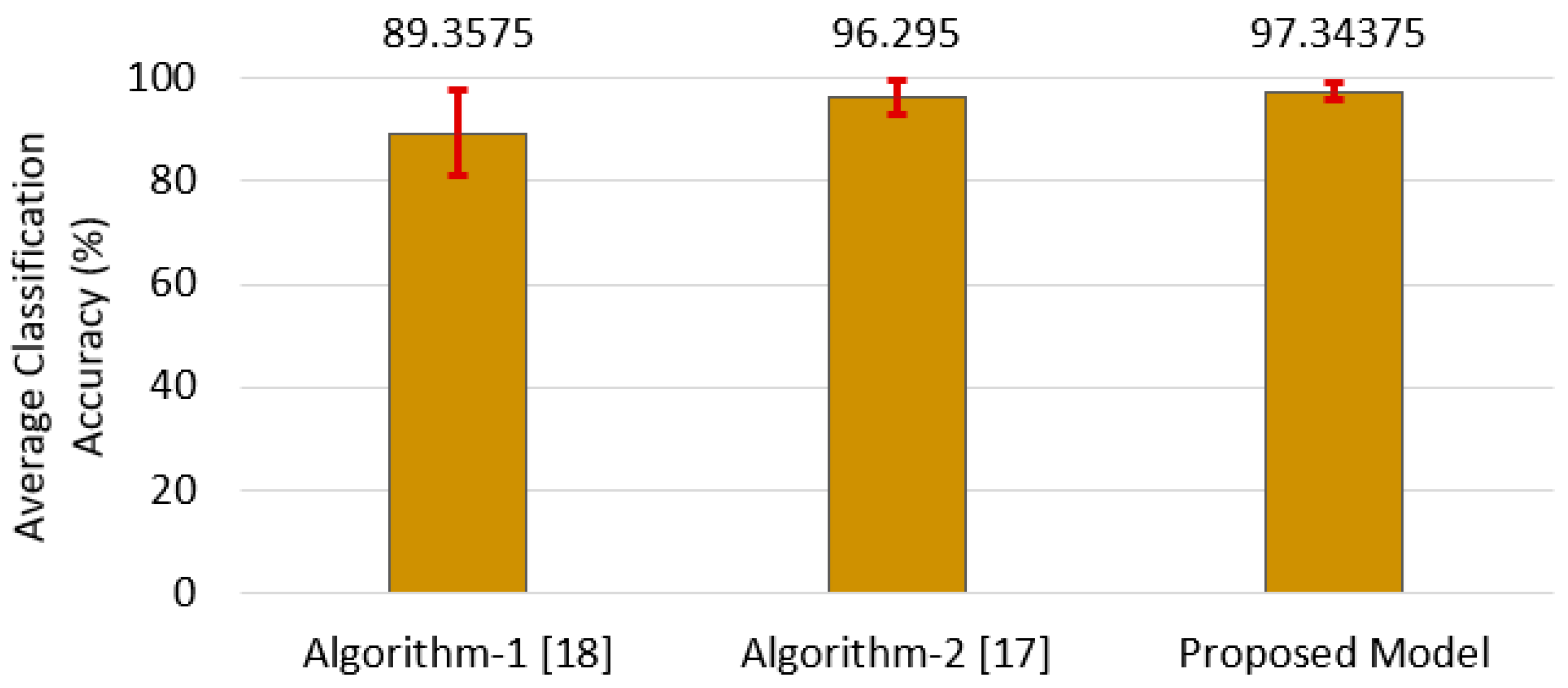

| Algorithms | Ref # | Title | Method |
|---|---|---|---|
| Algorithm-1 | [18] | Smoke detection using spatio-temporal analysis, motion modeling and dynamic texture recognition | HSV color segmentation for candidate smoke region detection, spatio-temporal energy analysis, and histograms of oriented gradients and optical flows (HOGHOFs). |
| Algorithm-2 | [17] | Smoke detection approach using optical flow characteristics for alarm systems | Combined features from the Gabor filter-based edge orientation and the smoke energy components of spatial-temporal frequencies. SVM is used for smoke classification. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Islam, M.R.; Amiruzzaman, M.; Nasim, S.; Shin, J. Smoke Object Segmentation and the Dynamic Growth Feature Model for Video-Based Smoke Detection Systems. Symmetry 2020, 12, 1075. https://doi.org/10.3390/sym12071075
