Research on the Task Assignment Problem with Maximum Benefits in Volunteer Computing Platforms

Abstract

1. Introduction

- (1)

- To the best of our knowledge, the task assignment problem with the greatest benefit in VCPs was proposed for the first time in this paper, and we gave the evaluation criteria for the benefits.

- (2)

- We proposed a new list-based task assignment (LTA) strategy while considering the reliability of the VCPs, and we proved the LTA strategy could complete the task with a deadline constraint as soon as possible.

- (3)

- Based on the (LTA) strategy, we proposed a new task assignment algorithm while considering the task deadline constraints.

- (4)

- We evaluated the efficiency of the proposed algorithm based on the simulation experiments. The experimental results showed that our proposed algorithm had a greater benefit than the current algorithms.

2. Related Works

2.1. Static Task Assignment Algorithms

2.2. Dynamic Task Assignment Algorithms

3. Problem Description

4. Algorithm Description

4.1. The List-Based Task Assignment (LTA) Strategy

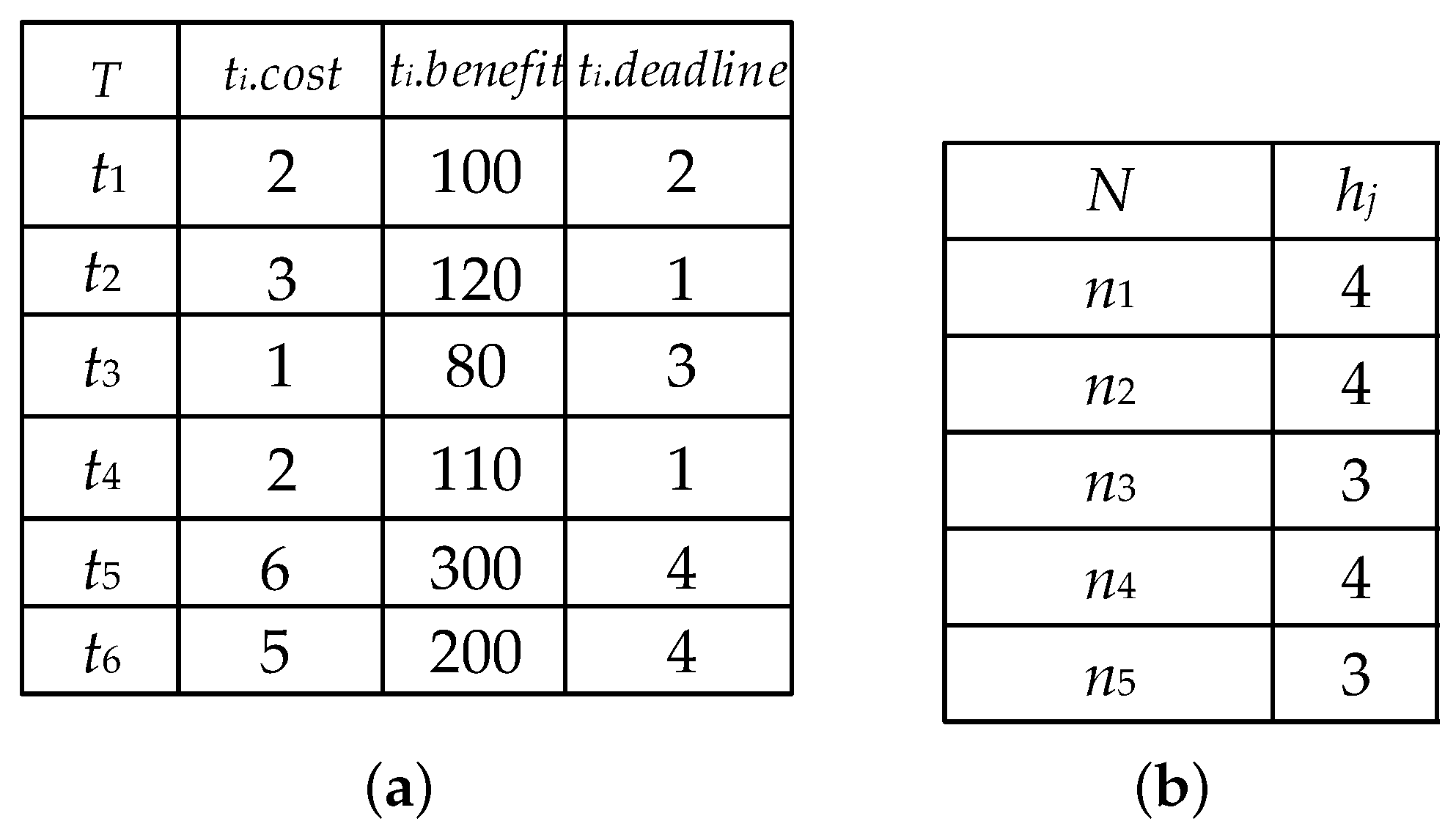

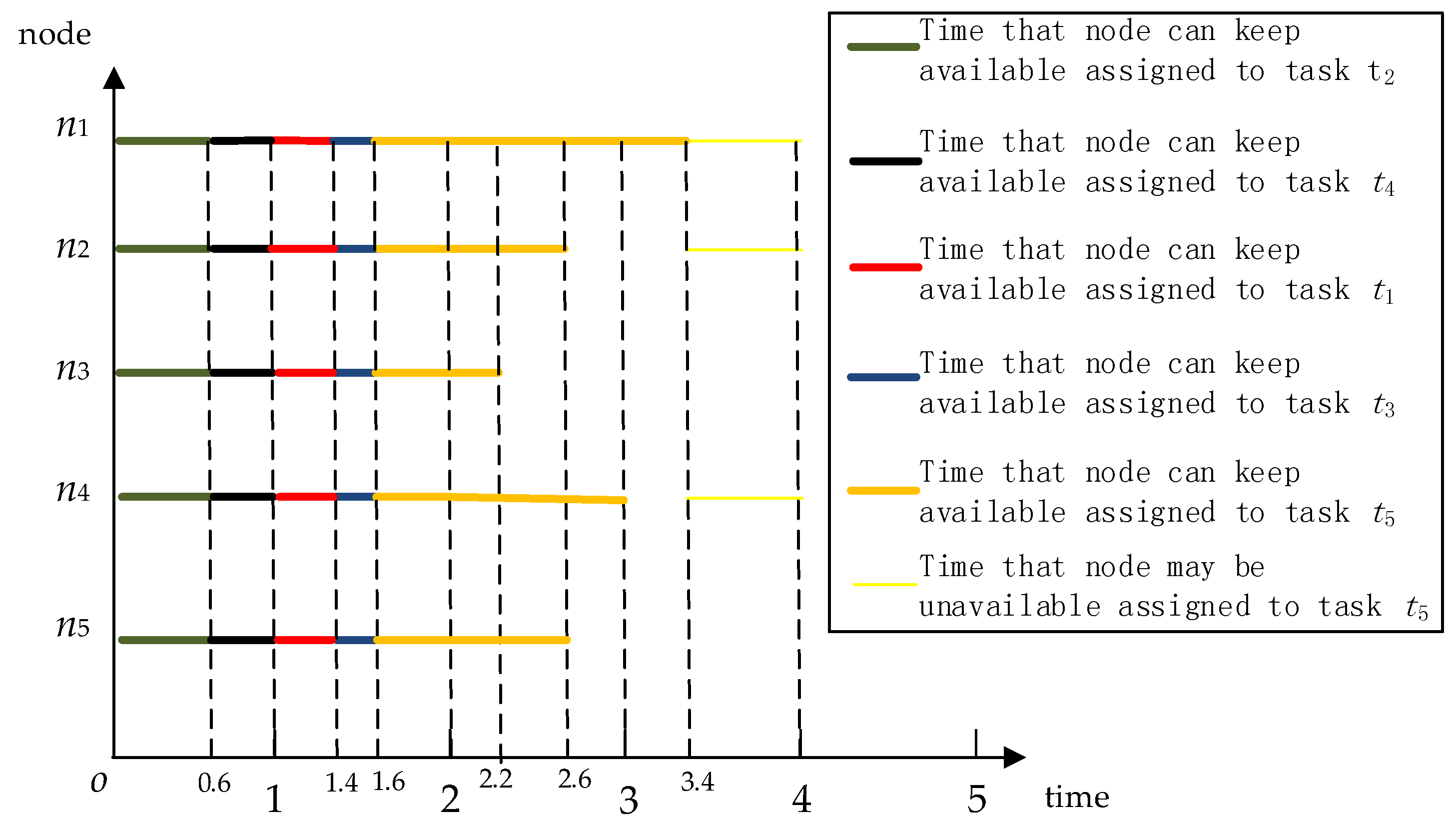

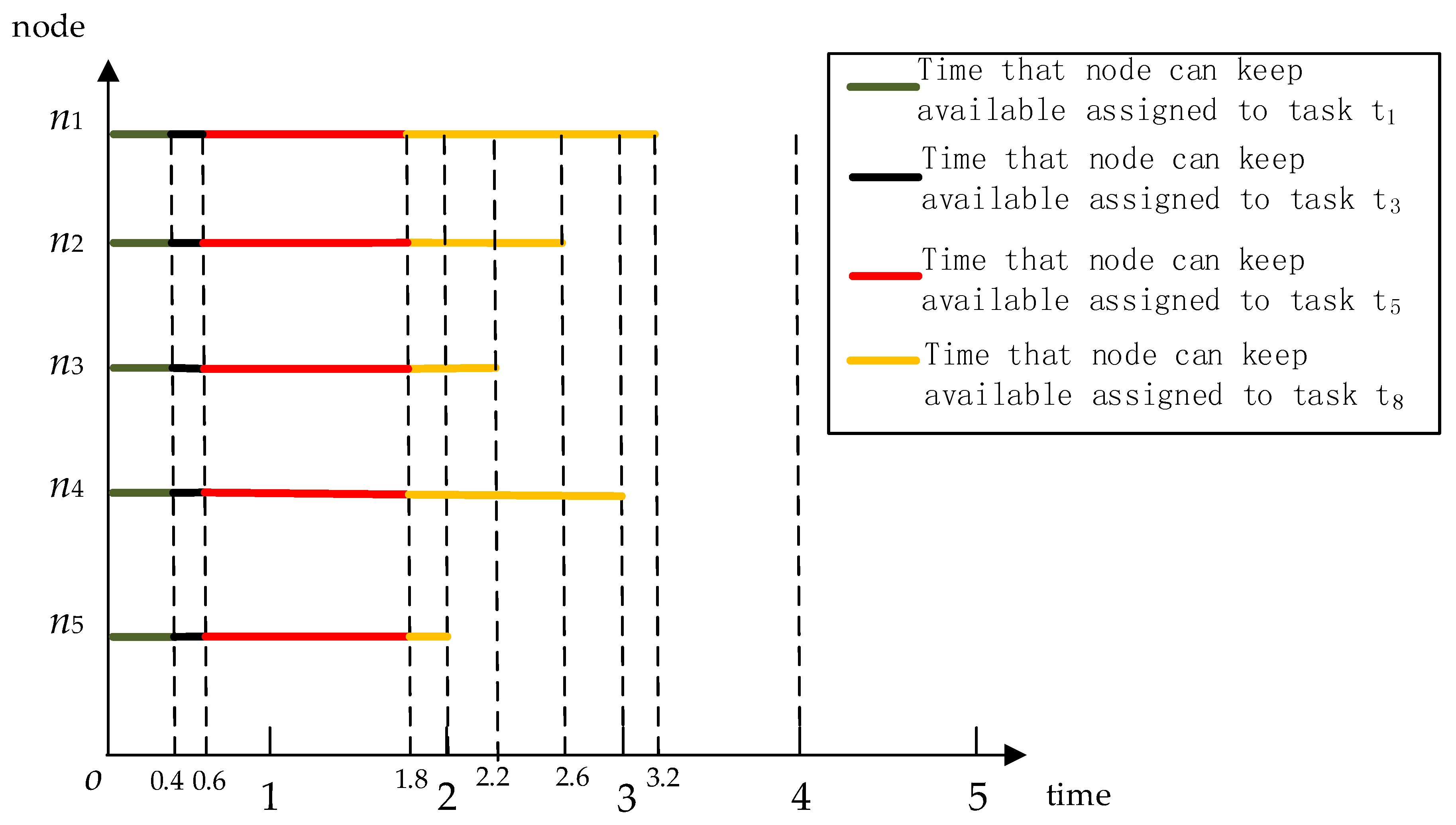

- Phase 1: The LTA strategy first fetches the first task of the list L and then assigns it to the appropriate node. Specifically, during the task assignment, nodes are not always available due to the reasons mentioned before, which makes the actual benefit to VCPs less than the expected benefit. Although the available time of a node is grouped by a large number of discrete time intervals, a statistical analysis shows that it is possible to obtain a relatively accurate availability interval using probabilistic law [22,24,45]. Based on this, in the LTA strategy, we first select the time interval when the node remains available to assign tasks, and we adopt the confidence interval proposed by Xu et al. [24] to predict the time interval. The definition of the confidence interval is as follows:Definition 4(Confidence Interval). The confidence interval of a node at time is denoted by , which means that can remain available before time and may be unavailable at any time in the time interval .Briefly, the upper bound of the confidence interval about node was , and the lower bound of the confidence interval can be calculated by interpolation. Specifically, we counted the actual availability time and the expected availability time of nearly 100 times and obtained ratios of the actual availability time and expected availability time about , and presence probabilities of different ratios, as shown in Figure 2a. In Figure 2a, represents the ratio of the actual availability time of to the expected availability time and the represents the presence probability of the different ratios.Therefore, according to Figure 2a, for any given of , the lower bound of the confidence interval and the presence probability of different actual availability times can be calculated by interpolation. For example, if the expected availability time of is 4 h, then according to Figure 2a, the lower bound of the confidence interval of is 2 h. Similarly, we can determine any node in the VCPs according to interpolation, and the confidence intervals of the nodes at time are shown as Figure 2b.

- Phase 2: According to the definition of the list, task could not be executed until that task was completed. Therefore, to make full use of the computing resources in VCPs, during the assigning task , we divided it into equal task slices and assigned these task slices to nodes in the VCPs, and the size of each task slice was . Specifically, we supposed that task was executed at time m, and the confidence interval of node n was . During assigning these task slices, for each node n in the VCPs, if ; then all the nodes could complete the task slice and assign the task slice whose size was to each node n. Otherwise, we assigned the task slice whose size was to each node n, and we added the rest of task to the head of the list and stopped assigning tasks to node n.

- Phase 3: Finally, if the list L is null, we assume that all tasks have been completed; if all time intervals of nodes keep available have been assigned, and there are still uncompleted tasks, the LTA strategy would not stop selecting time intervals until all nodes disconnect or tasks are completed.

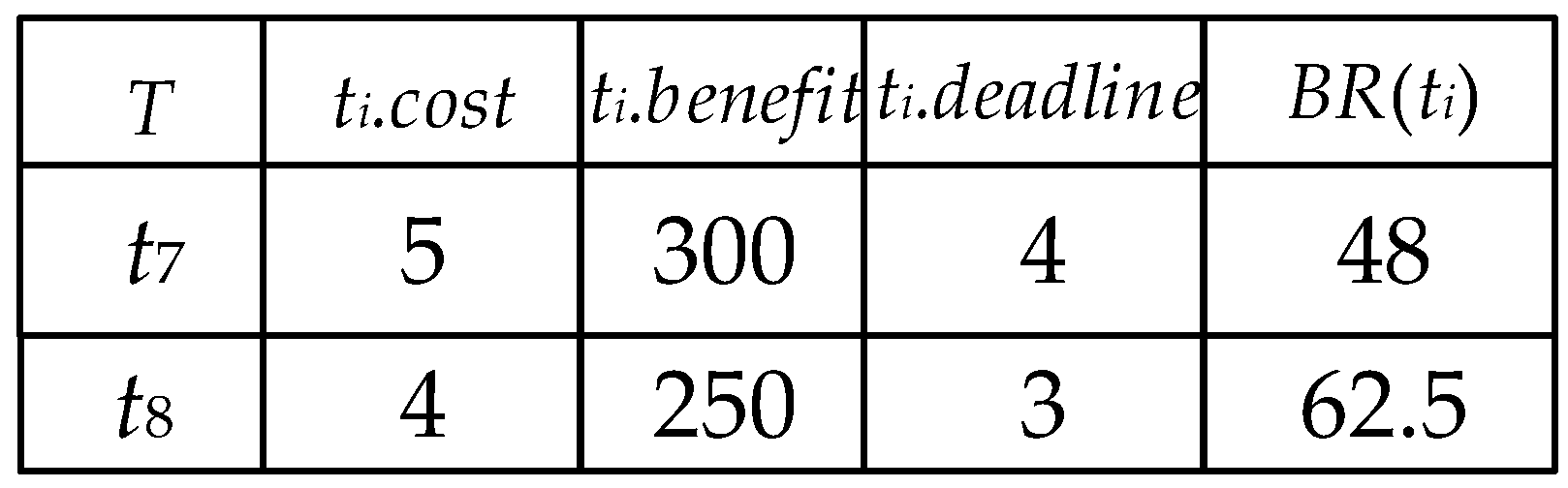

4.2. The Maximum Benefit Scheduling (MBS) Algorithm

- (1)

- As the computing power of the VCPs is limited, the benefit ratio of the task mainly used the benefit per unit time.

- (2)

- If a task is too large, computing it will affect receiving other tasks, which may reduce the benefits of the VCPs. Therefore, we should consider penalizing such large tasks when calculating the task benefit ratios.

- (3)

- If the deadline constraint of a task is too small, it is easy to miss its deadline constraint, which may affect the benefits of the VCPs. Therefore, we should consider punishing such emergency tasks (short deadline) when calculating the benefit ratio of the task.

| Algorithm 1 The EffectiveList function (). |

| Input: node set , task set , current time , the current valid list L. Output: valid list set

|

- (1)

- There are new nodes or tasks arriving at the VCPs;

- (2)

- There are no tasks that are being executed.

| Algorithm 2 The MBS algorithm. |

| Input: node set , task set , current time , the current valid list L. Output: the benefit of VCPs B

|

5. Experimental Evaluation

5.1. Experimental Results and Analysis on Static Task Sets

- The task set scale; i.e., the number of tasks included in the task set T.

- The average size of tasks in task set T, denoted by S, which was measured by the number of input file fragments. Specifically, in our experiment, ∀, the size of the task was a random value of the interval .

- The average deadline constraints of tasks in T, denoted by D. Specifically, in our experiment, ∀, the deadline constraint of the task was a random value of the interval .

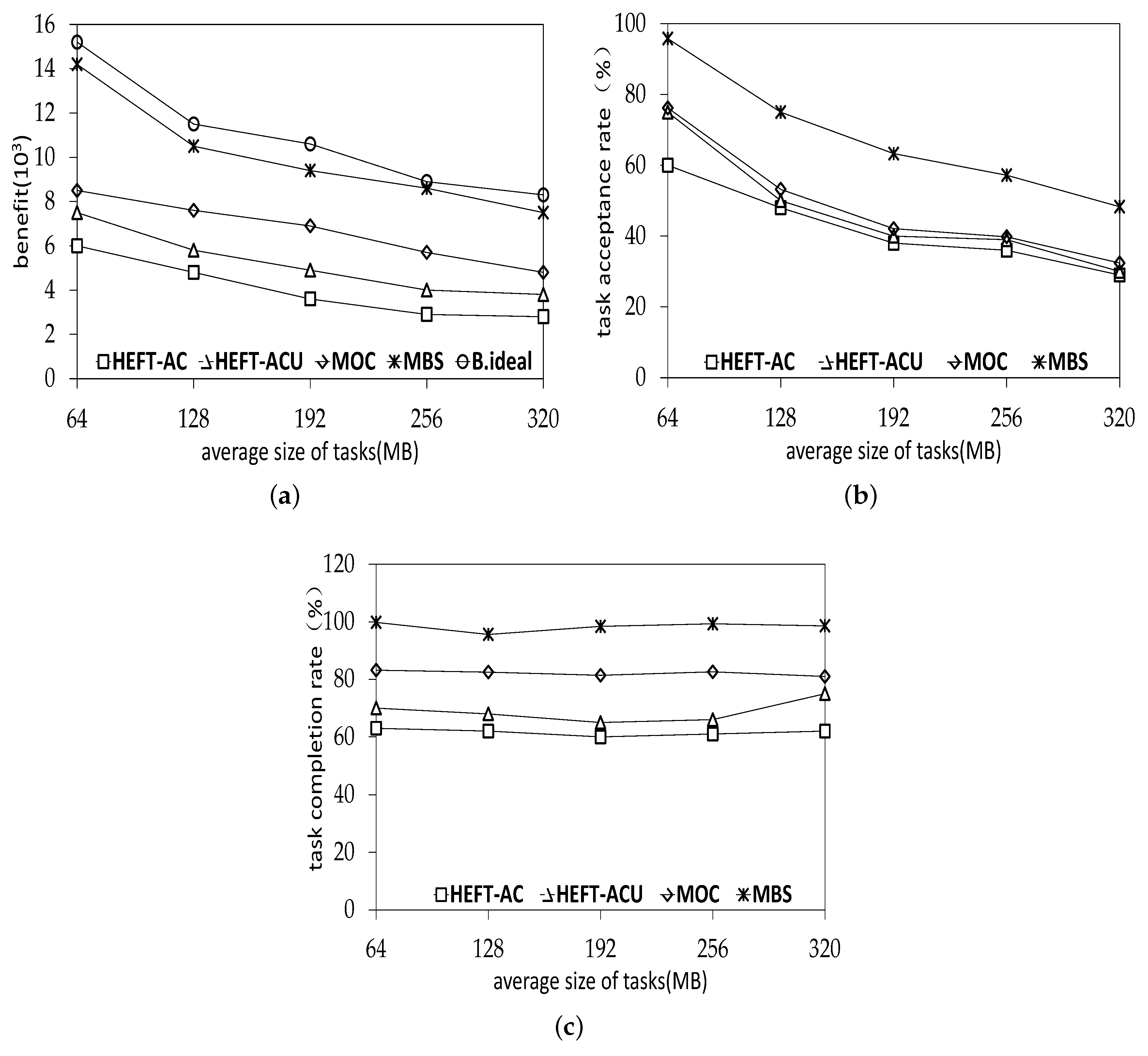

5.1.1. The Impact of the Average Size of Tasks

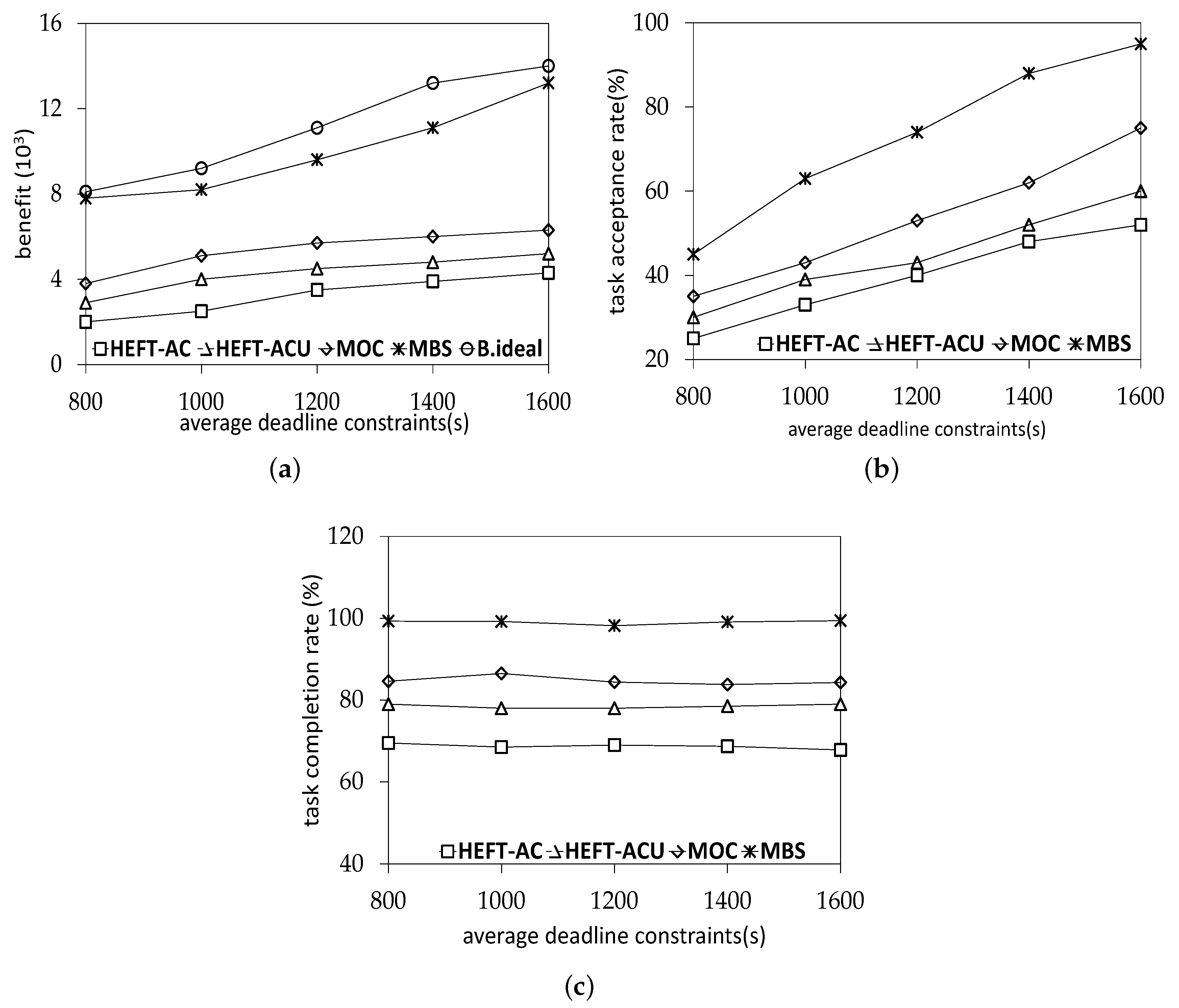

5.1.2. The Impact of the Average Deadline Constraints of Tasks

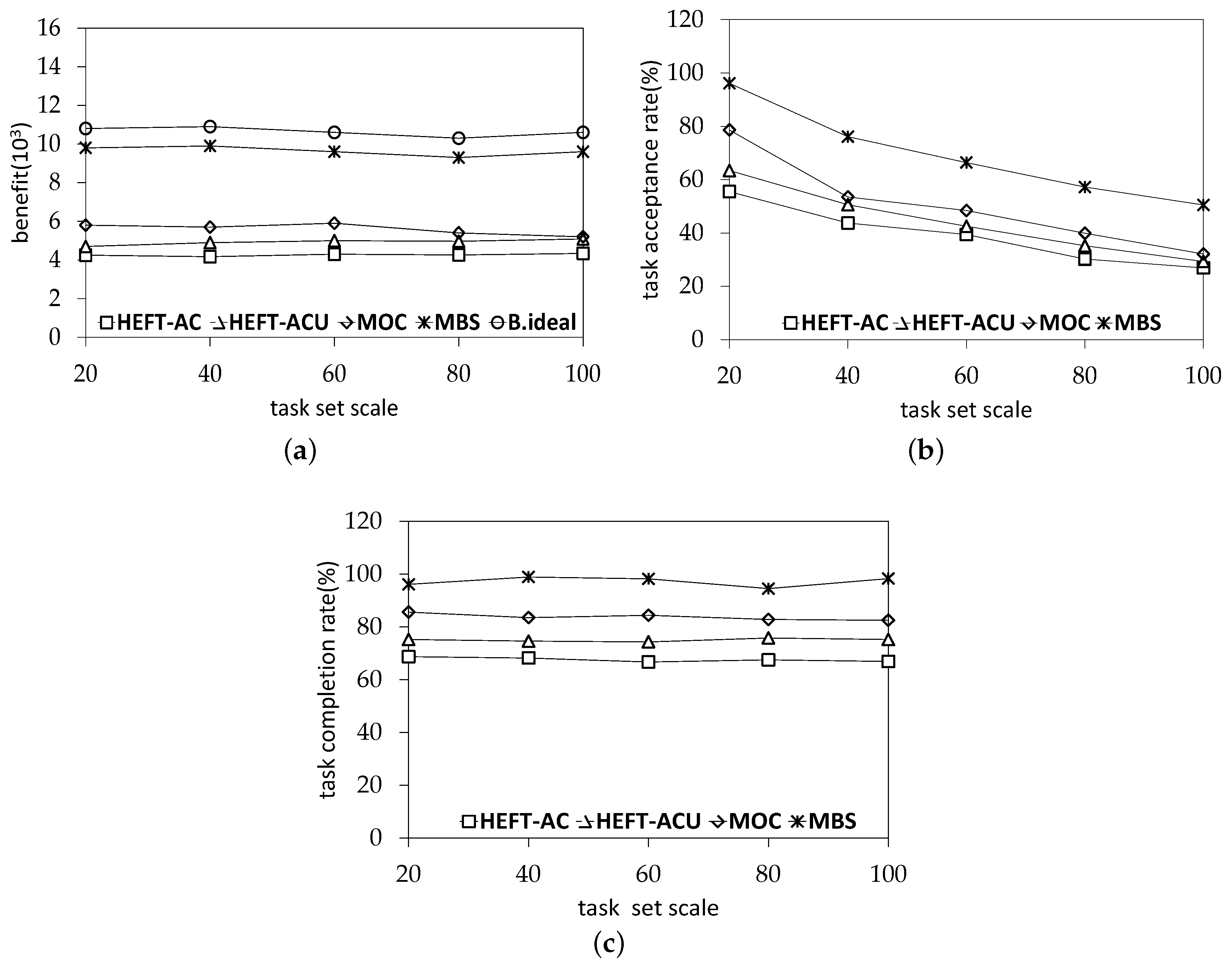

5.1.3. The Impact of the Task Set Scale

5.2. Experimental Results and the Analysis on Dynamic Task Sets

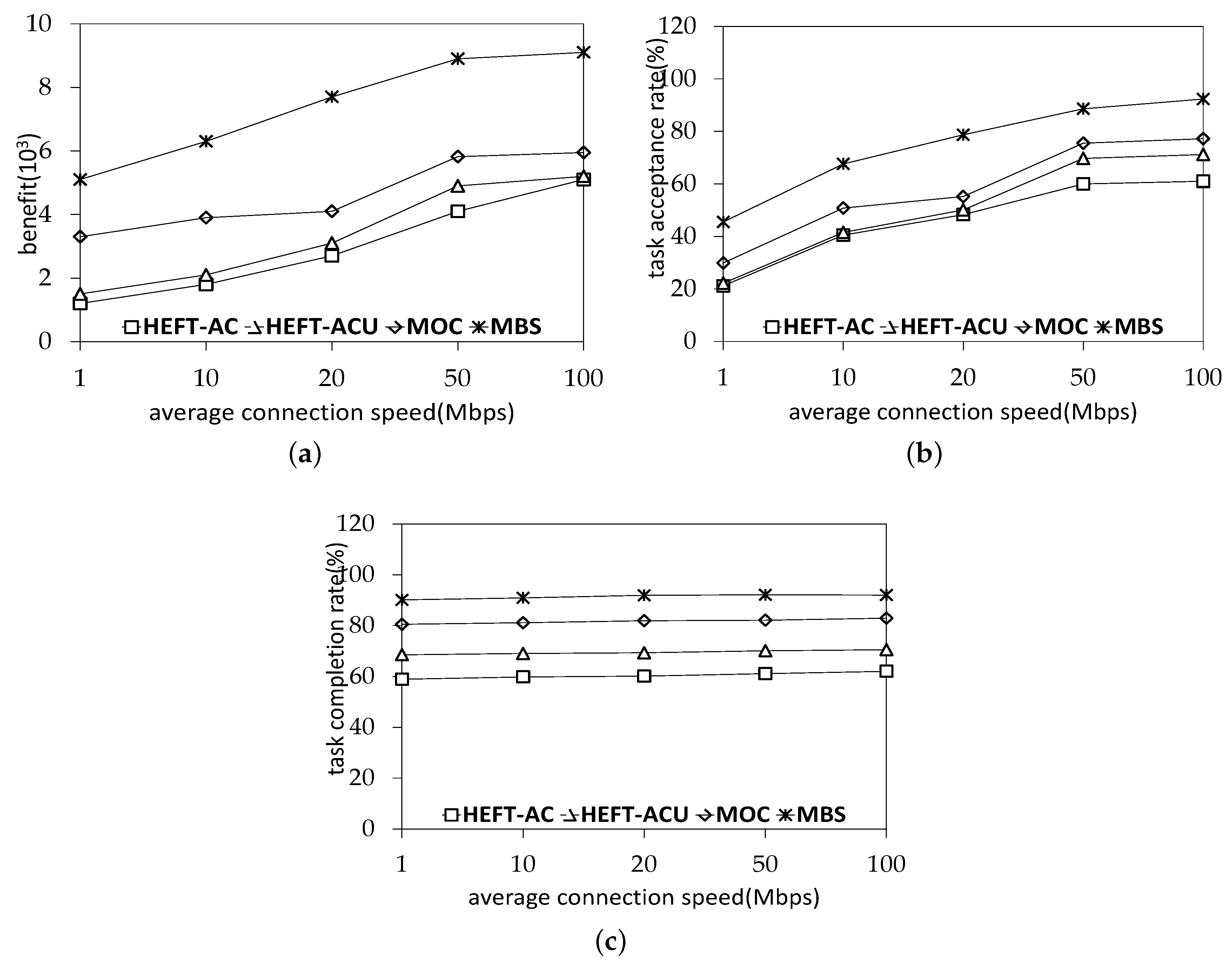

5.3. Experimental Results and the Analysis on Average Connection Speed

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Anderson, D.P. BOINC: A Platform for Volunteer Computing. arXiv 2019, arXiv:1903.01699. [Google Scholar] [CrossRef]

- Anderson, D.P.; Cobb, J.; Korpela, E.; Lebofsky, M.; Werthimer, D. SETI@home: An experiment in public-resource computing. Commun. ACM 2002, 45, 56–61. [Google Scholar] [CrossRef]

- Beberg, A.L.; Ensign, D.L.; Jayachandran, G.; Khaliq, S.; Pande, V.S. Folding@home: Lessons from Eight Years of Volunteer Distributed Computing. In Proceedings of the 2009 IEEE International Symposium on Parallel & Distributed Processing, Rome, Italy, 23–29 May 2009. [Google Scholar]

- Adambourdarios, C.; Wu, W.; Cameron, D.; Lancon, E.; Filipčič, A. ATLAS@Home: Harnessing Volunteer Computing for HEP; IOP Publishing: Bristol, UK, 2015. [Google Scholar]

- Jiang, J.C.; Wang, T.Q. An Operating System Architecture Design for Heterogeneous Multi-Core Processor Based on Multi-Master Model. Adv. Mater. Res. 2011, 187, 190–197. [Google Scholar] [CrossRef]

- Filep, L. Model for Improved Load Balancing in Volunteer Computing Platforms. In Proceedings of the European, Mediterranean, and Middle Eastern Conference on Information Systems, Limassol, Cyprus, 4–5 October 2018; pp. 131–143. [Google Scholar]

- Ostermann, S.; Iosup, A.; Yigitbasi, N.; Prodan, R.; Fahringer, T.; Epema, D. A Performance Analysis of EC2 Cloud Computing Services for Scientific Computing. In Proceedings of the International Conference on Cloud Computing, Bangalore, India, 21–25 September 2009; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Kondo, D.; Javadi, B.; Malecot, P.; Cappello, F.; Anderson, D. Cost-benefit analysis of Cloud Computing versus desktop grids. In Proceedings of the IEEE International Symposium on Parallel and Distributed Processing, Rome, Italy, 23–29 May 2009. [Google Scholar]

- Gridcoin. The Computation Power of a Blockchain Driving Science and Data Analysis. 2018. Available online: https://gridcoin.us/assets/img/whitepaper.pdf (accessed on 6 November 2019).

- Topcuoglu, H.; Hariri, S.; Wu, M.Y. Performance-effective and low-complexity task scheduling for heterogeneous computing. IEEE Trans. Parallel Distrib. Syst. 2002, 13, 260–274. [Google Scholar] [CrossRef]

- Ding, S.; Wu, J.; Xie, G.; Zeng, G. A hybrid heuristic-genetic algorithm with adaptive parameters for static task scheduling in heterogeneous computing system. In Proceedings of the 14th IEEE International Conference on Embedded Software And Systems, Sydney, Australia, 1–4 August 2017; pp. 761–766. [Google Scholar]

- Boveiri, H.R.; Khayami, R. Static Homogeneous Multiprocessor Task Graph Scheduling Using Ant Colony Optimization. Ksii Trans. Internet Inform. Syst. 2017, 11, 3046–3070. [Google Scholar]

- Zhou, N.; Qi, D.; Wang, X.; Zheng, S. A static task scheduling algorithm for heterogeneous systems based on merging tasks and critical tasks. J. Comput. Methods Sci. Eng. 2017, 17, 1–18. [Google Scholar] [CrossRef]

- Liu, T.; Liu, Y.; Song, P. DScheduler: Dynamic Network Scheduling Method for MapReduce in Distributed Controllers. In Proceedings of the IEEE International Conference on Parallel Distributed Systems, Wuhan, China, 13–16 December 2017. [Google Scholar]

- Anderson, D.P.; McLeod, J. Local scheduling for volunteer computing. In Proceedings of the 2007 IEEE International Parallel and Distributed Processing Symposium, Rome, Italy, 26–30 March 2007; pp. 1–8. [Google Scholar]

- Guler, H.; Cambazoglu, B.B.; Ozkasap, O. Task allocation in volunteer computing networks under monetary budget constraint. Peer Netw. Appl. 2015, 8, 938–951. [Google Scholar] [CrossRef]

- Ghafarian, T.; Javadi, B. Cloud-aware data intensive workflow scheduling on volunteer computing systems. Future Gener. Comput. Syst. 2015, 51, 87–97. [Google Scholar] [CrossRef]

- Miyakoshi, Y.; Yasuda, S.; Watanabe, K.; Fukushi, M.; Nogami, Y. Dynamic Job Scheduling Method Based on Expected Probability of Completion of Voting in Volunteer Computing. In Proceedings of the Second International Symposium on Computing and Networking, Hokkaido, Japan, 8–11 December 2015; pp. 2132–2140. [Google Scholar]

- Canon, L.C.; Chang, A.K.W.; Robert, Y.; Vivien, F. Scheduling Independent Stochastic Tasks Under Deadline and Budget Constraints. In Proceedings of the International Symposium on Computer Architecture and High Performance Computing, Lyon, France, 24–27 September 2018; pp. 33–40. [Google Scholar]

- Chuprat, S.; Salleh, S. A deadline-based algorithm for dynamic task scheduling with precedence constraints. In Proceedings of the Conference on Iasted International Multi-Conference: Parallel and Distributed Computing and Networks, Innsbruck, Austria, 13–15 February 2007; ACTA Press: Calgary, AB, Canada, 2007. [Google Scholar]

- Yin, P.Y.; Yu, S.S.; Wang, P.P.; Wang, Y.T. Task allocation for maximizing reliability of a distributed system using hybrid particle swarm optimization. J. Syst. Softw. 2007, 80, 724–735. [Google Scholar] [CrossRef]

- Essafi, A.; Trystram, D.; Zaidi, Z. An efficient algorithm for scheduling jobs in volunteer computing platforms. In Proceedings of the Parallel Distributed Processing Symposium Workshops (IPDPSW), Phoenix, AZ, USA, 19–23 May 2014; pp. 68–76. [Google Scholar]

- Salehi, M.A.; Smith, J.; Maciejewski, A.A. Stochastic-based robust dynamic resource allocation for independent tasks in a heterogeneous computing system. J. Parallel Distrib. Comput. 2016, 97, 96–111. [Google Scholar] [CrossRef]

- Xu, L.; Qiao, J.; Lin, S.; Qi, R. Task Assignment Algorithm Based on Trust in Volunteer Computing Platforms. Information 2019, 10, 244. [Google Scholar] [CrossRef]

- Kang, Q.; He, H.; Wei, J. An effective iterated greedy algorithm for reliability-oriented task allocation in distributed computing systems. J. Parallel Distrib. Comput. 2013, 73, 1106–1115. [Google Scholar] [CrossRef]

- Omara, F.A.; Arafa, M.M. Genetic algorithms for task scheduling problem. J. Parallel Distrib. Comput. 2010, 70, 13–22. [Google Scholar] [CrossRef]

- Wu, A.S.; Yu, H.; Jin, S.; Lin, K.; Schiavone, G. An incremental genetic algorithm approach to multiprocessor scheduling. IEEE Trans. Paral. Distrib. Syst. 2004, 15, 824–834. [Google Scholar] [CrossRef]

- Page, A.J.; Naughton, T.J. Framework for Task Scheduling in Heterogeneous Distributed Computing Using Genetic Algorithms. Artif. Intell. Rev. 2005, 24, 415–429. [Google Scholar] [CrossRef]

- Chai, S.; Li, Y.; Wang, J.; Wu, C. A List Simulated Annealing Algorithm for Task Scheduling on Network-on-Chip. J. Comput. 2014, 9, 176–182. [Google Scholar] [CrossRef]

- Li, J.; Luo, Z.; Ferry, D.; David, F.; Agrawal, K.; Gill, D.; Lu, C. Global EDF scheduling for parallel real-time tasks. Real Time Syst. 2015, 51, 395–439. [Google Scholar] [CrossRef]

- Zhou, N.; Qi, D.; Wang, X.; Zheng, Z.; Lin, W. A list scheduling algorithm for heterogeneous systems based on a critical node cost table and pessimistic cost table. Concurr. Comput. Pract. Exp. 2017, 29, 1–11. [Google Scholar] [CrossRef]

- Liu, W.; Li, H.; Du, W.; Shi, F. Energy-Aware Task Clustering Scheduling Algorithm for Heterogeneous Clusters. In Proceedings of the IEEE/ACM International Conference on Green Computing and Communications, Chengdu, China, 4–5 August 2011; ACM: New York, NY, USA, 2011; pp. 34–37. [Google Scholar]

- Maurya, A.K.; Tripathi, A.K. ECP: A novel clustering-based technique to schedule precedence constrained tasks on multiprocessor computing systems. Computing 2019, 101, 1015–1039. [Google Scholar] [CrossRef]

- Kanemitsu, H.; Hanada, M.; Nakazato, H. Clustering-Based Task Scheduling in a Large Number of Heterogeneous Processors. IEEE Trans. Parallel Distrib. Syst. 2016, 27, 3144–3157. [Google Scholar] [CrossRef]

- Tang, X.; Li, K.; Liao, G.; Li, R. List scheduling with duplication for heterogeneous computing systems. J. Parallel Distrib. Comput. 2010, 70, 323–329. [Google Scholar] [CrossRef]

- Bansal, S.; Kumar, P.; Singh, K. An improved duplication strategy for scheduling precedence constrained graphs in multiprocessor systems. IEEE Trans. Parallel Distrib. Syst. 2003, 14, 533–544. [Google Scholar] [CrossRef]

- Hu, M.; Luo, J.; Wang, Y.; Veeravalli, B. Adaptive Scheduling of Task Graphs with Dynamic Resilience. IEEE Trans. Comput. 2017, 66, 17–23. [Google Scholar] [CrossRef]

- Nayak, S.K.; Padhy, S.K.; Panigrahi, S.P. A novel algorithm for dynamic task scheduling. Future Gener. Comput. Syst. 2012, 28, 709–717. [Google Scholar] [CrossRef]

- Juarez, F.; Ejarque, J.; Badia, R.M. Dynamic energy-aware scheduling for parallel task-based application in cloud computing. Future Gener. Comput. Syst. 2018, 78, 257–271. [Google Scholar] [CrossRef]

- Andrew, J.; Thomas, J. Dynamic Task Scheduling using Genetic Algorithms for Heterogeneous Distributed Computing. In Proceedings of the 19th International Parallel and Distributed Processing Symposium (IPDPS 2005), Denver, CO, USA, 4–8 April 2005. [Google Scholar]

- Estrada, T.; Flores, D.A.; Taufer, M.; Teller, P.J.; Kerstens, A.; Anderson, D.P. The Effectiveness of Threshold-Based Scheduling Policies in BOINC Projects. In Proceedings of the IEEE International Conference on E-science and Grid Computing, Auckland, New Zealand, 24–27 October 2006; IEEE Computer Society: Washington, DC, USA, 2006. [Google Scholar]

- Xu, L.; Qiao, J.; Lin, S.; Zhang, W. Dynamic Task Scheduling Algorithm with Deadline Constraint in Heterogeneous Volunteer Computing Platforms. Future Internet 2019, 11, 121. [Google Scholar] [CrossRef]

- Sakai, T.; Fukushi, M. A Reliable Volunteer Computing System with Credibility-based Voting. J. Inform. Process. 2016, 24, 266–274. [Google Scholar] [CrossRef][Green Version]

- Bazinet, A.L.; Cummings, M.P. Subdividing Long-Running, Variable-Length Analyses Into Short, Fixed-Length BOINCWorkunits. J. Grid. Comput. 2016, 14, 1–13. [Google Scholar] [CrossRef]

- Javadi, B.; Kondo, D.; Vincent, J.M. Discovering statistical models of availability in large distributed systems: An empirical study of seti@ home. IEEE Trans. Parallel Distrib. Syst. 2011, 22, 1896–1903. [Google Scholar] [CrossRef]

| Notation | Definition |

|---|---|

| T | The set of tasks |

| The acceptable task set | |

| The ith task of the set T | |

| The number of tasks in the set T | |

| N | The set of nodes |

| The jth node of the set N | |

| The number of nodes in the set N | |

| The node contributes hours | |

| The trust value of the node | |

| The completion time needed of | |

| The benefit of completing within its deadline | |

| The deadline of | |

| B | The total benefit of |

| The ideal total benefit of | |

| The compensation coefficient | |

| The confidence interval of the node | |

| The ratio of the actual availability time of node n to its expected availability time | |

| The probability of different ratios | |

| The total time assigned to the node |

| Parameter | Default | Value Range |

|---|---|---|

| Average size of tasks (MB) | 128 | 64–320 |

| Task set scale | 50 | 20–100 |

| Average deadline constraints(s) | 1000 | 800–1600 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, L.; Qiao, J.; Lin, S.; Wang, X. Research on the Task Assignment Problem with Maximum Benefits in Volunteer Computing Platforms. Symmetry 2020, 12, 862. https://doi.org/10.3390/sym12050862

Xu L, Qiao J, Lin S, Wang X. Research on the Task Assignment Problem with Maximum Benefits in Volunteer Computing Platforms. Symmetry. 2020; 12(5):862. https://doi.org/10.3390/sym12050862

Chicago/Turabian StyleXu, Ling, Jianzhong Qiao, Shukuan Lin, and Xiaowei Wang. 2020. "Research on the Task Assignment Problem with Maximum Benefits in Volunteer Computing Platforms" Symmetry 12, no. 5: 862. https://doi.org/10.3390/sym12050862

APA StyleXu, L., Qiao, J., Lin, S., & Wang, X. (2020). Research on the Task Assignment Problem with Maximum Benefits in Volunteer Computing Platforms. Symmetry, 12(5), 862. https://doi.org/10.3390/sym12050862