Framework of Specific Description Generation for Aluminum Alloy Metallographic Image Based on Visual and Language Information Fusion

Abstract

1. Introduction

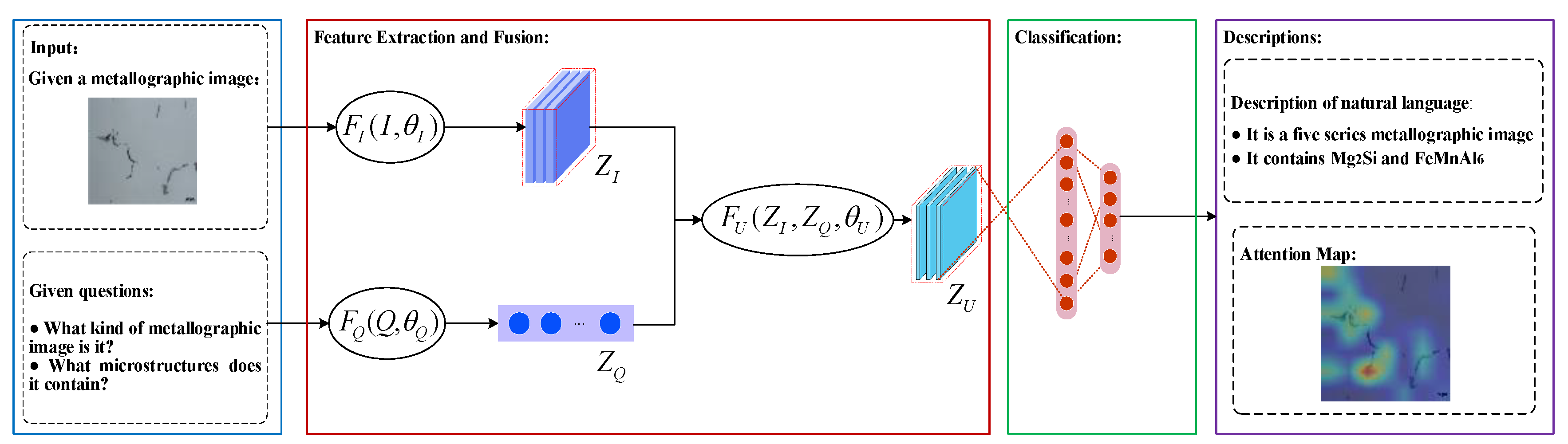

2. The Proposed Methods

2.1. Feature Extraction and Fusion Scheme

| Algorithm 1: The fusion strategy of latent visual and text features |

| Inputs: Latent visual feature , text feature and network parameter . Output: Fused feature . Step 1: Compute by Equations (3)–(5). Step 2: Compute by Equation (6). Step 3: Compute by Equation (7). Step 4: Compute by Equation (8). |
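The equations that Algorithm 1 refers to are not reproduced in this section, so the following is only a generic illustration of how a visual feature map and a text feature can be fused with soft attention. The dot-product scoring, the concatenation step, and the names `softmax` and `fuse` are assumptions made for this sketch. They are not the paper's Equations (3)–(8), and no learned network parameter is modeled.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(visual, text):
    """Fuse region-wise visual features with one text feature (illustrative only).

    visual: list of region vectors, each of length d
    text:   vector of length d
    Returns the fused vector (attended visual feature + text) and the weights.
    """
    # Assumption: relevance of a region is its dot product with the text feature.
    scores = [sum(v * t for v, t in zip(region, text)) for region in visual]
    weights = softmax(scores)
    d = len(text)
    # Attention-weighted sum of the region vectors.
    attended = [sum(w * region[k] for w, region in zip(weights, visual))
                for k in range(d)]
    # Assumption: fusion by simple concatenation.
    return attended + list(text), weights

fused, weights = fuse([[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0])
```

In this toy input, the first region aligns with the text feature and therefore receives the larger attention weight.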

2.2. Classification Method

| Algorithm 2: Generation method of the language description for given aluminum alloy metallographic image |

| Inputs: Training dataset , new aluminum alloy metallographic image and question . Output: The description . Step 1: Initialization: |

3. Experimental Results

3.1. Experimental Dataset

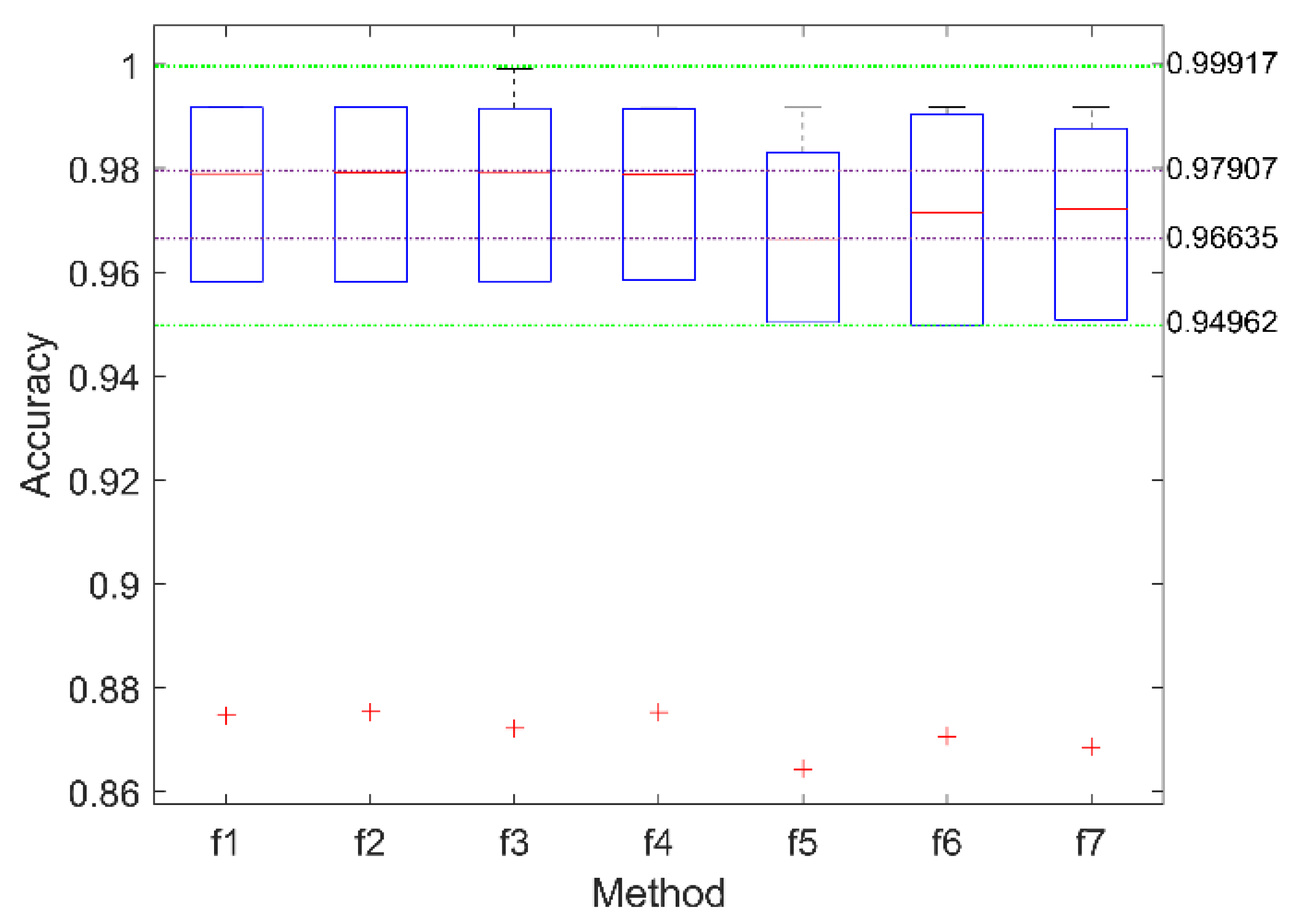

3.2. Performance Comparison

- ResNet152: 50 residual blocks (each residual block consists of three convolutional layers), two convolutional layers, and five pooling layers.

- ResNet34: 16 residual blocks (each residual block consists of two convolutional layers), two convolutional layers, and five pooling layers.

- CNN: four convolutional layers and four pooling layers.

- LSTM1024: each LSTM unit consists of three gates and one memory cell; the output dimension is 1024.

- LSTM256: same structure as LSTM1024, but with an output dimension of 256.

- TextCNN: 100-dimensional word embedding, followed by three convolutional layers and three pooling layers that operate independently of one another.

- FastText: 100-dimensional word embedding and two linear layers.
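The block counts listed above are consistent with the network names. A quick arithmetic check is sketched here; the helper `weighted_layers` is a hypothetical name introduced only for illustration, and the pooling layers are left out of the count on the assumption that the number in each name refers to weighted layers only.

```python
def weighted_layers(residual_blocks, convs_per_block, extra_layers=2):
    """Count layers as blocks * convolutions per block + extra layers.

    extra_layers defaults to the two additional convolutional layers
    mentioned in the list for both ResNet variants.
    """
    return residual_blocks * convs_per_block + extra_layers

resnet152_layers = weighted_layers(50, 3)  # 50 blocks of three convolutions
resnet34_layers = weighted_layers(16, 2)   # 16 blocks of two convolutions

print(resnet152_layers, resnet34_layers)
```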

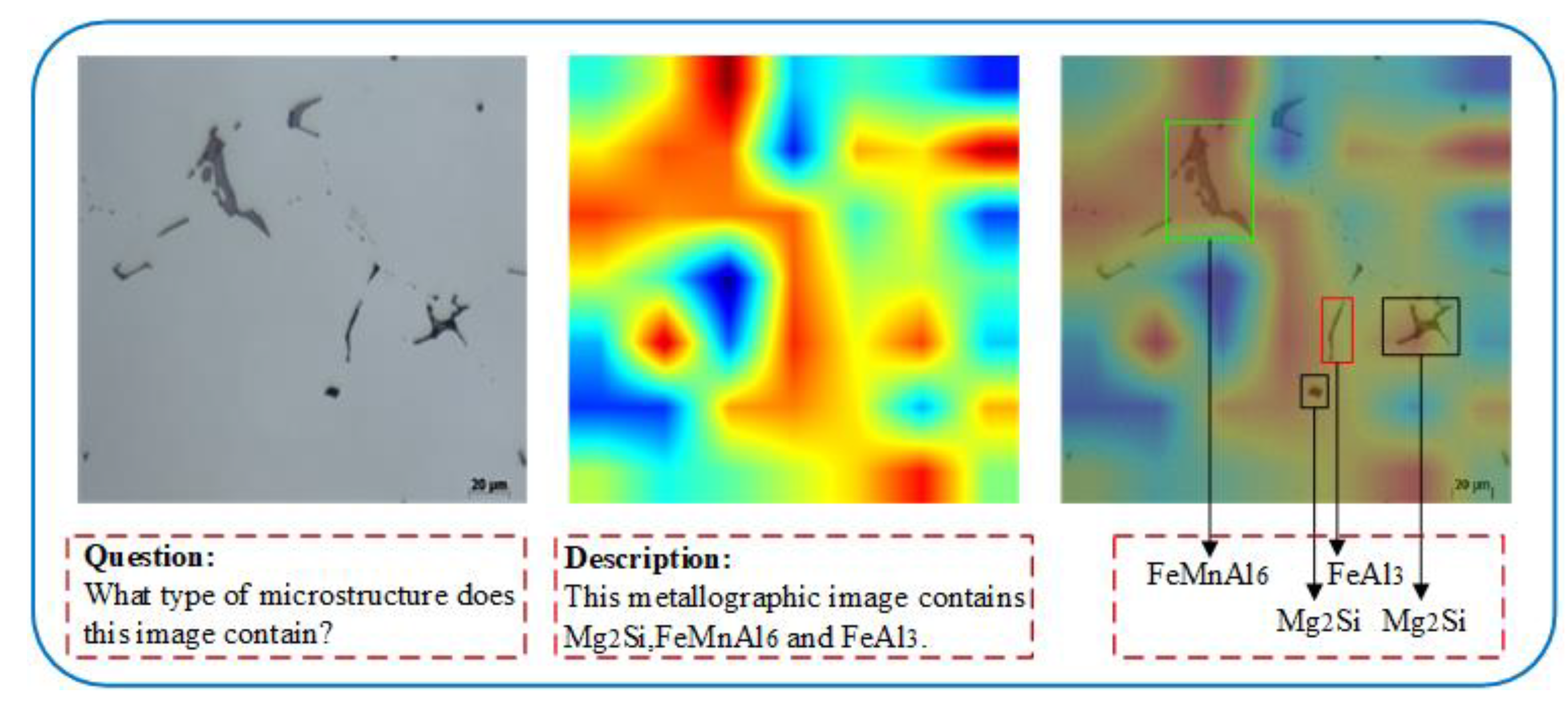

3.3. Attention Map Analysis

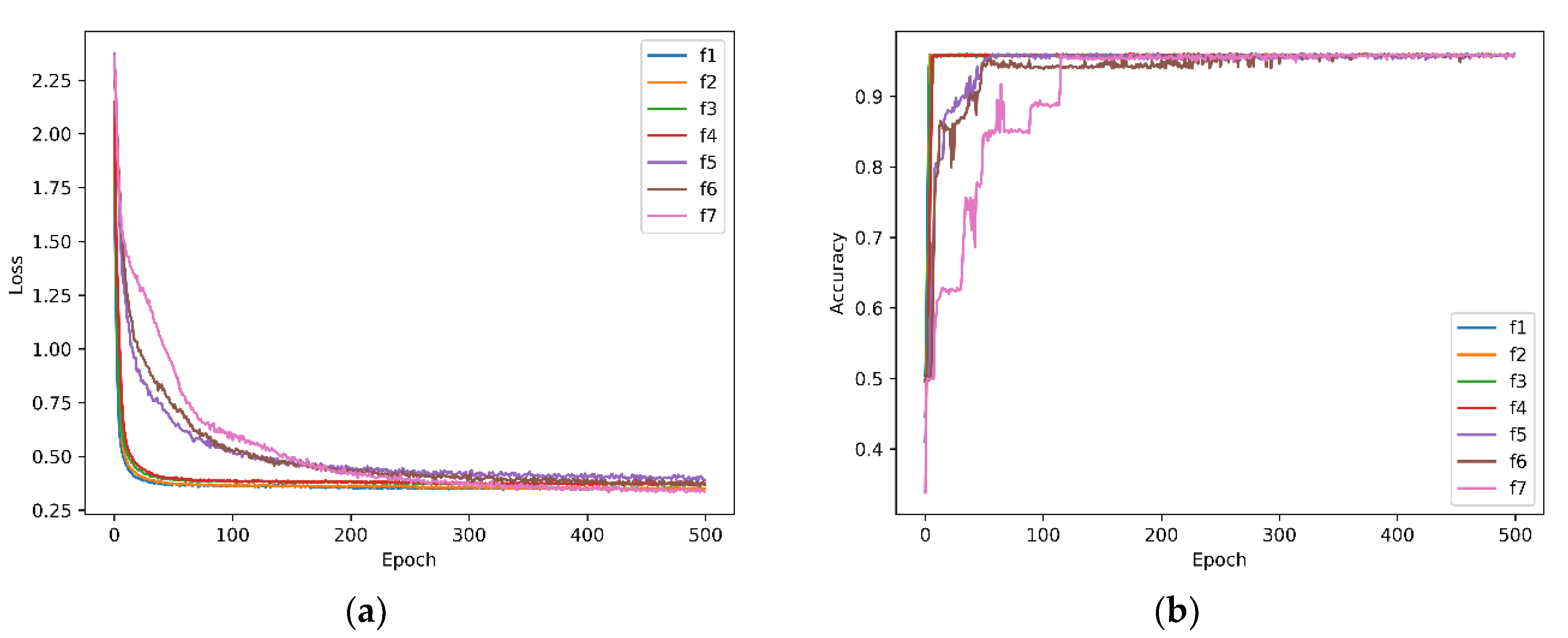

3.4. Convergence Analysis

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Questions (Q) |

| Descriptions (C) |

| Methods | ||||||||

|---|---|---|---|---|---|---|---|---|

| ResNet152 | ✓ | ✓ | ||||||

| ResNet34 | ✓ | ✓ | ||||||

| CNN | ✓ | ✓ | ✓ | |||||

| LSTM1024 | ✓ | ✓ | ||||||

| LSTM256 | ✓ | ✓ | ✓ | |||||

| TextCNN | ✓ | |||||||

| FastText | ✓ | |||||||

| ACC | 1 | 2 | 3 | 4 | 5 | 6 | Average |
|---|---|---|---|---|---|---|---|
| | 0.87461 | 0.99168 | 0.99179 | 0.99140 | 0.95819 | 0.96650 | 0.96236 |
| | 0.87538 | 0.99167 | 0.99179 | 0.99173 | 0.95808 | 0.96647 | 0.96252 |
| | 0.87216 | 0.99123 | 0.99152 | 0.99917 | 0.95824 | 0.96680 | 0.96319 |
| | 0.87527 | 0.99146 | 0.99140 | 0.99157 | 0.95841 | 0.96630 | 0.96241 |
| | 0.86434 | 0.99191 | 0.97439 | 0.98305 | 0.95832 | 0.95028 | 0.95371 |
| | 0.87059 | 0.99180 | 0.99030 | 0.98471 | 0.95825 | 0.94962 | 0.95754 |
| | 0.86858 | 0.99168 | 0.98622 | 0.98766 | 0.95796 | 0.95076 | 0.95714 |
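The last value in each ACC row appears to be the arithmetic mean of the six values before it. A minimal check on the first data row is sketched below; the variable names are illustrative, and the tolerance of half a unit in the fifth decimal place is an assumption reflecting the five-decimal rounding used in the table.

```python
from statistics import mean

# First data row of the ACC table: columns 1 to 6, then the listed average.
row = [0.87461, 0.99168, 0.99179, 0.99140, 0.95819, 0.96650]
listed_average = 0.96236

computed_average = mean(row)
# Allow for rounding of the listed value to five decimal places.
matches = abs(computed_average - listed_average) < 5e-6

print(f"{computed_average:.5f}", matches)
```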

| Training Time | 1 | 2 | 3 | 4 | 5 | 6 | Average |
|---|---|---|---|---|---|---|---|
| | 27 min 25.9 s | 35 min 0.6 s | 34 min 4.4 s | 34 min 5.6 s | 35 min 17.3 s | 34 min 35.7 s | 33 min 4.8 s |
| | 24 min 12.4 s | 33 min 51.1 s | 26 min 28.1 s | 32 min 3.6 s | 32 min 46.8 s | 33 min 2.2 s | 30 min 24.0 s |
| | 16 min 12.4 s | 17 min 14.6 s | 17 min 49.1 s | 16 min 47.8 s | 16 min 52.6 s | 16 min 12.5 s | 16 min 51.5 s |
| | 14 min 32.5 s | 14 min 26.8 s | 15 min 58.6 s | 14 min 40.2 s | 14 min 14.6 s | 14 min 30.6 s | 14 min 43.9 s |
| | 48 min 56.6 s | 49 min 4.2 s | 49 min 17.5 s | 44 min 23.3 s | 48 min 4.1 s | 47 min 40.5 s | 47 min 54.6 s |
| | 35 min 17.4 s | 38 min 44.2 s | 40 min 2.1 s | 50 min 12.1 s | 45 min 37.7 s | 45 min 4.3 s | 40 min 57.1 s |
| | 46 min 9.6 s | 40 min 31.5 s | 41 min 56.0 s | 41 min 56.9 s | 41 min 16.4 s | 38 min 50.9 s | 41 min 46.9 s |
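Averaging durations written as "min" and "s" is easiest after converting them to seconds. The sketch below does this for the third data row of the training-time table; the helpers `to_seconds` and `to_min_s` are hypothetical names introduced for illustration, and the assumption is that the Average column is a plain mean of columns 1 to 6.

```python
import re

def to_seconds(text):
    """Parse a duration such as '16 min 12.4 s' into seconds."""
    match = re.fullmatch(r"\s*(\d+)\s*min\s*([\d.]+)\s*s\s*", text)
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    return int(match.group(1)) * 60 + float(match.group(2))

def to_min_s(seconds):
    """Format seconds back into the table's 'M min S.s s' style."""
    minutes, rest = divmod(seconds, 60)
    return f"{int(minutes)} min {rest:.1f} s"

# Third data row of the training-time table, columns 1 to 6.
row = ["16 min 12.4 s", "17 min 14.6 s", "17 min 49.1 s",
       "16 min 47.8 s", "16 min 52.6 s", "16 min 12.5 s"]

mean_seconds = sum(to_seconds(t) for t in row) / len(row)
mean_text = to_min_s(mean_seconds)
print(mean_text)
```

For this row the result agrees with the listed average of 16 min 51.5 s.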

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, D.; Liu, Y.; Liu, S.; Liu, F.; Chen, Y. Framework of Specific Description Generation for Aluminum Alloy Metallographic Image Based on Visual and Language Information Fusion. Symmetry 2020, 12, 771. https://doi.org/10.3390/sym12050771
