1. Introduction

Symmetry is an important, if not the most important, predictor of aesthetic evaluation for many types of images. It can be found everywhere [1,2,3] and is often considered relevant to the beauty of an object [4,5,6,7,8,9]. Symmetry is also one of the classical Gestalt principles that enable perceptual grouping and determine “good shape” [10,11]. Symmetry can be detected rapidly, especially vertical (bilateral) symmetry [2,3,12,13,14,15]. On the other hand, symmetry has a rather low standing in art, where it is considered at best one of many factors influencing aesthetic evaluation. It has also been assumed that preference for symmetry decreases with art training [16,17,18,19]. Nevertheless, symmetry is clearly highly relevant to the aesthetics of abstract patterns [7,20,21,22,23,24,25,26,27,28,29,30,31] and human faces [32,33,34,35,36,37,38,39].

Many researchers have claimed that symmetry is the most important predictor of aesthetic evaluation in abstract patterns [7,21,22,23,25,27,30,31,40]. However, results concerning the aesthetic appeal of small deviations from perfect symmetry are somewhat mixed. McManus [17] argued that while symmetry is often linked to beauty, it can also be perceived as sterile and rigid. Consequently, deviations from symmetry are relatively common in art. Specifically, he found that almost 30% of the investigated polyptychs from the Italian Renaissance contain a form of deliberately broken, and thus imperfect, symmetry. Although this does not necessarily mean that such deviations increased aesthetic appeal (they probably also carried symbolic meaning), McManus concluded from this and other examples that some asymmetry can generate interest and excitement in artworks. For abstract patterns, the results are not entirely clear either. While some researchers found that small deviations from symmetry are disliked [27], others, using an unusual gaze-driven evolutionary algorithm that made participants produce stimuli instead of only evaluating them, found that small deviations from perfect symmetry can at least be tolerated [41]. These mixed findings likely result from differences in the types of stimuli and in the evaluation (or production) methods applied. The human face is a special case of a symmetric object, whose evaluations of beauty and attractiveness are thought to be deeply rooted in biology and evolution. Symmetry preference for human faces is complex and has been explained in different ways. First, symmetry can be interpreted as a biological signal of good genes and good health [42,43,44]. Second, symmetry preference could simply be a by-product of efficient object recognition processes [45], i.e., of fluent processing [46,47,48]. However, some researchers [49] found a gender difference in symmetry preference for neutral stimuli and concluded that perceptual efficiency is probably not sufficient to explain preference for symmetry in objects. There is also some evidence that natural, slightly asymmetric faces might be preferred over (artificial) perfectly symmetric faces [50,51,52]. Nevertheless, the two explanations are not necessarily in conflict; both might be valid [38], and both could be interpreted as biological explanations of symmetry preference in general.

Symmetry is also linked to the concept of complexity, as symmetry is a form of order and redundancy that is thought to reduce perceived complexity [19,28,46,53,54]; thus, it can also be seen as an aspect of visual complexity. There is still some debate about the influence of complexity on aesthetic evaluation. While Berlyne [55,56] suggested an inverted U-shaped relationship between complexity and pleasingness (i.e., medium complexity is preferred), recent research has questioned the generality of this assumption [21,53,57]. The actual relationship between complexity and preference likely depends on the type of images used, the range of complexity, individual differences, and other factors. Furthermore, art training might also alter the influence of complexity on aesthetic processing [58]. However, in this paper we do not focus on the influence of visual complexity on aesthetic evaluation and therefore applied stimuli that were matched in perceived visual complexity.

Another important factor known to influence aesthetic evaluation is art expertise, an attribute of the participants (or viewers) rather than of the stimuli (or artworks/images). Aesthetic processing differs between laypersons and art experts [5,6,19,58,59,60,61]. For instance, following the assumptions of the model of aesthetic appreciation and aesthetic judgments [5,6], it can be assumed that higher stages of aesthetic processing, such as cognitive mastering, are reached only by art experts [62]. Furthermore, art expertise has been found to modulate even neural activity during aesthetic judgments [63]. Specifically, experts have domain-specific knowledge and interpret artworks more in relation to artistic style, whereas laypersons refer more to personal experiences and feelings [64]. Art experts also often rate artworks higher than art novices do. More differences between art experts and novices were found in cognition-oriented judgments, such as beauty and liking, than in affective judgments, such as valence and arousal [65]. While emotions play an important role in art appreciation in general, non-experts show higher correlations between emotions and understanding than experts [66]. In contrast, art experts show attenuated reactions to emotionally valenced art [67]. Furthermore, experts often find art more interesting and easier to understand (or less confusing), particularly abstract or complex art [58,68]. Interestingly, degrading art images (from figurative to abstract or from color to black-and-white) seems to have a stronger negative effect on laypersons than on experts; thus, artistic taste appears to change with artistic training [69]. It was also found that the level of abstractness of paintings influences the aesthetic judgments and emotional valence of laypersons but not of experts: laypersons’ aesthetic and emotional ratings were highest for representational and lowest for abstract paintings, whereas experts’ ratings were independent of abstraction level [70]. These results were confirmed in a recent study [71], which found that experts rated abstract artworks as more interesting and more beautiful than representational artworks. While non-experts strongly distinguished between abstract and representational artworks, experts appraised these two types of art similarly. It has also been suggested that symmetry preference decreases with art training [16,18,19]. Thus, level of abstraction, and to a lesser extent symmetry, seem to be important properties of artworks that are processed differently by art experts and laypersons.

In the present study, we used abstract black-and-white patterns as stimuli, similar to those applied by Gartus and Leder [27,28]. While symmetry is a known strong predictor of aesthetic appreciation, it can be assumed that symmetry preference also differs between art experts and laypersons [17,19]. A recent study [18] directly compared preference for symmetry in art experts and laypersons. Consistent with many previous studies, symmetrical patterns were generally preferred over asymmetrical patterns. Moreover, implicit evaluation (Implicit Association Test, IAT) revealed no difference between art experts and laypersons in their preference for symmetrical over asymmetrical patterns. However, when asked to rate the patterns explicitly, experts gave higher beauty ratings to asymmetrical patterns, although they still rated symmetrical patterns higher than asymmetrical ones. Another recent study [16], employing only explicit beauty ratings, even found completely opposite preferences in art experts and laypersons: experts preferred asymmetric over symmetric patterns, whereas laypersons showed the reverse preference.

So, what makes the difference between an art expert and a layperson regarding aesthetic preferences? While some of the differences involve the interplay of cognitive and emotional processing [67], we also assume another distinction that is more related to visual and contextual processing. For instance, some authors [72] suggested that the aesthetic preferences of laypersons evolved through direct natural selection, whereas the aesthetic preferences of art experts are also selected indirectly via an ongoing process of coevolution based on prestige-driven social learning. Thus, experts’ appreciation of artworks is based more on the prestige of the associated context and on admiration of the artist. This obviously requires some knowledge, which is acquired during the intensive study of art and art history. This could explain why modern art (which does not always appeal to the senses as more traditional or popular art does) is more likely to be appreciated by art experts, whereas laypersons are more attracted by what is sometimes called “easy beauty” [73]. As discussed above, our visual system has a preference for mirror (bilateral) symmetry [3]. Thus, symmetry could possibly be interpreted as a form of naturally evolved “easy beauty”, which is less appreciated by art experts. Furthermore, it is known that laypersons prefer representational over abstract art [70], while experts tend to show the reverse preference [71]. In the same way as for symmetry, one could argue that our brain and visual system evolved to rapidly recognize the gist of a visual scene [74,75]; thus, representational art could again be interpreted as a form of “easier beauty” compared with abstract art.

Because of the importance of level of abstraction in aesthetic evaluation discussed above, we wanted to use not only abstract patterns but also more representational patterns in this study. To this end, we employed “abstract” patterns similar to those used in Gartus and Leder [27,28]. However, already during the generation of these patterns, we had observed that a surprisingly large number of patterns with vertical (bilateral) symmetry could be perceived as (human or animal) faces. While in our previous studies we excluded such “faces” precisely because they are no longer abstract patterns in the full sense of the word (i.e., they represent objects of the real world, namely faces), in the present study we deliberately included them in our set of stimuli. Thus, the main factors investigated in this study are the stimulus-dependent factors level of abstractness (or “face-likeness”) and degree of symmetry, plus the participant-dependent factor art expertise. In addition to fully symmetric and asymmetric patterns, we also included stimuli that deviated only slightly from full symmetry (“broken” symmetric patterns), as this factor showed a strong influence on aesthetic evaluation and perceived visual complexity in previous studies [27,28].

During the experiment, we also applied a mood questionnaire [76] and a questionnaire measuring need for cognitive closure [77] to be able to control for these two factors. We assumed that positive mood would generally increase ratings and that need for closure would increase ratings specifically for symmetric patterns, as it is also related to a preference for order. Furthermore, an art expertise questionnaire [78] was administered to obtain an objective measure of art expertise, a major factor in our study.

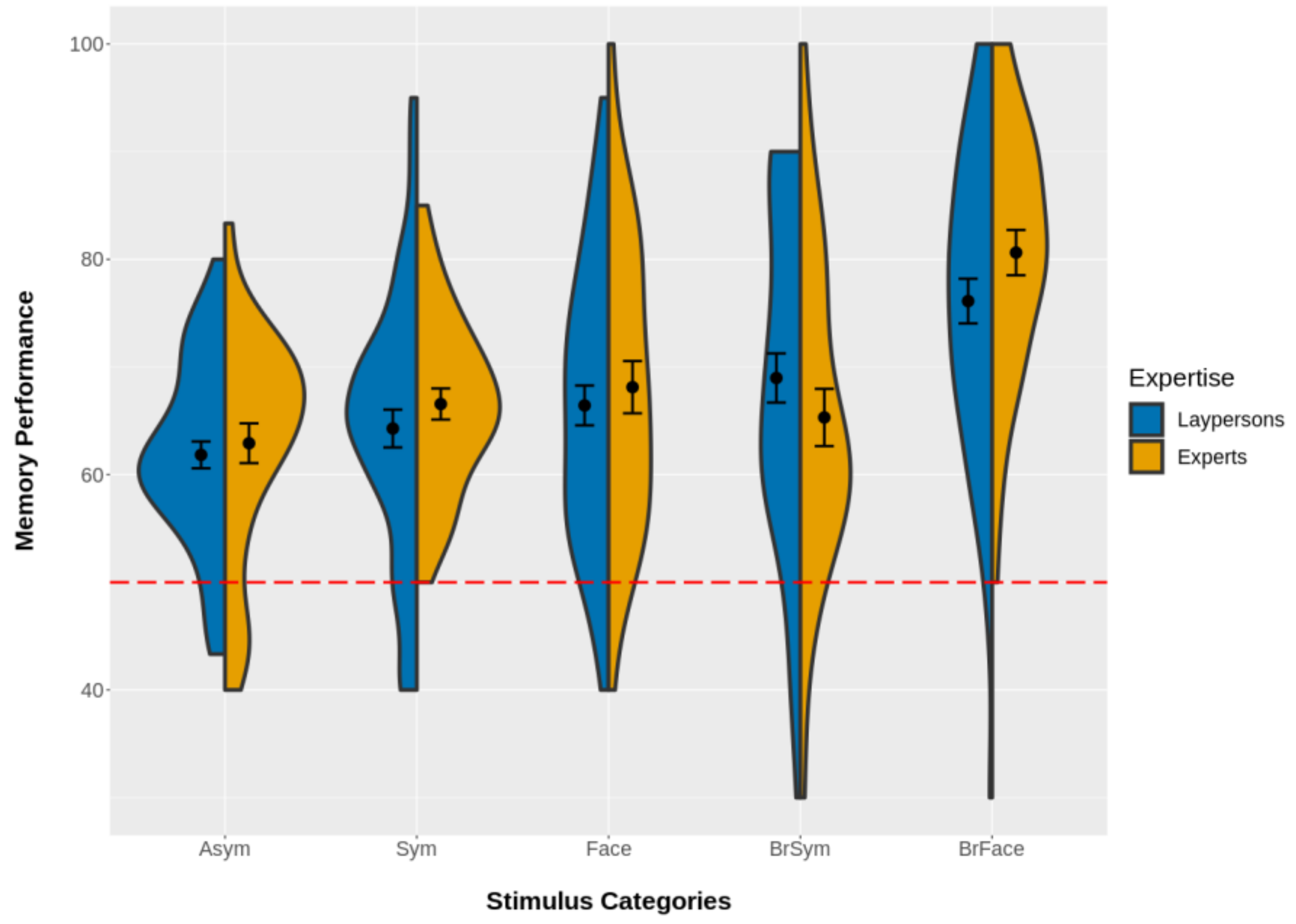

In sum, the main hypotheses of the present study are that symmetry is generally preferred over asymmetry (or broken symmetry), but that this effect should be less pronounced (or even reversed) for art experts. Also, representational (“face-like”) patterns should be rated higher by laypersons than by art experts, because laypersons prefer representational over abstract artworks. We expected these response patterns for both rating scales applied, liking and interest. Furthermore, art experts may have developed sophisticated encoding schemes during their extensive training that might change their cognitive processing of visual patterns and also improve their memory performance. To rule out such cognitive processing differences between art experts and laypersons, which could potentially explain differences in aesthetic evaluation, we added a memory recognition task with new and previously presented patterns as the second part of our experiment. In addition, we wanted to explore whether some specific stimulus types (e.g., face-like patterns) are in general easier to remember than others.

2. Materials and Methods

2.1. Stimuli

The employed stimuli were similar to those used in Gartus and Leder [27,28]. They consisted of 36 to 44 black triangles placed in an 8 × 8 regular grid on a white background, showed several types of symmetry, and were generated by a stochastic optimization algorithm implemented in MATLAB R2013a (The MathWorks, Inc., Natick, MA, USA). In this study, asymmetric patterns and patterns with a vertical symmetry axis were used. In addition, we used “broken” patterns that deviated slightly from perfect vertical symmetry [27,28]. Patterns with a vertical symmetry axis are quite often perceived as faces (the pareidolia phenomenon). To study this phenomenon systematically, 300 preselected patterns with a vertical symmetry axis were examined in three prestudies to select the stimuli for the main study.
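To make the notions of vertical symmetry and “broken” symmetry concrete, the following Python sketch uses a hypothetical encoding that is not the format of the original MATLAB stimulus generator: each of the 8 × 8 cells is either empty or holds one right triangle filling the half of the cell that touches one of its four corners. Mirroring across the vertical axis reverses each row and swaps left and right corners, and a pattern’s asymmetry is counted as the number of cells that differ from its mirror image. The functions `random_symmetric` and `break_symmetry` are illustrative stand-ins, not the stochastic optimization algorithm used to generate the actual stimuli.

```python
import random

# Hypothetical encoding (an assumption, not the original MATLAB format):
# each cell of the 8 x 8 grid is empty or holds one right triangle that
# fills the half of the cell touching one corner.
SIZE = 8
EMPTY, UL, UR, LR, LL = 0, 1, 2, 3, 4  # upper-left, upper-right, lower-right, lower-left
ORIENTATIONS = (UL, UR, LR, LL)

# Reflecting a cell across a vertical axis swaps its left and right corners.
MIRROR = {EMPTY: EMPTY, UL: UR, UR: UL, LR: LL, LL: LR}


def mirror_vertical(grid):
    """Return the left-right mirror image of a pattern."""
    return [[MIRROR[cell] for cell in reversed(row)] for row in grid]


def asymmetry(grid):
    """Count cells that differ from the mirrored pattern (0 = perfect vertical symmetry)."""
    mirrored = mirror_vertical(grid)
    return sum(a != b
               for row_a, row_b in zip(grid, mirrored)
               for a, b in zip(row_a, row_b))


def random_symmetric(n_triangles, rng):
    """Place an even number of triangles so that the pattern is mirror symmetric."""
    if n_triangles % 2 or n_triangles // 2 > SIZE * SIZE // 2:
        raise ValueError("need an even count that fits into one half of the grid")
    grid = [[EMPTY] * SIZE for _ in range(SIZE)]
    left_half = [(r, c) for r in range(SIZE) for c in range(SIZE // 2)]
    for r, c in rng.sample(left_half, n_triangles // 2):
        grid[r][c] = rng.choice(ORIENTATIONS)
        grid[r][SIZE - 1 - c] = MIRROR[grid[r][c]]  # copy the mirrored triangle
    return grid


def break_symmetry(grid, n_changes, rng):
    """Re-orient n_changes triangles in the right half, keeping the triangle count."""
    broken = [row[:] for row in grid]
    right_occupied = [(r, c) for r in range(SIZE) for c in range(SIZE // 2, SIZE)
                      if broken[r][c] != EMPTY]
    for r, c in rng.sample(right_occupied, n_changes):
        broken[r][c] = rng.choice([o for o in ORIENTATIONS if o != broken[r][c]])
    return broken


if __name__ == "__main__":
    rng = random.Random(1)
    sym = random_symmetric(40, rng)
    brsym = break_symmetry(sym, 2, rng)
    print(asymmetry(sym), asymmetry(brsym))  # 0 4
```

Each re-oriented triangle produces two mismatches (the cell itself and its mirror partner), so in this toy encoding the asymmetry count of a “broken” pattern is twice the number of altered cells, while the number of triangles stays within the 36 to 44 range of the original patterns.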

In Prestudy 1, patterns that are either frequently or rarely perceived as faces were collected. Ten people participated in this prestudy (4 men, 6 women; age range = 25–31; mean age = 27.8; all psychology students at the University of Vienna). Participants had to state whether or not they saw a face in each pattern. As a result, 217 patterns were selected: 115 patterns that were frequently perceived as faces and 102 that were only rarely or never perceived as faces.

In Prestudy 2, we additionally collected ratings, on a 7-point scale, of how easy it was to perceive a face in each of the 217 patterns selected in Prestudy 1. Ten people participated in this prestudy (4 men, 6 women; age range = 25–37; mean age = 29.9; all psychology students at the University of Vienna). As the combined result of Prestudies 1 and 2, 116 patterns with a vertical symmetry axis were selected for the main study: 60 patterns often and easily perceived as faces and 56 patterns seldom or never perceived as faces.

In Prestudy 3, ratings of subjective visual complexity were collected on 7-point scales so that the finally selected stimuli could be controlled for complexity. Twenty-one people participated in this prestudy (9 men, 12 women; age range = 19–37; mean age = 27.2; all students at the University of Vienna) and rated 305 patterns for visual complexity.

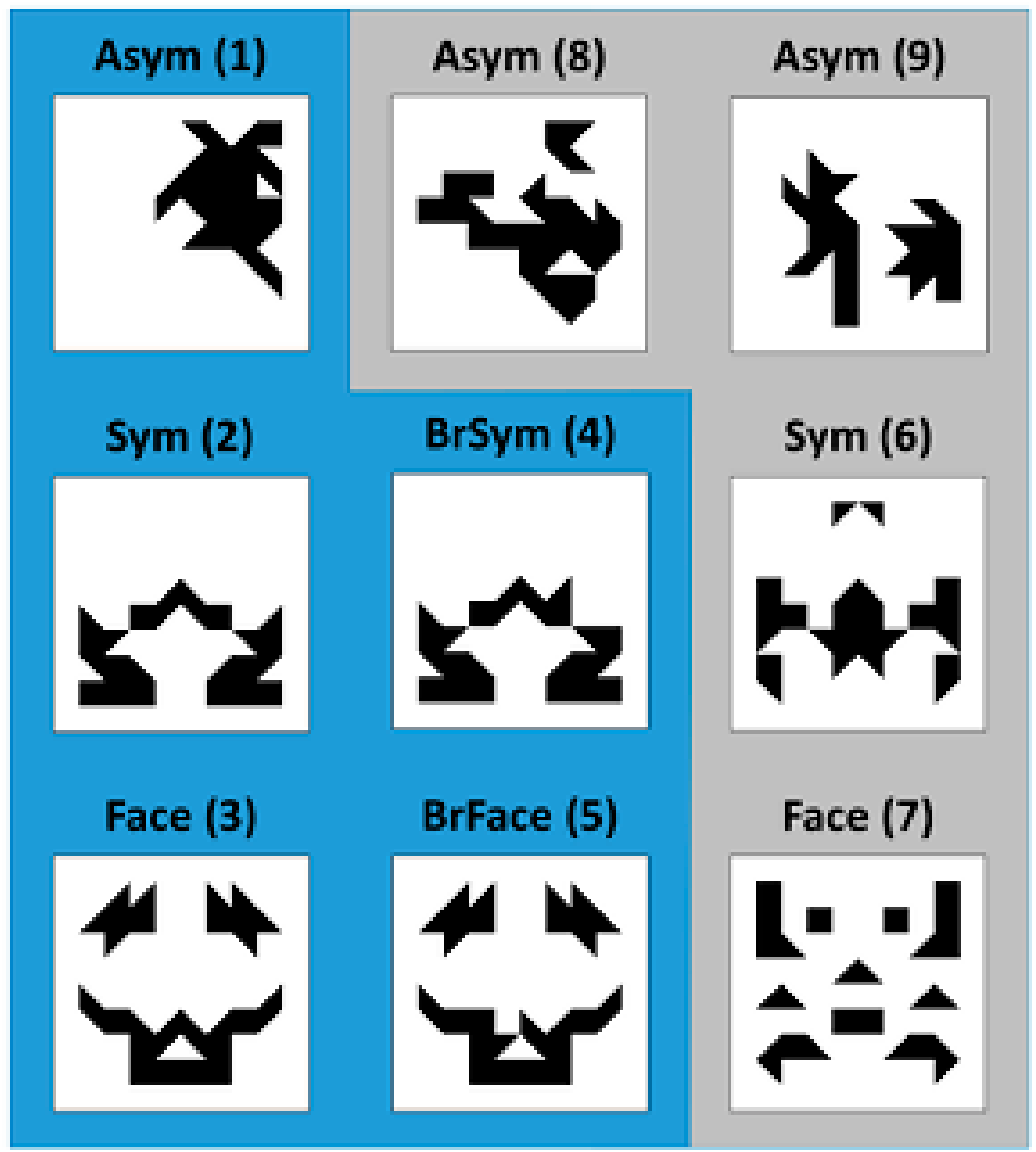

The resulting patterns were divided into five categories: asymmetric (Asym), symmetric (Sym), symmetric face-like (Face), broken symmetric (BrSym), and broken symmetric face-like (BrFace) patterns. Based on the mean complexity ratings, 135 patterns were selected for the main study: 90 for the first part, in which patterns were rated for liking and interest, and 45 for the second part, which involved a memory task. Specifically, we first selected 10 patterns from each of the categories Asym, Sym, and Face with similar mean complexity per group, as well as the corresponding patterns from the categories BrSym and BrFace (groups 1–5). Because the broken symmetric patterns showed a higher average visual complexity than the symmetric ones, we additionally selected patterns from the categories Sym, Face, and Asym (groups 6–9), matched in complexity to the selected patterns from the categories BrSym and BrFace (groups 4 and 5). In sum, we selected nine groups of 10 patterns each. See Table 1 for an overview of the selected pattern groups and categories and Figure 1 for examples of selected stimulus patterns.

While E-Prime 2.0.8.90 (Psychology Software Tools, Inc., Pittsburgh, PA, USA) was used as experimental software for Prestudies 1 and 2, Prestudy 3 was programmed in OpenSesame 2.8.2 [79].

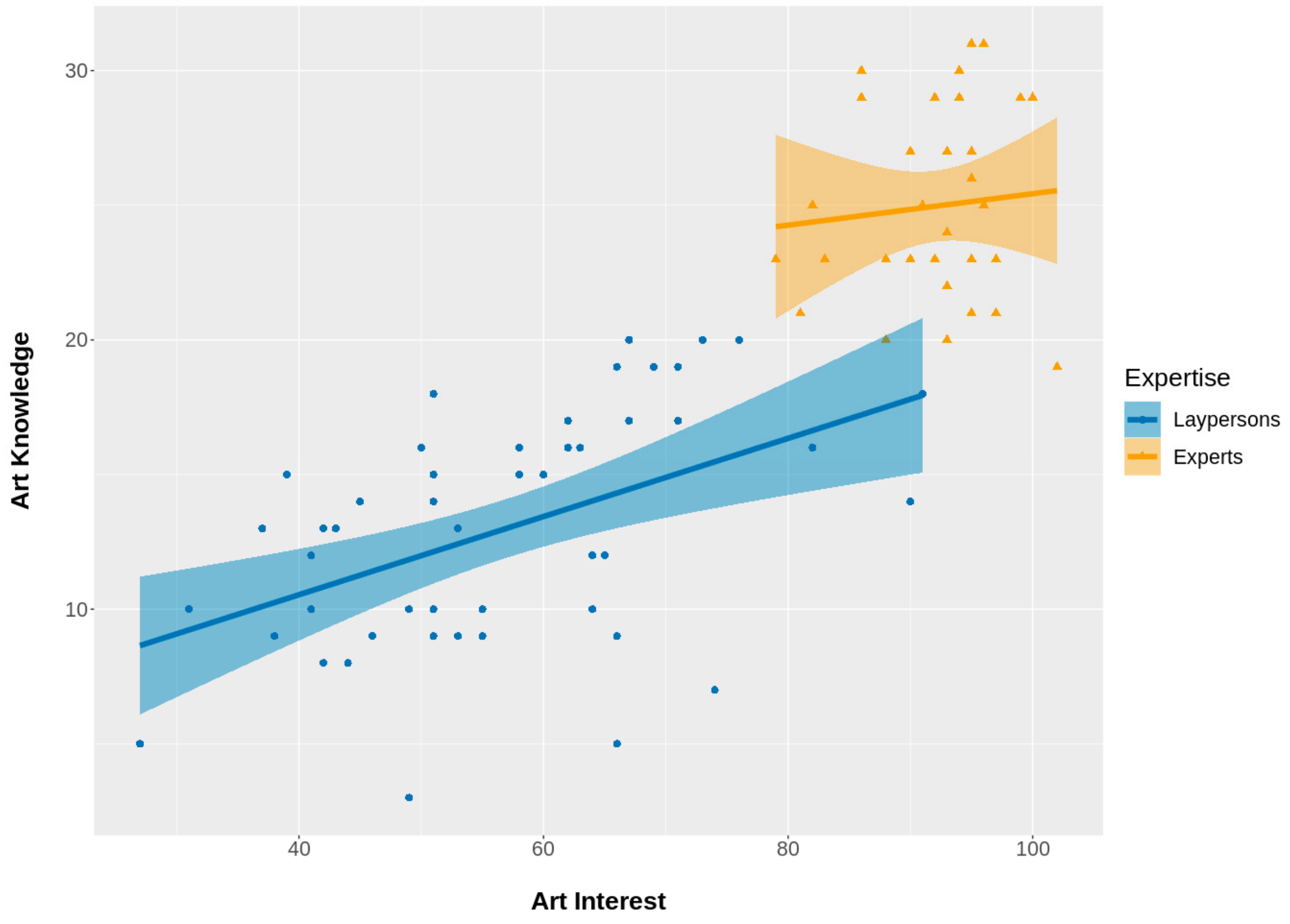

2.2. Participants

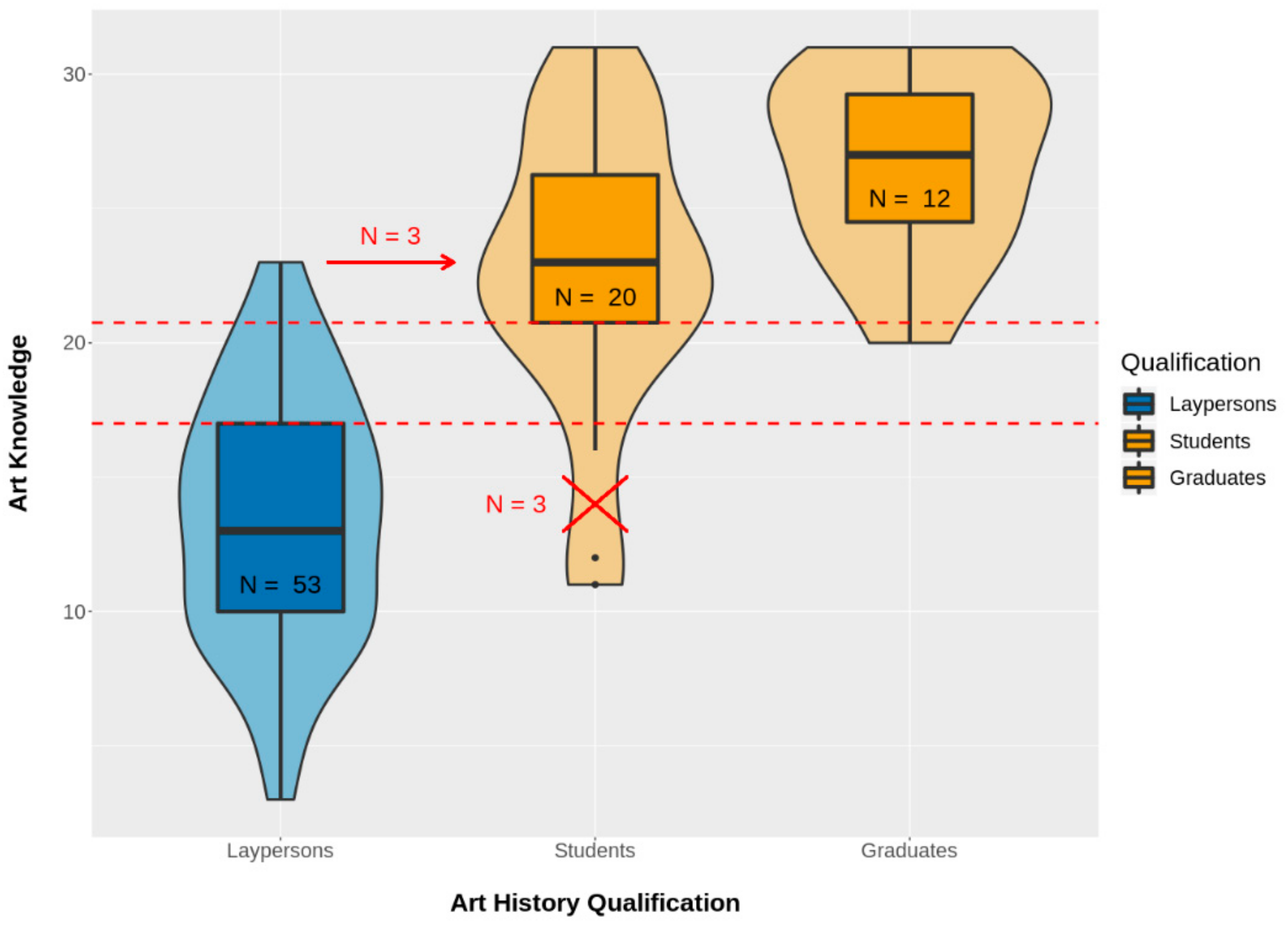

Mostly psychology and art history students of the University of Vienna participated in the main study. Psychology students participated for course credit, while art history students received chocolate as a small thank-you in the first round of data collection and €10 in the second round, when recruiting was more difficult. In total, 85 persons (20 men, 65 women; mean age = 25.0, SD = 5.6; 32 students or graduates of art history) participated in the study. Data were collected at two time points (64 participants in 2015 and an additional 21 participants in 2019). The additional testing was mostly done to increase the number of art experts in the sample (13 of the 21 new participants). All participants were tested for visual acuity and gave written informed consent prior to the study. They were informed that they could quit the study at any time without further consequences. The study lasted about 30–40 min and was not in any way harmful or medically invasive. Thus, no ethical approval was requested, in accordance with the ethical guidelines of the University of Vienna. All but five participants were fluent German speakers.

2.3. Questionnaires

The German version of the PANAS (Positive and Negative Affect Schedule) [76,80] was applied to obtain a short measure of the participants’ current mood. This was considered relevant, as affective states are assumed to influence cognitive processing and also the quality of aesthetic experiences [5,81,82].

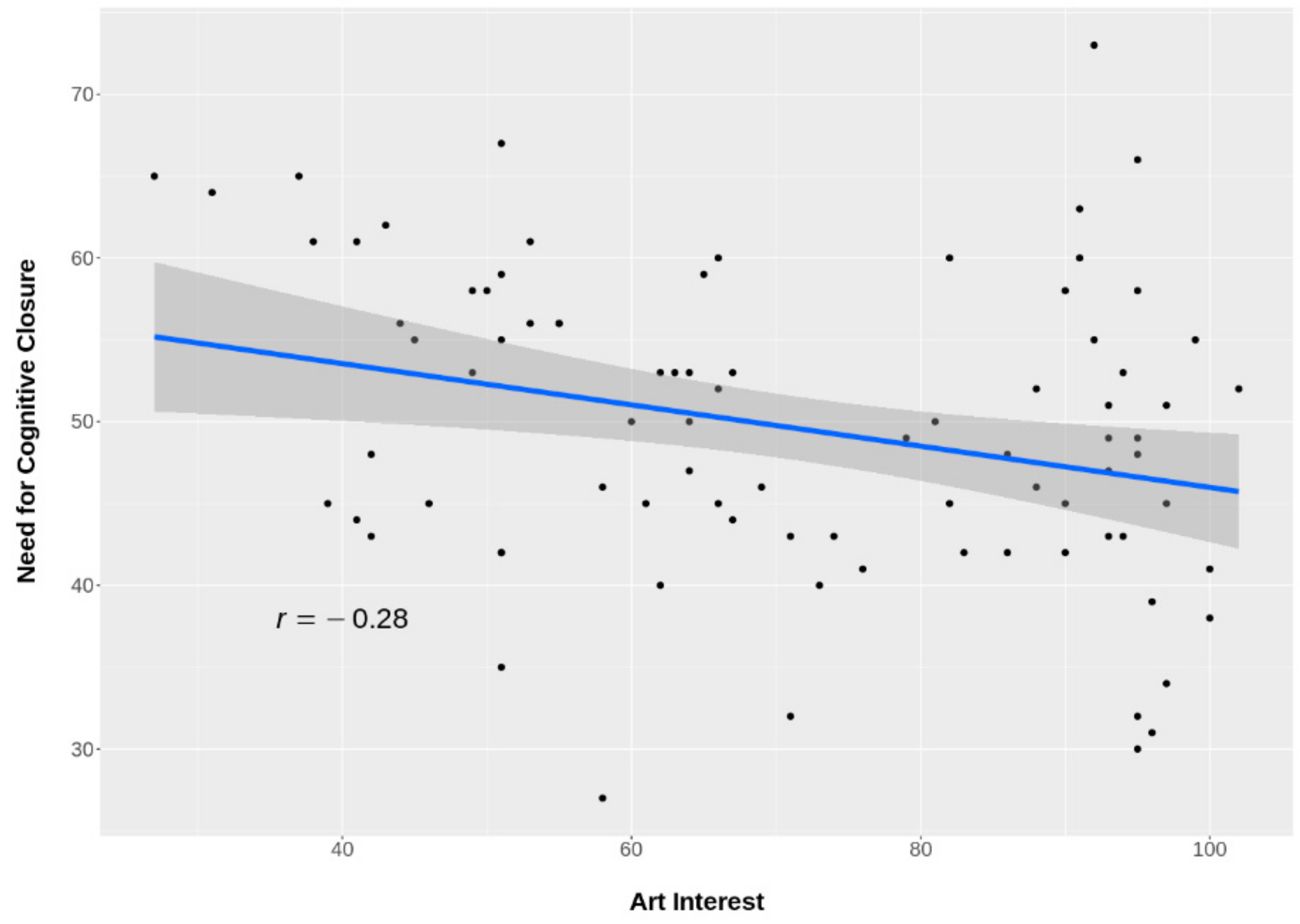

In addition, we applied a scale of need for cognitive closure (NCC; [83]). The need for cognitive closure can be defined as the desire for fast answers and predictability, together with a preference for order and structure and discomfort with ambiguity. Thus, high need for cognitive closure could potentially lead to a higher preference for symmetry and/or concrete images over abstract images (both because of discomfort with ambiguity and because of faster processing due to increased fluency; [46,47]). To be able to adjust for this factor as well, we used the German 16-NCCS scale [77].

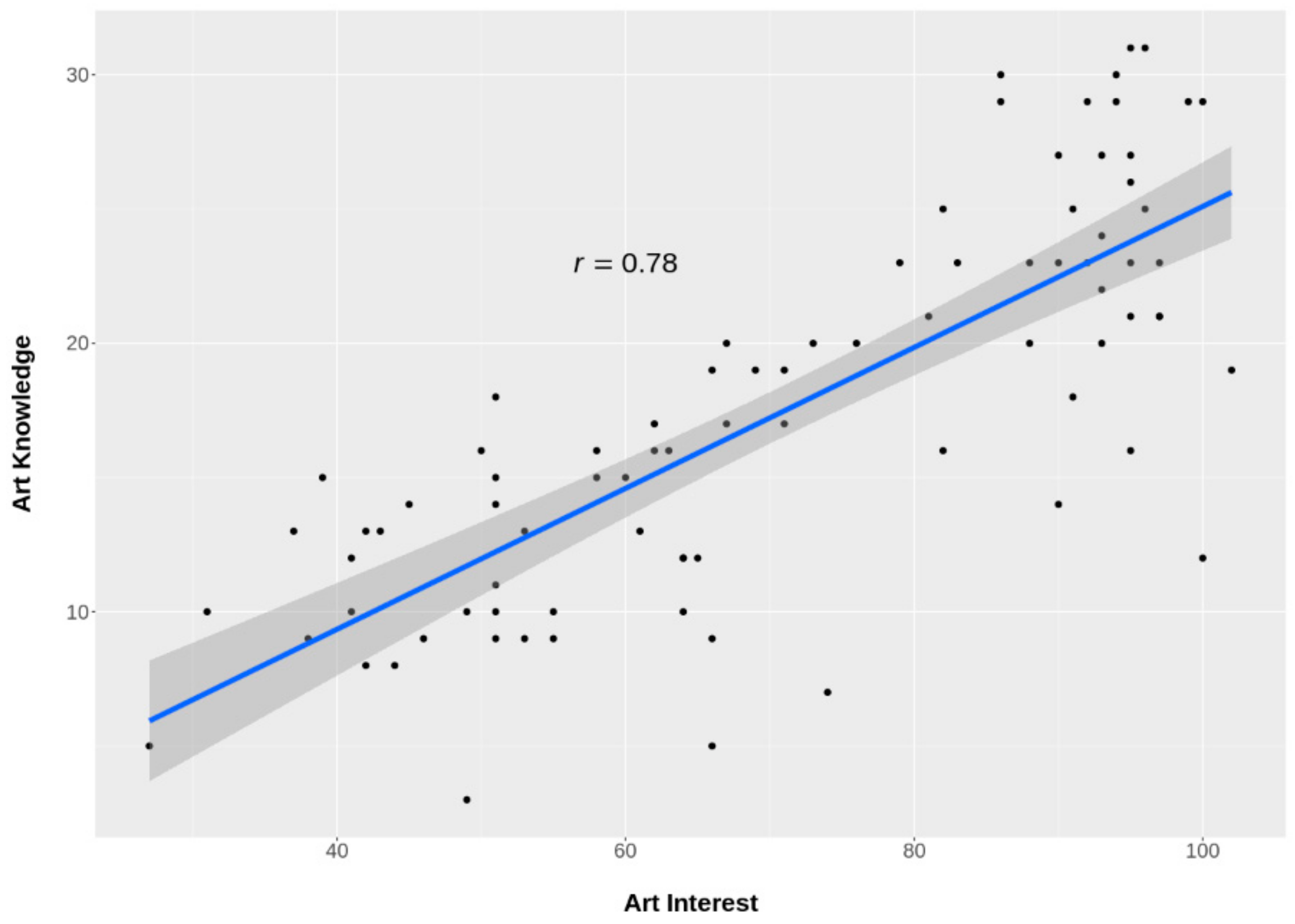

As the final questionnaire, an art interest and art knowledge questionnaire was employed to obtain a measure of art expertise, as this is the main between-subjects factor of interest in our study. Specifically, we used a predecessor version of the VAIAK (Vienna Art Interest and Art Knowledge Questionnaire) [78], which was regularly used in our lab at the time of the study. It was assumed that art experts would be less inclined to give pareidolian faces high ratings, as they might consider them a form of “easy beauty” [73].

Note that during the first round of data collection in 2015, the questionnaires were presented online, whereas during the second round in 2019, paper-and-pencil versions of the questionnaires were used.

2.4. Experimental Procedure

The experiment was conducted on lab computers using E-Prime 2.0.8.90 in 2015 and E-Prime 2.0.10.356 (Psychology Software Tools, Inc., Pittsburgh, PA, USA) in 2019 as experimental software. Patterns were presented at a size of 500 × 500 pixels on a black background for 3000 ms on a variety of different monitors. Participants sat at a distance of approximately 70 cm from the monitors. As we did not assume that small differences in presentation size would have an influence, we did not control for monitor size.

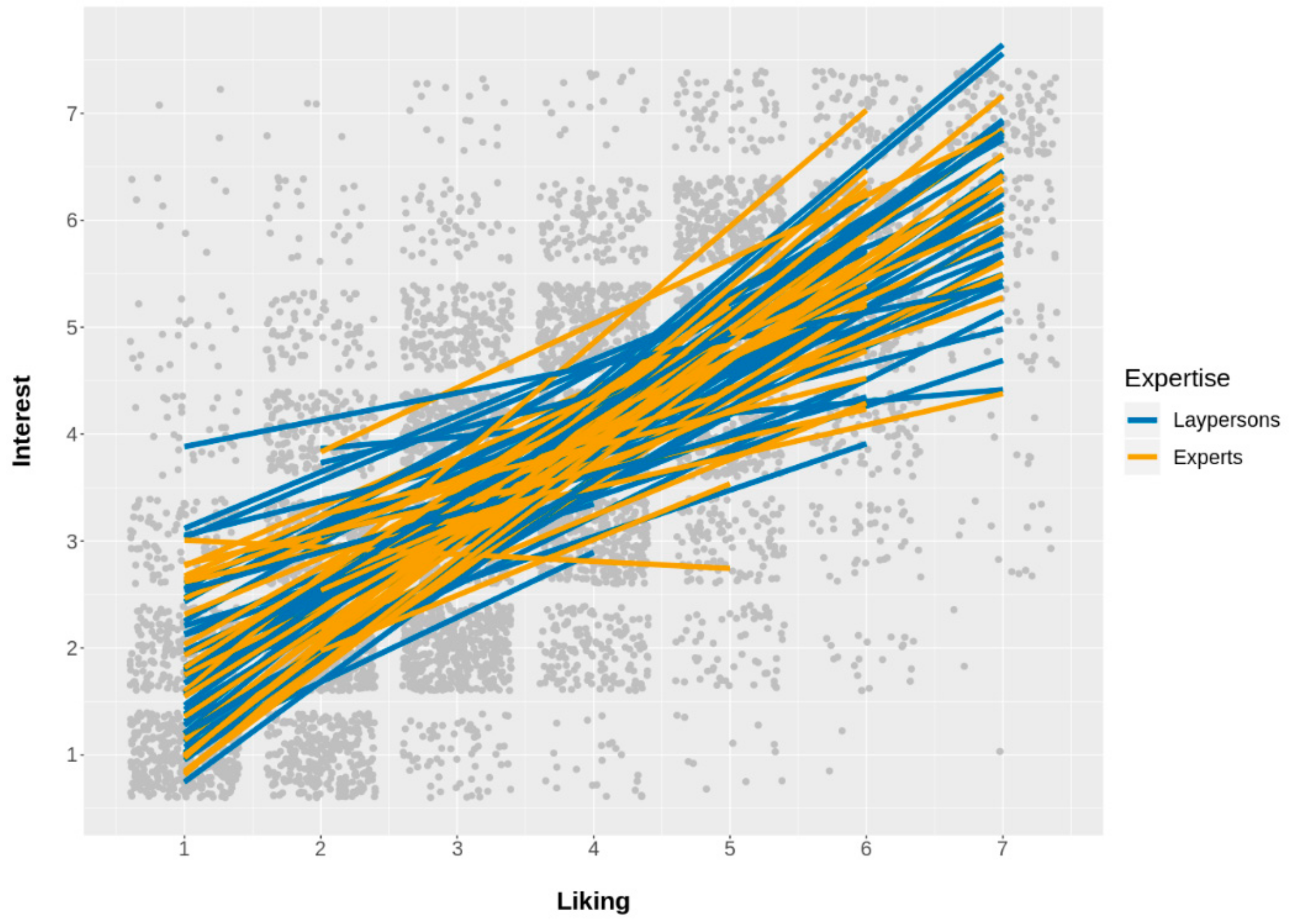

Concerning the experimental sequence, participants were first welcomed and asked whether they spoke German proficiently. If not, we provided them with all materials translated into English. They then filled out the informed consent form, the 16-NCCS, and the PANAS. After completing the questionnaires, they were introduced to the first part of the experiment. Instructions were given both on the computer screen and as a short verbal summary. The task in this part of the experiment was to rate 90 abstract patterns for liking and interest on 7-point scales. The exact rating questions were: “How do you like this pattern?” (German: “Wie gefällt Ihnen dieses Muster?”) and “How interesting does this pattern appear?” (German: “Wie interessant erscheint dieses Muster?”). During the self-paced rating, small versions of the patterns (downsized to 200 × 200 pixels) were presented together with the rating scales. After finishing the first part of the experiment, participants completed the art interest and art knowledge questionnaire. Thereafter, they were introduced to the second part of the experiment, in which they were presented with 90 patterns, 45 of which they had already seen in the first part, and were asked whether they had already seen each pattern. The exact question was “Have you already seen this pattern? Yes/No” (German: “Haben Sie dieses Muster bereits gesehen? Ja/Nein”). Finally, participants were debriefed: they were asked whether they had noticed anything during the experiment that they wanted to tell us, received a short verbal explanation of the purpose of the study, and were thanked for participating.
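The recognition part yields a yes/no answer for 45 previously seen (old) and 45 new patterns. The text does not state which recognition measure was analyzed, so the following Python sketch shows, as an assumption, one common way of scoring such data: hit rate, false-alarm rate, proportion correct (with equal numbers of old and new patterns, chance level is 0.5), and the signal-detection sensitivity index d′ with a log-linear correction. The `Trial` record, its field names, and the assumption that new patterns also carry a category label are hypothetical.

```python
from dataclasses import dataclass
from statistics import NormalDist


@dataclass(frozen=True)
class Trial:
    """One recognition trial (hypothetical record layout)."""
    category: str   # e.g. "Asym", "Sym", "Face", "BrSym", "BrFace"
    old: bool       # True if the pattern was already shown in the rating part
    said_yes: bool  # answer to "Have you already seen this pattern?"


def score(trials):
    """Hit rate, false-alarm rate, proportion correct, and d' for a set of trials."""
    n_old = sum(t.old for t in trials)
    n_new = len(trials) - n_old
    hits = sum(t.old and t.said_yes for t in trials)
    false_alarms = sum(not t.old and t.said_yes for t in trials)
    correct = sum(t.old == t.said_yes for t in trials)
    # Log-linear correction: keeps both rates strictly between 0 and 1,
    # so the inverse normal CDF stays finite even for perfect performance.
    h = (hits + 0.5) / (n_old + 1)
    f = (false_alarms + 0.5) / (n_new + 1)
    z = NormalDist().inv_cdf
    return {
        "hit_rate": hits / n_old if n_old else float("nan"),
        "false_alarm_rate": false_alarms / n_new if n_new else float("nan"),
        "proportion_correct": correct / len(trials),  # chance = 0.5 with 45 old / 45 new
        "d_prime": z(h) - z(f),
    }


def score_by_category(trials):
    """Score each pattern category separately (assumes new patterns are also categorized)."""
    categories = sorted({t.category for t in trials})
    return {c: score([t for t in trials if t.category == c]) for c in categories}
```

Proportion correct is the simplest index to compare with chance, whereas d′ additionally separates sensitivity from a general tendency to answer “yes”; which of these the analysis actually used is not specified here.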

2.5. Data Analysis

We used Bayesian linear mixed-effects models to analyze our data for several reasons. Linear mixed-effects models enable generalization across both stimuli and participants, not only across participants [84]. Bayesian data analysis was applied to avoid the statistical peculiarities of null-hypothesis significance testing, for instance, its dependence on predefined sampling plans [85,86,87]. More specifically, we applied Bayesian estimation and did not use Bayes factors [88,89]. We used R version 3.6.2 [90], the tidyverse package version 1.2.1 [91] for data preparation, and the brms package version 2.10.0 [92,93], a high-level interface to Stan [94], for data analysis. We set weakly informative priors of normal(0,5) for the intercept and normal(0,3) for fixed effects, and otherwise kept the default priors. For model estimation, four chains with 10,000 iterations each (5000 warmup and 5000 sampling) were used. Convergence was checked via the Gelman–Rubin [95] convergence statistic (Rhat close to or equal to 1.0) and by visual inspection of trace plots.

Categorical predictors were dummy coded, and continuous predictors were standardized by subtracting the mean and dividing by two standard deviations [96]. The random-effects structure of the models consisted of by-subject random intercepts and random slopes of pattern category and visual complexity, and by-stimulus random intercepts and random slopes of positive and negative affect (PANAS), need for cognitive closure (16-NCCS), and art expertise (experts vs. laypersons or VAIAK). Random slopes also included all possible interactions of predictors. In the main text, we present traditional models that treat the dependent rating variables as metric. However, because this has been shown to cause problems in some cases [97,98], we also included ordered-probit models in Appendix C. Post-hoc contrasts were extracted using the emmeans package version 1.4.3.01 [99] and the tidybayes package version 1.1.0 [100]. In addition, the ggplot2 package version 3.2.1 [101] was used for data visualization. The BayesianFirstAid package version 0.1 [102] was used with default settings to test correlations and to compare scores between laypersons and experts (with a Bayesian two-sample test as an alternative to a t-test).

All presented credible intervals are highest density intervals (HDI). We applied a decision rule based on the HDI and predefined regions of practical equivalence (ROPE) around zero [88]. For correlations, [−0.05, 0.05] was chosen as the ROPE, which corresponds to half the value of a negligible correlation according to Cohen’s (1988) rule of thumb. For the linear mixed-effects models, we also chose [−0.05, 0.05] as the ROPE, because predictors were standardized by dividing by two standard deviations [96]. The resulting standard deviation is therefore 0.5 rather than 1.0, and thus the recommended ROPE of [−0.1 × SD, 0.1 × SD] amounts to [−0.05, 0.05]. For the Bayesian t-tests, a similar rule was used as for the linear models.
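The scaling and decision rule described above can be illustrated with a short Python sketch (standard library only). It assumes that posterior draws for a single coefficient are available as a plain list; in the actual analysis these would come from the fitted brms models, and the HDI routine here is a generic sample-based version (the narrowest interval covering 95% of the sorted draws), not the implementation used by tidybayes. The sketch shows that a predictor divided by two standard deviations has a standard deviation of 0.5, so 0.1 × SD equals 0.05, and it classifies an effect by comparing its 95% HDI with the ROPE [−0.05, 0.05].

```python
import math
import random
import statistics


def standardize_2sd(values):
    """Subtract the mean and divide by two (sample) standard deviations."""
    m = statistics.mean(values)
    s = statistics.stdev(values)
    return [(v - m) / (2 * s) for v in values]


def hdi(samples, mass=0.95):
    """Narrowest interval that contains `mass` of the posterior draws."""
    s = sorted(samples)
    n = len(s)
    k = math.ceil(mass * n)  # index span of every candidate interval
    widths = [s[i + k] - s[i] for i in range(n - k)]
    i_min = min(range(len(widths)), key=widths.__getitem__)
    return s[i_min], s[i_min + k]


def rope_decision(samples, rope=(-0.05, 0.05), mass=0.95):
    """Compare the HDI of the draws with a region of practical equivalence."""
    lo, hi = hdi(samples, mass)
    if hi < rope[0] or lo > rope[1]:
        return "credible difference"             # HDI entirely outside the ROPE
    if lo >= rope[0] and hi <= rope[1]:
        return "practically equivalent to zero"  # HDI entirely inside the ROPE
    return "undecided"                           # HDI overlaps a ROPE boundary


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.gauss(50, 10) for _ in range(1000)]  # simulated raw predictor
    z = standardize_2sd(x)
    print(round(statistics.stdev(z), 3))          # 0.5, so 0.1 * SD = 0.05
    draws = [rng.gauss(0.30, 0.05) for _ in range(20000)]  # simulated posterior
    print(rope_decision(draws))                   # credible difference
```

With simulated draws centered at zero and a small spread (e.g., SD 0.01), the whole HDI falls inside [−0.05, 0.05] and the rule returns “practically equivalent to zero”; an HDI straddling a ROPE boundary leaves the decision open.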

4. Discussion

In the present study, we investigated aesthetic evaluation and memory recognition of different abstract (and representational) black-and-white patterns in art experts and laypersons. The motivation for this research is rooted in cognitive theories of empirical aesthetics assuming that aesthetic processing differs between art experts and laypersons [5,6,65]. This is especially the case for the categories abstract vs. representational [62,70,71] but also for symmetric vs. asymmetric [19].

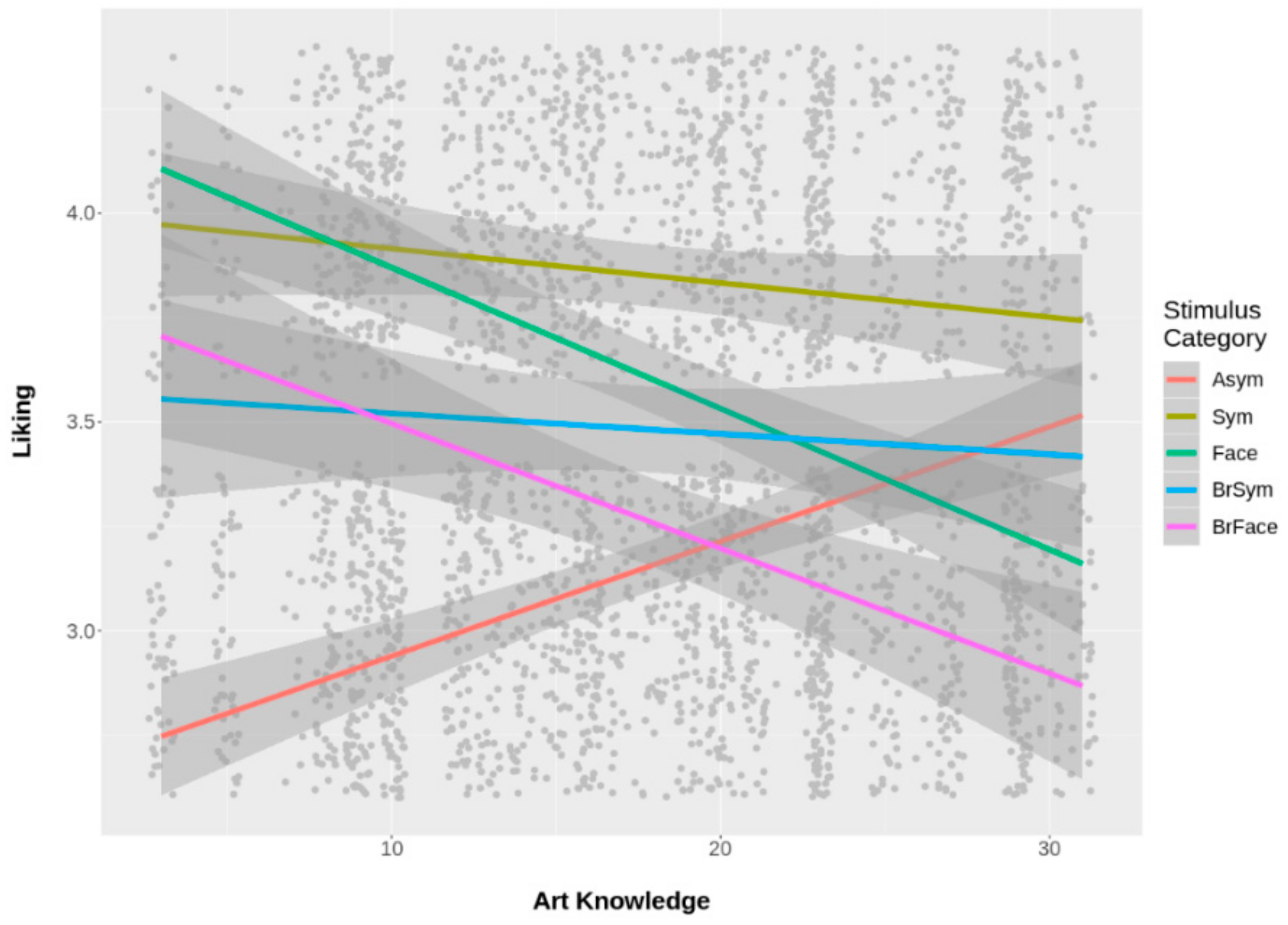

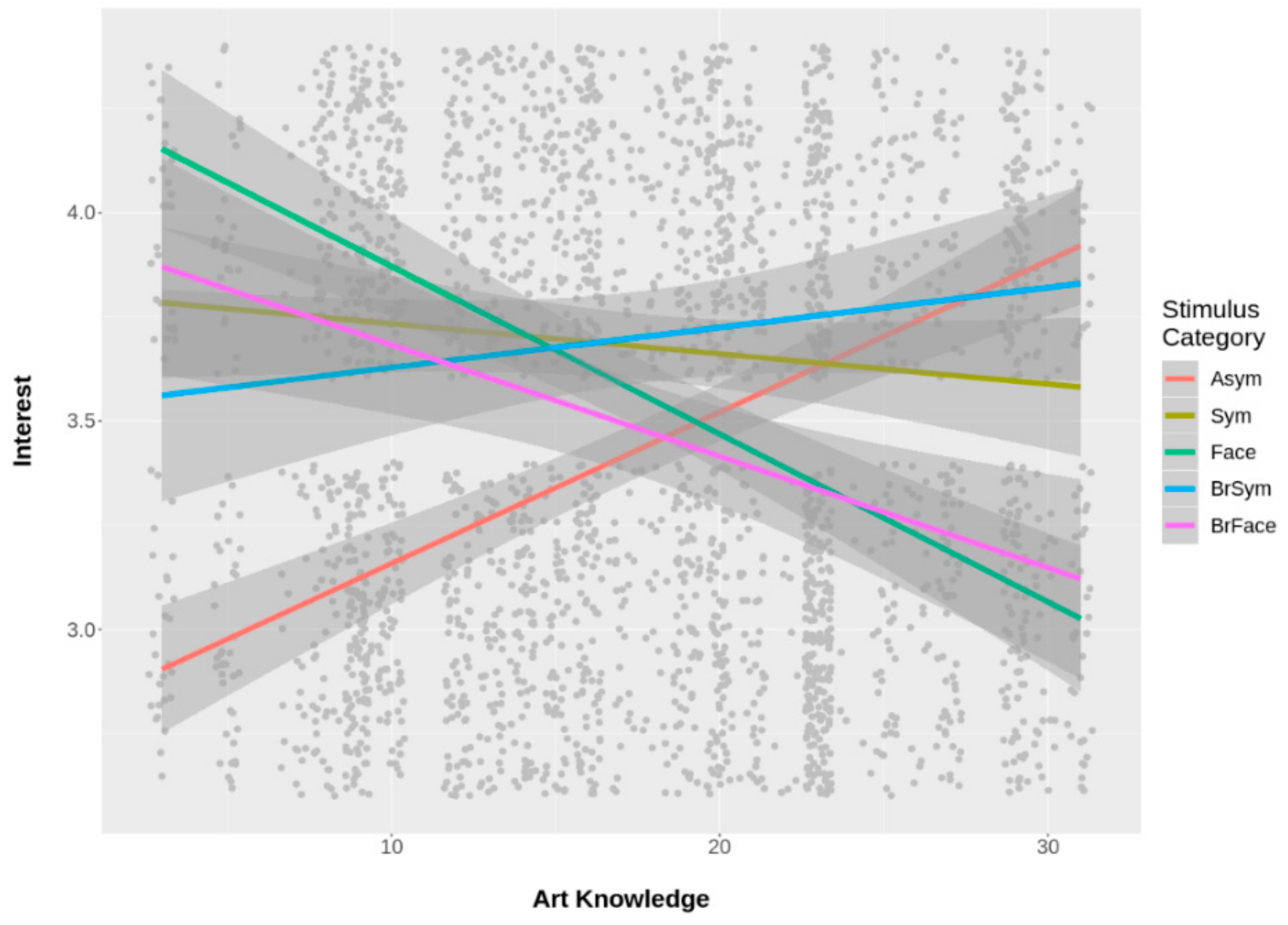

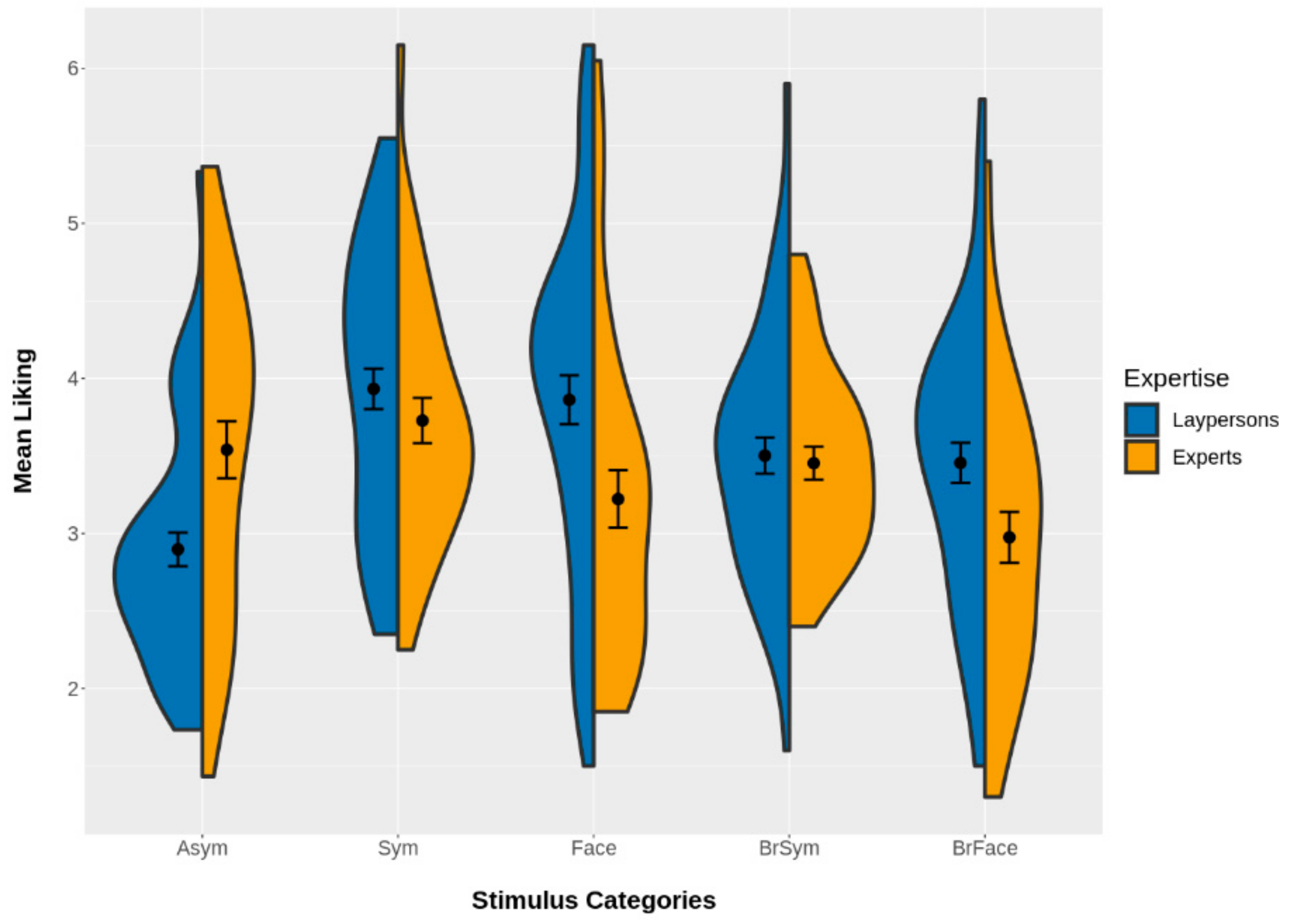

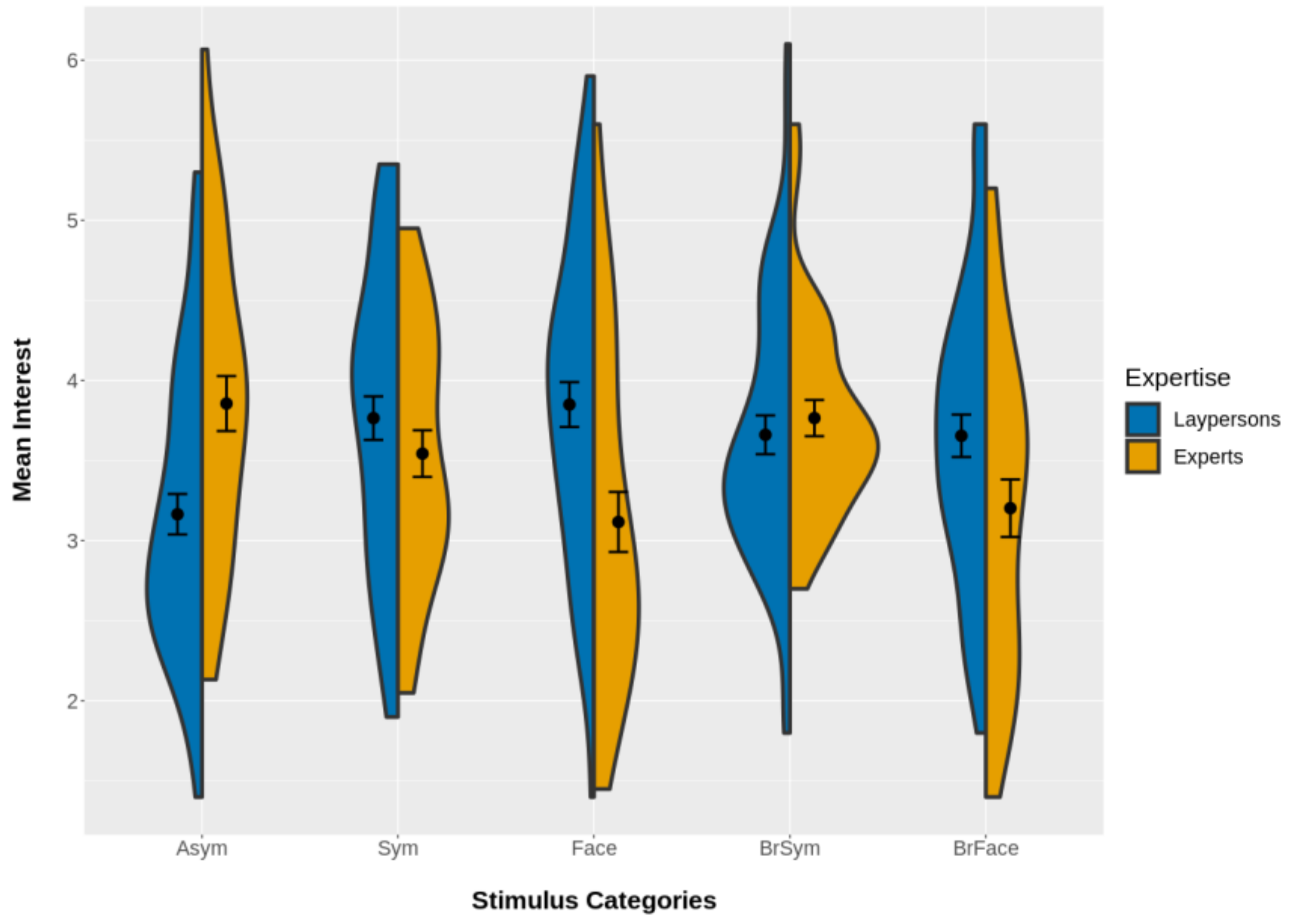

While it has already been found that preference for symmetry is less pronounced in art experts than in laypersons [16,18], it was not clear whether the difference in aesthetic evaluation of abstract vs. representational art would similarly appear as a preference for abstract over representational patterns in art experts. Specifically, we used five categories of “abstract” patterns as stimuli: Asym (asymmetric), Sym (symmetric), Face (face-like, symmetric), BrSym (broken symmetric), and BrFace (broken face-like, symmetric); the face-like patterns were thus no longer abstract in the full sense of the word. We presented them to art experts and laypersons, who were asked to rate them for liking and interest in the first part of the experiment and to judge whether they had already seen the patterns in the second part.

Concerning the adjustment variables, we found a positive effect of positive affect/mood (PANAS score) on interest ratings (and a smaller, less clear effect on liking that nevertheless went in the same direction). Thus, participants with higher positive affect gave higher ratings, which is in line with our assumptions [81]. Visual complexity also had a positive effect on both liking and interest ratings (for experts as well as for laypersons). Because complexity is a secondary factor of aesthetic evaluation with a positive influence on preference for abstract patterns [21,27,103], this is also in line with theory. Finally, we found no effect of need for cognitive closure (NCC). While NCC was negatively correlated with art interest and art knowledge, it had no effect on the ratings. One could assume that a high NCC would be related to a higher preference for symmetry and for representational, face-like patterns, as it is associated with a strong desire for order and structure [83]. However, we did not find such an effect, which could, of course, be due to a small effect size or our rather small sample.

Our main results can be summarized as follows. While symmetric patterns generally received the highest liking ratings, art experts liked asymmetric abstract patterns more than laypersons did. On the other hand, art experts liked representational (pareidolian, face-like) patterns less than laypersons did. This was also found for representational patterns that deviated slightly from perfect symmetry (i.e., broken face-like patterns). Furthermore, laypersons liked all other pattern categories (symmetric, face-like, broken symmetric, and broken face-like) better than asymmetric patterns. These category effects showed negative interactions with art expertise, suggesting that these preferences were weaker in art experts. For art experts, only one difference in liking ratings between a symmetric category and asymmetric patterns was found: experts liked broken face-like patterns less than asymmetric patterns. Thus, while laypersons liked symmetric patterns better than asymmetric ones, no such difference was found for art experts. Results were very similar for interest ratings. Art experts found asymmetric patterns more interesting than laypersons did, and face-like and broken face-like patterns less interesting than laypersons did. Furthermore, while laypersons found symmetric patterns more interesting than asymmetric ones, no such difference was found for art experts. See the Results section for a more detailed description of the differences between art experts and laypersons. Thus, our results are consistent with the findings of Weichselbaum, Leder, and Ansorge [18]. However, for art experts, we found no differences in ratings of asymmetric vs. symmetric patterns and thus no preference for asymmetric over symmetric patterns as reported by Leder et al. [16].

Furthermore, we did not find differences in memory performance between art experts and laypersons. Concerning differences between stimulus groups, asymmetric patterns generally showed the lowest recognition rates (though still well above chance level), while broken face-like patterns clearly showed the highest recognition rates. Therefore, a memory effect is unlikely to explain the differences in aesthetic evaluation between experts and laypersons. For instance, if art experts had had a better memory for asymmetric patterns, as could have resulted from a different encoding of these patterns, this could have been a potential explanation for their higher liking and interest ratings. However, we did not find such a memory effect. The only marginal difference between art experts and laypersons was that experts tended to remember broken face-like patterns slightly better than laypersons. In general, broken face-like patterns were remembered best. One explanation for this memory effect could be that broken face-like patterns are more distinctive than symmetric face-like patterns, and distinctive faces are known to be remembered well (e.g., [104,105]). We also found a positive influence of negative mood on memory performance. This is in line with several studies demonstrating that negative mood can improve memory accuracy [82,106].

One limitation of the current study could be the distinction between art experts and laypersons, as not every student or graduate of art history is necessarily a high-level art expert and not every psychology student is necessarily an art layperson. To control for this potentially unclear distinction, we calculated our models both with art expertise as a dichotomous factor (mostly) derived from our participants’ study choices and with art knowledge as a continuous factor based on a questionnaire. The results of both types of models largely agreed. In addition, persons with extremely high art expertise were probably underrepresented in our sample. This is a problem of many studies comparing art experts and laypersons and somewhat limits our conclusions, as we do not know whether the evaluative judgments of high-level experts would have differed from those of our merely mid-level experts. Another possible limitation is our relatively heterogeneous experimental setup and the two distant sampling time points. However, we think it is unlikely that this introduced systematic bias into our study, and the use of a Bayesian approach has furthermore helped to avoid some of the pitfalls of classical statistical methods. In addition, although we introduced the stimuli to our participants as abstract patterns, and thus as non-art objects, we cannot fully rule out the possibility that some participants perceived them as artworks. Of course, this would contradict our conclusion that the difference between art experts and laypersons also extends to non-art objects. However, as today anything can potentially be an artwork, it is mostly context and available information that make the difference between an artwork and a non-art object [107,108]. Thus, we think it is unlikely that our participants interpreted the presented abstract patterns as artworks.

To sum up, in addition to effects of art expertise on symmetry preference that are compatible with previous findings [16,18], we also found differences between art experts and laypersons in the aesthetic appreciation of representational, face-like patterns. Simply put, while laypersons liked symmetric and face-like (also symmetric) patterns best and asymmetric patterns least, art experts liked symmetric and asymmetric patterns best and face-like patterns least. As also discussed in other studies, we assume that the reason for this striking difference in aesthetic evaluations between art experts and laypersons is rooted in the extensive art-specific training and reflection on pictures that are necessary to achieve a high level of art expertise [16,58,72,109].

In conclusion, it seems that the well-known finding that, on average, art experts prefer abstract and laypersons representational artworks [66,69,70,71,110] can indeed be extended to “abstract” and “representational” black-and-white patterns, in other words, to non-art objects. Thus, these differences in aesthetic evaluation between art experts and laypersons do not appear to be strictly limited to artworks and likely represent a more general difference in evaluative perceptual judgments.