AppCon: Mitigating Evasion Attacks to ML Cyber Detectors

Abstract

1. Introduction

2. Related Work

2.1. Machine Learning for Cyber Detection

- AdaBoost: similar to Random Forests, these algorithms are able to improve their final performance by putting more emphasis on the “errors” committed during their training phase [30].

- Wide and Deep: this technique is a combination of a linear “wide” model, and a “deep” neural network. The idea is to jointly train these two models and foster the effectiveness of both.
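The emphasis that AdaBoost places on training "errors" can be illustrated with a minimal sketch of a single boosting round. It follows the standard discrete AdaBoost update with labels in {-1, +1}; the function name `boost_round` and the toy labels are illustrative assumptions, not the detector implementation evaluated in this paper.

```python
import math

def boost_round(weights, predictions, labels):
    """One round of discrete AdaBoost re-weighting (labels in {-1, +1}).

    Returns the weak learner's vote weight (alpha) and the normalized
    sample weights to use when training the next weak learner.
    """
    # Weighted error of the current weak learner.
    error = sum(w for w, p, y in zip(weights, predictions, labels) if p != y)
    # Clamp to avoid log(0) or division by zero in degenerate cases.
    error = min(max(error, 1e-10), 1 - 1e-10)
    alpha = 0.5 * math.log((1 - error) / error)
    # Misclassified samples (p != y) are scaled up, correct ones scaled down.
    updated = [w * math.exp(-alpha * p * y)
               for w, p, y in zip(weights, predictions, labels)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

# Toy example (hypothetical data): the third sample is misclassified.
labels = [1, 1, -1, -1]
predictions = [1, 1, 1, -1]
weights = [0.25] * 4
alpha, new_weights = boost_round(weights, predictions, labels)
print(alpha, new_weights)
```

After the update, the single misclassified sample carries more weight than before, so the next weak learner is pushed to classify it correctly.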

2.2. Adversarial Attacks

- Influence, which denotes whether an attack is performed at training-time or test-time.

  - Training-time: it is possible to thwart the algorithm by manipulating the training-set before its training phase, for example by the insertion or removal of critical samples (also known as data poisoning).

  - Test-time: here, the model has been deployed and the goal is subverting its behavior during its normal operation.

- Violation, which identifies the security violation, targeting the availability or integrity of the system.

  - Integrity: these attacks have the goal of increasing the model’s rate of false negatives. In cybersecurity, this involves having malicious samples being classified as benign, and these attempts are known as evasion attacks.

  - Availability: the aim is to generate excessive amounts of false alarms that prevent or limit the use of the target model.
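The two properties above can be encoded as a small data structure, which makes it easy to tag an attack and reason about the kind of error it induces. This is only a sketch: the class and function names are hypothetical, and labelling evasion as a test-time attack is an assumption based on the scenario considered in this paper.

```python
from dataclasses import dataclass
from enum import Enum

class Influence(Enum):
    TRAINING_TIME = "training-time"
    TEST_TIME = "test-time"

class Violation(Enum):
    INTEGRITY = "integrity"
    AVAILABILITY = "availability"

@dataclass(frozen=True)
class AttackProfile:
    """Hypothetical container for the two properties listed above."""
    name: str
    influence: Influence
    violation: Violation

def induced_error(profile):
    """Error type that the attack tries to increase in the target model."""
    if profile.violation is Violation.INTEGRITY:
        return "false negatives"  # malicious samples classified as benign
    return "false positives"      # excessive false alarms

# Assumption: evasion is modelled as a test-time integrity attack.
evasion = AttackProfile("evasion", Influence.TEST_TIME, Violation.INTEGRITY)
print(evasion.name, "->", induced_error(evasion))
```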

2.3. Existing Defenses

3. Materials and Methods

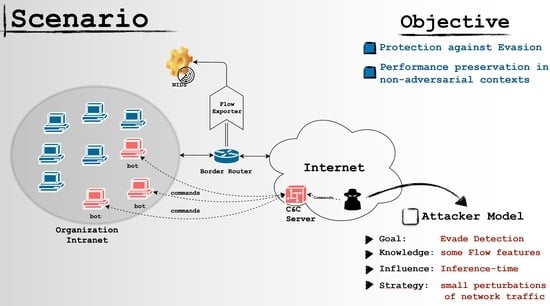

3.1. Threat Model

- Goal: the main goal of the attacker is to evade detection so that he can maintain his access to the network, compromise more machines, or exfiltrate data.

- Knowledge: the attacker knows that network communications are monitored by an ML-based NIDS. However, he does not have any information on the model integrated in the detector, but he (rightly) assumes that this model is trained over a dataset containing samples generated by the same or a similar malware variant deployed on the infected machines. Additionally, since he knows that the data-type used by the detector is related to network traffic, he knows some of the basic features adopted by the machine learning model.

- Capabilities: we assume that the attacker can issue commands to the bot through the CnC infrastructure; however, he cannot interact with the detector.

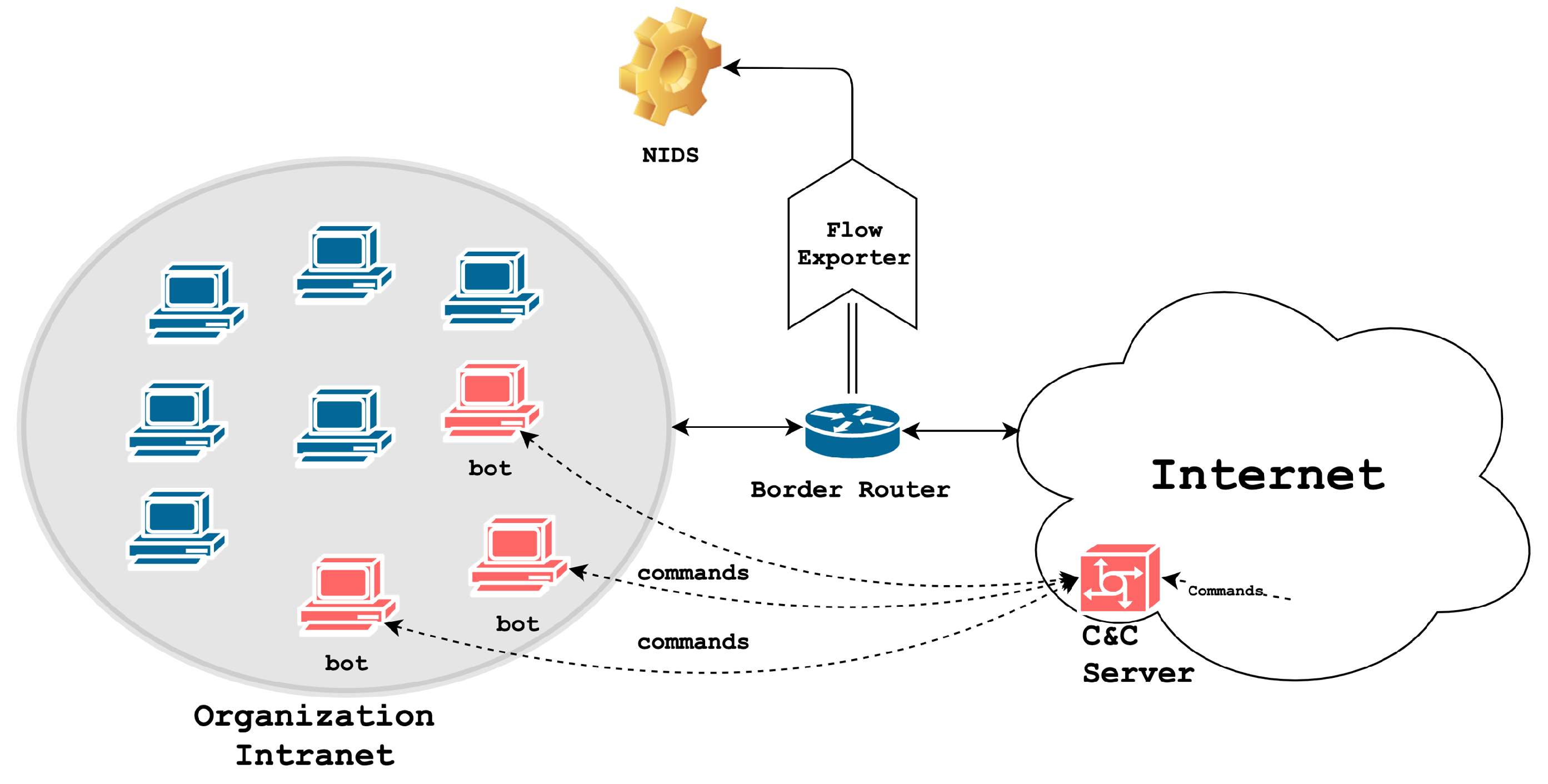

3.2. Proposed Countermeasure

3.3. Experimental Settings

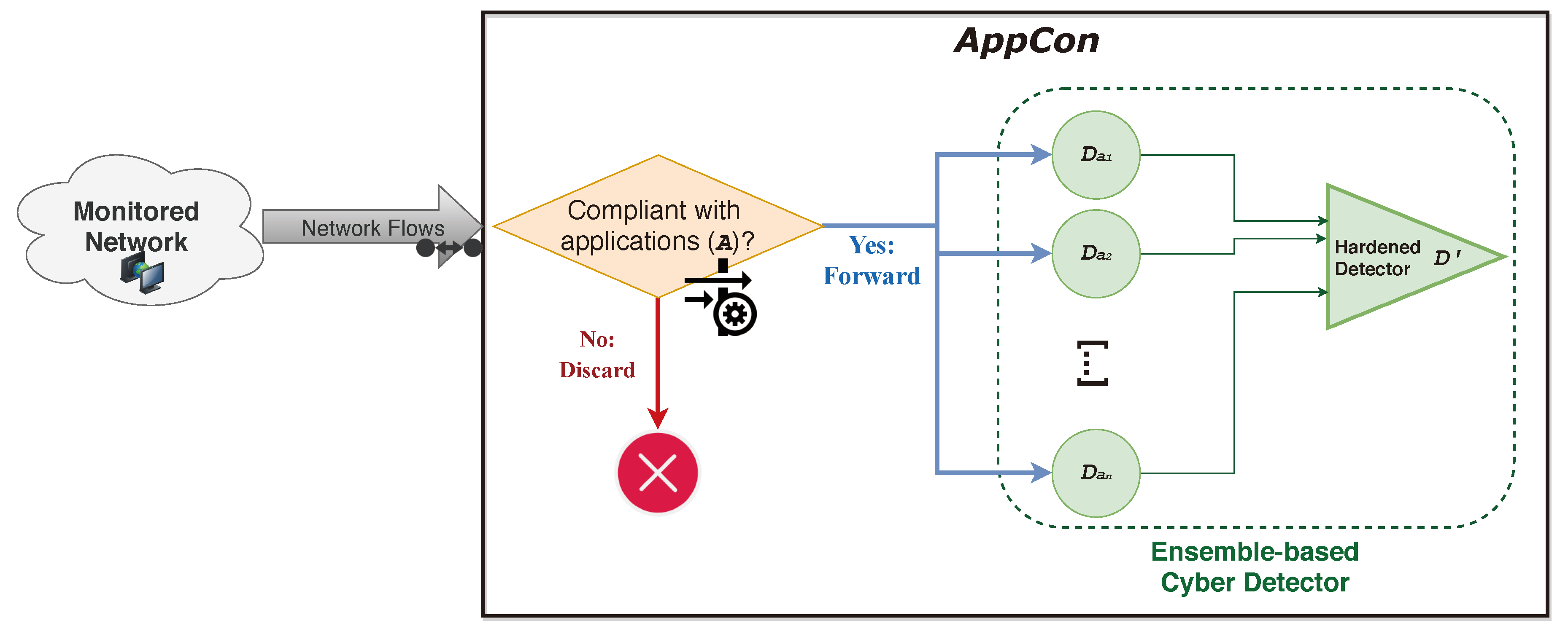

3.3.1. Dataset

3.3.2. Developing the Baseline Detectors

3.3.3. Design and Implementation of AppCon

3.3.4. Generation of Adversarial Samples

3.3.5. Performance Metrics

Algorithm 1: Algorithm for generating datasets of adversarial samples. Source: [72]

- Accuracy: This metric denotes the percentage of correct predictions out of all the predictions made. Denoting with TP, TN, FP and FN the number of true positives, true negatives, false positives and false negatives, it is computed as follows:

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

  In cybersecurity contexts, and most notably in Network Intrusion Detection [28], the number of malicious samples is several orders of magnitude lower than that of benign samples; that is, malicious actions can be considered as “rare events”. Thus, this metric is often neglected in cybersecurity [3,73]. Consider for example a detector that is validated on a dataset with 990 benign samples and 10 malicious samples: if the detector predicts that all samples are benign, it will achieve an Accuracy of 99% despite being unable to recognize any attack.

- Precision: This metric denotes the percentage of correct detections out of all “positive” predictions made. It is computed as follows:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

  Models that obtain a high Precision have a low rate of false positives, which is an appreciable result in cybersecurity. However, this metric does not tell anything about false negatives.

- Recall: This metric, also known as Detection Rate or True Positive Rate, denotes the percentage of correct detections with respect to all possible detections, and is computed as follows:

  $$\text{Recall} = \frac{TP}{TP + FN}$$

  In cybersecurity contexts, it is particularly important due to its ability to reflect how many malicious samples were correctly identified.

- F1-score: This metric combines Precision and Recall, and is used to summarize both in a single value. It is computed as follows:

  $$\text{F1-score} = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
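The four metrics listed above can be computed directly from the confusion-matrix counts. The following sketch uses their standard definitions and reproduces the example of a detector that labels every sample as benign; returning 0.0 when a denominator is zero is a convention assumed here, not something prescribed by the paper.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct predictions over all predictions."""
    return (tp + tn) / (tp + tn + fp + fn)

def precision(tp, fp):
    """Fraction of correct detections over all positive predictions."""
    # Assumed convention: 0.0 when the detector never predicts "malicious".
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Fraction of malicious samples that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def f1_score(tp, fp, fn):
    """Harmonic mean of Precision and Recall."""
    p, r = precision(tp, fp), recall(tp, fn)
    return 2 * p * r / (p + r) if (p + r) else 0.0

# 990 benign and 10 malicious samples, all predicted as benign.
tp, fn, fp, tn = 0, 10, 0, 990
print("Accuracy:", accuracy(tp, tn, fp, fn))
print("Recall:  ", recall(tp, fn))
print("F1-score:", f1_score(tp, fp, fn))
```

The high Accuracy paired with a Recall of zero shows why Accuracy alone is a poor indicator on imbalanced datasets.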

4. Experimental Results

- determine the performance of the “baseline” detectors in non-adversarial settings;

- assess the effectiveness of the considered evasion attacks against the “baseline” detectors;

- measure the performance of the “hardened” detectors in non-adversarial settings;

- gauge the impact of the considered evasion attacks against the “hardened” detectors.

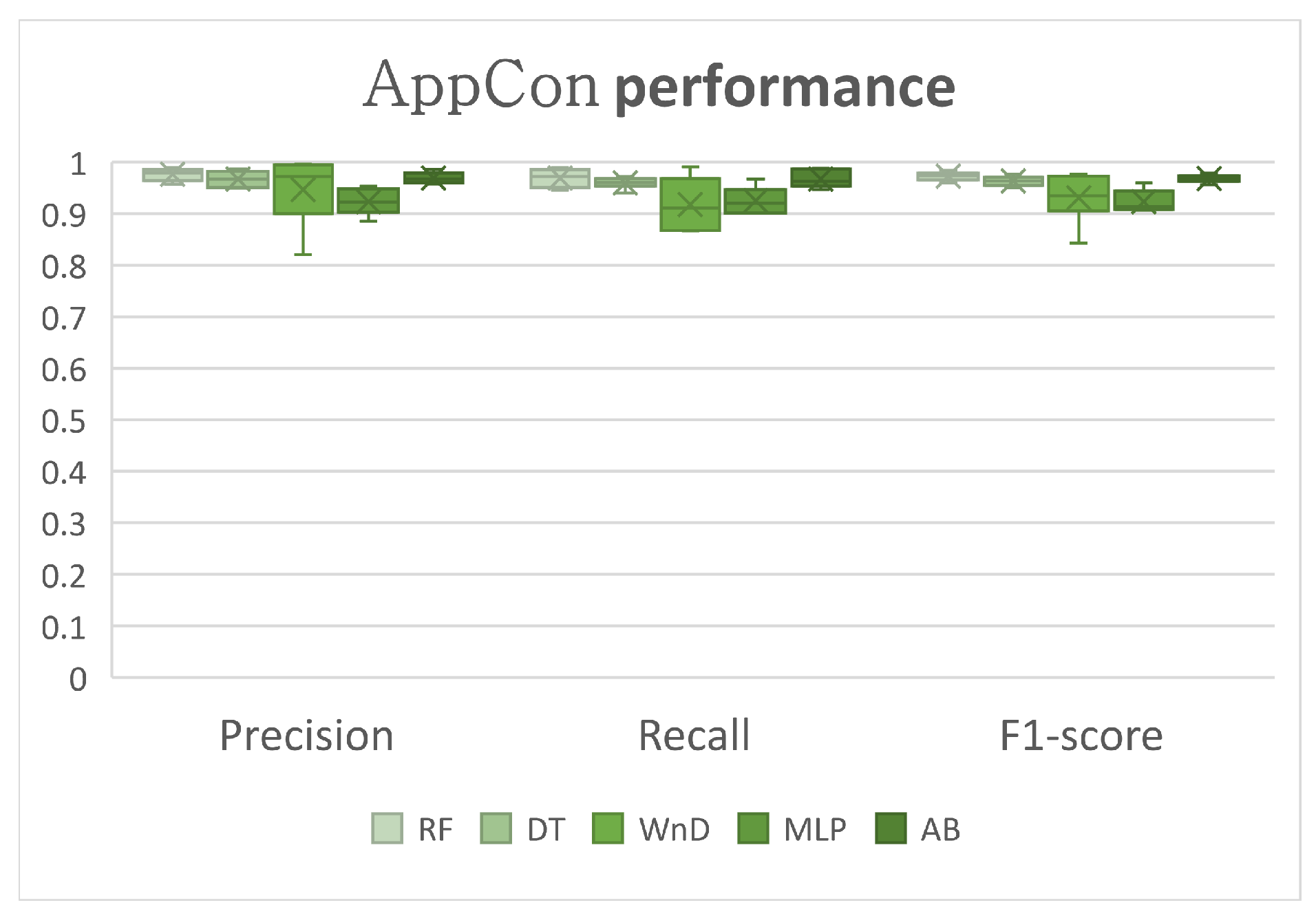

4.1. Baseline Performance in Non-Adversarial Settings

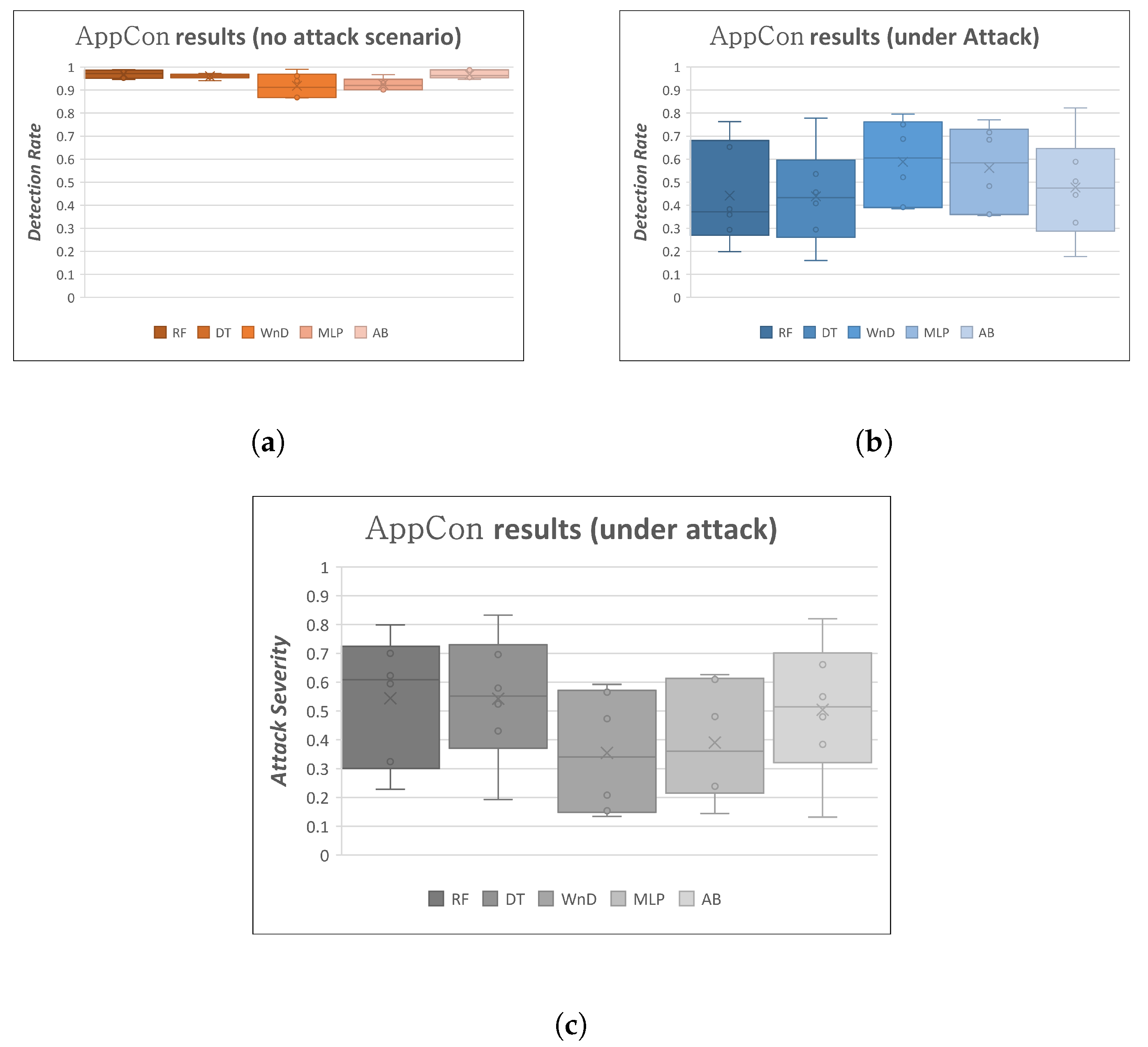

4.2. Adversarial Samples against Baseline Detectors

4.3. Hardened Performance in Non-Adversarial Settings

4.4. Countermeasure Effectiveness

4.5. Considerations

- the attacker must know (fully or partially) the set of web-applications A considered by AppCon.

- the attacker must know the characteristics of the traffic that the applications in this set generate in the targeted organization.

- the attacker must be able to modify its malicious botnet communications so as to conform to that traffic.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Gardiner, J.; Nagaraja, S. On the security of machine learning in malware c&c detection: A survey. ACM Comput. Surv. 2016, 49, 59.

- Biggio, B.; Roli, F. Wild patterns: Ten years after the rise of adversarial machine learning. Elsevier Pattern Recogn. 2018, 84, 317–331.

- Buczak, A.L.; Guven, E. A survey of data mining and machine learning methods for cyber security intrusion detection. IEEE Commun. Surv. Tutor. 2016, 18, 1153–1176.

- Huang, L.; Joseph, A.D.; Nelson, B.; Rubinstein, B.I.; Tygar, J. Adversarial machine learning. In Proceedings of the 4th ACM Workshop on Security and Artificial Intelligence, Chicago, IL, USA, 21 October 2011; pp. 43–58.

- Biggio, B.; Nelson, B.; Laskov, P. Poisoning attacks against support vector machines. In Proceedings of the 29th International Conference on Machine Learning, Edinburgh, UK, 26 June–1 July 2012; pp. 1467–1474.

- Biggio, B.; Corona, I.; Maiorca, D.; Nelson, B.; Šrndić, N.; Laskov, P.; Giacinto, G.; Roli, F. Evasion attacks against machine learning at test time. In Proceedings of the 2013 European Conference on Machine Learning and Knowledge Discovery in Databases, Prague, Czech Republic, 23–27 September 2013; pp. 387–402.

- Papernot, N.; McDaniel, P.; Sinha, A.; Wellman, M. SoK: Security and Privacy in Machine Learning. In Proceedings of the 2018 IEEE European Symposium on Security and Privacy (EuroS&P), London, UK, 24–26 April 2018; pp. 399–414.

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrucken, Germany, 21–24 March 2016; pp. 372–387.

- Su, J.; Vargas, D.V.; Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 2019.

- Wu, D.; Fang, B.; Wang, J.; Liu, Q.; Cui, X. Evading Machine Learning Botnet Detection Models via Deep Reinforcement Learning. In Proceedings of the 2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Apruzzese, G.; Colajanni, M.; Marchetti, M. Evaluating the effectiveness of Adversarial Attacks against Botnet Detectors. In Proceedings of the 2019 IEEE 18th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 26–28 September 2019; pp. 1–8.

- Laskov, P. Practical evasion of a learning-based classifier: A case study. In Proceedings of the 2014 IEEE Symposium on Security and Privacy, San Jose, CA, USA, 18–21 May 2014; pp. 197–211.

- Demontis, A.; Russu, P.; Biggio, B.; Fumera, G.; Roli, F. On security and sparsity of linear classifiers for adversarial settings. In Proceedings of the Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR), Mérida, Mexico, 29 November–2 December 2016; pp. 322–332.

- Demontis, A.; Melis, M.; Biggio, B.; Maiorca, D.; Arp, D.; Rieck, K.; Corona, I.; Giacinto, G.; Roli, F. Yes, machine learning can be more secure! A case study on android malware detection. IEEE Trans. Dependable Secur. Comput. 2017.

- Corona, I.; Biggio, B.; Contini, M.; Piras, L.; Corda, R.; Mereu, M.; Mureddu, G.; Ariu, D.; Roli, F. Deltaphish: Detecting phishing webpages in compromised websites. In Proceedings of ESORICS 2017, the 22nd European Symposium on Research in Computer Security, Oslo, Norway, 11–15 September 2017; pp. 370–388.

- Liang, B.; Su, M.; You, W.; Shi, W.; Yang, G. Cracking classifiers for evasion: A case study on the google’s phishing pages filter. In Proceedings of the 25th International World Wide Web Conference (WWW 2016), Montréal, QC, Canada, 11–15 April 2016; pp. 345–356.

- Muñoz-González, L.; Biggio, B.; Demontis, A.; Paudice, A.; Wongrassamee, V.; Lupu, E.C.; Roli, F. Towards poisoning of deep learning algorithms with back-gradient optimization. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 27–38.

- Apruzzese, G.; Colajanni, M. Evading botnet detectors based on flows and Random Forest with adversarial samples. In Proceedings of the 2018 IEEE 17th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 1–3 November 2018; pp. 1–8.

- Apruzzese, G.; Colajanni, M.; Ferretti, L.; Marchetti, M. Addressing Adversarial Attacks against Security Systems based on Machine Learning. In Proceedings of the 2019 11th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 28–31 May 2019; pp. 1–18.

- Grosse, K.; Papernot, N.; Manoharan, P.; Backes, M.; McDaniel, P. Adversarial perturbations against deep neural networks for malware classification. arXiv 2016, arXiv:1606.04435.

- Calzavara, S.; Lucchese, C.; Tolomei, G. Adversarial Training of Gradient-Boosted Decision Trees. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM’19), Beijing, China, 3–7 November 2019; pp. 2429–2432.

- Biggio, B.; Corona, I.; He, Z.M.; Chan, P.P.; Giacinto, G.; Yeung, D.S.; Roli, F. One-and-a-half-class multiple classifier systems for secure learning against evasion attacks at test time. In Proceedings of the 12th International Workshop, MCS 2015, Günzburg, Germany, 29 June–1 July 2015; pp. 168–180.

- Kettani, H.; Wainwright, P. On the Top Threats to Cyber Systems. In Proceedings of the 2019 IEEE 2nd International Conference on Information and Computer Technologies (ICICT), Kahului, HI, USA, 14–17 March 2019; pp. 175–179.

- Garcia, S.; Grill, M.; Stiborek, J.; Zunino, A. An empirical comparison of botnet detection methods. Elsevier Comput. Secur. 2014, 45, 100–123.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Jordan, M.I.; Mitchell, T.M. Machine Learning: Trends, Perspectives, and Prospects. Science 2015, 349, 255–260.

- Truong, T.C.; Diep, Q.B.; Zelinka, I. Artificial Intelligence in the Cyber Domain: Offense and Defense. Symmetry 2020, 12, 410.

- Apruzzese, G.; Colajanni, M.; Ferretti, L.; Guido, A.; Marchetti, M. On the Effectiveness of Machine and Deep Learning for Cybersecurity. In Proceedings of the 2018 10th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 29 May–1 June 2018; pp. 371–390.

- Xu, R.; Cheng, J.; Wang, F.; Tang, X.; Xu, J. A DRDoS detection and defense method based on deep forest in the big data environment. Symmetry 2019, 11, 78.

- Yavanoglu, O.; Aydos, M. A review on cyber security datasets for machine learning algorithms. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 2186–2193.

- Cheng, H.T.; Koc, L.; Harmsen, J.; Shaked, T.; Chandra, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; et al. Wide & deep learning for recommender systems. In Proceedings of the Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 7–10.

- Blanzieri, E.; Bryl, A. A survey of learning-based techniques of email spam filtering. Artif. Intell. Rev. 2008, 29, 63–92.

- Sommer, R.; Paxson, V. Outside the closed world: On using machine learning for network intrusion detection. In Proceedings of the 2010 IEEE Symposium on Security and Privacy, Berkeley/Oakland, CA, USA, 16–19 May 2010; pp. 305–316.

- Alazab, M.; Venkatraman, S.; Watters, P.; Alazab, M. Zero-day malware detection based on supervised learning algorithms of API call signatures. In Proceedings of the Ninth Australasian Data Mining Conference, Ballarat, Australia, 1–2 December 2011; Volume 121, pp. 171–182.

- Mannino, M.; Yang, Y.; Ryu, Y. Classification algorithm sensitivity to training data with non representative attribute noise. Elsevier Decis. Support Syst. 2009, 46, 743–751.

- Witten, I.H.; Frank, E.; Hall, M.A.; Pal, C.J. Data Mining: Practical Machine Learning Tools and Techniques; Morgan Kaufmann: Burlington, MA, USA, 2016.

- Dalvi, N.; Domingos, P.; Sanghai, S.; Verma, D. Adversarial classification. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 99–108.

- Lowd, D.; Meek, C. Adversarial learning. In Proceedings of the Eleventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, 21–24 August 2005; pp. 641–647.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Gama, J.; Žliobaitė, I.; Bifet, A.; Pechenizkiy, M.; Bouchachia, A. A survey on concept drift adaptation. ACM Comput. Surv. 2014, 46, 44.

- Kantchelian, A.; Afroz, S.; Huang, L.; Islam, A.C.; Miller, B.; Tschantz, M.C.; Greenstadt, R.; Joseph, A.D.; Tygar, J. Approaches to adversarial drift. In Proceedings of the 2013 ACM Workshop on Artificial Intelligence and Security, Berlin, Germany, 4 November 2013; pp. 99–110.

- Xu, W.; Qi, Y.; Evans, D. Automatically evading classifiers. In Proceedings of the Network and Distributed Systems Symposium 2016, San Diego, CA, USA, 21–24 February 2016; pp. 21–24.

- Ibitoye, O.; Shafiq, O.; Matrawy, A. Analyzing adversarial attacks against deep learning for intrusion detection in IoT networks. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019.

- Papernot, N.; McDaniel, P.; Wu, X.; Jha, S.; Swami, A. Distillation as a defense to adversarial perturbations against deep neural networks. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; pp. 582–597.

- Fajana, O.; Owenson, G.; Cocea, M. TorBot Stalker: Detecting Tor Botnets through Intelligent Circuit Data Analysis. In Proceedings of the 2018 IEEE 17th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 1–3 November 2018; pp. 1–8.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 39–57.

- Anderson, H.S.; Woodbridge, J.; Filar, B. DeepDGA: Adversarially-tuned domain generation and detection. In Proceedings of the 2016 ACM Workshop on Artificial Intelligence and Security, Vienna, Austria, 28 October 2016; pp. 13–21.

- Kantchelian, A.; Tygar, J.D.; Joseph, A. Evasion and hardening of tree ensemble classifiers. In Proceedings of the 33rd International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016; pp. 2387–2396.

- Zhang, F.; Chan, P.P.; Biggio, B.; Yeung, D.S.; Roli, F. Adversarial feature selection against evasion attacks. IEEE Trans Cybern. 2016, 46, 766–777.

- Gourdeau, P.; Kanade, V.; Kwiatkowska, M.; Worrell, J. On the hardness of robust classification. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; pp. 7444–7453.

- Do, C.T.; Tran, N.H.; Hong, C.; Kamhoua, C.A.; Kwiat, K.A.; Blasch, E.; Ren, S.; Pissinou, N.; Iyengar, S.S. Game theory for cyber security and privacy. ACM Comput. Surv. 2017, 50, 30.

- Wooldridge, M. Does game theory work? IEEE Intell. Syst. 2012, 27, 76–80.

- Pavlovic, D. Gaming security by obscurity. In Proceedings of the 2011 New Security Paradigms Workshop, Marin County, CA, USA, 12–15 September 2011; pp. 125–140.

- Cisco IOS NetFlow. Available online: https://www.cisco.com/c/en/us/products/ios-nx-os-software/ios-netflow/ (accessed on 14 April 2020).

- Xiang, C.; Binxing, F.; Lihua, Y.; Xiaoyi, L.; Tianning, Z. Andbot: Towards advanced mobile botnets. In Proceedings of the 4th USENIX Workshop on Large-Scale Exploits and Emergent Threats, LEET ’11, Boston, MA, USA, 29 March 2011; p. 11.

- Marchetti, M.; Pierazzi, F.; Guido, A.; Colajanni, M. Countering Advanced Persistent Threats through security intelligence and big data analytics. In Proceedings of the 2016 8th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 31 May–3 June 2016; pp. 243–261.

- Bridges, R.A.; Glass-Vanderlan, T.R.; Iannacone, M.D.; Vincent, M.S.; Chen, Q.G. A Survey of Intrusion Detection Systems Leveraging Host Data. ACM Comput. Surv. 2019, 52, 128.

- Berman, D.S.; Buczak, A.L.; Chavis, J.S.; Corbett, C.L. A survey of deep learning methods for cyber security. Information 2019, 10, 122.

- Pierazzi, F.; Apruzzese, G.; Colajanni, M.; Guido, A.; Marchetti, M. Scalable architecture for online prioritisation of cyber threats. In Proceedings of the 2017 9th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 30 May–2 June 2017; pp. 1–18.

- OpenArgus. Available online: https://qosient.com/argus/ (accessed on 14 April 2020).

- Stevanovic, M.; Pedersen, J.M. An analysis of network traffic classification for botnet detection. In Proceedings of the 2015 International Conference on Cyber Situational Awareness, Data Analytics and Assessment (CyberSA), London, UK, 8–9 June 2015; pp. 1–8.

- Abraham, B.; Mandya, A.; Bapat, R.; Alali, F.; Brown, D.E.; Veeraraghavan, M. A Comparison of Machine Learning Approaches to Detect Botnet Traffic. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Alejandre, F.V.; Cortés, N.C.; Anaya, E.A. Feature selection to detect botnets using machine learning algorithms. In Proceedings of the 2017 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 22–24 February 2017; pp. 1–7.

- Pektaş, A.; Acarman, T. Deep learning to detect botnet via network flow summaries. Neural Comput. Appl. 2019, 31, 8021–8033.

- Stevanovic, M.; Pedersen, J.M. An efficient flow-based botnet detection using supervised machine learning. In Proceedings of the 2014 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 3–6 February 2014; pp. 797–801.

- WhatsApp Customer Stories. 2019. Available online: https://www.whatsapp.com/business/customer-stories (accessed on 14 April 2020).

- OneDrive Customer Stories. 2019. Available online: https://products.office.com/en-us/onedrive-for-business/customer-stories (accessed on 14 April 2020).

- General Electric Uses Teams. 2019. Available online: https://products.office.com/en-us/business/customer-stories/726925-general-electric-microsoft-teams (accessed on 14 April 2020).

- OneNote Testimonials. 2019. Available online: https://products.office.com/en/business/office-365-customer-stories-office-testimonials (accessed on 14 April 2020).

- nProbe: An Extensible NetFlow v5/v9/IPFIX Probe for IPv4/v6. 2015. Available online: http://www.ntop.org/products/netflow/nprobe/ (accessed on 14 April 2020).

- Apruzzese, G.; Pierazzi, F.; Colajanni, M.; Marchetti, M. Detection and Threat Prioritization of Pivoting Attacks in Large Networks. IEEE Trans. Emerg. Top. Comput. 2017.

- Apruzzese, G.; Andreolini, M.; Colajanni, M.; Marchetti, M. Hardening Random Forest Cyber Detectors against Adversarial Attacks. IEEE Trans. Emerg. Top. Comput. Intell. 2019.

- Xin, Y.; Kong, L.; Liu, Z.; Chen, Y.; Li, Y.; Zhu, H.; Gao, M.; Hou, H.; Wang, C. Machine learning and deep learning methods for cybersecurity. IEEE Access 2018, 6, 35365–35381.

- Usama, M.; Asim, M.; Latif, S.; Qadir, J. Generative Adversarial Networks for Launching and Thwarting Adversarial Attacks on Network Intrusion Detection Systems. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 78–83.

| Date Flow Start | Duration | Proto | Src IP Addr:Port | Dst IP Addr:Port | Packets | Bytes |
|---|---|---|---|---|---|---|
| 2020-09-01 00:00:00.459 | 2.346 | TCP | 192.168.0.142:24920 | 192.168.0.1:22126 | 1 | 46 |
| 2020-09-01 00:00:00.763 | 1.286 | UDP | 192.168.0.145:22126 | 192.168.0.254:24920 | 1 | 80 |

| Scenario | Duration (h) | Size (GB) | Packets | Netflows | Malicious Flows | Benign Flows | Botnet | # Bots |
|---|---|---|---|---|---|---|---|---|
| 1 | 6.15 | 52 | 71,971,482 | 2,824,637 | 40,959 | 2,783,677 | Neris | 1 |
| 2 | 4.21 | 60 | 71,851,300 | 1,808,122 | 20,941 | 1,787,181 | Neris | 1 |
| 3 | 66.85 | 121 | 167,730,395 | 4,710,638 | 26,822 | 4,683,816 | Rbot | 1 |
| 4 | 4.21 | 53 | 62,089,135 | 1,121,076 | 1,808 | 1,119,268 | Rbot | 1 |
| 5 | 11.63 | 38 | 4,481,167 | 129,832 | 901 | 128,931 | Virut | 1 |
| 6 | 2.18 | 30 | 38,764,357 | 558,919 | 4,630 | 554,289 | Menti | 1 |
| 7 | 0.38 | 6 | 7,467,139 | 114,077 | 63 | 114,014 | Sogou | 1 |
| 8 | 19.5 | 123 | 155,207,799 | 2,954,230 | 6,126 | 2,948,104 | Murlo | 1 |
| 9 | 5.18 | 94 | 115,415,321 | 2,753,884 | 184,979 | 2,568,905 | Neris | 10 |
| 10 | 4.75 | 73 | 90,389,782 | 1,309,791 | 106,352 | 1,203,439 | Rbot | 10 |
| 11 | 0.26 | 5 | 6,337,202 | 107,251 | 8,164 | 99,087 | Rbot | 3 |
| 12 | 1.21 | 8 | 13,212,268 | 325,471 | 2,168 | 323,303 | NSIS.ay | 3 |
| 13 | 16.36 | 34 | 50,888,256 | 1,925,149 | 39,993 | 1,885,156 | Virut | 1 |
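The class imbalance in the scenarios tabulated above can be quantified with a short calculation. The sketch below copies the flow counts of three scenarios from the table and computes the share of malicious flows; the helper name `malicious_share` is an illustrative assumption.

```python
# Scenario number -> (malicious flows, total netflows), copied from the table.
SCENARIOS = {
    1: (40_959, 2_824_637),
    7: (63, 114_077),
    9: (184_979, 2_753_884),
}

def malicious_share(malicious, total):
    """Fraction of flows in a scenario that are labelled malicious."""
    return malicious / total

for scenario, (malicious, total) in SCENARIOS.items():
    share = malicious_share(malicious, total)
    # A detector that always answers "benign" would score 1 - share in Accuracy.
    print(f"Scenario {scenario}: {share:.4%} malicious, "
          f"trivial Accuracy {1 - share:.4%}")
```

Even in the scenario with the largest share of malicious flows, a detector that never raises an alarm would still obtain a high Accuracy, which is why Recall and Precision are more informative here.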

| # | Feature Name | Type |
|---|---|---|
| 1, 2 | src/dst IP address type | Bool |
| 3, 4 | src/dst port | Num |
| 5 | flow direction | Bool |
| 6 | connection state | Cat |
| 7 | duration (seconds) | Num |
| 8 | protocol | Cat |
| 9, 10 | src/dst ToS | Num |
| 11, 12 | src/dst bytes | Num |
| 13 | exchanged packets | Num |
| 14 | exchanged bytes | Num |
| 15, 16 | src/dst port type | Cat |
| 17 | bytes per second | Num |
| 18 | bytes per packet | Num |
| 19 | packets per second | Num |
| 20 | ratio of src/dst bytes | Num |
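Features 17 to 20 in the table above are derived from the basic flow counters. A minimal sketch of how they could be computed is shown below; it assumes that the exchanged bytes are the sum of source and destination bytes and that a zero denominator yields 0.0, neither of which is stated in the paper, and the function and key names are hypothetical.

```python
def derived_features(duration, src_bytes, dst_bytes, packets):
    """Compute the derived numerical features of a single network flow."""
    def safe_div(numerator, denominator):
        # Assumed convention: a zero denominator yields 0.0.
        return numerator / denominator if denominator else 0.0

    # Assumption: exchanged bytes = source bytes + destination bytes.
    total_bytes = src_bytes + dst_bytes
    return {
        "exchanged_bytes": total_bytes,
        "bytes_per_second": safe_div(total_bytes, duration),
        "bytes_per_packet": safe_div(total_bytes, packets),
        "packets_per_second": safe_div(packets, duration),
        "src_dst_bytes_ratio": safe_div(src_bytes, dst_bytes),
    }

# Hypothetical flow: 2 seconds, 300 bytes sent, 100 bytes received, 4 packets.
features = derived_features(duration=2.0, src_bytes=300, dst_bytes=100, packets=4)
print(features)
```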

| Group (g) | Altered Features |
|---|---|
| 1a | Duration (in seconds) |
| 1b | Src_bytes |
| 1c | Dst_bytes |
| 1d | Tot_pkts |
| 2a | Duration, Src_bytes |
| 2b | Duration, Dst_bytes |
| 2c | Duration, Tot_pkts |
| 2d | Src_bytes, Tot_pkts |
| 2e | Src_bytes, Dst_bytes |
| 2f | Dst_bytes, Tot_pkts |
| 3a | Duration, Src_bytes, Dst_bytes |
| 3b | Duration, Src_bytes, Tot_pkts |
| 3c | Duration, Dst_bytes, Tot_pkts |
| 3d | Src_bytes, Dst_bytes, Tot_pkts |
| 4a | Duration, Src_bytes, Dst_bytes, Tot_pkts |
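The 15 groups in the table above are exactly the non-empty subsets of the four alterable features. The sketch below enumerates them with `itertools.combinations`; the function name is illustrative, and the order within each size may differ from the letter labels used in the table (for example, 2d and 2e are swapped).

```python
from itertools import combinations

FEATURES = ["Duration", "Src_bytes", "Dst_bytes", "Tot_pkts"]

def feature_groups(features):
    """Return every non-empty combination of features, smallest groups first."""
    groups = []
    for size in range(1, len(features) + 1):
        groups.extend(combinations(features, size))
    return groups

groups = feature_groups(FEATURES)
for group in groups:
    # The leading digit mirrors the group size used in the table labels.
    print(len(group), ", ".join(group))
```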

| Step (s) | Duration | Src_bytes | Dst_bytes | Tot_pkts |
|---|---|---|---|---|
| I | | | | |
| II | | | | |
| III | | | | |
| IV | | | | |
| V | | | | |
| VI | | | | |
| VII | | | | |
| VIII | | | | |
| IX | | | | |

| | Predicted: Malicious | Predicted: Benign |
|---|---|---|
| Actual: Malicious | TP | FN |
| Actual: Benign | FP | TN |

| Algorithm | F1-Score (std. dev.) | Precision (std. dev.) | Recall (std. dev.) |
|---|---|---|---|
| 𝖱𝖥 | () | () | () |
| 𝖬𝖫𝖯 | () | () | () |
| 𝖣𝖳 | () | () | () |
| 𝖶𝗇𝖣 | () | () | () |
| 𝖠𝖡 | () | () | () |
| Average | () | () | () |

| Algorithm | Recall (no-attack) | Recall (attack) | Attack Severity |
|---|---|---|---|
| 𝖱𝖥 | () | () | () |
| 𝖬𝖫𝖯 | () | () | () |
| 𝖣𝖳 | () | () | () |
| 𝖶𝗇𝖣 | () | () | () |
| 𝖠𝖡 | () | () | () |
| Average | () | () | () |

| Algorithm | F1-Score (std. dev.) | Precision (std. dev.) | Recall (std. dev.) |
|---|---|---|---|
| 𝖱𝖥 | () | () | () |
| 𝖬𝖫𝖯 | () | () | () |
| 𝖣𝖳 | () | () | () |
| 𝖶𝗇𝖣 | () | () | () |
| 𝖠𝖡 | () | () | () |
| Average | () | () | () |

| Detector | 𝖱𝖥 | 𝖬𝖫𝖯 | 𝖣𝖳 | 𝖶𝗇𝖣 | 𝖠𝖡 | avg |
|---|---|---|---|---|---|---|
| Baseline | 5:34 | 9:58 | 4:12 | 16:15 | 5:58 | 8:23 |
| Hardened | 9:48 | 16:31 | 6:37 | 24:22 | 10:09 | 13:29 |
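The averages in the table above, and the relative overhead of the hardened detectors, can be checked with a few lines of arithmetic. The sketch assumes that each entry is a `minutes:seconds` timestamp (the table does not state its unit, and the ratio is unaffected by that choice); all names in the code are illustrative.

```python
def to_seconds(stamp):
    """Convert an 'm:ss' string into a number of seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + int(seconds)

def to_stamp(seconds):
    """Convert seconds back into an 'm:ss' string, rounding to whole seconds."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}:{secs:02d}"

def mean_seconds(times):
    """Average duration, in seconds, over all detectors."""
    return sum(to_seconds(t) for t in times.values()) / len(times)

# Values copied from the table (the 'avg' column is recomputed below).
BASELINE = {"RF": "5:34", "MLP": "9:58", "DT": "4:12", "WnD": "16:15", "AB": "5:58"}
HARDENED = {"RF": "9:48", "MLP": "16:31", "DT": "6:37", "WnD": "24:22", "AB": "10:09"}

print("Baseline avg:", to_stamp(mean_seconds(BASELINE)))
print("Hardened avg:", to_stamp(mean_seconds(HARDENED)))

# Ratio of hardened to baseline time, per detector and on average.
overhead = {name: to_seconds(HARDENED[name]) / to_seconds(BASELINE[name])
            for name in BASELINE}
average_overhead = mean_seconds(HARDENED) / mean_seconds(BASELINE)
print(overhead, average_overhead)
```

Under this reading, the recomputed averages match the `avg` column, and the hardened detectors take roughly 1.6 times as long as the baseline ones on average.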

| Application | Blocked Samples | Forwarded Samples |
|---|---|---|
| Teams | | |
| OneDrive | | |
| OneNote | | |
| Skype | | |
| Average | | |

| Algorithm | Recall (no-attack) | Recall (attack) | Attack Severity |
|---|---|---|---|
| 𝖱𝖥 | () | () | () |
| 𝖬𝖫𝖯 | () | () | () |
| 𝖣𝖳 | () | () | () |
| 𝖶𝗇𝖣 | () | () | () |
| 𝖠𝖡 | () | () | () |
| Average | () | () | () |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Apruzzese, G.; Andreolini, M.; Marchetti, M.; Colacino, V.G.; Russo, G. AppCon: Mitigating Evasion Attacks to ML Cyber Detectors. Symmetry 2020, 12, 653. https://doi.org/10.3390/sym12040653
