Artificial Intelligence in the Cyber Domain: Offense and Defense

Abstract

1. Introduction

- To present the impact of AI techniques on cybersecurity: we provide a brief overview of AI and discuss the impact of AI in the cyber domain.

- Applications of AI for cybersecurity: we conduct a survey of the applications of AI for cybersecurity, which covers a wide range of cyber attack types.

- Discussion on the potential security threats from adversarial uses of AI technologies: we investigate various potential threats and attacks that may arise through the use of AI systems.

- Challenges and future directions: we discuss the potential research challenges and open research directions of AI in cybersecurity.

2. Research Methodology

- Papers which had titles belonging to subjects outside the scope of this research.

- Books, patent documents, technical reports, citations.

- Papers which were not written in English.

3. The Impact of AI on Cybersecurity

3.1. The Positive Uses of AI

- AI can discover new and sophisticated changes in attack flexibility: Conventional technology is focused on the past and relies heavily on known attackers and attacks, leaving blind spots when unusual events occur in new attacks. Intelligent technology now addresses these limitations of older defense technology. For example, privileged activity in an intranet can be monitored, and any significant change in privileged access operations can indicate a potential internal threat. If the detection is successful, the machine reinforces the validity of its actions and becomes more sensitive to similar patterns in the future. With more data and more examples, the machine can learn and adapt to detect anomalous operations faster and more accurately. This is especially useful as cyber-attacks become more sophisticated and hackers devise new and innovative approaches.

- AI can handle the volume of data: AI can enhance network security by developing autonomous security systems to detect attacks and respond to breaches. The volume of security alerts that appear daily can overwhelm security teams. Automatically detecting and responding to threats reduces the workload of network security experts and can help detect threats more effectively than other methods. When a large amount of security data is created and transmitted over the network every day, network security experts have increasing difficulty tracking and identifying attack factors quickly and reliably. This is where AI can help, by expanding the monitoring and detection of suspicious activities. It can also help network security personnel react to situations that they have not encountered before, replacing time-consuming manual analysis.

- An AI security system can learn over time to respond better to threats: AI helps detect threats based on application behavior and the activity of the whole network. Over time, an AI security system learns the regular network traffic and behavior and builds a baseline of what is normal. From there, any deviation from the norm can be flagged as a possible attack.
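The baseline idea in the last bullet can be illustrated with a minimal sketch. It is not the method of any surveyed system: it assumes a single hypothetical metric (requests per minute), summarizes normal behavior with a mean and standard deviation, and flags values more than `k` standard deviations away. The names `fit_baseline` and `is_anomalous`, the sample data, and the default `k=3.0` are all assumptions made for illustration.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """Flag a value lying more than k standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # Degenerate baseline: anything different from the mean is unusual.
        return value != mu
    return abs(value - mu) > k * sigma


# Hypothetical requests-per-minute observed during normal operation.
normal_traffic = [98, 102, 101, 97, 100, 103, 99, 100]
baseline = fit_baseline(normal_traffic)

print(is_anomalous(101, baseline))  # close to the learned baseline
print(is_anomalous(450, baseline))  # far from the learned baseline
```

A production system would track many features and update the baseline continuously; the sketch only shows the "learn normal, flag deviations" step.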

3.2. Drawbacks and Limitations of Using AI

- Data sets: Creating an AI system demands a considerable number of input samples, and obtaining and processing the samples can take a long time and a lot of resources.

- Resource requirements: Building and maintaining the underlying system requires an immense amount of resources, including memory, data, and computing power. Moreover, the skilled personnel needed to implement this technology come at a significant cost.

- False alarms: Frequent false alarms are an issue for end-users, disrupting business by potentially delaying any necessary response and generally affecting efficiency. The process of fine-tuning is a trade-off between reducing false alarms and maintaining the security level.

- Attacks on the AI-based system: Attackers can use various attack techniques that target AI systems, such as adversarial inputs, data poisoning, and model stealing.
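The false-alarm trade-off described in the list above can be made concrete with a small sketch. It assumes a detector that outputs an anomaly score and raises an alert when the score reaches a threshold; the scores, labels, and the helper `rates_at_threshold` are hypothetical and chosen only to show how raising the threshold lowers both the false positive rate and the detection rate.

```python
def rates_at_threshold(scores, labels, threshold):
    """Return (detection_rate, false_positive_rate) when alerts fire at score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    positives = sum(labels)
    negatives = len(labels) - positives
    return tp / positives, fp / negatives


# Hypothetical anomaly scores; label 1 = attack, 0 = benign.
scores = [0.95, 0.80, 0.60, 0.40, 0.70, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

for threshold in (0.25, 0.50, 0.75):
    dr, fpr = rates_at_threshold(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  detection rate={dr:.2f}  false positive rate={fpr:.2f}")
```

On this toy data, the lowest threshold catches every attack but alerts on half of the benign events, while the highest threshold raises no false alarms but misses half of the attacks.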

4. AI Methodology for Cybersecurity

4.1. Learning Algorithms

- Supervised learning: This type requires a training process with a large and representative set of data that has been previously labeled. These learning algorithms are frequently used as a classification mechanism or a regression mechanism.

- Unsupervised learning: In contrast to supervised learning, unsupervised learning algorithms use unlabeled training datasets. These approaches are often used to cluster data, reduce dimensionality, or estimate density.

- Reinforcement learning: Reinforcement learning learns the best actions based on rewards or punishments. It is useful for situations where data is limited or not given.
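The difference between the first two categories above can be sketched on the same data. The example is illustrative only: it assumes a single hypothetical feature (failed logins per hour), uses a nearest-centroid classifier as a stand-in for supervised learning, and a one-dimensional k-means with k = 2 as a stand-in for unsupervised learning. All function names and values are assumptions.

```python
def train_nearest_centroid(values, labels):
    """Supervised: use the labels to compute one centroid per class."""
    centroids = {}
    for label in set(labels):
        members = [v for v, y in zip(values, labels) if y == label]
        centroids[label] = sum(members) / len(members)
    return centroids


def predict(centroids, value):
    """Assign the class whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def two_means(values, iterations=10):
    """Unsupervised: split unlabeled values into two clusters (1-D k-means, k=2).

    Assumes the input contains at least two distinct values.
    """
    low, high = min(values), max(values)  # deterministic initial centers
    for _ in range(iterations):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return low, high


# Hypothetical feature: failed logins per hour for eight hosts.
failed_logins = [1, 2, 3, 2, 40, 45, 50, 42]
labels = ["benign"] * 4 + ["attack"] * 4

model = train_nearest_centroid(failed_logins, labels)
print(predict(model, 38))        # classified using labeled examples
print(two_means(failed_logins))  # cluster centers found without any labels
```

The supervised model can name the class of a new sample because it was given labels; the clustering step only discovers that two groups exist and leaves their interpretation to the analyst.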

4.2. Machine Learning Methods

4.3. Deep Learning Methods

4.4. Bio-Inspired Computation Methods

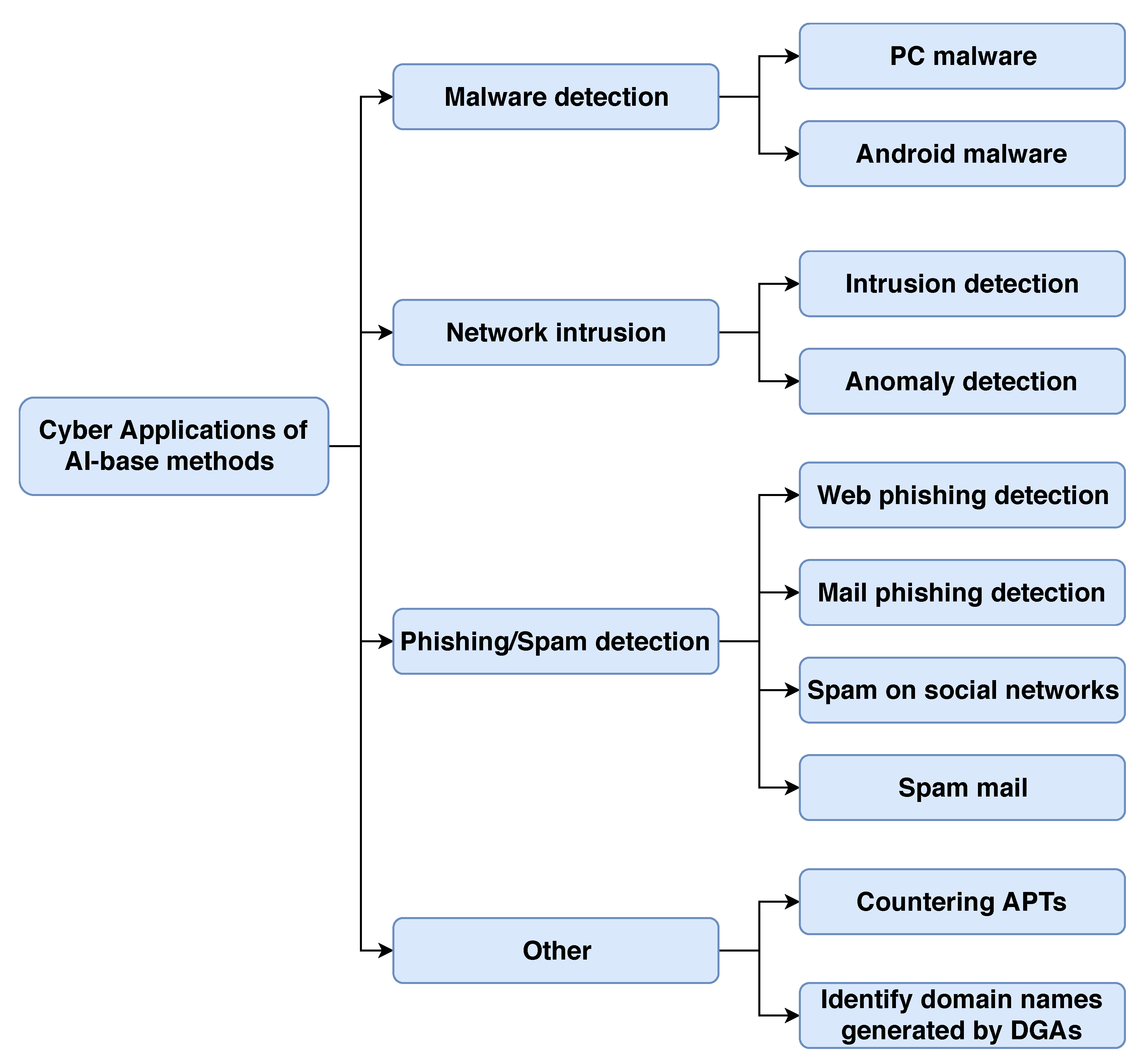

5. AI-Based Approaches for Defending Against Cyberspace Attacks

5.1. Malware Identification

5.2. Intrusion Detection

5.3. Phishing and SPAM Detection

5.4. Other: Counter APTs and Identify DGAs

5.4.1. Countering an Advanced, Persistent Threat

5.4.2. Identifying Domain Names Generated by DGAs

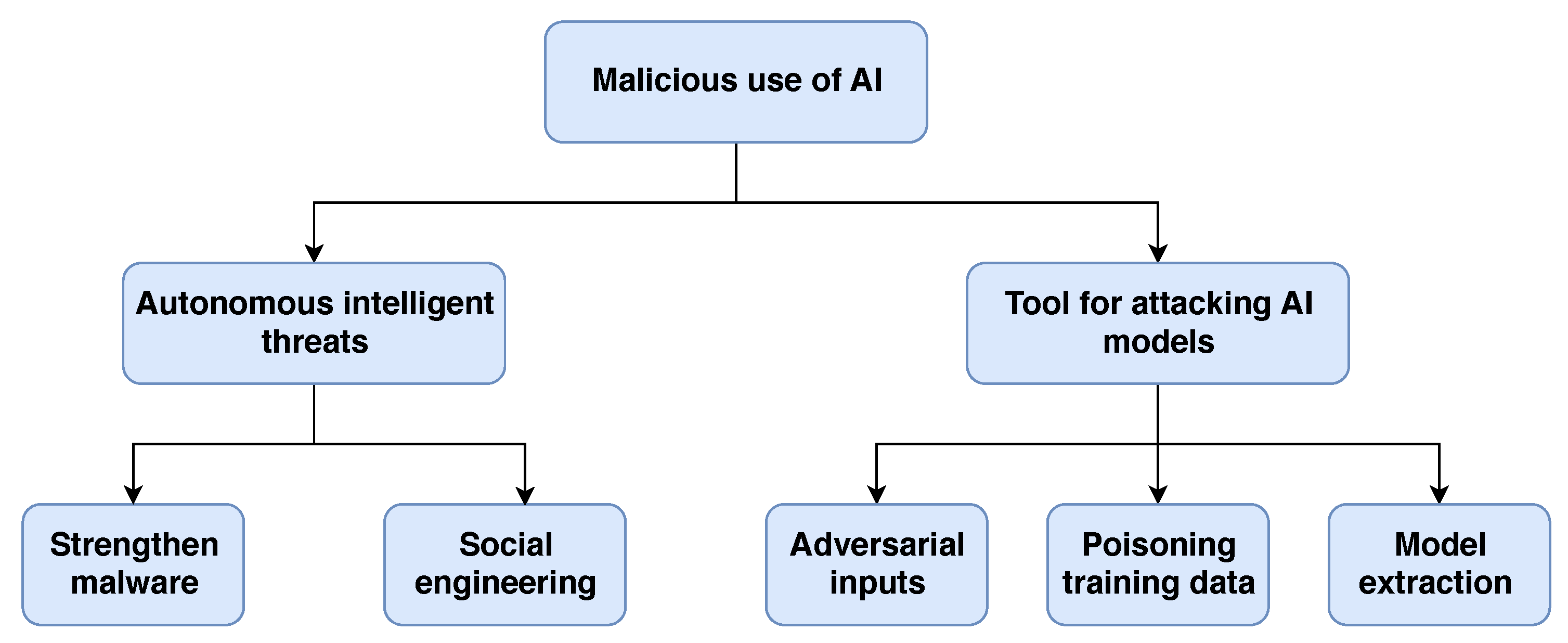

6. The Nefarious Use of AI

6.1. AI and Autonomous Intelligent Threats

6.1.1. AI-Powered Malware

6.1.2. AI Used in Social Engineering Attacks

6.2. AI as a Tool for Attacking AI Models

- Adversarial inputs: This is a technique where malicious actors design inputs that make models predict erroneously in order to evade detection. Recent studies have demonstrated how to generate adversarial malware samples to avoid detection. The authors of [68,69] crafted adversarial examples to attack Android malware detection models. Meanwhile, the authors of [70] presented a generative adversarial network (GAN) based algorithm called MalGAN to craft adversarial samples capable of bypassing black-box machine learning-based detection models. Another approach by Anderson et al. [71] adopted a GAN to create adversarial domain names that evade detectors of domain generation algorithms (DGAs). The authors of [72] investigated adversarial generation methods to avoid detection by DL models. Finally, in [73], the authors presented a framework based on reinforcement learning for attacking static portable executable (PE) anti-malware engines.

- Poisoning training data: In this kind of attack, malicious actors pollute the training data from which the algorithm learns in a way that reduces the detection capabilities of the system. Different domains are vulnerable to poisoning attacks, for example, network intrusion detection, spam filtering, or malware analysis [74,75].

- Model extraction attacks: These techniques are used to reconstruct the detection models or recover training data via black-box examination [76]. In this case, the attacker learns how the ML algorithms work through reverse engineering. From this knowledge, the malicious actors know what the detector engines are looking for and how to avoid it.
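The black-box examination mentioned in the last bullet can be illustrated with a deliberately simple toy. It is not the attack from [76]: it assumes a hypothetical detector that flags any input whose single score reaches a hidden threshold, and shows how an observer who sees only the verdicts can approximate that threshold by bisection. The names `make_black_box` and `extract_threshold` and the value 0.37 are assumptions.

```python
def make_black_box(hidden_threshold):
    """Return a detector that reveals only its verdict, not its threshold."""
    def detector(score):
        return score >= hidden_threshold  # True means "flagged as malicious"
    return detector


def extract_threshold(detector, low=0.0, high=1.0, queries=20):
    """Estimate the decision boundary by bisecting on the detector's answers.

    Assumes the detector does not flag `low` and does flag `high`.
    """
    for _ in range(queries):
        mid = (low + high) / 2
        if detector(mid):
            high = mid  # boundary is at or below mid
        else:
            low = mid   # boundary is above mid
    return (low + high) / 2


# Hypothetical detector; the caller never reads the value 0.37 directly.
detector = make_black_box(hidden_threshold=0.37)
estimate = extract_threshold(detector)
print(f"estimated threshold: {estimate:.4f}")
```

Each query halves the remaining uncertainty, which is why a handful of queries suffices in this one-dimensional setting; real detection models have many features and would require far more queries.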

7. Challenges and Open Research Directions

7.1. Challenges

7.2. Open Research Directions

8. Discussion

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| ML | Machine learning |
| DL | Deep learning |
| DT | Decision trees |
| SVM | Support vector machines |
| KNN | K-nearest neighbor |
| RF | Random forest |
| AR | Association rule algorithms |
| EL | Ensemble learning |
| PCA | Principal component analysis |
| FNN | Feedforward neural networks |
| CNNs | Convolutional neural networks |
| RNN | Recurrent neural networks |
| DBNs | Deep belief networks |
| SAE | Stacked autoencoders |
| GANs | Generative adversarial networks |
| RBMs | Restricted Boltzmann machines |
| EDLNs | Ensemble of deep learning networks |
| GA | Genetic algorithms |
| ES | Evolution strategies |
| ACO | Ant colony optimization |
| PSO | Particle swarm optimization |
| AIS | Artificial immune systems |

References

1. Buczak, A.L.; Guven, E. A survey of data mining and machine learning methods for cyber security intrusion detection. IEEE Commun. Surv. Tutor. 2015, 18, 1153–1176.

2. Torres, J.M.; Comesaña, C.I.; García-Nieto, P.J. Machine learning techniques applied to cybersecurity. Int. J. Mach. Learn. Cybern. 2019, 1–14.

3. Guan, Z.; Bian, L.; Shang, T.; Liu, J. When machine learning meets security issues: A survey. In Proceedings of the 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Shenyang, China, 24–27 August 2018; pp. 158–165.

4. Xin, Y.; Kong, L.; Liu, Z.; Chen, Y.; Li, Y.; Zhu, H.; Gao, M.; Hou, H.; Wang, C. Machine learning and deep learning methods for cybersecurity. IEEE Access 2018, 6, 35365–35381.

5. Berman, D.S.; Buczak, A.L.; Chavis, J.S.; Corbett, C.L. A survey of deep learning methods for cyber security. Information 2019, 10, 122.

6. Wickramasinghe, C.S.; Marino, D.L.; Amarasinghe, K.; Manic, M. Generalization of Deep Learning for Cyber-Physical System Security: A Survey. In Proceedings of the IECON 2018 - 44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018; pp. 745–751.

7. Apruzzese, G.; Colajanni, M.; Ferretti, L.; Guido, A.; Marchetti, M. On the effectiveness of machine and deep learning for cyber security. In Proceedings of the 2018 10th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 30 May–1 June 2018; pp. 371–390.

8. Li, J.H. Cyber security meets artificial intelligence: A survey. Front. Inf. Technol. Electron. Eng. 2018, 19, 1462–1474.

9. Xu, Z.; Ray, S.; Subramanyan, P.; Malik, S. Malware detection using machine learning based analysis of virtual memory access patterns. In Proceedings of the Conference on Design, Automation & Test in Europe, Lausanne, Switzerland, 27–31 March 2017; pp. 169–174.

10. Chowdhury, M.; Rahman, A.; Islam, R. Malware analysis and detection using data mining and machine learning classification. In Proceedings of the International Conference on Applications and Techniques in Cyber Security and Intelligence, Ningbo, China, 16–18 June 2017; pp. 266–274.

11. Hashemi, H.; Azmoodeh, A.; Hamzeh, A.; Hashemi, S. Graph embedding as a new approach for unknown malware detection. J. Comput. Virol. Hacking Tech. 2017, 13, 153–166.

12. Ye, Y.; Chen, L.; Hou, S.; Hardy, W.; Li, X. DeepAM: A heterogeneous deep learning framework for intelligent malware detection. Knowl. Inf. Syst. 2018, 54, 265–285.

13. McLaughlin, N.; Martinez del Rincon, J.; Kang, B.; Yerima, S.; Miller, P.; Sezer, S.; Safaei, Y.; Trickel, E.; Zhao, Z.; Doupé, A.; et al. Deep android malware detection. In Proceedings of the Seventh ACM on Conference on Data and Application Security and Privacy, Scottsdale, AZ, USA, 22–24 March 2017; pp. 301–308.

14. Li, J.; Sun, L.; Yan, Q.; Li, Z.; Srisa-an, W.; Ye, H. Significant permission identification for machine-learning-based android malware detection. IEEE Trans. Ind. Inform. 2018, 14, 3216–3225.

15. Zhu, H.J.; You, Z.H.; Zhu, Z.X.; Shi, W.L.; Chen, X.; Cheng, L. DroidDet: Effective and robust detection of android malware using static analysis along with rotation forest model. Neurocomputing 2018, 272, 638–646.

16. Karbab, E.B.; Debbabi, M.; Derhab, A.; Mouheb, D. MalDozer: Automatic framework for android malware detection using deep learning. Digit. Investig. 2018, 24, S48–S59.

17. Wang, W.; Zhao, M.; Wang, J. Effective android malware detection with a hybrid model based on deep autoencoder and convolutional neural network. J. Ambient Intell. Humaniz. Comput. 2019, 10, 3035–3043.

18. Ab Razak, M.F.; Anuar, N.B.; Othman, F.; Firdaus, A.; Afifi, F.; Salleh, R. Bio-inspired for features optimization and malware detection. Arab. J. Sci. Eng. 2018, 43, 6963–6979.

19. Altaher, A.; Barukab, O.M. Intelligent Hybrid Approach for Android Malware Detection based on Permissions and API Calls. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 60–67.

20. Bhattacharya, A.; Goswami, R.T.; Mukherjee, K. A feature selection technique based on rough set and improvised PSO algorithm (PSORS-FS) for permission based detection of Android malwares. Int. J. Mach. Learn. Cybern. 2019, 10, 1893–1907.

21. Alejandre, F.V.; Cortés, N.C.; Anaya, E.A. Feature selection to detect botnets using machine learning algorithms. In Proceedings of the 2017 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 22–24 February 2017; pp. 1–7.

22. Fatima, A.; Maurya, R.; Dutta, M.K.; Burget, R.; Masek, J. Android Malware Detection Using Genetic Algorithm based Optimized Feature Selection and Machine Learning. In Proceedings of the 2019 42nd International Conference on Telecommunications and Signal Processing (TSP), Budapest, Hungary, 1–3 July 2019; pp. 220–223.

23. Al-Yaseen, W.L.; Othman, Z.A.; Nazri, M.Z.A. Multi-level hybrid support vector machine and extreme learning machine based on modified K-means for intrusion detection system. Expert Syst. Appl. 2017, 67, 296–303.

24. Kabir, E.; Hu, J.; Wang, H.; Zhuo, G. A novel statistical technique for intrusion detection systems. Future Gener. Comput. Syst. 2018, 79, 303–318.

25. Ashfaq, R.A.R.; Wang, X.Z.; Huang, J.Z.; Abbas, H.; He, Y.L. Fuzziness based semi-supervised learning approach for intrusion detection system. Inf. Sci. 2017, 378, 484–497.

26. Shone, N.; Ngoc, T.N.; Phai, V.D.; Shi, Q. A deep learning approach to network intrusion detection. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 41–50.

27. Hamamoto, A.H.; Carvalho, L.F.; Sampaio, L.D.H.; Abrão, T.; Proença, M.L., Jr. Network anomaly detection system using genetic algorithm and fuzzy logic. Expert Syst. Appl. 2018, 92, 390–402.

28. Botes, F.H.; Leenen, L.; De La Harpe, R. Ant colony induced decision trees for intrusion detection. In Proceedings of the 16th European Conference on Cyber Warfare and Security, Dublin, Ireland, 29–30 June 2017; pp. 53–62.

29. Otero, F.E.; Freitas, A.A.; Johnson, C.G. Inducing decision trees with an ant colony optimization algorithm. Appl. Soft Comput. 2012, 12, 3615–3626.

30. Syarif, A.R.; Gata, W. Intrusion detection system using hybrid binary PSO and K-nearest neighborhood algorithm. In Proceedings of the 2017 11th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 31 October 2017; pp. 181–186.

31. Ali, M.H.; Al Mohammed, B.A.D.; Ismail, A.; Zolkipli, M.F. A new intrusion detection system based on fast learning network and particle swarm optimization. IEEE Access 2018, 6, 20255–20261.

32. Chen, W.; Liu, T.; Tang, Y.; Xu, D. Multi-level adaptive coupled method for industrial control networks safety based on machine learning. Saf. Sci. 2019, 120, 268–275.

33. Garg, S.; Batra, S. Fuzzified cuckoo based clustering technique for network anomaly detection. Comput. Electr. Eng. 2018, 71, 798–817.

34. Hajisalem, V.; Babaie, S. A hybrid intrusion detection system based on ABC-AFS algorithm for misuse and anomaly detection. Comput. Netw. 2018, 136, 37–50.

35. Garg, S.; Kaur, K.; Kumar, N.; Kaddoum, G.; Zomaya, A.Y.; Ranjan, R. A Hybrid Deep Learning based Model for Anomaly Detection in Cloud Datacentre Networks. IEEE Trans. Netw. Serv. Manag. 2019.

36. Khan, M.A.; Karim, M.; Kim, Y. A Scalable and Hybrid Intrusion Detection System Based on the Convolutional-LSTM Network. Symmetry 2019, 11, 583.

37. Selvakumar, B.; Muneeswaran, K. Firefly algorithm based feature selection for network intrusion detection. Comput. Secur. 2019, 81, 148–155.

38. Gu, T.; Chen, H.; Chang, L.; Li, L. Intrusion detection system based on improved abc algorithm with tabu search. IEEJ Trans. Electr. Electron. Eng. 2019, 14.

39. Smadi, S.; Aslam, N.; Zhang, L. Detection of online phishing email using dynamic evolving neural network based on reinforcement learning. Decis. Support Syst. 2018, 107, 88–102.

40. Jain, A.K.; Gupta, B.B. Towards detection of phishing websites on client-side using machine learning based approach. Telecommun. Syst. 2018, 68, 687–700.

41. Feng, F.; Zhou, Q.; Shen, Z.; Yang, X.; Han, L.; Wang, J. The application of a novel neural network in the detection of phishing websites. J. Ambient. Intell. Humaniz. Comput. 2018, 1–15.

42. Sahingoz, O.K.; Buber, E.; Demir, O.; Diri, B. Machine learning based phishing detection from URLs. Expert Syst. Appl. 2019, 117, 345–357.

43. Li, Y.; Yang, Z.; Chen, X.; Yuan, H.; Liu, W. A stacking model using URL and HTML features for phishing webpage detection. Future Gener. Comput. Syst. 2019, 94, 27–39.

44. Feng, W.; Sun, J.; Zhang, L.; Cao, C.; Yang, Q. A support vector machine based naive Bayes algorithm for spam filtering. In Proceedings of the 2016 IEEE 35th International Performance Computing and Communications Conference (IPCCC), Las Vegas, NV, USA, 9–11 December 2016; pp. 1–8.

45. Kumaresan, T.; Palanisamy, C. E-mail spam classification using S-cuckoo search and support vector machine. Int. J. Bio-Inspired Comput. 2017, 9, 142–156.

46. Sohrabi, M.K.; Karimi, F. A feature selection approach to detect spam in the Facebook social network. Arab. J. Sci. Eng. 2018, 43, 949–958.

47. Aswani, R.; Kar, A.K.; Ilavarasan, P.V. Detection of spammers in twitter marketing: A hybrid approach using social media analytics and bio inspired computing. Inf. Syst. Front. 2018, 20, 515–530.

48. Faris, H.; Ala’M, A.Z.; Heidari, A.A.; Aljarah, I.; Mafarja, M.; Hassonah, M.A.; Fujita, H. An intelligent system for spam detection and identification of the most relevant features based on evolutionary random weight networks. Inf. Fusion 2019, 48, 67–83.

49. Moon, D.; Im, H.; Kim, I.; Park, J.H. DTB-IDS: An intrusion detection system based on decision tree using behavior analysis for preventing APT attacks. J. Supercomput. 2017, 73, 2881–2895.

50. Sharma, P.K.; Moon, S.Y.; Moon, D.; Park, J.H. DFA-AD: A distributed framework architecture for the detection of advanced persistent threats. Clust. Comput. 2017, 20, 597–609.

51. Rosenberg, I.; Sicard, G.; David, E.O. DeepAPT: Nation-state APT attribution using end-to-end deep neural networks. In Proceedings of the International Conference on Artificial Neural Networks, Alghero, Sardinia, Italy, 11–14 September 2017; pp. 91–99.

52. Burnap, P.; French, R.; Turner, F.; Jones, K. Malware classification using self organising feature maps and machine activity data. Comput. Secur. 2018, 73, 399–410.

53. Ghafir, I.; Hammoudeh, M.; Prenosil, V.; Han, L.; Hegarty, R.; Rabie, K.; Aparicio-Navarro, F.J. Detection of advanced persistent threat using machine-learning correlation analysis. Future Gener. Comput. Syst. 2018, 89, 349–359.

54. Lison, P.; Mavroeidis, V. Automatic detection of malware-generated domains with recurrent neural models. arXiv 2017, arXiv:1709.07102.

55. Curtin, R.R.; Gardner, A.B.; Grzonkowski, S.; Kleymenov, A.; Mosquera, A. Detecting DGA domains with recurrent neural networks and side information. arXiv 2018, arXiv:1810.02023.

56. Yu, B.; Pan, J.; Hu, J.; Nascimento, A.; De Cock, M. Character level based detection of DGA domain names. In Proceedings of the IEEE 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

57. Tran, D.; Mac, H.; Tong, V.; Tran, H.A.; Nguyen, L.G. A LSTM based framework for handling multiclass imbalance in DGA botnet detection. Neurocomputing 2018, 275, 2401–2413.

58. Wang, Z.; Dong, H.; Chi, Y.; Zhang, J.; Yang, T.; Liu, Q. DGA and DNS Covert Channel Detection System based on Machine Learning. In Proceedings of the 3rd International Conference on Computer Science and Application Engineering, Sanya, China, 22–24 October 2019; p. 156.

59. Yang, L.; Zhai, J.; Liu, W.; Ji, X.; Bai, H.; Liu, G.; Dai, Y. Detecting Word-Based Algorithmically Generated Domains Using Semantic Analysis. Symmetry 2019, 11, 176.

60. Thanh, C.T.; Zelinka, I. A Survey on Artificial Intelligence in Malware as Next-Generation Threats. Mendel 2019, 25, 27–34.

61. Stoecklin, M.P. DeepLocker: How AI Can Power a Stealthy New Breed of Malware. Secur. Intell. 2018, 8. Available online: https://securityintelligence.com/deeplocker-how-ai-can-power-a-stealthy-new-breedof-malware/ (accessed on 8 February 2020).

62. Rigaki, M.; Garcia, S. Bringing a gan to a knife-fight: Adapting malware communication to avoid detection. In Proceedings of the 2018 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018; pp. 70–75.

63. Ney, P.; Koscher, K.; Organick, L.; Ceze, L.; Kohno, T. Computer Security, Privacy, and DNA Sequencing: Compromising Computers with Synthesized DNA, Privacy Leaks, and More. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017; pp. 765–779.

64. Zelinka, I.; Das, S.; Sikora, L.; Šenkeřík, R. Swarm virus-Next-generation virus and antivirus paradigm? Swarm Evol. Comput. 2018, 43, 207–224.

65. Truong, T.C.; Zelinka, I.; Senkerik, R. Neural Swarm Virus. In Swarm, Evolutionary, and Memetic Computing and Fuzzy and Neural Computing; Springer: Berlin, Germany, 2019; pp. 122–134.

66. Seymour, J.; Tully, P. Weaponizing data science for social engineering: Automated E2E spear phishing on Twitter. Black Hat USA 2016, 37.

67. Seymour, J.; Tully, P. Generative Models for Spear Phishing Posts on Social Media. arXiv 2018, arXiv:1802.05196.

68. Grosse, K.; Papernot, N.; Manoharan, P.; Backes, M.; McDaniel, P. Adversarial examples for malware detection. In Proceedings of the European Symposium on Research in Computer Security, Oslo, Norway, 11–15 September 2017; pp. 62–79.

69. Yang, W.; Kong, D.; Xie, T.; Gunter, C.A. Malware detection in adversarial settings: Exploiting feature evolutions and confusions in android apps. In Proceedings of the 33rd Annual Computer Security Applications Conference, Orlando, FL, USA, 4–8 December 2017; pp. 288–302.

70. Hu, W.; Tan, Y. Generating adversarial malware examples for black-box attacks based on GAN. arXiv 2017, arXiv:1702.05983.

71. Anderson, H.S.; Woodbridge, J.; Filar, B. DeepDGA: Adversarially-tuned domain generation and detection. In Proceedings of the 2016 ACM Workshop on Artificial Intelligence and Security, Vienna, Austria, 28 October 2016; pp. 13–21.

72. Kolosnjaji, B.; Demontis, A.; Biggio, B.; Maiorca, D.; Giacinto, G.; Eckert, C.; Roli, F. Adversarial malware binaries: Evading deep learning for malware detection in executables. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 533–537.

73. Anderson, H.S.; Kharkar, A.; Filar, B.; Evans, D.; Roth, P. Learning to evade static PE machine learning malware models via reinforcement learning. arXiv 2018, arXiv:1801.08917.

74. Li, P.; Liu, Q.; Zhao, W.; Wang, D.; Wang, S. BEBP: An poisoning method against machine learning based idss. arXiv 2018, arXiv:1803.03965.

75. Chen, S.; Xue, M.; Fan, L.; Hao, S.; Xu, L.; Zhu, H.; Li, B. Automated poisoning attacks and defenses in malware detection systems: An adversarial machine learning approach. Comput. Secur. 2018, 73, 326–344.

76. Tramèr, F.; Zhang, F.; Juels, A.; Reiter, M.K.; Ristenpart, T. Stealing machine learning models via prediction apis. In Proceedings of the 25th USENIX Security Symposium (USENIX Security 16), Austin, TX, USA, 10–12 August 2016; pp. 601–618.

77. Carlini, N.; Liu, C.; Erlingsson, Ú.; Kos, J.; Song, D. The secret sharer: Evaluating and testing unintended memorization in neural networks. In Proceedings of the 28th USENIX Security Symposium (USENIX Security 19), San Diego, CA, USA, 14–16 August 2019; pp. 267–284.

78. Resende, J.S.; Martins, R.; Antunes, L. A Survey on Using Kolmogorov Complexity in Cybersecurity. Entropy 2019, 21, 1196.

| Reference | Year | Focus | Technique | Features | Dataset | Validation Metrics |
|---|---|---|---|---|---|---|
| [9] | 2017 | PC malware | SVM, RF, logistic regression | MAP’s feature sets | RIPE | DR: 99%, FPR: 5% |
| [10] | 2017 | PC malware | BAM, MLP | N-gram, Windows API calls | Self collection: 52,185 samples | ACC: 98.6%, FPR: 2% |
| [11] | 2017 | PC malware | KNN, SVM | OpCode graph | Self collection: 22,200 samples | ACC, FPR |
| [13] | 2017 | Android malware | CNN | Opcode sequence | GNOME, McAfee Labs | ACC: 98%/80%/87%, F-score: 97%/78%/86% |
| [19] | 2017 | Android malware | ANF, PSO | Permissions, API calls | Self collection: 500 samples | ACC: 89% |
| [21] | 2017 | Botnet | C4.5, GA | Multi features | ISOT, ISCX | DR: 99.46%/95.58%, FPR: 0.57%/2.24% |
| [12] | 2018 | PC malware | AutoEncoder, RBM | Windows API calls | Self collection: 20,000 samples | ACC: 98.2% |
| [14] | 2018 | Android malware | SVM, DT | Significant permissions | Self collection: 54,694 samples | ACC: 93.67%, FPR: 4.85% |
| [15] | 2018 | Android malware | Rotation Forest | Permissions, APIs, system events | Self collection: 2,030 samples | ACC: 88.26% |
| [16] | 2018 | Android malware | ANN | API call | Malgenome, Drebin, Maldozer | F1-score: 96.33%, FPR: 3.19% |
| [18] | 2018 | Android malware | PSO, RF, J48, KNN, MLP, AdaBoost | Permissions | Self collection: 8,500 samples | TPR: 95.6%, FPR: 0.32% |
| [17] | 2019 | Android malware | DAE, CNN | Permissions, filtered intents, API calls, hardware features, code related patterns | Self collection: 23,000 samples | ACC: 98.5%/98.6%, FPR: 1.67%/1.82% |
| [20] | 2019 | Android malware | PSO, Bayesnet, Naïve Bayes, SMO, DT, RT, RF, J48, MLP | Permissions | UCI, KEEL, Contagiodump, Wang’s repository | ACC: 79.4%/47.6%/82.9%/94.1%/100%/77.9% |
| [22] | 2019 | Android malware | SVM, ANN | App components, permissions | Self collection: 44,000 samples | ACC: 95.2%/96.6% |

ACC: accuracy; FPR: false positive rate; DR: detection rate; RF: random forest; SVM: support vector machine; MLP: multilayer perceptron; BAM: binary associative memory; KNN: k-nearest neighbors; CNN: convolutional neural network; ANF: adaptive neural fuzzy; GA: genetic algorithm; RBM: restricted Boltzmann machine; DT: decision tree; GP: genetic programming; DAE: deep auto-encoder.
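The validation metrics reported in these tables can be read with the usual confusion-matrix definitions. The following is a sketch under that assumption, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives; individual surveyed papers may compute a metric slightly differently.

$$
\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{FPR} = \frac{FP}{FP + TN}, \qquad
\mathrm{DR} = \mathrm{TPR} = \frac{TP}{TP + FN}
$$

$$
F_1 = \frac{2\,TP}{2\,TP + FP + FN}
$$

For example, a detector that flags 95 of 100 attacks and raises 2 alerts on 100 benign samples has DR = 0.95, FPR = 0.02, and ACC = (95 + 98) / 200 = 0.965.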

| Reference | Year | Focus | Technique | Anomaly Types | Dataset | Validation Metrics |
|---|---|---|---|---|---|---|
| [23] | 2017 | Intrusion detection | SVM, K-means | DoS, Probe, U2R, R2L | KDD’99 | ACC: 95.75%, FPR: 1.87% |
| [25] | 2017 | Intrusion detection | NN with random weights | DoS, Probe, R2L, U2R | NSL-KDD | ACC: 84.12% |
| [28] | 2017 | Intrusion detection | ACO, DT | DoS, Probe, R2L, U2R | NSL-KDD | ACC: 65%, FPR: 0% |
| [30] | 2017 | Intrusion detection | PSO, KNN | DoS, Probe, R2L, U2R | KDD’99 | ACC: DoS 99.91%, Probe 94.41%, U2R 99.77%, R2L 99.73% |
| [24] | 2018 | Intrusion detection | LS-SVM | DoS, Probe, U2R, R2L | KDD’99 | ACC: over 99.6% |
| [26] | 2018 | Intrusion detection | DAE, RF | DoS, Probe, R2L, U2R | KDD’99, NSL-KDD | Average ACC: 85.42%–97.85% |
| [27] | 2018 | Anomaly detection | Fuzzy logic, GA | DoS, DDoS, Flash crowd | University dataset | ACC: 96.53%, FPR: 0.56% |
| [31] | 2018 | Intrusion detection | PSO, FLN | DoS, Probe, R2L, U2R | KDD’99 | ACC: DoS 98.37%, Probe 90.77%, U2R 93.63%, R2L 63.64% |
| [33] | 2018 | Anomaly detection | CSO, K-means | DoS, Probe, R2L, U2R | UCI-ML, NSL-KDD | ACC: 97.77%, FPR: 1.297% |
| [34] | 2018 | Intrusion detection & classification | ABC, AFS | DoS, Probe, R2L, U2R, Fuzzers, Analysis, Exploits, Generic, Worms, RA, Shellcode, Backdoors | NSL-KDD, UNSW-NB15 | ACC: 97.5%, FPR: 0.01% |
| [32] | 2019 | Anomaly detection | PSO, SVM, K-means, AFS | DoS, Probe, R2L, U2R, RA, RI, CI | KDD’99, Gas Pipeline | ACC: 95% |
| [35] | 2019 | Anomaly detection | GWO, CNN | DoS, Probe, U2R, R2L | DARPA’98, KDD’99 | ACC: 97.92%/98.42%, FPR: 3.6%/2.22% |
| [36] | 2019 | Anomaly & misuse detection | Spark ML, LSTM | DDoS, DoS, Botnet, Brute Force SSH | ISCX-UNB | ACC: 97.29%, FPR: 0.71% |
| [37] | 2019 | Anomaly detection | FA, C4.5, Bayesian networks | DoS, Probe, U2R, R2L | KDD’99 | DoS (ACC: 99.98%, FPR: 0.01%), Probe (ACC: 93.92%, FPR: 0.01%), R2L (ACC: 98.73%, FPR: 0%), U2R (ACC: 68.97%, FPR: 0%) |
| [38] | 2019 | Intrusion detection | Tabu search, ABC, SVM | DoS, Probe, U2R, R2L | KDD’99 | ACC: 94.53%, FPR: 7.028% |

ACC: accuracy; FPR: false positive rate; SVM: support vector machine; DT: decision tree; NN: neural network; CNN: convolutional neural network; KNN: k-nearest neighbors; LS-SVM: least squares support vector machine; DAE: deep auto-encoder; FLN: fast learning network; RF: random forest; ACO: ant colony optimization; PSO: particle swarm optimization; GA: genetic algorithm; CSO: cuckoo search optimization; ABC: artificial bee colony; AFS: artificial fish swarm; FA: firefly algorithm; GWO: grey wolf optimization; DoS: denial of service; R2L: remote to local; U2R: user to root; RI: response injection; RA: reconnaissance attacks; CI: command injection.

| Reference | Year | Focus | Tech. | Features | Dataset | Validation Metrics |

|---|---|---|---|---|---|---|

| [44] | 2016 | Spam detection | Naive Bayes, SVM | 99 features | DATAMALL | Not provide |

| [45] | 2017 | Spam classification | CSO, SVM | 101 features | Ling-spam corpus | ACC: 87%/88% |

| [39] | 2018 | Mail phishing detection | NN, RL | 50 features | Self collection: 9900 samples | ACC: 98.6%, FPR: 1.8% |

| [40] | 2018 | Website phishing detection | RF, SVM, NN, logistic regression, naïve Bayes | 19 features | Phishtank, Openphish, Alexa, Payment gateway, Top banking website | ACC: 99.09% |

| [41] | 2018 | Website phishing detection | NN | 30 features | UCI repository phishing dataset | ACC: 97.71%, FPR: 1.7%. |

| [46] | 2018 | Spam message detection | PSO, DE, DT DB index, SVM, | 13 features | Self collection: 200,000 samples | Not provide |

| [47] | 2018 | Spammer detection | LFA, FCM | 21 features | Self collections: 14,235 samples | ACC: 97.98% |

| [42] | 2019 | Website phishing detection | Naive Bayes, KNN, Adaboost, K-star, SMO, RF, DT | 104 features | Self collection: 73,575 samples | ACC: 97.98% |

| [43] | 2019 | Website phishing detection | GBDT, XGBoost, LightGBM | 20 features | Self collection: - 1st: 49,947 samples - 2nd: 53,103 samples | ACC: 97.30%/98.60% FPR: 1.61%/1.24% |

| [48] | 2019 | Spam detection | GA, RWN | 140 features | Spam Assassin, LingSpam, CSDMC2010 | ACC: 96.7%/93%/90.8% |

| ACC: Accuracy FPR: False positive rate SVM: Support vector machine DT: Decision tree NN: Neural Network KNN: K-nearest neighbors RF: Random forest | GBDT: Gradient Boosting Decision Tree RWN: Random Weight Network FCM: Fuzzy C-Means PSO: Particle swarm optimization GA: Genetic Algorithms CSO: Cuckoo Search Optimization LFA: Levy Flight Firefly Algorithm |

| References | Year | Focus Area | Tech. | Features | Dataset | Validation Metrics |

|---|---|---|---|---|---|---|

| [49] | 2017 | APTs detection | DT | API calls | Self collection: 130 samples | ACC: 84.7% |

| [50] | 2017 | APTs detection | GT, DP, CART, SVM | Log events | Self collection | ACC: 98.5%, FPR: 2.4% |

| [51] | 2017 | Nation-state APTs detection | DNN | Raw text | Self collection: 3200 samples | ACC: 94.6% |

| [54] | 2017 | DGA domains detection | RNN | Letter combinations | Self collection: over 2.9 million samples | ACC: 97.3% |

| [52] | 2018 | APTs detection | SOFM, DT, Bayesian network, SVM, NN | Machine activity metrics | Self collection: 1188 samples | ACC: 93.76% |

| [53] | 2018 | APTs detection and prediction | DT, KNN, SVM, EL | Network traffic | Self collection, university live traffic | ACC: 84.8%, FPR: 4.5% |

| [55] | 2018 | DGA domains detection | RNN | Characters | Self collection: 2.3 million samples | FPR: <=1% |

| [56] | 2018 | DGA domains detection | RNN, CNN | Strings | Self collection: 2 million samples | ACC: 97–98% |

| [57] | 2018 | DGA botnet detection | LSTM | Characters | Alexa, OSINT | F1:98.45% |

| [59] | 2019 | DGA detection | Ensemble classifier | Words | Self collection: 1 million samples | ACC: 67.98%/89.91%/91.48% |

| [58] | 2019 | DGA, DNS covert channel detection | TF-IDF | Strings | Self collection: 1 million samples | ACC: 99.92% |

| ACC: Accuracy FPR: False positive rate SVM: Support vector machine DT: Decision tree GP: Genetic programming CART: Classification and regression trees DBG-Model: Dynamic Bayesian game model DNN: Deep neural network | NN: Neural Networks KNN: k-nearest neighbors EL: Ensemble learning RNN: Recurrent neural network CNN: Convolutional neural network TF-IDF: Term frequency-inverse document frequency LSTM: Long Short-Term Memory network SOFM: Self Organising Feature Map |

| References | Year | Focus | Tech. | Innovation Point | Main Idea |

|---|---|---|---|---|---|

| [71] | 2016 | Adversarial attacks | GAN | New attack model | Create adversarial domain names that evade DGA detectors |

| [76] | 2016 | Stealing model | AE | Model extraction attacks | Extract target ML models through machine learning prediction APIs |

| [66] | 2016 | Social engineering attacks | RNN | New attack model | Automated spear phishing campaign generator for social networks |

| [63] | 2017 | Compromise computer | Encoding DNAs | Encoding malware to DNAs | Compromise a computer by encoding malware in a DNA sequence |

| [68] | 2017 | Adversarial attacks | AE | New attack algorithm | Adversarial attacks against deep-learning-based Android malware classification |

| [69] | 2017 | Adversarial attacks | AE | New attack algorithm | Use adversarial examples to construct new malware variants against malware detectors |

| [70] | 2017 | Adversarial attacks | GAN | New attack model | Present a GAN-based algorithm to craft malware capable of bypassing black-box machine-learning-based detection models |

| [61] | 2018 | Malware creation | DNN | AI-powered malware | Leverage deep neural networks to enhance malware, making it more evasive and highly targeted |

| [62] | 2018 | Malware creation | GAN | AI-powered malware | Avoid detection by simulating the behaviors of legitimate applications |

| [64] | 2018 | Malware creation | ACO | SI-based malware | Use ACO algorithms to create prototype malware with decentralized behavior |

| [73] | 2018 | Adversarial attacks | AL | New attack method | A generic black-box approach for attacking static portable executable machine learning malware models |

| [72] | 2018 | Adversarial attacks | AM | New attack algorithm | Adversarial generation methods for attacking neural-network-based malware detection |

| [74] | 2018 | Poisoning attack | EPD | New poisoning data method | Present a novel poisoning approach that attacks machine learning algorithms used in IDSs |

| [75] | 2018 | Poisoning attack | AM | Analysis of poisoning data methods | Present three kinds of poisoning attacks on machine-learning-based mobile malware detection |

| [67] | 2018 | Social engineering attacks | LSTM | New attack model | Introduce a machine learning method to manipulate users into clicking on deceptive URLs |

| [65] | 2019 | Malware creation | ANN | Next-generation malware | Fuse swarm-based intelligence and neural networks to form a new kind of malware |

| GAN: Generative adversarial network AE: Adversarial Examples SI: Swarm intelligence ANN: Artificial Neural Network RL: Reinforcement learning AMB: Adversarial Malware Binaries | EPD: Edge pattern detection RNN: Recurrent neural network DNN: Deep neural network ACO: Ant colony optimization AM: Adversarial machine learning LSTM: Long short-term memory |

| Content | References | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| | | [7] | [8] | [6] | [3] | [4] | [5] | [2] | This Survey |

| Year | | 2018 | 2018 | 2018 | 2018 | 2018 | 2019 | 2019 | 2019 |

| AI methods | Machine learning | x | x | x | x | x | x | ||

| Deep learning | x | x | x | x | x | x | |||

| Bio-inspired computing | x | x | |||||||

| Defense applications | Malware detection | x | x | x | x | x | x | x | x |

| Intrusion detection | x | x | x | x | x | x | x | ||

| Phishing detection | x | x | x | x | x | x | |||

| Spam identification | x | x | x | x | x | ||||

| APTs detection | x | x | x | x | |||||

| DGAs detection | x | x | x | x | |||||

| Malicious use of AI | AI-powered malware | x | x | ||||||

| Attack against AI | x | x | x | x | |||||

| Social engineering attacks | x | x | |||||||

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Truong, T.C.; Diep, Q.B.; Zelinka, I. Artificial Intelligence in the Cyber Domain: Offense and Defense. Symmetry 2020, 12, 410. https://doi.org/10.3390/sym12030410