Abstract

It is difficult to select small targets in freehand distal pointing on large displays because of physical fatigue and hand jitter. Previous research has proposed many solutions, but none has drawn on the semantic meaning of hand behaviors. This study uses two controlled experiments to investigate hand behaviors, including hand shapes and hand motions in the Z-dimension, during small and large target selection. The results indicated the following: (1) when interacting with a large display from a distance, users preferred to complete low-precision tasks with the whole hand and high-precision tasks with the finger; accordingly, users moved small targets with an index finger gesture and large targets with an open hand gesture; (2) selecting small targets led to hand stretching, which might reduce the interaction distance; (3) orientation had a significant effect on hand motions in the Z-dimension, and the relationship between the two resembles a sinusoidal function; (4) mouse-like pointing strongly influenced freehand interaction. Based on these results, design guidelines for freehand interaction and design suggestions for freehand pointing are discussed.

1. Introduction

Low-cost motion- and depth-sensing technology has changed the way humans interact with computers and other smart devices. It makes it possible to interact with them through freehand gestures at different distances. This has greatly enriched the scenarios in which users can interact with computers and made the interaction itself more natural than before. Interaction with the computer is mainly based on two kinds of gestures: manipulative and semaphoric [1]. With manipulative gestures, an entity is controlled through a tight relationship between the actual movements of the hand/arm and the entity being manipulated. Target pointing and selection are the fundamental operations that precede manipulation.

Unlike mouse-based operation on desktop monitors, freehand distal pointing has no supporting surface, which results in jitter and faster onset of physical fatigue. These characteristics make it difficult to hold the cursor steady and to select small targets [2,3,4]. Many solutions have been proposed. Some studies changed the interaction metaphor, including grab, ray-casting, and mouse metaphors, to improve the efficiency of freehand pointing and the accuracy of small-target acquisition [5,6]. Some changed the control-display gain dynamically according to the velocity of the hand or whether the cursor was inside the target [3,7]. Others filtered the noisy input signal to reduce jitter, for example with the 1€ Filter [8]. However, most studies focused on motion control rather than on interaction behaviors and their semantic meaning, although both affect freehand pointing performance [9]. Some behaviors, such as hand alternation, orientation, interaction distance, physical navigation [9], and the hand shape used to select targets [10], have been studied, but gaps remain: for example, how the hand shape and Z-dimension movements change with the degree of precision a pointing task requires. As a result, the hand shape used in freehand pointing research tends to be chosen arbitrarily, with only ergonomics considered. Z-dimension movement has proven to be a practical input for interacting with computers [11], but little is known about Z-dimension movement behaviors when pointing to targets of different sizes, orientations, and densities. These two behaviors reveal how users point at and manipulate entities, and understanding them can help improve the efficiency of freehand pointing.
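To make the jitter-filtering idea concrete, the following is a minimal sketch of the 1€ Filter mentioned above, written for a single coordinate. It follows the filter's published formulation: an adaptive low-pass filter whose cutoff frequency rises with the estimated hand speed, so it smooths strongly when the hand is nearly still (suppressing jitter) and weakly when the hand moves fast (reducing lag). The class and parameter names and the default values are our own illustrative assumptions, not settings used in this study.

```python
import math


def smoothing_factor(cutoff_hz: float, dt: float) -> float:
    """Smoothing factor of a first-order low-pass filter with the given cutoff."""
    tau = 1.0 / (2.0 * math.pi * cutoff_hz)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """1€ Filter for one coordinate (e.g., the x position of the palm, in mm)."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.01, d_cutoff: float = 1.0):
        self.min_cutoff = min_cutoff  # cutoff (Hz) used when the hand is still
        self.beta = beta              # how quickly the cutoff rises with speed
        self.d_cutoff = d_cutoff      # cutoff (Hz) for the speed estimate
        self.x_prev = None            # previous filtered value
        self.dx_prev = 0.0            # previous filtered speed

    def __call__(self, x: float, dt: float) -> float:
        if self.x_prev is None:       # first sample: nothing to smooth yet
            self.x_prev = x
            return x
        # Estimate the speed from the previous filtered value, then low-pass it.
        dx = (x - self.x_prev) / dt
        a_d = smoothing_factor(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # Fast movement raises the cutoff (less lag); slow movement lowers it (less jitter).
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = smoothing_factor(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.x_prev, self.dx_prev = x_hat, dx_hat
        return x_hat
```

One filter instance would be kept per coordinate and called once per sensor frame, for example `f(x, 1 / 60)` for a 60 fps tracker such as the Leap Motion used later in this paper. `min_cutoff` trades jitter against lag at low speed, and `beta` controls how quickly lag is reduced as the hand speeds up.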

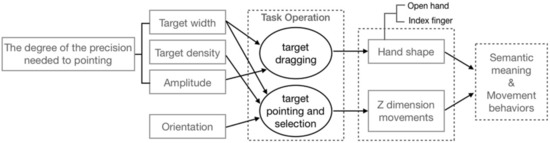

In this study, two experiments were conducted to investigate the semantic meaning of hand shape and Z-dimension movements when pointing by freehand at targets of different widths, densities, and directions. From the findings, we summarize practical implications for improving the efficiency of freehand pointing and hand interaction without adding cognitive burden. The block diagram of the proposed research is shown in Figure 1.

Figure 1.

The block diagram of this study.

2. Related Work

Gestural interaction has been investigated for a long time, and gestures can be classified into two categories: manipulative and semaphoric. In this section, we summarize previous work on freehand pointing, hand shape, and Z-dimension movements following this classification.

2.1. Manipulation and Semaphores

A central goal of human–computer interaction (HCI) research on natural user interface (NUI) design is to make human–computer interaction more like how people interact with each other and with the world [12]. However, the hand gestures used in communication differ from those used for manipulation. Based on these differences, Quek et al. [1] defined manipulative gestures as those “whose intended purpose is to control some entity by applying a tight relationship between the actual movements of the hand with the entity being manipulated”. In contrast, gestures that express semantic meaning are defined as semaphoric gestures. Manipulative gestures account for a large proportion of everyday hand use, which makes them direct and easy to learn. However, Quek et al. also argued that manipulative gestures used in human–computer interaction lack tactile or force feedback, whereas semaphoric gestures are performed without such constraints. Semaphoric gestures, in turn, are subject to wide individual and cultural differences [13]. Even for the same person, gestures change somewhat depending on situational factors such as mood, fatigue, and task. To deal with such issues, Nielsen, Störring, Moeslund, and Granum [14] outlined a procedure for designing a gesture vocabulary for hands-free computer interaction from a user-centered viewpoint, considering factors such as learning rate, ergonomics, and intuitiveness. Wobbrock et al. [15,16] presented an approach to designing tabletop gestures based on eliciting gestures from users, and introduced the concepts of guessability and agreement score. Similar elicitation methods have been used to design gesture vocabularies for smart televisions [17], smart rooms [18], and dual-screen mobile devices [19]. Although semaphoric gestures have been successfully applied in various gesture interaction systems, the agreement level among the proposed gestures was low. It has been suggested that gestures should be standardized once they become widely used, either by a formal standards body or simply by convention [20].

Although both manipulative and semaphoric gesture models have disadvantages, combining them can achieve an interaction richness and robustness that neither can provide alone. Chen, Schwarz, Harrison, Mankoff, and Hudson [21] combined touch and in-air gestures to interact with mobile devices; for example, a user can draw a circle in the air (a semaphoric gesture) and tap to trigger a context menu (a manipulative gesture). Alkemade, Verbeek, and Lukosch [22] also combined manipulative and semaphoric gestures to manipulate three-dimensional (3D) objects in a virtual reality (VR) user interface. Matulic and Vogel [23] presented a “multiray” space, formed by the intersections of rays created by hand postures, for interacting with a large display; the multiray space is used to trigger actions, while hand movements are used for manipulation. These studies considered both human cognition and motor control in gesture interaction design. Gesture interaction design should optimize the relationship between human cognitive and physical processes and the computer's gesture recognition system [24].

2.2. Semaphores in Freehand Pointing

Semaphores represent a minuscule portion of conversational gestures, which are classified into four major categories: iconic (bearing a formal relationship to the semantic content of speech), metaphoric (depicting abstract ideas), deictic (pointing), and beats (having no discernible meaning but recognizable by their movement characteristics) [25]. Of particular interest here are deictic gestures, which are pointing movements performed with the pointing finger or another body part. Cochet and Vauclair [26] distinguished deictic gestures by the type of pointing: imperative pointing (a request for a desired object), declarative expressive pointing (sharing interest in a specific object), and declarative informative pointing (providing information to help a communicative partner). They found that imperative pointing was significantly more often associated with index finger gestures than declarative expressive and declarative informative pointing, and that, in declarative expressive and declarative informative situations, proximal pointing was more often associated with index finger gestures than distal pointing. They hypothesized that hand shape is influenced by the distance between the gesturer and the referent, in relation to the degree of precision and individuation required to identify the referent. Adults frequently use index finger pointing to disambiguate referential situations, whereas whole-hand pointing and thumb pointing are mostly used in situations requiring little precision [27].

Outside linguistics, the hand shape used in freehand pointing at displays tends to be chosen arbitrarily. Vogel and Balakrishnan [6] used an index finger gesture (index finger extended, palm facing down) for ray-casting pointing (see Section 2.3) and an open hand for relative cursor control. Foehrenbach, König, Gerken, and Reiterer [28] used an open hand for ray-casting because the index finger gesture requires higher muscle tension. In other studies, the open hand was mostly used with mouse-metaphor pointing (see Section 2.3) [7,29]. Lin, Adamson, and Rempel [10] found that index finger gestures yielded faster selection times and lower error rates than palm gestures in small-target selection, but not in large-target selection. They argued that index finger gestures may be preferred for reasons of hand ergonomics. However, an open hand with the palm facing down is the most relaxed posture [24]. Beyond ergonomics, we hypothesize that the hand shape used is related to the degree of precision needed in pointing. Moreover, the relationship between hand postures and human cognition of pointing has received little research attention. Hand postures could also be mapped to pointing parameters (e.g., cursor size or control-to-display gain) to improve freehand pointing performance.

2.3. Manipulation in Remote Pointing

Under each pointing metaphor, a tight relationship is established between the movements or orientation of the hand and the movements of the cursor. Unlike pointing with traditional devices such as the mouse, freehand (remote) pointing has three metaphors: the grab metaphor, the ray-casting metaphor, and the mouse metaphor [5,6]. The grab metaphor mimics a user reaching for a book on a high or distant shelf: the position of the cursor on the display is determined by the location of the hand. In the ray-casting metaphor, the cursor is placed where a ray emanating from the index finger intersects the display [6]. The mouse metaphor works like a mouse, but on a vertical plane: the movements of the cursor are determined by the movements of the hand. Apart from the metaphor, the transfer ratio between cursor movement and input motion, called the control-to-display (CD) gain, also affects pointing performance. Depending on this mapping, remote pointing can be classified into two categories: absolute positioning and relative positioning. Because the hand's range of movement is limited, the absolute grab and absolute cursor metaphors are not practical for large-display interaction. Table 1 compares several pointing techniques that use different pointing metaphors.

Table 1.

Comparison of pointing techniques using different pointing metaphors.
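The absolute ray-casting metaphor can be sketched as a ray-plane intersection. The sketch below assumes, for illustration only, that the display lies in the plane z = 0 with the user on the positive-z side, and that a tracker supplies the fingertip position and pointing direction in millimetres; the function names are hypothetical. It also shows why distance amplifies jitter: an angular error maps to an on-screen displacement proportional to the distance.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def ray_cast_cursor(finger_tip: Vec3, finger_dir: Vec3,
                    plane_point: Vec3 = (0.0, 0.0, 0.0),
                    plane_normal: Vec3 = (0.0, 0.0, 1.0)) -> Optional[Vec3]:
    """Absolute ray-casting: the cursor is where the finger ray meets the display plane."""
    denom = dot(finger_dir, plane_normal)
    if abs(denom) < 1e-9:          # ray parallel to the display: no cursor
        return None
    diff = tuple(p - o for p, o in zip(plane_point, finger_tip))
    t = dot(diff, plane_normal) / denom
    if t < 0:                      # finger points away from the display
        return None
    return tuple(o + t * d for o, d in zip(finger_tip, finger_dir))


def jitter_on_display(distance_mm: float, angle_deg: float) -> float:
    """On-screen cursor displacement caused by an angular wobble of the finger."""
    return distance_mm * math.tan(math.radians(angle_deg))
```

For example, at 1500 mm (the seating distance later used in Experiment 1), a 1° wobble of the finger moves an absolutely ray-cast cursor by about 26 mm, more than twice the width of a 10 mm target.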

The relative grab metaphor limits the range of hand motion, and reaching the corners of the display can cause fatigue more quickly. However, many studies adopted it because of its simple and direct position mapping [7,30,31]. Absolute ray-casting is efficient for pointing but has high error rates in small-target selection [5,6,35]. As the distance from the display increases, pointing accuracy drops sharply because of hand jitter [3]. Relative ray-casting addresses these problems by changing CD gains dynamically, but it suffers from clutching. The relative mouse metaphor is a legacy of traditional interaction: it mimics using the hand to move the cursor to a target and then select or drag it. Lou et al. [9] used this metaphor with the PA technique, a transfer function that changed the CD gain according to hand velocity. Moreover, some studies mixed metaphors in remote pointing, such as using ray-casting for coarse pointing and the mouse metaphor for repositioning near small targets [6,36,37,38]. When interacting with a room-scale display, the mouse metaphor is faster on the front wall, whereas ray-casting is faster on the other walls [39].
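A relative (mouse-metaphor) mapping with a velocity-dependent CD gain can be sketched as follows. The exact PA transfer function used by Lou et al. [9] is not given here, so the sigmoid curve and all of its constants below are hypothetical placeholders chosen only to show the shape of the technique: low gain for slow, precise hand motion and high gain for fast, coarse motion. The clamping step illustrates where clutching comes from: hand motion beyond a display edge is simply lost.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def cd_gain(speed_mm_s: float, g_min: float = 1.0, g_max: float = 6.0,
            v_mid: float = 200.0, steepness: float = 0.02) -> float:
    """Hypothetical sigmoid pointer-acceleration curve: low gain when slow, high when fast."""
    return g_min + (g_max - g_min) / (1.0 + math.exp(-steepness * (speed_mm_s - v_mid)))


def relative_update(cursor: Point, hand_delta_mm: Point, dt: float,
                    display_mm: Point) -> Point:
    """Mouse metaphor: the cursor moves by the hand displacement times the current CD gain."""
    dx, dy = hand_delta_mm
    gain = cd_gain(math.hypot(dx, dy) / dt)
    # Clamp to the display; motion past an edge is lost, which is why users must clutch.
    x = min(max(cursor[0] + gain * dx, 0.0), display_mm[0])
    y = min(max(cursor[1] + gain * dy, 0.0), display_mm[1])
    return (x, y)
```

With these placeholder constants, a slow hand (near 0 mm/s) moves the cursor roughly one-to-one, whereas a fast sweep (600 mm/s) is amplified about sixfold.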

Several studies focused on motor control models for freehand pointing. A widely adopted model is Fitts' law, which predicts movement time from the width and distance (amplitude) of the target [40]. Murata, Cha, and Myung [41,42] extended Fitts' law to 3D pointing. Kopper et al. [2] presented an extension of Fitts' law for the ray-casting metaphor. Some studies compared freehand pointing with a mouse in terms of Fitts' law and perceived usability [43,44] and found that the gesture-based interface had lower throughput, caused more fatigue, and had lower usability. Controlling the amplitude and duration of the forces applied during a freehand pointing movement becomes more complicated as the dimensionality of the task or the number of degrees of freedom of the participating muscles and joints increases [41,42]. Freehand pointing therefore needs to be redesigned if this input mode is to become practical.
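For reference, the common Shannon formulation of Fitts' law expresses the index of difficulty as ID = log2(A/W + 1) bits and predicts movement time as MT = a + b · ID, where a and b are constants fitted for a given device or technique. The sketch below computes these quantities. Throughput is simplified here to ID/MT with the nominal width; evaluations following ISO 9241-9 instead use an effective width derived from the spread of selection endpoints. Any a and b values passed in are hypothetical.

```python
import math


def index_of_difficulty(amplitude: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(amplitude / width + 1.0)


def movement_time(amplitude: float, width: float, a: float, b: float) -> float:
    """Predicted movement time MT = a + b * ID; a and b are fitted per device/technique."""
    return a + b * index_of_difficulty(amplitude, width)


def throughput(amplitude: float, width: float, mt_s: float) -> float:
    """Simplified throughput (bits/s) using the nominal rather than the effective width."""
    return index_of_difficulty(amplitude, width) / mt_s
```

For example, the hardest condition of Experiment 1 (A = 400 mm, W = 10 mm) has ID = log2(41), about 5.36 bits, while the easiest (A = 20 mm, W = 400 mm) has only about 0.07 bits.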

2.4. Z-Dimension Movements and Orientation

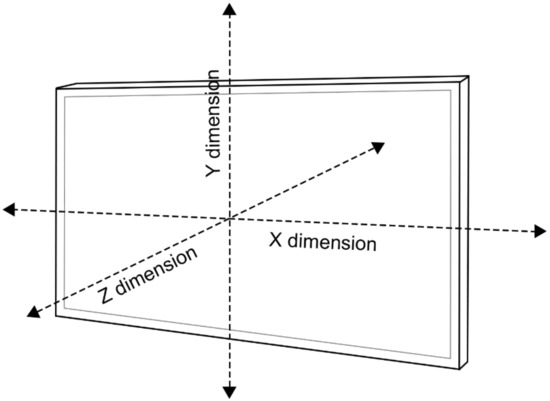

The Z-dimension refers to the axis perpendicular to the plane in which the target directions lie, that is, the display plane (see Figure 2). The movement distance in the Z-dimension has been called the interaction distance in many studies [9,45]. People adjust interaction distance in two ways: by stepping toward or away from the display, and by moving body parts, for example by leaning forward or moving the hand. Interaction distance has been shown to have significant effects on information perception and has been used as a contextual cue to enable implicit interaction [45,46]. Proximity to the display has also been used as an input for zooming content in and out [47,48]. Li, Lou, Hansen, and Peng [49] found a correlation between distance and perceived usability: as the distance from commonly visited ranges increases, perceived usability decreases, and vice versa. Lou et al. [9] found that a large interaction distance benefits efficiency but harms accuracy in freehand pointing. Apart from changing distance by stepping forward or backward, arm stretching has proven to be a practical way of interacting with computers [10]. Ren and O'Neill [50] found that target orientation has a significant effect on Z-dimension movement: in freehand marking menu selection with the reach technique, users' hands tended to move forward in the up, right-up, and left-up directions, while hand depth remained unchanged in the other directions. One interesting exception was that the hand moved backward in the left-down direction. Similarly, a kinesphere model has been proposed in which, when one joint (e.g., wrist, elbow, or shoulder) is rested and the remaining joints are extended, the user's fingertip lies approximately on a partial sphere centered on the rested joint [51]. Guinness et al. [52] also used a similar sphere model to evaluate the kinematic trace of the hand in rested touchless gestural interaction. A similar Z-dimension movement pattern was found when the shoulder joint was fixed [53].
Kim, Han, and Chung used this natural movement to design a new control-display mapping for 3D target pointing. Nonetheless, studies are still missing on how Z-dimension movement changes without a rested joint and on how it differs in detail from the sphere model, especially in two-dimensional (2D) pointing. Moreover, little is known about Z-dimension movement behaviors when pointing to targets of different sizes and densities. Understanding these behaviors is necessary for improving pointing performance.

Figure 2.

Illustration of Z-dimension movements.
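The sphere (kinesphere) model described above can be turned into a prediction of Z-dimension movement distance (ZMD). In the sketch below, the rested joint sits at the origin, x and y are the fingertip's lateral and vertical offsets from the joint in millimetres, and +z points toward the display, so a positive ZMD means the hand ends in front of where it started. The 300 mm radius and the offsets in the example are hypothetical values for illustration only, and the function names are our own.

```python
import math
from typing import Tuple


def sphere_depth(x: float, y: float, radius: float) -> float:
    """Forward depth (+z toward the display) of a fingertip on a sphere around the rested joint."""
    r2 = radius ** 2 - x ** 2 - y ** 2
    if r2 < 0:
        raise ValueError("offset exceeds the reach of the sphere")
    return math.sqrt(r2)


def predicted_zmd(start_xy: Tuple[float, float], delta_xy: Tuple[float, float],
                  radius: float) -> float:
    """ZMD predicted by the sphere model: positive = hand ends in front of its start."""
    x0, y0 = start_xy
    z0 = sphere_depth(x0, y0, radius)
    z1 = sphere_depth(x0 + delta_xy[0], y0 + delta_xy[1], radius)
    return z1 - z0
```

If the fingertip starts directly in front of the joint, the model predicts a backward (negative) ZMD in every direction, symmetric between left and right. If it starts 100 mm below the joint, a 50 mm upward movement predicts about +13 mm and a 50 mm downward movement about −23 mm. The starting posture alone can therefore make the predicted ZMD direction-dependent.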

3. Experiment 1: Hand Shape in Manipulation

In this experiment, we investigated the relationship between hand shape and task variables such as target width and movement amplitude. Based on the results, we discuss the semantic meaning of hand shape and users' cognition of pointing in different tasks, which may help improve the efficiency of and satisfaction with freehand pointing. Based on the literature summarized above, we hypothesized that hand shape is associated with the degree of precision required in manipulation. The hypotheses are as follows:

Hypothesis 1 (H1).

Users prefer to use index finger gestures to move small targets and open hand gestures to move large targets.

Hypothesis 2 (H2).

Users prefer to use index finger gestures to move targets over small amplitudes and open hand gestures to move targets over large amplitudes.

3.1. Participants

Because the experimental tasks required only simple hand gestures that almost any user might adopt to interact with a smart device, students were recruited as participants. Thirty volunteers (16 males and 14 females) aged 19 to 23 (mean (M) = 21.3, SD = 0.85) participated in the study. The participants were undergraduate students majoring in graphic design or industrial design. All participants reported being right-handed and had normal or corrected-to-normal vision.

3.2. Apparatus

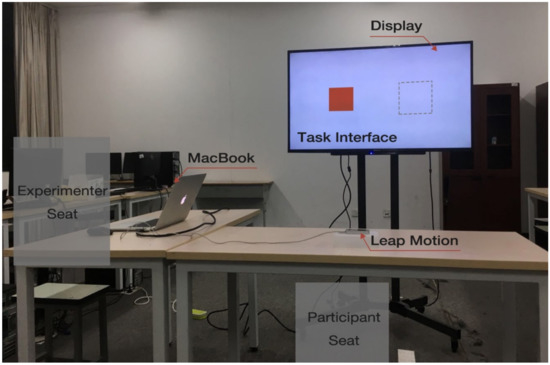

The experiment application was developed with web technologies and Leap Motion SDK V2. A 55-inch television (TV) with a 3840 × 2160 resolution was used as the large display. A MacBook Pro (2.2-GHz Intel Core i7 processor, 16 GB of memory) was connected to the display and ran the experiment application.

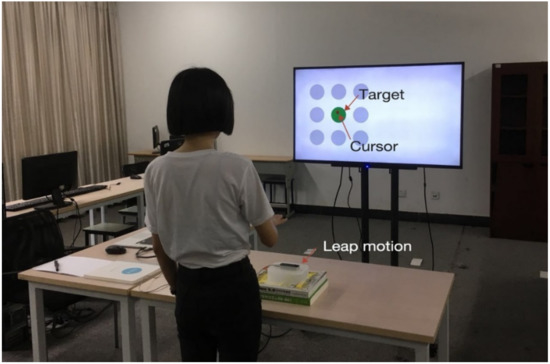

The participants were seated at a rectangular table facing the display, about 1500 mm from it. The bottom of the display was about 800 mm above the floor. A Leap Motion controller (60 fps, precision: 0.01 mm) placed on the table in front of the participants captured their gestures. The experiment environment is shown in Figure 3.

Figure 3.

Photo of the experiment environment.

3.3. Design

A 4 × 3 repeated-measures within-subjects design was adopted with the following two independent variables:

- Target width: 10 mm, 100 mm, 250 mm, 400 mm;

- Amplitude: 20 mm, 200 mm, 400 mm.

The smallest target width was 10 mm because smaller targets were difficult to select. The largest target width and amplitude were 400 mm because of the width of the display used in the experiment. The intermediate widths and amplitude were chosen to approximate the quartiles or the mean of these ranges. The presentation order of target widths and amplitudes was randomized.
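The randomized presentation order can be generated as shown below. The text does not state how the order was randomized, so this sketch assumes one full random permutation of the 12 width × amplitude conditions per participant, seeded (for example, by a hypothetical participant number) so that each session's order can be reproduced. A Latin square would be the usual alternative if order effects had to be counterbalanced across participants.

```python
import itertools
import random
from typing import List, Tuple

WIDTHS_MM = (10, 100, 250, 400)
AMPLITUDES_MM = (20, 200, 400)


def condition_order(seed: int) -> List[Tuple[int, int]]:
    """All 4 x 3 (width, amplitude) conditions in a random order reproducible from `seed`."""
    conditions = list(itertools.product(WIDTHS_MM, AMPLITUDES_MM))
    random.Random(seed).shuffle(conditions)  # private RNG: does not disturb global state
    return conditions
```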

The dependent variable was hand shape, divided into three categories: open hand, index finger, and others. We defined the open hand as all fingers extended, whether slightly flexed or not. The index finger was defined as the index finger extended with the other fingers curled, tightly or loosely. All other gestures were grouped into a single category named others. The experimenter recorded all hand shapes through observation.
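In this study, hand shapes were coded by observation. If the same coding scheme were automated, the category definitions above could be expressed as a rule over per-finger "extended" flags, assuming a hand tracker that reports such flags with slight flexion counted as extended. The sketch below is hypothetical; in particular, treating the thumb as one of the "other fingers" that must be curled for the index finger category is our reading of the definition.

```python
from enum import Enum
from typing import Mapping

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


class HandShape(Enum):
    OPEN_HAND = "open hand"
    INDEX_FINGER = "index finger"
    OTHERS = "others"


def code_hand_shape(extended: Mapping[str, bool]) -> HandShape:
    """Apply the three category definitions to per-finger 'extended' flags."""
    if all(extended[f] for f in FINGERS):
        return HandShape.OPEN_HAND        # all fingers extended (slightly flexed allowed)
    if extended["index"] and not any(extended[f] for f in FINGERS if f != "index"):
        return HandShape.INDEX_FINGER     # only the index finger extended
    return HandShape.OTHERS               # e.g., two extended fingers, grabbing
```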

3.4. Task and Procedure

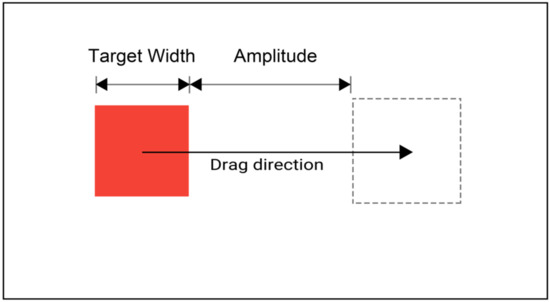

A drag task was designed to combine targets of different sizes with varying movement amplitudes (see Figure 4). Before each movement, the participants were asked to think about how to drag the red square. They then raised their hands and attended to the red square they were about to move from a distance. After one second, the gesture was captured by the Leap Motion sensor and the square turned green. The participants could then move the green square into the dashed one. Once the green square had been moved into the dashed square and held there for one second, it disappeared, and the participants returned their hands to the rest area to complete the task. During dragging, a relative mouse metaphor was used to map the position of the hand to the solid square, because the manipulation mimicked reaching for a target and moving it to a specified position. The participants could move the square only along the X-dimension so that they could complete the task more easily. We used dwelling, i.e., keeping the hand motionless with the cursor inside the target area for a certain amount of time, to confirm the command, because it was shown to be the most effective confirmation method, whereas other gestures, such as grabbing, have low recognition rates under current algorithms [11].

Figure 4.

Illustration of the dragging task.
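The dwell confirmation described above can be sketched as a small per-frame state machine. The 1 s hold time matches the task description; the speed threshold that defines a "motionless" hand is a hypothetical value, because the text does not specify one. The timer restarts whenever the cursor leaves the target or the hand moves, and the selector fires only once per completed dwell.

```python
class DwellSelector:
    """Fires once when the cursor stays inside the target with a (nearly) still hand."""

    def __init__(self, dwell_s: float = 1.0, max_speed_mm_s: float = 50.0):
        self.dwell_s = dwell_s                # 1 s hold, as in the task
        self.max_speed_mm_s = max_speed_mm_s  # hypothetical "motionless" threshold
        self.elapsed = 0.0

    def update(self, inside_target: bool, hand_speed_mm_s: float, dt: float) -> bool:
        """Call once per frame; returns True on the frame the dwell completes."""
        if inside_target and hand_speed_mm_s <= self.max_speed_mm_s:
            self.elapsed += dt
        else:
            self.elapsed = 0.0  # leaving the target or moving restarts the timer
        if self.elapsed >= self.dwell_s:
            self.elapsed = 0.0  # reset so the command is confirmed only once
            return True
        return False
```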

The participants were given a brief introduction to the dragging tasks at the beginning of the study. They were then asked to sit at the rectangular table, directly facing the center of the display. They were asked not to aim for speed or accuracy, but to use the gestural interface as naturally as they could, and to use their right hand. After a participant completed a task and returned the hand to the rest area, the experimenter recorded the hand shape and asked the participant to perform the drag again under another condition. When participants found it difficult to hold the solid square inside the dashed one, the experimenter moved directly to the next condition and asked them to return their hands to the rest area. Each participant dragged 12 targets of different sizes and amplitudes. At the end of the experiment, the participants were asked to explain why they used the hand shape they chose and were encouraged to comment on the interaction technique and tasks. On average, each participant took about 10 min to complete all tasks.

3.5. Results

In total, the participants produced 360 gestures comprising five kinds of hand shapes: open hand, index finger, two extended fingers, grabbing with the whole hand, and grabbing with the thumb and index finger. No participant changed hand shape while dragging within a task. Most of the hand shapes used involved the index finger (63.9%), followed by the open hand (21.1%). The percentages of hand shapes used under the different conditions are presented in Table 2.

Table 2.

Percentages of hand shapes used in different conditions.

Chi-square tests revealed a significant association between target width and hand shape (χ² = 69.2, df = 6, p < 0.01), but no significant association between amplitude and hand shape. Participants preferred the open hand (45.6%) for dragging the largest (400 mm) targets, whereas the index finger accounted for 41.1% in this condition. At the other widths, participants preferred the index finger (90.0%, 68.9%, and 55.6% for 10 mm, 100 mm, and 250 mm, respectively), while the open hand accounted for 0.0%, 13.3%, and 25.6%, respectively. The other gestures were not analyzed separately because most of them occurred at very low percentages in each condition.
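As a consistency check, the width × hand shape contingency table can be reconstructed from the percentages reported above, assuming 90 drags per width (30 participants × 3 amplitudes); the "others" column is whatever remains in each row. These counts are back-calculated, not taken from the raw data. The sketch computes Pearson's chi-square statistic for independence directly.

```python
from typing import Dict, Sequence, Tuple

# Counts back-calculated from the reported percentages (90 drags per width).
# Columns: index finger, open hand, others.
COUNTS_BY_WIDTH_MM: Dict[int, Tuple[int, int, int]] = {
    10: (81, 0, 9),
    100: (62, 12, 16),
    250: (50, 23, 17),
    400: (37, 41, 12),
}


def chi_square_independence(table: Sequence[Sequence[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return stat, dof


stat, dof = chi_square_independence(list(COUNTS_BY_WIDTH_MM.values()))
```

The result, χ² ≈ 69.18 with df = 6, matches the reported statistic and lies far above 16.81, the critical value at α = 0.01 for 6 degrees of freedom.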

3.6. Discussions of Experiment 1

Hypothesis 1, which stated that target width affects the hand shape used, was supported. Users preferred to use an open hand to move large targets, which was consistent with the interview results. Participants who switched to an open hand noted that they could select large targets without much effort but had to use the index finger to aim at small targets. This demonstrates that users prefer an open hand in low-precision manipulative tasks. This finding adds to the understanding of hand shapes used in pointing gestures reported by Cochet and Vauclair [26]. Under the other conditions, users preferred to use the index finger to move targets. Overall, the index finger was used most often, consistent with the results of Lin et al. [10]. There may be three reasons for this. First, freehand interaction is influenced by mouse pointing and selection [54]: users who dragged all the targets with the index finger noted that they could simply click on the red square and drag it to the dashed one. However, when the experimenter suggested during the interview that they could use an open hand to move large targets, some users thought that there was no difference between hand shapes, while others thought that changing the hand shape was reasonable. Second, users tended to keep their initial hand shape until the manipulation changed substantially. Lastly, a target larger than the hand may have motivated users to change their hand shape.

Hypothesis 2 was not supported, as amplitude had no significant effect on hand shape. This is consistent with the findings of Lou et al. [9], who reported that target width had a significant effect on target selection accuracy, whereas the effect of amplitude was not significant. Nonetheless, because the width of the display limited the amplitudes tested, the effect of larger amplitudes on hand shape requires further verification.

4. Experiment 2: Z-Dimension Movements in Freehand Pointing

In Experiment 1, the hand shape used in freehand interaction was found to be related to the degree of precision required by the task. This motivated us to investigate whether spatial movements are also related to the degree of precision. Lou et al. [9] found that smaller targets led users to interact at closer distances. We therefore hypothesized that, if users could not step forward or backward, they would move their hands forward when selecting small targets. In addition, the hand movement distance in the Z-dimension has been shown to vary with target direction in menu selection [50], so this experiment also examined how this distance varies with direction in freehand pointing.

4.1. Participants

Twenty volunteers (10 males and 10 females) aged 18 to 22 (M = 20.1, SD = 1.05) took part in the study. They were also undergraduate students majoring in graphic or industrial design and had normal or corrected-to-normal vision. All were asked to self-report their handedness, which was then confirmed with the Edinburgh Handedness Inventory. All participants were right-handed except one, who was neutral. None of the participants had taken part in Experiment 1, and nine had previous experience with motion-sensing interaction (e.g., Kinect-based video games).
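The Edinburgh Handedness Inventory summarizes responses as a laterality quotient, LQ = 100 × (R − L) / (R + L), where R and L are the summed right- and left-hand scores. This ranges from −100 (fully left-handed) to +100 (fully right-handed). The text does not state which cutoff was used to label a participant as neutral, so the ±40 threshold below is only one commonly used convention and should be treated as an assumption.

```python
def laterality_quotient(right: float, left: float) -> float:
    """Edinburgh Handedness Inventory laterality quotient, from -100 (left) to +100 (right)."""
    if right + left == 0:
        raise ValueError("no responses")
    return 100.0 * (right - left) / (right + left)


def handedness(lq: float, cutoff: float = 40.0) -> str:
    """Classify an LQ; the +/-40 cutoff is an assumed convention, not the study's stated rule."""
    if lq > cutoff:
        return "right"
    if lq < -cutoff:
        return "left"
    return "neutral"
```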

4.2. Apparatus

The experiment application was developed with web technologies and Leap Motion SDK V2. The apparatus was the same as in Experiment 1, except that the participants stood in front of a rectangular table and the bottom of the display was about 1200 mm above the floor. The experiment environment is shown in Figure 5.

Figure 5.

Photo of measurements.

4.3. Design

A 3 × 3 × 8 repeated-measures within-subjects design was adopted with the following three independent variables:

- Target width: 20 mm, 60 mm, 100 mm;

- Target density (i.e., the spacing between adjacent targets): 1 mm, 20 mm, 40 mm;

- Orientation: right, right-up, up, left-up, left, left-down, down, and right-down.

For Z-dimension movement distance, a negative value means that the hand's final position was behind its starting position (i.e., closer to the participant's chest), and a positive value means that the final position was in front of the starting position.

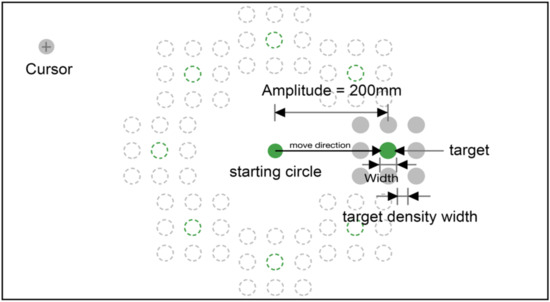

4.4. Task and Procedure

A pointing and selection task was designed for the experiment (see Figure 6). At the beginning of each trial, the participants raised their hands, moved the cursor (a circle with a cross in the center) into the starting circle at the center of the display, and held it there for one second. The starting circle then disappeared, and a target appeared in a random direction. The participants moved the cursor into the target and held it there for one second to select it. The target then disappeared, and the starting circle reappeared at the center of the display. Each block consisted of 72 trials, in which every condition appeared once in random order, after which the block ended. All targets and the starting circle were green, while the distractors were gray. The pointing metaphor and confirmation gesture were the same as in Experiment 1, except that visual feedback was added to the cursor during the holding period. When the starting circle or the target was selected, the application recorded the spatial position of the hand. The difference between the starting and ending positions in the Z-dimension was defined as the Z-dimension movement distance (ZMD). These data, along with the movement time, were stored in a log file.

Figure 6.

Illustration of selection task.
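A logged trial and its ZMD could be represented as below. We assume hand positions are stored in the Leap Motion's right-handed coordinate frame, in which +z points toward the user. Under that assumption, moving the hand toward the display decreases z, and the sign must be flipped to obtain the convention defined above (positive = final position in front of the start). The record layout and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrialLog:
    start_pos_mm: Vec3      # hand position when the starting circle was selected
    end_pos_mm: Vec3        # hand position when the target was selected
    movement_time_s: float

    @property
    def zmd_mm(self) -> float:
        """ZMD: positive = hand ended in front of (closer to the display than) its start.

        Assumes tracker coordinates with +z toward the user, so forward motion lowers z.
        """
        return -(self.end_pos_mm[2] - self.start_pos_mm[2])
```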

At the beginning of the study, the participants were given an introduction to the task and filled out a questionnaire on personal information. They were then instructed to stand in front of the display and use their right hand to initiate the interaction. Each participant completed one practice block, during which the experimenter adjusted the Leap Motion sensor so that the participant could control the cursor comfortably. The participants were asked not to aim for speed or accuracy, but to move the cursor to the center of the target. Each participant completed eight blocks and rested their arms and eyes for at least five minutes between blocks. At the end of the experiment, the participants were encouraged to comment on the interaction technique and tasks. On average, each participant took about two hours to complete all tasks.

4.5. Result

In total, 11,520 trials were recorded (20 participants × 8 blocks × 72 trials). Trials whose movement time deviated from the mean by more than three standard deviations (|z| > 3) were treated as outliers and excluded from statistical analysis, leaving 11,353 trials (98.6%). Most of the outliers occurred in 20-mm-wide target selections (91.6%), while only 3.7% and 4.8% occurred in 60-mm- and 100-mm-wide target selections, respectively.
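The outlier rule above can be sketched as a simple z-score filter. The text does not say whether the sample or population standard deviation was used, or whether the mean and SD were computed over all trials or per condition. This sketch assumes the sample SD, a two-sided cut, and a single pooled set of movement times.

```python
import statistics
from typing import List, Sequence


def exclude_outliers(times: Sequence[float], z_max: float = 3.0) -> List[float]:
    """Keep values whose z-score magnitude is at most z_max (sample SD, two-sided cut)."""
    mean = statistics.fmean(times)
    sd = statistics.stdev(times)
    if sd == 0:
        return list(times)  # all values identical: nothing can be an outlier
    return [t for t in times if abs(t - mean) / sd <= z_max]
```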

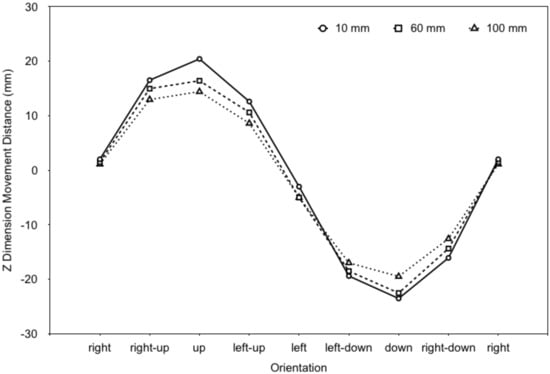

A three-way repeated-measures ANOVA (target width × target density × movement orientation) was performed. Significant main effects were found for target width (F(1.92, 282.23) = 6.39, p < 0.01) and movement orientation (F(2.56, 376.63) = 865.43, p < 0.01). The mean ZMD was −1.30 mm for 20-mm-wide targets and −2.04 mm and −1.90 mm for 60-mm- and 100-mm-wide targets, respectively (see Figure 7). Bonferroni post hoc tests showed that the mean ZMD for 20-mm-wide targets was significantly larger (i.e., the hand moved backward less) than for the other two widths (p < 0.05), while the other differences were not significant.

Figure 7.

Z-dimension movement distance for selections of targets of different widths.

The mean ZMDs for the right, right-up, up, and left-up directions were positive, whereas those for the other four directions were negative (see Figure 8). The relationship between ZMD and orientation resembled a sinusoidal function. Bonferroni post hoc tests showed that the mean ZMDs of all directions differed significantly from one another (p < 0.01).
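One way to make "similar to a sinusoidal function" concrete is to fit ZMD(θ) ≈ c + A·cos(θ − φ) to the eight direction means. Because the eight orientations are equally spaced at 45°, the cosine and sine terms are orthogonal. The least-squares fit then reduces to discrete Fourier coefficients. This sketch assumes angles measured counterclockwise from "right" (right = 0°, up = 90°). The check uses synthetic values generated from a known sinusoid; the study's per-direction means are not reproduced here.

```python
import math


def fit_sinusoid(angles_deg, values):
    """Least-squares fit of values ~ c + A*cos(theta - phi).

    Assumes the angles are equally spaced around the full circle (e.g. the
    eight 45-degree movement orientations), so the fit reduces to discrete
    Fourier coefficients. Returns (offset c, amplitude A, phase phi in degrees).
    """
    n = len(values)
    thetas = [math.radians(a) for a in angles_deg]
    c = sum(values) / n
    a = 2 / n * sum(v * math.cos(t) for v, t in zip(values, thetas))
    b = 2 / n * sum(v * math.sin(t) for v, t in zip(values, thetas))
    amplitude = math.hypot(a, b)
    phase_deg = math.degrees(math.atan2(b, a)) % 360
    return c, amplitude, phase_deg


def predict(c, amplitude, phase_deg, angle_deg):
    """Evaluate the fitted sinusoid at a movement orientation (degrees)."""
    return c + amplitude * math.cos(math.radians(angle_deg - phase_deg))
```

The fitted phase gives the direction of maximum forward motion. The offset c captures any overall backward or forward drift that is shared by all directions.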

Figure 8.

Z-dimension movement distance for selections in different movement orientations.

A significant interaction was found between target width and orientation (F (7.85, 1153.79) = 14.14, p < 0.01) (see Figure 9). This indicates that the effect of target width on the ZMD depended on movement orientation. As the main effects show, orientation influenced the ZMD far more strongly than target width did.

Figure 9.

Interaction effect of target width and orientation.

Further analyses were performed using one-way repeated-measures ANOVAs for target width in the left and right directions. In these two directions, orientation itself had little effect on the ZMD. In the left direction, the mean ZMD of the 20-mm-wide targets was significantly larger than those of the other two widths (p < 0.05). In the right direction, the mean ZMDs for the 20-, 60-, and 100-mm-wide targets were 1.48 mm, 1.09 mm, and 0.57 mm, respectively, with no significant differences.
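A one-way repeated-measures ANOVA splits the total variance into three parts: a condition effect, a between-participant component, and residual error. It tests F = MS_conditions / MS_error with (k − 1) and (k − 1)(n − 1) degrees of freedom, for k conditions and n participants. The sketch below applies no sphericity correction. The fractional degrees of freedom reported above suggest that a correction such as Greenhouse–Geisser or Huynh–Feldt was used, which multiplies both df by an estimated ε. The input format, one row per participant with one mean ZMD per width, is an assumption, and the check uses synthetic numbers.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA (uncorrected).

    data: list of rows, one per participant; each row holds that
    participant's score in each of k conditions, in the same order.
    Returns (F, df_effect, df_error).
    """
    n = len(data)      # participants
    k = len(data[0])   # conditions (e.g. target widths)
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Residual = condition x participant interaction.
    ss_error = ss_total - ss_cond - ss_subj

    df_effect = k - 1
    df_error = (k - 1) * (n - 1)
    f = (ss_cond / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error
```

Removing the between-participant sum of squares from the error term is what distinguishes this design from a between-subjects ANOVA. Stable individual differences in overall ZMD therefore do not mask the width effect.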

4.6. Discussion of Experiment 2

We found a strong directional effect on the ZMD, and the relationship between ZMD and orientation resembled a sinusoidal function. Specifically, participants tended to move their hand forward when moving upward and backward when moving downward. When moving left, they tended to move slightly backward; in contrast, when moving right, they moved slightly forward. In general, the hand still moved on a partial sphere centered on the elbow [51,53]. However, the hand moved outside this sphere in the up and right-down directions. This could be due to the different distances moved in the task, which led participants to stretch their arms and shift the position of the elbow. This suggests that a sinusoidal function may be more suitable for describing freehand pointing over large distances without a rested elbow.
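A first-order approximation connects an elbow-centered sphere to a sinusoidal ZMD. The following setup is a simplifying assumption, not a claim of the study. The elbow is fixed at the origin; x points right, y points up, and z points toward the display. The hand starts at $(x_0, y_0, z_0)$ on a sphere of radius $r$ with $z_0 > 0$. Suppose the hand moves a distance $d$ in the display plane in direction $\theta$ (0° = right, 90° = up) while staying on the sphere. Then:

$$
\begin{aligned}
z(d) &= \sqrt{r^2 - (x_0 + d\cos\theta)^2 - (y_0 + d\sin\theta)^2},\\
\Delta z(d) &= z(d) - z_0 \approx -\frac{\rho\, d}{z_0}\cos(\theta - \varphi) \;-\; \frac{z_0^2 + \rho^2\cos^2(\theta - \varphi)}{2 z_0^3}\, d^2,
\end{aligned}
$$

with $\rho = \sqrt{x_0^2 + y_0^2}$ and $\varphi = \operatorname{atan2}(y_0, x_0)$. The first-order term is a sinusoid in $\theta$. It is most forward for $\theta = \varphi + 180^\circ$, that is, when the hand moves toward the point directly in front of the elbow. The second-order term is negative for every $\theta$, so longer movements add a backward offset that grows with $d^2$. Movements in which the elbow itself shifts fall outside this fixed-elbow approximation.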

Compared with orientation, target width had only a slight effect on the ZMD. Participants tended to keep their hand further forward when selecting very small targets, which required more effort. This suggests that the degree of precision required by a task affects the ZMD. However, when participants moved downward, smaller targets were associated with larger backward ZMDs. This could be due to differences in the distance actually moved during target selection. Although the participants were told to move the cursor to the center of the target, they tended to stop the cursor only near the center of large targets. Compared with the right direction, the effect of target width on the ZMD was stronger in the left direction. This may be because target selection in display areas on the side of the operating hand is more efficient and accurate [9]. Participants needed more effort to select small targets on the left side with their right hand and therefore moved their hand further forward.

Target density had no significant effect on the ZMD. This may be related to the type of cursor used in the tasks. Target density is expected to affect the index of difficulty in target selection mainly when an area cursor, such as the bubble cursor, is used [55,56].
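A simplified capture rule shows why an area cursor makes density matter. The cursor acquires whichever target has its edge closest to the cursor position, so a target's effective width grows as its neighbors move away. This sketch assumes circular targets given as (x, y, radius) and ignores how the bubble's visible radius is drawn. `bubble_capture` is a hypothetical name, not code from [55].

```python
import math


def bubble_capture(cursor, targets):
    """Return the index of the target a bubble-style cursor would capture.

    cursor: (x, y) position; targets: list of (x, y, radius) circles.
    The captured target is the one whose edge is nearest the cursor; a
    negative edge distance means the cursor is inside that target.
    """
    cx, cy = cursor

    def edge_distance(target):
        tx, ty, r = target
        return math.hypot(tx - cx, ty - cy) - r

    return min(range(len(targets)), key=lambda i: edge_distance(targets[i]))
```

With a point cursor, only the target's own width matters. With this rule, the nearest distractor decides how much empty space around a target still selects it, which is where density enters the index of difficulty.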

5. Design Suggestions

In this study, hand shapes and Z-dimension movement distances in distal pointing on large displays were investigated through two experiments. The results show that both the hand shape and the Z-dimension movement distance are influenced by target width, that is, by the degree of precision a task requires. In addition, the relationship between orientation and the Z-dimension movement distance resembles a sinusoidal function. Based on these results, we propose the following design guidelines for freehand interaction with large displays and design suggestions for distal pointing:

- Unlike traditional input modalities such as the mouse and touch, freehand input offers a higher input bandwidth, because the semantic meaning of gestures can express more detail in a command. For distal freehand pointing, users could use different hand shapes and Z-dimension movement to change the size and CD gain of the cursor, thereby improving freehand pointing efficiency.

- When interacting with large displays from a distance, users prefer to complete low-precision tasks with the whole hand and high-precision tasks with the finger. For distal freehand pointing, the open hand gesture and the index finger gesture convey different perceived precision. Users could therefore control an area cursor with the open hand for coarse selections and a pointer cursor with the index finger for high-precision selections.

- In high-precision tasks, especially small target selections, users tended to stretch their hand toward the display, reducing the distance between themselves and the large display. Hand motion in the Z-dimension could therefore be mapped to the precision of an interaction tool. For example, moving the hand forward during pointing could decrease the CD gain and the size of an area cursor, making small targets easier to select.

- Orientation has a significant effect on hand motion in the Z-dimension (Figure 8). This relationship can be used to predict the Z-dimension movement distance for movements in different directions. Consequently, when the Z-dimension movement distance is used as a command attribute, orientation has to be taken into account.

- In dragging tasks, some participants preferred to keep their initial hand shape unchanged during manipulation, owing to the legacy of mouse interaction. Designers should therefore be careful when using gestures to increase expressiveness. Gestures should be kept simple and easy to understand, because overly complicated gestures can be confusing.
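The guidelines above can be combined into a small mapping from tracked hand state to cursor parameters. The sketch below is illustrative only. The hand-shape labels, the gain and radius ranges, the 100 mm forward range, and the linear interpolation are all hypothetical choices, not values derived from the study. The sketch first subtracts the orientation-dependent Z drift predicted by a per-user sinusoid, fitted for instance by least squares. Only the remaining forward motion is then read as a request for more precision.

```python
import math
from dataclasses import dataclass


@dataclass
class CursorConfig:
    mode: str          # "area" for open hand, "point" for index finger
    cd_gain: float     # control-display gain applied to hand motion
    radius_mm: float   # activation radius of the area cursor (0 for point)


# Hypothetical tuning constants, for illustration only.
MAX_GAIN, MIN_GAIN = 3.0, 1.0
MAX_RADIUS_MM, MIN_RADIUS_MM = 60.0, 10.0
FORWARD_RANGE_MM = 100.0


def expected_zmd(direction_deg, offset_mm, amplitude_mm, phase_deg):
    """Orientation-dependent Z drift predicted by a fitted sinusoid."""
    return offset_mm + amplitude_mm * math.cos(math.radians(direction_deg - phase_deg))


def cursor_config(hand_shape, zmd_mm, direction_deg, fit):
    """Map hand shape and forward hand motion to cursor settings.

    fit: (offset_mm, amplitude_mm, phase_deg) of a sinusoid relating ZMD
    to movement direction for the current user (hypothetical calibration).
    """
    # Keep only the forward motion not explained by movement direction.
    intent = zmd_mm - expected_zmd(direction_deg, *fit)
    # 0 = no extra forward reach, 1 = full forward range; clamped to [0, 1].
    t = min(max(intent / FORWARD_RANGE_MM, 0.0), 1.0)
    gain = MAX_GAIN - t * (MAX_GAIN - MIN_GAIN)
    if hand_shape == "open_hand":
        radius = MAX_RADIUS_MM - t * (MAX_RADIUS_MM - MIN_RADIUS_MM)
        return CursorConfig("area", gain, radius)
    # Index-finger pointing: a point cursor with no activation area.
    return CursorConfig("point", gain, 0.0)
```

Linear interpolation with clamping is the simplest choice here. A usable technique would need tuned constants and its own evaluation.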

6. Conclusions

In this paper, we presented two related studies exploring the relationship between task precision and gestures, including hand shape and hand motion in the Z-dimension. Our findings show that users prefer index finger gestures and move their hand forward in high-precision selections, while using open hand gestures in large target selections. We also found that orientation has a significant effect on hand motion in the Z-dimension. Based on these results, we presented design guidelines for freehand interaction and a design concept for freehand pointing on large displays. These findings can inform freehand interaction design and improve the understanding of human behavior in remote pointing.

The display size and the gesture recognition range of the Leap Motion sensor limited this study. Future work could therefore study the effects of amplitudes larger than 400 mm and hand movements larger than 600 mm in freehand interaction. Another limitation concerns the participants: only undergraduate students majoring in graphic design or industrial design took part in the experiment, so the results may mainly reflect the younger generation. To enhance external validity, future studies could include participants of different ages, backgrounds, and levels of technology competence. We also plan to design a freehand pointing technique based on the results of this paper and to study its usability in real applications.

Author Contributions

C.-H.C. is a professor at Taiwan Tech. He facilitated the planning of the research design and provided advice on problems encountered during the research period. J.-L.W. is the primary researcher, who performed the experiment, conducted the statistical analysis, and wrote this article. All authors have read and agreed to the published version of the manuscript.

Funding

This research study received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Quek, F.; McNeill, D.; Bryll, R.; Duncan, S.; Ma, X.-F.; Kirbas, C.; Ansari, R. Multimodal human discourse: Gesture and speech. ACM Trans. Comput. Hum. Interact. 2002, 9, 171–193.

- Kopper, R.; Bowman, D.A.; Silva, M.G.; McMahan, R.P. A human motor behavior model for distal pointing tasks. Int. J. Hum. Comput. Stud. 2010, 68, 603–615.

- Nancel, M.; Pietriga, E.; Chapuis, O.; Beaudouin-Lafon, M. Mid-Air Pointing on Ultra-Walls. ACM Trans. Comput. Hum. Interact. 2015, 22, 1–62.

- Tse, E.; Hancock, M.; Greenberg, S. Speech-filtered bubble ray: Improving target acquisition on display walls. In Proceedings of the 9th International Conference on Multimodal Interfaces, Nagoya, Aichi, Japan, 12–15 November 2007; pp. 307–314.

- Jota, R.; Pereira, J.M.; Jorge, J.A. A comparative study of interaction metaphors for large-scale displays. In Proceedings of the CHI ’09 Extended Abstracts on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009.

- Vogel, D.; Balakrishnan, R. Distant freehand pointing and clicking on very large, high resolution displays. In Proceedings of the 18th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 23–27 October 2005.

- Mäkelä, V.; Heimonen, T.; Turunen, M. Magnetic Cursor: Improving Target Selection in Freehand Pointing Interfaces. In Proceedings of the International Symposium on Pervasive Displays, Copenhagen, Denmark, 3–4 June 2014.

- Casiez, G.; Roussel, N.; Vogel, D. 1€ Filter: A simple speed-based low-pass filter for noisy input in interactive systems. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- Lou, X.; Peng, R.; Hansen, P.; Li, X.A. Effects of User’s Hand Orientation and Spatial Movements on Free Hand Interactions with Large Displays. Int. J. Hum. Comput. Interact. 2017, 34, 519–532.

- Lin, J.; Harris-Adamson, C.; Rempel, D. The Design of Hand Gestures for Selecting Virtual Objects. Int. J. Hum. Comput. Interact. 2019, 35, 1729–1735.

- Tian, F.; Lyu, F.; Zhang, X.; Ren, X.; Wang, H. An Empirical Study on the Interaction Capability of Arm Stretching. Int. J. Hum. Comput. Interact. 2017, 33, 565–575.

- Goth, G. Brave NUI world. Commun. ACM 2011, 54, 14–16.

- Morrel-Samuels, P. Clarifying the distinction between lexical and gestural commands. Int. J. Man Mach. Stud. 1990, 32, 581–590.

- Nielsen, M.; Störring, M.; Moeslund, T.B.; Granum, E. A Procedure for Developing Intuitive and Ergonomic Gesture Interfaces for HCI. In Proceedings of the 5th International Gesture Workshop (GW 2003), Genova, Italy, 15–17 April 2003; Camurri, A., Volpe, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 409–420.

- Wobbrock, J.O.; Aung, H.H.; Rothrock, B.; Myers, B.A. Maximizing the guessability of symbolic input. In Proceedings of the CHI ’05 Extended Abstracts on Human Factors in Computing Systems, Portland, OR, USA, 2 April 2005.

- Wobbrock, J.O.; Morris, M.R.; Wilson, A.D. User-defined gestures for surface computing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4 April 2009.

- Dong, H.W.; Danesh, A.; Figueroa, N.; El Saddik, A. An Elicitation Study on Gesture Preferences and Memorability toward a Practical Hand-Gesture Vocabulary for Smart Televisions. IEEE Access 2015, 3, 543–555.

- Chen, Z.; Ma, X.C.; Zhou, Y.; Yao, M.G.; Ma, Z.; Wang, C.; Shen, M.W. User-defined gestures for gestural interaction: Extending from hands to other body parts. Int. J. Hum. Comput. Interact. 2018, 34, 238–250.

- Wu, H.; Yang, L. User-Defined Gestures for Dual-Screen Mobile Interaction. Int. J. Hum. Comput. Interact. 2019, 1–15.

- Norman, D.A. Natural user interfaces are not natural. Interactions 2010, 17, 6–10.

- Chen, X.A.; Schwarz, J.; Harrison, C.; Mankoff, J.; Hudson, S.E. Air + touch: Interweaving touch & in-air gestures. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5–8 October 2014.

- Alkemade, R.; Verbeek, F.J.; Lukosch, S.G. On the Efficiency of a VR Hand Gesture-Based Interface for 3D Object Manipulations in Conceptual Design. Int. J. Hum. Comput. Interact. 2017, 33, 882–901.

- Matulic, F.; Vogel, D. Multiray: Multi-Finger Raycasting for Large Displays. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

- Rempel, D.; Camilleri, M.J.; Lee, D.L. The Design of Hand Gestures for Human-Computer Interaction: Lessons from Sign Language Interpreters. Int. J. Hum. Comput. Stud. 2015, 72, 728–735.

- McNeill, D. Hand and Mind: What Gestures Reveal about Thought; University of Chicago Press: Chicago, IL, USA, 1992.

- Cochet, H.; Vauclair, J. Deictic gestures and symbolic gestures produced by adults in an experimental context: Hand shapes and hand preferences. Later. Asymmetries Body Brain Cognit. 2014, 19, 278–301.

- Wilkins, D. Why pointing with the index finger is not a universal (in sociocultural and semiotic terms). In Pointing: Where Language, Culture, and Cognition Meet; Kita, S., Ed.; Erlbaum: Mahwah, NJ, USA, 2003; pp. 171–215.

- Foehrenbach, S.; König, W.A.; Gerken, J.; Reiterer, H. Natural Interaction with Hand Gestures and Tactile Feedback for large, high-res Displays. In Proceedings of the Workshop on Multimodal Interaction through Haptic Feedback (MITH 08), held in Conjunction with International Working Conference on Advanced Visual Interfaces (AVI 08), Napoli, Italy, 28–30 May 2008.

- Haque, F.; Nancel, M.; Vogel, D. Myopoint: Pointing and Clicking Using Forearm Mounted Electromyography and Inertial Motion Sensors. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015.

- Sambrooks, L.; Wilkinson, B. Comparison of gestural, touch, and mouse interaction with Fitts’ law. In Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration, Adelaide, Australia, 25 November 2013.

- Jude, A.; Poor, G.M.; Guinness, D. Personal space: User defined gesture space for GUI interaction. In Proceedings of the CHI ’14 Extended Abstracts on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014.

- Jota, R.; Nacenta, M.A.; Jorge, J.A.; Carpendale, S.; Greenberg, S. A comparison of ray pointing techniques for very large displays. In Proceedings of the Graphics Interface 2010, Ottawa, ON, Canada, 31 May–2 June 2010.

- Baloup, M.; Oudjail, V.; Pietrzak, T.; Casiez, G. Pointing techniques for distant targets in virtual reality. In Proceedings of the 30th Conference on l’Interaction Homme-Machine, Brest, France, 23–26 October 2018.

- Bateman, S.; Mandryk, R.L.; Gutwin, C.; Xiao, R. Analysis and comparison of target assistance techniques for relative ray-cast pointing. Int. J. Hum. Comput. Stud. 2013, 71, 511–532.

- Siddhpuria, S.; Malacria, S.; Nancel, M.; Lank, E. Pointing at a Distance with Everyday Smart Devices. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

- Debarba, H.; Nedel, L.; Maciel, A. LOP-cursor: Fast and precise interaction with tiled displays using one hand and levels of precision. In Proceedings of the 2012 IEEE Symposium on 3D User Interfaces (3DUI), Costa Mesa, CA, USA, 4–5 March 2012.

- Nancel, M.; Chapuis, O.; Pietriga, E.; Yang, X.-D.; Irani, P.P.; Beaudouin-Lafon, M. High-precision pointing on large wall displays using small handheld devices. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013.

- Endo, Y.; Fujita, D.; Komuro, T. Distant Pointing User Interfaces based on 3D Hand Pointing Recognition. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, Brighton, UK, 17–20 October 2017.

- Petford, J.; Nacenta, M.A.; Gutwin, C. Pointing All Around You: Selection Performance of Mouse and Ray-Cast Pointing in Full-Coverage Displays. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

- Fitts, P.M. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. 1954, 47, 381–391.

- Cha, Y.; Myung, R. Extended Fitts’ law for 3D pointing tasks using 3D target arrangements. Int. J. Ind. Ergon. 2013, 43, 350–355.

- Murata, A.; Iwase, H. Extending Fitts’ law to a three-dimensional pointing task. Hum. Mov. Sci. 2001, 20, 791–805.

- Burno, R.A.; Wu, B.; Doherty, R.; Colett, H.; Elnaggar, R. Applying Fitts’ Law to Gesture Based Computer Interactions. Procedia Manuf. 2015, 3 (Suppl. C), 4342–4349.

- Jones, K.S.; McIntyre, T.J.; Harris, D.J. Leap Motion- and Mouse-Based Target Selection: Productivity, Perceived Comfort and Fatigue, User Preference, and Perceived Usability. Int. J. Hum. Comput. Interact. 2019, 1–10.

- Ballendat, T.; Marquardt, N.; Greenberg, S. Proxemic interaction: Designing for a proximity and orientation-aware environment. In Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces, Saarbrücken, Germany, 7–10 November 2010.

- Vogel, D.; Balakrishnan, R. Interactive public ambient displays: Transitioning from implicit to explicit, public to personal, interaction with multiple users. In Proceedings of the 17th Annual ACM Symposium on User Interface Software and Technology, Santa Fe, NM, USA, 24–27 October 2004.

- Harrison, C.; Dey, A.K. Lean and zoom: Proximity-aware user interface and content magnification. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008.

- Clark, A.; Dünser, A.; Billinghurst, M.; Piumsomboon, T.; Altimira, D. Seamless interaction in space. In Proceedings of the 23rd Australian Computer-Human Interaction Conference, Canberra, Australia, 28 November–2 December 2011.

- Li, A.X.; Lou, X.L.; Hansen, P.; Peng, R. On the Influence of Distance in the Interaction with Large Displays. J. Disp. Technol. 2016, 12, 840–850.

- Ren, G.; O’Neill, E. 3D selection with freehand gesture. Comput. Gr. 2013, 37, 101–120.

- Lubos, P.; Bruder, G.; Ariza, O.; Steinicke, F. Touching the Sphere: Leveraging Joint-Centered Kinespheres for Spatial User Interaction. In Proceedings of the 2016 Symposium on Spatial User Interaction, Tokyo, Japan, 15–16 October 2016.

- Guinness, D.; Jude, A.; Poor, G.M.; Dover, A. Models for Rested Touchless Gestural Interaction. In Proceedings of the 3rd ACM Symposium on Spatial User Interaction, Los Angeles, CA, USA, 8–9 August 2015.

- Kim, H.; Oh, S.; Han, S.H.; Chung, M.K. Motion–Display Gain: A New Control–Display Mapping Reflecting Natural Human Pointing Gesture to Enhance Interaction with Large Displays at a Distance. Int. J. Hum. Comput. Interact. 2019, 35, 180–195.

- Morris, M.R.; Danielescu, A.; Drucker, S.; Fisher, D.; Lee, B.; Schraefel, M.C.; Wobbrock, J.O. Reducing legacy bias in gesture elicitation studies. Interactions 2014, 21, 40–45.

- Grossman, T.; Balakrishnan, R. The bubble cursor: Enhancing target acquisition by dynamic resizing of the cursor’s activation area. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Portland, OR, USA, 2 April 2005.

- Blanch, R.; Ortega, M. Benchmarking pointing techniques with distractors: Adding a density factor to Fitts’ pointing paradigm. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7 May 2011.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).