1. Introduction

A large portion of the world’s population is associated with the agriculture sector, and the economies of many developing and underdeveloped countries rely on agriculture. This sector has undergone multiple transformations as the world’s population has increased [1]. Growth in the human population is directly linked to the need to increase the world’s agricultural productivity and its sustainability. Technology was introduced to agriculture more than a century ago, and several studies aimed at improving production efficiency have been conducted since the 1990s [1]. More recently, advanced industrial technologies based on artificial intelligence (AI) have been applied in agriculture to improve productivity, reduce environmental impact, and strengthen food security and sustainability. Before addressing any problem in this area, one must understand the basic ecosystem of agriculture and the fundamental requirements of farming. Agriculture is considered one of the most challenging research fields, and technology has great potential to increase the quantity and quality of agricultural products. AI, and deep learning techniques in particular, can be used to advance the agricultural sector [2].

In the agriculture sector, crop farming relies heavily on seeds: without seeds, no crop can be produced or harvested. The human population has been increasing rapidly for many years, and, as a result, agricultural land is shrinking, which reduces food production. To balance the consumption rate with the production rate, crop production must be increased. In this regard, people have started growing crops and vegetables at home. However, not everyone possesses the knowledge needed to do this, since cultivating seeds requires the expertise to identify them. To eliminate this dependency, an automated system is needed that can assist in identifying and classifying different types of seeds. Several studies have addressed seed-related issues using AI techniques, ranging from simple object identification to complex texture and pattern identification. Recent studies have increasingly used machine learning techniques to classify the seeds of various crops, fruits and vegetables. Most of these studies have focused on a single genre of seed (e.g., weed seeds [3], cottonseeds [4], rice seeds [5,6], oat seeds [7], sunflower seeds [8], tomato seeds [9] and corn [10,11]) for various purposes, including germination and vigour detection, purification and growth-stage monitoring.

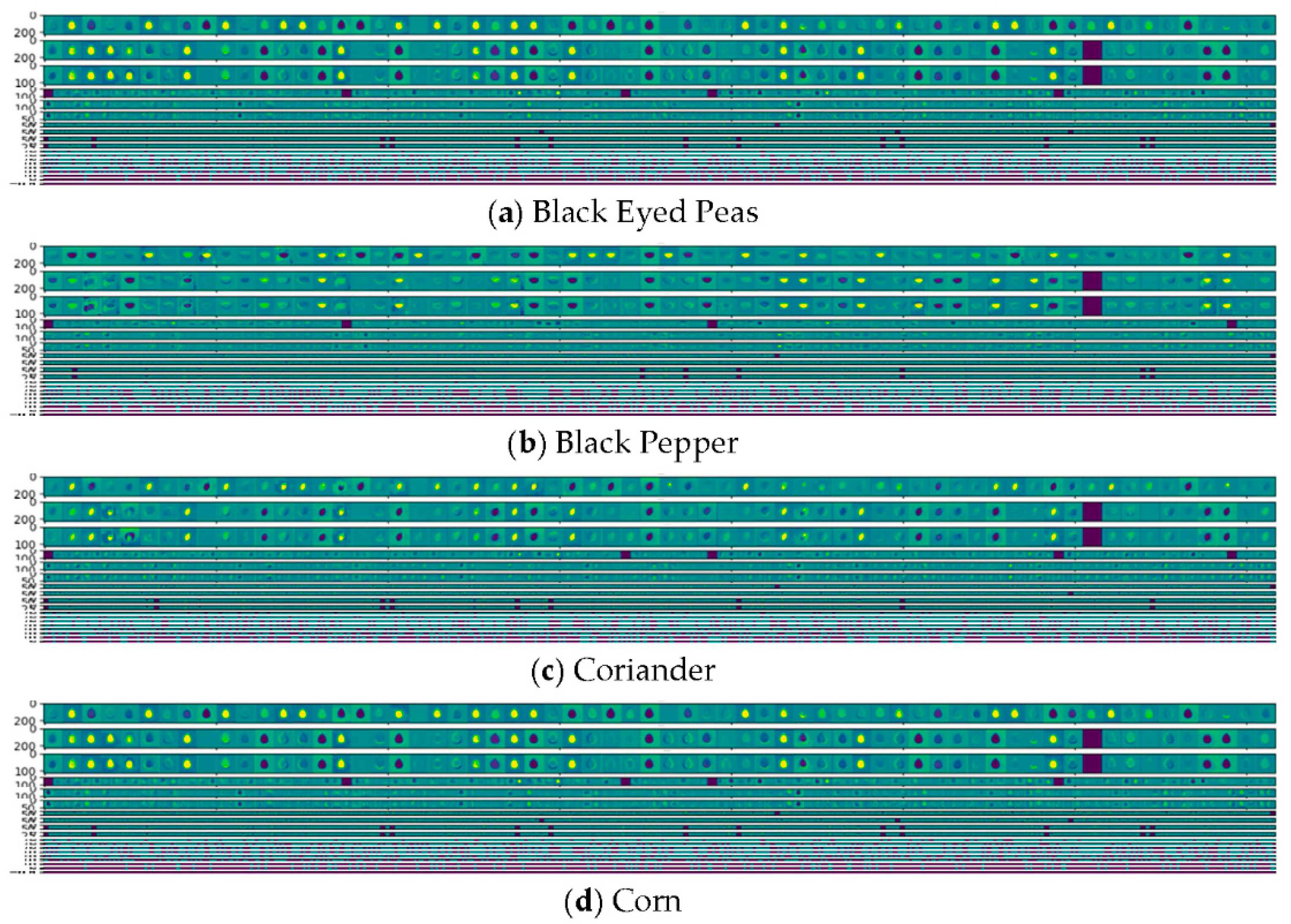

Hardly any studies apply convolutional neural networks (CNNs) [12] to seed identification/classification problems. CNNs are deep learning models consisting of several types of layers: convolutional, pooling and fully connected layers. The convolutional layers perform feature extraction, the pooling layers perform compression (downsampling) and the fully connected layers perform classification. CNNs are mainly used for image recognition and classification. A general representation of a CNN model can be seen in Figure 1. CNNs can bring efficiency and accuracy to visual imagery analysis through precise feature extraction [12,13].
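To make the roles of the three layer types concrete, the following is a minimal pure-Python sketch of a CNN forward pass on a single-channel image: one convolution with ReLU (feature extraction), one 2 × 2 max-pooling step (compression) and one fully connected layer followed by softmax (classification). The kernel, weights and image size are arbitrary illustrative values and not those of the proposed model; in practice, a framework such as Keras or PyTorch would be used and the weights would be learned.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2-D convolution as used in CNNs
    (strictly a cross-correlation: the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; halves each spatial dimension."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def dense(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image: Matrix, kernel: Matrix, weights: Matrix, bias: List[float]) -> List[float]:
    features = max_pool2x2(relu(conv2d(image, kernel)))  # extraction + compression
    flat = [v for row in features for v in row]          # flatten for the dense layer
    return softmax(dense(flat, weights, bias))           # class probabilities


if __name__ == "__main__":
    image = [[float((i + j) % 3) for j in range(6)] for i in range(6)]
    edge_kernel = [[1.0, 0.0, -1.0] for _ in range(3)]   # vertical-edge detector
    weights = [[0.5, -0.5, 0.25, 0.1], [-0.3, 0.2, 0.4, -0.1]]  # 2 classes x 4 features
    print(forward(image, edge_kernel, weights, [0.0, 0.0]))
```

Here a 6 × 6 input becomes a 4 × 4 feature map after the 3 × 3 convolution, 2 × 2 after pooling, and four flattened features feed the two-class output layer.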

To the best of our knowledge, no study has addressed the problem of subject heterogeneity, i.e., classifying many different kinds of seeds with a single model. In addition, most studies used only traditional machine learning techniques for feature extraction and classification. Hence, there is a need for an approach that solves the subject heterogeneity problem by incorporating deep learning techniques to bring efficiency and accuracy to seed identification and classification. Many researchers have previously reported successful results using traditional machine learning techniques; however, although these techniques have been successfully applied in many practical studies, they still have many limitations in certain real-world scenarios [14].

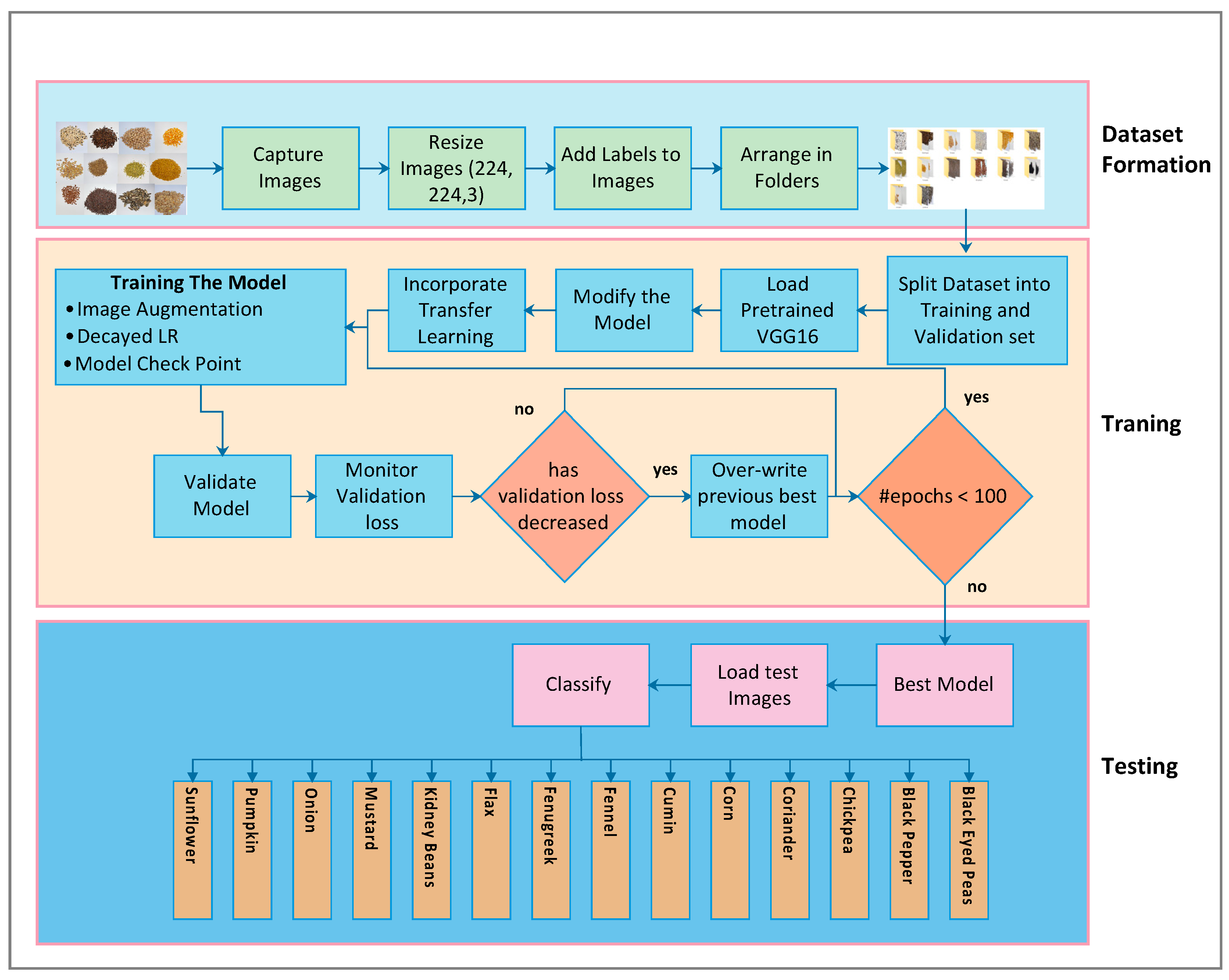

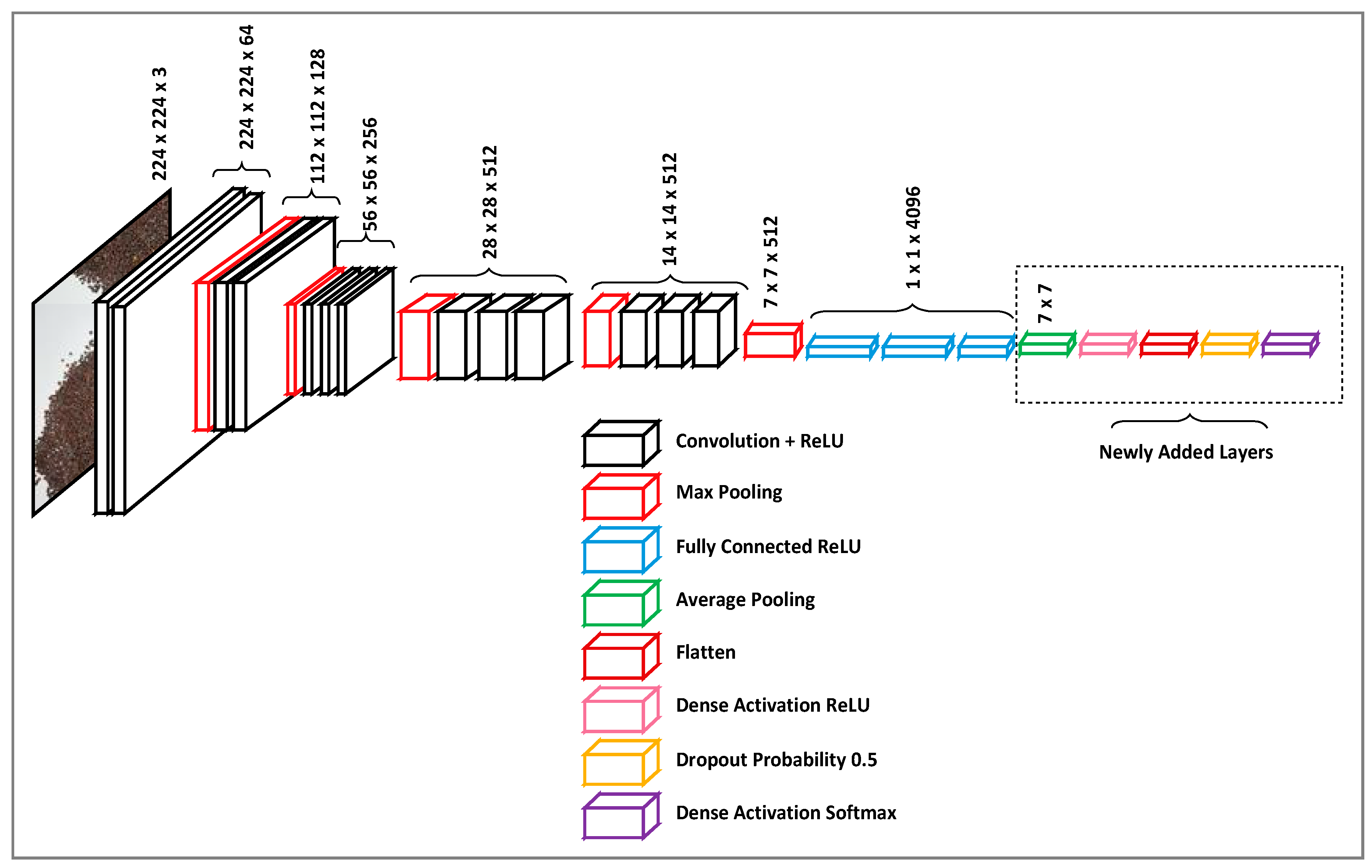

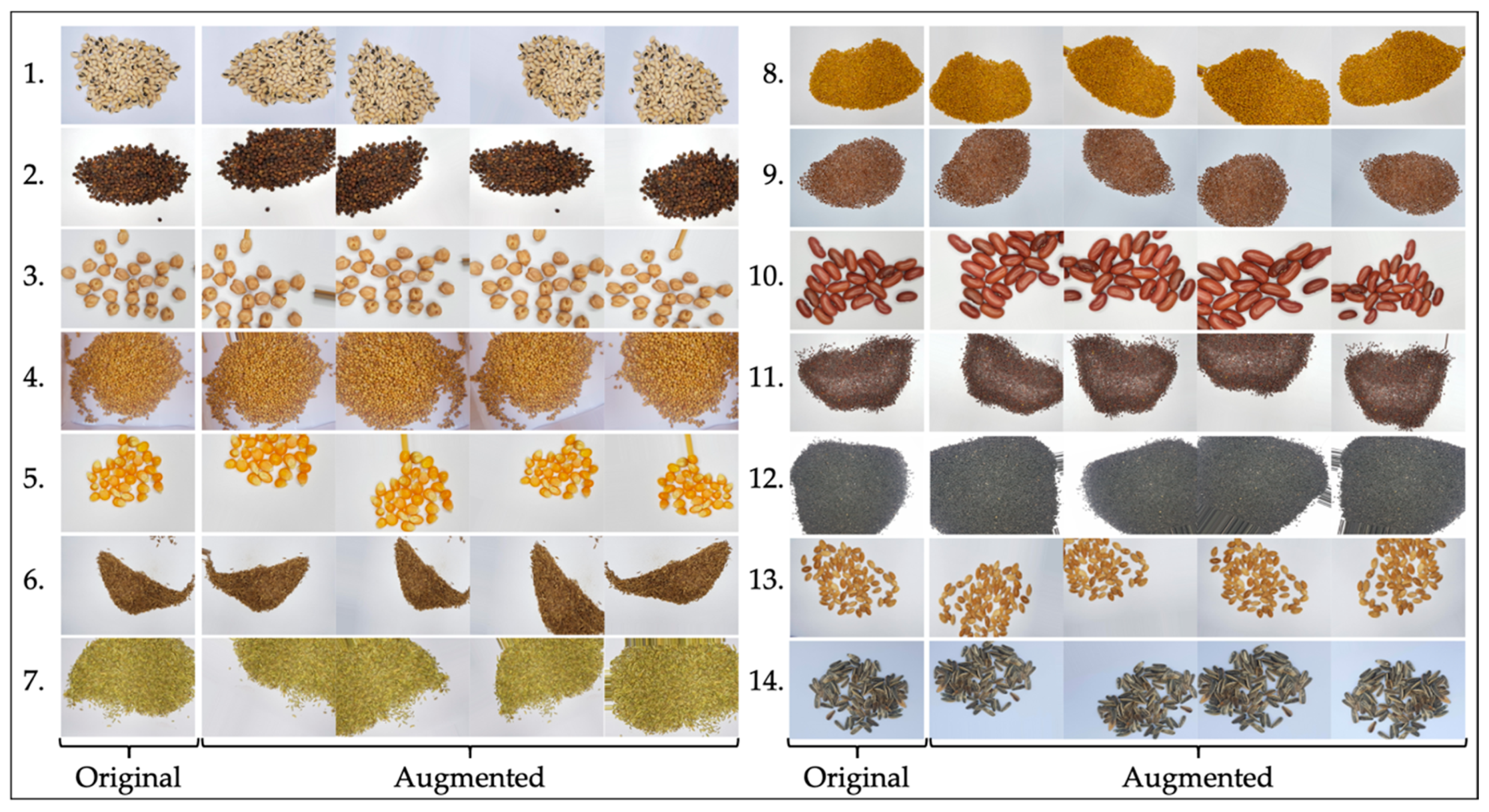

This research proposes an efficient approach to seed identification and classification based on a deep learning CNN model and the application of symmetry. The model is trained using transfer learning, a promising machine learning methodology that focuses on transferring knowledge across domains. Transfer learning is an important machine learning (ML) tool for problems with insufficient training data, because it avoids training the model from scratch and significantly reduces the training time [15]. In this research, a dataset comprising symmetric images of various seeds was used for seed classification. Many symmetric images of each seed class were captured and then augmented to train the proposed model. Further details of the dataset are presented in Section 3. This research adopted a pre-trained VGG16 model to accomplish seed identification and classification [16]. However, the model was modified by adding new layers, aimed at increasing accuracy and reducing the error rate of the classification process. This modification of the VGG16 model optimises the classification process and ultimately helps non-experts identify and classify different types of seeds.
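The effect of transfer learning on the number of parameters that must be trained can be illustrated with a short calculation. The sketch below assumes the standard VGG16 convolutional base (13 convolutional layers with 3 × 3 kernels and five max-pooling layers) with a 224 × 224 × 3 input, kept frozen, and a hypothetical five-layer classification head (Flatten, Dense(256), Dropout, Dense(128) and a 14-way output Dense layer). The head layout and sizes are our assumptions for illustration only; they are not the layers actually added in this work.

```python
from typing import List, Tuple

# (in_channels, out_channels) of the 13 3x3 convolutional layers of VGG16;
# "M" marks a 2x2 max-pooling layer, which has no parameters.
VGG16_CONV_BASE = [
    (3, 64), (64, 64), "M",
    (64, 128), (128, 128), "M",
    (128, 256), (256, 256), (256, 256), "M",
    (256, 512), (512, 512), (512, 512), "M",
    (512, 512), (512, 512), (512, 512), "M",
]


def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights (k * k * c_in * c_out) plus one bias per output channel."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out


def base_summary(input_size: int = 224) -> Tuple[int, int]:
    """Return (frozen parameter count, flattened feature size) of the conv base."""
    params, size, channels = 0, input_size, 3
    for layer in VGG16_CONV_BASE:
        if layer == "M":
            size //= 2  # each pooling layer halves height and width
        else:
            c_in, c_out = layer
            params += conv_params(c_in, c_out)
            channels = c_out
    return params, size * size * channels


def head_params(n_features: int, hidden: List[int], n_classes: int) -> int:
    """Trainable parameters of a hypothetical head:
    Flatten -> Dense(hidden[0]) -> Dropout -> Dense(hidden[1]) ... -> Dense(n_classes).
    Flatten and Dropout layers contribute no parameters."""
    total, n_in = 0, n_features
    for n_out in hidden + [n_classes]:
        total += dense_params(n_in, n_out)
        n_in = n_out
    return total


if __name__ == "__main__":
    frozen, n_features = base_summary()
    trainable = head_params(n_features, hidden=[256, 128], n_classes=14)
    print(f"frozen VGG16 base:  {frozen:,} parameters")
    print(f"flattened features: {n_features:,}")
    print(f"trainable head:     {trainable:,} parameters")
```

Under these assumptions, the frozen base holds 14,714,688 parameters and the head about 6.5 million, so only around a third of the roughly 21.2 million weights are updated during training, which is one reason transfer learning shortens training.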

The following points summarise the contributions of this paper:

We conduct a detailed review examining the most notable work in the area of seed classification via machine learning and deep learning.

We re-introduce the problem of seed classification using the pre-trained VGG16 convolutional neural network architecture to classify many varieties of seeds, unlike previously proposed models, which focused on only one kind of seed.

We propose a new model for seed identification and classification using advanced deep learning techniques. Furthermore, dropout and data augmentation techniques have been incorporated to minimise the chances of overfitting.

We fine-tune our model by applying an adjustable learning rate so that the model performs consistently on the dataset and the optimiser does not overshoot the minimum of the loss function.

We develop an optimisation technique that monitors any improvement in validation accuracy and validation loss. Whenever an improvement occurs, it saves a backup of the model at the end of that iteration, ensuring that the final model is the one with the highest validation accuracy and the lowest validation loss.
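The adjustable learning rate and the best-model backup described in the last two contributions can be sketched independently of any framework. This is a minimal sketch under our own assumptions: the learning rate is multiplied by a hypothetical `factor` of 0.5 after `patience` epochs without an improvement in validation loss (similar in spirit to the Keras `ReduceLROnPlateau` callback), and a copy of the model state is kept whenever validation accuracy improves, or ties the best value with a lower validation loss (similar to Keras `ModelCheckpoint` with `save_best_only=True`). The class `TrainingMonitor` and all thresholds are hypothetical, not the authors' implementation.

```python
import copy
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class TrainingMonitor:
    """Hypothetical helper: reduce-on-plateau learning rate + best-model checkpoint."""
    lr: float = 1e-3
    factor: float = 0.5      # lr multiplier applied on a plateau
    patience: int = 3        # epochs without val-loss improvement before reducing lr
    min_lr: float = 1e-6
    best_val_loss: float = float("inf")  # best loss seen so far (drives the lr schedule)
    best_val_acc: float = -1.0           # accuracy of the saved checkpoint
    ckpt_val_loss: float = float("inf")  # loss of the saved checkpoint
    best_epoch: int = -1
    best_state: Optional[Any] = None
    wait: int = 0

    def end_of_epoch(self, epoch: int, val_acc: float, val_loss: float,
                     model_state: Any) -> None:
        # Checkpoint: back up the weights if validation accuracy improved, or if
        # it matched the best accuracy with a lower validation loss.
        better_acc = val_acc > self.best_val_acc
        tie_lower_loss = val_acc == self.best_val_acc and val_loss < self.ckpt_val_loss
        if better_acc or tie_lower_loss:
            self.best_val_acc, self.ckpt_val_loss = val_acc, val_loss
            self.best_epoch = epoch
            self.best_state = copy.deepcopy(model_state)

        # Learning-rate schedule: shrink lr after `patience` epochs without
        # an improvement in validation loss.
        if val_loss < self.best_val_loss:
            self.best_val_loss = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0


if __name__ == "__main__":
    # Made-up (val_acc, val_loss) pairs, for illustration only.
    history = [(0.22, 2.1), (0.40, 1.5), (0.38, 1.6), (0.39, 1.7), (0.41, 1.8), (0.90, 0.4)]
    monitor = TrainingMonitor()
    for epoch, (acc, loss) in enumerate(history):
        monitor.end_of_epoch(epoch, acc, loss, {"epoch": epoch})  # stand-in for weights
    print(monitor.best_epoch, monitor.best_val_acc, monitor.lr)
```

With this made-up history, three epochs without a lower validation loss halve the learning rate once, and the state saved at the final epoch is kept as the best model.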

The remainder of the paper is organised as follows. Section 2 reports and discusses previous work related to this research. The proposed model is explained and illustrated in Section 3, and the results are presented and discussed in Section 4. Our conclusions are given in Section 5.

2. Related Work

AI has made many contributions to agriculture, as it brings flexible, high-performance, accurate and cost-effective solutions to various problems [17]. Image processing, together with computer vision, has remained the main area of interest for many researchers. Image processing was introduced in agriculture less than a decade ago to address seed sorting and classification for the quality of crop production. Image processing involves feature extraction, a complex task that supports object identification and classification. Within AI, traditional ML techniques have been widely applied to object identification and classification.

Jamuna et al. [4] employed machine learning techniques (a Naïve Bayes classifier, a decision tree classifier and a multilayer perceptron (MLP)) to classify cotton seeds from extracted features, using a sample of 900 cotton seeds. They reported that the decision tree classifier and the MLP achieved the same accuracy in classifying the seed cotton, 98.7%, whereas the Naïve Bayes classifier achieved 94.22%. The Naïve Bayes classifier therefore had the highest error rate, with 52 misclassifications, compared with 11 each for the decision tree classifier and the MLP.

Feature extraction in traditional ML techniques relies mainly on user-specified features, which may lose important information and make it difficult to obtain accurate results. Deep learning techniques, by contrast, learn the features of images in successive layers instead of relying on hand-crafted features [2]. As an example of a traditional pipeline, Rozman and Stajnko [9] assessed the quality of tomato seeds in terms of their vigour and germination. They proposed a computer vision system and reported a detailed procedure for image processing and feature extraction that incorporated a Gaussian filter, segmentation and a region of interest (ROI). The study used machine learning classification algorithms, including Naïve Bayes classifiers (NBC), k-nearest neighbours (k-NN), decision tree (DT) classifiers, support vector machines (SVM) and artificial neural networks (ANN), to sort a sample of 700 seeds. Among these algorithms, the ANN (MLP architecture) performed best, with an accuracy of 95.44%. The other accuracy rates were 87.89% for NBC, 91.66% for k-NN, 93.66% for DT and 93.09% for SVM [9].
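As an illustration of the kind of pre-processing described for that study (Gaussian filtering, segmentation and ROI extraction), the following pure-Python sketch smooths a grayscale image with a 3 × 3 Gaussian kernel, segments it with a fixed threshold and returns the bounding box of the foreground as the ROI. The fixed threshold, the dark-seed-on-light-background assumption and the function names are ours; the actual parameters used by Rozman and Stajnko are not given here.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]

# 3x3 binomial approximation of a Gaussian kernel (weights sum to 16).
GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def gaussian_blur(img: Image) -> Image:
    """Smooth with a 3x3 Gaussian kernel; border pixels are replicated."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(-1, 2):
                for b in range(-1, 2):
                    y = min(max(i + a, 0), h - 1)  # clamp to the image border
                    x = min(max(j + b, 0), w - 1)
                    acc += GAUSS_3X3[a + 1][b + 1] * img[y][x]
            out[i][j] = acc / 16.0
    return out


def segment(img: Image, threshold: float) -> List[List[bool]]:
    """Foreground = pixels darker than the threshold (dark seed on a white background)."""
    return [[v < threshold for v in row] for row in img]


def roi_bbox(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Inclusive bounding box (top, left, bottom, right) of the foreground, or None."""
    coords = [(i, j) for i, row in enumerate(mask) for j, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [i for i, _ in coords]
    cols = [j for _, j in coords]
    return min(rows), min(cols), max(rows), max(cols)


if __name__ == "__main__":
    img = [[255.0] * 8 for _ in range(8)]  # white background
    for i in (3, 4):
        for j in (2, 3, 4):
            img[i][j] = 30.0               # a small dark "seed"
    print(roi_bbox(segment(gaussian_blur(img), threshold=150)))
```

The blur slightly lightens the seed's edge pixels, so the threshold must be chosen with the smoothing in mind; adaptive methods such as Otsu thresholding are a common alternative to a fixed value.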

A comparative study of ML algorithms by Agrawal and Dahiya [18] aimed to classify various grain seeds using logistic regression (LR), linear discriminant analysis (LDA), a k-nearest neighbours classifier (kNN), a decision tree classifier (CART), Gaussian Naïve Bayes (NB) and a support vector machine (SVM). The study reported performance for both linear (LR and LDA) and non-linear (kNN, CART, NB and SVM) algorithms. The accuracy rates were 91.6% for LR, 95.8% for LDA, 87.5% for kNN, 88% for CART, 88.05% for NB and 88.71% for SVM. LDA therefore achieved the best performance [18].

Another classification study on corn seeds used a probabilistic neural network (PNN). The analysis was performed on waveform data obtained by terahertz time-domain spectroscopy (THz-TDS), which was combined with the PNN machine learning algorithm. The classification accuracy was 75% under 5-fold cross-validation [10].
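For readers unfamiliar with k-fold cross-validation (used with k = 5 in this study and k = 10 in [11]), the sketch below shows how sample indices are partitioned: the data are shuffled once and split into k folds of near-equal size, and each fold serves exactly once as the test set while the remaining k − 1 folds form the training set. The function name and seed are our own; libraries such as scikit-learn provide an equivalent utility (`sklearn.model_selection.KFold`).

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of the k folds."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # shuffle once, reproducibly
    # The first n % k folds receive one extra sample so that all n samples are used.
    sizes = [n // k + (1 if f < n % k else 0) for f in range(k)]
    start = 0
    for size in sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


if __name__ == "__main__":
    for fold, (train, test) in enumerate(k_fold_indices(n=12, k=5)):
        print(f"fold {fold}: {len(train)} train / {len(test)} test")
```

The reported cross-validated accuracy is then the mean of the k per-fold test accuracies.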

Also focusing on feature extraction, Vlasov and Fadeev [19] applied a machine learning approach, rather than mechanical methods, to grain crop seeds. They described feature extraction with traditional machine learning in detail: image feature extraction, descriptor retrieval, clustering and, finally, the construction of a vocabulary of visual words. Although their main focus was the traditional ML approach, they also reported deep learning as a second method for seed classification and purification. Their results showed that the deep learning approach reached 95% classification accuracy, whereas the traditional approach reached around 75% [19].

In another study, features of rice based on physical properties such as shape, colour and texture were extracted for classification. The researchers compared four statistical machine learning methods (LR, LDA, k-NN and SVM) with five pre-trained deep learning models (VGG16, VGG19, Xception, InceptionV3 and InceptionResNetV2). Among the statistical methods, SVM achieved the best accuracy (83.9%), while among the deep learning models, InceptionResNetV2 achieved the best accuracy (95.15%) [6].

A study on corn seed purification reported the preparation of a hybrid dataset. The authors performed a laborious procedure to extract features from corn seeds; the hybrid feature dataset comprised histogram, texture and spectral features. The classification models included random forest (RF), BayesNet (BN), LogitBoost (LB) and multilayer perceptron (MLP), applied to optimised multi-feature sets with 10-fold cross-validation. Among these classifiers, MLP achieved the highest classification accuracy (98.93%) on ROIs of size 150 × 150 [11].

Traditional AI-based algorithms involve detailed feature extraction steps and also need assistance from experts, which reduces their efficiency. Deep learning (DL), unlike traditional classification methods, is not limited to shallow-structure algorithms. It can represent complex functions and extract the most essential features from a limited number of training samples. A study by Xinshao [3] on a sample of weed seeds used the deep learning technique principal component analysis network (PCANet), which reduced the limitations of manual feature extraction by learning features directly from the dataset.

DL, a subfield of machine learning, has advanced the results of various data analysis tasks, especially in computer vision [5]. DL represents data by automatically learning abstract features through the layers of a deep network, and it is widely applied to visual tasks. A study on oat seed varieties combined a deep convolutional neural network (DCNN) with traditional classifiers, namely logistic regression (LR) and support vector machines with a radial basis function kernel (RBF_SVM) and a linear kernel (LINEAR_SVM) [7].

Another study [5] compared the performance of k-nearest neighbours (kNN), a support vector machine (SVM) and CNN models on spectral data of rice. CNN outperformed the other two models, with accuracy rates of 89.6% on the training set and 87% on the testing set.

A study focused on CNNs addressed the complexity of sunflower seed features. The authors state that impurities in sunflower seeds are difficult to recognise because of their texture, and that, among the available methods, CNNs have achieved great success in object detection and identification; they therefore used a CNN to address their research problem. They developed an eight-layer CNN model to extract image features, and their extensive experiments showed that the model’s accuracy was much higher than that of traditional models [8].

Few studies have used CNNs to identify and classify varieties of seeds. Maeda-Gutiérrez et al. [20] compared CNN-based architectures, including AlexNet [21], GoogleNet [22], Inception V3 [23] and Residual Network (ResNet 18 and 50) [24]. The dataset used in their research contained a single genre (tomato plant seeds). In contrast, in our research, we propose an efficient model for seed identification and classification based on a CNN, a deep learning model with a high level of precision in image feature extraction. Unlike most related studies, our dataset contains 14 types of seed. The model was trained through transfer learning, which allowed this research to focus more on the validation and testing of the model.

4. Experimentation and Result Analysis

To fairly evaluate the performance and demonstrate the efficiency of our proposed solution for seed identification and classification over a broad range of seeds, several extensive experiments were designed. The proposed work was implemented in Python on the Windows 10 operating system, on a system with an Intel Core i7 processor and 16 GB of RAM. Training the proposed model took 67 h with this configuration. Images were captured with an Android device running Android Pie, with 6 GB of RAM and a 12-megapixel camera. The seeds were placed on a white background and illuminated with white light.

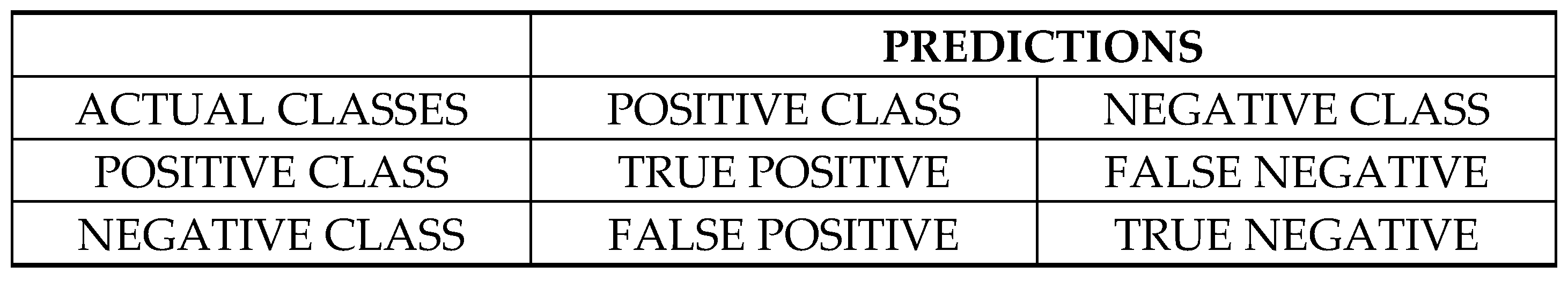

To assess the performance of the classification model, a range of performance metrics were employed, derived from the confusion matrix, which for each individual class reduces to a 2 × 2 (one-vs-rest) matrix. By definition, a confusion matrix $C$ is such that $C_{i,j}$ is equal to the number of observations known to be in group $i$ and predicted to be in group $j$, as described in Figure 9.

There are four different cases in the confusion matrix, from which more advanced metrics are obtained:

True positive: a presented image is of a mustard seed and the model classifies it as a mustard seed image.

True negative: a presented image is not of a mustard seed and the model does not classify it as a mustard seed image.

False positive: a presented image is not of a mustard seed; however, the model incorrectly classifies it as a mustard seed image.

False negative: a presented image is of a mustard seed; however, the model incorrectly classifies it as something else.

Model evaluation is an important phase of the machine learning life cycle, used to check a model’s performance. To check the performance of our proposed model, we measured precision, recall, F1-score and accuracy, all derived from these four cases.
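These metrics can be computed directly from the confusion matrix. The sketch below builds the multi-class matrix $C$ (rows are true classes $i$, columns are predicted classes $j$), derives the one-vs-rest TP, FP, FN and TN for each class exactly as in the mustard-seed cases listed above, and computes precision = TP/(TP + FP), recall = TP/(TP + FN), F1 = 2 · precision · recall/(precision + recall), support (TP + FN) and overall accuracy. The labels and predictions in the example are made up for illustration and are not results from this paper.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: List[str]) -> List[List[int]]:
    """C[i][j] = number of samples with true class labels[i] predicted as labels[j]."""
    pos = {label: k for k, label in enumerate(labels)}
    c = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        c[pos[t]][pos[p]] += 1
    return c


def per_class_metrics(c: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    total = sum(map(sum, c))
    report = {}
    for k, label in enumerate(labels):
        tp = c[k][k]
        fn = sum(c[k]) - tp                 # true class k, predicted as another class
        fp = sum(row[k] for row in c) - tp  # predicted k, but the true class differs
        tn = total - tp - fn - fp           # neither true nor predicted as k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1,
                         "support": tp + fn, "tn": tn}
    return report


def accuracy(c: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    return sum(c[k][k] for k in range(len(c))) / sum(map(sum, c))


if __name__ == "__main__":
    labels = ["mustard", "black pepper", "cumin", "fennel"]
    y_true = ["mustard"] * 4 + ["black pepper"] * 4 + ["cumin"] * 4 + ["fennel"] * 4
    y_pred = (["mustard"] * 4 + ["black pepper"] * 3 + ["mustard"]
              + ["cumin"] * 4 + ["fennel"] * 3 + ["cumin"])
    cm = confusion_matrix(y_true, y_pred, labels)
    for label, m in per_class_metrics(cm, labels).items():
        print(f"{label:>12}: P={m['precision']:.2f} R={m['recall']:.2f} "
              f"F1={m['f1']:.2f} support={m['support']}")
    print(f"accuracy = {accuracy(cm):.3f}")
```

In this toy example, one black pepper image predicted as mustard lowers mustard’s precision and black pepper’s recall at the same time, which mirrors how confusions between similar-looking seeds show up in per-class tables.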

We also assessed the trained model’s validation accuracy and validation loss. Furthermore, the model was continuously monitored in terms of validation accuracy and validation loss to detect any noticeable deviation between training and validation performance.

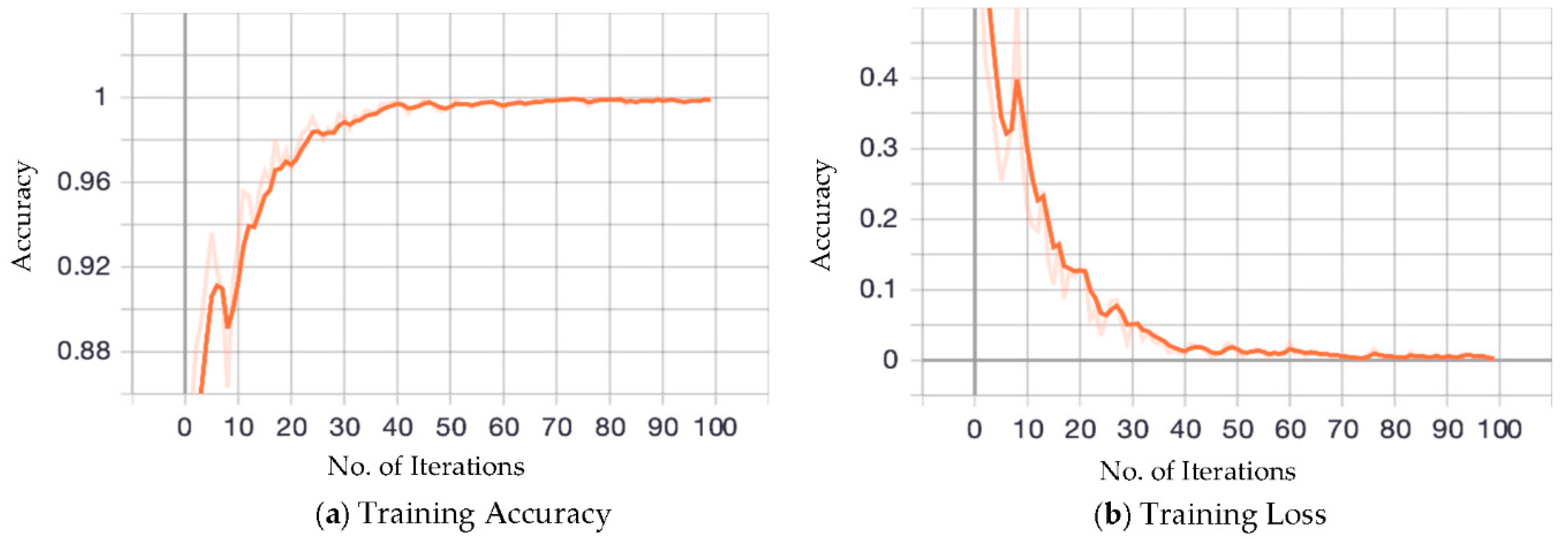

Figure 10 depicts the training accuracy and loss of the model. Figure 10a shows that the model started with a training accuracy of 0.81 in the first epoch. Performance continues to improve from iterations 1 to 5, reaching a maximum accuracy of 0.93. Thereafter, accuracy drops in the following iterations and, by the end of the 10th iteration, returns to the initial accuracy.

Over these 10 iterations, both high and low accuracy rates occur, which indicates that the model does not yet fit the data well. The performance of the model improves appreciably from the 10th to the 40th iteration, reaching a maximum value of 0.996. In between, accuracy dips in some iterations; however, these dips do not affect the overall upward trend. From the 40th iteration onwards, accuracy decreases only slightly over the next 60 iterations, and performance remains steady. This indicates that the optimal model for the training data has been obtained.

Figure 10b illustrates the training loss of the model. At the beginning, the loss is high, which is normal at the initial stage of training, as the model has not yet been exposed to many examples of the data.

Figure 10b also shows that the training loss decreases overall over successive epochs, which is characteristic of a good model, although it oscillates between noticeable highs and lows up to the 40th iteration. After the 40th iteration, the loss remains at its smallest value. We attribute this steadiness to the quality of the dataset and to the dense architecture with multiple layers.

It is well known from the literature that a model can perform well on the training data yet fail on the validation data. This happens because the model has only seen the training examples and may have memorised them rather than learned features that generalise (overfitting). To be considered the best fit, a model should therefore perform well not only on the training dataset but also on the validation dataset.

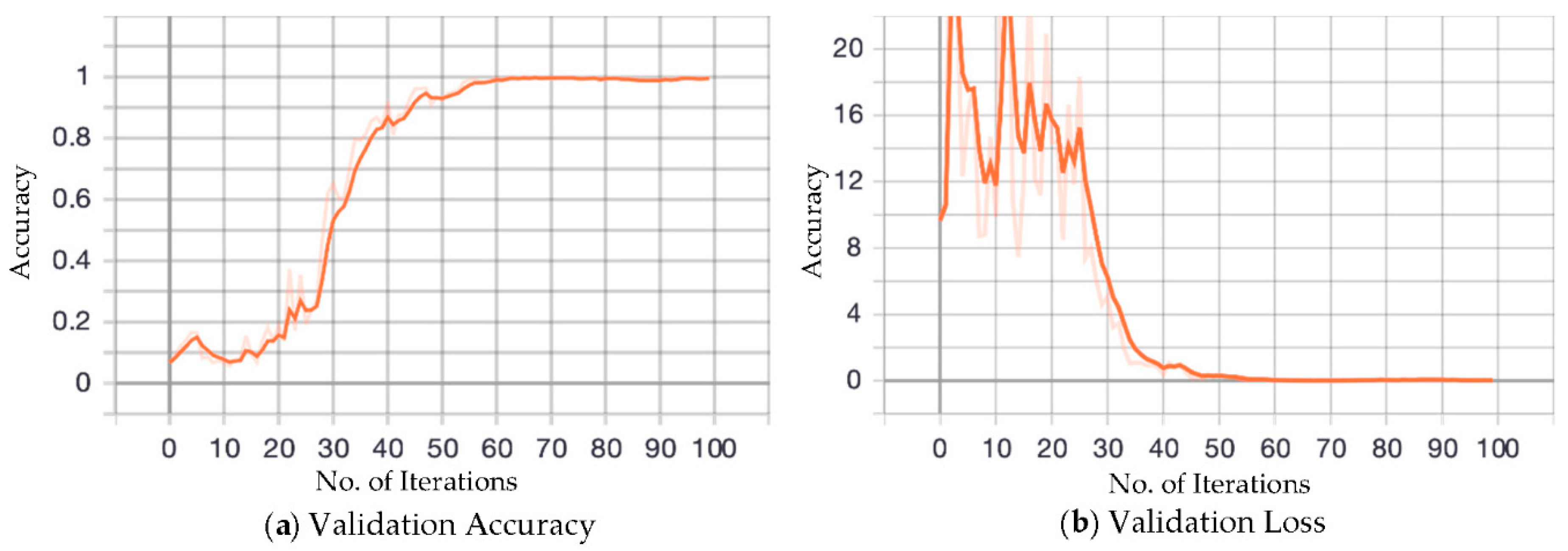

Figure 11 shows the validation accuracy and loss of the proposed model.

Figure 11a shows that the model initially performs poorly, with an accuracy of around 0.22, because it has not yet been trained enough. Performance stays more or less the same for the first 20 iterations and improves gradually from the 20th to the 30th iteration. It reaches its peak at the 40th iteration and remains steady from then on, which we attribute to the dense architecture with multiple layers.

Figure 11b shows the validation loss of the model. The validation loss was very high at the initial stage, far from an acceptable range, and it oscillates between highs and lows for the first 40 iterations. From the 40th iteration onwards, the loss decreases monotonically, reaching its minimum at the 55th iteration and remaining unchanged thereafter.

The model’s performance was stable during both training and validation, which we attribute to the pre-processing techniques employed. These began with data collection, during which an effort was made to obtain a balanced distribution of data across all classes. In addition, image augmentation helped to ensure that the model was exposed to a wide range of variations of the dataset. The dropout technique played a major role in the model’s validation performance by ensuring that it did not deviate much from its training performance.
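The two regularisation ideas in this paragraph can be sketched in a few lines. The augmentation functions below generate geometric variants of an image (horizontal and vertical flips and 90° rotations, which suit roughly symmetric seed images), and `dropout` shows inverted dropout as applied during training: each activation is zeroed with probability p and the survivors are scaled by 1/(1 − p) so that the expected activation is unchanged, while at inference time the input passes through untouched. The specific transformations and p = 0.5 are illustrative assumptions; the paper’s exact augmentation and dropout settings are not given here.

```python
import random
from typing import List

Image = List[List[int]]


def hflip(img: Image) -> Image:
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    return [row[:] for row in img[::-1]]


def rot90(img: Image) -> Image:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img: Image, rng: random.Random) -> Image:
    """Return one randomly transformed copy of `img` (the original is not modified)."""
    out = [row[:] for row in img]
    if rng.random() < 0.5:
        out = hflip(out)
    if rng.random() < 0.5:
        out = vflip(out)
    for _ in range(rng.randrange(4)):  # rotate by 0, 90, 180 or 270 degrees
        out = rot90(out)
    return out


def dropout(x: List[float], p: float, training: bool, rng: random.Random) -> List[float]:
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(x)  # inference: identity
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]


if __name__ == "__main__":
    rng = random.Random(42)
    seed_img = [[1, 2], [3, 4]]
    print([augment(seed_img, rng) for _ in range(5)])
    print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=True, rng=rng))
```

Because the rescaling happens during training, no change to the weights is needed at inference time, which is the usual reason for preferring the inverted form.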

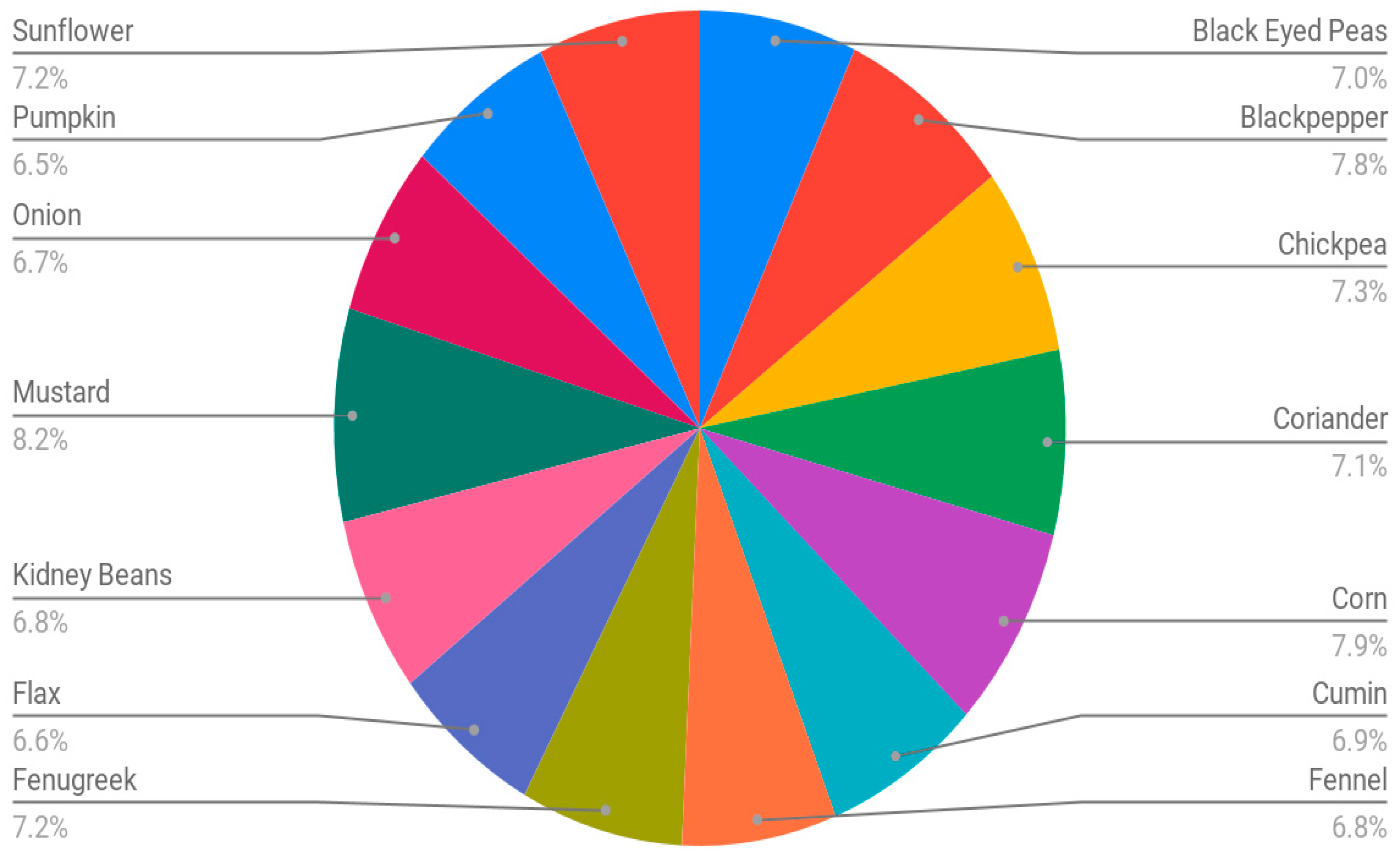

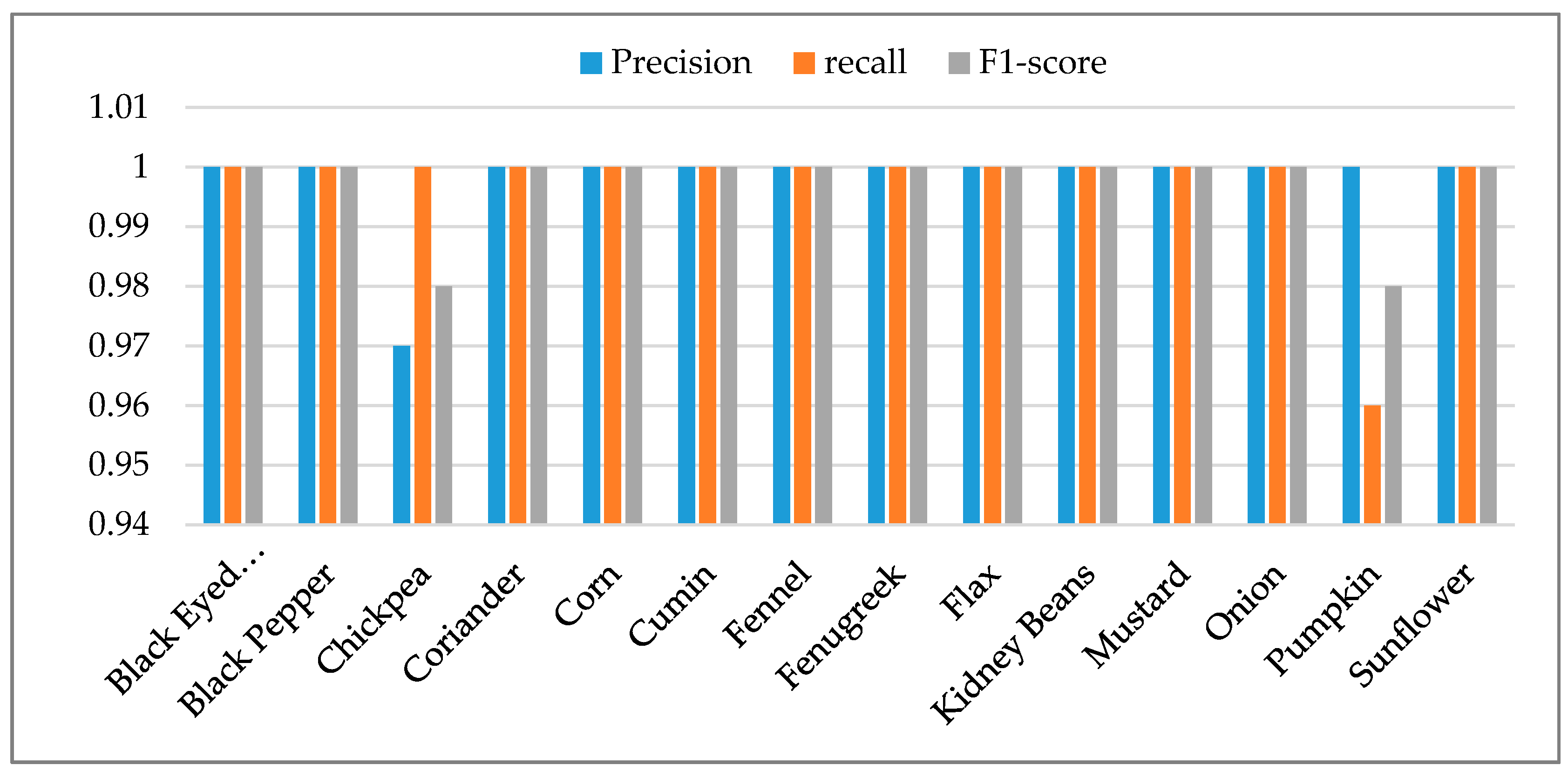

Table 2 outlines the performance of the proposed model for each seed class in terms of precision, recall, F1-score and support. Support is the number of instances of each class used during model training. Note that, with the help of image augmentation, around 1700 to 1800 random instances were synthetically created for each class. The table shows that the model achieved the maximum possible values for every seed class except chickpea and pumpkin seeds. The model confused these two classes because of the similarity of their textures, which may have been caused by the camera’s light settings. The table also shows that the aggregate accuracy of the proposed model is equal to 1, the maximum possible value. We attribute this high performance to the pre-processing techniques and the other hyperparameters. A visual representation of the model’s training performance can be seen in Figure 12.

It is important to test the proposed model on unseen real-world data. For this purpose, a new dataset was prepared in which images were captured randomly under different lighting conditions and from different angles. The trained model was then used to classify the images in this new dataset.

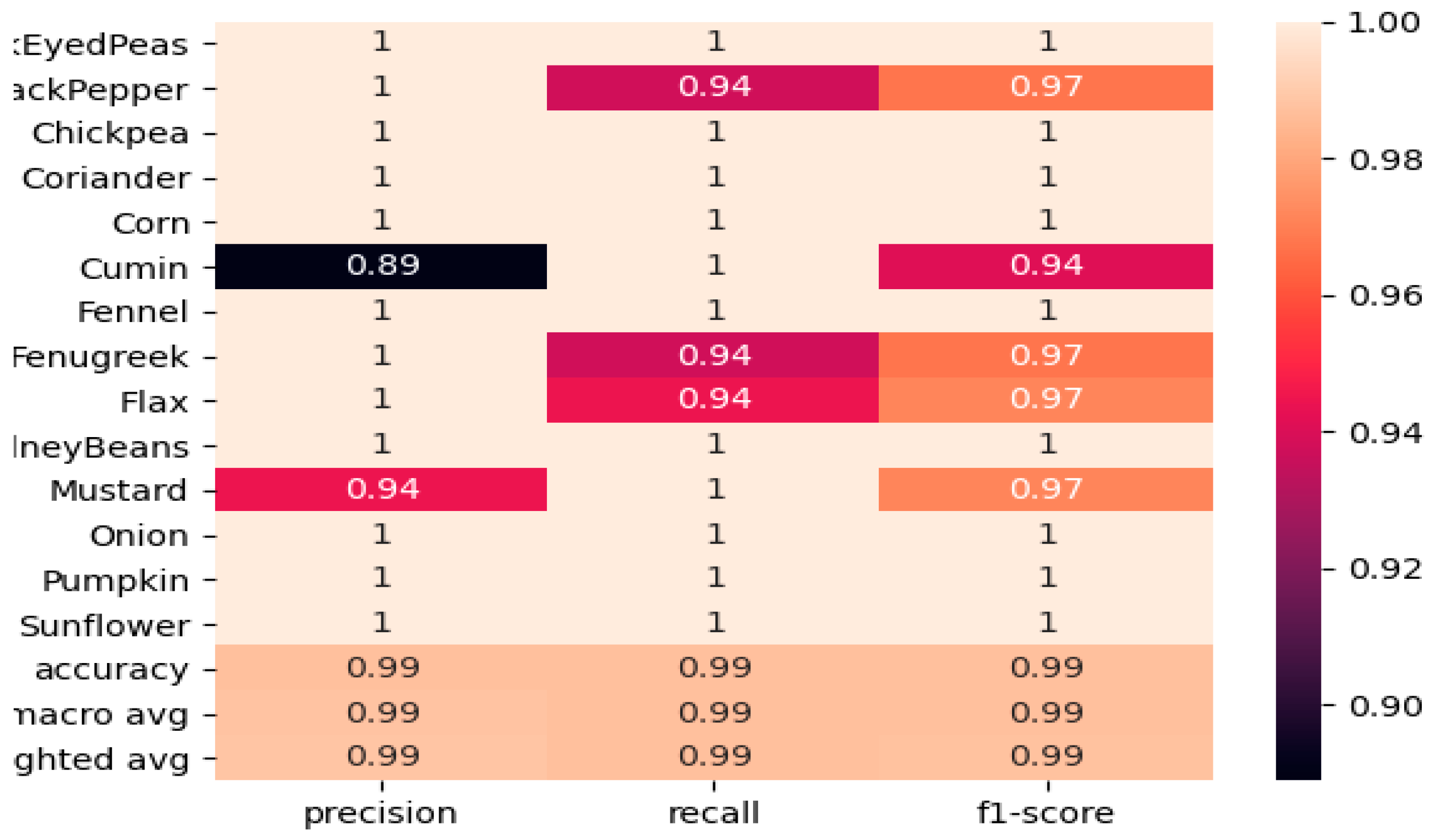

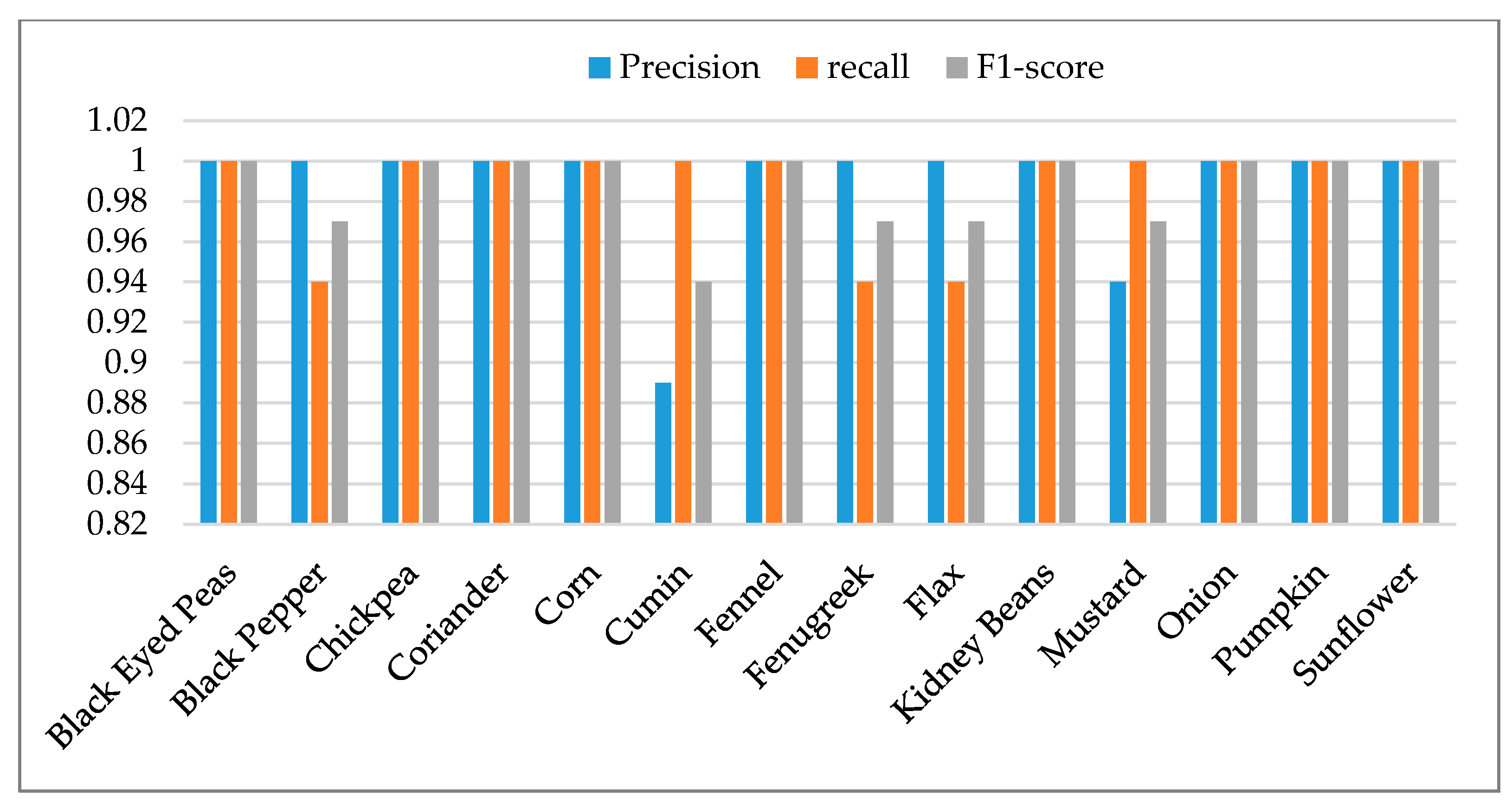

Table 3 presents the test results of the proposed model. The model achieved a precision of 1 for most classes. Cumin seeds had the lowest precision (0.89), because the textures of cumin and fennel seeds are alike. Mustard seeds had the second-lowest precision (0.94), because, when zoomed in, images of mustard seeds show features similar to those of black pepper seeds. Similarly, the model achieved the maximum recall for most classes, except black pepper, fenugreek and flax seeds, each with a recall of 0.94. F1-scores are also reported in the table.

Figure 13 depicts a heat map of the performance of the proposed model on the testing set, showing consistent precision, recall and F1-score for most classes. A visual representation of the model’s testing performance can be seen in Figure 14. The test results of the proposed model are encouraging and suggest that it could be used in industry for sorting seeds in conveyor systems and for packaging.

Since, to the best of our knowledge, this study is the first attempt to investigate seed identification and classification with a large variety of seed types (14 types), we compare our proposed solution with the well-known existing strategies closest to this work, namely kNN, a decision tree classifier, Gaussian Naïve Bayes, a random forest classifier, an AdaBoost classifier and logistic regression.

Table 4 presents the precision, recall and F1-score obtained on the training data by kNN, the decision tree classifier, Gaussian Naïve Bayes, the random forest classifier, the AdaBoost classifier, logistic regression and our proposed model. Our proposed model outperforms all other models, achieving the highest precision, recall and F1-score. In terms of precision, the decision tree classifier performed worst, while the random forest classifier achieved the best precision among the baseline models; kNN, Gaussian Naïve Bayes and AdaBoost were slightly better than the decision tree classifier. Table 4 also shows that our proposed model is superior to the other models considered with regard to recall and F1-score. The AdaBoost classifier performed worst in terms of recall and F1-score, whereas the random forest classifier consistently achieved the second-highest recall and F1-score values, after our proposed model. We attribute the superior precision, recall and F1-score of our approach mainly to the CNN strategy and the addition of five new layers to the VGG16 model, which helped to produce more accurate classifications of seeds from the provided images.
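For context on the baselines in Table 4, the simplest of them, kNN, can be sketched as follows: a test sample receives the majority label among its k nearest training samples under Euclidean distance. The two-dimensional feature vectors below are hypothetical stand-ins for hand-crafted seed measurements; the paper does not state which features the baselines used, and in practice a library implementation such as scikit-learn’s `KNeighborsClassifier` would be used.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]


def knn_predict(train: List[Sample], x: Sequence[float], k: int = 3) -> str:
    """Majority vote among the k training samples closest to x (Euclidean distance).
    On a tied vote, the label first seen among the sorted neighbours (the closer one) wins."""
    if k < 1:
        raise ValueError("k must be at least 1")
    nearest = sorted(train, key=lambda s: math.dist(s[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical 2-D feature vectors, for illustration only.
    train = [((1.0, 1.0), "mustard"), ((1.2, 0.9), "mustard"),
             ((5.0, 5.0), "pumpkin"), ((5.2, 4.8), "pumpkin"), ((4.9, 5.1), "pumpkin")]
    print(knn_predict(train, (1.1, 1.0)))
    print(knn_predict(train, (4.0, 4.0)))
```

Unlike the CNN, such a baseline depends entirely on the chosen input features, which is consistent with the observation above that hand-crafted features can lose information.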