A Modified Whale Optimization Algorithm with Single-Dimensional Swimming for Global Optimization Problems

Abstract

1. Introduction

2. Standard WOA Algorithm

2.1. Encircling Prey (Exploitation Phase)

2.2. Bubble-Net Attacking Method (Exploitation Phase)

2.3. Search for Prey (Exploration Phase)

2.4. The Pseudo Code of WOA

Algorithm 1 WOA

    01 initialize maxIteration, popsize and parameter b
    02 initialize the population and calculate fitness
    03 obtain the optimal agent
    04 WHILE t < maxIteration DO
    06   WHILE i < popsize DO
    07     generate random number p
    08     IF p < 0.5 THEN
    09       IF |A| < 1 THEN
    10         update position of agent i by Equation (2)
    11       ELSE
    12         generate random agent rand
    13         update position of agent i by Equation (9)
    14       ENDIF
    15     ELSE
    16       update position of agent i by Equation (6)
    17     ENDIF
    18     i = i + 1
    19   ENDWHILE
    20   update optimal agent if there is a better solution
    21   t = t + 1
    22 ENDWHILE
    23 RETURN optimal agent
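The control flow of Algorithm 1 can be sketched in Python as follows. Equations (2), (6) and (9) are not reproduced in this text, so the update rules below are assumed to follow the standard WOA formulation of Mirjalili and Lewis: a linearly decreasing parameter `a`, coefficients `A = 2*a*r - a` and `C = 2*r`, and a spiral parameter `l` drawn from [-1, 1]. The function name `woa`, the scalar box bounds, the `seed` argument and the clipping to the bounds are illustrative choices, not part of the source.

```python
import math
import random


def woa(objective, dim, lower, upper, popsize=30, max_iter=200, b=1.0, seed=0):
    """Minimise `objective` over [lower, upper]^dim with a basic WOA loop (sketch)."""
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lower), upper)

    # initialize the population, calculate fitness, obtain the optimal agent
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(popsize)]
    fit = [objective(x) for x in pop]
    best_i = min(range(popsize), key=fit.__getitem__)
    best, best_fit = pop[best_i][:], fit[best_i]

    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter          # assumed: linear decrease from 2 towards 0
        for i in range(popsize):
            A = 2.0 * a * rng.random() - a    # assumed standard coefficient A
            C = 2.0 * rng.random()            # assumed standard coefficient C
            p = rng.random()
            x = pop[i]
            if p < 0.5:
                if abs(A) < 1:
                    # encircling prey: contract around the best agent
                    new = [best[j] - A * abs(C * best[j] - x[j]) for j in range(dim)]
                else:
                    # search for prey: move relative to a random agent
                    r = pop[rng.randrange(popsize)]
                    new = [r[j] - A * abs(C * r[j] - x[j]) for j in range(dim)]
            else:
                # bubble-net attack: logarithmic spiral around the best agent
                l = rng.uniform(-1.0, 1.0)
                spiral = math.exp(b * l) * math.cos(2.0 * math.pi * l)
                new = [abs(best[j] - x[j]) * spiral + best[j] for j in range(dim)]
            pop[i] = [clip(v) for v in new]
        # update optimal agent if there is a better solution
        for x in pop:
            f = objective(x)
            if f < best_fit:
                best, best_fit = x[:], f
    return best, best_fit
```

A call such as `woa(lambda x: sum(v * v for v in x), dim=5, lower=-10.0, upper=10.0)` returns the best position found and its objective value. As in the pseudo code, the optimal agent is refreshed only after every agent has moved.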

3. Whale Optimization Algorithm with Single-Dimensional Swimming (SWWOA)

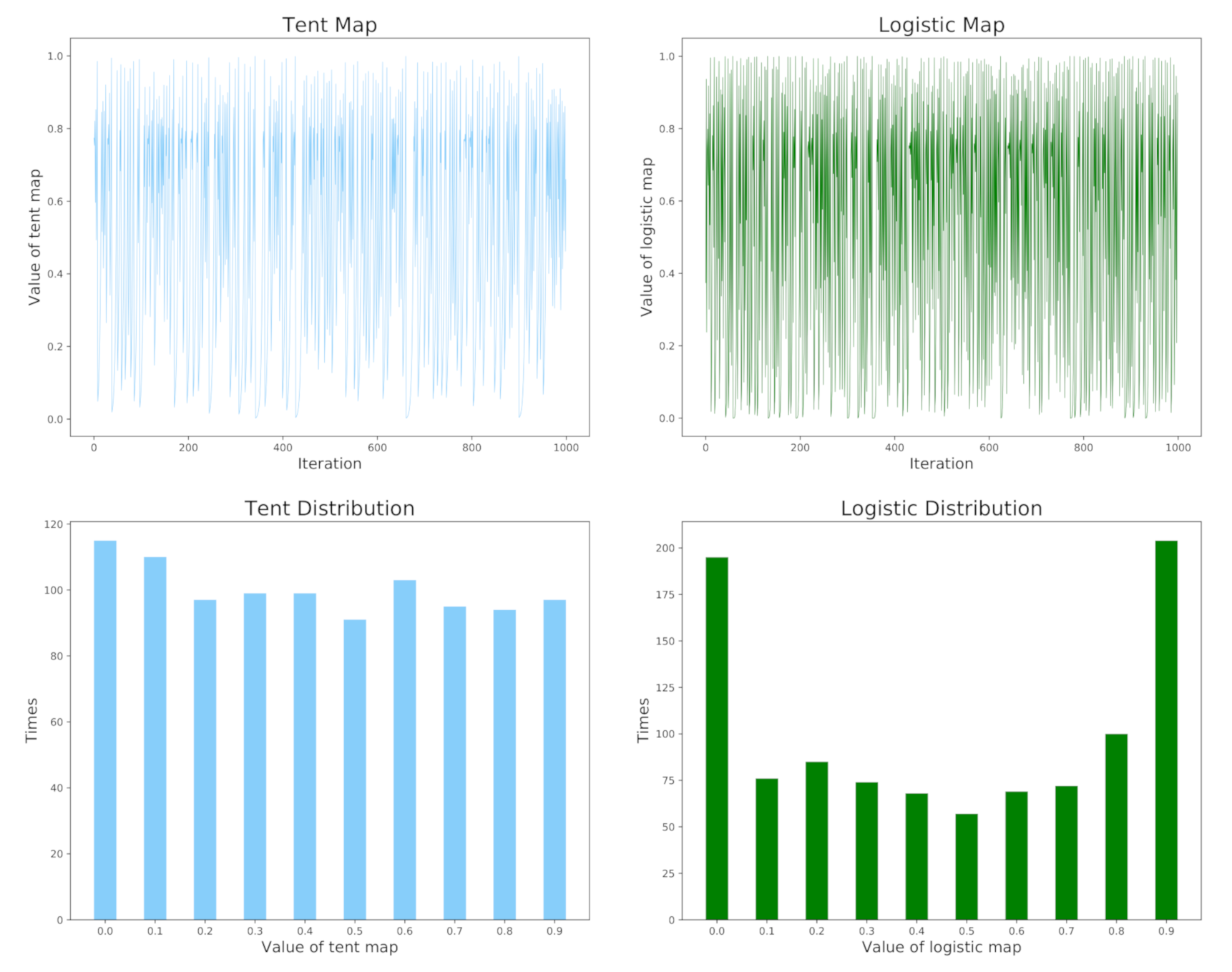

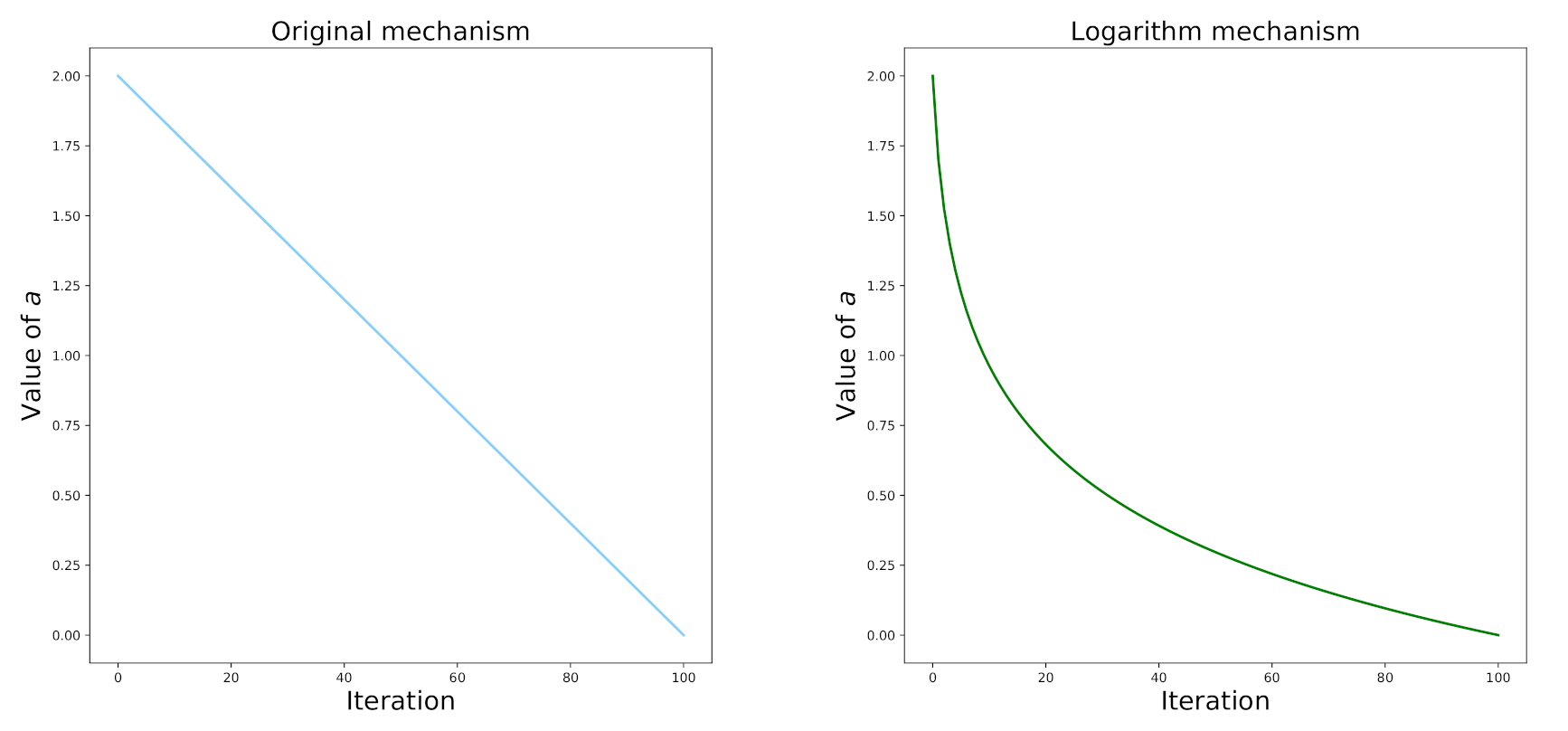

3.1. Chaotic Sequence Based on Tent Map
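As a minimal sketch of chaotic initialization, the snippet below assumes the classic tent map x → μ·min(x, 1 − x) and rescales the resulting sequence onto the search range. The exact map and parameter used by the authors are not given in this text; `tent_sequence`, `tent_init_population`, the default `mu=1.99` and the random starting values are hypothetical choices for illustration. A value of μ slightly below 2 is used because iterating the map with μ = 2 in binary floating point can collapse to 0 after a few dozen steps.

```python
import random


def tent_sequence(length, x0=0.37, mu=1.99):
    """Return `length` iterates of the tent map x -> mu * min(x, 1 - x)."""
    seq, x = [], x0
    for _ in range(length):
        x = mu * min(x, 1.0 - x)
        seq.append(x)
    return seq


def tent_init_population(popsize, dim, lower, upper, seed=0):
    """Build one agent per tent sequence, rescaled from [0, 1] to [lower, upper]."""
    rng = random.Random(seed)
    pop = []
    for _ in range(popsize):
        # assumed: each agent starts its chaotic sequence from a random value
        chaos = tent_sequence(dim, x0=rng.uniform(0.01, 0.99))
        pop.append([lower + c * (upper - lower) for c in chaos])
    return pop
```

For example, `tent_init_population(30, 20, -100.0, 100.0)` yields 30 agents of dimension 20 inside the box.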

3.2. Quasi-Opposition Learning
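The idea can be illustrated with the usual definition from quasi-oppositional differential evolution (Rahnamayan et al.): for a coordinate x in [lb, ub], the opposite point is lb + ub − x, and the quasi-opposite point is drawn uniformly between the interval centre (lb + ub)/2 and that opposite point. The sketch below assumes this definition matches the one used here; the function names and the greedy selection in `quasi_opposition_step` are illustrative assumptions.

```python
import random


def quasi_opposite(x, lower, upper, rng=random):
    """Return a quasi-opposite point of `x` for the box [lower, upper] in every dimension."""
    center = (lower + upper) / 2.0
    point = []
    for xj in x:
        opposite = lower + upper - xj
        lo, hi = min(center, opposite), max(center, opposite)
        point.append(rng.uniform(lo, hi))   # uniform between centre and opposite point
    return point


def quasi_opposition_step(x, objective, lower, upper, rng=random):
    """Keep whichever of `x` and its quasi-opposite point has the lower objective value."""
    candidate = quasi_opposite(x, lower, upper, rng)
    return candidate if objective(candidate) < objective(x) else x
```

Because the candidate lies between the centre and the mirrored position, it samples the half of the interval on the other side of the centre from `x` without jumping all the way to the mirror image.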

3.3. Logarithm-Based Nonlinear Control Parameter

3.4. Single-Dimensional Swimming

3.5. The Pseudo Code of SWWOA

Algorithm 2 SWWOA

    01 initialize maxIteration, popsize and parameter b
    03 obtain the optimal agent
    04 WHILE t < maxIteration DO
    05   update a by Equation (14)
    07   WHILE i < popsize DO
    08     quasi-opposition learning on agent i by Equation (13)
    09     generate random number p
    10     IF p < 0.5 THEN
    11       IF |A| < 1 THEN
    12         generate random dimension d
    14       ELSE
    15         generate random agent rand
    16         update position of agent i by Equation (9)
    17       ENDIF
    18     ELSE
    19       update position of agent i by Equation (6)
    20     ENDIF
    21     compare agent i before and after the update, retaining the better agent
    22     i = i + 1
    23   ENDWHILE
    24   update optimal agent if there is a better solution
    25   t = t + 1
    26 ENDWHILE
    27 RETURN optimal agent
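The single-dimension branch of Algorithm 2 (pick a random dimension d, then keep the better of the old and new agent) might look like the sketch below. The update applied to dimension d is not recoverable from this text, so the code assumes the standard WOA encircling rule restricted to that one coordinate. `single_dimension_swim` and its parameters are hypothetical names, and the bound clipping is an added assumption.

```python
import random


def single_dimension_swim(x, best, objective, a, lower, upper, rng=random):
    """Move one randomly chosen coordinate of `x` relative to `best`; keep the better agent."""
    d = rng.randrange(len(x))                 # generate random dimension d
    A = 2.0 * a * rng.random() - a            # assumed standard WOA coefficient A
    C = 2.0 * rng.random()                    # assumed standard WOA coefficient C
    trial = list(x)
    # assumed: encircling update applied to dimension d only
    moved = best[d] - A * abs(C * best[d] - x[d])
    trial[d] = min(max(moved, lower), upper)
    # greedy retention: keep the trial only if it improves the objective
    return trial if objective(trial) < objective(x) else list(x)
```

Changing one coordinate at a time means an improvement in that coordinate cannot be masked by simultaneous degradation in the others, and the greedy comparison guarantees the agent never gets worse.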

4. Experimental Results and Analysis

4.1. Test Functions

4.2. Numerical Analysis

4.2.1. Test on Shifted Rotated Functions

4.3. Wilcoxon's Rank Sum Test Analysis
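For readers who want to reproduce this kind of comparison, here is a minimal sketch of a two-sided rank sum test on two sets of per-run results. It assumes the normal approximation with average ranks and a tie correction; the text does not state which variant or software the authors used, and `rank_sum_test` is a hypothetical helper name.

```python
import math


def rank_sum_test(sample_a, sample_b):
    """Two-sided Wilcoxon rank sum test (normal approximation, tie-corrected).

    Returns (W, p), where W is the rank sum of `sample_a`.
    """
    n1, n2 = len(sample_a), len(sample_b)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0        # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0.0:
        return w, 1.0                         # every value identical: no evidence of a difference
    z = (w - mean) / math.sqrt(var)
    return w, math.erfc(abs(z) / math.sqrt(2.0))
```

Under this sketch, two algorithms that return exactly the same value in every run (for instance 0.00 throughout) give p = 1, which is one plausible reading of the entries equal to 1 in the p-value table.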

4.4. Convergence Speed Comparison

4.5. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm | Parameter Settings |

|---|---|

| ABC | , , |

| PSO | , , , |

| , , | |

| WOA | , , , |

| OBCWOA | , , , , |

| SWWOA | , , , |

| Name | Equation | Range | Type |
|---|---|---|---|
| Sphere | | | US |
| Sum Squares | | | US |
| Schwefel 2.21 | | | US |
| Powell Sum | | | US |
| Quartic | | | US |
| Step | | | US |
| Zakharov | | | UN |
| Rosenbrock | | | UN |
| Schwefel 1.2 | | | UN |
| Schwefel 2.22 | | | UN |
| Discus | | | UN |
| Cigar | | | UN |
| Alpine | | | MS |
| Rastrigin | | | MS |
| Bohachevsky | | | MS |
| Griewank | | | MN |
| Weierstrass | | | MN |
| Ackley | | | MN |
| Schaffer | | | MN |
| Salomon | | | MN |

| Function | ABC | PSO | WOA | OBCWOA | SWWOA | |

|---|---|---|---|---|---|---|

| best | 8.38 | 1.29 | 1.86 | 0.00 | 0.00 | |

| avg | 8.38 | 1.29 | 1.15 | 0.00 | 0.00 | |

| std | 4.74 | 1.46 | 2.19 | 0.00 | 0.00 | |

| best | 1.32 | 2.30 | 4.19 | 0.00 | 0.00 | |

| avg | 1.46 | 9.61 | 1.81 | 0.00 | 0.00 | |

| std | 4.80 | 3.80 | 0.00 | 0.00 | 0.00 | |

| best | 7.24 | 4.18 | 1.34 | 1.96 | 0.00 | |

| avg | 7.24 | 4.18 | 1.58 | 2.87 | 0.00 | |

| std | 0.00 | 0.00 | 3.00 | 0.00 | 0.00 | |

| best | 1.10 | 4.33 | 1.49 | 0.00 | 0.00 | |

| avg | 1.10 | 4.33 | 1.81 | 0.00 | 0.00 | |

| std | 1.52 | 7.80 | 0.00 | 0.00 | 0.00 | |

| best | 1.05 | 1.22 | 7.63 | 0.00 | 0.00 | |

| avg | 5.76 | 1.22 | 5.52 | 0.00 | 0.00 | |

| std | 4.46 | 2.25 | 0.00 | 0.00 | 0.00 | |

| best | 1.97 | 4.20 | 0.00 | 0.00 | 0.00 | |

| avg | 1.97 | 4.20 | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | |

| best | 3.07 | 1.06 | 4.91 | 3.38 | 6.94 | |

| avg | 3.07 | 1.06 | 6.87 | 3.15 | 2.48 | |

| std | 5.08 | 1.85 | 3.03 | 1.31 | 2.68 | |

| best | 3.79 | 1.14 | 3.37 | 1.44 | 1.17 | |

| avg | 3.79 | 1.14 | 3.59 | 7.16 | 1.31 | |

| std | 5.08 | 0.00 | 2.31 | 3.84 | 5.46 | |

| best | 1.45 | 4.83 | 2.23 | 0.00 | 0.00 | |

| avg | 1.47 | 4.83 | 8.77 | 0.00 | 0.00 | |

| std | 1.02 | 0.00 | 1.53 | 0.00 | 0.00 | |

| best | 1.54 | 2.52 | 3.54 | 2.10 | 0.00 | |

| avg | 1.54 | 2.52 | 1.68 | 3.03 | 0.00 | |

| std | 3.10 | 0.00 | 3.21 | 0.00 | 0.00 | |

| best | 1.12 | 2.36 | 1.70 | 0.00 | 0.00 | |

| avg | 1.12 | 2.36 | 2.04 | 0.00 | 0.00 | |

| std | 1.55 | 3.83 | 0.00 | 0.00 | 0.00 | |

| best | 3.12 | 1.24 | 3.60 | 0.00 | 0.00 | |

| avg | 3.12 | 1.16 | 3.12 | 0.00 | 0.00 | |

| std | 1.67 | 1.73 | 0.00 | 0.00 | 0.00 | |

| best | 2.64 | 4.26 | 3.48 | 6.94 | 0.00 | |

| avg | 2.75 | 4.26 | 3.80 | 1.47 | 0.00 | |

| std | 3.19 | 7.40 | 7.35 | 0.00 | 0.00 | |

| best | 1.60 | 2.49 | 0.00 | 0.00 | 0.00 | |

| avg | 2.81 | 2.49 | 0.00 | 0.00 | 0.00 | |

| std | 3.12 | 3.18 | 0.00 | 0.00 | 0.00 | |

| best | 3.85 | 0.00 | 0.00 | 0.00 | 0.00 | |

| avg | 1.99 | 1.39 | 0.00 | 0.00 | 0.00 | |

| std | 2.01 | 1.43 | 0.00 | 0.00 | 0.00 | |

| best | 3.13 | 3.33 | 0.00 | 0.00 | 0.00 | |

| avg | 1.23 | 3.33 | 0.00 | 0.00 | 0.00 | |

| std | 3.71 | 0.00 | 0.00 | 0.00 | 0.00 | |

| best | 2.02 | 5.34 | 0.00 | 0.00 | 0.00 | |

| avg | 7.10 | 1.23 | 0.00 | 0.00 | 0.00 | |

| std | 4.93 | 5.42 | 0.00 | 0.00 | 0.00 | |

| best | 6.85 | 4.31 | 4.44 | 4.44 | 4.44 | |

| avg | 2.02 | 4.31 | 2.40 | 4.44 | 4.44 | |

| std | 9.08 | 0.00 | 7.90 | 0.00 | 0.00 | |

| best | 4.99 | 4.15 | 0.00 | 0.00 | 0.00 | |

| avg | 4.99 | 4.28 | 8.26 | 4.86 | 0.00 | |

| std | 4.97 | 9.25 | 1.55 | 9.47 | 0.00 | |

| best | 1.21 | 4.50 | 4.25 | 0.00 | 0.00 | |

| avg | 1.21 | 4.50 | 8.49 | 0.00 | 0.00 | |

| std | 1.59 | 3.97 | 1.59 | 0.00 | 0.00 |

| Function | ABC | PSO | WOA | OBCWOA | SWWOA | |

|---|---|---|---|---|---|---|

| best | 4.13 | 7.69 | 3.61 | 0.00 | 0.00 | |

| avg | 7.82 | 8.34 | 2.15 | 0.00 | 0.00 | |

| std | 4.96 | 2.88 | 0.00 | 0.00 | 0.00 | |

| best | 3.16 | 8.59 | 2.13 | 0.00 | 0.00 | |

| avg | 3.16 | 8.76 | 1.02 | 0.00 | 0.00 | |

| std | 4.07 | 7.57 | 1.47 | 0.00 | 0.00 | |

| best | 9.45 | 5.55 | 3.63 | 4.78 | 0.00 | |

| avg | 9.46 | 5.80 | 9.67 | 4.43 | 0.00 | |

| std | 1.17 | 1.23 | 1.88 | 0.00 | 0.00 | |

| best | 1.00 | 1.55 | 3.57 | 0.00 | 0.00 | |

| avg | 1.23 | 9.91 | 4.61 | 0.00 | 0.00 | |

| std | 5.45 | 7.22 | 0.00 | 0.00 | 0.00 | |

| best | 1.20 | 7.38 | 8.34 | 0.00 | 0.00 | |

| avg | 3.30 | 1.73 | 4.91 | 0.00 | 0.00 | |

| std | 1.57 | 3.24 | 0.00 | 0.00 | 0.00 | |

| best | 2.01 | 2.90 | 0.00 | 0.00 | 0.00 | |

| avg | 2.01 | 3.05 | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 6.23 | 0.00 | 0.00 | 0.00 | |

| best | 1.48 | 1.64 | 6.41 | 3.61 | 1.15 | |

| avg | 1.39 | 2.11 | 1.56 | 2.07 | 3.08 | |

| std | 2.08 | 2.35 | 1.27 | 4.08 | 3.56 | |

| best | 8.24 | 1.00 | 5.59 | 3.17 | 9.50 | |

| avg | 8.24 | 1.78 | 1.71 | 5.70 | 9.75 | |

| std | 0.00 | 2.57 | 1.31 | 2.05 | 5.28 | |

| best | 2.79 | 1.63 | 7.36 | 0.00 | 0.00 | |

| avg | 3.18 | 2.05 | 1.83 | 0.00 | 0.00 | |

| std | 2.37 | 1.53 | 8.87 | 0.00 | 0.00 | |

| best | 3.55 | 1.21 | 2.42 | 3.33 | 0.00 | |

| avg | 4.21 | 1.50 | 5.95 | 1.07 | 0.00 | |

| std | 1.22 | 1.17 | 1.16 | 0.00 | 0.00 | |

| best | 5.09 | 1.00 | 3.20 | 0.00 | 0.00 | |

| avg | 5.09 | 1.60 | 7.45 | 0.00 | 0.00 | |

| std | 6.21 | 2.19 | 0.00 | 0.00 | 0.00 | |

| best | 1.00 | 1.07 | 1.75 | 0.00 | 0.00 | |

| avg | 1.84 | 1.17 | 1.78 | 0.00 | 0.00 | |

| std | 1.26 | 3.28 | 0.00 | 0.00 | 0.00 | |

| best | 2.63 | 7.77 | 1.16 | 2.16 | 0.00 | |

| avg | 3.56 | 2.08 | 2.25 | 3.78 | 0.00 | |

| std | 2.78 | 4.83 | 2.49 | 0.00 | 0.00 | |

| best | 3.36 | 1.02 | 0.00 | 0.00 | 0.00 | |

| avg | 4.44 | 1.21 | 0.00 | 0.00 | 0.00 | |

| std | 2.78 | 6.84 | 0.00 | 0.00 | 0.00 | |

| best | 1.66 | 2.68 | 0.00 | 0.00 | 0.00 | |

| avg | 4.07 | 3.26 | 0.00 | 0.00 | 0.00 | |

| std | 1.11 | 1.84 | 0.00 | 0.00 | 0.00 | |

| best | 1.05 | 4.12 | 0.00 | 0.00 | 0.00 | |

| avg | 1.80 | 5.91 | 0.00 | 0.00 | 0.00 | |

| std | 4.11 | 2.53 | 0.00 | 0.00 | 0.00 | |

| best | 8.03 | 7.44 | 0.00 | 0.00 | 0.00 | |

| avg | 2.27 | 9.07 | 0.00 | 0.00 | 0.00 | |

| std | 4.53 | 4.14 | 0.00 | 0.00 | 0.00 | |

| best | 7.75 | 3.46 | 4.44 | 4.44 | 4.44 | |

| avg | 9.89 | 5.07 | 1.69 | 4.44 | 4.44 | |

| std | 6.97 | 7.40 | 7.58 | 0.00 | 0.00 | |

| best | 5.00 | 4.96 | 0.00 | 0.00 | 0.00 | |

| avg | 5.00 | 4.96 | 9.15 | 4.37 | 0.00 | |

| std | 3.93 | 1.80 | 3.36 | 2.16 | 0.00 | |

| best | 4.87 | 7.50 | 4.90 | 0.00 | 0.00 | |

| avg | 4.87 | 8.38 | 5.49 | 2.00 | 0.00 | |

| std | 0.00 | 4.82 | 2.22 | 1.79 | 0.00 |

| Function | ABC | PSO | WOA | OBCWOA | SWWOA | |

|---|---|---|---|---|---|---|

| best | 4.69 | 5.31 | 1.62 | 0.00 | 0.00 | |

| avg | 1.41 | 1.08 | 1.81 | 0.00 | 0.00 | |

| std | 1.06 | 1.68 | 3.45 | 0.00 | 0.00 | |

| best | 2.02 | 1.36 | 5.00 | 0.00 | 0.00 | |

| avg | 3.82 | 1.47 | 1.83 | 0.00 | 0.00 | |

| std | 7.26 | 5.92 | 0.00 | 0.00 | 0.00 | |

| best | 9.80 | 8.66 | 8.53 | 1.09 | 0.00 | |

| avg | 9.83 | 9.61 | 1.52 | 6.38 | 0.00 | |

| std | 1.22 | 4.03 | 2.93 | 0.00 | 0.00 | |

| best | 2.52 | 4.44 | 8.13 | 0.00 | 0.00 | |

| avg | 1.11 | 6.11 | 2.03 | 0.00 | 0.00 | |

| std | 1.43 | 4.36 | 0.00 | 0.00 | 0.00 | |

| best | 3.45 | 2.44 | 2.17 | 0.00 | 0.00 | |

| avg | 3.35 | 7.11 | 1.58 | 0.00 | 0.00 | |

| std | 1.21 | 2.71 | 0.00 | 0.00 | 0.00 | |

| best | 1.76 | 1.38 | 0.00 | 0.00 | 0.00 | |

| avg | 2.16 | 3.21 | 0.00 | 0.00 | 0.00 | |

| std | 1.98 | 6.05 | 0.00 | 0.00 | 0.00 | |

| best | 1.00 | 6.13 | 2.23 | 8.80 | 4.50 | |

| avg | 1.04 | 6.92 | 3.37 | 4.27 | 1.46 | |

| std | 3.24 | 3.15 | 2.63 | 6.43 | 2.82 | |

| best | 2.17 | 1.20 | 8.76 | 2.52 | 1.96 | |

| avg | 2.19 | 1.34 | 1.23 | 1.30 | 1.98 | |

| std | 2.38 | 6.42 | 1.90 | 3.72 | 2.71 | |

| best | 1.52 | 1.43 | 1.55 | 0.00 | 0.00 | |

| avg | 1.52 | 2.08 | 1.59 | 0.00 | 0.00 | |

| std | 2.08 | 3.22 | 6.54 | 0.00 | 0.00 | |

| best | 9.62 | 1.49 | 8.18 | 5.75 | 0.00 | |

| avg | 1.38 | 1.68 | 3.49 | 1.28 | 0.00 | |

| std | 5.11 | 8.13 | 6.80 | 0.00 | 0.00 | |

| best | 9.11 | 3.01 | 4.75 | 0.00 | 0.00 | |

| avg | 1.04 | 4.46 | 3.93 | 0.00 | 0.00 | |

| std | 6.48 | 1.14 | 0.00 | 0.00 | 0.00 | |

| best | 1.00 | 2.42 | 2.19 | 0.00 | 0.00 | |

| avg | 1.64 | 8.05 | 3.09 | 0.00 | 0.00 | |

| std | 1.27 | 2.76 | 0.00 | 0.00 | 0.00 | |

| best | 5.59 | 2.33 | 1.24 | 6.32 | 0.00 | |

| avg | 9.31 | 3.09 | 5.09 | 6.65 | 0.00 | |

| std | 9.07 | 2.96 | 9.16 | 0.00 | 0.00 | |

| best | 7.21 | 2.43 | 0.00 | 0.00 | 0.00 | |

| avg | 1.06 | 2.75 | 0.00 | 0.00 | 0.00 | |

| std | 6.07 | 1.24 | 0.00 | 0.00 | 0.00 | |

| best | 2.45 | 1.01 | 0.00 | 0.00 | 0.00 | |

| avg | 3.57 | 1.21 | 0.00 | 0.00 | 0.00 | |

| std | 1.34 | 4.76 | 0.00 | 0.00 | 0.00 | |

| best | 2.00 | 3.33 | 0.00 | 0.00 | 0.00 | |

| avg | 2.08 | 4.37 | 0.00 | 0.00 | 0.00 | |

| std | 1.55 | 3.78 | 0.00 | 0.00 | 0.00 | |

| best | 8.81 | 2.41 | 0.00 | 0.00 | 0.00 | |

| avg | 1.46 | 2.56 | 0.00 | 0.00 | 0.00 | |

| std | 1.38 | 4.11 | 0.00 | 0.00 | 0.00 | |

| best | 1.23 | 5.35 | 4.44 | 4.44 | 4.44 | |

| avg | 1.45 | 6.17 | 1.51 | 4.44 | 4.44 | |

| std | 7.17 | 3.27 | 7.28 | 0.00 | 0.00 | |

| best | 5.00 | 4.99 | 0.00 | 0.00 | 0.00 | |

| avg | 5.00 | 4.99 | 7.77 | 6.32 | 0.00 | |

| std | 2.27 | 4.18 | 1.74 | 2.07 | 0.00 | |

| best | 7.58 | 1.29 | 1.41 | 0.00 | 0.00 | |

| avg | 7.68 | 1.32 | 6.49 | 4.99 | 0.00 | |

| std | 4.44 | 1.34 | 2.13 | 9.73 | 0.00 |

| Function | ABC | PSO | WOA | OBCWOA | SWWOA | |

|---|---|---|---|---|---|---|

| best | 1.33 | 1.56 | 9.48 | 0.00 | 0.00 | |

| avg | 9.21 | 2.00 | 2.22 | 0.00 | 0.00 | |

| std | 2.59 | 1.69 | 4.31 | 0.00 | 0.00 | |

| best | 7.75 | 7.47 | 1.19 | 0.00 | 0.00 | |

| avg | 7.63 | 9.79 | 1.11 | 0.00 | 0.00 | |

| std | 2.36 | 4.56 | 2.06 | 0.00 | 0.00 | |

| best | 9.90 | 1.13 | 1.06 | 9.88 | 0.00 | |

| avg | 9.92 | 1.28 | 1.92 | 7.55 | 0.00 | |

| std | 7.70 | 3.99 | 2.91 | 0.00 | 0.00 | |

| best | 1.99 | 6.44 | 6.58 | 0.00 | 0.00 | |

| avg | 2.72 | 1.38 | 2.00 | 0.00 | 0.00 | |

| std | 1.68 | 2.43 | 0.00 | 0.00 | 0.00 | |

| best | 4.63 | 4.76 | 2.83 | 0.00 | 0.00 | |

| avg | 1.71 | 1.01 | 6.00 | 0.00 | 0.00 | |

| std | 7.30 | 1.92 | 0.00 | 0.00 | 0.00 | |

| best | 1.22 | 1.06 | 0.00 | 0.00 | 0.00 | |

| avg | 1.34 | 1.43 | 0.00 | 0.00 | 0.00 | |

| std | 5.16 | 1.14 | 0.00 | 0.00 | 0.00 | |

| best | 1.00 | 1.98 | 7.15 | 2.55 | 4.90 | |

| avg | 5.55 | 9.49 | 8.17 | 9.20 | 1.07 | |

| std | 1.29 | 1.27 | 4.39 | 1.57 | 1.64 | |

| best | 1.13 | 1.81 | 1.33 | 3.36 | 4.98 | |

| avg | 1.64 | 2.43 | 5.96 | 2.79 | 4.98 | |

| std | 3.09 | 2.06 | 2.59 | 9.76 | 4.21 | |

| best | 6.72 | 1.25 | 1.07 | 0.00 | 0.00 | |

| avg | 7.41 | 2.03 | 1.12 | 0.00 | 0.00 | |

| std | 2.54 | 1.71 | 3.24 | 0.00 | 0.00 | |

| best | 3.55 | 1.18 | 3.17 | 6.23 | 0.00 | |

| avg | 7.67 | 1.31 | 2.87 | 4.90 | 0.00 | |

| std | 6.86 | 4.09 | 5.57 | 0.00 | 0.00 | |

| best | 3.98 | 6.66 | 2.48 | 0.00 | 0.00 | |

| avg | 6.83 | 1.39 | 4.53 | 0.00 | 0.00 | |

| std | 1.28 | 2.42 | 0.00 | 0.00 | 0.00 | |

| best | 1.00 | 4.45 | 1.78 | 0.00 | 0.00 | |

| avg | 3.03 | 8.45 | 9.28 | 0.00 | 0.00 | |

| std | 1.71 | 1.11 | 0.00 | 0.00 | 0.00 | |

| best | 2.35 | 4.33 | 5.30 | 1.88 | 0.00 | |

| avg | 4.07 | 5.50 | 4.57 | 4.43 | 0.00 | |

| std | 4.95 | 2.99 | 8.91 | 0.00 | 0.00 | |

| best | 2.51 | 1.34 | 0.00 | 0.00 | 0.00 | |

| avg | 3.60 | 1.56 | 0.00 | 0.00 | 0.00 | |

| std | 2.83 | 4.47 | 0.00 | 0.00 | 0.00 | |

| best | 2.19 | 7.42 | 0.00 | 0.00 | 0.00 | |

| avg | 1.47 | 8.53 | 0.00 | 0.00 | 0.00 | |

| std | 8.77 | 3.16 | 0.00 | 0.00 | 0.00 | |

| best | 4.25 | 3.27 | 0.00 | 0.00 | 0.00 | |

| avg | 3.12 | 3.87 | 0.00 | 0.00 | 0.00 | |

| std | 1.56 | 1.77 | 0.00 | 0.00 | 0.00 | |

| best | 2.55 | 7.62 | 0.00 | 0.00 | 0.00 | |

| avg | 4.68 | 7.95 | 0.00 | 0.00 | 0.00 | |

| std | 9.75 | 6.32 | 0.00 | 0.00 | 0.00 | |

| best | 1.62 | 6.82 | 4.44 | 4.44 | 4.44 | |

| avg | 1.70 | 7.55 | 1.69 | 4.44 | 4.44 | |

| std | 2.73 | 2.23 | 7.58 | 0.00 | 0.00 | |

| best | 5.00 | 5.00 | 0.00 | 0.00 | 0.00 | |

| avg | 5.00 | 5.00 | 6.80 | 4.37 | 0.00 | |

| std | 5.05 | 8.62 | 1.99 | 2.16 | 0.00 | |

| best | 1.23 | 1.77 | 3.22 | 0.00 | 0.00 | |

| avg | 1.24 | 1.93 | 6.49 | 3.50 | 0.00 | |

| std | 2.01 | 3.14 | 2.13 | 2.13 | 0.00 |

| Function | WOA | OBCWOA | SWWOA | |

|---|---|---|---|---|

| best | 1.40 | 0.00 | 0.00 | |

| avg | 1.07 | 0.00 | 0.00 | |

| std | 2.09 | 0.00 | 0.00 | |

| best | 1.18 | 0.00 | 0.00 | |

| avg | 3.41 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 1.41 | 7.14 | 0.00 | |

| avg | 9.83 | 4.49 | 0.00 | |

| std | 1.50 | 0.00 | 0.00 | |

| best | 6.62 | 0.00 | 0.00 | |

| avg | 2.29 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 5.30 | 0.00 | 0.00 | |

| avg | 2.08 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 1.11 | 4.84 | 9.94 | |

| avg | 1.59 | 1.84 | 1.31 | |

| std | 8.17 | 2.66 | 6.18 | |

| best | 3.18 | 1.50 | 9.98 | |

| avg | 8.18 | 6.60 | 9.98 | |

| std | 9.74 | 1.87 | 4.41 | |

| best | 4.92 | 0.00 | 0.00 | |

| avg | 7.18 | 0.00 | 0.00 | |

| std | 2.33 | 0.00 | 0.00 | |

| best | 7.51 | 3.76 | 0.00 | |

| avg | 3.61 | 5.96 | 0.00 | |

| std | 5.34 | 0.00 | 0.00 | |

| best | 1.41 | 0.00 | 0.00 | |

| avg | 1.41 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 1.43 | 0.00 | 0.00 | |

| avg | 2.95 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 2.10 | 1.19 | 0.00 | |

| avg | 4.13 | 1.54 | 0.00 | |

| std | 5.08 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 0.00 | 0.00 | 0.00 | |

| std | 0.00 | 0.00 | 0.00 | |

| best | 4.44 | 4.44 | 4.44 | |

| avg | 2.04 | 4.44 | 4.44 | |

| std | 7.90 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | |

| avg | 7.29 | 6.80 | 0.00 | |

| std | 1.88 | 1.99 | 0.00 | |

| best | 3.03 | 0.00 | 0.00 | |

| avg | 6.99 | 3.00 | 0.00 | |

| std | 2.05 | 2.05 | 0.00 |

| Algorithm | n = 20 | n = 100 | n = 200 | n = 500 | n = 1000 | |

|---|---|---|---|---|---|---|

| WOA | 1.58 | 9.67 | 1.52 | 1.92 | 9.83 | |

| OBCWOA | 2.87 | 4.43 | 6.38 | 7.55 | 4.49 | |

| SWWOA | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | |

| WOA | 6.87 | 1.56 | 3.37 | 8.17 | 1.59 | |

| OBCWOA | 3.15 | 2.07 | 4.27 | 9.20 | 1.84 | |

| SWWOA | 2.48 | 3.08 | 1.46 | 1.07 | 1.31 | |

| WOA | 3.80 | 2.25 | 5.09 | 4.57 | 4.13 | |

| OBCWOA | 1.47 | 3.78 | 6.65 | 4.43 | 1.54 | |

| SWWOA | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | |

| WOA | 8.26 | 9.15 | 7.77 | 6.80 | 7.29 | |

| OBCWOA | 4.86 | 4.37 | 6.32 | 4.37 | 6.80 | |

| SWWOA | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| Funcs | n | ABC | PSO | WOA | OBCWOA | SWWOA |
|---|---|---|---|---|---|---|
| – (US) | 20 | 0 | 0 | 1 | 5 | 6 |
| | 100 | 0 | 0 | 1 | 5 | 6 |
| | 200 | 0 | 0 | 1 | 5 | 6 |
| | 500 | 0 | 0 | 1 | 5 | 6 |
| | 1000 | – | – | 1 | 5 | 6 |
| – (UN) | 20 | 0 | 0 | 1 | 3 | 5 |
| | 100 | 0 | 0 | 1 | 3 | 5 |
| | 200 | 0 | 0 | 1 | 3 | 5 |
| | 500 | 0 | 0 | 1 | 3 | 5 |
| | 1000 | – | – | 1 | 3 | 5 |
| – (MS) | 20 | 0 | 0 | 2 | 2 | 3 |
| | 100 | 0 | 0 | 2 | 2 | 3 |
| | 200 | 0 | 0 | 2 | 2 | 3 |
| | 500 | 0 | 0 | 2 | 2 | 3 |
| | 1000 | – | – | 2 | 2 | 3 |
| – (MN) | 20 | 0 | 0 | 2 | 4 | 5 |
| | 100 | 0 | 0 | 2 | 3 | 5 |
| | 200 | 0 | 0 | 2 | 3 | 5 |
| | 500 | 0 | 0 | 2 | 3 | 5 |
| | 1000 | – | – | 2 | 3 | 5 |

| Function | | ABC | PSO | WOA | OBCWOA | SWWOA |
|---|---|---|---|---|---|---|
| Shifted Rotated | best | 6.27 | 1.04 | 5.99 | 3.64 | 1.01 |
| | avg | 1.49 | 2.16 | 2.21 | 1.52 | 5.20 |
| | std | 1.92 | 1.25 | 5.54 | 3.19 | 2.91 |
| Rotated | best | 8.93 | 1.99 | 3.34 | 0.00 | 0.00 |
| | avg | 1.28 | 1.99 | 1.51 | 0.00 | 0.00 |
| | std | 3.98 | 2.03 | 1.11 | 0.00 | 0.00 |
| Rotated | best | 3.79 | 6.92 | 7.21 | 0.00 | 0.00 |
| | avg | 3.81 | 6.92 | 3.46 | 0.00 | 0.00 |
| | std | 1.54 | 1.22 | 6.74 | 0.00 | 0.00 |
| Shifted | best | 2.89 | 6.87 | 1.18 | 1.12 | 2.59 |
| | avg | 7.86 | 7.46 | 1.79 | 1.55 | 2.79 |
| | std | 1.06 | 1.54 | 1.22 | 7.29 | 9.75 |
| Shifted Rotated | best | 2.99 | 2.99 | 2.99 | 2.99 | 2.99 |
| | avg | 2.99 | 2.99 | 2.99 | 2.99 | 2.99 |
| | std | 2.54 | 2.54 | 2.54 | 2.54 | 2.54 |
| Shifted Rotated | best | 4.45 | 4.45 | 4.45 | 4.45 | 4.45 |
| | avg | 4.45 | 4.45 | 4.45 | 4.45 | 4.45 |
| | std | 7.63 | 7.63 | 7.63 | 7.63 | 7.63 |
| Shifted Rotated | best | 3.34 | 3.34 | 3.34 | 3.34 | 3.34 |
| | avg | 3.34 | 3.34 | 3.34 | 3.34 | 3.34 |
| | std | 6.36 | 6.36 | 6.36 | 6.36 | 6.36 |
| Shifted Rotated | best | 2.16 | 2.16 | 2.16 | 2.16 | 2.16 |
| | avg | 2.16 | 2.16 | 2.16 | 2.16 | 2.16 |
| | std | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| Function | | ABC | PSO | WOA | OBCWOA | SWWOA |
|---|---|---|---|---|---|---|
| Shifted Rotated | best | 6.30 | 1.87 | 5.47 | 3.84 | 3.16 |
| | avg | 1.20 | 4.97 | 7.36 | 5.29 | 7.48 |
| | std | 1.63 | 9.05 | 4.96 | 3.48 | 1.45 |
| Rotated | best | 2.18 | 1.03 | 5.87 | 0.00 | 0.00 |
| | avg | 3.49 | 1.56 | 1.58 | 0.00 | 0.00 |
| | std | 3.63 | 3.31 | 7.89 | 0.00 | 0.00 |
| Rotated | best | 9.01 | 6.83 | 3.19 | 0.00 | 0.00 |
| | avg | 3.94 | 4.42 | 1.67 | 0.00 | 0.00 |
| | std | 1.64 | 2.16 | 3.26 | 0.00 | 0.00 |
| Shifted | best | 3.35 | 5.42 | 1.24 | 1.03 | 3.20 |
| | avg | 4.74 | 6.17 | 1.32 | 1.20 | 4.08 |
| | std | 3.38 | 2.34 | 1.60 | 3.41 | 2.09 |
| Shifted Rotated | best | 1.70 | 1.70 | 1.70 | 1.70 | 1.70 |
| | avg | 1.70 | 1.70 | 1.70 | 1.70 | 1.70 |
| | std | 4.07 | 4.07 | 4.07 | 4.07 | 4.07 |
| Shifted Rotated | best | 3.47 | 3.47 | 3.47 | 3.47 | 3.47 |
| | avg | 3.47 | 3.47 | 3.47 | 3.47 | 3.47 |
| | std | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Shifted Rotated | best | 1.81 | 1.81 | 1.81 | 1.81 | 1.81 |
| | avg | 1.81 | 1.81 | 1.81 | 1.81 | 1.81 |
| | std | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Shifted Rotated | best | 2.17 | 2.17 | 2.17 | 2.17 | 2.17 |
| | avg | 2.17 | 2.17 | 2.17 | 2.17 | 2.17 |
| | std | 3.18 | 3.18 | 3.18 | 3.18 | 3.18 |

| n | Funcs | ABC | PSO | WOA | OBCWOA | Funcs | ABC | PSO | WOA | OBCWOA |
|---|---|---|---|---|---|---|---|---|---|---|
| 20 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 100 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 200 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 500 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 20 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 20 | | <0.001 | <0.001 | 1 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 100 | | <0.001 | <0.001 | 1 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 200 | | <0.001 | <0.001 | 1 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 500 | | <0.001 | <0.001 | 1 | 1 | | <0.001 | <0.001 | 1 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 1 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 1 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 1 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 1 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | 0.589 | | <0.001 | <0.001 | 0.003 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | 0.194 | | <0.001 | <0.001 | 0.007 | 1 |
| 200 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 0.030 | 1 |
| 500 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | 0.176 | 1 |
| 20 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 100 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 200 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 500 | | <0.001 | <0.001 | <0.001 | 1 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 20 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | 1 |
| 100 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | <0.001 |
| 200 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | 0.015 |
| 500 | | <0.001 | <0.001 | <0.001 | <0.001 | | <0.001 | <0.001 | <0.001 | 0.003 |

| Function | WOA | A1 | A2 | A3 | SWWOA | |

|---|---|---|---|---|---|---|

| best | 1.89 | 3.53 | 0.00 | 0.00 | 0.00 | |

| avg | 4.73 | 4.00 | 0.00 | 0.00 | 0.00 | |

| std | 9.17 | 7.15 | 0.00 | 0.00 | 0.00 | |

| best | 1.73 | 1.05 | 3.51 | 1.15 | 4.71 | |

| avg | 8.87 | 1.39 | 3.30 | 3.12 | 8.54 | |

| std | 4.55 | 4.72 | 4.50 | 4.30 | 7.74 | |

| best | 7.37 | 1.22 | 0.00 | 0.00 | 0.00 | |

| avg | 1.43 | 2.23 | 0.00 | 0.00 | 0.00 | |

| std | 1.28 | 4.10 | 0.00 | 0.00 | 0.00 | |

| best | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | |

| avg | 7.29 | 6.32 | 0.00 | 0.00 | 0.00 | |

| std | 1.88 | 2.07 | 0.00 | 0.00 | 0.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Du, P.; Cheng, W.; Liu, N.; Zhang, H.; Lu, J. A Modified Whale Optimization Algorithm with Single-Dimensional Swimming for Global Optimization Problems. Symmetry 2020, 12, 1892. https://doi.org/10.3390/sym12111892
