An Empirical Study on Deep Neural Network Models for Chinese Dialogue Generation

Abstract

1. Introduction

- We conducted extensive experiments to compare the performances of six typical DNN architectures for the dialogue generation task on three open Chinese corpora.

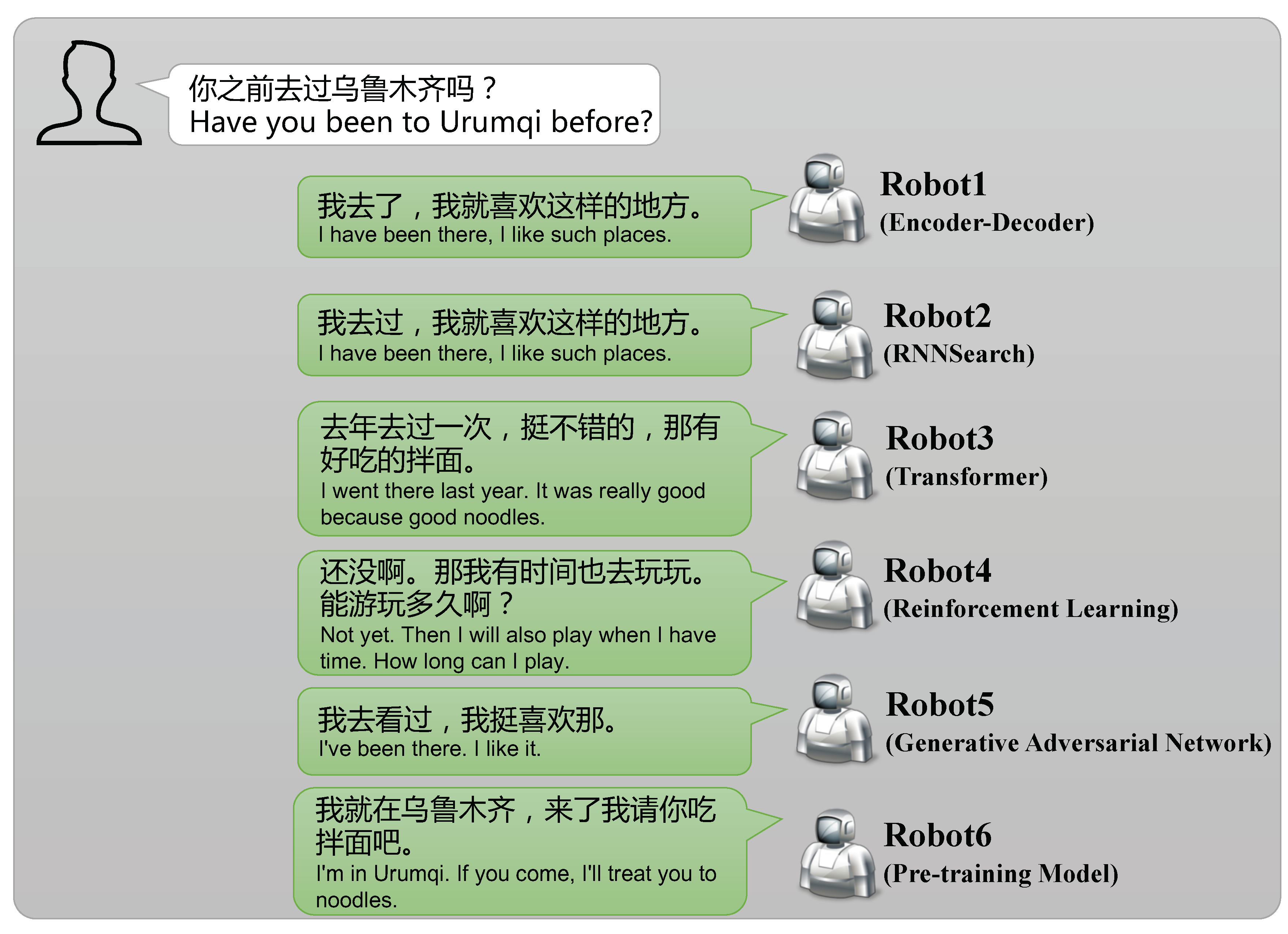

- We performed a case study to help understand their performances in an intuitive manner.

- We analyzed the mechanisms behind the different performances by these models and provide practical recommendations for model selection.

2. Background

2.1. Seq2Seq

2.2. RNNSearch

2.3. Transformer

2.4. Generative Adversarial Nets

2.5. Reinforcement Learning

2.6. Pre-Training Language Model

3. Related Work

- Seq2Seq: The first group learns the response generation model under the simple Seq2Seq architecture. Reference [17] used multi-layer LSTM to map the input sequence to a fixed-dimensional vector and then used another deep-layer LSTM to decode the target sequence from the vector, with high automation and flexibility. Reference [18] introduced HRED, which uses a hierarchical codec architecture to model all contextual sentences. Reference [19] used an extended encoder-decoder that provides encoding of context and external knowledge.

- RNNSearch: The second group is based on the basic sequence-to-sequence with attention frame [20,21]. Reference [22] proposed a new multi-round dialogue generation model, which uses a self-attention mechanism to capture long-distance dependence—a weighted sequence(wise) attention model which uses cosine similarity to measure the degree of correlation proposed by [23] for HEED. Reference [24] introduced an attention mechanism into HRED, and proposed a new hierarchical recursive attention network (HRAN).

- Transformer: The last group at the peak of attention architectures with the transformer framework. Reference [25] proposed a NMT model via multi-head attention; others were inspired by this paper. Reference [26] proposed an incremental transformer with the deliberation decoder to solve the task of document grounded conversations. Reference [27] proposed a transformer-based model to address multi-turn unstructured text facts open-domain dialogue.

4. Empirical Study

4.1. Datasets

- KdConv: KdConv is a dataset of Chinese multi-domain knowledge-driven transformation, which establishes topics in multiple rounds of dialogues on the knowledge graph. KdConv contains 4.5 K dialogues and 86 K utterances from three fields (film, music, and tourism), with an average number of rounds of 19.0. These dialogues on related topics include in-depth discussions and natural transitions between multiple topics. At the same time, the corpus can also be used for transfer learning and exploration of domain adaptation.

- Weibo: A single-turn open-domain Chinese dialogue dataset, which is originally collected and released by reference [38] from a Chinese social media platform Weibo https://www.weibo.com/. Here, we used a refined version released by reference [39] (https://ai.tencent.com/ailab/nlp/dialogue/#datasets). The number of samples for training, validation, and testing are 400 M, 19,357, and 3200 respectively. Character-based vocabulary size is 10,231.

- Douban: A multi-turn open-domain Chinese dialogue corpus collected from Douban group, a well-known Chinese online community which is also a common data source for dialogue systems [40]. Here, we utilize a version called Restoration-200 K dataset released by reference [41]. There are 193,769 samples for training, 5095 for validation, and 5104 for testing. Vocabulary size is 5800.

4.2. Evaluation Metrics

4.3. Comparison Models

- HRED (the code implementations can be found on https://github.com/julianser/hed-dlg): HRED [18] is a hierarchical RNN-based encoder-decoder constructed for the multi-turn dialogue generation tasks. In the dialogue, the encoder RNN maps each utterance to a discourse vector. High-level context neural networks track past utterances by iteratively processing each discourse vector. The next utterance prediction is implemented by RNN decoder, which obtains the hidden state of context RNN and generates probability distribution on the token of the next utterance.

- ReCoSa (the code and data are available at https://github.com/zhanghainan/ReCoSa): ReCoSa [22] is a model based on attention mechanism, Firstly, the word-level LSTM encoder is executed to get an initial representation of each context. The self-focus mechanism is then used to update both the context and the mask response representation. Finally, the weight of attention between each context and response representation is calculated and used for further decoding.

- Guyu (the code and models are available at https://github.com/lipiji/Guyu): Guyu [26] is a transformer-based auto-regressive model for the task of open-domain dialogue generation. Guyu conducts representation learning utilize masking multi-head self-attention as the core technical operation. Various decoding strategies are employed to conduct the response-text generation. Adam with Noam learning-rate decay strategy is employed to optimize the model parameters.

- RL (the code and models are available at https://github.com/liuyuemaicha/Deep-Reinforcement-Learning-for-Dialogue-Generation-in-tensorflow): This is a model that uses reinforcement learning to simulate the future returns in chat robot dialogues. The model simulates the conversation between two virtual agents and uses the policy gradient method to reward a sequence, which shows three useful conversation characteristics: large amount of information, strong consistency, and easy answer (related to forward-looking function).

- GAN-AEL (the code and models are available at https://github.com/lan2720/GAN-AEL): GAN-AEL [29] is a GAN framework to model single-turn short-text conversations. GAN-AEL trains the Seq2Seq network to simultaneously perform a discriminant classifier, which measures the difference between the human-generated response and the machine-generated response and introduces an approximate embedding layer to solve the non-differentiable problem caused by sampling-based output decoding in the Seq2Seq generation model steps.

- BigLM-24: (the code and models are available at https://github.com/lipiji/Guyu) This is a language model with both the pre-training and fine-tuning procedures [26]. BigLM-24 is the typical GPT-2 model with 345 million parameters (1024 dimensions, 24 layers, 16 heads). During training, we employ maximum likelihood estimation (MLE) to conduct the parameter learning. In the inference stage, various decoding strategies such as greedy search, beam search, truncated top-k sampling, and nucleus sampling are employed to conduct the response-text generation.

4.4. Setup

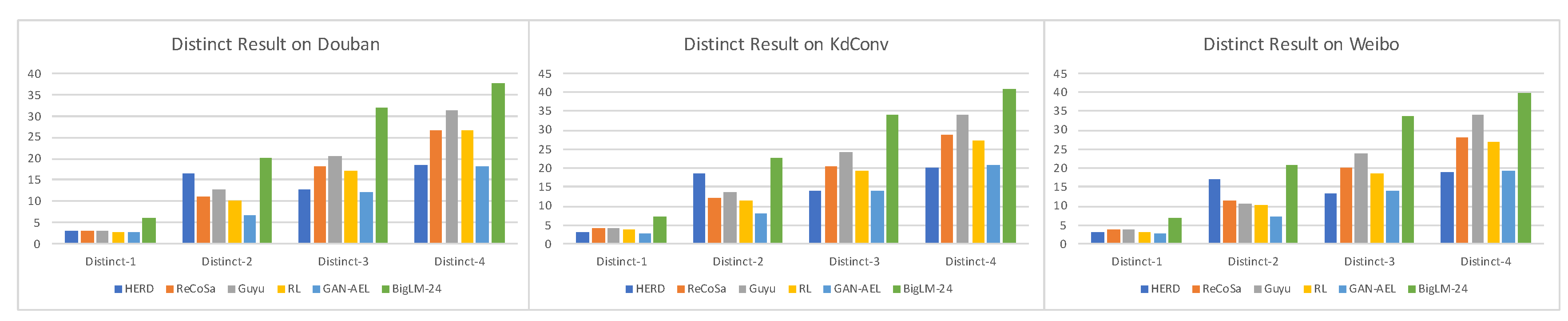

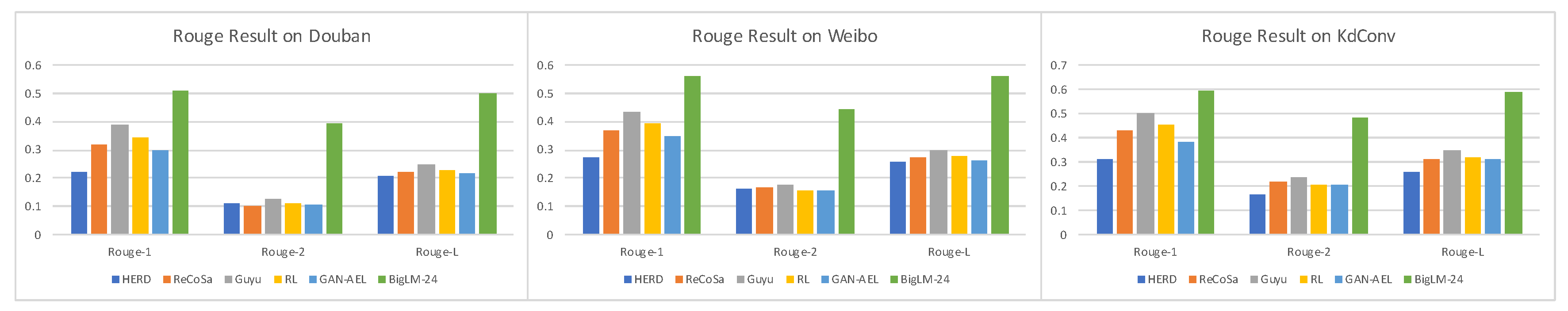

4.5. Results and Analysis

4.6. Case Study

4.7. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Wang, H.; Lu, Z.; Li, H.; Chen, E. A dataset for research on short-text conversations. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Washington, DC, USA, 18–21 October 2013; pp. 935–945. [Google Scholar]

- Hu, B.; Lu, Z.; Li, H.; Chen, Q. Convolutional neural network architectures for matching natural language sentences. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2042–2050. [Google Scholar]

- Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.; Pineau, J. Hierarchical neural network generative models for movie dialogues. arXiv 2015, arXiv:1507.04808. [Google Scholar]

- Li, J.; Monroe, W.; Ritter, A.; Jurafsky, D.; Galley, M.; Gao, J. Deep Reinforcement Learning for Dialogue Generation. arXiv 2016, arXiv:1606.01541. [Google Scholar]

- Olabiyi, O.; Mueller, E.T. Multi-turn dialogue response generation with autoregressive transformer models. arXiv 2019, arXiv:1908.01841. [Google Scholar]

- Li, X.; Li, P.; Bi, W.; Liu, X.; Lam, W. Relevance-Promoting Language Model for Short-Text Conversation. arXiv 2019, arXiv:1911.11489. [Google Scholar] [CrossRef]

- Li, P. An Empirical Investigation of Pre-Trained Transformer Language Models for Open-Domain Dialogue Generation. arXiv 2020, arXiv:2003.04195. [Google Scholar]

- Wang, Q.; Yin, H.; Wang, H.; Hung, N.Q.V.; Huang, Z.; Cui, L. Enhancing Collaborative Filtering with Generative Augmentation. In KDD ’19: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; Association for Computing Machinery: New York, NY, USA, 2019; pp. 548–556. [Google Scholar]

- Wang, Q.; Yin, H.; Chen, T.; Huang, Z.; Wang, H.; Zhao, Y.; Viet Hung, N.Q. Next Point-of-Interest Recommendation on Resource-Constrained Mobile Devices. In Proceedings of the Web Conference, Taipei, Taiwan, 20–24 April 2020; pp. 906–916. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. [Google Scholar]

- Wang, Q.; Yin, H.; Hu, Z.; Lian, D.; Wang, H.; Huang, Z. Neural Memory Streaming Recommender Networks with Adversarial Training. In KDD ’18: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; Association for Computing Machinery: New York, NY, USA, 2018; pp. 2467–2475. [Google Scholar]

- Dai, Y.; Li, H.; Tang, C.; Li, Y.; Sun, J.; Zhu, X. Learning Low-Resource End-To-End Goal-Oriented Dialog for Fast and Reliable System Deployment. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Stroudsburg, PA, USA, 9–10 July 2020; pp. 609–618. [Google Scholar]

- Cui, L.; Wu, Y.; Liu, S.; Zhang, Y.; Zhou, M. MuTual: A Dataset for Multi-Turn Dialogue Reasoning. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 1406–1416. [Google Scholar] [CrossRef]

- Qin, L.; Xu, X.; Che, W.; Zhang, Y.; Liu, T. Dynamic Fusion Network for Multi-Domain End-to-end Task-Oriented Dialog. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 6344–6354. [Google Scholar] [CrossRef]

- Cho, H.; May, J. Grounding Conversations with Improvised Dialogues. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 2398–2413. [Google Scholar] [CrossRef]

- Ma, W.; Cui, Y.; Liu, T.; Wang, D.; Wang, S.; Hu, G. Conversational Word Embedding for Retrieval-Based Dialog System. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 1375–1380. [Google Scholar] [CrossRef]

- Sutskever, I.; Vinyals, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in neural information processing systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112. [Google Scholar]

- Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.; Pineau, J. Building end-to-end dialogue systems using generative hierarchical neural network models. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence (AAAI-16), Phoenix, AZ, USA, 12–17 February 2016. [Google Scholar]

- Ghazvininejad, M.; Brockett, C.; Chang, M.W.; Dolan, B.; Gao, J.; Yih, W.t.; Galley, M. A knowledge-grounded neural conversation model. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Vinyals, O.; Le, Q. A neural conversational model. arXiv 2015, arXiv:1506.05869. [Google Scholar]

- Shang, L.; Lu, Z.; Li, H. Neural Responding Machine for Short-Text Conversation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 16–21 August 2015; pp. 1577–1586. [Google Scholar]

- Zhang, H.; Lan, Y.; Cheng, X. ReCoSa: Detecting the Relevant Contexts with Self-Attention for Multi-Turn Dialogue Generation. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3721–3730. [Google Scholar]

- Tian, Z.; Yan, R.; Mou, L.; Song, Y.; Feng, Y.; Zhao, D. How to make context more useful? An empirical study on context-aware neural conversational models. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 231–236. [Google Scholar]

- Xing, C.; Wu, W.; Wu, Y.; Zhou, M.; Huang, Y.; Ma, W.Y. Hierarchical recurrent attention network for response generation. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Li, Z.; Niu, C.; Meng, F.; Feng, Y.; Li, Q.; Zhou, J. Incremental Transformer with Deliberation Decoder for Document Grounded Conversations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 12–21. [Google Scholar]

- Dinan, E.; Roller, S.; Shuster, K.; Fan, A.; Auli, M.; Weston, J. Wizard of Wikipedia: Knowledge-Powered Conversational Agents. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April– 3 May 2018. [Google Scholar]

- Yu, L.; Zhang, W.; Wang, J.; Yu, Y. Seqgan: Sequence generative adversarial nets with policy gradient. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17), San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Xu, Z.; Liu, B.; Wang, B.; Sun, C.J.; Wang, X.; Wang, Z.; Qi, C. Neural response generation via gan with an approximate embedding layer. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 617–626. [Google Scholar]

- Boyd, A.; Puri, R.; Shoeybi, M.; Patwary, M.; Catanzaro, B. Large Scale Multi-Actor Generative Dialog Modeling. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 66–84. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, X.; Gao, J.; Chen, E. Budgeted Policy Learning for Task-Oriented Dialogue Systems. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3742–3751. [Google Scholar]

- Su, S.Y.; Li, X.; Gao, J.; Liu, J.; Chen, Y.N. Discriminative Deep Dyna-Q: Robust Planning for Dialogue Policy Learning. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3813–3823. [Google Scholar]

- Wu, Y.; Li, X.; Liu, J.; Gao, J.; Yang, Y. Switch-based active deep dyna-q: Efficient adaptive planning for task-completion dialogue policy learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7289–7296. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Ham, D.; Lee, J.G.; Jang, Y.; Kim, K.E. End-to-End Neural Pipeline for Goal-Oriented Dialogue Systems using GPT-2. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 583–592. [Google Scholar] [CrossRef]

- Bao, S.; He, H.; Wang, F.; Wu, H.; Wang, H. PLATO: Pre-trained Dialogue Generation Model with Discrete Latent Variable. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 85–96. [Google Scholar] [CrossRef]

- Shang, L.; Lu, Z.; Li, H. Neural Responding Machine for Short-Text Conversation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers); Association for Computational Linguistics: Beijing, China, 2015; pp. 1577–1586. [Google Scholar] [CrossRef]

- Gao, J.; Bi, W.; Liu, X.; Li, J.; Shi, S. Generating multiple diverse responses for short-text conversation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6383–6390. [Google Scholar]

- Wu, Y.; Wu, W.; Xing, C.; Zhou, M.; Li, Z. Sequential Matching Network: A New Architecture for Multi-turn Response Selection in Retrieval-Based Chatbots. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 496–505. [Google Scholar]

- Pan, Z.; Bai, K.; Wang, Y.; Zhou, L.; Liu, X. Improving Open-Domain Dialogue Systems via Multi-Turn Incomplete Utterance Restoration. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 1824–1833. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th annual meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318. [Google Scholar]

- Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A Diversity-Promoting Objective Function for Neural Conversation Models. In Proceedings of the NAACL-HLT 2016, San Diego, CA, USA, 12–17 June 2016; pp. 110–119. [Google Scholar]

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81. [Google Scholar]

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2014, arXiv:1312.6114. [Google Scholar]

- Zhao, T.; Zhao, R.; Eskenazi, M. Learning Discourse-level Diversity for Neural Dialog Models using Conditional Variational Autoencoders. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 654–664. [Google Scholar]

| Corpus | Type | Train | Dev | Test | Vocab | Avg Length |

|---|---|---|---|---|---|---|

| KdConv | Multi-Turn | 3600 | 450 | 450 | 63,134 | 21.41 |

| Single-Turn | 4,244,093 | 19,357 | 3200 | 10,231 | 34.21 | |

| Douban | Multi-Turn | 193,769 | 5095 | 5104 | 5800 | 31.44 |

| Model | Relevance | Perplexity | |||

|---|---|---|---|---|---|

| BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ||

| HRED [18] | 36.87 | 26.68 | 21.31 | 17.96 | 41.07 |

| ReCoSa [22] | 27.69 | 14.13 | 7.35 | 14.31 | 36.71 |

| Guyu [26] | 37.15 | 28.27 | 23.13 | 18.89 | 35.56 |

| RL [4] | 30.29 | 21.79 | 16.15 | 13.02 | 37.96 |

| GAN-AEL [29] | 25.08 | 13.73 | 8.71 | 5.75 | 46.09 |

| BigLM-24 [26] | 61.82 | 57.71 | 54.96 | 52.79 | 26.68 |

| Model | Relevance | Perplexity | |||

|---|---|---|---|---|---|

| BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ||

| HRED [18] | 15.17 | 2.41 | 0.27 | 0.05 | 90.47 |

| ReCoSa [22] | 32.14 | 10.34 | 4.27 | 2.19 | 89.24 |

| Guyu [26] | 38.11 | 12.34 | 5.38 | 4.57 | 53.10 |

| RL [4] | 35.04 | 11.45 | 5.39 | 3.29 | 58.67 |

| GAN-AEL [29] | 28.34 | 8.45 | 4.38 | 3.03 | 91.31 |

| BigLM-24 [26] | 38.93 | 14.72 | 7.59 | 5.07 | 40.23 |

| Model | Relevance | Perplexity | |||

|---|---|---|---|---|---|

| BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ||

| HRED [18] | 13.13 | 2.67 | 0.36 | 0.07 | 93.94 |

| ReCoSa [22] | 14.58 | 1.38 | 0.28 | 0.09 | 89.84 |

| Guyu [26] | 17.19 | 5.66 | 1.06 | 0.16 | 77.24 |

| RL [4] | 14.43 | 1.48 | 0.19 | 0.01 | 79.28 |

| GAN-AEL [29] | 13.30 | 0.90 | 0.05 | 0.01 | 85.44 |

| BigLM-24 [26] | 14.39 | 4.26 | 1.26 | 0.59 | 41.46 |

| Corpus | |||

|---|---|---|---|

| Architecture | KdConv | Douban | |

| Query | 今天天气不错 It’s a nice day today | 新疆是个 美丽的地方 Xinjiang is a beautiful place | 什么是有 意义的生活 What is a meaningful life? |

| Ground Truth | 确实是个好天气。 It’s really nice weather. | 新疆确实是个 美丽的地方。 Xinjiang is really a beautiful place. | 生命的意义 在于奋斗。 The meaning of life lies in fighting. |

| Seq2Seq [18] | 你也要注意身体呀. You should take care of your health, too. | 你要去看看吗? Would you like to see it? | 存在的意义 在于生活 The meaning of existence lies in life. |

| RNNSearch [22] | 我想看到你 I want to see you. | 你知道有 什么景点么? Do you know any scenic spots? | 生活就是生活 Life is life. |

| Transformer [26] | 北京天气不错的说 The weather in Beijing is good. | 周边景点有哪些? What are the surrounding scenic spots? | 生活毫无意义, 重要的是什么让你快乐 Life is meaningless. What matters is what makes you happy. |

| RL [4] | 好天气 The good weather. | 新疆的美食还是有的 Xinjiang cuisine is still available. | 这是为了 你的生活 It’s for your life. |

| GAN [29] | 天气不错吗? Is the weather nice? | 地址在哪呀 Where is the address? | 我还是快乐的 过着自己的生活 I’m still happy with my life. |

| PTM [26] | 早安!早上好! 美丽的北京! Good morning! Beautiful Beijing! | 需要门票吗?能玩多久? Do I need tickets? How long will it last? | 个人的生活 其实就是妥协, 找到平衡点 Personal life is all about compromise and finding balance. |

| Seed Query | 你的梦想是什么? What is your dream? |

| Robot A:你的梦想是什么? What is your dream? | |

| Results | Robot B:你猜呀? Can you guess? |

| Robot A:成为最好的人工智能机器人。 Be an excellent artificial intelligence robot. | |

| Robot B:跟我多聊聊你会知道的。 Well, talk to me a lot and you’ll know. | |

| Robot A:调侃你么? Making fun of you ? | |

| Robot B:我知道你知道的。 I know you know. | |

| Robot A:你知道北京石刻艺术博物馆吗? Do you know Beijing Stone Carving Art Museum ? | |

| Robot B:我不知道。 I don’t know ? |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Maimaiti, M.; Sheng, J.; Ke, Z.; Silamu, W.; Wang, Q.; Li, X. An Empirical Study on Deep Neural Network Models for Chinese Dialogue Generation. Symmetry 2020, 12, 1756. https://doi.org/10.3390/sym12111756

Li Z, Maimaiti M, Sheng J, Ke Z, Silamu W, Wang Q, Li X. An Empirical Study on Deep Neural Network Models for Chinese Dialogue Generation. Symmetry. 2020; 12(11):1756. https://doi.org/10.3390/sym12111756

Chicago/Turabian StyleLi, Zhe, Mieradilijiang Maimaiti, Jiabao Sheng, Zunwang Ke, Wushour Silamu, Qinyong Wang, and Xiuhong Li. 2020. "An Empirical Study on Deep Neural Network Models for Chinese Dialogue Generation" Symmetry 12, no. 11: 1756. https://doi.org/10.3390/sym12111756

APA StyleLi, Z., Maimaiti, M., Sheng, J., Ke, Z., Silamu, W., Wang, Q., & Li, X. (2020). An Empirical Study on Deep Neural Network Models for Chinese Dialogue Generation. Symmetry, 12(11), 1756. https://doi.org/10.3390/sym12111756