1. Introduction

In several applications, it is desirable to have effective tools allowing one to construct solutions of functional differential equations under various boundary conditions. Many real processes are modelled by systems of equations with argument deviations (see, e.g., [

1,

2,

3] and references therein). For delay equations, the classical method of steps [

1] allows one to construct the solution of the Cauchy problem by extending it from the initial interval in a stepwise manner; in this way, an ordinary differential equation is solved at every step, with every preceding part of the curve serving as a historical function for the next one. This technique, together with the ODE solvers available in the mathematical software, is commonly used in the practical analysis of dynamic models based on equations with retarded argument under the initial conditions (e.g., in economical models [

4,

5,

6]).

In certain cases, boundary conditions are more complicated and deviations are of mixed type (i. e., equations involve both retarded and advanced terms [

7,

8] or deviations of neither type), which in particular, makes impossible to apply the method of steps due to the absence of the Volterra property of the corresponding operator. The aim of this paper is to show that the techniques suggested in [

9] for boundary value problems for ordinary differential equations, under certain assumptions, can be adopted for application to functional differential equations covering, in particular, the case of deviations of mixed type and general boundary conditions. As a result, one can formulate a scheme for the effective finding of approximations to solutions of boundary value problems for functional differential equations, which also theoretically allows one to establish the solvability in a rigorous manner. Here, we consider the linear case and deal with the construction of approximate solutions only. The techniques are rather flexible and can be used in relation to other problems. Although the approach is not explicitly designed for partial differential equations, it still might be used in the cases where systems of equations with one independent variable arise (e.g., equations obtained when a symmetry reduction is possible, equations related to the inverse scattering transform or discretization [

10,

11]). Unlike methods used for integrable systems (e.g., the Hirota bilinear method [

12]), the technique is aimed at getting approximate solutons without previous knowledge of the structure of the solution set.

We consider the system of

n linear functional differential equations

under the linear boundary conditions

where

,

,

is a linear bounded operator,

is a bounded linear vector-valued functional,

and

are fixed. System (

1) can be rewritten in coordinates as

where

are the components of the operator

defined by the equalities

for

v from

(here,

stands for the

jth unit vector). The difference between (

1) and (

3) is a matter of notation: given system (

3), it can be rewritten as (

1) by setting

for

. Here, we consider the case where the component operators have values in

, i. e., the coefficients in the equation are essentially bounded, which excludes the presence of integrable singularities (e.g.,

,

).

System (

3) covers, in particular, the system of differential equations with argument deviations of the form

where

, are measurable functions,

and

, are from

. A particular case of (

6) is, e.g., the well-known pantograph equation, which arises independently in several applied problems of various nature (see, e.g., [

13,

14] and references therein). The assumption that

is not a restriction of generality because the setting involving initial functions can be reduced to the present one by a suitable transformation [

15]. The retarded character of the equation is not assumed (in particular, for (

6), it is not required that

for all

i,

j) and, therefore, the method of steps is inapplicable.

We use the following notation. For any vectors

,

, we write

and

The inequalities between vectors as well as minima and maxima of vector functions are understood likewise in the componentwise sense.

denotes the spectral radius of a matrix

A. For an essentially bounded function

and an interval

, we put

2. Auxiliary Problems with Two-Point Conditions

The idea is to use a suitable parametrization. Motivated by [

9], we will seek for a solution of (

1), (

2) among all solutions of Equation (

1) under the simplest two-point conditions

with unfixed boundary values

and

. Under suitable assumptions, every problem (

1), (

9) is shown to be uniquely solvable and its solution is constructed as the limit of certain iterations

. The vectors

and

are parameters the values of which remain unknown at the moment; they should be chosen appropriately in order to satisfy condition (

2).

Let us fix closed bounded connected sets

and

in

and focus on the solutions of problem (

1), (

2) with the values at the endpoints such that

To treat solutions with properties (

10), we carry out “freezing” at the endpoints by passing temporarily to conditions (

9). Thus, instead of (

1), (

2) we will consider the parametrised problem (

1), (

9). There are two (or, in coordinates,

) degrees of freedom there and we will see that one can, in a sense, go back to the original problem by choosing the values of the parameters appropriately.

4. Applicability Conditions

Let

L be the matrix constituted by the norms of the components of

l as operators from

to

:

All the components of the matrix

L are well defined due to the boundedness of

l as a mapping from

to

. It follows immediately from (

4) and (

18) that the componentwise inequality

holds for a.e.

and arbitrary

u from

.

Theorem 1. Assume that the spectral radius of L satisfies the inequalityThen, for any fixed,

, the sequenceconverges to a certain functionasuniformly on. The functionsatisfies conditions (9) and the differential equationwhereThe functionis the unique solution of (21) with the initial conditionand also the unique solution of Equation (17). The functionsatisfies the original Equation (1) if and only if ξ and η are chosen so that The form of iteration sequence (

14) is chosen specifically according to the two-point separated boundary conditions (

9), so that (

9) is automatically satisfied on every step. The smallness of

(assumption (

20)) ensures the unique solvability of all the auxiliary initial value problems (

16) and (

24),

which is also helpful in cases where the initial value problem (

1), (

24) for the original equation is not uniquely solvable. It should be noted that the last mentioned issue may arise even for very simple equations of type (

6); for example, it is easy to verify that if (

1) has the form

then the initial value problem (

25), (

24) is not uniquely solvable (it has infinitely many solutions if

and no solution for

) and the corresponding Picard iterations diverge. However, the iterations defined according to equalities (

13) and (

14) can be used since assumption (

20) is satisfied (

in this case).

Equation (

21) clearly resembles (

16) with the difference that

appearing in (

21), where the limit function is directly involved, is not a functional term. Equation (

21) can thus be regarded as a perturbation of equation (

1) with a constant forcing term,

the value of which is related to the two-point condition (

9).

Theorem 2. Assume that condition (20) holds and ξ, η are fixed. Then the solution of (26) with the initial value (24) satisfies the conditionif and only ifwith Δ

given by (22).

Proof. The proof is similar to that of ([

9] Theorem 7). If

in (

26) has form (

28), then the function

, which is well defined by Theorem 1, is the unique solution of the Cauchy problem (

26), (

24). By Lemma 1, this function also satisfies condition (

27).

Conversely, let

v be a function satisfying (

26) and (

24) with a certain value of

. The integration of (

26) gives the representation

whence

Combining (

29) and (

30) and assuming that

, we get

or, which is the same,

for

. Equation (

31) coincides with (

17) and thus

v is a solution of (

17). Since, by Theorem 1, the function

is the unique solution of equation (

17), it follows that

,

and, hence, by (

30),

is necessarily of form (

28). □

Theorem 3. Under assumption (20), the limit functionof sequence (14) is a solution of the original problem (1), (2) if and only if the vectors ξ and η satisfy the system ofequations Proof. It is sufficient to apply Theorem 1. Equations (

32) bring us from the auxiliary two-point parametrised conditions back to the given functional conditions (

2). □

Combining these statements, one can formulate the following theorem.

Theorem 4. Assume condition (20). If there existfor which equations (32) are satisfied, then the boundary value problem (1), (2) has a solutionsuch thatand. Conversely, if problem (1), (2) has a solution, then the valuesandsatisfy (32).

Proof. Indeed, if

satisfy (

32) then, by Theorem 3, the function

is a solution of problem (

1), (

2). By Theorem 1, this function satisfies the two-point conditions (

9).

Assume now that

u is a solution of problem (

1), (

2) and put

,

. Then

u is a solution of the initial value problem (

26), (

24) with

and, furthermore,

because the solution of this problem is unique (Theorem 1). By Theorem 2, it follows that (

23) holds. Since, by assumption,

u satisfies (

2), it is obvious from (

55) that

, i. e., (

32) holds. □

The boundary value problem (

1), (

2) is thus theoretically reduced to the solution of Equation (

32) in the variables

and

.

7. Practical Realisation

Theorems 1 and 2 suggest to replace the boundary value problem (

1), (

2) by the system of

Equation (

32). These equations are usually referred to as determining equations because their roots determine the solutions of the original problem among the solutions of the auxiliary ones (i.e., we obtain a solution once the values of

and

are found). The main difficulty here is the fact that

is known in exceptional cases only and thus system (

32), in general, cannot be constructed explicitly. This complication can be resolved by using the approximate determining systems of the form

where

is fixed and the function

is given by the formula

for arbitrary

,

. The function

is obtained after

m iterations and thus, in contrast to system (

32), Equation (

58) involve only functions that are constructed in a finite number of steps.

The uniform convergence of functions (

14) to

implies that systems (

32) and (

58) are close enough to one another for

m sufficiently large and, under suitable conditions, the solvability of the

mth approximate determining system (

58) can be used to prove that of (

32). Existence theorems can be formulated by analogy to [

17] (we do not discuss this kind of statements here). A practical scheme of analysis of the boundary value problem (

1), (

2) along these lines can be described as follows.

The approach involves both the analytic part (construction of iterations with parameters according to equalities (

13) and (

14)) and the numerical computation, when the approximate determining Equation (

58) are solved. We can (and, in this approach, are generally encouraged to) start with step 1 without knowing anything about the solvability of the problem, because a hint on the existence of a solution and its localisation is likely to be obtained in the course of computation.

When analysing the results obtained at step 3, the following main scenarios may be observed:

Clear signs of convergence and a good degree of coincidence ( satisfies the set accuracy requirements; it remains only to check the solvability rigorously as mentioned above).

There are signs of convergence but the accuracy requirements are not met (continue the computation with ).

There are signs of divergence, usually accompanied by failure to solve some of the equations (either there is no solution or the convergence boundary is trespassed; the scheme is inapplicable).

Failure to carry out symbolic computations at a certain point (software or hardware limitations; some simplifications should be used).

The main restriction is assumption (

20), without which the presented proof fails and the procedure may diverge. When condition (

20) is not satisfied, to exclude scenario 3, the use of the interval division technique [

18] can be suggested, for which purpose the auxiliary two-point conditions of form (

9) are very suitable. In the case of difficulties with symbolic computation, polynomial approximations can be used by analogy to [

19]. We do not discuss these two issues here in more detail.

The accuracy of approximation can be checked by substituting

into the equation and computing the residual functions

for

, where

By assumption, the operator

l in Equation (

1) has range among essentially bounded functions and, hence, one may require the smallness of the values

,

.

It follows from inequality (

56) of Lemma 6 that the estimate

holds for all

, where

is an exact solution of problem (

1), (

2) (provided it exists), the values

are the roots of the

mth approximate determining system (

58), and

is the corresponding

mth approximation (

64). In other words, according to (

67), the convergence of the roots of approximate determining equations to the corresponding values of a particular exact solution

at the points

a and

b guarantees the approximation of

by

the quality of which is growing with

m.

8. A Numerical Example

To show the practical realisation of the above scheme, let us consider the problem of solving the system of differential equations with argument deviations

where

is the function

given by the formula

and

is a positive constant, under the non-local boundary conditions

The given particular form of the forcing terms

,

and

in (

68) is chosen for the purpose of checking explicitly an exact solution, which is known to be

in this case.

Along with the retarded term containing the deviation

, the right-hand side of system (

68) involves also terms with the argument reflection

and the argument transformation

for which the function

has multiple sign changes. The latter two are deviations of mixed type (i. e., of neither retarded nor advanced type).

System (

68) is obviously of type (

6) with

,

,

,

for

,

, while the boundary conditions (

69) can be rewritten as (

2) with

and

. It is easy to verify that the corresponding operator

l (see formula (

5)) satisfies the componentwise inequality (

19) for all

with the matrix

Since

, it follows that condition (

20) holds if

Let us choose, e.g.,

and proceed as described in

Section 7 (note that condition (

20) is only sufficient; in particular, numerical experiments for larger values of

show that the convergence is still observed for

).

We start from the zeroth approximation defined according to formula (

13). The linearity of the functional differential system (

68) and of the boundary conditions (

69) implies that the approximate determining system are linear. To construct the zeroth approximate determining system (

60), one should only substitute (

13) into (

59) with

. After some comutation we find that, in this case, (

60) has the form

where

and

,

, are the Fresnel integrals. System (

71) consists of six equations in six variables

,

,

, which according to (

65), have the meaning of approximate values of

,

,

. Solving Equation (

71), we find its roots

Substituting values (

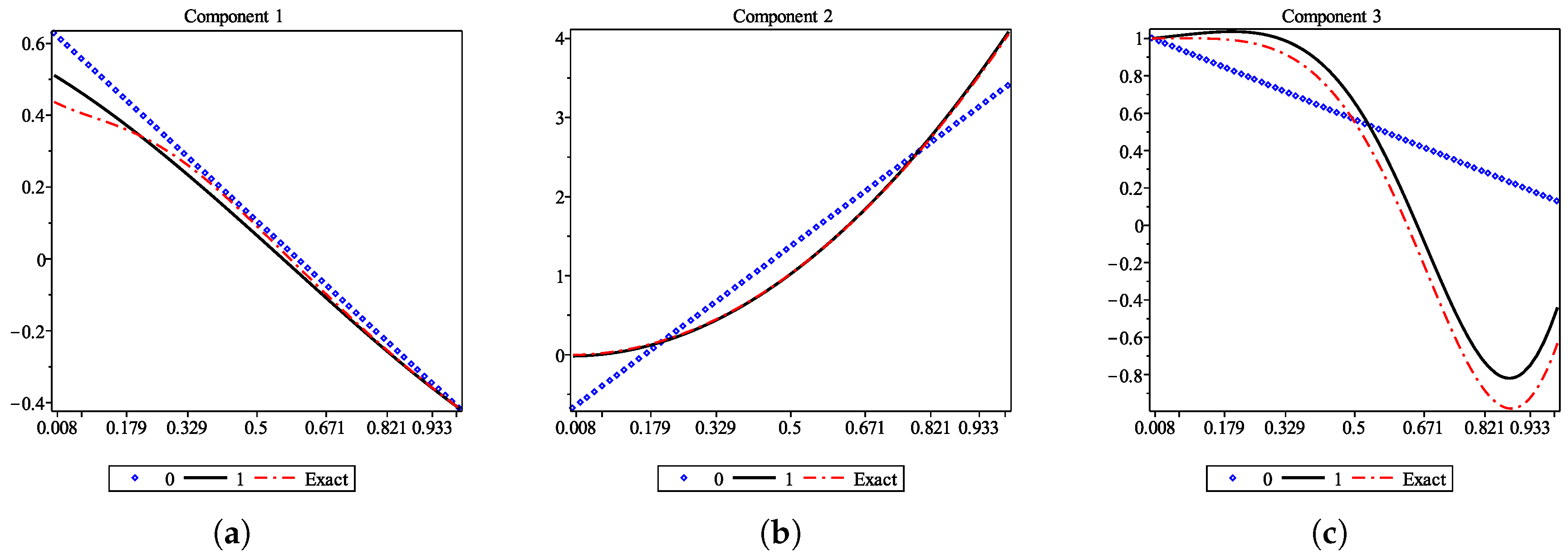

72) into formula (

13) according to (

61), we obtain the zeroth approximation

, whose components

represent the straight line segments joining the points

and

,

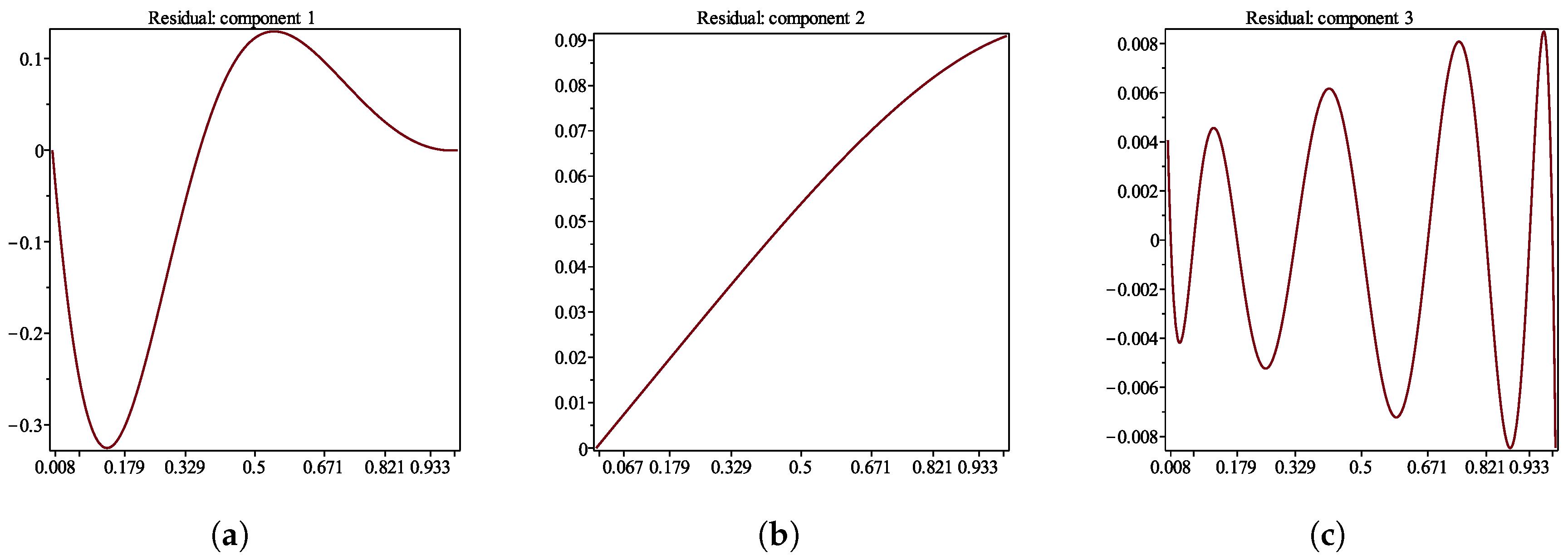

. Although this linear approximation is very rough, it still provides some useful information on the localisation of the solution. On

Figure 1, its graph is drawn together with that of the known exact solution (

70). In particular, we have (see also

Table 1) that

The values of

and

in (

71) are determined directly due to the form of the boundary conditions (

69).

Furthermore, better approximations are obtained when we proceed to higher order iterations according to formula (

14). For problem (

68), (

69), by virtue of (

11), this formula means that the functions

,

, are constructed according to the recurrence relations

for

,

, where

is the starting approximation (

13) and the functions

,

, are computed by formula (

12). A direct computation shows that, in this case,

,

, have the form

The values

and

,

, are kept as parameters when passing to the next iteration. The main computational work is to construct the functions

,

, analytically, for which purpose computer algebra systems are natural to be used.

Here, when carrying out computations according to the above recurrence relations, we use MAPLE and additionally simplify the task by using polynomial approximations in the spirit of [

19] (more precisely, 9th order polynomials over the Chebyshev nodes; the interpolation nodes used in the course of computation are marked on the graphs). Carried out without interpolation (i.e., literally according to

Section 7), the procedure allows achieving the required accuracy in a fewer number of steps but is more computationally expensive.

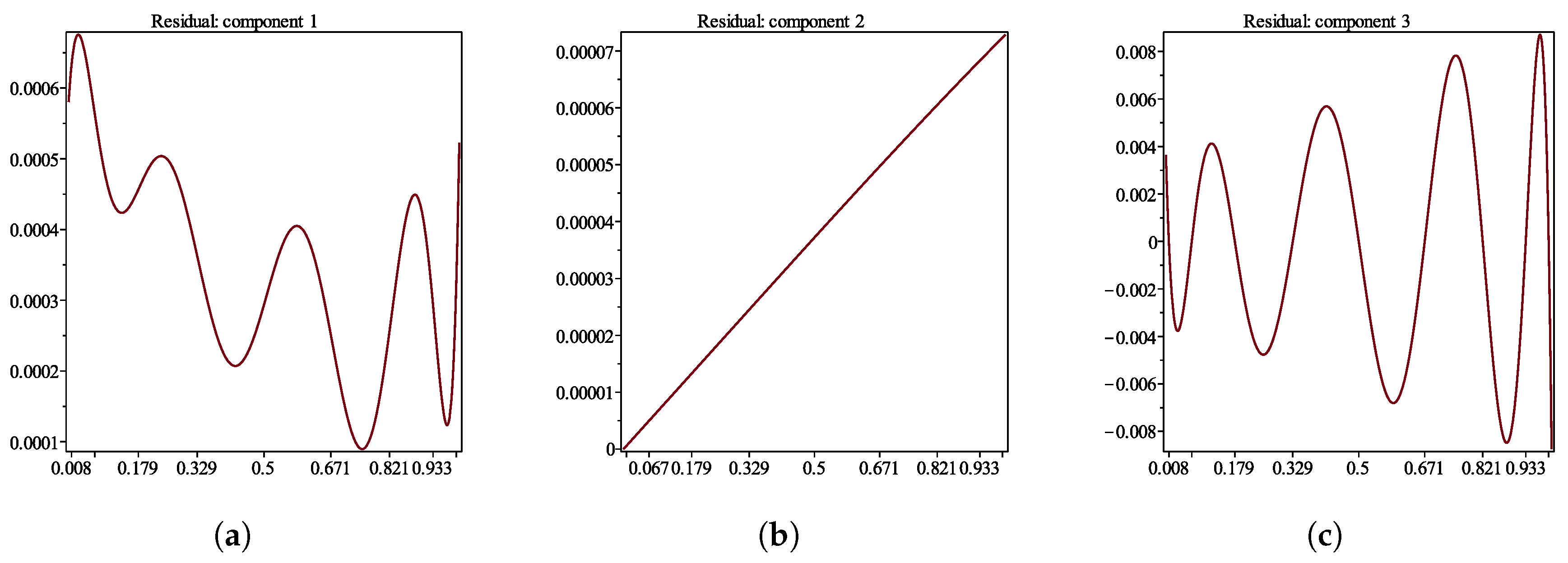

The rough zeroth approximation is improved when we start the iteration. To obtain the first approximation (

63), we need to construct the functions

,

, and the corresponding first approximate determining system (

62). Constructing system (

62) and solving it numerically, we get the values

and, by (

63), obtain the first approximation

the graph of which is shown on

Figure 2. Checking the residual function

corresponding to

(Formula (

66)) in

Figure 3, we see that already the first approximation provides a reasonable degree of accuracy. Higher order approximations are obtained by repeating these steps for more iterations.

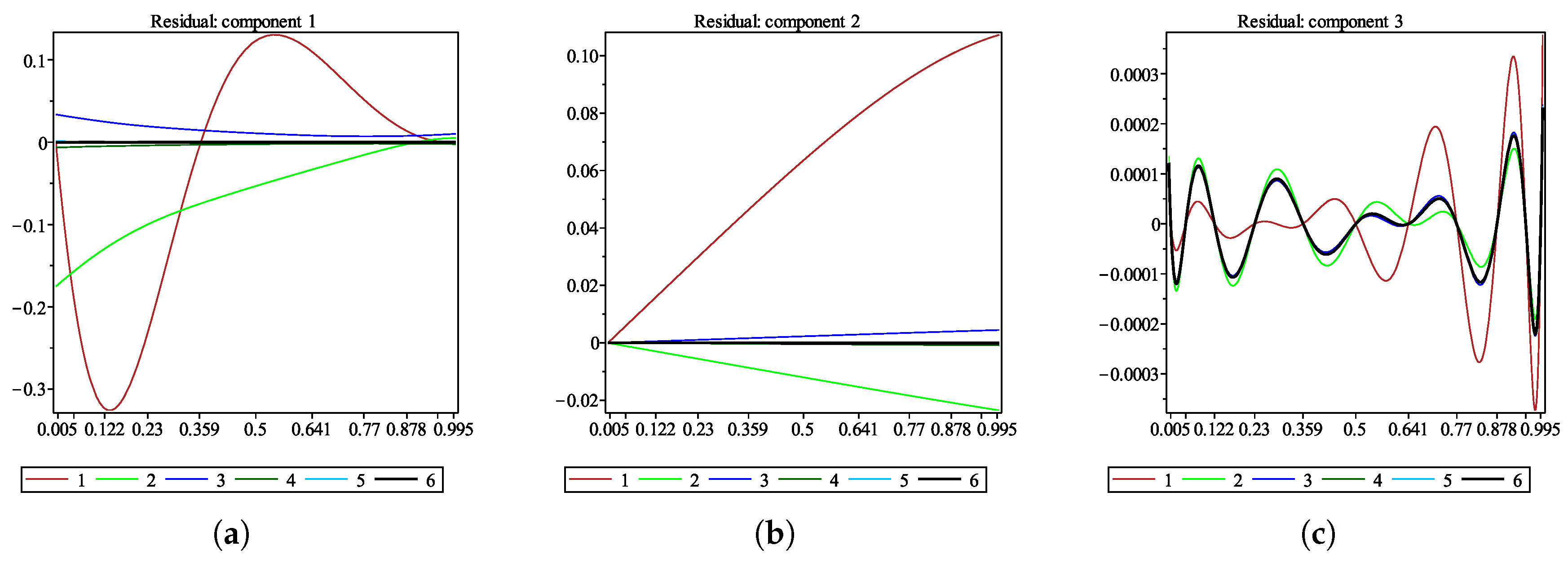

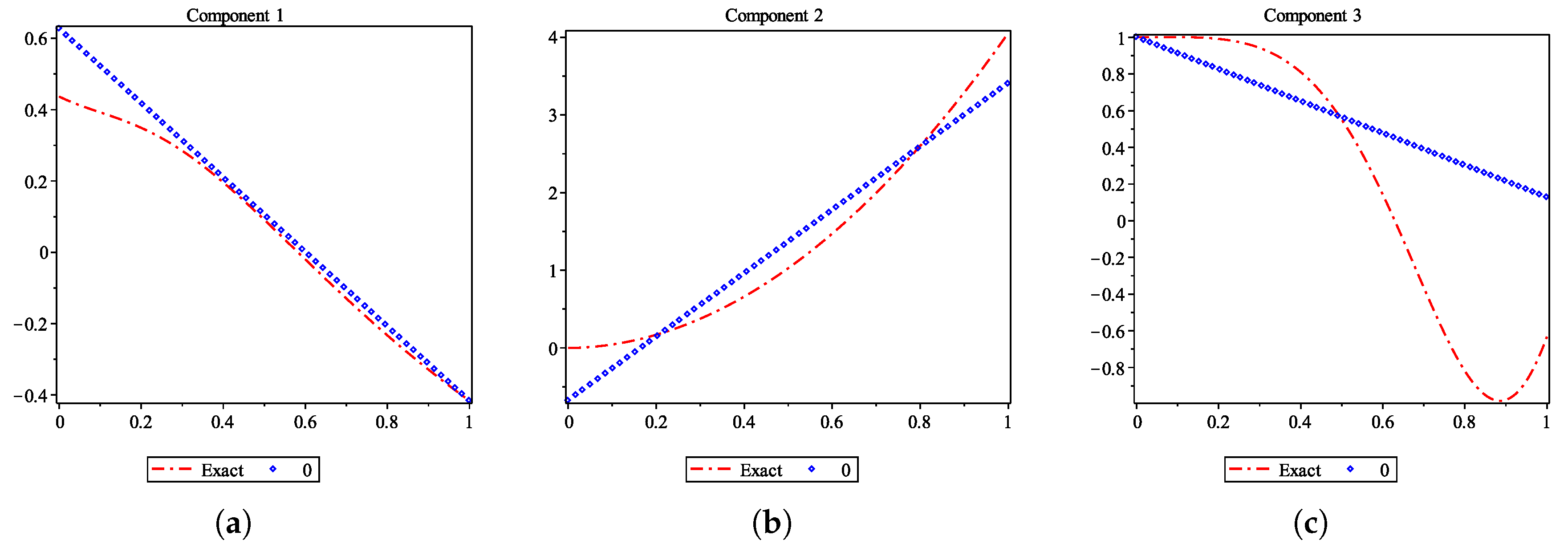

Figure 4 shows the graphs of several further approximations. We can observe that, starting from the third one, the graphs in fact coincide with one another. The maximal value of the residual

of the fifth approximation

is about

(see

Figure 5). The result is refined further when we continue the computation with more iterations. In this particular case, when interpolation is additionally used, this depends also on the number of nodes; increasing it from 9, e.g., to 11, we get the sixth approximation

with the residual not exceeding

in every component. This is seen in

Figure 6 (especially

Figure 6c), where the graphs of the components of

,

, are shown. The graph of

does not optically differ from that of

presented in

Figure 4.

The values

,

obtained by numerically solving the approximate determining Equation (

58) for

(with interpolation on 11 nodes) are collected in

Table 1. In the last row of the table, for the sake of comparison, we give the values

,

,

, of the exact solution (

70).

The approximations (

64) are constructed in an analytic form; e.g., for the above-mentioned

, using M

aple we obtain the explicit formulae

for all

.

The accuracy of approximation of

by

can be verified in

Figure 7, where the graphs of the corresponding error functions

,

, are shown. We can see that the maximal value of the absolute error does not exceed

.