1. Introduction

Informally, a record is an extraordinary value of a variable, which surpasses all of its kind. Records are very popular in fields such as sports, climatology, finance or insurance. In mathematical terms, given a sequence of real-valued observations $X_1, X_2, \ldots$, we define $X_1$ as the first record, by convention, and we say that $X_n$ is an upper record (or simply a record) if $X_n > M_{n-1}$ holds, where $M_n = \max\{X_1, \ldots, X_n\}$, for $n \ge 1$. Records have been extensively studied in Extreme Value Theory and their probabilistic properties, mainly under the assumption of independent and identically distributed (iid) observations with a continuous underlying distribution, are well known. This classical setting has significant symmetry, which greatly simplifies calculations because it implies that all orderings of the observations are equally likely. On the other hand, departures from symmetry, such as the existence of a trend in the sequence of observations, bring about technical complexities which require the use of more sophisticated mathematical tools. For general information on record theory, see Reference [1] or Reference [2].

In parallel, statistical inference for record data has developed considerably, impelled by the availability of many data sets of records and also because, in contexts such as destructive stress-testing, efficient sampling schemes (in terms of the number of broken units) yield record series. There is a vast literature on inference for record data and the interested reader can consult, for example, References [3,4,5].

A serious problem with record data is relative scarceness, since a sequence of $n$ iid observations has only about $\log n$ records. So extra data may be needed and a reasonable option is near-record data, which can be available along with records. By near-record data we mean observations that are close to being records, in a sense to be made precise. Our working hypothesis is this: if one uses statistical methods specifically designed for record data but feels that the record series is too short, then a sound option is to incorporate near-records. Of course, the methodology has to be adapted to handle the new data, along with records. In this paper we show how this can be done, in the particular case of the Weibull model. We assess via simulations the impact of the additional information and present an application to real data. Various definitions of near-records have been proposed, for example, in References [6,7,8]. In this paper we consider near-records in the sense of Reference [7], which are closely related to δ-records. The latter were independently defined in Reference [9], as natural and tractable generalizations of records, which are as easily collected as records. Their probabilistic properties have been studied in References [9,10,11,12,13,14].
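The scarceness of records can be illustrated numerically. The following is a minimal sketch, not taken from the paper: it counts upper records in simulated iid uniform samples and compares the average with the harmonic number $H_n = \sum_{k=1}^{n} 1/k \approx \log n + 0.5772$, which is the expected number of records among $n$ iid continuous observations. The function names, the sample size and the number of replications are illustrative assumptions.

```python
import math
import random


def count_records(xs):
    """Count upper records: observations strictly above all previous ones."""
    count, current_max = 0, -math.inf
    for x in xs:
        if x > current_max:
            count += 1
            current_max = x
    return count


def expected_records(n):
    """Expected number of records among n iid continuous observations (H_n)."""
    return sum(1.0 / k for k in range(1, n + 1))


def mean_records(n, reps, seed=0):
    """Monte Carlo average of the number of records in samples of size n."""
    rng = random.Random(seed)
    total = 0
    for _ in range(reps):
        total += count_records(rng.random() for _ in range(n))
    return total / reps


if __name__ == "__main__":
    n = 1000
    print("simulated mean:", mean_records(n, 2000))
    print("harmonic number:", expected_records(n))
    print("log n:", math.log(n))
```

With $n = 1000$ observations one expects only about seven or eight records, which motivates the search for additional information such as near-records.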

Concerning the statistical applications of δ-records, their likelihood function for a continuous distribution was first published in Reference [10], with results on maximum likelihood estimation (MLE) for the exponential and Weibull distributions. In Section 4.3 of the above cited paper, a variant of the sequential stress-testing scheme is proposed to collect δ-records, which we briefly describe here. Suppose we wish to test, say, tensile strength. In a classical sampling scheme, all items are stressed until they break. In the more efficient sequential testing, each item is stressed only up to the lowest level at which a previous item broke, and this yields a sequence of (lower) records. The proposed variant consists in stressing the items further than the previous record, by a fixed value $|\delta|$, to obtain a sequence of lower δ-records. The likelihood of lower δ-records can be easily obtained by adapting the ideas from the theory of standard (upper) δ-records; see Reference [10] for details. Additionally, Bayesian and MLE methods, for parameter estimation and prediction of future records in the geometric distribution, were presented in Reference [15]. A conclusion to be drawn from results in these papers is that inference methods based on δ-records outperform their corresponding record-only versions.

The main objective of the present paper is to investigate properties of inferences based on δ-records for the Weibull model, such as strong consistency of the MLE of parameters, maximum likelihood prediction (MLP) of records and Bayesian estimation and prediction of records. The reason for focusing on the Weibull distribution is twofold. First, the model and recently introduced variants are widely used in applications; see References [16,17]. Second, inference for its parameters using records has drawn significant attention in recent years; see References [4,5,18,19,20,21,22,23,24].

In our analysis we consider two cases: known and unknown shape parameter, while the scale parameter is always assumed unknown. According to References [25,26] and [27] (Section 14.2), in many practical problems it is not unreasonable to assume that the shape parameter is known or, at least, that it is one among a small number of values. We do not analyze the situation of known scale parameter since, according to the literature, it is not considered natural and very few papers deal with it; see Section 14.2.2 in Reference [27]. It is important to warn the reader that, while statistical inference based on records from a Weibull distribution with known shape parameter can be reduced to inference from the exponential distribution (via a power transform), this is not the case for δ-records; see Remark 2.

We assess the impact of δ-records on parameter estimation and prediction of new records, by means of Monte Carlo simulation and the analysis of real data. We perform comparative analyses of estimators and predictors based on δ-records, in a variety of settings. In particular, we show that the performance of estimators and predictors improves when δ-record data are used instead of record data only. Regarding real data, we analyze cumulative rainfall information recorded at the Castellote weather station in Spain; see Reference [10].

The paper is organized as follows: Section 2 is devoted to preliminary definitions and notation. MLE and MLP of future records are developed in Section 3; we show existence of estimators and predictors and prove the strong consistency of the MLE of the scale parameter, if the shape parameter is known. Results of simulations are presented in Section 3.4, showing that δ-records bring about a noticeable improvement in estimation and prediction. The analysis of real data is presented in Section 3.5. Section 4 is devoted to Bayesian inference. We compute Bayes estimators and highest posterior density (HPD) intervals of parameters, using two different priors in the case of both parameters unknown. Then we consider Bayesian prediction of future records. Results from simulations and real data are shown in Section 4.5 and Section 4.6. In Section 5 we present our conclusions.

We end this introduction with some comments about the novelty of the results presented in this paper. We remark first that, while the expressions for the likelihood of the sample of δ-records and for the MLE of the parameters were first obtained in Reference [10], the remaining results are new, in the context of continuous distributions. These novel results include strong consistency of the MLE of the scale parameter (under known shape parameter), the development of a frequentist strategy for the prediction of future records and the proof of existence of predictors. In the Bayesian framework we develop the estimation of parameters, under a variety of choices of prior distributions, complemented with a consistency result and, finally, we propose and analyze a method for predicting new records.

2. Preliminaries

Let $X_n$, $n \ge 1$, be a sequence of independent and identically distributed (iid) random variables, with common distribution function $F_\theta$ and density $f_\theta$, where $\theta$ is a parameter. Let $M_n = \max\{X_1, \ldots, X_n\}$, $n \ge 1$, be the sequence of partial maxima.

Definition 1. Let $X_1$ be a record by convention and, for $n \ge 2$, $X_n$ is a (upper) record if $X_n > M_{n-1}$. The indexes $T_m$, $m \ge 1$, corresponding to record observations, are called record times. That is, $T_1 = 1$ and, for $m \ge 2$, $T_m = \min\{n > T_{m-1} : X_n > X_{T_{m-1}}\}$. Records (or record-values) are defined by $R_m = X_{T_m}$.

Definition 2. Let δ be a fixed, real parameter. Let $X_1$ be a δ-record by convention and, for $n \ge 2$, $X_n$ is a δ-record if $X_n > M_{n-1} + \delta$.

The sequences of δ-record times and δ-records are defined analogously as for records. Note that if $\delta = 0$, δ-records are just records. If $\delta > 0$, δ-records are a subsequence of records and, on the contrary, if $\delta < 0$, records are a subsequence of δ-records. So, the only statistically relevant situation is $\delta < 0$, since δ-records contain all records plus the so-called near-records (in the sense of Reference [7]). Given $\delta < 0$, $X_n$ is a δ-near-record (or simply near-record) if $X_n \in (M_{n-1} + \delta, M_{n-1}]$. In other words, near-records are close to being records but are not records. It is clear also that near-records are not symmetrically clustered around records. In the rest of the paper we assume $\delta < 0$.

Definition 3.

(i) A near-record $X_k$ is said to be associated to the m-th record $R_m$, if $T_m < k < T_{m+1}$.

(ii) The number of near-records associated to $R_m$ is denoted by $S_m$.

(iii) If $S_m > 0$, the vector of near-records associated to $R_m$ is denoted by $\mathbf{Y}_m = (Y_{m1}, \ldots, Y_{mS_m})$.

(iv) The sample is defined by the vector $(\mathbf{R}, \mathbf{S}, \mathbf{Y})$, where $\mathbf{R} = (R_1, \ldots, R_n)$, $\mathbf{S} = (S_1, \ldots, S_n)$ and $\mathbf{Y} = (\mathbf{Y}_1, \ldots, \mathbf{Y}_n)$.

When referring to the sample as a random object we use bold upper-case letters; otherwise we use the corresponding lower-case letters. Note that the sample contains a fixed number $n$ of records ($\mathbf{R}$), plus the counts ($\mathbf{S}$) and respective values ($\mathbf{Y}$) of all near-records associated to each record. So, the sample has random length, depending on the (random) numbers $S_i$ of near-records associated to each record $R_i$. In turn, the distribution of $S_i$ depends in a non-trivial way on δ and is also affected by the tail behavior of the underlying distribution. For example, the number of near-records obviously increases with the absolute value of δ. On the other hand, if the distribution is heavy-tailed, there will tend to be fewer near-records. Laws of large numbers and central limit theorems, for the number of near-records, can be found in References [28,29]. The normalizing sequences of the asymptotic results in Example 4 of Reference [10] may serve as proxies for the expected values of the number of near-records, for the Weibull distribution.
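The construction of the sample can be made concrete with a short sketch. The code below is an illustration under stated assumptions, not the authors' implementation: it scans a stream of observations, keeps the first `n_records` records together with the near-records associated to each of them (values in the interval from the current record plus δ, exclusive, to the current record, inclusive) and stops when the next record appears, so that all near-records of the last record are collected. The function name and the list-based representation of the records, counts and near-record values are hypothetical.

```python
def delta_record_sample(xs, delta, n_records):
    """Return (R, S, Y): records, near-record counts and near-record values.

    xs        : iterable of observations, in order of arrival
    delta     : non-positive real number (delta = 0 gives records only)
    n_records : number of records to collect
    """
    if delta > 0:
        raise ValueError("this sketch assumes delta <= 0")
    R, S, Y = [], [], []
    for x in xs:
        if not R or x > R[-1]:
            # x is a record (the first observation is a record by convention)
            if len(R) == n_records:
                # the (n+1)-th record closes the sample and is not stored
                return R, S, Y
            R.append(x)
            S.append(0)
            Y.append([])
        elif x > R[-1] + delta:
            # x is a near-record associated to the current record
            S[-1] += 1
            Y[-1].append(x)
        # otherwise x is discarded
    raise ValueError("stream ended before the sample was complete")


if __name__ == "__main__":
    stream = [3.0, 2.5, 1.0, 4.0, 3.8, 3.9, 2.0, 5.0]
    print(delta_record_sample(stream, delta=-1.0, n_records=2))
```

In the usage example the value 2.5 is a near-record of the first record 3.0, the values 3.8 and 3.9 are near-records of the second record 4.0, and the observations 1.0 and 2.0 are discarded.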

2.1. Likelihood of δ-Record Observations

Proposition 1. Let . The likelihood function of sample is given bywhere , and , for , . Proof. See Proposition 1 in Reference [

10]. ☐

Remark 1. Observe that in (

1)

the sample is assumed to contain all near-records associated to the last record . To ensure that all near-records are present in the sample, the value of must be observed. As commented in Section 4.2.1 of Reference [10], the data may not contain all near-records associated to , since is not observed. So, it is not known if some near-records associated to are missing and, in such situation, the likelihood has to be modified accordingly. It is easy to see that this amounts to substituting for in (

1)

. Then the modified likelihood , of a possibly incomplete sample, is In the rest of the paper, except in the analysis of real data, we work with L (thereby assuming that all near-records associated to have been collected). Results for L can be adapted to , with only minor modifications.

2.2. Density of Future Records

The prediction of a future record , , is based on the conditional density, given , as presented below.

Proposition 2. The density of the m-th record conditional on , is given byfor and where . Proof. Conditionally on

,

is independent of

(see Proposition 1 in Reference [

10]). Therefore, the density of

given

is the same as the density of

given

, which proves the result. ☐
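For reference, the classical form of the conditional density of a future record, given the last observed record, can be written as follows. This is the standard result for records from a continuous distribution, stated here as a sketch; the symbol $\Lambda$ for the cumulative hazard is an assumption of this illustration and need not coincide with the notation of Proposition 2.

```latex
f_{R_m \mid R_n}(x \mid r_n)
  = \frac{\bigl[\Lambda(x) - \Lambda(r_n)\bigr]^{m-n-1}}{(m-n-1)!}\,
    \frac{f(x)}{1 - F(r_n)},
  \qquad x > r_n,\ m > n,
\qquad\text{where}\qquad
\Lambda(x) = -\log\bigl(1 - F(x)\bigr).
```

The formula follows from the fact that $\Lambda(R_m) - \Lambda(R_n)$, given $R_n$, is a sum of $m-n$ iid standard exponential random variables. For a Weibull distribution written in scale-shape form, with scale $\lambda$ and shape $\beta$, one has $\Lambda(x) = (x/\lambda)^\beta$.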

2.3. Weibull Distribution

We present here the likelihoods corresponding to the Weibull distribution, with two parametrizations: P1, for classical (frequentist) inference, and P2, for Bayesian analysis. We find it convenient to work with these two parametrizations mainly because they are found in the literature, associated respectively with classical and Bayesian inference. See, for example, the Weibull Distribution Handbook [27], where the author argues that P2 separates parameters and has the advantage over P1 of simplifying the algebra in Bayesian manipulations.

Definition 4. Let the parametrizations of the Weibull distribution be defined respectively by

- P1 :

,

- P2 :

,

for and .
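As an illustration of how two Weibull parametrizations of this kind relate to each other, the sketch below assumes that P1 is the usual scale-shape form, $F(x) = 1 - \exp\{-(x/\lambda)^\beta\}$, and that P2 is the rate-type form, $F(x) = 1 - \exp\{-\alpha x^\beta\}$, common in Bayesian treatments. Both forms, and in particular the symbol `alpha`, are assumptions of this example. Under these assumptions the two forms are linked by $\alpha = \lambda^{-\beta}$.

```python
import math


def weibull_cdf_scale(x, lam, beta):
    """CDF in the (assumed) scale-shape form: 1 - exp(-(x / lam) ** beta)."""
    return 1.0 - math.exp(-((x / lam) ** beta)) if x > 0 else 0.0


def weibull_cdf_rate(x, alpha, beta):
    """CDF in the (assumed) rate-type form: 1 - exp(-alpha * x ** beta)."""
    return 1.0 - math.exp(-alpha * x ** beta) if x > 0 else 0.0


def rate_from_scale(lam, beta):
    """Map the scale lam of the first form to the rate alpha of the second."""
    return lam ** (-beta)


def scale_from_rate(alpha, beta):
    """Inverse map: the scale implied by the rate-type form."""
    return alpha ** (-1.0 / beta)


if __name__ == "__main__":
    lam, beta = 2.0, 1.5
    alpha = rate_from_scale(lam, beta)
    print(weibull_cdf_scale(1.3, lam, beta), weibull_cdf_rate(1.3, alpha, beta))
```

Both calls in the usage example describe the same distribution, so the two printed values agree up to rounding.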

Parameter

in

is the so-called scale parameter, while

is known as shape parameter. In

the scale parameter is given by

. From (

1) we obtain the corresponding likelihoods

where

and

J are defined by

For possibly incomplete data, using (

2), the corresponding likelihoods are (

4) and (

5), with

substituted by

.

3. Maximum Likelihood Analysis

This section is devoted to the study of the MLE of the parameters and the MLP of future records. We begin with the MLE of the parameters $\lambda$ and $\beta$, related to parametrization P1, and, as commented before, we consider two cases: $\beta$ known and $\beta$ unknown. Throughout this section $\lambda$ is assumed unknown.

Remark 2. Notice that if β is known, some inferences for λ can be reduced to the exponential distribution since, if X is Weibull distributed, then $X^\beta$ is exponentially distributed. However, this is not so for δ-records because, for $\delta \neq 0$, the β-th powers of δ-records sampled from X are not distributed as δ-records sampled from $X^\beta$. The reason is that δ-record extraction and the power transform of the data are not symmetrical actions, in the sense that they do not commute. Therefore, the consistency result of Theorem 1, for $\beta \neq 1$, does not follow from the corresponding result for the exponential model ($\beta = 1$).
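The lack of commutativity described in Remark 2 can be checked on a small deterministic example. The sketch below uses illustrative data and a hypothetical helper, `delta_records`. It extracts δ-records before and after squaring the observations (that is, with β = 2) and shows that the two results differ for δ < 0, while they coincide for ordinary records (δ = 0).

```python
def delta_records(xs, delta):
    """Return the delta-records of xs: x_n with x_n > max(x_1..x_{n-1}) + delta."""
    out, current_max = [], None
    for x in xs:
        if current_max is None or x > current_max + delta:
            out.append(x)
        if current_max is None or x > current_max:
            current_max = x
    return out


xs = [1.0, 0.6, 2.0, 1.7, 3.0]
beta, delta = 2.0, -0.5

# Power transform first, then delta-record extraction.
powered_then_extracted = delta_records([x ** beta for x in xs], delta)
# Delta-record extraction first, then power transform.
extracted_then_powered = [x ** beta for x in delta_records(xs, delta)]

if __name__ == "__main__":
    print(powered_then_extracted)   # three values: only the records survive
    print(extracted_then_powered)   # five values: near-records are kept
```

In this example the near-records 0.6 and 1.7 of the original data are no longer near-records after squaring, because the gaps to the current maximum are stretched by the transform while δ stays fixed.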

The existence of the MLE of is established in Proposition 3. For ease of notation, we omit their dependence on . The set of solutions of a maximization problem is denoted argmax.

3.1. MLE of Parameters

Proposition 3. (i) For all , has a unique element(ii) Let . Then and , for any . Proof. (i) (

7) is obtained by solving

for

.

(ii) It can be shown that maximizing

over

is equivalent to maximizing

and that

, where

and

. See Reference [

10] for details. ☐

Remark 3. Note that depends on all δ-records (records and near-records) in the sample . If, by chance, no near-records are observed (, for ), thenwhich is in contrast with the case , where the estimator depends only on the last record . The numerical computation of the MLE is straightforward. If

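β is known, the explicit formula for the MLE of λ is given in (7). If β is unknown, the numerical maximization of the likelihood over $\beta > 0$, with λ replaced by its explicit maximizer, must be carried out to find $\hat\beta$, as explained in the proof of Proposition 3. Then $\hat\lambda$, evaluated at $\hat\beta$, and $\hat\beta$ are the MLE of λ and β, respectively.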
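To illustrate the known-β case, the sketch below computes a closed-form maximizer of the scale parameter. It is not a transcription of Equation (7). It is derived here under explicit assumptions: the scale-shape Weibull form with survival function $\exp\{-(x/\lambda)^\beta\}$ for $x > 0$, a complete sample (all near-records of the last record collected), and a likelihood proportional to $\bar F(r_n) \prod_{i=1}^{n} f(r_i) \prod_{j} f(y_{ij}) / \bar F(r_i + \delta)^{s_i + 1}$, which follows from the usual argument of summing over the geometric number of discarded observations. The function name and argument layout are hypothetical, and the result should be checked against the formulas in Reference [10] before any serious use.

```python
def mle_scale_known_shape(R, S, Y, delta, beta):
    """Closed-form maximizer of the assumed delta-record likelihood in lam.

    R : list of records r_1 < ... < r_n
    S : list of near-record counts s_1, ..., s_n
    Y : list of lists with the near-record values of each record
    """
    n_obs = len(R) + sum(S)  # total number of delta-records in the sample
    total = sum(r ** beta for r in R)
    total += sum(y ** beta for ys in Y for y in ys)
    # Contribution of the discarded observations (those below r_i + delta).
    total -= sum((s + 1) * max(r + delta, 0.0) ** beta for r, s in zip(R, S))
    # Contribution of the unobserved record that closes the sample.
    total += R[-1] ** beta
    return (total / n_obs) ** (1.0 / beta)


if __name__ == "__main__":
    R, S, Y = [3.0, 4.0], [1, 2], [[2.5], [3.8, 3.9]]
    print(mle_scale_known_shape(R, S, Y, delta=-1.0, beta=1.0))
```

As a sanity check, for records only (δ = 0 and no near-records) the expression reduces to $r_n / n^{1/\beta}$, which depends on the sample only through the last record, in agreement with the observation made in Remark 3.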

3.2. Strong Consistency

We state below the strong consistency of $\hat\lambda$, the MLE of λ when β is known. The proof of this result is split into several technical lemmas, presented in Section 6.1. Strong (almost sure) convergence as $n \to \infty$ is denoted $\xrightarrow{a.s.}$.

Theorem 1. , for all .

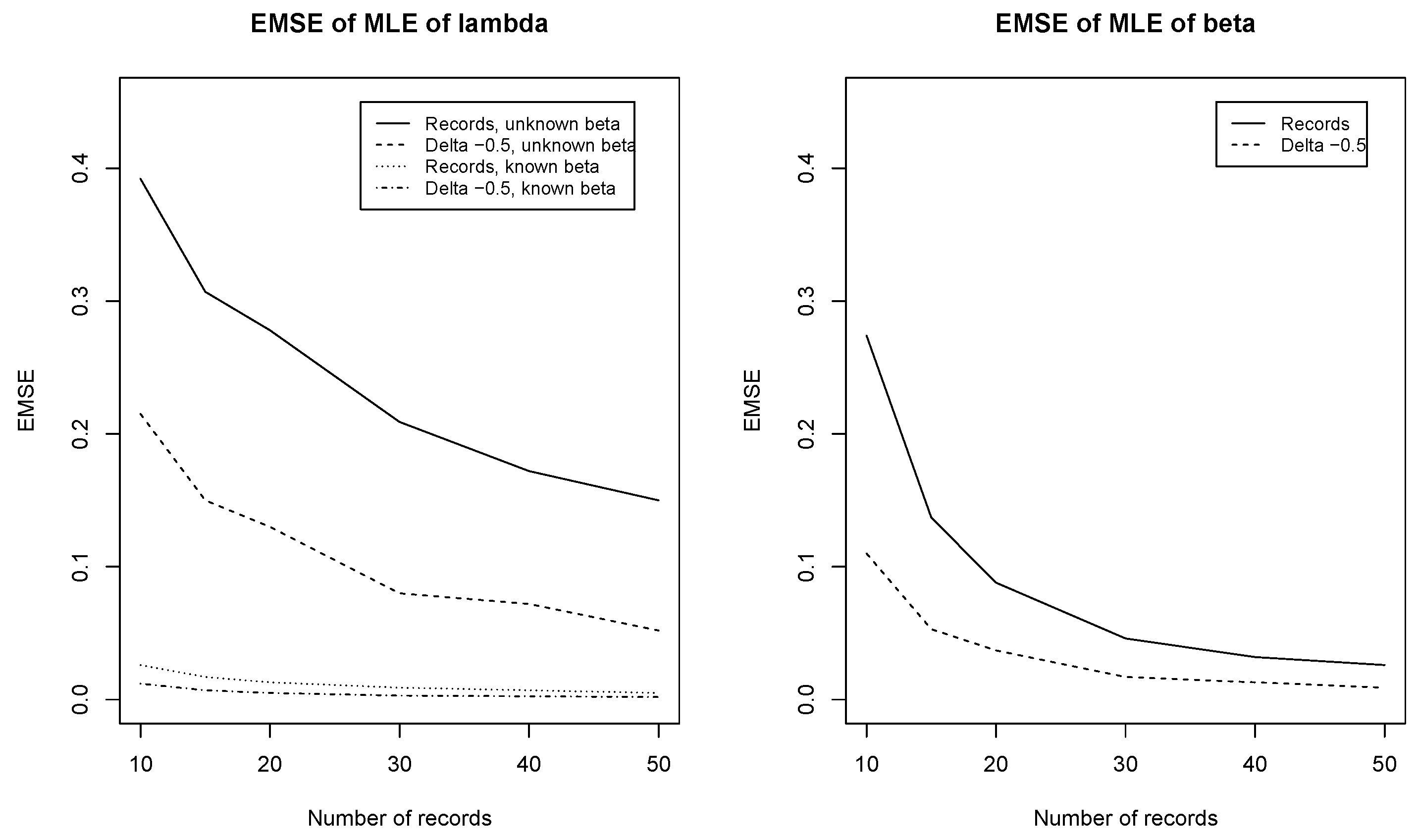

Remark 4. If β is unknown, the question of consistency of the MLE remains open. Nevertheless, we have run Monte Carlo simulations which suggest that consistency also holds in this case. In the left panel of Figure 1, values of the estimated mean square error (EMSE) of $\hat\lambda$ are plotted versus n. It can be seen that $\hat\lambda$ appears to be consistent, for unknown β (in mean square sense). We also observe that convergence in the case of known β is much faster (lower curves). Moreover, in both situations δ-records yield an EMSE smaller than records. On the right panel of Figure 1 we can see a steep descent of the EMSE of $\hat\beta$, suggesting consistency of this estimator as well.

3.3. Maximum Likelihood Prediction of Future Records

The MLP of future records, as defined in Reference [30], consists in maximizing the so-called predictive likelihood function. The following definitions follow that idea, adapted to the sample of δ-records.

Definition 5. Let the predictive likelihood of and θ be defined by . In the case of the Weibull distribution, using parametrization , we have, for ,and so, Definition 6. The MLP of , is defined by , where . In the case of the Weibull distribution, using parametrization , we have

(i) , where , if β is known, and

(ii) , where , if β is unknown.

Remark 5. The estimators in Definition 6 are the so-called predictive maximum likelihood estimators of , according to Reference [7]. Their properties are not investigated in this paper. The existence of MLP of future records in the Weibull distribution is established in Proposition 4 and the corresponding proof is presented in

Section 6.2.

Proposition 4.

(i) For all , there is a unique pair , given bywhere , with(ii) Let . Then and , for any . Remark 6. According to Proposition 4, if β is known, there is an explicit formula for the MLP of , equal to in (

9)

. On the other hand, if β is unknown, and are plugged in and a maximization problem in one real variable must be solved (numerically), namely . This is straightforward since, as shown in Section 6.2, there is a compact interval containing a solution . Finally, it suffices to replace β by in (

9)

, to find the MLP. Observe, also in Proposition 4, that, if , then (because ), and, consequently, . Hence, regardless of β being known or unknown, . See Section 6.2 for details. 3.4. Simulation Study

To assess the behavior of the MLE and MLP, we carry out Monte Carlo simulations. For several values of and for , we generate samples of records and their near-records.

The MLE of the Weibull parameters using

-records were first studied in Section 4.1.1 of Reference [

10]. A comparison of the accuracy of estimators, for

,

and

can be found in that paper. Throughout this paper we work in a different scenario, namely

, with several values of

and

.

The estimated mean square errors (EMSE) of the MLE of

are computed as averages of the squared deviations of the MLE from the true values of the parameters. Results in

Table 1 show that, for

known, the EMSE of

is much lower for

than for

(only records). For

unknown, the observed improvement is greater in

than in

. Regarding bias, it is known that the MLE of

and

, from record data, are biased; see Reference [

23]. In the case of

-records our simulations show a small positive bias in the estimations of both parameters.

For MLP of future records we proceed as above in terms of the number of simulated samples, the number

n of records and the values of

and

. The record to be predicted is

(that is

), which is the first interesting case for

, since

, as commented in Remark 6. For each run we simulate

and compute the EMSE, as the average of squared deviations of

from the simulated value of

. Results show that predictions based on records or on

-records tend to underestimate

. For example, given

and

, the mean of

is 1.75; for

, the mean of

is 1.39 while, for

, the mean of

is 1.47. The values of the EMSE are displayed in

Table 2.

Although, in absolute terms, the advantage of δ-records appears to be greater in estimation than in prediction, it should be borne in mind that there exists a positive lower bound on the EMSE of any predictor of a future record based on past information, even if the parameters were known. This is because the optimal mean square predictor, based on past information, is the conditional expectation with respect to the past. Indeed, let

be the

-algebra generated by

and

a predictor based on the available information before record

(that is,

-measurable), then

In particular, for the Weibull model, with

,

is distributed as sums of

n iid exponential random variables, with mean

, hence

For example, if

,

and

, the bound in (

11) is equal to 0.5. Therefore, when comparing the EMSE 0.884 (

) versus 0.793 (

), in the penultimate line of

Table 2, the bound should be taken into account. A fair estimate of the gain is obtained by subtracting 0.5 from each quantity and computing $(0.884 - 0.793)/(0.884 - 0.5)$, which yields a reduction of approximately 24%, instead of just 10%, without such correction. An overall conclusion from Table 1 and Table 2 is that estimators and predictors based on δ-records outperform those based on records only. This is coherent with the fact that there is more information in δ-records than in records and also with results in Reference [15], where this property was also observed in the case of the geometric distribution.
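The correction for the lower bound amounts to a one-line computation, sketched below with the EMSE values 0.884 and 0.793 and the bound 0.5 quoted in the text. The function names are hypothetical. The second helper uses the fact that, for the exponential model (β = 1) with mean λ, the increments between consecutive records are iid exponential with mean λ, so the conditional variance of the record k steps ahead is $k\lambda^2$. The values λ = 0.5 and k = 2 in the usage example are illustrative assumptions that happen to reproduce a bound of 0.5.

```python
def relative_gain(emse_records, emse_delta, lower_bound=0.0):
    """Relative EMSE reduction of delta-records over records.

    The unavoidable part of the error (lower_bound) is subtracted from the
    reference EMSE before computing the ratio.
    """
    return (emse_records - emse_delta) / (emse_records - lower_bound)


def exponential_record_variance(lam, k):
    """Variance of the record k steps ahead, given the current record,
    for iid exponential observations with mean lam."""
    return k * lam ** 2


if __name__ == "__main__":
    print(relative_gain(0.884, 0.793))                   # without correction
    print(relative_gain(0.884, 0.793, lower_bound=0.5))  # with correction
    print(exponential_record_variance(0.5, 2))           # illustrative bound
```

The uncorrected ratio is close to 0.10, while the corrected ratio is close to 0.24, which is the comparison made in the paragraph above.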

3.5. Real Data

We compute estimations and predictions for the rainfall data mentioned in the introduction. The data consists of cumulative rainfall (measured in millimeters), from September to November, recorded at the Castellote weather station in Spain, between 1927 and 2000. The complete sample of 74 values is well fitted by a Weibull distribution, as can be seen in Table 3 and Figure 1 in Reference [

10]. We have also run the Ljung-Box test to check the existence of autocorrelation, yielding a

p-value of

. There are

records in the sample and, for

,

and

, the number of

-records is 6, 9 and 18, respectively. For completeness we show the

-record data in

Table 3.

Since in real data applications, unlike in simulations, we do not know the values of the parameters, it is not clear how to assess the impact of δ-records. This is also the case in predicting records, because no new record has been observed between the years 2000 and 2018. So, in order to measure the improvement of estimations and predictions due to δ-records, we compare our results with those obtained from the complete sample of 74 values, taken as benchmarks. Note that the complete sample can be seen as the set of δ-records with $\delta = -\infty$.

The MLE of λ and β were reported in Reference [10], showing that δ-records give estimates closer to the benchmark than the estimates from records only. For completeness we present those results here, in the second and third columns of Table 4.

Maximum likelihood prediction was not considered in Reference [10]. The results in Table 4 show that the prediction with δ-records is closer to the one obtained from the complete sample, even though the gain is not as clear as in the case of estimation. Recall, however, that the values predicted for future records using the whole sample are not the real values (since these have not been observed yet). Conclusions on the advantage of using δ-records over records must be drawn from the Monte Carlo simulations in Section 3.4, rather than from this particular dataset. The reader interested in applications to real data can also see Section 4.2.2 in Reference [10], where the theory is adapted to lower records and lower δ-records. This is readily done by taking advantage of the symmetry between the definitions of upper and lower records.

4. Bayesian Analysis

We develop the estimation of parameters and prediction of future records in the Bayesian framework. We use parametrization P2 because it is frequently found in the literature on Bayesian analysis of the Weibull model; see Section 14.2 of Reference [27]. As in Section 3, we analyze the cases of β known and unknown, while the remaining parameter of P2 is assumed unknown throughout this section. In all expressions below,

are positive. Let the Gamma

density, with parameters

, be denoted

.

If

is known, we assume that

has prior

, which is easily checked to be conjugate. In the case of

unknown, there seems to be no tractable conjugate family for

and, among several alternatives found in the literature, we decided to follow Kundu [

31] and Soland [

32]. In Kundu’s approach

and

have independent gamma distributions; in Soland’s approach,

is discrete, taking values in a finite set and

conditional on

is gamma distributed. Other options, not considered here, are found in References [

33,

34], where

, as well as

conditional on

, have gamma distributions. Definitions of Kundu’s and Soland’s priors are given below.

Definition 7. (i) Let Kundu’s prior be defined by and , where are hyperparameters and do not depend on β.

(ii) Let Soland’s prior be defined by and , where are hyperparameters and are positive known values, for , with .

For simplicity we write hereafter and so forth, instead of and so forth. In what follows we determine the posterior distributions to be used in inferences, namely if is known and , if is unknown. Integrals with respect to or are understood on and so, the limits of the integrals are omitted.

4.1. Posterior Distributions

Suppose first that

is known and recall that

. From (

5) we have

and so,

.

If

is unknown, using Kundu’s prior we obtain the posteriors

with common normalizing constant (numerically computed), given by

For Soland’s prior we have, from (

5) and Definition 7, the posteriors

with common normalizing constant given by

4.2. Estimation of Hyperparameters in Soland’s Prior

A practical challenge with Soland’s prior is the choice of the hyperparameters

. We propose to estimate them, using the empirical-Bayes-type method described below, inspired by Reference [

4].

For

and

, consider the expectations

Note that the value

in (

17) follows from

being distributed as

, where

are iid exponential, with parameter one, so

; see Reference [

2]. Note also that (

16) depends on

, the actual

j-th record value of the sample, while (

17) depends on the random variable

. Then

can be estimated by choosing, if possible (as described in Lemma 1 below), two suitable records

and solving for

in the equations

Lemma 1. If there exist records , such that , then (

18)

has a solution. Proof. Let

and

, then (

18) is equivalent to

Solving for

y in (

19) and equating we have

. Furthermore, observe that

and that

, as

. Hence, if the derivatives satisfy

or, equivalently, if

, there exists

such that

. Finally,

x is replaced by

in either expression of (

19) to solve for

y. ☐

4.3. Bayes Estimators of Parameters

Bayes estimators are defined under quadratic loss. In the case of known

, the estimator of

is denoted

and follows at once from

. So,

Credible intervals for

are readily obtained from

, as well. Additionally, it may be of interest the next result of consistency for

, which follows from Theorem 1. See Reference [

35] for a discussion on Bayesian consistency.

Corollary 1. , for all .

Proof. From the definitions of

, we know that

. Using this and (

20), we can write

in terms of

(the MLE of

in (

7)) as

. Last, Theorem 1 yields

☐

If

is unknown and Kundu’s prior is used, we (numerically) compute the Bayes estimators of

, denoted respectively

, by taking expectations of the posterior densities (

12). We obtain

where

is defined in (

13).

In the case of Soland’s prior, the Bayes estimators are also readily computed from (

14), as

where

is defined in (

15). It should be noted that in simulations and in the analysis of real data, using Soland’s prior, we prefer to estimate

using

, in order to stay within the set of possible

values.

4.4. Prediction of Future Records

The Bayesian prediction of future records is based either on , if is known, or on , if is unknown. In all densities below we assume that parameters are positive and .

From (

3) and using parametrization

, we have

First, if

is known, we use the posterior

to compute

Then the Bayes predictor of

, given by

, is numerically computed as

If

is unknown we use

(

, for Kundu and

, for Soland) to compute

and the Bayes predictor is given by

. Bayesian prediction intervals are also readily obtained.

In the case of Kundu’s prior, from (

12) and (

24) we have

In the case of Soland’s prior, from (

14) and (

24), we get

4.5. Simulation Study

We assess here the performance of estimators, credible intervals and predictors. To that end, we simulate samples of -records, with records. For each sample we compute estimators or predictors for records only () and for -records ().

4.5.1. Known β

In

Table 5 we show results for the Bayes estimator

, defined in (

20) and 95% HPD intervals for

. For several values of

and

, we simulate

independent observations of

, from the Gamma

distribution. Then, for each

we simulate a random sample of 5 records and their associated near-records and compute

. The EMSE is computed as average of squared differences

, over the

simulation runs.

We report in Table 5 the mean coverage and average length of the 95% HPD intervals. Regarding the coverage, as we sample the parameter from its prior distribution, approximately 95% of the intervals (both for records and δ-records) contain the value of the parameter used in the simulation. Since this happens for all the HPD intervals we construct in this section, we do not include their coverage in the remaining tables. In all cases we observe that the Bayes estimator and the HPD intervals based on δ-records compare quite favorably with their counterparts based only on records ($\delta = 0$), in terms of smaller EMSE and interval length.

Additionally, we analyze the frequentist coverage for particular values of the parameter. In order to do so, we take

and fix a value of

in a grid from 0.2 to 2; we then simulate 200 samples of records (and

-records), compute the corresponding HPDs for

, using a Gamma

prior and check if they contain the value of

. We repeat this for each value of

.

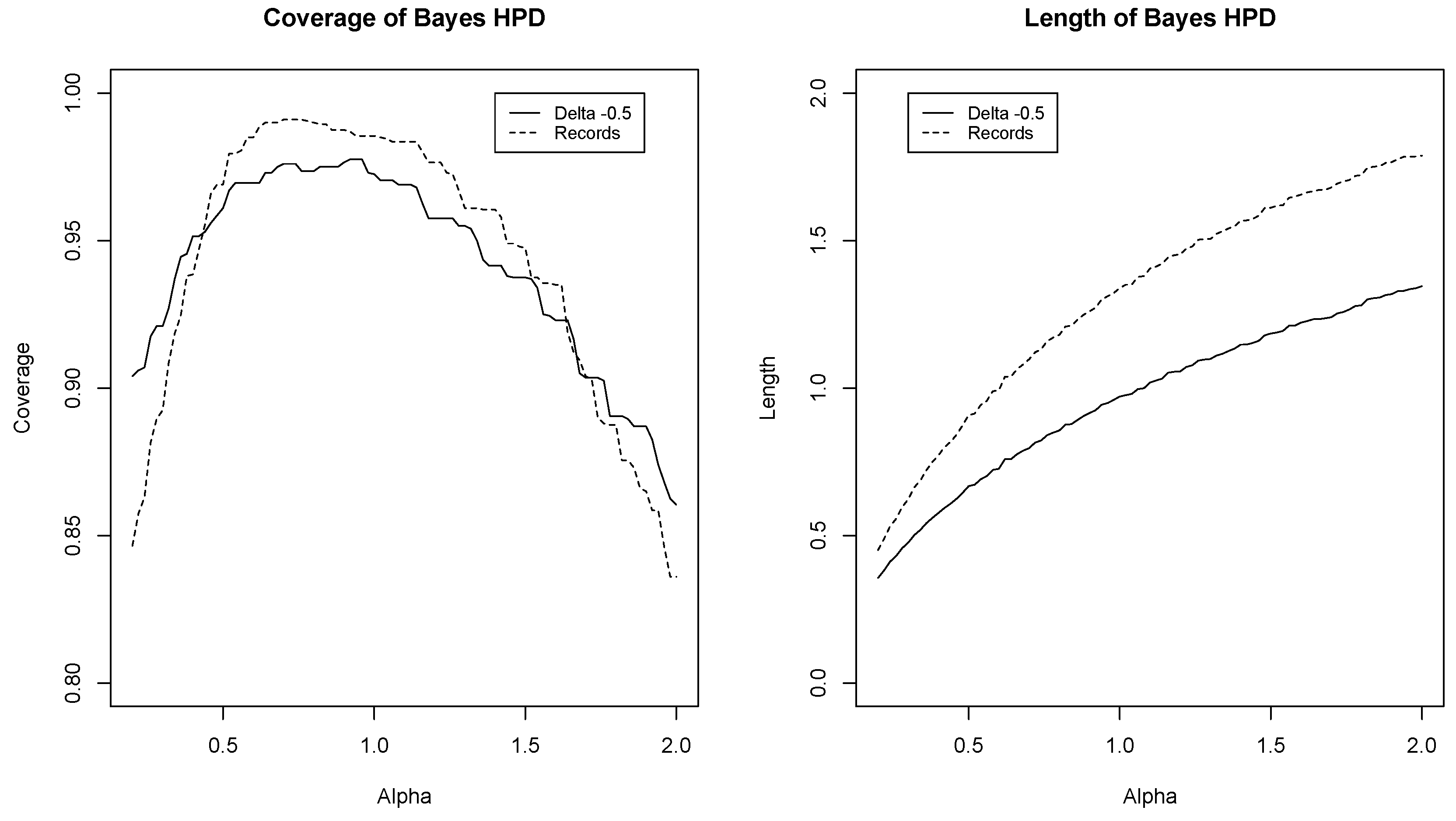

Figure 2 (left) shows the coverage for

. We observe that

-records provide intervals with frequentist coverage closer to

than records. The right plot in

Figure 2 shows the average length of the intervals as a function of

.

In the assessment of Bayes predictors of future records (known ), we consider different gamma priors for and simulate values of . For each simulated we generate a sample of -records and the values of and . Then we compute the EMSE of and , as the average of the squared deviations and , over the simulation runs. We also compute the lengths of the HPD intervals as the average of the lengths of the estimated intervals. The coverage of the intervals, defined as the proportion of runs where the simulated record is in the interval, is included as well. As in the case of estimation, since we sample from the prior distribution in the simulations, approximately 95% of the intervals contain the corresponding record. So, we do not include the coverage in the rest of the tables for prediction.

Results are displayed in Table 6, where it is apparent that predictors are more accurate with δ-records. While Table 5 shows a significant improvement in the estimation of the parameter with the use of δ-records, this improvement is less visible when forecasting future records. Nevertheless, as in the case of MLP, a fair comparison between the EMSE of predictions should take into account the lower bounds commented there. For instance, if

and

is fixed, the bound is

. Therefore, when

has a prior Gamma

, with

, the lower bound can be computed as

In the particular case , , , the bound is . Then the gain with the use of -records, once subtracted the lower bound, is . That is, while the absolute gain of 0.2 may not seem relevant, relative to 6.292, it is so when the lower bound on the EMSE is taken into account.

4.5.2. Unknown β

We begin with Kundu’s prior

, described in Definition 7. For fixed

we simulate

pairs

from

and, for each

we generate a sample of 5 records and their near-records. Once the sample is observed, we numerically compute

in (

13), to obtain an approximation of

. We then compute the Bayes estimators

in (

21) and the HPD intervals from

. The EMSE are obtained as averages of the squared deviations

and

, over the

simulation runs. As in the case of known β, we observe in Table 7 that δ-records have a positive effect, both in the accuracy of the estimations and in the length of the HPD intervals.

For Soland’s prior

we choose the values

for

, with different prior probabilities and two different gamma distributions for

, given

. The HPD intervals for

are not computed since

is discrete and takes only five different values. Results are shown in

Table 8.

Simulation results of Bayes predictors of future records (β unknown), using Kundu’s and Soland’s priors, are presented in Table 9 and Table 10, respectively. As before, we proceed by simulating first the parameter values from the prior distributions, and then the sample of δ-records and the values of future records. There is, however, a practical difficulty when computing the EMSE of predictors: a few huge records, which actually appear in simulations, completely dominate the EMSE because it is just the average of squared deviations. Suppose, for example, that we use Kundu’s prior and that the values

have been obtained from

. Then, the corresponding Weibull distribution has expectation

, the value of

will likely be very large and so, a huge value of

will be observed. These “outliers” make the EMSE, computed over all the simulation runs, a useless measure of performance. In order to avoid this problem, we compute the

trimmed mean of

, over the simulations. That is, we eliminate the

smallest and largest values of

and compute the average with the remaining ones. The results in Table 9 and Table 10 show that δ-records have an impact on the prediction of future records, as observed in the case of known β.
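The trimming step described above can be sketched as follows. This is a minimal illustration, assuming symmetric trimming of the same proportion in each tail. The function name, the trimming proportion and the sample values are hypothetical and are not those used in the simulations.

```python
def trimmed_mean(values, proportion):
    """Mean of values after removing the given proportion from each tail."""
    if not 0.0 <= proportion < 0.5:
        raise ValueError("proportion must lie in [0, 0.5)")
    ordered = sorted(values)
    k = int(proportion * len(ordered))  # number of values cut in each tail
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


if __name__ == "__main__":
    # Squared prediction errors with one huge outlier (illustrative values).
    squared_errors = [0.1, 0.2, 0.3, 0.4, 1000.0, 0.0, 0.25, 0.35, 0.15, 0.05]
    print(sum(squared_errors) / len(squared_errors))  # dominated by the outlier
    print(trimmed_mean(squared_errors, 0.1))          # robust summary
```

In the usage example the plain average is driven almost entirely by the single value 1000.0, whereas the trimmed mean reflects the typical size of the squared errors.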

4.6. Real Data

Given that we do not have actual prior knowledge of the parameters, we decided to consider values around 2 for and around for , having only illustrative meaning. In Kundu’s prior , we take . For Soland’s prior we consider uniformly distributed on and in order to determine the values of , we apply the method described before Lemma 1, if possible. Recall that in order to apply the method on , there must exist a pair of records such that . For and , there exists no such pair of records while, for and , the method can be applied and yields the following hyperparameter pairs (after rounding up to the nearest integer): and for and respectively. For and , where the method fails, we pick the value of the closest , that is, . For numerical convenience, we analyze rainfall data using decimeters instead of millimeters, so that the values of and are now divided by 100.

The results are shown in Table 11 and Table 12. In both tables we observe that the estimates of the parameters using δ-records, with

, outperform those based on records only, because they are closer to results using the complete sample. As in maximum likelihood prediction, the gain in prediction of future records using

δ-records versus records is not clear; while there is some improvement using Soland’s prior, this is not the case for Kundu’s prior. This may be due to the surprising closeness of Kundu’s prediction using records to the prediction using the whole sample, which we believe happens by chance in this particular instance.