Weakly Supervised and Semi-Supervised Semantic Segmentation for Optic Disc of Fundus Image

Abstract

1. Introduction

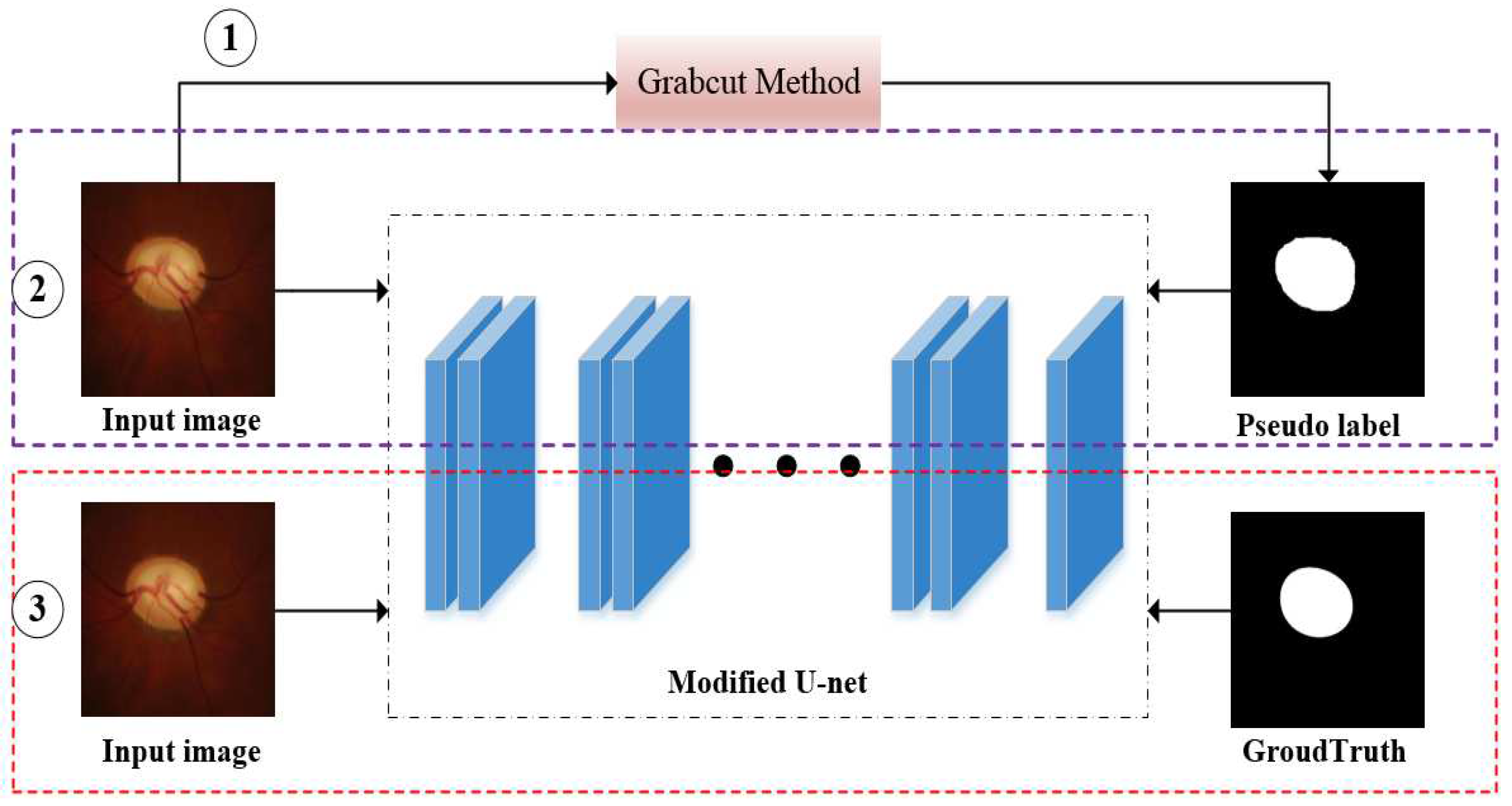

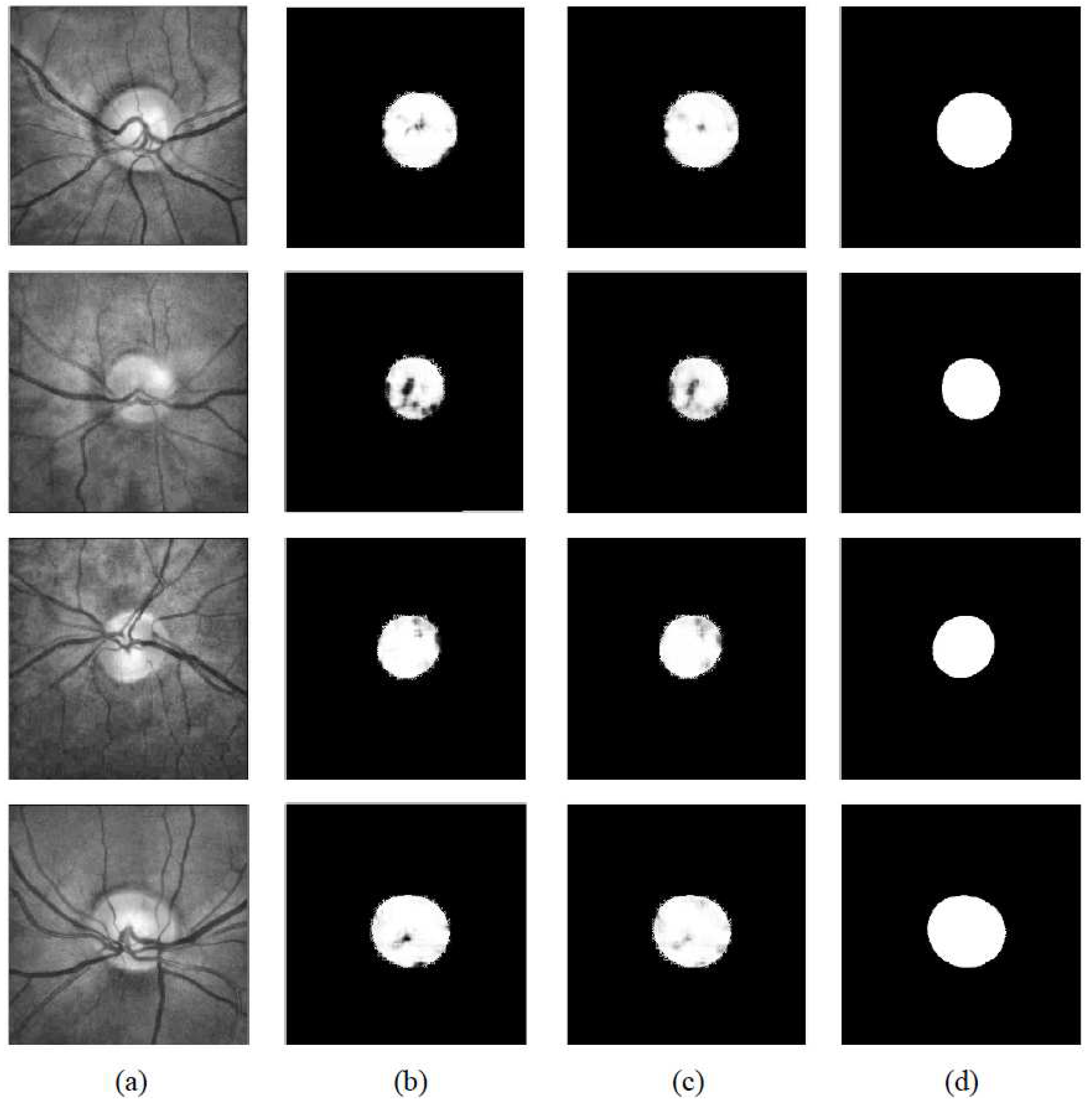

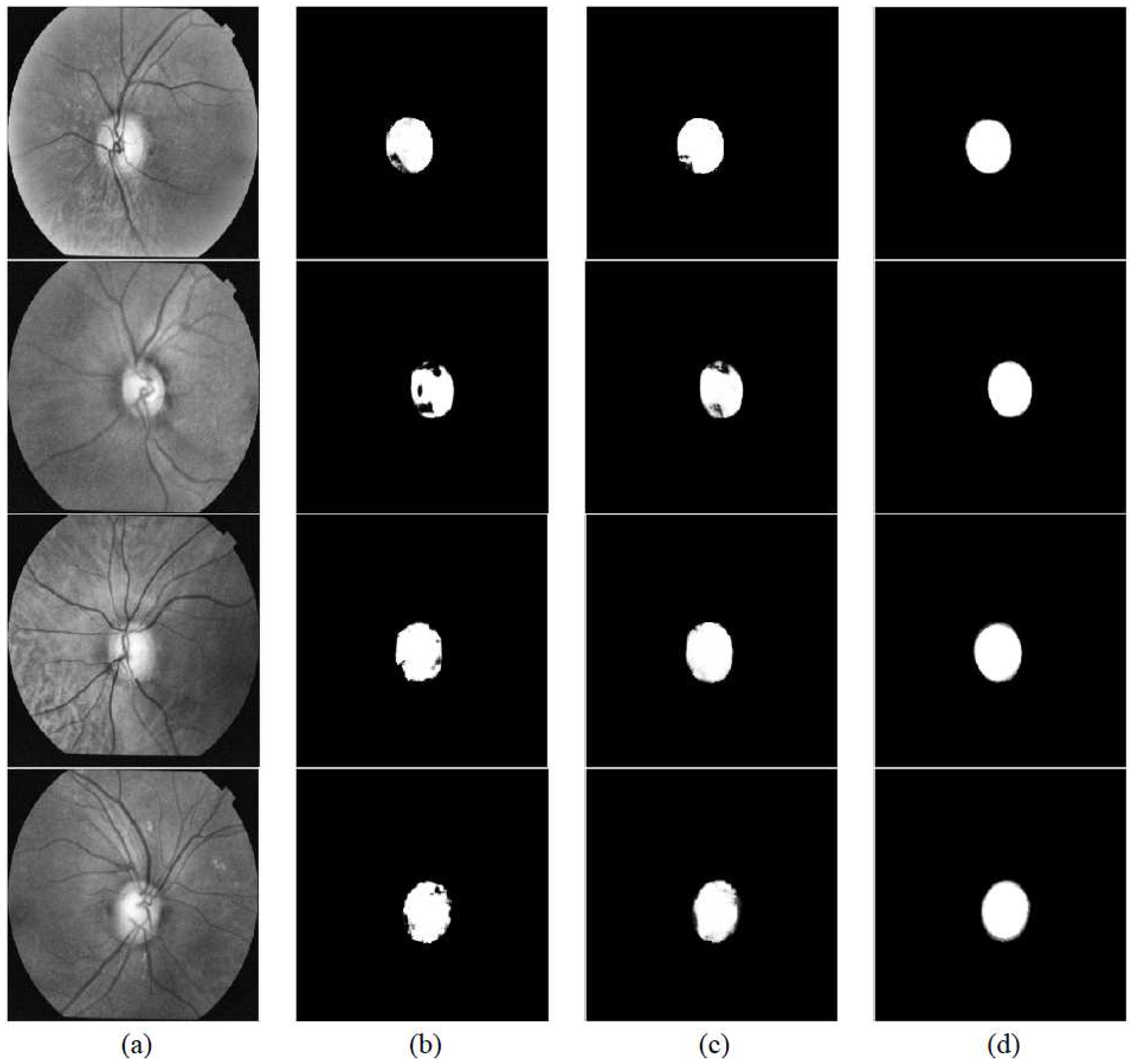

- In this paper, we propose a new optic disc segmentation method based on weakly supervised and semi-supervised learning. The method uses only bounding-box-level labels and a small number of groundtruth masks to train the model. Annotating groundtruth for fully supervised semantic segmentation takes a lot of manpower, yet our final experimental results are close to those of the fully supervised method. This is the main contribution of this paper. We summarize the comparison between our method and existing methods in Table 1.

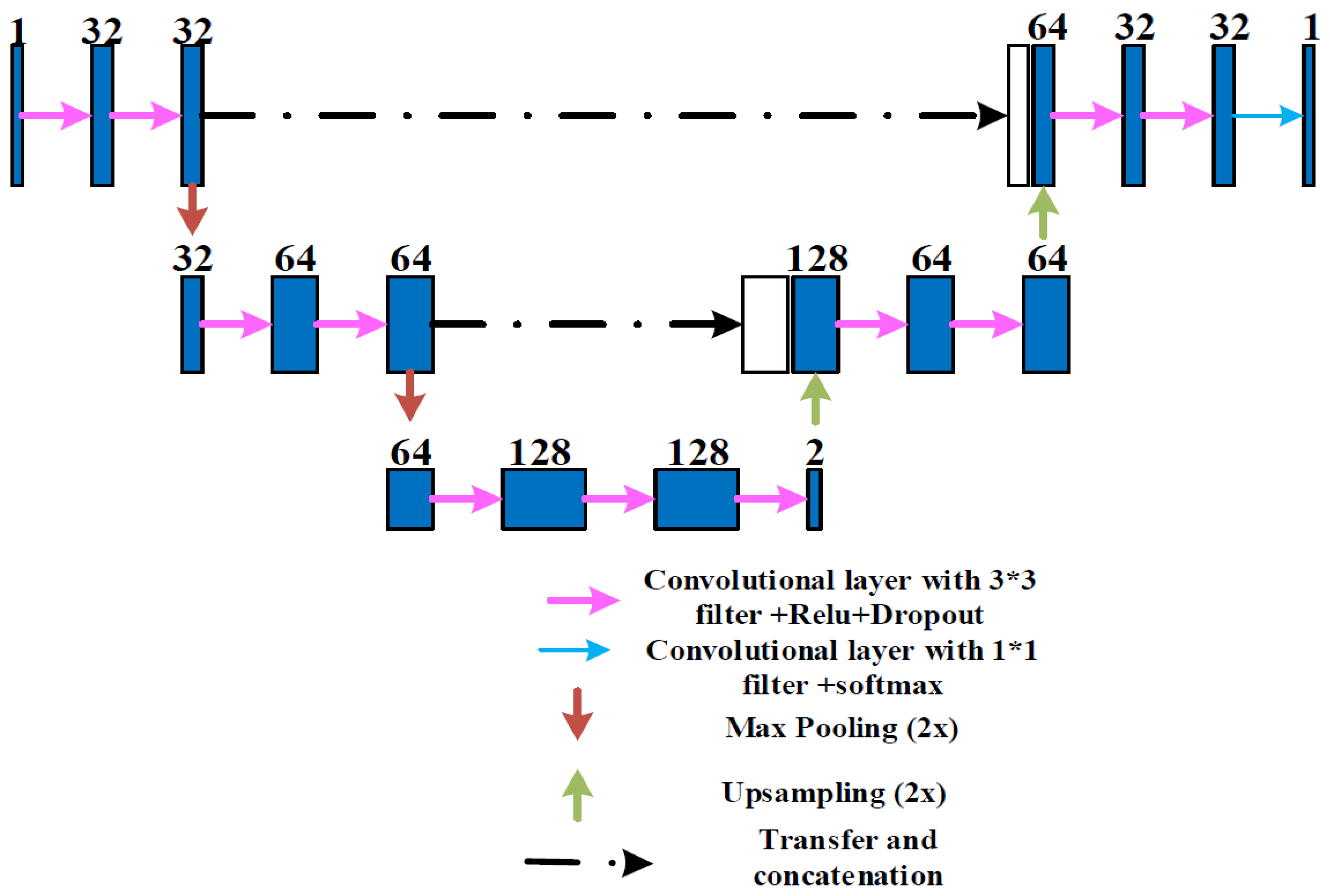

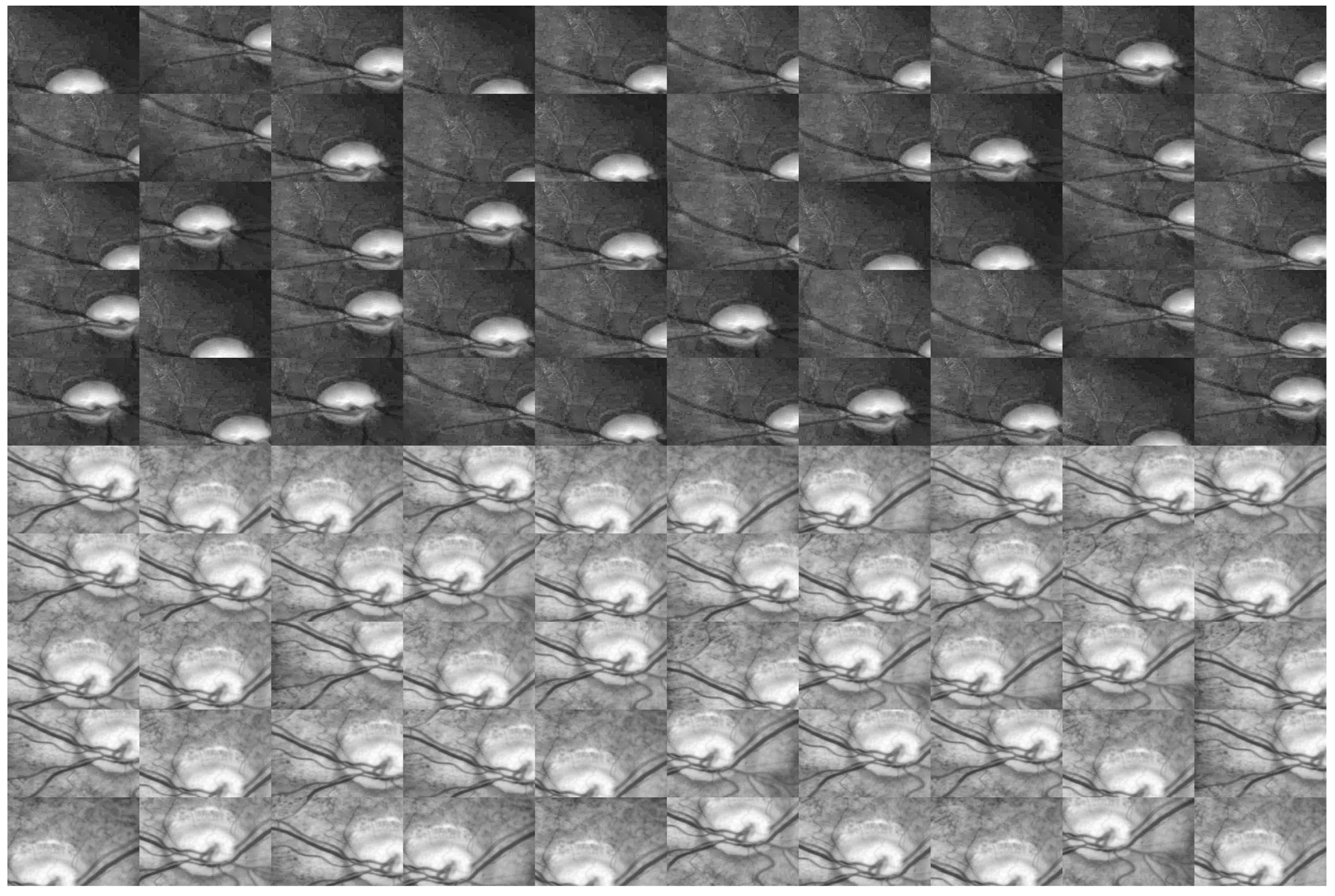

- We crop each original image into dozens of patches to train the network, which increases the amount of training data so that the network can learn the features of the optic disc more accurately. We also modify the U-net model by shrinking the original U-shaped structure and adding a convolutional layer of dimension 2 after the last convolutional layer. This reduces the amount of computation, makes fuller use of the low-level features during training, and yields better segmentation results.
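The patch-cropping step described above can be sketched as a sliding window over the image. This is only an illustration: the source does not state the patch size, the stride, or whether patches overlap, so `patch_size`, `stride`, and the function name `crop_patches` are assumptions, and the image is represented as a plain list of rows to keep the sketch dependency-free.

```python
def crop_patches(image, patch_size, stride):
    """Cut a 2-D image (list of rows) into square patches.

    patch_size and stride are illustrative assumptions; the paper does not
    give the values it used. Returns a list of ((top, left), patch) pairs.
    """
    height = len(image)
    width = len(image[0])
    patches = []
    # Slide the window so that every patch lies fully inside the image.
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            patch = [row[left:left + patch_size]
                     for row in image[top:top + patch_size]]
            patches.append(((top, left), patch))
    return patches


# Toy 6x6 "image" whose pixel value encodes its position (row * 10 + column).
toy_image = [[r * 10 + c for c in range(6)] for r in range(6)]
toy_patches = crop_patches(toy_image, patch_size=4, stride=2)
```

With a stride smaller than the patch size the patches overlap, which is one common way to multiply the number of training samples drawn from a small set of fundus images.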

2. Method

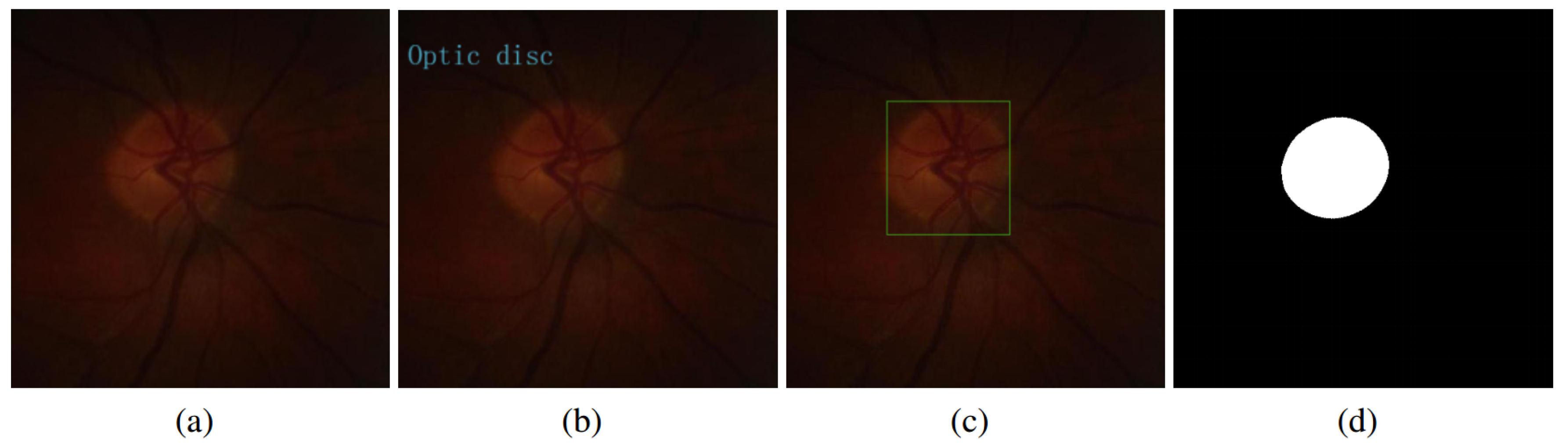

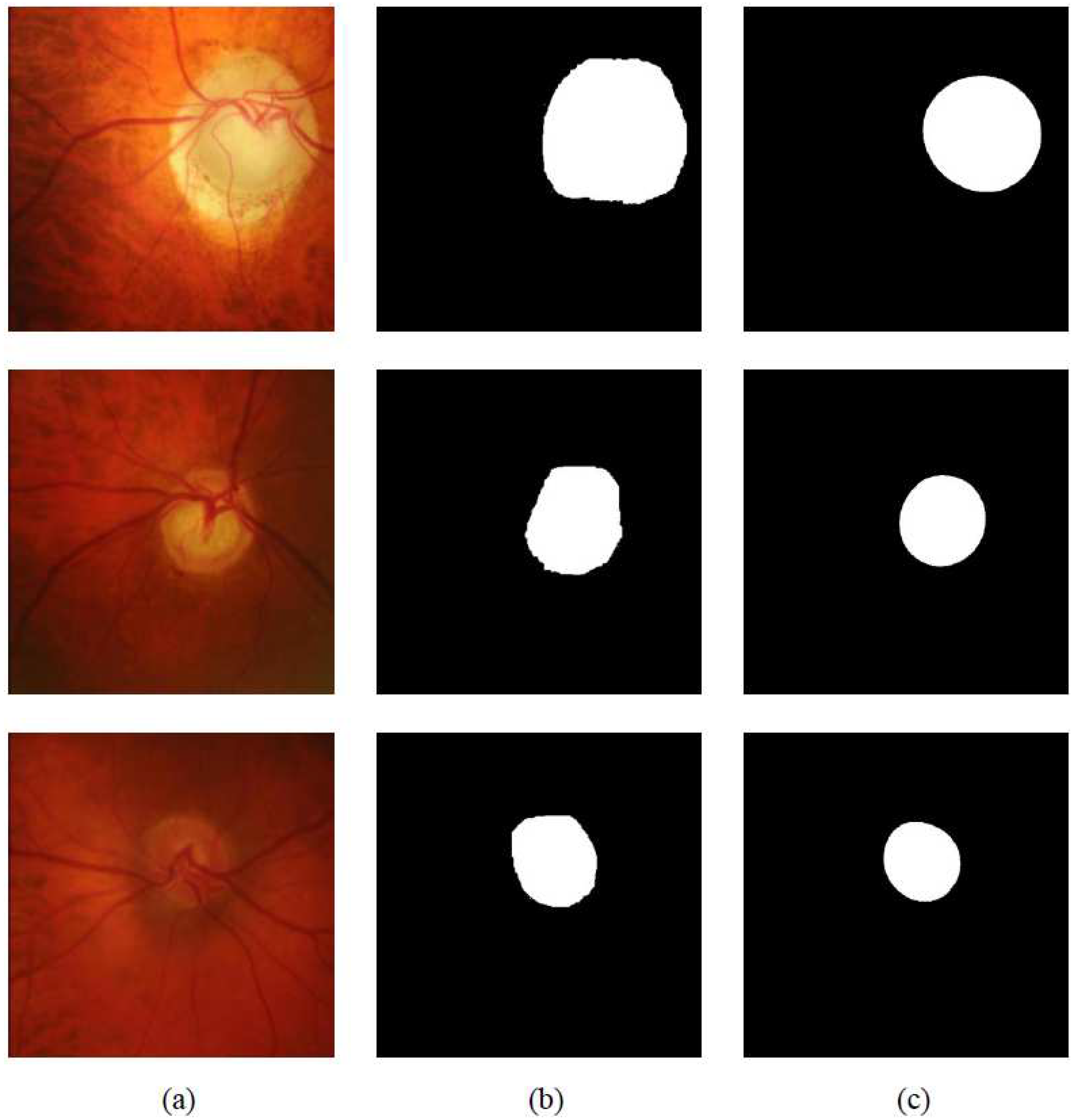

2.1. The Grabcut Baseline

- The user provides only $T_B$ to initialize the trimap $T$. The foreground and the unknown region are set to $T_F = \emptyset$ and $T_U = \overline{T_B}$, respectively. The trimap specified by the user is $T = \{T_B, T_U, T_F\}$, where $T_B$ are the marked background regions, $T_F$ are the marked foreground regions, and $T_U$ are the remaining regions.

- Initialize $\alpha_n = 0$ when $n \in T_B$, and initialize $\alpha_n = 1$ when $n \in T_U$. Each pixel in the image is segmented and represented by the 'opacity' values $\alpha = (\alpha_1, \ldots, \alpha_n, \ldots, \alpha_N)$, where $N$ is the number of pixels in the image, $n$ is the index of a specific pixel, and $\alpha_n$ is the corresponding 'opacity' value.

- Initialize the foreground GMMs and the background GMMs from the sets $\alpha_n = 1$ and $\alpha_n = 0$, respectively.

- (1) For every $n$ in $T_U$, assign a GMM component to the pixel: $k_n := \arg\min_{k_n} D_n(\alpha_n, k_n, \theta, z_n)$. The vector $k = \{k_1, \ldots, k_n, \ldots, k_N\}$ assigns each pixel a component of the GMM model, so that the GMM can be processed in a tractable way. The parameters $\theta$ describe the image foreground and background grey-level distributions, and the image $z = (z_1, \ldots, z_n, \ldots, z_N)$ is an array of grey values indexed by $n$. The function $D_n$ is one of the components of the Gibbs energy function $E$.

- (2) Learn the GMM parameters from the data $z$: $\theta := \arg\min_{\theta} U(\alpha, k, \theta, z)$, where $\theta$ are the parameters of the GMM model and the function $U$ is the data term, which is one of the components of the Gibbs energy function $E$. The other variables are as described above.

- (3) Use min cut to solve the following expression: $\min_{\{\alpha_n :\, n \in T_U\}} \min_{k} E(\alpha, k, \theta, z)$.

- (4) Repeat steps 1 to 3 until the above expression converges.

- (5) Apply border matting.

- Edit: fix some pixels either to $\alpha_n = 0$ (background) or to $\alpha_n = 1$ (foreground), and update the trimap $T$ at the same time. Then execute step 3 above once.

- Refine (optional): execute the entire iterative minimisation algorithm again.
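The initialisation and step (1) of the procedure above can be sketched as follows, assuming the user input is a bounding box, as in the weakly supervised setting. This is a simplified illustration and not the full GrabCut algorithm: it omits GMM fitting, the min cut, and border matting, it treats pixels as single grey values, and the names `init_trimap`, `data_term`, `assign_components` and the `(weight, mean, variance)` component tuples are hypothetical choices made for this example.

```python
import math


def init_trimap(height, width, box):
    """Build the trimap and opacity values from a bounding box.

    box = (top, left, bottom, right), half-open. Pixels outside the box form
    T_B (alpha = 0); pixels inside form T_U (alpha = 1); T_F starts empty.
    """
    top, left, bottom, right = box
    trimap = [["B"] * width for _ in range(height)]
    alpha = [[0] * width for _ in range(height)]
    for r in range(top, bottom):
        for c in range(left, right):
            trimap[r][c] = "U"
            alpha[r][c] = 1
    return trimap, alpha


def data_term(z, weight, mean, var):
    """D_n for one 1-D Gaussian component: -log(weight) - log N(z; mean, var)."""
    return (-math.log(weight)
            + 0.5 * math.log(2.0 * math.pi * var)
            + (z - mean) ** 2 / (2.0 * var))


def assign_components(image, trimap, alpha, gmms):
    """Step (1): for each pixel n in T_U choose k_n = argmin_k D_n.

    gmms maps alpha (0 or 1) to a list of (weight, mean, var) tuples.
    """
    k = {}
    for r, row in enumerate(image):
        for c, z in enumerate(row):
            if trimap[r][c] != "U":
                continue
            comps = gmms[alpha[r][c]]
            k[(r, c)] = min(range(len(comps)),
                            key=lambda i: data_term(z, *comps[i]))
    return k


# Toy 4x4 grey image with a bright 2x2 region inside the box.
toy_image = [[10, 10, 10, 10],
             [10, 190, 60, 10],
             [10, 60, 190, 10],
             [10, 10, 10, 10]]
toy_trimap, toy_alpha = init_trimap(4, 4, box=(1, 1, 3, 3))
# Made-up GMM parameters, for illustration only.
toy_gmms = {0: [(1.0, 10.0, 25.0)],
            1: [(0.5, 50.0, 100.0), (0.5, 200.0, 100.0)]}
toy_k = assign_components(toy_image, toy_trimap, toy_alpha, toy_gmms)
```

In a full implementation, steps (2) and (3) would re-estimate the GMM parameters from these assignments and then update $\alpha_n$ for the pixels in $T_U$ with a min cut, repeating until the energy stops decreasing.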

2.2. Weakly Supervised and Semi-Supervised Optic Disc Segmentation

Algorithm 1. Weakly- and semi-supervised segmentation for optic disc of fundus image

3. Experiments

3.1. Datasets

3.2. Implementation Details

3.3. Evaluations

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Maninis, K.K.; Pont-Tuset, J.; Arbeláez, P.; Van Gool, L. Deep retinal image understanding. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2016), Athens, Greece, 17–21 October 2016; pp. 140–148.

2. Datt, J.G.; Jayanthi, S.; Krishnadas, S.R. Optic disk and cup segmentation from monocular color retinal images for glaucoma assessment. IEEE Trans. Med. Imaging 2011, 30, 1192–1205.

3. Mittapalli, P.S.; Kande, G.B. Segmentation of optic disk and optic cup from digital fundus images for the assessment of glaucoma. Biomed. Signal Process. Control 2016, 24, 34–46.

4. Saleh, M.D.; Salih, N.D.; Eswaran, C.; Abdullah, J. Automated segmentation of optic disc in fundus images. In Proceedings of the 2014 IEEE 10th International Colloquium on Signal Processing and Its Applications (CSPA 2014), Kuala Lumpur, Malaysia, 7–9 March 2014; pp. 145–150.

5. Cheng, J.; Liu, J.; Xu, Y.; Yin, F.; Wong, D.W.; Tan, N.M.; Tao, D.; Cheng, C.Y.; Aung, T.; Wong, T.Y. Superpixel classification based optic disc and optic cup segmentation for glaucoma screening. IEEE Trans. Med. Imaging 2013, 32, 1019–1032.

6. Mahapatra, D.; Buhmann, J.M. A field of experts model for optic cup and disc segmentation from retinal fundus images. In Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), New York, NY, USA, 16–19 April 2015; pp. 218–221.

7. Yu, H.; Barriga, E.S.; Agurto, C.; Echegaray, S.; Pattichis, M.S.; Bauman, W.; Soliz, P. Fast localization and segmentation of optic disk in retinal images using directional matched filtering and level sets. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 644–657.

8. Zheng, S.H.; Chen, J.; Pan, L.; Yu, L. Optic disc detection on retinal images based on directional local contrast. Chin. J. Biomed. Eng. 2014, 33, 289–296.

9. Walter, T.; Klein, J.C.; Massin, P.; Erginay, A. A contribution of image processing to the diagnosis of diabetic retinopathy: Detection of exudates in color fundus images of human retina. IEEE Trans. Med. Imaging 2002, 21, 1236–1243.

10. Abdullah, M.; Fraz, M.M.; Barman, S.A. Localization and segmentation of optic disc in retinal images using Circular Hough transform and Grow Cut algorithm. PeerJ 2016, 4, e2003.

11. Adam, H.; Michael, G. Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans. Med. Imaging 2003, 22, 951–958.

12. Osareh, A.; Mirmehdi, M.; Thomas, B.; Markham, R. Comparison of Colour Spaces for Optic Disc Localisation in Retinal Images. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002.

13. Kande, G.B.; Savithri, T.S.; Subbaiah, P.V.; Tagore, M.R. Automatic detection and boundary estimation of optic disc in fundus images using geometric active contours. J. Biomed. Sci. Eng. 2009, 2, 90–95.

14. Sevastopolsky, A. Optic disc and cup segmentation methods for glaucoma detection with modification of U-net convolutional neural network. Pattern Recognit. Image Anal. 2017, 27, 618–624.

15. Tan, J.H.; Acharya, U.R.; Bhandary, S.V.; Chua, K.C.; Sivaprasad, S. Segmentation of optic disc, fovea and retinal vasculature using a single convolutional neural network. J. Comput. Sci. 2017, 20, 70–79.

16. Kolesnikov, A.; Lampert, C.H. Seed, Expand and Constrain: Three Principles for Weakly-Supervised Image Segmentation. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

17. Vezhnevets, A.; Ferrari, V.; Buhmann, J.M. Weakly Supervised Structured Output Learning for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, Rhode Island, 16–21 June 2012.

18. Rother, C.; Kolmogorov, V.; Blake, A. Grabcut: Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2004, 23, 309–314.

19. Pizer, S.M.; Amburn, E.P. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

20. Tseng, C.Y.; Wang, S.J.; Lai, Y.Y.; Zhou, Y.C. Image Detail and Color Enhancement Based on Channel-Wise Local Gamma Adjustment. SID Symp. Dig. Tech. Pap. 2009, 40, 1022–1025.

21. Fumero, F.; Alayón, S.; Sanchez, J.L.; Sigut, J.; Gonzalez-Hernandez, M. RIM-ONE: An open retinal image database for optic nerve evaluation. In Proceedings of the 24th IEEE International Symposium on Computer-Based Medical Systems, Bristol, UK, 27–30 June 2011.

22. Sivaswamy, J.; Krishnadas, S.; Chakravarty, A.; Joshi, G.; Tabish, A.S. A comprehensive retinal image dataset for the assessment of glaucoma from the optic nerve head analysis. JSM Biomed. Imaging Data Pap. 2015, 2, 1004.

23. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. J. Mach. Learn. Res. 2010, 9, 249–256.

24. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

25. Zahoor, M.N.; Fraz, M.M. Fast optic disc segmentation in retina using polar transform. IEEE Access 2017, 5, 12293–12300.

26. Zilly, J.; Buhmann, J.M.; Mahapatra, D. Glaucoma detection using entropy sampling and ensemble learning for automatic optic cup and disc segmentation. Comput. Med. Imaging Graph. 2017, 55, 28–41.

| | Traditional | Fully Supervised | Weakly Supervised | Ours |
|---|---|---|---|---|
| Field of method | traditional method | deep learning | deep learning | deep learning |
| Using groundtruth to train | yes | yes | no | no |
| Involving optic disc image | yes | yes | no | yes |
| Segmentation result | good | very good | - | very good |

| Method | Acc | Sen | Spe | IoU | Prediction Time |
|---|---|---|---|---|---|
| Sevastopolsky [14] | - | - | - | 0.89 | 0.1 s |
| DRIU [1] | - | - | - | 0.89 | 0.13 s |
| Walter [9] | 0.9689 | 0.6715 | 0.9794 | 0.6227 | - |
| Abdullah [10] | 0.9989 | 0.8508 | 0.9966 | 0.8510 | - |
| Zahoor [25] | 0.9986 | 0.9384 | 0.9994 | 0.8860 | - |
| Ours (weakly supervised) | 0.9918 | 0.9124 | 0.9971 | 0.8719 | 4.49 s |
| Ours (semi-supervised) | 0.9920 | 0.9015 | 0.9974 | 0.8762 | 4.49 s |
| Ours (fully supervised) | 0.9932 | 0.9038 | 0.9994 | 0.8958 | 4.49 s |

| Method | Acc | Sen | Spe | IoU | Prediction Time |
|---|---|---|---|---|---|
| Sevastopolsky [14] | - | - | - | 0.90 | 0.1 s |
| Zilly [26] | - | - | - | 0.91 | 5.3 s |
| Ours (weakly supervised) | 0.9956 | 0.8917 | 0.9989 | 0.8637 | 4.49 s |
| Ours (semi-supervised) | 0.9962 | 0.9149 | 0.9988 | 0.8825 | 4.49 s |
| Ours (fully supervised) | 0.9972 | 0.9287 | 0.9996 | 0.9187 | 4.49 s |
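The four columns Acc, Sen, Spe and IoU in the tables above can be computed from a predicted mask and a groundtruth mask as sketched below. The definitions used here are the standard pixel-wise ones (accuracy, sensitivity, specificity, intersection over union); the source text available here does not spell out its formulas, so treating them as identical to the paper's is an assumption, as is the function name `segmentation_metrics`.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for two binary masks given as lists of rows.

    Assumes the standard definitions:
      Acc = (TP + TN) / all pixels
      Sen = TP / (TP + FN)
      Spe = TN / (TN + FP)
      IoU = TP / (TP + FP + FN)
    """
    tp = fp = tn = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    total = tp + fp + tn + fn
    return {
        "acc": (tp + tn) / total,
        # Guard against empty denominators (e.g. a mask with no disc pixels).
        "sen": tp / (tp + fn) if tp + fn else 0.0,
        "spe": tn / (tn + fp) if tn + fp else 0.0,
        "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }


# Toy masks: one true positive, one false positive, one false negative.
toy_pred = [[1, 1, 0],
            [0, 0, 0]]
toy_truth = [[1, 0, 0],
             [1, 0, 0]]
toy_scores = segmentation_metrics(toy_pred, toy_truth)
```

Because the optic disc covers only a small fraction of a fundus image, accuracy and specificity are dominated by background pixels, which is why IoU and sensitivity separate the methods in the tables more clearly.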

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lu, Z.; Chen, D. Weakly Supervised and Semi-Supervised Semantic Segmentation for Optic Disc of Fundus Image. Symmetry 2020, 12, 145. https://doi.org/10.3390/sym12010145
