Multi-Aspect Embedding for Attribute-Aware Trajectories

Abstract

1. Introduction

2. Related Work

3. Preliminaries and Problem Statement

4. The MAEAT Approach

4.1. Multi-Aspect Embedding with Aspect Regularization

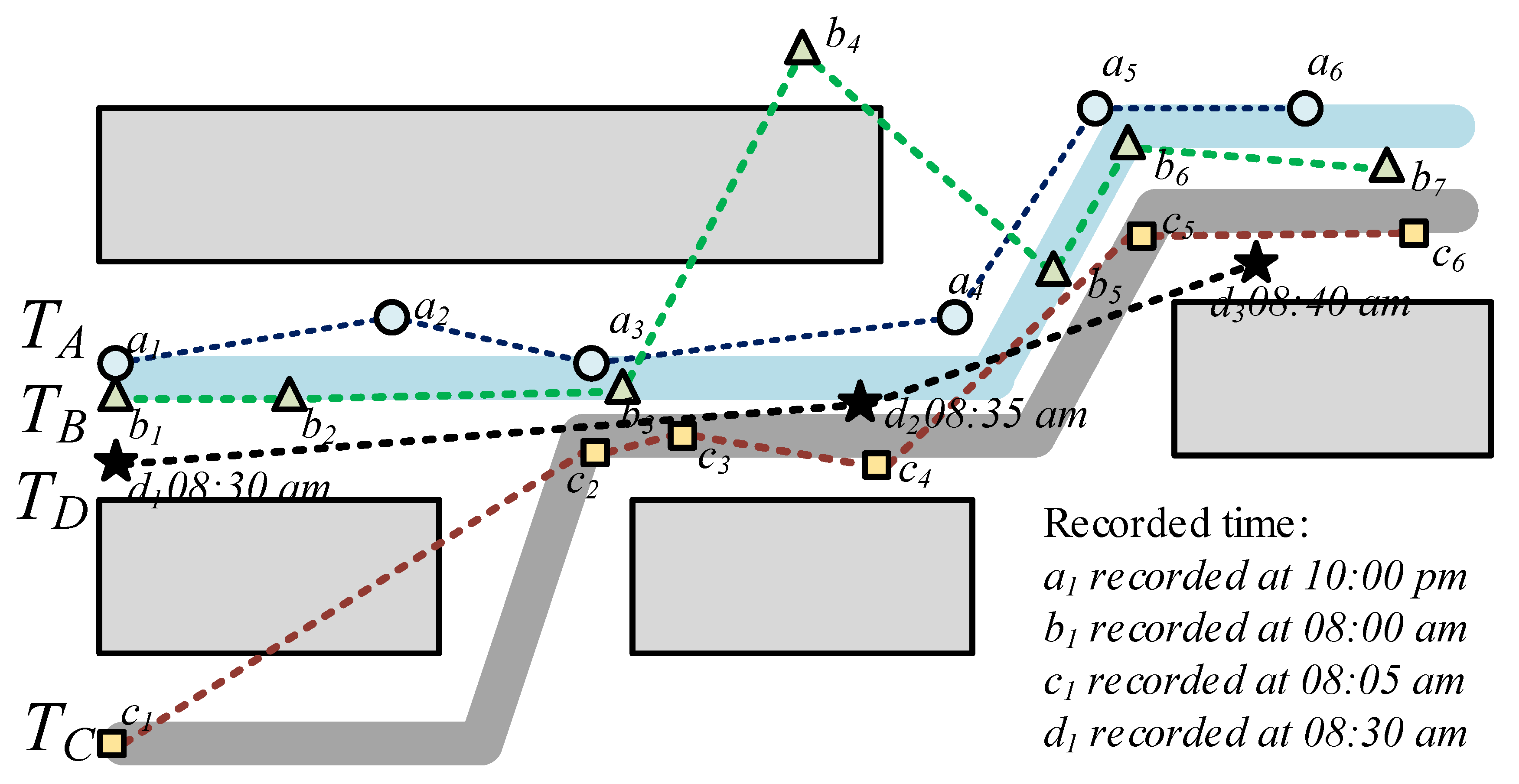

4.2. Aspect Sequence Acquisition

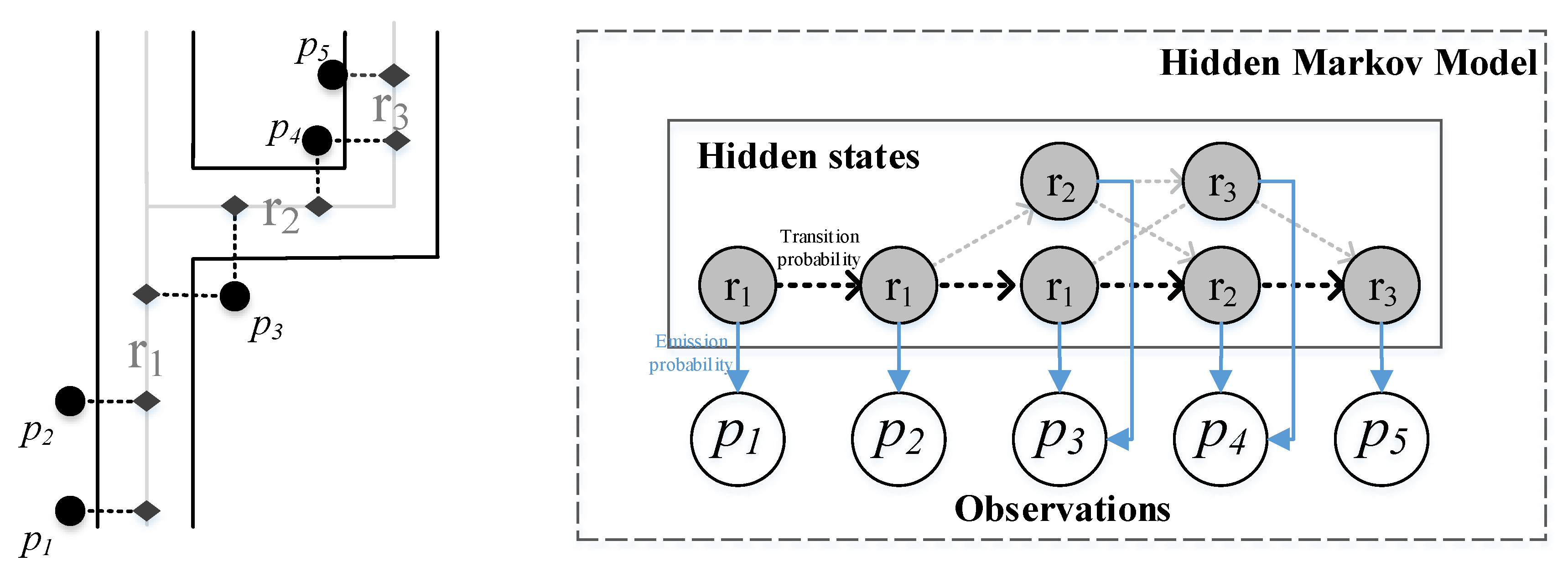

4.2.1. Spatial Aspect Extraction

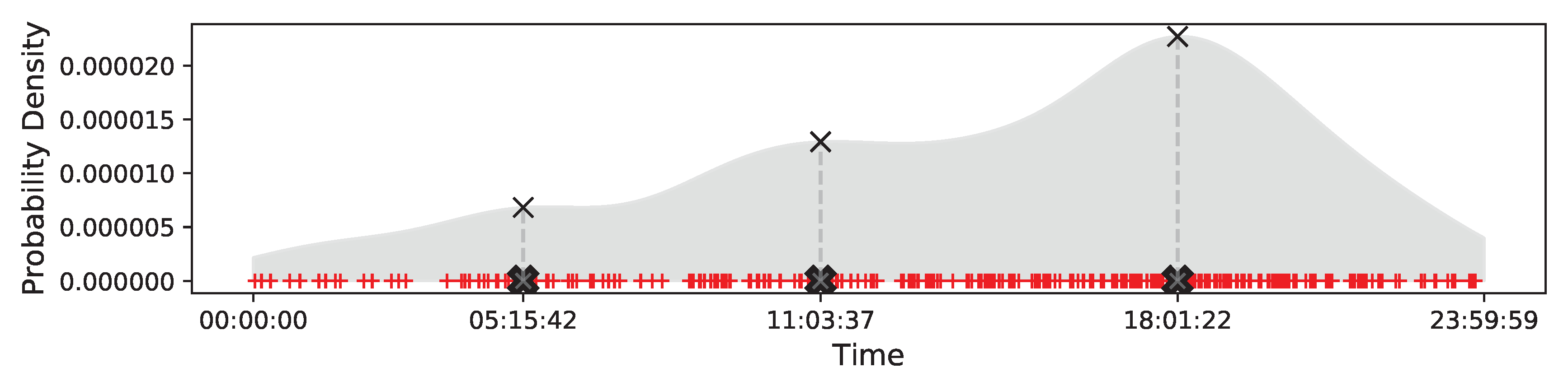

4.2.2. Temporal Aspect Extraction

5. Experiments

5.1. Experimental Settings

5.1.1. Datasets

5.1.2. Compared Methods

- Point-matching methods: Dynamic time warping (DTW), edit distance with real penalty (ERP), and longest common subsequence (LCSS) are compared because they are widely adopted for trajectory similarity computation.

- Road-network-aware method: DISON [22] is a recent road-network-aware method that computes similarity from the LCSS score between sequences of road segments.

- Encoder-decoder methods: Recurrent neural network (RNN), gated recurrent unit (GRU), and long short-term memory (LSTM) are compared as three basic encoder-decoder methods. We also compare T2vec [14], a state-of-the-art trajectory embedding method based on the encoder-decoder architecture (https://github.com/boathit/t2vec).

- MAEAT, the method proposed in this paper: We also compare a variant of MAEAT that considers only the spatial aspect. This variant serves both to illustrate the effectiveness of multi-aspect embedding and as an ablation test. We denote this reduced version as MAEAT(S).
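To make the point-matching baselines concrete, the following is a minimal sketch of DTW and LCSS on sequences of 2D points. It is not the implementation used in the experiments: the function names `dtw` and `lcss`, the Euclidean point distance, and the single matching threshold `eps` are assumptions made for illustration, and the LCSS shown here omits the time-window constraint that some formulations include.

```python
import math


def dtw(a, b):
    """Dynamic time warping distance between two sequences of points."""
    n, m = len(a), len(b)
    inf = float("inf")
    # d[i][j] is the cheapest cost of aligning a[:i] with b[:j].
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]


def lcss(a, b, eps):
    """Length of the longest common subsequence of two point sequences.

    Two points are treated as matching when their Euclidean distance is
    at most eps (an assumed, simplified matching rule).
    """
    n, m = len(a), len(b)
    table = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if math.dist(a[i - 1], b[j - 1]) <= eps:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[n][m]


if __name__ == "__main__":
    t1 = [(0, 0), (1, 0), (2, 0)]
    t2 = [(0, 0), (1, 0), (1, 0), (2, 0)]  # same path, one repeated sample
    print(dtw(t1, t2), lcss(t1, t2, eps=0.1))
```

Both functions fill an (n+1) by (m+1) table, so each comparison costs time proportional to the product of the two trajectory lengths.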

5.1.3. Parameters

5.2. Experimental Analysis

5.2.1. Quantitative Analysis

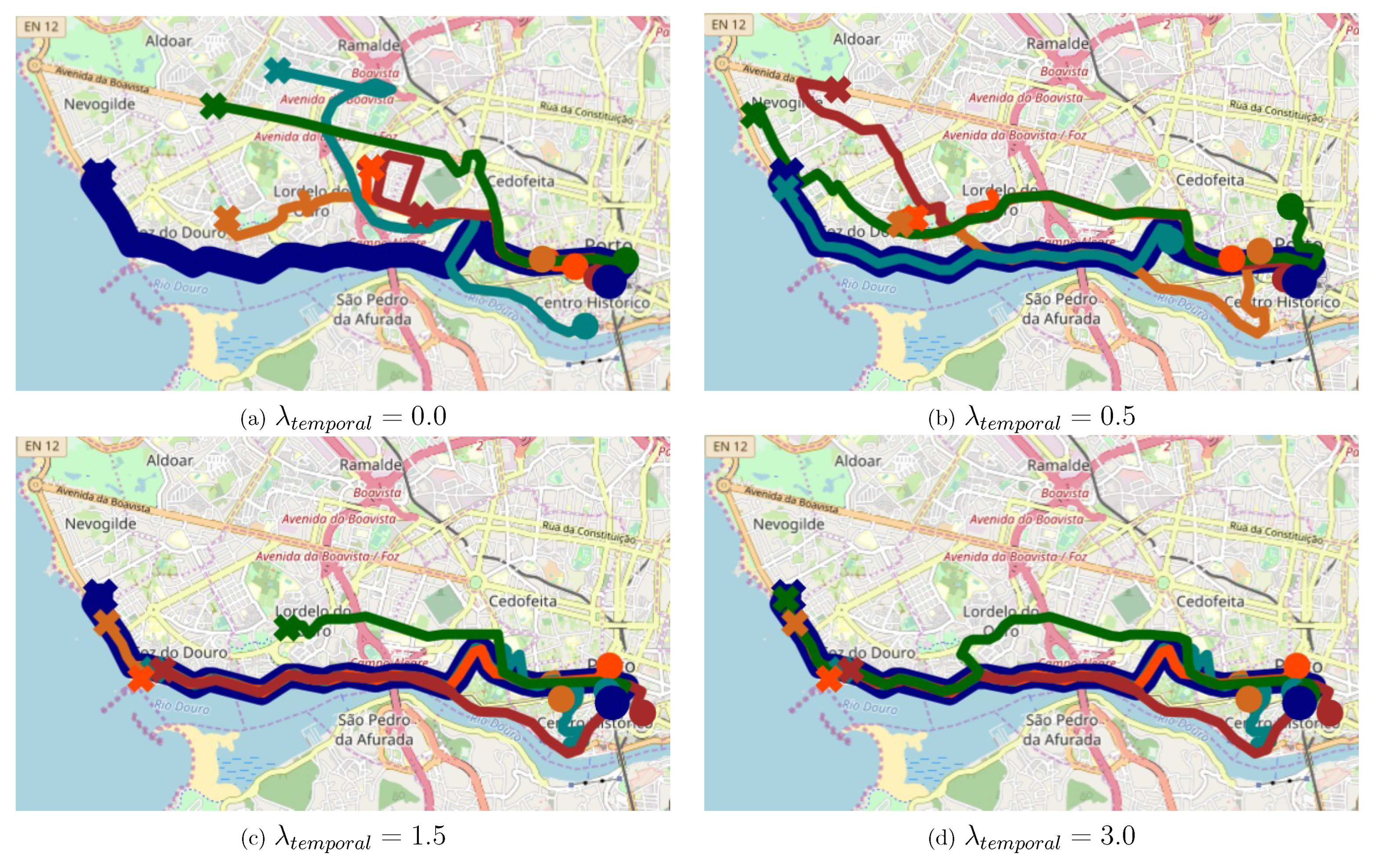

5.2.2. Qualitative Analysis

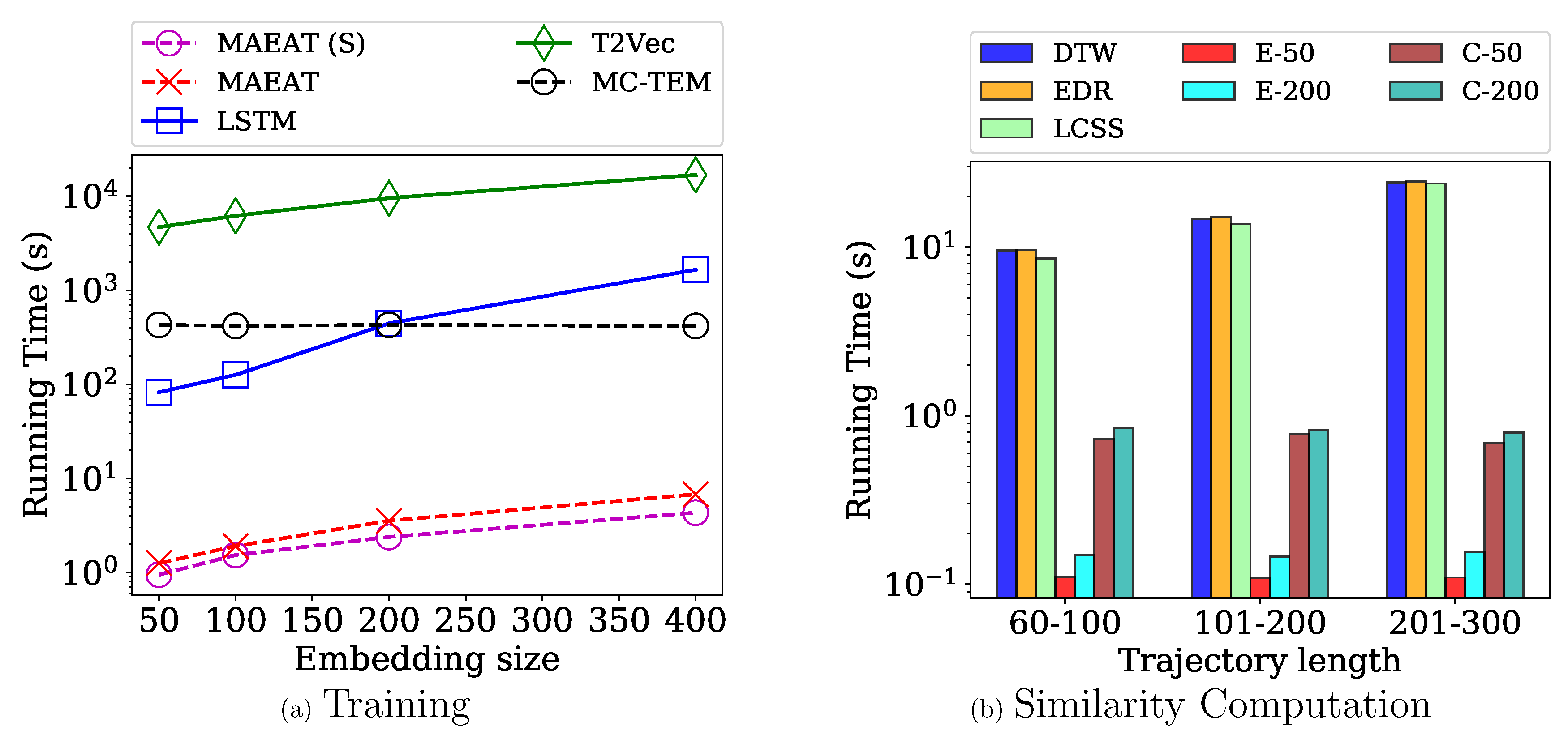

5.2.3. Efficiency Evaluation

5.2.4. Effects of Regularization

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lee, J.G.; Han, J.; Whang, K.Y. Trajectory Clustering: A Partition-and-group Framework. In Proceedings of the 2007 ACM SIGMOD International Conference on Management of Data, Beijing, China, 11–14 June 2007; ACM: New York, NY, USA, 2007; pp. 593–604.

- Andrienko, G.; Andrienko, N.; Fuchs, G.; Garcia, J.M.C. Clustering Trajectories by Relevant Parts for Air Traffic Analysis. IEEE Trans. Vis. Comput. Graph. 2018, 24, 34–44.

- Lee, J.G.; Han, J.; Li, X.; Gonzalez, H. TraClass: Trajectory Classification Using Hierarchical Region-based and Trajectory-based Clustering. Proc. VLDB Endow. 2008, 1, 1081–1094.

- Xia, T.; Yu, Y.; Xu, F.; Sun, F.; Guo, D.; Jin, D.; Li, Y. Understanding Urban Dynamics via State-sharing Hidden Markov Model. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; ACM: New York, NY, USA, 2019; pp. 3363–3369.

- Yao, Z.; Fu, Y.; Liu, B.; Hu, W.; Xiong, H. Representing Urban Functions Through Zone Embedding with Human Mobility Patterns. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 3919–3925.

- Zheng, Y. Trajectory Data Mining: An Overview. ACM Trans. Intell. Syst. Technol. 2015, 6, 1–41.

- Laxhammar, R.; Falkman, G. Online Learning and Sequential Anomaly Detection in Trajectories. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1158–1173.

- Shang, S.; Ding, R.; Zheng, K.; Jensen, C.S.; Kalnis, P.; Zhou, X. Personalized trajectory matching in spatial networks. VLDB J. 2014, 23, 449–468.

- Chen, L.; Ng, R. On the Marriage of Lp-norms and Edit Distance. In Proceedings of the 30th International Conference on Very Large Data Bases, VLDB Endowment, Toronto, ON, Canada, 31 August–3 September 2004; Volume 30, pp. 792–803.

- Keogh, E.; Ratanamahatana, C.A. Exact indexing of dynamic time warping. Knowl. Inf. Syst. 2005, 7, 358–386.

- Vlachos, M.; Kollios, G.; Gunopulos, D. Discovering Similar Multidimensional Trajectories. In Proceedings of the 18th International Conference on Data Engineering, San Jose, CA, USA, 26 February–1 March 2002; pp. 673–684.

- Chen, L.; Özsu, M.T.; Oria, V. Robust and Fast Similarity Search for Moving Object Trajectories. In Proceedings of the 2005 ACM SIGMOD International Conference on Management of Data, Baltimore, MD, USA, 14–16 June 2005; ACM: New York, NY, USA, 2005; pp. 491–502.

- Dokmanic, I.; Parhizkar, R.; Ranieri, J.; Vetterli, M. Euclidean Distance Matrices: Essential theory, algorithms, and applications. IEEE Signal Process. Mag. 2015, 32, 12–30.

- Li, X.; Zhao, K.; Cong, G.; Jensen, C.S.; Wei, W. Deep Representation Learning for Trajectory Similarity Computation. In Proceedings of the 2018 IEEE 34th International Conference on Data Engineering (ICDE), Paris, France, 16–19 April 2018; pp. 617–628.

- Yang, W.; Zhao, Y.; Zheng, B.; Liu, G.; Zheng, K. Modeling Travel Behavior Similarity with Trajectory Embedding. In Lecture Notes in Computer Science, Proceedings of the International Conference on Database Systems for Advanced Applications; Springer: Cham, Switzerland, 2018; pp. 630–646.

- Zhou, N.; Zhao, W.X.; Zhang, X.; Wen, J.; Wang, S. A General Multi-Context Embedding Model for Mining Human Trajectory Data. IEEE Trans. Knowl. Data Eng. 2016, 28, 1945–1958.

- Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014; JMLR.org; Volume 32, pp. 1188–1196.

- Sankararaman, S.; Agarwal, P.K.; Mølhave, T.; Pan, J.; Boedihardjo, A.P. Model-driven Matching and Segmentation of Trajectories. In Proceedings of the 21st ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Orlando, FL, USA, 5–8 November 2013; ACM: New York, NY, USA, 2013; pp. 234–243.

- Ranu, S.; Deepak, P.; Telang, A.D.; Deshpande, P.; Raghavan, S. Indexing and matching trajectories under inconsistent sampling rates. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Korea, 13–17 April 2015; pp. 999–1010.

- Su, H.; Zheng, K.; Huang, J.; Wang, H.; Zhou, X. Calibrating trajectory data for spatio-temporal similarity analysis. VLDB J. 2015, 24, 93–116.

- Yao, D.; Cong, G.; Zhang, C.; Bi, J. Computing Trajectory Similarity in Linear Time: A Generic Seed-Guided Neural Metric Learning Approach. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–11 April 2019; pp. 1358–1369.

- Yuan, H.; Li, G. Distributed In-memory Trajectory Similarity Search and Join on Road Network. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–11 April 2019; pp. 1262–1273.

- Chen, M.; Yu, X.; Liu, Y. MPE: A mobility pattern embedding model for predicting next locations. World Wide Web 2018.

- Zhu, M.; Chen, W.; Xia, J.; Ma, Y.; Zhang, Y.; Luo, Y.; Huang, Z.; Liu, L. Location2vec: A Situation-Aware Representation for Visual Exploration of Urban Locations. IEEE Trans. Intell. Transp. Syst. 2019, 1–10.

- Ying, H.; Wu, J.; Xu, G.; Liu, Y.; Liang, T.; Zhang, X.; Xiong, H. Time-aware Metric Embedding with Asymmetric Projection for Successive POI Recommendation. World Wide Web 2019, 22, 2209–2224.

- Wang, H.; Shen, H.; Ouyang, W.; Cheng, X. Exploiting POI-Specific Geographical Influence for Point-of-Interest Recommendation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018.

- Liu, Y.; Pham, T.A.N.; Cong, G.; Yuan, Q. An Experimental Evaluation of Point-of-interest Recommendation in Location-based Social Networks. Proc. VLDB Endow. 2017, 10, 1010–1021.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.

- Wieting, J.; Bansal, M.; Gimpel, K.; Livescu, K. Towards Universal Paraphrastic Sentence Embeddings. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 2–4 May 2016.

- Newson, P.; Krumm, J. Hidden Markov Map Matching Through Noise and Sparseness. In Proceedings of the 17th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Washington, DC, USA, 4–6 November 2009; ACM: New York, NY, USA, 2009; pp. 336–343.

- Comaniciu, D.; Meer, P. Mean shift: a robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Parzen, E. On Estimation of a Probability Density Function and Mode. Ann. Math. Stat. 1962, 33, 1065–1076.

- Morris, B.; Trivedi, M. Learning trajectory patterns by clustering: Experimental studies and comparative evaluation. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 312–319.

| Notation | Description |
|---|---|
| T | trajectory T |
| | the length of trajectory T |
| | the state i of a trajectory |
| | the set of attributes of state i ( = ) |
| | the number of attributes of state i ( = ) |
| , | j-th attribute of state i in sets , |
| | K-aspect trajectory corresponding to trajectory T |
| K | the number of aspects considered in the model |
| | K-aspect sequence corresponding to |
| | the set of K discrete tokens corresponding to |
| | the discrete token reflecting the attribute |
| | the trajectory database |
| v | an embedding vector |
| | the embedding vectors for discrete token , trajectory T |
| | b-th embedding vector of a-th aspect |
| | tuning parameter that weights the importance of the aspect a |
| | learning rate |

| Dataset | i5sim | i5sim3 | cross | cross2 | cross3 | Porto |
|---|---|---|---|---|---|---|
| #Trajectories | 800 | 1600 | 1900 | 1900 | 1900 | 5000 |
| #Points | 13,745 | 54,011 | 24,420 | 24,420 | 24,420 | 803,717 |
| #Clusters | 8 | 16 | 19 | 13 | 39 | N/A |
| Average Length | 17 | 34 | 13 | 13 | 13 | 161 |
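The "Average Length" row appears consistent with the number of points divided by the number of trajectories, rounded to the nearest integer. The short check below reproduces that arithmetic from the table's own figures; the names `DATASETS` and `average_length` are hypothetical, and the rounding rule is an assumption inferred from the table rather than stated in the text.

```python
# (number of trajectories, number of points), copied from the dataset table.
DATASETS = {
    "i5sim": (800, 13_745),
    "i5sim3": (1600, 54_011),
    "cross": (1900, 24_420),
    "cross2": (1900, 24_420),
    "cross3": (1900, 24_420),
    "Porto": (5000, 803_717),
}


def average_length(n_trajectories, n_points):
    """Mean number of points per trajectory, rounded to an integer."""
    return round(n_points / n_trajectories)


if __name__ == "__main__":
    for name, (n_traj, n_pts) in DATASETS.items():
        print(name, average_length(n_traj, n_pts))
```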

| Compared Method | i5sim Purity | i5sim ARI | i5sim3 Purity | i5sim3 ARI | cross Purity | cross ARI | cross2 Purity | cross2 ARI | cross3 Purity | cross3 ARI |
|---|---|---|---|---|---|---|---|---|---|---|
| Dynamic time warping (DTW) | 1.0 | 1.0 | 1.0 | 1.0 | 0.974 | 0.955 | 1.0 | 1.0 | 0.402 | 0.320 |
| Edit distance on real sequence (EDR) | 1.0 | 1.0 | 0.999 | 0.997 | 0.610 | 0.518 | 0.692 | 0.564 | 0.4 | 0.339 |
| Longest common subsequence (LCSS) | 1.0 | 1.0 | 0.993 | 0.985 | 0.985 | 0.969 | 0.797 | 0.689 | 0.392 | 0.311 |
| DISON | 1.0 | 1.0 | 0.691 | 0.738 | 0.687 | 0.726 | 0.797 | 0.453 | 0.225 | 0.453 |
| Encoder-Decoder (Recurrent neural network: RNN) | 1.0 | 1.0 | 0.864 | 0.839 | 0.026 | 0.152 | 0.192 | 0.023 | 0.116 | 0.014 |
| Encoder-Decoder (Gated recurrent unit: GRU) | 1.0 | 1.0 | 0.996 | 0.991 | 0.521 | 0.385 | 0.598 | 0.433 | 0.332 | 0.264 |
| Encoder-Decoder (Long short-term memory: LSTM) | 1.0 | 1.0 | 0.997 | 0.995 | 0.669 | 0.604 | 0.821 | 0.752 | 0.397 | 0.342 |
| T2Vec | 1.0 | 1.0 | 0.519 | 0.539 | 0.779 | 0.718 | 0.622 | 0.524 | 0.252 | 0.191 |
| MC-TEM | 1.0 | 1.0 | 0.574 | 0.542 | 0.861 | 0.819 | 0.927 | 0.919 | 0.751 | 0.680 |
| MAEAT(S) | 1.0 | 1.0 | 1.0 | 1.0 | 0.706 | 0.739 | 1.0 | 1.0 | 0.407 | 0.403 |
| MAEAT | - | - | - | - | - | - | - | - | 0.982 | 0.963 |
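The two scores reported above, purity and the adjusted Rand index (ARI), can be computed from ground-truth labels and predicted cluster assignments. The following is a minimal sketch using the standard textbook definitions of both measures; whether the experiments used exactly these formulations is an assumption, and the function names are illustrative only. The ARI function assumes at least two labeled items.

```python
from collections import Counter
from math import comb


def purity(labels_true, labels_pred):
    """Fraction of items that belong to the majority true class of their cluster."""
    clusters = {}
    for truth, pred in zip(labels_true, labels_pred):
        clusters.setdefault(pred, Counter())[truth] += 1
    majority_total = sum(max(counts.values()) for counts in clusters.values())
    return majority_total / len(labels_true)


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index computed from the contingency table of the two labelings."""
    n = len(labels_true)
    cell_counts = Counter(zip(labels_true, labels_pred))
    sum_cells = sum(comb(c, 2) for c in cell_counts.values())
    sum_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = sum_true * sum_pred / comb(n, 2)
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        # Degenerate case (e.g., every item in one cluster in both labelings).
        return 1.0
    return (sum_cells - expected) / (max_index - expected)


if __name__ == "__main__":
    truth = [0, 0, 1, 1]
    pred = [1, 1, 0, 0]  # same grouping, different label names
    print(purity(truth, pred), adjusted_rand_index(truth, pred))
```

Both measures depend only on how items are grouped, not on the label names, so a clustering that recovers the true groups under different cluster identifiers still scores 1.0.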

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Boonchoo, T.; Ao, X.; He, Q. Multi-Aspect Embedding for Attribute-Aware Trajectories. Symmetry 2019, 11, 1149. https://doi.org/10.3390/sym11091149
