1. Introduction

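Nowadays, various models and methodologies are available to calculate theoretical values of options, including the famous Black–Scholes model as well as stochastic volatility models such as the Heston or the Stein and Stein models. These models assume that the price of the underlying asset is represented by a continuous function, which is not always consistent with real market prices. This paper focuses on jump–diffusion models originally suggested by Merton [1] and later generalized (e.g., in [2]). These models assume that the spot price $S$ of the underlying asset at time $\tau$ follows the jump–diffusion process

$$\frac{dS(\tau)}{S(\tau^-)} = \mu \, d\tau + \sigma \, dW(\tau) + d\left( \sum_{i=1}^{N(\tau)} \left( J_i - 1 \right) \right), \qquad (1)$$

where $S(\tau^-)$ denotes the left-hand limit of $S$ at $\tau$, $\mu$ is the drift rate, $W$ is standard Brownian motion and $\sigma$ is the volatility associated with the Brownian component of the process. $N(\tau)$ is a Poisson process with intensity $\lambda$ that is independent of $W$, and $(J_i)_{i \ge 1}$ is a sequence of independent identically distributed nonnegative random variables such that $\ln J_i$ has a distribution with the probability density function $g$. There are several possible choices for the function $g$. In the Merton model, $\ln J_i$ is normally distributed with the mean $\mu_J$ and the standard deviation $\sigma_J$, and

$$g(x) = \frac{1}{\sqrt{2\pi}\,\sigma_J} \exp\left( -\frac{(x - \mu_J)^2}{2\sigma_J^2} \right). \qquad (2)$$

For other models and more details, see [1,2,3]. Let the variable $t$ represent time to maturity and $r$ be a risk-free rate. Using the no-arbitrage principle and the standard Itô calculus, one can derive that the market price $V(S,t)$ of the option at time to maturity $t$ is the solution of the equation [1,2,3,4]:

$$\frac{\partial V}{\partial t}(S,t) = \mathcal{L}_D V(S,t) + \mathcal{L}_I V(S,t), \qquad (3)$$

where the operators $\mathcal{L}_D$ and $\mathcal{L}_I$ are given by

$$\mathcal{L}_D V(S,t) = \frac{\sigma^2 S^2}{2} \frac{\partial^2 V}{\partial S^2}(S,t) + (r - \lambda\kappa)\, S \frac{\partial V}{\partial S}(S,t) - (r + \lambda)\, V(S,t)$$

and

$$\mathcal{L}_I V(S,t) = \lambda \int_0^\infty V(Sy, t) \, \frac{g(\ln y)}{y} \, dy.$$

The parameter $\kappa$ represents the expected value $\kappa = \mathbb{E}(J_i - 1)$. The initial and boundary conditions depend on the type of the option.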
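For the Merton model, the jump parameter $\kappa$ has a closed form, because $J_i$ is lognormal. The sketch below evaluates the density $g$ from Equation (2) and $\kappa = e^{\mu_J + \sigma_J^2/2} - 1$, and cross-checks $\kappa$ against a direct numerical evaluation of $\mathbb{E}(J_i - 1) = \int (e^x - 1) g(x)\,dx$. The helper names and the parameter values $\mu_J = -0.9$, $\sigma_J = 0.45$ are illustrative assumptions, not the data of this paper.

```python
import math

def merton_log_jump_density(x, mu_j, sigma_j):
    """Density g of ln J_i in the Merton model (illustrative helper, hypothetical name)."""
    z = (x - mu_j) / sigma_j
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma_j)

def merton_kappa(mu_j, sigma_j):
    """kappa = E(J_i - 1) = exp(mu_j + sigma_j^2 / 2) - 1 for lognormal J_i."""
    return math.exp(mu_j + 0.5 * sigma_j ** 2) - 1.0

def kappa_by_quadrature(mu_j, sigma_j, n=20000, width=12.0):
    """Cross-check: midpoint rule for the integral of (e^x - 1) g(x) over mu_j +/- width * sigma_j."""
    a = mu_j - width * sigma_j
    h = 2.0 * width * sigma_j / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h
        total += (math.exp(x) - 1.0) * merton_log_jump_density(x, mu_j, sigma_j)
    return total * h

# Illustrative jump parameters only (not taken from this paper).
mu_j, sigma_j = -0.9, 0.45
print(merton_kappa(mu_j, sigma_j), kappa_by_quadrature(mu_j, sigma_j))
```

A negative $\mu_J$ produces downward jumps on average, so $\kappa < 0$ here. The drift correction $-\lambda\kappa$ in $\mathcal{L}_D$ is then positive.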

In

Section 3, Equation (

3) is transformed to logarithmic prices and restricted to a bounded domain. Then, the Galerkin method combined with the Crank–Nicolson scheme is used for its numerical solution. The stability of the scheme and convergence of the method have already been investigated (see [

4,

5,

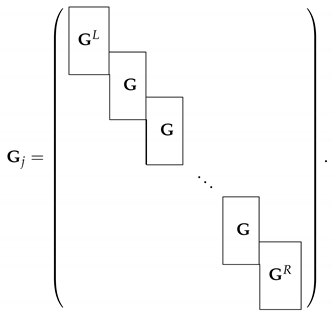

6] and references therein). This paper focuses on the structure of the discretization matrices. It is known that matrices arising from wavelet discretization of differential operators have a so-called finger pattern (see

Figure 1, left). In [

7,

8,

9,

10], cubic and quartic wavelet bases were constructed such that the bi-infinite stiffness and mass matrices have a finite number of nonzero entries in each column and have a similar structure, as shown in

Figure 1 (left). This simplifies the algorithm and increases the efficiency of wavelet-based methods. However, a construction of quadratic spline wavelets with this property has not yet been proposed, and such a construction is the main aim of this paper. It should also be mentioned that the wavelet bases from [

11,

12,

13], which are semiorthogonal with respect to the

-seminorm, lead to banded matrices for the one-dimensional Poisson equation, and the

-orthogonal wavelets from [

14,

15] lead to diagonal mass matrices.

The differential operator $\mathcal{L}_D$ is a special case of a more general second-order differential operator $\mathcal{L}$ with variable coefficients, some of which are assumed to be positive definite. The operator $\mathcal{L}$ covers the Poisson equation, the Helmholtz equation, the stationary convection–diffusion equation, and various equations from financial mathematics, such as the equations representing the Black–Scholes model and the Heston model semidiscretized in time using the $\theta$-scheme.

Let $\mathcal{P}_m$ denote the set of all polynomials on $[0,1]$ of degree at most $m$. The main aim of this paper is to construct a quadratic spline wavelet basis $\Psi$ on the interval $[0,1]$ satisfying homogeneous Dirichlet boundary conditions such that the matrix representing $\mathcal{L}$ with respect to $\Psi$ and the $L^2$-inner product has only a small number of nonzero entries in each column whenever the coefficients of $\mathcal{L}$ are polynomials whose degrees are bounded in terms of some $l$.

Wavelet bases on product domains can be constructed using an isotropic or anisotropic tensor product approach (see, e.g., [16,17]). Under analogous assumptions on the coefficients, the discretization matrices for such multidimensional wavelet bases also have only a small number of nonzero entries in each row. Although this sparsity property holds for any $l$, in most applications $l$ should be small.

Since the differential operator $\mathcal{L}_D$ is a special case of the operator $\mathcal{L}$, the discretization matrix for $\mathcal{L}_D$ is sparse. This paper also aims to derive decay estimates for the entries of the matrix arising from the discretization of the integral term $\mathcal{L}_I$ using the Galerkin method with the proposed wavelet basis, and to show that truncated discretization matrices for the problem in Equation (3) are sparse. The sparsity of the discretization matrix has two advantages. First, the multiplication of this matrix by a vector requires $\mathcal{O}(N)$ floating-point operations, where $N$ is the number of basis functions. By contrast, other quadratic spline wavelet bases require $\mathcal{O}(N \log N)$ operations, and other bases such as the quadratic B-spline basis require $\mathcal{O}(N^2)$ operations because the matrix is typically full. This increases the efficiency of iterative methods for the numerical solution of the resulting discrete system. Second, due to the smaller number of nonzero entries, the discretization matrix is computed faster with the proposed basis than with other bases of the same order, e.g., other quadratic spline wavelet bases and quadratic B-spline bases.

In addition to the orthogonality property, the wavelets are required to have vanishing moments, and the wavelet basis is required to be well-conditioned. Vanishing wavelet moments determine the decay of the entries of discretization matrices. Since the constructed wavelets have three vanishing moments, this decay is fast (see Theorem 5 in Section 3). Furthermore, due to the vanishing moments, the basis can be used in adaptive wavelet methods (see [17]). A well-conditioned basis is important because the condition numbers of discretization matrices depend on the condition number of the basis. Small condition numbers of system matrices guarantee the stability of the computation and reduce the number of iterations of iterative methods used for the numerical solution of the resulting system.

Due to these properties, the wavelet basis proposed in this paper can be used in many applications, such as the numerical solution of various types of operator equations using the wavelet-Galerkin method, an adaptive wavelet method, or a collocation method. For a survey of such applications, refer to [4,17,18].

The paper is organized as follows. In

Section 2, a construction of a wavelet basis satisfying the aforementioned properties is proposed and a rigorous proof of its Riesz basis property is provided. It is shown that the condition numbers of the basis are small with respect to both the

-norm and the

-seminorm. In

Section 3, the problem in Equation (

3) is discretized and the properties of discretization matrices studied. Finally, in

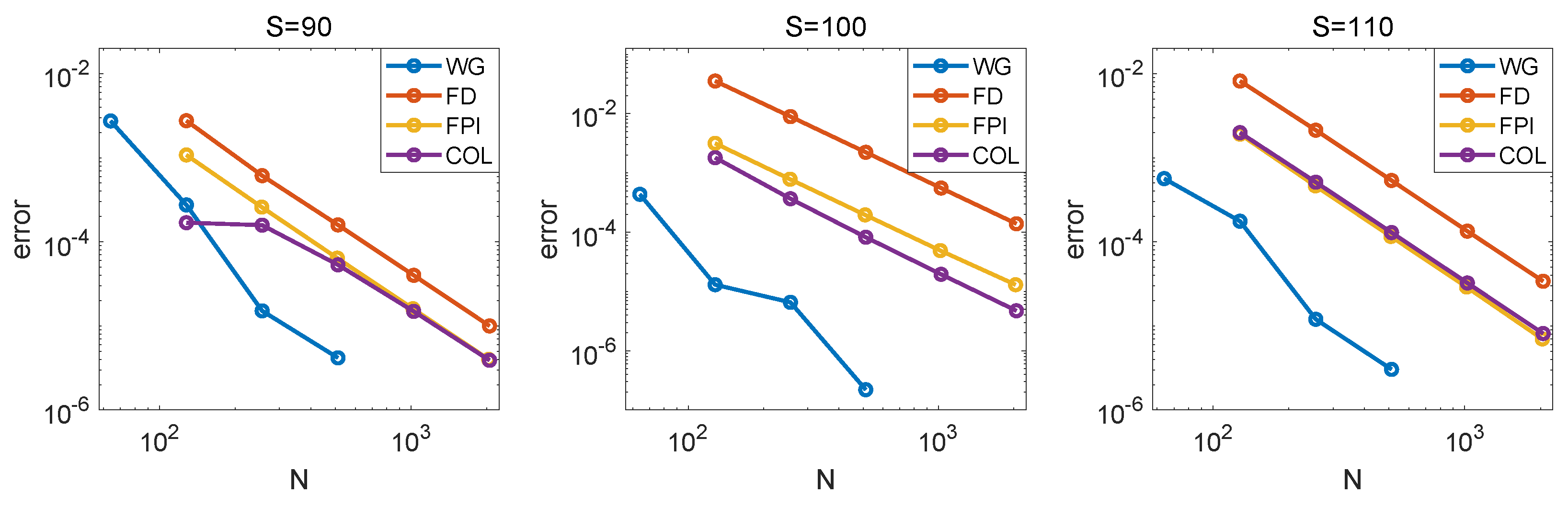

Section 4, numerical experiments are provided for pricing European options under the Merton model, and it is shown that the proposed method is efficient because it can achieve high-order convergence with respect to both time and spatial variables, the number of iterations is small, and due to sparsity of system matrices one iteration only requires a small number of floating-point operations. Furthermore, in comparison with methods from [

19,

20,

21], the proposed method requires a smaller number of degrees of freedom to obtain a sufficiently accurate solution.

2. Construction of Wavelets

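First, the concept of a wavelet basis is briefly recalled. Let $\Lambda$ be an at most countable index set such that each index $\lambda \in \Lambda$ takes the form $\lambda = (j,k)$, and denote $|\lambda| = j$. The norm of $\mathbf{b} = \{b_\lambda\}_{\lambda \in \Lambda}$, $b_\lambda \in \mathbb{R}$, is defined by

$$\|\mathbf{b}\|_2 = \Big( \sum_{\lambda \in \Lambda} |b_\lambda|^2 \Big)^{1/2}.$$

The space of all sequences $\mathbf{b}$ with finite norm is denoted by $\ell^2(\Lambda)$. The symbol $L^2(0,1)$ denotes the space of square-integrable functions defined on $(0,1)$. Let $H \subset L^2(0,1)$ be a real Hilbert space equipped with the inner product $\langle \cdot, \cdot \rangle_H$ and the norm $\|\cdot\|_H$. For example, $H$ can be the Sobolev space $H^1_0(0,1)$ of functions that vanish at the boundary points and whose first weak derivatives are in $L^2(0,1)$. The aim is to construct a wavelet basis for $H$ in the sense of the following definition.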

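Definition 1. A family $\Psi = \{\psi_\lambda, \lambda \in \Lambda\}$ is called a wavelet basis of $H$ if:

- (i) $\Psi$ is a Riesz basis for $H$, i.e., the span of $\Psi$ is dense in $H$ and there exist constants $c, C \in (0, \infty)$ such that
$$c \, \|\mathbf{b}\|_2 \le \Big\| \sum_{\lambda \in \Lambda} b_\lambda \psi_\lambda \Big\|_H \le C \, \|\mathbf{b}\|_2 \quad \text{for all } \mathbf{b} = \{b_\lambda\}_{\lambda \in \Lambda} \in \ell^2(\Lambda). \qquad (14)$$
- (ii) The functions are local in the sense that $\operatorname{diam} \operatorname{supp} \psi_\lambda \le C \, 2^{-|\lambda|}$ for all $\lambda \in \Lambda$, where the constant $C$ does not depend on $\lambda$, and at a given level $j$ the supports of only finitely many wavelets overlap at any point $x$.
- (iii) The family $\Psi$ has the hierarchical structure $\Psi = \Phi_{j_0} \cup \bigcup_{j \ge j_0} \Psi_j$ for some $j_0 \in \mathbb{N}$.
- (iv) There exists $L \in \mathbb{N}$ such that all functions $\psi_\lambda \in \Psi \setminus \Phi_{j_0}$ have $L$ vanishing moments, i.e.,
$$\int_0^1 x^k \psi_\lambda(x) \, dx = 0, \quad k = 0, \ldots, L - 1.$$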

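For two countable sets of functions $\Gamma, \Theta \subset H$, the symbol $\langle \Gamma, \Theta \rangle_H$ denotes the matrix

$$\langle \Gamma, \Theta \rangle_H = \{ \langle \gamma, \theta \rangle_H \}_{\gamma \in \Gamma, \, \theta \in \Theta}.$$

The constants $c_\Psi = \sup\{c : c \text{ satisfies } (14)\}$ and $C_\Psi = \inf\{C : C \text{ satisfies } (14)\}$ are called the lower and upper Riesz bound, respectively, and the number $\operatorname{cond}_H \Psi = C_\Psi / c_\Psi$ is called the condition number of $\Psi$. In some papers, the squares of norms are used in Equation (14) and the Riesz bounds are defined as $c_\Psi^2$ and $C_\Psi^2$. The Gram matrix $\langle \Psi, \Psi \rangle_H$ can be finite or bi-infinite. It is known that it represents a linear operator that is continuous, positive definite, and self-adjoint, and that the constants $c_\Psi$ and $C_\Psi$ satisfy

$$c_\Psi = \sqrt{\lambda_{\min}\big(\langle \Psi, \Psi \rangle_H\big)}, \qquad C_\Psi = \sqrt{\lambda_{\max}\big(\langle \Psi, \Psi \rangle_H\big)},$$

where $\lambda_{\min}$ and $\lambda_{\max}$ denote the infimum and the supremum of the spectrum, respectively. If $\Psi$ satisfies Equation (14) but the span of $\Psi$ is not necessarily dense in $H$, then $\Psi$ is called a Riesz sequence in $H$.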
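For a finite Gram matrix, the Riesz bounds in the non-squared convention of Equation (14) are the square roots of its extreme eigenvalues. This follows from $\|\sum_\lambda b_\lambda \psi_\lambda\|_H^2 = \mathbf{b}^T \langle \Psi, \Psi \rangle_H \mathbf{b}$. A minimal NumPy sketch is shown below; the function name is a hypothetical helper, not code from the paper.

```python
import numpy as np

def riesz_bounds(gram):
    """Riesz bounds (c, C) and condition number C / c of a finite Gram matrix
    (illustrative helper, hypothetical name). Uses c = sqrt(lambda_min), C = sqrt(lambda_max)."""
    eigenvalues = np.linalg.eigvalsh(np.asarray(gram, dtype=float))  # ascending order
    if eigenvalues[0] <= 0:
        raise ValueError("Gram matrix must be positive definite")
    c = np.sqrt(eigenvalues[0])
    C = np.sqrt(eigenvalues[-1])
    return c, C, C / c
```

For example, the Gram matrix $\begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$ has eigenvalues $1$ and $3$, so $c = 1$, $C = \sqrt{3}$ and the condition number is $\sqrt{3}$.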

The definition of a wavelet basis is not unified in the mathematical literature, and Conditions (i)–(iv) from Definition 1 can be generalized. The functions from the set $\Phi_{j_0}$ are called scaling functions, and the functions from the set $\Psi_j$, $j \ge j_0$, are called wavelets on the level $j$. Wavelets in the inner part of the interval are typically translations and dilations of one function $\psi$ or of several functions $\psi^{(1)}, \ldots, \psi^{(n)}$, also called wavelets, i.e.,

$$\psi_{j,k}(x) = 2^{j/2} \, \psi^{(i)}\big(2^j x - m\big)$$

for some $i \in \{1, \ldots, n\}$ and some $m \in \mathbb{Z}$. Similarly, the wavelets near the boundary are derived from functions called boundary wavelets.

In the following, a construction of a new wavelet basis is proposed. Scaling functions are defined as in [

22,

23,

24]. Let

and

be quadratic B-splines on knots

and

, respectively. Then,

and

have the explicit form

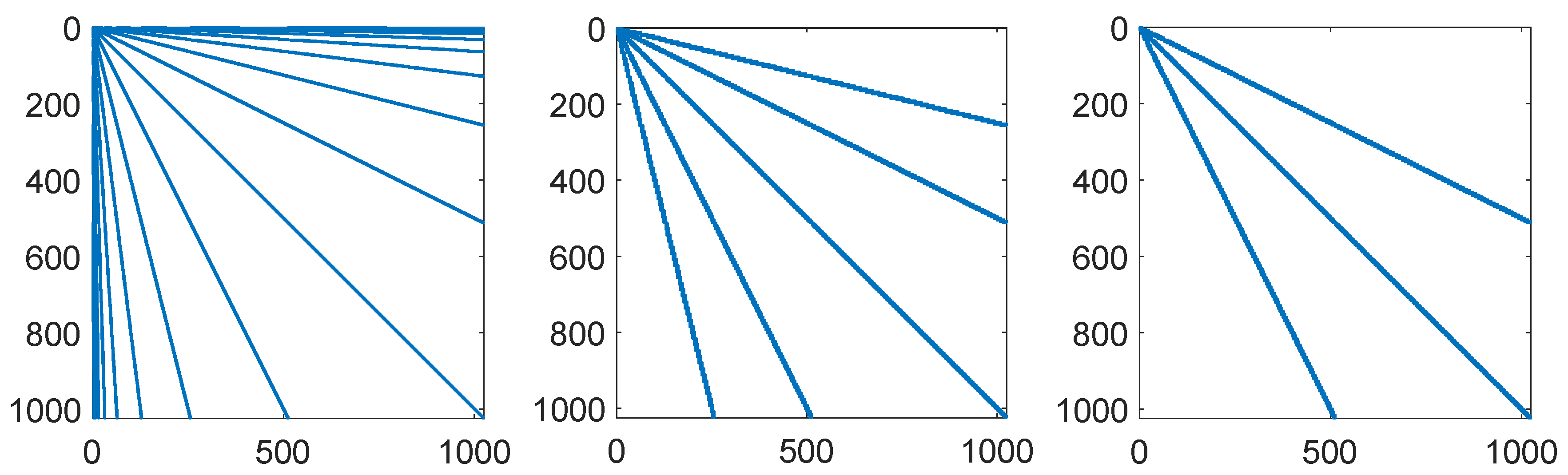

The graphs of the functions

and

are displayed in

Figure 2.

For

, the functions

where

, form the scaling basis

and the spaces

form a multiresolution analysis.
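The scaling functions can be evaluated, and their $L^2$ Gram (mass) matrix assembled, with a few lines of NumPy. The sketch below assumes the knots $0,1,2,3$ and $0,0,1,2$ and the indexing $\phi_{j,k}(x) = 2^{j/2}\phi(2^j x - k + 2)$ with boundary functions $\phi_{j,1}$ and $\phi_{j,2^j}$. These choices and all function names are illustrative assumptions rather than code from the paper. A three-point Gauss rule per mesh cell integrates products of quadratics exactly.

```python
import numpy as np

def phi(x):
    """Quadratic B-spline on knots 0, 1, 2, 3 (illustrative helper)."""
    x = np.asarray(x, dtype=float)
    return np.where((x >= 0) & (x < 1), x**2 / 2,
           np.where((x >= 1) & (x < 2), (-2 * x**2 + 6 * x - 3) / 2,
           np.where((x >= 2) & (x <= 3), (3 - x)**2 / 2, 0.0)))

def phi_b(x):
    """Boundary quadratic B-spline on knots 0, 0, 1, 2; it vanishes at x = 0."""
    x = np.asarray(x, dtype=float)
    return np.where((x >= 0) & (x < 1), 2 * x - 1.5 * x**2,
           np.where((x >= 1) & (x <= 2), (2 - x)**2 / 2, 0.0))

def scaling_function(j, k, x):
    """phi_{j,k} on [0, 1], k = 1, ..., 2^j (assumed indexing, hypothetical helper)."""
    n = 2**j
    x = np.asarray(x, dtype=float)
    if k == 1:
        return 2**(j / 2) * phi_b(n * x)
    if k == n:
        return 2**(j / 2) * phi_b(n * (1 - x))
    return 2**(j / 2) * phi(n * x - k + 2)

def mass_matrix(j, n_gauss=3):
    """L2 Gram matrix of Phi_j, assembled cell by cell with Gauss-Legendre quadrature."""
    n = 2**j
    nodes, weights = np.polynomial.legendre.leggauss(n_gauss)
    M = np.zeros((n, n))
    for cell in range(n):
        a, b = cell / n, (cell + 1) / n
        x = 0.5 * (b - a) * nodes + 0.5 * (a + b)   # Gauss nodes mapped to the cell
        w = 0.5 * (b - a) * weights
        values = np.array([scaling_function(j, k, x) for k in range(1, n + 1)])
        M += (values * w) @ values.T
    return M
```

Because of the $2^{j/2}$ normalization, the diagonal entries do not depend on $j$. They equal $1/3$ for the boundary functions and $11/20$ for the inner ones.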

To obtain sparse discretization matrices, dual spaces $\tilde{V}_j$ and complement spaces $W_j = V_{j+1} \cap \tilde{V}_j^{\perp}$ are introduced, where $\tilde{V}_j^{\perp}$ is the $L^2$-orthogonal complement of $\tilde{V}_j$.

Lemma 1. The functions and , , , satisfy for , , and , . Furthermore, the functions and , , , satisfy .

Proof. Assume that , , and . Then, , and thus . Since and is orthogonal to , is obtained. Using a similar argument and the relations and , the remaining part of the lemma is proved. ☐

Therefore, if wavelets are defined as basis functions for the complement spaces, then the matrices in Equation (10) will be sparse.

Figure 1 shows the resulting matrix structures for two particular cases of the coefficients. The inner wavelet generators and the boundary wavelet generator are defined as linear combinations of scaling functions. The coefficients of these combinations are computed such that each generator is orthogonal to a prescribed set of functions. This leads to systems of linear algebraic equations with infinitely many solutions. Numerical experiments were used to find coefficients that lead to a well-conditioned wavelet basis, both for the inner wavelet generators and for the boundary wavelet.

The graphs of the constructed wavelets are displayed in Figure 2. Two of the inner wavelet generators are symmetric, the other two are antisymmetric, and all the wavelets have three vanishing moments.

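For $j \ge j_0$, the wavelet basis $\Psi_j$ on the level $j$ contains the translations and dilations of the inner wavelet generators together with the boundary wavelets. For $s \in \mathbb{N}$, the sets

$$\Psi = \Phi_{j_0} \cup \bigcup_{j = j_0}^{\infty} \Psi_j, \qquad \Psi_s = \Phi_{j_0} \cup \bigcup_{j = j_0}^{j_0 + s - 1} \Psi_j \qquad (31)$$

are a wavelet basis and its finite-dimensional subset, respectively. In the following, the proof of the Riesz basis property of $\Psi$ is provided.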

Theorem 1. The wavelets have three vanishing moments, and the sets $\Psi_j$, $j \ge j_0$, are Riesz bases of the spaces $W_j$ whose Riesz bounds are uniformly bounded. That is, there exist constants $c > 0$ and $C$, independent of $j$, such that the lower Riesz bounds are greater than or equal to $c$ and the upper Riesz bounds are less than or equal to $C$.

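Proof. It was already mentioned that the wavelet generators have three vanishing moments. Indeed, the generators are defined to be $L^2$-orthogonal to certain scaling functions. The polynomials $1$, $x$, $x^2$, restricted to the support of a generator, are linear combinations of these functions. Hence $\int x^k \psi(x)\,dx = 0$ for $k = 0, 1, 2$, i.e., the generators have three vanishing moments. Therefore, the wavelets, which are their translations and dilations, have three vanishing moments as well.

The orthogonality relations imposed on the generators imply that the wavelets on the level $j$ are $L^2$-orthogonal to the functions forming the basis of $\tilde{V}_j$, and thus $\Psi_j \subset W_j$. Since the number of elements in $\Psi_j$ is equal to the dimension of $W_j$, the set $\Psi_j$ is a basis of $W_j$. Every finite-dimensional basis is a Riesz basis, so it remains to be proven that the Riesz bounds for $\Psi_j$ are uniformly bounded. The Gram matrix $\langle \Psi_j, \Psi_j \rangle$ has a structure similar to that of the matrix in Equation (42). Since its entries are inner products of piecewise polynomial functions, they can be computed exactly or with arbitrary precision. For all levels $j$, the Gershgorin circle theorem from [25] then yields lower and upper bounds for the eigenvalues of this matrix that are independent of $j$, and hence uniform Riesz bounds. ☐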
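The Gershgorin argument used above can be sketched in a few lines. For a real symmetric matrix, every eigenvalue lies in some interval $[a_{ii} - R_i, a_{ii} + R_i]$ with $R_i = \sum_{k \ne i} |a_{ik}|$. Consequently, a positive lower end of the union bounds $\lambda_{\min}$ away from zero, and $c \ge \sqrt{\text{lower}}$ for a Gram matrix. The helper name below is hypothetical, and the matrices are toy examples, not the Gram matrices of the constructed wavelets.

```python
import numpy as np

def gershgorin_interval(A):
    """Interval [lower, upper] containing all eigenvalues of a real symmetric matrix A
    (Gershgorin circle theorem; illustrative helper, hypothetical name)."""
    A = np.asarray(A, dtype=float)
    diag = np.diag(A)
    radii = np.sum(np.abs(A), axis=1) - np.abs(diag)   # off-diagonal absolute row sums
    return float(np.min(diag - radii)), float(np.max(diag + radii))

# Toy tridiagonal example: the bounds [2, 6] hold for every matrix size.
T = 4 * np.eye(5) - np.eye(5, k=1) - np.eye(5, k=-1)
print(gershgorin_interval(T), np.linalg.eigvalsh(T))
```

For banded matrices whose entries repeat from level to level, as in the proof above, the same bounds are obtained for every $j$. This is exactly what makes the Riesz bounds uniform.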

The proof of the Riesz basis property in Equation (14) for $\Psi$ is based on the following theorem [8,10,26].

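Theorem 2. Let $j_0 \in \mathbb{N}$ and, for $j \ge j_0$, let $V_j$ and $\tilde{V}_j$ be subspaces of the space $L^2(0,1)$ such that $V_j \subset V_{j+1}$, $\tilde{V}_j \subset \tilde{V}_{j+1}$, and $\dim V_j = \dim \tilde{V}_j < \infty$. Let $\Phi_j$ be bases of $V_j$, $\tilde{\Phi}_j$ be bases of $\tilde{V}_j$, and $\Psi_j$ be bases of $W_j = V_{j+1} \cap \tilde{V}_j^{\perp}$. Assume that the Riesz bounds of $\Phi_j$, $\tilde{\Phi}_j$, and $\Psi_j$ with respect to the $L^2$-norm are uniformly bounded, and let $\Psi$ be composed of $\Phi_{j_0}$ and $\Psi_j$, $j \ge j_0$, as in Equation (31). Furthermore, assume that the matrix

$$\mathbf{G}_j = \langle \Phi_j, \tilde{\Phi}_j \rangle \qquad (34)$$

is invertible and that the spectral norm of $\mathbf{G}_j^{-1}$ is bounded independently of $j$. In addition, for some positive constants $C$, $\gamma$, and $d$ such that $\gamma < d$, let

$$\inf_{v_j \in V_j} \|v - v_j\| \le C \, 2^{-jd} \, \|v\|_{H^d(0,1)}, \quad v \in H^d(0,1), \qquad (35)$$

and

$$\|v_j\|_{H^s(0,1)} \le C \, 2^{js} \, \|v_j\|, \quad v_j \in V_j, \ s < \gamma, \qquad (36)$$

and similarly let Equations (35) and (36) hold for $\tilde{d}$ and $\tilde{\gamma}$ on the dual side. Then, the set $\{2^{-s|\lambda|} \psi_\lambda, \ \lambda \in \Lambda\}$ is a Riesz sequence in $H^s(0,1)$ for $s \in (-\tilde{\gamma}, \gamma)$.

The following theorem shows that the spaces $V_j$ and $\tilde{V}_j$ defined above satisfy the assumptions of Theorem 2.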

Theorem 3. There exist uniform Riesz bases of such that matrices defined by Equation (34) are invertible and the spectral norms of are bounded independently on j. Proof. Let

be defined by Equation (

24). For

let

such that

Since

for

, the relations in Equation (

38) lead to the system of four linear algebraic equations with four unknown coefficients for each function

. The invertibility of all four system matrices was verified using symbolic computations. Thus, the functions

exist and are unique. Then,

where

is a basis of

and the matrix

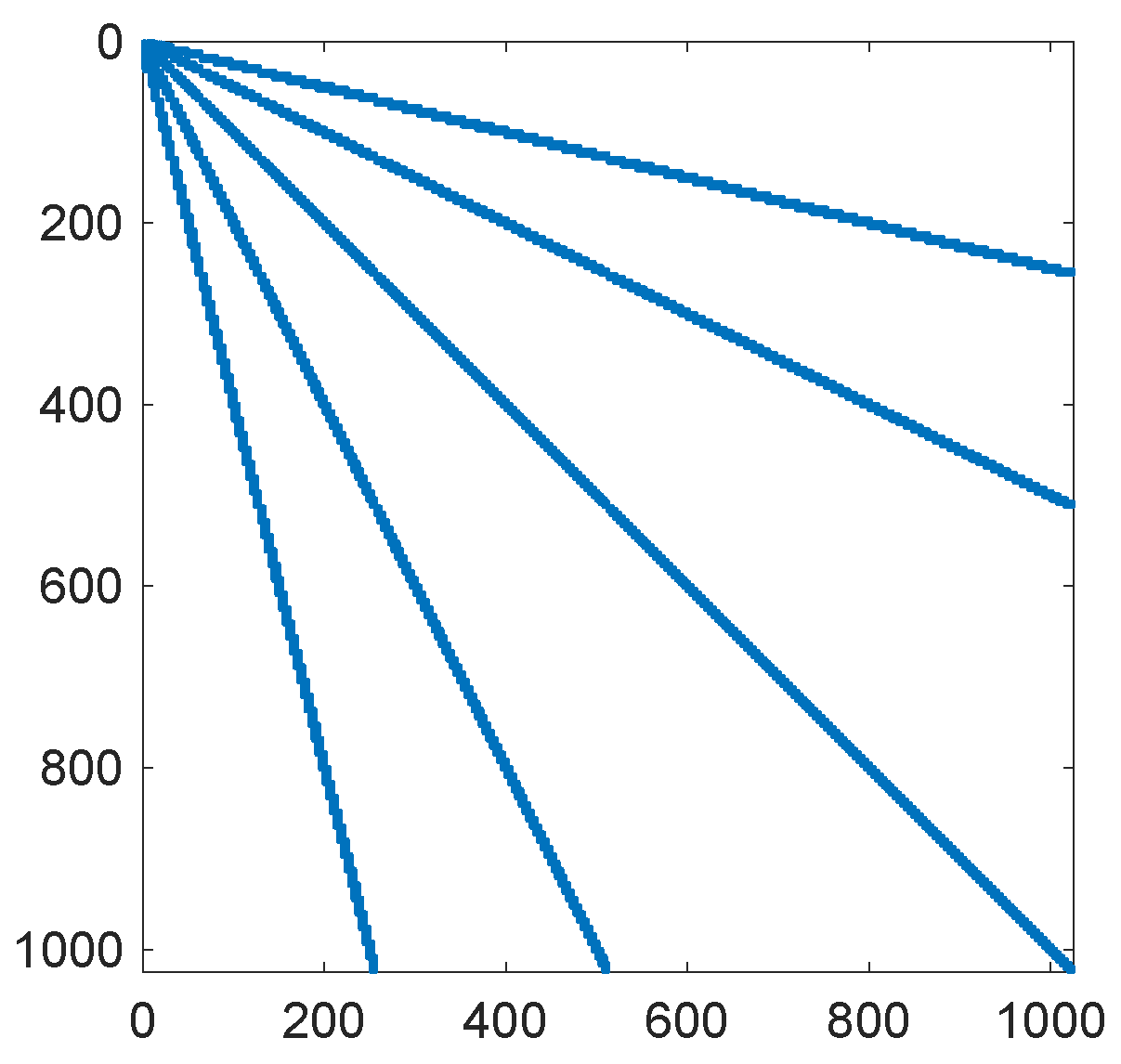

defined by Equation (

34) is tridiagonal and has the structure

Using symbolic computation and rounding the resulting elements of the matrix

to three decimal digits,

Similarly, the matrices

and

are given by

and

Thus, the matrices

and

are diagonally dominant and invertible. Due to the Johnson’s lower bound for the smallest singular value [

27],

where

. Therefore, the spectral norm of the inverse matrix satisfies

It remains to be proven that

are uniform Riesz bases of

. The matrices

are block diagonal matrices with

boundary blocks

and

and inner blocks

that do not depend on

j.

The Riesz lower bound

and the Riesz upper bound

satisfy

☐
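Johnson's bound [27] used in the proof states that $\sigma_{\min}(\mathbf{A}) \ge \min_i \big( |a_{ii}| - \tfrac{1}{2}(r_i + c_i) \big)$, where $r_i$ and $c_i$ are the off-diagonal absolute row and column sums. Whenever this lower bound is positive, $\|\mathbf{A}^{-1}\|_2 \le 1/\text{bound}$. The sketch below checks this on a small nonsymmetric, diagonally dominant matrix that is purely illustrative, not $\mathbf{G}_j$; the helper name is hypothetical.

```python
import numpy as np

def johnson_lower_bound(A):
    """Johnson's lower bound for the smallest singular value of a square matrix
    (illustrative helper, hypothetical name)."""
    A = np.asarray(A, dtype=float)
    absA = np.abs(A)
    d = np.diag(absA)
    r = absA.sum(axis=1) - d      # off-diagonal absolute row sums
    c = absA.sum(axis=0) - d      # off-diagonal absolute column sums
    return float(np.min(d - 0.5 * (r + c)))

A = np.array([[4.0, 1.0, 0.0],
              [0.5, 4.0, 1.0],
              [0.0, 0.5, 4.0]])
bound = johnson_lower_bound(A)                      # 2.5 for this matrix
sigma_min = np.linalg.svd(A, compute_uv=False).min()
inv_norm_bound = 1.0 / bound                        # upper bound on ||A^{-1}||_2
```

Because the entries of a banded matrix with level-independent boundary and inner parts do not change with $j$, the bound is level-independent as well.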

Theorem 4. The set $\Psi$ satisfies Equation (14) for $H = H^s(0,1)$, $s \in (-\tilde{\gamma}, \gamma)$, with the scaling from Theorem 2. In particular, $\Psi$ is a Riesz basis of the space $L^2(0,1)$, and $\Psi$ normalized in the $H^1$-norm or in the $H^1$-seminorm is a Riesz basis of the space $H^1_0(0,1)$.

Proof. Using the Gershgorin circle theorem as in the proof of Theorem 1, estimates for the lower and upper Riesz bounds of $\tilde{\Phi}_j$ are obtained. The estimates in Equations (35) and (36) are satisfied for suitable values of $d$, $\gamma$, $\tilde{d}$, and $\tilde{\gamma}$. These parameters depend on the polynomial exactness and smoothness of the primal and dual spaces (see [26]). Due to these facts, as well as Theorems 2 and 3, the proof is complete. ☐

Table 1 presents the minimal and maximal eigenvalues and the condition numbers (cond) of diagonally preconditioned stiffness and mass matrices, i.e., of the stiffness and mass matrices multiplied from both sides by the inverse square root of their diagonal parts. These values correspond to the lower and upper Riesz bounds and the condition numbers of the normalized wavelet bases with respect to the $L^2$-norm and the $H^1$-seminorm. The aim was to construct a quadratic spline wavelet basis that leads to sparse discretization matrices rather than to optimize the condition number. Nevertheless, the resulting basis is better conditioned than many other quadratic spline wavelet bases (see the comparison of quadratic spline wavelet bases in [16]).

A wavelet basis on a bounded interval $[a,b]$ can be constructed from the proposed wavelet basis on the unit interval using the simple linear transform $y = a + (b-a)x$, $x \in [0,1]$. Wavelet bases on the hypercube that are constructed using an isotropic, anisotropic, or sparse tensor product approach (see, e.g., [4,16,17]) preserve the properties of the wavelet basis on the interval, such as the Riesz basis property, vanishing moments, and the sparse structure of the discretization matrices.
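The linear transform amounts to composing each basis function with $x = (y - a)/(b - a)$. The sketch below additionally multiplies by $(b-a)^{-1/2}$ so that $L^2$ norms are preserved. This normalization factor is an assumption for illustration; the text above only specifies the change of variables. The helper names are hypothetical.

```python
import math

def to_interval(f, a, b):
    """Transport f from [0, 1] to [a, b] via x = (y - a) / (b - a); the factor
    (b - a)^{-1/2} (assumed normalization) keeps L2 norms unchanged. Hypothetical helper."""
    scale = 1.0 / math.sqrt(b - a)
    return lambda y: scale * f((y - a) / (b - a))

def l2_norm_squared(f, a, b, n=10000):
    """Midpoint-rule approximation of the squared L2 norm of f on [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) ** 2 for i in range(n))

g = lambda x: x * (1.0 - x)            # toy function on [0, 1] with zero boundary values
g_mapped = to_interval(g, -2.0, 3.0)
```

Derivatives pick up a factor $(b-a)^{-1}$ under this map. A basis normalized in the $H^1$-seminorm on $[0,1]$ therefore needs to be rescaled accordingly.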

3. Discretization of the Jump–Diffusion Option Pricing Models

In this section, the Galerkin method with the constructed wavelet basis is used for the valuation of options under jump–diffusion models. The choice of the method is motivated by the fact that the Galerkin method using a wavelet basis, also called the wavelet-Galerkin method, has several advantages for equations containing an integral term. As mentioned above, the discretization matrices for the wavelet-Galerkin method are sparse or quasi-sparse, while most of the standard methods suffer from the fact that the discretization matrices are full. Furthermore, the wavelet-Galerkin method is higher-order accurate if higher-order bases are used and the solution is sufficiently smooth. For many types of equations, the discretization matrices are well-conditioned, which results in a small number of iterations when iterative methods are used to solve the resulting discrete system. For details on the methods for the numerical solution of integral equations and operator equations containing an integral term, see [4,18].

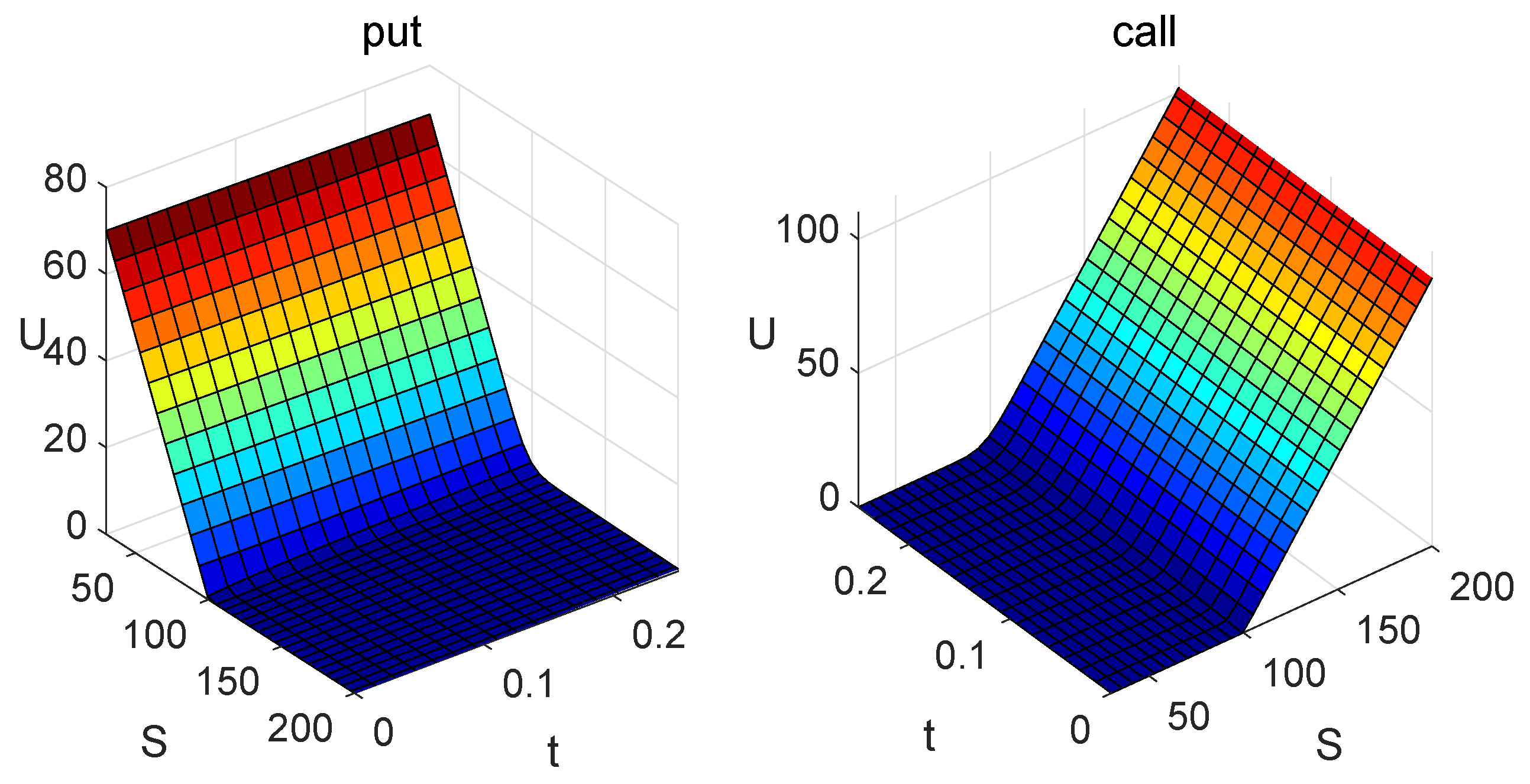

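Recall that the jump–diffusion models are represented by the partial integro-differential Equation (3). The initial and boundary conditions depend on the type of the option. Here, the method is presented for a European put option. The value of a European call option can be computed using the put–call parity [3]. The initial condition for a vanilla European put option is

$$V(S,0) = \max(K - S, 0),$$

where $K$ is the strike price, and the boundary conditions have the form

$$V(0,t) = K e^{-rt}, \qquad \lim_{S \to \infty} V(S,t) = 0 \quad \text{for } t \in [0,T],$$

where $T$ is the time to expiry. The minimal value $S_{\min}$ and the maximal value $S_{\max}$ are chosen such that the domain $(S_{\min}, S_{\max})$ approximates the unbounded domain $(0, \infty)$. Since $V(S,t) \approx K e^{-rt} - S$ for small $S$, the boundary conditions at $S_{\min}$ and $S_{\max}$ have the form

$$V(S_{\min}, t) = K e^{-rt} - S_{\min}, \qquad V(S_{\max}, t) = 0.$$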
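The put payoff, the small-$S$ approximation used at $S_{\min}$, and the put–call parity can be written down directly. The sketch below assumes no dividends, which is the setting in which $C = P + S - K e^{-rt}$ holds. It illustrates that the condition at $S_{\min}$ corresponds to a worthless call. The helper names are hypothetical.

```python
import math

def put_payoff(S, K):
    """Initial condition V(S, 0) = max(K - S, 0) for a vanilla European put (hypothetical helper)."""
    return max(K - S, 0.0)

def put_boundary_small_S(S, K, r, t):
    """Approximation V(S, t) ~ K e^{-rt} - S, used as the condition at S_min."""
    return K * math.exp(-r * t) - S

def call_from_put(put_value, S, K, r, t):
    """Put-call parity without dividends (assumption): C = P + S - K e^{-rt}."""
    return put_value + S - K * math.exp(-r * t)
```

For example, with $S = 1$, $K = 100$, $r = 0.05$ and $t = 0.25$, the call value obtained from the boundary approximation is zero up to rounding.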

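It is convenient to transform Equation (3) to logarithmic prices $x = \ln S$ because the transformed differential operator has constant coefficients. The transformed equation is given by

$$\frac{\partial u}{\partial t}(x,t) = \frac{\sigma^2}{2} \frac{\partial^2 u}{\partial x^2}(x,t) + \Big( r - \lambda\kappa - \frac{\sigma^2}{2} \Big) \frac{\partial u}{\partial x}(x,t) - (r + \lambda)\, u(x,t) + \lambda \int_{\mathbb{R}} u(x + z, t) \, g(z) \, dz, \qquad (54)$$

where $u(x,t) = V(e^x, t)$, $x_{\min} = \ln S_{\min}$, $x_{\max} = \ln S_{\max}$, and $X = (x_{\min}, x_{\max})$.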
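The constant-coefficient form follows from the chain rule. With $u(x) = V(e^x)$, one has $u' = S V'$ and $u'' = S^2 V'' + S V'$, so $\tfrac{\sigma^2}{2}u'' + (r - \lambda\kappa - \tfrac{\sigma^2}{2})u' - (r+\lambda)u = \mathcal{L}_D V$. The sketch below checks this identity numerically with central differences for an arbitrary smooth test function. The parameter values and helper names are hypothetical and chosen only for illustration.

```python
import math

def L_D_in_S(V, S, sigma, r, lam, kappa, h=1e-4):
    """L_D V = sigma^2 S^2/2 V'' + (r - lam kappa) S V' - (r + lam) V (central differences in S)."""
    d1 = (V(S + h) - V(S - h)) / (2 * h)
    d2 = (V(S + h) - 2 * V(S) + V(S - h)) / h**2
    return 0.5 * sigma**2 * S**2 * d2 + (r - lam * kappa) * S * d1 - (r + lam) * V(S)

def L_D_in_x(u, x, sigma, r, lam, kappa, h=1e-4):
    """Constant-coefficient form in x = ln S (central differences in x)."""
    d1 = (u(x + h) - u(x - h)) / (2 * h)
    d2 = (u(x + h) - 2 * u(x) + u(x - h)) / h**2
    return 0.5 * sigma**2 * d2 + (r - lam * kappa - 0.5 * sigma**2) * d1 - (r + lam) * u(x)

# Hypothetical parameters and a smooth test function V(S).
sigma, r, lam, kappa = 0.15, 0.05, 0.1, -0.55
V = lambda S: S**2 + math.sin(S)
u = lambda x: V(math.exp(x))          # u(x) = V(e^x)
S0 = 1.3
lhs = L_D_in_S(V, S0, sigma, r, lam, kappa)
rhs = L_D_in_x(u, math.log(S0), sigma, r, lam, kappa)
```

The two values agree up to the finite-difference error, which confirms the drift $r - \lambda\kappa - \sigma^2/2$ of the transformed operator.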

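The error caused by localization, i.e., by solving Equation (54) on a bounded domain $X$ instead of on the whole real line, was studied in [4,28]. The value of a put option and the probability density function both decay at infinity, so the part of the integral beyond $x_{\max}$ is negligible. Furthermore, since $V(S,t) \approx K e^{-rt} - S$ for $S$ close to zero, the integral term can be approximated by the sum of an integral over $X$ and a term corresponding to the part of the real line below $x_{\min}$. In that part, the solution is replaced by this approximation.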

The boundary condition at the point $x_{\min}$ is a non-homogeneous Dirichlet boundary condition. Therefore, Equation (54) can be transformed into an equation with homogeneous Dirichlet boundary conditions. Let $u = w + W$, where $u$ is the solution of Equation (54) satisfying the initial and boundary conditions defined above, and $W$ is a sufficiently smooth function satisfying these boundary conditions. Several choices of $W$ are possible; any sufficiently smooth function that matches the boundary values can be used. Then, $w$ is the solution of the correspondingly modified equation, which satisfies the shifted initial condition and homogeneous Dirichlet boundary conditions.

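The symbol $L^2(0,T;B)$ denotes the Bochner space of (strongly measurable) functions $f$ such that $f(t) \in B$ for $t \in (0,T)$ and

$$\int_0^T \|f(t)\|_B^2 \, dt < \infty,$$

with $\|\cdot\|_B$ being the norm in the Banach space $B$. Let $a$ be the bilinear form associated with the spatial operator of the transformed equation, defined for all $u, v \in H^1_0(X)$.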

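Then, the variational formulation of Equation (60) reads as follows. Find $w \in L^2(0,T; H^1_0(X))$ such that $\partial w / \partial t \in L^2(0,T; H^{-1}(X))$, $w$ satisfies Equation (61), and the variational form of Equation (60), tested with all $v \in H^1_0(X)$, holds almost everywhere in $(0,T)$.

It can be shown that the bilinear form $a$ is continuous and satisfies a Gårding inequality, which implies the existence of a unique solution to this problem (see [4]).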

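The Crank–Nicolson scheme is used for time discretization. Let the interval $[0,T]$ be divided into $M$ time steps of length $\Delta t = T/M$, and denote $t_l = l \, \Delta t$ and $w^l = w(\cdot, t_l)$. The Crank–Nicolson scheme approximates the time derivative by the difference quotient $(w^{l+1} - w^l)/\Delta t$. The remaining terms of the variational formulation are replaced by the average of their values at $t_l$ and $t_{l+1}$, for $l = 0, \ldots, M - 1$.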
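After a Galerkin discretization in space, the scheme takes the matrix form $(\mathbf{M} + \tfrac{\Delta t}{2}\mathbf{A})\mathbf{u}^{l+1} = (\mathbf{M} - \tfrac{\Delta t}{2}\mathbf{A})\mathbf{u}^l + \tfrac{\Delta t}{2}(\mathbf{f}^{l} + \mathbf{f}^{l+1})$ for a semidiscrete system $\mathbf{M}\mathbf{u}' + \mathbf{A}\mathbf{u} = \mathbf{f}$. Here $\mathbf{M}$ is a mass matrix and $\mathbf{A}$ the matrix of the bilinear form. This sign convention and the names below are assumptions for illustration. The sketch is a generic implementation, checked on the scalar test problem $u' = -2u$.

```python
import numpy as np

def crank_nicolson(M, A, u0, f, T, n_steps):
    """Integrate M u'(t) + A u(t) = f(t) on [0, T] with the Crank-Nicolson scheme
    (generic sketch, hypothetical helper name)."""
    dt = T / n_steps
    lhs = M + 0.5 * dt * A
    rhs_matrix = M - 0.5 * dt * A
    u = np.array(u0, dtype=float)
    for l in range(n_steps):
        t_l, t_next = l * dt, (l + 1) * dt
        rhs = rhs_matrix @ u + 0.5 * dt * (f(t_l) + f(t_next))
        u = np.linalg.solve(lhs, rhs)
    return u

# Scalar test problem u' = -2u, u(0) = 1, exact solution exp(-2t).
M1, A1 = np.eye(1), np.array([[2.0]])
zero = lambda t: np.zeros(1)
err10 = abs(crank_nicolson(M1, A1, [1.0], zero, 1.0, 10)[0] - np.exp(-2.0))
err20 = abs(crank_nicolson(M1, A1, [1.0], zero, 1.0, 20)[0] - np.exp(-2.0))
```

Halving the time step reduces the error by a factor of about four, which reflects the second-order accuracy of the scheme. In the option pricing setting, the matrix $\mathbf{M} + \tfrac{\Delta t}{2}\mathbf{A}$ does not change between time levels, so it can be assembled (and compressed) once.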

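Let $\Psi$ be a wavelet basis for the space $L^2(X)$ such that $\Psi$ normalized in the $H^1$-norm is a wavelet basis for the space $H^1_0(X)$. Let $\Psi_s$ be a finite-dimensional subset of $\Psi$ with $s$ levels of wavelets, i.e., $\Psi_s$ has the structure in Equation (31), and denote $\mathcal{S}_s = \operatorname{span} \Psi_s$. The Galerkin method consists in finding $w_s^{l+1} \in \mathcal{S}_s$ such that the Crank–Nicolson relation holds for all $v \in \mathcal{S}_s$. Set $v = \psi_\mu$ for $\psi_\mu \in \Psi_s$ and expand $w_s^{l+1}$ in the basis $\Psi_s$, i.e.,

$$w_s^{l+1} = \sum_{\psi_\lambda \in \Psi_s} c_\lambda \psi_\lambda.$$

Then the vector of coefficients $\mathbf{c} = \{c_\lambda\}_{\psi_\lambda \in \Psi_s}$ is the solution of the system of linear algebraic equations $\mathbf{A}\mathbf{c} = \mathbf{f}$. The entries of the matrix $\mathbf{A}$ and of the right-hand side $\mathbf{f}$ are obtained by inserting the basis functions into the Crank–Nicolson scheme. Clearly, $\mathbf{c}$ and $\mathbf{f}$ depend on the time level $l$, but for simplicity the index $l$ is omitted.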

The stability of the Crank–Nicolson scheme and error estimates for the Galerkin method combined with the Crank–Nicolson scheme have already been studied (see, e.g., [4,5,6] and references therein). For quadratic spline basis functions and sufficiently smooth solutions, the $L^2$-norm of the error depends on two contributions. The first is the error of approximating the initial condition in the discretization space. The second is a term of order $\mathcal{O}(\Delta t^2 + h^3)$, where $h$ represents the spatial step, which for $N$ basis functions is given by $h = (x_{\max} - x_{\min})/N$.

In the following, the structure of a discretization matrix is studied. Using the Jacobi diagonal preconditioner

, where the diagonal elements of

satisfy

gives the preconditioned system

with

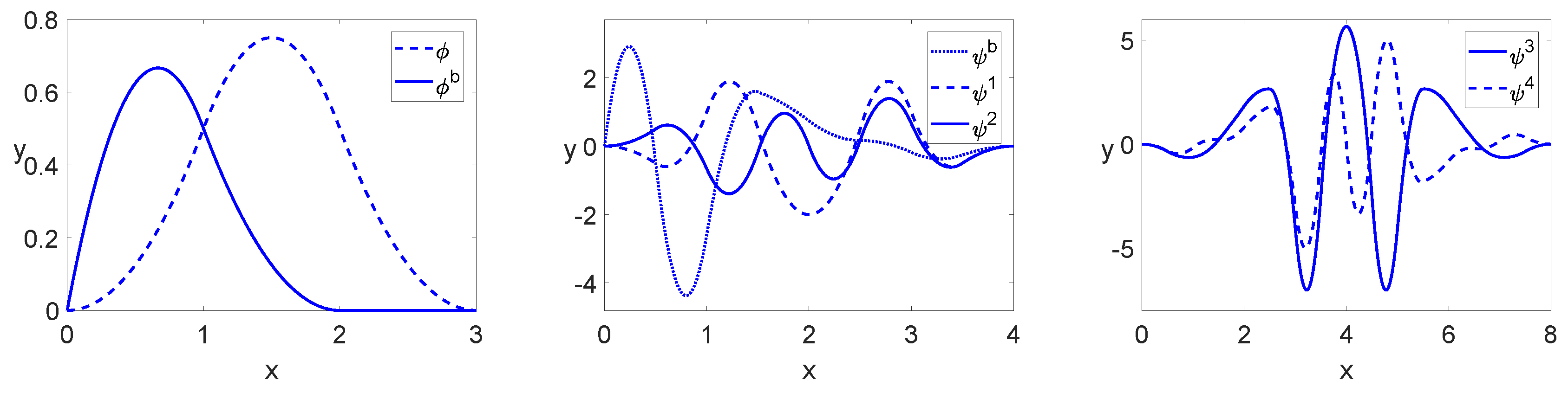
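Symmetric diagonal scaling removes the effect of badly scaled basis functions on the condition number. The toy example below scales a well-conditioned tridiagonal matrix by factors $1$, $10^2$, $10^4$, which makes it severely ill-conditioned. It then shows that $\mathbf{D}^{-1/2}\mathbf{A}\mathbf{D}^{-1/2}$ recovers a small condition number and that the original solution is obtained as $\mathbf{c} = \mathbf{D}^{-1/2}\hat{\mathbf{c}}$. The matrix and the helper name are illustrative assumptions, not the discretization matrix of this paper.

```python
import numpy as np

def jacobi_precondition(A, f):
    """Symmetric Jacobi scaling A_hat = D^{-1/2} A D^{-1/2}, f_hat = D^{-1/2} f with D = diag(A)
    (illustrative helper, hypothetical name)."""
    d = np.sqrt(np.diag(A))
    A_hat = A / np.outer(d, d)
    f_hat = f / d
    return A_hat, f_hat, d

B = np.array([[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]])
S = np.diag([1.0, 1e2, 1e4])            # badly scaled basis (toy example)
A = S @ B @ S
f = np.array([1.0, 2.0, 3.0])
A_hat, f_hat, d = jacobi_precondition(A, f)
c_hat = np.linalg.solve(A_hat, f_hat)
c = c_hat / d                           # undo the scaling: c = D^{-1/2} c_hat
```

Here $\hat{\mathbf{A}} = \mathbf{B}/2$, so its condition number is $(2+\sqrt{2})/(2-\sqrt{2}) \approx 5.83$, independently of the scaling factors.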

As is already known from the previous section, the matrix arising from the discretization of the differential operator $\mathcal{L}_D$ is sparse and has the structure displayed in Figure 1 (middle). Hence, the main focus is on the properties of the matrix corresponding to the integral term. For the Galerkin method with the standard spline basis, this matrix is full. However, for integral operators with some types of kernels and for wavelet bases with vanishing moments, many entries of the discretization matrices are known to be small. These entries can be thresholded, so the matrices can be approximated by sparse or quasi-sparse matrices (see, e.g., [4,18,29,30]). The following theorem provides decay estimates for the entries of this matrix for general wavelets with $L$ vanishing moments.

Theorem 5. Let $\Psi$ be a wavelet basis with $L$ vanishing moments, i.e., Conditions (i)–(iv) from Definition 1 are satisfied. Let be wavelets that are generated from wavelets , , respectively, via translations and dilations as in Equation (21). Denote by the maximum of the lengths of the supports of and , and . Denote , , . If , then with . Consequently, let be a bounded set such that the function satisfies . Then there exists a constant $C$ independent of such that for all wavelets and such that the set satisfies .

Proof. The proof is based on the Taylor expansion of the kernel, in a similar way as in [18,29]. Let the centers of the supports of and be denoted by and , respectively. If , then the function $K$ defined by Equation (80) satisfies . By Taylor's theorem, there exist a function $P$ that is a polynomial of degree at most $L-1$ with respect to $x$ and a function $Q$ that is a polynomial of degree at most $L-1$ with respect to $y$ such that , where and for some . Due to the $L$ vanishing moments of the wavelets and , is obtained, and similarly for $Q$. Using Property (ii) from Definition 1 gives the desired estimate. This proves the theorem. ☐
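The mechanism behind Theorem 5 can be seen in a one-dimensional analogue. The Taylor polynomial of degree $L-1$ is annihilated by the vanishing moments, so the inner product of a wavelet on level $j$ with a smooth function behaves like $2^{-j(L+1/2)}$. The sketch below uses the shifted Legendre polynomial $P_3(2t-1)$ on $[0,1]$ as a stand-in wavelet with $L = 3$ vanishing moments. This stand-in is an assumption for illustration only and is not one of the constructed spline wavelets.

```python
import numpy as np
from numpy.polynomial import legendre

nodes, weights = legendre.leggauss(20)   # Gauss-Legendre rule on [-1, 1]

def stand_in_wavelet(t):
    """P_3(2t - 1) on [0, 1]: orthogonal to 1, t, t^2, i.e. L = 3 vanishing moments
    (illustrative stand-in, not a spline wavelet from this paper)."""
    return legendre.legval(2.0 * t - 1.0, [0.0, 0.0, 0.0, 1.0])

def coefficient(f, j):
    """<f, psi_j> with psi_j(x) = 2^{j/2} psi(2^j x), supported on [0, 2^{-j}]."""
    h = 2.0 ** (-j)
    x = 0.5 * h * (nodes + 1.0)          # Gauss nodes mapped to [0, h]
    psi_j = 2.0 ** (j / 2) * stand_in_wavelet(x / h)
    return 0.5 * h * np.sum(weights * f(x) * psi_j)

coeffs = [coefficient(np.exp, j) for j in range(4, 10)]
ratios = [coeffs[k] / coeffs[k + 1] for k in range(len(coeffs) - 1)]
# For L = 3 the ratios approach 2^(L + 1/2) = 2^3.5, roughly 11.31, as j grows.
```

For any quadratic $f$, the coefficients vanish up to rounding, which is exactly the property exploited in the proof.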

Consider the system matrix with the notation of Equation (73). The discretization matrix is the sum of the matrix corresponding to the integral term and the matrix arising from the discretization of the differential operator $\mathcal{L}_D$. Due to Theorem 5, many entries of the matrix corresponding to the integral term are small and can be thresholded, so this matrix can be represented by a sparse matrix. The structure of the truncated matrix is presented in Figure 3. This matrix contains only entries whose absolute values exceed a prescribed threshold. It was computed for the option with parameters from Example 1 and for the wavelet basis from this paper containing eight levels of wavelets.

In some papers [18,29], decay estimates were derived for integrals with a kernel $K$ that has a singularity or a maximal value on the diagonal $x = y$ and decays with $|x - y|$. However, these estimates cannot be used in some models, such as the Merton model with the density from Equation (2), which is used in the numerical experiments in Section 4. The reason is that the kernel attains its maximal values for $y - x = \mu_J$ rather than on the diagonal, and it decays exponentially with $(y - x - \mu_J)^2$.

Since the matrix corresponding to the integral term is the same for all time levels, the system matrix can be computed, analyzed, and compressed only once as a preprocessing step. One can then work with the compressed matrix. However, computing all the integrals in Equation (76) can be time-consuming. It is therefore more convenient to use the estimates in Equations (78) and (81) to compute only the significant entries of this matrix. More precisely, the following strategy can be used:

- (1) Choose a tolerance $\varepsilon > 0$.
- (2) Compute all the entries for the pairs of wavelets that are not covered by the estimates, i.e., on the coarse levels and in regions where the kernel is not smooth.
- (3) Based on the estimate in Equation (81), set the level $J$ such that the absolute values of the entries are guaranteed to be smaller than $\varepsilon$ for all remaining pairs of wavelets on levels finer than $J$.
- (4) If the pair of wavelets lies on levels coarser than $J$, use the local estimate in Equation (81) to compute only those entries for which it is not guaranteed that their absolute values are smaller than $\varepsilon$.

Step (4) yields a matrix for the integral term, and thus also a system matrix, with more zero elements. To obtain a sparse matrix, it is sufficient to use Steps (1)–(3). That is, one computes the entries on levels up to $J$ and the entries in regions where the kernel is not smooth, and sets all other entries to zero.
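The core idea of the strategy is to evaluate an entry only if an a priori estimate does not already guarantee that it is below the tolerance. The sketch below shows this with a cheap, hypothetical estimate function in place of Equations (78) and (81). The entry and estimate functions are toy stand-ins with geometric off-diagonal decay, not the integrals of this paper, and `compress` is a hypothetical helper name.

```python
import numpy as np

def compress(n, entry, estimate, eps):
    """Assemble an n x n matrix, evaluating entry(i, j) only where the a priori upper bound
    estimate(i, j) >= eps; all other entries are set to zero without being computed.
    Returns the matrix and the number of evaluated entries (hypothetical helper)."""
    A = np.zeros((n, n))
    computed = 0
    for i in range(n):
        for j in range(n):
            if estimate(i, j) >= eps:
                A[i, j] = entry(i, j)
                computed += 1
    return A, computed

# Toy entries with geometric decay away from the diagonal and a valid upper bound for them.
n, eps = 50, 1e-3
entry = lambda i, j: 0.5 ** abs(i - j) * (1.0 + 0.1 * np.cos(i + j))
estimate = lambda i, j: 1.1 * 0.5 ** abs(i - j)
A, computed = compress(n, entry, estimate, eps)
```

Only the 940 entries within distance 10 of the diagonal are evaluated, out of 2500. Every dropped entry is smaller than the tolerance, because the estimate is a true upper bound.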

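The impact of the truncation on the solution of the system in Equation (74) can be described as follows. Let $\mathbf{A}$ be the (diagonally preconditioned) system matrix, $\mathbf{A}_\varepsilon$ its truncated version, and $\mathbf{c}_\varepsilon$ the solution of the system $\mathbf{A}_\varepsilon \mathbf{c}_\varepsilon = \mathbf{f}$. If

$$\operatorname{cond}(\mathbf{A}) \, \frac{\|\mathbf{A} - \mathbf{A}_\varepsilon\|}{\|\mathbf{A}\|} < 1,$$

then

$$\frac{\|\mathbf{c} - \mathbf{c}_\varepsilon\|}{\|\mathbf{c}\|} \le \frac{\operatorname{cond}(\mathbf{A})}{1 - \operatorname{cond}(\mathbf{A}) \, \dfrac{\|\mathbf{A} - \mathbf{A}_\varepsilon\|}{\|\mathbf{A}\|}} \, \frac{\|\mathbf{A} - \mathbf{A}_\varepsilon\|}{\|\mathbf{A}\|}$$

(see [31]). Moreover, the diagonally preconditioned matrices have uniformly bounded condition numbers [4], i.e., their condition numbers are bounded by a constant $C$ independent of $s$. Hence, if the threshold is chosen small enough, then $\mathbf{c}_\varepsilon$ will be close to $\mathbf{c}$.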
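The perturbation bound can be checked numerically. The sketch below thresholds a toy symmetric, diagonally dominant matrix with geometrically decaying off-diagonal entries at the tolerance $10^{-4}$. It then compares the observed relative error of the solution with the bound above, evaluated in the spectral norm. The matrix and the helper name are illustrative assumptions, not the discretization matrix of this paper.

```python
import numpy as np

def truncation_error_bound(A, A_eps):
    """Bound on ||c - c_eps|| / ||c|| for A c = f and A_eps c_eps = f in the 2-norm,
    valid if cond(A) * ||A - A_eps|| / ||A|| < 1; returns None otherwise (hypothetical helper)."""
    kappa = np.linalg.cond(A, 2)
    rel = np.linalg.norm(A - A_eps, 2) / np.linalg.norm(A, 2)
    if kappa * rel >= 1.0:
        return None
    return kappa * rel / (1.0 - kappa * rel)

# Toy matrix with decaying off-diagonal entries (not the paper's matrix).
n = 40
i, j = np.indices((n, n))
A = np.where(i == j, 2.0, 0.3 * 0.5 ** np.abs(i - j))
A_eps = np.where(np.abs(A) >= 1e-4, A, 0.0)     # thresholding with tolerance 1e-4
f = np.ones(n)
c = np.linalg.solve(A, f)
c_eps = np.linalg.solve(A_eps, f)
rel_error = np.linalg.norm(c - c_eps) / np.linalg.norm(c)
bound = truncation_error_bound(A, A_eps)
```

Because the condition number of this toy matrix is small, the relative error of the solution stays of the same order as the relative size of the dropped entries. This is the situation guaranteed by the uniformly bounded condition numbers of the preconditioned wavelet matrices.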