Improved Chaotic Particle Swarm Optimization Algorithm with More Symmetric Distribution for Numerical Function Optimization

Abstract

1. Introduction

2. Review of Previous Work

Particle Swarm Optimizer

3. Chaotic Particle Swarm Optimization-Arctangent Acceleration Coefficient Algorithm

3.1. Chaotic Particle Swarm Optimization (CPSO)

- (1)

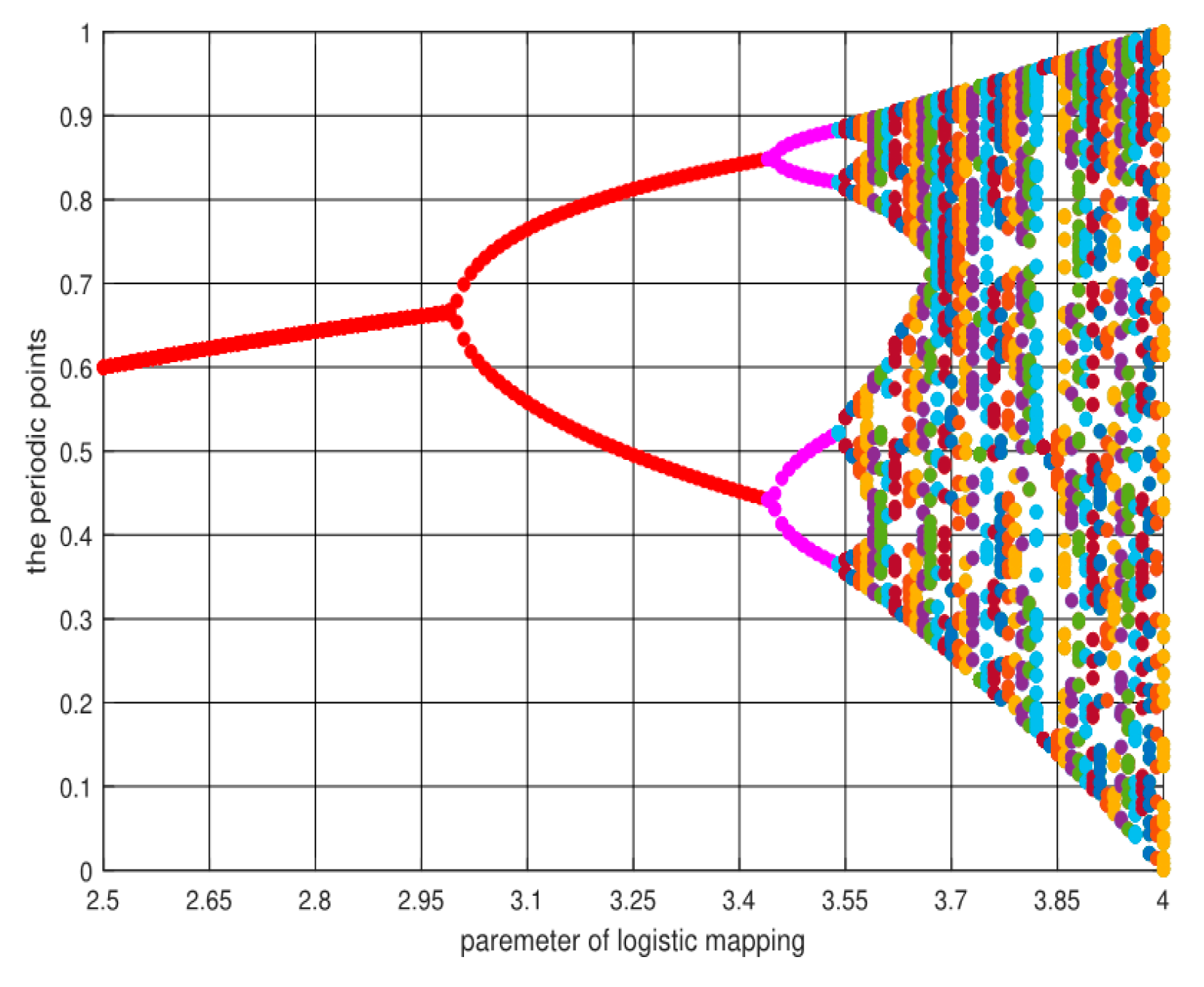

- A chaotic random sequence Z in [0, 1] is generated by iterating the logistic equation from an initial value in [0, 1]: … The linear mapping in Equation (6) then extends the chaotic sequence to the value range of the optimization variables, so that the search traverses the full range of those variables.

- (2)

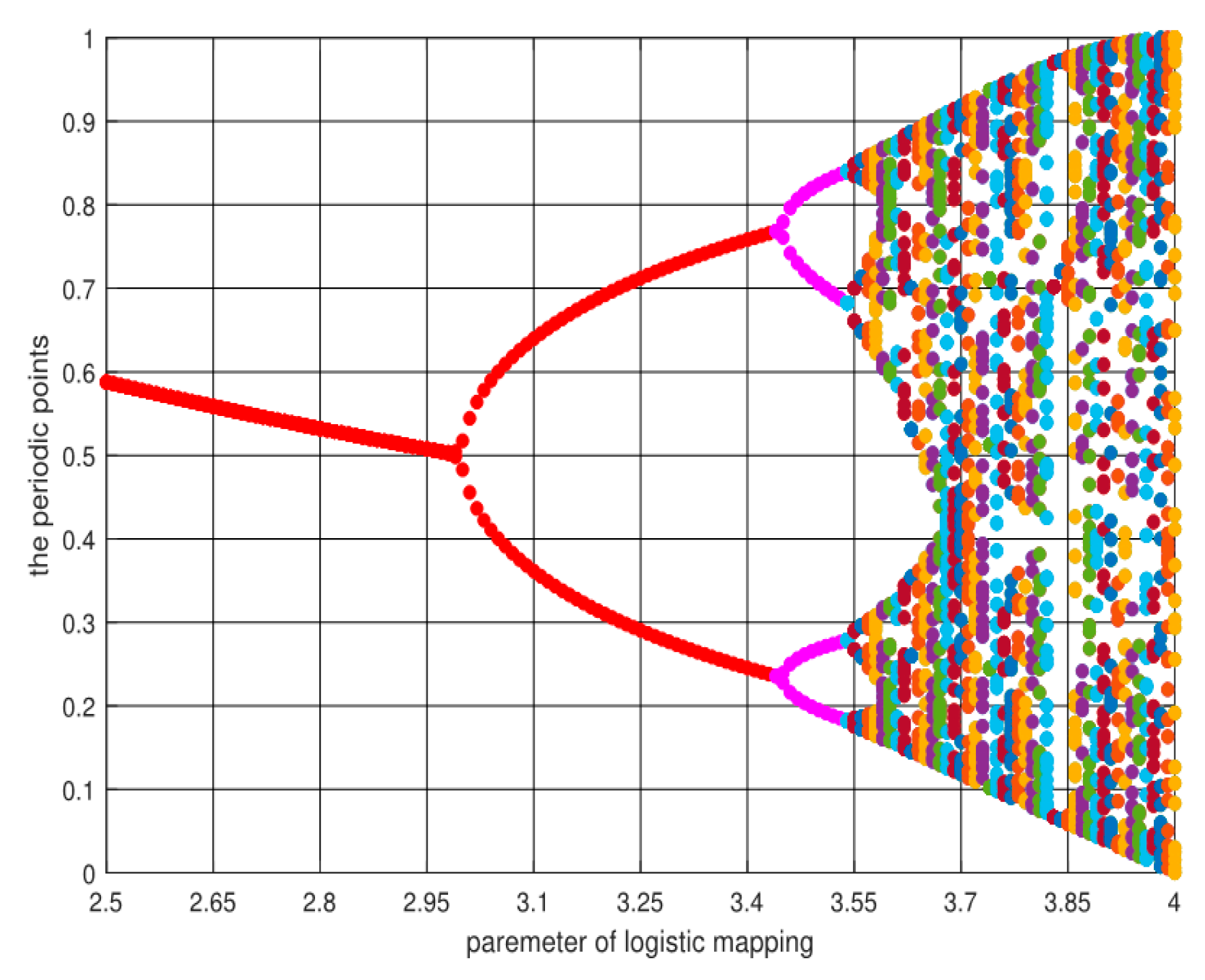

- A chaotic random sequence Z in [0, 1] is generated using the logistic equation and then passed through the carrier map in Equation (7), introducing chaos into , a nearby area, to achieve a local chaotic search. Here R is the search radius, which controls the range of the local chaotic search. In our experiments, the optimization performed well when .

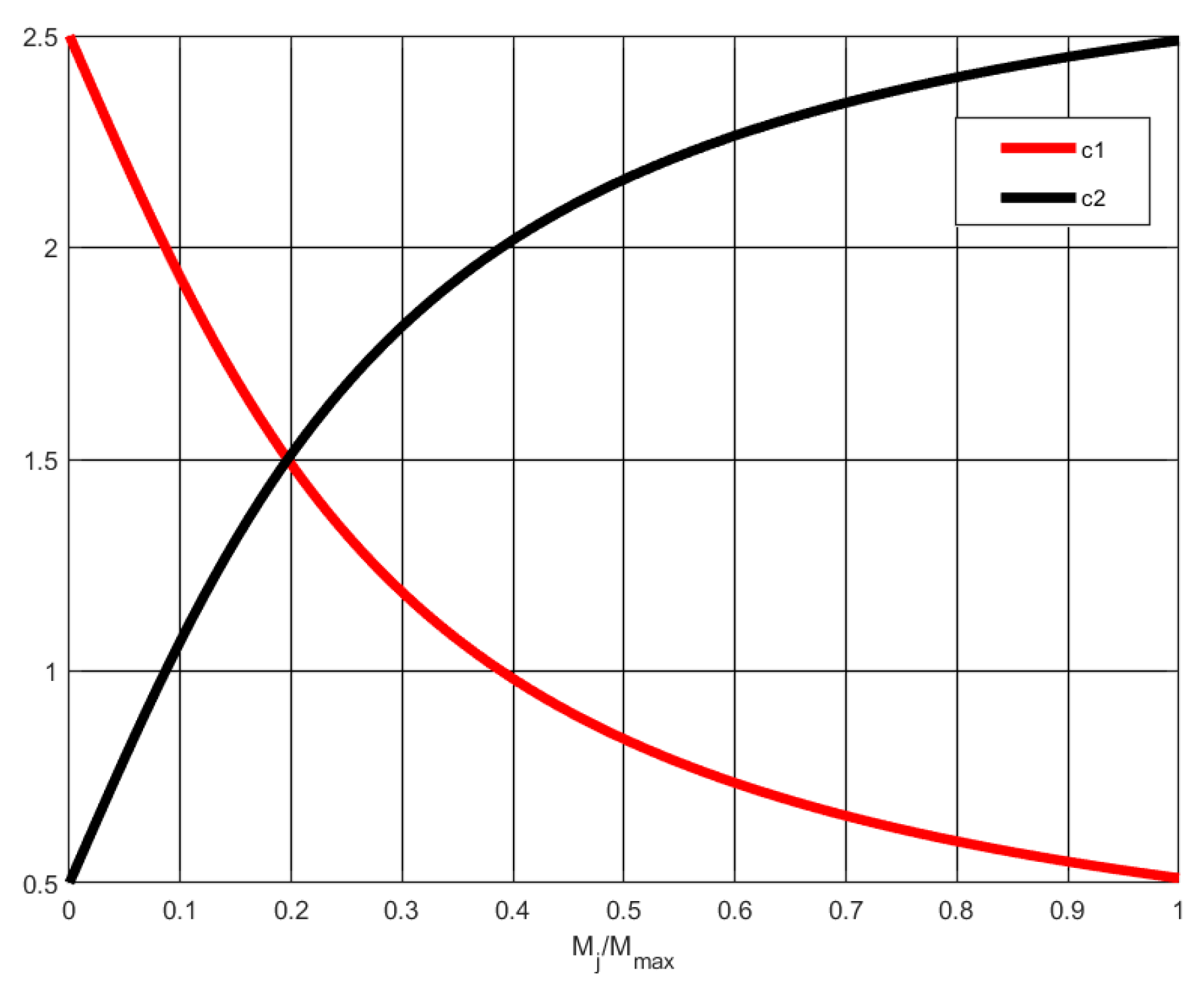
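The two chaotic steps described above can be illustrated with a minimal Python sketch. It rests on assumptions that this copy of the text does not confirm: the logistic equation is taken in its common form z ← μ·z·(1 − z) with μ = 4, Equation (6) is assumed to be a plain linear stretch of [0, 1] onto the variable bounds, and the carrier map of Equation (7) is assumed to perturb a centre point by R·(2z − 1). The function names `logistic_sequence`, `map_to_range` and `local_candidate`, the starting value 0.37 and the radius 0.1 are all hypothetical.

```python
def logistic_sequence(z0, n, mu=4.0):
    """Return n iterates of z <- mu * z * (1 - z), starting from z0 in (0, 1)."""
    seq, z = [], z0
    for _ in range(n):
        z = mu * z * (1.0 - z)
        seq.append(z)
    return seq


def map_to_range(z, lower, upper):
    """Assumed form of the linear mapping: stretch z in [0, 1] onto [lower, upper]."""
    return lower + z * (upper - lower)


def local_candidate(center, z, radius, lower, upper):
    """Assumed carrier map: shift center by radius * (2z - 1), clamped to the bounds."""
    return min(max(center + radius * (2.0 * z - 1.0), lower), upper)


# Five chaotic values, mapped globally onto [-10, 10] and locally around 1.5.
zs = logistic_sequence(0.37, 5)
positions = [map_to_range(z, -10.0, 10.0) for z in zs]
neighbours = [local_candidate(1.5, z, 0.1, -10.0, 10.0) for z in zs]
```

With μ = 4 the iterates stay inside [0, 1], so the global mapping never leaves the variable bounds and every local candidate lies within the radius of its centre.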

3.2. Arc Tangent Acceleration Coefficients (AT)

3.3. Cosine Map Inertia Weight

Algorithm 1. Pseudo-code of the Chaotic Particle Swarm Optimization-Arctangent Acceleration algorithm.

1. Initialize the parameters (PS, D, , , , , , , )
2. Initialize the particle swarm positions by chaos theory using Equation (6)
3. Randomly generate N initial velocities within the maximum range
4. Use the PSO algorithm to search for the individual extremum and global optimal solutions
5. Local search:
6. While Iter < Mmax do
7. Update the inertia weight ω using Equation (10)
8. Update the cognitive component c1 and social component c2 using Equations (8) and (9)
9. for i = 1:PS (population size) do
10. Update the speed of the particle using Equation (1)
11. Update the position of the particle using Equation (2)
12. Calculate the fitness values of the new particle
13. if is superior to
14. Set to be
15. End if
16. if is superior to
17. Set to be
18. End if
19. Global search:
20. Chaotic search K times near
21. Use the following formula (Equation (7))
22. K chaotic search points near are obtained
23. for j = 1:K do
24. if <
25. Set to be
26. End if
27. End for
28. Iter = Iter + 1
29. End While
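The overall flow of Algorithm 1 can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' implementation: Equations (8) to (10) are not reproduced in this copy, so the inertia weight `w` and the coefficients `c1` and `c2` are held at placeholder constants instead of following the cosine map and arctangent schedules; the chaotic search is assumed to probe around the global best; and the carrier map is assumed to be `gbest + radius * (2z - 1)`. The names `cpso_sketch`, `logistic` and `sphere`, the velocity limit and all default values are hypothetical.

```python
import random


def sphere(x):
    """Simple test objective: sum of squares."""
    return sum(v * v for v in x)


def logistic(z):
    """Logistic map with mu = 4 (assumed form of the logistic equation)."""
    return 4.0 * z * (1.0 - z)


def cpso_sketch(f, dim, lower, upper, swarm=20, iters=100, k=5,
                radius=0.1, w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    z = 0.37                       # chaotic state (hypothetical start value)
    vmax = 0.2 * (upper - lower)   # velocity limit (assumption)

    # Chaotic initialisation of positions, random initial velocities.
    pos = []
    for _ in range(swarm):
        row = []
        for _ in range(dim):
            z = logistic(z)
            row.append(lower + z * (upper - lower))
        pos.append(row)
    vel = [[rng.uniform(-vmax, vmax) for _ in range(dim)] for _ in range(swarm)]

    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(swarm), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    history = [gbest_val]

    for _ in range(iters):
        # w, c1, c2 stay constant here; the paper updates them each iteration.
        for i in range(swarm):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-vmax, min(vmax, v))
                pos[i][d] = max(lower, min(upper, pos[i][d] + vel[i][d]))
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val

        # K chaotic probes around the current global best (assumed centre).
        for _ in range(k):
            cand = []
            for d in range(dim):
                z = logistic(z)
                step = radius * (2.0 * z - 1.0)
                cand.append(max(lower, min(upper, gbest[d] + step)))
            val = f(cand)
            if val < gbest_val:
                gbest, gbest_val = cand, val
        history.append(gbest_val)

    return gbest, gbest_val, history


best_x, best_val, history = cpso_sketch(sphere, dim=5, lower=-10.0, upper=10.0)
```

Because the global best is replaced only when a strictly better point is found, `history` is non-increasing by construction. Swapping the constants for iteration-dependent schedules would be the natural place to plug in the paper's inertia weight and acceleration coefficient formulas.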

4. Simulation Experiments, Settings and Strategies

5. Experimental Results and Discussion

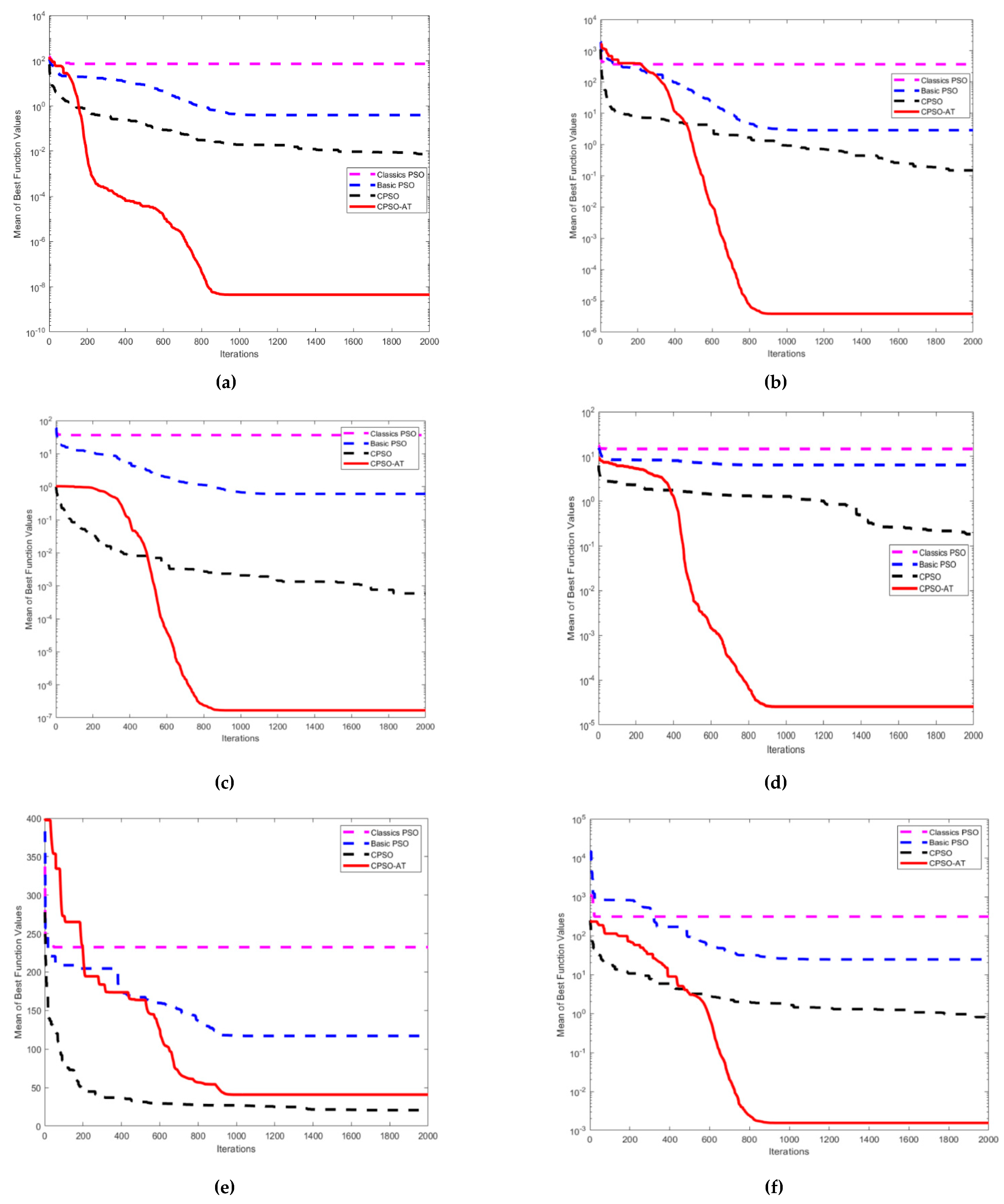

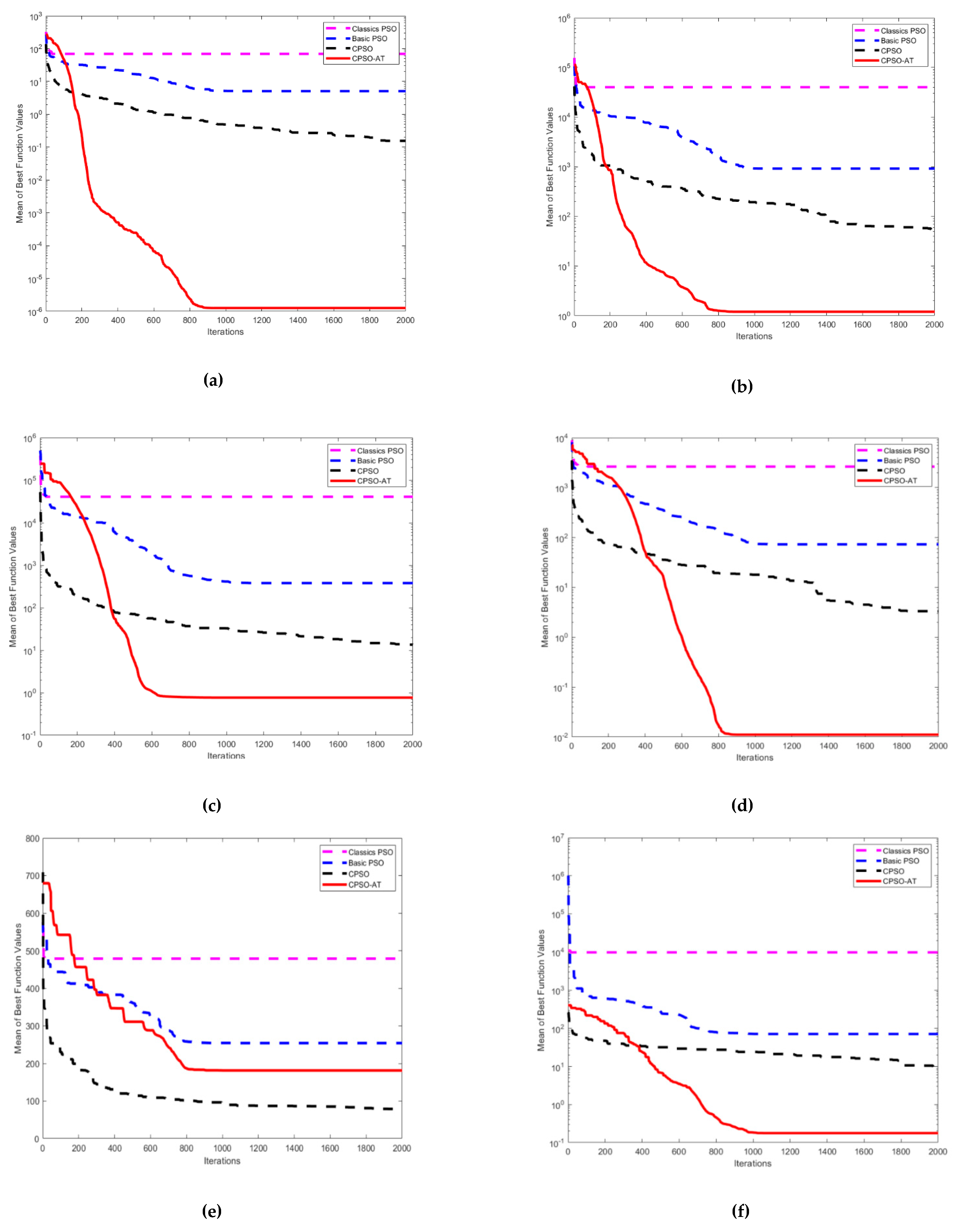

5.1. Comparison of CPSO-AT with Classical PSO, Basic PSO, and CPSO

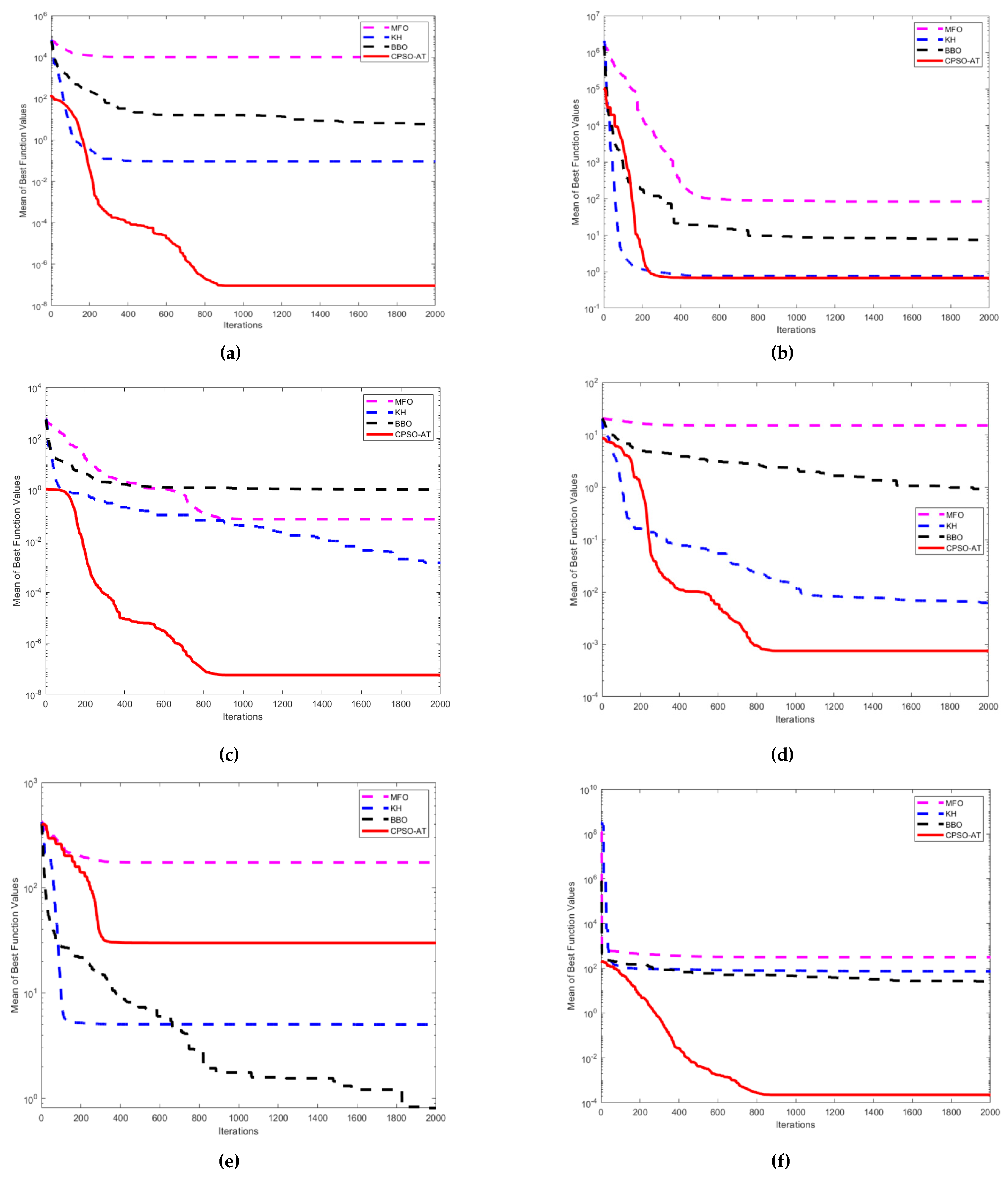

5.2. Comparison of Chaotic Particle Swarm Optimization-Arctangent Acceleration with Moth-Flame Optimization, Krill Herd and Biogeography-Based Optimization

6. Conclusions and Future Work

Author Contributions

Acknowledgments

Conflicts of Interest


| Algorithms | Population Size | Dimension | Parameter Settings | Iteration |
|---|---|---|---|---|
| Basic PSO | 100 | 30, 50 | | 2000 |
| Classical PSO | 100 | 30, 50 | | 2000 |
| CPSO | 100 | 30, 50 | | 2000 |
| CPSO-AT | 100 | 30, 50 | | 2000 |

| Name | Test function | Dim | S | | Group |
|---|---|---|---|---|---|
| Sphere | | 30, 50 | | 0 | Unimodal |
| Schwefel’s 1.2 | | 30, 50 | | 0 | Unimodal |
| Rosenbrock | | 30, 50 | | 0 | Unimodal |
| Dixon & Price | | 30, 50 | | 0 | Unimodal |
| Sum Squares | | 30, 50 | | 0 | Unimodal |
| Griewank | | 30, 50 | | 0 | Multimodal |
| Ackley | | 30, 50 | | 0 | Multimodal |
| Rastrigin | | 30, 50 | | 0 | Multimodal |
| Levy | | 30, 50 | [−10, 10]^n | 0 | Multimodal |
| Zakharov | | 30, 50 | [−5, 10]^n | 0 | Multimodal |
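The formulas in the "Test function" column did not survive in this copy. As a hedged illustration, the sketch below gives the widely used textbook forms of six of the functions named in the table. These forms are an assumption and may differ in detail from the variants used in the experiments. All of them have a minimum value of 0, which matches the 0 entries in the table.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def sum_squares(x):
    # Each squared coordinate is weighted by its 1-based index.
    return sum(i * v * v for i, v in enumerate(x, start=1))


def rosenbrock(x):
    # Minimum 0 at x = (1, ..., 1).
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def griewank(x):
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return 1.0 + s - p


def ackley(x):
    n = len(x)
    a = -20.0 * math.exp(-0.2 * math.sqrt(sum(v * v for v in x) / n))
    b = -math.exp(sum(math.cos(2.0 * math.pi * v) for v in x) / n)
    return a + b + 20.0 + math.e


def rastrigin(x):
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


# Evaluate each function at its known minimiser in 30 dimensions.
origin = [0.0] * 30
values_at_origin = {f.__name__: f(origin)
                    for f in (sphere, sum_squares, griewank, ackley, rastrigin)}
rosenbrock_at_ones = rosenbrock([1.0] * 30)
```

Evaluating each function at its known minimiser, as in the last lines, is a quick sanity check before using the definitions in an optimizer.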

| Function | Algorithm | k | The best | The worst | Mean | S.D. |
|---|---|---|---|---|---|---|
| f1 | Basic PSO | 1 | 2.6248 × 10^01 | 7.7947 × 10^01 | 4.6323 × 10^01 | 6.7444 × 10^01 |
| | Classical PSO | | 2.0757 × 10^−02 | 6.6373 × 10^−01 | 1.9719 × 10^−01 | 7.8747 × 10^−01 |
| | CPSO | | 5.2121 × 10^−03 | 2.1287 × 10^−02 | 1.1753 × 10^−02 | 8.2205 × 10^−04 |
| | CPSO-AT | | 6.3925 × 10^−10 | 3.4988 × 10^−08 | 4.7194 × 10^−09 | 3.4958 × 10^−08 |
| f2 | Basic PSO | 1 | 2.2111 × 10^03 | 9.9471 × 10^03 | 4.9502 × 10^03 | 1.0172 × 10^04 |
| | Classical PSO | | 1.1954 × 10^01 | 1.6882 × 10^02 | 5.3816 × 10^01 | 1.6148 × 10^02 |
| | CPSO | | 1.1748 × 10^00 | 5.6324 × 10^00 | 3.0123 × 10^00 | 3.0028 × 10^−01 |
| | CPSO-AT | | 2.4951 × 10^−05 | 7.2287 × 10^−03 | 1.2410 × 10^−03 | 8.8665 × 10^−03 |
| f3 | Basic PSO | 1 | 2.6521 × 10^05 | 1.6800 × 10^06 | 9.3596 × 10^05 | 1.0188 × 10^05 |
| | Classical PSO | | 3.6194 × 10^02 | 1.9558 × 10^06 | 1.1596 × 10^06 | 1.4155 × 10^05 |
| | CPSO | | 2.7934 × 10^01 | 1.4180 × 10^02 | 5.5210 × 10^01 | 2.7520 × 10^01 |
| | CPSO-AT | | 2.0086 × 10^01 | 2.7560 × 10^01 | 2.4461 × 10^01 | 2.4490 × 10^−01 |
| f4 | Basic PSO | 1 | 2.6551 × 10^03 | 1.1167 × 10^05 | 2.3649 × 10^04 | 6.2701 × 10^03 |
| | Classical PSO | | 7.7642 × 10^00 | 7.8947 × 10^04 | 3.3909 × 10^04 | 6.8874 × 10^03 |
| | CPSO | | 1.2618 × 10^00 | 5.2299 × 10^00 | 2.9283 × 10^00 | 5.3780 × 10^−01 |
| | CPSO-AT | | 6.6667 × 10^−01 | 6.6939 × 10^−01 | 6.6699 × 10^−01 | 7.5939 × 10^−04 |
| f5 | Basic PSO | 1 | 1.0021 × 10^03 | 2.0249 × 10^03 | 1.5684 × 10^03 | 6.4703 × 10^01 |
| | Classical PSO | | 1.7245 × 10^01 | 2.2872 × 10^03 | 1.3307 × 10^03 | 1.8101 × 10^02 |
| | CPSO | | 1.2397 × 10^−01 | 4.4059 × 10^−01 | 2.4581 × 10^−01 | 3.8646 × 10^−02 |
| | CPSO-AT | | 4.4910 × 10^−06 | 4.4064 × 10^−04 | 1.1348 × 10^−04 | 2.6671 × 10^−05 |
| f6 | Basic PSO | 1 | 3.2239 × 10^01 | 5.5316 × 10^01 | 4.0062 × 10^01 | 9.2496 × 10^−01 |
| | Classical PSO | | 1.0188 × 10^00 | 5.1722 × 10^01 | 2.7296 × 10^01 | 2.6616 × 10^00 |
| | CPSO | | 8.6921 × 10^−04 | 1.1118 × 10^−02 | 3.1795 × 10^−03 | 8.4473 × 10^−04 |
| | CPSO-AT | | 1.1528 × 10^−08 | 5.5167 × 10^−04 | 6.0028 × 10^−05 | 2.0000 × 10^−05 |
| f7 | Basic PSO | 1 | 8.8528 × 10^00 | 1.6029 × 10^01 | 1.1915 × 10^01 | 5.3159 × 10^−01 |
| | Classical PSO | | 7.4734 × 10^00 | 1.2232 × 10^01 | 1.1736 × 10^01 | 2.1728 × 10^−01 |
| | CPSO | | 9.3623 × 10^−02 | 2.0819 × 10^00 | 5.1825 × 10^−01 | 2.2687 × 10^−01 |
| | CPSO-AT | | 5.2116 × 10^−05 | 1.8759 × 10^−04 | 1.0254 × 10^−04 | 1.6110 × 10^−05 |
| f8 | Basic PSO | 0 | 2.4279 × 10^02 | 3.2104 × 10^02 | 2.6438 × 10^02 | 3.4627 × 10^00 |
| | Classical PSO | | 6.8569 × 10^01 | 3.1272 × 10^02 | 2.9253 × 10^02 | 4.5919 × 10^00 |
| | CPSO | | 2.9624 × 10^01 | 4.2594 × 10^01 | 3.6522 × 10^01 | 1.9274 × 10^00 |
| | CPSO-AT | | 9.2920 × 10^01 | 1.7194 × 10^02 | 1.3559 × 10^02 | 5.0030 × 10^00 |
| f9 | Basic PSO | 1 | 1.0570 × 10^01 | 5.6268 × 10^01 | 2.9851 × 10^01 | 2.6466 × 10^00 |
| | Classical PSO | | 5.2905 × 10^00 | 4.9856 × 10^01 | 2.1421 × 10^01 | 2.8645 × 10^00 |
| | CPSO | | 9.4416 × 10^−03 | 2.7481 × 10^00 | 8.9063 × 10^−01 | 1.4012 × 10^−01 |
| | CPSO-AT | | 3.5811 × 10^−01 | 8.0576 × 10^−01 | 5.5508 × 10^−01 | 4.5298 × 10^−01 |
| f10 | Basic PSO | 1 | 8.5199 × 10^01 | 2.4830 × 10^02 | 1.4751 × 10^02 | 2.0541 × 10^01 |
| | Classical PSO | | 5.3003 × 10^−01 | 1.1802 × 10^02 | 8.4578 × 10^01 | 6.7666 × 10^00 |
| | CPSO | | 5.7915 × 10^−01 | 1.1559 × 10^00 | 8.3102 × 10^−01 | 5.2794 × 10^−02 |
| | CPSO-AT | | 1.3389 × 10^−04 | 3.6616 × 10^−03 | 1.2061 × 10^−03 | 2.1842 × 10^−04 |

| Function | Algorithm | k | The best | The worst | Mean | S.D. |
|---|---|---|---|---|---|---|
| f1 | Basic PSO | 1 | 6.2992 × 10^01 | 1.4072 × 10^02 | 9.6568 × 10^01 | 1.1705 × 10^02 |
| | Classical PSO | | 1.9810 × 10^00 | 9.4058 × 10^00 | 4.7912 × 10^00 | 1.0715 × 10^01 |
| | CPSO | | 7.9989 × 10^−02 | 1.9665 × 10^−01 | 1.3500 × 10^−01 | 4.0335 × 10^−03 |
| | CPSO-AT | | 4.3797 × 10^−07 | 1.3892 × 10^−05 | 2.6618 × 10^−06 | 1.3012 × 10^−05 |
| f2 | Basic PSO | 1 | 1.7735 × 10^04 | 4.4191 × 10^04 | 3.3163 × 10^04 | 4.0007 × 10^04 |
| | Classical PSO | | 7.0766 × 10^02 | 2.4271 × 10^03 | 1.3298 × 10^03 | 2.2228 × 10^03 |
| | CPSO | | 3.4384 × 10^01 | 1.9495 × 10^02 | 7.9785 × 10^01 | 5.0406 × 10^00 |
| | CPSO-AT | | 1.3885 × 10^−01 | 2.7291 × 10^00 | 7.4383 × 10^−01 | 3.4377 × 10^00 |
| f3 | Basic PSO | 1 | 2.9077 × 10^06 | 1.4292 × 10^07 | 7.9705 × 10^06 | 6.3951 × 10^05 |
| | Classical PSO | | 6.9551 × 10^03 | 1.6232 × 10^07 | 6.8072 × 10^06 | 1.1339 × 10^06 |
| | CPSO | | 1.0277 × 10^02 | 3.5589 × 10^02 | 2.3726 × 10^02 | 4.4241 × 10^01 |
| | CPSO-AT | | 4.5713 × 10^01 | 4.8066 × 10^01 | 4.6730 × 10^01 | 1.3927 × 10^−01 |
| f4 | Basic PSO | 1 | 8.5353 × 10^04 | 6.5400 × 10^05 | 2.3039 × 10^05 | 4.4620 × 10^04 |
| | Classical PSO | | 2.1823 × 10^02 | 2.5940 × 10^05 | 1.0611 × 10^05 | 1.7552 × 10^04 |
| | CPSO | | 8.5470 × 10^00 | 3.0465 × 10^01 | 2.0967 × 10^01 | 3.9523 × 10^00 |
| | CPSO-AT | | 6.6783 × 10^−01 | 2.0588 × 10^00 | 8.4647 × 10^−01 | 5.6966 × 10^−02 |
| f5 | Basic PSO | 1 | 2.9846 × 10^03 | 7.5971 × 10^03 | 5.4303 × 10^03 | 3.7474 × 10^02 |
| | Classical PSO | | 6.5151 × 10^01 | 7.6068 × 10^03 | 4.5427 × 10^03 | 6.5260 × 10^02 |
| | CPSO | | 3.1804 × 10^00 | 5.6937 × 10^00 | 4.2359 × 10^00 | 1.9161 × 10^−01 |
| | CPSO-AT | | 4.7685 × 10^−03 | 6.7831 × 10^−02 | 2.0803 × 10^−02 | 5.0860 × 10^−03 |
| f6 | Basic PSO | 1 | 4.3759 × 10^01 | 1.0136 × 10^02 | 7.8097 × 10^01 | 4.6646 × 10^00 |
| | Classical PSO | | 6.1000 × 10^00 | 1.0849 × 10^02 | 8.7209 × 10^01 | 4.9779 × 10^00 |
| | CPSO | | 6.9568 × 10^−03 | 1.4624 × 10^−02 | 9.6156 × 10^−03 | 7.3385 × 10^−04 |
| | CPSO-AT | | 4.4032 × 10^−06 | 5.4671 × 10^−04 | 8.9087 × 10^−05 | 2.3055 × 10^−05 |
| f7 | Basic PSO | 1 | 1.3003 × 10^01 | 1.5286 × 10^01 | 1.3916 × 10^01 | 1.7718 × 10^−01 |
| | Classical PSO | | 8.0444 × 10^00 | 1.5888 × 10^01 | 1.4502 × 10^01 | 4.5279 × 10^−01 |
| | CPSO | | 4.6800 × 10^−01 | 1.9761 × 10^00 | 1.5023 × 10^00 | 4.2769 × 10^−02 |
| | CPSO-AT | | 2.0332 × 10^−03 | 8.7914 × 10^−01 | 9.1370 × 10^−02 | 2.9368 × 10^−02 |
| f8 | Basic PSO | 0 | 5.1852 × 10^02 | 6.0608 × 10^02 | 5.7156 × 10^02 | 1.6262 × 10^00 |
| | Classical PSO | | 1.1434 × 10^02 | 5.7794 × 10^02 | 4.5988 × 10^02 | 8.5234 × 10^00 |
| | CPSO | | 7.4647 × 10^01 | 1.4715 × 10^02 | 1.1398 × 10^02 | 7.3222 × 10^00 |
| | CPSO-AT | | 2.0437 × 10^02 | 3.2078 × 10^02 | 2.6771 × 10^02 | 8.6923 × 10^00 |
| f9 | Basic PSO | 1 | 3.2829 × 10^01 | 7.5323 × 10^01 | 5.1270 × 10^01 | 3.5370 × 10^00 |
| | Classical PSO | | 3.8076 × 10^00 | 4.8866 × 10^01 | 3.6627 × 10^01 | 2.9257 × 10^00 |
| | CPSO | | 1.3024 × 10^00 | 5.1136 × 10^00 | 2.8866 × 10^00 | 4.1186 × 10^−01 |
| | CPSO-AT | | 6.2676 × 10^−01 | 1.4325 × 10^00 | 1.0566 × 10^00 | 8.0876 × 10^−01 |
| f10 | Basic PSO | 1 | 4.3138 × 10^03 | 9.8686 × 10^06 | 1.2329 × 10^06 | 4.0793 × 10^05 |
| | Classical PSO | | 7.0378 × 10^01 | 2.9135 × 10^05 | 6.9641 × 10^04 | 2.1887 × 10^04 |
| | CPSO | | 6.6807 × 10^00 | 1.3292 × 10^01 | 1.0134 × 10^01 | 8.8523 × 10^−01 |
| | CPSO-AT | | 2.2299 × 10^−01 | 1.3807 × 10^00 | 6.4995 × 10^−01 | 3.0979 × 10^−02 |

| Algorithm | Population | Maximum Iterations | Dim | Other |
|---|---|---|---|---|
| MFO | 50 | 2000 | 30, 50 | is random number in the range [−2, 1] |
| KH | 50 | 2000 | 30, 50 | |
| BBO | 50 | 2000 | 30, 50 | c |
| CPSO-AT | 50 | 2000 | 30, 50 | |

| Function | Algorithm | k | The Best | The Worst | Mean | S.D. |
|---|---|---|---|---|---|---|
| f1 | MFO | 1 | 6.3466 × 10^−15 | 1.0000 × 10^04 | 2.0000 × 10^03 | 1.2649 × 10^04 |
| | KH | | 9.6793 × 10^−03 | 4.4021 × 10^−02 | 2.0048 × 10^−02 | 1.3669 × 10^−02 |
| | BBO | | 2.7007 × 10^00 | 9.8717 × 10^00 | 4.2946 × 10^00 | 6.1697 × 10^00 |
| | CPSO-AT | | 3.1695 × 10^−07 | 2.8893 × 10^−06 | 8.6404 × 10^−07 | 1.1386 × 10^−07 |
| f2 | MFO | 1 | 3.7805 × 10^−13 | 1.1700 × 10^06 | 4.7500 × 10^05 | 1.4342 × 10^06 |
| | KH | | 7.4251 × 10^00 | 7.7804 × 10^02 | 3.1717 × 10^02 | 3.4905 × 10^02 |
| | BBO | | 2.4456 × 10^02 | 1.0627 × 10^03 | 6.9743 × 10^02 | 7.0483 × 10^02 |
| | CPSO-AT | | 1.2328 × 10^−03 | 1.4670 × 10^−01 | 3.7457 × 10^−02 | 9.9016 × 10^−03 |
| f3 | MFO | 1 | 1.6385 × 10^03 | 1.6385 × 10^03 | 1.6385 × 10^03 | 1.7316 × 10^−12 |
| | KH | | 2.9288 × 10^01 | 1.2114 × 10^02 | 5.5490 × 10^01 | 4.0249 × 10^01 |
| | BBO | | 1.2701 × 10^02 | 6.5835 × 10^02 | 3.2701 × 10^02 | 5.1255 × 10^02 |
| | CPSO-AT | | 2.3345 × 10^01 | 2.9404 × 10^01 | 2.4992 × 10^01 | 9.1291 × 10^−02 |
| f4 | MFO | 1 | 6.6667 × 10^−01 | 2.7370 × 10^05 | 3.4679 × 10^04 | 2.6107 × 10^05 |
| | KH | | 6.7509 × 10^−01 | 1.2386 × 10^00 | 8.4426 × 10^−01 | 2.3154 × 10^−01 |
| | BBO | | 4.4060 × 10^00 | 9.1277 × 10^00 | 6.9919 × 10^00 | 4.7954 × 10^00 |
| | CPSO-AT | | 6.6664 × 10^−01 | 6.6762 × 10^−01 | 6.6692 × 10^−01 | 2.1520 × 10^−04 |
| f5 | MFO | 1 | 1.1144 × 10^−14 | 2.9000 × 10^03 | 6.9000 × 10^02 | 2.6738 × 10^03 |
| | KH | | 8.0391 × 10^−03 | 2.6790 × 10^−01 | 7.1299 × 10^−02 | 1.1182 × 10^−01 |
| | BBO | | 3.3696 × 10^−01 | 6.0732 × 10^−01 | 4.6529 × 10^−01 | 2.5268 × 10^−01 |
| | CPSO-AT | | 1.9718 × 10^−05 | 1.8382 × 10^−04 | 8.3644 × 10^−05 | 2.0107 × 10^−05 |
| f6 | MFO | 1 | 9.3259 × 10^−15 | 9.0535 × 10^01 | 2.7080 × 10^01 | 1.3078 × 10^02 |
| | KH | | 2.0406 × 10^−03 | 2.3335 × 10^−02 | 1.0695 × 10^−02 | 8.9323 × 10^−03 |
| | BBO | | 1.0110 × 10^00 | 1.0698 × 10^00 | 1.0388 × 10^00 | 4.8482 × 10^−02 |
| | CPSO-AT | | 8.4554 × 10^−08 | 1.5809 × 10^−06 | 3.6554 × 10^−07 | 5.6011 × 10^−08 |
| f7 | MFO | 1 | 7.0957 × 10^−09 | 1.9963 × 10^01 | 9.4662 × 10^00 | 2.9308 × 10^01 |
| | KH | | 2.0726 × 10^−03 | 1.6499 × 10^00 | 7.0606 × 10^−01 | 7.0425 × 10^−01 |
| | BBO | | 7.0705 × 10^−01 | 1.3181 × 10^00 | 9.6088 × 10^−01 | 4.6179 × 10^−01 |
| | CPSO-AT | | 2.9201 × 10^−04 | 1.2453 × 10^−03 | 5.9240 × 10^−04 | 2.0650 × 10^−04 |
| f8 | MFO | 0 | 7.6612 × 10^01 | 1.9331 × 10^02 | 1.3427 × 10^02 | 9.8256 × 10^01 |
| | KH | | 5.9930 × 10^00 | 1.7947 × 10^01 | 1.2761 × 10^01 | 4.3635 × 10^00 |
| | BBO | | 1.0071 × 10^00 | 2.2005 × 10^00 | 1.7721 × 10^00 | 1.2180 × 10^00 |
| | CPSO-AT | | 9.9508 × 10^00 | 3.7812 × 10^01 | 2.0800 × 10^01 | 2.5423 × 10^00 |
| f9 | MFO | 0 | 2.5135 × 10^01 | 3.7618 × 10^01 | 3.0205 × 10^01 | 9.4966 × 10^00 |
| | KH | | 2.2932 × 10^−04 | 8.1506 × 10^−01 | 2.0720 × 10^−01 | 2.2620 × 10^−01 |
| | BBO | | 8.6060 × 10^−03 | 2.8077 × 10^−02 | 2.1078 × 10^−02 | 2.0072 × 10^−02 |
| | CPSO-AT | | 2.6859 × 10^−01 | 8.0576 × 10^−01 | 5.3717 × 10^−01 | 6.5790 × 10^−01 |
| f10 | MFO | 1 | 1.1036 × 10^−02 | 4.7619 × 10^02 | 2.6749 × 10^02 | 4.3400 × 10^02 |
| | KH | | 6.6967 × 10^01 | 1.3059 × 10^02 | 9.7460 × 10^01 | 2.5847 × 10^01 |
| | BBO | | 2.3055 × 10^01 | 4.4664 × 10^01 | 3.3702 × 10^01 | 2.0264 × 10^01 |
| | CPSO-AT | | 9.4240 × 10^−05 | 2.1734 × 10^−04 | 1.7080 × 10^−04 | 4.8481 × 10^−06 |

| Function | Algorithm | k | The Best | The Worst | Mean | S.D. |
|---|---|---|---|---|---|---|
| f1 | MFO | 1 | 9.0418 × 10^−05 | 2.0000 × 10^04 | 1.0000 × 10^04 | 2.0000 × 10^04 |
| | KH | | 9.1848 × 10^−02 | 3.8079 × 10^−01 | 2.2432 × 10^−01 | 1.0846 × 10^−01 |
| | BBO | | 6.8687 × 10^01 | 1.4266 × 10^02 | 9.9904 × 10^01 | 6.6770 × 10^01 |
| | CPSO-AT | | 1.7020 × 10^−05 | 5.5462 × 10^−05 | 4.3225 × 10^−05 | 8.3417 × 10^−07 |
| f2 | MFO | 1 | 1.0000 × 10^04 | 5.0200 × 10^06 | 1.8190 × 10^06 | 4.6469 × 10^06 |
| | KH | | 1.0638 × 10^03 | 8.3849 × 10^03 | 3.1824 × 10^03 | 3.0333 × 10^03 |
| | BBO | | 1.6172 × 10^04 | 5.0529 × 10^04 | 2.7620 × 10^04 | 2.7312 × 10^04 |
| | CPSO-AT | | 1.0216 × 10^00 | 4.4131 × 10^00 | 2.3541 × 10^00 | 2.6353 × 10^−01 |
| f3 | MFO | 1 | 2.7684 × 10^03 | 2.7686 × 10^03 | 2.7684 × 10^03 | 1.3105 × 10^−01 |
| | KH | | 6.0262 × 10^01 | 1.8692 × 10^02 | 1.3323 × 10^02 | 4.8077 × 10^01 |
| | BBO | | 1.4617 × 10^03 | 4.8529 × 10^03 | 2.5030 × 10^03 | 2.7857 × 10^03 |
| | CPSO-AT | | 4.5596 × 10^01 | 1.0267 × 10^02 | 5.2237 × 10^01 | 2.1782 × 10^00 |
| f4 | MFO | 1 | 6.2704 × 10^00 | 4.6889 × 10^05 | 1.8365 × 10^05 | 4.7211 × 10^05 |
| | KH | | 2.3253 × 10^00 | 5.6925 × 10^00 | 3.2332 × 10^00 | 1.4030 × 10^00 |
| | BBO | | 4.7422 × 10^01 | 1.0958 × 10^02 | 7.4611 × 10^01 | 4.8684 × 10^01 |
| | CPSO-AT | | 6.6895 × 10^−01 | 8.9973 × 10^−01 | 7.4677 × 10^−01 | 4.9214 × 10^−02 |
| f5 | MFO | 1 | 1.8984 × 10^−05 | 9.7000 × 10^03 | 2.2300 × 10^03 | 8.7864 × 10^03 |
| | KH | | 5.1658 × 10^−02 | 4.7215 × 10^−01 | 2.2164 × 10^−01 | 1.6813 × 10^−01 |
| | BBO | | 2.1286 × 10^01 | 3.1796 × 10^01 | 2.6284 × 10^01 | 9.5861 × 10^00 |
| | CPSO-AT | | 3.1182 × 10^−03 | 3.4762 × 10^−02 | 1.5271 × 10^−02 | 2.2791 × 10^−03 |
| f6 | MFO | 1 | 2.6725 × 10^−05 | 9.0793 × 10^01 | 2.7162 × 10^01 | 1.3102 × 10^02 |
| | KH | | 8.7800 × 10^−03 | 5.1455 × 10^−02 | 2.3202 × 10^−02 | 1.7232 × 10^−02 |
| | BBO | | 1.8720 × 10^00 | 2.5471 × 10^00 | 2.2098 × 10^00 | 5.5828 × 10^−01 |
| | CPSO-AT | | 2.7021 × 10^−06 | 7.4758 × 10^−03 | 7.5390 × 10^−04 | 2.4893 × 10^−04 |
| f7 | MFO | 1 | 3.1461 × 10^00 | 1.9963 × 10^01 | 1.7381 × 10^01 | 1.5640 × 10^01 |
| | KH | | 1.1614 × 10^00 | 2.9876 × 10^00 | 1.8086 × 10^00 | 6.9580 × 10^−01 |
| | BBO | | 2.9456 × 10^00 | 3.5964 × 10^00 | 3.2432 × 10^00 | 5.4301 × 10^−01 |
| | CPSO-AT | | 2.4651 × 10^−03 | 5.1956 × 10^−03 | 3.7252 × 10^−03 | 4.6693 × 10^−04 |
| f8 | MFO | 0 | 2.0610 × 10^02 | 4.0942 × 10^02 | 2.7372 × 10^02 | 1.7473 × 10^02 |
| | KH | | 1.6014 × 10^01 | 3.4316 × 10^01 | 2.4666 × 10^01 | 6.5266 × 10^00 |
| | BBO | | 1.6638 × 10^01 | 2.8529 × 10^01 | 2.4282 × 10^01 | 9.1892 × 10^00 |
| | CPSO-AT | | 2.4890 × 10^01 | 4.4884 × 10^01 | 3.4785 × 10^01 | 9.8221 × 10^−01 |
| f9 | MFO | 0 | 1.2546 × 10^01 | 8.8610 × 10^01 | 3.2530 × 10^01 | 6.3687 × 10^01 |
| | KH | | 4.4904 × 10^−01 | 2.7557 × 10^00 | 1.0771 × 10^00 | 8.7791 × 10^−01 |
| | BBO | | 3.0271 × 10^−01 | 6.0636 × 10^−01 | 4.4337 × 10^−01 | 2.8994 × 10^−01 |
| | CPSO-AT | | 5.3723 × 10^−01 | 1.3432 × 10^00 | 9.6706 × 10^−01 | 7.5773 × 10^−01 |
| f10 | MFO | 1 | 6.2671 × 10^02 | 9.7623 × 10^02 | 7.9242 × 10^02 | 4.5047 × 10^02 |
| | KH | | 3.0410 × 10^02 | 3.9719 × 10^02 | 3.4173 × 10^02 | 3.5971 × 10^01 |
| | BBO | | 7.6162 × 10^01 | 1.3712 × 10^02 | 1.0980 × 10^02 | 4.7729 × 10^01 |
| | CPSO-AT | | 9.2969 × 10^−03 | 6.3677 × 10^−02 | 2.5705 × 10^−02 | 1.2072 × 10^−02 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ma, Z.; Yuan, X.; Han, S.; Sun, D.; Ma, Y. Improved Chaotic Particle Swarm Optimization Algorithm with More Symmetric Distribution for Numerical Function Optimization. Symmetry 2019, 11, 876. https://doi.org/10.3390/sym11070876
