3D Flow Entropy Contour Fitting Segmentation Algorithm Based on Multi-Scale Transform Contour Constraint

Abstract

1. Introduction

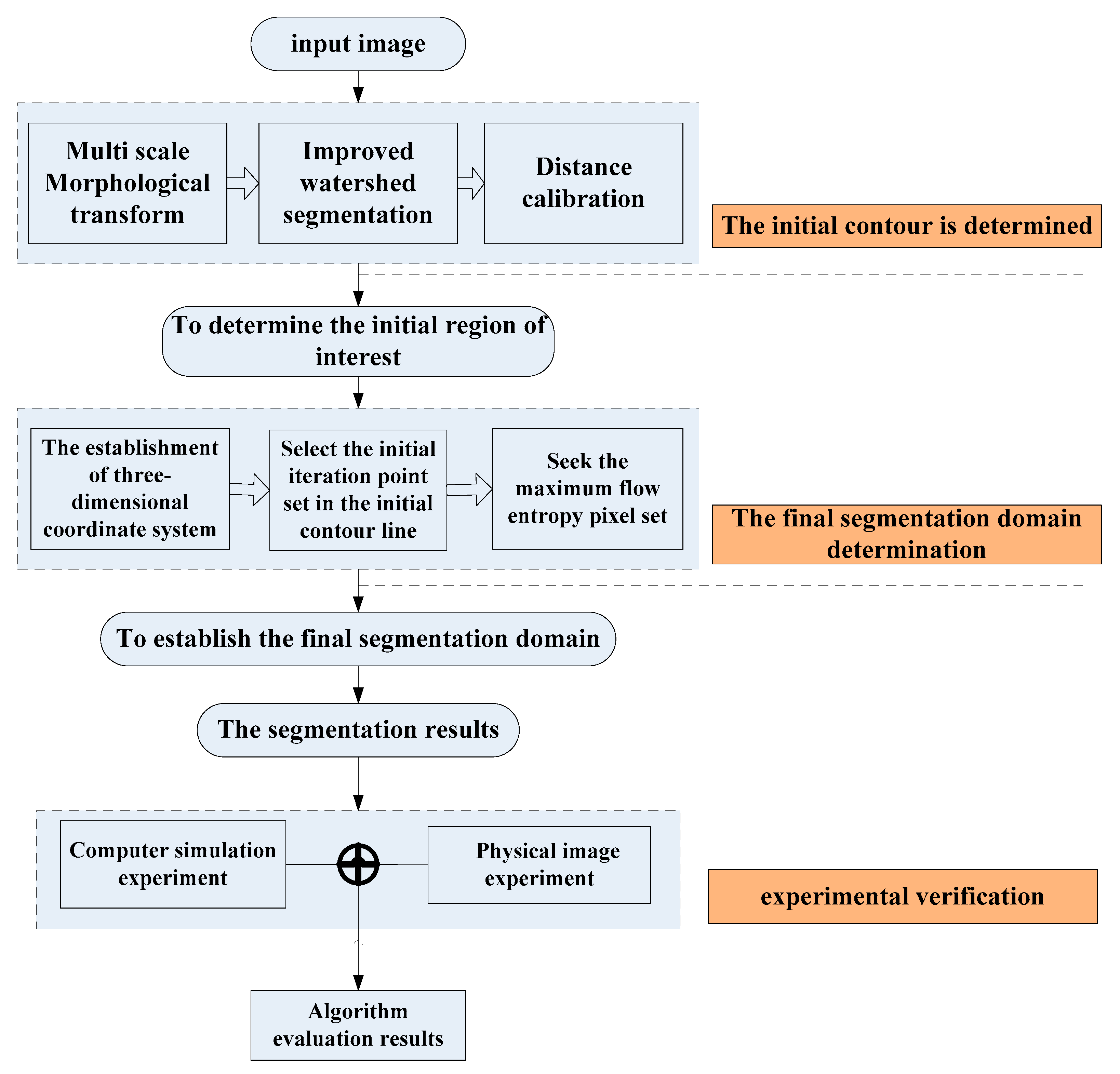

2. The Determination of the Initial Contour by Multi-Scale Morphological Fitting

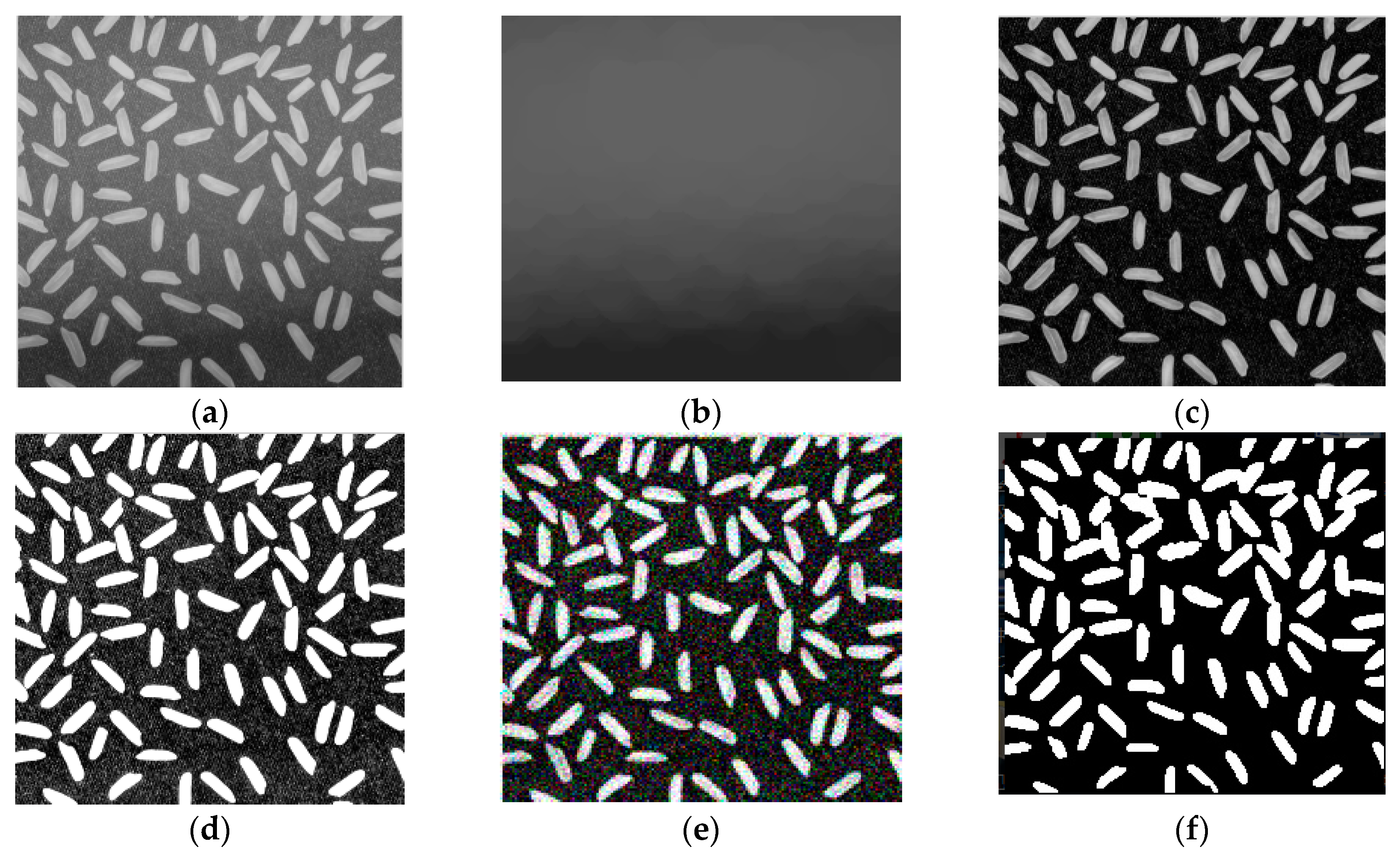

2.1. Improved Top-Hat and Bottom-Hat Transform Sample Pretreatment

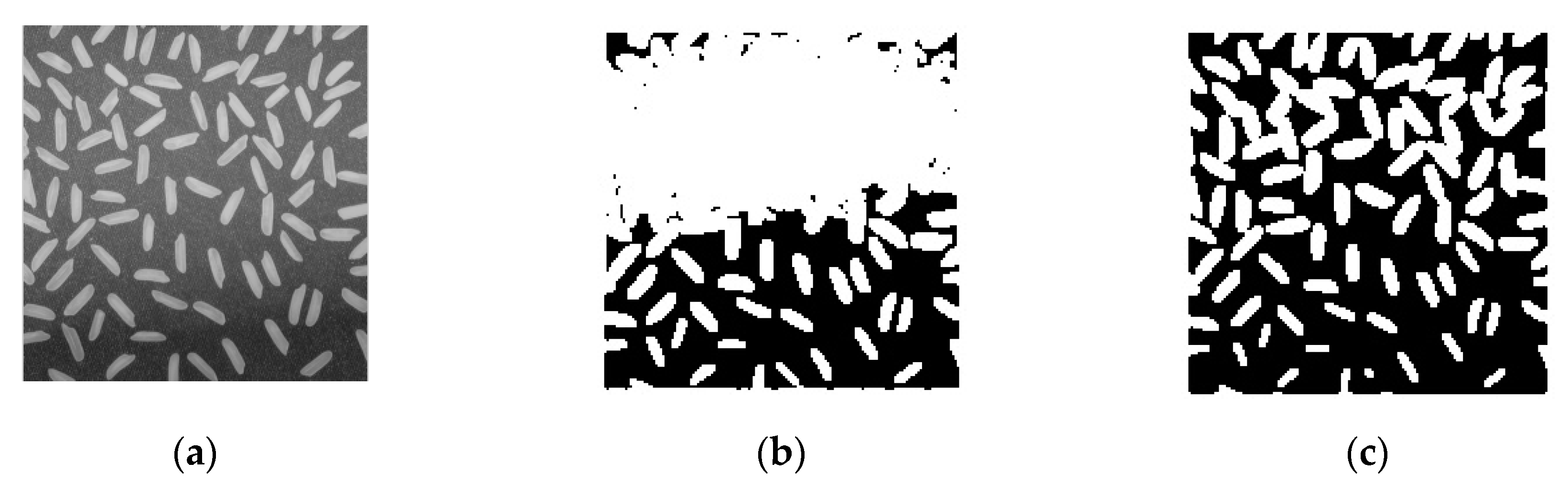

2.2. Improved Morphological Watershed Segmentation Method

3. Flow Entropy Resegmentation Algorithm Based on Three-Dimensional Information Constraints

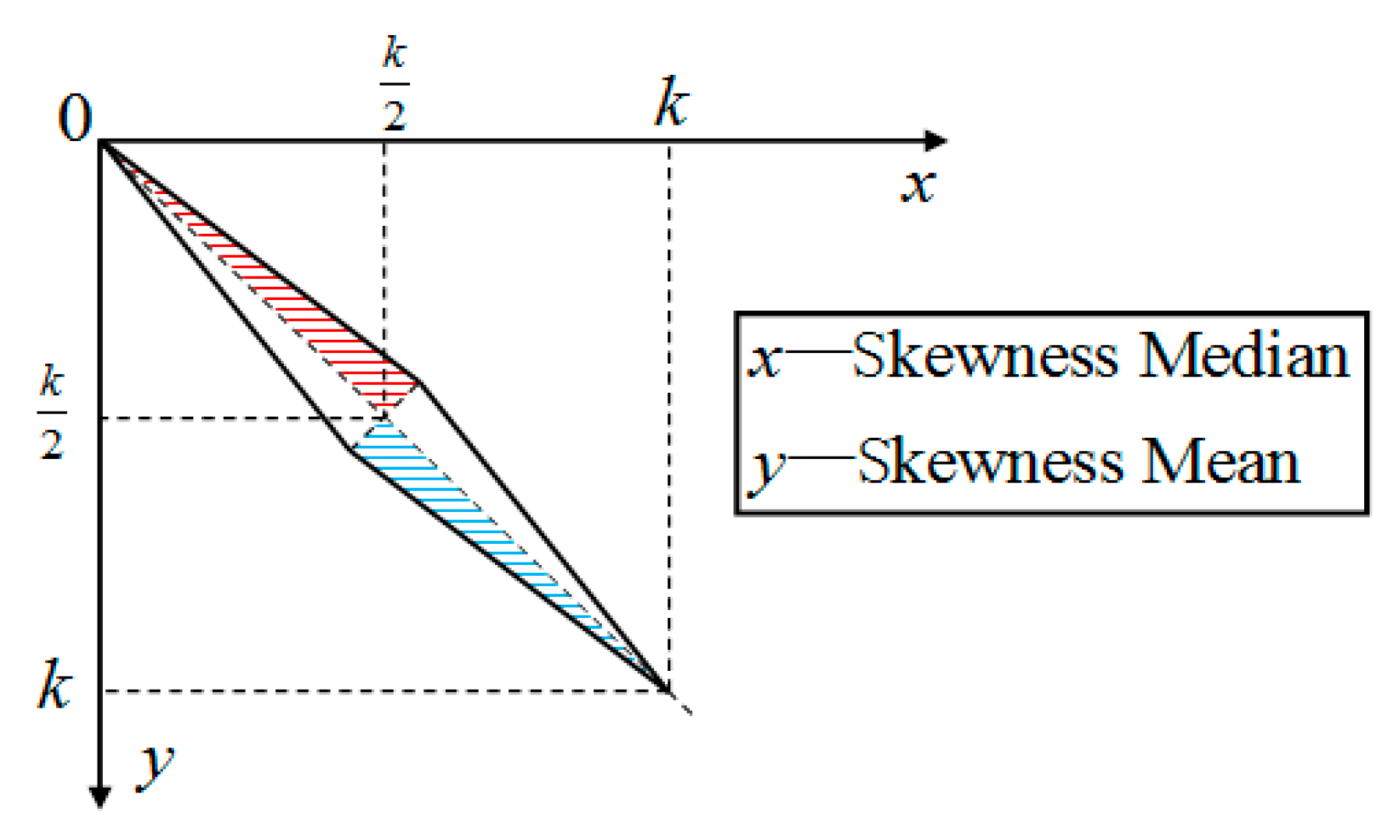

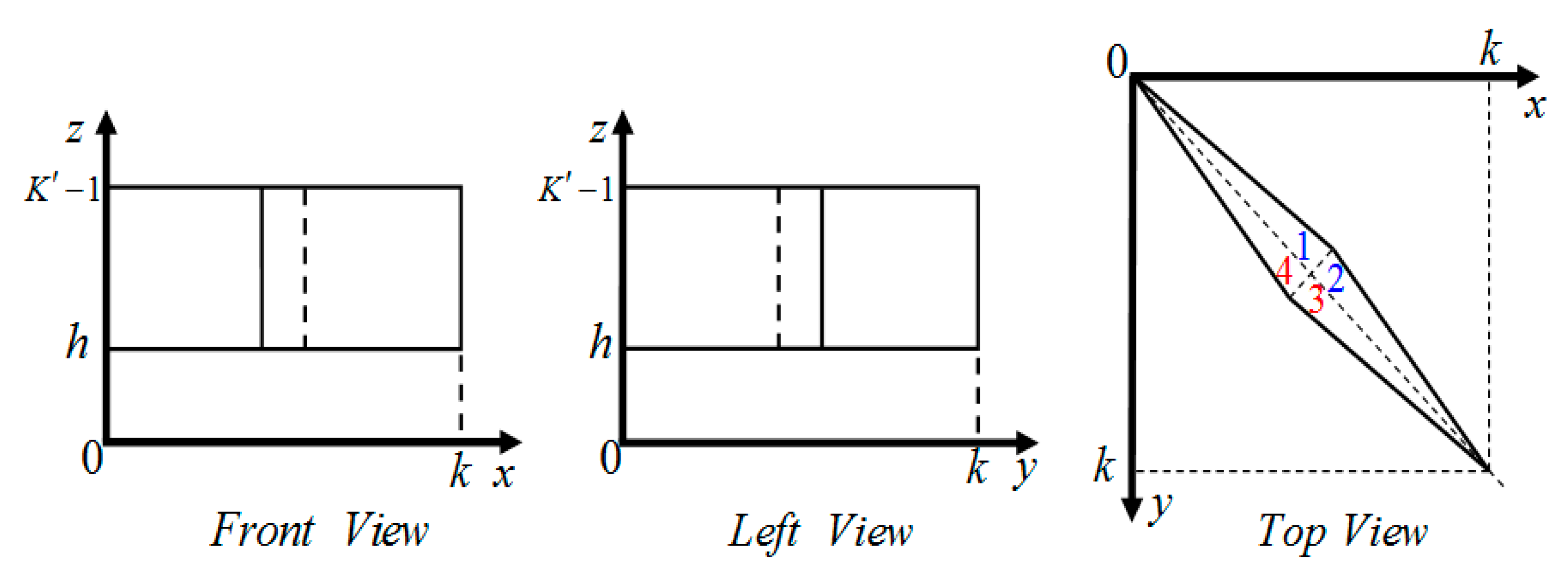

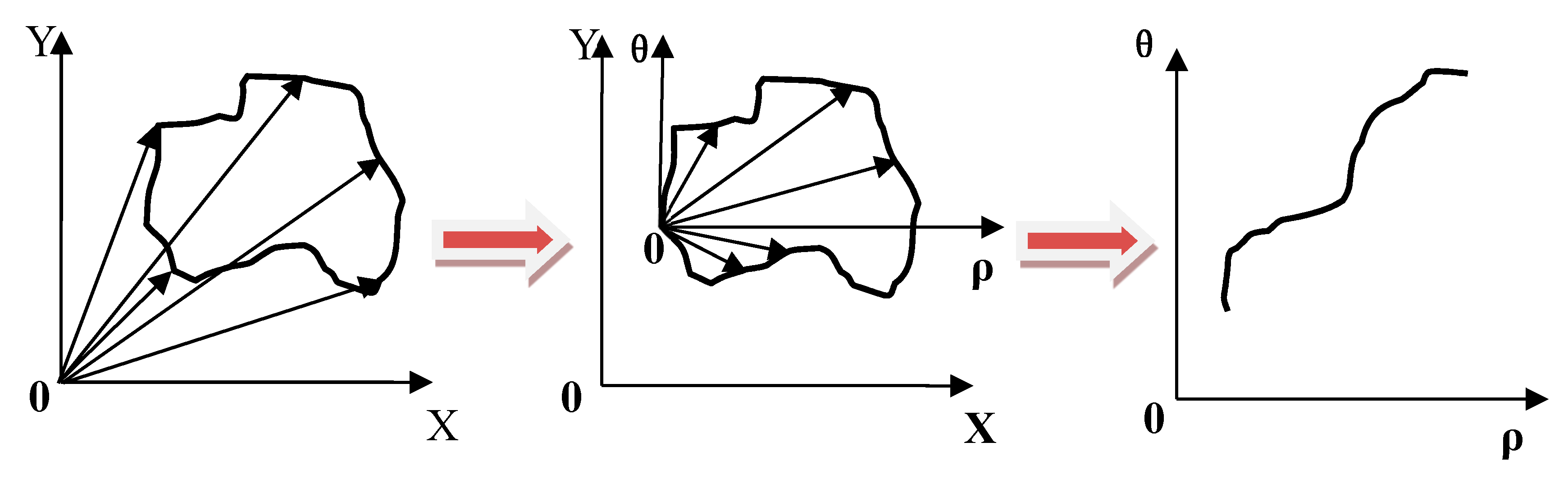

3.1. The Establishment of Three-Dimensional Information Segmentation Model

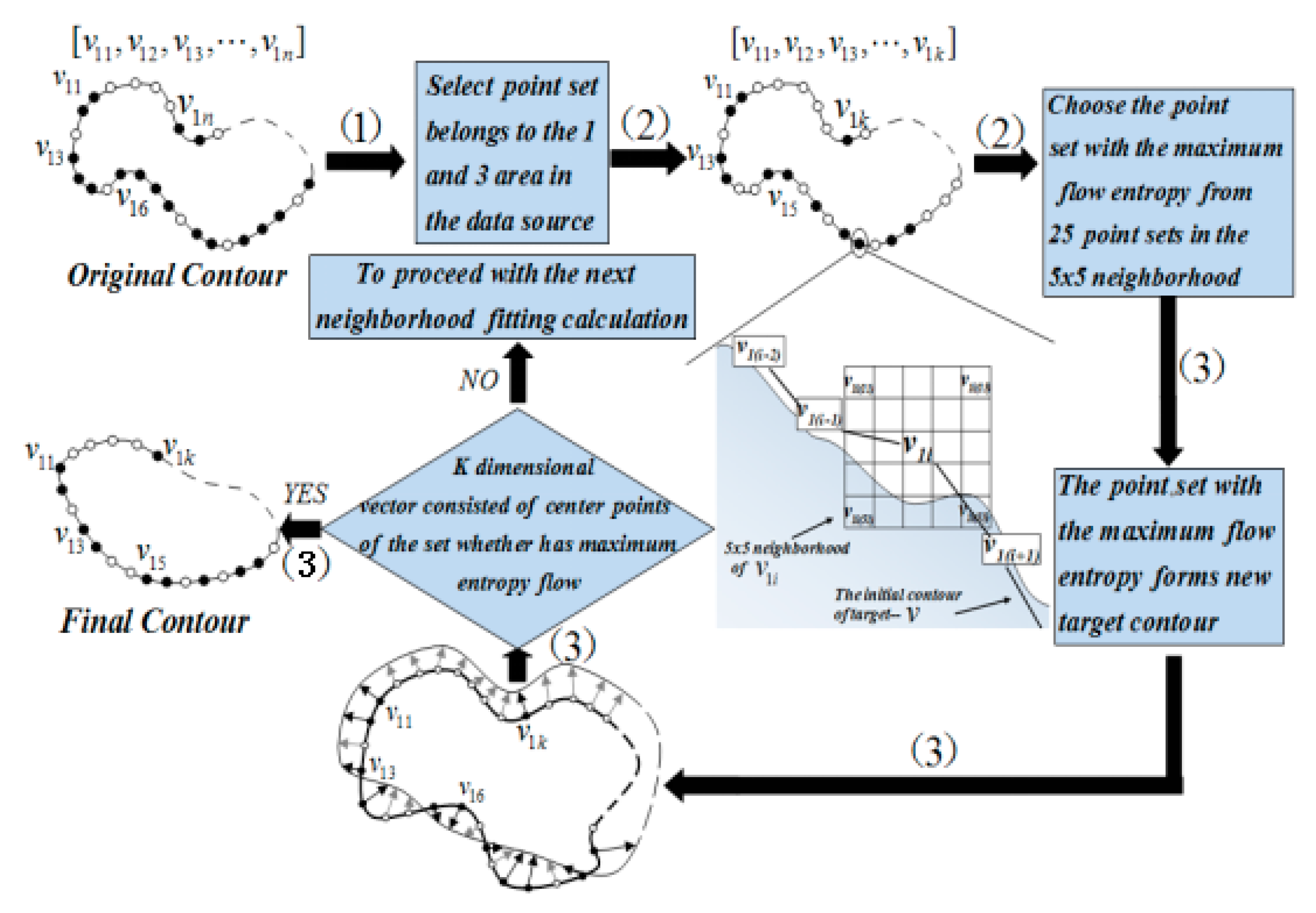

3.2. Using the Energy Theory and the Maximum Information Entropy to Fit the Contour of the Target

- (1) Randomly select N points on the contour obtained from the pre-segmentation as the initial iteration data source. Each selected point is the ith pixel of the edge line and the center of the ith 5 × 5 pixel window.

- (2) Pixels belonging to the first and third quadrant regions are selected to compose a set; each element is identified by its position (i, j) in the 5 × 5 pixel window of the corresponding center. The pixels at the same position in the 5 × 5 neighborhood of each pixel in this set form 25 sets of k-dimensional vectors. Depending on the iteration condition, either calculate the flow entropy of the pixels that belong to the first and third quadrants of the three-dimensional coordinates, or repeat step (1).

- (3) After one round of calculation, the set of pixels with the maximum flow entropy in the 5 × 5 neighborhood is selected as the final contour of the object.
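The sampling and maximum-entropy selection in these steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the flow entropy formula is not given in this text, so the Shannon entropy of the gray-level histogram in a 5 × 5 window is used as a stand-in, and the quadrant selection and k-dimensional vectors of step (2) are omitted. The names `window_entropy` and `refine_contour`, the `bins` and `seed` parameters, and the tie-breaking rule are all hypothetical.

```python
import numpy as np


def window_entropy(image, row, col, half=2, bins=16):
    """Shannon entropy (bits) of the gray-level histogram in a window.

    Assumption: this is only a stand-in for the paper's flow entropy,
    whose definition is not available in this text.
    """
    r0, r1 = max(row - half, 0), min(row + half + 1, image.shape[0])
    c0, c1 = max(col - half, 0), min(col + half + 1, image.shape[1])
    patch = image[r0:r1, c0:c1]
    counts, _ = np.histogram(patch, bins=bins, range=(0, 256))
    p = counts[counts > 0] / patch.size
    return float(-(p * np.log2(p)).sum())


def refine_contour(image, contour, n_points, half=2, seed=0):
    """Simplified steps (1)-(3): sample centers, score neighbors, keep the best."""
    rng = np.random.default_rng(seed)
    contour = np.asarray(contour)
    # Step (1): randomly pick N contour pixels as 5 x 5 window centers.
    n = min(n_points, len(contour))
    chosen = rng.choice(len(contour), size=n, replace=False)
    refined = []
    for row, col in contour[chosen]:
        row, col = int(row), int(col)
        # Candidate pixels: the 5 x 5 neighborhood, clipped to the image.
        candidates = [
            (r, c)
            for r in range(max(row - half, 0), min(row + half + 1, image.shape[0]))
            for c in range(max(col - half, 0), min(col + half + 1, image.shape[1]))
        ]
        # Step (3): keep the candidate with the maximum entropy score.
        # Ties are broken in favor of the pixel closest to the original
        # center (an assumption of this sketch, not stated in the text).
        best = max(
            candidates,
            key=lambda rc: (
                window_entropy(image, rc[0], rc[1], half),
                -((rc[0] - row) ** 2 + (rc[1] - col) ** 2),
            ),
        )
        refined.append(best)
    return refined
```

For example, on a synthetic image whose left half is 0 and right half is 255, an initial contour placed a few columns to the left of the step is pulled toward the step, because windows that straddle it have a mixed histogram and therefore a higher entropy score than windows in the flat regions.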

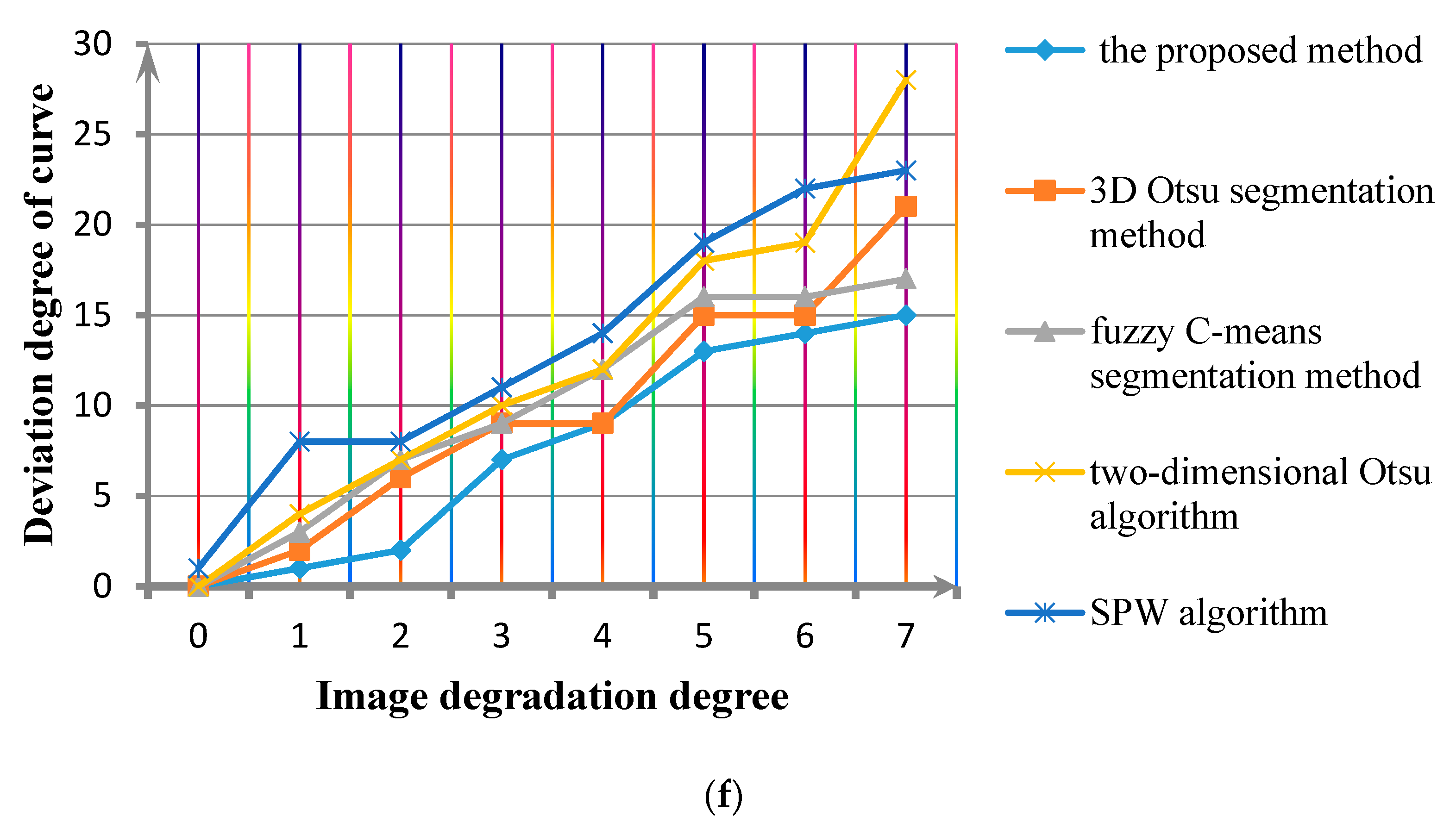

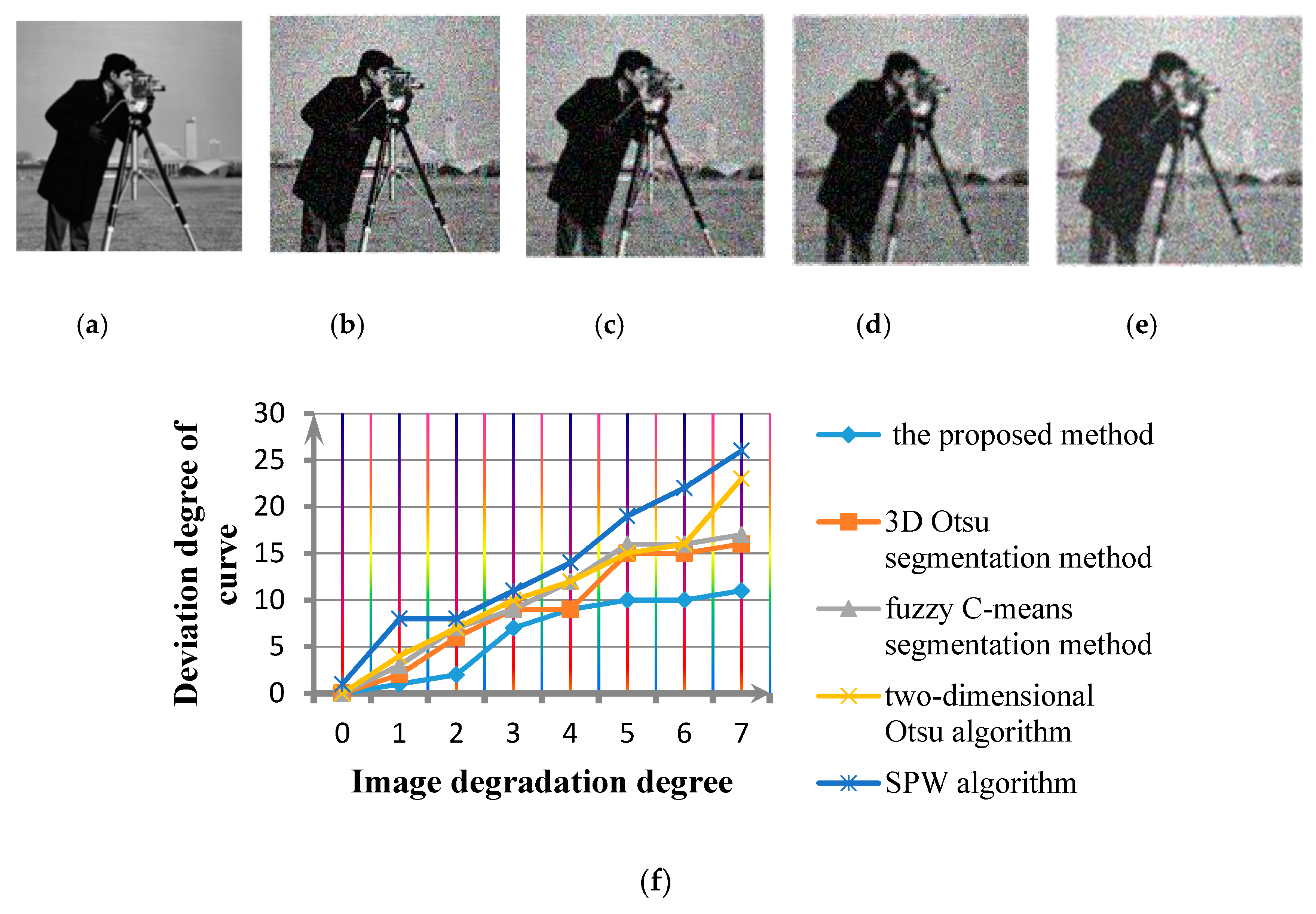

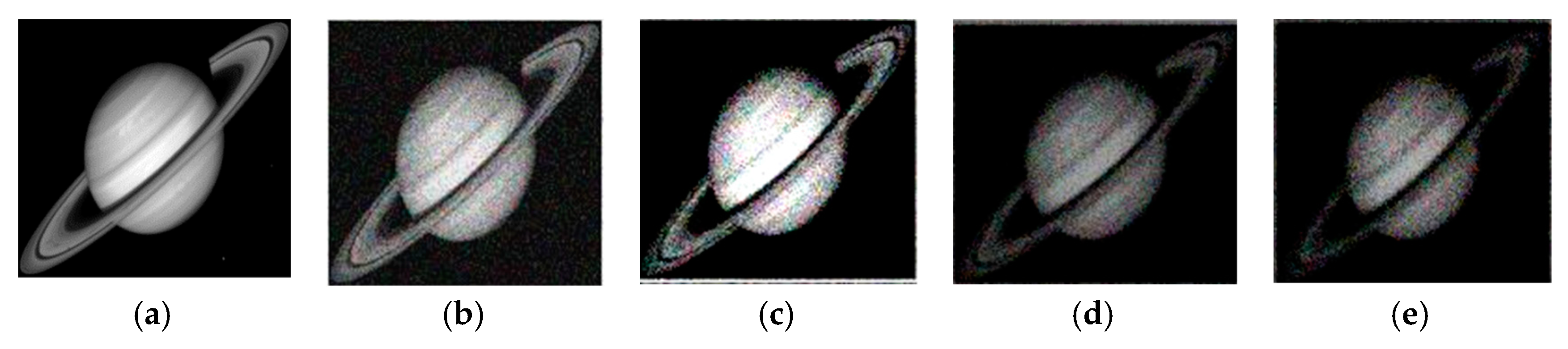

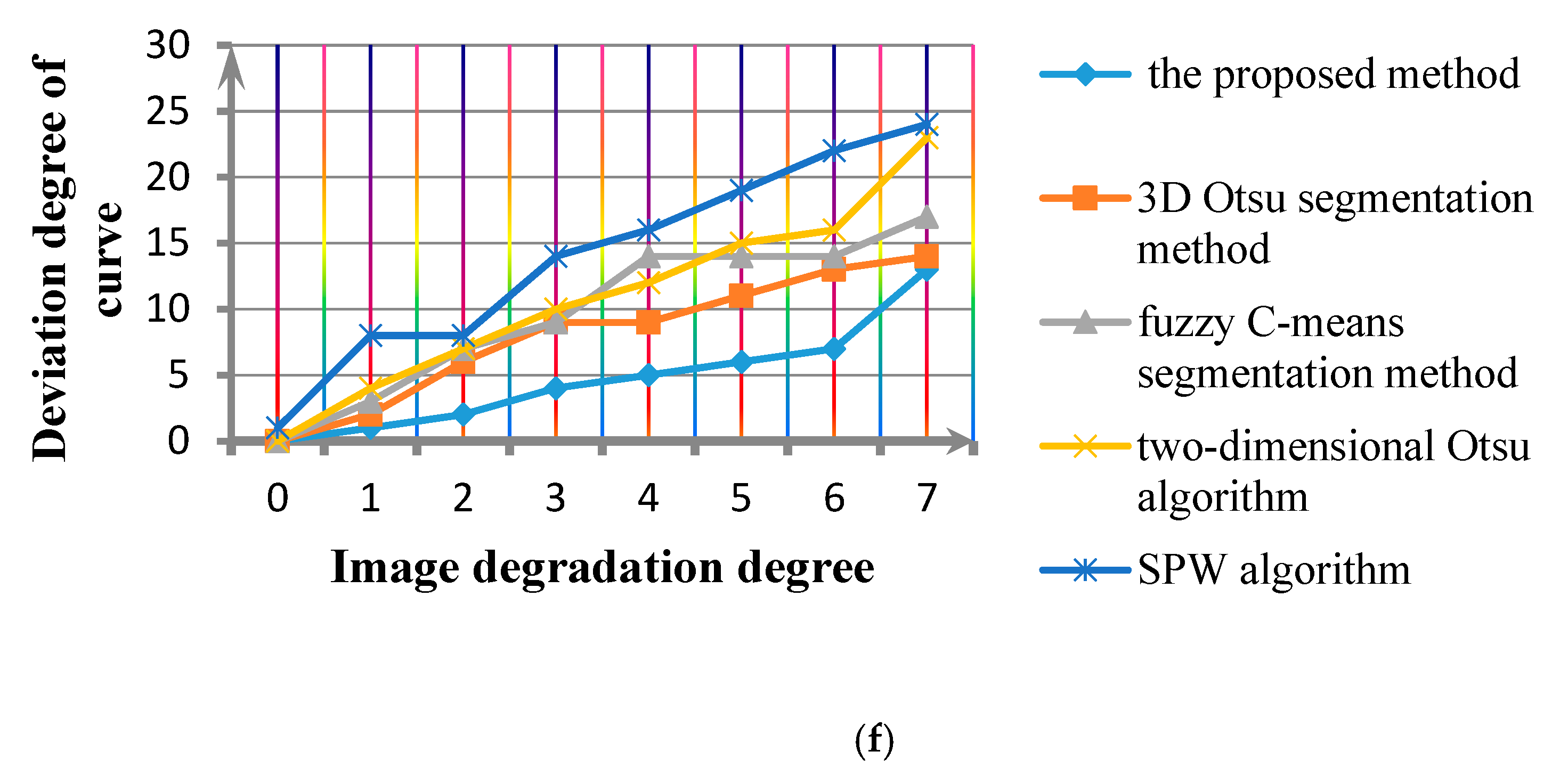

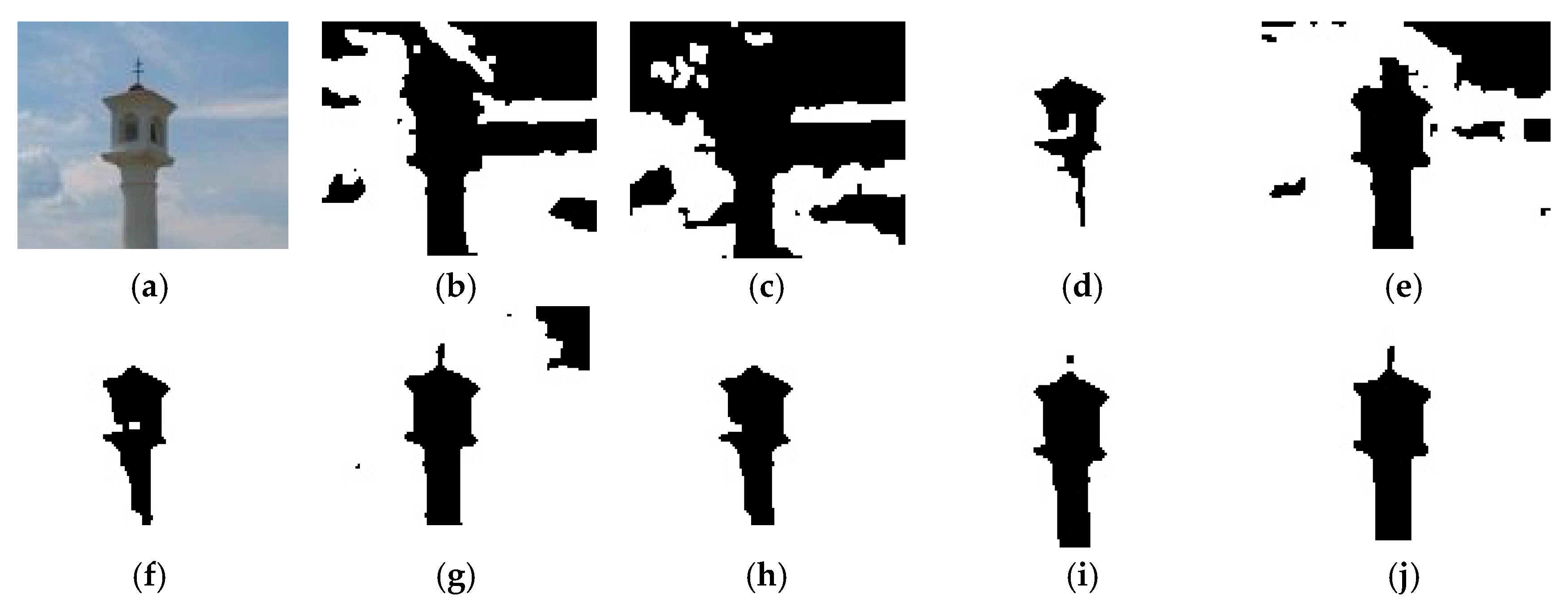

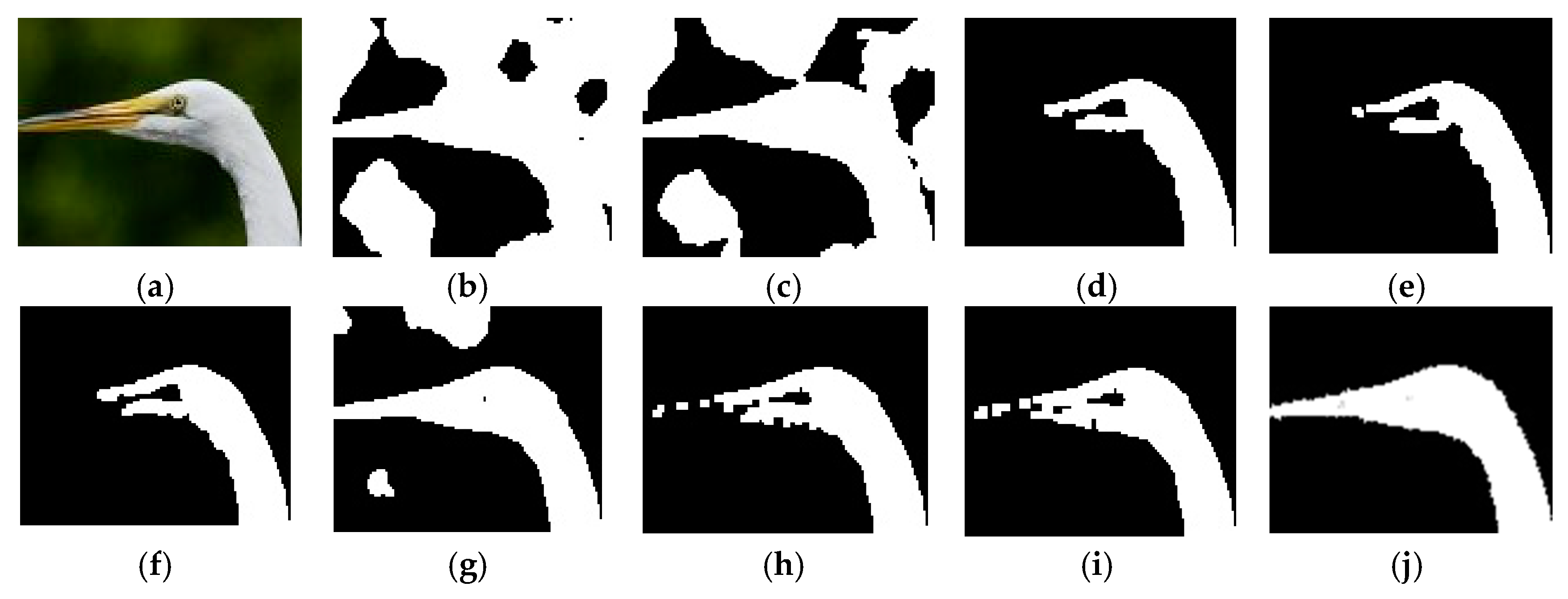

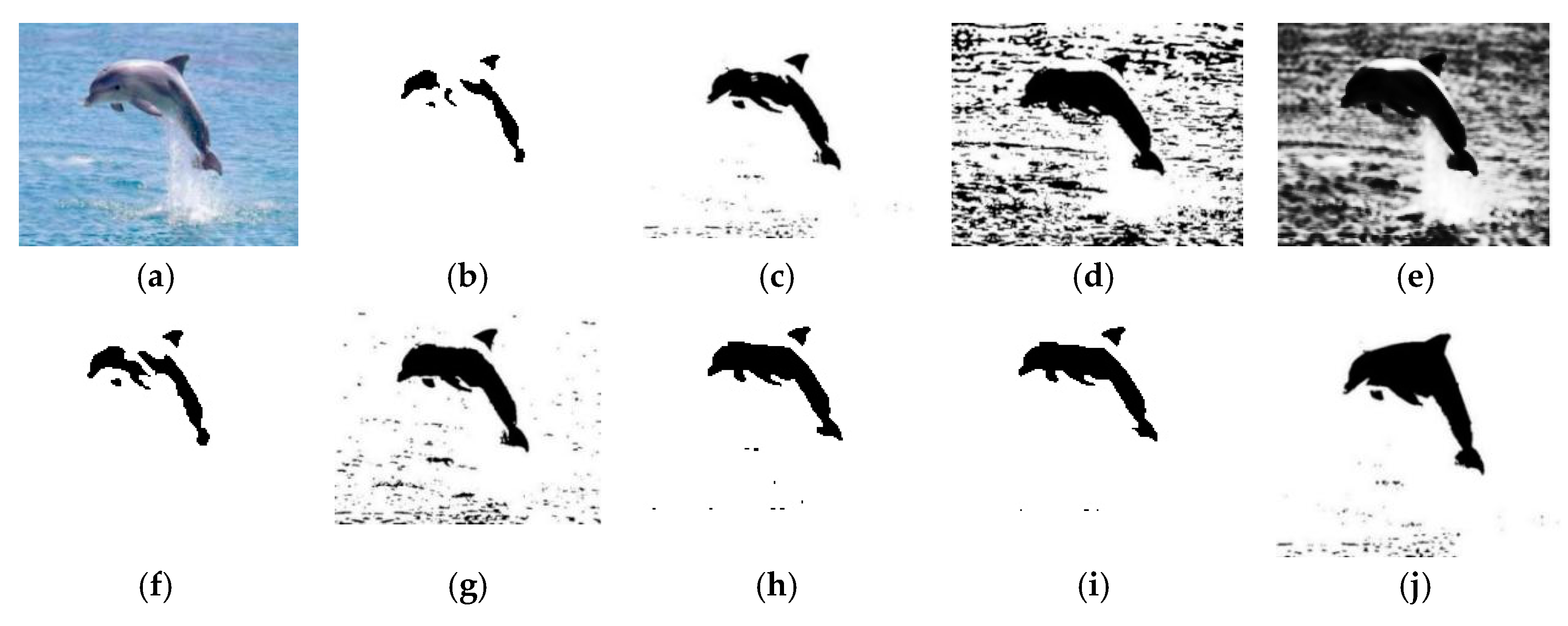

4. Experimental Results

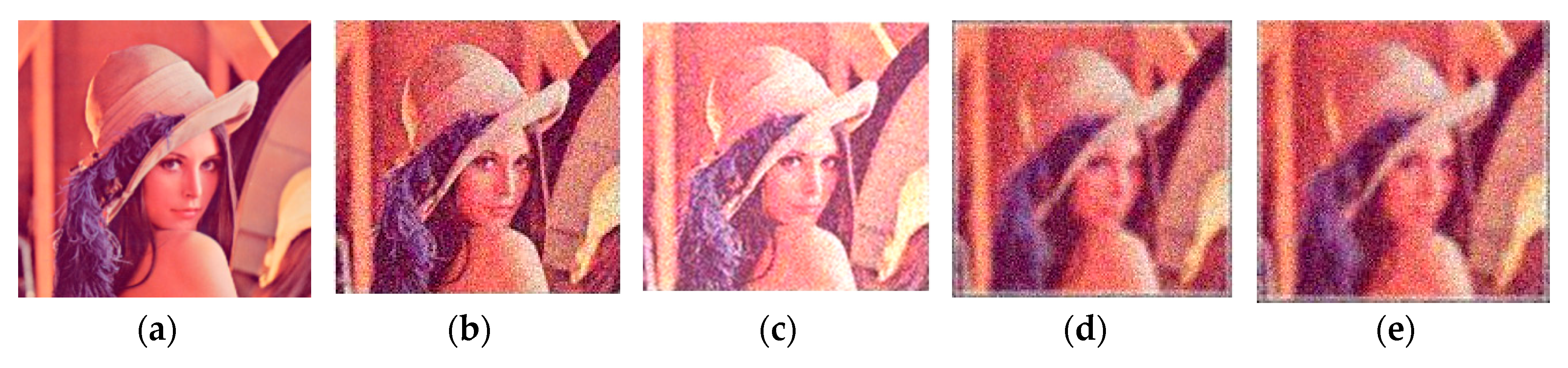

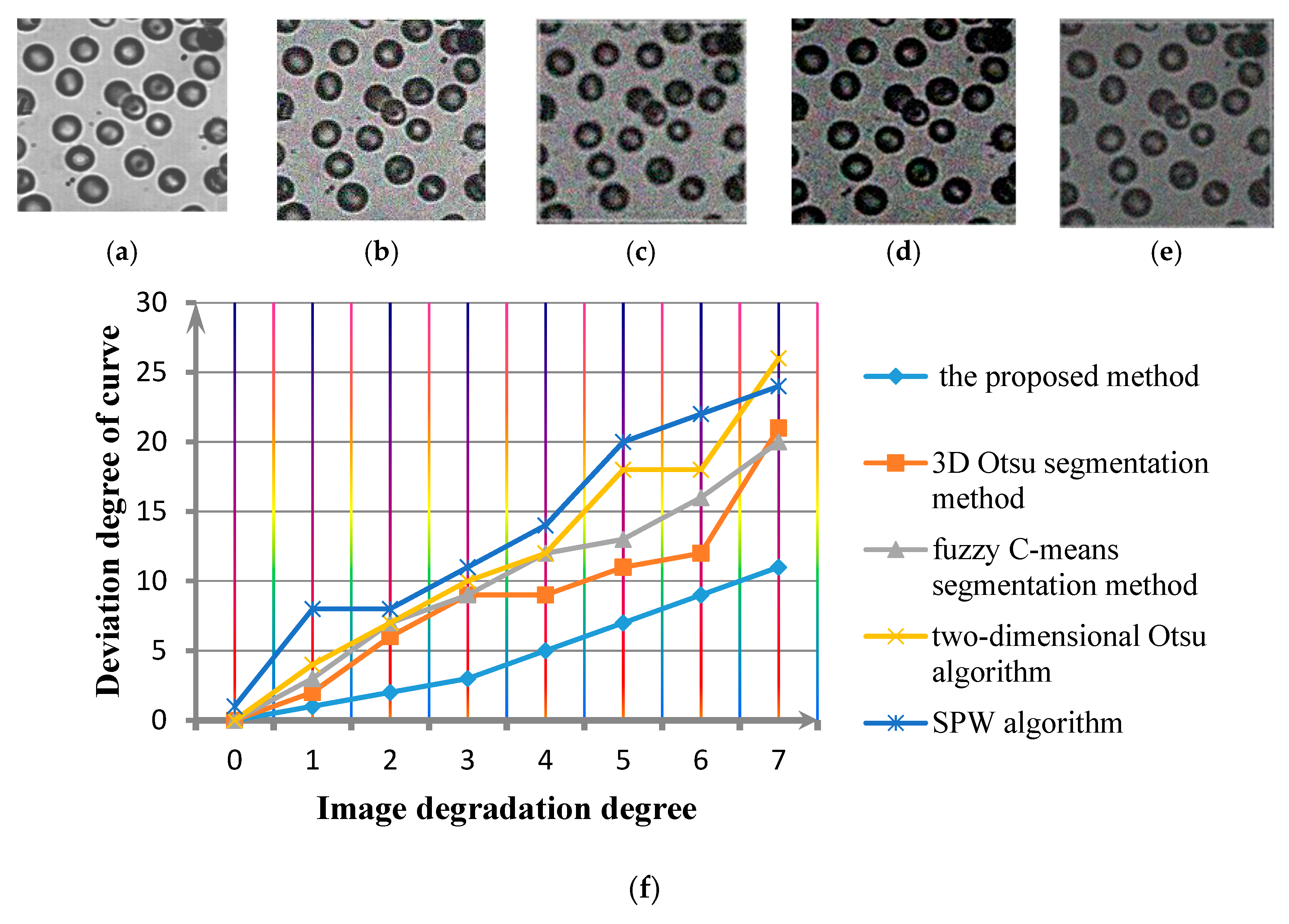

4.1. Computer Simulation Experiment

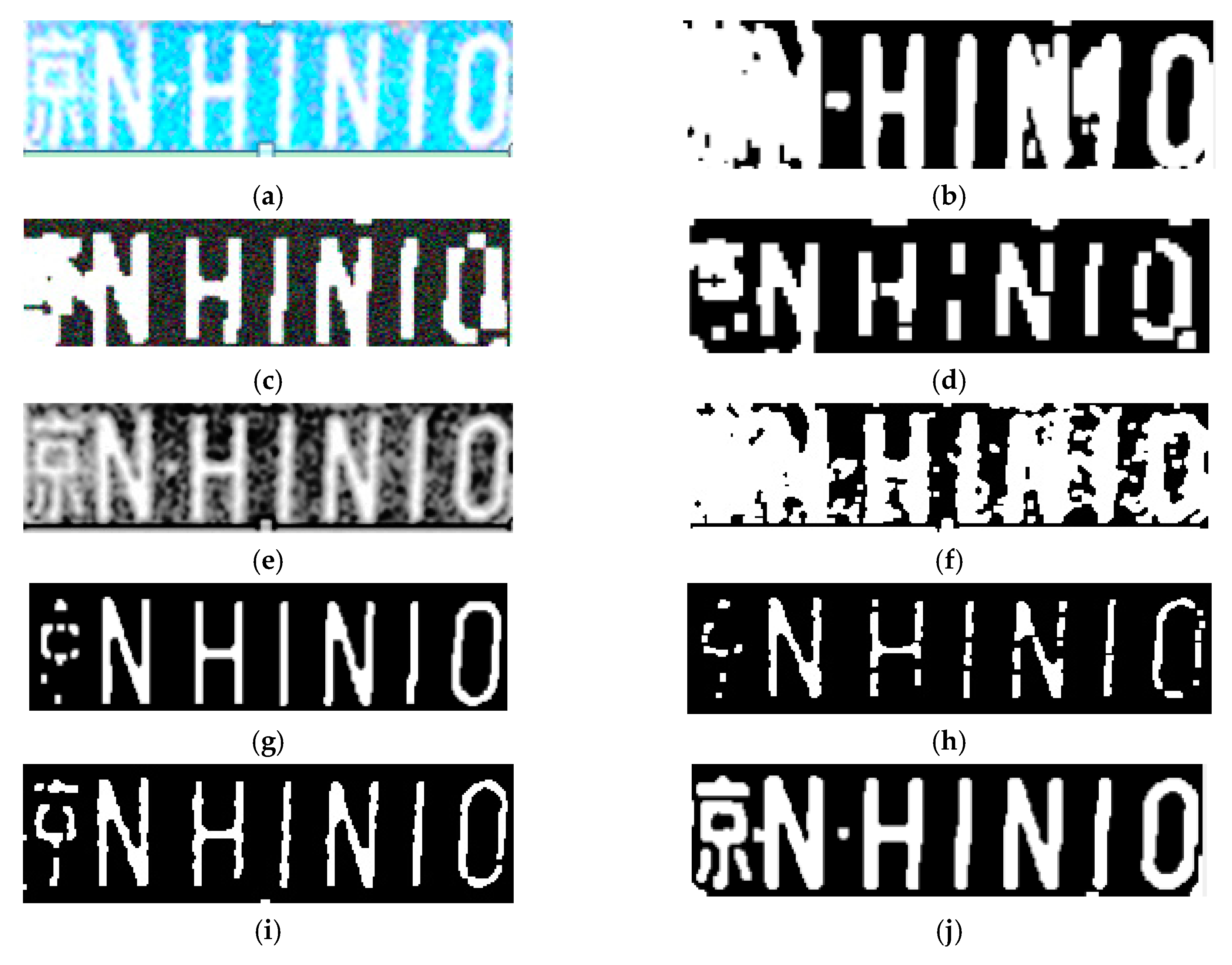

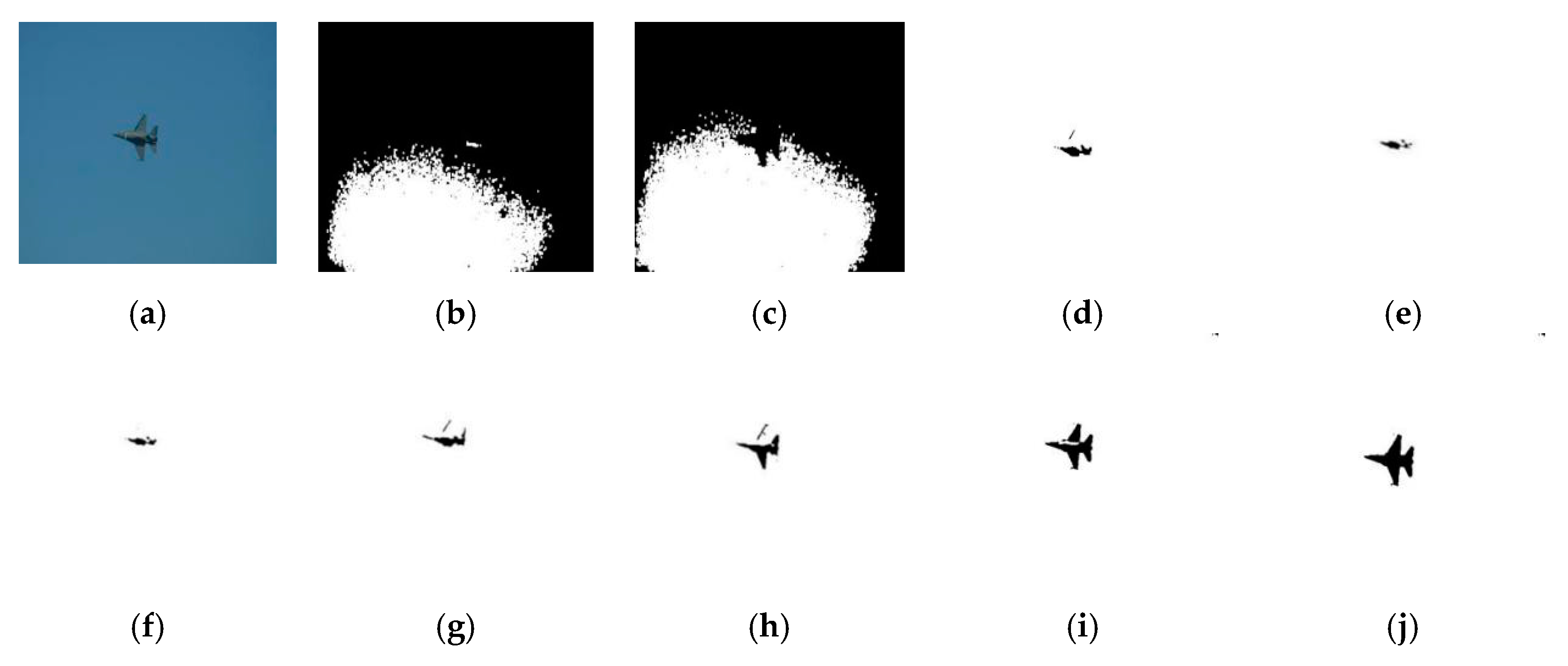

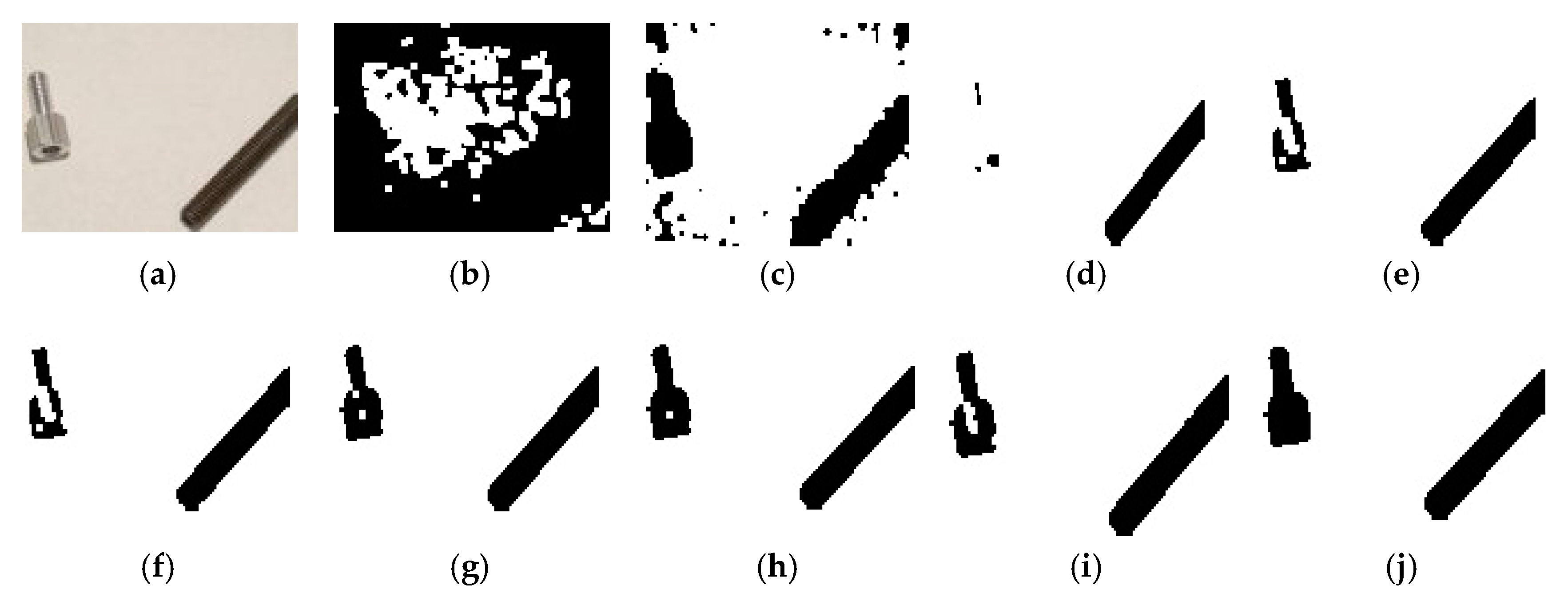

4.2. Physical Image Experiment

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Segmentation Algorithms | License Plate Image | Aircraft Image | Fighter Image | The Object Image | The Lighthouse Image | Crane Image | Dolphin Image |
|---|---|---|---|---|---|---|---|
| The proposed method | 0.9011 | 0.9523 | 0.9435 | 0.9501 | 0.8913 | 0.9042 | 0.9098 |
| SPW | 0.8016 | 0.4121 | 0.3117 | 0.5131 | 0.5962 | 0.6858 | 0.8714 |
| 2D-Otsu | 0.4036 | 0.5271 | 0.4015 | 0.7254 | 0.6525 | 0.6555 | 0.8544 |
| Fuzzy C-means | 0.5542 | 0.4651 | 0.4754 | 0.7541 | 0.7288 | 0.7359 | 0.4653 |
| MRM | 0.6654 | 0.4553 | 0.4573 | 0.7252 | 0.7075 | 0.7459 | 0.4943 |
| AAC | 0.7956 | 0.5152 | 0.5565 | 0.7752 | 0.7586 | 0.8785 | 0.7789 |
| 3D-Otsu | 0.8656 | 0.7815 | 0.7153 | 0.7963 | 0.8848 | 0.8796 | 0.8906 |
| S’s method | 0.8215 | 0.9245 | 0.8978 | 0.8645 | 0.8875 | 0.8632 | 0.8514 |
| BL method | 0.8773 | 0.8998 | 0.9015 | 0.9096 | 0.8563 | 0.8731 | 0.8721 |

| Segmentation Algorithms | License Plate Image | Aircraft Image | Fighter Image | The Object Image | The Lighthouse Image | Crane Image | Dolphin Image |
|---|---|---|---|---|---|---|---|
| The proposed method | 92.11% | 94.54% | 90.87% | 95.52% | 89.15% | 90.95% | 84.11% |
| SPW | 82.16% | 37.65% | 44.65% | 55.27% | 54.89% | 60.27% | 64.98% |
| 2D-Otsu | 57.14% | 45.15% | 45.63% | 60.16% | 63.86% | 59.88% | 55.48% |
| Fuzzy C-means | 53.16% | 36.19% | 59.61% | 68.15% | 73.02% | 65.19% | 57.46% |
| MRM | 59.15% | 63.03% | 55.30% | 65.48% | 65.53% | 59.05% | 45.89% |
| AAC | 47.51% | 53.43% | 57.15% | 56.57% | 69.51% | 60.12% | 65.88% |
| 3D-Otsu | 80.19% | 77.64% | 79.65% | 80.22% | 83.91% | 87.51% | 79.81% |
| S’s method | 84.54% | 89.89% | 82.05% | 83.79% | 86.13% | 81.15% | 81.03% |
| BL method | 85.13% | 89.25% | 81.25% | 85.94% | 80.82% | 84.12% | 80.09% |

| Segmentation Algorithms | License Plate Image | Aircraft Image | Fighter Image | The Object Image | The Lighthouse Image | Crane Image | Dolphin Image |
|---|---|---|---|---|---|---|---|
| The proposed method | 0.91 | 0.92 | 0.89 | 0.95 | 0.89 | 0.90 | 0.84 |
| SPW | 0.65 | 0.74 | 0.75 | 0.55 | 0.54 | 0.60 | 0.64 |
| 2D-Otsu | 0.57 | 0.66 | 0.70 | 0.60 | 0.63 | 0.59 | 0.55 |
| Fuzzy C-means | 0.53 | 0.58 | 0.61 | 0.68 | 0.73 | 0.65 | 0.57 |
| MRM | 0.59 | 0.65 | 0.55 | 0.64 | 0.61 | 0.59 | 0.46 |
| AAC | 0.48 | 0.56 | 0.54 | 0.56 | 0.62 | 0.60 | 0.66 |
| 3D-Otsu | 0.80 | 0.78 | 0.83 | 0.80 | 0.81 | 0.86 | 0.77 |
| S’s method | 0.82 | 0.88 | 0.80 | 0.81 | 0.85 | 0.84 | 0.80 |
| BL method | 0.81 | 0.85 | 0.85 | 0.83 | 0.86 | 0.81 | 0.81 |

| Segmentation Algorithms | License Plate Image | Aircraft Image | Fighter Image | The Object Image | The Lighthouse Image | Crane Image | Dolphin Image |
|---|---|---|---|---|---|---|---|
| The proposed algorithm | 9.25 | 9.19 | 8.97 | 8.98 | 9.01 | 9.19 | 8.49 |
| SPW | 8.17 | 8.81 | 8.74 | 7.25 | 7.19 | 8.57 | 8.23 |
| 2D-Otsu | 4.72 | 5.23 | 6.16 | 3.29 | 4.64 | 4.52 | 5.54 |
| Fuzzy C-means | 7.64 | 8.05 | 8.01 | 7.59 | 7.03 | 6.98 | 8.45 |
| MRM | 9.12 | 9.36 | 10.14 | 11.73 | 9.69 | 9.42 | 10.57 |
| AAC | 8.97 | 8.14 | 8.02 | 5.95 | 6.14 | 7.59 | 8.42 |
| 3D-Otsu | 10.12 | 12.14 | 9.69 | 11.37 | 12.49 | 10.91 | 9.56 |
| S’s method | 8.98 | 9.19 | 8.39 | 8.19 | 8.19 | 8.94 | 9.11 |
| BL method | 8.17 | 8.29 | 9.01 | 8.55 | 9.14 | 9.33 | 9.67 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, H.; Liu, L.; Lan, J. 3D Flow Entropy Contour Fitting Segmentation Algorithm Based on Multi-Scale Transform Contour Constraint. Symmetry 2019, 11, 857. https://doi.org/10.3390/sym11070857