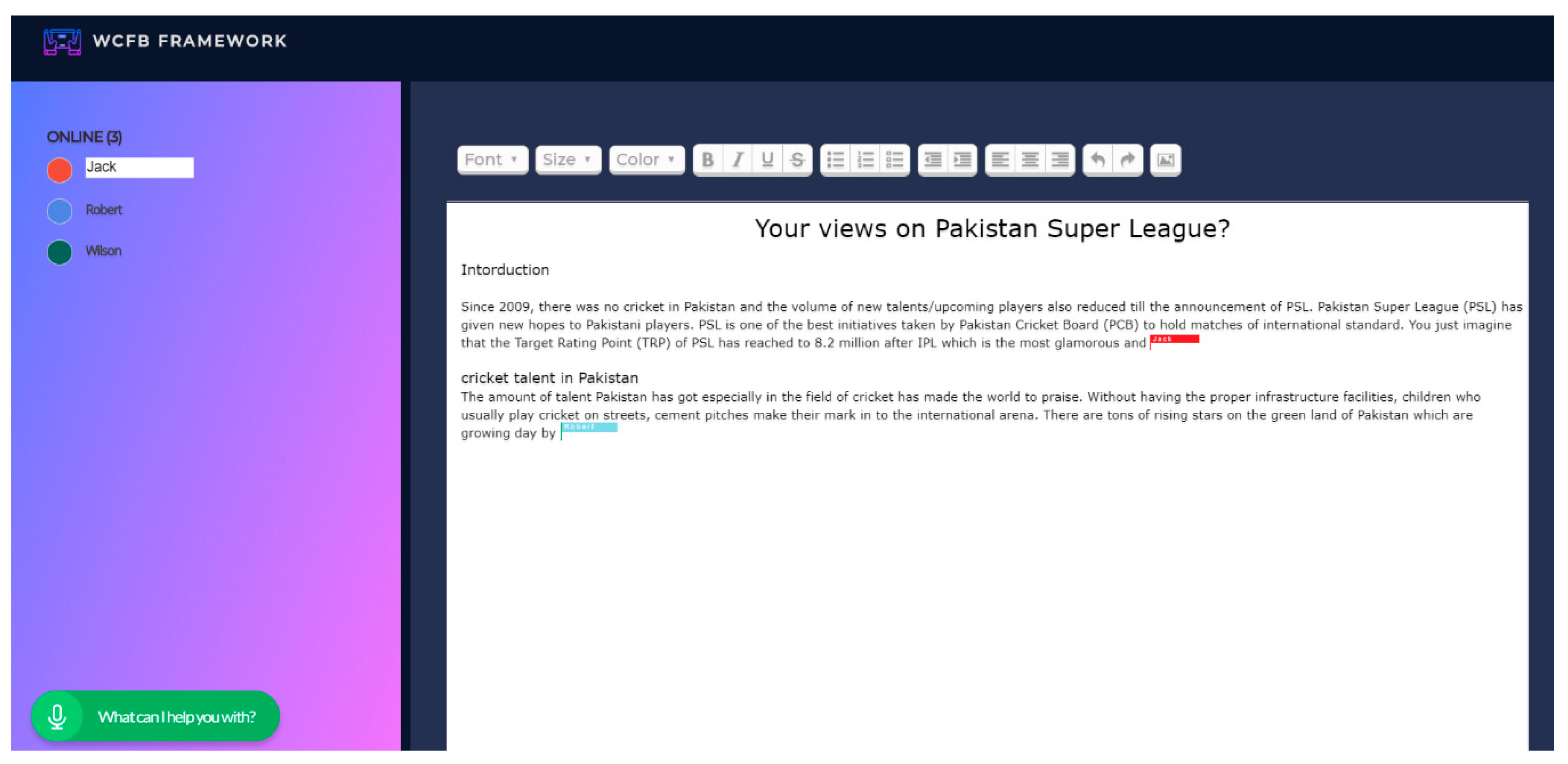

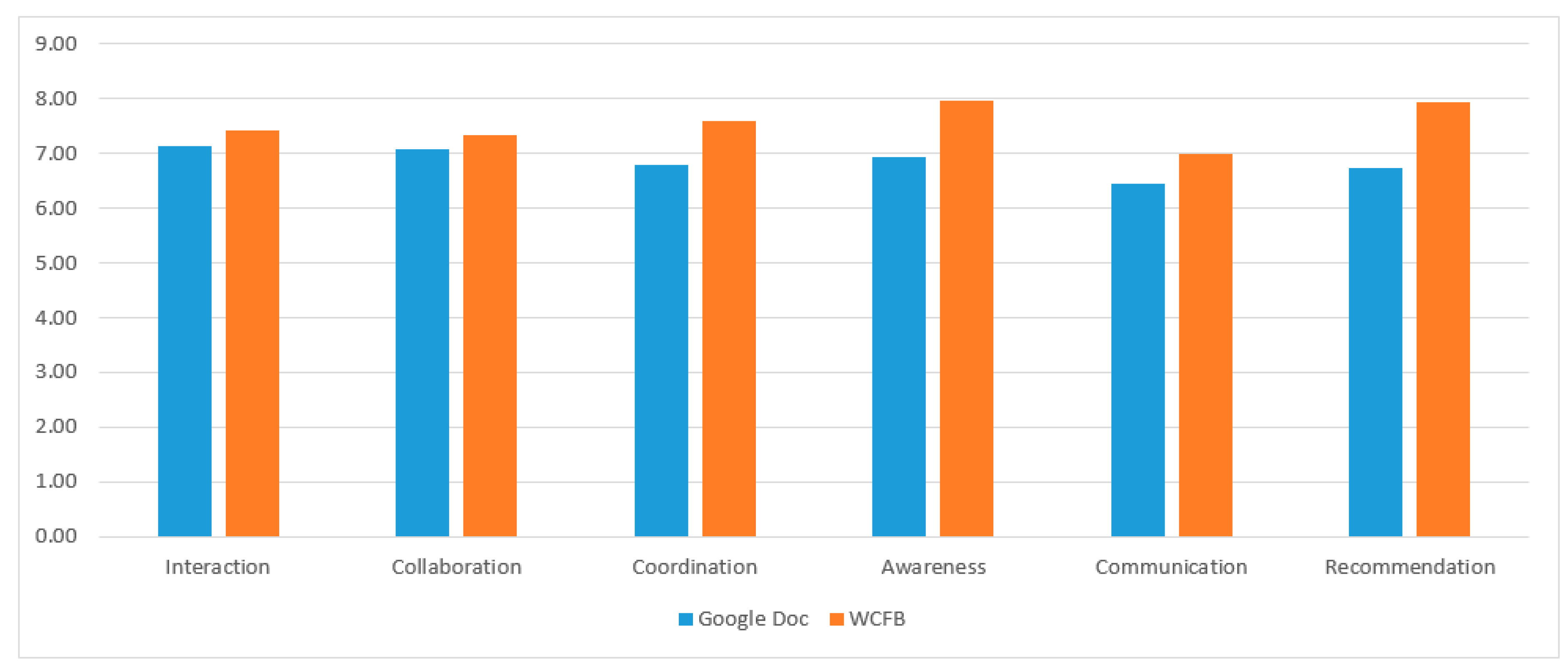

When a group of users works on a single task, the productivity and quality of the work increase, and decision making becomes faster and more efficient [13]. This is achieved through a groupware environment that provides collaboration and coordination functions [7]. To achieve high-quality coordination and collaboration, group awareness and coordination support are essential. Awareness here covers the activities of each user working in the shared platform, information about the participating authors, and the effect of each author’s activity on the activities of others [14].

2.1. Overview of Computer Supported Cooperative Work (CSCW) Systems

CSCW systems are used by many large organizations. They help collaborators by managing both asynchronous and synchronous communication [16]. Java applets made multiuser (JAMM) [17] and synchronous asynchronous structured shared editor (SASSE) [18] are examples of these collaborative systems. Inconsistency is considered one of the major issues in these systems. Inconsistency refers to the duplication of the same content in different parts of the document due to collaborative work. Yang et al. [19] presented a consistency model to avoid inconsistency problems. The Clay system [20], proposed by Locasto et al., allows users in different geographic locations to work in a synchronized manner.

Gutwin and Greenberg [21] developed a descriptive theory of awareness that guides developers of groupware applications on the importance of and need for awareness functions in a shared workspace. The proposed framework helps designers understand the concept for the purposes of designing awareness support and improving the quality of group awareness in a collaborative environment. The group awareness knowledge-based system (GAKS) [5] is a web-based application that enhances coauthors’ document writing abilities by providing them with elaborate and innovative awareness functionalities. The system provides synchronous/asynchronous contextual communication tools and a work proximity detector so that users can efficiently produce and coordinate their actions. Big Watch (BW) [22] is a framework that provides flexible and extensible awareness functionalities to its users. It can be integrated into any application to enhance its event-based awareness functions. The framework reduces the development cost and extends the awareness information in a unified way.

An extensible markup language (XML) based co-authoring platform was created by Qingzhang et al. [23] for collaborative working. Another XML-based framework was presented by Ho, Leong et al. [24]. In XML-based systems, shared documents are converted and stored in XML format. The advantage of XML is that it stores information in a structured format that is easy for both machines and humans to read. Processing the documents thus becomes easier, which helps with managing resources and access as well as with locking document content. Another system, WoTel [25], allows its authors to hold video conferences to share ideas while working on a shared document. Multimedia systems are integrated into it to support group communication.
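To make the point about structured storage and content locking concrete, the following is a minimal sketch in Python. It is not taken from the cited systems: the element names, the `lockedBy` attribute, and the helper functions are all hypothetical, chosen only to illustrate how a per-section lock could be recorded directly in an XML document.

```python
import xml.etree.ElementTree as ET

# Hypothetical shared document: one <section> per independently editable part.
doc = ET.fromstring(
    "<document>"
    "<section id='intro'>Draft intro</section>"
    "<section id='method'>Draft method</section>"
    "</document>"
)


def find_section(root, section_id):
    """Return the <section> element with the given id."""
    for section in root.iter("section"):
        if section.get("id") == section_id:
            return section
    raise KeyError(section_id)


def lock(root, section_id, author):
    """Try to lock a section for an author; return True on success."""
    section = find_section(root, section_id)
    owner = section.get("lockedBy")  # assumed attribute name
    if owner not in (None, author):
        return False  # someone else already holds the lock
    section.set("lockedBy", author)
    return True


def edit(root, section_id, author, text):
    """Replace a section's text; only the lock holder may edit."""
    section = find_section(root, section_id)
    if section.get("lockedBy") != author:
        raise PermissionError(f"{author} does not hold the lock on {section_id}")
    section.text = text


lock(doc, "intro", "alice")
edit(doc, "intro", "alice", "Revised intro")
```

Because the lock lives in the same structured document as the content, any client that can parse the XML can also see who is currently editing which part.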

A co-authoring system named TeNDeX, developed by Hodel et al. [26], allows its users to edit documents synchronously. Unlike conventional co-authoring platforms, the system saves a document’s content in a database, which increases the efficiency of data retrieval. Joeris et al. [27] proposed another synchronous collaboration application, which supports the engineering domain. The work in [28] allows its users to write mathematical expressions collaboratively. Within the interface, it provides options for writing formulae, obtaining suggestions from previously written formulae, reusing them, and evaluating them. If users run into difficulty, it also suggests currently available expert authors who may be able to help them.

2.2. Technologies and Applications for the Visually Impaired to Help in Document Writing

Assistive applications contain special user interface/user experience (UI/UX) components that enable blind users to work with them. There are three main ways to manage UI/UX for these users: well-formatted, good-quality visual content for partially blind or color-blind people, who have sight but cannot see clearly; text-to-speech functions and special assistive hardware devices for the completely blind; and sound- or speech-based alerts and voice input controls, which suit both groups. Achieving all of these in one framework is a challenge. However, some frameworks support individual features. For instance, speech-based assistants [29,30,31] designed specifically for visually impaired people are already in use, and similar applications could be designed to help them with their educational and learning activities. Many chatbots have appeared that use natural language processing (NLP) to communicate with users, to the point that the user may not realize that there is a bot behind the screen [32]. One good application of such systems is for elderly and disabled people who suffer from loneliness and have a limited social life. The system can provide them with the benefit of a distributed network and help them stay updated with their surroundings [33].

The Google Docs UI developed by Mori et al. [9] addressed the major problems faced by people who are blind when using Google Docs [34] via screen readers. The proposed UI has the same look and feel as the original Google Docs, but the accessibility of interactive elements was improved by integrating a new standard of (X)HTML interactive widgets (links, menus, buttons, and so on). To improve orientation for blind users, accessible rich internet applications (ARIA) [35] landmarks and hidden labels were added to the modified layout. The existing editor was replaced with the TinyMCE (Tiny Moxiecode Content Editor) editor, which is more accessible through the keyboard and screen reader. In addition, summary attributes were added to the document list tables to provide quick information about the document list. The issue of real-time informative messages was solved by using Ajax scripting.

A Microsoft Word add-in prototype [36] was developed to improve the usability and accessibility of collaborative writing for visually impaired individuals. The research began with a baseline usability study [37], conducted to identify the accessibility and usability issues that arise when visually impaired people use the collaborative writing features of Microsoft Word. The authors proposed a Word add-in prototype [8] that uses Windows message boxes to present the revisions and comments of the document. It is compatible with the Job Access with Speech (JAWS) screen reader and a standard keyboard. In their next proposal, they used an iterative design approach carried out in two rounds of one usability study [38]. A group of blind participants shared feedback and suggestions after each iteration, and the authors modified the prototype based on the suggested improvements.

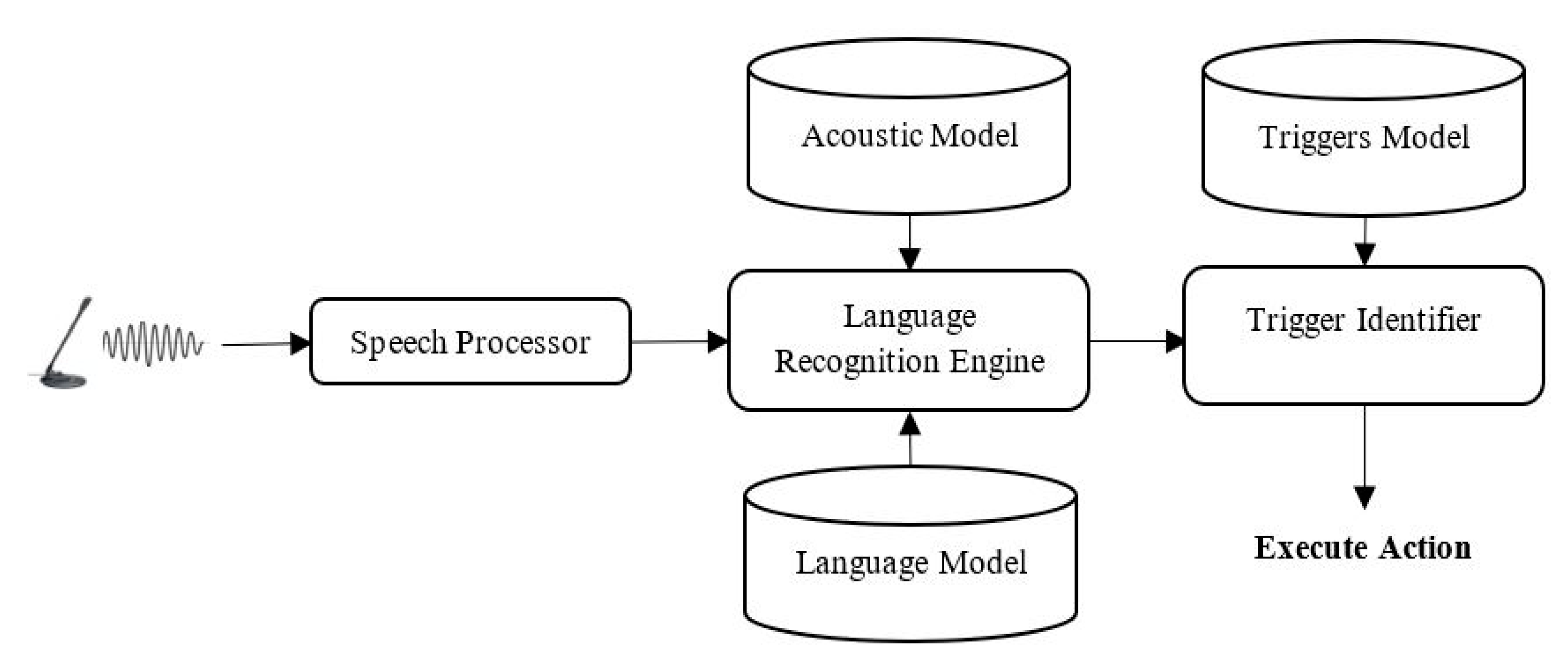

TalkMaths [39] provides blind people with a system that helps them create and edit high-precision mathematical formulas. Automatic speech recognition (ASR) and Dragon Naturally Speaking (DNS) are used to recognize speech and give textual output in the form of a “parse tree”. Moreover, TalkMaths can recognize and detect syntactic errors. The initial version of TalkMaths [40] was designed for English language users only. It included an editing mode in which the user can only delete the last typed digit. To improve the editing mode, work has started on a DNS “select-and-say” approach, similar to the mouse “point-and-click” strategy. Each box has a sequence number attached to it, so users select a specific box by dictating its position. After selecting the appropriate box, the user dictates the correct statement to overwrite the existing one.
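The select-and-say idea can be illustrated with a short Python sketch. This is not the TalkMaths implementation; the `FormulaBoxes` class and its method names are hypothetical, and speech recognition is assumed to have already produced the box number and the replacement text.

```python
from dataclasses import dataclass, field


@dataclass
class FormulaBoxes:
    """A formula split into boxes, each addressable by a sequence number."""

    boxes: list[str] = field(default_factory=list)

    def labelled(self) -> list[tuple[int, str]]:
        """Pair every box with its 1-based sequence number, as read out to the user."""
        return list(enumerate(self.boxes, start=1))

    def select_and_say(self, number: int, dictated: str) -> None:
        """Overwrite the box the user selected by number with the dictated text."""
        if not 1 <= number <= len(self.boxes):
            raise IndexError(f"no box numbered {number}")
        self.boxes[number - 1] = dictated

    def render(self) -> str:
        """Join the boxes back into a single linear expression."""
        return " ".join(self.boxes)


formula = FormulaBoxes(["x", "+", "2", "=", "7"])
formula.select_and_say(3, "3")  # user says: "select three", then "three"
```

Compared with deleting only the last typed digit, addressing boxes by number lets the user correct any part of the expression in two spoken steps.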

Writing Mathematics by Speech [41] uses speech input techniques to enable blind students to read, write, and edit their mathematical expressions as quickly and precisely as their sighted peers do. The proposed solution is an extension of the linear access to mathematics for braille device and audio-synthesis (LAMBDA) system [42], which is based on the functional integration of a linear mathematical code and an editor to visualize, write, and manipulate the formulas. It was designed to be used with Braille peripherals and vocal synthesis. For speech recognition, Dragon Naturally Speaking 9 is used. The proposed prototype is made up of a script written for the deployment of Dragon Naturally Speaking, two dictionaries (one for text input and one for mathematical input), and a Python script that enables the LAMBDA editor to perform actions on the mathematical expressions.

A web-based application [43] takes speech input for writing mathematical formulas. It is highly accessible, with good usability features. The application is context-sensitive, and its functionality is divided into categories, each of which enforces a specific syntax; this reduces the risk of speech recognition errors and ultimately yields an accurate formula. The application requires prosody to minimize voice readout problems, which affect the desired result. On the development side, the author used the Java language and the Extensible Hypertext Markup Language (XHTML) + Voice Profile, which controls voice processing and supports fast voice recognition. The final mathematical formula is written in MathML, which is preferred among the existing standards. The system has two layers: the bottom layer is written in Java and runs on Jetty, and for the top layer, a graphical user interface (GUI) and the Opera browser were chosen because they support XHTML + Voice Profile technology.

A Software Model to Support Collaboration Mathematical Work between Braille and Sighted Users [44] provides an environment in which people who are blind can work cooperatively with sighted people. The system synchronizes two views of a mathematical formula, one for the person who is blind and the other for sighted people. The expression is presented to blind users in braille, whereas a graphical illustration is used for sighted people. Support functions were included to allow blind users to perform calculations easily. A hybrid entry method was also used, with simple expressions inserted via the keyboard and complicated expressions via speech. The universal maths conversion library (UMCL) [45] is used to deal with the fact that each piece of mathematical software has one associated Braille code; the library makes it possible to switch from one Braille code to another. UMCL consists of one major segment, whereas the input and output segments depend on the number of mathematical formulae present. Canonical MathML, a method to unify MathML segments, is used to speed up the evaluation of the mathematical formulae.

Supporting Cross-Modal Collaboration in the Workplace [46] presents a cross-modal tool for collaborative editing of diagrams between visually impaired and sighted users. Initially, the authors had designed a single-user auditory interface [47] to construct node-and-link diagrams, such as organizational charts, flow diagrams, unified modeling language (UML) diagrams, and transport maps. The proposed system extends that earlier system and offers several views. The Graphical View is similar to a typical diagram editor, with a toolbar, mouse clicks, drag and drop functions, and keyboard shortcuts. In the Hierarchical Auditory view, the diagram is translated into auditory form from a tree-like hierarchical data structure to support non-visual interactions. In the Spatial Haptic representation, the PHANTOM Omni haptic device (a 3D mouse with a ‘pen’) is used to display the contents on a vertical plane where nodes act as magnetic points, and the user simply traces the stylus across the lines. The haptic and hierarchical auditory views can work together: the user locates an item in the hierarchy and gives a command so that the PHANTOM locates it on the virtual plane.

An Initial Investigation into Non-Visual Computer Supported Collaboration [48] provides a collaborative environment in which visually impaired users can interact with and manipulate simple graphs. It extends an existing application, Graph-Builder [49], that uses the PHANTOM Omni device to allow browsing and modification of bar graphs via haptic force feedback. The proposed system uses two PHANTOM Omni devices to build a collaborative environment. Two users can manipulate the same graph simultaneously, but cannot concurrently modify the same bar. Auditory signals let each user know the other’s location. Two interaction features are provided: “Come to Me” (a user uses their Omni device to haptically drag the other user’s device to their own current location) and “Go to You” (a user lets their Omni device be dragged to the other user’s proxy).
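The rule that two users may work on the same graph but not on the same bar at once can be sketched as a per-bar ownership table. The Python below is an illustration only, not the cited system’s code; the `SharedBarGraph` class and its methods are hypothetical, and haptic and audio feedback are left out.

```python
class SharedBarGraph:
    """A bar graph in which each bar can be held by at most one user at a time."""

    def __init__(self, heights):
        self.heights = list(heights)
        self._holder = {}  # bar index -> id of the user currently holding it

    def grab(self, user, bar):
        """Try to take hold of a bar; return False if another user holds it."""
        holder = self._holder.get(bar)
        if holder is not None and holder != user:
            return False
        self._holder[bar] = user
        return True

    def set_height(self, user, bar, height):
        """Change a bar's height; only the user holding the bar may do so."""
        if self._holder.get(bar) != user:
            raise PermissionError(f"{user} is not holding bar {bar}")
        self.heights[bar] = height

    def release(self, user, bar):
        """Let go of a bar so the other user can modify it."""
        if self._holder.get(bar) == user:
            del self._holder[bar]


graph = SharedBarGraph([1, 2, 3])
graph.grab("user_a", 0)
graph.grab("user_b", 1)            # a different bar, so both can work at once
graph.set_height("user_a", 0, 5)
```

A refused `grab` is a natural point at which a real system could play an auditory cue telling the second user that the bar is in use.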

Other work beyond educational activities has also been conducted to support CSCW between persons who are blind and persons who are sighted. Multimodal tools and interfaces [50] have been developed to facilitate communication and interaction between a user who is blind and users who cannot hear. They use a modality replacement function for information transition and enable communication between the users. Stacy et al. [51] explored the creation and management of accessibility in a shared environment and identified the challenges and solutions of collaborative accessibility. Based on these experiences, they proposed new methodologies and technologies to support collaborative accessibility in the home. Winberg et al. [52] reviewed the collaboration between users with visual impairments and sighted individuals across different modalities. They set up an environment in which both types of users play a game. An auditory interface is provided to the participants who have visual impairments, whereas a visual interface is available for sighted users. Issues with the collaborative interface were observed, and revised design principles were presented for users with visual impairments. Another paper [53] presents the methodologies used by users with visual impairments when interacting with computer systems and describes their pros and cons. Based on this analysis, the authors presented recommendations for the user interfaces of groupware and chat applications designed for persons with visual impairments, to enhance usability without losing the users’ interest. Finally, a prototype named Blind Internet Relay Chat (BIRC) was proposed, and its advantages and limitations were discussed.

2.3. Limitations and Relevant Recommendations

Some of the systems that we reviewed implement basic techniques, such as screen readers, sound alerts, and popup notifications, whereas others have advanced features, such as speech-based input, voice alerts, and braille peripherals, to help visually impaired users perform group document-writing activities involving text content or mathematical expressions.

Table 1 presents the characteristics of the discussed systems based on the attributes chosen for comparative study and analysis.

The application platform indicates whether an application is accessible to its users through a web portal, whether an installation is required, or whether it is an add-in that needs to be integrated with an already installed application. The application objective states exactly what the application does to support its end user. The intended audience is another important factor when designing an application, because it helps in selecting and designing the best input/output approaches for the users instead of relying on standard mechanisms. An awareness mechanism should be implemented very efficiently to make an application interactive and responsive. Advanced interactive components, such as speech-based input, assistive hardware devices, and voice alerts, are needed for blind users to interact with the application, and these are not very common input/output methods [54]. The user’s workspace describes whether an application is usable by only one user at a time or whether multiple users can work simultaneously. Some platforms are used at the same place and time, others can be used at different times and in different places, and some use a hybrid approach, i.e., a mixture of both [7]. When users are blind, security and privacy must be explicitly defined and handled.

Various approaches have been used to integrate user interactions and awareness in the system. These include dialogue boxes, warning messages, sound beeps, speech alerts, text to speech, and so on. Text to speech, sound beeps, and speech alerts are very popular. However, users have to listen to long speeches to obtain the information they need [4], and if a user misses part of a speech or cannot grasp the information in time, they must listen to the whole speech again. Systems should therefore convey the maximum amount of information in a limited amount of speech and give the user control over the speech, with functions such as stop, repeat, and skip item.
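The stop, repeat, and skip controls recommended above can be sketched as a small queue of spoken items. The Python below is a hedged illustration, not an implementation from any reviewed system: the `SpeechQueue` class is hypothetical, and it returns the text that would be passed to a text-to-speech engine rather than producing audio.

```python
class SpeechQueue:
    """Queue of short spoken items that the listener can stop, repeat, or skip."""

    def __init__(self, items):
        self.items = list(items)
        self.position = 0
        self.stopped = False

    def speak(self):
        """Return the current item, or None when stopped or finished."""
        if self.stopped or self.position >= len(self.items):
            return None
        return self.items[self.position]

    def repeat(self):
        """Read the current item again without moving forward."""
        return self.speak()

    def skip(self):
        """Move to the next item and return it."""
        if self.position < len(self.items):
            self.position += 1
        return self.speak()

    def stop(self):
        """Silence the queue; speak() returns None from now on."""
        self.stopped = True


alerts = SpeechQueue([
    "Ali edited section 2",
    "Sara added a comment",
    "Document saved",
])
first = alerts.speak()
again = alerts.repeat()
second = alerts.skip()
```

Splitting awareness information into short items means a missed alert costs the user one repeat of a single item instead of a replay of one long speech.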

In mathematical writing applications, the major issue is editing an already written formula. Some basic solutions have been proposed for this issue, such as dictating the position of the content and then updating it [39], or going through the whole expression and updating the content after reaching the relevant part. The solution to this problem might be the same as discussed earlier: the user must have control over the spoken content. Another limitation is the extensive structure that the user needs to speak for very long and complex mathematical expressions. Predictions could be added to speed up the process. In addition, a memory could be added to store frequently used expressions and re-use them with a single call. The system should interact with the user to enhance the application’s usability; for instance, it should respond to every action undertaken by a visually impaired user. The response time should also be kept small so that the system works efficiently and meets the requirements of the user.
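The two suggestions above, prediction and a memory of frequently used expressions, can be sketched together in a few lines of Python. This is only an assumption about how such a feature might look; the `ExpressionMemory` class, its method names, and the simple prefix-matching strategy are hypothetical and are not drawn from any reviewed system.

```python
from collections import Counter


class ExpressionMemory:
    """Remembers dictated expressions to predict and recall them later."""

    def __init__(self):
        self._counts = Counter()   # expression -> number of times dictated
        self._shortcuts = {}       # spoken shortcut -> stored expression

    def record(self, expression):
        """Note that an expression was dictated once more."""
        self._counts[expression] += 1

    def predict(self, spoken_prefix, limit=3):
        """Suggest stored expressions starting with the prefix, most used first."""
        matches = [
            (expr, count)
            for expr, count in self._counts.items()
            if expr.startswith(spoken_prefix)
        ]
        matches.sort(key=lambda pair: (-pair[1], pair[0]))
        return [expr for expr, _ in matches[:limit]]

    def name(self, shortcut, expression):
        """Bind a short spoken name to a long expression."""
        self._shortcuts[shortcut] = expression

    def recall(self, shortcut):
        """Return the expression stored under a spoken shortcut."""
        return self._shortcuts[shortcut]


memory = ExpressionMemory()
memory.record("a squared plus b squared")
memory.record("a squared plus b squared")
memory.record("a squared minus b squared")
memory.name("pythagoras", "a squared plus b squared equals c squared")
suggestions = memory.predict("a squared")
```

With a memory like this, a long expression needs to be dictated in full only once; afterwards a short prefix or a single named call is enough.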

In a collaborative environment, authentication, authorization, and the security of users’ personal information become important aspects of the application [55]. Some systems have privacy and security techniques implemented, but only at a basic level. More work is needed on security, especially when the user of the application is a blind person. One approach that could be used is voice-based authentication: because the user is blind, authentication can be completed through spoken words. Some systems have voice input features, but none of them supports voice-based authentication.

Multiple efforts are in progress to standardize educational software applications for visually impaired users, and on that basis, some standards have been developed for the implementation and realization of these applications [56]. However, there is still room for further research.