Abstract

In this paper, we propose a new estimation procedure for discovering the structure of Gaussian Markov random fields (MRFs) with false discovery rate (FDR) control, making use of the sorted $\ell_1$-norm (SL1) regularization. A Gaussian MRF is an undirected graph representing a multivariate Gaussian distribution, where nodes are random variables and edges represent the conditional dependence between the connected nodes. Since it is possible to learn the edge structure of Gaussian MRFs directly from data, Gaussian MRFs provide an excellent way to understand complex data by revealing the dependence structure among many input features, such as genes, sensors, users, documents, etc. In learning the graphical structure of Gaussian MRFs, the goal is to discover the actual edges of the underlying but unknown probabilistic graphical model; this becomes more complicated as the number of random variables (features) p grows relative to the number of data points n. In particular, when $p \gg n$, it is statistically unavoidable for any estimation procedure to include false edges. Therefore, there have been many attempts to reduce the false detection of edges, in particular, using different types of regularization on the learning parameters. Our method makes use of the SL1 regularization, introduced recently for model selection in linear regression. We focus on the benefit of SL1 regularization that it can be used to control the FDR of detecting important random variables. Adapting SL1 for probabilistic graphical models, we show that SL1 can be used for the structure learning of Gaussian MRFs using our suggested procedure nsSLOPE (neighborhood selection Sorted L-One Penalized Estimation), controlling the FDR of detecting edges.

1. Introduction

Estimation of the graphical structure of Gaussian Markov random fields (MRFs) has been a topic of active research in machine learning, data analysis and statistics. The reason is that they provide an efficient means of representing complex statistical relations among many variables in the form of a simple undirected graph, disclosing new insights about interactions of genes, users, news articles, operational parts of a human driver, to name a few.

One mainstream of the research is to estimate the structure by maximum likelihood estimation (MLE), penalizing the $\ell_1$-norm of the learning parameters. In this framework, structure learning of a Gaussian MRF is equivalent to finding a sparse inverse covariance matrix of a multivariate Gaussian distribution. To formally describe the connection, let us consider n samples of p jointly Gaussian random variables following $\mathcal{N}(0, \Sigma)$, where the mean is zero without loss of generality and $\Sigma \in \mathbb{R}^{p \times p}$ is the covariance matrix. The estimation is essentially the MLE of the inverse covariance matrix $\Theta := \Sigma^{-1}$ under an $\ell_1$-norm penalty, which can be stated as a convex optimization problem [1]:

$$\hat{\Theta} = \arg\min_{\Theta \succ 0} \; -\log\det\Theta + \mathrm{tr}(S\Theta) + \lambda \|\Theta\|_1 \tag{1}$$

Here, $S$ is the sample covariance matrix, and $\lambda > 0$ is a tuning parameter that determines the element-wise sparsity of $\hat{\Theta}$.

The $\ell_1$-regularized MLE approach (1) has been addressed quite extensively in the literature. The convex optimization formulation was first discussed in References [2,3]. A block-coordinate descent type algorithm was developed in Reference [4], which also revealed that the sub-problems of (1) can be solved in the form of LASSO regression problems [5]. More efficient solvers have been developed to deal with high-dimensional cases [6,7,8,9,10,11,12,13]. On the theoretical side, we are interested in two aspects: one is the estimation quality of $\hat{\Theta}$ and the other is the variable selection quality of $\hat{\Theta}$. These aspects are still under investigation in theory and experiments [1,3,14,15,16,17,18,19,20], as new analyses become available for the closely related $\ell_1$-penalized LASSO regression in vector spaces.

Among these, our method inherits the spirit of References [14,19] in particular, where the authors considered the problem (1) in terms of a collection of local regression problems defined for each random variable.

LASSO and SLOPE

Under a linear data model $b = A\beta + \epsilon$ with a data matrix $A \in \mathbb{R}^{n \times d}$ and $\epsilon \sim \mathcal{N}(0, \sigma^2 I_n)$, the $\ell_1$-penalized estimation of $\beta$ is known as the LASSO regression [5], whose estimate is given by solving the following convex optimization problem:

$$\hat{\beta} = \arg\min_{\beta \in \mathbb{R}^d} \; \frac{1}{2}\|b - A\beta\|_2^2 + \lambda\|\beta\|_1$$

where $\lambda > 0$ is a tuning parameter which determines the sparsity of the estimate $\hat{\beta}$. Two important statistical properties of $\hat{\beta}$ are (i) the distance between the estimate $\hat{\beta}$ and the population parameter vector $\beta$ and (ii) the detection of nonzero locations of $\beta$ by $\hat{\beta}$. We can rephrase the former as the estimation error and the latter as the model selection error. Both types of error depend on the choice of the tuning parameter $\lambda$. Regarding the model selection, it is known that the LASSO regression can control the family-wise error rate (FWER) at level $\alpha \in [0,1]$ by choosing $\lambda = \sigma\Phi^{-1}(1 - \alpha/2d)$, where $\Phi^{-1}$ is the quantile function of the standard normal distribution [21]. The FWER is essentially the probability of including at least one entry as nonzero in $\hat{\beta}$ which is zero in $\beta$. In high-dimensional cases where $d \gg n$, controlling FWER is quite restrictive since false positive detection of nonzero entries is unavoidable. As a result, FWER control can lead to weak detection power of nonzero entries.

The SLOPE is an alternative procedure for the estimation of $\beta$, using the sorted $\ell_1$-norm penalization instead. The SLOPE solves a modified convex optimization problem,

$$\hat{\beta} = \arg\min_{\beta \in \mathbb{R}^d} \; \frac{1}{2}\|b - A\beta\|_2^2 + J_\lambda(\beta)$$

where $J_\lambda(\cdot)$ is the sorted $\ell_1$-norm defined by

$$J_\lambda(\beta) := \sum_{k=1}^{d} \lambda_k |\beta|_{(k)}$$

where $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_d \ge 0$ and $|\beta|_{(k)}$ is the kth largest component of $\beta$ in magnitude. In Reference [21], it has been shown that, for linear regression, the SLOPE procedure can control the false discovery rate (FDR) of model selection at level $q \in [0,1]$ by choosing $\lambda_k = \sigma\Phi^{-1}(1 - kq/2d)$. The FDR is the expected ratio of false discoveries (i.e., the number of false nonzero entries) to total discoveries. Since controlling FDR is less restrictive for model selection compared to the FWER control, FDR control can lead to a significant increase in detection power, while it may slightly increase the total number of false discoveries [21].
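As a small illustration of the sorted $\ell_1$-norm, which pairs the kth largest coefficient magnitude with the kth largest weight, the following Python sketch evaluates the penalty for a given coefficient vector and weight sequence. The function name `sorted_l1_norm` and the input checks are illustrative choices of ours and are not taken from any SLOPE implementation.

```python
def sorted_l1_norm(beta, lam):
    """Return sum_k lam[k] * |beta|_(k), where |beta|_(k) is the k-th largest magnitude.

    `lam` is assumed to be non-negative and non-increasing, as required
    for the penalty to be a norm.
    """
    if len(beta) != len(lam):
        raise ValueError("beta and lam must have the same length")
    if any(lam[k] < lam[k + 1] for k in range(len(lam) - 1)):
        raise ValueError("lam must be non-increasing")
    if lam and lam[-1] < 0:
        raise ValueError("lam must be non-negative")
    # Sort magnitudes in decreasing order so the largest one meets lam[0].
    magnitudes = sorted((abs(b) for b in beta), reverse=True)
    return sum(weight * mag for weight, mag in zip(lam, magnitudes))


# Example: magnitudes sorted as (3, 2, 1) are paired with weights (3, 2, 1).
example_value = sorted_l1_norm([1.0, -3.0, 2.0], [3.0, 2.0, 1.0])
```

With a constant weight sequence the value coincides with the ordinary $\ell_1$-norm times that constant, which is one way to see that the LASSO penalty is a special case.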

This paper is motivated by the SLOPE method [21] for its use of the SL1 regularization, which brings many benefits not available with the popular $\ell_1$-based regularization: the capability of false discovery rate (FDR) control [21,22], adaptivity to unknown signal sparsity [23] and clustering of coefficients [24,25]. Also, efficient optimization methods [13,21,26] and more theoretical analysis [23,27,28,29] are under active research.

In this paper, we propose a new procedure to find a sparse inverse covariance matrix estimate, which we call nsSLOPE (neighborhood selection Sorted L-One Penalized Estimation). Our nsSLOPE procedure uses the sorted $\ell_1$-norm for penalized model selection, whereas the existing gLASSO (1) method uses the $\ell_1$-norm for the purpose. We investigate our method in two aspects, in theory and in experiments, showing (i) how the estimation error can be bounded, and (ii) how the model selection (more specifically, the neighborhood selection [14]) can be done with FDR control on the edge structure of the Gaussian Markov random field. We also provide a simple yet efficient estimation algorithm that is well suited to parallel computation.

2. nsSLOPE (Neighborhood Selection Sorted L-One Penalized Estimation)

Our method is based on the idea that the estimation of the inverse covariance matrix of a multivariate normal distribution can be decomposed into the multiple regression on conditional distributions [14,19].

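For a formal description of our method, let us consider a p-dimensional random vector $x \sim \mathcal{N}(0, \Sigma)$, denoting its ith component as $x_i \in \mathbb{R}$ and the sub-vector without the ith component as $x_{-i} \in \mathbb{R}^{p-1}$. For the inverse covariance matrix $\Theta := \Sigma^{-1}$, we use $\Theta_i$ and $\Theta_{-i}$ to denote the ith column of the matrix and the rest of $\Theta$ without the ith column, respectively.

From the Bayes rule, we can decompose the full log-likelihood into the following parts, where $x^j$ denotes the jth sample:

$$\sum_{j=1}^{n} \log P_{\Theta}(x = x^j) = \sum_{j=1}^{n} \log P_{\Theta_i}(x_i = x_i^j \mid x_{-i} = x_{-i}^j) + \sum_{j=1}^{n} \log P_{\Theta_{-i}}(x_{-i} = x_{-i}^j)$$

This decomposition allows a block-wise optimization of the full log-likelihood, which iteratively optimizes $P_{\Theta_i}(x_i \mid x_{-i})$ while the parameters in $P_{\Theta_{-i}}(x_{-i})$ are fixed at the current value.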

2.1. Sub-Problems

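In the block-wise optimization approach we mentioned above, we need to deal with the conditional distribution of $x_i$ given $x_{-i}$ in each iteration. When $x \sim \mathcal{N}(0, \Sigma)$, the conditional distribution also follows the Gaussian distribution [30], in particular:

$$x_i \mid x_{-i} \sim \mathcal{N}\left(\Sigma_{-i,i}^T \Sigma_{-i,-i}^{-1} x_{-i},\; \Sigma_{ii} - \Sigma_{-i,i}^T \Sigma_{-i,-i}^{-1} \Sigma_{-i,i}\right)$$

Here $\Sigma_{-i,i} \in \mathbb{R}^{p-1}$ denotes the ith column of $\Sigma$ without the ith row element, $\Sigma_{-i,-i} \in \mathbb{R}^{(p-1) \times (p-1)}$ denotes the sub-matrix of $\Sigma$ without the ith column and the ith row and $\Sigma_{ii}$ is the ith diagonal component of $\Sigma$. Once we define $\beta^i$ and $\sigma_i^2$ as follows,

$$\beta^i := \Sigma_{-i,-i}^{-1} \Sigma_{-i,i} \in \mathbb{R}^{p-1}, \qquad \sigma_i^2 := \Sigma_{ii} - \Sigma_{-i,i}^T \Sigma_{-i,-i}^{-1} \Sigma_{-i,i}$$

then the conditional distribution now looks like:

$$x_i \mid x_{-i} \sim \mathcal{N}\left(x_{-i}^T \beta^i,\; \sigma_i^2\right)$$

This indicates that the maximization of the conditional log-likelihood can be understood as a local regression for the random variable $x_i$, under the data model:

$$x_i = x_{-i}^T \beta^i + \nu_i, \qquad \nu_i \sim \mathcal{N}(0, \sigma_i^2) \tag{2}$$

To obtain the solution of the local regression problem (2), we consider a convex optimization based on the sorted $\ell_1$-norm $J_\lambda(\beta) = \sum_k \lambda_k |\beta|_{(k)}$ [21,25]. In particular, for each local problem index $i \in \{1, \dots, p\}$, we solve

$$\hat{\beta}^i \in \arg\min_{\beta \in \mathbb{R}^{p-1}} \; \frac{1}{2}\|X_i - X_{-i}\beta\|_2^2 + \hat{\sigma}_i \, J_\lambda(\beta) \tag{3}$$

Here, the matrix $X \in \mathbb{R}^{n \times p}$ consists of n i.i.d. p-dimensional samples in rows, $X_i$ is the ith column of X and $X_{-i} \in \mathbb{R}^{n \times (p-1)}$ is the sub-matrix of X without the ith column. Note that the sub-problem (3) requires an estimate of $\sigma_i$, namely $\hat{\sigma}_i$, whose computation depends on information from the other sub-problem indices. Because of this, the problems (3) for indices $i = 1, \dots, p$ are not independent. This distinguishes our method from the neighborhood selection algorithms [14,19], which are based on the $\ell_1$-regularization.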

2.2. Connection to Inverse Covariance Matrix Estimation

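With $\hat{\beta}^i$ obtained from (3) as an estimate of $\Sigma_{-i,-i}^{-1}\Sigma_{-i,i}$ for $i = 1, \dots, p$, now the question is how to use the information for the estimation of $\Theta$ without an explicit inversion of the matrix. For the purpose, we first consider the block-wise formulation of $\Sigma\Theta = I$:

$$\begin{bmatrix} \Sigma_{-i,-i} & \Sigma_{-i,i} \\ \Sigma_{i,-i} & \Sigma_{ii} \end{bmatrix} \begin{bmatrix} \Theta_{-i,-i} & \Theta_{-i,i} \\ \Theta_{i,-i} & \Theta_{ii} \end{bmatrix} = \begin{bmatrix} I_{p-1} & 0 \\ 0 & 1 \end{bmatrix}$$

putting the ith row and the ith column to the last positions whenever necessary. There we can see that $\Sigma_{-i,-i}\Theta_{-i,i} + \Sigma_{-i,i}\Theta_{ii} = 0$. Also, from the block-wise inversion of $\Sigma$, we have (unnecessary block matrices are replaced with *):

$$\Theta = \Sigma^{-1} = \begin{bmatrix} * & * \\ * & \left(\Sigma_{ii} - \Sigma_{-i,i}^T \Sigma_{-i,-i}^{-1} \Sigma_{-i,i}\right)^{-1} \end{bmatrix}$$

From these and the definition of $\beta^i := \Sigma_{-i,-i}^{-1}\Sigma_{-i,i}$, we can establish the following relations:

$$\Theta_{-i,i} = -\Theta_{ii}\,\beta^i, \qquad \Theta_{ii} = \left(\Sigma_{ii} - \Sigma_{-i,i}^T \beta^i\right)^{-1} \tag{4}$$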
Note that $\Theta_{ii} > 0$ for a positive definite matrix $\Theta$ and that (4) implies the sparsity pattern of $\Theta_{-i,i}$ and $\beta^i$ must be the same. Also, the updates (4) for $i = 1, \dots, p$ are not independent, since the computation of depends on . However, if we estimate based on the sample covariance matrix instead of , (i) our updates (4) no longer need to explicitly compute or store the matrix, unlike the gLASSO and (ii) our sub-problems become mutually independent and thus solvable in parallel.
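The link between the local regressions and the precision matrix can be checked numerically. The sketch below assumes the standard Gaussian identities $\Theta_{-i,i} = -\Theta_{ii}\beta^i$ and $\Theta_{ii} = 1/\sigma_i^2$, with $\beta^i = \Sigma_{-i,-i}^{-1}\Sigma_{-i,i}$ and $\sigma_i^2 = \Sigma_{ii} - \Sigma_{-i,i}^T\beta^i$, and verifies them exactly on a small covariance matrix using rational arithmetic. The matrix `SIGMA` and all function names are hypothetical and chosen only for illustration.

```python
from fractions import Fraction


def invert(matrix):
    """Invert a small square matrix exactly by Gauss-Jordan elimination."""
    n = len(matrix)
    # Augment [matrix | identity] using exact rational arithmetic.
    aug = [
        [Fraction(v) for v in row] + [Fraction(int(r == c)) for c in range(n)]
        for r, row in enumerate(matrix)
    ]
    for col in range(n):
        # Find a row with a nonzero pivot and move it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def local_regression(sigma, i):
    """Population regression of x_i on x_{-i}: returns (rest, beta, variance)."""
    rest = [j for j in range(len(sigma)) if j != i]
    inv_rest = invert([[sigma[r][c] for c in rest] for r in rest])
    cross = [Fraction(sigma[r][i]) for r in rest]
    beta = [sum(a * b for a, b in zip(row, cross)) for row in inv_rest]
    variance = Fraction(sigma[i][i]) - sum(c * b for c, b in zip(cross, beta))
    return rest, beta, variance


def check_relations(sigma):
    """Check Theta_ii = 1/sigma_i^2 and Theta_{-i,i} = -Theta_ii * beta^i for all i."""
    theta = invert(sigma)
    for i in range(len(sigma)):
        rest, beta, variance = local_regression(sigma, i)
        if theta[i][i] != 1 / variance:
            return False
        if any(theta[r][i] != -theta[i][i] * b for r, b in zip(rest, beta)):
            return False
    return True


# Hypothetical 3x3 positive definite covariance matrix (determinant 4).
SIGMA = [[2, 1, 0], [1, 2, 1], [0, 1, 2]]
relations_hold = check_relations(SIGMA)
```

Because zero entries of $\beta^i$ map to zero entries of $\Theta_{-i,i}$ under these identities, the check also illustrates why neighborhood selection recovers the sparsity pattern of the precision matrix.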

3. Algorithm

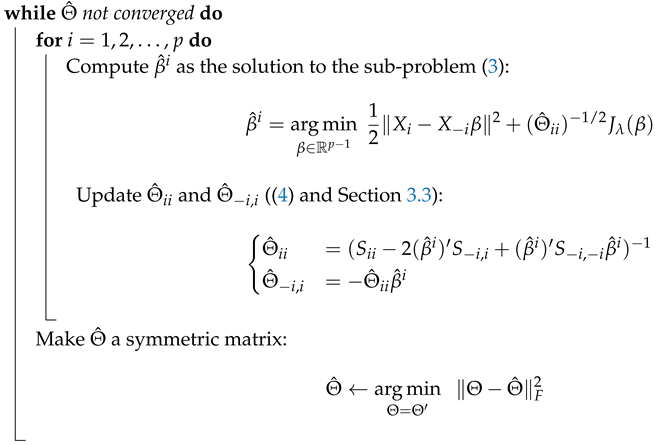

Our algorithm, called nsSLOPE, is summarized in Algorithm 1, which is essentially a block-coordinate descent algorithm [12,31]. Our algorithm may look similar to that of Yuan [19] but there are several important differences: (i) each sub-problem in our algorithm solves a SLOPE formulation (SL1 regularization), while Yuan’s sub-problem is either LASSO or Dantzig selector ($\ell_1$ regularization); (ii) our sub-problem makes use of the estimate in addition to .

| Algorithm 1: The nsSLOPE Algorithm |

| Input: with zero-centered columns Input: Input: The target level of FDR q Set according to Section 3.2;  |

3.1. Sub-Problem Solver

To solve our sub-problems (3), we use the SLOPE R package, which implements the proximal gradient descent algorithm of Reference [32] with acceleration based on Nesterov’s original idea [33]. The algorithm requires computing the proximal operator involving the sorted $\ell_1$-norm $J_\lambda$, namely

$$\mathrm{prox}_{J_\lambda}(z) := \arg\min_{x \in \mathbb{R}^{p-1}} \; \frac{1}{2}\|x - z\|_2^2 + J_\lambda(x)$$

This can be computed in $O(p \log p)$ time using an algorithm from Reference [21]. The optimality of each sub-problem is declared by the primal-dual gap and we use a tight threshold value, .
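For intuition, the proximal operator of the sorted $\ell_1$-norm can be sketched in a few lines of Python. This is our own simplified rendering of the sort, subtract, pool and clip idea described in Reference [21], not the code of the SLOPE R package; the function name `prox_sorted_l1` is hypothetical, and the dominant cost is the initial sort.

```python
import math


def prox_sorted_l1(y, lam):
    """Minimize 0.5 * ||x - y||^2 + sum_k lam[k] * |x|_(k) over x.

    `lam` is assumed to be non-negative and non-increasing.
    """
    n = len(y)
    if len(lam) != n:
        raise ValueError("y and lam must have the same length")
    # Order the entries of y by decreasing magnitude.
    order = sorted(range(n), key=lambda i: abs(y[i]), reverse=True)
    shifted = [abs(y[order[k]]) - lam[k] for k in range(n)]
    # Pool adjacent violators so the result is non-increasing.
    blocks = []  # each block stores [sum of values, number of values]
    for value in shifted:
        blocks.append([value, 1])
        while (len(blocks) > 1 and
               blocks[-2][0] / blocks[-2][1] <= blocks[-1][0] / blocks[-1][1]):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    # Replace each block by its average, clipped at zero.
    x_sorted = []
    for total, count in blocks:
        x_sorted.extend([max(total / count, 0.0)] * count)
    # Undo the sorting and restore the original signs.
    x = [0.0] * n
    for k, i in enumerate(order):
        x[i] = math.copysign(x_sorted[k], y[i])
    return x


# With a constant lam the operator reduces to soft thresholding.
soft_thresholded = prox_sorted_l1([3.0, -0.5, 1.0], [1.0, 1.0, 1.0])
```

The pooling step is what produces tied coefficient magnitudes, which relates to the clustering behavior of SL1 regularization mentioned in the introduction.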

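3.2. Choice of $\lambda$

For the sequence of $\lambda$ values in sub-problems, we use the so-called Benjamini-Hochberg (BH) sequence [21]:

$$\lambda_i^{\mathrm{BH}} = \Phi^{-1}\left(1 - \frac{iq}{2d}\right), \qquad i = 1, \dots, d \tag{5}$$

Here $d = p - 1$ is the number of coefficients in each sub-problem, $q \in [0,1]$ is the target level of FDR control we discuss later and $\Phi^{-1}(\alpha)$ is the $\alpha$th quantile of the standard normal distribution. In fact, when the design matrix in the SLOPE sub-problem (3) is not orthogonal, it is beneficial to use an adjusted version of this sequence. This sequence is generated by:

$$\lambda_1 = \lambda_1^{\mathrm{BH}}, \qquad \lambda_i = \lambda_i^{\mathrm{BH}} \sqrt{1 + w(i-1) \sum_{j<i} \lambda_j^2}, \qquad w(k) = \frac{1}{n - k - 1}$$

and if the sequence is non-increasing but begins to increase after the index $k^*$, we set all the remaining values equal to $\lambda_{k^*}$, so that the resulting sequence will be non-increasing. For more discussion about the adjustment, we refer to Section 3.2.2 of Reference [21].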
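The construction of the $\lambda$ sequence can be sketched as follows. The code assumes the BH sequence has the form $\lambda_i = \Phi^{-1}(1 - iq/2d)$ and that the adjustment follows the recursion of Section 3.2.2 of Reference [21], with $w(k) = 1/(n-k-1)$ and truncation at the point where the adjusted values would start to increase. The function names are hypothetical, and only the Python standard library is used.

```python
import math
from statistics import NormalDist


def bh_sequence(d, q):
    """BH sequence: lam_i = Phi^{-1}(1 - i*q/(2d)) for i = 1..d (assumed form)."""
    if d < 1 or not 0.0 < q < 1.0:
        raise ValueError("need d >= 1 and 0 < q < 1")
    quantile = NormalDist().inv_cdf
    return [quantile(1.0 - i * q / (2.0 * d)) for i in range(1, d + 1)]


def adjusted_sequence(lam_bh, n):
    """Adjust the BH sequence for a non-orthogonal design with n samples."""
    lam = [lam_bh[0]]
    sum_sq = lam_bh[0] ** 2          # running sum of squared adjusted values
    for i in range(2, len(lam_bh) + 1):
        dof = n - (i - 1) - 1        # denominator of w(i - 1)
        if dof <= 0:
            break
        candidate = lam_bh[i - 1] * math.sqrt(1.0 + sum_sq / dof)
        if candidate > lam[-1]:
            break                    # the sequence would start to increase
        lam.append(candidate)
        sum_sq += candidate ** 2
    # Keep the remaining values flat so the sequence stays non-increasing.
    lam.extend([lam[-1]] * (len(lam_bh) - len(lam)))
    return lam


bh = bh_sequence(10, 0.1)
adjusted = adjusted_sequence(bh, n=50)
```

The adjusted values are never smaller than the corresponding BH values, so the adjustment can only make the penalty more conservative.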

3.3. Estimation of

To solve the ith sub-problem in our algorithm, we need to estimate the value of . This can be done using (4), that is, . However, this implies that (i) we need to keep an estimate of additionally, (ii) the computation of the ith sub-problem will be dependent on all the other indices, as it needs to access , requiring the algorithm to run sequentially.

To avoid these overheads, we compute the estimate using the sample covariance matrix instead (we assume that the columns of are centered):

This allows us to compute the inner loop of Algorithm 1 in parallel.

3.4. Stopping Criterion of the External Loop

To terminate the outer loop in Algorithm 1, we check if the diagonal entries of $\hat{\Theta}$ have converged, that is, the algorithm is stopped when the -norm difference between two consecutive iterates is below a threshold value, . The value is slightly loose but we have found no practical difference by making it tighter. Note that it suffices to check the optimality of the diagonal entries of $\hat{\Theta}$ since the optimality of ’s is enforced by the sub-problem solver and .

3.5. Uniqueness of Sub-Problem Solutions

When $p > n$, our sub-problems may have multiple solutions, which may prevent the global convergence of our algorithm. We may adopt the technique in Reference [34] to inject a strongly convex proximity term into each sub-problem objective, so that each sub-problem will have a unique solution. In our experience, however, we encountered no convergence issues using stopping threshold values in the range of for the outer loop.

4. Analysis

In this section, we provide two theoretical results for our nsSLOPE procedure: (i) an estimation error bound on the distance between our estimate $\hat{\Theta}$ and the true model parameter $\Theta$ and (ii) group-wise FDR control on discovering the true edges in the Gaussian MRF corresponding to $\Theta$.

We first discuss the estimation error bound, for which we divide our analysis into two parts regarding (i) off-diagonal entries and (ii) diagonal entries of $\Theta$.

4.1. Estimation Error Analysis

4.1.1. Off-Diagonal Entries

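From (4), we see that $\hat{\Theta}_{-i,i} = -\hat{\Theta}_{ii}\,\hat{\beta}^i$; in other words, when $\hat{\Theta}_{ii}$ is fixed, the off-diagonal entries $\hat{\Theta}_{-i,i}$ are determined solely by $\hat{\beta}^i$, and therefore we can focus on the estimation error of $\hat{\beta}^i$.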

To discuss the estimation error of , it is convenient to consider a constrained reformulation of the sub-problem (3):

Hereafter, for the sake of simplicity, we use notations and for , also dropping sub-problem indices in and . In this view, the data model being considered in each sub-problem is as follows,

For the analysis, we make the following assumptions:

- The true signal satisfies for some (this condition is satisfied for example, if and is s-sparse, that is, it has at most s nonzero elements).

- The noise satisfies the condition This will allow us to say that the true signal is feasible with respect to the constraint in (6).

We provide the following result, which shows that $\hat{\beta}$ approaches the true $\beta$ with high probability:

Theorem 1.

Suppose that is an estimate of obtained by solving the sub-problem (6). Consider the factorization where () is a Gaussian random matrix whose entries are sampled i.i.d. from and is a deterministic matrix such that . Such decomposition is possible since the rows of A are independent samples from . Then we have,

with probability at least , where and .

We need to discuss a few results before proving Theorem 1.

Theorem 2.

Let T be a bounded subset of . For an , consider the set

Then

holds with probability at least , where is a standard Gaussian random vector and .

Proof.

The result follows from an extended general inequality in expectation [35]. □

The next result shows that the first term of the upper bound in Theorem 2 can be bounded without using expectation.

Lemma 1.

The quantity is called the width of and is bounded as follows,

Proof.

This result is a part of the proof for Theorem 3.1 in Reference [35]. □

Using Theorem 2 and Lemma 1, we can derive a high probability error bound on the estimation from noisy observations,

where the true signal belongs to a bounded subset . The following corollaries are straightforward extensions of Theorems 3.3 and 3.4 of Reference [35], given our Theorem 2 (so we skip the proofs).

Corollary 1.

Choose to be any vector satisfying that

Then,

with probability at least .

Now we show the error bound for the estimates we obtain by solving the optimization problem (6). For the purpose, we make use of the Minkowski functional of the set ,

If is a compact and origin-symmetric convex set with non-empty interior, then defines a norm in . Note that if and only if .

Corollary 2.

Then

with probability at least .

Finally, we show that solving the constrained form of the sub-problems (6) also satisfies essentially the same error bound in Corollary 2.

Proof of Theorem 1

Since we assumed that , we construct the subset so that all vectors with will be contained in . That is,

This is a sphere defined in the SL1-norm : in this case, the Minkowski functional is proportional to and thus the same solution minimizes both and .

Recall that and we choose . Since , for we have . This implies that . □

4.1.2. Diagonal Entries

We estimate based on the residual sum of squares (RSS) as suggested by [19],

Unlike in Reference [19], we directly analyze the estimation error of based on a chi-square tail bound.

Theorem 3.

For all small enough so that , we have

where for ,

Proof.

Using the same notation as the previous section, that is, and , consider the estimate in discussion,

where the last equality is from . Therefore,

where . The last term is the sum of squares of independent and therefore it follows the chi-square distribution, that is, . Applying the tail bound [36] for a chi-square random variable Z with d degrees of freedom:

we get for all small enough ,

□

4.1.3. Discussion on Asymptotic Behaviors

Our two main results above, Theorems 1 and 3, indicate how well our estimate of the off-diagonal entries and diagonal entries would behave. Based on these results, we can discuss the estimation error of the full matrix compared to the true precision matrix .

From Theorem 1, we can deduce that with and ,

where is the smallest eigenvalue of the symmetric positive definite . That is, using the interlacing property of eigenvalues, we have

where is the spectral radius of . Therefore when , the distance between and is bounded by . Here, we can consider in a way such as as n increases, so that the bound in Theorem 1 will hold with the probability approaching one. That is, in rough asymptotics,

Under the conditions above, Theorem 3 indicates that:

using our assumption that . We can further introduce assumptions on and as in Reference [19], so that we can quantify the upper bound but here we will simply say that , where c is a constant depending on the properties of the full matrices S and . If this is the case, then from Theorem 3 for an such that , we see that

with the probability approaching one.

4.2. Neighborhood FDR Control under Group Assumptions

Here we consider obtained by solving the unconstrained form (3) with the same data model we discussed above for the sub-problems:

with and with . Here we focus on a particular but an interesting case where the columns of A form orthogonal groups, that is, under the decomposition , forms a block-diagonal matrix. We also assume that the columns of A belonging to the same group are highly correlated, in the sense that for any columns and of A corresponding to the same group, their correlation is high enough to satisfy that

This implies that by Theorem 2.1 of Reference [25], which simplifies our analysis. Note that if and belong to different blocks, then our assumption above implies . Finally, we further assume that for .

Consider a collection of non-overlapping index subsets as the set of groups G. Under the block-diagonal covariance matrix assumption above, we see that

where denotes the representative of the same coefficients for all , and . This tells us that we can replace by , if we define containing in its columns (so that and consider the vector of group-representative coefficients .

The regularizer can be rewritten similarly,

where , denoting by the group which has the ith largest coefficient in in magnitude and by the size of the group.

Using the fact that , we can recast the regression model (9) as , and consider a much simpler form of the problem (3),

where we can easily check that satisfies . This is exactly the form of the SLOPE problem with an orthogonal design matrix in Reference [21], except that the new sequence is not exactly the Benjamini-Hochberg sequence (5).

We can consider the problem (3) (and respectively (10)) in the context of multiple hypothesis testing of d (resp. ) null hypotheses (resp. ) for (resp. ), where we reject (resp. ) if and only if (resp. ). In this setting, Lemmas B.1 and B.2 in Reference [21] still hold for our problem (10), since they do not depend on the particular choice of the sequence.

In the following, V is the number of individual false rejections, R is the number of total individual rejections and is the number of total group rejections. The following lemmas are slightly modified versions of Lemmas B.1 and B.2 from Reference [21], respectively, to fit our group-wise setup.

Lemma 2.

Let be a null hypothesis and let . Then

Lemma 3.

Consider applying the procedure (10) for new data with and let be the number of group rejections made. Then with ,

Using these, we can show our FDR control result.

Theorem 4.

Consider the procedure (10) we consider in a sub-problem of nsSLOPE as a multiple testing of group-wise hypotheses, where we reject the null group hypothesis when , rejecting all individual hypotheses in the group, that is, all , , are rejected. Using defined as in (5), the procedure controls FDR at the level :

where

Proof.

Suppose that is rejected. Then,

The derivations above are—(i) by using Lemmas 2 and 3; (ii) from the independence between and ; (iii) by taking the smallest term in the summation of multiplied by the number of terms; (iv) due to the assumption that for all i, so that by triangle inequality.

Now, consider the group-wise hypotheses testing in (10) is configured in a way that the first hypotheses are null in truth, that is, for . Then we have:

□

Since the above theorem applies to each sub-problem, in which the ith random variable finds the neighbors it is connected to in the Gaussian Markov random field defined by , we call this result neighborhood FDR control.

5. Numerical Results

We show that the theoretical properties discussed above also hold in simulated settings.

5.1. Quality of Estimation

For all numerical examples here, we generate random i.i.d. samples from $\mathcal{N}(0, \Sigma)$, where $p = 500$ is fixed. We plant a simple block diagonal structure into the true matrix $\Sigma$, which is also preserved in the precision matrix $\Theta = \Sigma^{-1}$. All the blocks have the same size of 4, so that we have 125 blocks in total on the diagonal of $\Sigma$. We set and set the entries , , to a high enough value whenever belongs to those blocks, in order to represent groups of highly correlated variables. All experiments were repeated 25 times.

The sequence for nsSLOPE has been chosen according to Section 3.2 with respect to a target FDR level . For the gLASSO, we have used the value discussed in Reference [3], to control the family-wise error rate (FWER, the chance of any false rejection of null hypotheses) to .

5.1.1. Mean Square Estimation Error

Recall our discussion of error bounds in Theorem 1 (off-diagonal scaled by ) and Theorem 3 (diagonal), followed by Section 4.1.3 where we roughly sketched the asymptotic behaviors of and .

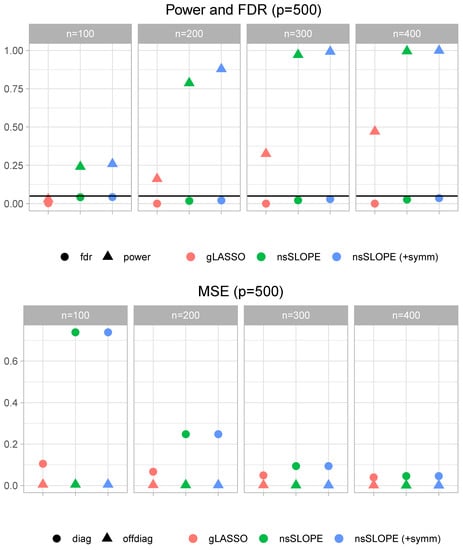

The top panel of Figure 1 shows the mean square error (MSE) between estimated quantities and the true models that we have created, where estimates are obtained by our method nsSLOPE, without or with symmetrization at the end of Algorithm 1, as well as by the gLASSO [4] which solves the $\ell_1$-based MLE problem (1) with a block coordinate descent strategy. The off-diagonal estimation was consistently good over all settings, while the estimation error of the diagonal entries kept improving as n increased, as we predicted in Section 4.1.3. We believe that our estimation of the diagonal has room for improvement, for example, using more accurate reverse-scaling to compensate for the normalization within the SLOPE procedure.

Figure 1.

Quality of estimation. Top: empirical false discovery rate (FDR) levels (averaged over 25 repetitions) and the nominal level of (solid black horizontal line). Bottom: mean square error of diagonal and off-diagonal entries of the precision matrix. was fixed for both panels and , 200, 300 and 400 were tried. (“nsSLOPE”: nsSLOPE without symmetrization, “+symm”: with symmetrization and gLASSO.)

5.1.2. FDR Control

A more exciting part of our results is the FDR control discussed in Theorem 4 and here we check how well the sparsity structure of the precision matrix is recovered. For comparison, we measure the power (the fraction of the true nonzero entries discovered by the algorithm) and the FDR (for the whole precision matrix).

The bottom panel of Figure 1 shows the statistics. In all cases, the empirical FDR was controlled around the desired level by all methods, although our method kept the level quite strictly, having significantly larger power than the gLASSO. This is understandable since the FWER control of the gLASSO is often too restrictive, thereby limiting the power to detect true positive entries. It is also consistent with the results reported for SL1-based penalized regression [21,23], which indeed is one of the key benefits of SL1-based methods.
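The two statistics compared here can be computed from edge sets as in the following sketch. It treats the false discovery proportion of a single run as the fraction of discovered edges that are not true edges, the power as the fraction of true edges that are discovered, and the empirical FDR as the average proportion over repetitions. The function names and the edge representation as index pairs are assumptions made for illustration and do not reproduce the scripts used in our experiments.

```python
def normalize(edges):
    """Treat (i, j) and (j, i) as the same undirected edge."""
    return {tuple(sorted(edge)) for edge in edges}


def fdp_and_power(true_edges, found_edges):
    """Return (false discovery proportion, power) for one estimated graph."""
    true_set = normalize(true_edges)
    found_set = normalize(found_edges)
    false_found = len(found_set - true_set)
    # By convention the proportion is zero when nothing is discovered.
    fdp = false_found / max(len(found_set), 1)
    power = len(found_set & true_set) / len(true_set) if true_set else 0.0
    return fdp, power


def empirical_fdr(true_edges, runs):
    """Average the false discovery proportion over repeated runs."""
    proportions = [fdp_and_power(true_edges, found)[0] for found in runs]
    return sum(proportions) / len(proportions)


# Hypothetical example: three true edges, three discoveries, one of them false.
fdp, power = fdp_and_power([(0, 1), (1, 2), (2, 3)], [(1, 0), (2, 3), (0, 3)])
```

Averaging the proportion over repetitions, rather than pooling discoveries across runs, matches the definition of FDR as an expected ratio.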

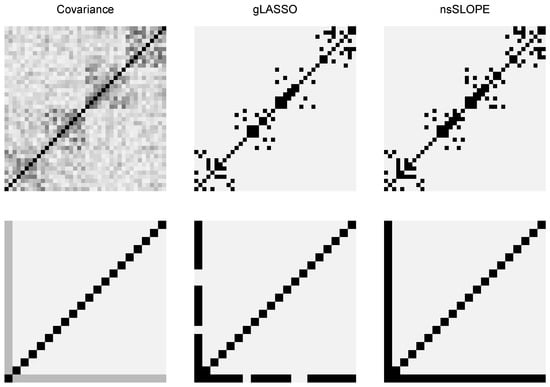

5.2. Structure Discovery

To further investigate the structure discovery by nsSLOPE, we have experimented with two different types of covariance matrices—one with a block-diagonal structure and another with a hub structure. The covariance matrix of the block diagonal case has been constructed using the data.simulation function from the varclust R package, with , , , , (this gives us a data matrix with 100 examples and 40 variables). In the hub structure case, we have created a 20-dimensional covariance matrix with ones on the diagonal and in the first column and the last row of the matrix. Then we have used the mvrnorm function from the MASS R package to sample 500 data points from a multivariate Gaussian distribution with zero mean and the constructed covariance matrix.

The true covariance matrix and the two estimates from gLASSO and nsSLOPE are shown in Figure 2. In nsSLOPE, the FDR control has been used based on the Benjamini-Hochberg sequence (5) with a target FDR value and in gLASSO the FWER control [3] has been used with a target value . Our method nsSLOPE appears to be more sensitive in finding the true structure, although it may produce slightly more false discoveries.

Figure 2.

Examples of structure discovery. Top: a covariance matrix with block diagonal structure. Bottom: a hub structure. True covariance matrix is shown on the left and gLASSO and nsSLOPE estimates (only the nonzero patterns) of the precision matrix are shown in the middle and in the right panels, respectively.

6. Conclusions

We introduced a new procedure based on the recently proposed sorted $\ell_1$ regularization, to find solutions of sparse precision matrix estimation with more attractive statistical properties than the existing $\ell_1$-based frameworks. We believe there are many aspects of SL1 in graphical models to be investigated, especially when the inverse covariance has a more complex structure. Still, we hope our results will provide a basis for research and practical applications.

Our selection of the $\lambda$ values in this paper requires independence assumptions on features or blocks of features. Although some extensions are possible [21], it would be desirable to consider a general framework, for example, based on Bayesian inference considering the posterior distribution derived from the loss and the regularizer [37,38], which enables us to evaluate the uncertainty of edge discovery and to find $\lambda$ values from data.

Author Contributions

Conceptualization, S.L. and P.S.; methodology, S.L. and P.S.; software, S.L. and P.S.; validation, S.L., P.S. and M.B.; formal analysis, S.L.; writing–original draft preparation, S.L.; writing–review and editing, S.L.

Funding

This work was supported by the research fund of Hanyang University (HY-2018-N).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yuan, M.; Lin, Y. Model selection and estimation in the Gaussian graphical model. Biometrika 2007, 94, 19–35. [Google Scholar] [CrossRef]

- D’Aspremont, A.; Banerjee, O.; El Ghaoui, L. First-Order Methods for Sparse Covariance Selection. SIAM J. Matrix Anal. Appl. 2008, 30, 56–66. [Google Scholar] [CrossRef]

- Banerjee, O.; Ghaoui, L.E.; d’Aspremont, A. Model Selection Through Sparse Maximum Likelihood Estimation for Multivariate Gaussian or Binary Data. J. Mach. Learn. Res. 2008, 9, 485–516. [Google Scholar]

- Friedman, J.; Hastie, T.; Tibshirani, R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics 2008, 9, 432–441. [Google Scholar] [CrossRef] [PubMed]

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. (Ser. B) 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Oztoprak, F.; Nocedal, J.; Rennie, S.; Olsen, P.A. Newton-Like Methods for Sparse Inverse Covariance Estimation. In Advances in Neural Information Processing Systems 25; MIT Press: Cambridge, MA, USA, 2012; pp. 764–772. [Google Scholar]

- Rolfs, B.; Rajaratnam, B.; Guillot, D.; Wong, I.; Maleki, A. Iterative Thresholding Algorithm for Sparse Inverse Covariance Estimation. In Advances in Neural Information Processing Systems 25; MIT Press: Cambridge, MA, USA, 2012; pp. 1574–1582. [Google Scholar]

- Hsieh, C.J.; Dhillon, I.S.; Ravikumar, P.K.; Sustik, M.A. Sparse Inverse Covariance Matrix Estimation Using Quadratic Approximation. In Advances in Neural Information Processing Systems 24; MIT Press: Cambridge, MA, USA, 2011; pp. 2330–2338. [Google Scholar]

- Hsieh, C.J.; Banerjee, A.; Dhillon, I.S.; Ravikumar, P.K. A Divide-and-Conquer Method for Sparse Inverse Covariance Estimation. In Advances in Neural Information Processing Systems 25; MIT Press: Cambridge, MA, USA, 2012; pp. 2330–2338. [Google Scholar]

- Hsieh, C.J.; Sustik, M.A.; Dhillon, I.; Ravikumar, P.; Poldrack, R. BIG & QUIC: Sparse Inverse Covariance Estimation for a Million Variables. In Advances in Neural Information Processing Systems 26; MIT Press: Cambridge, MA, USA, 2013; pp. 3165–3173. [Google Scholar]

- Mazumder, R.; Hastie, T. Exact Covariance Thresholding into Connected Components for Large-scale Graphical Lasso. J. Mach. Learn. Res. 2012, 13, 781–794. [Google Scholar]

- Treister, E.; Turek, J.S. A Block-Coordinate Descent Approach for Large-scale Sparse Inverse Covariance Estimation. In Advances in Neural Information Processing Systems 27; MIT Press: Cambridge, MA, USA, 2014; pp. 927–935. [Google Scholar]

- Zhang, R.; Fattahi, S.; Sojoudi, S. Large-Scale Sparse Inverse Covariance Estimation via Thresholding and Max-Det Matrix Completion; International Conference on Machine Learning, PMLR: Stockholm, Sweden, 2018. [Google Scholar]

- Meinshausen, N.; Bühlmann, P. High-dimensional graphs and variable selection with the Lasso. Ann. Stat. 2006, 34, 1436–1462. [Google Scholar] [CrossRef]

- Meinshausen, N.; Bühlmann, P. Stability selection. J. R. Stat. Soc. (Ser. B) 2010, 72, 417–473. [Google Scholar] [CrossRef]

- Rothman, A.J.; Bickel, P.J.; Levina, E.; Zhu, J. Sparse permutation invariant covariance estimation. Electron. J. Stat. 2008, 2, 494–515. [Google Scholar] [CrossRef]

- Lam, C.; Fan, J. Sparsistency and rates of convergence in large covariance matrix estimation. Ann. Stat. 2009, 37, 4254–4278. [Google Scholar] [CrossRef]

- Raskutti, G.; Yu, B.; Wainwright, M.J.; Ravikumar, P.K. Model Selection in Gaussian Graphical Models: High-Dimensional Consistency of ℓ1-regularized MLE. In Advances in Neural Information Processing Systems 21; MIT Press: Cambridge, MA, USA, 2009; pp. 1329–1336.

- Yuan, M. High Dimensional Inverse Covariance Matrix Estimation via Linear Programming. J. Mach. Learn. Res. 2010, 11, 2261–2286.

- Fattahi, S.; Zhang, R.Y.; Sojoudi, S. Sparse Inverse Covariance Estimation for Chordal Structures. In Proceedings of the 2018 European Control Conference (ECC), Limassol, Cyprus, 12–15 June 2018; pp. 837–844.

- Bogdan, M.; van den Berg, E.; Sabatti, C.; Su, W.; Candes, E.J. SLOPE—Adaptive Variable Selection via Convex Optimization. Ann. Appl. Stat. 2015, 9, 1103–1140.

- Brzyski, D.; Su, W.; Bogdan, M. Group SLOPE—Adaptive selection of groups of predictors. arXiv 2015, arXiv:1511.09078.

- Su, W.; Candès, E. SLOPE is adaptive to unknown sparsity and asymptotically minimax. Ann. Stat. 2016, 44, 1038–1068.

- Bondell, H.D.; Reich, B.J. Simultaneous Regression Shrinkage, Variable Selection, and Supervised Clustering of Predictors with OSCAR. Biometrics 2008, 64, 115–123.

- Figueiredo, M.A.T.; Nowak, R.D. Ordered Weighted L1 Regularized Regression with Strongly Correlated Covariates: Theoretical Aspects. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics (AISTATS), Cadiz, Spain, 9–11 May 2016; pp. 930–938.

- Lee, S.; Brzyski, D.; Bogdan, M. Fast Saddle-Point Algorithm for Generalized Dantzig Selector and FDR Control with the Ordered l1-Norm. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics (AISTATS), Cadiz, Spain, 9–11 May 2016; Volume 51, pp. 780–789.

- Chen, S.; Banerjee, A. Structured Matrix Recovery via the Generalized Dantzig Selector. In Advances in Neural Information Processing Systems 29; Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; pp. 3252–3260.

- Bellec, P.C.; Lecué, G.; Tsybakov, A.B. Slope meets Lasso: Improved oracle bounds and optimality. arXiv 2017, arXiv:1605.08651v3.

- Derumigny, A. Improved bounds for Square-Root Lasso and Square-Root Slope. Electron. J. Stat. 2018, 12, 741–766.

- Anderson, T.W. An Introduction to Multivariate Statistical Analysis; Wiley-Interscience: London, UK, 2003.

- Beck, A.; Tetruashvili, L. On the Convergence of Block Coordinate Descent Type Methods. SIAM J. Optim. 2013, 23, 2037–2060.

- Beck, A.; Teboulle, M. A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Nesterov, Y. A Method of Solving a Convex Programming Problem with Convergence Rate O(1/k^2). Soviet Math. Dokl. 1983, 27, 372–376.

- Razaviyayn, M.; Hong, M.; Luo, Z.Q. A Unified Convergence Analysis of Block Successive Minimization Methods for Nonsmooth Optimization. SIAM J. Optim. 2013, 23, 1126–1153.

- Figueiredo, M.; Nowak, R. Sparse estimation with strongly correlated variables using ordered weighted ℓ1 regularization. arXiv 2014, arXiv:1409.4005.

- Johnstone, I.M. Chi-square oracle inequalities. Lect. Notes-Monogr. Ser. 2001, 36, 399–418.

- Park, T.; Casella, G. The Bayesian Lasso. J. Am. Stat. Assoc. 2008, 103, 681–686.

- Mallick, H.; Yi, N. A New Bayesian Lasso. Stat. Its Interface 2014, 7, 571–582.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).