Abstract

The nuclear norm minimization (NNM) problem is to recover a matrix that minimizes the sum of its singular values while satisfying some linear constraints. The alternating direction method (ADM) has recently been used to solve this problem. However, the subproblems in ADM are usually not easily solvable when the linear mappings in the constraints are not identities. In this paper, we propose a proximity algorithm with adaptive penalty (PA-AP). First, we formulate the nuclear norm minimization problems as a unified model. To solve this model, we improve the ADM by adding a proximal term to the subproblems that are difficult to solve. An adaptive tactic on the proximity parameters is also put forward for acceleration. By employing subdifferentials and proximity operators, we construct an equivalent fixed-point equation system and use it to prove the convergence of the proposed algorithm under certain conditions, e.g., that the preconditioning matrix is symmetric positive definite. Finally, experimental results and comparisons with state-of-the-art methods, e.g., ADM, IADM-CG and IADM-BB, show that the proposed algorithm is effective.

1. Introduction

The rank minimization (RM) problem aims to recover an unknown low-rank matrix from very limited information. It has rapidly gained an increasing amount of attention in recent years, since it has a range of applications in computer vision and machine learning, such as collaborative filtering [1], subspace segmentation [2], non-rigid structure from motion [3] and image inpainting [4]. This paper deals with the following rank minimization problem:

$$\min_{X\in\mathbb{R}^{m\times n}} \operatorname{rank}(X) \quad \text{s.t.} \quad \mathcal{A}(X)=\mathbf{b}, \tag{1}$$

where $\mathcal{A}:\mathbb{R}^{m\times n}\to\mathbb{R}^p$ is a linear map and the vector $\mathbf{b}\in\mathbb{R}^p$ is known. The matrix completion (MC) problem is a special case of the RM problem, where $\mathcal{A}$ is a sampling operator of the form $\mathcal{A}(X)=\mathcal{P}_\Omega(X)$, $\Omega\subset\{1,\dots,m\}\times\{1,\dots,n\}$ is an index subset, and $\mathcal{P}_\Omega(X)$ is the vector formed by the entries of $X$ with indices in $\Omega$.

Although problem (1) is simple in form, solving it directly is NP-hard due to the discrete nature of the rank function. One popular approach is to replace the rank function with the nuclear norm, which is the sum of the singular values of a matrix. This technique is based on the fact that the nuclear norm is the tightest convex relaxation of the rank function [5]. The resulting problem is

$$\min_{X\in\mathbb{R}^{m\times n}} \|X\|_* \quad \text{s.t.} \quad \mathcal{A}(X)=\mathbf{b}, \tag{2}$$

where $\|\cdot\|_*$ denotes the nuclear norm. It has been shown that a low-rank matrix can be recovered by solving problem (2) under suitable conditions [1,6].

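In practical applications, the observed data may be corrupted by noise, namely $\mathbf{b}=\mathcal{A}(X)+\mathbf{e}$, where $\mathbf{e}$ contains measurement errors, typically assumed to follow a normal distribution. In order to recover the low-rank matrix robustly, problem (2) should be modified to the following inequality-constrained problem:

$$\min_{X\in\mathbb{R}^{m\times n}} \|X\|_* \quad \text{s.t.} \quad \|\mathcal{A}(X)-\mathbf{b}\|_2\le\delta, \tag{3}$$

where $\|\cdot\|_2$ is the $\ell_2$ norm of a vector and the constant $\delta\ge 0$ is the noise level. When $\delta=0$, problem (3) reduces to the noiseless case (2).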

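Alternatively, problems (2) and (3) can be rewritten as the nuclear norm regularized least-squares (NNRLS) problem under some conditions:

$$\min_{X\in\mathbb{R}^{m\times n}} \|X\|_* + \frac{1}{2\mu}\|\mathcal{A}(X)-\mathbf{b}\|_2^2, \tag{4}$$

where $\mu>0$ is a given parameter.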

Studies on the nuclear norm minimization problem mainly follow two directions. The first is to enhance the precision of the low-rank approximation by replacing the nuclear norm with a non-convex regularizer, for instance, the Schatten p-norm [7,8], the truncated nuclear norm [4,9], and log or fraction function based norms [10,11]. The second is to improve the efficiency of solving problems (2), (3) and (4) and their variants. For instance, the authors of [12] treated problem (2) as a standard linear semidefinite programming (SDP) problem and solved it via its dual problem. However, since SDP solvers use second-order information, the memory required to compute the search direction quickly becomes prohibitive as the size of the matrix grows. Therefore, algorithms that use only first-order information have been developed, such as the singular value thresholding (SVT) algorithm [13], the fixed point continuation algorithm (FPCA) [14], the accelerated proximal gradient Lagrangian (APGL) method [15], the proximal point algorithm based on indicator function (PPA-IF) [16], the augmented Lagrange multiplier (ALM) algorithm [17] and the alternating direction methods (ADM) [18,19,20,21].

In particular, Chen et al. [18] applied the ADM to the nuclear norm based matrix completion problem. Owing to the simplicity of the linear mapping $\mathcal{A}$, i.e., $\mathcal{A}\mathcal{A}^*=\mathcal{I}$, all of the ADM subproblems of the matrix completion problem can be solved exactly by explicit formulas; see [18] for details. Here and hereafter, $\mathcal{A}^*$ and $\mathcal{I}$ denote the adjoint of $\mathcal{A}$ and the identity operator, respectively. However, for a generic $\mathcal{A}$ with $\mathcal{A}\mathcal{A}^*\neq\mathcal{I}$, some of the resulting subproblems no longer have closed-form solutions. Thus, the efficiency of the ADM depends heavily on how these harder subproblems are solved.

To overcome this difficulty, a common strategy is to introduce new auxiliary variables; e.g., in [19], one auxiliary variable was introduced for solving problem (2), while two auxiliary variables were introduced for problem (3). However, with more variables and more constraints, more memory is required and the convergence of ADM also becomes slower. Moreover, updating the auxiliary variables, whose subproblems are least-squares problems, often requires expensive matrix inversions. Even worse, the convergence of ADM with more than two blocks of variables is not guaranteed in general. To mitigate these problems, Yang and Yuan [21] presented a linearized ADM to solve the NNRLS problem (4) as well as problems (2) and (3), in which each subproblem admits an explicit solution. Instead of linearization, Xiao and Jin [19] solved one least-squares subproblem iteratively by the Barzilai–Borwein (BB) gradient method [22]. Unlike [19], Jin et al. [20] used the linear conjugate gradient (CG) algorithm rather than BB to solve the subproblem.

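In this paper, we further investigate the efficiency of ADM in solving nuclear norm minimization problems. We first reformulate problems (2), (3) and (4) as the following unified form:

$$\min_{X\in\mathbb{R}^{m\times n}} f(X)+g(\mathcal{A}(X)), \tag{5}$$

where $f:\mathbb{R}^{m\times n}\to\mathbb{R}\cup\{+\infty\}$ and $g:\mathbb{R}^p\to\mathbb{R}\cup\{+\infty\}$ are convex functions. In this paper, we always fix $f(X)=\|X\|_*$. When considering problems (2) and (3), we choose $g=\iota_{B_\delta}$, where $\iota_{B_\delta}$ denotes the indicator function over $B_\delta:=\{\mathbf{z}\in\mathbb{R}^p:\|\mathbf{z}-\mathbf{b}\|_2\le\delta\}$, i.e.,

$$\iota_{B_\delta}(\mathbf{z})=\begin{cases}0, & \mathbf{z}\in B_\delta,\\ +\infty, & \text{otherwise}.\end{cases} \tag{6}$$

When considering problem (4), we choose $g(\mathbf{z})=\frac{1}{2\mu}\|\mathbf{z}-\mathbf{b}\|_2^2$. As a result, for a general linear mapping $\mathcal{A}$, we only need to solve a problem whose objective function is the sum of two convex functions, one of which is composed with a linear transformation.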
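To make the unified model concrete, here is a minimal NumPy sketch that evaluates the objective of (5) with $f=\|\cdot\|_*$ and the two choices of $g$ described above. Representing $\mathcal{A}$ as an explicit $p\times mn$ matrix acting on the row-major vectorization of $X$ is an assumption made only for illustration, and the helper names are hypothetical.

```python
import numpy as np

def nuclear_norm(X):
    """f(X) = ||X||_*: the sum of the singular values of X."""
    return float(np.linalg.svd(X, compute_uv=False).sum())

def g_ball(z, b, delta):
    """Indicator of B_delta = {z : ||z - b||_2 <= delta}; used for problems (2) and (3)."""
    return 0.0 if np.linalg.norm(z - b) <= delta else np.inf

def g_lsq(z, b, mu):
    """Quadratic penalty ||z - b||_2^2 / (2 mu); used for problem (4)."""
    return float(np.sum((z - b) ** 2) / (2.0 * mu))

def unified_objective(X, A, b, g, **params):
    """Objective of (5): f(X) + g(A(X)), with A a p x (m*n) matrix acting on vec(X).

    Extra keyword arguments (delta or mu) are passed through to g.
    """
    return nuclear_norm(X) + g(A @ X.ravel(), b, **params)
```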

Motivated by the ADM algorithms above, we then present a unified proximity algorithm with adaptive penalty (PA-AP) to solve problem (5). In particular, we employ the proximity idea to deal with the difficulties encountered by the existing ADM, by adding a proximity term to one of the subproblems. We call the proposed algorithm a proximity algorithm because it can be rewritten as a fixed-point equation system of the proximity operators of f and g. By analyzing the fixed-point equations and applying “Condition-M” [23], the convergence of the algorithm is proved under some assumptions. Furthermore, to improve the efficiency, an adaptive tactic on the proximity parameters is put forward. This paper is closely related to the works [23,24,25,26]; however, it is specifically motivated by improving ADM for the nuclear norm minimization problem with linear affine constraints.

The paper is organized as follows. In Section 2, we review ADM and its application to NNM, and introduce some properties of subdifferentials and proximity operators. In Section 3, we propose a proximity algorithm with adaptive penalty to improve ADM and establish its convergence. Section 4 demonstrates the performance and effectiveness of the algorithm through numerical experiments. Finally, Section 5 concludes the paper.

2. Preliminaries

In this section, we give a brief review of ADM and its application to the NNM problem (2) developed in [19,20]. In addition, some preliminaries on subdifferentials and proximity operators are given. Throughout this paper, linear maps are denoted by calligraphic letters (e.g., $\mathcal{A}$), capital letters represent matrices (e.g., $A$), and boldface lowercase letters represent vectors (e.g., $\mathbf{x}$).

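We begin by introducing the ADM. The basic idea of ADM goes back to the work of Gabay and Mercier [27]. ADM is designed to solve the separable convex minimization problem

$$\min_{\mathbf{x},\mathbf{y}} f_1(\mathbf{x})+f_2(\mathbf{y}) \quad \text{s.t.}\quad A\mathbf{x}+B\mathbf{y}=\mathbf{c}, \tag{7}$$

where $f_1:\mathbb{R}^{s}\to\mathbb{R}$ and $f_2:\mathbb{R}^{t}\to\mathbb{R}$ are convex functions, $A\in\mathbb{R}^{l\times s}$, $B\in\mathbb{R}^{l\times t}$ and $\mathbf{c}\in\mathbb{R}^l$. The corresponding augmented Lagrangian function is

$$\mathcal{L}_\beta(\mathbf{x},\mathbf{y},\boldsymbol{\lambda})=f_1(\mathbf{x})+f_2(\mathbf{y})-\boldsymbol{\lambda}^T(A\mathbf{x}+B\mathbf{y}-\mathbf{c})+\frac{\beta}{2}\|A\mathbf{x}+B\mathbf{y}-\mathbf{c}\|_2^2, \tag{8}$$

where $\boldsymbol{\lambda}\in\mathbb{R}^l$ is the Lagrangian multiplier and $\beta>0$ is a penalty parameter. ADM minimizes (8) first with respect to $\mathbf{x}$, then with respect to $\mathbf{y}$, and finally updates $\boldsymbol{\lambda}$, iteratively, i.e.,

$$\begin{cases}\mathbf{x}^{k+1}=\arg\min_{\mathbf{x}}\mathcal{L}_\beta(\mathbf{x},\mathbf{y}^k,\boldsymbol{\lambda}^k),\\ \mathbf{y}^{k+1}=\arg\min_{\mathbf{y}}\mathcal{L}_\beta(\mathbf{x}^{k+1},\mathbf{y},\boldsymbol{\lambda}^k),\\ \boldsymbol{\lambda}^{k+1}=\boldsymbol{\lambda}^k-\beta(A\mathbf{x}^{k+1}+B\mathbf{y}^{k+1}-\mathbf{c}).\end{cases} \tag{9}$$

The main advantage of ADM is that it makes use of the separable structure of the objective function $f_1(\mathbf{x})+f_2(\mathbf{y})$.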
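The three steps of (9) can be seen on a toy instance of (7) chosen only for illustration (it is not one of the problems studied in this paper): $f_1(\mathbf{x})=\frac12\|\mathbf{x}-\mathbf{a}\|_2^2$, $f_2(\mathbf{y})=\kappa\|\mathbf{y}\|_1$, $A=I$, $B=-I$ and $\mathbf{c}=\mathbf{0}$. Both subproblems then have closed forms, and the iterates should approach the known solution, the soft-thresholded vector $\operatorname{soft}(\mathbf{a},\kappa)$. A minimal NumPy sketch with hypothetical helper names:

```python
import numpy as np

def soft(v, t):
    """Soft thresholding: the proximity operator of t*||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def adm_toy(a, kappa, beta=1.0, iters=1000):
    """ADM (9) for  min 0.5*||x - a||^2 + kappa*||y||_1  s.t.  x - y = 0.

    Uses the augmented Lagrangian (8) with A = I, B = -I, c = 0.
    """
    x = np.zeros_like(a)
    y = np.zeros_like(a)
    lam = np.zeros_like(a)
    for _ in range(iters):
        # x-step: minimize 0.5||x - a||^2 - lam^T x + (beta/2)||x - y||^2 (a linear solve)
        x = (a + lam + beta * y) / (1.0 + beta)
        # y-step: minimize kappa||y||_1 + lam^T y + (beta/2)||x - y||^2 (soft thresholding)
        y = soft(x - lam / beta, kappa / beta)
        # multiplier step: lam <- lam - beta * (A x + B y - c)
        lam = lam - beta * (x - y)
    return x, y
```

The $\mathbf{x}$-step is a linear solve and the $\mathbf{y}$-step is a proximity operator; in the nuclear norm setting below, the analogous steps become a least-squares problem and singular value thresholding.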

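To solve (2) based on ADM, the authors of [19,20] introduced an auxiliary variable $Y$ and equivalently transformed the original model into

$$\min_{X,Y}\|X\|_* \quad\text{s.t.}\quad X=Y,\ \ \mathcal{A}(Y)=\mathbf{b}. \tag{10}$$

The augmented Lagrangian function of (10) is

$$\mathcal{L}(X,Y,Z,\mathbf{z})=\|X\|_*-\langle Z,X-Y\rangle+\frac{\beta_1}{2}\|X-Y\|_F^2-\mathbf{z}^T(\mathcal{A}(Y)-\mathbf{b})+\frac{\beta_2}{2}\|\mathcal{A}(Y)-\mathbf{b}\|_2^2, \tag{11}$$

where $Z\in\mathbb{R}^{m\times n}$ and $\mathbf{z}\in\mathbb{R}^p$ are Lagrangian multipliers, $\beta_1,\beta_2>0$ are penalty parameters, and $\langle\cdot,\cdot\rangle$ denotes the Frobenius inner product, i.e., the matrices are treated like vectors. Following the idea of ADM, given $(Y^k,Z^k,\mathbf{z}^k)$, the next pair $(X^{k+1},Y^{k+1})$ is determined by alternately minimizing (11).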

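First, $X^{k+1}$ can be updated by

$$X^{k+1}=\arg\min_{X}\ \|X\|_*+\frac{\beta_1}{2}\Big\|X-\Big(Y^k+\frac{1}{\beta_1}Z^k\Big)\Big\|_F^2, \tag{12}$$

which in fact corresponds to evaluating the proximity operator of $\frac{1}{\beta_1}\|\cdot\|_*$ at $Y^k+\frac{1}{\beta_1}Z^k$, i.e., $X^{k+1}=\operatorname{prox}_{\frac{1}{\beta_1}\|\cdot\|_*}\big(Y^k+\frac{1}{\beta_1}Z^k\big)$, where the proximity operator is defined below.

Second, given $X^{k+1}$, $Y^{k+1}$ can be updated by

$$Y^{k+1}=\arg\min_{Y}\ \frac{\beta_1}{2}\Big\|Y-\Big(X^{k+1}-\frac{1}{\beta_1}Z^k\Big)\Big\|_F^2+\frac{\beta_2}{2}\Big\|\mathcal{A}(Y)-\mathbf{b}-\frac{1}{\beta_2}\mathbf{z}^k\Big\|_2^2, \tag{13}$$

which is a least-squares subproblem. Its solution can be found by solving the linear equation

$$(\beta_1\mathcal{I}+\beta_2\mathcal{A}^*\mathcal{A})(Y)=\beta_1X^{k+1}-Z^k+\mathcal{A}^*(\beta_2\mathbf{b}+\mathbf{z}^k). \tag{14}$$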

However, computing the inverse of $\beta_1\mathcal{I}+\beta_2\mathcal{A}^*\mathcal{A}$ is too costly in practice. Although [19,20] adopted inverse-free methods, namely BB and CG, to solve (14) iteratively, they are still inefficient, as will be shown in Section 4.
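To illustrate the inverse-free approach, the following sketch solves the normal equation of the $Y$-subproblem, $(\beta_1\mathcal{I}+\beta_2\mathcal{A}^*\mathcal{A})(Y)=\beta_1X^{k+1}-Z^k+\mathcal{A}^*(\beta_2\mathbf{b}+\mathbf{z}^k)$, with a textbook conjugate gradient (CG) iteration in NumPy. It is a generic CG, not the implementation of [20]; $\mathcal{A}$ is assumed to be an explicit $p\times mn$ matrix acting on the row-major vectorization of $Y$, and the helper names are hypothetical.

```python
import numpy as np

def cg(apply_M, rhs, tol=1e-10, max_iter=1000):
    """Conjugate gradient for M x = rhs, with M symmetric positive definite given as a function."""
    x = np.zeros_like(rhs)
    r = rhs - apply_M(x)            # initial residual
    p = r.copy()                    # initial search direction
    rs = r @ r
    stop = tol * max(np.linalg.norm(rhs), 1.0)
    for _ in range(max_iter):
        if np.sqrt(rs) <= stop:
            break
        Mp = apply_M(p)
        alpha = rs / (p @ Mp)       # exact line search along p
        x = x + alpha * p
        r = r - alpha * Mp
        rs_new = r @ r
        p = r + (rs_new / rs) * p   # new M-conjugate direction
        rs = rs_new
    return x

def y_update_cg(A, b, X, Z, z, beta1, beta2):
    """Solve (beta1 I + beta2 A^T A) vec(Y) = beta1 vec(X) - vec(Z) + A^T (beta2 b + z) by CG."""
    def apply_M(v):
        return beta1 * v + beta2 * (A.T @ (A @ v))
    rhs = beta1 * X.ravel() - Z.ravel() + A.T @ (beta2 * b + z)
    return cg(apply_M, rhs).reshape(X.shape)
```

Each CG iteration costs one application of $\mathcal{A}$ and one of $\mathcal{A}^*$, and this inner loop runs inside every outer ADM iteration; this overhead is what the proximity term introduced in Section 3 is meant to avoid.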

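Next, we review the definitions of the subdifferential and the proximity operator, which play an important role in the algorithm and its convergence analysis. The subdifferential of a convex function $f$ at a point $\mathbf{x}$ is the set defined by

$$\partial f(\mathbf{x})=\{\mathbf{y}: f(\mathbf{z})\ge f(\mathbf{x})+\langle\mathbf{y},\mathbf{z}-\mathbf{x}\rangle\ \text{for all}\ \mathbf{z}\}.$$

The conjugate function of $f$ is denoted by $f^*$ and defined by

$$f^*(\mathbf{y})=\sup_{\mathbf{x}}\{\langle\mathbf{x},\mathbf{y}\rangle-f(\mathbf{x})\}.$$

For $\mathbf{x}\in\operatorname{dom}(f)$ and $\mathbf{y}\in\operatorname{dom}(f^*)$, it holds that

$$\mathbf{y}\in\partial f(\mathbf{x})\iff\mathbf{x}\in\partial f^*(\mathbf{y}),$$

where $\operatorname{dom}(\cdot)$ denotes the domain of a function.

For a given symmetric positive definite matrix $H$, the weighted inner product is defined by $\langle\mathbf{x},\mathbf{y}\rangle_H=\langle\mathbf{x},H\mathbf{y}\rangle$, with induced norm $\|\mathbf{x}\|_H=\sqrt{\langle\mathbf{x},\mathbf{x}\rangle_H}$. Furthermore, the proximity operator of $f$ at $\mathbf{x}$ with respect to $H$ [23] is defined by

$$\operatorname{prox}_{f,H}(\mathbf{x})=\arg\min_{\mathbf{u}}\Big\{f(\mathbf{u})+\frac{1}{2}\|\mathbf{u}-\mathbf{x}\|_H^2\Big\}.$$

If $H=I$, then $\operatorname{prox}_{f,H}$ reduces to

$$\operatorname{prox}_{f}(\mathbf{x})=\arg\min_{\mathbf{u}}\Big\{f(\mathbf{u})+\frac{1}{2}\|\mathbf{u}-\mathbf{x}\|_2^2\Big\},$$

where $\operatorname{prox}_f$ is short for $\operatorname{prox}_{f,I}$. A relation between subdifferentials and proximity operators is

$$\mathbf{y}\in\partial f(\mathbf{x})\iff\mathbf{x}=\operatorname{prox}_{f,H}(\mathbf{x}+H^{-1}\mathbf{y}),$$

which is frequently used to construct fixed-point equations and to prove convergence of the algorithm.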
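As a numerical sanity check of the relation between subdifferentials and proximity operators (with $H=I$), consider $f=\kappa\|\cdot\|_1$, chosen here only for illustration: its proximity operator is soft thresholding and its conjugate $f^*$ is the indicator of the $\ell_\infty$-ball of radius $\kappa$. The NumPy sketch below checks that $\mathbf{x}=\operatorname{prox}_f(\mathbf{x}+\mathbf{y})$ for a subgradient $\mathbf{y}\in\partial f(\mathbf{x})$, and also the Moreau decomposition $\operatorname{prox}_f(\mathbf{v})+\operatorname{prox}_{f^*}(\mathbf{v})=\mathbf{v}$, a standard identity tied to the conjugate relation above.

```python
import numpy as np

def prox_l1(v, kappa):
    """prox of f = kappa*||.||_1 (soft thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - kappa, 0.0)

def prox_l1_conj(v, kappa):
    """prox of f* = indicator of {u : ||u||_inf <= kappa}, i.e. clipping to [-kappa, kappa]."""
    return np.clip(v, -kappa, kappa)

kappa = 0.5
x = np.array([2.0, 0.0, -1.0])
# A subgradient of f at x: kappa*sign(x_i) where x_i != 0, any value in [-kappa, kappa] where x_i = 0
y = np.array([kappa, 0.3 * kappa, -kappa])
fixed_point_ok = np.allclose(prox_l1(x + y, kappa), x)

v = np.array([1.7, -0.2, 0.6, -3.0])
moreau_ok = np.allclose(prox_l1(v, kappa) + prox_l1_conj(v, kappa), v)
print(fixed_point_ok, moreau_ok)  # both should be True
```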

3. Proximity Algorithm with Adaptive Penalty

Section 2 shows that the existing ADM requires an expensive matrix inversion when solving (2). Therefore, it is desirable to improve it. In this section, we propose a proximity algorithm with adaptive penalty (PA-AP) to solve the unified problem (5).

3.1. Proximity Algorithm

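We derive our algorithm from ADM. First, we introduce an auxiliary variable $\mathbf{y}\in\mathbb{R}^p$ and convert (5) to

$$\min_{X,\mathbf{y}} f(X)+g(\mathbf{y})\quad\text{s.t.}\quad\mathcal{A}(X)=\mathbf{y}. \tag{19}$$

The augmented Lagrangian function of (19) is defined by

$$\mathcal{L}_\beta(X,\mathbf{y},\boldsymbol{\lambda})=f(X)+g(\mathbf{y})-\langle\boldsymbol{\lambda},\mathcal{A}(X)-\mathbf{y}\rangle+\frac{\beta}{2}\|\mathcal{A}(X)-\mathbf{y}\|_2^2, \tag{20}$$

where $\boldsymbol{\lambda}\in\mathbb{R}^p$ is the Lagrangian multiplier and $\beta>0$ is a penalty parameter. ADM first updates $X$ by minimizing $\mathcal{L}_\beta$ with $\mathbf{y}$ fixed, then updates $\mathbf{y}$ with $X$ fixed at its latest value, and finally updates $\boldsymbol{\lambda}$; these steps are repeated until some convergence criterion is satisfied. After some simplification, we get

$$\begin{cases}X^{k+1}=\arg\min_X f(X)+\frac{\beta}{2}\big\|\mathcal{A}(X)-\mathbf{y}^k-\frac{1}{\beta}\boldsymbol{\lambda}^k\big\|_2^2,\\ \mathbf{y}^{k+1}=\operatorname{prox}_{\frac{1}{\beta}g}\big(\mathcal{A}(X^{k+1})-\frac{1}{\beta}\boldsymbol{\lambda}^k\big),\\ \boldsymbol{\lambda}^{k+1}=\boldsymbol{\lambda}^k-\beta\big(\mathcal{A}(X^{k+1})-\mathbf{y}^{k+1}\big).\end{cases} \tag{21}$$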

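Note that the $X$-subproblem usually has no closed-form solution when $\mathcal{A}^*\mathcal{A}$ is not the identity. In order to design efficient algorithms, we add a proximity term to this subproblem. More precisely, we propose the following algorithm:

$$\begin{cases}X^{k+1}=\arg\min_X f(X)+\frac{\beta}{2}\big\|\mathcal{A}(X)-\mathbf{y}^k-\frac{1}{\beta}\boldsymbol{\lambda}^k\big\|_2^2+\frac{1}{2}\|X-X^k\|_Q^2,\\ \mathbf{y}^{k+1}=\operatorname{prox}_{\frac{1}{\beta}g}\big(\mathcal{A}(X^{k+1})-\frac{1}{\beta}\boldsymbol{\lambda}^k\big),\\ \boldsymbol{\lambda}^{k+1}=\boldsymbol{\lambda}^k-\beta\big(\mathcal{A}(X^{k+1})-\mathbf{y}^{k+1}\big),\end{cases} \tag{22}$$

where $Q$ is a symmetric positive definite matrix.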

The next lemma shows that (22) is equivalent to a fixed-point equation of some proximity operators. The proof is similar to that in [25].

Lemma 1.

Proof.

By changing the order of iterations, the first-order optimality condition of (22) is

Denote . Then,

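We now discuss the choice of $Q$. To give the first subproblem of (22) a closed-form solution, we simply choose $Q=\frac{1}{\tau}\mathcal{I}-\beta\mathcal{A}^*\mathcal{A}$ in this paper. To ensure that $Q$ is positive definite, $\tau$ must satisfy $0<\tau<\frac{1}{\beta\|\mathcal{A}\|_2^2}$, where $\|\cdot\|_2$ is the spectral norm. By substituting $Q$ into (22), we obtain

$$\begin{cases}X^{k+1}=\operatorname{prox}_{\tau f}\big(X^k-\tau\mathcal{A}^*(\beta\mathcal{A}(X^k)-\beta\mathbf{y}^k-\boldsymbol{\lambda}^k)\big),\\ \mathbf{y}^{k+1}=\operatorname{prox}_{\frac{1}{\beta}g}\big(\mathcal{A}(X^{k+1})-\frac{1}{\beta}\boldsymbol{\lambda}^k\big),\\ \boldsymbol{\lambda}^{k+1}=\boldsymbol{\lambda}^k-\beta\big(\mathcal{A}(X^{k+1})-\mathbf{y}^{k+1}\big).\end{cases} \tag{30}$$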
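With $Q=\frac{1}{\tau}\mathcal{I}-\beta\mathcal{A}^*\mathcal{A}$, the quadratic term $\frac{\beta}{2}\|\mathcal{A}(X)\|_2^2$ cancels, which is why the $X$-update becomes explicit. Writing $\mathbf{c}^k:=\mathbf{y}^k+\frac{1}{\beta}\boldsymbol{\lambda}^k$ (a shorthand introduced only for this derivation) and completing the square in the $X$-subproblem of (22) gives the following, where "const" collects terms that do not depend on $X$:

```latex
% Shorthand: c^k := y^k + lambda^k / beta,  Q := (1/tau) I - beta A^* A
\begin{aligned}
\frac{\beta}{2}\|\mathcal{A}(X)-\mathbf{c}^k\|_2^2+\frac{1}{2}\|X-X^k\|_Q^2
&=\frac{1}{2\tau}\|X-X^k\|_F^2+\beta\big\langle X,\ \mathcal{A}^*\big(\mathcal{A}(X^k)-\mathbf{c}^k\big)\big\rangle+\text{const}\\
&=\frac{1}{2\tau}\Big\|X-\Big(X^k-\tau\,\mathcal{A}^*\big(\beta\mathcal{A}(X^k)-\beta\mathbf{y}^k-\boldsymbol{\lambda}^k\big)\Big)\Big\|_F^2+\text{const},\\
\Longrightarrow\quad X^{k+1}&=\operatorname{prox}_{\tau f}\Big(X^k-\tau\,\mathcal{A}^*\big(\beta\mathcal{A}(X^k)-\beta\mathbf{y}^k-\boldsymbol{\lambda}^k\big)\Big).
\end{aligned}
```

In other words, the $X$-update is a gradient step on the augmented term followed by a single proximity step of $\tau\|\cdot\|_*$, with no linear system to solve.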

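The subproblems in (30) can be solved explicitly via proximity operators. Specifically, we have

$$X^{k+1}=\operatorname{prox}_{\tau\|\cdot\|_*}(W^k)=U\max(\Sigma-\tau I,0)V^T,$$

where $W^k:=X^k-\tau\mathcal{A}^*(\beta\mathcal{A}(X^k)-\beta\mathbf{y}^k-\boldsymbol{\lambda}^k)$, $W^k=U\Sigma V^T$ is the singular value decomposition (SVD) of $W^k$ with $U$ and $V$ having orthonormal columns, $\Sigma$ is a diagonal matrix containing the singular values, and the maximum is taken entrywise.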
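The $X$-update above is singular value thresholding. Below is a minimal NumPy sketch of $\operatorname{prox}_{t\|\cdot\|_*}$, assuming a dense full SVD is affordable (for large problems a partial SVD is preferable, as discussed in Section 4); `svt` is a hypothetical helper name.

```python
import numpy as np

def svt(W, t):
    """Singular value thresholding: the prox of t*||.||_* evaluated at W."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)  # thin SVD, W = U diag(s) Vt
    s_shrunk = np.maximum(s - t, 0.0)                 # soft-threshold the singular values
    return (U * s_shrunk) @ Vt                        # scale the columns of U, then multiply by Vt

W = np.diag([3.0, 1.0, 0.5])
X = svt(W, 1.0)  # close to diag(2, 0, 0): only the singular value above t = 1 survives
```

Singular values below the threshold are set to zero, so the rank of $X^{k+1}$ equals the number of singular values of $W^k$ exceeding $\tau$; this is what makes a partial SVD attractive.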

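Moreover, $\mathbf{y}^{k+1}=\operatorname{prox}_{\frac{1}{\beta}g}(\mathbf{v}^k)$ with $\mathbf{v}^k:=\mathcal{A}(X^{k+1})-\frac{1}{\beta}\boldsymbol{\lambda}^k$, which depends on the choice of $g$. If $g=\iota_{B_\delta}$ with $B_\delta=\{\mathbf{z}:\|\mathbf{z}-\mathbf{b}\|_2\le\delta\}$, then $\mathbf{y}^{k+1}$ is the projection of $\mathbf{v}^k$ onto $B_\delta$:

$$\mathbf{y}^{k+1}=\begin{cases}\mathbf{v}^k, & \|\mathbf{v}^k-\mathbf{b}\|_2\le\delta,\\ \mathbf{b}+\frac{\delta}{\|\mathbf{v}^k-\mathbf{b}\|_2}(\mathbf{v}^k-\mathbf{b}), & \text{otherwise}.\end{cases}$$

If $g(\mathbf{z})=\frac{1}{2\mu}\|\mathbf{z}-\mathbf{b}\|_2^2$, then

$$\mathbf{y}^{k+1}=\frac{\mathbf{b}+\mu\beta\mathbf{v}^k}{1+\mu\beta}.$$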
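Both $\mathbf{y}$-updates are one-liners. The NumPy sketch below assumes vectors are plain 1-D arrays; `prox_ball` and `prox_lsq` are hypothetical names. The first is the Euclidean projection onto $\{\mathbf{z}:\|\mathbf{z}-\mathbf{b}\|_2\le\delta\}$, which does not depend on $\beta$ because scaling an indicator function leaves it unchanged; the second minimizes $\frac{1}{2\mu}\|\mathbf{y}-\mathbf{b}\|_2^2+\frac{\beta}{2}\|\mathbf{y}-\mathbf{v}\|_2^2$ by setting the gradient to zero.

```python
import numpy as np

def prox_ball(v, b, delta):
    """Projection of v onto B_delta = {z : ||z - b||_2 <= delta}."""
    r = v - b
    nr = np.linalg.norm(r)
    if nr <= delta:
        return v.copy()                  # already feasible
    return b + (delta / nr) * r          # pull back radially onto the sphere

def prox_lsq(v, b, mu, beta):
    """argmin_y ||y - b||^2 / (2 mu) + (beta/2) ||y - v||^2 = (b + mu*beta*v) / (1 + mu*beta)."""
    return (b + mu * beta * v) / (1.0 + mu * beta)
```

With $\delta=0$ the projection always returns $\mathbf{b}$, which recovers the equality constraint of problem (2).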

3.2. Adaptive Penalty

In previous proximity algorithms [23,28,29], the penalty parameter $\beta$ is usually fixed. For the linearized ADM, Lin et al. [26] presented an adaptive updating strategy for the penalty parameter. Motivated by it, we update $\beta$ by

$$\beta_{k+1}=\min(\beta_{\max},\rho\beta_k), \tag{34}$$

where $\beta_{\max}$ is an upper bound of $\{\beta_k\}$. The value of $\rho$ is defined as

where and are given.
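The following Python sketch implements the update $\beta_{k+1}=\min(\beta_{\max},\rho\beta_k)$, assuming a simplified form of the switching criterion used in [26]: $\rho=\rho_0>1$ when $\beta_k\max(\|X^{k+1}-X^k\|_F,\|\mathbf{y}^{k+1}-\mathbf{y}^k\|_2)/\|\mathbf{b}\|_2<\varepsilon_2$, and $\rho=1$ otherwise. The criterion and the names `rho0`, `eps2` and `beta_max` are assumptions for illustration, not the settings used in the experiments.

```python
def update_beta(beta, beta_max, rho0, eps2, dx, dy, norm_b):
    """One adaptive-penalty step: beta_{k+1} = min(beta_max, rho * beta_k).

    dx = ||X^{k+1} - X^k||_F and dy = ||y^{k+1} - y^k||_2.
    The switching test below is an assumed, simplified version of the rule in [26].
    """
    small_change = beta * max(dx, dy) / norm_b < eps2
    rho = rho0 if small_change else 1.0
    return min(beta_max, rho * beta)
```

Because $\tau$ must stay below $1/(\beta\|\mathcal{A}\|_2^2)$ for $Q$ to remain positive definite, an implementation that increases $\beta$ also has to decrease $\tau$ accordingly.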

Based on the above analysis in Section 3.1 and Section 3.2, the proximity algorithm with adaptive penalty (abbr. PA-AP) for solving (5) can be outlined in Algorithm 1.

| Algorithm 1 PA-AP for solving (5) |
| --- |
| Input: Observation vector $\mathbf{b}$, linear mapping $\mathcal{A}$, and some parameters , . |
| Initialize: Set and to zero matrix and vector, respectively. Set and . Set $k=0$. |
| while not converged, do |
| step 1: Update $X^{k+1}$, $\mathbf{y}^{k+1}$ and $\boldsymbol{\lambda}^{k+1}$ in turn by (30). |
| step 2: Update $\beta_{k+1}$ by (34), and let . |
| step 3: $k\leftarrow k+1$. |
| end while |
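Putting the pieces together, the following NumPy sketch runs the loop of Algorithm 1 on small dense problems. It is an illustration under stated assumptions, not the authors' MATLAB code: $\mathcal{A}$ is an explicit $p\times mn$ matrix acting on the row-major vectorization of $X$, a full SVD replaces PROPACK, $\tau_k=0.99/(\beta_k\|\mathcal{A}\|_2^2)$ is recomputed whenever $\beta$ changes so that $Q$ stays positive definite, and both the switching rule for $\rho$ and the stopping test are assumptions flagged in the comments. All names are hypothetical.

```python
import numpy as np

def svt(W, t):
    """prox of t*||.||_*: singular value thresholding."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return (U * np.maximum(s - t, 0.0)) @ Vt

def prox_g(v, b, beta, mode, delta, mu):
    """prox_{g/beta}(v) for g = indicator of B_delta ("ball") or ||. - b||^2 / (2 mu) ("lsq")."""
    if mode == "ball":
        r = v - b
        nr = np.linalg.norm(r)
        return v.copy() if nr <= delta else b + (delta / nr) * r
    if mode == "lsq":
        return (b + mu * beta * v) / (1.0 + mu * beta)
    raise ValueError("mode must be 'ball' or 'lsq'")

def pa_ap(A, b, shape, mode="ball", delta=0.0, mu=1.0, beta0=1.0,
          beta_max=1e4, rho0=1.0, eps2=1e-3, tol=1e-8, max_iter=5000):
    """Sketch of Algorithm 1: iteration (30) plus the penalty update (34).

    A: p x (m*n) array acting on the row-major vec(X) (an illustrative assumption).
    """
    m, n = shape
    norm_A2 = np.linalg.norm(A, 2) ** 2           # squared spectral norm ||A||_2^2
    norm_b = max(np.linalg.norm(b), 1e-12)
    tiny = np.finfo(float).tiny
    x = np.zeros(m * n)                           # vec(X^0)
    y = np.zeros(A.shape[0])
    lam = np.zeros(A.shape[0])
    beta = beta0
    for _ in range(max_iter):
        tau = 0.99 / (beta * norm_A2)             # keeps Q = I/tau - beta A^T A positive definite
        # Step 1: X-, y- and lambda-updates of (30), in turn
        grad = A.T @ (beta * (A @ x) - beta * y - lam)
        x_new = svt((x - tau * grad).reshape(m, n), tau).ravel()
        Ax = A @ x_new
        y_new = prox_g(Ax - lam / beta, b, beta, mode, delta, mu)
        lam = lam - beta * (Ax - y_new)
        dx = np.linalg.norm(x_new - x)
        dy = np.linalg.norm(y_new - y)
        rel_chg = dx / max(np.linalg.norm(x), tiny)
        residual = np.linalg.norm(Ax - y_new) / max(norm_b, 1.0)
        x, y = x_new, y_new
        # Step 2: adaptive penalty (34); the switching rule for rho is an assumption
        rho = rho0 if beta * max(dx, dy) / norm_b < eps2 else 1.0
        beta = min(beta_max, rho * beta)
        # Stop on small relative change AND small constraint residual (assumed rule)
        if rel_chg < tol and residual < tol:
            break
    return x.reshape(m, n)
```

With $\mathcal{A}=\mathcal{I}$ and `mode="lsq"`, problem (4) has the closed-form solution $\operatorname{prox}_{\mu\|\cdot\|_*}(B)$, which makes a convenient correctness check: `pa_ap(np.eye(30), B.ravel(), (6, 5), mode="lsq", mu=0.5)` should agree with `svt(B, 0.5)` up to the tolerance.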

3.3. Convergence

In this section, we establish the convergence of (22). For problem (5), Li et al. [23] presented a general framework of fixed-point proximity algorithms. Given two symmetric positive definite matrices and , denote

where and E can be treated as linear maps which map into itself.

Defining and , then the solution to (5) is equivalent to

Furthermore, a multi-step proximity algorithm was proposed, which is

where and . Let . Li et al. [23] proved that the sequence generated by (35) converges to a solution of problem (5) if satisfies the “Condition-M”, which refers to

- (i)

- ,

- (ii)

- ,

- (iii)

- , H is symmetric positive definite,

- (iv)

- ,

- (v)

- ,

where $\mathcal{N}(H)$ and $H^\dagger$ are the null space and the Moore–Penrose pseudo-inverse of $H$, respectively.

By verifying Condition-M, we prove the convergence of (22).

Theorem 1.

Proof.

We clearly see that Item (i) and Item (ii) of Condition-M hold. Furthermore, , which is symmetric. By Lemma 6.2 in [23], H is positive definite if and only if , which is equivalent to . Hence, Item (iii) of Condition-M also holds.

Proof.

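Since $Q=\frac{1}{\tau}\mathcal{I}-\beta\mathcal{A}^*\mathcal{A}$ is symmetric positive definite if and only if $0<\tau<\frac{1}{\beta\|\mathcal{A}\|_2^2}$, the result follows from Theorem 1. □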
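The step-size condition is easy to check numerically, since the smallest eigenvalue of $Q=\frac{1}{\tau}\mathcal{I}-\beta\mathcal{A}^*\mathcal{A}$ equals $\frac{1}{\tau}-\beta\|\mathcal{A}\|_2^2$. In this NumPy sketch a random Gaussian matrix stands in for $\mathcal{A}$ (an illustrative assumption), and `q_min_eigenvalue` is a hypothetical helper.

```python
import numpy as np

def q_min_eigenvalue(A, tau, beta):
    """Smallest eigenvalue of Q = I/tau - beta * A^T A, with A acting on vec(X)."""
    Q = np.eye(A.shape[1]) / tau - beta * (A.T @ A)
    return np.linalg.eigvalsh(Q).min()   # Q is symmetric, so eigvalsh applies

rng = np.random.default_rng(0)
A = rng.standard_normal((15, 24))
beta = 2.0
tau_bound = 1.0 / (beta * np.linalg.norm(A, 2) ** 2)   # 1 / (beta ||A||_2^2)
inside = q_min_eigenvalue(A, 0.9 * tau_bound, beta)    # tau below the bound: Q is SPD
outside = q_min_eigenvalue(A, 1.1 * tau_bound, beta)   # tau above the bound: Q is indefinite
```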

4. Numerical Experiments

In this section, we present numerical experiments to show the effectiveness of the proposed algorithm (PA-AP). To this end, we test the algorithms on the nuclear norm minimization problem, the noiseless matrix completion problem, the noisy matrix completion problem and the low-rank image recovery problem. We compare PA-AP against ADM [18], IADM-CG [20] and IADM-BB [19]. All experiments are performed under Windows 10 and MATLAB R2016 on a Lenovo laptop with an Intel Core i7 CPU at 2.7 GHz and 8 GB of memory. In the first two parts of the experiments, we use randomly generated square matrices for simulations. We denote the true solution by $X^*$. We generate the rank-$r$ matrix $X^*$ as a product $X_LX_R^T$, where $X_L$ and $X_R$ are independent $n\times r$ matrices with i.i.d. Gaussian entries. For each test, the stopping criterion is

$$\text{RelChg}<\epsilon,$$

where $\text{RelChg}:=\|X^{k+1}-X^k\|_F/\|X^k\|_F$ is the relative change between two consecutive iterates and $\epsilon>0$ is a tolerance. The algorithms are also forced to stop when the number of iterations exceeds a prescribed maximum.

Let $\hat{X}$ be the solution obtained by the algorithms. We use the relative error to measure the quality of $\hat{X}$ compared with the original matrix $X^*$, i.e.,

$$\text{RelErr}=\frac{\|\hat{X}-X^*\|_F}{\|X^*\|_F}.$$
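The quantities used to stop and score the runs are simple to compute. A NumPy sketch, assuming RelChg is the relative change between consecutive iterates and that test matrices are generated as $X_LX_R^T$ with Gaussian factors as described above; the function names are hypothetical.

```python
import numpy as np

def rel_chg(X_new, X_old):
    """RelChg = ||X_new - X_old||_F / ||X_old||_F (denominator guarded against X_old = 0)."""
    denom = max(np.linalg.norm(X_old, "fro"), np.finfo(float).tiny)
    return np.linalg.norm(X_new - X_old, "fro") / denom

def rel_err(X_hat, X_true):
    """RelErr = ||X_hat - X*||_F / ||X*||_F."""
    return np.linalg.norm(X_hat - X_true, "fro") / np.linalg.norm(X_true, "fro")

def random_low_rank(n, r, rng):
    """Rank-r test matrix X* = X_L X_R^T with i.i.d. Gaussian n x r factors."""
    X_L = rng.standard_normal((n, r))
    X_R = rng.standard_normal((n, r))
    return X_L @ X_R.T

rng = np.random.default_rng(0)
X_true = random_low_rank(50, 3, rng)
```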

Note that each computation of $X^{k+1}$ in PA-AP involves an SVD, and a full SVD computes all singular values and singular vectors. However, we actually only need the singular values that are bigger than $\tau$ (and the corresponding vectors), so using a full SVD accounts for the main computational load. Fortunately, this cost can be reduced by using the software PROPACK [30], which computes a prescribed number of the largest singular values and the corresponding singular vectors. Since PROPACK cannot automatically determine how many singular values are greater than $\tau$, we need to predict the number of singular values and vectors to be computed in each iteration, denoted by $sv_k$, in order to perform a partial SVD. We initialize $sv_0$ and update it in each iteration as follows:

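$$sv_{k+1}=\begin{cases}svp_k+1, & svp_k<sv_k,\\ \min\big(svp_k+\operatorname{round}(0.05n),\,n\big), & svp_k=sv_k,\end{cases}$$

where $svp_k$ is the number of singular values, among the $sv_k$ computed ones, that are bigger than $\tau$.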
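The rank-prediction rule can be sketched in a few lines of Python, assuming the heuristic used with the ALM method of [17]: $sv_{k+1}=svp_k+1$ if $svp_k<sv_k$, and $sv_{k+1}=\min(svp_k+\operatorname{round}(0.05n),n)$ otherwise, for an $n\times n$ matrix. The function name is hypothetical.

```python
def next_sv(sv, svp, n):
    """Predict how many singular triplets to request from a partial SVD at the next iteration.

    sv:  number requested at this iteration
    svp: how many of those exceeded the threshold tau (svp <= sv)
    n:   matrix dimension (square n x n case)
    """
    if svp < sv:
        return svp + 1                       # rank estimate found; request one extra triplet
    return min(svp + round(0.05 * n), n)     # all requested values survived; grow by about 5% of n
```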

We use $r$ and $p$ to denote the rank of an $n\times n$ matrix and the cardinality of the index set $\Omega$, i.e., $p=|\Omega|$, and use $sr=p/n^2$ to denote the sampling rate. The “degree of freedom” of a matrix with rank $r$ is defined by $dr=r(2n-r)$. For PA-AP, we set , , , , and . In all the experimental results, boldface numbers indicate the best results.
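For reference, a tiny Python sketch of these two bookkeeping formulas, assuming the standard definitions $dr=r(2n-r)$ and $sr=p/n^2$ for $n\times n$ matrices (the helper names are hypothetical). The count $r(2n-r)$ comes from the $2nr$ entries of an $n\times r$ factor pair minus the $r^2$ parameters of the invertible $r\times r$ change of basis between them.

```python
def degrees_of_freedom(n, r):
    """dr = r(2n - r): number of free parameters of an n x n matrix of rank r."""
    return r * (2 * n - r)

def sampling_rate(p, n):
    """sr = p / n^2: fraction of the n x n entries that are observed."""
    return p / n ** 2
```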

4.1. Nuclear Norm Minimization Problem

In this subsection, we use PA-AP to solve the three types of problems (2)–(4). The linear map $\mathcal{A}$ is chosen as a partial discrete cosine transform (DCT) matrix. Specifically, in the noiseless model (2), $\mathcal{A}$ is generated by the following MATLAB script:

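which shows that $\mathcal{A}$ maps $\mathbb{R}^{n\times n}$ into $\mathbb{R}^p$. In the noisy model (3), we further set

$$\mathbf{b}=\mathcal{A}(X^*)+\boldsymbol{\omega},$$

where $\boldsymbol{\omega}$ is additive Gaussian noise with zero mean and standard deviation $\sigma$. In (3), the noise level is chosen as .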
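As an illustration of how a partial DCT map can be formed, the following NumPy sketch assumes that $\mathcal{A}$ keeps $p$ randomly chosen rows of the orthonormal $n^2\times n^2$ DCT-II matrix and acts on the row-major vectorization of $X$; this is an assumption, not the authors' MATLAB script, and the helper names and the noise level `sigma` are hypothetical. With orthonormal rows, $\mathcal{A}\mathcal{A}^*=\mathcal{I}$ and $\|\mathcal{A}\|_2=1$.

```python
import numpy as np

def dct_matrix(N):
    """Orthonormal DCT-II matrix C (N x N), so that C @ C.T = I."""
    k = np.arange(N)[:, None]          # frequency index (rows)
    j = np.arange(N)[None, :]          # sample index (columns)
    C = np.sqrt(2.0 / N) * np.cos(np.pi * (2 * j + 1) * k / (2 * N))
    C[0, :] = np.sqrt(1.0 / N)         # the DC row uses a different normalization
    return C

def partial_dct_operator(n, p, rng):
    """Hypothetical partial DCT map: keep p random rows of the n^2 x n^2 DCT matrix."""
    rows = rng.choice(n * n, size=p, replace=False)
    return dct_matrix(n * n)[rows, :]  # p x n^2 matrix acting on vec(X)

rng = np.random.default_rng(0)
n, p = 8, 30
A = partial_dct_operator(n, p, rng)
X = rng.standard_normal((n, n))
b = A @ X.ravel()                                # noiseless observations b = A(X)
sigma = 1e-3                                     # hypothetical noise standard deviation
b_noisy = b + sigma * rng.standard_normal(p)     # noisy observations b = A(X) + omega
```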

The results are listed in Table 1, where the number of iterations (Iter) and the CPU time in seconds (Time) are reported in addition to RelErr. To further illustrate the efficiency of PA-AP, we test problems with different matrix sizes and sampling rates ($sr$). In Table 2, we compare PA-AP with IADM-CG and IADM-BB for solving the NNRLS problem (4). The results show that our method is more efficient than the other two methods, which suggests that it is suitable for solving large-scale problems.

Table 1.

PA-AP for noiseless and noisy DCT matrix ().

Table 2.

Comparisons of PA-AP, IADM-CG and IADM-BB for DCT matrix ().

4.2. Matrix Completion

This subsection applies PA-AP to the noiseless matrix completion problem (2) and the noisy matrix completion problem (3) to verify its validity. The mapping $\mathcal{A}$ is a linear projection operator defined as $\mathcal{A}(X)=X_\Omega$, where $X_\Omega$ is the vector formed by the components of $X$ with indices in $\Omega$. The indices of all entries are randomly permuted to form a column vector, and the first $p$ of them are selected to form the set $\Omega$. For noisy matrix completion problems, we take .
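The sampling operator and its adjoint can be sketched in NumPy as follows, with $\Omega$ drawn as the first $p$ entries of a random permutation of all $mn$ (row-major) linear indices; the helper names are hypothetical.

```python
import numpy as np

def random_index_set(m, n, p, rng):
    """Omega: the first p entries of a random permutation of the m*n row-major linear indices."""
    return rng.permutation(m * n)[:p]

def sample(X, omega):
    """A(X) = X_Omega: entries of X at the indices in Omega, stacked into a vector."""
    return X.ravel()[omega]

def sample_adjoint(z, omega, shape):
    """A^*(z): scatter z back to the positions in Omega, zeros elsewhere."""
    Y = np.zeros(shape[0] * shape[1])
    Y[omega] = z
    return Y.reshape(shape)

rng = np.random.default_rng(0)
m, n, p = 6, 5, 12
omega = random_index_set(m, n, p, rng)
X = rng.standard_normal((m, n))
b = sample(X, omega)                  # observation vector b = A(X)
```

Because $\mathcal{A}\mathcal{A}^*=\mathcal{I}$ for this operator, $\|\mathcal{A}\|_2=1$, so any $\tau<1/\beta$ satisfies the positive definiteness condition of Section 3.1.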

In Table 3, we report the numerical results of PA-AP for noiseless and noisy matrix completion problems, taking and . Only the rank of the original matrix is considered to be and . As can be seen from Table 3, PA-AP can effectively solve these problems. Compared with the noiseless problems, the accuracy of the solutions obtained by PA-AP drops for the noisy problems. Moreover, the number of iterations and the running time decrease as increases.

Table 3.

PA-AP for noiseless and noisy matrix completion problems ().

To further verify the validity of PA-AP, we compare it with ADM, IADM-CG and IADM-BB. The algorithms are terminated when RelChg is lower than . The numerical results of the four methods for the noiseless and noisy MC problems are recorded in Tables 4 and 5, from which we can see that PA-AP requires much less computation time than IADM-BB and IADM-CG, and that PA-AP and ADM need almost the same number of iterations and computation time, while our method is relatively more accurate. On the limited experimental data, PA-AP is shown to be more effective than ADM, IADM-BB and IADM-CG.

Table 4.

Comparisons of PA-AP, ADM, IADM-CG and IADM-BB for noiseless matrix completion.

Table 5.

Comparisons of PA-AP, ADM, IADM-CG and IADM-BB for noisy matrix completion (.

4.3. Low-Rank Image Recovery

In this subsection, we turn to solving problem (2) for low-rank image recovery. The effectiveness of PA-AP is verified on three grayscale images. First, the original images are transformed into low-rank images with rank 40. Then, we remove some entries from the low-rank matrices to obtain the damaged images, and restore them using PA-AP, ADM, IADM-BB and IADM-CG, respectively. The iteration process is stopped when RelChg falls below . The original images, the corresponding low-rank images, the damaged images, and the images restored by PA-AP are depicted in Figure 1. As the figure shows, our algorithm performs well.

Figure 1.

Original images (Lena, Pirate, Cameraman) with full rank (first column); corresponding low-rank images with $r=40$ (second column); randomly masked images from the rank-40 images with (third column); images recovered by PA-AP (last column).

To evaluate the recovery performance, we employ the Peak Signal-to-Noise Ratio (PSNR), which is defined as

$$\text{PSNR}=10\log_{10}\frac{mn\|X^*\|_\infty^2}{\|\hat{X}-X^*\|_F^2},$$

where $\|X^*\|_\infty$ is the infinity norm of $X^*$, defined as the maximum absolute value of the entries of $X^*$. By definition, a higher PSNR indicates a better recovery result.
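A direct NumPy transcription of the PSNR, assuming the form $10\log_{10}\big(mn\|X^*\|_\infty^2/\|\hat{X}-X^*\|_F^2\big)$ with the peak value taken from the reference image $X^*$; `psnr` is a hypothetical name.

```python
import numpy as np

def psnr(X_hat, X_true):
    """PSNR = 10 log10( m n ||X*||_inf^2 / ||X_hat - X*||_F^2 ), with ||.||_inf = max |entry|."""
    m, n = X_true.shape
    peak = np.max(np.abs(X_true))             # ||X*||_inf
    sq_err = np.sum((X_hat - X_true) ** 2)    # ||X_hat - X*||_F^2
    return 10.0 * np.log10(m * n * peak ** 2 / sq_err)
```

For example, adding 0.1 to every entry of a 2 × 2 matrix of ones gives $10\log_{10}(4/0.04)=20$ dB.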

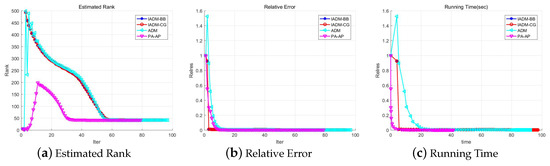

Table 6 shows the running time, relative error and PSNR of the images recovered by the different methods. From Table 6, we note that PA-AP obtains higher PSNR as increases. Moreover, the running time of PA-AP is always much less than that of the other methods under different settings. Figure 2 shows the iteration process of the different methods. From Figure 2, it is clear that our method estimates the rank exactly after 30 iterations and requires much less running time before termination than the other methods.

Table 6.

Comparisons of PA-AP, ADM, IADM-CG and IADM-BB for low-rank image recovery.

Figure 2.

Convergence behavior of the four methods (). The first subfigure is the estimated rank; the second is the relative error to the original matrix; and the last is the running time.

5. Conclusions and Future Work

In this paper, a unified model and algorithm for the matrix nuclear norm minimization problem are proposed. In each iteration, the proposed algorithm mainly involves computing a matrix singular value decomposition and evaluating the proximity operators of two convex functions. In addition, the convergence of the algorithm is proved. Extensive experimental results and numerical comparisons show that the algorithm outperforms the IADM-BB, IADM-CG and ADM algorithms in our tests.

The problem of tensor completion has been widely studied recently [31]. One of our future works is to extend the proposed PA-AP algorithm to tensor completion.

Author Contributions

Methodology, W.H. and W.Z.; Investigation, W.H., W.Z. and G.Y.; Writing–original draft preparation, W.H. and W.Z.; Writing–review and editing, W.H. and G.Y.; Software, W.Z.; Project administration, G.Y.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 61863001, 11661007, 61702244, 11761010 and 61562003), the National Natural Science Foundation of Jiangxi Province (Nos. 20181BAB202021, JXJG-18-14-11, and 20192BAB205086), the National Science Foundation of Zhejiang Province, China (LD19A010002), Research programme (Nos. 18zb04, YCX18B001) and the ’XieTong ChuangXin’ project of Gannan Normal University.

Acknowledgments

The authors would like to thank the anonymous reviewers that have highly improved the final version of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Candès, E.J.; Recht, B. Exact Matrix Completion via Convex Optimization. Found. Comput. Math. 2009, 9, 717–772.

- Liu, G.; Lin, Z.C.; Yu, Y. Robust subspace segmentation by low-rank representation. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 663–670.

- Dai, Y.; Li, H.; He, M. A simple prior-free method for non-rigid structure-from-motion factorization. Int. J. Comput. Vis. 2014, 107, 101–122.

- Hu, W.; Wang, Z.; Liu, S.; Yang, X.; Yu, G.; Zhang, J.J. Motion capture data completion via truncated nuclear norm regularization. IEEE Signal Proc. Lett. 2018, 25, 258–262.

- Lin, X.F.; Wei, G. Accelerated reweighted nuclear norm minimization algorithm for low rank matrix recovery. Signal Process. 2015, 114, 24–33.

- Candès, E.J.; Plan, Y. Matrix completion with noise. Proc. IEEE 2010, 98, 925–936.

- Nie, F.; Huang, H.; Ding, C.H. Low-rank matrix recovery via efficient Schatten p-norm minimization. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 655–661.

- Mohan, K.; Fazel, M. Iterative reweighted algorithms for matrix rank minimization. J. Mach. Learn. Res. 2012, 13, 3441–3473.

- Zhang, D.; Hu, Y.; Ye, J.; Li, X.; He, X. Matrix completion by truncated nuclear norm regularization. In Proceedings of the Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2192–2199.

- Nie, F.; Hu, Z.; Li, X. Matrix completion based on non-convex low rank approximation. IEEE Trans. Image Process. 2019, 28, 2378–2388.

- Cui, A.; Peng, J.; Li, H.; Zhang, C.; Yu, Y. Affine matrix rank minimization problem via non-convex fraction function penalty. J. Comput. Appl. Math. 2018, 336, 353–374.

- Tütüncü, R.H.; Toh, K.C.; Todd, M.J. Solving semidefinite-quadratic-linear programs using SDPT3. Math. Program. 2003, 95, 189–217.

- Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Ma, S.; Goldfarb, D.; Chen, L. Fixed point and Bregman iterative methods for matrix rank minimization. Math. Program. 2009, 128, 321–353.

- Toh, K.C.; Yun, S. An accelerated proximal gradient algorithm for nuclear norm regularized least squares problems. Pac. J. Optim. 2010, 6, 615–640.

- Geng, J.; Wang, L.; Wang, X. Nuclear norm and indicator function model for matrix completion. J. Inverse Ill-Posed Probl. 2015, 24, 1–11.

- Lin, Z.; Chen, M.; Ma, Y. The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

- Chen, C.; He, B.; Yuan, X.M. Matrix completion via an alternating direction method. IMA J. Numer. Anal. 2012, 32, 227–245.

- Xiao, Y.H.; Jin, Z.F. An alternating direction method for linear-constrained matrix nuclear norm minimization. Numer. Linear Algebra Appl. 2012, 19, 541–554.

- Jin, Z.F.; Wang, Q.; Wan, Z. Recovering low-rank matrices from corrupted observations via the linear conjugate gradient algorithm. J. Comput. Appl. Math. 2014, 256, 114–120.

- Yang, J.; Yuan, X.M. Linearized augmented Lagrangian and alternating direction methods for nuclear norm minimization. Math. Comput. 2013, 82, 301–329.

- Barzilai, J.; Borwein, J.M. Two-point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148.

- Li, Q.; Shen, L.; Xu, Y. Multi-step fixed-point proximity algorithms for solving a class of optimization problems arising from image processing. Adv. Comput. Math. 2015, 41, 387–422.

- Zhang, X.; Burger, M.; Osher, S. A unified primal-dual algorithm framework based on Bregman iteration. J. Sci. Comput. 2011, 46, 20–46.

- Wang, J.H.; Meng, F.Y.; Pang, L.P.; Hao, X.H. An adaptive fixed-point proximity algorithm for solving total variation denoising models. Inform. Sci. 2017, 402, 69–81.

- Lin, Z.C.; Liu, R.; Su, Z. Linearized alternating direction method with adaptive penalty for low-rank representation. Proc. Adv. Neural Inf. Process. Syst. 2011, 104, 612–620.

- Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite-element approximations. Comput. Math. Appl. 1976, 2, 17–40.

- Chen, P.; Huang, J.; Zhang, X. A primal-dual fixed point algorithm for convex separable minimization with applications to image restoration. Inverse Probl. 2013, 29, 025011.

- Micchelli, C.A.; Shen, L.; Xu, Y. Proximity algorithms for image models: Denoising. Inverse Probl. 2011, 27, 045009.

- Larsen, R.M. PROPACK-Software for Large and Sparse SVD Calculations. Available online: http://sun.stanfor.edu/srmunk/PROPACK/ (accessed on 1 September 2019).

- Li, X.T.; Zhao, X.L.; Jiang, T.X.; Zheng, Y.B.; Ji, T.Y.; Huang, T.Z. Low-rank tensor completion via combined non-local self-similarity and low-rank regularization. Neurocomputing 2019, 267, 1–12.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).