A Synthetic Fusion Rule for Salient Region Detection under the Framework of DS-Evidence Theory

Abstract

1. Introduction

2. Related Work

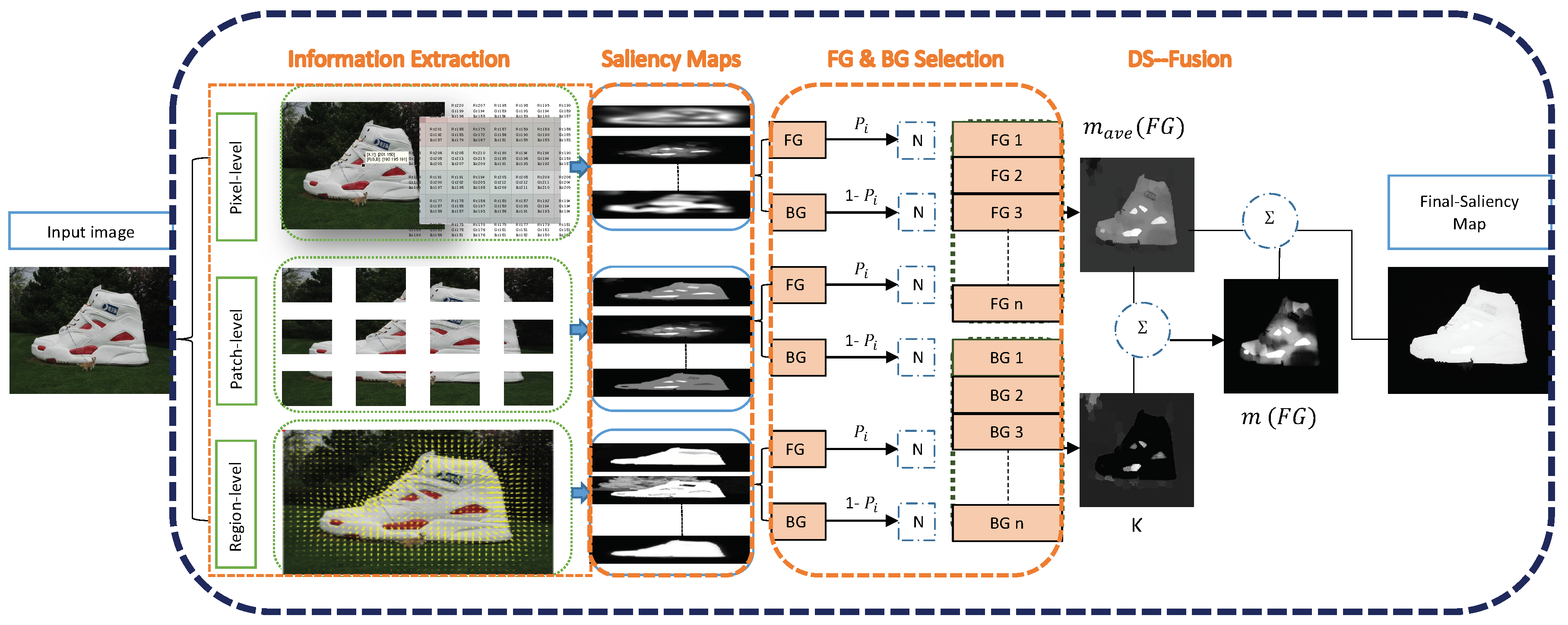

3. Proposed Algorithm

3.1. DS-Evidence Theory Review

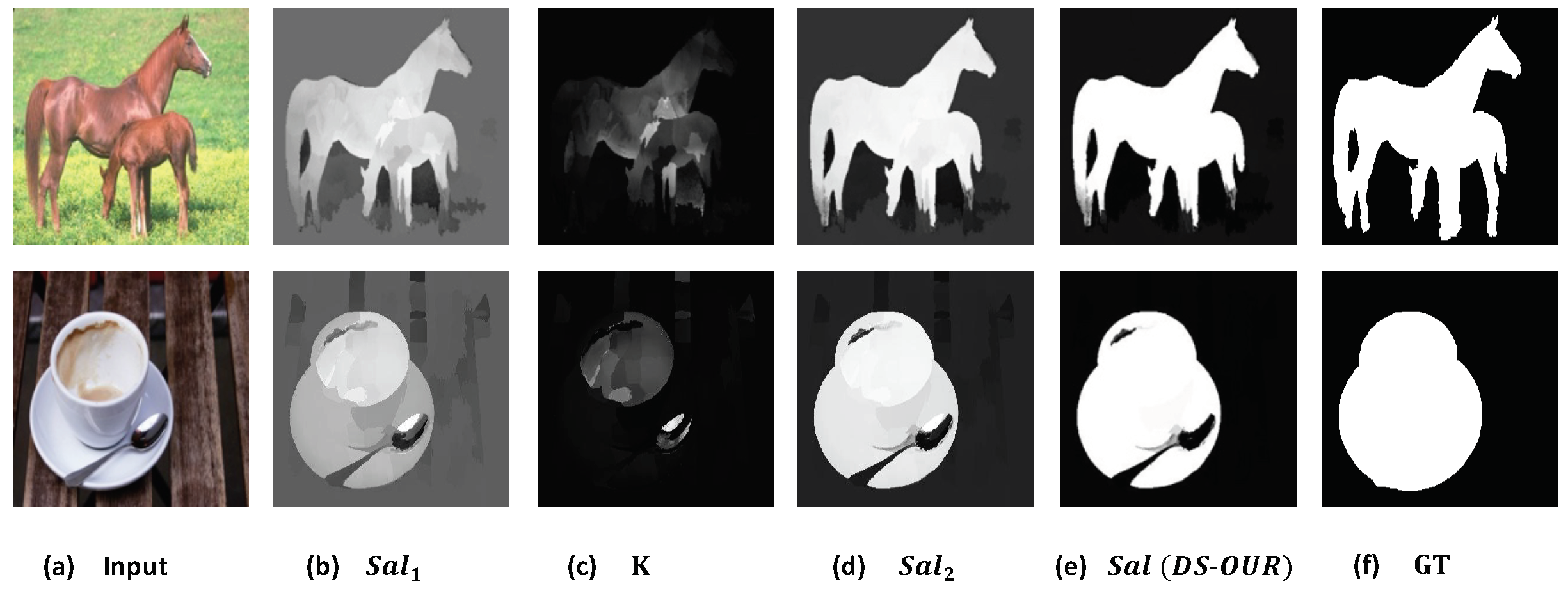
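The central operation of DS-evidence theory is Dempster's rule of combination. For two mass functions m1 and m2 on the same frame of discernment, the combined mass of a non-empty set A is m(A) = Σ_{B∩C=A} m1(B)·m2(C) / (1 − K), where K = Σ_{B∩C=∅} m1(B)·m2(C) measures the conflict between the two sources. The Python sketch below implements this textbook rule, with focal elements stored as frozensets. The function name `dempster_combine` and the two-hypothesis frame {S, B} (salient, background) used in the usage note are illustrative assumptions, not notation taken from the paper.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule of combination.

    Each mass function maps a frozenset (a focal element of the frame of
    discernment) to its mass; the masses of each function should sum to 1.
    Returns (combined_masses, conflict_K).
    """
    combined = {}
    conflict = 0.0  # K: total mass falling on empty intersections
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        intersection = b & c
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_b * mass_c
        else:
            conflict += mass_b * mass_c
    if conflict >= 1.0:
        raise ValueError("total conflict (K = 1): Dempster's rule is undefined")
    # Renormalize by 1 - K so the combined masses sum to 1 again.
    return {a: v / (1.0 - conflict) for a, v in combined.items()}, conflict
```

With m1 = {S}: 0.6, {B}: 0.1, {S, B}: 0.3 and m2 = {S}: 0.5, {B}: 0.2, {S, B}: 0.3, the conflict is K = 0.12 + 0.05 = 0.17 and the fused belief in "salient" rises to m({S}) = 0.63 / 0.83 ≈ 0.759, above either source alone.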

3.2. DS-Fusion Method

Algorithm 1: DS-Saliency Fusion Algorithm
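The following is a hedged illustration of how Dempster's rule can fuse several saliency maps. It is not a reproduction of Algorithm 1, whose steps are not included here. The sketch treats each pixel value s in [0, 1] as evidence on the frame {S, B}: a hypothetical reliability r assigns mass r·s to "salient", r·(1 − s) to "background" and 1 − r to the ignorance set {S, B}. The maps are combined pixel by pixel with the two-hypothesis form of Dempster's rule, and the fused map is read out as the pignistic probability BetP(S) = m(S) + m({S, B}) / 2. The mass assignment, the `reliability` parameter and the read-out rule are all assumptions chosen for the example.

```python
from functools import reduce


def saliency_to_mass(s, reliability):
    """Map a saliency value s in [0, 1] to masses (m_S, m_B, m_Theta).

    Hypothetical assignment: a share `reliability` of belief is split between
    'salient' (S) and 'background' (B); the remainder stays on Theta = {S, B}.
    """
    return (reliability * s, reliability * (1.0 - s), 1.0 - reliability)


def combine_pair(m1, m2):
    """Dempster's rule written out for the two-hypothesis frame {S, B}."""
    s1, b1, u1 = m1
    s2, b2, u2 = m2
    conflict = s1 * b2 + b1 * s2  # K: products where S meets B (empty set)
    norm = 1.0 - conflict
    return (
        (s1 * s2 + s1 * u2 + u1 * s2) / norm,  # products whose intersection is {S}
        (b1 * b2 + b1 * u2 + u1 * b2) / norm,  # products whose intersection is {B}
        (u1 * u2) / norm,                      # Theta intersected with Theta
    )


def ds_fuse_maps(maps, reliability=0.9):
    """Fuse equally sized, flattened saliency maps pixel by pixel.

    Returns BetP(S) = m(S) + m(Theta) / 2 for every pixel.
    """
    if not 0.0 <= reliability < 1.0:
        # reliability < 1 keeps some mass on Theta, so K < 1 and norm > 0
        raise ValueError("reliability must lie in [0, 1)")
    fused = []
    for pixel_values in zip(*maps):
        masses = [saliency_to_mass(v, reliability) for v in pixel_values]
        m_s, _, m_theta = reduce(combine_pair, masses)
        fused.append(m_s + m_theta / 2.0)
    return fused
```

Because Dempster's rule reinforces agreeing evidence, two maps that both give a pixel saliency 1.0 fuse to 0.995 with r = 0.9, higher than the 0.95 that a single map yields. Keeping r below 1 also keeps the conflict K below 1, so the normalization never divides by zero.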

4. Experiments and Results

4.1. Data-Sets

4.2. Evaluation Metrics

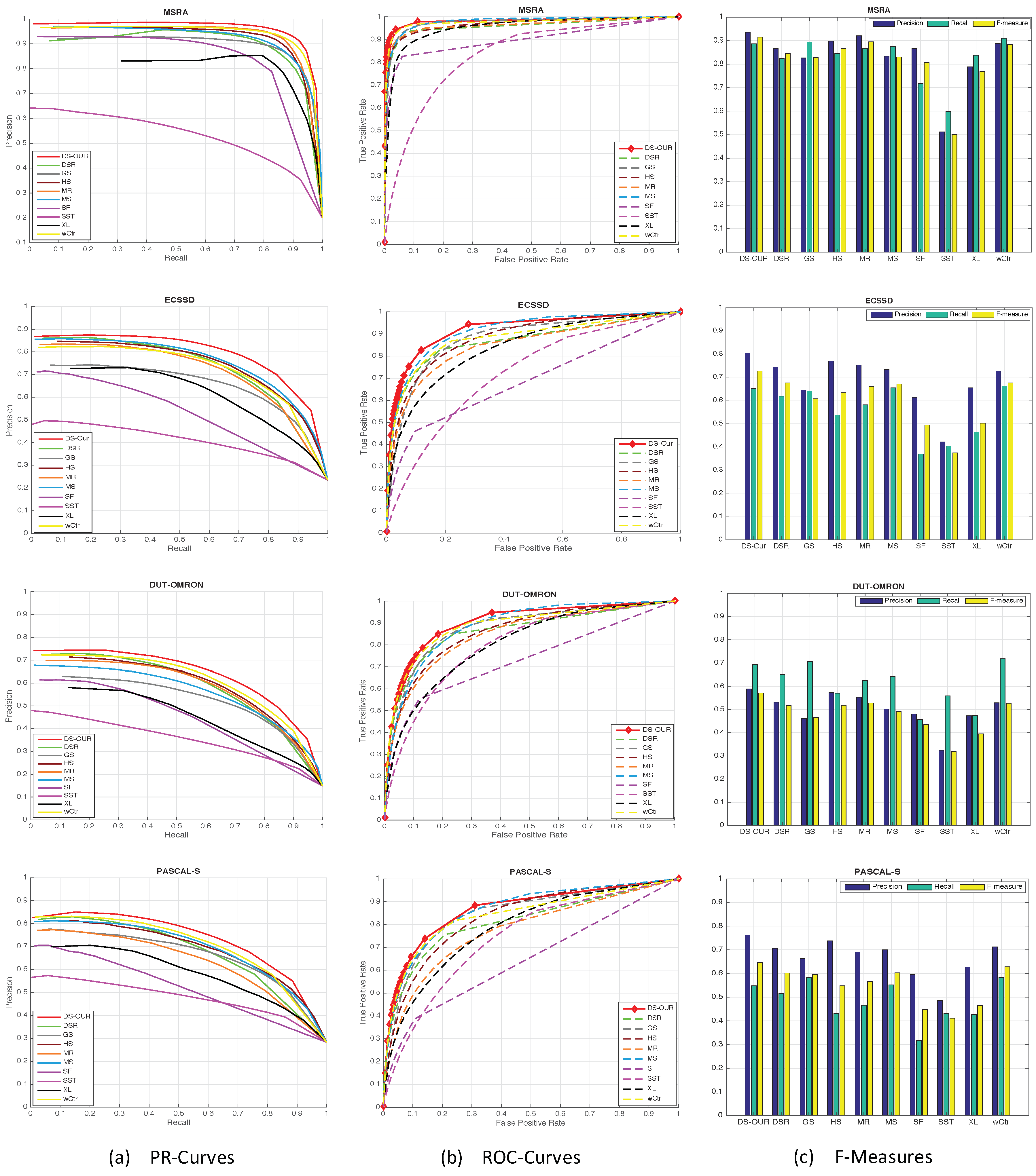

4.2.1. Precision–Recall Curves

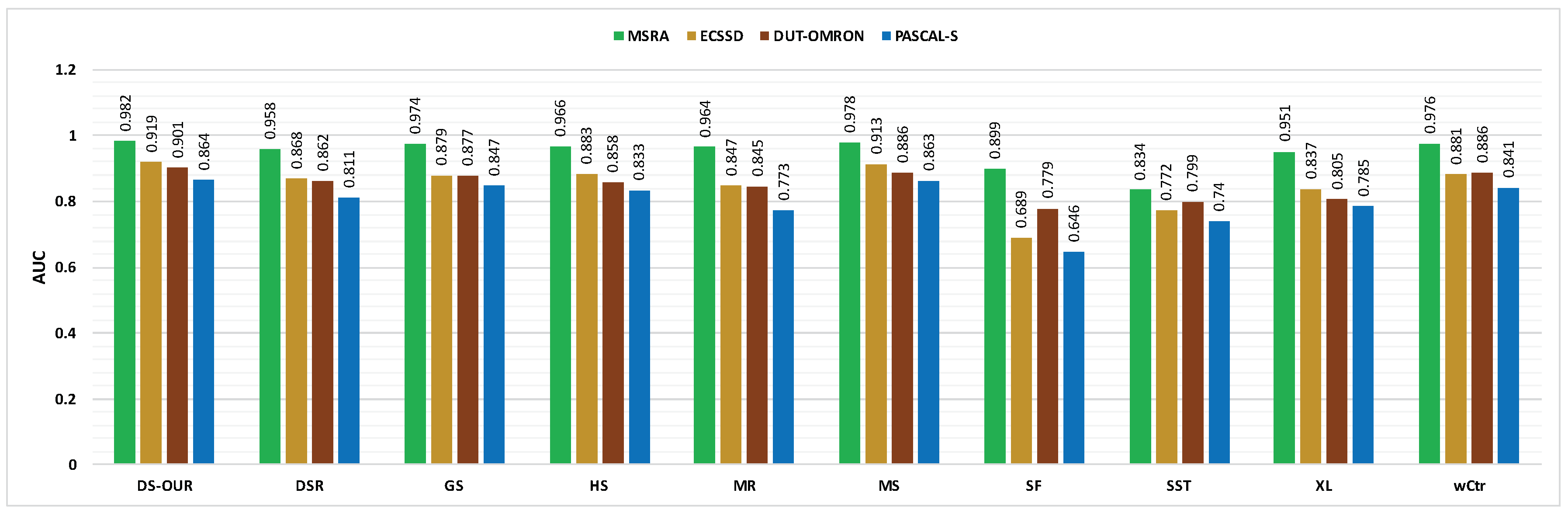
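Precision-recall curves for salient region detection are usually produced by binarizing a saliency map at every threshold in a fixed range and comparing each binary mask with the ground truth. Precision is the fraction of predicted-salient pixels that are truly salient, and recall is the fraction of ground-truth salient pixels that are recovered. Below is a minimal pure-Python sketch for a single image. It assumes flattened maps with values in [0, 1] and evenly spaced thresholds (256 by default, i.e. the usual 0 to 255 sweep rescaled). The function name and the convention of reporting precision 1 when no pixel is predicted salient are also assumptions.

```python
def pr_curve(saliency, ground_truth, num_thresholds=256):
    """Precision and recall of one saliency map over a threshold sweep.

    saliency: flattened values in [0, 1]; ground_truth: flattened 0/1 labels.
    A pixel is predicted salient when its value is >= t, for
    t = 0, 1/(n-1), ..., 1 with n = num_thresholds.
    """
    positives = sum(1 for g in ground_truth if g)
    precisions, recalls = [], []
    for i in range(num_thresholds):
        t = i / (num_thresholds - 1)
        tp = fp = 0
        for s, g in zip(saliency, ground_truth):
            if s >= t:
                if g:
                    tp += 1
                else:
                    fp += 1
        predicted = tp + fp
        # Assumed convention: precision is 1 when nothing is predicted salient.
        precisions.append(tp / predicted if predicted else 1.0)
        recalls.append(tp / positives if positives else 0.0)
    return precisions, recalls
```

A dataset-level curve is then typically obtained by averaging the per-image precision and recall values at each threshold.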

4.2.2. ROC–AUC Curves
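An ROC curve plots the true-positive rate TPR = TP / P against the false-positive rate FPR = FP / N as the binarization threshold varies. AUC is the area under that curve: 1.0 for perfect separation of salient and background pixels, and 0.5 for chance. The sketch below handles one image. It assumes flattened maps in [0, 1], an evenly spaced threshold sweep and trapezoidal integration. The function name and sweep resolution are illustrative choices rather than the paper's settings.

```python
def roc_auc(saliency, ground_truth, num_thresholds=256):
    """Sweep thresholds, collect (FPR, TPR) points, integrate with trapezoids."""
    pos = sum(1 for g in ground_truth if g)
    neg = len(ground_truth) - pos
    if pos == 0 or neg == 0:
        raise ValueError("ground truth needs both salient and background pixels")
    points = [(0.0, 0.0), (1.0, 1.0)]  # fixed end points of every ROC curve
    for i in range(num_thresholds):
        t = i / (num_thresholds - 1)
        tp = sum(1 for s, g in zip(saliency, ground_truth) if s >= t and g)
        fp = sum(1 for s, g in zip(saliency, ground_truth) if s >= t and not g)
        points.append((fp / neg, tp / pos))
    # Both rates fall monotonically as t rises, so sorting orders the curve.
    points.sort()
    auc = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        auc += (x1 - x0) * (y0 + y1) / 2.0  # trapezoid between neighbours
    return points, auc
```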

4.2.3. F-Measure
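The F-measure summarizes precision P and recall R in a single score, F_β = (1 + β²)·P·R / (β²·P + R). The salient-region detection literature commonly sets β² = 0.3 to weight precision more heavily. It also commonly binarizes each map at an adaptive threshold equal to twice its mean saliency. The sketch below follows that common convention; whether this paper uses exactly these settings is an assumption, as are the function and parameter names.

```python
def f_measure(saliency, ground_truth, beta_sq=0.3, threshold=None):
    """F-beta score of a saliency map binarized at `threshold`.

    saliency: flattened values in [0, 1]; ground_truth: flattened 0/1 labels.
    If no threshold is given, use the adaptive threshold 2 * mean(saliency),
    capped at 1 (an assumed, commonly used convention).
    """
    if threshold is None:
        threshold = min(2.0 * sum(saliency) / len(saliency), 1.0)
    predicted = sum(1 for s in saliency if s >= threshold)
    tp = sum(1 for s, g in zip(saliency, ground_truth) if s >= threshold and g)
    positives = sum(1 for g in ground_truth if g)
    precision = tp / predicted if predicted else 0.0
    recall = tp / positives if positives else 0.0
    if precision + recall == 0:
        return 0.0
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)
```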

4.2.4. MAE Evaluation

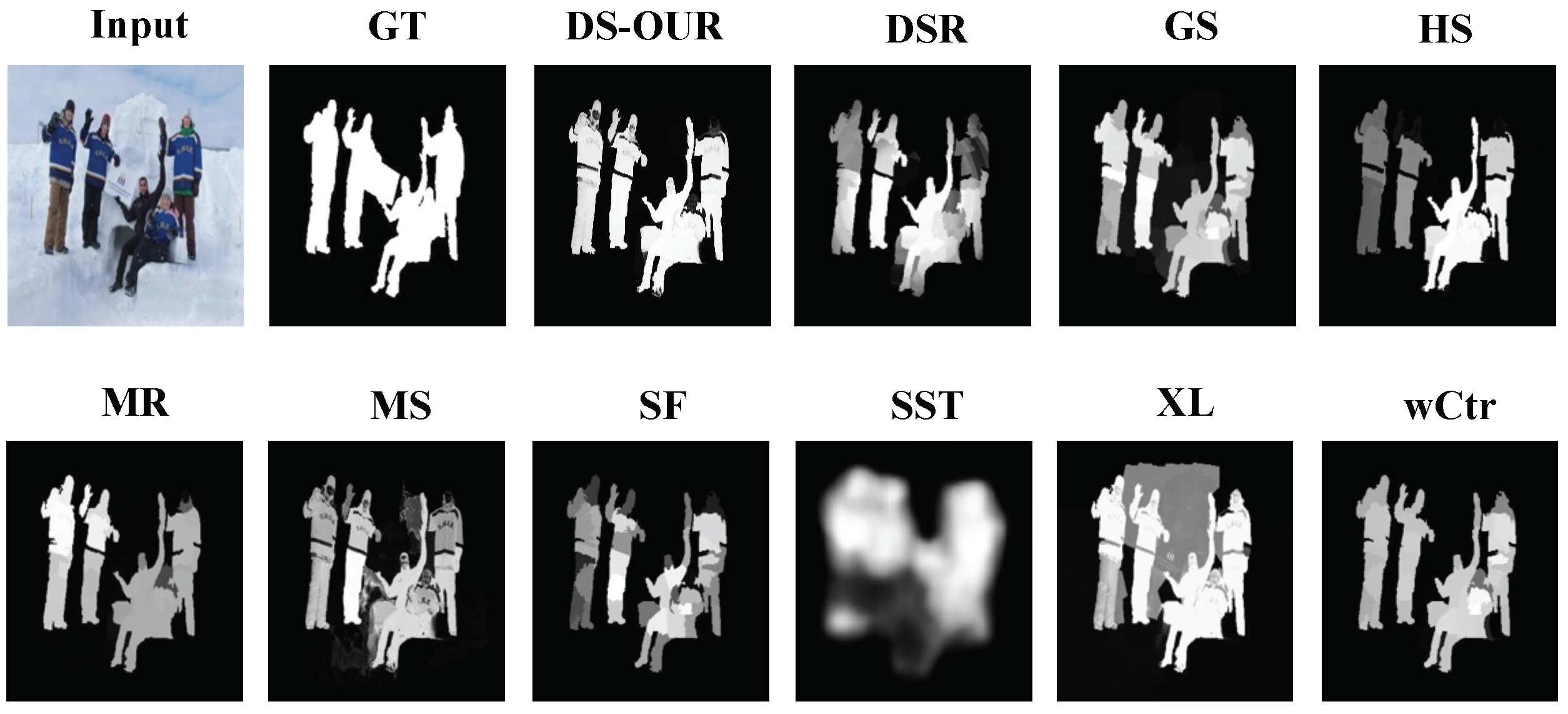
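Mean absolute error measures how far a continuous saliency map S lies from the binary ground truth G, averaged over all W × H pixels: MAE = (1 / (W·H)) Σ |S(x, y) − G(x, y)|. Lower is better. Both maps are normally scaled to [0, 1] first. The minimal sketch below uses min-max normalization for that step. The helper names are hypothetical, and the choice of min-max scaling is an assumption.

```python
def min_max_normalize(values):
    """Rescale flattened saliency values linearly to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # a flat map carries no saliency contrast
    return [(v - lo) / (hi - lo) for v in values]


def mean_absolute_error(saliency, ground_truth):
    """MAE between a saliency map and a binary ground truth (flattened, in [0, 1])."""
    if len(saliency) != len(ground_truth):
        raise ValueError("saliency map and ground truth must have the same size")
    return sum(abs(s - g) for s, g in zip(saliency, ground_truth)) / len(saliency)
```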

4.3. Performance Comparison

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Methods | MSRA | ECSSD | DUT-OMRON | PASCAL-S |
|---|---|---|---|---|
| DS-OUR | 0.982/0.061/0.915 | 0.919/0.160/0.727 | 0.901/0.127/0.572 | 0.864/0.195/0.647 |
| DSR | 0.958/0.096/0.845 | 0.868/0.176/0.676 | 0.862/0.137/0.518 | 0.811/0.205/0.602 |
| GS | 0.974/0.107/0.828 | 0.879/0.206/0.609 | 0.877/0.174/0.466 | 0.847/0.221/0.596 |
| HS | 0.966/0.111/0.866 | 0.883/0.228/0.634 | 0.858/0.227/0.519 | 0.833/0.263/0.549 |
| MR | 0.964/0.075/0.895 | 0.847/0.186/0.660 | 0.845/0.187/0.528 | 0.773/0.229/0.567 |
| MS | 0.978/0.105/0.830 | 0.913/0.204/0.671 | 0.886/0.210/0.491 | 0.863/0.224/0.601 |
| SF | 0.899/0.129/0.808 | 0.689/0.219/0.493 | 0.779/0.147/0.435 | 0.646/0.236/0.448 |
| SST | 0.834/0.223/0.502 | 0.772/0.313/0.374 | 0.799/0.254/0.320 | 0.740/0.302/0.411 |
| XL | 0.951/0.195/0.769 | 0.837/0.307/0.502 | 0.805/0.332/0.395 | 0.785/0.310/0.465 |
| wCtr | 0.976/0.066/0.884 | 0.881/0.172/0.677 | 0.886/0.144/0.528 | 0.841/0.199/0.629 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ayoub, N.; Gao, Z.; Chen, B.; Jian, M. A Synthetic Fusion Rule for Salient Region Detection under the Framework of DS-Evidence Theory. Symmetry 2018, 10, 183. https://doi.org/10.3390/sym10060183
