1. Introduction

Data classification is one of the most productive fields of study within the scope of data mining and machine learning. Classification can be applied to scientific and industrial data, handwritten text and multimedia content, biomedical data and social network data. Such a broad scope is due to the fact that the aim of classification is to identify the interrelation among the set of pre-defined input variables (features) and the desired output variable (class label). Some of the most common data classification methods are decision trees, rule-based methods, probabilistic methods, support vector machines and neural networks [1].

Fuzzy classifiers, which are rule-based classifiers, offer a significant advantage both in terms of their functionality and in terms of subsequent analysis and design. A unique advantage of fuzzy classifiers is associated with the interpretability of classification rules. The key measure of efficiency is classification accuracy that is frequently used in comparative analysis of fuzzy classifiers versus classifiers based on other principles [2,3].

Design of any classifier is based on the assumption that the class labels for each instance in the training dataset are known. Class labels in a test dataset are predicted using a classifier designed with a training set. Classification accuracy is the ratio of correctly classified instances to the total number of instances in the test data. However, a large number of features in a dataset increases calculation time and decreases prediction accuracy. Feature selection makes it possible to reduce the dimension of the input feature space by identifying and eliminating noisy and irrelevant features [4].

The process of fuzzy classifier design includes the following principal stages: feature selection, structure formation (rule base) and optimization of fuzzy rule parameters. Feature selection methods are conventionally grouped into two categories: filters and wrappers [5], the difference between the two being whether or not a classifier is designed during feature selection. The structure of the classifier is most often formed with the use of clustering methods designed to identify the data structure and build information granules that may be related to linguistic terms [2]. Parameters of fuzzy rules can be optimized using conventional approaches based on calculation of derivatives or with the help of metaheuristic methods [6].

The No Free Lunch Theorem [7,8] tells us that there are no context- or problem-independent reasons to favour one learning or classification method over another. The performance of all metaheuristics is by and large problem-dependent. The superiority of a classification method depends on dataset properties. If a classifier generalizes better to a certain dataset, this is a result of its better match for that specific problem rather than its supremacy over other classifiers [9].

A swarm optimization algorithm inspired by physics was introduced in [10]. The algorithm was called the Gravitational Search Algorithm (GSA). Its agents represent particles of different masses that obey the Newtonian law of gravity. GSA was compared with some known metaheuristic search methods.

To solve different kinds of optimization problems, modified versions of GSA have been introduced, including continuous, binary-valued, discrete, multimodal and multi-objective versions. The efficiency of GSA has been improved through enhanced operators, hybridization with other metaheuristic algorithms, and adaptive and intelligent techniques [11]. An adaptive GSA that switches between synchronous and asynchronous updates is presented in Reference [12]; integrating these two iterative strategies changes the behaviour of the particles. In Reference [13] the authors propose a fuzzy gravitational search algorithm for the design of optimal 8th order IIR filters. The proposed algorithm combines fuzzy techniques with gravitational search: two Mamdani inference systems tune the parameters of GSA to find a good trade-off between exploration and exploitation in the search process. In Reference [14], an approach combining a neural network and a fuzzy system is proposed for tuning GSA parameters, again to balance exploration and exploitation. In Reference [15] the authors propose to tune a suitable parameter of GSA through a fuzzy controller whose membership functions are optimized by Genetic Algorithms, Particle Swarm Optimization and Differential Evolution.

The results obtained confirmed the high performance of the proposed method in solving various nonlinear functions. It has been demonstrated that the Gravitational Search Algorithm is able to find the optimum solution for many benchmarks [10,12,16,17,18,19]. For this reason, this algorithm was chosen to solve the problem of designing a fuzzy-rule-based classifier.

This paper aims to develop a fuzzy-rule-based classifier using the Gravitational Search Algorithm.

The main contributions of this work are the following:

A new technique for generating a fuzzy-rule-based classifier.

A method that selects a compact and efficient subset of features.

A new method of tuning fuzzy-rule-based classifier parameters.

A statistical comparison among the results achieved by the fuzzy-rule-based classifiers generated by our technique and by two state-of-the-art learning algorithms.

3. Materials and Methods

A fuzzy classifier is designed in three stages: feature selection, generation of a fuzzy-rule base and optimization of the antecedent parameters of rules. Features are selected with the Binary Gravitational Search Algorithm. The classifier structure is formed by the rule base generation algorithm, using extreme feature values. In the proposed learning method, the related parameters of the proposed classifier are tuned by using the continuous GSA. The performance of the classifier is tested on real-world KEEL datasets. At the final stage, classifiers designed with the proposed method are compared to similar classifiers using the Mann-Whitney-Wilcoxon test as the criterion.

3.1. Fuzzy Classifier

Classification consists in finding such a class label in a set of class labels that would correspond to the vector of the object’s feature values [38]. In universe $U = (A, C)$, where $A = \{x_1, x_2, \dots, x_n\}$ is a set of input features and $C = \{c_1, c_2, \dots, c_m\}$ is a set of class labels, the object is characterized by its vector of feature values. Let $\mathbf{x} = x_1 \times x_2 \times \dots \times x_n \in \Re^n$ be an $n$-dimensional feature space.

A fuzzy classifier can be represented as a function that assigns a class label to a point $\mathbf{x}$ in the input feature space with a calculable degree of confidence:

The fuzzy classifier is based on a production rule base that appears as follows:

where $j$ is the rule index; $R$ is the number of rules; $A_{kj}$ is a fuzzy term that characterizes the $k$-th feature in the $j$-th rule ($k = 1, \dots, n$); $c_j$ is the consequent class; $S = (s_1, s_2, \dots, s_n)$ is the binary vector of features: the element $s_k$ indicates presence ($s_k = 1$) or absence ($s_k = 0$) of a feature in the classifier.

The class label is defined in the observation table $\{(\mathbf{x}_p; c_p)\}$ as follows:

where $\mu_{A_{jk}}(x_{pk})$ is the membership function value of fuzzy term $A_{jk}$ at point $x_{pk}$.
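The inference step described above can be illustrated with a minimal Python sketch. It assumes symmetric Gaussian membership functions, a product of membership values as the rule firing strength, and a winner-takes-all choice of the class; these are common choices for classifiers of this kind and are assumptions of the sketch, not a reproduction of the paper's equations. The names `gaussian`, `classify` and the toy rule base are hypothetical.

```python
import math

def gaussian(x, b, c):
    """Symmetric Gaussian membership: peak at b, spread c (assumed term shape)."""
    return math.exp(-((x - b) ** 2) / (2.0 * c ** 2))

def classify(x, rules, s):
    """Return (label, degree) of the rule with the largest firing strength.

    x     -- list of feature values
    rules -- list of (terms, label); terms[k] = (b, c) for feature k
    s     -- binary feature mask; features with s[k] == 0 are ignored
    """
    best_label, best_degree = None, -1.0
    for terms, label in rules:
        degree = 1.0
        for k, (b, c) in enumerate(terms):
            if s[k]:  # only selected features contribute to the rule
                degree *= gaussian(x[k], b, c)
        if degree > best_degree:
            best_label, best_degree = label, degree
    return best_label, best_degree

# Hypothetical rule base: one rule per class, two features.
rules = [([(0.0, 1.0), (0.0, 1.0)], "low"),
         ([(5.0, 1.0), (5.0, 1.0)], "high")]
print(classify([4.5, 0.2], rules, s=[1, 0]))  # only the first feature is used
print(classify([4.5, 0.2], rules, s=[1, 1]))  # both features are used
```

In this toy case the mask changes the decision: with only the first feature the point is closest to the "high" rule, whereas with both features the "low" rule fires more strongly.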

3.2. Performance Measures

The classification accuracy measure is defined as the ratio of correctly determined class labels to the number of objects:

where $f(\mathbf{x}_p; \theta, S)$ is the fuzzy classifier output with parameters of fuzzy terms $\theta$ and features $S$ at point $\mathbf{x}_p$.
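The accuracy measure described in words above, the share of objects whose predicted label matches the true label, can be sketched as follows. The `predict` callable stands in for the classifier output $f(\mathbf{x}_p; \theta, S)$; it and the sample data are hypothetical.

```python
def accuracy(predict, samples):
    """Fraction of (x, label) samples for which predict(x) equals label."""
    hits = sum(1 for x, label in samples if predict(x) == label)
    return hits / len(samples)

# Hypothetical one-feature threshold classifier and made-up observations.
def predict(x):
    return "pos" if x[0] >= 0.5 else "neg"

samples = [([0.1], "neg"), ([0.4], "pos"), ([0.7], "pos"), ([0.9], "pos")]
print(accuracy(predict, samples))  # 0.75: three of four labels match
```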

The problem of fuzzy classifier design reduces to finding the maximum of the function in the space of $S$ and $\theta = (\theta_1, \theta_2, \dots, \theta_D)$:

where $\theta_i^{\min}$, $\theta_i^{\max}$ are the lower and upper boundaries of the domain of each parameter, respectively. This problem is NP-hard; in this paper, we propose to solve it by splitting it into two tasks: feature selection and tuning fuzzy term parameters.

3.3. Binary Gravitational Search Algorithm

The feature selection problem consists in searching for such a subset of the predetermined set of features x that would not cause a decrease in classification accuracy as the number of features is reduced; the solution is represented as a binary vector $S = (s_1, s_2, \dots, s_n)^T$, where $s_i = 0$ means that the $i$-th feature does not participate in classification and $s_i = 1$ means that the $i$-th feature is used by the classifier. This problem can be solved with the Binary Gravitational Search Algorithm.

The idea of gravitational search is that the input vector population is presented as a system of elementary particles with gravity forces acting between them [10]. The higher the accuracy of a vector-based classifier, the higher the mass of a particle corresponding to that vector and the stronger it attracts other particles. But since the particle is affected by gravity forces as well, it will be moving while searching in its local domain.

The binary version of the algorithm is used to find the binary vector of features Sbest that makes it possible to achieve the highest level of classification accuracy.

The input data for gravitational search is the following: vector of system parameters θ, number of vectors P, maximum number of iterations T, initial value of gravitational constant G0, coefficient α and small constant ε. The initial population S = {S1, S2, …, SP} is randomly generated. Before the start, a classifier is built based on each vector and the fitness function is evaluated:

The mass, acceleration, velocity and movement of particles are computed at each iteration of the algorithm. The mass of the $i$-th particle is calculated with due regard to classification accuracy:

where $m$ is the mass of the particle, $t$ is the iteration number, best($t$) and worst($t$) are the fitness values of the most and the least accurate vectors at the current iteration, respectively.
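As an illustration of how masses can be derived from fitness, the sketch below assumes the normalization commonly used in GSA: each fitness value is shifted by worst(t), scaled by best(t) − worst(t), and the result is divided by the population sum. This is an assumption based on the standard algorithm, not a reproduction of the paper's expression; the function name and the sample accuracies are hypothetical.

```python
def normalized_masses(fitness):
    """Map fitness values (higher is better) to masses that sum to one."""
    best, worst = max(fitness), min(fitness)
    if best == worst:
        # All particles are equally fit: give them equal masses.
        return [1.0 / len(fitness)] * len(fitness)
    raw = [(f - worst) / (best - worst) for f in fitness]
    total = sum(raw)
    return [m / total for m in raw]

# Made-up accuracies of three candidate vectors.
print(normalized_masses([0.70, 0.80, 0.90]))
```

Under this scheme the least accurate vector receives zero mass and therefore exerts no pull on the others, while the most accurate vector is the heaviest.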

According to Newton’s second law, the total force acting on a particle imparts acceleration to it:

where $d$ is the ordinal number of the vector element; rand(0; 1) is a random number within the interval [0; 1]; $M_j(t)$ is the normalized mass value of the $j$-th particle; $G(t)$ is the value of the gravitational constant. The denominator uses the distance and not the distance squared, which, as the authors of the algorithm [10] believe, makes it possible to achieve better results.
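The acceleration step can be sketched in Python under the assumptions of the standard GSA: an exponentially decaying gravitational constant $G(t) = G_0 e^{-\alpha t / T}$ and a force that is divided by the distance (not its square) plus the small constant ε. Both formulas, the Euclidean distance, and the function names are assumptions of this sketch rather than the paper's exact equations.

```python
import math
import random

def gravitational_constant(t, T, g0, alpha):
    """Assumed decay law: G(t) = G0 * exp(-alpha * t / T)."""
    return g0 * math.exp(-alpha * t / T)

def accelerations(positions, masses, g, eps, rng=random):
    """Acceleration of every particle from the pull of all other particles."""
    count, dim = len(positions), len(positions[0])
    acc = [[0.0] * dim for _ in range(count)]
    for i in range(count):
        for j in range(count):
            if i == j:
                continue
            # Euclidean distance between particles i and j (assumed metric).
            r = math.sqrt(sum((a - b) ** 2
                              for a, b in zip(positions[i], positions[j])))
            # Distance, not distance squared, appears in the denominator.
            w = rng.random() * g * masses[j] / (r + eps)
            for d in range(dim):
                acc[i][d] += w * (positions[j][d] - positions[i][d])
    return acc

g = gravitational_constant(t=0, T=100, g0=10.0, alpha=10.0)
print(accelerations([[0.0, 0.0], [3.0, 4.0]], [0.0, 1.0], g, eps=0.01))
```

In the usage line the first particle has zero mass, so only it is accelerated, towards the heavier second particle.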

The particle velocity is determined as follows:

Then each particle is updated with the help of the transfer function; a detailed description of the functions is given in Section 3.4 of this paper. An iteration of the algorithm is deemed to have ended after the vectors are updated and the classification accuracy of the population is calculated. When the iteration counter reaches value T, the algorithm stops and returns the vector with the highest accuracy value, Sbest.

3.4. The Transfer Functions

In the Binary Gravitational Search Algorithm, the velocity gained by the vector element shows how much the element needs to change to reach the best solution available in the population. If the velocity is high, it can be assumed that the element is far removed from the best solution element and the mass of the particle is rather low. Therefore, the element must be replaced with an inverse element or excluded from the vector by assigning a zero to it. Thus, the vector is updated with a certain probability that is calculated based on velocity [42] with the help of the transfer function, which maps a continuous search space to a discrete search space [43]. The study used four such functions.

The first function S1 belongs to the class of S-shaped asymmetric functions and represents the probability of 0:

The second function S2 makes use of an additional coefficient:

where

The third function V1 belongs to the class of V-shaped symmetric functions:

The last function used, V2, is also a V-shaped function that represents the probability that the vector element value will change to the opposite:

where ⨁ means the exclusive OR (XOR) operator.

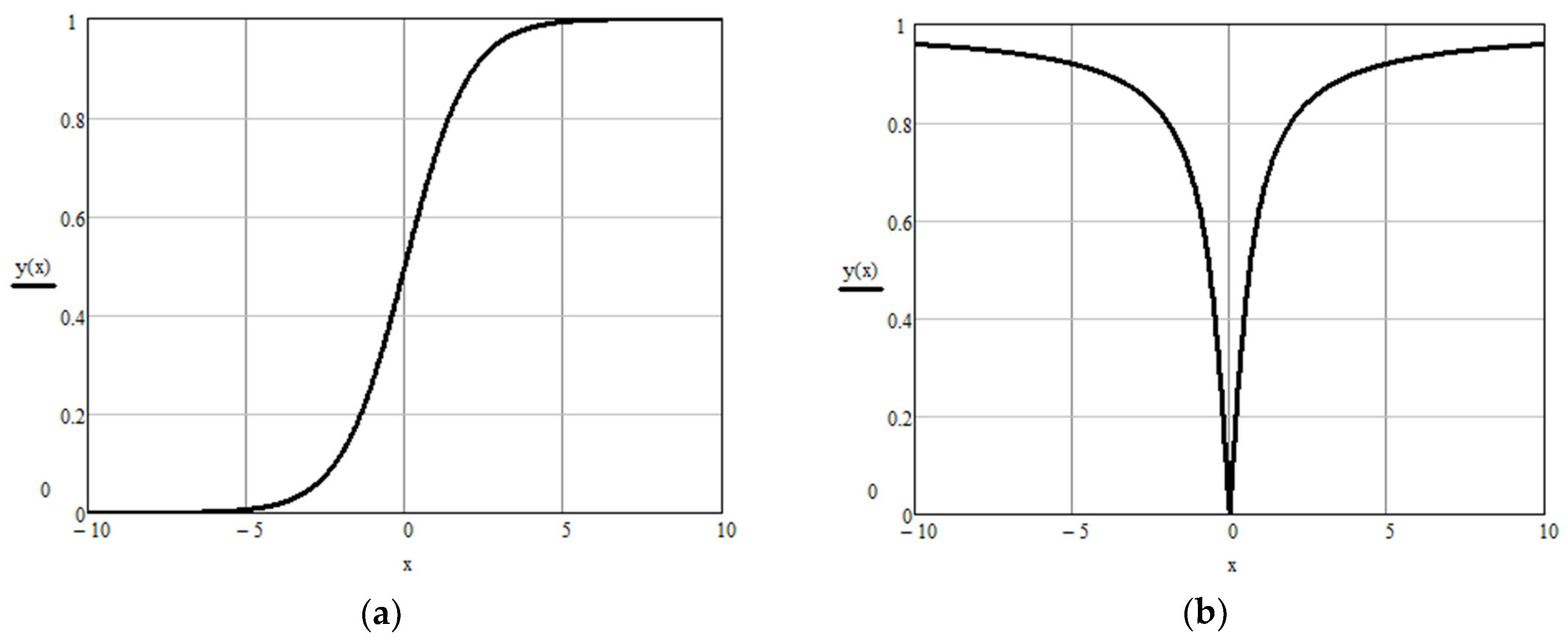

Figure 1 shows typical graphs produced by the functions used, where the S-shaped function is defined as follows:

V-shaped function:

Velocity that is used to calculate the value of the transfer function is a numerical value. One disadvantage of S-shaped transfer functions for the Binary Gravitational Algorithm is that the particle elements that have gained a high negative velocity will with a high probability remain in the vector. V-shaped functions are symmetrical with respect to the axis of ordinates and therefore are free of that disadvantage.
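The difference between the two families can be illustrated with a short Python sketch. It uses the logistic sigmoid as a typical S-shaped function and $|\tanh(v)|$ as a typical V-shaped function; these are common textbook choices and are assumptions here, not the four functions used in the study. Following the text, the S-shaped value is treated as the probability of assigning 0, and the V-shaped value as the probability of flipping the element.

```python
import math
import random

def s_shaped(v):
    """Logistic sigmoid, written to avoid overflow for large |v|."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def v_shaped(v):
    """|tanh(v)|: symmetric about the ordinate axis."""
    return abs(math.tanh(v))

def update_bit_s(v, rng=random):
    """S-shaped rule: the element becomes 0 with probability s_shaped(v)."""
    return 0 if rng.random() < s_shaped(v) else 1

def update_bit_v(bit, v, rng=random):
    """V-shaped rule: the element is flipped with probability v_shaped(v)."""
    return 1 - bit if rng.random() < v_shaped(v) else bit

for v in (-4.0, 0.0, 4.0):
    print(v, round(s_shaped(v), 3), round(v_shaped(v), 3))
```

With the S-shaped rule a large negative velocity gives a probability of 0 close to zero, so the element almost surely stays in the vector, whereas the V-shaped rule treats velocities of equal magnitude identically regardless of sign.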

A pseudo code of the Binary Gravitational Search Algorithm is shown in Algorithm 1.

| Algorithm 1. Binary Gravitational Search Algorithm. |

| Input: θ, P, T, G0, α, ε. |

| Output: Sbest. |

| begin |

| Initialize the population S = {S1, S2, …, SP}; |

| while (t < T) |

| evaluate the fitness of each particle by Equation (5) for i = 1, 2, ..., P; |

| find best(t) and worst(t); |

| update G(t) by Equation (9); |

| calculate the velocity of each particle at iteration t by Equation (10) for i = 1, 2, ..., P; |

| update the position of particles with one of the Equations (11)–(14); |

| end while |

| output the particle with the best fitness value Sbest; |

| end |
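The loop of Algorithm 1 can be sketched end to end in Python. The sketch makes several assumptions that are not taken from the paper: the standard GSA mass normalization and exponential decay of G(t), the Hamming distance between binary vectors, a V-shaped $|\tanh(v)|$ flip rule, and tracking of the best vector seen so far. The default values of P, G0, α and ε follow the settings reported in Section 3.8; the fitness function in the usage example is a made-up stand-in for classifier accuracy.

```python
import math
import random

def bgsa(fitness, n_bits, P=10, T=100, g0=10.0, alpha=10.0, eps=0.01, seed=0):
    """Return (best vector, best fitness) found by a binary GSA sketch."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(P)]
    vel = [[0.0] * n_bits for _ in range(P)]
    best_s, best_fit = None, -math.inf
    for t in range(T + 1):
        fit = [fitness(s) for s in pop]
        for s, f in zip(pop, fit):
            if f > best_fit:  # remember the best vector seen so far
                best_s, best_fit = list(s), f
        if t == T:
            break
        hi, lo = max(fit), min(fit)
        raw = [(f - lo) / (hi - lo) if hi > lo else 1.0 for f in fit]
        total = sum(raw)
        mass = [m / total for m in raw]
        g = g0 * math.exp(-alpha * t / T)  # assumed decay of G(t)
        for i in range(P):
            acc = [0.0] * n_bits
            for j in range(P):
                if i == j:
                    continue
                # Hamming distance between binary vectors (assumption).
                r = sum(a != b for a, b in zip(pop[i], pop[j]))
                w = rng.random() * g * mass[j] / (r + eps)
                for d in range(n_bits):
                    acc[d] += w * (pop[j][d] - pop[i][d])
            for d in range(n_bits):
                vel[i][d] = rng.random() * vel[i][d] + acc[d]
        # Positions change only after all velocities have been computed.
        for i in range(P):
            for d in range(n_bits):
                if rng.random() < abs(math.tanh(vel[i][d])):
                    pop[i][d] = 1 - pop[i][d]
    return best_s, best_fit

# Toy fitness: share of bits that agree with a hypothetical target mask.
target = [1, 0, 1, 1, 0, 0, 1, 0]
def toy_fitness(s):
    return sum(a == b for a, b in zip(s, target)) / len(target)

print(bgsa(toy_fitness, n_bits=len(target), T=30))
```

In the wrapper setting described in the paper, `toy_fitness` would be replaced by a function that builds a fuzzy classifier on the features selected by the vector and returns its accuracy.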

3.5. Algorithm for Generating Rule Base by Extreme Feature Values

The algorithm is designed to form an initial base of rules of a fuzzy classifier containing one rule for each class. The rules are formed based on extreme values of the training sample $Tr = \{(\mathbf{x}_p; c_p),\ p = 1, \dots, |Tr|\}$. Let us introduce the following notation: m is the number of classes, n is the number of features, Ω* is the classifier rule base. A pseudo code of the generating algorithm is shown in Algorithm 2.

| Algorithm 2. Algorithm for generating rule base by extreme feature values. |

| Input: m, n, Tr. |

| Output: classifier rule base Ω*. |

| begin |

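| Ω* := ∅; |

| do loop j from 1 till m |

| do loop k from 1 till n |

| $min_{jk}$ := minimum of $x_{pk}$ over the training instances of class $c_j$; |

| $max_{jk}$ := maximum of $x_{pk}$ over the training instances of class $c_j$; |

| generation of fuzzy term $A_{jk}$ covering the interval [$min_{jk}$, $max_{jk}$]; |

| end of loop |

| creation of rule R1j on the basis of terms Ajk that refers observation to the class $c_j$; |

| Ω* := Ω* ∪ {R1j} |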

| end of loop |

| output Ω*. |

| end |
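A minimal Python sketch of rule base generation from class-wise extremes follows. For every class and feature it records the minimum and maximum observed in the training sample and turns the interval into one Gaussian term. The way the interval is mapped to term parameters (peak at the midpoint, spread equal to a quarter of the range, with a small floor) is an assumption made for illustration; the function name and data are hypothetical.

```python
def generate_rule_base(train, n_features):
    """Build one rule per class from per-class feature extremes.

    train -- list of (x, label) pairs
    Returns {label: [(b, c), ...]} with one Gaussian term per feature.
    """
    extremes = {}
    for x, label in train:
        bounds = extremes.setdefault(label, [[v, v] for v in x[:n_features]])
        for k in range(n_features):
            bounds[k][0] = min(bounds[k][0], x[k])
            bounds[k][1] = max(bounds[k][1], x[k])
    rules = {}
    for label, bounds in extremes.items():
        # Assumed mapping: peak at mid-range, spread = range / 4 (floored).
        rules[label] = [((lo + hi) / 2.0, max((hi - lo) / 4.0, 1e-6))
                        for lo, hi in bounds]
    return rules

# Made-up training sample with two features and two classes.
train = [([1.0, 10.0], "a"), ([3.0, 14.0], "a"), ([8.0, 2.0], "b")]
print(generate_rule_base(train, n_features=2))
```

The resulting rule base is compact by construction: the number of rules equals the number of classes, which is the property the paper relies on when comparing rule counts.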

3.6. Continuous Gravitational Search Algorithm

Fuzzy term parameters obtained during the classifier structure generation will not always ensure that the classification is efficient. In order to improve its accuracy, the parameters must be adjusted. This can be achieved by optimizing the vector of fuzzy terms parameters θ using continuous gravitational search.

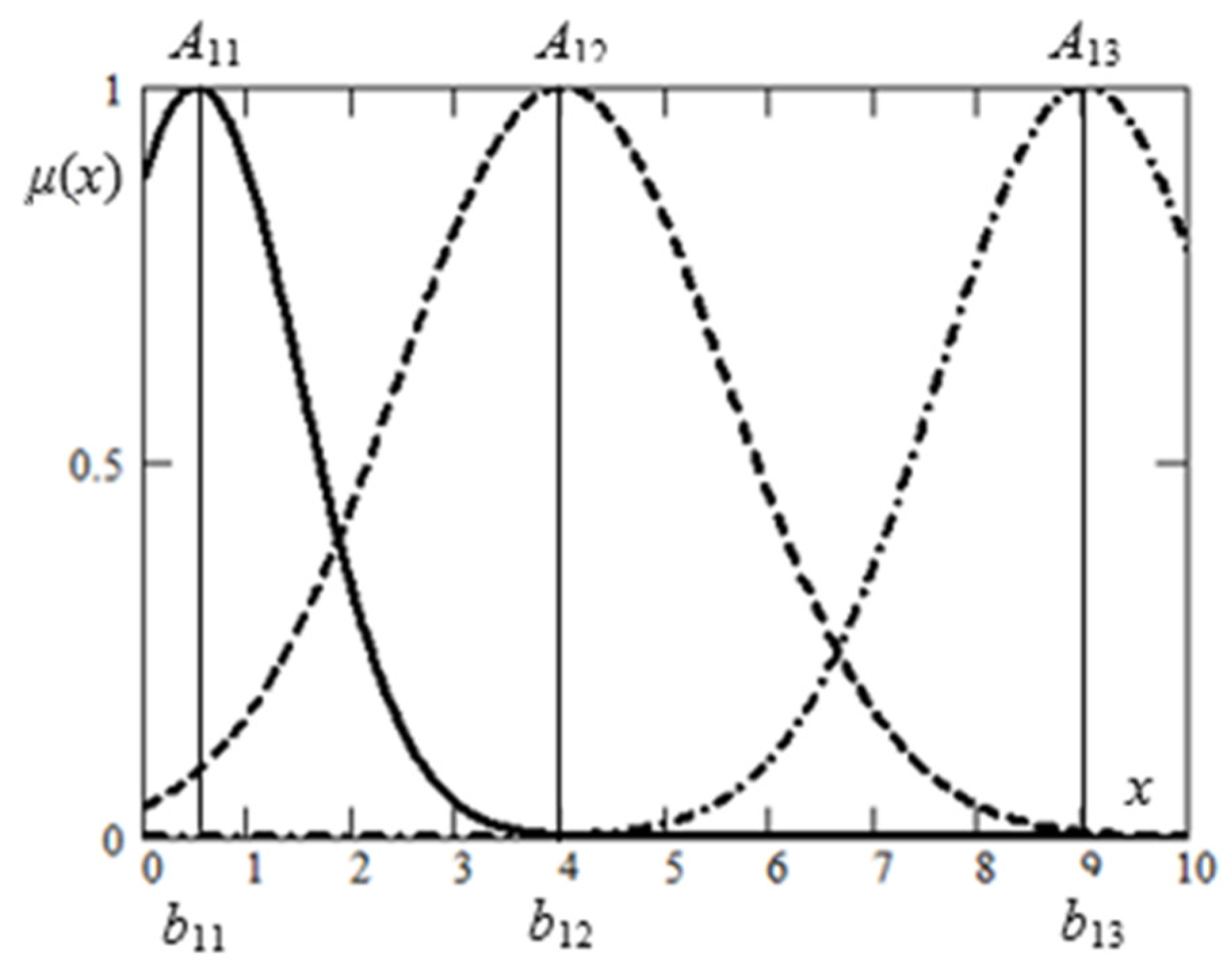

Figure 2 shows an example demonstrating the formation of vector θ. Feature a here is represented by three symmetric Gaussian terms, each of them determined by two parameters (b is the coordinate of the peak on the abscissa, c is the scatter) included in vector θ = ($b_{11}$, $c_{11}$, $b_{12}$, $c_{12}$, $b_{13}$, $c_{13}$, $b_{21}$, $c_{21}$, …). The use of symmetric membership functions is preferable because of their better interpretability.

Dimensions of the vector θ are determined by the number of input features used in classification and by the number and type of terms describing each feature. For some datasets, asymmetrical types of terms, such as triangular membership functions, can be a better choice.
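The packing of term parameters into a single vector can be sketched as follows, assuming that every term is described by a (b, c) pair and that the pairs are concatenated feature by feature. The helper names are hypothetical.

```python
def flatten_terms(features):
    """Concatenate (b, c) pairs of all terms of all features into one list."""
    return [p for terms in features for (b, c) in terms for p in (b, c)]

def unflatten_terms(theta, terms_per_feature):
    """Inverse of flatten_terms; terms_per_feature[k] = number of terms."""
    features, i = [], 0
    for count in terms_per_feature:
        features.append([(theta[i + 2 * t], theta[i + 2 * t + 1])
                         for t in range(count)])
        i += 2 * count
    return features

# Made-up example: three terms for the first feature, one for the second.
features = [[(0.0, 1.0), (5.0, 1.5), (10.0, 2.0)], [(3.0, 0.5)]]
theta = flatten_terms(features)
print(theta, len(theta))
```

The length of the vector is twice the total number of terms, which matches the statement that its dimension depends on the number of features and on the number and type of terms per feature.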

Population Θ = {θ1, θ2, …, θP} for the Continuous Gravitational Search Algorithm is created by copying the input vector θ1, generated by the classifier structure generation algorithm, and perturbing the copies with normally distributed deviations. The input data for the algorithm is: vector of features S, number of term parameter vectors P, maximum number of iterations T, initial value of gravitational constant G0, coefficient α and small constant ε. Before the start, a classifier is built based on each vector and classification accuracy is evaluated:

The mass, acceleration, velocity and movement of particles are computed at each iteration in the same way as in the binary algorithm. According to Newton’s second law, the total force acting on a particle imparts acceleration to it:

where $d$ is the ordinal number of the vector element; rand(0; 1) is a random number within the interval [0; 1]; $M_j(t)$ is the normalized value of the mass of the $j$-th particle; $G(t)$ is the value of the gravitational constant.

Vector elements are updated as follows:

where

. After the entire population is updated, classification accuracy is recalculated and the iteration ends.

The algorithm ends when the maximum number of iterations is reached (t = T) or when all vectors are equal. The output of the algorithm is the vector of system parameters θbest that yields the highest classification accuracy.
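Two steps of the continuous algorithm, creating the population around the initial vector and moving a particle, can be sketched in Python. The sketch assumes the standard GSA update (new velocity = random fraction of the old velocity plus acceleration; new position = old position plus new velocity) and clips each parameter to its admissible range; the clipping, the noise scale `sigma` and the function names are assumptions of the sketch.

```python
import random

def init_population(theta1, size, sigma, rng=random):
    """Keep theta1 as the first particle; the rest are noisy copies of it."""
    population = [list(theta1)]
    for _ in range(size - 1):
        population.append([p + rng.gauss(0.0, sigma) for p in theta1])
    return population

def move(theta, velocity, acceleration, lower, upper, rng=random):
    """One particle update; returns (new position, new velocity)."""
    new_v = [rng.random() * v + a for v, a in zip(velocity, acceleration)]
    # Assumed handling of the parameter bounds: clip to [lower, upper].
    new_theta = [min(max(p + v, lo), hi)
                 for p, v, lo, hi in zip(theta, new_v, lower, upper)]
    return new_theta, new_v

rng = random.Random(0)
population = init_population([1.0, 2.0], size=5, sigma=0.1, rng=rng)
print(move(population[0], [0.0, 0.0], [0.3, -0.2], [0.0, 0.0], [5.0, 5.0], rng))
```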

A pseudo code of the Continuous Gravitational Search Algorithm is shown in Algorithm 3.

| Algorithm 3. Continuous Gravitational Search Algorithm. |

| Input: S, P, T, G0, α, ε. |

| Output: θbest. |

| begin |

| Initialize the population Θ = {θ1, θ2, …, θP}; |

| while (t < T) |

| evaluate the fitness of each particle by Equation (17) for i = 1, 2, ..., P; |

| find best(t) and worst(t); |

| update G(t) by Equation (9); |

| calculate the velocity of each particle at iteration t by Equation (10) for i = 1, 2, ..., P; |

| update the position of particles with the Equation (19); |

| end while |

| output the particle with the best fitness value θbest; |

| end |

3.7. Datasets

The algorithms described above have been validated using real-world datasets from the KEEL dataset repository (http://keel.es). Table 1 shows a description of the datasets used.

3.8. Test Phase

Two experiments have been conducted within the framework of the study. The first experiment focused on validation of the Binary Gravitational Search Algorithm in the wrapper mode for a fuzzy classifier while using various transfer functions. The feature selection experiment was designed as follows. Datasets with the number of features exceeding four were grouped into ten training and test sets in accordance with the cross-validation scheme. For each sample, the Binary Gravitational Search Algorithm was started with each of the four transfer functions, one at a time. Then, the resulting feature sets were used to design a fuzzy classifier with the help of a class extremum-based algorithm for all ten samples. The experiment has produced averages of classification accuracy and of the number of features for each transfer function.

The second experiment focused on designing fuzzy classifiers using the Binary and Continuous Gravitational Search Algorithms. Out of the feature set found in the first experiment, the best set in terms of its training accuracy was selected. The selected feature set was used to design a classifier with the help of a class extremum-based algorithm. Then the Continuous Gravitational Search Algorithm was used to optimize parameters of membership functions for the resultant classifier. The results were averaged over five independent runs of the Continuous Gravitational Search Algorithm.

The number of particles in gravitational search populations is P = 10, the initial value of the gravitational constant is G0 = 10, coefficient α = 10, small constant ε = 0.01. The maximum number of iterations for the Continuous Gravitational Search Algorithm is T = 1000. The number of iterations for the Binary Algorithm varied depending on the number of features in the dataset (100 to 1000 iterations). The parameter values were determined empirically.

4. Experimental Results

This section evaluates the performance of the resulting classifiers on the selected datasets.

4.1. Comparison of Feature Selection Results Using the Binary Gravitational Algorithm with Various Transfer Functions

The first experiment focused on validation of the Binary Gravitational Algorithm in the wrapper mode for a fuzzy classifier.

The test accuracy obtained while designing a fuzzy system based on a full set of features (without feature selection) is compared to the test accuracy obtained after selecting features by the Binary Gravitational Search Algorithm for each of the transfer functions described in Section 3.4. Table 2 shows the results of the experiment for datasets with the number of features exceeding four. Here, #F is the number of features, #T is the classification accuracy percentage for the test data. The best results are in bold.

In all of the datasets used, at least one transfer function makes it possible to achieve an accuracy equal or superior to the classification accuracy obtained on the full dataset. The Wilcoxon signed rank test was used to evaluate the statistical significance of the difference between the resulting accuracy values. Table 3 shows the values calculated based on pairwise algorithm comparison.

The resulting p-values of the Wilcoxon test exceed the significance level of 0.05; therefore, there is no statistically significant difference between the test accuracy obtained with full dataset-based fuzzy classifiers and the accuracy values obtained after feature selection using the Binary Gravitational Algorithm. Likewise, there is no statistically significant difference between the accuracy values obtained with different transfer functions.

Table 4 shows the calculated values of the Wilcoxon test for evaluation of the statistical significance of difference in the number of features in the resulting classifiers.

The above test values show that there is a statistically significant difference between the initial number of features and the number of features selected by any of the transfer functions. There is no statistically significant difference between the number of features selected with the help of different transfer functions.
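The pairwise comparisons above rely on the Wilcoxon signed-rank test. A self-contained Python sketch of the test is given below for illustration; it uses the large-sample normal approximation without tie or continuity corrections, so its p-values are approximate and may differ slightly from those of a statistical package. The paired values in the usage example are made up and are not results from the paper.

```python
import math

def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped and tied |differences| share an average
    rank. Returns (W, p), where W is the smaller of the two rank sums.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return w, p

# Made-up feature counts: full datasets versus selected subsets.
full = [13, 9, 34, 8, 60, 19, 16, 10, 18, 44]
selected = [6, 4, 12, 5, 20, 8, 7, 3, 9, 15]
w, p = wilcoxon_signed_rank(full, selected)
print(w, p, "significant" if p < 0.05 else "not significant")
```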

GSA has computational complexity $O(nd)$, where $n$ is the number of agents and $d$ is the search space dimension [14]. GSA in our work has not been modified, so it has complexity $O(Pd)$, where $P$ is the number of particles and $d$ is the size of the dataset.

4.2. Comparison to Similar Solutions

Table 5 shows the experiment results. Here, #R is the number of rules, #F is the number of features when using selection, #L is the classification accuracy percentage on training data, #T is the classification accuracy percentage on test data. As a comparison, Table 5 also shows the results for the D-MOFARC and FARC-HD algorithms [28,29]. The best results are in bold.

The Wilcoxon signed-rank test was used to assess the statistical significance of differences in the accuracy of fuzzy classifiers formed using the Gravitational Algorithm and using D-MOFARC and FARC-HD. Table 6 shows the values calculated based on pairwise algorithm comparison.

The resulting p-values of the Wilcoxon test exceed the significance level of 0.05; therefore, there is no statistically significant difference between the test accuracy obtained with fuzzy classifiers using Gravitational Search Algorithms and the accuracy values obtained using D-MOFARC and FARC-HD.

Pairwise comparison of the rule numbers shows that there exists a statistically significant difference between the number of rules in the resulting classifiers and the D-MOFARC algorithm (the test value is $2.47 \times 10^{-9}$) and the number of rules in the resulting classifiers and the FARC-HD algorithm (the test value is $2.48 \times 10^{-8}$).

Since the algorithms D-MOFARC and FARC-HD are based on full datasets, it is necessary to compare the number of features in full datasets and the number of features selected by the Binary Gravitational Algorithm. A check with the Wilcoxon signed-rank test produces the value of $1.13 \times 10^{-4}$, which indicates that the reduction in the number of features achieved by the Binary Gravitational Algorithm is statistically significant.

To compare the proposed method with other non-fuzzy classifiers, basic methods and ensemble methods were selected. Basic methods are a logistic regression method (LR), Gaussian Naive Bayes, a k-nearest-neighbour method (kNN), a Support Vector Machine (SVC), a Multi-Layer Perceptron (MLP), a WiSARD Classifier (WNN). Ensemble methods are a Random Forest (RF), Adaboost (AB), a Gradient Tree Boosting (GTB) [44]. Table 7 lists the benchmarking methods we have compared to fuzzy classifier using GSA.

Classification accuracies were compared by means of a statistical analysis based on the Wilcoxon test with a significance level of 0.05 to assess how close the fuzzy classifiers using Gravitational Search Algorithms are in performance to the best machine learning methods. The null hypothesis is the following:

H0: The distribution of classification accuracy for the GSA and another method is the same over N datasets; where N = 23.

Pairwise comparisons of methods conducted in the statistical analysis showed that fuzzy classifiers using Gravitational Search Algorithms are very close to Support Vector Machines, while they outperform Gaussian Naive Bayes (Table 8).

The numerical experiments were performed on a personal computer equipped with a 2.40 GHz Intel(R) Core™ i5-2430M processor, an NVIDIA GeForce GT 520MX graphics processor and 4 GB of RAM. The described method was implemented in the C# programming language under the Microsoft Windows operating system.

5. Conclusions

This paper discusses methods for fuzzy classifier design with feature selection. Features were selected using the Binary Gravitational Algorithm. The classifier structure was formed by the rule base generation algorithm using extreme feature values. Classifier parameters were optimized using the Continuous Gravitational Algorithm.

The performance of the fuzzy classifiers adjusted by the algorithms described above is tested on 26 real-world KEEL datasets. The resulting classifiers possess good trainability, which is confirmed by the high percentage of accurate classification on training samples and equally good predictive capability, which is supported by the high percentage of accurate classification on test samples.

The number of features used by the classifiers designed with the help of the algorithms is significantly smaller than the total number of features in datasets.

As can be seen from the above, the classifier design algorithms based on combinations of the algorithms proposed in this paper make it possible to design fuzzy classifiers that use a smaller number of features while offering an accuracy on the reduced number of features that is statistically equivalent to the accuracy of classifiers designed based on a full set of features.

In the future, the authors expect to study other ways to binarize the Gravitational Search Algorithm and increase the number of test datasets. Based on [45], in our future research a strict computational complexity analysis of $\mathrm{GSA}_B + \mathrm{GSA}_C$ will be carried out.