1. Introduction

Nowadays, surveillance systems contribute vitally to public security. The development of artificial intelligence, especially artificial intelligence for computer vision [1], has made it easier to analyze the resulting videos [2,3]. Several studies have recently addressed the problem of event detection in video surveillance [4], which requires the ability to identify and localize specified spatiotemporal patterns. Another problem in surveillance video analysis that is attracting much research interest is person re-identification [5], the task of identifying a person across several images taken by multiple cameras or by a single camera. Re-identification is a vital function of surveillance systems and human–computer interaction systems because it facilitates searching for identities in large collections of videos and images. Similarly, this study addresses the problem of race recognition (RR) in surveillance videos. In several situations, identifying the race of a person may help a surveillance system select an appropriate emergency responder. RR can also be applied to video classification and clustering, where the race of the people appearing in a video can be an important factor. To address this challenge, significant efforts have been made toward RR and categorization. Fu et al. [6] wrote a comprehensive review of learning race from faces, covering many state-of-the-art methods. According to their analysis, the problem can be addressed with two main approaches: single-model RR, which tries to extract both appearance features and local discriminative regions, and multi-model RR, which combines features from 2D and 3D information, fuses face and gait, etc.

In recent years, social networks have become popular, with billions of users around the world sharing millions of pieces of information daily. Many applications of social network data have been studied recently. In 2016, Farnadi et al. [7] gave a detailed analysis of various state-of-the-art methods for personality recognition on several datasets from Facebook, Twitter, and YouTube. In the same year, Nguyen et al. [8] used a deep neural network (DNN) to meet two types of information needs of response organizations: (i) identifying informative tweets and (ii) classifying tweets into topical classes. They also proposed a new learning algorithm that uses stochastic gradient descent to train DNNs online during disaster situations. More recently, Carvalho et al. [9] presented a smart platform to efficiently collect, manage, mine, and visualize large Twitter corpora.

In recent times, deep learning [

10], which tries to learn good representations from raw data automatically with multilayers stacked on each other, has attracted significant attention from researchers due to its various applications in computer vision [

11,

12,

13,

14,

15], natural language processing [

16,

17,

18], and speech processing [

19]. Convolutional neural networks (CNNs), a type of deep learning model, have recently achieved many promising results in large-scale image and video recognition [

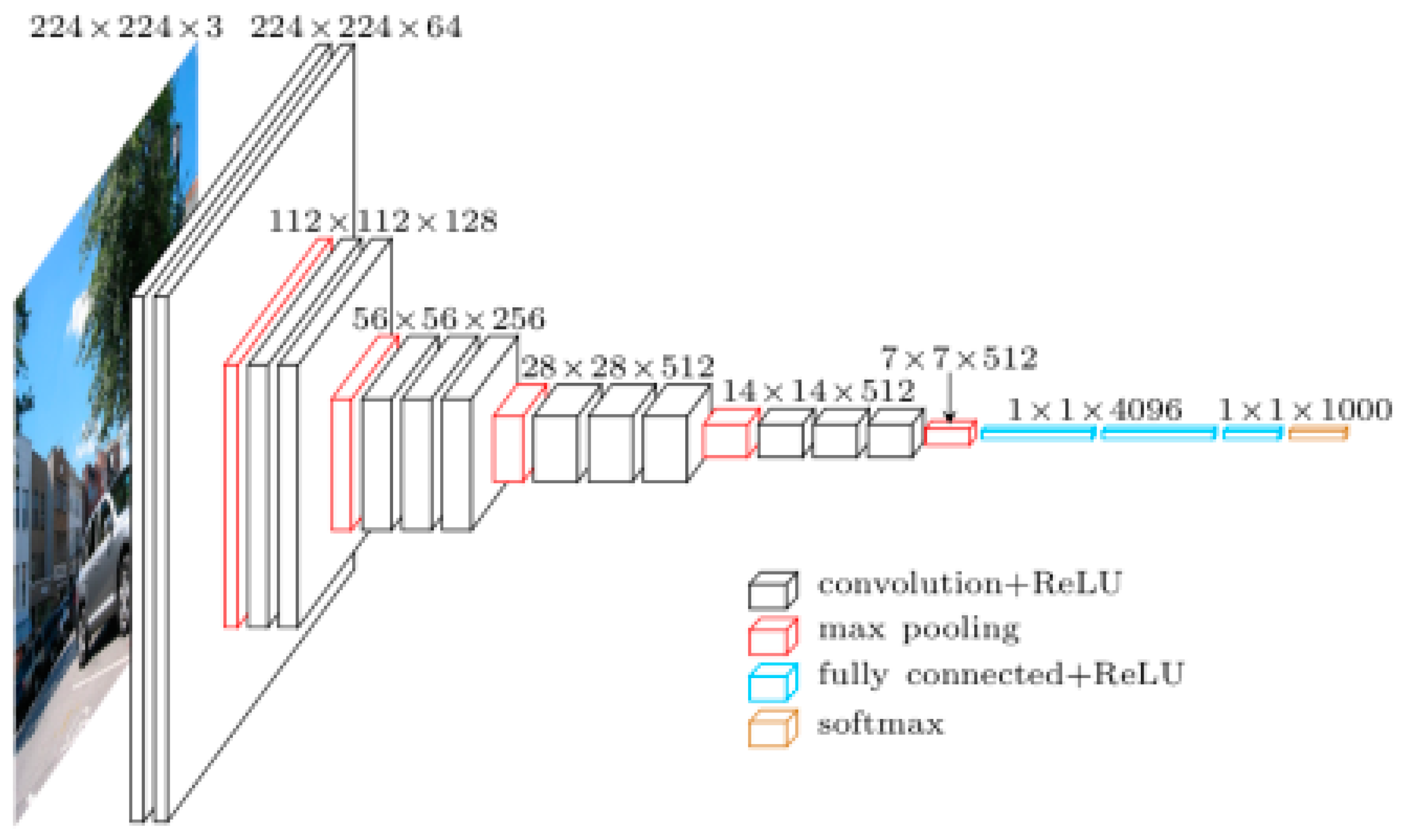

20,

21]. The VGG model [

20], which was first introduced by Simonyan and Zisserman in 2015, achieved very good performance on ImageNet [

22] and has been widely used in computer vision studies.
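To make the core CNN operation concrete, the following is a minimal pure-Python sketch of a single convolutional layer's computation: a "valid" (no padding, stride 1) 2D cross-correlation followed by a ReLU activation. It is an illustration only, not the RR-CNN or RR-VGG architecture; the helper names `conv2d_valid` and `relu` are hypothetical, and the kernel values are hand-picked, whereas a CNN learns its kernels from data.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Hypothetical helper: 'valid' 2D cross-correlation (no padding, stride 1),
    the operation most deep learning frameworks call a convolution."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    output: Matrix = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Sum of element-wise products between the kernel and the patch at (r, c)
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map: Matrix) -> Matrix:
    """Hypothetical helper: element-wise ReLU, max(0, x)."""
    return [[max(0.0, v) for v in row] for row in feature_map]
```

Stacking two 3 × 3 layers of this kind without padding shrinks a 5 × 5 input to a single value, so each output depends on a 5 × 5 region of the input; this is the receptive-field argument Simonyan and Zisserman give for building VGG from stacks of small 3 × 3 filters.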

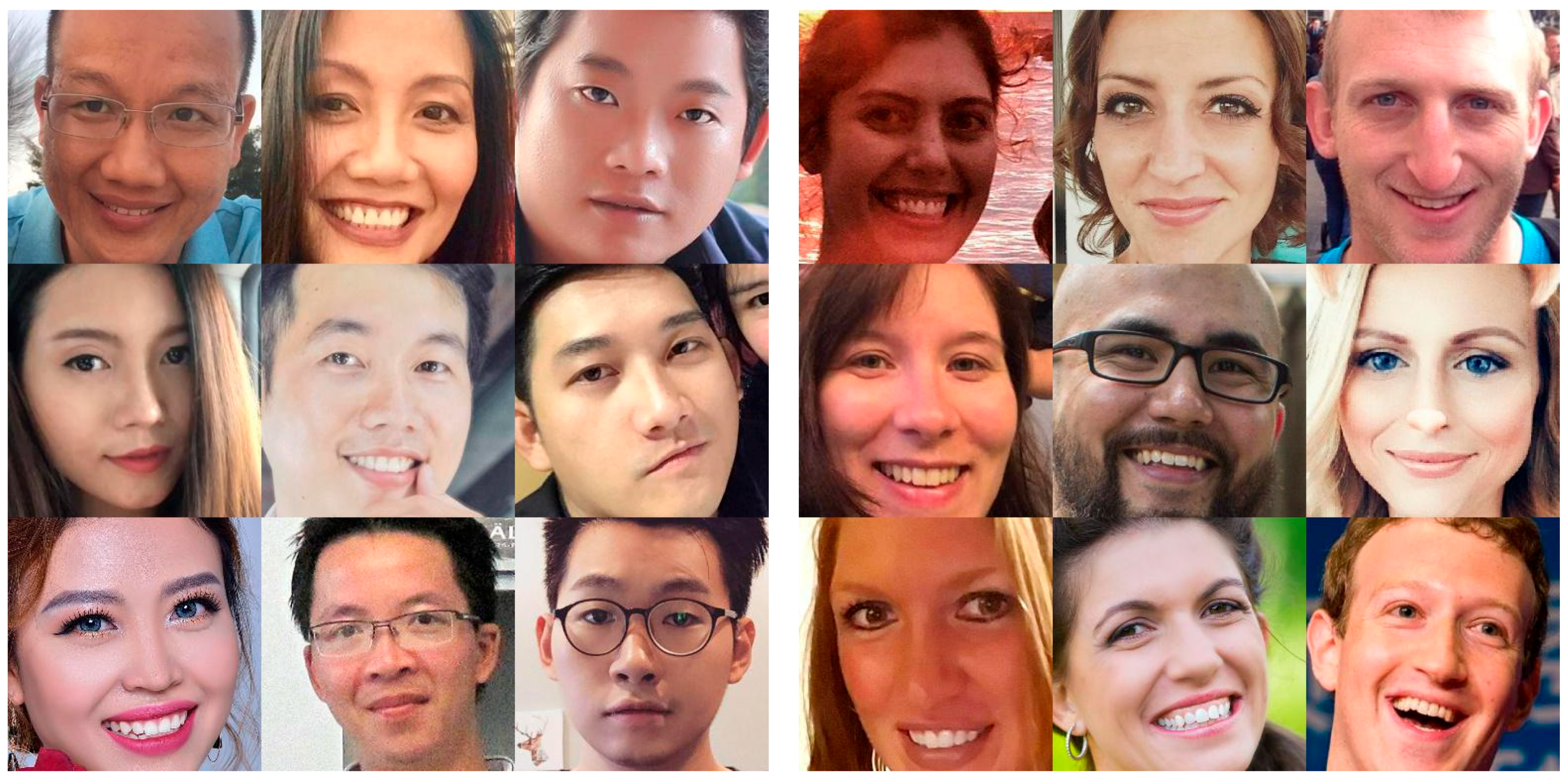

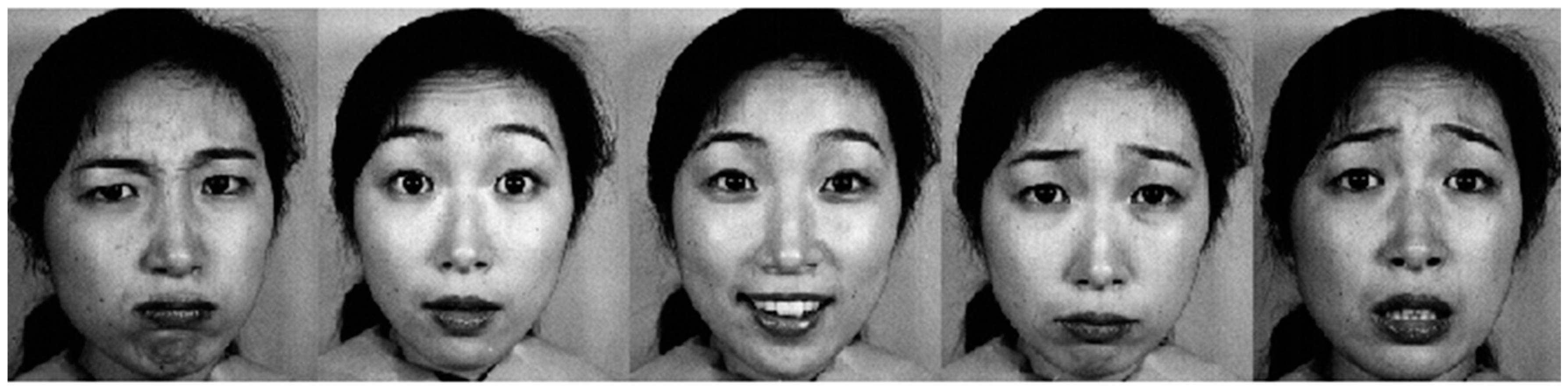

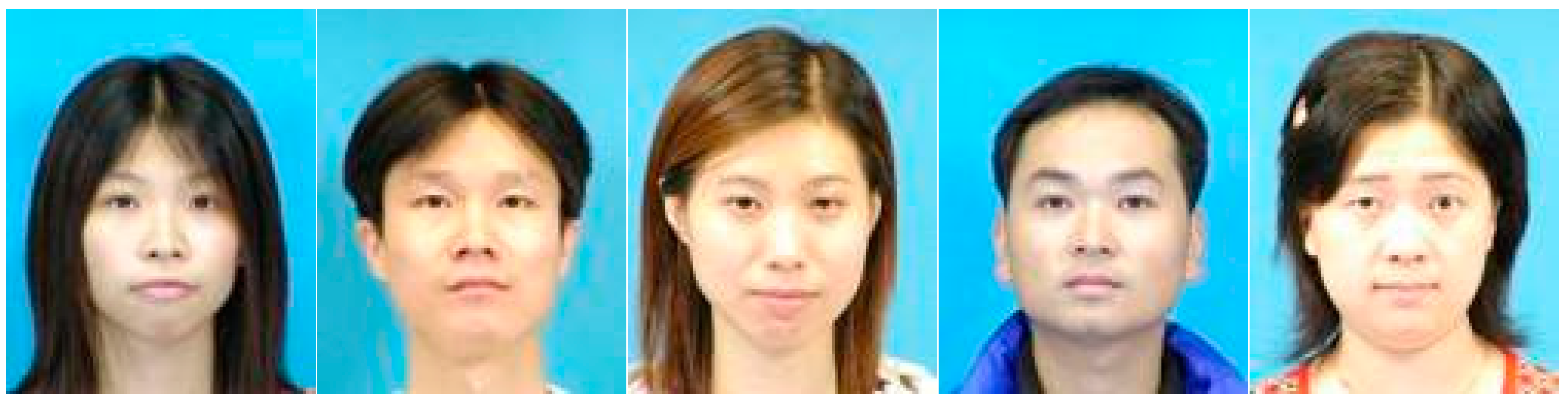

In recent years, many researchers have switched from RR of popular race groups such as African Americans, Caucasians, and Asians to that of sub-ethnic groups such as Koreans, Japanese, and Chinese [

23,

24,

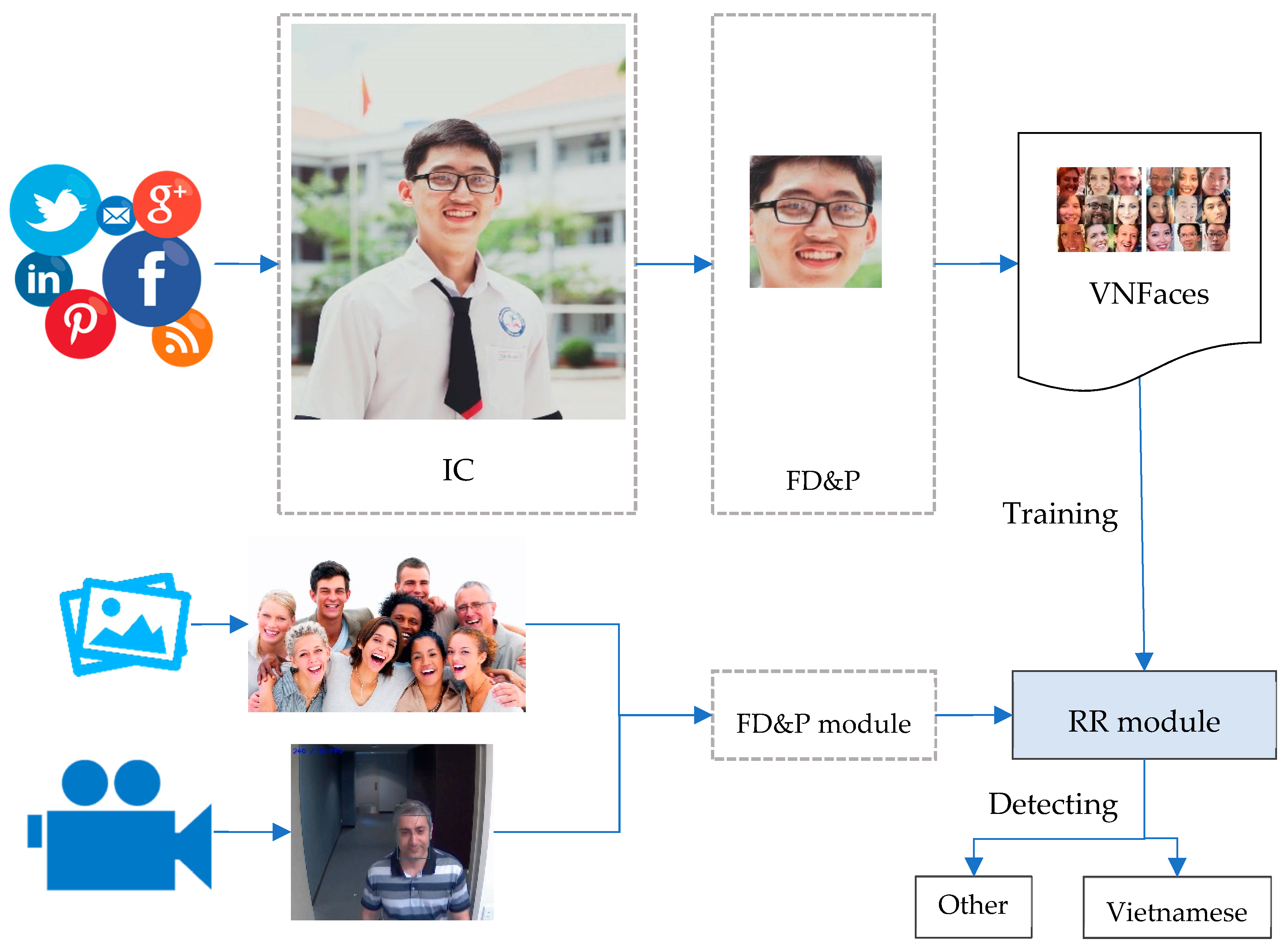

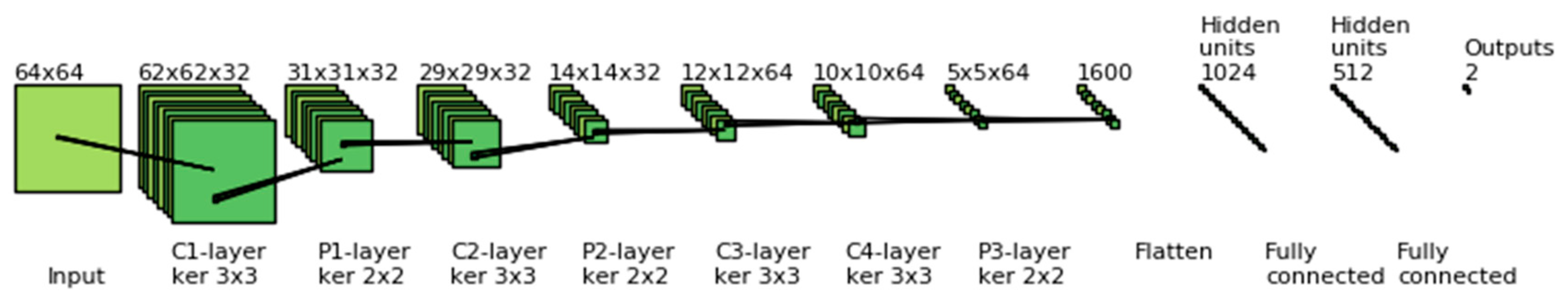

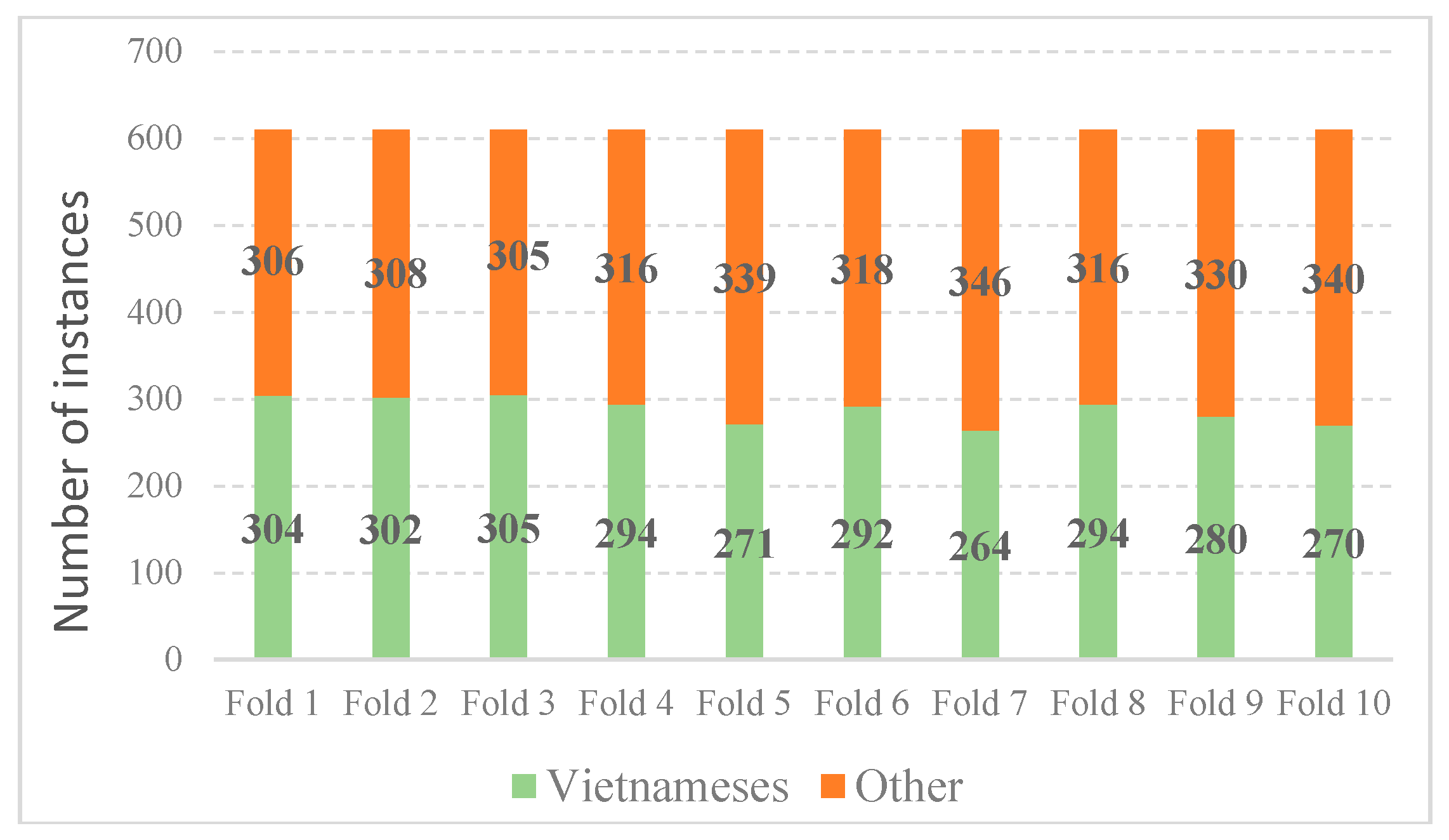

25]. Inspired by this idea, this paper presents a system that has the ability to detect race using deep learning in realistic environments using social network information for the Vietnamese sub-ethnic group. The main contributions of this work are as follows. (1) We introduce a race dataset of Vietnamese people collected from a social network and published for academic use. (2) We propose an efficient framework including three modules for information collection (IC), face detection and preprocessing (FD&P), and RR. (3) For the RR module, we propose two independent models: an RR model using a CNN (RR-CNN) and a fine-tuning model based on VGG (RR-VGG). Experimental results show that our proposed framework achieves promising results for RR in various race datasets including the Vietnamese sub-ethnic group and others such as Japanese, Chinese, and Brazilian. More specifically, the proposed framework with RR-VGG achieves the best accuracy in most of the scenarios.
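The data flow through the three modules can be sketched as a simple chain of functions. This is a hypothetical outline under stated assumptions, not the authors' implementation: `run_rr_framework` and the stub functions are illustrative names, images are represented as nested lists of pixel values, and FD&P is assumed to return zero or more face crops per collected image, each of which the RR module (RR-CNN or RR-VGG in the paper) classifies independently.

```python
from typing import Callable, Iterable, List, Tuple

# Assumption: a grayscale image is a list of rows of pixel values.
Image = List[List[float]]


def run_rr_framework(
    collect: Callable[[], Iterable[Tuple[str, Image]]],
    detect_and_preprocess: Callable[[Image], List[Image]],
    recognize_race: Callable[[Image], str],
) -> List[Tuple[str, int, str]]:
    """Hypothetical pipeline: chains IC -> FD&P -> RR and returns
    (source_id, face_index, label) triples."""
    results = []
    for source_id, raw_image in collect():           # IC: gather images from the social network
        faces = detect_and_preprocess(raw_image)      # FD&P: zero or more normalized face crops
        for face_index, face in enumerate(faces):
            label = recognize_race(face)              # RR: a trained classifier
            results.append((source_id, face_index, label))
    return results


# Hypothetical stubs standing in for the real modules.
def fake_collect() -> List[Tuple[str, Image]]:
    return [("post-1", [[0.0]]), ("post-2", [[1.0]])]


def fake_detect(image: Image) -> List[Image]:
    return [image]  # pretend every image contains exactly one face


def fake_classify(face: Image) -> str:
    return "class_b" if face[0][0] > 0.5 else "class_a"
```

Keeping each module behind a plain function boundary means the RR stage can be swapped between two classifiers without touching collection or preprocessing, which mirrors the paper's use of two independent RR models.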

The remainder of the paper is organized as follows: Section 2 reviews related work, including convolutional neural networks and the VGG model; Section 3 presents the proposed RR framework; Section 4 reports the experimental results; and Section 5 concludes the paper.

5. Conclusions

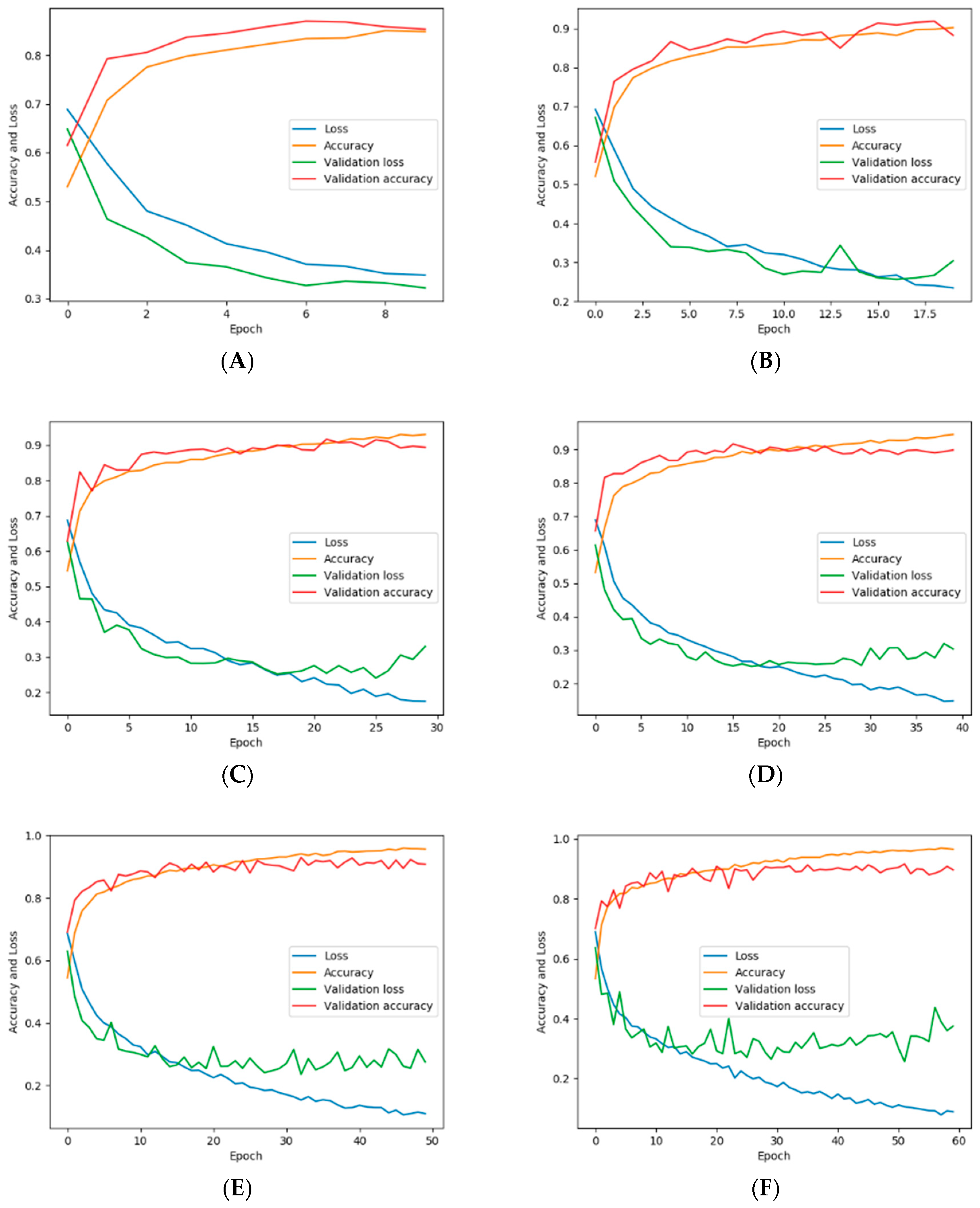

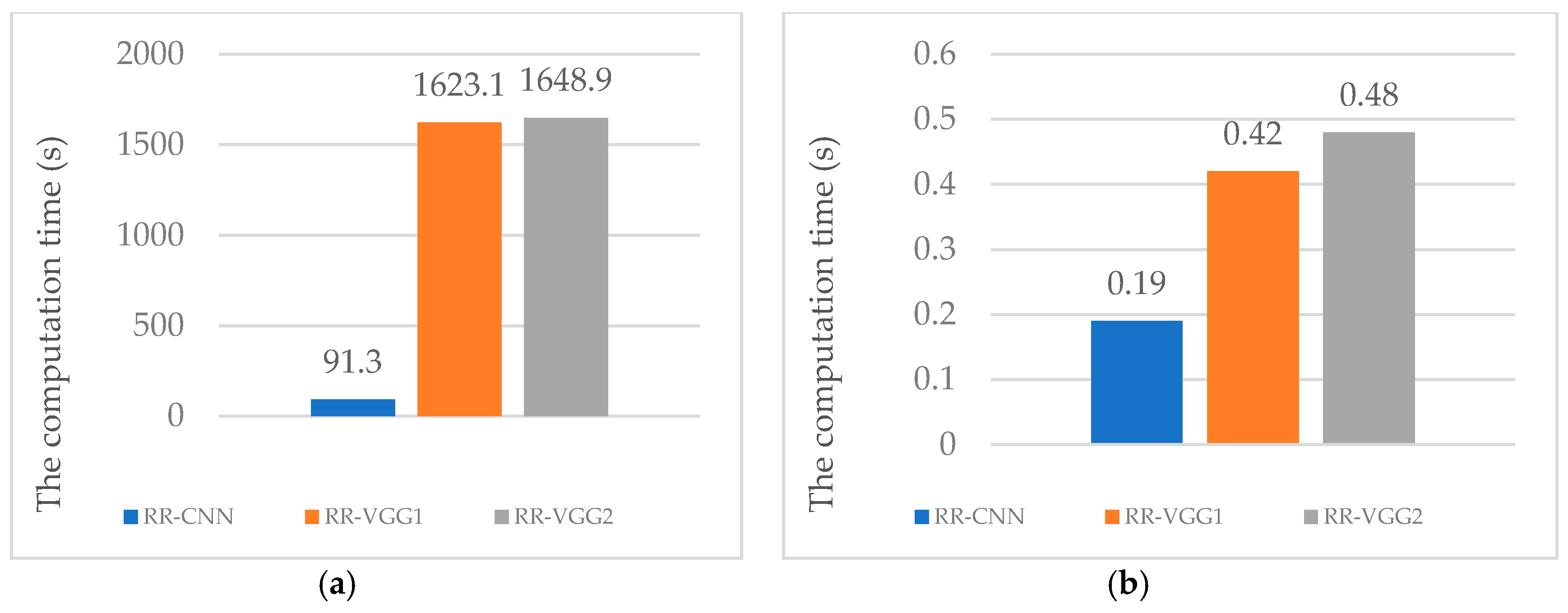

This study proposed an efficient RR framework consisting of three modules: an information collector, face detection and preprocessing, and RR. For the RR module, two independent models were proposed: RR-CNN and RR-VGG. To evaluate the proposed framework, we conducted experiments on our Vietnamese dataset collected from Facebook, named VNFaces, comparing the accuracy of the RR-CNN and RR-VGG models. For the VNFaces dataset, the RR-VGG model with augmented input images yields the best accuracy at 88.87%, while RR-CNN achieves 88.64%. In the other examined cases, our proposed models also achieved high accuracy in classifying other race datasets such as Japanese, Chinese, and Brazilian. Even when classifying people with similar appearances, our models performed well, with overall accuracy above 80%. In most scenarios, the fine-tuned RR-VGG achieved the best accuracy owing to its greater depth, which suggests that it could be successfully applied to various RR problems.
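The text does not specify which transformations produced the augmented input images. As an assumption for illustration only, the sketch below uses horizontal flipping, a common augmentation for face images, and doubles a labeled dataset by adding a mirrored copy of each image with the same label. The helpers `horizontal_flip` and `augment` are hypothetical names.

```python
from typing import List, Tuple

# Assumption: an image is a list of rows of pixel values; a sample is (image, label).
Image = List[List[float]]
Sample = Tuple[Image, str]


def horizontal_flip(image: Image) -> Image:
    """Hypothetical helper: mirror an image left-to-right by reversing every row."""
    return [list(reversed(row)) for row in image]


def augment(dataset: List[Sample]) -> List[Sample]:
    """Hypothetical helper: keep each original sample and add its mirrored copy,
    which keeps the same label because flipping does not change identity or race."""
    augmented: List[Sample] = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((horizontal_flip(image), label))
    return augmented
```

Label-preserving transformations like this enlarge the training set without new data collection, which is one plausible reason augmentation helps a model as deep as a VGG-based classifier.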

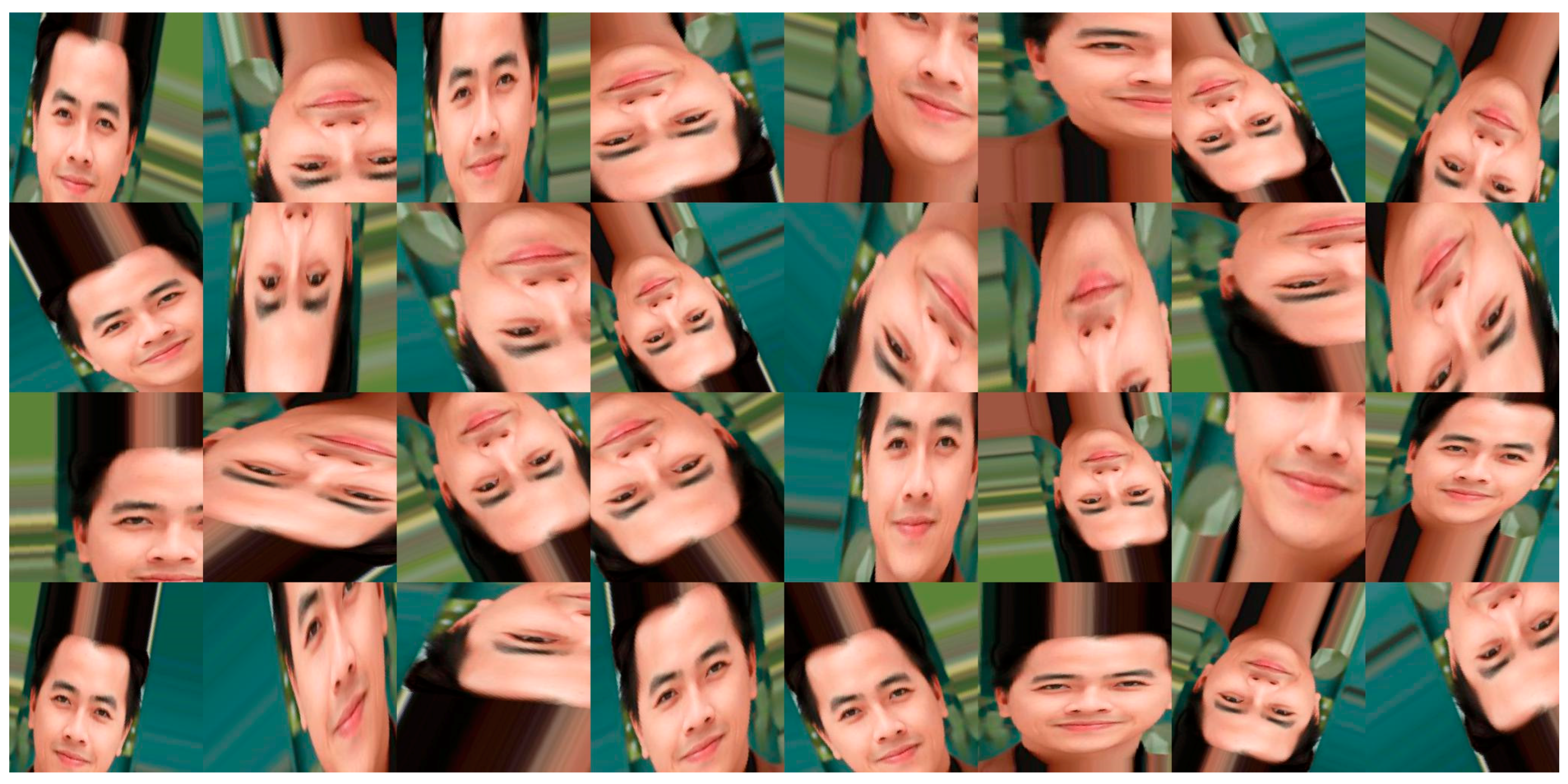

In future work, we will study several related issues. First, we will open a project that collects race face datasets from social network data for RR to contributions from around the world. Second, we will investigate further preprocessing techniques and deep models to improve classification accuracy.