Abstract

Landscape visualization plays a crucial role in various scientific and artistic fields, including geography, environmental sciences, and digital arts. Recent advancements in computer graphics have enabled more sophisticated approaches to landscape representation. The integration of artificial intelligence (AI) image generation has further improved accessibility for researchers, allowing efficient creation of landscape visualizations. This study presents a comprehensive workflow for the rapid and cost-effective generation of photorealistic still images. The methodology combines AI applications, computational techniques, and photographic methods to reconstruct the historical landscapes of the Great Hungarian Plain, one of Europe’s most significantly altered regions. The most accurate and visually compelling results are achieved by using historical maps and drone imagery as compositional and stylistic references, alongside a suite of AI tools tailored to specific tasks. These high-quality landscape visualizations offer significant potential for scientific research and public communication, providing both aesthetic and informative value. Because the article is primarily a methodological description, it does not contain numerical results. To test the method, we applied the workflow to a current topographic map of a sample area and compared the resulting image with the corresponding view provided by Google Earth.

1. Introduction

For decades, researchers have been expected to present their findings visually. Visualization transforms data, information, or ideas into images, diagrams, graphics, or other visual formats to enhance comprehension and interpretation [1,2]. Its primary goal is to represent complex or abstract concepts in a clear and illustrative manner, facilitating better understanding and analysis.

Scientific research findings are typically presented as numbers, tables, or text, which makes interpretation challenging even for experts. Therefore, it is crucial to depict results and theories visually. Scientific research should be accessible to as many people as possible; however, researchers are generally neither artists nor graphic designers. Consequently, their illustrations tend to be graphically simple, often consisting of basic line (vector) drawings. When presenting complex ecosystems or landscapes, overly simplistic illustrations may undermine the perceived rigor of the research. Aesthetic illustrations are generally considered more effective [3], especially when shared on social media.

Landscape visualization employs a variety of software solutions tailored to different purposes [4], enabling either realistic or stylized representations of the landscape [5].

- Digital painting and illustration: These are common methods for creating realistic or stylized landscape visualizations.

- 3D landscape modeling and simulation: Blender [6] is a free and open-source software package offering numerous features for generating and rendering terrain. Terragen [7] specializes in photorealistic landscape creation and is widely used in films and scientific projects. Procedural landscape generation software such as World Machine [8] and Gaea [9] is particularly advantageous for modeling large-scale terrains.

- Cartographic and GIS visualization: Geographic information systems (GIS) play an increasingly significant role in landscape visualization, especially in geographical and environmental studies. Professional GIS software like ArcGIS [10] offers advanced mapping and data management capabilities, while QGIS [11] serves as a popular open-source alternative. Google Earth Studio [12] enables the creation of realistic 3D map animations, useful for geographical and urban studies.

- Photo manipulation and post-processing: Various image editing software is available for modifying and enhancing existing landscape images. Adobe Lightroom [13], for instance, is optimized for photograph post-processing, providing advanced color correction and retouching tools.

- Artificial Intelligence (AI) applications: AI is becoming increasingly popular in landscape architecture and planning, with new methods emerging annually [5]. AI optimizes the design process by incorporating automated design tools and visual analysis. Computer vision techniques enable more precise evaluation of aesthetic and functional aspects of environments, enhancing both efficiency and accuracy of landscape design.

Three main types of visualizations are commonly used: (a) rendered still images, which display the landscape with high detail and realism but offer limited interactivity; (b) animations, which show changes and movements over time but lack full interactivity; and (c) real-time models, which allow users to move freely in virtual space, enhancing interactivity, though often at the cost of reduced detail due to computational limitations [4].

Procedural landscape generation is a computer graphics technique that automatically creates landscapes using mathematical algorithms and rule-based systems [14]. This method is particularly useful for generating large-scale or highly detailed virtual environments in video games, films, simulations, and cartography. Instead of manually designing landscapes, procedural generation creates them based on parameters such as elevation, terrain type, climate, and textures, often incorporating random elements to ensure uniqueness. Procedural techniques enable the efficient and scalable production of large terrains with high detail, eliminating the need for manual modeling. Some systems can also simulate real-world geological and meteorological processes, such as erosion, river formation, and vegetation distribution. Common applications range from optimized terrain and height map generation, with advanced erosion simulation and texture generation, to photorealistic landscape rendering of the kind employed in film production and high-end visual effects. Overall, procedural landscape generation is a powerful tool that enables the rapid and automated creation of realistic or stylized terrains while giving users control over the final output through adjustable parameters.
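To make the idea of parameter-driven, partly random terrain generation concrete, the following minimal sketch builds a one-dimensional elevation profile by midpoint displacement, a classic procedural technique. It is an illustration only and is not the algorithm used by any of the programs named in this article; the function name and the `levels`, `roughness`, and `seed` parameters are assumptions introduced for the example.

```python
import random


def midpoint_displacement(levels, roughness=0.5, seed=42):
    """Return a 1D terrain profile with 2**levels + 1 height samples."""
    rng = random.Random(seed)          # fixed seed makes the terrain reproducible
    heights = [0.0, 0.0]               # flat start: both endpoints at height 0
    amplitude = 1.0                    # maximum random offset at the first level
    for _ in range(levels):
        refined = []
        for left, right in zip(heights, heights[1:]):
            # Insert a midpoint: the average of its neighbours plus random noise.
            mid = (left + right) / 2 + rng.uniform(-amplitude, amplitude)
            refined.extend([left, mid])
        refined.append(heights[-1])
        heights = refined
        amplitude *= roughness         # smaller offsets at finer scales
    return heights


profile = midpoint_displacement(levels=4)
print(len(profile))  # 17 samples
```

Lower `roughness` values damp the fine-scale offsets and give smoother profiles, while changing `seed` yields a different but equally plausible terrain, which mirrors the balance between user control and randomness described above.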

Several of the visualization applications integrate AI-powered solutions. Luminar Neo utilizes AI to enhance photos by automatically adjusting lighting, colors, and details in landscapes. Its AI-driven tools include sky replacement, intelligent contrast adjustment, and object removal. Gaea [9] is designed for procedural landscape generation, where AI-based algorithms assist in creating realistic terrain and textures. Adobe Photoshop’s Neural Filters feature enables automatic retouching, color correction, and style transformations through AI. Adobe Lightroom [13] incorporates advanced AI-driven editing tools, such as sky correction and intelligent exposure adjustments. Google Earth Studio [12] is primarily used for cartographic animations. Procedural landscape generation software like World Machine and Terragen [7,8] can utilize machine learning-based algorithms, but these are not exclusively AI-powered programs.

However, these applications are not widely used among scientists for landscape visualization, because they require in-depth software knowledge. The complexity of these tools exceeds the skill set of most researchers, and the workflow demands more research time (level of effort—LoE) than is typically allocated for visualization. One alternative is to commission studios or artists to create striking images or short films based on the researcher’s guidance, with some studios specializing in archaeological landscape reconstructions. Yet, the demand for illustrations and graphic designers surpasses what traditional means can supply. AI offers a cost-effective and rapid technology for visualization. Generating landscape images with the latest AI applications is not inherently difficult; even users with minimal experience can create visually impressive yet artificial ‘nature photographs’ within minutes. Social media is already flooded with AI-generated pseudo-landscapes, and some nature photography websites occasionally feature such images, which is considered an unethical practice, as they are not genuine nature photographs.

Researchers, however, aim to produce photorealistic and detailed scientific illustrations. Here, detail refers to the representation of intricate visual elements, while realism denotes how closely the visualization mirrors the actual landscape [4]. Cost-effectiveness in this context means achieving the outlined workflow at a tiny fraction (about one hundredth) of the traditional cost of hiring a professional illustrator or animator.

But what makes a landscape reconstruction scientifically sound? Primarily, it depends on a solid scientific foundation, rather than merely knowledge of AI applications. Scientific accuracy is paramount, as the objective is not to depict an imaginary, ‘fairy tale’ landscape. Instead, it is necessary to study geographical, ecological, paleoenvironmental, and landscape history literature to precisely determine the intended outcome. Several scientific disciplines are involved in the historical reconstruction of landscapes and natural environments.

Historical geography investigates the spatial characteristics of social, economic, and natural conditions across different time periods [15]. Its sources include old maps, charters, chronicles, and demographic records. This field enables the mapping of medieval settlement structures, changes in river courses, and the locations of economic centers over centuries. Closely related to this is landscape history, primarily studied by ecologists, which examines the formation and transformation of landscapes, uncovering the impacts of natural processes and human activities on vegetation development over various historical periods [16,17]. Paleoecology focuses on reconstructing past environments, studying changes in climate, vegetation, and fauna across geological epochs, relying mainly on fossils and sedimentary records, and is pursued by geologists and biologists [18]. Archaeology increasingly emphasizes past landscape reconstruction: environmental archaeology examines the natural surroundings of archaeological sites and past human-environment interactions, analyzing the roles of climate, soil conditions, vegetation, and wildlife in shaping human lifestyles. Landscape archaeology investigates historical landscape changes, including natural and anthropogenic elements [19], exploring how societies shaped their landscapes through land use and settlements. Ethnographic research also provides valuable insights, particularly regarding traditional ecological knowledge [20], such as the expertise of shepherds in the Hortobágy ‘puszta’ region of Hungary about the historical conditions of the steppe [21]. Furthermore, botanical and geomorphological knowledge is essential to distinguish which vegetation and landforms are ancient versus modern and natural versus man-made [22].

The main goals of this publication are: (1) to demonstrate how different AI image generation techniques can be effectively used for landscape reconstruction; (2) to highlight common pitfalls of AI image generation and present simple solutions; and (3) to outline necessary directions for further developments. The approaches are illustrated using the Great Hungarian Plain, a landscape fundamentally altered in the 19th century.

2. Materials and Methods

2.1. Regional Setting

The Great Hungarian Plain (GHP) is one of the most developed alluvial plains globally, with the Tisza River as its primary watercourse (Figure 1). The plain features a riverine landscape characterized by an exceptionally flat and uniform relief, with an elevation difference averaging only about 20 m over a distance of 200 km [23,24]. Local relief variations are even more subdued, with elevation differences ranging from 1 to 5 m over short distances [25]. These subtle variations correspond to classical river terrace morphology, formed in response to climatic shifts over the past several tens of thousands of years [26]. The GHP has undergone significant transformation over the past 200 years, making it an ideal subject for AI-assisted landscape reconstructions. Below, we outline the most important steps in the landscape’s alteration to clarify the context of the AI-generated images.

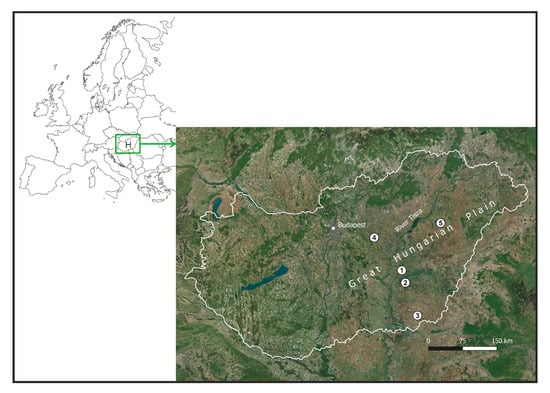

Figure 1.

Location of sites in Europe and Hungary where AI visualizations were tested. (1) River Hármas-Körös floodplain, Öcsöd; (2) Büsér Swamp, Szentes; (3) Királyhegyes Pasture, Makó; (4) Nagy-Nádas Swamp, Farmos; (5) Pentezug Pasture, Hortobágy.

Throughout history, water regulation was largely shaped by prevailing agricultural practices. When livestock farming dominated, extensive water management—particularly flood control—was unnecessary, as natural flooding helped irrigate pastures. However, alongside crop cultivation and livestock farming, a unique and intricate floodplain farming system known as ‘fok’ management emerged. This system relied on maintaining an interconnected network of fens, dams, reservoirs, and ditches to support various agricultural activities, including livestock grazing, crop production, and other forms of natural farming [27]. The decline of this system began during the Ottoman occupation, with floodplain farming retreating to the upper reaches of the Tisza River by the 16th century. The expansion of wetlands during this period may have been significantly influenced by the construction of fortifications. After the Ottomans’ departure, floodplain farming continued to diminish in the central Tisza region, while livestock grazing remained the primary economic activity. Water mills, which became widespread in the early modern period, also contributed to changes in the floodplain landscape. Areas above the dams became waterlogged, and lakes and ponds gradually silted up, transforming into swamps [28].

In the early 19th century, as economic development accelerated, the drainage and conversion of 35,000–40,000 km² of regularly or intermittently flooded lowland into arable land became a critical political issue for Hungary, which held partial autonomy within the Habsburg Empire. The primary objective of the regulation efforts was to straighten the course of the Tisza River and significantly reduce the width of its extensive floodplain, which in some areas spanned several kilometers, and in others, even tens of kilometers [29]. The main goals of river regulation were threefold: (1) to modify the existing meandering river network and enhance flood discharge capacity by straightening the channels of the Tisza River and, to some extent, its tributaries; (2) to drain and convert approximately 20,000 km² of flood-prone land along the Tisza into arable farmland through the construction of an embankment system; and (3) to improve navigability, thereby facilitating the transportation of surplus agricultural produce. The engineering techniques employed were relatively rudimentary. Meanders were artificially cut by excavating small trenches along the designated course, which were subsequently widened by the natural erosive force of flowing water, resulting in the formation of a new riverbed. On both sides of the newly straightened channel, embankments composed of compacted clay, approximately four meters high, were constructed manually [30].

Of these three key objectives, the first was largely accomplished by the outbreak of the First World War. The second was also mostly realized, with the final flood-prone sections reinforced by around 1930. Ultimately, both the second and third objectives were fully achieved: despite occasional extreme flood events, which remained relatively infrequent, the historic floodplain (including large wetlands) was effectively isolated from flooding and repurposed for agricultural use. By the 20th century, this transformation had resulted in a landscape dominated by large-scale, industrialized agriculture, initially shaped by market dynamics and historical land tenure structures. Under the communist regime, state-led collectivization further reinforced this shift—a legacy that continues to define agricultural practices in the region today [29].

The most significant consequences of regulation and drainage have been observed in land use and vegetation. Prior to regulation, it is estimated that up to two-thirds of the GHP area was submerged during floods. Despite the region’s generally continental climate, regular flooding supported the development of forest vegetation. Model calculations suggest that soil and climatic conditions were conducive to the establishment of forest vegetation (mainly forest-steppe) on 77% of the GHP. In contrast, it is estimated that only 37% of the GHP area was originally covered by forests, including floodplain and woodland forests. Due to millennia of deforestation and drainage, the total forested area has now been reduced to a mere 1% [31]. Before river regulation, cleared floodplain forests were replaced by swamps and floodplain meadows. The total extent of these meadows, along with the ancient marshes and swamps, once covered about 44% of the plain; by 1960, this had dwindled to only 3–6% [32].

The length of the Tisza River before the regulations was 1211 km; after the cutting of the meanders, it became 758 km. The combined length of the Körös River and its tributaries was 1041 km, which decreased to 462 km. The length of the cuttings on the Tisza River was 136 km, and on the Körös River, 212 km. In total, 2940 km of flood protection embankments were constructed [29]. From a water management perspective, the current system differs drastically from its natural or near-natural state. Annual flooding no longer reaches the former floodplains [33]. The modern hydrological network in flood-protected areas primarily consists of irrigation and drainage canals. These channels often follow the traces of the original watercourses, as they were formed by deepening ancient riverbeds. However, the watercourses of the lowlands prior to regulation differed fundamentally in structure, function, and impact. These natural waterways were extremely wide (30–100 m) and shallow (1–1.5 m), with no fixed course. They could flow in either direction depending on local precipitation or water levels [34].
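The scale of the channel shortening is easier to grasp in relative terms. The short calculation below uses only the lengths quoted above and indicates that the Tisza lost roughly 37% of its length and the Körös system roughly 56%; the dictionary layout and function name are illustrative choices.

```python
# Channel lengths in km before and after regulation, as quoted in the text.
rivers = {
    "Tisza": (1211, 758),
    "Körös system": (1041, 462),
}


def shortening(before_km, after_km):
    """Return the absolute (km) and relative (%) loss of channel length."""
    lost_km = before_km - after_km
    return lost_km, 100 * lost_km / before_km


for name, (before_km, after_km) in rivers.items():
    lost_km, lost_pct = shortening(before_km, after_km)
    print(f"{name}: {lost_km} km shorter ({lost_pct:.1f}%)")
```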

This is the region whose natural landscape, before river regulation, we depict in AI-generated images.

2.2. Materials and Image Processing

To generate the landscapes, we used photos taken between 2020 and 2024 as samples. Each image was captured with a DSLR camera or a DJI Mini 2 drone, with drone photos taken up to 120 m above the ground. To avoid modern patterns appearing in the photos used as style samples, we cropped them to exclude visible modern objects (e.g., roads). In other cases, such objects were removed later in the workflow.

Archival historical maps helped reconstruct the former landscape conditions and served as structural models. The First Military Survey of the Habsburg Empire was commissioned by Empress Maria Theresa. In the area of Hungary studied here, the survey was conducted later, during Emperor Joseph II’s reign, with map sections depicting the situation between 1783 and 1786 [35]. The survey sections form a full-coverage mosaic, at a scale of 1:28,800 (metric system). The maps can be georeferenced in various ways, with horizontal accuracy around 200 m compared to modern references like Google Earth [36,37]. This poses challenges when analyzing this historical database alongside contemporary maps, but it remains the primary spatial input for this study. The georeferenced content is available on the Arcanum Maps/MAPIRE portal [38].
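To put the quoted scale and positional accuracy into perspective, the following sketch converts between ground distances and distances on the map sheet. It uses only the two figures given above (1:28,800 and about 200 m); the function names are illustrative.

```python
SCALE_DENOMINATOR = 28_800      # First Military Survey scale 1:28,800 (from the text)
POSITIONAL_ERROR_M = 200        # approximate horizontal accuracy (from the text)


def ground_metres_per_map_cm(scale_denominator):
    """Ground distance in metres represented by 1 cm on the map sheet."""
    return scale_denominator / 100   # 1 cm on the map = scale_denominator cm on the ground


def error_on_map_mm(error_m, scale_denominator):
    """Size of a ground error when measured on the map sheet, in millimetres."""
    return error_m * 1000 / scale_denominator


print(ground_metres_per_map_cm(SCALE_DENOMINATOR))                       # 288.0
print(round(error_on_map_mm(POSITIONAL_ERROR_M, SCALE_DENOMINATOR), 1))  # 6.9
```

In other words, 1 cm on the sheet corresponds to 288 m on the ground, and a 200 m georeferencing error is equivalent to a shift of about 7 mm on the original map, which is small enough for a compositional reference but too large for precise overlay with modern data.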

As noted, the First Military Survey depicts the natural and built environment of the late 18th century, which is closer to the natural state before extensive human intervention than any recent geospatial data, though signs of human impact are already evident. For this study, it is important that the survey uses a limited number of land use/cover types—such as water bodies, wetlands, forests, grasslands, pastures, arable lands, and vineyards—with no allowance of combinations [39,40]. Consequently, we had to decide whether to depict swamp forests near rivers as swamps or forests. Since rivers in the survey are consistently accompanied by swamps, and gallery forest marks are rare, this likely reflects a deliberate choice. The survey’s military purpose meant that accessibility (solid ground versus swamp) was probably more important than terrain type (forest versus non-forest) for infantry warfare of that time.

To write the prompts, we used ChatGPT (version 4o [41]), supplemented with geographical and ecological information. Adobe Firefly’s text-to-image and generative fill functions (Image 3 model [42]) were employed to generate the images. The Krea application (Upscale & Enhancer function [43]) was used to upscale the images. The AI image generation process took place between May and December 2024, using the program versions available at that time. Finally, the images were slightly enhanced (e.g., sharpness, contrast) using Adobe Lightroom CC.

3. Results and Evaluation

3.1. Writing an Effective Prompt

AI applications generate images based on textual instructions known as prompts. In the case of landscapes, these prompts include details about topography, vegetation characteristics, and perspective. For example: ‘Create an image depicting a wide, meandering river from a bird’s-eye view. The landscape is experiencing a flood, with the river overflowing its banks, submerging the surrounding fields and trees. At the center of the image, the river meanders, while the floodplain is covered with water. The water reflects the grayish sky, which is overcast with clouds. Trees stand partially submerged, and the grayish-brown hues of the landscape convey a misty, cool atmosphere’ (Figure 2). Writing such detailed prompts requires practice, but AI can assist in refining them. By uploading an existing image, one can ask ChatGPT for a prompt that generates a similar image in another application. For example: ‘I uploaded an image for you, please create an accurate description of what is shown in the image. Then give me a prompt to generate the same image in Adobe Firefly.’

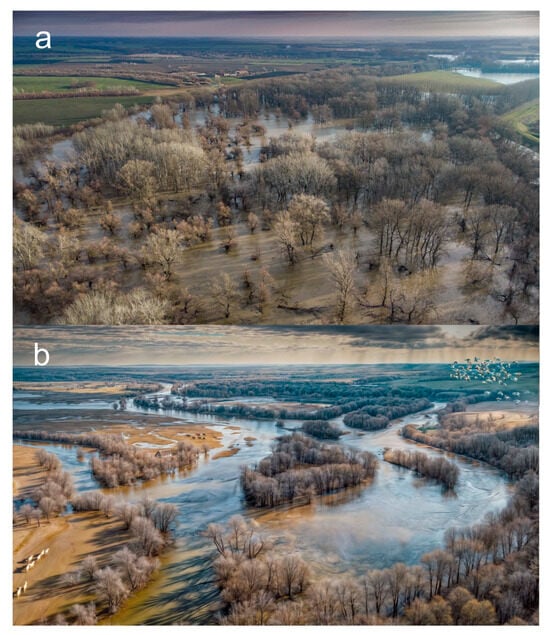

Figure 2.

Reconstruction of the unregulated Hármas-Körös River floodplain at the time of the spring flood in the 18th century. (a) The image endeavors to convey the strip of forest serving as a breaker for the unregulated river and the free flow of the accretion waters. (b) Reconstruction was performed with the help of a photograph taken by a drone near the village Öcsöd and with the prompt mentioned in the text.

While this example uses descriptive natural language, many image generation tools also support compact, comma-separated prompts, often referred to as tag-based or fragmented prompting. These rely on keywords and phrases separated by commas to convey style, content, composition, and atmosphere concisely. Such prompt formats are popular in tools like Midjourney, Leonardo.AI, and ComfyUI due to their speed and modularity. The same visual scene described above can be transformed into a tagged prompt like this: “aerial view, wide meandering river, flooding landscape, submerged fields and trees, gray overcast sky, water reflecting sky, misty atmosphere, cool tones, gray-brown colors, partially submerged trees, dramatic natural disaster scene”. This approach allows users to iteratively expand, modify, or refine individual visual elements while maintaining overall coherence.
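The modularity of tag-based prompting can be illustrated with a small helper that assembles a prompt from separately editable keyword groups. This is a minimal sketch rather than part of the published workflow: the function `build_tag_prompt` and the group names (`composition`, `content`, `atmosphere`, `style`) are hypothetical, and the resulting string would simply be pasted into the chosen image generator.

```python
def build_tag_prompt(**groups):
    """Join keyword groups into one comma-separated prompt, dropping duplicates."""
    seen = set()
    tags = []
    for group in groups.values():
        for tag in group:
            key = tag.strip().lower()
            if key and key not in seen:    # skip empty strings and repeated tags
                seen.add(key)
                tags.append(tag.strip())
    return ", ".join(tags)


prompt = build_tag_prompt(
    composition=["aerial view", "wide meandering river"],
    content=["flooding landscape", "submerged fields and trees", "partially submerged trees"],
    atmosphere=["gray overcast sky", "water reflecting sky", "misty atmosphere"],
    style=["cool tones", "gray-brown colors"],
)
print(prompt)
```

Keeping the groups separate makes it straightforward to swap, for example, only the atmosphere tags between runs while the composition and content stay fixed.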

Effective AI-generated landscape reconstructions require precise wording. Descriptions should specify topography, vegetation, and water bodies using clear terms such as ‘a gently rolling landscape with scattered oak forests and a meandering river’ instead of vague phrases. Employing scientific terminology, such as ‘alluvial plain’ or ‘karst formations,’ enhances accuracy, but may limit the number of suitable sample images. Specifying perspective—whether an ‘aerial view of a plain’ or a ‘low-angle perspective from within a dense forest’—prevents ambiguity. Concise prompts are more effective than overloaded descriptions. Contradictory phrases like ‘a barren desert with lush meadows’ should be avoided in favor of emphasizing a dominant landscape type or gradual transitions, such as ‘a dry steppe with patches of seasonal wetlands.’ Small objects should be described in a grouped context, for example, ‘a herd of sheep grazing on the misty hillside’, to improve AI interpretation. AI models analyze the contextual coherence and cannot process contradictory prompts, such as depicting a small object from a bird’s-eye view or including snow in a summer landscape.

Depth and realism are enhanced when foreground and background elements are harmoniously balanced and atmospheric effects, such as ‘a twilight sky with scattered cumulus clouds’, are incorporated. Since AI models are predominantly trained on English data, rephrasing rather than direct translation improves clarity. Highlighting distinctive features, for instance ‘an ancient oak forest with moss-covered trunks’ rather than the more general ‘a temperate forest’, helps generate more specific and accurate outputs. By carefully structuring prompts with these considerations, AI-generated landscape reconstructions can achieve a higher level of realism and scientific accuracy while preserving the unique character of the studied environment (cf. Table 1).
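The recommendations above (state perspective, topography, vegetation, water bodies, and atmosphere explicitly, and avoid contradictions) can be turned into a simple checklist-style template. The sketch below is one possible way to do this; the class `LandscapePrompt`, its field names, and the short list of contradictory term pairs are assumptions made for illustration, with the pairs taken from the examples in the text rather than from any exhaustive rule set.

```python
from dataclasses import dataclass

# Illustrative pairs only, taken from the examples in the text; not an exhaustive rule set.
CONTRADICTORY_PAIRS = [("desert", "lush meadows"), ("snow", "summer")]


@dataclass
class LandscapePrompt:
    perspective: str
    topography: str
    vegetation: str
    water: str
    atmosphere: str

    def render(self):
        """Assemble the fields into one descriptive sentence-style prompt."""
        return (
            f"{self.perspective} of {self.topography} with {self.vegetation} "
            f"and {self.water}, under {self.atmosphere}."
        )


def find_contradictions(prompt_text):
    """Return every listed pair whose two terms both occur in the prompt."""
    text = prompt_text.lower()
    return [pair for pair in CONTRADICTORY_PAIRS if all(term in text for term in pair)]


prompt = LandscapePrompt(
    perspective="Aerial view",
    topography="a dry steppe",
    vegetation="patches of seasonal wetlands",
    water="a meandering river",
    atmosphere="a twilight sky with scattered cumulus clouds",
).render()
print(prompt)
print(find_contradictions(prompt))   # no listed pair matches this prompt
```

Because every field is mandatory, the template cannot be rendered with the perspective or atmosphere left unspecified, which guards against the vague prompts discouraged above.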

Table 1.

Recommended and discouraged terms and methods in prompts.

AI applications like Adobe Firefly often provide multiple image versions, allowing selection of the best one from a professional perspective and further improvement in subsequent workflow steps. While AI can theoretically generate almost anything, the primary limitation often lies in the precision of the prompt. Writing prompts in English is advisable, as this enables AI to access a broader range of reference images. However, this also exposes a fundamental issue with AI-generated images: users have limited control over the source images the AI draws upon for its visual output, raising copyright and ethical concerns. From a scientific standpoint, a major challenge is that AI-generated images tend to be generic and uninspired, often lacking the distinctive characteristics of the specific landscape under study, that is, the features that should make the research subject immediately recognizable. Moreover, errors in image generation are common, as AI does not fully comprehend natural laws, particularly those governing geomorphology and vegetation patterns. For instance, it may inaccurately depict river meanders or the distribution of vegetation in response to geomorphology. Training AI to understand these processes requires more than a well-crafted prompt.

3.2. Using Style References

The best results can be achieved by combining different techniques and AI applications. Photographs and drone footage of real landscapes can be uploaded as stylistic references for AI tools, enabling researchers to guide the AI by specifying desired topography, hydrography, and vegetation features. Based on these references, AI generates images that better reflect both the unique characteristics of the landscape and the researcher’s creative intent. However, caution is necessary when selecting reference images, as unwanted elements in the originals may inadvertently appear in the generated output. For example, if a paved road is visible in the reference image, the AI may incorporate a similar road into the final image, even if it was not intended to be part of the reconstructed landscape (Figure 2). Therefore, selecting sample photo locations should be preceded by careful field research. It is advisable to use photos from pristine, protected areas that best represent the potential vegetation of the region. These areas are typically core zones of national parks, so consultation with nature conservation authorities is recommended.

One particularly useful tool in AI applications is generative fill, which allows users to modify images by selecting an area and instructing the program to replace it with new content. Currently, Adobe Firefly is the most effective program for this purpose. For example, arable fields can be transformed into grasslands, or a silted-up riverbed can be ‘restored’ to an active watercourse (Figure 3). Additionally, a small, intact landscape spot can be expanded, effectively generating an entire landscape from a single real-world reference (Figure 4). Generative fill can also be used to replace unwanted elements or to add decorative details to enhance realism. For instance, a farmhouse can be inserted into a steppe landscape, or a flock of sheep can be placed on a hillside. Adding such decorative elements is always recommended, as they help convey the spatial depth, making the image more visually engaging and scientifically informative (Figure 5).

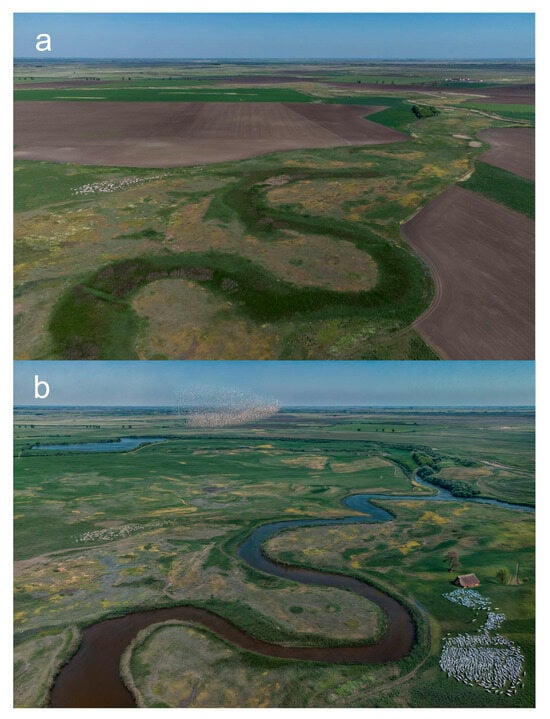

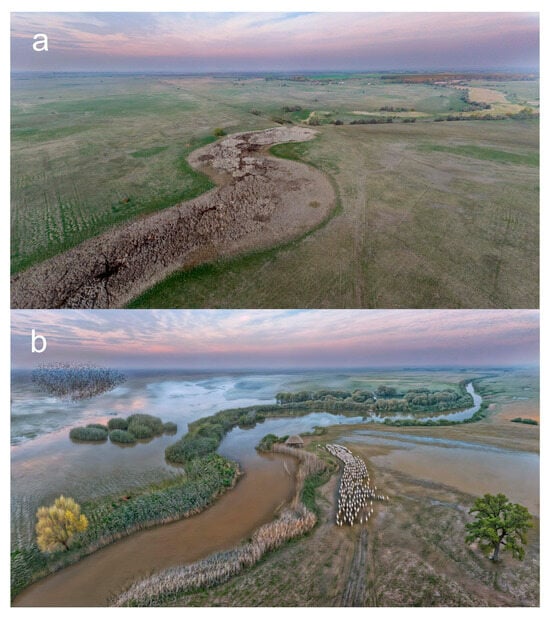

Figure 3.

Királyhegyes pasture in the Middle Ages. In the image, we used generative fill to replace modern arable fields with grasslands and marshes, and to replace the filled-in, dry riverbed with a river, in accordance with medieval archival documents. (a) Original drone photo from May 2024; (b) AI-generated image.

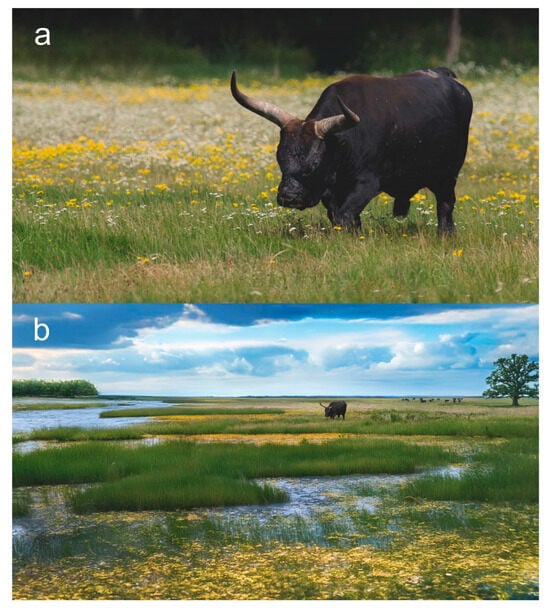

Figure 4.

Group of aurochs (Bos primigenius) in the Great Hungarian Plain. The original image of Heck cattle was expanded several times using generative fill. (a) Original image from the Hortobágy National Park; (b) AI-generated landscape.

Figure 5.

River Büsér in the Middle Ages. We used generative fill to replace the grasslands with marshes and the dry riverbed with a river, and to remove modern drainage channels and roads. (a) Original drone photo from September 2024; (b) AI-generated image.

3.3. Using Compositional References

The highest level of landscape reconstruction is currently achieved by using historical maps or three-dimensional terrain models as compositional (structural) references. This approach allows for a high degree of spatial accuracy in depicting past landscapes. In Hungary, for example, the maps from the First Military Survey are particularly valuable for reconstructing the hydrography and vegetation patterns as they existed 200+ years ago (between 1782 and 1785) [35]. By integrating such historical cartographic sources with AI-generated imagery, researchers can create reconstructions that are not only visually compelling but also scientifically well-founded. The accuracy of terrain features, such as river courses, wetlands, and forested areas, can be significantly improved by aligning AI-generated images with these historical spatial references.

The Arcanum Maps/MAPIRE source mentioned above is considered optimal in terms of both the area covered by its published databases (most of Europe in the 18th and 19th centuries and parts of the United States mapped before 1926) and the large scale of its maps [44]. However, georeferenced historical maps are also available on other portals. The largest of these is the David Rumsey Map Collection [45], where the maps presented can be downloaded in georeferenced form, and multi-section maps (e.g., the early Cassini map of France) are available in unified, georeferenced mosaics. A similar technical approach is followed by the USGS Historical Topographic Maps project [46]. An important resource is the OldMapsOnline server [47], developed in the Czech Republic, which processes and presents maps from numerous archival collaborators. Some national archives and universities also publish maps of their main areas of interest independently, notably the National Library of Scotland – Historical Maps [48], which publishes maps of the British Isles.

It is advisable to modify maps before AI image generation. Maps should be rotated for better perspective (Figure 6), and in mountainous regions, they can be adjusted to fit a Digital Elevation Model (DEM). Any prominent labels, grids, or markings should be removed beforehand, as the AI may mistakenly interpret them as landscape features. Instead of using complex maps, creating a simple sketch with strong contrast to serve as a compositional guide would be beneficial. The use of DEM is not presented in this publication due to the flat topography of the landscape, but it would yield similar results to those achieved with maps.
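As a toy illustration of what a ‘simple sketch with strong contrast’ means in raster terms, the following example binarizes a tiny grayscale grid and rotates it by a quarter turn. It is a deliberately simplified sketch using plain Python lists: the pixel values are hypothetical, the threshold of 128 is an arbitrary assumption, and a real workflow would perform the equivalent steps (plus label removal and arbitrary rotation angles) in an image editor or imaging library.

```python
def to_high_contrast(raster, threshold=128):
    """Binarize a grayscale raster (0-255): dark pixels become 0, light ones 255."""
    return [[0 if value < threshold else 255 for value in row] for row in raster]


def rotate_90_clockwise(raster):
    """Rotate a raster given as a list of rows by 90 degrees clockwise."""
    return [list(row) for row in zip(*raster[::-1])]


# Hypothetical 3 x 4 grayscale scan: dark values stand for a river, light ones for land.
scan = [
    [230, 220,  40, 210],
    [225,  35,  45, 215],
    [ 30,  50, 205, 220],
]

sketch = rotate_90_clockwise(to_high_contrast(scan))
for row in sketch:
    print(row)
```

After binarization only two classes remain (watercourse and land), which is the kind of unambiguous structure that works well as a compositional guide.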

Figure 6.

Nagy-Nádas Swamp at Farmos before water regulations. (a) Detail of the First Military Survey (1782–1785) map that serves as a compositional model; (b) AI-generated image.

3.4. Upscaling

The previous steps leave visible traces on the initial images, and the quality of the generative fill often differs noticeably from the original details. Another potential issue is that the resolution of the generated images may be insufficient for high-quality publications. To address this, upscaling the images is highly recommended. In this publication, all landscapes have been upscaled using the Krea application. This process not only enhances resolution but also ensures consistency in image quality and detail across different areas.

If only minor adjustments are needed, the AI strength can be set to a minimum. A common mistake is setting AI processing too high, which may distort certain objects (e.g., animals) or introduce elements that do not naturally fit the landscape. After this step, it may be necessary to sharpen the images, enhance contrast, or reduce noise. Adobe Lightroom is the easiest tool for finalizing these adjustments.

The final image resolution typically ranges between 10 and 20 megapixels, which is sufficient for most publications, making higher resolutions unnecessary. The images are saved in .png or .jpg format. In some cases, it was necessary to step back into the generative fill workflow to remove or add objects. Importantly, the entire workflow takes no more than 1–2 h, provided the selected input samples are suitable.
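As a rough aid for choosing the upscaling setting, the following sketch estimates the linear scale factor needed to reach a target resolution within the 10 to 20 megapixel range mentioned above. The function name and the example dimensions are assumptions made for illustration; the upscaling application itself may only offer fixed factors.

```python
import math


def upscale_plan(width, height, target_megapixels):
    """Return (factor, new_width, new_height) needed to reach the target size.

    The factor is applied to both sides, so the pixel count grows with its
    square. Images that already meet the target are left unchanged.
    """
    current_pixels = width * height
    target_pixels = target_megapixels * 1_000_000
    factor = max(1.0, math.sqrt(target_pixels / current_pixels))
    return factor, math.ceil(width * factor), math.ceil(height * factor)


# Hypothetical generated image of 2048 x 1152 pixels, target 12 megapixels.
factor, new_width, new_height = upscale_plan(2048, 1152, 12)
print(f"scale by {factor:.2f} to {new_width} x {new_height} pixels")
```

Because the pixel count scales with the square of the factor, a generated image of about 2.4 megapixels needs only a little more than a twofold enlargement to reach the lower end of the recommended range.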

4. Discussion and Conclusions

Based on our experience, the most striking results can be achieved by restoring vanished landscape elements, such as the unregulated rivers and wetlands characteristic of the GHP. Representing specific, unique landscape features—such as traditional land-use patterns or folk architectural objects—poses certain challenges. Nevertheless, with appropriate methodologies and innovative approaches, these visualization issues can be progressively resolved.

An interesting question is whether the method described above can be verified to produce at least somewhat realistic images (cf. Figure 7). It is clearly impossible to match the landscape of hundreds of years ago with drone photos that did not exist at that time. However, nothing prevents us from applying our method to today’s maps and comparing the AI-generated images with current images, although some minor aspects (see below) prevent full similarity.

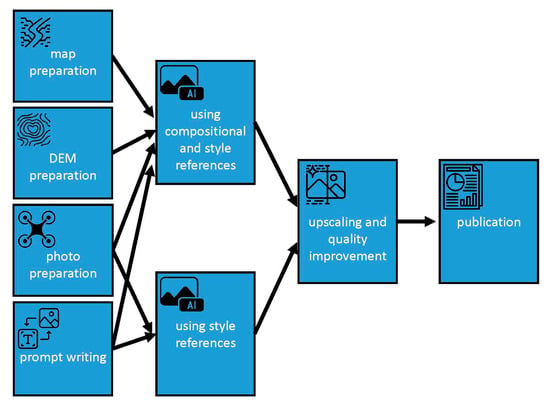

Figure 7.

Flowchart of the methodological approach proposed in this study. It is recommended to discuss the visualization with members of the research team before publication.

The structural similarity between parts (a) and (c) of Figure 8 is clear; the differences lie in the color scheme and generalization (neglect of minor details). We can therefore conclude that the “historical drone photographs” produced by our method show a similar generalization of the landscape. However, some factors cause differences in general appearance despite structural similarity:

- The “modern” map used is approximately 40 years old, surveyed in the mid-1970s. Minor to medium-scale differences in land use exist, especially regarding the forest cover and the water-affected areas;

- Active wetland restoration is ongoing in this area. The open water areas visible in the summer satellite image relate to this;

- Even the visualization provided by Google Earth requires a certain level of expertise. In contrast, the generated AI image, although somewhat stylized and generalized, clearly illustrates the patterns of land use in a way that is understandable to everyone.

Figure 8.

Verification of the method with modern databases: (a) AI-generated image of Farmos Nagy-Nádas (Central Hungary; zone (4) in Figure 1), based on modern topographic map (b); compared to the Google Earth image with satellite image from June 2021 (c). The land cover types show some medium-level generalization, yet the similarity is evident.
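The comparison in Figure 8 is visual, and this article reports no numerical results. Should a rough figure be desired, one possible approach is to classify both images into coarse land-cover cells and count the share of matching cells. The sketch below assumes such grids already exist; the class labels (A for arable land, F for forest, W for water) and the two small grids are invented for illustration only.

```python
def cell_agreement(grid_a, grid_b):
    """Return the fraction of cells carrying the same land-cover label."""
    if len(grid_a) != len(grid_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(grid_a, grid_b)
    ):
        raise ValueError("both grids must have the same shape")
    pairs = [
        (a, b)
        for row_a, row_b in zip(grid_a, grid_b)
        for a, b in zip(row_a, row_b)
    ]
    return sum(a == b for a, b in pairs) / len(pairs)


# Hypothetical coarse classifications of the generated and reference images.
generated = [
    ["A", "A", "F", "F"],
    ["A", "W", "W", "F"],
    ["A", "A", "W", "F"],
]
reference = [
    ["A", "A", "F", "F"],
    ["A", "W", "A", "F"],
    ["A", "A", "W", "W"],
]

score = cell_agreement(generated, reference)
print(f"matching cells: {score:.0%}")
```

Such a score only reflects structural agreement at the chosen cell size; it says nothing about color or style, and differences such as those caused by the age of the map would lower it without indicating a failure of the method.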

The biggest challenge in AI-generated images is accurately representing spatial relationships. Landscape elements are often misproportioned, with animals, people, and buildings appearing too large relative to their surroundings. Animals, in particular, tend to be distorted. Better results can be achieved by omitting animals from the initial image generation and adding them later using generative fill. Distortions commonly affect legs and horns, which often require correction through additional editing. Interestingly, some animals (e.g., sheep, wolves, pigs) are rendered more successfully than others. For optimal results, animals should be generated in groups—such as herds or packs—from a bird’s-eye view, where minor imperfections are less noticeable. However, AI’s tendency to repeat patterns is evident in these images.

One solution to address local specificity is to add similar elements to the original image using generative fill, e.g., increasing the number of animals (Figure 4). Another technique is to use the name of a somewhat similar, more common object in the prompt. For example, entering ‘group of white bulls’ instead of ‘herd of Hungarian Grey cattle’ works better, since from a bird’s-eye view the difference is not noticeable (Figure 5). Similarly, replacing the word ‘swallow’ with ‘long thatched farmhouse’ yields more successful results.
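The substitution of locally specific terms can be kept consistent across prompts with a small lookup table. The sketch below is only an illustration: the table holds the cattle example from the text, while the function name and the sample prompt are hypothetical.

```python
# Locally specific term -> more common term that renders more reliably.
SUBSTITUTIONS = {
    "herd of Hungarian Grey cattle": "group of white bulls",
}


def generalize_prompt(prompt, substitutions=SUBSTITUTIONS):
    """Replace each locally specific phrase with its more common stand-in."""
    for specific, generic in substitutions.items():
        prompt = prompt.replace(specific, generic)
    return prompt


# Hypothetical prompt used only to demonstrate the replacement.
original = "aerial view of a floodplain with a herd of Hungarian Grey cattle"
print(generalize_prompt(original))
```

Keeping the pairs in one table makes it easy to record which substitutions worked and to reuse them in later visualizations.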

A future advancement in AI image generation could be the ability to modify specific parts of an image in real time using prompts, such as adjusting the size of certain objects. Currently, however, options for this functionality are limited. Another useful feature would be the ability to easily change the perspective of an image (e.g., height, direction).

For each new visualization method, it is important to evaluate its suitability for scientific applications. The content of visualizations significantly influences their effectiveness, with authenticity, relevance, and legitimacy being key factors [4]. In The Truthful Art, Alberto Cairo outlines five key quality criteria for effective data visualization [49]:

- Truthful—the visualization accurately and honestly represents the data, avoiding distortions or manipulations;

- Functional—the design effectively communicates the intended message, making the data easy to understand for the audience;

- Beautiful—aesthetics enhance the visualization without compromising clarity or accuracy;

- Insightful—the visualization not only displays data but also reveals new patterns, trends, or relationships;

- Enlightening—it educates the audience by providing context and helping them better understand the topic.

The first criterion, the truthfulness of generated images, can be questioned, as the outcome cannot be predicted with mathematical precision; the described method serves merely as a guideline. Unlike plotting a graph, the final result of image generation is not deterministic. Instead, a creative process is required, involving discussion and ultimately consensus among the research group members. Enhancing credibility requires that visualizations be based on reliable data sources and that creators remain transparent about their methods. The authors emphasize that complete objectivity is unattainable, as every visualization embodies a particular perspective. Consequently, involving research participants in the creation process is crucial to ensuring that the content accurately represents diverse viewpoints.

Using sample photos as style and composition references significantly increases the realistic appearance of the images, but the final result depends on the accuracy of the selected samples. It is also important to emphasize that ethical and copyright issues can be avoided by using one’s own photos.

Mastering AI applications will soon be essential for researchers, enabling them to generate images quickly and affordably. With well-chosen sample images, high-quality visuals can be created within an hour. As user interfaces become more interactive, AI tools will become a routine part of researchers’ skill sets. We believe this method will be most often used for creating graphical abstracts. The technology is advancing rapidly, and the era of AI-generated landscape videos is approaching, allowing experts to visualize landscape processes and phenomena dynamically.

However, striking landscape visualizations are not limited to scientific publications. This approach can also illustrate expected outcomes of habitat conservation reconstructions or depict the future impacts of global climate change. Such visualizations offer remarkable opportunities for communication campaigns as well.

Author Contributions

Conceptualization, G.J. and B.J.; methodology, G.J.; software, G.T.; validation, G.J., E.M., B.J. and G.T.; formal analysis, G.J.; investigation, G.J.; resources, G.J. and G.T.; data curation, G.J. and G.T.; writing—original draft preparation, G.J.; writing—review and editing, G.J. and G.T.; visualization, G.J. and B.J.; supervision, E.M. and G.T.; project administration, E.M.; funding acquisition, G.J. and E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Climate Change Hungarian National Laboratory, grant number RRF-2.3.1-21-2022-00014, under projects NKFIH 129167 and KKP 144209.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Benedek Jakab was employed by the company RAIZEN.Art. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Tufte, E.R. The Visual Display of Quantitative Information; Graphics Press: Cheshire, CT, USA, 1983.

2. Ware, C. Information Visualization—Perception for Design, 5th ed.; Morgan Kaufmann: Cambridge, MA, USA, 2019.

3. Brinch, S. What we talk about when we talk about beautiful data visualizations. In Data Visualization in Society; Amsterdam University Press: Amsterdam, The Netherlands, 2020; pp. 259–276.

4. Lovett, A.; Appleton, K.; Warren-Kretzschmar, B.; Von Haaren, C. Using 3D visualization methods in landscape planning: An evaluation of options and practical issues. Landsc. Urban Plan. 2015, 142, 85–94.

5. Fernberg, P.; Chamberlain, B. Artificial Intelligence in Landscape Architecture: A Literature Review. Landsc. J. 2023, 42, 13–35.

6. Blender 4.4. Action-Packed and Feature-Filled. Available online: https://blender.org (accessed on 16 June 2025).

7. Planetside Software: Render Beautifully Realistic CG Environments. Available online: https://planetside.co.uk (accessed on 16 June 2025).

8. WorldMachine: Simulate Don’t Sculpt. Available online: https://www.world-machine.com (accessed on 16 June 2025).

9. Gaea—Create Anything. Available online: https://quadspinner.com (accessed on 16 June 2025).

10. ArcGIS Pro—The World’s Leading Desktop GIS Software. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview (accessed on 16 June 2025).

11. QGIS Spatial Without Compromise. Available online: https://qgis.org (accessed on 16 June 2025).

12. Google Earth Studio. Available online: https://google.com/earth/studio (accessed on 16 June 2025).

13. Adobe Lightroom—Meet a Smarter Mobile Photo Editor. Available online: https://www.adobe.com/products/photoshop-lightroom.html (accessed on 16 June 2025).

14. Liu, J.-H.; Zhang, S.-K.; Zhang, C.; Zhang, S.-H. Controllable Procedural Generation of Landscapes. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, Australia, 28 October–1 November 2024; pp. 6394–6403.

15. Williams, M. Deforesting the Earth: From Prehistory to Global Crisis; University of Chicago Press: Chicago, IL, USA, 2003.

16. Muir, R. New Reading the Landscape: Fieldwork in Landscape History; University of Exeter Press: Exeter, UK, 2000.

17. Taylor, C. Fields in the English Landscape; J. M. Dent: London, UK, 1975.

18. Birks, H.J.B.; Birks, H.H. Quaternary Palaeoecology; Edward Arnold: London, UK, 1980.

19. Aston, M. Interpreting the Landscape: Landscape Archaeology and Local History; Routledge: London, UK, 2002.

20. Berkes, F. Sacred Ecology; Routledge: London, UK, 2017.

21. Molnár, Z. Hortobágyi pásztorok hagyományos ökológiai tudása a legeltetésről, kaszálásról és ennek természetvédelmi vonatkozásai. Természetvédelmi Közlemények 2011, 17, 12–30.

22. Bölöni, J.; Molnár, Zs.; Horváth, F.; Illyés, E. Naturalness-based habitat quality of the Hungarian (semi-)natural habitats. Acta Bot. Hung. 2008, 50 (Suppl. S1), 149–159.

23. Cholnoky, J. Az Alföld felszíne. Földrajzi Közlemények 1910, 38, 413–436.

24. Lászlóffy, W. A Tisza-völgy. Vízügyi Közlemények 1932, 14, 108–142.

25. Timár, G.; Rácz, T. The effects of neotectonic and hydrological processes on the flood hazard of the Tisza region (East Hungary). In Neotectonics and Seismicity of the Pannonian Basin and Surrounding Orogens—A Memoir on the Pannonian Basin; Cloetingh, S., Horváth, F., Bada, G., Lankreijer, A., Eds.; EGU Stephan Mueller Special Publication Series; Copernicus Publications: Katlenburg-Lindau, Germany, 2002; Volume 3, pp. 267–275.

26. Vandenberghe, J. Timescales, climate and river development. Quat. Sci. Rev. 1995, 14, 631–638.

27. Andrásfalvy, B. A Duna Mente Népének ártéri Gazdálkodása: Ártéri Gazdálkodás Tolna és Baranya Megyében az Ármentesítési Munkák Befejezése Előtt; Ekvilibrium: Budapest, Hungary, 2007.

28. Károlyi, Z. A Tisza Mederváltozásai, Különös Tekintettel az Árvízvédelemre; VITUKI: Budapest, Hungary, 1960.

29. Lászlóffy, W. A Tisza—Vízi Munkálatok És vízgazdálkodás a Tiszai Vízrendszerben; Akadémiai Kiadó: Budapest, Hungary, 1982.

30. Bogdánfy, Ö. A középtiszai nyílt árterek és gátszakadások hatása az árvíz magasságára. Vízügyi Közlemények 1916, 6, 163–170.

31. Bartha, D. Az Alföld jelenkori vegetációjának kialakulása. Hidrológiai Közlöny 1993, 73, 17–19.

32. Mezősi, G. Magyarország Természetföldrajza; Akadémiai Kiadó: Budapest, Hungary, 2011.

33. Somogyi, S. Az ármentesítések és folyószabályozások (vázlatos) földrajzi hatásai hazánkban. Földrajzi Közlemények 1967, 91, 145–158.

34. Timár, G.; Gábris, G. Estimation of the water conducting capacity of the natural flood conducting channels of the Tisza floodplain, the Great Hungarian Plain. Geomorphology 2008, 98, 250–261.

35. Paldus, J. Die militärischen Aufnahmen im Bereiche der Habsburgischen Länder aus der Zeit Kaiser Josephs II; Denkschriften 63 Band 2; Akademie der Wissenschaften in Wien, Philosophisch-historische Klasse: Vienna, Austria, 1919; pp. 1–112.

36. Molnár, G.; Timár, G. Inversion application in cartography: Estimation of the parameters of the best fitting Cassini-projections of the First Habsburg Military Survey. Geosci. Eng. 2015, 6, 36–44.

37. Timár, G. Possible Projection of the First Military Survey of the Habsburg Empire in Lower Austria and Hungary (Late 18th Century)—An Improvement in Fitting Historical Topographic Maps to Modern Cartographic Systems. ISPRS Int. J. Geo-Inf. 2023, 12, 220.

38. Biszak, E.; Kulovits, H.; Biszak, S.; Timár, G.; Molnár, G.; Székely, B.; Jankó, A.; Kenyeres, I. Cartographic heritage of the Habsburg Empire on the web: The MAPIRE initiative. In Proceedings of the 9th International Workshop on Digital Approaches to Cartographic Heritage, Budapest, Hungary, 4–5 September 2014; pp. 26–31.

39. Jankó, A. Magyarország Katonai Felmérései; Argumentum: Budapest, Hungary, 2007.

40. Timár, G.; Jakab, G.; Székely, B. A Step from Vulnerability to Resilience: Restoring the Landscape Water-Storage Capacity of the Great Hungarian Plain—An Assessment and a Proposal. Land 2024, 13, 146.

41. ChatGPT. Available online: https://chatgpt.com (accessed on 21 April 2025).

42. Adobe Firefly. Available online: https://firefly.adobe.com (accessed on 21 April 2025).

43. Krea Enhancer. Available online: https://www.krea.ai/enhancer (accessed on 21 April 2025).

44. Arcanum Maps—The Historical Map Portal. Available online: https://maps.arcanum.com (accessed on 16 June 2025).

45. David Rumsey Map Collection. Available online: https://www.davidrumsey.com/ (accessed on 16 June 2025).

46. USGS Historical Topographic Maps—Preserving the Past. Available online: https://www.usgs.gov/programs/national-geospatial-program/historical-topographic-maps-preserving-past (accessed on 16 June 2025).

47. Discover History Through OldMapsOnline. Available online: https://www.oldmapsonline.org (accessed on 16 June 2025).

48. The National Library of Scotland—Leabharlann Naiseanta na h-Alba, Map Images. Available online: https://maps.nls.uk/ (accessed on 16 June 2025).

49. Cairo, A. The Truthful Art: Data, Charts and Maps for Communication; New Riders Publishing: Indianapolis, IN, USA, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).