Landscape Design Outdoor–Indoor VR Environments User Experience

Abstract

1. Introduction

2. VR and Game Engines for Landscape Design

3. Materials and Methods

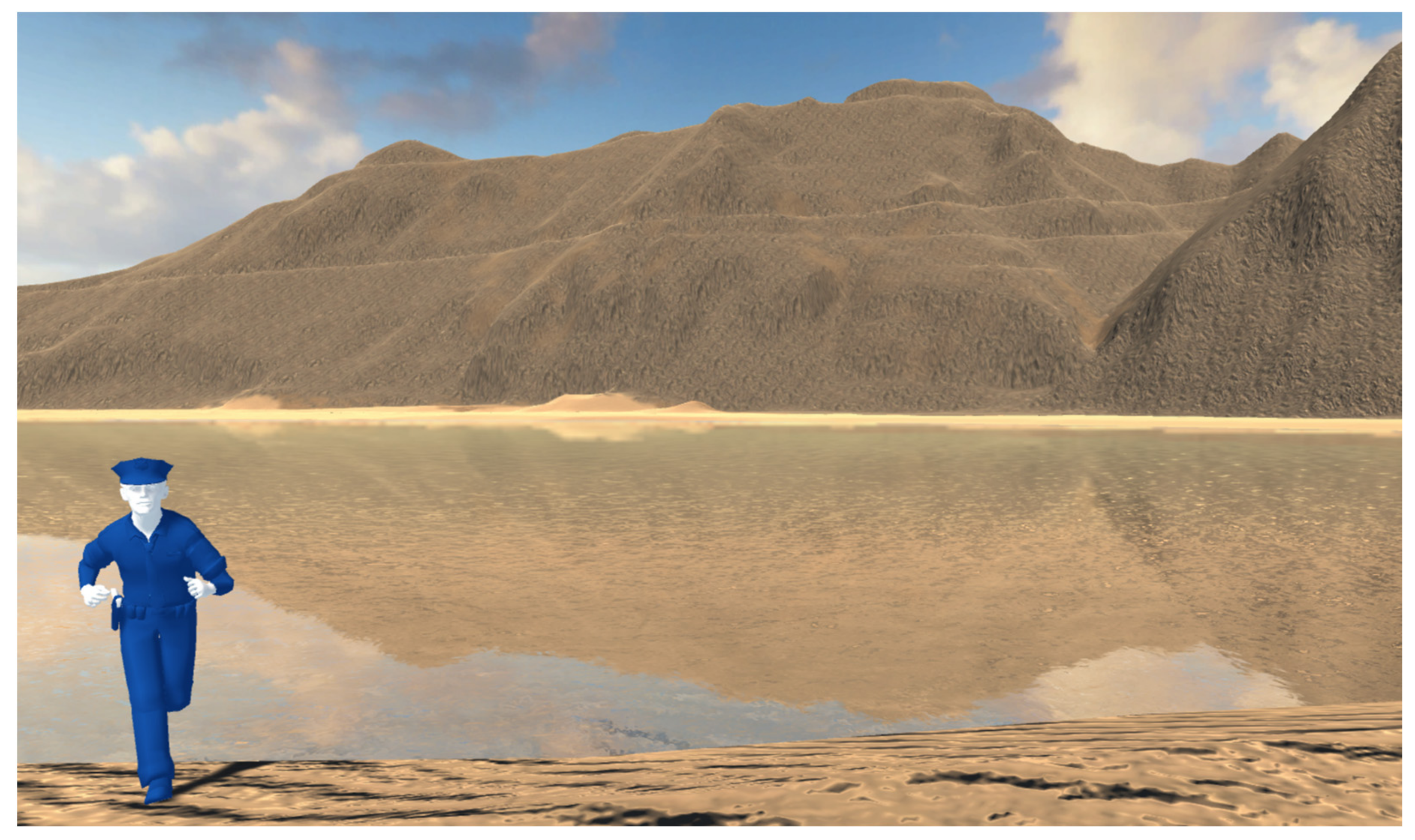

3.1. Unity 3D Game Engine

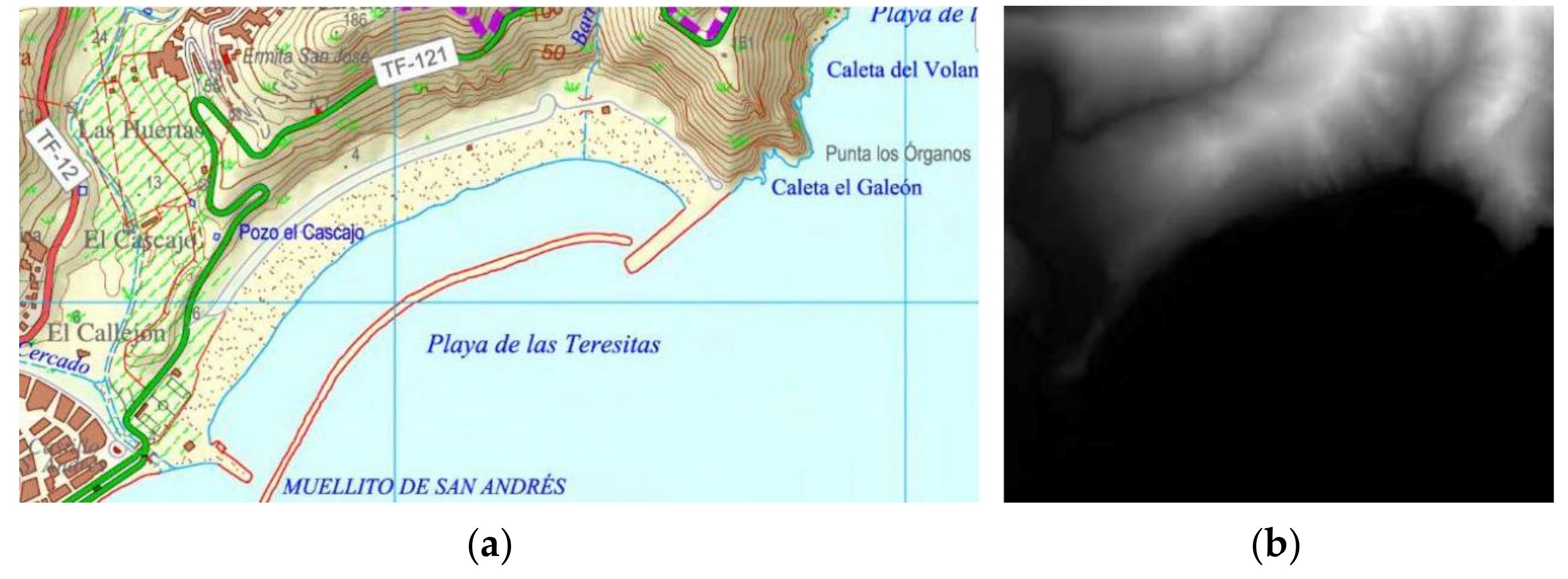

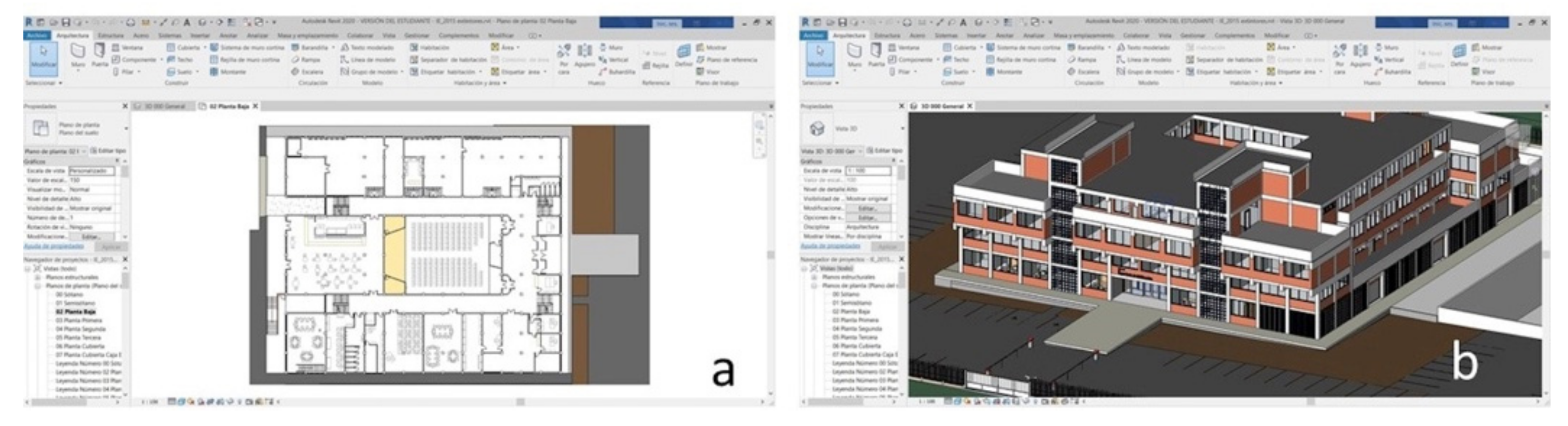

3.2. Data Sources and Instructions for Indoor–Outdoor Workshop Design

3.3. Questionnaire on User eXperience in Immersive Virtual Environments

3.4. Participants

3.5. Procedure

4. Results

Results of the Questionnaire on User eXperience in Immersive Virtual Environments

5. Discussion

6. Conclusions

- Greater levels of user experience were reported in the outdoor workshop than in the indoor workshop.

- Differences were found in the presence, immersion, flow, and emotion subscales, where scores were significantly higher in the open (outdoor) VR environment than in the indoor one.

- Regarding the presence subscale, obstacles limit freedom of movement, which lowers the sense of presence.

- A more fluid and natural navigation within the virtual environment also generates positive effects on the flow component.

- Regarding immersion, light plays a less fundamental role in navigation in closed environments.

- The impact of working with VR technology on the user’s health (dizziness, fear…) needs to be studied.

- Although the scores obtained in both desktop VR environments are above 5 (on a 1–10 Likert scale), it would be interesting to measure the user response with highly immersive VR systems.

Author Contributions

Funding

Conflicts of Interest

References

1. Xie, H.; Wei, L.; Li, P. Application of Computer Virtual Reality Technology in Design. J. Phys. Conf. Ser. 2020, 1575, 012123.
2. Motloch, J.L. Introduction to Landscape Design; John Wiley & Sons: Hoboken, NJ, USA, 2000.
3. Liu, M.; Nijhuis, S. Mapping landscape spaces: Methods for understanding spatial-visual characteristics in landscape design. Environ. Impact Assess. Rev. 2020, 82, 106376.
4. Kara, B. Landscape design and cognitive psychology. Procedia-Soc. Behav. Sci. 2013, 82, 288–291.
5. Denerel, S.B.; Birişçi, T. A Research on Landscape Architecture Student Use of Traditional and Computer-Aided Drawing Tools. Amazon. Investig. 2019, 8, 373–385.
6. Simonds, J.O. Landscape Architecture: A Manual of Site Planning and Design; McGraw-Hill: New York, NY, USA, 1997.
7. Dee, C. Form and Fabric in Landscape Architecture: A Visual Introduction; Taylor & Francis: Oxfordshire, UK, 2004.
8. Jiao, J.; Liu, H.; Zhang, N. Research on the urban landscape design based on digital multimedia technology and virtual simulation. Int. J. Smart Home 2016, 10, 133–144.
9. Ervin, S.M. Digital landscape modeling and visualization: A research agenda. Landsc. Urban Plan. 2001, 54, 49–62.
10. Dinkov, D.; Vatseva, R. 3D modelling and visualization for landscape simulation. In Proceedings of the 6th International Conference on Cartography and GIS, Albena, Bulgaria, 13–17 June 2016.
11. Bruns, C.R.; Chamberlain, B.C. The influence of landmarks and urban form on cognitive maps using virtual reality. Landsc. Urban Plan. 2019, 189, 296–306.
12. Thomas, B.H.; Piekarski, W. Outdoor virtual reality. ISICT 2003, 3, 226–231.
13. Lacoche, J.; Villain, E.; Foulonneau, A. Evaluating Usability and User Experience of AR applications in VR Simulation. Front. Virtual Real. 2022, 87, 881318.
14. George, B.H.; Summerlin, P.R. The Current State of Software Amongst Landscape Architecture Practitioners; Education and Practice Professional Practice Network of the American Society of Landscape Architects: Philadelphia, PA, USA, 2018.
15. Li, Z.; Cheng, Y.; Yuan, Y. Research on the application of virtual reality technology in landscape design teaching. Educ. Sci. Theory Pract. 2018, 18, 1400–1410.
16. Zheng, L. Exploring the Application Scenario Test of 3D Software Virtual Reality Technology in Indoor and Outdoor Design. In Proceedings of the 6th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 29–31 March 2022; pp. 1509–1512.
17. Hill, D.M. How Virtual Reality Impacts the Landscape Architecture Design Process at Various Scales. Ph.D. Thesis, Utah State University, Logan, UT, USA, 2019.
18. George, B.H.; Sleipness, O.R.; Quebbeman, A. Using virtual reality as a design input: Impacts on collaboration in a university design studio setting. J. Digit. Landsc. Archit. 2017, 2, 252–259.
19. Gao Chamberlain, B.C. Crash course or course crash: Gaming, VR and a pedagogical approach. J. Digit. Landsc. Archit. 2015, 2015, 354–361.
20. Gao, T.; Liang, H.; Chen, Y.; Qiu, L. Comparisons of Landscape Preferences through Three Different Perceptual Approaches. Int. J. Environ. Res. Public Health 2019, 16, 4754.
21. Dede, C. Immersive interfaces for engagement and learning. Science 2009, 323, 66–69.
22. Mikropoulos, T.A.; Natsis, A. Educational virtual environments: A ten-year review of empirical research (1999–2009). Comput. Educ. 2011, 56, 769–780.
23. Webster, R. Declarative knowledge acquisition in immersive virtual learning environments. Interact. Learn. Environ. 2016, 24, 1319–1333.
24. Lee, E.A.L.; Wong, K.W. A review of using virtual reality for learning. In Transactions on Edutainment I; Springer: Berlin/Heidelberg, Germany, 2008; pp. 231–241.
25. Mikropoulos, T.A.; Bellou, J. The unique features of educational virtual environments. In Teaching and Learning with Technology; Routledge: Oxfordshire, UK, 2010; pp. 269–278.
26. Ragan, E.D.; Bowman, D.A.; Huber, K.J. Supporting cognitive processing with spatial information presentations in virtual environments. Virtual Real. 2012, 16, 301–314.
27. Roussou, M.; Oliver, M.; Slater, M. The virtual playground: An educational virtual reality environment for evaluating interactivity and conceptual learning. Virtual Real. 2006, 10, 227–240.
28. Jia, D.; Bhatti, A.; Nahavandi, S. The impact of self-efficacy and perceived system efficacy on effectiveness of virtual training systems. Behav. Inf. Technol. 2014, 33, 16–35.
29. Sayyad, E.; Sra, M.; Höllerer, T. Walking and teleportation in wide-area virtual reality experiences. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 608–617.
30. Halik, Ł. Challenges in converting the Polish topographic database of built-up areas into 3D virtual reality geovisualization. Cartogr. J. 2018, 55, 391–399.
31. Griffin, A.L.; Robinson, A.C.; Roth, R.E. Envisioning the future of cartographic research. Int. J. Cartogr. 2017, 3, 1–8.
32. Kent, A. Trust me, I’m a cartographer: Post-truth and the problem of acritical cartography. Cartogr. J. 2017, 54, 193–195.
33. Hernández, H.H.H.; González, V.M. Comparative analysis of user experience in virtual photographic-based presence platform. In Proceedings of the Latin American Conference on Human Computer Interaction, Córdoba, Argentina, 18–21 November 2015; pp. 1–7.
34. Carbonell-Carrera, C.; Saorín, J.L. Geospatial Google Street View with virtual reality: A motivational approach for spatial training education. ISPRS Int. J. Geo-Inf. 2017, 6, 261.
35. Kim, Y.M.; Rhiu, I. A comparative study of navigation interfaces in virtual reality environments: A mixed-method approach. Appl. Ergon. 2021, 96, 103482.
36. Livingston, M.A.; Ai, Z.; Swan, J.E.; Smallman, H.S. Indoor vs. outdoor depth perception for mobile augmented reality. In Proceedings of the 2009 IEEE Virtual Reality Conference, Lafayette, LA, USA, 14–18 March 2009; pp. 55–62.
37. Yu, D. Interior landscape design and research based on virtual reality technology. J. Phys. Conf. Ser. 2020, 1533, 032038.
38. Cao, L.; Lin, J.; Li, N. A virtual reality based study of indoor fire evacuation after active or passive spatial exploration. Comput. Hum. Behav. 2019, 90, 37–45.
39. Natephra, W.; Motamedi, A.; Fukuda, T.; Yabuki, N. Integrating building information modeling and virtual reality development engines for building indoor lighting design. Vis. Eng. 2017, 5, 19.
40. Sharkawi, K.H.; Ujang, M.U.; Abdul-Rahman, A. 3D navigation system for virtual reality based on 3D game engine. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Beijing, China, 3–11 July 2008; Volume 37.
41. Fox, N.; Serrano-Vergel, R.; Van Berkel, D.; Lindquist, M. Towards Gamified Decision Support Systems: In-game 3D Representation of Real-word Landscapes from GIS Datasets. J. Digit. Landsc. Archit. 2022, 7, 356–364.
42. Suyu, W.; Xiaogang, C. The Application of Building Information Modeling (BIM) in landscape Architecture Engineering. J. Landsc. Res. 2018, 10, 5.
43. Carbonell-Carrera, C.; Saorin, J.L.; Jaeger, A.J. Navigation Tasks in Desktop VR Environments to Improve the Spatial Orientation Skill of Building Engineers. Buildings 2021, 11, 492.
44. Carbonell-Carrera, C.; Saorin, J.L.; Melián Díaz, D. User VR experience and motivation study in an immersive 3D geovisualization environment using a game engine for landscape design teaching. Land 2021, 10, 492.
45. Tcha-Tokey, K.; Loup-Escande, E.; Christmann, O.; Richir, S. A questionnaire to measure the user experience in immersive virtual environments. In Proceedings of the 2016 Virtual Reality International Conference, Laval, France, 1–5 March 2016.
46. Tcha-Tokey, K.; Christmann, O.; Loup-Escande, E.; Richir, S. Proposition and Validation of a Questionnaire to Measure the User Experience in Immersive Virtual Environments. Int. J. Virtual Real. 2016, 16, 33–48.
47. Punia, M.; Kundu, A. Three dimensional modelling and rural landscape geo-visualization using geo-spatial science and technology. Neo Geogr. 2014, 3, 1–19.
48. Koua, E.L.; MacEachren, A.; Kraak, M.J. Evaluating the usability of visualization methods in an exploratory geovisualization environment. Int. J. Geogr. Inf. Sci. 2006, 20, 425–448.
49. Golebiowska, I.; Opach, T.; Rød, J.K. Breaking the Eyes: How Do Users Get Started with a Coordinated and Multiple View Geovisualization Tool? Cartogr. J. 2020, 57, 235–248.
50. Griffon, S.; Nespoulous, A.; Cheylan, J.P.; Marty, P.; Auclair, D. Virtual reality for cultural landscape visualization. Virtual Real. 2011, 15, 279–294.
51. Portman, M.E.; Natapov, A.; Fisher-Gewirtzman, D. To go where no man has gone before: Virtual reality in architecture, landscape architecture and environmental planning. Comput. Environ. Urban Syst. 2015, 54, 376–384.
52. Shin, I.S.; Beirami, M.; Cho, S.J.; Yu, Y.H. Development of 3D terrain visualization for navigation simulation using a Unity 3D development tool. J. Adv. Mar. Eng. Technol. 2015, 39, 570–576.
53. Herwig, A.; Paar, P. Game engines: Tools for landscape visualization and planning. Trends GIS Virtualiz. Environ. Plan. Des. 2002, 161, 172.
54. Lange, E.; Hehl-Lange, S.; Brewer, M.J. Scenario-visualization for the assessment of perceived green space qualities at the urban–rural fringe. J. Environ. Manag. 2008, 89, 245–256.
55. Mól, A.C.A.; Jorge, C.A.F.; Couto, P.M. Using a game engine for VR simulations in evacuation planning. IEEE Comput. Graph. Appl. 2008, 28, 6–12.
56. Williams, K.D. The effects of dissociation, game controllers, and 3D versus 2D on presence and enjoyment. Comput. Hum. Behav. 2014, 38, 142–150.
57. Shiratuddin, M.F.; Thabet, W. Utilizing a 3D game engine to develop a virtual design review system. Electron. J. Inf. Technol. Constr. 2011, 16, 39–68.
58. Laksono, D.; Aditya, T. Utilizing a game engine for interactive 3D topographic data visualization. ISPRS Int. J. Geo-Inf. 2019, 8, 361.
59. Regenbrecht, H.; Schubert, T. Real and illusory interactions enhance presence in virtual environments. Presence Teleoper. Virtual Environ. 2002, 11, 425–434.
60. Kalisperis, L.N.; Muramoto, K.; Balakrishnan, B.; Nikolic, D.; Zikic, N. Evaluating relative impact of virtual reality system variables on architectural design comprehension and presence-A variable-centered approach using fractional factorial experiment. In Proceedings of the 24th International Conference on Education and Research in Computer Aided Architectural Design in Europe (eCAADe), Volos, Greece, 6–9 September 2006.
61. Santos, B.S.; Dias, P.; Pimentel, A.; Baggerman, J.W.; Ferreira, C.; Silva, S.; Madeira, J. Head-mounted display versus desktop for 3D navigation in virtual reality: A user study. Multimed. Tools Appl. 2009, 41, 161–181.
62. Fonseca, D.; Cavalcanti, J.; Peña, E.; Valls, V.; Sanchez-Sepúlveda, M.; Moreira, F.; Navarro, I.; Redondo, E. Mixed assessment of virtual serious games applied in architectural and urban design education. Sensors 2021, 21, 3102.
63. Botella, C.; García-Palacios, A.; Quero, S.; Baños, R.M.; Bretón-López, J.M. Realidad virtual y tratamientos psicológicos: Una revisión. Psicol. Conduct. 2006, 14, 491–509.
64. McCall, R.; O’Neil, S.; Carroll, F. Measuring Presence in Virtual Environments; MIT Press: Cambridge, MA, USA, 2004.
65. Strand, I. Virtual Reality in Design Processes: A literature review of benefits, challenges, and potentials. FormAkademisk 2020, 13, 3874.
66. Tussyadiah, I.P.; Jung, T.H.; Tom Dieck, M.C. Embodiment of wearable augmented reality technology in tourism experiences. J. Travel Res. 2018, 57, 597–611.
67. Zhao, J.; Sensibaugh, T.; Bodenheimer, B.; McNamara, T.P.; Nazareth, A.; Newcombe, N.; Klippel, A. Desktop versus immersive virtual environments: Effects on spatial learning. Spat. Cogn. Comput. 2020, 20, 328–363.
68. Petridis, P.; Dunwell, I.; Panzoli, D.; Arnab, S.; Protopsaltis, A.; Hendrix, M.; de Freitas, S. Game engines selection framework for high-fidelity serious applications. Int. J. Interact. Worlds 2012, 2012, 418638.
69. Carbonell-Carrera, C.; Gunalp, P.; Saorin, J.L.; Hess-Medler, S. Think Spatially With Game Engine. ISPRS Int. J. Geo Inf. 2020, 9, 159.
70. Azarby, S.; Rice, A. Understanding the Effects of Virtual Reality System Usage on Spatial Perception: The Potential Impacts of Immersive Virtual Reality on Spatial Design Decisions. Sustainability 2022, 14, 10326.
71. Holmes, C.A.; Marchette, S.A.; Newcombe, N.S. Multiple views of space: Continuous visual flow enhances small-scale spatial learning. J. Exp. Psychol. Learn. Mem. Cogn. 2017, 43, 851–861.
72. Kozhevnikov, M.; Motes, M.A.; Rasch, B.; Blajenkova, O. Perspective-taking vs. mental rotation transformations and how they predict spatial navigation performance. Appl. Cogn. Psychol. 2006, 20, 397–417.
73. Weisberg, S.M.; Schinazi, V.R.; Newcombe, N.S.; Shipley, T.F.; Epstein, R.A. Variations in cognitive maps: Understanding individual differences in navigation. J. Exp. Psychol. Learn. Mem. Cogn. 2014, 40, 669–682.
74. Friedman, A.; Kohler, B.; Gunalp, P.; Boone, A.P.; Hegarty, M. A computerized spatial orientation test. Behav. Res. Methods 2020, 52, 799–812.
75. Jaalama, K.; Fagerholm, N.; Julin, A.; Virtanen, J.-P.; Maksimainen, M.; Hyyppä, H. Sense of presence and sense of place in perceiving a 3D geovisualization for communication in urban planning–Differences introduced by prior familiarity with the place. Landsc. Urban Plan. 2021, 207, 103996.
76. Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.
77. Heutte, J. La Part du Collectif dans la Motivation et son Impact sur le Bien-Être comme Médiateur de la Réussite des Étudiants: Complémentarités et Contributions entre l’Autodétermination, l’Auto-Efficacité et l’Autotélisme. Ph.D. Thesis, Université Paris-Nanterre, Nanterre, France, 2011.
78. Pekrun, R.; Goetz, T.; Frenzel, A.C.; Barchfeld, P.; Perry, R.P. Measuring emotions in students’ learning and performance: The Achievement Emotions Questionnaire (AEQ). Contemp. Educ. Psychol. 2011, 36, 36–48.
79. Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.
80. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.
81. Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. In Mensch & Computer 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 187–196.
82. Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.
83. George, D.; Mallery, M. SPSS for Windows Step by Step: A Simple Guide and Reference; Allyn & Bacon: Boston, MA, USA, 2003.
84. Hruby, F.; Ressl, R.; de la Borbolla Del Valle, G. Geovisualization with immersive virtual environments in theory and practice. Int. J. Digit. Earth 2019, 12, 123–136.
85. Hegazy, M.; Yasufuku, K.; Abe, H. Evaluating and visualizing perceptual impressions of daylighting in immersive virtual environments. J. Asian Archit. Build. Eng. 2021, 20, 768–784.

| Subscale | Is Defined as… | Origin Questionnaire |
|---|---|---|
| Presence | “The user’s ‘sense of being there’ in the virtual environment” | The Presence Questionnaire [76]; The Immersive Tendency Questionnaire (ITQ) [76] |
| Engagement | “The energy in action, the connection between a person and its activity consisting of a behavioral, emotional and cognitive form” | |
| Immersion | The “illusion” that “the virtual environment technology replaces the user’s sensory stimuli by the virtual sensory stimuli” | |
| Flow | “A pleasant psychological state of sense of control, fun and joy” that the user feels when interacting with the virtual environment | Flow 4D16 [77] |
| Emotion | “The feelings (of joy, pleasure, satisfaction, frustration, disappointment, anxiety, etc.) of the user in the virtual environment” | Achievement Emotions Questionnaire (AEQ) [78] |
| Usability | “The ease of learning (learnability and memorizing) and the ease of using (efficiency, effectiveness and satisfaction) the virtual environment” | System Usability Scale (SUS) [79] |
| Technology adoption | “The actions and decisions taken by the user for a future use or intention to use the virtual environment” | Unified Theory of Acceptance and Use of Technology (UTAUT) [80] |
| Judgment | “The overall judgment of the experience in the virtual environment” | AttrakDiff 2 questionnaire [81] |
| Experience consequence | “The symptoms (e.g., the ‘simulator sickness’, stress, dizziness, headache, etc.) the user can experience in the virtual environment” | Simulator Sickness Questionnaire (SSQ) [82] |

Workshop Structure

| Week 1 | Week 2 | Week 3 |
|---|---|---|
| Phase 1. Instruction | Outdoor Workshop | Indoor Workshop |
| Creation of the 3D environment. 1 h | Phase 2. Navigation tasks. 2 h | Phase 2. Navigation tasks. 2 h |
| Operation with FPS controller. 1 h | Phase 3. Questionnaire. 1 h 30 m | Phase 3. Questionnaire. 1 h 30 m |

Questionnaire on User Experience in Immersive Virtual Environments: Presence Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) | t Value |
|---|---|---|---|
| 1. “The virtual environment was responsive to actions that I initiated” | 9.23 (1.50) | 8.66 (1.68) | 1.51 |
| 2. “My interactions with the virtual environment seemed natural” | 7.37 (1.52) | 7.40 (1.97) | −0.09 |
| 3. “The devices (gamepad or keyboard), which controlled my movement in the virtual environment, seemed natural” | 7.89 (1.41) | 7.37 (2.06) | 1.86 |
| 4. “I was able to actively survey the virtual environment” | 9.03 (1.34) | 8.00 (2.18) | 3.20 ** |
| 5. “I was able to examine objects closely” | 8.71 (1.32) | 8.03 (1.89) | 2.22 * |
| 6. “I could examine objects from multiple viewpoints” | 9.09 (1.40) | 8.74 (1.31) | 1.28 |
| 7. “I felt proficient in moving and interacting with the virtual environment at the end of the experience” | 8.89 (1.41) | 8.06 (2.17) | 2.33 * |
| 8. “The visual display quality distracted me from performing assigned tasks” | 5.31 (3.05) | 5.71 (3.05) | −0.69 |
| 9. “The devices (gamepad or keyboard), which controlled my movement, distract me from performing assigned tasks” | 5.00 (3.21) | 4.86 (3.01) | 0.26 |
| 10. “I could concentrate on the assigned tasks rather than on the devices (gamepad or keyboard)” | 8.09 (2.16) | 7.89 (2.25) | 0.63 |
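The t values in the table compare the outdoor and indoor scores item by item. The kind of t-test is not stated in this part of the article, so the following is only a minimal sketch that assumes a paired-samples test, which would fit a design where the same participants complete both workshops. The function name `paired_t` and the ratings are hypothetical and are not data from the study.

```python
import math
import statistics


def paired_t(outdoor, indoor):
    """Return (t, degrees of freedom) for a paired-samples t-test.

    Assumption: each position in the two lists belongs to the same participant.
    """
    if len(outdoor) != len(indoor):
        raise ValueError("paired samples must have the same length")
    # Per-participant difference between the two conditions.
    diffs = [o - i for o, i in zip(outdoor, indoor)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample s.d. (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    # t = mean difference divided by its standard error.
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical 1-10 ratings from eight participants (illustrative only).
outdoor_scores = [9, 8, 10, 9, 7, 9, 10, 8]
indoor_scores = [8, 8, 9, 7, 7, 8, 9, 8]

t_value, df = paired_t(outdoor_scores, indoor_scores)
print(f"t({df}) = {t_value:.2f}")
```

A positive t indicates higher outdoor scores and a negative t indicates higher indoor scores, which matches the sign pattern in the table. The asterisks presumably mark significance levels, but their thresholds are not given here, so the sketch does not map t to stars.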

Questionnaire on User Experience in Immersive Virtual Environments: Engagement Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) |
|---|---|---|
| 1. “The visual aspects of the virtual environment involved me” | 7.29 (1.71) | 7.09 (2.17) |
| 2. “The sense of moving around inside the virtual environment was compelling” | 8.17 (1.46) | 7.40 (2.38) |
| 3. “I was involved in the virtual environment experience” | 7.80 (1.89) | 7.54 (2.15) |

Questionnaire on User Experience in Immersive Virtual Environments: Immersion Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) | t Value |
|---|---|---|---|
| 1. “I felt stimulated by the virtual environment” | 7.09 (2.03) | 6.06 (2.31) | 2.04 * |
| 2. “I become so involved in the virtual environment that I was not aware of things happening around me” | 5.29 (2.90) | 4.31 (2.71) | 1.86 |
| 3. “I identified to the character I played in the virtual environment” | 6.26 (3.10) | 5.89 (2.85) | 0.89 |
| 4. “I become so involved in the virtual environment that it is if I was inside the game rather than manipulating a gamepad and watching a screen” | 5.97 (2.68) | 4.86 (2.61) | 3.12 ** |
| 5. “I felt physically fit in the virtual environment” | 6.37 (2.70) | 5.94 (2.85) | 0.80 |
| 6. “I got scared by something happening in the virtual environment” | 3.80 (2.96) | 1.91 (1.52) | 3.66 *** |
| 7. “I become so involved in the virtual environment that I lose all track of time” | 4.54 (2.88) | 3.14 (2.14) | 2.94 ** |

Questionnaire on User Experience in Immersive Virtual Environments: Flow Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) | t Value |
|---|---|---|---|
| 1. “I felt I could perfectly control my actions” | 7.57 (1.97) | 6.74 (2.56) | 1.92 |
| 2. “At each step, I knew what to do” | 8.83 (1.50) | 8.03 (2.23) | 2.41 * |
| 3. “I felt I controlled the situation” | 8.94 (1.59) | 8.06 (2.04) | 3.13 ** |
| 4. “Time seemed to flow differently than usual” | 5.23 (2.68) | 4.00 (2.78) | 2.38 * |
| 5. “Time seemed to speed up” | 4.43 (2.56) | 3.71 (2.57) | 1.84 |
| 6. “I was losing the sense of time” | 4.03 (2.82) | 3.29 (2.23) | 2.15 * |
| 7. “I was not worried about other people’s judgment” | 6.74 (3.16) | 6.29 (3.37) | 0.80 |
| 8. “I was not worried about what other people would think of me” | 7.43 (2.93) | 6.40 (3.34) | 2.05 * |
| 9. “I felt I was experiencing an exciting moment” | 5.09 (2.62) | 4.40 (2.44) | 1.57 |
| 10. “This experience was giving me a great sense of well-being” | 5.97 (2.32) | 5.09 (2.31) | 2.11 * |
| 11. “When I mention the experience in the virtual environment, I feel emotions I would like to share” | 5.83 (2.26) | 4.91 (2.67) | 2.47 * |

Questionnaire on User Experience in Immersive Virtual Environments: Usability Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) |
|---|---|---|
| 1. “I thought the interaction devices (Oculus headset, gamepad, and/or keyboard) was easy to use” | 7.91 (2.28) | 7.89 (2.21) |
| 2. “I thought there was too much inconsistency in the virtual environment” | 4.54 (2.29) | 4.89 (2.61) |
| 3. “I found the interaction devices (Oculus headset, gamepad, and/or keyboard) very cumbersome to use” | 3.11 (2.62) | 3.31 (2.55) |

Questionnaire on User Experience in Immersive Virtual Environments: Emotion Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) | t Value |
|---|---|---|---|
| 1. “I enjoyed being in this virtual environment” | 7.26 (1.69) | 6.60 (2.26) | 1.56 |
| 2. “I got tense in the virtual environment” | 3.54 (2.59) | 3.14 (2.93) | 0.95 |
| 3. “It was so exciting that I could stay in the virtual environment for hours” | 3.31 (2.77) | 3.31 (2.51) | 0.00 |
| 4. “I enjoyed the experience so much that I feel energized” | 4.40 (2.86) | 3.74 (2.68) | 1.61 |
| 5. “I felt nervous in the virtual environment” | 3.00 (2.43) | 3.11 (2.65) | −0.26 |
| 6. “I got scared that I might do something wrong” | 2.86 (2.46) | 2.23 (1.80) | 2.09 * |
| 7. “I worried whether I was able to cope with all the instructions that was given to me” | 2.69 (2.07) | 2.57 (1.94) | 0.38 |
| 8. “I felt like distracting myself in order to reduce my anxiety” | 3.80 (3.21) | 3.57 (2.71) | 0.79 |
| 9. “I found my mind wandering while I was in the virtual environment” | 4.54 (2.68) | 3.66 (2.55) | 1.73 |
| 10. “The interaction devices (Oculus headset, gamepad, and/or keyboard) bored me to death” | 3.40 (2.32) | 3.40 (2.44) | 0.00 |
| 11. “When my actions were going well, it gave me a rush” | 5.71 (2.19) | 4.80 (2.55) | 1.98 |
| 12. “While using the interaction devices (Oculus headset, gamepad, and/or keyboard), I felt like time was dragging” | 5.83 (2.35) | 4.57 (2.43) | 2.81 ** |
| 13. “I enjoyed the challenge of learning the virtual reality interaction devices (Oculus headset, gamepad, and/or keyboard)” | 6.23 (2.64) | 5.43 (2.70) | 1.65 |
| 14. “The virtual environment scared me since I do not fully understand it” | 2.51 (2.19) | 2.77 (2.06) | −0.59 |
| 15. “I enjoyed dealing with the interaction devices (Oculus headset, gamepad, and/or keyboard)” | 6.40 (2.39) | 6.03 (2.73) | 0.74 |

Questionnaire on User Experience in Immersive Virtual Environments: Judgment Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) |
|---|---|---|
| 1. “Personally, I would say the virtual environment is practical” | 8.17 (1.64) | 8.00 (1.97) |
| 2. “Personally, I would say the virtual environment is clear (no confusing)” | 8.43 (1.70) | 8.06 (1.81) |
| 3. “Personally, I would say the virtual environment is manageable” | 8.26 (1.80) | 7.34 (2.09) |
| 4. “I found that this virtual environment was original” | 7.86 (2.20) | 7.71 (2.02) |
| 5. “I found that this virtual environment was lame/exciting” | 6.63 (2.04) | 6.86 (2.40) |
| 6. “I found that this virtual environment was easy (1)/challenging (10)” | 3.49 (2.80) | 4.46 (2.70) |
| 7. “I found this virtual environment amateurish (1)/professional (10)” | 4.94 (2.22) | 5.83 (2.13) |
| 8. “I found this virtual environment gaudy (1)/classy (10)” | 6.29 (1.51) | 6.86 (1.96) |
| 9. “I found this virtual environment unpresentable (1)/presentable (10)” | 7.40 (1.91) | 7.54 (2.01) |
| 10. “I found that this virtual environment is ugly (1)/beautiful (10)” | 6.94 (1.97) | 6.34 (2.22) |
| 11. “I found that this virtual environment is disagreeable (1)/likeable (10)” | 7.74 (1.80) | 7.11 (1.97) |
| 12. “I found that this virtual environment is discouraging (1)/motivating (10)” | 6.91 (1.76) | 6.94 (1.95) |

Questionnaire on User Experience in Immersive Virtual Environments: Experience Consequence Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) |
|---|---|---|
| 1. “I suffered from fatigue during my interaction with the virtual environment” | 2.37 (2.34) | 2.29 (2.15) |
| 2. “I suffered from headache during my interaction with the virtual environment” | 2.34 (2.47) | 2.29 (2.32) |
| 3. “I suffered from eyestrain during my interaction with the virtual environment” | 2.66 (2.63) | 2.80 (2.75) |
| 4. “I felt an increase of my salivation during my interaction with the virtual environment” | 2.29 (2.30) | 2.14 (2.48) |
| 5. “I felt an increase of my sweat during my interaction with the virtual environment” | 2.00 (2.14) | 2.00 (2.17) |
| 6. “I suffered from nausea during my interaction with the virtual environment” | 2.14 (2.24) | 1.89 (2.01) |
| 7. “I suffered from ‘fullness of the head’ during my interaction with the virtual environment” | 2.26 (2.36) | 2.09 (2.28) |
| 8. “I suffered from dizziness with eye open during my interaction with the virtual environment” | 2.11 (2.30) | 1.91 (2.09) |
| 9. “I suffered from vertigo during my interaction with the virtual environment” | 1.94 (2.00) | 1.89 (2.14) |

Questionnaire on User Experience in Immersive Virtual Environments: Technology Adoption Subscale

| Item | Outdoor Workshop Score (1–10) (s.d.) | Indoor Workshop Score (1–10) (s.d.) |
|---|---|---|
| 1. “If I use again the same virtual environment, my interaction with the environment would be clear and understandable for me” | 8.26 (2.13) | 8.14 (1.94) |
| 2. “It would be easy for me to become skillful at using the virtual environment” | 8.11 (2.14) | 7.80 (2.11) |
| 3. “Learning to operate the virtual environment would be easy for me” | 8.40 (1.87) | 7.86 (1.87) |
| 4. “Using the interaction devices (Oculus headset, gamepad, and/or keyboard) is a bad idea” | 2.11 (1.69) | 2.54 (1.92) |
| 5. “The interaction devices (Oculus headset, gamepad, and/or keyboard) would make work more interesting” | 8.06 (2.35) | 8.23 (2.10) |
| 6. “I would like working with the interaction devices (Oculus headset, gamepad, and/or keyboard)” | 8.49 (2.36) | 8.60 (1.61) |
| 7. “I have the resources necessary to use the interaction devices (Oculus headset, gamepad, and/or keyboard)” | 6.49 (2.91) | 5.80 (2.78) |
| 8. “I have the knowledge necessary to use the interaction devices (Oculus headset, gamepad, and/or keyboard)” | 7.40 (2.26) | 6.71 (2.32) |
| 9. “The interaction devices (Oculus headset, gamepad, and/or keyboard) are not compatible with other technologies I use” | 4.49 (2.99) | 4.37 (2.99) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Saorin, J.L.; Carbonell-Carrera, C.; Jaeger, A.J.; Díaz, D.M. Landscape Design Outdoor–Indoor VR Environments User Experience. Land 2023, 12, 376. https://doi.org/10.3390/land12020376
