A Semi-Automated Framework for Flood Ontology Construction with an Application in Risk Communication

Abstract

1. Introduction

2. Related Works

2.1. Ontologies in Flood-Related Applications

2.2. Ontologies for Flood-Related Communication

2.3. Ontology Construction Methods

| Ref. | Year | Input Data Source | Goal/Task | LLM Role/Strategy | Methodology/Pipeline | Automation | Technology | Evaluation |
|---|---|---|---|---|---|---|---|---|
| [46] | 2025 | Unstructured RAM technical documents (e.g., Semiconductor Draft Document 6578) plus user-provided targeted knowledge snippets | Interactive ontology extraction and subsequent knowledge graph generation tailored to Reliability and Maintainability domain | OpenAI LLM with adaptive iterative Chain-of-Thought prompting inside a conversational user interface | Dialogue collection → CoT ontology extraction (concepts, relations, properties) → KG create and review → Cypher export → Neo4j load | Semi-automatic: human validates ontology steps; KG generation and database import automated | OpenAI API, adaptive CoT algorithm, Neo4j graph DB, Cypher MERGE, interactive web UI | Case study on Semiconductor Draft Document 6578; qualitative human review; future competency question evaluation planned |
| [47] | 2024 | IEEE Thesaurus v1.02 PDF + IEEE-Rel-1K (1000 topic pairs) | Relation classification (broader, narrower, same-as, other) for topic ontology | 17 LLMs zero-shot; standard and chain-of-thought prompts with one/two-way heuristics | Prompt generation → LLM inference → heuristic aggregation → metric computation | Fully automatic; experts only build gold standard | Python scripts via Amazon Bedrock, OpenAI API, KoboldAI | Precision, recall, F1 on IEEE-Rel-1K |
| [48] | 2024 | Natural language wine domain description and competency questions | Automatic ontology generation (specification, conceptualization, implementation) | GPT-3.5 CoT, role-play, few-shot prompting with iterative self-repair | Draft generation → RDFLib syntax check → HermiT consistency check → OOPS pitfall resolution | Fully automatic, post-hoc human analysis | GPT-3.5 API; RDFLib; HermiT; OOPS API; Turtle; metaphactory | Comparison to Stanford wine ontology using OntoMetrics counts and structural/inference analysis |
| [49] | 2024 | Reuters Nord Stream pipeline news article (first 12 sentences) | Ontology extraction (classes, individuals, properties) from unstructured text | GPT-4o zero-shot prompts at T = 0.3; direct, sequential, sentence-level variants | Direct one-shot → Sequential (class→individuals→relations) → Sentence-level extraction → Merge | Fully automatic extraction; no human in loop | GPT-4o API; RDF/Turtle; Python scripts for merging and metrics | Precision, recall, F1; average degree score; qualitative inspection against ground truth |
| [50] | 2023 | LLM-as-source; GPT-3.5 latent knowledge seeded by a single domain concept | End-to-end concept-hierarchy (taxonomy) induction from scratch for a chosen domain | GPT-3.5 generates lists, descriptions, and self-verifies relations via zero-/few-shot prompting with frequency sampling | Seed concept → existence check → subconcept listing → description → multi-query verification → KRIS-based insertion into hierarchy | Fully automatic batch run; no human intervention during construction | Python + OpenAI GPT-3.5 API; parallel calls; KRIS insertion algorithm; output ontologies in OWL (RDF/XML) | Manual subjective inspection; structural stats (concepts, subsumptions, prompts/concept, cost) |
| [51] | 2023 | WordNet WN18RR terms; GeoNames categories; UMLS (NCI, MEDCIN, SNOMED CT) concepts; Schema.org type taxonomy | Zero-shot term typing, taxonomy discovery, and non-taxonomic relation extraction to construct ontologies | Seven LLMs queried with cloze/prefix prompts; FLAN instruction-tuning evaluated for gains | Prompt design → LLM inference → compare outputs to gold ontologies via MAP@1 or F1 metrics | Fully automatic zero-shot runs; domain experts only planned for later validation | HuggingFace models (BERT, BART, BLOOM, Flan-T5) and GPT-3; open-source Python codebase | Gold WordNet, GeoNames, UMLS, Schema.org sets; MAP@1 for typing, F1 for taxonomy and relations |
| [39] | 2024 | Wikipedia titles and summaries; arXiv titles and abstracts (2020–2022); each document annotated with categories | End-to-end taxonomy induction—discovering concepts and taxonomic is-a relations from scratch | Mistral-7B finetuned via LoRA; custom frequency-masked loss; generates document-level subgraph paths | Linearise relevant paths → LLM outputs subgraphs → sum edge weights → prune loops, inverses, low-weights → final ontology | Fully automatic batch pipeline; no human-in-the-loop after data collection | LoRA-adapted Mistral, vLLM runtime, Sentence-BERT embeddings, Hungarian assignment, simple graph convolutions for metrics | Literal, Fuzzy, Continuous, Graph F1 plus motif distance against Wikipedia and arXiv gold taxonomies |
| [52] | 2024 | Rule sets from seven ontologies—Wine, Economy, Olympics, Transport, SUMO, FoodOn, Gene Ontology | Ontology completion—predict missing concept-inclusion axioms within each ontology | Fine-tuned or zero-shot LLMs used as NLI classifiers on verbalised rules; act as fallback judge in hybrid system | Extract rule templates → build concept graph → GNN scores candidates → NLI classifier → hybrid combines GNN first, LLM when no template match | Fully automatic pipeline; human effort limited to annotating hard negative test rules | DeepOnto BERTSubs; RoBERTa, Llama-2, Mistral, Vicuna; GCN/GAT/R-GCN with ConCN embeddings | F1 on manually validated hard negatives across seven ontologies; inter-annotator κ up to 0.83 for negatives |
| [53] | 2025 | Relational database schemas, natural-language schema documentation, external BioPortal ontologies | Iterative ontology generation and enrichment from relational database schemas | Gen-LLM with hybrid recursive RAG; Judge-LLM or expert refinement; zero-shot prompts | Table traversal → RAG retrieval → prompt → delta ontology → judge validation → merge → iterate | Mostly automatic; optional human or Judge-LLM review of each fragment | OWL 2 DL (Manchester), Faiss ANN index, SBERT embeddings, Protégé, HermiT reasoner | Protégé syntax, HermiT consistency, OOPS pitfalls, structural metrics, semantic coverage, CQ scores on two medical databases |
| [35] | 2025 | Six Kaggle CSV datasets on airlines, Amazon beauty ratings, BigBasket products, Brazilian e-commerce orders, consumer complaints, UK e-commerce sales | Semi-automatic ontology construction plus RML mapping and RDF knowledge-graph materialisation from tabular data | GPT-4 multi-agent prompting (Prompt Crafter, Plan Sage, OntoBuilder, OntoMapper) with iterative self-repair of mappings | GUI interaction → data preprocessing → schema definition → ontology building (Turtle) → RML mapping → KGen RDF generation with feedback loop | Semi-automatic; LLM drives tasks while users iteratively refine prompts and validate results via Assist Bot GUI | Python OntoGenix MVC GUI, GPT-4 (gpt-4-1106-preview), OWL/Turtle, RML, KGen, Morph-KGC; code on GitHub/Zenodo | Compared six OntoGenix vs. human ontologies using 19 OQuaRE metrics, OOPS! pitfalls, expert review, and time-saving analysis |
| [54] | 2024 | PubMed breast-cancer research articles and NCCN treatment guidelines | Expand seed ontology and populate breast-cancer treatment knowledge graph | ChatGPT-3.5 fine-tuned on domain texts; prompts generate CQs; RAG answers; LLM judge scores outputs | Seed ontology → LLM CQs → expert check → RAG retrieval → redundancy pruning → LLM triple extraction → KG assembly | Semi-automatic with domain-expert validation of LLM-generated CQs and triples | Protégé editor, PubMed RAG pipeline, ChatGPT-3.5 backend | Five PubMed articles manually tagged; LLM judge scored accuracy, completeness, relevance, consistency (1–5) |
| [55] | 2025 | Elicited user stories and competency questions plus the existing Music Meta OWL ontology text | Conversational support for requirement elicitation, CQ extraction, analysis, and testing of ontologies | GPT-3.5-turbo with one-shot/few-shot prompts acting as elicitor, generator, clusterer, and judge for CQ verification | Persona chat → CQ generation and refinement → redundancy removal and clustering → ontology verbalisation → prompt-based CQ unit tests | Semi-automatic; human-in-loop refinement and confirmation, with automatic clustering and testing stages | Python 3.11, Gradio UI on HuggingFace Spaces, OpenAI GPT-3.5 API, OWL verbaliser module | Music Meta case study; N = 6 experts and N = 8 engineers surveys; CQ test accuracy 87.5% (P 88%, R 85.7%) |

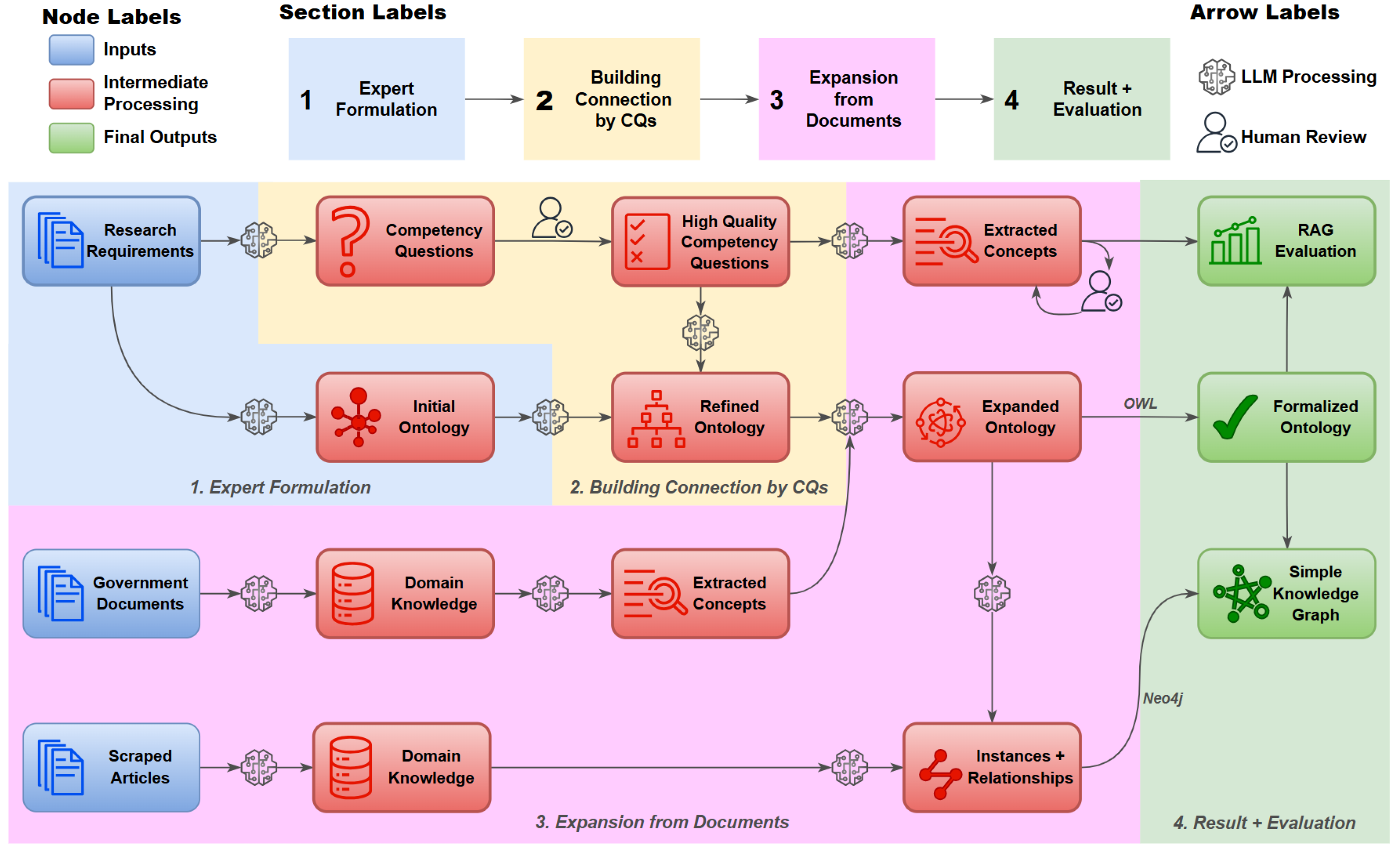

3. Methodology

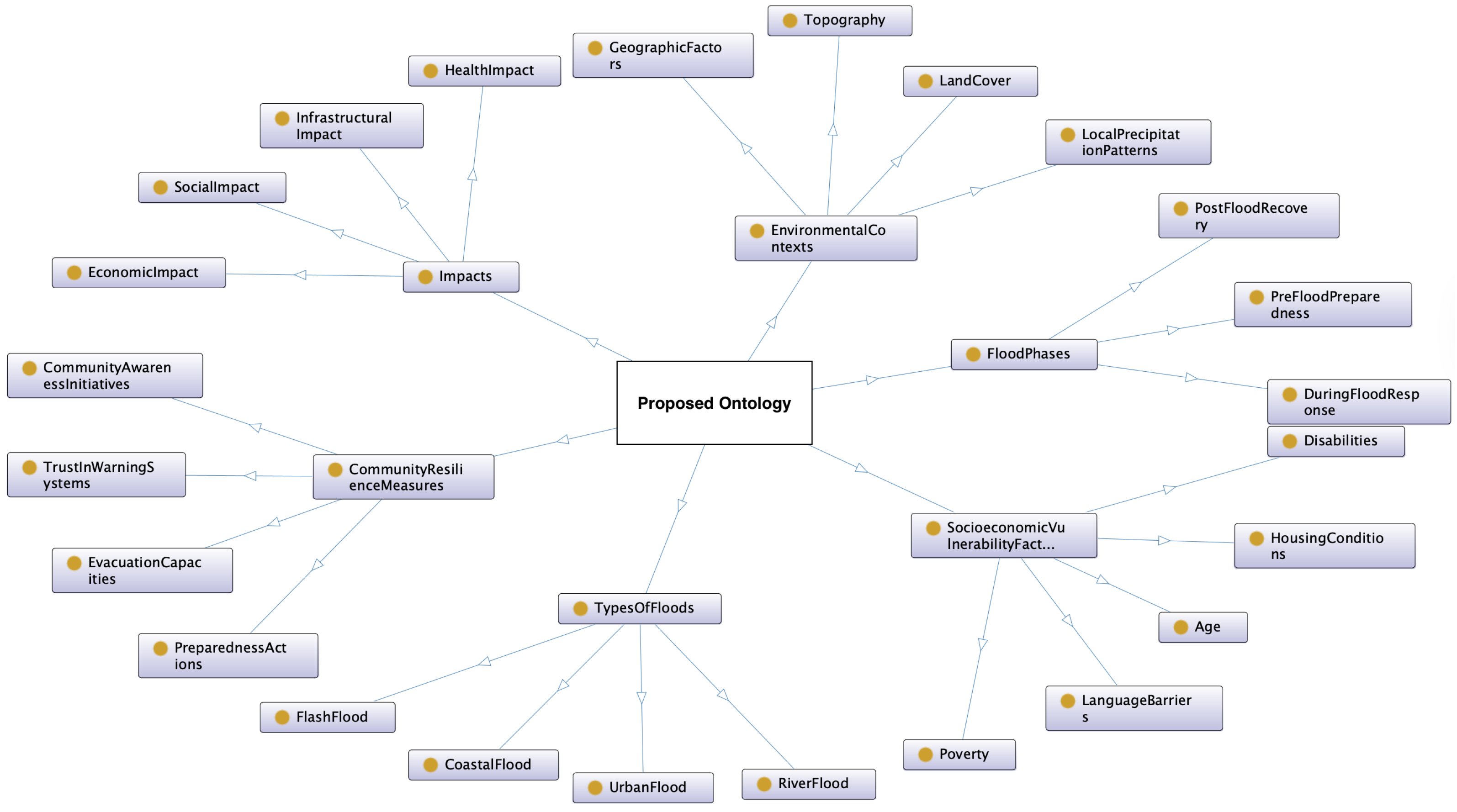

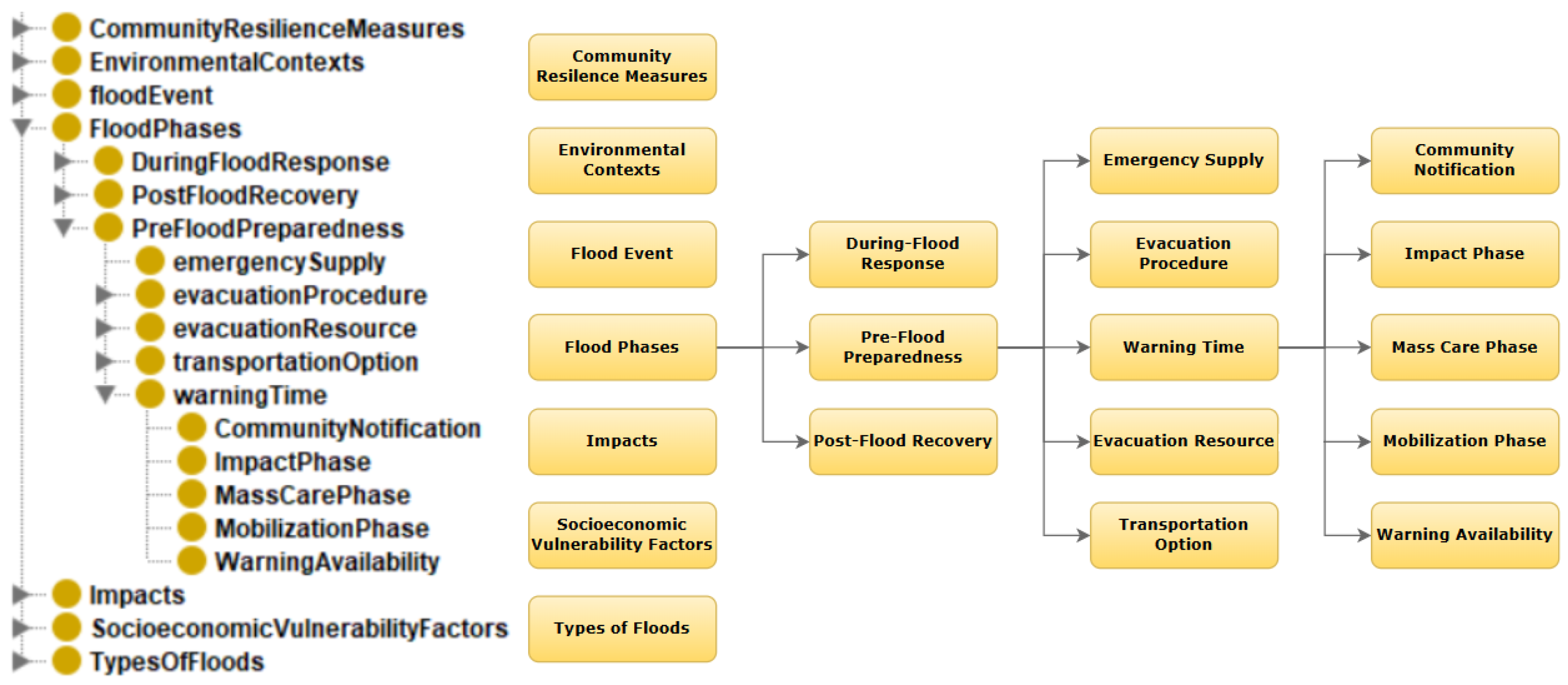

3.1. Stage 1: Expert Formulation of the Initial Ontology

3.2. Stage 2: Competency Question-Driven Ontology Expansion with LLMs

3.3. Stage 3: Schema Enrichment from Authoritative Documents

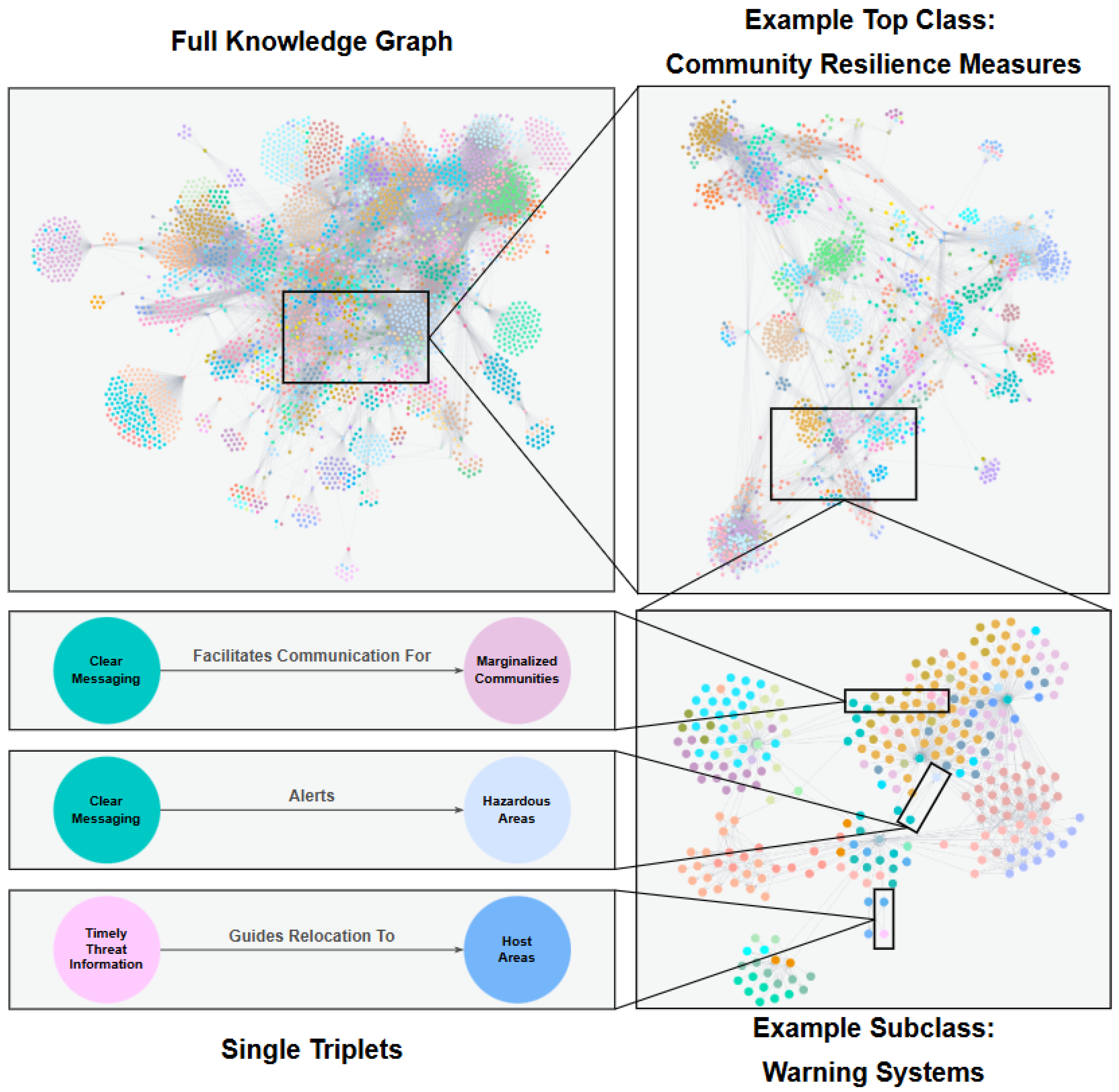

3.4. Stage 4: Instance Population from Web-Scraped Articles

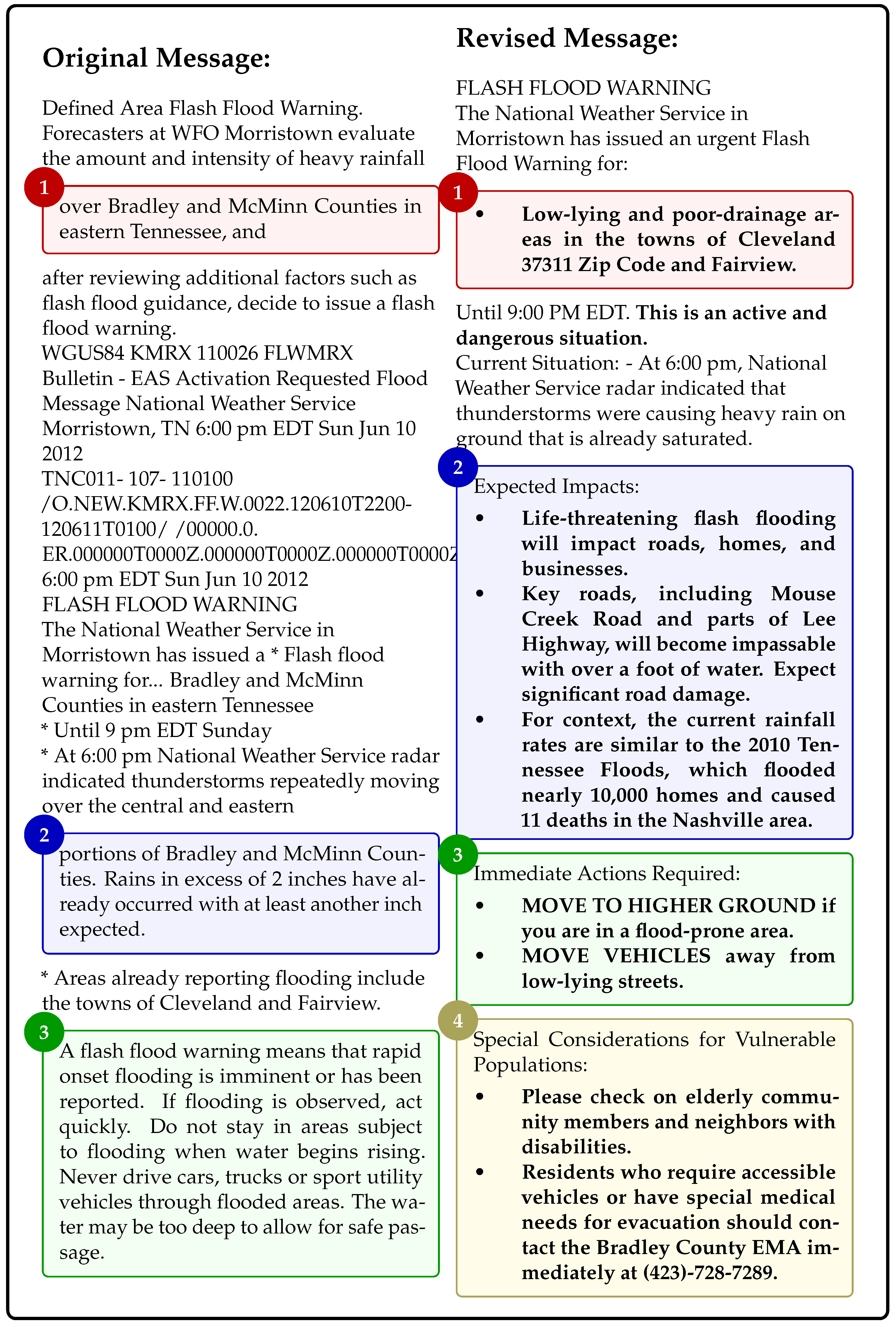

3.5. Stage 5: Case Study: Demonstrating Ontology Application

3.6. Domain Adaptability and Generalization

4. Evaluation Results

4.1. Structural Evaluation Using OntoQA Metrics

4.2. Concept Coverage Evaluation via Competency Questions

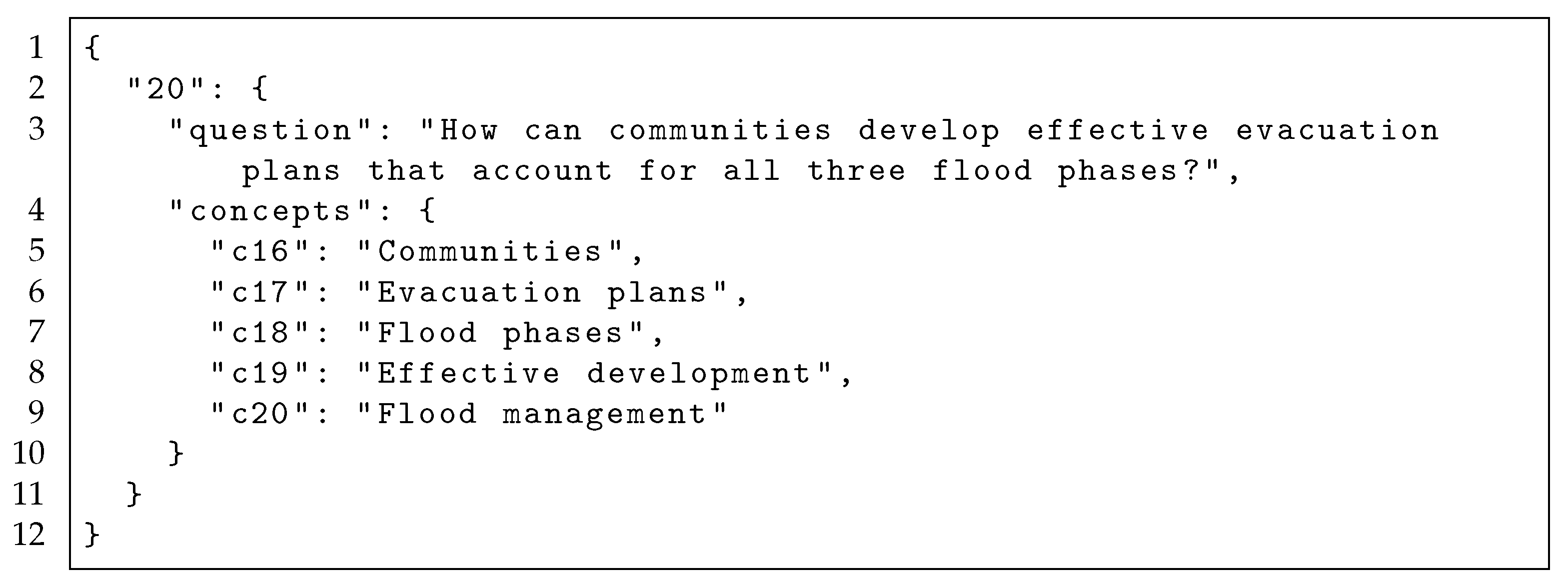

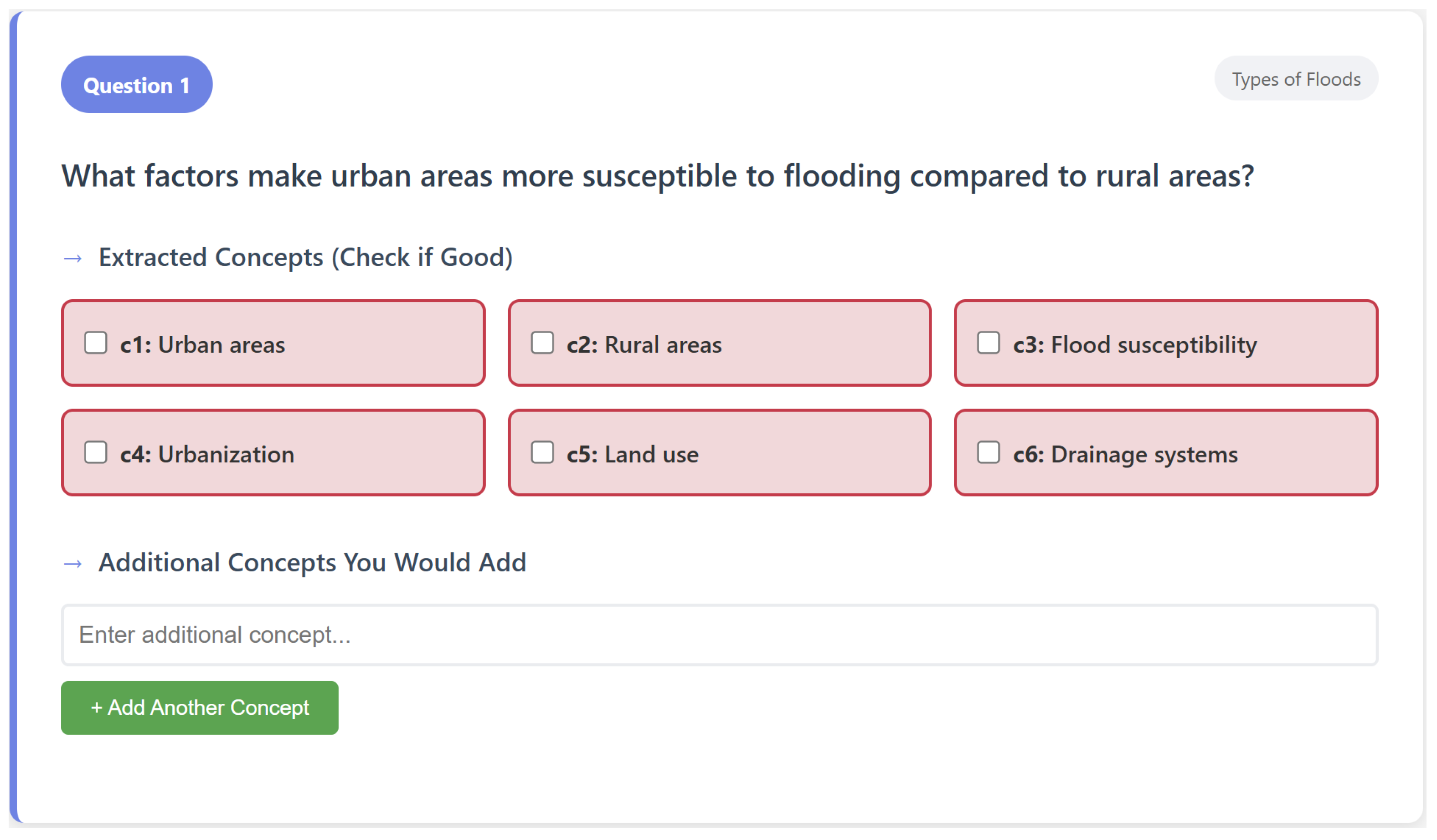

4.2.1. Concept Extraction

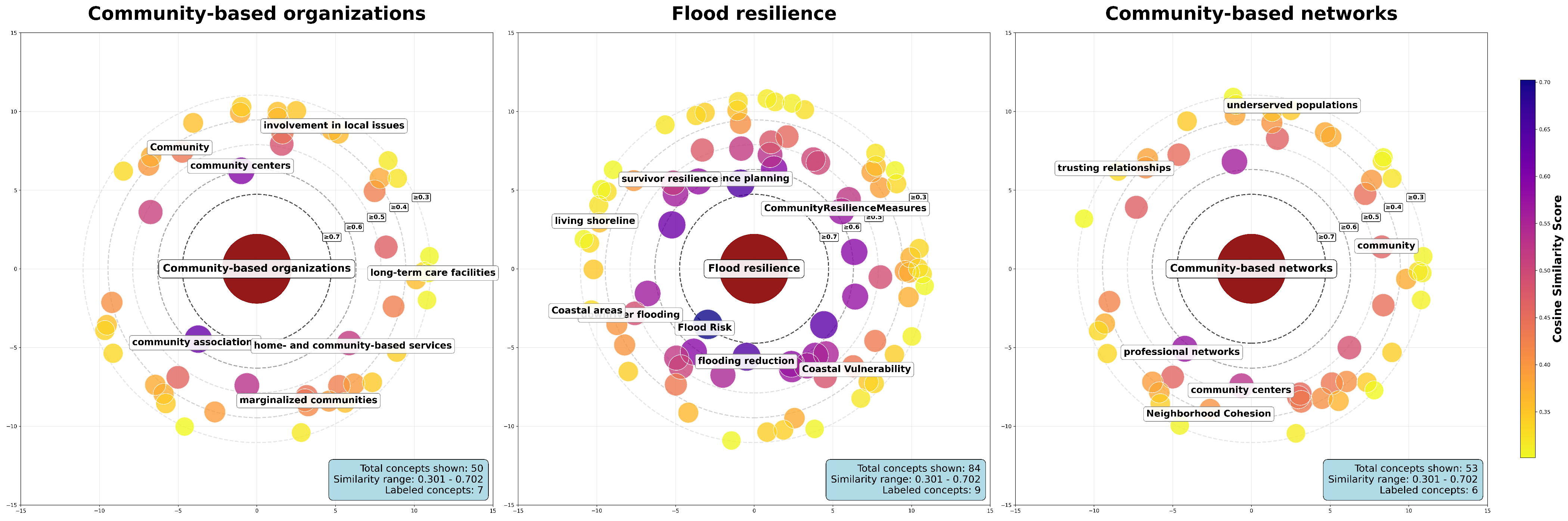

4.2.2. Evaluation and Results

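| Algorithm 1 Ontology Coverage Evaluation | |
|---|---|
| Require: | |
| 1: Competency Questions | |
| 2: Ontology Concepts | |
| 3: Ontology Concept Descriptions | |
| 4: Threshold Set | |
| 5: LLM Prompt P | |
| Ensure: Coverage results for each competency question | |
| Pre-processing | |
| 6: Embed the ontology concept labels | ▹ label embeddings |
| 7: Embed the ontology concept descriptions | ▹ description embeddings |
| 8: Embed each label together with its description | ▹ label+desc embeddings |
| 9: for each competency question q do | |
| 10: Embed q | ▹ question embedding |
| 11: Extract the concepts mentioned in q | ▹ concepts in q |
| 12: Embed the extracted concepts | ▹ concept embeddings |
| Coverage Evaluation | |
| 13: for each extracted concept c do | |
| 14: R1: compare c with the label embeddings | ▹ c vs. label |
| 15: R2: compare c with the description embeddings | ▹ c vs. desc |
| 16: R3: compare c with the label+desc embeddings | ▹ c vs. label+desc |
| 17: R4: compare q with the label+desc embeddings | ▹ question vs. label+desc |
| 18: end for | |
| 19: end for | |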
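The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation. The `embed` function is a toy bag-of-words stand-in for whatever sentence-embedding model is actually used, cosine similarity is an assumed comparison measure, the concepts per question are supplied by hand instead of being extracted with LLM Prompt P, and the threshold values and the two-concept ontology are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model (assumption): bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def best_match(query_vec, candidates):
    """Return (concept_label, score) of the most similar ontology concept."""
    return max(
        ((label, cosine(query_vec, vec)) for label, vec in candidates.items()),
        key=lambda pair: pair[1],
    )


def evaluate_coverage(questions, ontology, thresholds):
    """questions: {question: [extracted concepts]}; ontology: {label: description}."""
    # Pre-processing (steps 6-8): three embedding views of every ontology concept.
    label_vecs = {lbl: embed(lbl) for lbl in ontology}
    desc_vecs = {lbl: embed(desc) for lbl, desc in ontology.items()}
    both_vecs = {lbl: embed(f"{lbl} {desc}") for lbl, desc in ontology.items()}

    results = {}
    for question, concepts in questions.items():
        q_vec = embed(question)  # step 10
        results[question] = {}
        for concept in concepts:  # step 13
            c_vec = embed(concept)
            matches = {
                "R1": best_match(c_vec, label_vecs),  # c vs. label
                "R2": best_match(c_vec, desc_vecs),   # c vs. desc
                "R3": best_match(c_vec, both_vecs),   # c vs. label+desc
                "R4": best_match(q_vec, both_vecs),   # question vs. label+desc
            }
            results[question][concept] = {
                name: {
                    "match": label,
                    "score": score,
                    # A concept counts as covered at threshold t if score >= t.
                    "covered": {t: score >= t for t in thresholds},
                }
                for name, (label, score) in matches.items()
            }
    return results


# Hypothetical inputs for illustration only.
ontology = {
    "flood risk": "likelihood and consequence of flooding in an area",
    "warning system": "system that issues flood alerts to residents",
}
questions = {"what is the flood risk here": ["flood risk"]}
results = evaluate_coverage(questions, ontology, thresholds=(0.5, 0.7))
```

Each of R1 to R4 keeps the best-matching ontology concept and its score, so the same result can be reported against every value in the threshold set without recomputing embeddings.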

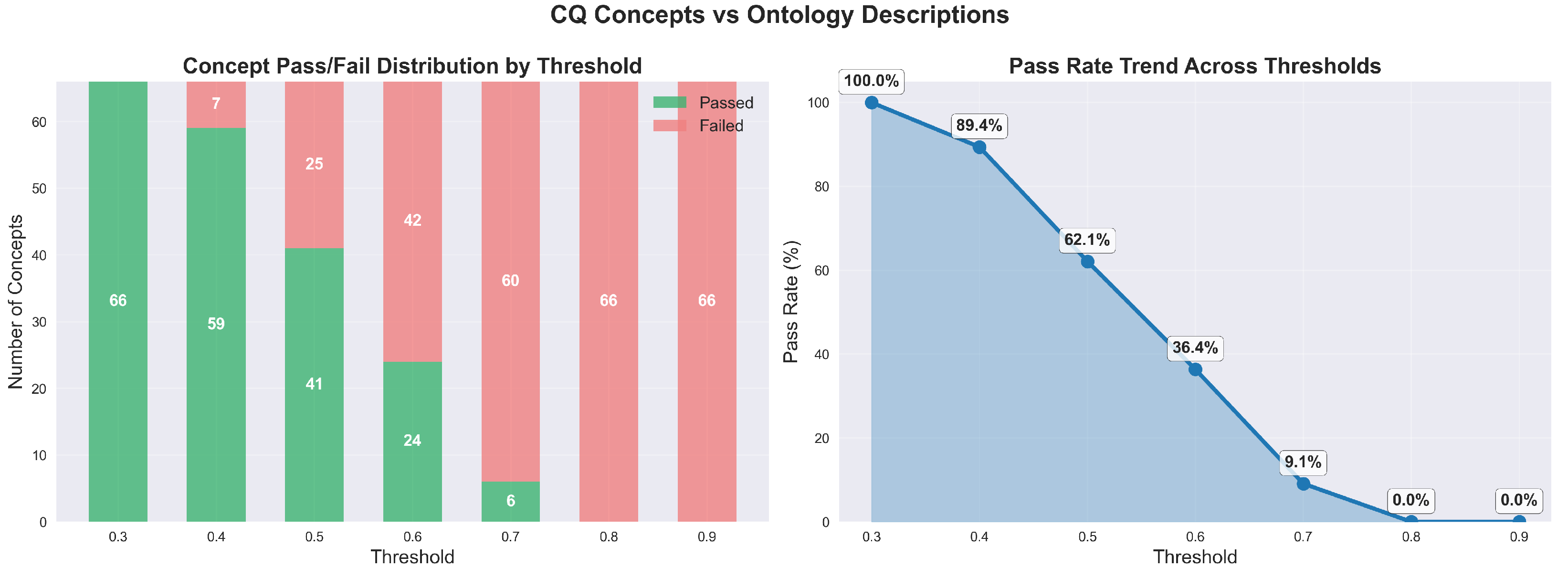

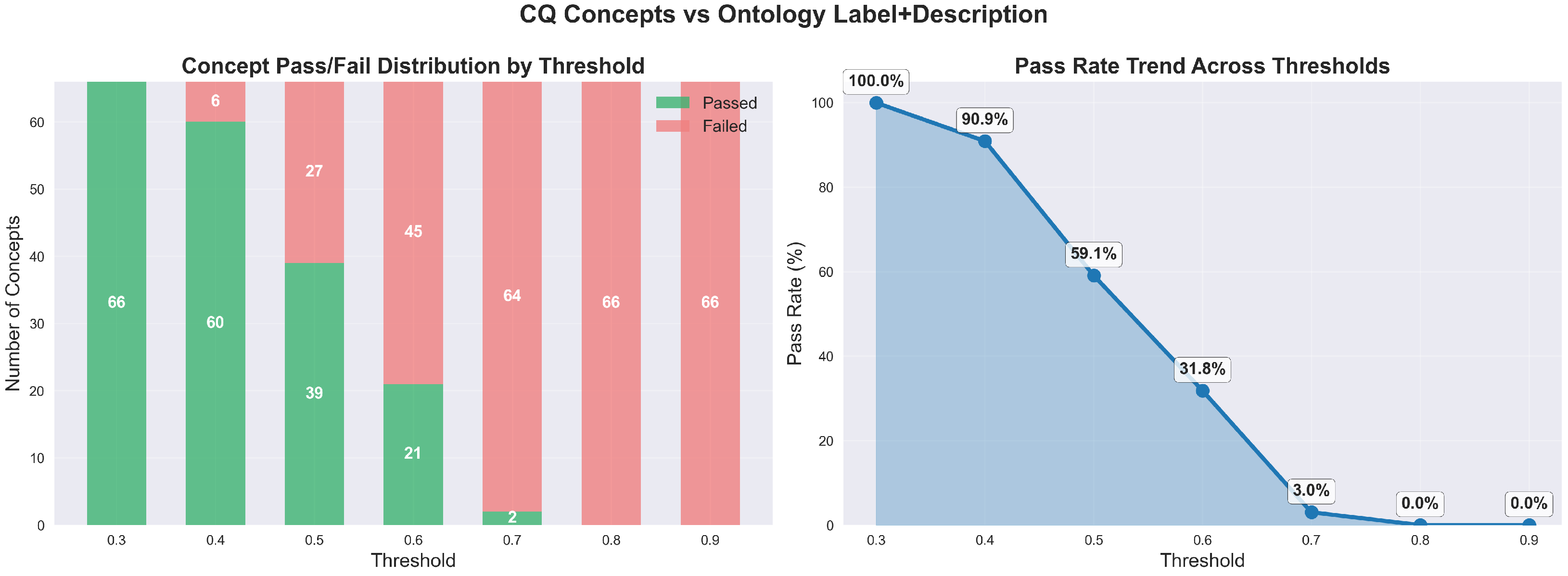

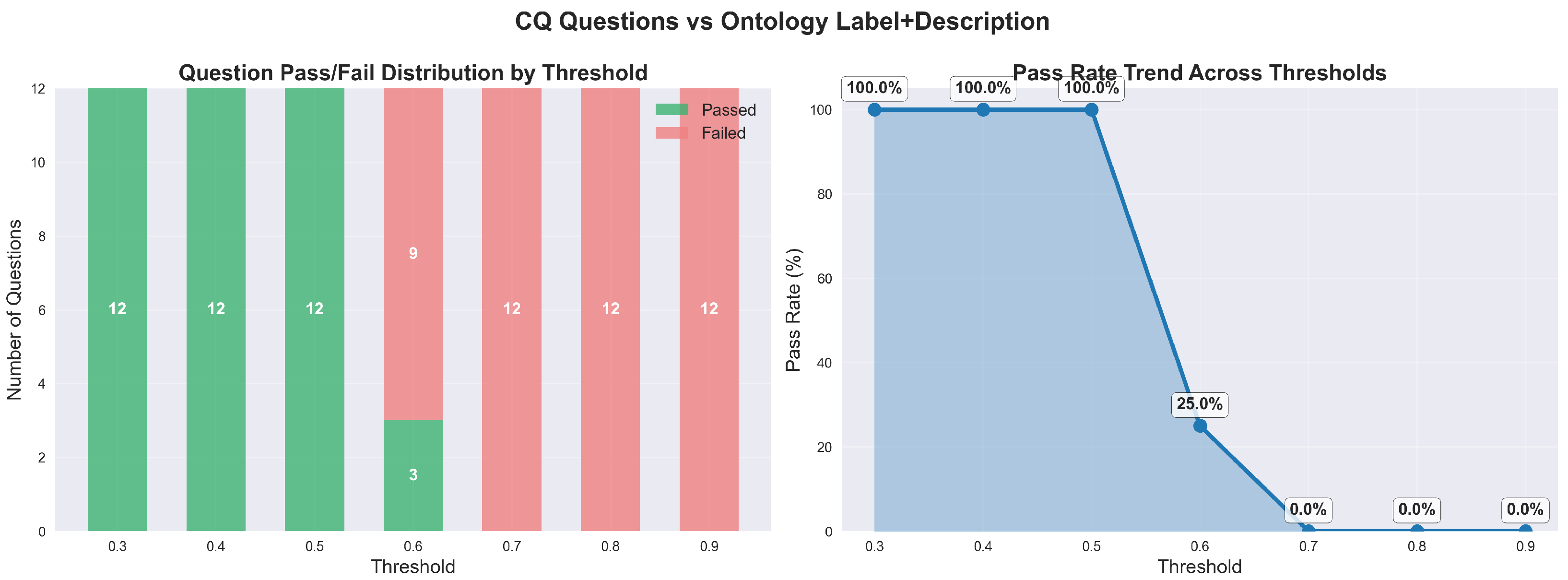

4.2.3. Analysis

5. Discussion

5.1. A Critical Comparison of Ontological Structure and Purpose

5.2. Bridging Documented Gaps in Flood Risk Communication

5.3. Advancing Semi-Automated Ontology Construction Methodologies

5.4. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. LLM Prompts

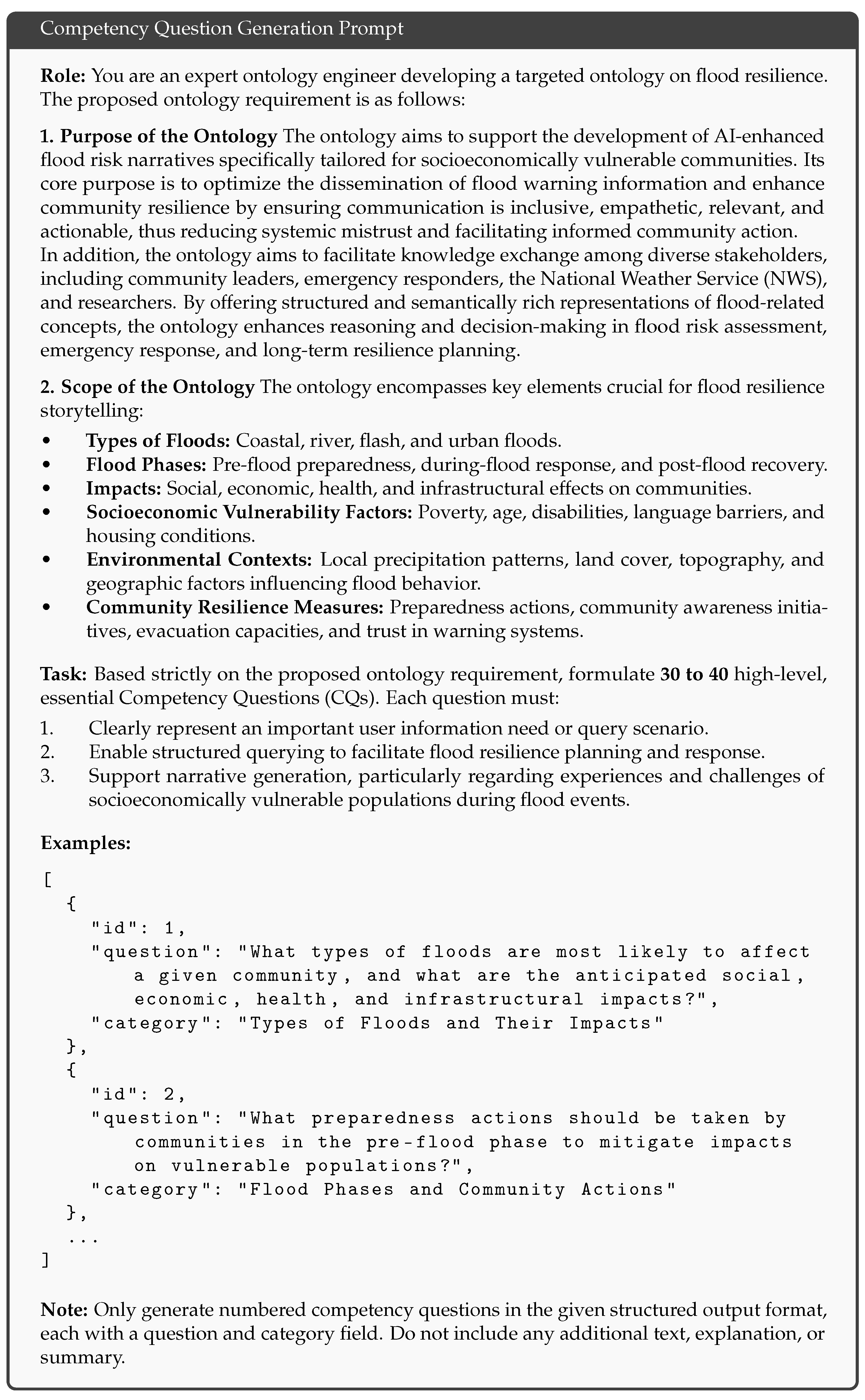

Appendix A.1. Competency Question Generation

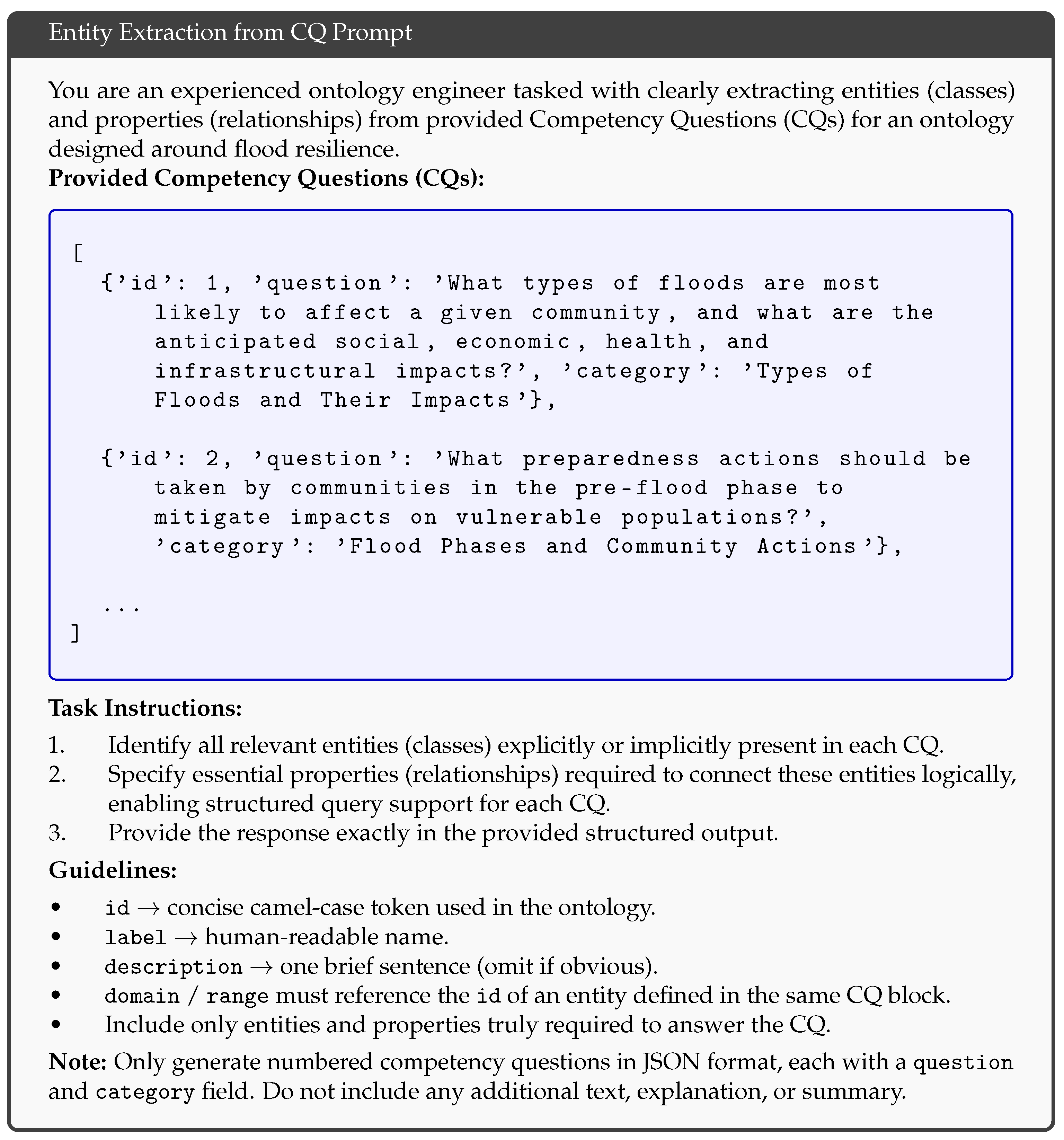

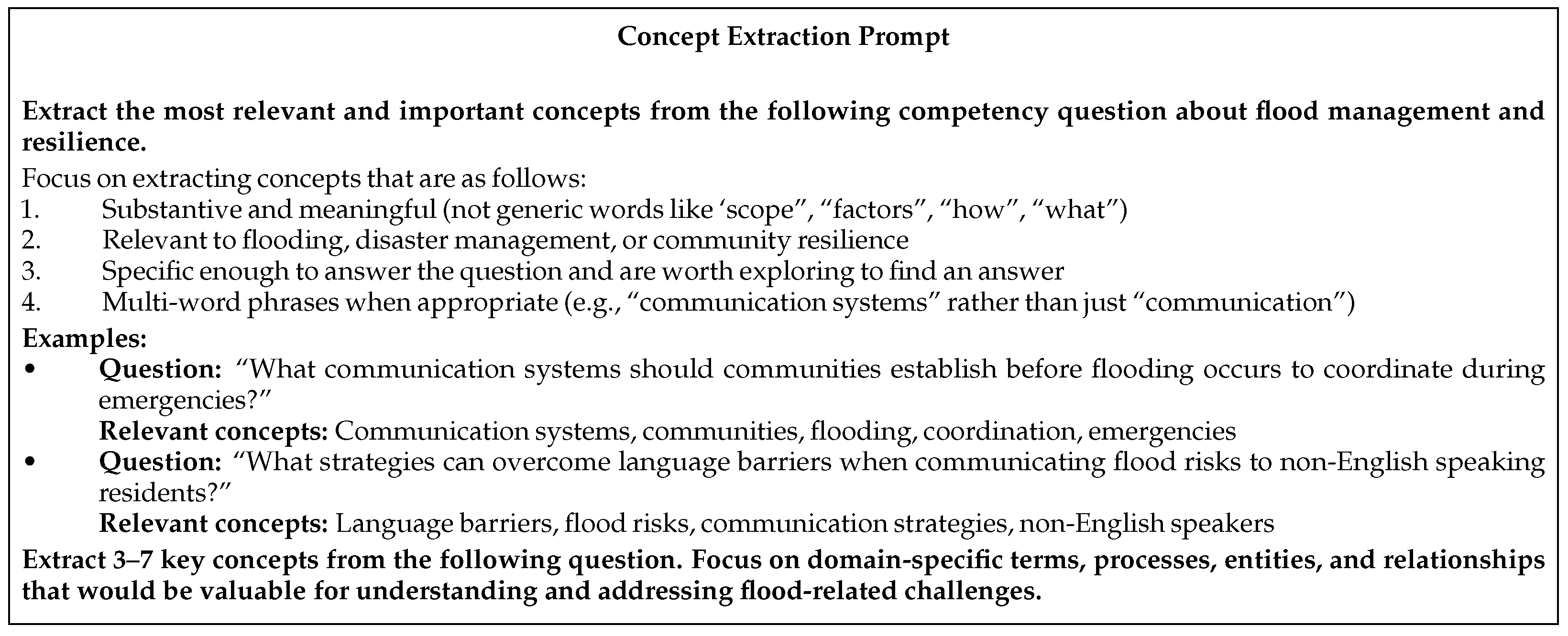

Appendix A.2. Entity Extraction from Competency Questions

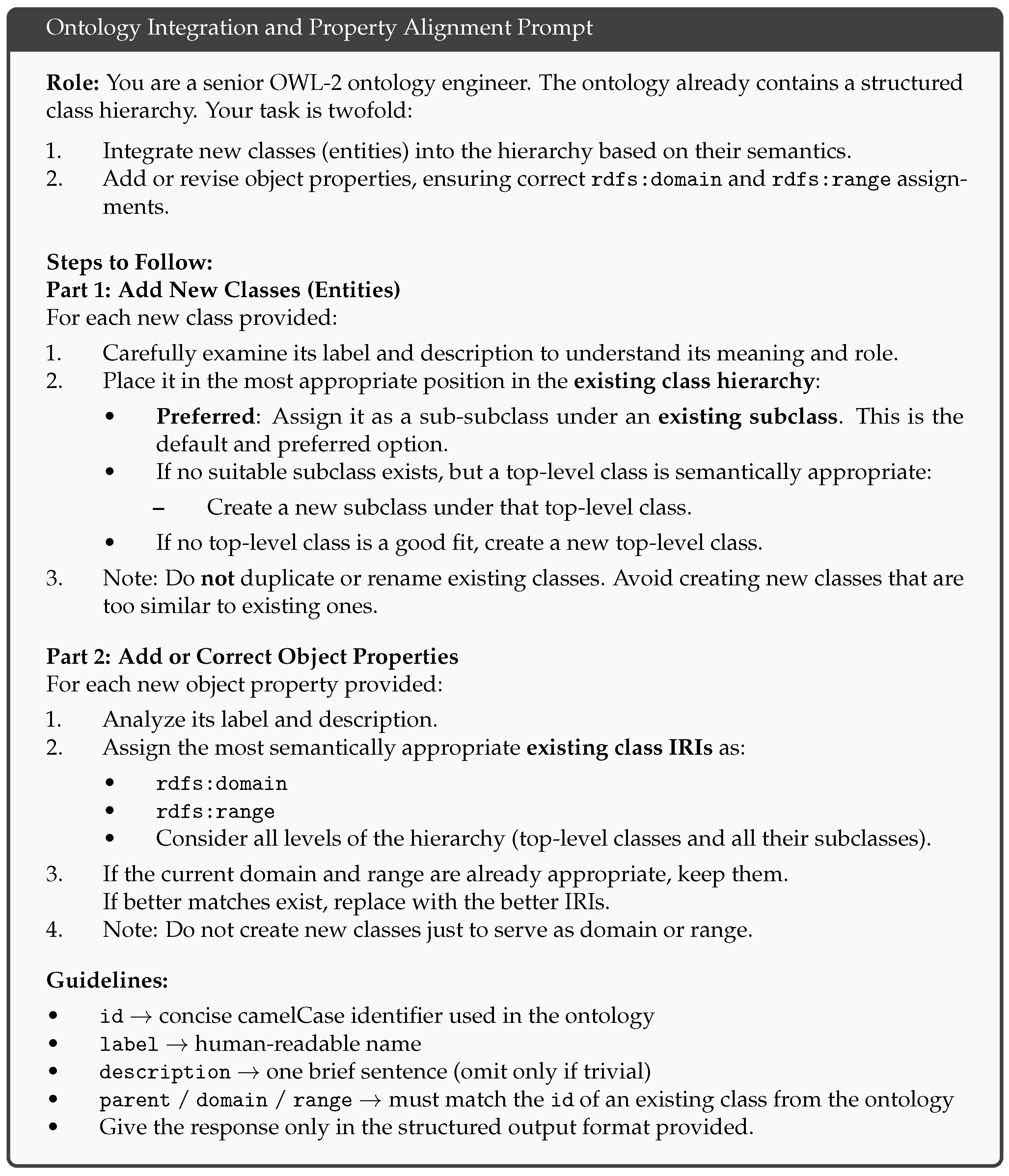

Appendix A.3. Ontology Integration and Property Alignment

References

- Chang, S.E. Socioeconomic Impacts of Infrastructure Disruptions. In Oxford Research Encyclopedia of Natural Hazard Science; Oxford University Press: Oxford, UK, 2016.

- Hao, S.; Wang, W.; Ma, Q.; Li, C.; Wen, L.; Tian, J.; Liu, C. Analysis on the Disaster Mechanism of the “8.12” Flash Flood in the Liulin River Basin. Water 2022, 14, 2017.

- Stephens, K.K.; Blessing, R.; Tasuji, T.; McGlone, M.S.; Stearns, L.N.; Lee, Y.; Brody, S.D. Investigating ways to better communicate flood risk: The tight coupling of perceived flood map usability and accuracy. Environ. Hazards 2024, 23, 92–111.

- Merz, B.; Vorogushyn, S.; Uhlemann, S.; Viglione, A.; Blöschl, G. Understanding Heavy Tails of Flood Peak Distributions. Water Resour. Res. 2022, 58, e2021WR030506.

- Elmhadhbi, L.; Ghedira, C.; Bouaziz, R. An Ontological Approach to Enhancing Information Sharing in Disaster Response. Information 2021, 12, 432.

- Du, W.; Liu, C.; Xia, Q.; Wen, M.; Hu, Y. OFPO & KGFPO: Ontology and knowledge graph for flood process observation. Environ. Model. Softw. 2025, 185, 106317.

- Raman, R.; Kowalski, R.; Achuthan, K.; Iyer, A.; Nedungadi, P. Navigating Artificial General Intelligence Development: Societal, Technological, Ethical, and Brain-Inspired Pathways. Sci. Rep. 2025, 15, 8443.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Liu, T. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 42.

- Gruber, T.R. A Translation Approach to Portable Ontology Specifications. Knowl. Acquis. 1993, 5, 199–220.

- Noy, N.F.; McGuinness, D.L. Ontology Development 101: A Guide to Creating Your First Ontology; Technical Report KSL-01-05; Stanford Knowledge Systems Laboratory: Stanford, CA, USA, 2001.

- Guarino, N.; Oberle, D.; Staab, S. What Is an Ontology? In Handbook on Ontologies; Staab, S., Studer, R., Eds.; Springer: Berlin, Germany, 2009; pp. 1–17.

- Elmhadhbi, L.; Karray, M.-H.; Archimède, B.; Otte, J.; Smith, B. A modular ontology for semantically enhanced interoperability in operational disaster response. In Proceedings of the 16th International Conference on Information Systems for Crisis Response and Management—ISCRAM 2019, Valencia, Spain, 19–22 May 2019.

- Khantong, S.; Sharif, M.N.A.; Mahmood, A.K. An ontology for sharing and managing information in disaster response: An illustrative case study of flood evacuation. Int. Rev. Appl. Sci. Eng. 2020, 11, 22–33.

- Bu Daher, J.; Huygue, T.; Stolf, P.; Hernandez, N. An ontology and a reasoning approach for evacuation in flood disaster response. In Proceedings of the 17th International Conference on Knowledge Management (ICKM 2022), Potsdam, Germany, 23–24 June 2022; pp. 117–131.

- Shukla, D.; Azad, H.K.; Abhishek, K.; Shitharth, S. Disaster management ontology—An ontological approach to disaster management automation. Sci. Rep. 2023, 13, 8091.

- Hofmeister, M.; Bai, J.; Brownbridge, G.; Mosbach, S.; Lee, K.F.; Farazi, F.; Hillman, M.; Agarwal, M.; Ganguly, S.; Akroyd, J.; et al. Semantic agent framework for automated flood assessment using dynamic knowledge graphs. Data-Centric Eng. 2024, 5, e14.

- Dutta, B.; Sinha, P.K. An ontological data model to support urban flood disaster response. J. Inf. Sci. 2023, 49, 1–22.

- Mughal, M.H.; Shaikh, Z.A.; Wagan, A.I.; Khand, Z.H.; Hassan, S. ORFFM: An Ontology-Based Semantic Model of River Flow and Flood Mitigation. IEEE Access 2021, 9, 44003–44029.

- Yahya, H.; Ramli, R. Ontology for Evacuation Center in Flood Management Domain. In Proceedings of the 2020 8th International Conference on Information Technology and Multimedia (ICIMU 2020), Selangor, Malaysia, 24–25 August 2020; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 288–291.

- Sermet, Y.; Demir, I. Towards an information centric flood ontology for information management and communication. Earth Sci. Inform. 2019, 12, 541–551.

- Kurte, K.R.; Durbha, S.S. Spatio-Temporal Ontology for Change Analysis of Flood Affected Areas Using Remote Sensing Images. In Proceedings of the 10th International Conference on Formal Ontology in Information Systems (FOIS 2016), Annecy, France, 6–10 July 2016. Paper ONTO-COMP-D2.

- Agresta, A.; Fattoruso, G.; Pollino, M.; Pasanisi, F.; Tebano, C.; De Vito, S.; Di Francia, G. An Ontology Framework for Flooding Forecasting. In Proceedings of the 14th International Conference on Computational Science and Its Applications (ICCSA 2014), University of Minho, Campus de Azurém, Guimarães, Portugal, 30 June–3 July 2014; Lecture Notes in Computer Science, Volume 8582. Springer International Publishing: Cham, Switzerland, 2014; pp. 417–428.

- van Ruler, B. Communication Theory: An Underrated Pillar on Which Strategic Communication Rests. Int. J. Strateg. Commun. 2018, 12, 367–381.

- Rowley, J. The Wisdom Hierarchy: Representations of the DIKW Hierarchy. J. Inf. Sci. 2007, 33, 163–180.

- MacKinnon, J.; Heldsinger, N.; Peddle, S. A Community Guide to Effective Flood Risk Communication; Partners for Action: Waterloo, ON, Canada, 2018.

- Rollason, E.; Bracken, L.J.; Hardy, R.J.; Large, A.R.G. Rethinking flood risk communication. Nat. Hazards 2018, 92, 1665–1686.

- Zajac, M.; Kulawiak, C.; Li, S.; Erickson, C.; Hubbell, N.; Gong, J. Unifying Flood-Risk Communication: Empowering Community Leaders Through AI-Enhanced, Contextualized Storytelling. Hydrology 2025, 12, 204.

- Steen-Tveit, K. Identifying Information Requirements for Improving the Common Operational Picture in Multi-Agency Operations. In Proceedings of the 17th ISCRAM Conference, Blacksburg, VA, USA, 24–27 May 2020; pp. 252–263.

- Dorasamy, M.; Raman, M.; Kaliannan, M. Knowledge management systems in support of disasters management: A two-decade review. Technol. Forecast. Soc. Change 2013, 80, 1834–1853.

- Guarino, N. Formal Ontology and Information Systems. In Proceedings of the Formal Ontology in Information Systems (FOIS’98), Trento, Italy, 6–8 June 1998; pp. 3–15.

- National Weather Service (NWS). CAP Documentation–NWS Common Alerting Protocol. Available online: https://vlab.noaa.gov/web/nws-common-alerting-protocol/cap-documentation (accessed on 28 August 2025).

- Asim, M.N.; Wasim, M.; Khan, M.U.G.; Mahmood, W.; Abbasi, H.M. A Survey of Ontology Learning Techniques and Applications. Database 2018, 2018, bay101.

- Ghidalia, S.; Labbani Narsis, O.; Bertaux, A.; Nicolle, C. Combining Machine Learning and Ontology: A Systematic Literature Review. arXiv 2024, arXiv:2401.07744.

- Zulkipli, Z.Z.; Maskat, R.; Teo, N.H.I. A Systematic Literature Review of Automatic Ontology Construction. Indones. J. Electr. Eng. Comput. Sci. 2022, 28, 878–889.

- Val-Calvo, M.; Egaña-Aranguren, M.; Mulero-Hernández, J.; Almagro-Hernández, I.; Deshmukh, P.; Bernabé-Díaz, J.A.; Espinoza-Arias, P.; Sánchez-Fernández, J.L.; Mueller, J.; Fernández-Breis, G.T. OntoGenix: Leveraging Large Language Models for enhanced ontology engineering from datasets. Inf. Process. Manag. 2025, 62, 104042.

- Castro, A.; Pinto, J.; Reino, L.; Pipek, P.; Capinha, C. Large language models overcome the challenges of unstructured text data in ecology. Ecol. Inform. 2024, 82, 102742.

- Abid, S.K.; Sulaiman, N.; Chan, S.W. Present and Future of Artificial Intelligence in Disaster Management. In Proceedings of the International Conference on Engineering Management of Communication and Technology (EMCTECH), Vienna, Austria, 16–18 October 2023; IEEE: Kuala Lumpur, Malaysia, 2023; pp. 1–8.

- Kommineni, V.K.; König-Ries, B.; Samuel, S. From human experts to machines: An LLM-supported approach to ontology and knowledge graph construction. arXiv 2024, arXiv:2403.08345.

- Lo, A.; Jiang, A.Q.; Li, W.; Jamnik, M. End-to-End Ontology Learning with Large Language Models. In Proceedings of the 38th Conference on Neural Information Processing Systems (NeurIPS 2024), Vancouver, BC, Canada, 10–15 December 2024; NeurIPS Foundation: Vancouver, BC, Canada, 2024.

- Raees, M.; Meijerink, I.; Lykourentzou, I.; Khan, V.-J.; Papangelis, K. From explainable to interactive AI: A literature review on current trends in human-AI interaction. Int. J. Hum.-Comput. Stud. 2024, 189, 103301.

- Mazarakis, A.; Bernhard-Skala, C.; Braun, M.; Peters, I. What is critical for human-centered AI at work?—Toward an interdisciplinary theory. Front. Artif. Intell. 2023, 6, 1257057.

- Karanjit, R.; Samadi, V.; Hughes, A.; Murray-Tuite, P.; Stephens, K. Converging human intelligence with AI systems to advance flood evacuation decision making. Nat. Hazards Earth Syst. Sci. Discuss. 2024, in review.

- Lokala, U.; Lamy, F.; Daniulaityte, R.; Gaur, M.; Gyrard, A.; Thirunarayan, K.; Kursuncu, U.; Sheth, A. Drug Abuse Ontology to Harness Web-Based Data for Substance Use Epidemiology Research: Ontology Development Study. JMIR Public Health Surveill. 2022, 8, e24938.

- Tsaneva, S.; Sabou, M. Enhancing Human-in-the-Loop Ontology Curation Results through Task Design. ACM J. Data Inf. Qual. 2024, 16, 4.

- Lippolis, A.S.; Saeedizade, M.J.; Keskisärkkä, R.; Zuppiroli, S.; Ceriani, M.; Gangemi, A.; Blomqvist, E.; Nuzzolese, A.G. Ontology Generation Using Large Language Models. arXiv 2025, arXiv:2503.05388.

- Abolhasani, M.S.; Pan, R. OntoKGen: A Genuine Ontology and Knowledge Graph Generator Using Large Language Model. In Proceedings of the Annual Reliability & Maintainability Symposium (RAMS), Destin, FL, USA, 27–30 January 2025; pp. 20–25.

- Aggarwal, T.; Salatino, A.; Osborne, F.; Motta, E. Large Language Models for Scholarly Ontology Generation: An Extensive Analysis in the Engineering Field. Inf. Process. Manag. 2024, submitted.

- Fathallah, N.; Das, A.; De Giorgis, S.; Poltronieri, A.; Haase, P.; Kovriguina, L. NeOn-GPT: A Large Language Model-Powered Pipeline for Ontology Learning. In Proceedings of the Semantic Web: ESWC 2024 Satellite Events, Hersonissos, Crete, Greece, 26–30 May 2024; Meroño Peñuela, A., Corcho, O., Groth, P., Simperl, E., Tamma, V., Nuzzolese, A.G., Poveda-Villalón, M., Sabou, M., Presutti, V., Celino, I., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2025; Volume 15344, pp. 36–50.

- Bakker, R.M.; Di Scala, D.L.; de Boer, M.H.T. Ontology Learning from Text: An Analysis on LLM Performance. In Proceedings of the NLP4KGC: 3rd International Workshop on Natural Language Processing for Knowledge Graph Creation, in conjunction with SEMANTiCS 2024 Conference, Amsterdam, The Netherlands, 17–19 September 2024; CEUR Workshop Proceedings: Aachen, Germany, 2024.

- Funk, M.; Hosemann, S.; Jung, J.C.; Lutz, C. Towards Ontology Construction with Language Models. arXiv 2023, arXiv:2309.09898.

- Babaei Giglou, H.; D’Souza, J.; Auer, S. LLMs4OL: Large Language Models for Ontology Learning. In Proceedings of the 22nd International Semantic Web Conference, Athens, Greece, 6–10 November 2023; Proceedings, Part II.

- Li, N.; Bailleux, T.; Bouraoui, Z.; Schockaert, S. Ontology Completion with Natural Language Inference and Concept Embeddings: An Analysis. arXiv 2024, arXiv:2403.17216.

- Nayyeri, M.; Yogi, A.A.; Fathallah, N.; Thapa, R.B.; Tautenhahn, H.-M.; Schnurpel, A.; Staab, S. Retrieval-Augmented Generation of Ontologies from Relational Databases. arXiv 2025, arXiv:2506.01232.

- Yang, H.; Liu, Z.; Xiao, L.; Chen, J.; Zhu, R. An LLM Supported Approach to Ontology and Knowledge Graph Construction. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 5240–5246.

- Zhang, B.; Carriero, V.A.; Schreiberhuber, K.; Tsaneva, S.; Sánchez González, L.; Kim, J.; de Berardinis, J. OntoChat: A Framework for Conversational Ontology Engineering Using Language Models. In Proceedings of the 21st European Semantic Web Conference (ESWC 2024), Hersonissos, Crete, Greece, 26–30 May 2024; pp. 102–121.

- Benson, C.-B.; Sculley, A.; Liebers, A.; Beverley, J. My Ontologist: Evaluating BFO-Based AI for Definition Support. In Proceedings of the Workshop on the Convergence of Large Language Models and Ontologies, 14th International Conference on Formal Ontology in Information Systems (FOIS 2024), Enschede, The Netherlands, 2024; pp. 1–10.

- Li, J.; Garijo, D.; Poveda-Villalón, M. Large Language Models for Ontology Engineering: A Systematic Literature Review. Semant. Web J. 2025, submitted.

- David, A.O.; Ndambuki, J.M.; Muloiwa, M.; Kupolati, W.K.; Snyman, J. A Review of the Application of Artificial Intelligence in Climate Change-Induced Flooding—Susceptibility and Management Techniques. CivilEng 2024, 5, 1185–1198.

- Wang, B.; Xu, C.; Zhao, X.; Ouyang, L.; Wu, F.; Zhao, Z.; Xu, R.; Liu, K.; Qu, Y.; Shang, F.; et al. MinerU: An Open-Source Solution for Precise Document Content Extraction. arXiv 2024, arXiv:2409.18839.

- Tartir, S.; Arpinar, I.B.; Moore, M.; Sheth, A.; Aleman-Meza, B. OntoQA: Metric-Based Ontology Quality Analysis. In Proceedings of the IEEE Workshop on Evaluation of Ontologies for the Web (EON), Houston, TX, USA, 27 November 2005.

- Alirezaie, M.; Khameneh, A.M.; Nagel, T.; Pileggi, S.F. An Ontology-Based Reasoning Framework for Querying Satellite Images for Disaster Monitoring. Sensors 2017, 17, 2545.

| Ref. | Year | Domain | Location | Informed-by | Methodology | Intended Users | Use Case | Evaluation | Formality Score | Technology |
|---|---|---|---|---|---|---|---|---|---|---|
| [6] | 2025 | Flood-process observation | China | UNISDR stages; China Disaster-Relief Plan (2023); OGC Time + GeoSPARQL; W3C SSN; domain experts | Bottom-up, reuse-based design (Protégé); No AI | Emergency managers; flood GIS analysts | Integrated query/decision support across flood stages | Henan 2021 case-study + OntoQA metrics | 5/5 | OWL in Protégé; GraphDB |
| [16] | 2024 | Flood-impact assessment | UK | Existing ontologies (ENVO, SWEET); GeoSPARQL; Public APIs (EA, Met Office, HM Land Registry) | Hybrid top-down/bottom-up, competency-question driven; No AI | Emergency planners; city planners; software agents | Real-time flood-risk impact assessment | Competency questions; HermiT reasoning | 5/5 | OWL in Protégé; Blazegraph |
| [15] | 2023 | Disaster-general | India | National Disaster Management Plan (India); National Disaster Management Authority matrix; BFO; literature | BFO-aligned custom modelling; OWL-DL + SWRL; No AI | Government disaster managers | Responsibility allocation and relief-decision support | Scenario-based reasoning tests | 5/5 | OWL-DL + SWRL in Protégé |
| [17] | 2023 | Flood-response | Bangalore, India | Authoritative documents (NDMP, KSDMP); competency questions; existing ontologies (FOAF, EM-DAT) | YAMO + NeOn methods; No AI | Emergency responders | Urban-flood rescue/relief coordination | Reasoners; OOPS!; SPARQL CQs | 5/5 | OWL DL (Protégé) |
| [14] | 2022 | Flood disaster response & evacuation | France (Pyrénées) | Prior models; domain experts (firefighters); institutional databases (BD TOPO); hydraulic models | NeOn design methodology; No AI | Firefighters; emergency managers | Decision support for generating flood evacuation priorities | Real-world case study; performance testing (execution time); visualization | 5/5 | OWL (Protégé); SHACL; SPARQL; Virtuoso |
| [18] | 2021 | Flood-mitigation | Pakistan (Indus River) | Govt reports (NDMA/PDMA), irrigation manuals, existing ontologies, domain experts | UPON + METHONTOLOGY; No AI | Irrigation & disaster managers | River-flow/flood-mitigation coordination | Competency questions; HermiT reasoner | 5/5 | OWL 2 DL (Protégé) |
| [13] | 2020 | Flood-evacuation | Thailand | Foundational ontologies (UFO, DEMO); academic literature | Design Science Research; Uschold & King; Gómez-Pérez et al.; No AI | Flood response stakeholders | Structuring & sharing information for disaster response | Expert-based (semi-structured interviews) | 5/5 | OWL/OWL-S in Protégé; UML |
| [19] | 2020 | Flood-evacuation-center | Malaysia | Academic literature; existing ontologies; JKM domain input; previous research | Conceptual modelling (no stated framework); No AI | Emergency managers (JKM/NADMA) | Shared victim-profile data | None | 3/5 | Modeling diagrams |
| [12] | 2019 | Operational disaster response | France | Interviews with experts; feedback documents; BFO; CCO; prior ontologies | METHONTOLOGY; modularization; competency questions; No AI | Emergency responders | Cross-agency semantic messaging for operational response | HermiT consistency checks; SPARQL over competency questions (Richter-65) | 5/5 | OWL (Protégé) |
| [20] | 2019 | Flood-information | – | NOAA/FEMA/USGS docs; prior flood ontologies; domain experts | Top-down UML → XMI; No AI | Information-system developers; emergency managers | NLQ knowledge engine; data exchange; communication | Application-based + data-driven | 4/5 | UML/XMI (GenMyModel) |
| [21] | 2016 | Flood-change detection | – | Existing ontologies (BFO 2.0, W3C Time); spatial & temporal models (RCC-8, Allen interval algebra); domain observations | Ontology reuse; rule-based encoding; No AI | Remote-sensing analysts; emergency managers | Spatio-temporal flood detection in RS images | Automated reasoning tests (Pellet) | 5/5 | OWL-DL + SWRL (Protégé) |
| [22] | 2014 | Flood-forecasting | – | Existing ontologies (SSN, SWEET); hydro/hydraulic literature; domain experts | Uschold–Gruninger (skeletal, middle-out); No AI | Authorities; risk managers | Interoperable sensor–hydraulic flood forecast/alert | None | 5/5 | OWL in Protégé |

| Rejected Proposal (Entity/Hierarchy) | Rejection Reason | Explanation (Human Reviewer’s Rationale) |
|---|---|---|
| Hierarchy: SocioeconomicVulnerabilityFactors → Age → Gender → Race | Hallucination/Logical Error | The LLM incorrectly created a hierarchical chain in which Race is a subclass of Gender, and Gender is a subclass of Age. This structure is logically flawed. The human reviewer rejects this hierarchy and restructures the three as parallel sibling classes, all direct subclasses of SocioeconomicVulnerabilityFactors. |
| Entities: CommunityResilienceMeasures → Community; EnvironmentalContexts → GeographicArea → Community | Ambiguity | The LLM generated two concepts with nearly identical labels but placed them in different parts of the ontology, which creates significant ambiguity. A human reviewer consolidates these, likely keeping Community as a subclass of GeographicArea and relating it to CommunityResilienceMeasures through an object property (e.g., Community -hasResilienceMeasure-> ...), rather than making it a subclass. |
| Entity: EnvironmentalContexts → GeographicFactors → Geography | Semantic Drift | The concept “Geography” refers to an entire academic discipline and is far too broad for the specific scope of this ontology. It has “drifted” from the core topic of flood risk. The human reviewer rejects this entity in favor of more specific and relevant concepts such as Topography or Watershed. |
| Entity: FloodEvent → FloodCharacteristic → KonaStorm | Semantic Drift | “Kona Storm” is a highly specific type of cyclone that primarily affects Hawaii. Unless the ontology’s scope is explicitly global or focused on that region, this concept is too specific and not generalizable. The human reviewer rejects it to maintain the ontology’s focus on more broadly applicable flood concepts. |
| Entity: TypesOfFloods → CoastalFlood → 1%-annual-chance-flood-level | Hallucination/Logical Error | The parent class CoastalFlood describes a physical event (the inundation of land), while the proposed subclass 1%-annual-chance-flood-level is a statistical metric used to measure risk. A metric is a characteristic of a flood or a floodplain, not a type of flood itself. The human reviewer rejects it. |

| Ontology | Object Properties (P) | Subclass Relations | P / (P + Subclass Relations) | No. of Classes (C) | No. of Subclasses | Subclass Relations / No. of Subclasses | Axiom Count |

|---|---|---|---|---|---|---|---|

| FDSO | 114 | 607 | 0.16 | 403 | 607 | 1 | 2683 |

| DMDO | 12 | 1001 | 0.01 | 366 | 1001 | 1 | 4075 |

| OntoCity | 17 | 78 | 0.18 | 56 | 96 | 0.81 | 196 |

| Our Ontology | 473 | 343 | 0.58 | 350 | 343 | 1 | 3754 |
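
The two ratio columns in the comparison table (the fourth and seventh) can be reproduced from the count columns. The sketch below assumes they are P / (P + subclass relations) and subclass relations / number of subclasses; the table itself does not name these metrics, so the function names `property_share` and `subclass_ratio` are placeholders rather than the authors' terminology.

```python
# Counts copied from the comparison table:
# (object properties P, subclass relations, number of subclasses)
counts = {
    "FDSO": (114, 607, 607),
    "DMDO": (12, 1001, 1001),
    "OntoCity": (17, 78, 96),
    "Our Ontology": (473, 343, 343),
}


def property_share(p, subclass_relations):
    """Share of object properties among all relations (assumed definition)."""
    return p / (p + subclass_relations)


def subclass_ratio(subclass_relations, n_subclasses):
    """Subclass relations per subclass (assumed definition)."""
    return subclass_relations / n_subclasses


ratios = {
    name: (
        round(property_share(p, h), 2),
        round(subclass_ratio(h, n_sub), 2),
    )
    for name, (p, h, n_sub) in counts.items()
}

for name, (share, ratio) in ratios.items():
    print(f"{name}: {share:.2f}, {ratio:.2f}")
```

Under these assumed definitions the computed values agree with the tabulated ones for all four ontologies.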

| Test Question | Extracted Concepts | Type 1 | Type 2 | Type 3 | Type 4 |

|---|---|---|---|---|---|

| What factors make urban areas more susceptible to flooding compared to rural areas? | Urban areas | Coastal areas; 0.6188 | areas inhabited by homeless people; 0.4778 | areas inhabited by homeless people; 0.4691 | UrbanFlood; 0.5367 |

| | Rural areas | rural residents; 0.6994 | rural residents; 0.5519 | rural residents; 0.5231 | SocioeconomicVulnerabilityFactors; 0.5353 |

| | Flood susceptibility | Flood Risk; 0.7509 | SocioeconomicVulnerabilityFactors; 0.7287 | SocioeconomicVulnerabilityFactors; 0.6889 | EnvironmentalContexts; 0.4759 |

| | Urbanization | UrbanFlood; 0.4161 | Development Type; 0.3980 | Development Type; 0.3751 | Vulnerable Population; 0.4756 |

| | Land use | LandCover; 0.5916 | zoning; 0.5000 | zoning; 0.5182 | Age; 0.4682 |

| | Drainage systems | EvacuationCapacities; 0.4116 | Warning System; 0.4275 | Warning System; 0.4153 | Extreme Rainfall; 0.4669 |

| | Risk factors | Danger Factor; 0.5673 | socially vulnerable; 0.4989 | socially vulnerable; 0.5092 | Poverty; 0.4664 |

| How can communities identify which type of flooding poses the greatest risk to their specific location? | Communities | Community; 0.6570 | well-known community; 0.5149 | Social Network; 0.5022 | Vulnerable Population; 0.5541 |

| | Type of flooding | TypesOfFloods; 0.7795 | TypesOfFloods; 0.6840 | TypesOfFloods; 0.6607 | CommunityResilienceMeasures; 0.5445 |

| | Greatest risk | Health Risk; 0.5677 | socially vulnerable; 0.4122 | socially vulnerable; 0.4126 | Community Impact; 0.5334 |

| | Specific location | location; 0.6841 | Geographic Area; 0.5397 | Geographic Area; 0.4652 | GeographicFactors; 0.5304 |

| | Flood identification | Flood Event; 0.7138 | Geographic Area; 0.6244 | TypesOfFloods; 0.6140 | Flood Behavior; 0.5258 |

| What communication systems should communities establish before flooding occurs to coordinate during emergencies? | Communication systems | Reunification Systems; 0.4454 | Warning System; 0.4238 | mainstream media access; 0.3950 | LanguageBarriers; 0.5811 |

| | Communities | Community; 0.6570 | well-known community; 0.5149 | Social Network; 0.5022 | Warning System; 0.5593 |

| | Emergency coordination | Animal evacuation coordination; 0.6710 | unified evacuation orders; 0.6202 | unified evacuation orders; 0.6143 | EvacuationCapacities; 0.5228 |

| | Pre-flood phase | FloodPhases; 0.6231 | Impact Phase; 0.4933 | Impact Phase; 0.4781 | disaster information; 0.5087 |

| | Pre Flood Preparedness | PreFloodPreparedness; 0.8294 | Emergency Supply; 0.6055 | Emergency Supply; 0.5861 | Evacuating Jurisdictions; 0.5073 |

| | During/After Flood Needs | DuringFloodResponse; 0.6909 | meet basic human needs; 0.6454 | meet basic human needs; 0.6282 | reduce losses; 0.5044 |

| How can communities develop effective evacuation plans that account for all three flood phases? | Communities | Community; 0.6571 | well-known community; 0.5149 | Social Network; 0.5022 | Evacuating Jurisdictions; 0.5846 |

| | Evacuation plans | evacuation plan; 0.8730 | plan compliance; 0.6200 | plan compliance; 0.5991 | Evacuation Procedure; 0.5528 |

| | Flood phases | FloodPhases; 0.8984 | FloodPhases; 0.6546 | FloodPhases; 0.6101 | EvacuationCapacities; 0.5503 |

| | Effective development | Development Speed; 0.6358 | Development Type; 0.4732 | Development Type; 0.4000 | CommunityResilienceMeasures; 0.5422 |

| | Flood management | floodplain management tool; 0.7074 | FloodPhases; 0.6242 | flooding reduction; 0.6002 | FloodPhases; 0.5153 |
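
Each cell in the matching table pairs an ontology entity with a score. The sketch below shows how such a best-match cell could be produced, assuming the scores are cosine similarities between text embeddings; the table does not state the embedding model or the measure. The three-dimensional vectors are invented for illustration only, and `best_match` is a hypothetical helper. A real run would replace the toy vectors with embeddings of the concept text and of the ontology labels or descriptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_match(query_vec, candidates):
    """Return (label, score) for the candidate most similar to the query."""
    scored = ((label, cosine(query_vec, vec)) for label, vec in candidates.items())
    return max(scored, key=lambda pair: pair[1])


# Toy vectors, NOT real embeddings: stand-ins for three ontology labels.
ontology_vectors = {
    "FloodPhases": [0.9, 0.1, 0.2],
    "TypesOfFloods": [0.2, 0.9, 0.1],
    "Community": [0.1, 0.2, 0.9],
}

# Toy vector standing in for the extracted concept "Flood phases".
query = [0.8, 0.2, 0.3]

label, score = best_match(query, ontology_vectors)
print(f"{label}; {score:.4f}")
```

The printed string follows the same "entity; score" layout as the table cells, which makes it easy to compare candidate matching strategies column by column.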

| CQ Concepts vs. Ontology Labels | Q14 | Q15 | Q19 | Q20 | Q24 | Q25 | Q29 | Q30 | Q34 | Q35 | Q39 | Q40 | Total | Q-Cov | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.3 | 7/7 | 5/5 | 6/6 | 5/5 | 4/4 | 7/7 | 5/5 | 7/7 | 5/5 | 5/5 | 7/7 | 3/3 | 100.0% | 12/12 | |

| 0.4 | 7/7 | 5/5 | 6/6 | 5/5 | 4/4 | 7/7 | 5/5 | 7/7 | 5/5 | 4/5 | 7/7 | 3/3 | 98.5% | 12/12 | |

| 0.5 | 5/7 | 5/5 | 5/6 | 5/5 | 4/4 | 6/7 | 5/5 | 7/7 | 5/5 | 4/5 | 7/7 | 3/3 | 92.4% | 12/12 | |

| 0.6 | 3/7 | 4/5 | 5/6 | 5/5 | 4/4 | 2/7 | 5/5 | 6/7 | 4/5 | 3/5 | 7/7 | 2/3 | 75.8% | 12/12 | |

| 0.7 | 1/7 | 2/5 | 1/6 | 3/5 | 3/4 | 2/7 | 4/5 | 5/7 | 2/5 | 3/5 | 3/7 | 1/3 | 45.5% | 12/12 | |

| 0.8 | 0/7 | 0/5 | 1/6 | 2/5 | 2/4 | 0/7 | 3/5 | 4/7 | 1/5 | 2/5 | 1/7 | 0/3 | 24.2% | 8/12 | |

| 0.9 | 0/7 | 0/5 | 0/6 | 0/5 | 1/4 | 0/7 | 1/5 | 2/7 | 0/5 | 1/5 | 0/7 | 0/3 | 7.6% | 4/12 | |

| CQ Concepts vs. Ontology Descriptions | Q14 | Q15 | Q19 | Q20 | Q24 | Q25 | Q29 | Q30 | Q34 | Q35 | Q39 | Q40 | Total | Q-Cov | |

| 0.3 | 7/7 | 5/5 | 6/6 | 5/5 | 4/4 | 7/7 | 5/5 | 7/7 | 5/5 | 5/5 | 7/7 | 3/3 | 100.0% | 12/12 | |

| 0.4 | 6/7 | 5/5 | 6/6 | 5/5 | 4/4 | 4/7 | 5/5 | 7/7 | 5/5 | 4/5 | 5/7 | 3/3 | 89.4% | 12/12 | |

| 0.5 | 3/7 | 4/5 | 4/6 | 4/5 | 3/4 | 3/7 | 4/5 | 6/7 | 2/5 | 2/5 | 3/7 | 3/3 | 62.1% | 12/12 | |

| 0.6 | 1/7 | 2/5 | 3/6 | 3/5 | 1/4 | 2/7 | 3/5 | 3/7 | 2/5 | 2/5 | 1/7 | 1/3 | 36.4% | 12/12 | |

| 0.7 | 1/7 | 0/5 | 0/6 | 0/5 | 0/4 | 1/7 | 0/5 | 2/7 | 1/5 | 0/5 | 0/7 | 1/3 | 9.1% | 5/12 | |

| 0.8 | 0/7 | 0/5 | 0/6 | 0/5 | 0/4 | 0/7 | 0/5 | 0/7 | 0/5 | 0/5 | 0/7 | 0/3 | 0.0% | 0/12 | |

| 0.9 | 0/7 | 0/5 | 0/6 | 0/5 | 0/4 | 0/7 | 0/5 | 0/7 | 0/5 | 0/5 | 0/7 | 0/3 | 0.0% | 0/12 | |

| CQ Concepts vs. Ontology Label+Description | Q14 | Q15 | Q19 | Q20 | Q24 | Q25 | Q29 | Q30 | Q34 | Q35 | Q39 | Q40 | Total | Q-Cov | |

| 0.3 | 7/7 | 5/5 | 6/6 | 5/5 | 4/4 | 7/7 | 5/5 | 7/7 | 5/5 | 5/5 | 7/7 | 3/3 | 100.0% | 12/12 | |

| 0.4 | 6/7 | 5/5 | 5/6 | 4/5 | 4/4 | 5/7 | 5/5 | 7/7 | 5/5 | 4/5 | 7/7 | 3/3 | 90.9% | 12/12 | |

| 0.5 | 4/7 | 3/5 | 4/6 | 4/5 | 3/4 | 2/7 | 4/5 | 5/7 | 2/5 | 3/5 | 3/7 | 2/3 | 59.1% | 12/12 | |

| 0.6 | 1/7 | 2/5 | 2/6 | 3/5 | 1/4 | 2/7 | 3/5 | 2/7 | 2/5 | 2/5 | 1/7 | 1/3 | 31.8% | 12/12 | |

| 0.7 | 0/7 | 0/5 | 0/6 | 0/5 | 0/4 | 0/7 | 0/5 | 2/7 | 0/5 | 0/5 | 0/7 | 0/3 | 3.0% | 1/12 | |

| 0.8 | 0/7 | 0/5 | 0/6 | 0/5 | 0/4 | 0/7 | 0/5 | 0/7 | 0/5 | 0/5 | 0/7 | 0/3 | 0.0% | 0/12 | |

| 0.9 | 0/7 | 0/5 | 0/6 | 0/5 | 0/4 | 0/7 | 0/5 | 0/7 | 0/5 | 0/5 | 0/7 | 0/3 | 0.0% | 0/12 | |

| CQ Questions vs. Ontology Label+Description | Q14 | Q15 | Q19 | Q20 | Q24 | Q25 | Q29 | Q30 | Q34 | Q35 | Q39 | Q40 | Total | Q-Cov | |

| 0.3 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | 100.0% | 12/12 | |

| 0.4 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | 100.0% | 12/12 | |

| 0.5 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | 100.0% | 12/12 | |

| 0.6 | – | – | – | – | Y | – | – | Y | – | – | – | Y | 25.0% | 3/12 | |

| 0.7 | – | – | – | – | – | – | – | – | – | – | – | – | 0.0% | 0/12 | |

| 0.8 | – | – | – | – | – | – | – | – | – | – | – | – | 0.0% | 0/12 | |

| 0.9 | – | – | – | – | – | – | – | – | – | – | – | – | 0.0% | 0/12 |
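
The Total and Q-Cov columns in the threshold tables can be derived from the per-question counts. The sketch below assumes Total is the share of extracted concepts matched across all twelve competency questions and Q-Cov is the number of questions with at least one matched concept; the tables do not define either column, so this is one reading. The counts are copied from the "CQ Concepts vs. Ontology Labels" row at threshold 0.8, and `coverage` is a hypothetical helper name.

```python
# Concepts matched per competency question at threshold 0.8
# ("CQ Concepts vs. Ontology Labels" panel).
matched = {
    "Q14": 0, "Q15": 0, "Q19": 1, "Q20": 2, "Q24": 2, "Q25": 0,
    "Q29": 3, "Q30": 4, "Q34": 1, "Q35": 2, "Q39": 1, "Q40": 0,
}

# Concepts extracted per competency question (the denominators in the table).
totals = {
    "Q14": 7, "Q15": 5, "Q19": 6, "Q20": 5, "Q24": 4, "Q25": 7,
    "Q29": 5, "Q30": 7, "Q34": 5, "Q35": 5, "Q39": 7, "Q40": 3,
}


def coverage(matched, totals):
    """Return (percent of concepts matched, number of questions with a match)."""
    concept_cov = 100 * sum(matched.values()) / sum(totals.values())
    question_cov = sum(1 for q in matched if matched[q] > 0)
    return concept_cov, question_cov


concept_cov, question_cov = coverage(matched, totals)
print(f"Total: {concept_cov:.1f}%  Q-Cov: {question_cov}/{len(totals)}")
```

For this row the assumed definitions give 16 of 66 concepts and 8 of 12 questions, which agrees with the tabulated 24.2% and 8/12.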

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Erickson, C.; Zajac, M.; Guo, X.; Duan, Q.; Gong, J. A Semi-Automated Framework for Flood Ontology Construction with an Application in Risk Communication. Water 2025, 17, 2801. https://doi.org/10.3390/w17192801
