Deep Learning-Based Retrieval of Chlorophyll-a in Lakes Using Sentinel-1 and Sentinel-2 Satellite Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Data Collection

2.2.1. Sentinel-1 & Sentinel-2

2.2.2. Chl-a Concentration

- Filter an appropriate volume of sample (from 100 mL to 2000 mL) through a glass fiber filter (GF/F, 47 mm).

- Transfer the filter paper and an appropriate volume (5 to 10 mL) of acetone solution (acetone:water, 9:1) into a tissue grinder and homogenize the mixture.

- Place the homogenized sample in a stoppered centrifuge tube, seal it, and store it in darkness at 4 °C for 24 h.

- After 24 h, centrifuge the sample at 500 × g for 20 min, or filter it using a solvent-resistant syringe filter.

- Transfer an appropriate volume of the supernatant from the centrifuged sample into a 10 mm path-length absorption cell. Measure the absorbance at 663 nm, 645 nm, 630 nm, and 750 nm, using acetone (9:1) as a blank.

- Calculate the Chl-a concentration based on the measured absorbance values using Equation (1).
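The calculation step above can be sketched in code. Equation (1) is not reproduced in this text, so the sketch below assumes the widely used SCOR-UNESCO trichromatic form, which relies on the same four wavelengths (663, 645, 630, and 750 nm) and a 10 mm cell. The coefficients, the function name, and the argument names are assumptions for illustration and should be checked against the equation actually used in the study.

```python
def chl_a_concentration(a663, a645, a630, a750, extract_ml, filtered_l):
    """Estimate Chl-a in mg/m^3 from absorbances measured in a 10 mm cell.

    Assumes the SCOR-UNESCO trichromatic coefficients (an assumption,
    not taken from the source text).
    extract_ml: volume of the acetone extract (mL)
    filtered_l: volume of water sample filtered (L)
    """
    if filtered_l <= 0 or extract_ml <= 0:
        raise ValueError("volumes must be positive")

    # Subtract the 750 nm reading to correct for turbidity in the extract.
    x1 = a663 - a750
    x2 = a645 - a750
    x3 = a630 - a750

    # Pigment concentration in the extract (ug per mL of extract).
    pigment = 11.64 * x1 - 2.16 * x2 + 0.10 * x3

    # Scale by extract volume over filtered volume: ug/L equals mg/m^3.
    return pigment * extract_ml / filtered_l


if __name__ == "__main__":
    # Hypothetical absorbance readings, for illustration only.
    value = chl_a_concentration(0.25, 0.08, 0.05, 0.01, extract_ml=10, filtered_l=0.5)
    print(f"Chl-a = {value:.2f} mg/m^3")
```

The 750 nm subtraction is what makes the acetone blank and the turbidity correction matter: without it, residual scattering in the extract would inflate all three pigment absorbances.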

2.3. Data Curation

2.3.1. Preprocessing Satellite Imagery

2.3.2. Construct Chl-a Retrieval Algorithm Datasets

2.4. Deep Learning-Based Retrieval of Chl-a

2.4.1. Construct CNN Models

2.4.2. Model Evaluation

2.4.3. Model Explanations

3. Results

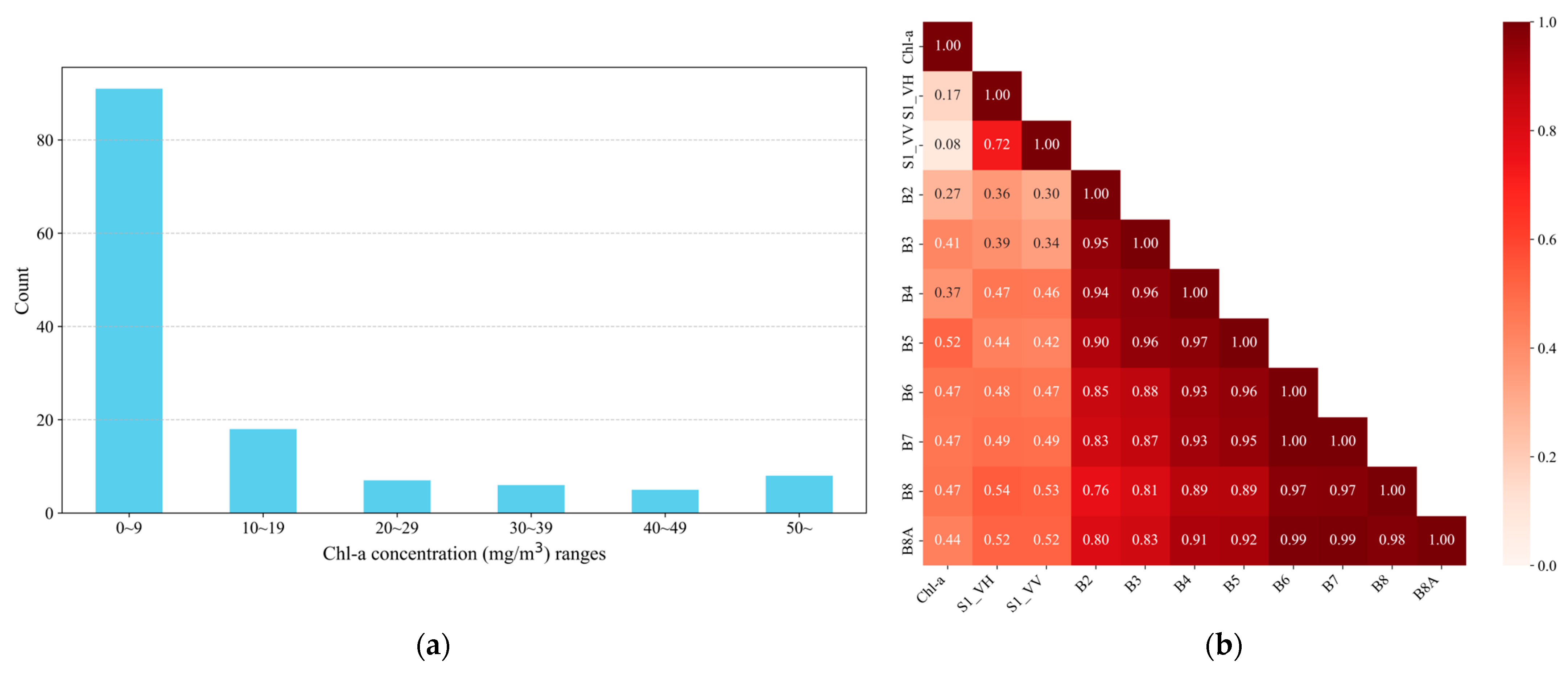

3.1. Characteristics of the Chl-a Retrieval Algorithm Datasets

3.2. Performance of the Chl-a Retrieval Algorithm

3.3. Evaluation of Variable Importance

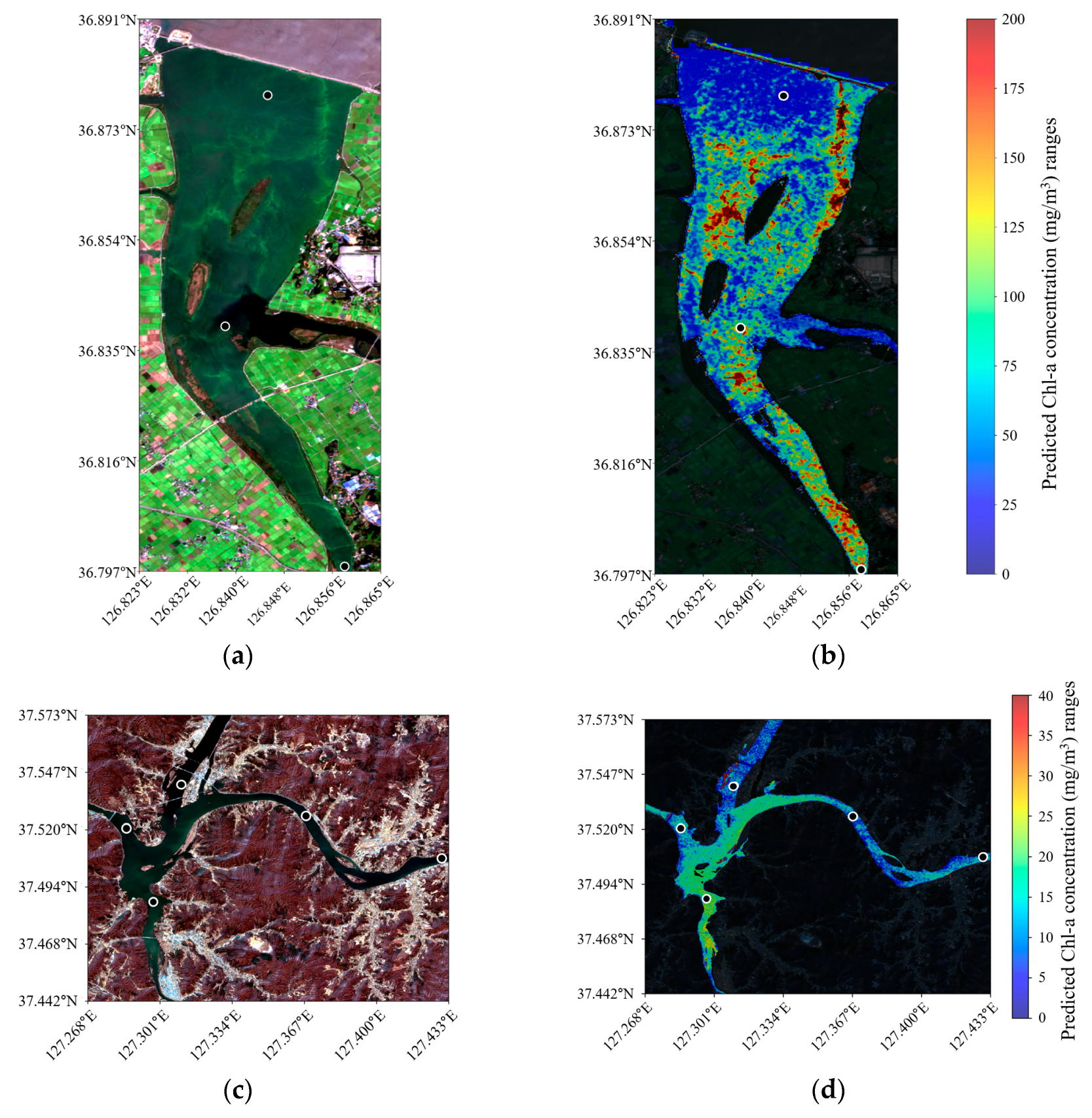

3.4. Spatial Distribution of Chl-a Concentration

4. Discussion

4.1. Effect of SAR Data on Chl-a Retrieval

4.2. Evaluation of SAR and Optical Imagery-Based Remote Monitoring

4.3. Effect of Small Dataset on Model Performance

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Chl-a | Chlorophyll-a |

| SAR | Synthetic-aperture radar |

| GRD | Ground range detected |

| SCL | Scene classification layer |

| CNN | Convolutional neural network |

| R2 | R-squared |

| RMSE | Root mean square error |

Appendix A

| Image Date | Sentinel-1 Relative Orbit Number | Sentinel-2 Tile ID | No. of Samples | No. of Lakes | Chl-a (mg/m3) |

|---|---|---|---|---|---|

| 28 January 2019 | 54 | T52SCG T52SDF | 4 | 3 | 5.93 (11.38) |

| 3 April 2019 | 127 | T52SCG T52SBF T52SDE T52SBD | 12 | 4 | 7.15 (22.21) |

| 3 May 2019 | 134 | T52SCE T52SCH T52SDF | 9 | 4 | 2.09 (3.25) |

| 2 July 2019 | 134 | T52SBF T52SCF | 5 | 3 | 46 (1552.63) |

| 1 August 2019 | 127 | T52SCF | 2 | 1 | 3 (2) |

| 8 August 2019 | 54 | T52SDG | 1 | 1 | 64.6 |

| 30 September 2019 | 127 | T52SBF T52SDF | 4 | 2 | 104.43 (9310.18) |

| 6 November 2019 | 61 | T52SDE T52SDD | 7 | 3 | 9.29 (52.50) |

| 11 November 2019 | 134 | T52SDD | 2 | 1 | 1.05 (0.604) |

| 4 February 2020 | 54 | T52SDE | 2 | 2 | 0.95 (1.13) |

| 5 March 2020 | 61 | T52SDF | 2 | 1 | 1 (0.02) |

| 23 March 2020 | 54 | T52SCG T52SCF T52SDF | 11 | 5 | 17.07 (102.62) |

| 8 June 2020 | 127 | T52SDF | 1 | 1 | 1.1 |

| 25 August 2020 | 134 | T52SCF T52SCE | 8 | 3 | 17.3 (277.72) |

| 6 October 2020 | 127 | T52SDE | 3 | 1 | 1.17 (0.04) |

| 23 November 2020 | 127 | T52SCF T52SCD T52SDG | 3 | 3 | 3.93 (9.16) |

| 4 January 2021 | 134 | T52SDF | 1 | 1 | 1.1 |

| 3 February 2021 | 127 | T52SDF | 1 | 1 | 1.1 |

| 18 March 2021 | 54 | T52SCH | 3 | 1 | 1.07 (0.30) |

| 23 March 2021 | 127 | T52SCG | 1 | 1 | 3.4 |

| 21 June 2021 | 134 | T52SCH T52SCG | 7 | 2 | 16.31 (227.26) |

| 21 July 2021 | 127 | T52SBF | 4 | 2 | 82.73 (6106.68) |

| 19 October 2021 | 134 | T52SCD | 1 | 1 | 6.2 |

| 17 January 2022 | 127 | T52SDG | 1 | 1 | 1.9 |

| 24 January 2022 | 54 | T52SDG | 1 | 1 | 1.7 |

| 17 May 2022 | 127 | T52SCD T52SDF | 2 | 2 | 1.6 (0.02) |

| 8 November 2022 | 54 | T52SCG T52SDE | 6 | 3 | 1.87 (8.53) |

| 13 March 2023 | 127 | T52SDF T52SCG T52SCF | 8 | 4 | 16.68 (249.75) |

| 20 March 2023 | 54 | T52SCF | 1 | 1 | 5.7 |

| 8 November 2023 | 127 | T52SDF T52SDD | 4 | 2 | 1.65 (0.04) |

| 20 November 2023 | 127 | T52SDF | 1 | 1 | 1.6 |

| 7 March 2024 | 127 | T52SBF | 1 | 1 | 31 |

| 5 July 2024 | 127 | T52SCG | 1 | 1 | 0.4 |

| 3 September 2024 | 127 | T52SCF | 12 | 1 | 32.27 (39.40) |

| 10 September 2024 | 54 | T52SDG T52SDE | 3 | 8 | 17.48 (1520.31) |

| Name | Latitude | Longitude | No. of Sampling Sites | Lake Area | Chl-a (mg/m3) |

|---|---|---|---|---|---|

| Ganwol | 36°61′68″ | 126°47′8″ | 2 | 26.4 | 69.97/63.48 |

| Gyeongcheonji | 36°02′35″ | 127°23′93″ | 2 | 3.2 | 8.17/7.5 |

| Gyeongpo | 37°79′94″ | 128°90′98″ | 2 | 0.9 | 16.58/25.39 |

| Gwangdong | 37°34′16″ | 128°94′98″ | 1 | 1 | 5.87/5.45 |

| Gimcheon Buhang | 35°98′51″ | 127°99′49″ | 1 | 2.5 | 5.46/4.56 |

| Nakdong estuary | 37°00′07″ | 127°99′64″ | 2 | 2.2 | 21.73/16.26 |

| Namgang | 35°10′19″ | 128°01′53″ | 3 | 23.6 | 3.41/2.70 |

| Dalbang | 37°50′67″ | 129°03′43″ | | 0.5 | 4.57/3.94 |

| Daeahji | 35°98′12″ | 127°26′19″ | 3 | 2.3 | 4.67/3.02 |

| Daecheong | 36°37′11″ | 127°49′56″ | 6 | 72.8 | 7.63/10.10 |

| Dae | 36°99′71″ | 126°46′97″ | 3 | 60.4 | 33.49/29.47 |

| Doam | 37°36′14″ | 128°42′27″ | 2.2 | 2.2 | 25.03/33.11 |

| Milyang | 38°25′44″ | 128°55′64″ | 2 | 3 | 2.87/2.15 |

| Boryeong | 36°24′15″ | 126°65′59″ | 3 | 5.8 | 4.69/5.30 |

| Bohyeonsan | 35°84′61″ | 129°27′1″ | 1 | 1.5 | 11.55/9.03 |

| Bunam | 36°62′86″ | 126°36′26″ | 3 | 1.4 | 39.31/27.51 |

| Sapgyo | 36°37′11″ | 127°49′56″ | 3 | 28.3 | 48.08/45.54 |

| Soyang | 35°83′53″ | 129°50′95″ | 5 | 70 | 1.60/1.59 |

| Asan | 36°91′43″ | 126°92′33″ | 3 | 24.3 | 21.78/26.60 |

| Yongdam | 36°02′35″ | 127°23′93″ | 4 | 36.2 | 6.38/4.17 |

| Unmun | 37°08′12″ | 127°26′87″ | 1 | 7.8 | 6.16/3.95 |

| Woncheonji | 34°82′39″ | 128°63′66″ | 3 | 0.4 | 18.19/9.02 |

| Uiam | 35°98′51″ | 127°99′49″ | 3 | 17 | 7.52/6.29 |

| Imha | 36°24′15″ | 126°65′59″ | 3 | 26.4 | 1.85/0.89 |

| Jangseong | 36°62′86″ | 126°36′26″ | 2 | 6.9 | 9.32/7.36 |

| Jangheung | 35°54′59″ | 127°53′63″ | 4 | 10.3 | 5.02/2.94 |

| Junam | 37°72′42″ | 127°42′58″ | 1 | 7.8 | 65.15/57.20 |

| Juam | 35°67′71″ | 126°55′97″ | 3 | 33 | 8.03/8.72 |

| Cheongpyeong | 37°72′42″ | 127°42′58″ | 3 | 17.6 | 3.54/3.66 |

| Chuncheon | 37°97′90″ | 127°65′10″ | 3 | 2.7 | 5.94/4.63 |

| Chungju | 37°00′07″ | 127°99′64″ | 4 | 97 | 3.06/3.69 |

| Chungju jojeongji | 37°40′19″ | 127°86′36″ | 1 | 3.4 | 3.56/3.93 |

| Paldang | 35°98′12″ | 127°26′19″ | 5 | 36.5 | 18.30/17.89 |

| Hapcheon | 36°57′99″ | 128°78′21″ | 3 | 25 | 0.73/0.54 |

| Hwacheon | 35°84′61″ | 129°27′1″ | 3 | 38.2 | 2.04/1.11 |

| Processing | Parameter | Value |

|---|---|---|

| Apply Orbit File | Polynomial Degree | 33 |

| Thermal Noise Removal | Remove Thermal Noise | True |

| Border Noise Removal | Border Limit | 500 |

| Border Noise Removal | Trim Threshold | 0.5 |

| Calibration | Output Format | Sigma0 |

| Speckle-Filter | Filter Type | Lee Sigma |

| Speckle-Filter | Filter Size | 3 × 3 |

| Speckle-Filter | Window Size | 7 × 7 |

| Speckle-Filter | Sigma Value | 0.9 |

| Terrain Correction | DEM | SRTM 3Sec |

| Terrain Correction | Resampling Method | Bilinear Interpolation |

| Terrain Correction | Pixel Spacing | 10.0 m |

| Hyperparameter | Value |

|---|---|

| Epoch | 1000 |

| Batch size | 10 |

| Learning rate | 0.001 |

| Layer | Model A | Model B |

|---|---|---|

| Conv2D + ReLU + Batch normalization | (10, 120) | (8, 88) |

| Conv2D + ReLU + Batch normalization | (120, 120) | (88, 88) |

| Conv2D + ReLU + Batch normalization | (120, 80) | (88, 56) |

| Conv2D + ReLU + Batch normalization | (80, 80) | (56, 56) |

| Conv2D + ReLU + Batch normalization | (80, 72) | (56, 32) |

| Flatten | (72, 72) | (32, 32) |

| Linear + ReLU | (72, 80) | (32, 56) |

| Linear + ReLU | (80, 120) | (56, 88) |

| Linear + ReLU | (120, 1) | (88, 1) |
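To make the size difference between the two architectures concrete, the following sketch counts trainable parameters from the (input, output) pairs listed in the layer table. Several details are assumptions rather than facts from the source: the convolution kernel size is not stated (it is left as an argument), bias terms are assumed in every Conv2D and Linear layer, and each batch normalization layer is assumed to carry one scale and one shift per channel. The Flatten row, (72, 72) and (32, 32), suggests that the spatial extent is a single pixel after the last convolution, which the sketch also assumes.

```python
# (in_channels, out_channels) pairs copied from the layer table.
MODEL_A = {
    "conv": [(10, 120), (120, 120), (120, 80), (80, 80), (80, 72)],
    "linear": [(72, 80), (80, 120), (120, 1)],
}
MODEL_B = {
    "conv": [(8, 88), (88, 88), (88, 56), (56, 56), (56, 32)],
    "linear": [(32, 56), (56, 88), (88, 1)],
}


def count_parameters(spec, kernel_size=3):
    """Count trainable parameters; kernel_size is an assumed value."""
    total = 0
    for c_in, c_out in spec["conv"]:
        # Convolution weights plus one bias per output channel.
        total += c_in * c_out * kernel_size * kernel_size + c_out
        # Batch normalization: learnable scale and shift per channel.
        total += 2 * c_out
    for n_in, n_out in spec["linear"]:
        # Fully connected weights plus one bias per output unit.
        total += n_in * n_out + n_out
    return total


if __name__ == "__main__":
    for name, spec in (("Model A", MODEL_A), ("Model B", MODEL_B)):
        for k in (1, 3):
            print(f"{name}, {k}x{k} kernels: {count_parameters(spec, k)} parameters")
```

Whatever kernel size was actually used, Model A is roughly twice the size of Model B under these assumptions. Counts of this order are large relative to the sample sizes in Appendix A, which is relevant to the small-dataset discussion in Section 4.3.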

| Metric | Model A (Train) | Model A (Test) | Model B (Train) | Model B (Test) |

|---|---|---|---|---|

| R2 | 0.8958 | 0.7992 | 0.8939 | 0.7075 |

| RMSE (mg/m3) | 11.3303 | 10.3282 | 11.2962 | 12.4649 |

| RPD | 3.0604 | 2.2315 | 3.0696 | 1.8489 |

| Bias (mg/m3) | −0.0529 | −0.4360 | 0.6826 | 0.1625 |
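The four metrics in the table can be computed as in the sketch below. The definitions are the conventional ones and are assumptions here, since the evaluation section is not reproduced in this text: RPD is taken as the standard deviation of the observations divided by the RMSE, and bias as the mean of predicted minus observed values. The function name and the sample values are hypothetical.

```python
import math


def evaluate(observed, predicted):
    """Return R2, RMSE, RPD, and bias for paired observations and predictions."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")

    mean_obs = sum(observed) / n
    # Sum of squared errors and total sum of squares.
    sse = sum((p - o) ** 2 for o, p in zip(observed, predicted))
    sst = sum((o - mean_obs) ** 2 for o in observed)
    if sse == 0 or sst == 0:
        raise ValueError("metrics undefined for perfect fit or constant observations")

    rmse = math.sqrt(sse / n)
    sd_obs = math.sqrt(sst / n)  # population standard deviation (assumed)
    return {
        "r2": 1.0 - sse / sst,
        "rmse": rmse,
        "rpd": sd_obs / rmse,
        "bias": sum(p - o for o, p in zip(observed, predicted)) / n,
    }


if __name__ == "__main__":
    # Hypothetical Chl-a values in mg/m^3, for illustration only.
    print(evaluate([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0]))
```

With these definitions RPD equals 1/sqrt(1 − R2). The test columns agree with this relation (for Model A, 1/sqrt(1 − 0.7992) ≈ 2.23 against a reported 2.2315), which suggests, but does not confirm, that the study used the same definitions.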

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jeong, B.; Lee, S.; Heo, J.; Lee, J.; Lee, M.-J. Deep Learning-Based Retrieval of Chlorophyll-a in Lakes Using Sentinel-1 and Sentinel-2 Satellite Imagery. Water 2025, 17, 1718. https://doi.org/10.3390/w17111718