Abstract

In practical production environments, the complexity and variability of hydroelectric units often leave too little fault data, which limits the fault identification accuracy of data-driven intelligent diagnostic models. To address this issue, this paper introduces a novel fault diagnosis method for hydroelectric units in unbalanced small-sample states based on the Wasserstein generative adversarial network (W-GAN). First, the fast Fourier transform (FFT) converts the signal from the time domain to the frequency domain to obtain spectral data. A W-GAN is then trained to generate false (synthetic) spectral data with the same probability distribution as the real fault data; these are combined with the actual data and fed into a one-dimensional convolutional neural network (1D-CNN) for feature extraction and fault diagnosis. To assess the effectiveness of the proposed model, a case study was conducted using actual data from a hydropower plant in China. The experimental results show that augmenting the small-sample data effectively enriches the sample features and improves the accuracy of fault diagnosis, which verifies the effectiveness of the proposed method.

1. Introduction

Research on hydropower unit fault diagnosis is important for ensuring the safe and stable operation of hydropower units. A unit failure can destabilize and endanger the power station and the power grid and may even cause irreversible damage [1]. Fault diagnosis research allows unit faults to be detected in time, ensuring safe operation and prolonging the unit's service life. It can also improve the reliability and efficiency of unit operation and reduce maintenance costs and energy consumption, which matters for the sustainable development and utilization of hydropower [2,3].

Research indicates that most mechanical failures in hydroelectric units can be detected via vibration analysis [4,5]. Vibration signals are a crucial indicator of the operational status of hydroelectric units, as they carry vital information about the units' internal condition and potential faults. Analyzing and processing these signals makes it possible to detect equipment anomalies in a timely manner, predict potential failures, reduce downtime, and enhance the availability and operational efficiency of the units, thereby ensuring their safe and stable operation [6,7]. Because faults in rotating machinery such as hydroelectric units change the frequency distribution of the vibration signal, some researchers rely on frequency-domain analysis based on the Fourier transform, which converts the signal from the time domain to the frequency domain to obtain its spectrum. The spectrum contains the amplitude, phase, and other information of each frequency component, so spectral analysis occupies a vital position in fault feature extraction for rotating machinery [8]. Researchers in China and abroad have studied this extensively. For example, Attoui et al. applied the discrete wavelet transform (DWT) and the fast Fourier transform (FFT) to extract the amplitude of the primary bearing defect frequency from the vibration signals of rotating machinery as a fault feature [9]. Zhang et al. proposed an intelligent fault diagnosis method for rolling bearings based on the short-time Fourier transform and a convolutional neural network [10]. Although these methods can visualize the frequency distribution of vibration signals to help identify possible causes of faults, they require substantial prior knowledge of spectral analysis and are therefore inefficient for fault diagnosis.

With the rapid development of machine learning and deep learning, data-driven fault diagnosis methods, represented by convolutional neural networks (CNNs), are widely used in fault diagnosis [11,12,13,14]. These intelligent algorithms require little expert experience: they perform adaptive feature dimensionality reduction, feature extraction, and classification directly on the data, and their diagnostic efficiency is high. For example, Kolar et al. used Bayesian optimization to tune the hyperparameters of a convolutional neural network; with the optimized hyperparameters, the CNN model classified eight different machine states and two rotational speeds, which effectively improved the diagnostic accuracy [11]. Wang et al. proposed an intelligent fault diagnosis method based on Radargram and a GoogLeNet improved with depthwise separable convolution, addressing the low recognition accuracy and slow computation of existing fault diagnosis models for hydroelectric generators [12].

However, data-driven algorithms such as deep learning need large amounts of training data [15,16]. In contrast, faults in hydropower units are relatively rare, and because the working environment of hydropower units is complex and variable, the available fault data are often limited, which restricts the model's accuracy and generalization ability. For fault diagnosis under unbalanced small-sample conditions, many researchers in other fields have used generative adversarial networks (GANs) to augment a limited number of fault samples. For example, Zhang et al. used a GAN to augment the vibration signals of wind turbines and thereby address data imbalance [17]. Yang et al. extended the sample set by learning the true distribution of bearing fault samples with conditional generative adversarial networks (CGANs) and then used a convolutional neural network to improve fault diagnosis accuracy [18]. Chen et al. employed a GAN to address insufficient labeled data and then improved the accuracy of wind turbine fault diagnosis with a long short-term memory (LSTM) network optimized by Bayesian methods [19]. These studies show that GANs can effectively overcome the feature scarcity caused by insufficient samples, providing ample data for fault diagnosis and a viable way to improve diagnostic accuracy under small-sample conditions.

To date, fault diagnosis for hydroelectric generating units has faced a similar issue: fault samples for these units are scarce and difficult to obtain. Normal samples are therefore abundant while fault samples make up a very small proportion, which is disadvantageous for fault diagnosis. Moreover, to the authors' knowledge, research on imbalanced small-sample fault diagnosis for hydroelectric generating units remains limited. It is therefore necessary to study fault diagnosis for hydroelectric generating units under small-sample imbalance and to explore how to augment data effectively when fault samples are limited, thereby improving the accuracy of fault diagnosis and filling this research gap.

The W-GAN-based fault diagnosis method proposed in this paper for hydroelectric units in an imbalanced small-sample state works as follows. First, the FFT converts the signal from the time domain to the frequency domain to obtain the spectrum of the vibration signal. The spectral samples are then expanded with the W-GAN, and the data generated by the W-GAN at different epochs are each combined with the actual data. Each combined dataset is fed into a one-dimensional convolutional neural network (1D-CNN) for fault diagnosis, thus improving the fault diagnosis accuracy. The main innovations are as follows:

- (1) To address the scarcity of fault samples for hydropower units, we propose a W-GAN-based data augmentation approach for hydropower unit data;

- (2) We effectively expand the sample features and improve the accuracy of fault diagnosis;

- (3) For cases in which the training data are very small or the sample features lack diversity, we make full use of the data produced by the W-GAN Generator at different training epochs, combining them with the actual data to enrich the sample features.

The paper is organized as follows: Section 2 briefly introduces the mathematical principles of GAN, W-GAN, and 1D-CNN. Section 3 focuses on the implementation process of the methodology proposed in this paper. Section 4 verifies the validity and superiority of the methodology of this paper through engineering cases. Finally, Section 5 gives the conclusion.

2. Theoretical Background

2.1. W-GAN

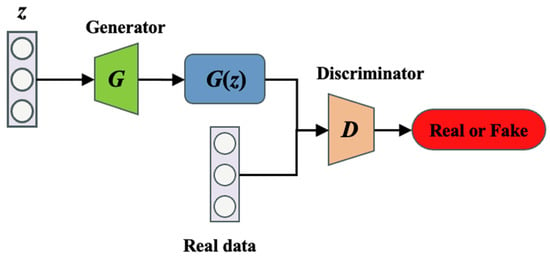

The GAN is an unsupervised deep learning architecture proposed by Goodfellow et al. in 2014 [20]. Its structure is shown in Figure 1, and the definitions of its specific parameters are given in Table 1. A GAN mainly comprises a Generator and a Discriminator, and its core idea is to set these two networks against each other: the Generator produces false data, while the Discriminator tries to distinguish whether its input comes from the real dataset or from the Generator. Over many epochs, the Generator makes its data more realistic, while the Discriminator continuously improves its classification accuracy. Theoretically, when the model reaches a Nash equilibrium, the distribution of the generated data matches that of the actual data, the Discriminator can no longer tell them apart, and the model is considered to have reached its optimal training state.

Figure 1.

Generative adversarial network structure.

Table 1.

Constituent elements and their roles in generative adversarial networks (GANs).

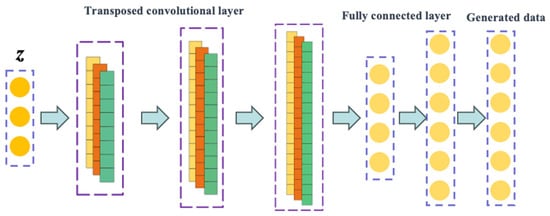

The Generator usually consists of network layers such as fully connected, transposed convolution (deconvolution), pooling, and batch normalization layers [21]; its structure is shown in Figure 2. Its primary role is to convert a random, uniformly distributed input vector into an output similar to the actual data. During training, the Generator aims to deceive the Discriminator so that it cannot tell whether the generated data are real or false. The Generator's parameters are therefore adjusted so that its output is as close as possible to the distribution of the actual data.

Figure 2.

Generator structure.

The primary role of the Discriminator is to determine whether its input data come from the Generator or from the actual dataset. Its structure is similar to that of a convolutional neural network, consisting of fully connected, convolutional, pooling, batch normalization, and other layers. When the input is a one-dimensional signal, a one-dimensional convolution is first applied to extract the signal's feature information. The extracted feature vector is then fed into a fully connected layer and mapped by a nonlinear transformation to a value between 0 and 1, indicating the probability that the input signal is real.

W-GAN builds on the GAN by using the Wasserstein distance as its loss function, calculated as shown in Equation (1). The Wasserstein distance provides a more meaningful measure of the difference between two distributions: as it decreases, the generated data become more similar to the real data. Compared with the traditional GAN loss, W-GAN therefore offers smoother gradients, a more meaningful loss value, and a lower probability of mode collapse during training.

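$$
W(P_r, P_g) = \inf_{\gamma \in \Pi(P_r, P_g)} \mathbb{E}_{(x, y) \sim \gamma}\left[ \lVert x - y \rVert \right] \tag{1}
$$

where $x$ and $y$ represent real and generated samples, $\Pi(P_r, P_g)$ denotes the set of all joint distributions $\gamma(x, y)$, and $P_r$ and $P_g$ are the marginal distributions of $x$ and $y$.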
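Equation (1) is an optimal-transport problem and is rarely evaluated directly. For one-dimensional samples of equal size, however, the optimal coupling simply pairs the sorted values, which gives a small exact illustration of what the distance measures. The following is a toy sketch for intuition only, not part of the authors' pipeline; the function name `wasserstein_1d` is hypothetical:

```python
def wasserstein_1d(real, generated):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples.

    For equal-weight empirical distributions on the real line, the optimal
    coupling in Eq. (1) pairs the sorted samples, so the infimum reduces to
    the mean absolute difference between the sorted values.
    """
    if len(real) != len(generated):
        raise ValueError("this sketch assumes equal sample counts")
    xs, ys = sorted(real), sorted(generated)
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)


# Shifting every sample by 1 moves the distribution by exactly 1.
print(wasserstein_1d([0.0, 1.0, 2.0], [1.0, 2.0, 3.0]))  # 1.0
# The same values in a different order form the same distribution.
print(wasserstein_1d([3.0, 0.0], [0.0, 3.0]))            # 0.0
```

For general 1-D samples, including unequal sizes, SciPy's `scipy.stats.wasserstein_distance` computes the same quantity. A W-GAN never sorts its 2048-dimensional spectra this way; instead, the Discriminator (critic) learns an estimate of the distance.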

2.2. 1D-CNN

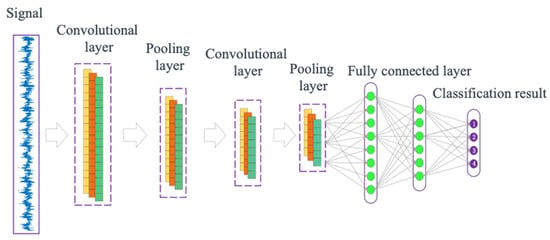

The 1D-CNN is mainly used to process one-dimensional sequence data, such as text and time series [22]; its network structure is shown in Figure 3. The 1D-CNN extracts features by convolving the input data with one-dimensional convolution kernels. Each kernel slides in one direction along the sequence and operates on only a local segment at a time, extracting local features that are then downsampled by pooling. Convolutional and pooling layers generally alternate: the pooling layer condenses the feature vector extracted by the convolutional layer to obtain deeper features, and the fully connected layer applies a nonlinear mapping to these features and ultimately outputs the classification result.

Figure 3.

One-dimensional convolutional neural network structure.
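The convolution-then-pooling step described above can be sketched in a few lines of NumPy. This is an illustrative forward pass only: the kernel `[-1, 2, -1]` is a hand-picked local-peak detector chosen for readability (in a trained 1D-CNN the kernels are learned), and the function names are hypothetical:

```python
import numpy as np


def conv1d_valid(signal, kernel, bias=0.0):
    """Slide a 1-D kernel along the signal (stride 1, no padding).

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    k = len(kernel)
    out_len = len(signal) - k + 1
    return np.array([signal[i:i + k] @ kernel for i in range(out_len)]) + bias


def relu(x):
    return np.maximum(x, 0.0)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    x = np.asarray(x, dtype=float)
    n = len(x) // size
    return x[: n * size].reshape(n, size).max(axis=1)


x = np.array([0.0, 1.0, 3.0, 1.0, 0.0, 2.0, 5.0, 2.0])
conv_out = conv1d_valid(x, [-1.0, 2.0, -1.0])  # responds strongly at local peaks
features = max_pool1d(relu(conv_out))          # keeps the strongest response per window
print(features)                                # [4. 0. 6.]
```

Each pooled value summarizes a window of the convolution output, so the 8-point input shrinks to 3 features while the two peaks (at values 3 and 5) remain visible.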

Usually, 1D-CNN uses cross entropy as the loss function. The mathematical expression of the loss function is shown in Equation (2).

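$$
L = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log \hat{y}_{i,c} \tag{2}
$$

where $\hat{y}_{i,c}$ is the predicted probability that sample $i$ belongs to class $c$; $y_{i,c}$ is the actual (one-hot) label; $C$ is the number of classes; and $N$ is the number of input data samples.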
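A minimal NumPy sketch of the cross-entropy loss in Equation (2), assuming one-hot labels and predicted class probabilities (for example, softmax outputs). The function names are illustrative:

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    logits = np.asarray(logits, dtype=float)
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(y_onehot, y_prob, eps=1e-12):
    """Mean categorical cross-entropy over N samples, following Eq. (2).

    y_onehot: (N, C) one-hot true labels; y_prob: (N, C) predicted probabilities.
    Probabilities are clipped at eps so that log(0) cannot occur.
    """
    y_prob = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0)
    return float(-np.mean(np.sum(np.asarray(y_onehot) * np.log(y_prob), axis=1)))


# Two samples, three classes (Class 1, Class 2, Class 3 -> columns 0, 1, 2).
y_true = np.array([[1, 0, 0],
                   [0, 0, 1]])
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.1, 0.8]])
loss = cross_entropy(y_true, y_pred)                             # -(ln 0.7 + ln 0.8) / 2
uniform_loss = cross_entropy(y_true, softmax(np.zeros((2, 3))))  # ln 3 for an uninformed model
```

In PyTorch, `torch.nn.CrossEntropyLoss` takes raw logits and integer class indices and applies log-softmax internally, so a network trained with it should not end in its own softmax layer.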

3. Proposed Method

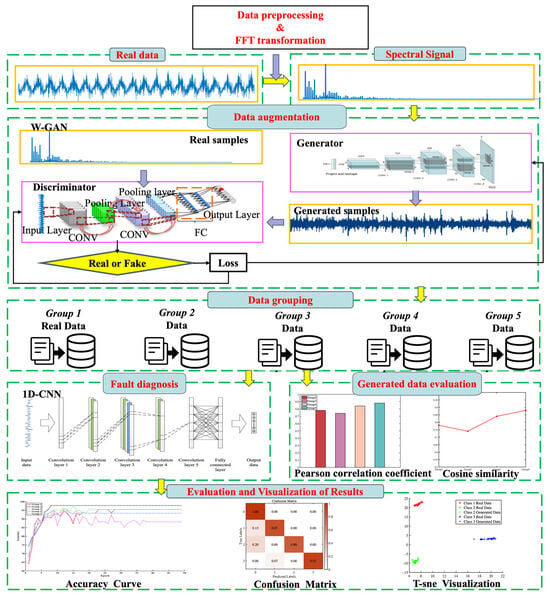

This paper proposes a W-GAN-based small-sample fault diagnosis method to address the low diagnostic accuracy for hydroelectric units under imbalanced small-sample conditions. First, the FFT converts the vibration signal from the time domain to the frequency domain. The actual data are then expanded via the W-GAN to enrich the sample features. Finally, the expanded samples are fed into the 1D-CNN for feature extraction and fault diagnosis, further improving the accuracy of fault diagnosis for the hydropower unit.

In addition, to address the low diagnostic accuracy caused by the scarcity of features in the unbalanced small-sample state of the hydropower unit, this paper also explores combining the data generated by the W-GAN Generator at different epochs with the actual data. The flowchart of the proposed W-GAN-based unbalanced small-sample fault diagnosis method for hydroelectric units is shown in Figure 4, and the specific steps are as follows:

Figure 4.

Flow diagram of the proposed method.

- Collect vibration waveform data from the hydroelectric unit using sensors;

- Apply zero-mean normalization to the collected data for each fault type of the hydroelectric unit;

- Convert the preprocessed waveform data to the frequency domain via the FFT to obtain their spectra;

- Divide the spectral dataset into a training set and a test set with class proportions that reproduce the unbalanced small-sample state; the training set is used to train the W-GAN, and the test set is used to validate the fault diagnosis model;

- Train the W-GAN on the training set, collect the data it generates at different epochs, and use them to expand the training set;

- Train the 1D-CNN on the expanded training set, and then feed the test set into the 1D-CNN for fault diagnosis.

4. Experimental Verification

4.1. Introduction of Experimental Data

On 28 August 2015, Unit 3 of a power station in China suffered a hydraulic imbalance failure when the annular steel plate of the unit's rotor chamber fell off; the failure is shown in Figure 5. Based on the status of the unit before and after the failure and the on-site maintenance report, experts determined that the unit operated normally until 26 August 2015. On 26 August 2015, the X-direction swing amplitude at the unit's turbine guide bearing began to vary significantly, and on 28 August 2015, the X-direction swing channel of the turbine guide bearing raised an alarm. The unit's turbine model is ZZA315-LJ-800, with a rated speed of 107.1 rpm, a rated power of 200 MW, and a rated head of 47 m. Accordingly, the operation of the unit was divided into three states: the normal state before 26 August 2015, the fault warning state between 26 and 28 August 2015, and the fault state after 28 August 2015. Because the X-direction swing channel of the turbine guide bearing is sensitive to changes in the unit's operating state, the X-direction swing waveforms of the turbine guide bearing in the three states were retrieved from the historical database for analysis.

Figure 5.

Failure of Unit 3 of SK Power Station.

4.2. Data Preprocessing

In this study, 40 samples of the normal operating state (hereinafter Class 1), 30 samples of the fault warning state (hereinafter Class 2), and 30 samples of the fault state (hereinafter Class 3) were collected. Each waveform covers 16 key phases and contains 4096 points sampled at 458 Hz.
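A quick consistency check on these acquisition parameters can help when reading the spectra later. Interpreting "16 key phases" as 16 once-per-revolution keyphasor periods, i.e., 16 rotor revolutions, is our assumption rather than something the text states; with the rated speed of 107.1 rpm from Section 4.1, it agrees with the record length:

$$
\begin{aligned}
T &= \frac{N}{f_s} = \frac{4096}{458\ \text{Hz}} \approx 8.94\ \text{s}, \\
f_r &= \frac{107.1\ \text{rpm}}{60\ \text{s/min}} = 1.785\ \text{Hz}, \qquad \frac{16}{f_r} \approx 8.96\ \text{s}, \\
\Delta f &= \frac{f_s}{N} = \frac{458\ \text{Hz}}{4096} \approx 0.112\ \text{Hz}, \qquad \frac{f_r}{\Delta f} \approx 16.0, \qquad f_{\text{Nyquist}} = \frac{f_s}{2} = 229\ \text{Hz}.
\end{aligned}
$$

Under this reading, the rotational (1×) frequency falls near bin 16 of the spectrum, and the spectrum below the Nyquist frequency spans roughly 0 to 229 Hz.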

- (a) Zero-mean normalization

For data preprocessing, this paper adopts zero-mean normalization, a widely recognized and applied technique in signal processing, image processing, and machine learning. It was chosen for two reasons: first, it removes the overall bias from the dataset, making the data more stable and easier to process; second, it gives the data a consistent distribution and a concentrated range [23].

For GANs, data normalization is an especially critical preprocessing step. Because GAN training depends on the distribution and range of the data, an uneven data distribution or a large data range may lead to poor training results. Normalization puts the data on a common scale with a concentrated range, which makes it easier to train a stable Generator and Discriminator. Here, the data are zero-mean normalized, i.e., the mean is subtracted from the data and the result is divided by the standard deviation, so that the normalized data have a mean of 0 and a standard deviation of 1, which makes the model easier to train. The zero-mean normalization is shown in Equation (3):

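$$
x^{*} = \frac{x - \mu}{\sigma} \tag{3}
$$

where $x$ represents the waveform data, $\mu$ is the mean of the data, $\sigma$ is the standard deviation of the data, and $x^{*}$ is the normalized waveform.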
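A minimal NumPy sketch of Equation (3). It assumes the statistics are computed per waveform; the text does not say whether per-waveform or per-dataset statistics were used. The synthetic waveform below is purely illustrative:

```python
import numpy as np


def zero_mean_normalize(x):
    """Eq. (3): subtract the mean and divide by the standard deviation.

    Statistics are computed per waveform (an assumption). np.std uses the
    population standard deviation (ddof=0).
    """
    x = np.asarray(x, dtype=float)
    sigma = x.std()
    if sigma == 0.0:
        raise ValueError("constant waveform: standard deviation is zero")
    return (x - x.mean()) / sigma


# Hypothetical raw waveform: 4096 points at 458 Hz with a large DC offset.
fs, n = 458.0, 4096
t = np.arange(n) / fs
raw = 250.0 + 40.0 * np.sin(2 * np.pi * 1.785 * t) + 5.0 * np.sin(2 * np.pi * 3.57 * t)
z = zero_mean_normalize(raw)  # mean ~0, standard deviation 1, same waveform shape
```

Because the transformation is affine, it removes the offset and rescales the amplitude without changing the relative shape of the waveform or the positions of its spectral peaks.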

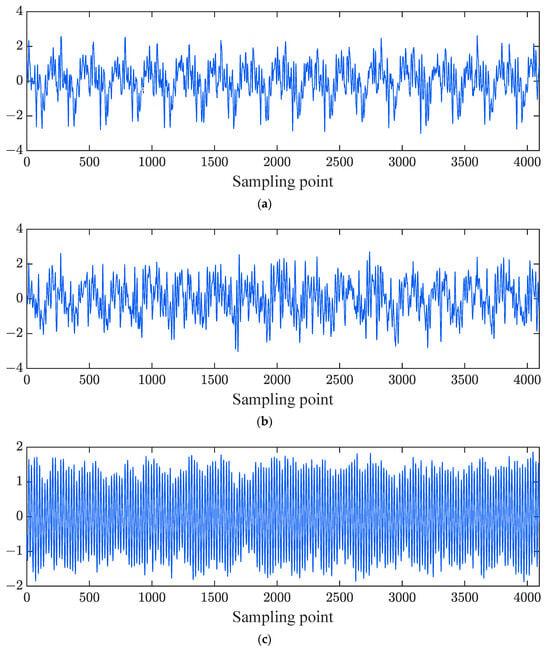

The time-domain waveforms of each class after zero-mean normalization are shown in Figure 6; the normalized amplitudes are dimensionless.

Figure 6.

Time domain waveform in different states. (a) Time domain waveform of Class 1 data. (b) Time domain waveform of Class 2 data. (c) Time domain waveform of Class 3 data.

- (b) Fourier Transform

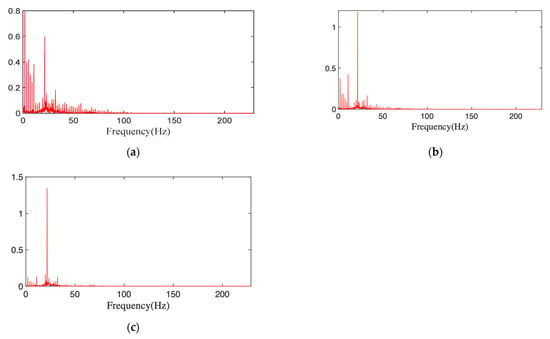

The FFT transforms the vibration signal of the hydroelectric unit from the time domain to the frequency domain, revealing the intensity of each frequency component. This is particularly important for fault diagnosis, because different failure modes or mechanical problems produce specific frequency components. Many characteristics of the vibration signal, such as the dominant frequency and the energy distribution, are apparent in the frequency domain, and these features are valuable for fault diagnosis, health monitoring, and predictive maintenance. The actual data, with 4096 sampling points and a sampling frequency of 458 Hz, were converted to the frequency domain with the FFT, with the FFT window size set to 1 s to match the sampling frequency and to ensure the integrity of the signal. The spectra of the three classes of vibration data obtained via the FFT are shown in Figure 7.

Figure 7.

Spectrum in different states. (a) Spectrum of Class 1 data. (b) Spectrum of Class 2 data. (c) Spectrum of Class 3 data.
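The FFT step described above can be sketched with NumPy's real-input FFT. Keeping the first N/2 = 2048 bins is an assumption made here to match the Generator's 1 × 2048 output described in Section 4.3; the text does not state exactly which bins were retained. The test tone is hypothetical:

```python
import numpy as np

FS = 458.0  # sampling frequency in Hz (Section 4.2)
N = 4096    # points per waveform (Section 4.2)


def amplitude_spectrum(x, fs=FS, n_bins=N // 2):
    """One-sided amplitude spectrum of a real, even-length waveform.

    Returns the first `n_bins` bins (DC up to, but excluding, the Nyquist bin).
    """
    x = np.asarray(x, dtype=float)
    amp = np.abs(np.fft.rfft(x)) / len(x)   # len(x) // 2 + 1 bins
    amp[1:] *= 2.0                          # one-sided scaling; exact for bins below Nyquist
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs[:n_bins], amp[:n_bins]


# Hypothetical unit-amplitude tone placed exactly on bin 16 (about 1.789 Hz,
# close to the 1.785 Hz rotational frequency implied by 107.1 rpm).
t = np.arange(N) / FS
x = np.sin(2 * np.pi * (16 * FS / N) * t)
freqs, amp = amplitude_spectrum(x)
peak = int(np.argmax(amp))  # bin 16, amplitude ~1.0
```

The one-sided scaling makes a sinusoid of amplitude A appear as a peak of height A, which keeps spectra from different records directly comparable.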

4.3. Data Augmentation Based on W-GAN

The proposed method uses the vibration spectra of the hydropower unit as the dataset, so the spectral dataset of the three classes must be divided before the W-GAN is trained. The training set is used to train the W-GAN to generate data, while the test set is used to test fault diagnosis accuracy. To create an unbalanced small-sample training set, the training set contains fewer Class 2 and Class 3 samples than Class 1 samples; the specific numbers are shown in Table 2.

Table 2.

Experimental data dataset.
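A sketch of how an imbalanced split like the one in Table 2 could be produced. The per-class training counts in the usage lines (20, 10, and 10) are illustrative assumptions, not values copied from Table 2, and the function name is hypothetical:

```python
import numpy as np


def imbalanced_split(X, y, n_train_per_class, seed=0):
    """Split (X, y) so that each class contributes a fixed number of training samples.

    n_train_per_class maps class label -> number of training samples; all remaining
    samples of that class go to the test set.
    """
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for label, n_train in n_train_per_class.items():
        idx = np.flatnonzero(y == label)
        if n_train > len(idx):
            raise ValueError(f"class {label}: asked for {n_train}, only {len(idx)} available")
        idx = rng.permutation(idx)
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    train_idx, test_idx = np.array(train_idx), np.array(test_idx)
    return X[train_idx], y[train_idx], X[test_idx], y[test_idx]


# 40 Class 1, 30 Class 2 and 30 Class 3 spectra (Section 4.2); the spectra are placeholders.
y = np.array([1] * 40 + [2] * 30 + [3] * 30)
X = np.zeros((len(y), 2048))
X_tr, y_tr, X_te, y_te = imbalanced_split(X, y, {1: 20, 2: 10, 3: 10})
```

Fixing the number of training samples per class, rather than a global ratio, is what makes the imbalance controllable.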

This experiment is based on the deep learning framework PyTorch, and the detailed parameters of the Generator and Discriminator architectures are shown in Table 3. The learning rate is 0.001, the number of training epochs is 300, and the batch size is 2. During training, a random noise vector of size 1 × 200 is drawn from a Gaussian distribution as the input to the Generator. After passing through several linear layers inside the Generator, it becomes a false spectrum of length 1 × 2048. The generated false spectra and the actual data are both fed into the Discriminator, to which a convolutional layer is added to strengthen its discriminative ability. Convolutional and pooling layers alternate: the pooling layer further condenses the feature vectors extracted by the convolutional layer, and the resulting deeper feature vectors are fed into a linear layer for discrimination. The Generator and Discriminator are trained adversarially. Eventually, the Discriminator establishes the best decision boundary between the actual and generated data distributions, and the data generated by the Generator approach the probability distribution of the actual data.

Table 3.

W-GAN network structure parameters.
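In practice, a W-GAN does not evaluate Equation (1) directly. Through the Kantorovich-Rubinstein duality, the critic (Discriminator) $D$ estimates it as $\max_{\lVert D \rVert_L \le 1} \mathbb{E}_{x \sim P_r}[D(x)] - \mathbb{E}_{z}[D(G(z))]$. This is also why the critic drops the final sigmoid used by a standard GAN Discriminator and outputs an unbounded score. The text does not say how the Lipschitz constraint was enforced; this sketch assumes weight clipping with c = 0.01, the setting of the original W-GAN. It also traces the 1 × 200 → 1 × 2048 shape path described above through a hypothetical two-layer Generator (hidden size 512 is an assumption). Gradients and optimizer steps are omitted; in PyTorch they would come from autograd:

```python
import numpy as np


def critic_loss(scores_real, scores_fake):
    """Critic loss: minimizing it maximizes E[D(x)] - E[D(G(z))],
    the critic's estimate of the Wasserstein distance."""
    return float(np.mean(scores_fake) - np.mean(scores_real))


def generator_loss(scores_fake):
    """Generator loss: minimizing -E[D(G(z))] raises the critic's score on generated data."""
    return float(-np.mean(scores_fake))


def clip_weights(params, c=0.01):
    """Weight clipping (original W-GAN) keeps the critic approximately Lipschitz."""
    return [np.clip(p, -c, c) for p in params]


rng = np.random.default_rng(0)
batch, noise_dim, spec_len, hidden = 2, 200, 2048, 512  # hidden=512 is hypothetical

# Hypothetical Generator: two linear layers with a ReLU, noise (1 x 200) -> spectrum (1 x 2048).
g_w1 = rng.normal(0.0, 0.05, (noise_dim, hidden))
g_w2 = rng.normal(0.0, 0.05, (hidden, spec_len))
z = rng.standard_normal((batch, noise_dim))            # Gaussian noise input
fake = np.maximum(z @ g_w1, 0.0) @ g_w2                 # generated spectra, shape (2, 2048)
real = np.abs(rng.standard_normal((batch, spec_len)))   # placeholder for real spectra

# Hypothetical linear critic without a sigmoid, so its scores are unbounded.
(critic_w,) = clip_weights([rng.normal(0.0, 0.05, spec_len)])
d_loss = critic_loss(real @ critic_w, fake @ critic_w)
g_loss = generator_loss(fake @ critic_w)
```

Because the critic loss is a difference of mean scores rather than a classification probability, its negative tracks the estimated distance, which is what gives W-GAN its more meaningful loss curve.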

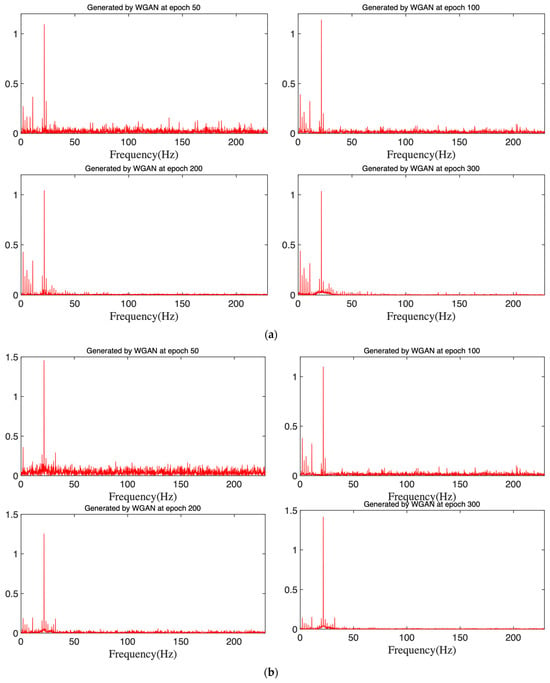

However, when the original dataset is small or lacks diversity, or when W-GAN training has not fully converged, the distribution of the Generator's output is more random. This may increase the diversity of the sample features and can therefore also help to expand the samples. Therefore, the W-GAN was trained for 300 epochs on the fault spectrum data, and the spectra produced by the Generator at epochs 50, 100, 200, and 300 were stored, as shown in Figure 8.

Figure 8.

Data generated via W-GAN at different epochs. (a) Class 2 data generated via W-GAN at different epochs. (b) Class 3 data generated via W-GAN at different epochs.
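Storing the Generator's output at selected epochs, as described above, only requires a check inside the training loop. In this sketch, `train_one_epoch` and `generate` are hypothetical callbacks standing in for the real W-GAN update and a Generator forward pass. The default of 10 samples per snapshot mirrors the 10 generated samples per fault type added in Section 4.4:

```python
import numpy as np

SNAPSHOT_EPOCHS = (50, 100, 200, 300)


def train_with_snapshots(train_one_epoch, generate, n_epochs=300, n_samples=10,
                         snapshot_epochs=SNAPSHOT_EPOCHS):
    """Train for n_epochs and keep a copy of the Generator's output at chosen epochs.

    train_one_epoch() and generate(n) are hypothetical callbacks for the W-GAN
    update loop and a Generator forward pass on n noise vectors.
    """
    snapshots = {}
    for epoch in range(1, n_epochs + 1):
        train_one_epoch()
        if epoch in snapshot_epochs:
            snapshots[epoch] = np.array(generate(n_samples), copy=True)
    return snapshots


# Dummy callbacks: each "spectrum" simply records the epoch at which it was generated.
state = {"epoch": 0}


def dummy_train_one_epoch():
    state["epoch"] += 1


def dummy_generate(n):
    return np.full((n, 2048), float(state["epoch"]))


snaps = train_with_snapshots(dummy_train_one_epoch, dummy_generate)
```

Copying each snapshot matters: if the generated arrays were views into a buffer reused later in training, earlier snapshots would be silently overwritten.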

Comparing Figures 7 and 8 shows that as the number of epochs increases, the generated data become increasingly similar to the original data. This indicates that the Generator learns the probability distribution of the original data through the Wasserstein distance estimated by the Discriminator. It also suggests that the model captures the latent structure of the original data without visibly overfitting or introducing unnecessary noise, reproducing the intrinsic structure of the data in the multidimensional data space.

4.4. Evaluation of the Data Generated

Pearson's correlation coefficient (PCC) and cosine similarity (CS) are commonly used to quantify the degree of similarity between two variables. To assess how closely the generated spectral data resemble the actual data, this paper therefore quantifies their similarity by computing the PCC and CS between the generated and actual data.

- (a) PCC

PCC measures the strength and direction of the linear relationship between two variables, and its value ranges from −1 to 1. The closer the PCC is to 1, the stronger the positive linear correlation, i.e., the more similar the two variables. Its formula is shown in Equation (4).

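$$
r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \tag{4}
$$

where $x_i$ and $y_i$ are the $i$th values of the vectors $x$ and $y$, $\bar{x}$ and $\bar{y}$ are the mean values of $x$ and $y$, and $n$ is the number of observations in the dataset.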
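A direct NumPy sketch of Equation (4). The spectra in the usage lines are random placeholders, not the paper's data:

```python
import numpy as np


def pearson(x, y):
    """Pearson correlation coefficient, Eq. (4)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()  # deviations from the means
    return float((dx @ dy) / (np.sqrt(dx @ dx) * np.sqrt(dy @ dy)))


rng = np.random.default_rng(1)
real = np.abs(rng.standard_normal(2048))            # placeholder "actual" spectrum
generated = real + 0.1 * rng.standard_normal(2048)  # placeholder "generated" spectrum
r = pearson(real, generated)                        # close to 1 for a small perturbation
```

`np.corrcoef(real, generated)[0, 1]` returns the same value. Because the means are subtracted and the result is normalized, the PCC is unchanged by any positive affine rescaling of either vector, so it compares spectral shape rather than absolute level.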

- (b) Cosine similarity

CS is a similarity measure commonly used in machine learning and data mining, especially in text mining. It is the cosine of the angle between two vectors, and its value ranges from −1 to 1. A high cosine similarity means that the two vectors point in nearly the same direction, i.e., their shapes are very similar regardless of their overall scale.

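$$
\mathrm{CS}(A, B) = \cos\theta = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert} \tag{5}
$$

where $A \cdot B$ is the inner product of vectors $A$ and $B$, and $\lVert A \rVert$ and $\lVert B \rVert$ are the Euclidean norms of vectors $A$ and $B$.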
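A NumPy sketch of cosine similarity. Unlike the PCC, it does not subtract the means. For amplitude spectra, which are non-negative, the CS is therefore never negative and can stay fairly high even when the PCC indicates anti-correlation, so the two measures give complementary views. The vectors below are illustrative:

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


same_direction = cosine_similarity([1, 2, 3], [2, 4, 6])  # 1.0: identical shape, different scale
reversed_shape = cosine_similarity([1, 2, 3], [3, 2, 1])  # 10/14, about 0.714 (the PCC here is -1)
orthogonal = cosine_similarity([1, 0], [0, 1])            # 0.0
```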

The PCC and CS between the generated and actual data are shown in Table 4. As the number of epochs increases, both the PCC and the CS gradually increase, indicating that the Generator gradually learns the probability distribution of the actual data and the generated data become increasingly similar to the actual data, consistent with the observations in Figure 8. To further evaluate the quality of the generated data, the generated data are then used for feature extraction and fault diagnosis with the 1D-CNN.

Table 4.

Calculation of PCC and CS for generated data and real data with different numbers of epochs.

The augmented fault dataset is shown in Table 5. Group 1 contains the data before augmentation, and the remaining groups are augmented datasets. Figure 8 shows that the data generated at epoch 50 still differ considerably from the original data, so they are discarded. The remaining generated data (epochs 100, 200, and 300) are each merged with the actual training set in Table 2 to form Groups 2, 3, and 4. The number of training samples in each group is shown in Table 5: 10 generated samples are added for each fault type so that every class has the same proportion of samples, giving a total of 60 training samples per group with an equal number of samples in each class.

Table 5.

Dataset after data augmentation.
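Forming one augmented group from a single epoch snapshot amounts to concatenating the generated spectra with the real training set and labeling them with their fault class. The counts in the usage lines (20 real Class 1 spectra and 10 real spectra of each fault class, plus 10 generated spectra per fault class) are our reading of the 60-sample, equal-proportion description above, not values copied from Tables 2 or 5. The function name is hypothetical:

```python
import numpy as np


def build_augmented_group(X_train, y_train, generated_by_class):
    """Append generated spectra (one W-GAN epoch snapshot) to the real training set.

    generated_by_class maps a class label to an array of shape (n, spectrum_length).
    """
    X_parts, y_parts = [np.asarray(X_train)], [np.asarray(y_train)]
    for label, X_gen in generated_by_class.items():
        X_gen = np.asarray(X_gen)
        X_parts.append(X_gen)
        y_parts.append(np.full(len(X_gen), label, dtype=y_parts[0].dtype))
    return np.concatenate(X_parts), np.concatenate(y_parts)


# Illustrative counts (an assumption): 20 + 10 + 10 real spectra, 10 generated per fault class.
y_train = np.array([1] * 20 + [2] * 10 + [3] * 10)
X_train = np.zeros((len(y_train), 2048))                      # placeholder real spectra
snapshot = {2: np.ones((10, 2048)), 3: np.ones((10, 2048))}  # placeholder generated spectra
X_aug, y_aug = build_augmented_group(X_train, y_train, snapshot)
```

Calling the function once per stored snapshot (for example, epochs 100, 200, and 300) yields one group per epoch, leaving the real training data identical across groups so that only the generated portion differs.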

4.5. Fault Diagnosis and Result Analysis

With the rapid development of big data and deep learning, intelligent algorithms such as CNNs are widely used in fault diagnosis to perform adaptive feature dimensionality reduction and feature extraction. The structure of the 1D-CNN built in this paper with the deep learning framework PyTorch is shown in Table 6. Because the CNN is trained in mini-batches, the training set is randomly divided into batches of eight samples. Stochastic gradient descent (SGD) is used as the optimizer, and the cross-entropy loss (CEL) function measures the difference between the model's predictions and the actual labels. The initial learning rate was set to 0.005 to balance convergence speed and training stability.

Table 6.

The 1D-CNN network structure.
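The training settings above (shuffled mini-batches of 8 and plain SGD with a learning rate of 0.005) can be sketched as follows. This is a framework-free illustration under those two stated settings. In PyTorch, the equivalents would be `torch.utils.data.DataLoader(dataset, batch_size=8, shuffle=True)` and `torch.optim.SGD(model.parameters(), lr=0.005)`. Whether momentum or weight decay was used is not stated, so none is applied here:

```python
import numpy as np


def minibatches(n_samples, batch_size=8, rng=None):
    """Yield shuffled index arrays of size batch_size (the last one may be smaller)."""
    rng = np.random.default_rng() if rng is None else rng
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


def sgd_step(params, grads, lr=0.005):
    """Plain SGD update theta <- theta - lr * grad (no momentum, no weight decay)."""
    return [p - lr * g for p, g in zip(params, grads)]


# 60 training samples per group (Section 4.4) split into batches of 8.
batches = list(minibatches(60, batch_size=8, rng=np.random.default_rng(0)))
batch_sizes = [len(b) for b in batches]  # seven full batches and a final batch of 4

params = [np.array([1.0, -2.0])]
grads = [np.array([2.0, 4.0])]
params = sgd_step(params, grads)         # [0.99, -2.02]
```

With 60 samples and a batch size of 8, each epoch performs 8 parameter updates, the last on a partial batch of 4.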

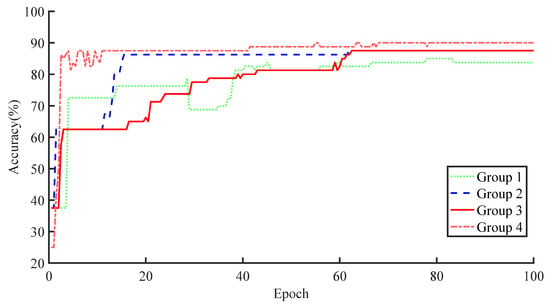

To explore how the features of the data generated at 100, 200, and 300 epochs (Section 4.3) differ, each group of data in Table 5 was fed into the 1D-CNN for classification, and the performance of the 1D-CNN on the test set was recorded. The accuracy curves are shown in Figure 9, where the x-axis is the training epoch and the y-axis is the test-set accuracy of the 1D-CNN. The Group 1 curve shows the test accuracy of the 1D-CNN trained without extended samples, whereas the curves for the other groups show the test accuracy after training with extended samples. The extended samples improve fault diagnosis accuracy relative to the unexpanded samples, which verifies the effectiveness of the proposed method. As the number of W-GAN training epochs increases, the Generator gradually learns the probability distribution of the actual data and the extended samples carry richer features, so the test accuracy of the 1D-CNN gradually improves.

Figure 9.

Accuracy of different group data in the test set in 1D-CNN.

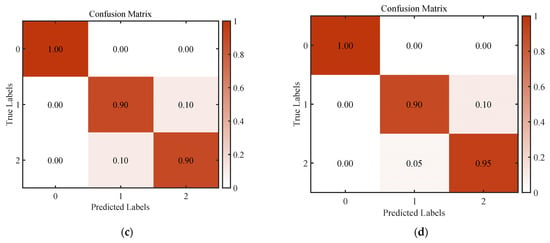

The confusion matrix, a tool commonly used to evaluate classification models, gives an intuitive view of model performance by comparing the model's predictions with the actual labels, showing correct and incorrect predictions for each category. Figure 10 shows the confusion matrices on the test set for each group once the 1D-CNN has converged (Figure 9).

Figure 10.

Confusion matrix of 1D-CNN performance on different group test sets. (a) Group 1. (b) Group 2. (c) Group 3. (d) Group 4.
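A minimal confusion-matrix sketch with rows as true classes and columns as predicted classes, the same layout as `sklearn.metrics.confusion_matrix`. The six predictions are hypothetical:

```python
import numpy as np


def confusion_matrix(y_true, y_pred, labels):
    """Count predictions: rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1
    return cm


# Hypothetical predictions for six test samples of Classes 1-3.
y_true = [1, 1, 2, 2, 3, 3]
y_pred = [1, 1, 2, 3, 3, 3]
cm = confusion_matrix(y_true, y_pred, labels=[1, 2, 3])
accuracy = np.trace(cm) / cm.sum()               # correct predictions / all predictions
recall_per_class = np.diag(cm) / cm.sum(axis=1)  # fraction of each true class recovered
```

Here one Class 2 (fault warning) sample is predicted as Class 3 (fault). The off-diagonal cell shows which states are confused, which a single accuracy figure (5/6) hides.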

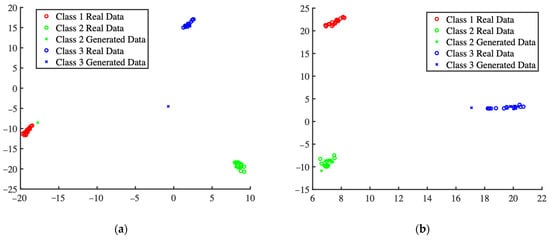

Figure 11 visualizes the Group 2 and Group 4 features output by the fully connected layer of the 1D-CNN after t-SNE dimensionality reduction. Figure 11a shows that the features of a few generated samples in Group 2 differ considerably from those of the actual data, whereas Figure 11b shows that the distribution of the generated data largely overlaps with that of the actual data. The features of the generated data are thus more similar to those of the actual data once the W-GAN has fully converged.

Figure 11.

Visualization of t-SNE dimensionality reduction of generated and real data. (a) Dimensionality reduction results for Group 2 data before network classification. (b) Dimensionality reduction results for Group 4 data before network classification.

To further validate the superiority of the proposed method, comparative experiments were also conducted. Group 1 in Table 5 represents the actual data without augmentation, and Group 4 represents the data obtained by augmenting Group 1 with the W-GAN. To highlight the effectiveness of the proposed method for small-sample fault diagnosis, Group 1 was fed into an SVM, a 1D-CNN, and a BPNN, and Group 4 was fed into a BPNN and a 1D-CNN for classification testing. Each experiment was repeated 10 times, and the average accuracies are shown in Table 7.

Table 7.

Results of comparative analysis of different methods.

The results in Table 7 show that with the same data augmentation, spectral data fed into the 1D-CNN are recognized more accurately than data fed into the BPNN, which reflects the CNN's stronger feature extraction capability. Moreover, both methods are more accurate with data augmentation than without it, which indicates that using the W-GAN for data augmentation is feasible. The augmentation effectively enriches the sample features, so that the 1D-CNN trained on augmented data achieves higher accuracy than the other methods.

5. Conclusions

This paper proposes an intelligent fault diagnosis scheme for hydroelectric units under imbalanced small-sample conditions. The effectiveness of the proposed scheme and its superiority over the compared fault diagnosis methods are verified with actual data. The main conclusions are as follows:

- The proposed W-GAN-based small-sample fault diagnosis method was applied to fault data from Unit 3 of a power station in China to augment the imbalanced small-sample data. The results show that the augmented samples have richer features, and fault diagnosis accuracy improved by 7% on average compared with diagnosis without augmentation;

- Combining the data generated by the W-GAN at different epochs with the actual data showed that the more training epochs the model had completed, the richer the sample features and the higher the fault identification accuracy of the 1D-CNN.

Author Contributions

Conceptualization, W.S.; Data curation, B.X.; Formal analysis, Z.X.; Methodology, W.S. and Z.X.; Software, W.S. and Y.Z.; Validation, W.S. and Y.W.; Visualization, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 51979204).

Data Availability Statement

The data used to support the findings of this study are not available because they involve the parameters and full characteristic data of the actual hydropower station.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- de Santis, R.B.; Costa, M.A. Extended isolation forests for fault detection in small hydroelectric plants. Sustainability 2020, 12, 6421.

- Yuan, Z.; Xiong, G.; Fu, X. Artificial Neural Network for Fault Diagnosis of Solar Photovoltaic Systems: A Survey. Energies 2022, 15, 8693.

- Chen, Q.; Han, Y.; Wu, J.; Gan, Y. Energy-Saving Task Scheduling Based on Hard Reliability Requirements: A Novel Approach with Low Energy Consumption and High Reliability. Sustainability 2022, 14, 6591.

- Tian, H.; Yang, L.; Ji, P. Intelligent Analysis of Vibration Faults in Hydroelectric Generating Units Based on Empirical Mode Decomposition. Processes 2023, 11, 2040.

- Dao, F.; Zeng, Y.; Zou, Y.; Li, X.; Qian, J. Acoustic vibration approach for detecting faults in hydroelectric units: A review. Energies 2021, 14, 7840.

- Liao, G.-P.; Gao, W.; Yang, G.-J.; Guo, M.-F. Hydroelectric generating unit fault diagnosis using 1-D convolutional neural network and gated recurrent unit in small hydro. IEEE Sens. J. 2019, 19, 9352–9363.

- Zhang, F.; Guo, J.; Yuan, F.; Shi, Y.; Li, Z. Research on Denoising Method for Hydroelectric Unit Vibration Signal Based on ICEEMDAN–PE–SVD. Sensors 2023, 23, 6368.

- Yao, Q.; Liu, Y. Vibration fault diagnosis of hydroelectric unit based on LS-SVM and information fusion technology. In Proceedings of the 2016 4th International Conference on Electrical & Electronics Engineering and Computer Science (ICEEECS 2016), Jinan, China, 15–16 October 2016; Atlantis Press: Amsterdam, The Netherlands, 2016; pp. 720–725.

- Attoui, I.; Boutasseta, N.; Fergani, N.; Oudjani, B.; Deliou, A. Vibration-based bearing fault diagnosis by an integrated DWT-FFT approach and an adaptive neuro-fuzzy inference system. In Proceedings of the 2015 3rd International Conference on Control, Engineering & Information Technology (CEIT), Tlemcen, Algeria, 25–27 May 2015; IEEE: Piscataway, NJ, USA, 2015; p. 16.

- Zhang, Q.; Deng, L. An intelligent fault diagnosis method of rolling bearings based on short-time Fourier transform and convolutional neural network. J. Fail. Anal. Prev. 2023, 23, 795–811.

- Kolar, D.; Lisjak, D.; Pająk, M.; Gudlin, M. Intelligent fault diagnosis of rotary machinery by convolutional neural network with automatic hyper-parameters tuning using Bayesian optimization. Sensors 2021, 21, 2411.

- Wang, Y.; Zou, Y.; Hu, W.; Chen, J.; Xiao, Z. Intelligent fault diagnosis of hydroelectric units based on radar maps and improved GoogleNet by depthwise separate convolution. Meas. Sci. Technol. 2023, 35, 025103.

- Yu, G.; You, Y.; Ma, B.; Han, Y. Intelligent Fault Diagnosis for Unknown Faults of Rotating Machinery based on the CNN and the DCGAN. In Proceedings of the 2023 IEEE 12th Data Driven Control and Learning Systems Conference (DDCLS), Xiangtan, China, 12–14 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 72–77.

- Gao, Y.; Chai, C.; Li, H.; Fu, W. A deep learning framework for intelligent fault diagnosis using AutoML-CNN and image-like data fusion. Machines 2023, 11, 932.

- Mushtaq, S.; Islam, M.M.; Sohaib, M. Deep learning aided data-driven fault diagnosis of rotatory machine: A comprehensive review. Energies 2021, 14, 5150.

- Zhang, X.; Wang, H.; Wu, B.; Zhou, Q.; Hu, Y. A novel data-driven method based on sample reliability assessment and improved CNN for machinery fault diagnosis with non-ideal data. J. Intell. Manuf. 2023, 34, 2449–2462.

- Zhang, X.; Wu, P.; He, J.; Lou, S.; Gao, J. A GAN based fault detection of wind turbines gearbox. In Proceedings of the 2020 7th International Conference on Information, Cybernetics, and Computational Social Systems (ICCSS), Guangzhou, China, 13–15 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 271–275.

- Yang, J.; Liu, J.; Xie, J.; Wang, C.; Ding, T. Conditional GAN and 2-D CNN for bearing fault diagnosis with small samples. IEEE Trans. Instrum. Meas. 2021, 70, 3525712.

- Chen, B. A Research on Fault Diagnosis of Wind Turbine CMS Based on Bayesian-GAN-LSTM Neural Network. J. Phys. Conf. Ser. 2022, 2417, 012031.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014.

- Barua, S.; Erfani, S.M.; Bailey, J. FCC-GAN: A fully connected and convolutional net architecture for GANs. arXiv 2019, arXiv:1905.02417.

- Eren, L.; Ince, T.; Kiranyaz, S. A generic intelligent bearing fault diagnosis system using compact adaptive 1D CNN classifier. J. Signal Process. Syst. 2019, 91, 179–189.

- Pandian, R.; Sabarivani, A.; Ramadevi, R.; Krishnamoorthy, N. Effect of data preprocessing in the detection of epilepsy using machine learning techniques. J. Sci. Ind. Res. 2022, 80, 1066–1077.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).