Abstract

Groundwater hydraulic head (H) measurements and point-estimates of hydraulic conductivity (K) both contain information about the K field. There is no simple, a priori estimate of the relative worth of H and K data. Thus, there is a gap in our conceptual understanding of the value of the K inversion procedure. Here, using synthetic calibration experiments, we quantified the worth of H and K data in terms of reducing calibrated K errors. We found that normalized K error could be approximated by a polynomial function with first-order terms of H and K data densities and , which have been normalized by the correlation lengths of the K field, and a mutually inhibitive interaction term. This equation can be applied to obtain a rough estimate of the uncertainty prior to the inversion for a case with a similar configuration. The formulation suggests that the inversion is valuable even without K data. The relative worths of H and K depend heavily on existing data densities and heterogeneity. K can be ten times more informative when it is sparse. Noise perturbation experiments show that we should incorporate noisy K data when K is sparse, but not a large amount of low-quality K estimates. Our conclusions establish a crude, baseline estimate of the value of calibration. A similar assessment method for information content can be employed for more complex problems.

1. Introduction

The hydraulic conductivity (K) is a porous media property that is of great importance to various applications including integrated hydrologic modeling [1,2], contaminant fate and transport predictions, evaluation of groundwater resources [3], and analytical modeling [4]. On the one hand, K can be inferred from pumping tests [5] or lithological estimates. Typically, K values are not independently distributed but auto-correlated in space [6]. Therefore, one K measurement provides some information not only about the site, but also about the adjacent region. On the other hand, K can also be estimated inversely by calibrating a suitable groundwater flow model to an observed hydraulic head (H) using a prescribed recharge. There are mature inversion packages such as model-independent parameter estimation and uncertainty analysis (PEST) [7], which adjust K values, so that simulated H is close to observed data. Often, they make use of available (but sparse) known K data points and geostatistical models to constrain (or, in machine learning terminology, regularize) the inversion process. Therefore, both H and scattered K data help reduce the uncertainty, i.e., they both carry information content about the K field.

Despite a significant body of literature on the information content (or data worth) of K measurements, which we will summarize below, a conceptual knowledge gap exists regarding the relative value of H data and the K inversion process. Recently, integrated hydrologic modeling studies, e.g., [8,9,10,11], have relied on large-scale estimates of hydraulic conductivity, e.g., [12], for parameterizing K, but these estimates may not offer adequate resolution and accuracy for local groundwater modeling purposes. Integrated modeling studies often skip the step of K inversion. A part of the reason may be that, prior to conducting the calibration, there is no method to estimate how much uncertainty can be reduced by this process. On the other hand, it is common to find studies where the calibrated K fields are presented without associated uncertainty estimates. Given the amount of available data, how much uncertainty in the K field can be reduced by calibrating K to H? What is the value of adding point-scale K measurements, which are inherently noisy, for improving the accuracy of calibration? What is the value of securing more K or H measurements? A-priori answers to these questions may help gauge the expected return of the calibration effort prior to carrying out the work.

Sifting through literature, we found that there are no simple answers to these inquiries. Previous studies on optimal experimental designs have provided valuable contributions to understanding the data worth of K measurements [13,14,15]. Their objectives were mostly to design the optimal way of obtaining new K data points for different purposes. For instance, James et al. [13] used a Bayesian data-worth framework to determine the location of data points that maximize their value in reducing the total remediation cost, where the pollutant plume was uncertain with regard to both location and extent. Neuman et al. [15] extended data-worth analysis by using the Bayesian model averaging approach to maximize the cost-benefit of K data points. Freeze et al. [16] also optimized the locations of measurements to minimize uncertainties with aquitard continuity and K distribution, while considering minimized economic regret. Tucciarelli et al. [17] employed a chance-constrained stochastic technique to find the best number and locations of additional measurements, which could result in a minimum total cost of data acquisition and groundwater reclamation. One similar chance-constrained model was coupled to an integer-programing sampling network design model in Wagner [14] in order to optimize pumping and sampling strategies. The worth of data can also be evaluated via sequential data assimilation methods such as ensemble Kalman filtering [18,19].

Despite the large body of literature, to the best of our knowledge, no work compared the value of H data in the calibration of K. In other words, the information content of H data was not given sufficient attention. However, the comparison between H and K values can be both challenging and nuanced due to the many strategies that were utilized to optimize spatial locations of data points, each with different objectives, constraints, and results. Therefore, we turn to a more modest question, “With a fixed, regularly spaced configuration of data locations, what is the value of H and K data, respectively?”

Centered around the abovementioned main question, this paper is organized to answer the following three sub-questions: (1) In a uniformly distributed data setting, can we describe calibrated K and H errors as functions of (preferably dimensionless) data densities, and are errors functions of recharge and boundary conditions? (2) How do the worths of K and H compare under different scenarios of H and K densities? (3) How do uncertainties with known K values impact our analyses? In the interest of reducing the dimensionality of the problem, we seek to non-dimensionalize data densities and errors with respect to the spatial heterogeneity of K. We will verify that the resulting numbers are truly non-dimensional characterizations of system features. Dimensionless numbers have been used in hydrological analysis with success. For example, see Haitjema and Mitchell-Bruker [20] for the water table ratio and Li et al. [21] for the six dimensionless numbers characterizing watershed hydrologic response types. These dimensional numbers, when verified, reveal underlying hydrologic dynamics and allow the conclusion to be migrated to different scales.

The advantage of the simple configuration is that the cause (data density and other factors) and effects (calibrated K error) can be clearly ascertained. While we acknowledge that such a configuration is rare, and the real-world is much more complex, our effort at least provides a baseline scenario that sheds insights and allows comparison with other strategies. As a result, it is a step forward in improving our understanding. Although they play important roles in groundwater modeling, the storage coefficient and transient modeling are outside of the scope of the present study. Furthermore, even though groundwater can be studied using Richards equation [22,23,24,25,26] as an integrated component of the hydrologic system [27], or the Boussinesq approximation [28], we focus on the saturated groundwater flow.

2. Methodology

2.1. Experimental Design

As discussed above, we used uniformly distributed H observations to make the study tractable. A prescribed fraction of the H data points were randomly sampled and assumed to have K estimates. Several realizations of such selections were prepared and tested in the calibrations. The collocation of H and K data points occurs because when a fine-scale K estimate is recorded, e.g., from pumping test, a water level reading can normally be obtained. We assumed a steady-state, 2D unconfined aquifer with uniform recharge.

The worth of data, in the context of this paper, is defined as the reduction of calibrated H and K error due to the inclusion of more data. As inverse modeling error is relative to the range of K variability, its absolute value has limited meaning, and is also not transferrable. Hence, we defined a dimensionless calibrated K error, , as the normalized root-mean-squared logarithmic error (RMSLE) between log-transformed calibrated and synthetic K:

where and are the synthetic and calibrated hydraulic conductivity values at the i-th location in the domain, respectively. N is the total number of cells in the domain, and and are the 90th and 10th percentiles of synthetic conductivity values, with their differences characterizing the variability. Using these values instead of maximum and minimum values avoids skewing the error estimate by extreme values in the stochastically generated K fields. Other percentiles may also be considered. We will show that the normalization removes the dependence of error on the variability of the K field.

Similarly, the normalized error of H, , was calculated for the collection of synthetic wells, as a normalized root mean squared error (RMSE).

where and are the “observed” and simulated groundwater head at the i-th synthetic H observation locations, respectively, and and are the maximum and minimum groundwater head across the domain corresponding to the synthetic K field.

2.1.1. Synthetic Domain

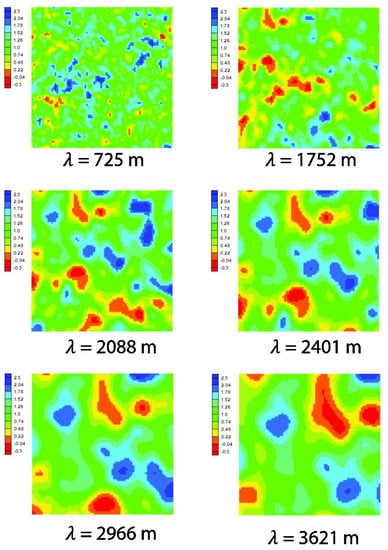

Our computational domain is a rectangular unconfined aquifer. Six random K fields (Figure 1) distinguished by different spatial correlation lengths, , were generated with FIELDGEN, a supplemental utility for the model water flow and balance simulation model (WaSiM) [29]. We manually varied the mean, standard deviation, and correlation length when running FIELDGEN to generate the fields with different . The number of fields was chosen as a balance of the total work load and the representation of heterogeneity. The range of that we tested ensured that, at the largest , there are at least several clusters of high and low K values. Further increasing the range of will make it too labor intensive and computationally expensive to complete our numerical experiments. The K fields are all of log-normal distribution, with K values between 0.5 m day−1 to 200 m day−1. It needs to be noted that the absolute value of this range is irrelevant if non-dimensionalization of the system is valid, which means the conclusions are only dependent on the dimensionless numbers. Then, we fitted a spherical model to the extracted empirical semi-variogram. The formula for the spherical model is:

where is the empirical semi-variogram calculated from the generated field, h is the lag distance, c is the sill, and is the correlation length. We used a bound-constrained version of the “fminsearch” command from Matlab® [30] to find the c and that minimized the sum of squared differences between the theoretical model in Equation (3) and the empirical variogram. We cut off the empirical semi-variogram at 8000 m before fitting the variogram model.

Figure 1.

Random log(K) fields generated for our experiments. λ is the correlation length of the log(K) field. Smaller λ produces more heterogeneous fields and larger distances between data points.

The model domain spans 8000 m in both horizontal coordinate directions. The top and bottom elevations were 150 m and −100 m, respectively. Except for the ones that test the effects of recharge (Section 2.4), all experiments employed a uniform recharge of 500 mm/year. Again, if the dimensionless analysis is found to be valid, what is important for the groundwater flow system is the ratio of recharge to conductivity. By default, the eastern side was assigned a specified head (Dirichlet) boundary condition, with a water head of 130 m, while the three other sides were set as no-flow boundaries. However, the eastern and western boundary conditions were varied to test the effects of different recharge and boundary conditions.

2.1.2. Synthetic Observations of H and K

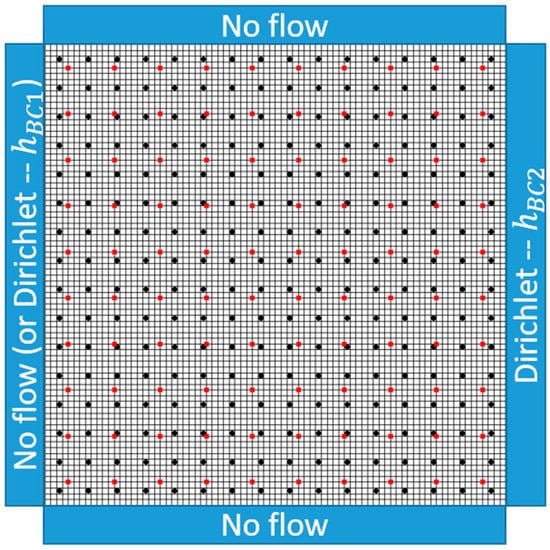

The calibration of a K field requires observed H values and optionally known K values [31,32]. The locations of synthetic H observations were evenly distributed throughout the domain, with an interval of 500 m leading to 256 virtual wells (Figure 2). While the data may look dense, if the correlation length is small, the data is relatively sparse. In terms of calibrated K error, as we will show later, the problem is dimensionless, in the sense that only the ratio between data density and correlation length matters. For a field with a small as in Figure 1a, the data is not dense after all. The water head at each observation well was extracted from a forward simulation with the aforementioned synthetic K fields, recharge, and boundary conditions. We randomly assigned known K values to x% of the observation wells.

Figure 2.

Locations of observation points (black) and pilot points (red). Boundaries of cells constituting the domain grid are shown with black grid lines. The elevation of the ground surface is uniformly set at 150 m. North and south sides of the domain are no-flow boundary conditions while the eastern side is Dirichlet with a value of hBC2; hBC2 is 130 m by default but varied during the tests for the effects of recharge and boundary conditions (Section 2.4). The Western side of the domain is no-flow by default but Dirichlet was also tested during in the experiments about boundary conditions.

2.2. Inverse Modeling

We used MODFLOW-2000 [33] as the groundwater flow model, and the model-independent parameter estimation and uncertainty analysis (PEST) [34] as the inverse modeling tool. PEST estimates K with the assistance of pilot points, each of which carries an initial K value that is used in calibrations and interpolated to all the grid cells in the domain. We set pilot points at the centroid of each 600 × 600 square box of the domain (see Figure 2). The inversion procedure in PEST adjusts K with an iterative approach to minimize the following objective function:

where is the unregularized objective function, is a regularization term with as a parameter, is the global objective function, vector contains the conductivity values of the field, is the observation, and Q is a weight matrix used to define greater contributions of certain pairs of observations. In the present simulation, a uniform weight is assigned to all K observations. is the model that predicts the system responses, given the parameter set , and is the calibrated K field that minimizes .

Without , the inversion process can be non-unique, which means that different sets of parameter values may produce similar outcomes. K could be overfitted, which may lead to large errors when used in the predictive mode [31,34]. To reduce overfitting, implements a penalization procedure called Tikhonov Regularization [35], which introduces geospatial structure as a constraint in the calibration. ‘Regularization’ here is synonymous to ‘penalization.’ The regularization term is:

where Qr is a diagonal matrix consisting of squared weights assigned to each observation, R is a regularization operator that expresses a certain geostatistical constraint, e.g., the difference between a trial parameter value and the parameter value at a site, given neighbors’ values (and a variogram model), and is a ‘system-preferred’ state, which is 0 in this case.

In PEST, is estimated together with calibration. With the help of a definition for an ‘acceptably good’ value, , PEST found the that minimizes while satisfying that is no greater than . This regularization penalizes parameter values that are far from the value expected of geostatistical models (built from data). The system can estimate more pilot points than there are observations, because each new pilot points is automatically accompanied by a spatially interpolated value. The algorithm attempts to ensure that the extra degrees of freedom, which carry little information, are discarded [36]. The interpolation method we selected was Kriging with a spherical model. The number of pilot points was set to 40% of H data points across all experiments. Considering the objective of the study, we did not test the fraction of pilot points as a control variable.

2.3. Non-Dimensionalization of Data Density and Errors

If we draw our conclusions as a function of dimensionless numbers, they are more broadly applicable than dimensional ones. The density of observation data needs to be examined relative to the spatial heterogeneity of the field, which is characterized by . Smaller indicates a more rapid varying K in space, which requires more observational data to constrain. To reduce the degrees of freedom, we propose a dimensionless number, the effective data density, , which quantifies the ratio between the correlation length and square root of data density:

where A is the domain area, n is the number of wells where H is measured, and measures the average distance between data points. can be interpreted as “the square root of the number of H data points in a square box with an area of ”. A greater indicates a slower variation of K relative to the distance between measurement points, and thus, more information about the H field.

Similarly, we can quantify the relative density of wells with known conductivity values, which are implemented by randomly assigning synthetic K values to x% of observation wells. It gives rise to the average distance between known K values, :

where x is the fraction of H observation wells with known K values. Then, similar to Equation (6), the dimensionless factor to quantify conductivity data acquisition is derived as:

The use of and allows us to greatly reduce the number of experiments and simplify the experimental design. If they can effectively and adequately characterize the system, we can avoid simultaneously varying the number of data points and spatial heterogeneity. Instead, we can adjust only the latter. To verify the validity of the dimensionless numbers, we compared the errors of calibrated K from a series of experiments, which contained different combinations of , , and and produced similar dimensionless numbers. Five different values and five different were tested while keeping other variables constant. For each x% value other than zero, three sets of random locations were chosen for known K, leading to three separate calibrations. The results were then averaged to obtain the final calibration errors.

2.4. Recharge and Boundary Conditions

We examined the impacts of recharge and boundary conditions (BC) on calibration errors. Six recharge levels ranging between 100 mm/year and 1000 mm/year and two values of were tested. To prevent the recharge from raising the water table above the ground surface, the Dirichlet boundary was set to 115 m. Other model settings were identical to the default.

We also tested the impacts of the Dirichlet boundary conditions. For this test and this test only, we applied another Dirichlet boundary to the western domain boundary (Figure 2 right panel). We ran model calibrations with six values, while fixing at zero. We also ran five values while keeping at 4.18. We compared calibrated H and K errors from these different recharges and BCs.

2.5. Experimental Design and Multivariate Polynomial Curve Fitting

We tested a total of 31 pairs of (). For each pair, where is non-zero, we ran three random realizations of K fields. This experimental design resulted in a total of 79 calibration experiments. All experiments had the same domain geometries and locations of water head observations. Different was achieved by employing K fields with different (Figure 1), while keeping n constant.

We fitted error as a polynomial function (maximum second order) of and values:

where P is a vector of coefficients in polynomial fitting, is the vector of predictors, and is the calibration error. Our experiments were constrained within the range of values. P was fitted using Matlab® curvefitting Toolbox. The term in Equation (9) represents an interaction term. A probability value (p-value) was calculated for the null hypothesis that the coefficient is equation to zero based on t-tests for each of the curve fitting coefficients. Furthermore, the goodness of fit was evaluated using the coefficient of determination (R2) and the root mean of squared error between the calibrated K/H errors and the values predicted by the polynomial function.

2.6. The Influence of Measurement Noise

The known K values that are supplied to the inversion algorithm can be estimated from pumping tests, lithology, specific capacity, and drawdown data, or conductivity test of samples [5]. In practice, regardless of which method is used, there will be errors. To study the impacts of K measurement noise on the calibrated K, we conducted perturbation experiments, where we added a synthetic noise to the observations. Since the K field is assumed to be log-normally distributed, we perturbed K values as:

where is the true conductivity value, K is what is supplied to the calibration algorithm, and is a Gaussian noise with a standard deviation of . If is 0.2, it means 33% of the data points are perturbed to be either 50% larger or 37% smaller than . If , then 33% of the K data points are perturbed by more than an order of magnitude. The influence of the noise is quantified as an amplification factor:

where is the average calibrated K error of the calibrations with noise-free K data, as defined in Equation (1), and is the error for calibrations with added noise. The experiment was very labor-intensive, as we tested M realizations of the noise and random selections of K positions. We could not regularly sample the three-dimensional parameter space of () space as we did earlier. Instead, we explored a few lines in that space. In addition, since the readings of groundwater head (or depth to the water table) are generally more accurate compared to the K noise, we ignored H errors to reduce the dimensionality of the analysis. For comparing the results to the cases without any K data, we also defined :

is the ratio of errors between cases calibrated “without K data” and “with noise-free K data.” It can be compared with other s because they have common denominators.

2.7. Relative Data Worth

We can calculate the reduction of K error with respect to one measurement point: and . These values can be approximated numerically by calculating the increments in or (given by Equation (9)), as the number of data points increase. They can also be derived from the fitted polynomials in Equation (9). Then, we can examine two ratios that measure the relative worth of data:

is the relative data worth ratio with respect to a unit increase in or and is only a function of these two factors. Since is a nonlinear function of , far more data points are needed to increase a unit of when is high. This relationship can make difficult to interpret and use. indicates whether it is more beneficial to add an H or a K data point, which has a direct practical meaning. However, has three control variables: , and . We will show the influence of , under two different values.

We considered the following two scenarios when calculating : (A) A new K data point is always accompanied by a new H data point, which is relevant when we plan to install new wells. In this scenario, ; (B) we can add a K measurement without adding H data. This scenario is relevant when we can conduct a pumping test from an existing well, or extract K estimates from interpreting existing literature. Under this scenario, is 0. We denoted the data worth ratio calculated under scenario (B).

3. Results and Discussion

3.1. Verification of the Effectiveness of Non-Dimensionalization

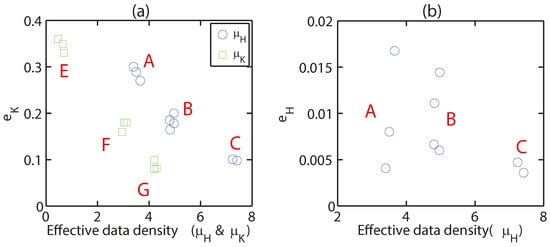

Given similar and values, the normalized conductivity error is tightly clustered (Figure 3), although , , and x% varied substantially (Table A1). This behavior verified that and are effective dimensionless numbers to characterize , which allowed us in only altering in later experiments. In addition, Figure 3 suggested can be described as a smooth function of and . In these preliminary experiments, we did not observe any non-monotonicity or fluctuations. Such smoothness and monotonicity serve as the basis of fitting a polynomial function to the relationships between normalized error and data densities.

Figure 3.

Verifications of normalized data densities as effective dimensionless numbers for (a) and (b). A–G annotate clusters. When we tested the effectiveness of (blue circles), was kept constant. Similarly, when we tested (green squares), was kept constant. In the three blue circle clusters, water head is the only synthetic observational data in the model. Thus, their are zero. From the clustering pattern in the left panel, it is clear that is similar for similar (, ) pairs, even though the correlation lengths and the number of data points are different. This figure suggests (, ) are effective dimensionless parameters controlling . However, it is not the case for . The experimental configurations and results for the clusters on these figures are provided in Table A1.

However, we did not obtain a clustering pattern for water head error. The values showed obvious scattering even with similar and . Therefore, it is meaningless to further test . In summary, and are effective dimensionless numbers to characterize the system for but not for . As a result, the conclusions to be drawn later for as a function of and were applicable to different , , n combinations, while those for were only valid for and values that we specified.

3.2. Impact of Recharge and Boundary Condition on Model Calibration Errors

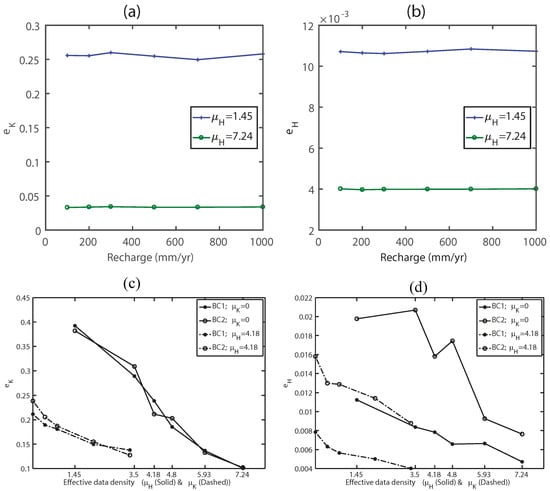

Normalized errors are independent of recharge (Figure 4a,b). Various recharge inputs lead to different water head ranges and, consequently, different absolute error values. However, after the error is normalized on the ranges of H and K, they become flat and non-responsive to recharge. In summary, these experiments show that the influence of recharge is linear, and can be removed by normalizing the error with respect to the range of values in the domain. Therefore, we no longer considered recharge in our factorial experiments.

Figure 4.

Rescaled water head error (a) and conductivity error (b) as functions of recharge under two different H data densities. The errors are obviously impacted by , but at each level, recharge does not influence or . This pattern allows us to remove recharge as a control variable from our experiments. (c,d) (c) and (d) as functions of normalized data densities. BC1 means domain with one Dirichlet boundary and BC2 stands for the domain with two Dirichlet boundaries. Since different boundary conditions generate the same curves, it indicates our analysis of can be valid for different boundary conditions.

Comparing and curves obtained with two different boundary conditions, we noted that the boundary condition had little impact and the two sets of curves almost overlapped (Figure 4c). The two boundary conditions tested were Dirichlet and Neumann, which approximate lakes, rivers (Dirichlet), impervious mountain blocks (Neumann), and so on. However, the same cannot be said about : There are gaps between Dirichilet and Neumann BCs and the gaps are not constant (Figure 4d). The addition of Dirichlet boundary increases the water head error and makes calibration results more stochastic. These patterns mean that the conclusions to be drawn later in this paper with regard to , but not , can be generalized for many different situations, with little impact from the environmental settings. As we will primarily focus on , we will remove BCs from further consideration.

3.3. Errors as a Function of Normalized Data Densities

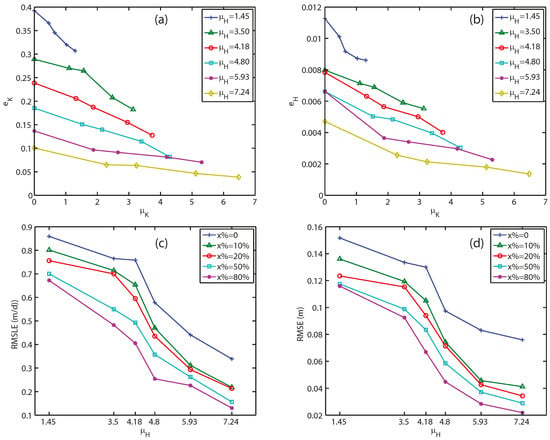

When we hold constant and increase , gradually decreases as one expects (Figure 5a). The decline in is almost linear. The slopes of the equi- lines decrease slightly for higher values, and the gaps between the lines become smaller at higher , indicating a moderate interaction between and . The curves become flatter when is higher, suggesting that when is higher, the marginal gain attained by the addition of decreases. shows a generally similar trend, but there is a more noticeable quadratic trend (Figure 5b).

Figure 5.

Normalized K error (a) and H error (b), and dimensionalized K error (c) and H error (d), as functions of normalized data densities. Each point on the plot represents the mean of three calibrations, except when . It is obvious that decreases as or increases and at each level is almost linear.

Toward higher , we should be able to build a more accurate variogram model for the interpolation procedure during calibration. However, at least in the tested range between and , such a benefit is hardly observable. At the same time, as is computed from comparing “observed” and calibrated K of the entire domain, the monotonously and smoothly varying suggests the regularization approach is effective in reducing overfitting errors.

Viewing the data in a different way, when we keep a constant x%, the error apparently decreases smoothly, as we increase the effective data densities (Figure 5c,d). In this Figure, as increases, also increases proportionally. When is increased from 0 m to 10%, the reduction of both RMSE and RMSLE are more significant than when it increased from 10% to 20%. As mentioned previously, this pattern is perhaps due to the moderate interaction between and .

3.4. Multivariate Polynomial Curve Fitting

Stepwise multiple regressions show that high-order terms (i.e., the second order) are statistically insignificant for so that the system is mostly linear in the range of tested and (Table 1). The small value of the coefficient for compared to the other terms confirms that the interaction between the two variables is mild. We created a 3D surface using three terms: , , and . Therefore, the final fitted equation can be written as:

where to are fitting parameters contained in P of Equation (9). Meanwhile, the quadratic term, , is statistically significant (p-value = 0.001) for . However, the R2 without the quadratic term is adequately high, and adding the term does not increase it notably. In the interest of parsimony, we chose not to include in the fitted formula for either. As there are no effective dimensionless numbers that characterize , we focused on .

Table 1.

Multivariate polynomial curve fitting for the following equation: . p-value is the probability of the null hypothesis that the corresponding coefficient is equal to 0 according to the t-statistic.

For the first-order terms, the difference between and is~15%. Therefore, in regions where the first-order terms dominate and and are similar, the data worth of new H and K data points are comparable. This finding suggests, at least across the range tested, groundwater head observations have great value in reducing uncertainties in the K field, and we do not necessarily require knowledge of K values to reduce . Also, since is normalized to the range of variability of K, we cannot transfer to dimensionalized uncertainty estimates without some knowledge about the K field. Meanwhile, since and are both negative and is positive, the interaction terms exert a mutually inhibitive effect: The existence of each type of data reduces the marginal benefit of the other type of data. For example, when H observations are dense, because , additional K observations do not help as much as when H data density is lower. This almost linear relationship with mutual inhibition is a novel finding. Although we might have an intuitive expectation of an inhibitive relationship, this study is the first to quantitatively determine its relative magnitude.

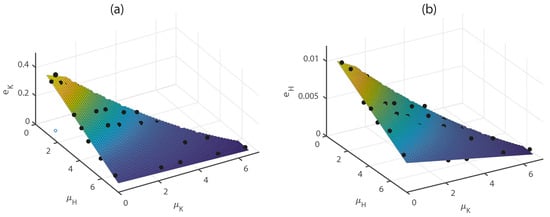

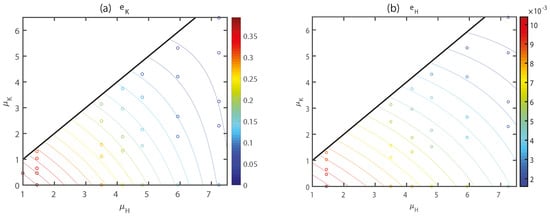

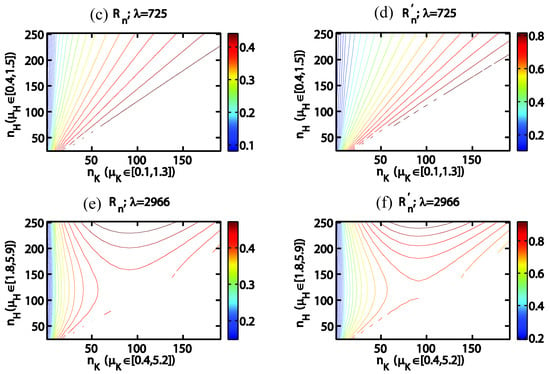

The fitted surface well describes the errors as a function of and (Figure 6), as most points are scattered closely to the surface. As we assumed cannot be greater than , the valid region is limited to the left lower triangle of the ~ plane. We also provided a contour plot for the surface for a more numerically accurate representation (Figure 7). The contour patterns between and are similar. The contours are denser near the lower left corner, because the gradients of error with respect to s are larger when is small as a result of smaller mutual inhibition effects.

Figure 6.

3D visualization of adjusted multiple polynomial curve fitting of (a) and (b) as a function of normalized data densities. The data fall close to the surface. Some data points fall below the surface and are not visible at the shown angles.

Figure 7.

Contour representation of the surface fitted to (a) and (b) as functions of data densities. Scattered points indicate the data points used to construct the contours.

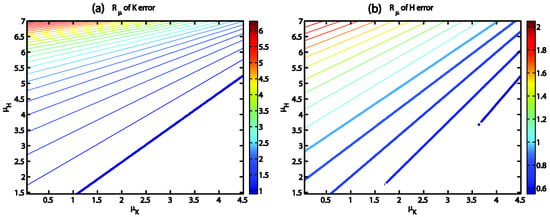

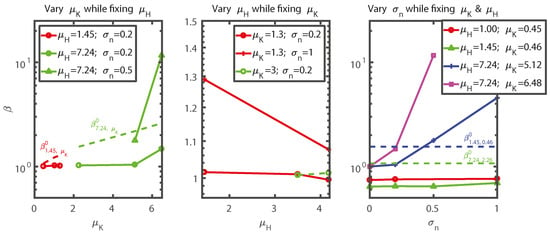

3.5. Relative Data Value

With either numerical approximation or analytical derivation, we can estimate the ratio between marginal error reduction rates to provide us some insights. For example, becomes , which is clearly a function of both and . The tested scatter mostly within the range between 0 to 4.5 (Figure 5). Given a unit increase in the effective data density, H data appears to be more effective in reducing (Figure 8). is >1 for the greater part of the plane, especially for K error. It rises quickly toward the high , low region near the upper left corner of the figure. This pattern results from the calculation of : is not a linear function of . To increase one unit in when is high, many data points are required.

Figure 8.

of K error (a) and H error (b) as functions of normalized data densities and . Bold lines highlight the less-than-one values among the contours. (c–f) and as functions of , , and λ. is calculated assuming each new K data point entails a new H data point. is calculated assuming new K and H data points are independent of each other.

is easier to interpret as it shows the ratio of information content brought in by the next data point of H vs. K, but to examine it, we must consider the nonlinear influence of . When is small (highly heterogeneous field, Figure 8c,d), ranges between 0.1 and 0.8, which means a K data point will always bring in more information content than an H data point. This difference may be counter-intuitive, considering the magnitude of coefficients (−0.053) is only slightly smaller than (−0.061). An important factor is that is always smaller than in our tested ranges, which is normally the case with available groundwater data. contours radiate out almost linearly in the shape of a fan and is dense near the left edge of the figure. The linear pattern of the contours in Figure 8a,b suggest is almost a function of x% for this high-heterogeneity case (). When , , meaning K measurement is sparse compared to H, there is 10 times more information value in new K data points than H. However, when is large (more homogeneous field), we are in a relatively data-rich environment, where the mutual inhibition effect becomes more important. becomes markedly larger and more nonlinear (Figure 8e,f). Toward the left-edge, contours are dense and still mostly vertical. In that region, mainly depends on the amount of K data, and H density has little impact. then increases toward the upper-right corner. If other conditions are equal, new K points bring in more relative value in the small- case than in the large- case. As the fraction of K data points increases, new H data points become increasingly useful relative to K.

3.6. The Influence of Noise with K Observations

Although we looked for predictive formulations to describe the relationship between and its three control variables (, , ), we have not been able to find a simple and reliable predictive formulation with . However, we can draw some inferences from our results.

- When is fixed, , in general, grows as a function of increasing (Figure 9), but when , the error amplification is almost close to 1 and is not very sensitive. The largest impact in this category is with the case (, , ). Recall in this case, 33% of the K data points have been perturbed more than an order of magnitude, but the impact on calibrated K nonetheless appears limited (). However, when is larger than 3, the errors grow significantly. At (, , ), (the upper-rightmost point in Figure 9), which means the inversion essentially failed. Another such case is (, , ) and (visible in Figure 9 blue line). At (, , ), even if the perturbation is moderate, it still causes a significant error amplification (, the rightmost circle on the lower solid green line).

Figure 9. Error amplification factor , the ratio of errors between “with noisy K data,” and “with noise-free data” as functions of , , and . As explained before, since this experiment is very expensive, we could only afford to explore a few lines. For comparison, dashed lines indicate .

Figure 9. Error amplification factor , the ratio of errors between “with noisy K data,” and “with noise-free data” as functions of , , and . As explained before, since this experiment is very expensive, we could only afford to explore a few lines. For comparison, dashed lines indicate . - Larger can help inhibit error amplification. When and are kept the same and is increased, always decreases. However, this effect is small when because the amplification is already small.

- Even though larger increases the error amplification from a noise-free baseline (Figure 9), incorporating K data points nonetheless reduces error compared to corresponding cases with (note in Figure 9), as long as is not too large. For example, under a sparse-data scenario ( or ), even if , which means significant noise, the error is still less than the case without incorporating K data.

- Under the combined conditions of high () (again, this means there are on average 3.52 = 12.25 conductivity data points in an area of ) and high noise (), the error amplification skyrockets and dominates over the information content of K. For example, (, , ), , and which is greater than the case with the same but without K data (). At and , even a of 0.5 is sufficient to bring the error amplification factor to 11.6. For these cases, the errors are too large, and the inversion failed.

Based on these observations, we conclude that, perhaps counter-intuitively, noise with K estimates are more malicious under high scenarios. When there are only a few K data points, we should incorporate them, even if we know they may have significant noise, provided that the log-standard deviation of that noise is not more than 1 order of magnitude. However, when there are a great number of low-quality K estimates, it is, in fact, better not to use them during the inversion and completely rely on H data. It is possible that the low-quality K has made it very difficult for the inversion process to infer a usable variogram.

4. Conclusions

The main contribution of the paper is to expose the functional form of inverse modeling error as dependent on data densities and the interaction between the density terms for the simplest case. The simple dependence of calibrated K error (but not H error) on the normalized densities have not been shown before. The conclusions of this study, i.e., Equation (14) with coefficients in Table 1, can be applied a priori to roughly estimate the value of groundwater model calibration. For consultants reading others’ works, which resulted from a calibration but without details concerning uncertainty, the formula here can be helpful. We found that the calibrated K error, , can be well described by the sum of a linear function of and , and a mild, mutually inhibitive interaction term, which indicates if H density is high, the marginal value of K is reduced and vice versa. This functional form fills a knowledge gap about the value of data and the inversion procedure itself. As the formula is derived in dimensionless forms, it can be applied to various scales and heterogeneity settings. However, the calibrated H error cannot be similarly described by dimensionless numbers. BCs and recharge are found to have little impact on normalized K error. The absolute value of the coefficients of the first-order terms are similar, but relative data worth ratio (H:K) for the next data point, , is strongly dependent on the existing data densities. If we assume that a new K observation must entail a new H observation, across most of the tested parameter range, a new H measurement has less than 40% of the data worth of a new K measurement (). When K is sparse, this ratio can be less than 10% (). In a domain with higher heterogeneity, is mostly determined by the fraction of wells with K measurements.

Considering the noise inherent with known K estimates, we should incorporate K data when there are relatively few K data points, or if the data quality is high. Especially, some knowledge about the range of K variability is required to convert the normalized error estimates to ones with units. However, if there are a great number of K data points with low quality, it is, in fact, better not to incorporate them because they make it difficult to estimate a valid variogram.

5. Limitations

The findings help to build a first-order, conceptual understanding, but we must realize real-world situations are far more complex. Certainly, the method employed in this work is simple and empirical. The geometric configuration of the domain and measurement points is simplistic. Therefore, it only represents a rough, a priori estimate, and it needs to be used with caution. More advanced methods should be used to determine the optimum location to place new data points. Finally, our experiments were carried out using the PEST algorithm in the environment of groundwater modeling system software, and the results are, thus, conditioned on some decisions of the program, e.g., the choice of the regularization parameter.

Author Contributions

Conceptualization, C.S.; methodology, K.F.; software, N.S.; validation, N.S. and C.S.; writing—original draft preparation, N.S.; writing—review and editing, C.S.; visualization, N.S.; supervision, C.S.; project administration, C.S.; funding acquisition, C.S.

Funding

This work was support by the U.S Bureau of Land Management under Interagency Agreement L11PG00354, as part of Work for Others funding from Lawrence Berkeley National Lab, provided by the U.S. Department of Energy, Office of Science, under Award Number DE-AC02-05CH11231.

Acknowledgments

Many thanks to the anonymous reviewers whose comments have helped to improve this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Experimental configurations and errors for the verifications of dimensionless numbers are shown in Figure 3.

Table A1.

Experimental configurations and errors for the verifications of dimensionless numbers are shown in Figure 3.

| Cluster | |||||||

|---|---|---|---|---|---|---|---|

| A | 3.4 | 0 | 725 | 1444 | 0 | 0.0041 | 0.3 |

| 3.5 | 0 | 1752 | 256 | 0 | 0.008 | 0.29 | |

| 3.66 | 0 | 2088 | 196 | 0 | 0.017 | 0.27 | |

| B | 4.8 | 0 | 2088 | 361 | 0 | 0.006 | 0.19 |

| 5 | 0 | 2401 | 256 | 0 | 0.007 | 0.2 | |

| 4.8 | 0 | 2966 | 169 | 0 | 0.011 | 0.17 | |

| 5 | 0 | 3621 | 121 | 0 | 0.014 | 0.08 | |

| C | 7.2 | 0 | 3621 | 256 | 0 | 0.101 | 0.005 |

| 7.4 | 0 | 2966 | 169 | 0 | 0.980 | 0.004 | |

| E | 1.5 | 0.45 | 725 | 256 | 25 | N/A | 0.36 |

| 1.4 | 0.64 | 725 | 256 | 49 | N/A | 0.35 | |

| 1.5 | 0.69 | 1751 | 49 | 16 | N/A | 0.33 | |

| F | 3.1 | 2.95 | 1751 | 256 | 196 | N/A | 0.18 |

| 3.4 | 3.13 | 2088 | 169 | 144 | N/A | 0.18 | |

| 4.3 | 3.04 | 2088 | 256 | 121 | N/A | 0.16 | |

| G | 4.8 | 4.3 | 2401 | 256 | 196 | N/A | 0.081 |

| 5.9 | 4.194 | 2966 | 256 | 121 | N/A | 0.08 | |

| 4.7 | 4.2 | 2088 | 324 | 256 | N/A | 0.1 |

References

- Maxwell, R.M.; Putti, M.; Meyerhoff, S.; Delfs, J.-O.; Ferguson, I.M.; Ivanov, V.; Kim, J.; Kolditz, O.; Kollet, S.J.; Kumar, M.; et al. Surface-subsurface model intercomparison: A first set of benchmark results to diagnose integrated hydrology and feedbacks. Water Resour. Res. 2014, 50, 1531–1549. [Google Scholar] [CrossRef]

- Shen, C.; Niu, J.; Phanikumar, M.S. Evaluating controls on coupled hydrologic and vegetation dynamics in a humid continental climate watershed using a subsurface-land surface processes model. Water Resour. Res. 2013, 49, 2552–2572. [Google Scholar] [CrossRef]

- Freeze, R.A.; Cherry, J.A. Groundwater; Prentice-Hall: Upper Saddle River, NJ, USA, 1979. [Google Scholar]

- Atangana, A. Analytical solutions for the recovery tests after constant-discharge tests in confined aquifers. Water 2014, 40, 595. [Google Scholar] [CrossRef]

- De Marsily, G. Quantitative Hydrogeology: Groundwater Hydrology for Engineers; Academic Press: Ann Arbor, MI, USA, 1986. [Google Scholar]

- Rehfeldt, K.R.; Boggs, J.M.; Gelhar, L.W. Field study of dispersion in a heterogeneous aquifer: 3. Geostatistical analysis of hydraulic conductivity. Water Resour. Res. 1992, 28, 3309–3324. [Google Scholar] [CrossRef]

- Doherty, J. PEST: Model Independent Parameter Estimation; Watermark Numerical Computing: Brisbane, Australia, 2010; Available online: http://www.pesthomepage.org/Downloads.php (accessed on 7 June 2019).

- Shen, C.; Riley, W.J.; Smithgall, K.R.; Melack, J.M.; Fang, K. The fan of influence of streams and channel feedbacks to simulated land surface water and carbon dynamics. Water Resour. Res. 2016, 52, 880–902. [Google Scholar] [CrossRef]

- Maxwell, R.M.; Condon, L.E.; Kollet, S.J.; Maher, K.; Haggerty, R.; Forrester, M.M.; Maher, K. The imprint of climate and geology on the residence times of groundwater. Geophys. Res. Lett. 2016, 43, 701–708. [Google Scholar] [CrossRef]

- Shen, C.; Chambers, J.Q.; Melack, J.M.; Niu, J.; Riley, W.J. Interannual Variation in Hydrologic Budgets in an Amazonian Watershed with a Coupled Subsurface–Land Surface Process Model. J. Hydrometeorol. 2017, 18, 2597–2617. [Google Scholar] [CrossRef]

- Ji, X.; Lesack, L.F.W.; Melack, J.M.; Wang, S.; Riley, W.J.; Shen, C. Seasonal and Interannual Patterns and Controls of Hydrological Fluxes in an Amazon Floodplain Lake With a Surface-Subsurface Process Model. Water Resour. Res. 2019, 55, 3056–3075. [Google Scholar] [CrossRef]

- Gleeson, T.; Moosdorf, N.; Hartmann, J.; Van Beek, L.P.H. A glimpse beneath earth’s surface: GLobal HYdrogeology MaPS (GLHYMPS) of permeability and porosity. Geophys. Res. Lett. 2014, 41, 3891–3898. [Google Scholar] [CrossRef]

- James, B.R.; Gorelick, S.M. When enough is enough: The worth of monitoring data in aquifer remediation design. Water Resour. Res. 1994, 30, 3499–3513. [Google Scholar] [CrossRef]

- Wagner, B.J. Evaluating Data Worth for Ground-Water Management under Uncertainty. J. Water Resour. Plan. Manag. 1999, 125, 281–288. [Google Scholar] [CrossRef]

- Neuman, S.P.; Xue, L.; Ye, M.; Lu, D. Bayesian analysis of data-worth considering model and parameter uncertainties. Adv. Water Resour. 2012, 36, 75–85. [Google Scholar] [CrossRef]

- Freeze, R.A.; James, B.; Massmann, J.; Sperling, T.; Smith, L. Hydrogeological Decision Analysis: 4. The Concept of Data Worth and Its Use in the Development of Site Investigation Strategies. Ground Water 1992, 30, 574–588. [Google Scholar] [CrossRef]

- Pinder, G.; Tucciarelli, T. Optimal data acquisition strategy for the development of a transport model for groundwater remediation. Water Resour. Res. 1991, 27, 577–588. [Google Scholar] [CrossRef]

- Zeng, L.; Chang, H.; Zhang, D. A Probabilistic Collocation-Based Kalman Filter for History Matching. SPE J. 2011, 16, 294–306. [Google Scholar] [CrossRef]

- Dai, C.; Xue, L.; Zhang, D.; Guadagnini, A. Data-worth analysis through probabilistic collocation-based Ensemble Kalman Filter. J. Hydrol. 2016, 540, 488–503. [Google Scholar] [CrossRef]

- Haitjema, H.M.; Mitchell-Bruker, S. Are Water Tables a Subdued Replica of the Topography? Ground Water 2005, 43, 781–786. [Google Scholar] [CrossRef]

- Li, H.-Y.; Sivapalan, M.; Tian, F.; Harman, C. Functional approach to exploring climatic and landscape controls of runoff generation: 1. Behavioral constraints on runoff volume. Water Resour. Res. 2014, 50, 9300–9322. [Google Scholar] [CrossRef]

- Van Dam, J.C.; Feddes, R.A. Numerical simulation of infiltration, evaporation and shallow groundwater levels with the Richards equation. J. Hydrol. 2000, 233, 72–85. [Google Scholar] [CrossRef]

- Fahs, M.; Younes, A.; Lehmann, F. An easy and efficient combination of the Mixed Finite Element Method and the Method of Lines for the resolution of Richards’ Equation. Environ. Model. Softw. 2009, 24, 1122–1126. [Google Scholar] [CrossRef]

- Farthing, M.W.; Ogden, F.L. Numerical Solution of Richards’ Equation: A Review of Advances and Challenges. Soil Sci. Soc. Am. J. 2017, 81, 1257. [Google Scholar] [CrossRef]

- Richards, L.A. CAPILLARY CONDUCTION OF LIQUIDS THROUGH POROUS MEDIUMS. Physics 1931, 1, 318. [Google Scholar] [CrossRef]

- Zhou, J.; Liu, F.; He, J.-H. On Richards’ equation for water transport in unsaturated soils and porous fabrics. Comput. Geotech. 2013, 54, 69–71. [Google Scholar] [CrossRef]

- Shen, C.; Phanikumar, M.S. A process-based, distributed hydrologic model based on a large-scale method for surface–subsurface coupling. Adv. Water Resour. 2010, 33, 1524–1541. [Google Scholar] [CrossRef]

- Su, N. The fractional Boussinesq equation of groundwater flow and its applications. J. Hydrol. 2017, 547, 403–412. [Google Scholar] [CrossRef]

- Schulla, J. Model Description (WaSiM); Institute for Atmospheric and Climate Science, Swiss Federal Institute of Technology: Zurich, Switzerland, 2015; Available online: http://www.wasim.ch/en/index.html (accessed on 7 June 2019).

- D’Errico, J. Bound Constrained Optimization Using Fminsearch. Matlab Central [Internet]. 2012. Available online: https://www.mathworks.com/matlabcentral/fileexchange/8277-fminsearchbnd-fminsearchcon (accessed on 7 June 2019).

- Tonkin, M.; Doherty, J. Calibration-constrained Monte Carlo analysis of highly parameterized models using subspace techniques. Water Resour. Res. 2009, 45, 1–17. [Google Scholar] [CrossRef]

- Tonkin, M.; Doherty, J.; Moore, C. Efficient nonlinear predictive error variance for highly parameterized models. Water Resour. Res. 2007, 43, 1–15. [Google Scholar] [CrossRef]

- Harbaugh, B.A.W.; Banta, E.R.; Hill, M.C.; Mcdonald, M.G. MODFLOW-2000, The US Geological Survey Modular Graound-Water Model—User Guide to Modularization Concepts and the Ground-Water Flow Process; U.S. Geological Survey: Reston, WV, USA, 2000. Available online: https://pubs.usgs.gov/of/2000/0092/report.pdf (accessed on 7 June 2019).

- Doherty, J. Ground Water Model Calibration Using Pilot Points and Regularization. Ground Water 2003, 41, 170–177. [Google Scholar] [CrossRef]

- Tikhonov, A.; Arsenin, V. Solutions of Ill-Posed Problems; V.H. Winston: Washington, DC, USA, 1977. [Google Scholar]

- Schölkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2002. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).