1. Introduction

Cloud particle data, predominantly collected by airborne instruments such as the two-dimensional stereo (2D-S) optical array probe, are crucial for understanding atmospheric processes [1,2,3]. These datasets provide insight into the size, shape, and distribution of cloud particles, which is essential for improving weather forecasting, refining climate models, and supporting weather modification practices [4,5,6,7,8,9,10,11]. Interpreting these data accurately primarily involves the detection and classification of cloud particles. Detection is the foundation of this process, because it directly affects the reliability of subsequent classification and the overall accuracy of cloud microphysical analysis. Current cloud particle detection algorithms, however, lack robustness in multi-scale recognition and tend to miss small particles. The consequences vary in severity across scenarios: for example, missed detection of small particles may lead to underestimation of convective potential and therefore delayed warnings of heavy precipitation, while misjudged sizes of large particles can reduce the efficiency of artificial precipitation enhancement operations. Robust detection methods are therefore vital to the quality and applicability of cloud particle data in atmospheric science. To this end, the core objective of this study is to propose a precise detection method tailored to the characteristics of cloud particles. By optimizing key steps of the detection process, the method aims to reduce missed detections of multi-scale particles and recognition errors for complex-shaped particles, improve the accuracy and stability of cloud particle detection, and provide reliable data for high-quality cloud microphysical analysis and related applications.

Target detection algorithms fall into two main categories: traditional methods and deep learning-based methods. Traditional methods, such as morphology-based techniques, edge detection, and Constant False Alarm Rate (CFAR) algorithms, rely on manually designed features [12,13,14,15,16,17,18]. They often struggle with fragmented particles, varying sizes, and overlapping structures, which makes them unsuitable for the complex morphologies of cloud particles.

Deep learning has revolutionized target detection by enabling models to automatically extract hierarchical features from complex data. Convolutional Neural Networks (CNNs) form the foundation of many advanced detection algorithms, and CNN-based detectors such as Faster R-CNN and YOLO have achieved notable advances in detection accuracy and efficiency [19,20,21,22]. Among these, the Single Shot MultiBox Detector (SSD) has gained prominence for generating multi-scale anchor boxes that accommodate objects of varying sizes [23].

Because its predefined multi-scale anchor boxes accommodate size variations in target objects, SSD has been widely adopted [24,25,26,27,28]. Several SSD variants have been enhanced, for example by using ResNet-50 as the backbone or by incorporating feature fusion modules, attention mechanisms, and focal loss, but these improvements mainly target accuracy in general object detection. The variants still rely on fixed grid-based anchor generation, which is problematic for cloud particle detection. The irregular shapes, overlapping structures, and fragmented appearance of cloud particles often cause poor alignment between fixed anchors and actual target regions. This mismatch can result in poor coverage of cloud particles, particularly split particles, whose fragments are detected as separate entities rather than as one object. These limitations reduce detection accuracy and complicate subsequent particle classification and analysis. We therefore propose an adaptive anchor SSD model that generates anchors adaptively to resolve the misalignment inherent in fixed grid-based detection. By combining multi-scale morphological transformations with adaptive anchor center generation, the method aligns anchor centers with the spatial distribution of cloud particles, enabling more accurate detection of fragmented, overlapping, and irregularly shaped particles.

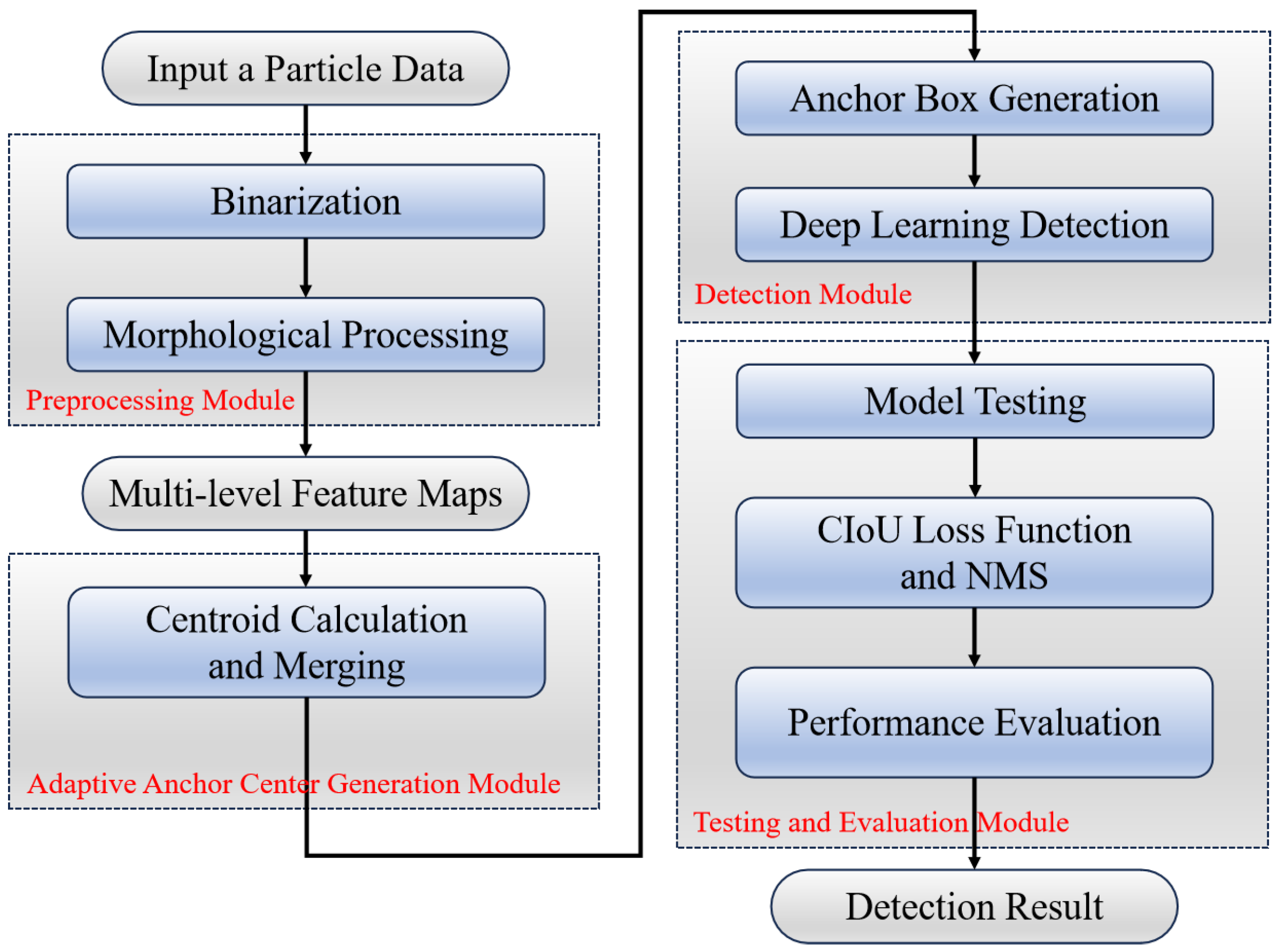

The proposed method consists of four main modules: a preprocessing module, an adaptive anchor center generation module, a detection module, and a testing and evaluation module. The preprocessing module generates multi-scale image information and uses multi-level morphological processing to filter out interference such as borders and small broken particles. The adaptive anchor center generation module derives geometric centers and mass centers from the processed images and merges them into adaptive anchor centers. The detection module uses a pre-trained, partially frozen ResNet-50 as the backbone for its deep residual feature extraction ability, together with an optimized CIoU-based loss function to refine the bounding boxes. Finally, the testing and evaluation module applies non-maximum suppression (NMS) to filter out overlapping anchor boxes and evaluates detection performance with mean average precision (mAP), recall, and average IoU.

In the experiments, we used 2D-S data collected by meteorological aircraft flying through real clouds. The results show that the proposed method performs well on all three metrics used in the testing and evaluation module; specifically, it achieves an increase of approximately 4% in detection accuracy over SSD. Furthermore, the method is particularly effective at treating split particles as whole entities, which is a critical challenge in cloud particle detection. These results highlight the robustness and versatility of our approach on real-world atmospheric data. The main contributions of this paper are summarized as follows:

- (1) An adaptive anchor SSD model is built for cloud particle detection. Using multi-scale morphological processing and hybrid center fusion, it generates adaptive anchor points aligned with cloud particle distributions, which addresses the limitation of fixed grid-based anchors in SSD and effectively handles split particles and morphological diversity.

- (2) An advanced morphological transformation method is proposed that eliminates imaging artifacts, enhances particle structural features, and reduces the interference from transparency and imaging noise that affects traditional methods.

- (3) A CIoU-optimized detection strategy is designed that combines a ResNet-50 backbone with the Complete Intersection over Union (CIoU) loss to improve localization accuracy, overcoming the insufficient robustness of the traditional SSD to scale variations.

The remainder of this paper is organized as follows. Section 2 introduces and summarizes related work. Section 3 presents the proposed cloud particle detection approach. Section 4 describes the experimental steps and results, comparing the proposed approach with traditional and deep learning-based approaches. Finally, Section 5 concludes the paper.

2. Related Work

The accuracy of cloud particle detection directly affects the ability to extract meaningful morphological information. With the development of deep learning, computer vision has recently been widely used to identify cloud particles. However, current research mainly performs detection and classification jointly, with particular emphasis on classification rather than on standalone detection. In [29], Rong et al. proposed a CNN-based method to detect and classify 2D-S cloud particles into eight types (spherical, irregular, aggregate, dendrite, graupel, plate, linear, and donut), achieving an mAP@0.5 of 96.9%. In [30], Sun et al. proposed a mixed particle detection framework that integrates interferometric particle imaging (IPI) with an enhanced YOLOv7-based model; their method achieved an mAP@0.5 of 96.9%. In [31], Wang et al. applied deep learning to the classification and processing of IPI data in icing wind tunnel experiments. By combining YOLO and the Segment Anything Model (SAM), they achieved automated detection, segmentation, and phase classification of mixed-phase particles into four categories (droplet, ice, noise, and particle), reducing the phase classification error rate by 10% compared with manual methods. In [32], Wu et al. proposed an optimized intelligent algorithm for classifying cloud particles recorded by a Cloud Particle Imager (CPI). By combining morphological processing, principal component analysis (PCA), and support vector machine (SVM) classifiers, their method classified cloud particles into nine shape types with an average accuracy of 95%.

These studies identified cloud particles using a fixed set of types, ranging from four to nine. There is currently no unified standard for cloud particle types, partly because different atmospheric environments may contain highly diverse particle types and partly because different tasks impose different requirements on the type scheme. In [33], Wendisch et al. investigated the impact of different ice crystal habits on thermal infrared radiative properties and divided cloud particles into seven types. In [34], Kikuchi et al. expanded the classification of cloud particles from 80 to 121 types. Among them, 28 types are snow crystal types newly identified in polar regions since 1986, while 6 types correspond to frozen cloud particles, drizzle droplets, and similar particles. In [35], Vazquez-Martin et al. further expanded the classification to 135 types by integrating global ice and snow crystal observations with Dual Ice Crystal Imager (D-ICI) measurements, focusing mainly on the physical processes involved in ice crystal formation, such as deposition, riming, aggregation, and collision.

Although deep learning-based algorithms for cloud particle identification have achieved considerable results, most existing methods are designed for a fixed set of predefined cloud particle types. Particles outside these types are typically labeled as “irregular,” which limits the applicability of such methods in diverse atmospheric environments or specific tasks. To address this limitation, this study separates detection from classification and focuses solely on the detection stage to improve the general applicability of the method. The classification types can then be adapted flexibly to specific application needs.

3. Methodology

In this study, we propose a comprehensive methodology for detecting cloud particles using an adaptive anchor Single Shot MultiBox Detector (SSD) [23] tailored to cloud particle imaging data. Our approach is divided into four main modules, each focused on a distinct phase of the detection process, as shown in the flowchart in Figure 1.

3.1. Preprocessing Module

First, the preprocessing module prepares the particle data through binarization and advanced morphological processing, generating multi-level feature maps that capture particle structures at different scales. Because 2D-S images are grayscale, binarization is used to separate the foreground particles, defined as all pixels with intensity greater than the Otsu threshold, from the background. This clarifies particle boundaries and reduces the computational cost of subsequent morphological processing.

The purpose of this module is to remove interference from the 2D-S data and to prepare the data for handling the wide range of cloud particle sizes. As shown in Figure 1, this module performs the initial cleaning of the data. The interference to be removed mainly includes timestamps, borders, and small broken particles, that is, cloud particles fragmented by the aircraft itself or by the airflow it generates during flight. The detailed steps of this module are described as follows.

The preprocessing workflow starts with binarization based on Otsu’s method [36], followed by a series of morphological operations. These steps are elaborated in the following subsections.

3.1.1. Binarization with Otsu’s Thresholding

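The binarization method is based on Otsu’s thresholding, which automatically calculates an optimal threshold $t^{*}$ that separates the foreground from the background based on the image’s intensity histogram. The threshold is determined by maximizing the between-class variance:

$$t^{*} = \arg\max_{t} \, \sigma_B^{2}(t),$$

where $\sigma_B^{2}(t)$ is the between-class variance, defined as

$$\sigma_B^{2}(t) = \omega_0(t)\,\omega_1(t)\,\bigl[\mu_0(t) - \mu_1(t)\bigr]^{2}, \qquad \omega_k(t) = \frac{N_k(t)}{N}, \quad k \in \{0, 1\}.$$

In this equation, $\omega_0(t)$ and $\omega_1(t)$ are the probabilities of the two classes separated by threshold $t$, $N_k(t)$ is the number of pixels in class $k$, $\mu_0(t)$ and $\mu_1(t)$ are the mean intensities of the foreground and background, respectively, and $N$ is the total number of pixels in the image.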
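The threshold search above can be sketched in NumPy as follows. This is a minimal illustration assuming 8-bit grayscale input; the function names `otsu_threshold` and `binarize` are hypothetical and not taken from the authors' pipeline. Class sizes are kept as integer pixel counts so that $N_0(t) + N_1(t) = N$ holds exactly, and foreground is taken as intensity above the threshold, as defined in Section 3.1.

```python
import numpy as np

def otsu_threshold(image: np.ndarray) -> int:
    """Return t* maximizing sigma_B^2(t) = w0*w1*(mu0 - mu1)**2 for a uint8 image."""
    hist = np.bincount(image.ravel(), minlength=256).astype(np.int64)
    levels = np.arange(256, dtype=np.int64)
    n_total = hist.sum()                  # N
    n0 = np.cumsum(hist)                  # N_0(t): pixels with intensity <= t
    n1 = n_total - n0                     # N_1(t): pixels with intensity > t
    s0 = np.cumsum(hist * levels)         # intensity sum of class 0
    s1 = s0[-1] - s0                      # intensity sum of class 1
    valid = (n0 > 0) & (n1 > 0)           # both classes must be non-empty
    sigma_b2 = np.zeros(256)
    w0, w1 = n0[valid] / n_total, n1[valid] / n_total
    mu0, mu1 = s0[valid] / n0[valid], s1[valid] / n1[valid]
    sigma_b2[valid] = w0 * w1 * (mu0 - mu1) ** 2
    return int(np.argmax(sigma_b2))       # first maximizer if several tie

def binarize(image: np.ndarray) -> np.ndarray:
    """Foreground mask: pixels with intensity greater than the Otsu threshold."""
    return image > otsu_threshold(image)
```

In practice, OpenCV's `cv2.threshold` with the `cv2.THRESH_OTSU` flag performs the same search; the explicit version is shown only to make the equation concrete.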

3.1.2. Multi-Level Morphological Processing

After binarization, the images are subjected to baseline processing, involving morphological operations such as erosion and dilation with a small structuring element. This step is designed to eliminate peripheral artifacts, such as borders and numeric annotations, commonly found in raw data. By removing these non-particle elements without compromising the visibility of the cloud particles, this method helps clean the image in preparation for further processing. Based on the characteristics of the cloud particles and the need to preserve their structure, we apply a rectangular structuring element of size pixels for erosion and dilation in this baseline processing step.
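The baseline step (erosion followed by dilation, i.e., a morphological opening) can be sketched with plain NumPy. The structuring-element size used by the authors is not given here, so the sketch assumes a square 3 × 3 element purely for illustration; the helper names are hypothetical. Erosion pads the image with foreground so the image edge itself does not erode particles, while thin structures such as 1-pixel border lines still vanish.

```python
import numpy as np

def _neighborhoods(mask: np.ndarray, k: int, pad_value: bool) -> np.ndarray:
    """All k x k neighborhoods (k odd) of a boolean mask, padded at the borders."""
    padded = np.pad(mask, k // 2, mode="constant", constant_values=pad_value)
    return np.lib.stride_tricks.sliding_window_view(padded, (k, k))

def erode(mask: np.ndarray, k: int = 3) -> np.ndarray:
    # A pixel survives only if its whole k x k neighborhood is foreground.
    return _neighborhoods(mask.astype(bool), k, True).all(axis=(-2, -1))

def dilate(mask: np.ndarray, k: int = 3) -> np.ndarray:
    # A pixel becomes foreground if any pixel in its neighborhood is foreground.
    return _neighborhoods(mask.astype(bool), k, False).any(axis=(-2, -1))

def baseline_clean(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Opening: removes structures thinner than k pixels (e.g., border lines)
    and largely restores the extent of particles that survive the erosion."""
    return dilate(erode(mask, k), k)
```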

Next, multi-level morphological transformations are applied to the baseline processing image to address the wide range of cloud particle sizes. The transformations comprise four levels of erosion and four levels of dilation, with each level tailored to a different target size. Specifically, the erosion and dilation operations use rectangular structuring elements of varying sizes (e.g., , , , and pixels) to capture different particle scales. For large cloud particles, four levels of erosion are used: the features of large particles that matter for detection are preserved, attention gradually shifts to large targets, and small particles are progressively eliminated. For small cloud particles, four levels of dilation are used instead. The four levels of morphological operations are defined by a list , where each level corresponds to a different number of iterations of the dilation and erosion operations. Dilation gradually strengthens small targets that would otherwise be overlooked, preserving their important features and enhancing their visibility to minimize the missed detection rate. Together, these transformations ensure that both small and large cloud particles are captured, preparing the data for the subsequent adaptive anchor center generation. In total, the multi-level morphological transformations yield nine morphological processing images (the baseline image plus four eroded and four dilated images) containing targets at different scales.
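A sketch of how the nine images could be produced is given below. The per-level iteration counts `(1, 2, 3, 4)` and the 3 × 3 element are hypothetical placeholders, since the actual values are not recoverable from this text; the function names are likewise illustrative.

```python
import numpy as np

def morph(mask: np.ndarray, erode: bool, iterations: int, k: int = 3) -> np.ndarray:
    """Repeated binary erosion (erode=True) or dilation (erode=False), k odd."""
    out = mask.astype(bool)
    for _ in range(iterations):
        # Erosion pads with foreground, dilation with background.
        padded = np.pad(out, k // 2, mode="constant", constant_values=erode)
        win = np.lib.stride_tricks.sliding_window_view(padded, (k, k))
        out = win.all(axis=(-2, -1)) if erode else win.any(axis=(-2, -1))
    return out

def multi_level_images(baseline: np.ndarray, levels=(1, 2, 3, 4), k: int = 3) -> list:
    """Baseline + four eroded + four dilated images = nine images."""
    images = [baseline.astype(bool)]
    images += [morph(baseline, True, n, k) for n in levels]   # keeps large particles
    images += [morph(baseline, False, n, k) for n in levels]  # strengthens small ones
    return images
```

With this ordering, `images[1:5]` progressively isolate large particles and `images[5:9]` progressively enlarge small ones, mirroring the second and third rows of Figure 4.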

3.2. Adaptive Anchor Center Generation Module

As depicted in Figure 1, the adaptive anchor center generation module is the core of the proposed method. Adaptive anchors are center points generated dynamically from the spatial distribution and morphological characteristics of cloud particles, enabling more precise anchor box placement during detection. The main goal of this module is to generate, for each cloud particle target in the image, a number of anchor center points whose count and characteristics depend on the size of the target; anchor boxes are later generated around these points. In the subsequent detection module, these anchor boxes define the possible locations, sizes, and aspect ratios of cloud particles, allowing detection to focus on specific regions. This module consists primarily of a two-stage fusion process, described in detail below.

In the first stage, geometric and mass centers are derived from all nine morphological processing images, namely the baseline processed image and the eight multi-level morphological images. Through this step, each cloud particle target in each image can produce more than one anchor center point. To avoid an excessive number of anchor center points, which would impose a heavy computational load on the subsequent anchor generation, anchor center points are merged when they are too close to each other, for example, within nine pixels. The distance between centers is measured as the Euclidean distance in image coordinates. After the first stage of fusion, each of the nine morphological processing images has its own set of anchor center points for its cloud particle targets.
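The first-stage center extraction and merging can be sketched as follows. Two interpretations here are assumptions rather than details confirmed by the text: the geometric center is taken as the midpoint of a particle's bounding box, and the mass center as the mean position of its foreground pixels (8-connected components). The greedy running-mean merge rule and all function names are likewise hypothetical; the paper only states that centers closer than a threshold (e.g., nine pixels) are merged.

```python
import numpy as np
from collections import deque

def connected_components(mask: np.ndarray) -> list:
    """8-connected foreground regions, each as an (n, 2) array of (row, col)."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask)
    h, w = mask.shape
    regions = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:                                  # breadth-first flood fill
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        regions.append(np.array(pixels))
    return regions

def particle_centers(mask: np.ndarray) -> list:
    centers = []
    for pix in connected_components(mask):
        rows, cols = pix[:, 0], pix[:, 1]
        # Geometric center (assumed): bounding-box midpoint.
        centers.append(((rows.min() + rows.max()) / 2, (cols.min() + cols.max()) / 2))
        # Mass center (assumed): mean position of the region's pixels.
        centers.append((rows.mean(), cols.mean()))
    return centers

def merge_centers(centers: list, radius: float) -> list:
    """Greedy merge: join the first cluster whose mean is within `radius` pixels."""
    clusters = []  # [sum_row, sum_col, count]
    for r, c in centers:
        for cl in clusters:
            if np.hypot(r - cl[0] / cl[2], c - cl[1] / cl[2]) <= radius:
                cl[0] += r; cl[1] += c; cl[2] += 1
                break
        else:
            clusters.append([r, c, 1])
    return [(cl[0] / cl[2], cl[1] / cl[2]) for cl in clusters]
```

Applied to each of the nine images, `merge_centers(particle_centers(img), radius=9.0)` gives that image's first-stage anchor centers under these assumptions.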

In the second stage, the anchor center points from all the morphological processing images are mapped back to the original image. In the original image, larger targets contain more anchor center points, because large targets are not eliminated in any of the nine images. At the same time, most small targets also have their own center points, because small targets are well preserved in the dilation results. To limit the computational load, a second fusion stage merges center points in the original image that are too close to each other (e.g., within five pixels). After the second stage of fusion, every cloud particle target, regardless of its size, has its own adaptive anchor centers.

This two-stage merging process is designed to ensure that both large and small cloud particles are captured. Generating anchor center points in all nine morphological processing images during the first stage reduces the chance of overlooking smaller cloud particles, while fusing the anchor center points under specific conditions across both stages minimizes redundant detections of larger particles. This reduces the number of centers assigned to a single large particle and thus the computational load in the detection module.

3.3. Detection Module

As shown in Figure 1, this module corresponds to the component labeled ‘Detection Module’ in the overall architecture. It contains two parts: generating anchor boxes of different sizes and aspect ratios around the adaptive anchor center points produced by the previous module, and building a deep learning model that predicts the object type in each anchor box and the offsets of the anchor boxes. This section describes the methods used and the structural components of the model, from adaptive anchor box preparation to the final predictions.

3.3.1. Adaptive Anchor Box Generation

In this module, adaptive anchor boxes are first generated around the adaptive anchor center points, and each center may have more than one anchor box. These boxes are created with predefined scales and aspect ratios to accommodate the wide range of cloud particle sizes observed in 2D-S imaging. Three aspect ratios, 1 (square), 0.5 (vertically elongated), and 1.8 (horizontally elongated), are combined with three scales: 0.03, 0.06, and 0.12. These parameters are designed to detect particles of varying scales and orientations.
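One way to turn these scales and aspect ratios into boxes is sketched below. It assumes the usual SSD convention, which the text does not state explicitly: scales are fractions of the image size, boxes use normalized `(cx, cy, w, h)` coordinates, and the aspect ratio is width/height, so `w = s * sqrt(a)` and `h = s / sqrt(a)` (a ratio of 0.5 is then taller than wide, matching "vertically elongated"). The names are illustrative.

```python
import math

SCALES = (0.03, 0.06, 0.12)        # assumed fractions of the image side
ASPECT_RATIOS = (1.0, 0.5, 1.8)    # width / height

def anchors_for_center(cx, cy, scales=SCALES, ratios=ASPECT_RATIOS):
    """Nine (cx, cy, w, h) boxes per center in normalized image coordinates."""
    boxes = []
    for s in scales:
        for a in ratios:
            # Area stays s**2 while the width/height ratio equals a.
            boxes.append((cx, cy, s * math.sqrt(a), s / math.sqrt(a)))
    return boxes
```

A pixel center `(row, col)` in a `W` × `H` image would be passed as `cx = col / W`, `cy = row / H` under this convention.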

Because cloud particle density and types vary within actual cloud formations, the number of cloud particle targets varies across 2D-S images. Different flight missions, cloud types, and altitudes introduce further disparities in the particle counts within the images. Given these factors, and because our deep learning detection system requires a consistent number of anchor boxes for effective training and inference, the number of adaptive anchor boxes must be standardized across images.

To account for these variations while maintaining consistency, we normalize the number of adaptive anchor boxes per image to a fixed value. This value is based on empirical data that reflects typical cloud particle counts in 2D-S images and is further adjusted according to specific observational factors such as cloud type, flight altitude, and geographical region. For the purposes of this study, we have standardized the anchor box count to 900.

If the number of anchor boxes generated from the existing adaptive anchor center points is lower than the preset number, which means there are too few adaptive anchor center points, virtual center points are added at the center of the original image to generate enough anchor boxes. Conversely, if the number of adaptive anchor center points exceeds the preset limit, anchor boxes are generated by priority: a default box (scale = 0.06, aspect ratio = 1:1) is first assigned to all centers, and diversified boxes (scales 0.03/0.12, aspect ratios 1:2/1.8:1) are then added to centers with high aspect-ratio variance to ensure morphological coverage. This process ensures that the selected anchors represent the typical characteristics of the cloud particle targets while maintaining robustness.
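The padding and prioritization rules can be sketched as below, under several assumptions not fixed by the text: the overflow branch is triggered when the full nine-box set would exceed the budget of 900; "aspect-ratio variance" is supplied as a precomputed per-center score (`ar_scores`, hypothetical); and the diversified boxes are all remaining scale/ratio combinations, added to the highest-scoring centers first until the budget is filled.

```python
import math

BUDGET = 900
SCALES = (0.03, 0.06, 0.12)
RATIOS = (1.0, 0.5, 1.8)   # width / height

def box(cx, cy, s, a):
    return (cx, cy, s * math.sqrt(a), s / math.sqrt(a))

def fixed_budget_anchors(centers, ar_scores, budget=BUDGET):
    """centers: normalized (cx, cy); ar_scores: hypothetical per-center scores."""
    full = [box(cx, cy, s, a) for cx, cy in centers for s in SCALES for a in RATIOS]
    if len(full) <= budget:
        # Too few anchors: add virtual centers at the image center (0.5, 0.5).
        while len(full) < budget:
            full.extend(box(0.5, 0.5, s, a) for s in SCALES for a in RATIOS)
        return full[:budget]
    # Too many anchors: one default box (scale 0.06, ratio 1) per center first ...
    anchors = [box(cx, cy, 0.06, 1.0) for cx, cy in centers][:budget]
    # ... then diversified boxes for centers with the highest scores.
    order = sorted(range(len(centers)), key=lambda i: ar_scores[i], reverse=True)
    for i in order:
        cx, cy = centers[i]
        for s in SCALES:
            for a in RATIOS:
                if (s, a) == (0.06, 1.0):
                    continue          # default box already assigned
                if len(anchors) == budget:
                    return anchors
                anchors.append(box(cx, cy, s, a))
    return anchors
```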

3.3.2. Model Architecture and Detection Process

This subsection elaborates on the model architecture and detection process of the “Deep Learning Detection” component in Figure 1. The detection model integrates a pre-trained backbone, specialized transition layers, an upsampling mechanism, and localization and classification layers. Figure 2 details the structure of this detection model, and the functions of its components are described as follows.

Firstly, the pre-trained backbone of the detection module is a partially frozen ResNet-50 network [37]. We chose this network for its deep residual learning and strong feature extraction ability, which are essential for capturing detailed, hierarchical features from cloud particle images. In the partially frozen ResNet-50, the early layers are frozen to retain general feature extraction knowledge, while the deeper layers are unfrozen and fine-tuned to adapt the model to cloud particle detection.

Secondly, the transition layers consist of two 1 × 1 convolutions, each followed by a ReLU activation. These layers reduce the number of feature map channels from 2048 to 512 and introduce non-linearity, which improves the model’s computational efficiency and prepares the feature maps for localization and classification.
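A 1 × 1 convolution applies the same linear map to the channel vector at every spatial position, which is why it can shrink 2048 channels to 512 without changing the spatial size. The NumPy sketch below illustrates this; the intermediate width of 1024 channels is a hypothetical choice (the text gives only the input and output widths), and the random weights and 8 × 8 input merely stand in for trained parameters and a real ResNet-50 feature map.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution: x (C_in, H, W), weight (C_out, C_in), bias (C_out,)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def transition(x, params):
    for weight, bias in params:
        x = np.maximum(conv1x1(x, weight, bias), 0.0)   # ReLU after each 1x1 conv
    return x

rng = np.random.default_rng(0)
channels = [2048, 1024, 512]          # 1024 is a hypothetical intermediate width
params = [(rng.normal(0.0, 0.01, (c_out, c_in)), np.zeros(c_out))
          for c_in, c_out in zip(channels[:-1], channels[1:])]
features = rng.normal(size=(2048, 8, 8))   # stand-in for the backbone output
out = transition(features, params)
print(out.shape)  # (512, 8, 8)
```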

Thirdly, an upsampling mechanism adjusts the spatial dimensions of the feature maps so that they align with the number of adaptive anchor boxes. Because the number of cloud particle targets varies from image to image while the feature maps output by the detection model usually have fixed dimensions, it is difficult to match the feature maps directly to the number of targets in the image. We therefore reshape the feature maps to match the number of anchor boxes.

Finally, the outputs of the detection module are produced by the localization and classification layers. The localization layer adjusts the offsets and sizes of the adaptive anchor boxes to improve their alignment with actual cloud particle targets. The classification layer predicts the probability that each anchor box contains a target rather than background.
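The text does not specify how the feature maps are reshaped to the anchor count, so the following is only a plausible sketch: the flattened spatial positions are resampled by nearest-neighbour indexing to one feature vector per anchor, after which per-anchor linear heads output four box offsets and two class probabilities (background vs. particle). Function names, the resampling rule, and the linear heads are all hypothetical.

```python
import numpy as np

def resample_to_anchors(fmap, num_anchors):
    """(C, H, W) feature map -> (num_anchors, C), nearest-neighbour along positions."""
    c, h, w = fmap.shape
    flat = fmap.reshape(c, h * w).T                           # one row per position
    idx = (np.arange(num_anchors) * (h * w)) // num_anchors   # in [0, H*W)
    return flat[idx]

def heads(per_anchor, w_loc, w_cls):
    offsets = per_anchor @ w_loc                    # (A, 4): (dx, dy, dw, dh)
    logits = per_anchor @ w_cls                     # (A, 2): background, particle
    logits = logits - logits.max(axis=1, keepdims=True)   # stable softmax
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return offsets, probs

rng = np.random.default_rng(1)
per_anchor = resample_to_anchors(rng.normal(size=(512, 8, 8)), 900)
offsets, probs = heads(per_anchor, rng.normal(size=(512, 4)), rng.normal(size=(512, 2)))
```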

Overall, the proposed model structure is designed to accommodate the characteristics of adaptive anchor center points and cloud particle data. Figure 2 illustrates the architecture and workflow of this detection model.

3.4. Testing and Evaluation Module

As shown in Figure 1, this module is represented by the fourth box. It describes how the detection model is tested and evaluated, covering three aspects: the loss function used during training, the NMS technique used to filter out overlapping anchor boxes, and the three performance metrics used to evaluate the effectiveness of the detection model.

3.4.1. CIoU Loss Function

In a deep learning-based detection model, choosing an appropriate loss function is important for handling the diverse characteristics of cloud particle data. The CIoU loss function [38] jointly considers the overlap, center distance, and aspect-ratio consistency between the predicted and ground truth boxes, which helps align the dynamically generated anchor boxes with the ground truth.

The traditional IoU loss only measures the overlap between the predicted box and the ground truth box. In contrast, the CIoU loss also penalizes the distance between the two box centers, normalized by the diagonal of the smallest enclosing box that covers both boxes, and adds a term that evaluates the consistency of their aspect ratios. These additions are particularly useful for cloud particle detection, because the wide range of target sizes often produces bounding boxes that overlap poorly or have different aspect ratios. CIoU therefore not only improves the overlap measurement but also reduces the distance between disjoint boxes, for which the IoU term alone gives no gradient, and adjusts their aspect ratios. This is especially beneficial for small or broken particles, where the CIoU loss helps refine anchor boxes that initially did not cover the target well.

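The CIoU loss is given by

$$\mathcal{L}_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^{2}(\mathbf{b}, \mathbf{b}^{gt})}{c^{2}} + \alpha v,$$

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}, \qquad \alpha = \frac{v}{(1 - \mathrm{IoU}) + v},$$

where IoU is the intersection over union of the predicted and ground truth boxes, $\rho(\mathbf{b}, \mathbf{b}^{gt})$ is the Euclidean distance between their centers, $c$ is the diagonal length of the smallest enclosing box, $v$ measures aspect-ratio consistency, and $\alpha$ is a trade-off parameter that depends on IoU and weights the aspect-ratio term. The predicted and ground-truth boxes are denoted $\mathbf{b} = (x, y, w, h)$ and $\mathbf{b}^{gt} = (x^{gt}, y^{gt}, w^{gt}, h^{gt})$, where $(x, y)$ is the box center and $w$, $h$ are its width and height.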
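The formula can be checked numerically with a direct, framework-free transcription for a single box pair. This is an illustrative sketch (`ciou_loss` and `corners` are hypothetical names); training code would use a batched, differentiable version, for example in PyTorch. The small `eps` only guards against division by zero.

```python
import math

def corners(b):
    cx, cy, w, h = b
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2

def ciou_loss(pred, gt, eps=1e-9):
    """L = 1 - IoU + rho^2 / c^2 + alpha * v for boxes given as (cx, cy, w, h)."""
    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    inter = (max(0.0, min(px2, gx2) - max(px1, gx1))
             * max(0.0, min(py2, gy2) - max(py1, gy1)))
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / (union + eps)
    # Squared center distance and squared diagonal of the smallest enclosing box.
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2
    # Aspect-ratio consistency term and its IoU-dependent weight.
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / (c2 + eps) + alpha * v
```

For two disjoint 2 × 2 boxes whose centers are 10 units apart horizontally, IoU is 0 and the distance term still contributes $100/148$, which is the gradient signal that plain IoU loss lacks.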

In general, the CIoU loss function effectively improves the model’s ability to correct anchor box positions in the cloud particle detection task. It reduces the gap between the predicted boxes and the ground truth boxes while better aligning the shapes of the two boxes.

3.4.2. Performance Evaluation

In the performance evaluation, the main concern is whether all cloud particle targets in the image that were not filtered out by the preprocessing module are detected without omission. It is then crucial that each detected target is accurately framed by an anchor box, meaning that each box fits its target well and contains exactly one target without framing any others. Therefore, the selected evaluation metrics are mAP, recall, and average IoU, which quantify the detection accuracy, reliability, and robustness of the model under complex atmospheric conditions.

The precision metric measures the accuracy of the predicted anchor boxes. It indicates how many of the results that the model considers targets are real targets:

$$\mathrm{Precision} = \frac{TP}{TP + FP},$$

where TP denotes true positives and FP denotes false positives.

The recall metric indicates how many real cloud particle targets the model correctly identifies without missing them:

$$\mathrm{Recall} = \frac{TP}{TP + FN},$$

where FN denotes false negatives.
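To make TP, FP, and FN concrete, the sketch below counts them by greedily matching confidence-sorted predictions to unmatched ground-truth boxes at IoU ≥ 0.5. The greedy one-to-one matching rule is a common convention assumed here, not one stated in the text; boxes are `(x1, y1, x2, y2)` and the function names are illustrative.

```python
def box_iou(a, b):
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_counts(preds, gts, thr=0.5):
    """preds must be sorted by descending confidence; returns (TP, FP, FN)."""
    matched, tp = set(), 0
    for p in preds:
        best, best_j = 0.0, None
        for j, g in enumerate(gts):
            if j not in matched and box_iou(p, g) > best:
                best, best_j = box_iou(p, g), j
        if best_j is not None and best >= thr:
            matched.add(best_j)     # each ground-truth box is matched at most once
            tp += 1
    return tp, len(preds) - tp, len(gts) - tp

def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```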

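The final mAP is calculated by averaging the AP (average precision, the area under the precision-recall curve) over all classes, using an IoU threshold of 0.5 (mAP@0.5). Because this study treats detection as a single target-versus-background task, mAP here equals the AP of the cloud particle class.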
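AP can be computed from confidence-ranked detections as sketched below, using all-point interpolation (precision made non-increasing in recall, then summed over recall steps). This interpolation scheme is one common convention and is assumed here; the study does not state which variant it uses. `is_tp` marks detections matched at IoU ≥ 0.5, and `num_gt` is the number of ground-truth particles.

```python
import numpy as np

def average_precision(scores, is_tp, num_gt):
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp, cum_fp = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    # All-point interpolation: precision envelope, non-increasing in recall.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    steps = np.nonzero(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))
```

For example, detections ranked `[TP, FP, TP]` against two ground-truth particles give AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.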

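The average IoU metric measures the consistency between the model’s predicted boxes and the ground truth boxes. It reflects the localization accuracy of the model by computing, for each detected target, the ratio of the overlap between the predicted box and the ground truth box to their union, and then averaging over all detected targets:

$$\overline{\mathrm{IoU}} = \frac{1}{N}\sum_{i=1}^{N} \frac{\lvert B_p^{i} \cap B_{gt}^{i} \rvert}{\lvert B_p^{i} \cup B_{gt}^{i} \rvert},$$

where $N$ is the number of detected targets, $B_p^{i}$ is the $i$-th predicted anchor box, and $B_{gt}^{i}$ is the corresponding ground truth box. $\lvert B_p^{i} \cap B_{gt}^{i} \rvert$ is the area of their intersection, and $\lvert B_p^{i} \cup B_{gt}^{i} \rvert$ is the area of their union.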
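The average IoU reduces to a mean over matched (prediction, ground truth) pairs, as in this small sketch with `(x1, y1, x2, y2)` boxes; how predictions are paired with ground truth is assumed to be done beforehand (for example by the IoU ≥ 0.5 matching used for TP counting).

```python
def iou(a, b):
    """|A ∩ B| / |A ∪ B| for two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def mean_iou(pairs):
    """pairs: list of (predicted_box, ground_truth_box) for the N detected targets."""
    return sum(iou(p, g) for p, g in pairs) / len(pairs)
```

For instance, one exact match (IoU = 1) and one box shifted by half its width (IoU = 50/150 = 1/3) give an average IoU of 2/3.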

In general, the precision-based mAP and the recall metric clearly reflect the key performance of the model in detecting cloud particle targets. The average IoU metric evaluates how well the predicted anchor boxes match the ground truth boxes, indicating the model’s ability to enclose cloud particles correctly. Together, these metrics provide a comprehensive view of the model’s performance and help evaluate its detection accuracy under different conditions.

4. Experimental Results

4.1. Dataset Description

The data used to train, validate, and test the cloud particle detection model in this study were obtained from cloud-penetrating observations during 10 sorties of a meteorological aircraft in North China. The aircraft, a King Air, is equipped with a 2D-S (two-dimensional stereo) optical array probe. This probe can rapidly image cloud particles (at a frequency of 0.1–1 Hz), detect cloud particles as small as 10 microns, and generate high-resolution images. The dataset comprises 1000 high-resolution 2D-S cloud particle images covering various atmospheric conditions and cloud particle types from the ten flight missions, with morphologies ranging from spherical to broken shapes, which ensures the diversity and representativeness of the dataset. The images were labeled by cloud microphysics researchers using the labelImg software to produce the ground truth labels. In the experiment, the dataset was divided into training, validation, and test sets at a ratio of 8:1:1.
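For 1000 images, an 8:1:1 split gives 800 training, 100 validation, and 100 test images. A reproducible random split could look like the sketch below; the shuffling procedure and seed are hypothetical, since the text does not say how the images were assigned to each subset (for example, whether images from one flight were kept together).

```python
import random

def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle once with a fixed seed, then cut into train/validation/test."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

train_set, val_set, test_set = split_dataset(range(1000))
print(len(train_set), len(val_set), len(test_set))  # 800 100 100
```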

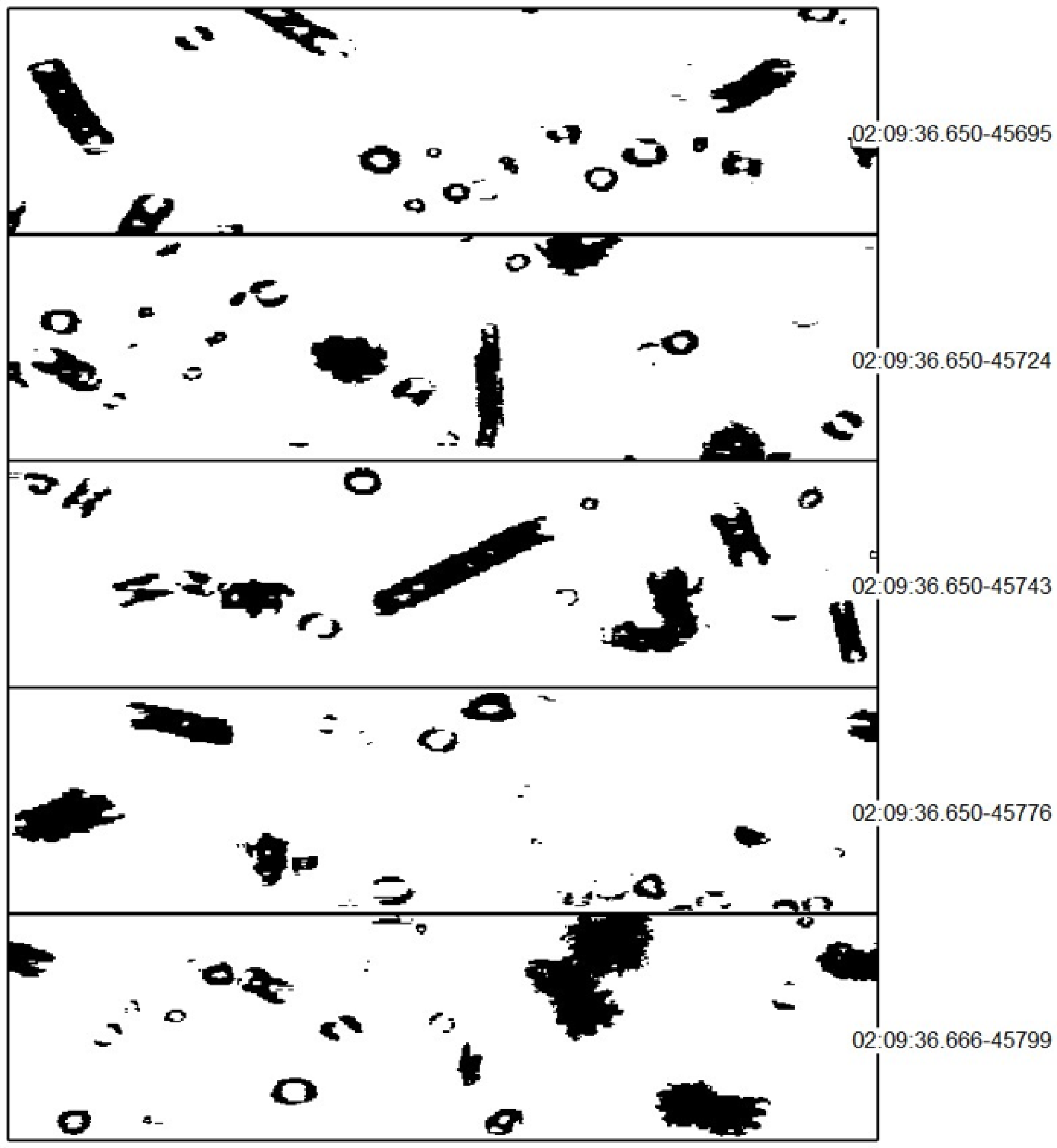

Figure 3 shows a cloud particle image from the 2D-S dataset used in our detection experiments. This image was collected in July 2022 and processed using the proposed detection pipeline.

4.2. Experimental Setup

4.2.1. Setup of Preprocessing Module

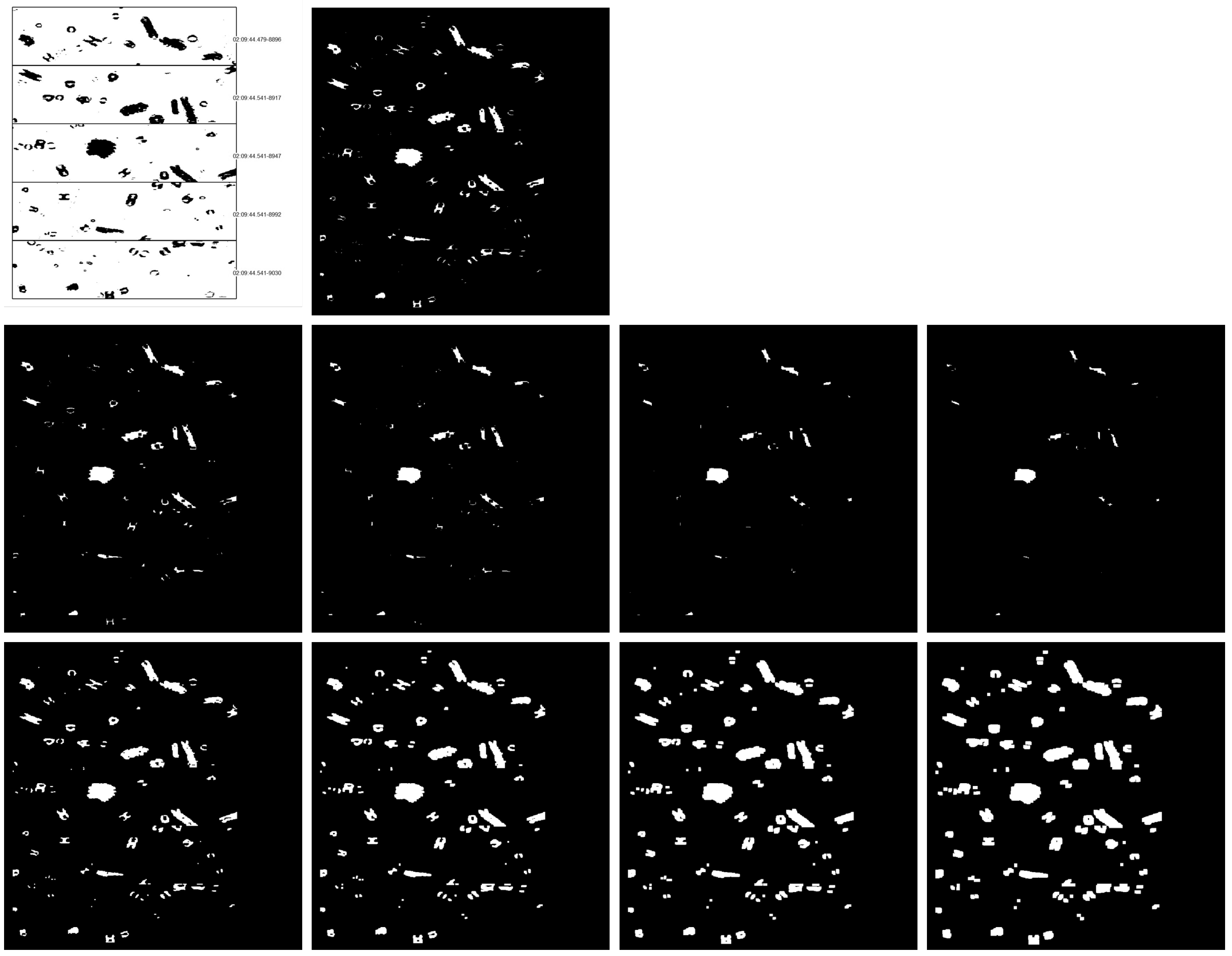

Figure 4 shows the results of the preprocessing module. The left image in the first row is the original image, and the right image in the first row is the baseline processing image, showing the removal of interference such as timestamps, borders, and small broken particles. The next two rows show the results of the multi-level morphological transformations, with four levels of erosion and four levels of dilation applied to the baseline image. In the second row, the images from left to right show increasing levels of erosion of the baseline image, which highlights large particles: deeper erosion removes smaller artifacts while retaining the basic characteristics of larger particles. In the third row, the images from left to right show increasing levels of dilation of the baseline image, which enhances the visibility of small particles: deeper dilation ensures that small targets are not ignored in the detection process.

Overall, these results demonstrate how the preprocessing module prepares the input data for the detection model. They indicate that multi-level morphological transformations can effectively handle differences in particle size, so that cloud particles of all sizes are represented for detection. The erosion and dilation levels were chosen based on the typical size distribution of cloud particles in nature, to balance noise removal against the preservation of particle structures.

4.2.2. Setup of Adaptive Anchor Center Generation Module

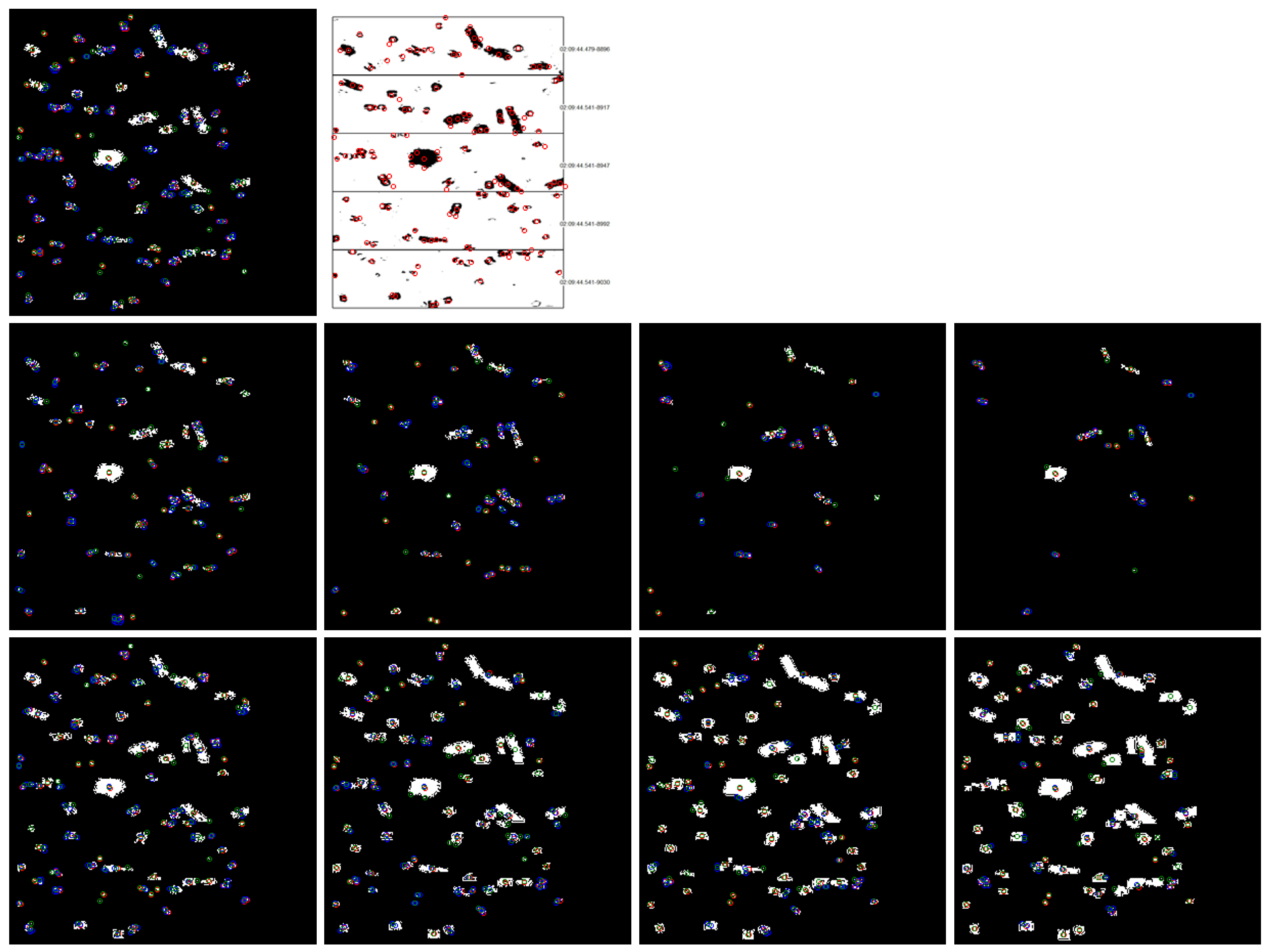

Figure 5 shows the two-stage merging of anchor center points: centers are generated from the nine morphologically processed images, merged, mapped to the original image, and finally merged again within the original image. The centers are color-coded: the geometric center is marked by a red circle, the center of mass by a blue circle, and the fusion center by a green circle.

In the first row of this figure, the left image shows the three types of centers generated from the baseline image, and the right image shows the final fusion centers on the original image, represented by red circles. The second row shows that as the erosion level increases, fewer cloud particles remain and fewer center points are generated. When these center points are mapped back to the original image, however, most of them fall on the larger cloud particles. Likewise, in the third row, as the dilation level increases, small targets become increasingly distinct and more center points are generated for them, so that small cloud particles are not ignored.
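The three center types in Figure 5 can be illustrated on a binary mask. In this sketch, each particle is taken to be an 8-connected component, the geometric center is the center of the component's bounding box, and the center of mass is the mean pixel coordinate (unweighted, assuming a binary image). The text does not specify how the fusion center combines the other two; the midpoint used here is purely an assumption, as are the function names.

```python
import numpy as np
from collections import deque

def connected_components(mask):
    """Return one (rows, cols) index-array pair per 8-connected foreground region."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    components = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:  # breadth-first flood fill over the 3x3 neighborhood
            r, c = queue.popleft()
            pixels.append((r, c))
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    if mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        rows, cols = np.array(pixels).T
        components.append((rows, cols))
    return components

def particle_centers(mask):
    """Return (geometric, mass, fusion) centers, each as (x, y), for every particle."""
    results = []
    for rows, cols in connected_components(mask):
        geometric = (float(cols.min() + cols.max()) / 2, float(rows.min() + rows.max()) / 2)
        mass = (float(cols.mean()), float(rows.mean()))
        # ASSUMPTION: fusion center = midpoint of the two; the paper does not give the rule.
        fusion = ((geometric[0] + mass[0]) / 2, (geometric[1] + mass[1]) / 2)
        results.append((geometric, mass, fusion))
    return results

# Toy L-shaped particle: its bounding-box center and centroid differ.
mask = np.zeros((6, 6), dtype=bool)
mask[0:4, 0] = True
mask[3, 0:4] = True
geometric, mass, fusion = particle_centers(mask)[0]
print(geometric)  # (1.5, 1.5)
```

For asymmetric shapes such as this L, the centroid is pulled toward the heavier arm, which is why the geometric center and the center of mass can disagree and some rule for fusing them is needed.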

In Figure 5, the right image in the first row displays the final set of centers, which come from two sources: the baseline image and the eight additional images produced by the multi-level morphological transformations. In the first merging stage, centers within nine pixels of each other are combined to reduce redundancy. In the second stage, the centers from all nine processed images are mapped back to the original image coordinates and merged again if they are still within the threshold. The result is a refined set of centers from which anchor boxes are generated during the detection phase. This adaptive process aims to produce anchor centers that better match the actual particle distribution, improving detection performance while controlling redundancy.
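The two-stage merging with the nine-pixel threshold can be sketched as a greedy clustering. None of the following details are stated in the text, so all are assumptions: distances are Euclidean, a merged center is the mean of its members, stage one is applied separately to each processed image, and the hypothetical `to_original` function maps coordinates back to the original image (the identity when morphology does not change the image size).

```python
import math

def merge_centers(centers, threshold=9.0):
    """Greedily merge (x, y) points lying within `threshold` pixels of an existing cluster mean."""
    clusters = []  # each entry: [sum_x, sum_y, count]
    for x, y in centers:
        for cl in clusters:
            cx, cy = cl[0] / cl[2], cl[1] / cl[2]
            if math.hypot(x - cx, y - cy) <= threshold:
                cl[0] += x
                cl[1] += y
                cl[2] += 1
                break
        else:  # no nearby cluster: start a new one
            clusters.append([x, y, 1])
    return [(sx / n, sy / n) for sx, sy, n in clusters]

def two_stage_merge(per_image_centers, to_original=lambda p: p, threshold=9.0):
    """Stage 1: merge within each processed image. Stage 2: map to original coords and merge again."""
    stage1 = [merge_centers(centers, threshold) for centers in per_image_centers]
    mapped = [to_original(p) for centers in stage1 for p in centers]
    return merge_centers(mapped, threshold)

# Three hypothetical processed images standing in for the nine used in the paper.
per_image = [
    [(10, 10), (14, 10), (50, 50)],  # e.g. baseline image
    [(13, 11), (80, 20)],            # e.g. one dilation level
    [(51, 52)],                      # e.g. one erosion level
]
result = two_stage_merge(per_image)
print(result)  # [(12.5, 10.5), (50.5, 51.0), (80.0, 20.0)]
```

A greedy pass depends on input order and can merge centers of two genuinely distinct particles that lie closer than the threshold, which is consistent with the missed-detection cause discussed in Section 4.3.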

4.2.3. Setup of Adaptive Anchor Box

Adaptive anchor boxes are generated from the centers identified in the processed cloud particle images. Each center yields multiple anchor boxes with predefined scales and aspect ratios, covering the diverse range of particle dimensions observed in 2D-S imaging. Three aspect ratios, 1 (square), 0.5 (vertically elongated), and 1.8 (horizontally elongated), are combined with three scales, 0.03, 0.06, and 0.12, so that particles of varying sizes and orientations can be detected.

The number of cloud particle targets varies across 2D-S images because of inherent variations in particle density and type within real clouds, and different flight missions, cloud types, and altitudes introduce further disparities in particle counts. Because our detection network requires a consistent number of anchor boxes per image for training and inference, the number of anchor boxes must be standardized across images.

To accommodate these variations while maintaining model consistency, we normalize the number of anchor boxes per image to a fixed preset value of 900. This value was determined based on empirical data reflecting typical cloud particle counts in 2D-S images, as well as the combined effect of the imaging system’s performance and the aircraft’s flight parameters. The preset settings can be adjusted according to the cloud type, flight altitude and geographical area.
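The text does not describe how the count is normalized. One plausible scheme, shown here only as an assumption, randomly subsamples when an image yields more than 900 anchors and pads by cycling through the existing anchors when it yields fewer. With nine anchors per center, 900 anchors correspond to 100 centers.

```python
import random

def normalize_anchor_count(anchors, target=900, seed=0):
    """Return exactly `target` anchors by random subsampling or cyclic padding (assumed scheme)."""
    if not anchors:
        raise ValueError("image produced no anchors")
    if len(anchors) >= target:
        rng = random.Random(seed)  # fixed seed for reproducibility
        return rng.sample(anchors, target)  # subset without replacement
    # Too few anchors: repeat the existing ones in order until `target` is reached.
    return [anchors[i % len(anchors)] for i in range(target)]

# Hypothetical images with 120 and 50 centers, i.e. 1080 and 450 anchors at 9 per center.
dense = [(i, i, i + 1, i + 1) for i in range(120 * 9)]
sparse = [(i, i, i + 1, i + 1) for i in range(50 * 9)]
print(len(normalize_anchor_count(dense)), len(normalize_anchor_count(sparse)))  # 900 900
```

Padding by repetition keeps every real anchor in play at the cost of duplicates; subsampling discards some anchors, which is one reason the preset value should track the typical particle counts for a given cloud type and flight setting.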

Figure 6 shows the adaptive anchor boxes generated for a typical original image. Most cloud particles receive many anchor boxes of different sizes and aspect ratios, whereas very small cloud particles, borders, and timestamps receive none. This is because the preprocessing module filters out these interference factors, so no center points, and hence no anchor boxes, are generated for them. The positions and number of anchor boxes vary from image to image depending on the targets.

4.2.4. Handling of Split Cloud Particles

In cloud particle detection, split or fragmented particles pose a major challenge. These particles often appear as smaller pieces due to imaging artifacts. Traditional methods struggle to detect them as a single particle. This can lead to inaccurate particle counts and unreliable cloud microphysical analysis.

The proposed method addresses this problem in two ways. First, training on the labeled cloud particle dataset enables the detection model to partly learn to detect split or broken cloud particles as complete targets. Second, multi-level morphological processing and adaptive anchor center generation help ensure that anchor-box center points are placed at the correct positions for split particles, thereby improving their detection accuracy. This capability is important for improving the accuracy of cloud particle data and reducing errors caused by detecting fragments as separate particles.
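One way multi-level morphology can help with split particles is sketched below: a single dilation bridges a narrow gap between two fragments, so they form one connected component whose centroid lies at the middle of the whole particle rather than at each fragment. This is a minimal NumPy illustration under assumed settings (3×3 structuring element, 8-connectivity, a 2-pixel gap), not the paper's implementation; the function names are hypothetical.

```python
import numpy as np
from collections import deque

def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 square structuring element."""
    h, w = mask.shape
    out = mask.copy()
    for _ in range(iterations):
        padded = np.pad(out, 1, constant_values=False)
        out = np.zeros_like(out)
        for dy in range(3):
            for dx in range(3):
                out |= padded[dy:dy + h, dx:dx + w]
    return out

def component_centroids(mask):
    """Centroid (x, y) of each 8-connected foreground component, in row-major discovery order."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    centroids = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    if mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        rows, cols = np.array(pixels).T
        centroids.append((float(cols.mean()), float(rows.mean())))
    return centroids

# Toy particle split into two fragments by a 2-column gap (columns 12 and 13).
mask = np.zeros((20, 30), dtype=bool)
mask[8:12, 5:12] = True
mask[8:12, 14:21] = True
print(component_centroids(mask))          # [(8.0, 9.5), (17.0, 9.5)]
print(component_centroids(dilate(mask)))  # [(12.5, 9.5)]
```

On the baseline mask the fragments yield two centers, one per piece; after one dilation they merge and yield a single center at the middle of the full particle, which is the kind of center placement that lets anchor boxes cover the particle as one target.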

4.2.5. Evaluation of Split Particle Detection

Figure 7 compares the Constant False Alarm Rate (CFAR) method, a traditional thresholding method, with the proposed method on split cloud particles. The left image shows the detection result of the traditional method, in which the split cloud particle is clearly detected as two separate targets. A large number of such detections would not only bias cloud particle statistics but also significantly affect the analysis of cloud particle proportions.

In the right image, by contrast, the proposed method detects the split cloud particle as a single target. This example indicates that our method can detect broken or split cloud particles as complete targets, thus improving detection accuracy and the reliability of cloud microphysical analysis.

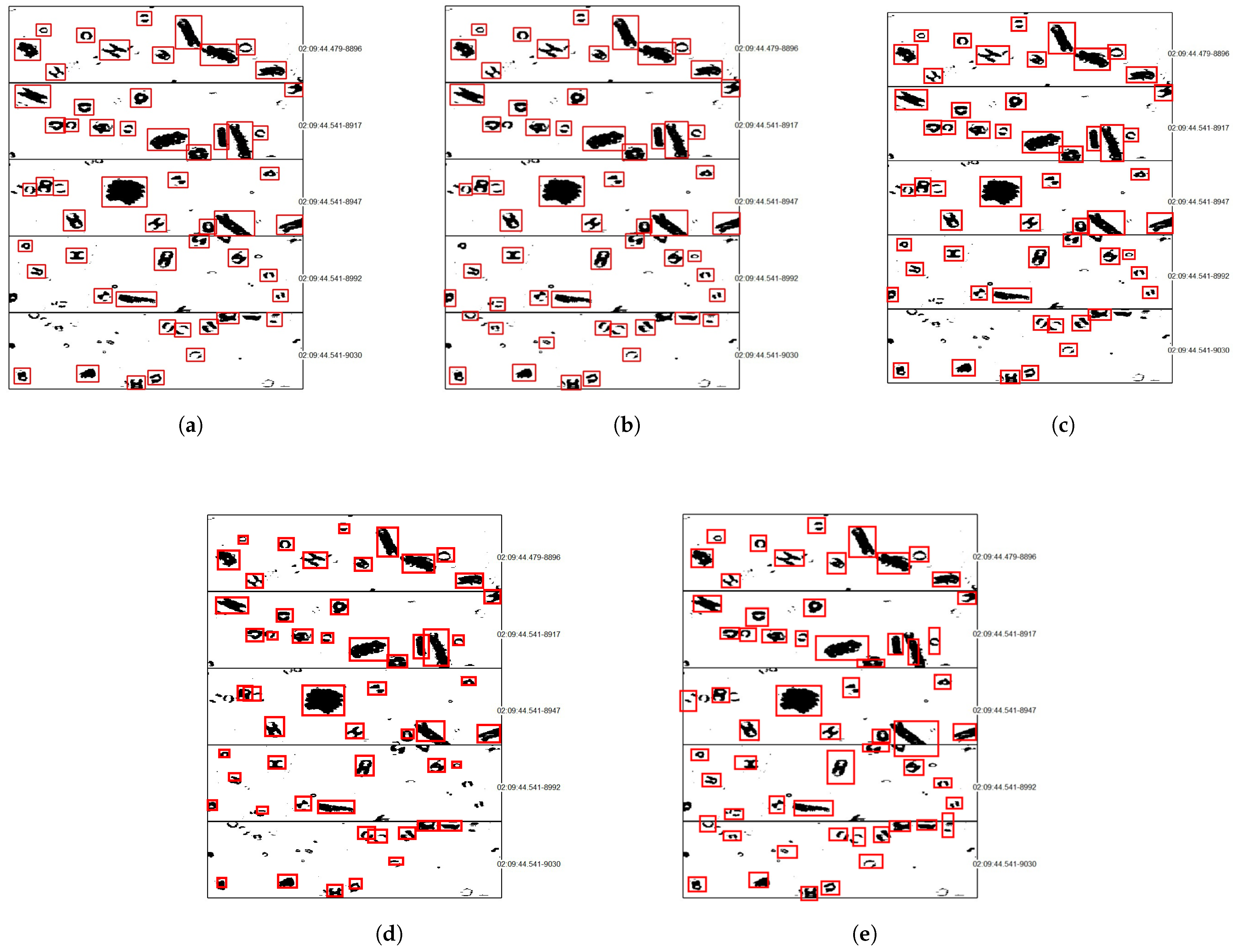

4.3. Comparison of Detection Algorithms

In this section, we evaluate the proposed method and compare it with other common detection algorithms: YOLOv5, Faster R-CNN, DETR, and the traditional SSD. These algorithms were selected because they cover a wide range of detection approaches. YOLOv5 is a popular single-stage detector whose anchor design differs from that of SSD: its anchor boxes are derived by clustering the training labels, which lets them adapt better to a given dataset. Faster R-CNN is a classic two-stage detector. DETR is built on the transformer architecture and differs substantially from the CNN-based detectors. Comparing against these algorithms lets us assess the proposed method relative to the major families of contemporary detectors. For model training, the key hyperparameters are a learning rate of 0.0001, a batch size of 32, and the Adam optimizer.

To quantitatively evaluate these detection algorithms, we calculate the precision, recall, and average IoU of each method. All metrics are reported as values between 0 and 1. A prediction is counted as correct when its IoU with a ground-truth box is at least 0.5, and the same threshold of 0.5 is used when computing the average IoU.
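A minimal sketch of these metrics follows. It assumes greedy one-to-one matching in descending score order, an IoU threshold of 0.5 for a true positive, and an average IoU taken over matched pairs only. The text does not specify the matching procedure or how the average IoU is aggregated, so these choices and the function names are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def evaluate(preds, gts, thr=0.5):
    """preds: list of (score, box); gts: list of boxes. Returns (precision, recall, mean IoU)."""
    matched, ious = set(), []
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j is not None:
            matched.add(best_j)
            ious.append(best_iou)
    tp = len(ious)
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    mean_iou = sum(ious) / tp if tp else 0.0
    return precision, recall, mean_iou

# Hypothetical image: two ground-truth particles, three predictions (one false positive).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (22, 20, 32, 30)), (0.3, (50, 50, 60, 60))]
print(tuple(round(v, 3) for v in evaluate(preds, gts)))  # (0.667, 1.0, 0.833)
```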

As shown in Table 1, the proposed SSD method achieves the highest precision (0.934), and its recall (0.905) is only slightly lower than that of DETR. Its average IoU (0.632) improves on that of the traditional SSD (0.573), although it remains below those of Faster R-CNN (0.716) and DETR (0.655). Overall, these results support the effectiveness of the adaptive center point generation and anchor box optimization adopted in our method. Faster R-CNN performs well across precision (0.926), recall (0.895), and average IoU (0.716), but its two-stage structure makes it the slowest to train among these models. YOLOv5 has lower precision (0.906) and recall (0.847) than Faster R-CNN, but its lightweight structure gives it the fastest training speed. DETR, based on the transformer architecture, achieves only moderate precision (0.909) and average IoU (0.655), but the highest recall (0.933). Finally, the traditional SSD algorithm is inferior to the other algorithms in precision (0.893), recall (0.812), and average IoU (0.573). Nevertheless, because small and medium cloud particles make up a large proportion of our task and SSD leaves considerable room for improvement, we chose to build on the SSD algorithm.

Beyond the evaluation metrics, the prediction images allow a visual comparison of the algorithms. The proposed method (Figure 8e) visibly outperforms the other models, especially for small and medium particles. However, it still misses a small number of extremely fragmented or small particles, possibly because the centers of nearby particles are merged or because these particles lack sufficient visual detail for accurate detection. These undetected particles may have little impact on the analysis of cloud microphysical properties, because such particles may be filtered out anyway as low-quality or unrepresentative data.

As shown in Figure 8a–c, differences in model architecture and prediction-box generation lead to differences in precision and detection performance. YOLOv5 (Figure 8a) has a slightly higher miss rate than Faster R-CNN (Figure 8b) and DETR (Figure 8c). Faster R-CNN performs best among the three, although some particles it misses are detected by YOLOv5.

In conclusion, although tiny particles are occasionally missed, the proposed method substantially improves the detection of small and medium targets and thus the overall accuracy and reliability of cloud particle detection, which is crucial for subsequent cloud microphysical analysis.

4.4. Discussion

Although the proposed method performs well on the 2D-S dataset, its evaluation is limited to data from a single sensor type, so its generalization to datasets collected by other sensor types remains to be verified.

In the proposed method, ResNet-50 serves as the backbone network for feature extraction in conjunction with adaptive anchor generation. We emphasize, however, that the proposed method is a flexible, general framework for cloud particle detection: adaptive anchor center generation and multi-level morphological transformations are not tied to any specific network architecture. The model can be adapted to other backbones such as MobileNet, EfficientNet, or deeper ResNet variants, and the number of layers, hyperparameters, and morphological processing levels can be adjusted for different data resolutions and cloud types.

In future work, we would like to evaluate the model on more diverse datasets, which include different atmospheric conditions and various cloud particle detectors. In addition, we will explore sensitivity analysis of the network structure and hyperparameters (e.g., number of layers, structuring element size, and adaptive anchor center generation parameters) to further improve the general applicability of the model.