1. Introduction

The recent rapid urbanization and industrialization of China have led to increasing levels of air pollution resulting from the release of many toxic and harmful substances into the atmosphere [1]. These substances affect the quality of urban air and pose a great threat to public health [2,3]. The Air Quality Index (AQI), which acts as an index for evaluating pollutant concentration in the atmosphere, is calculated from the concentration of individual pollutants such as carbon monoxide (CO), sulfur dioxide (SO2), nitrogen dioxide (NO2), ozone (O3), and particulate matter (PM2.5 and PM10) in the air [4].
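As a rough illustration of how an AQI value can be derived from individual pollutant concentrations, the sketch below interpolates a sub-index for each pollutant between tabulated breakpoints and takes the largest sub-index as the AQI. The breakpoint values and the names `BREAKPOINTS`, `sub_index`, and `aqi` are assumptions made for this example, loosely following the structure of the Chinese standard HJ 633-2012; they should be checked against the official tables before any real use.

```python
from bisect import bisect_left

# Sub-index levels shared by all pollutants (assumed for illustration).
IAQI_LEVELS = [0, 50, 100, 150, 200, 300, 400, 500]

# Illustrative 24-hour concentration breakpoints in ug/m3 (assumed values;
# verify against the official standard before relying on them).
BREAKPOINTS = {
    "PM2.5": [0, 35, 75, 115, 150, 250, 350, 500],
    "PM10": [0, 50, 150, 250, 350, 420, 500, 600],
}


def sub_index(pollutant, conc):
    """Piecewise-linear interpolation of one pollutant's sub-index."""
    bps = BREAKPOINTS[pollutant]
    conc = min(max(conc, bps[0]), bps[-1])  # clamp to the tabulated range
    hi = max(1, bisect_left(bps, conc))     # index of the upper breakpoint
    lo = hi - 1
    slope = (IAQI_LEVELS[hi] - IAQI_LEVELS[lo]) / (bps[hi] - bps[lo])
    return IAQI_LEVELS[lo] + slope * (conc - bps[lo])


def aqi(concentrations):
    """The AQI is the largest sub-index among the measured pollutants."""
    return max(sub_index(name, c) for name, c in concentrations.items())
```

For example, `aqi({"PM2.5": 55, "PM10": 60})` is driven by the PM2.5 sub-index, because PM2.5 is the pollutant furthest up its own scale. Official reporting additionally rounds the result and uses pollutant-specific averaging periods, which this sketch omits.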

Table 1 shows the AQI classification criteria, comprising six levels [5]. Air pollution poses a serious threat to human health, mainly in the form of respiratory diseases and skin diseases [6,7]. According to the latest report released by the National Health Commission of the People’s Republic of China, malignant tumors rank first among the causes of death for urban residents in China, and lung cancer ranks first among these tumors [8].

Particularly in environments with high pollution levels, air quality must be monitored, modeled, and accurately predicted to gain a clear understanding of pollution levels and the associated future health risks [9]. Most Chinese cities have therefore established air quality monitoring systems. However, the high price of monitoring equipment adds to the financial burden of the government, and real-time monitoring alone does not fully address the air pollution problem. Accurate prediction of future air quality is necessary to help cities develop sustainably and protect residents’ physical and mental health [10]. It is therefore necessary to construct a scientific and accurate air quality prediction model that provides a basis for the management and comprehensive protection of the urban air environment.

Air quality is difficult to predict, as it is affected by multiple factors [11,12]. Currently, the most commonly used methods to predict air quality are statistical models and machine learning models (MLMs). Statistical models include the autoregressive integrated moving average (ARIMA) model [13] and multiple linear regression [14]. Although both ARIMA models and multiple linear regression models are extensively used to predict air quality [15], they have low prediction accuracy if the series is nonlinear or irregular [16]. MLMs include support vector machines [17] and artificial neural networks (ANNs) [18]. Support vector machines have been used for nonlinear regression prediction, as the algorithm is capable of quickly finding the global optimal solution, but its parameters are difficult to determine, which limits the accuracy of air quality prediction [19]. The core advantage of the random forest algorithm in AQI prediction lies in its feature importance analysis, which helps researchers understand the impact of various environmental factors on air quality. In practical applications, the algorithm is widely used to deal with the complex correlations between air indicators such as AQI, PM2.5 concentration, and total nitrogen oxide (NOx) concentration, and model performance is evaluated through multiple indicators such as the correlation coefficient and the coefficient of determination [20]. Random forests are also gradually being integrated into more complex hybrid models. For example, in a study of air quality-meteorological correlation modeling, the fused model fit the actual observations very closely [21].
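The two evaluation indicators mentioned above, the correlation coefficient and the coefficient of determination, measure different things, and a small sketch may make the distinction concrete. The function names `pearson_r` and `r_squared` and the sample values are hypothetical and chosen only for illustration; they are not taken from the cited studies.

```python
import math


def pearson_r(y_true, y_pred):
    """Pearson correlation: strength of the linear relation between two series."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    std_t = math.sqrt(sum((t - mean_t) ** 2 for t in y_true))
    std_p = math.sqrt(sum((p - mean_p) ** 2 for p in y_pred))
    return cov / (std_t * std_p)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 minus residual over total sum of squares."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up values: predictions that are exactly twice the observations.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [2.0, 4.0, 6.0, 8.0]
```

With these made-up values the predictions are perfectly correlated with the observations, yet the coefficient of determination is negative because every prediction is off by a factor of two. This is one reason why reporting both indicators, as in the studies cited above, is more informative than reporting either alone.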

In recent years, researchers have started to use nonlinear models (e.g., ANNs) to predict air quality [22]. The related literature shows that nonlinear models can obtain good prediction results [23,24]. Among them, the ANN is a powerful tool for describing nonlinear phenomena, characterized by massively parallel processing, strong learning ability, and pronounced nonlinearity. ANNs have therefore been used extensively for predicting air quality. Wang et al. [25] adopted back propagation neural networks (BPNNs) to predict PM2.5 concentration in the environment, but the training time was long, and the influence of temperature, humidity, and other atmospheric factors on PM2.5 concentration was not considered, so the prediction accuracy was low. Maleki [26] constructed an artificial neural network to predict PM2.5 concentration, but, as BPNNs are prone to falling into local minima, the models showed low prediction accuracy.

BPNNs face obvious disadvantages, such as a propensity to being caught in local minima, the need for long-term learning, and weak convergence rates. However, the prediction accuracy of BPNNs is closely associated with the setting of its parameters, which include hidden layer parameters, initial weights, bias, and learning rate [

27]. As metaheuristic algorithms perform well in optimizing parameters, some scholars have used them (e.g., genetic algorithms (GAs), artificial bee colony algorithms, and sparrow optimization algorithms) for such purposes [

28]. Yang [

29] used a GA to search for the optimal parameters of a BPNN, resulting in a higher prediction accuracy. Xu [

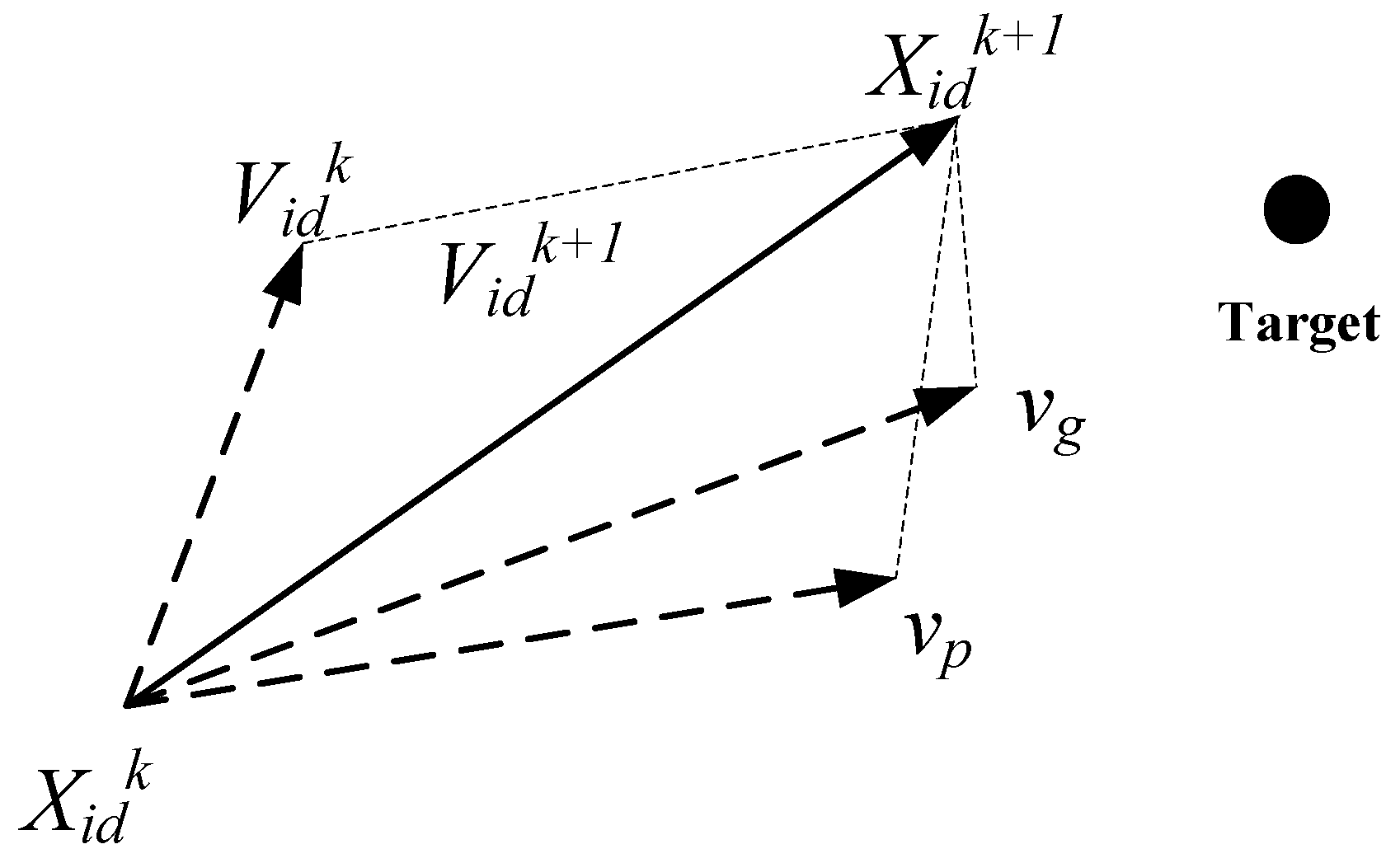

30] used a sparrow search algorithm to optimize the parameters, leading to the improvement of substation project cost prediction. The above models outperformed the traditional BPNN in terms of convergence speed and prediction accuracy. Compared with the above methods, the particle swarm optimization (PSO) algorithm has become the most popular method among many metaheuristic algorithms because of its high accuracy, fast convergence, and simplicity [

31,

32]. Xiao [

33] used a PSO algorithm to optimize a BPNN for pavement performance prediction, with the hybrid prediction model showing higher accuracy compared to the single model. Li [

27] introduced PSO to a BPNN to improve network training applicability and reduce learning time. Guo [

34] used PSO to optimize a BPNN for predicting NO

2 concentration in air, and the prediction accuracy was greatly improved compared with the original BPNN. Cai et al. [

35] applied an improved particle swarm optimization algorithm to optimize the parameters of long and short-term memory networks for chaos prediction.
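For readers unfamiliar with PSO, the following is a minimal sketch of the standard algorithm with a fixed inertia weight and fixed learning factors, applied to a simple test function. It is not the implementation used in any of the cited studies; the parameter values (`w=0.7`, `c1=c2=1.5`), the search bounds, and the `sphere` objective are assumptions chosen for illustration.

```python
import random


def sphere(x):
    """Simple convex test objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


def pso(objective, dim, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5,
        bounds=(-5.0, 5.0), seed=0):
    """Standard PSO with fixed inertia weight w and learning factors c1, c2."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                    # personal best positions
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # global best so far

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

Calling `pso(sphere, dim=2)` returns the best position found and its objective value. When PSO is used to tune a BPNN, the objective would instead be the network's prediction error for a given parameter vector.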

Although applying the traditional PSO algorithm to BPNNs can both reduce the network learning time and improve prediction accuracy, it still suffers from slow convergence, entrapment in local optima, and premature convergence when solving complex optimization problems. Researchers have therefore designed a variety of improved PSO algorithms [36]. Jiang [37] introduced immune cloning into the PSO algorithm to enhance the global search capability of particles. Sabat et al. [38] proposed an integrated learning PSO algorithm, which exhibited a high convergence speed but easily fell into local minima. Li [39] studied a chaotic PSO algorithm that enhanced the global and local search ability of the particles, but its convergence speed was much lower than that of other methods.

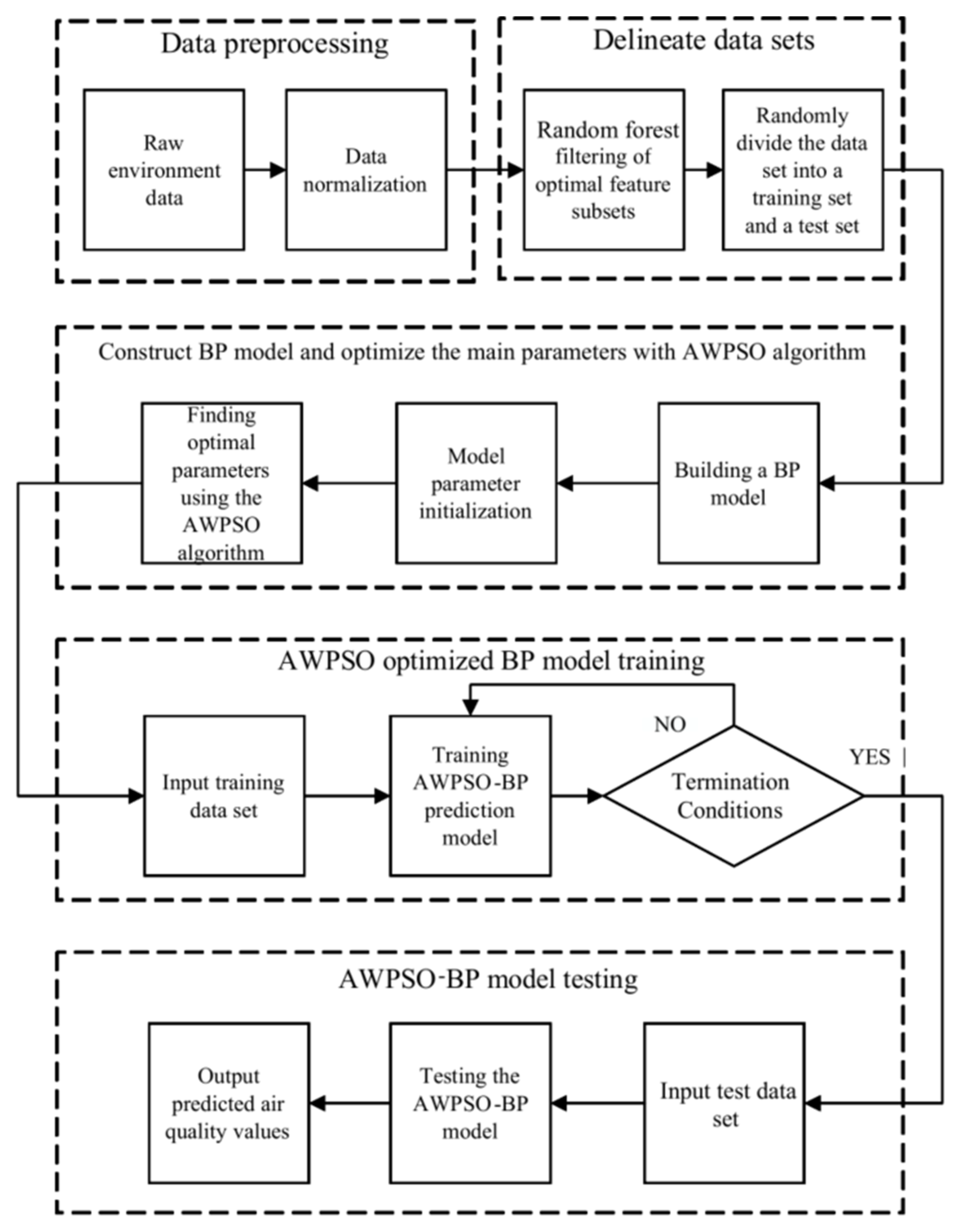

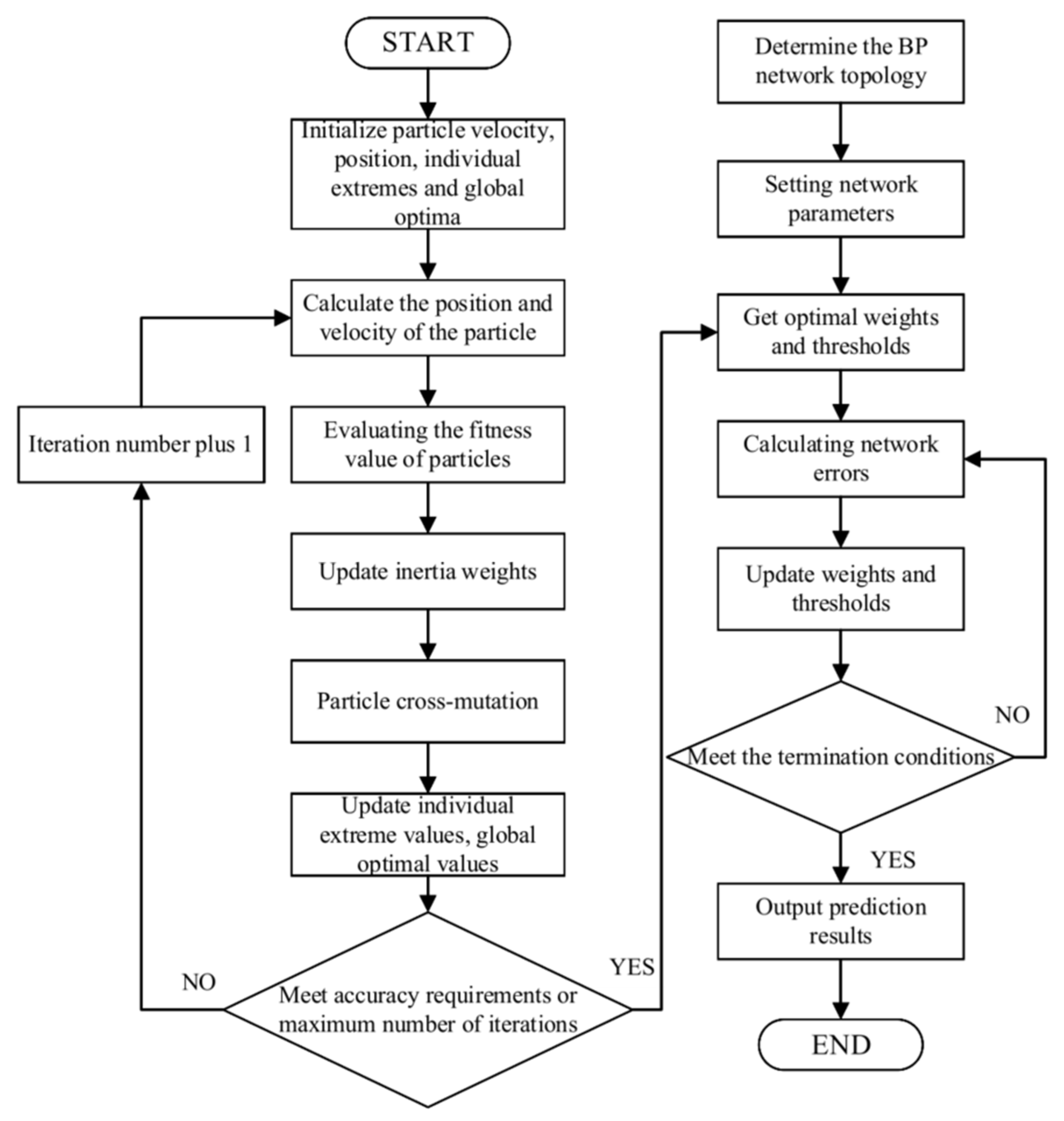

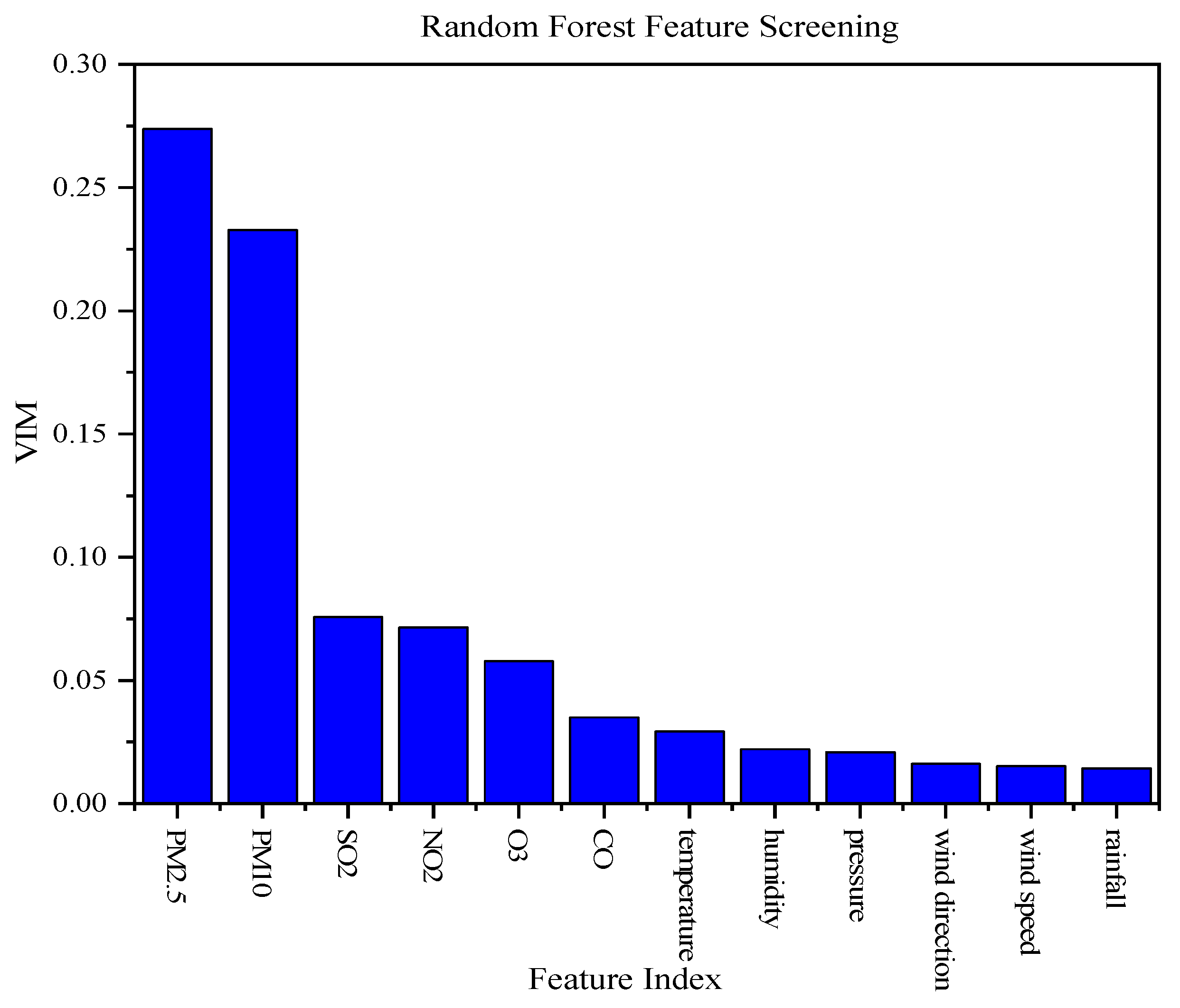

Despite extensive research on the learning strategies, topological structures, and update mechanisms of PSO algorithms, conventional approaches still exhibit significant limitations, including slow convergence, susceptibility to entrapment in local optima, and premature convergence. To address these challenges and improve air quality prediction accuracy, this study proposes a three-stage hybrid optimization framework. First, the random forest (RF) algorithm is used to identify the optimal feature subset, reducing dimensionality and eliminating redundant variables that may compromise prediction performance. Second, the traditional PSO algorithm is enhanced with dynamically adaptive inertia weights and learning factors, together with an adaptive mutation mechanism in the particle search process, which improves global search capability and convergence efficiency while mitigating the risk of premature convergence. Finally, the enhanced PSO algorithm is used to simultaneously optimize the connection weights and thresholds of the BPNN, yielding a hybrid model that combines global optimization capability with nonlinear mapping strength. Experimental validation using air quality data from China demonstrates that the proposed framework converges faster while avoiding local optima. The resulting model delivers stable, fast, and accurate air quality prediction, overcoming the deficiencies of traditional temporal prediction models and conventional hybrid approaches.
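To give a feel for what adaptive inertia weights, adaptive learning factors, and a mutation step can look like in practice, the sketch below uses simple linear schedules and a uniform re-sampling mutation. These particular formulas, the default values, and the names `inertia_weight`, `learning_factors`, and `mutate` are assumptions made for illustration; the exact update rules of the proposed algorithm are defined in the methods section and may differ.

```python
import random


def inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia: broad exploration early, fine search late."""
    return w_max - (w_max - w_min) * t / t_max


def learning_factors(t, t_max, c_start=2.5, c_end=0.5):
    """Cognitive factor c1 shrinks over time while social factor c2 grows."""
    frac = t / t_max
    c1 = c_start + (c_end - c_start) * frac
    c2 = c_end + (c_start - c_end) * frac
    return c1, c2


def mutate(position, bounds, rate, rng):
    """Re-sample each coordinate with probability `rate` to help escape local optima."""
    lo, hi = bounds
    return [rng.uniform(lo, hi) if rng.random() < rate else x for x in position]
```

In a full optimizer, `inertia_weight` and `learning_factors` would be evaluated once per iteration and substituted into the velocity update, and `mutate` would be applied to selected particles after their positions are updated. A larger early inertia weight favors exploration of the search space, while the growing social factor pulls the swarm together toward the end of the run.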

The rest of the paper is organized as follows:

Section 2 discusses the basic theory and methods of this work, including RFs, deep learning models, and improvements to the standard PSO algorithm.

Section 3 describes building the prediction model and data preprocessing, followed by the results of filtering the input variables.

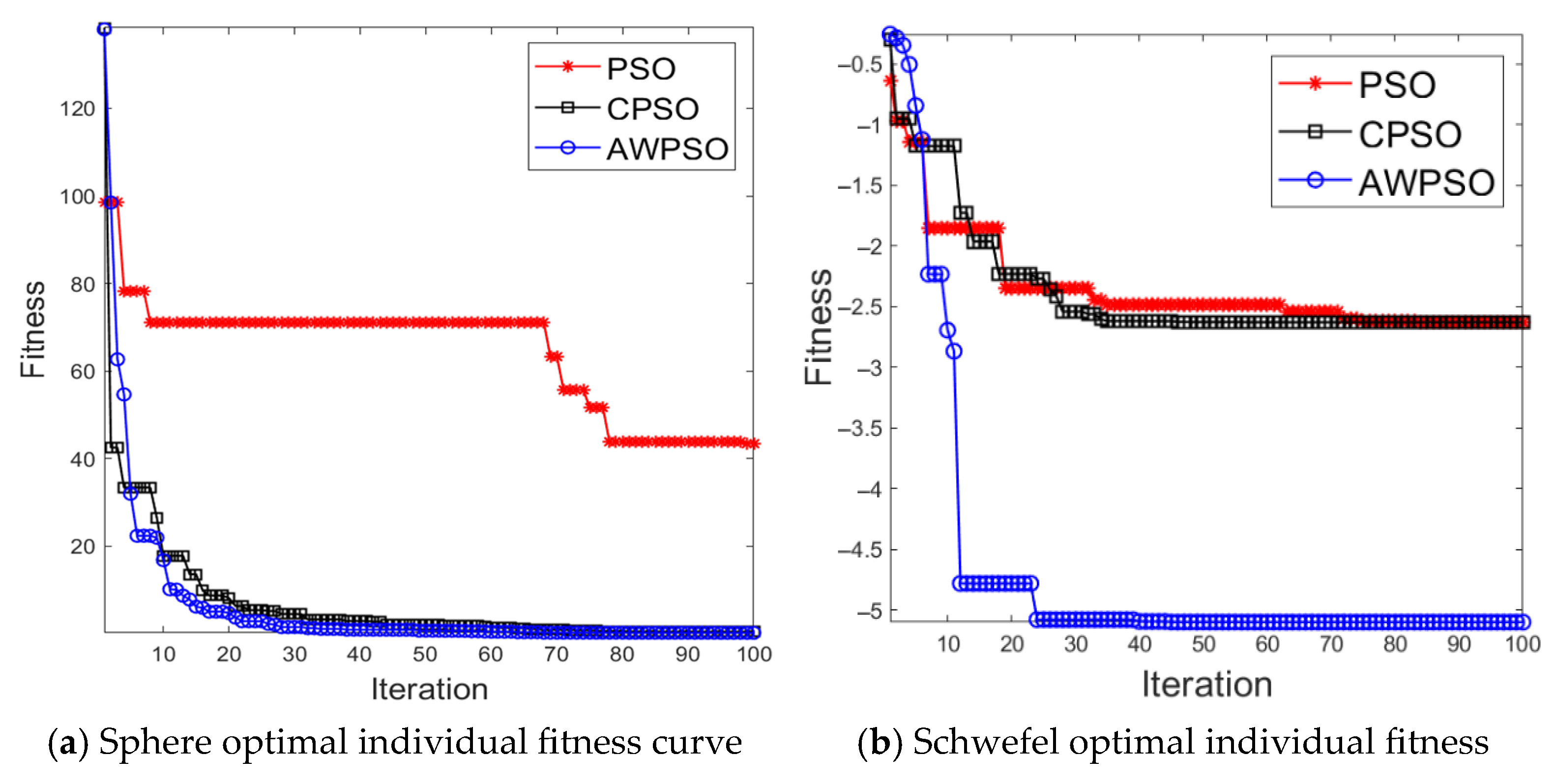

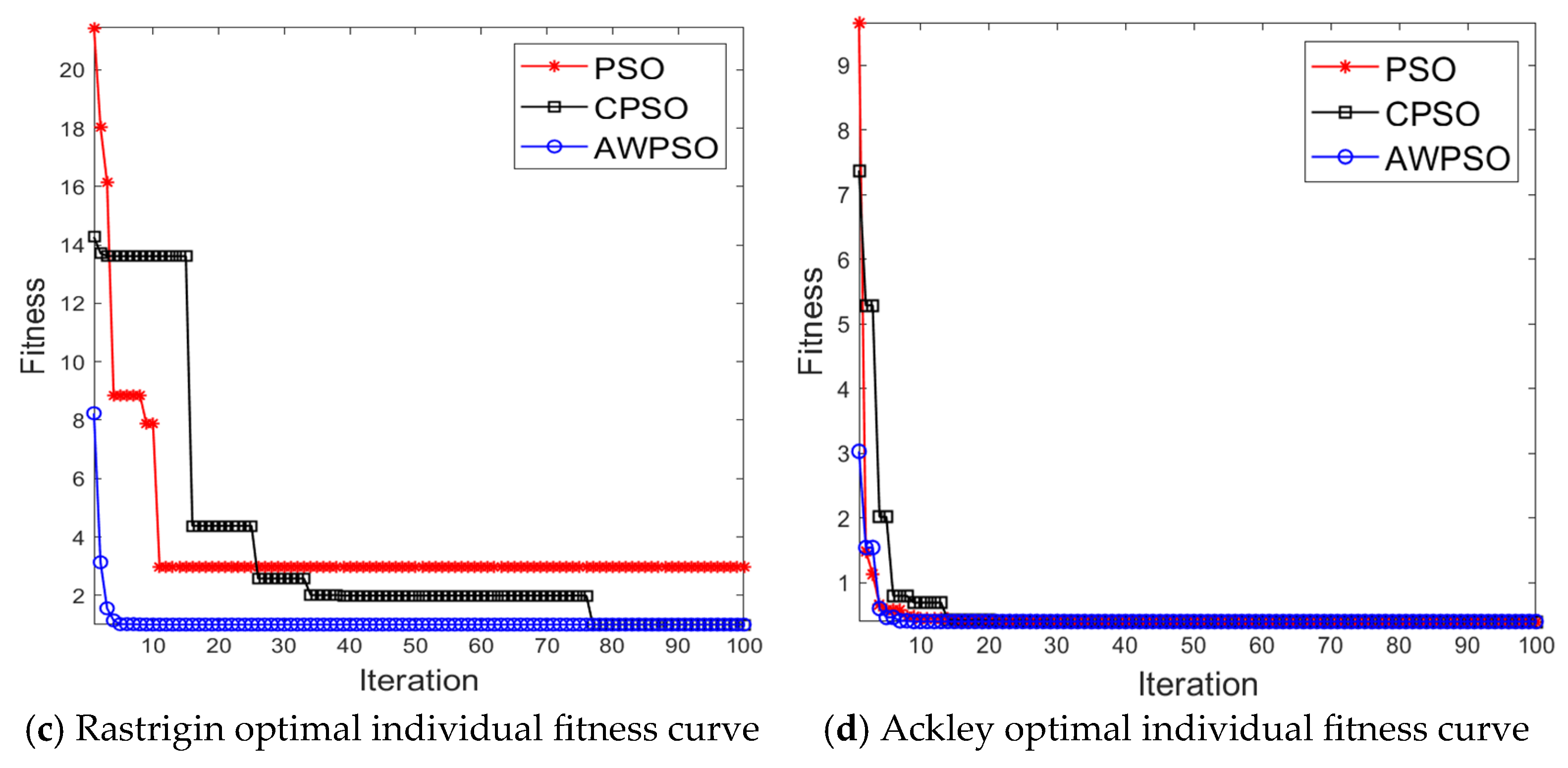

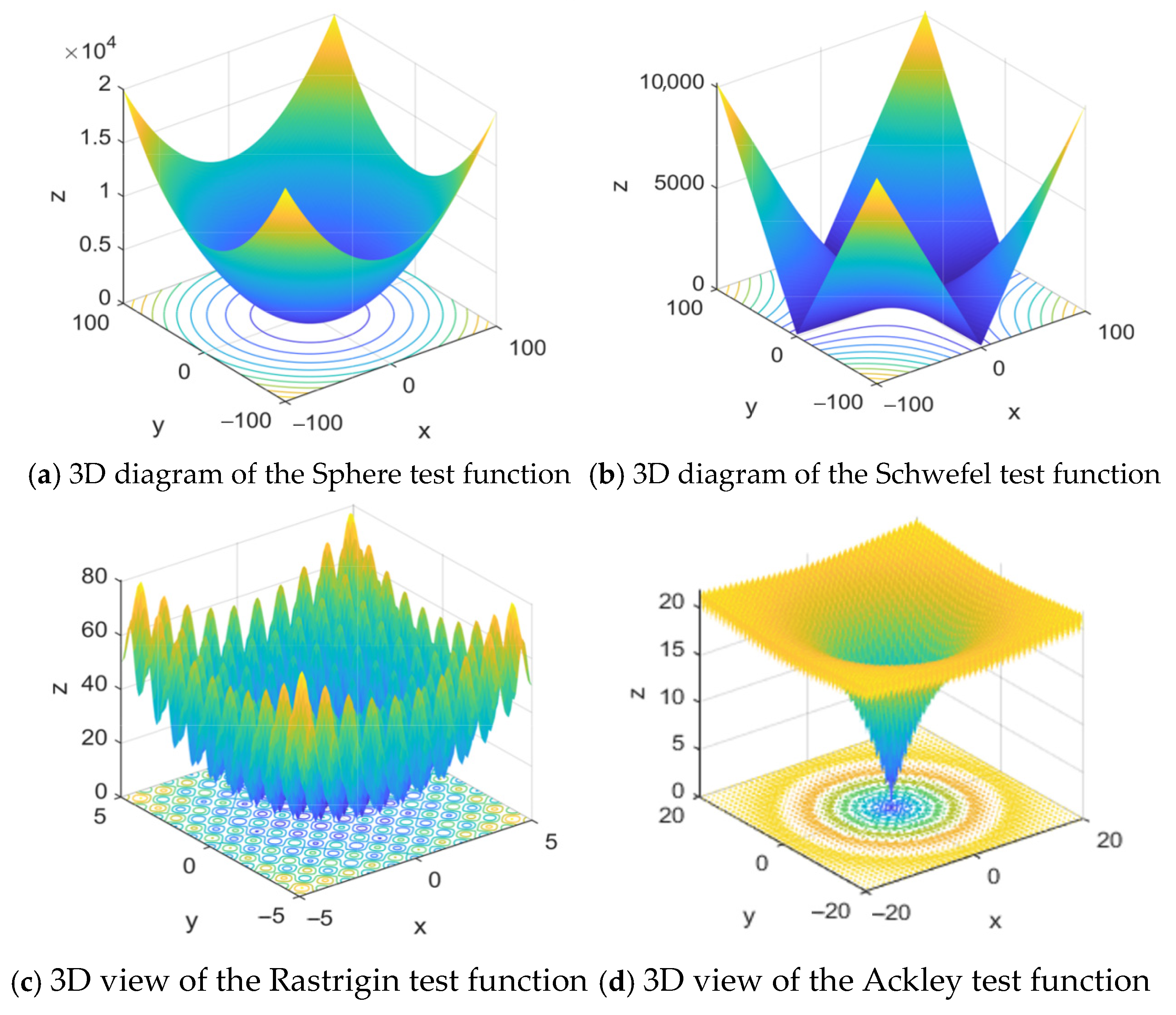

Section 4 presents simulation results and analysis, including the validation of the adaptive-weight particle swarm algorithm (AWPSO) on four benchmark functions, and the experimental results and discussion based on air quality data from Shijiazhuang City.

Section 5 presents the conclusions of the paper.

5. Conclusions

As air pollution seriously affects people’s health and economic development, its prediction and control have become an urgent research direction. Although the traditional BPNN is a widely used prediction method, it is prone to becoming trapped in local minima and converges slowly, which makes accurate AQI prediction difficult. The combined prediction model based on RF-AWPSO-BPNN proposed in this paper improves the accuracy of air quality prediction. From the analysis and comparison of the AWPSO simulation tests and the experimental data, the following conclusions are drawn.

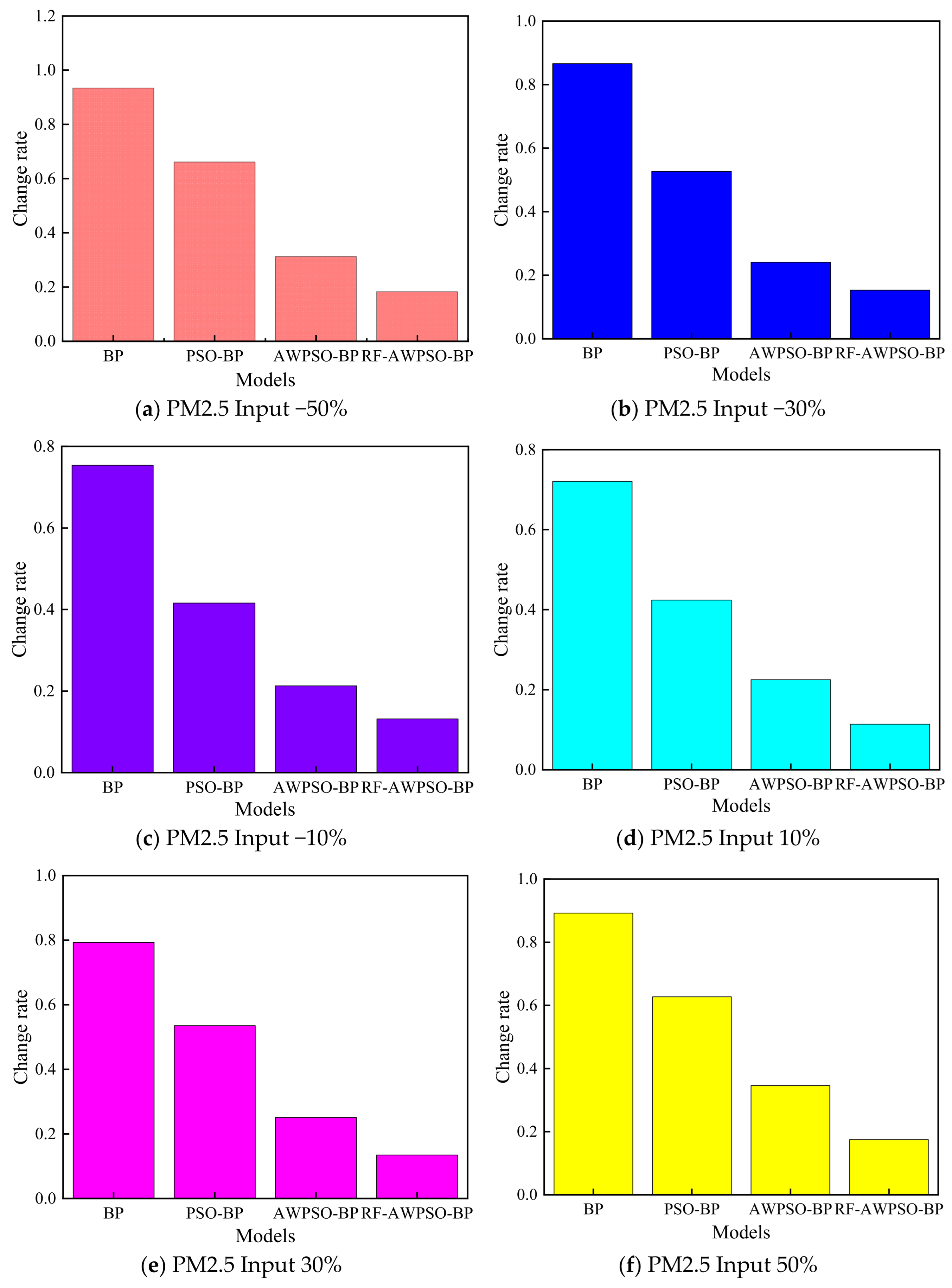

(1) The optimal feature subset is obtained by applying RF-based feature selection to the original data, which effectively improves AQI prediction accuracy.

(2) The PSO algorithm is used to compensate for the defects of the BPNN by searching for its best weights and thresholds.

(3) To counter the slow convergence, entrapment in local optima, and premature convergence of the PSO algorithm, the study improves the inertia weights and learning factors and introduces the crossover and mutation operations of the GA into the particle search process, which increases the convergence speed and improves the search capability of the particles.

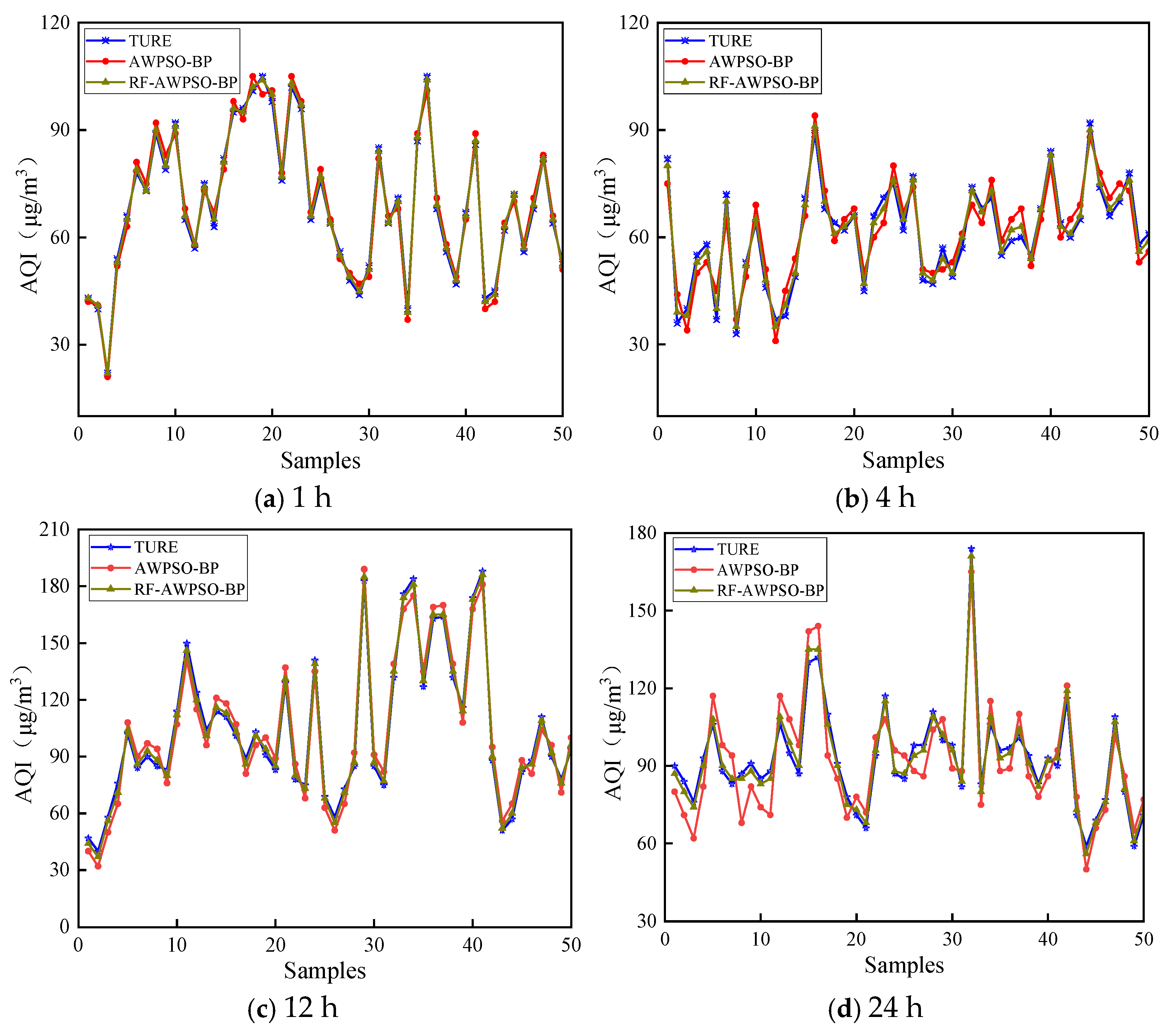

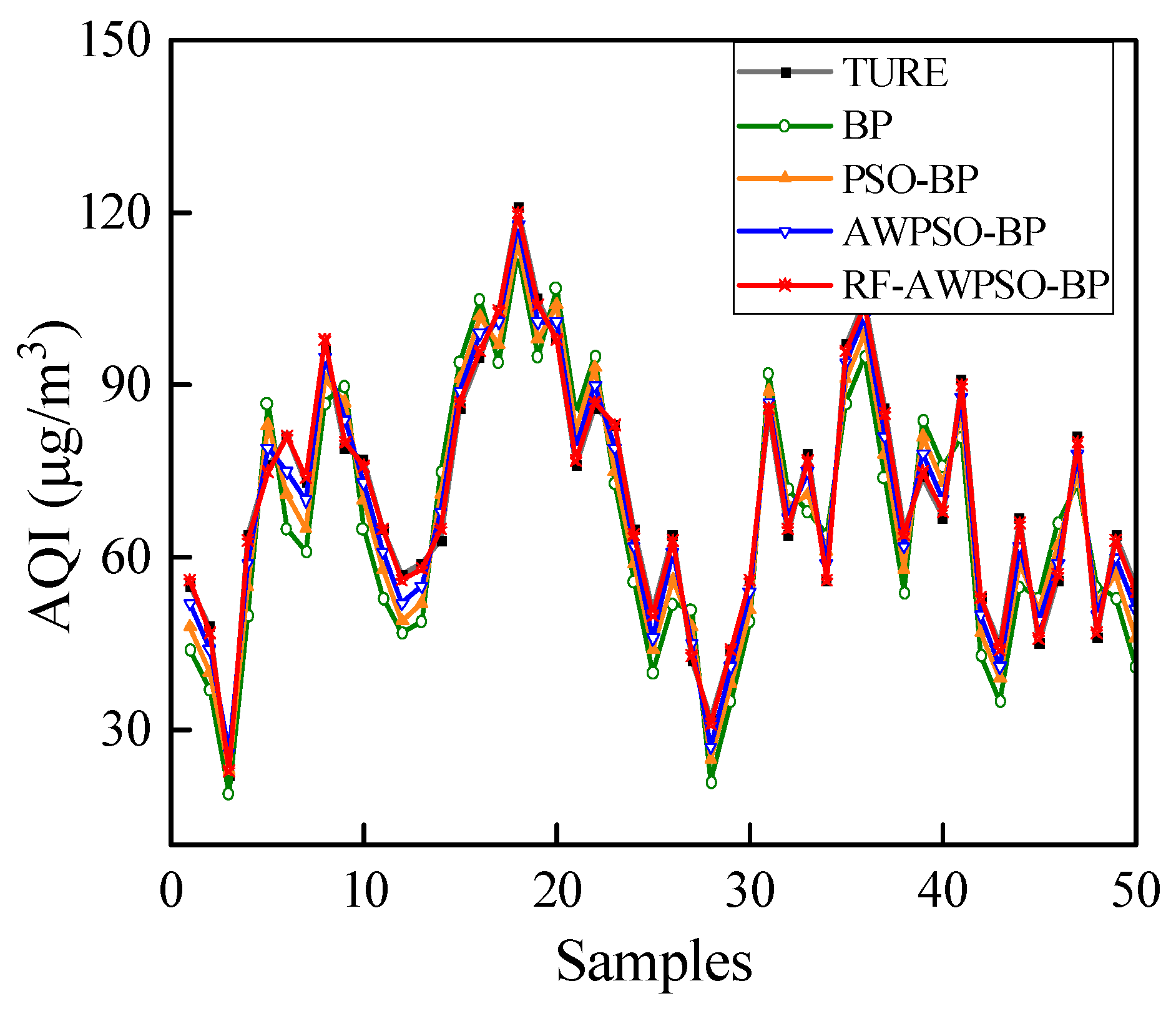

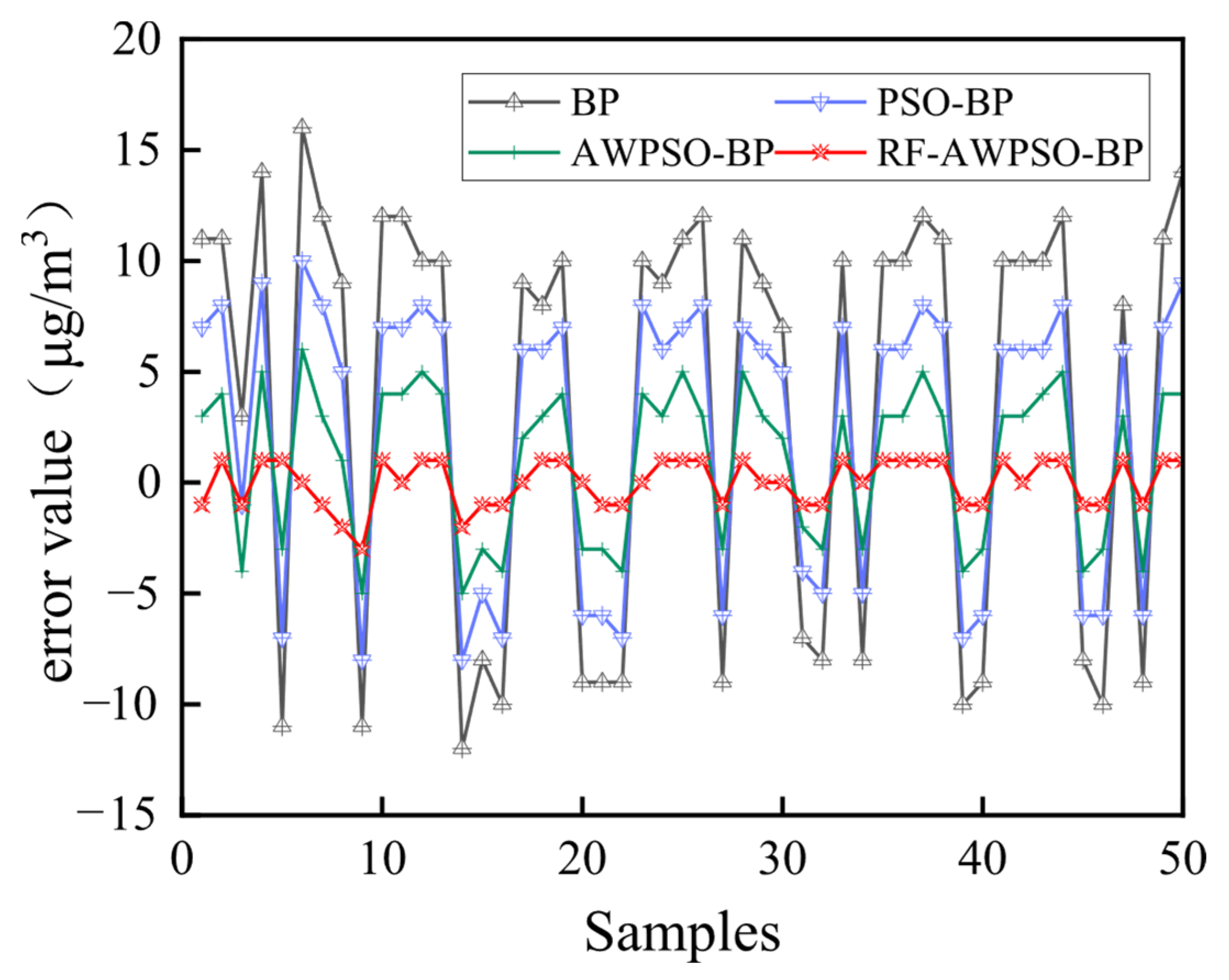

(4) Comparison experiments show that the proposed RF-AWPSO-BPNN hybrid model greatly improves prediction performance, and the predicted values closely fit the true values.

The above findings show that the hybrid prediction model achieves high accuracy in AQI prediction and is of practical value for the prediction and control of air pollution.
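The link between the swarm and the network in conclusion (2) can be sketched as follows: each particle is a flat vector holding all weights and thresholds (biases) of the network, and its fitness is the prediction error of the network decoded from that vector. The single hidden layer, the `tanh` activation, the mean squared error, and all function names below are assumptions for illustration rather than the exact configuration used in this work.

```python
import math


def particle_length(n_in, n_hidden):
    """Hidden weights + hidden thresholds + output weights + output threshold."""
    return n_hidden * n_in + n_hidden + n_hidden + 1


def decode(particle, n_in, n_hidden):
    """Split a flat particle into the parameters of a one-hidden-layer network."""
    w1 = [particle[h * n_in:(h + 1) * n_in] for h in range(n_hidden)]
    i = n_hidden * n_in
    b1 = particle[i:i + n_hidden]
    i += n_hidden
    w2 = particle[i:i + n_hidden]
    i += n_hidden
    b2 = particle[i]
    return w1, b1, w2, b2


def predict(particle, x, n_in, n_hidden):
    """Forward pass: tanh hidden layer followed by a linear output neuron."""
    w1, b1, w2, b2 = decode(particle, n_in, n_hidden)
    hidden = [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


def fitness(particle, samples, n_in, n_hidden):
    """Mean squared error over (features, target) pairs; lower is better."""
    errors = [(predict(particle, x, n_in, n_hidden) - y) ** 2 for x, y in samples]
    return sum(errors) / len(errors)
```

An optimizer would minimize `fitness` over vectors of length `particle_length(n_in, n_hidden)`, and the best particle found would then serve as the initial weights and thresholds of the network before any further gradient-based training.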

The proposed model offers significant practical value to multiple stakeholders in environmental management and public health sectors. Environmental protection agencies and regulatory bodies can leverage the improved AQI prediction accuracy to enhance their air quality monitoring capabilities and develop more effective pollution control policies. Public health officials can utilize the reliable forecasting results to establish better early warning systems and health advisories. Additionally, weather and environmental forecasting services can incorporate our model to provide more accurate air quality forecasts to the general public, enabling citizens to make informed decisions about outdoor activities and health protection measures. The research also contributes to the broader scientific community by advancing methodological approaches in environmental prediction modeling, while offering practical solutions for smart city initiatives.

However, the air quality of a location is affected not only by its own historical data (temporal dimension) but also, significantly, by the transport of pollutants from surrounding areas (spatial dimension). This study does not adequately consider such geospatial interactions. Future work will focus on improving long-term prediction capabilities. We plan to explore multi-scale modeling strategies that hierarchically process prediction tasks at different time scales. In addition, we will study how to effectively integrate multi-source external data such as weather forecasts to enhance the model’s ability to predict environmental changes. By combining ensemble learning methods with the long-term memory advantages of deep learning models, we expect to build a more robust long-term AQI prediction system that provides decision support for environmental management and public health policy making.