Global Ionospheric TEC Map Prediction Based on Multichannel ED-PredRNN

Abstract

1. Introduction

2. Data and Data Preprocessing

2.1. Data Description

2.2. Data Alignment

2.3. Data Normalization

2.4. Sample Production

3. Methodology

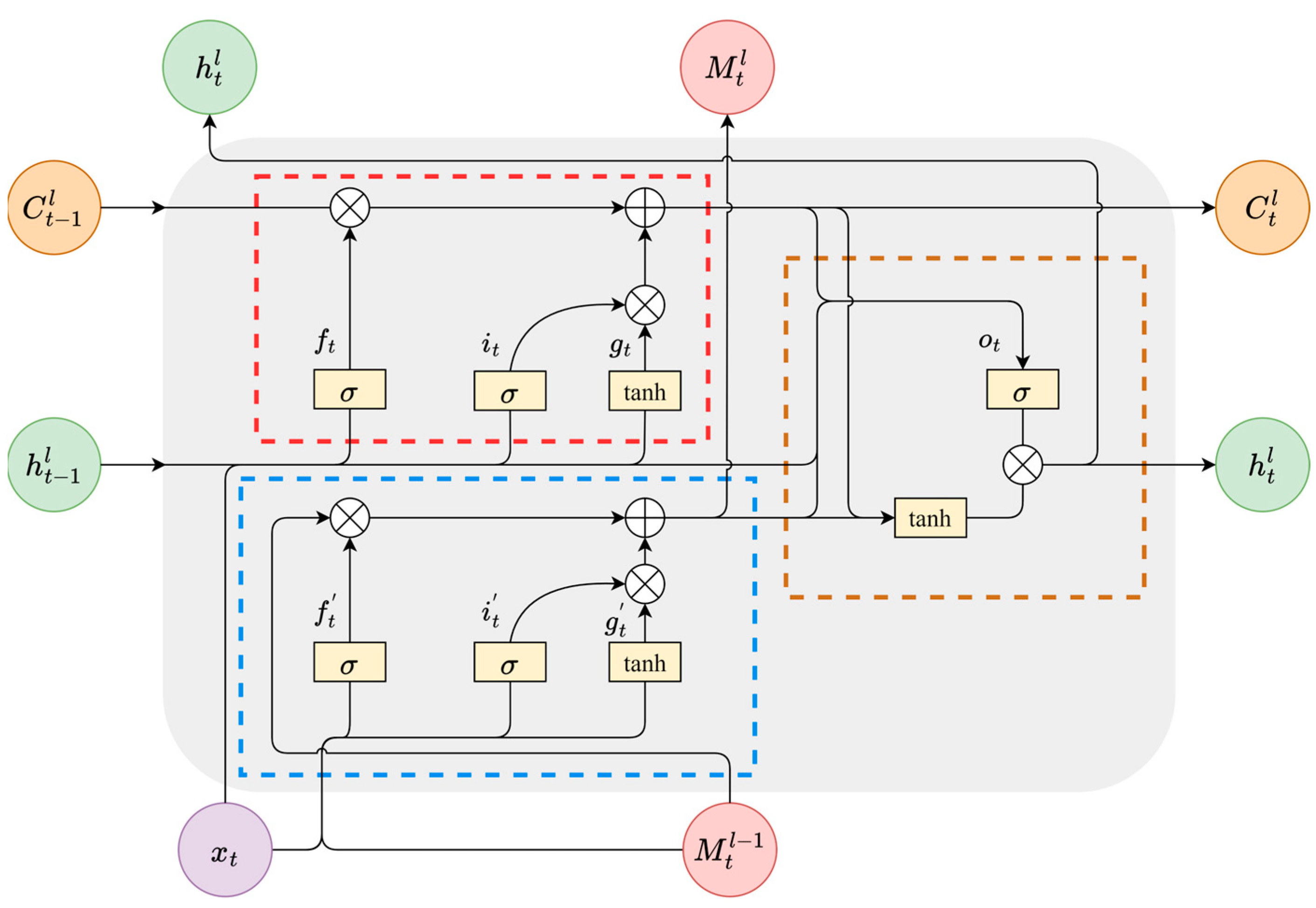

3.1. ST-LSTM

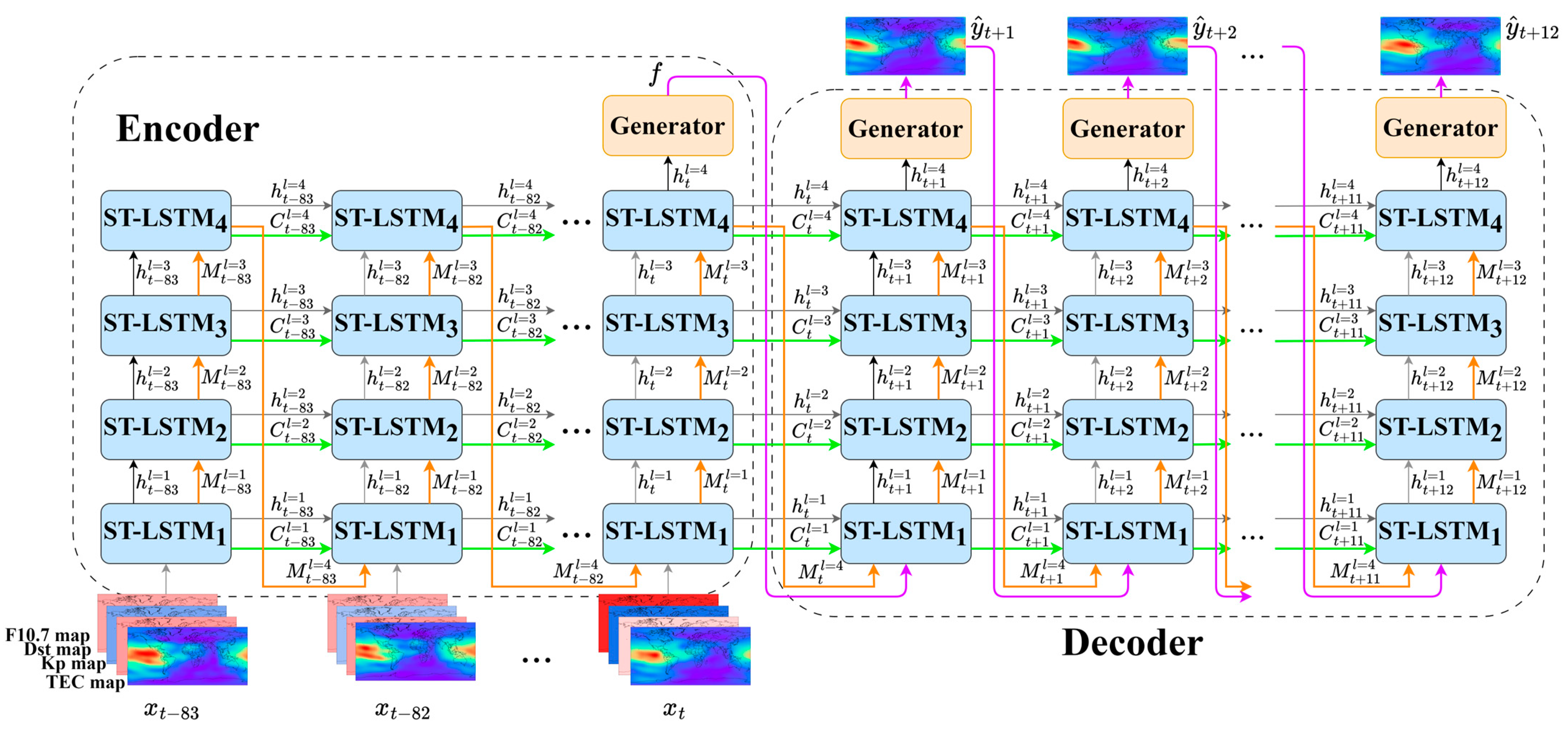

3.2. PredRNN

3.3. Encoder–Decoder Structure

3.4. The Proposed Multichannel ED-PredRNN

4. Experimental Settings

4.1. Evaluation Metrics

4.2. Structure of Comparative Models

4.3. Model Optimization

5. Results and Discussion

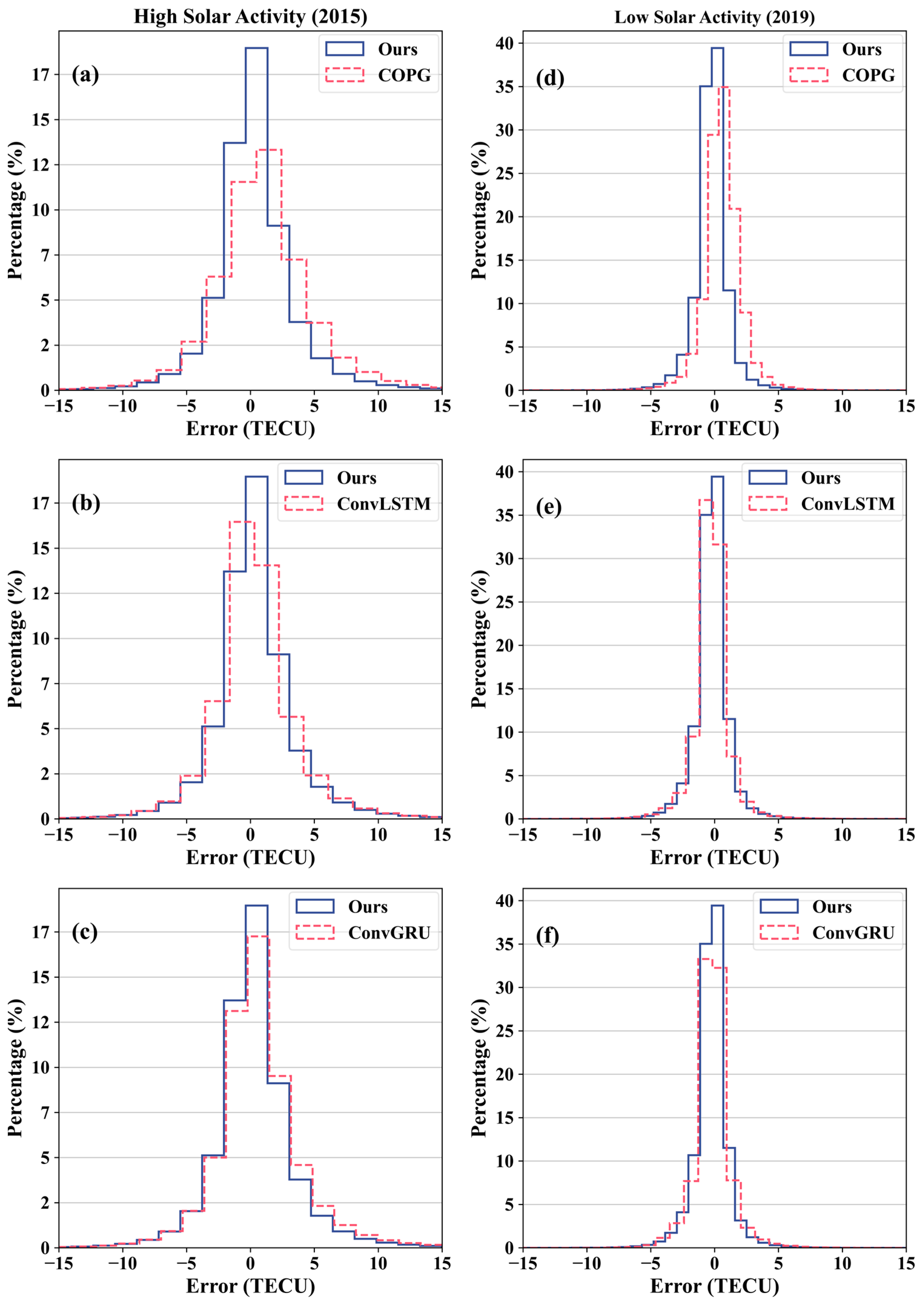

5.1. Overall Comparison Under Different Solar Activities

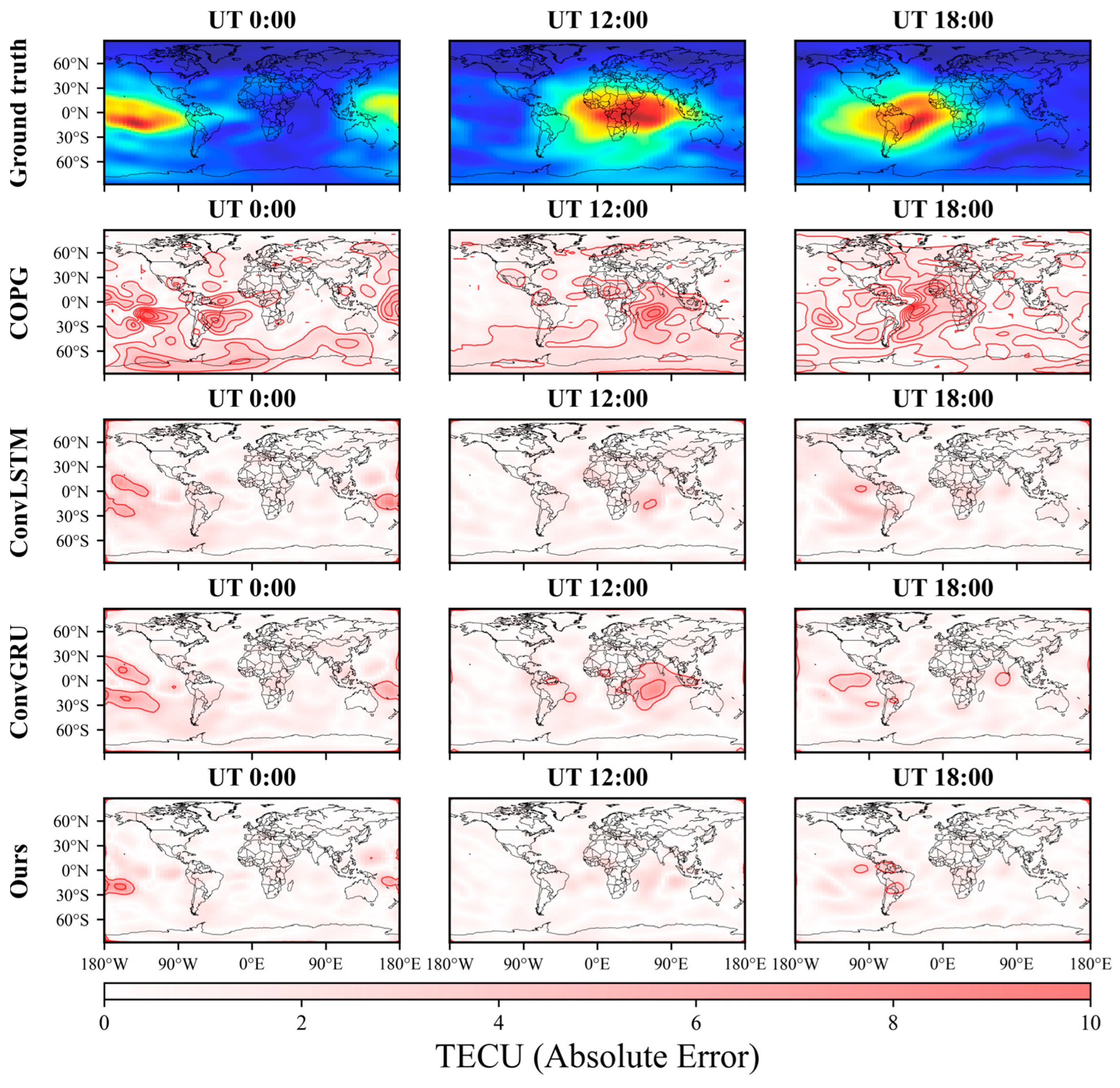

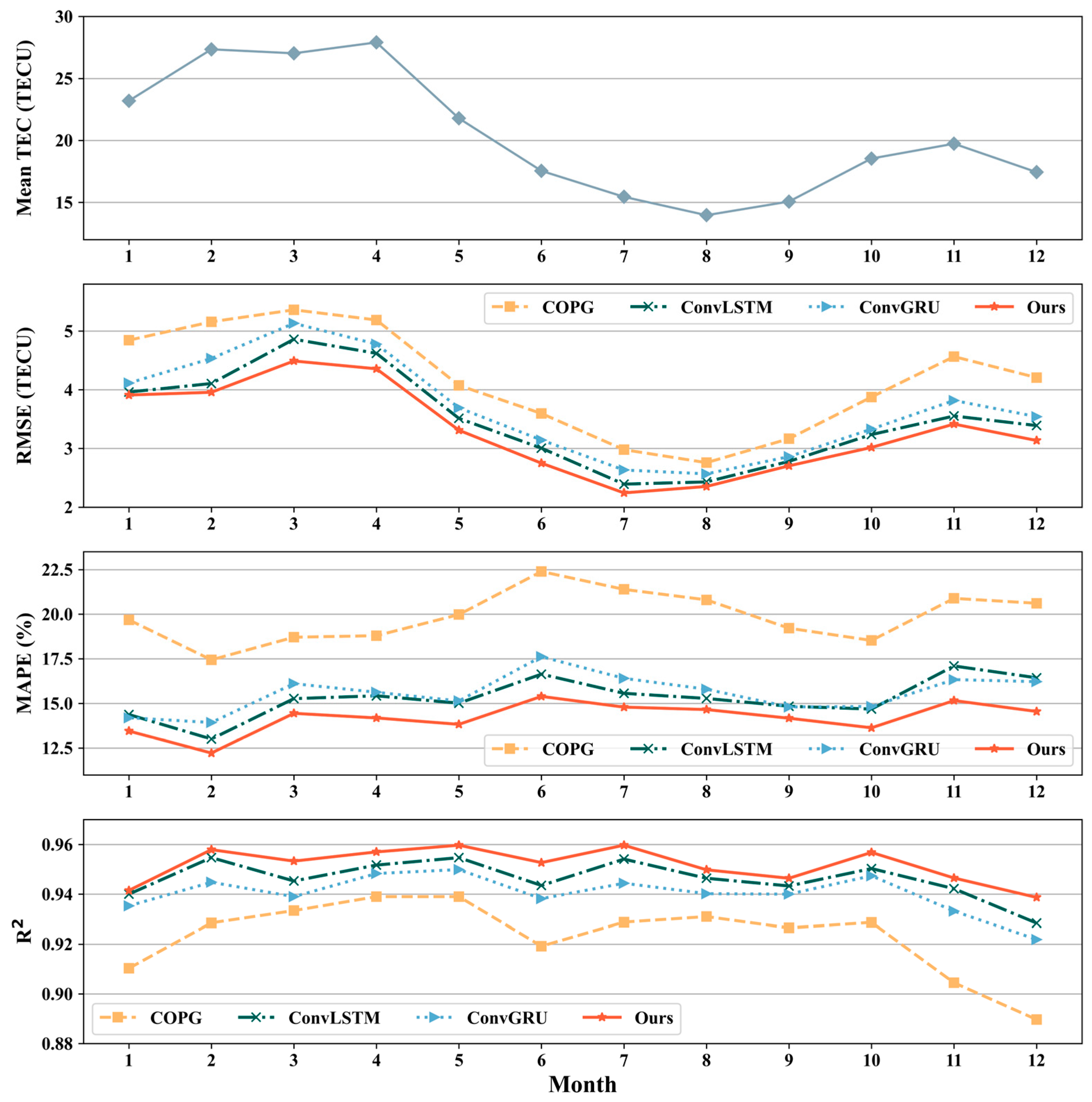

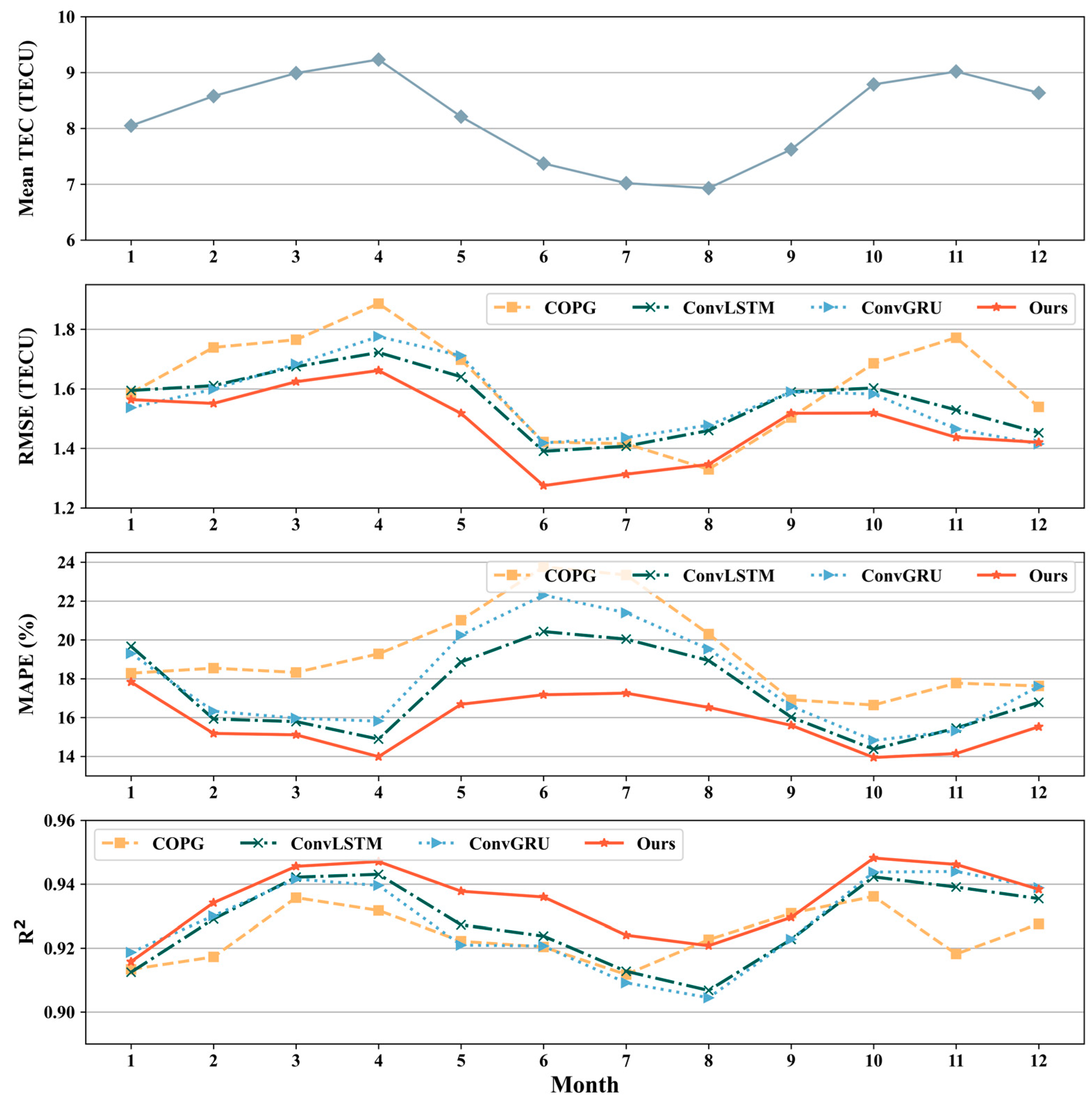

5.2. Comparison at Different Times

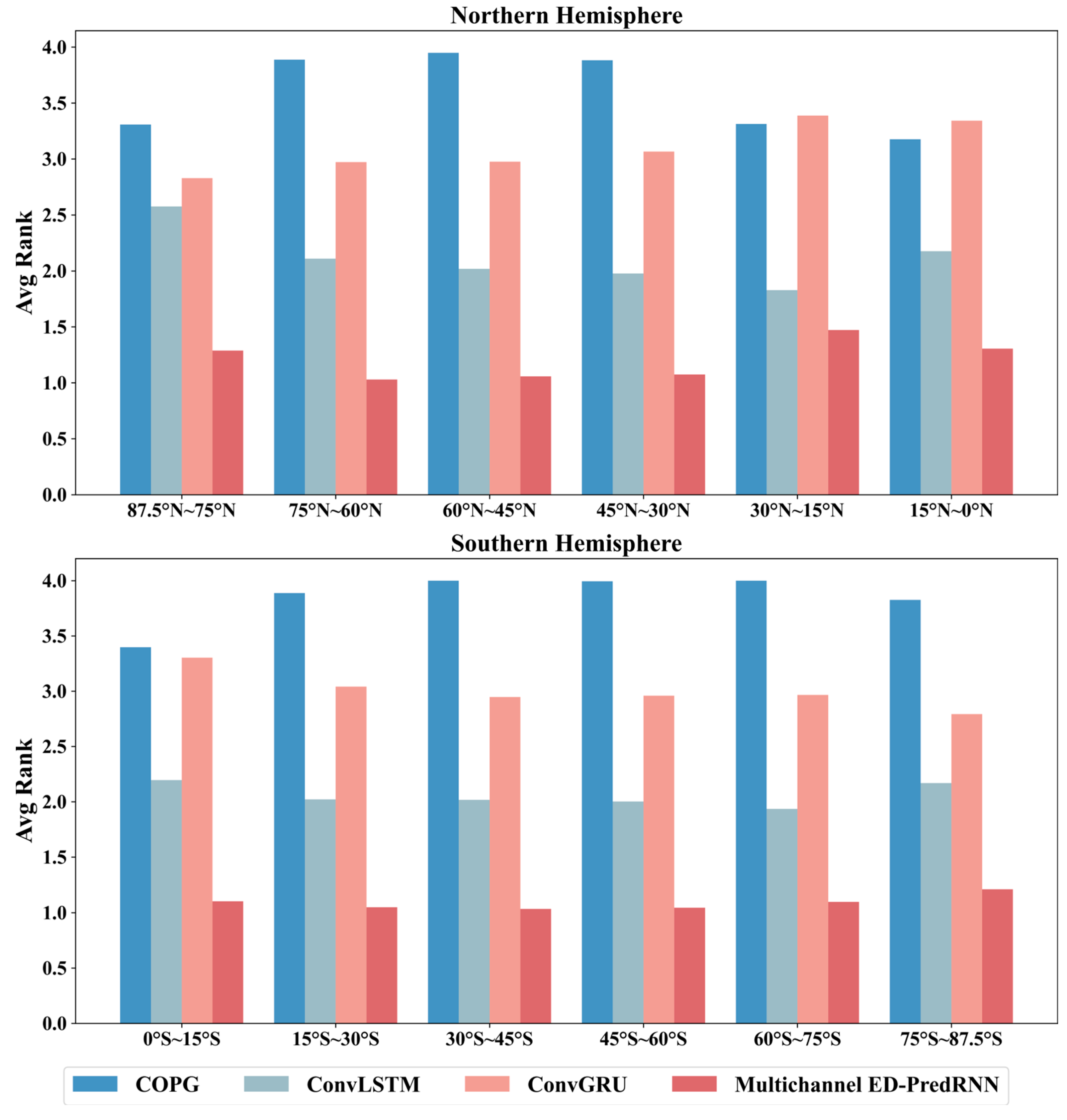

5.3. Comparison at Different Spatial Locations

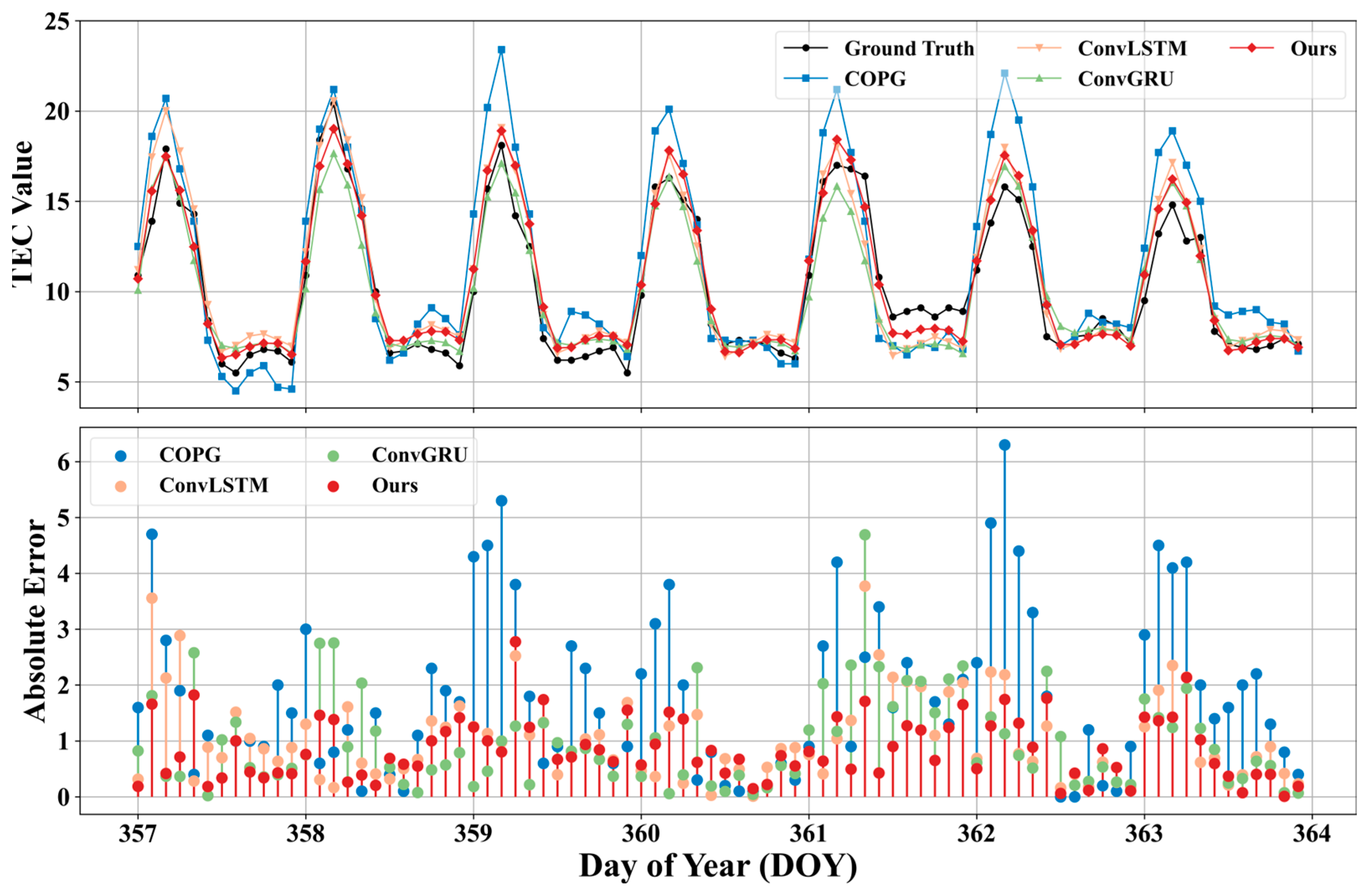

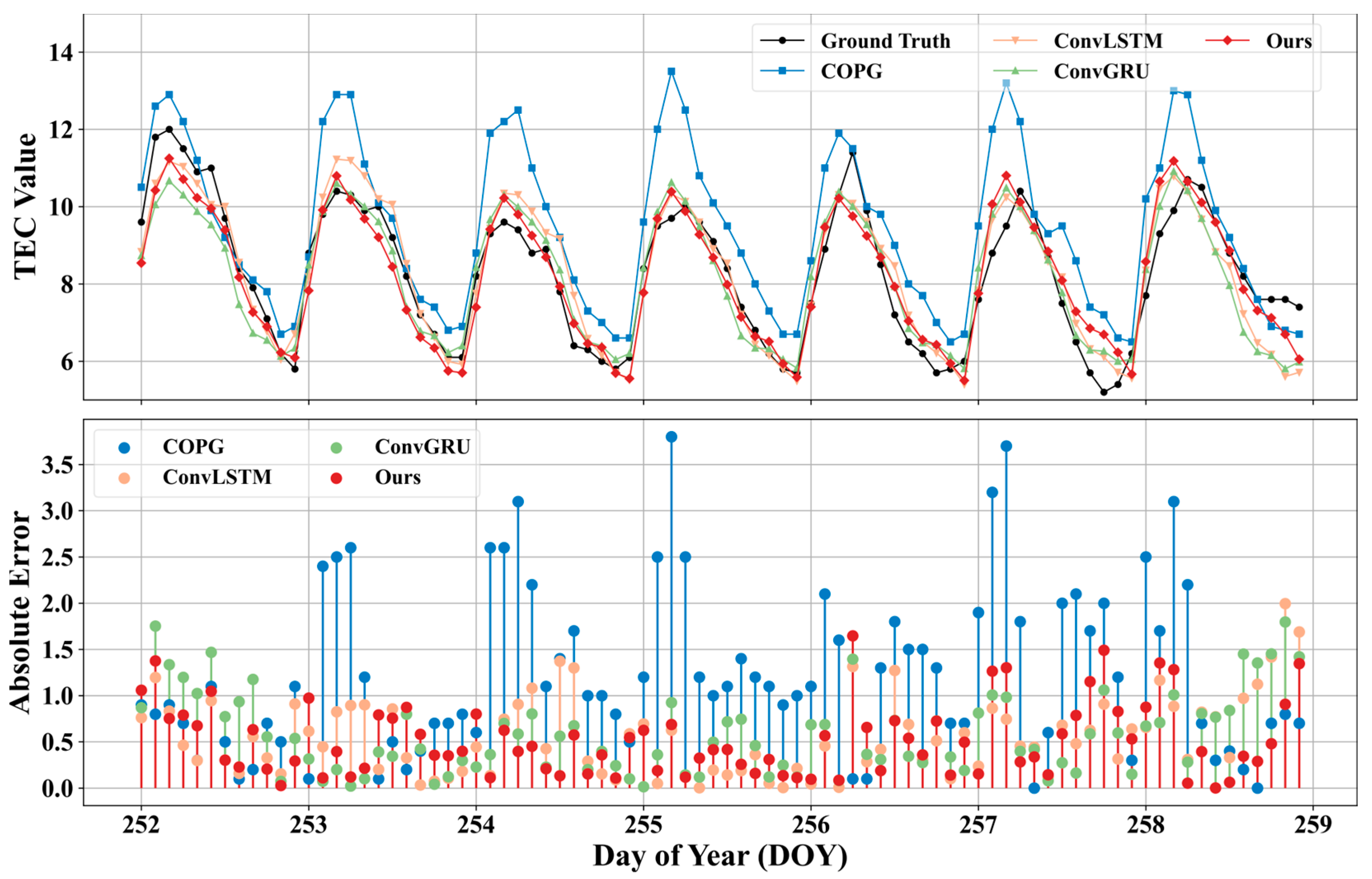

5.4. Single Site Prediction Analysis

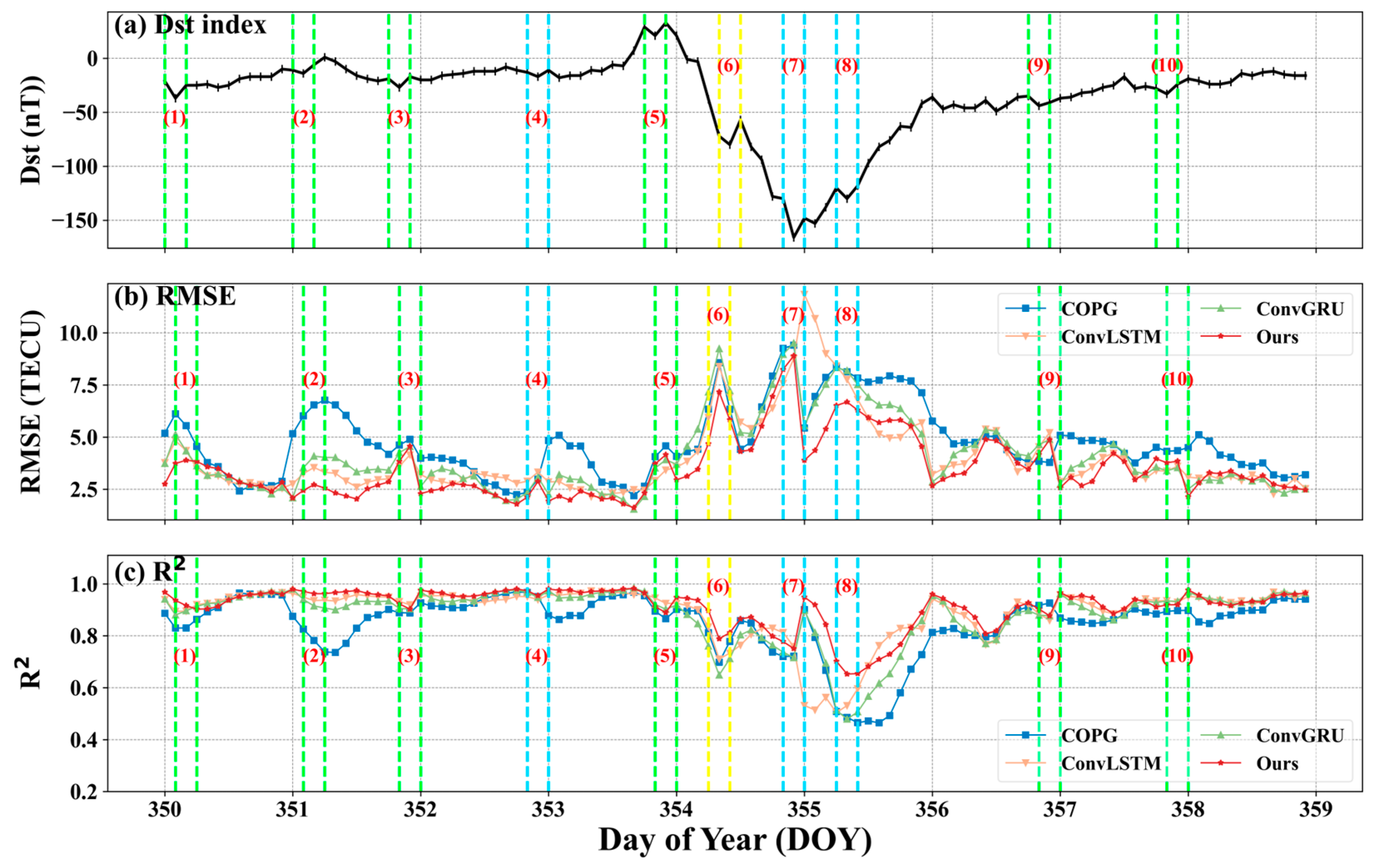

5.5. Comparison Under Extreme Situations

6. Conclusions

- The overall comparison for high and low solar activity years shows that Multichannel ED-PredRNN outperforms COPG, ConvLSTM, and ConvGRU. Relative to these three models, respectively, the RMSE of Multichannel ED-PredRNN is lower by 20.18%, 5.30%, and 10.19% in 2015 (high solar activity), and by 8.34%, 4.87%, and 5.00% in 2019 (low solar activity).

- Comparison at different times indicates that the RMSE of each model is linearly and positively correlated with the monthly mean TEC. Multichannel ED-PredRNN performs best in all 12 months of 2015 and in 8 months of 2019.

- Comparison at different spatial locations shows that Multichannel ED-PredRNN ranks first in all 12 subregions globally.

- Single-site prediction, using Beijing Station as an example, shows that Multichannel ED-PredRNN outperforms the comparative models in the vast majority of cases, especially at TEC peaks.

- The comparison under extreme situations (such as geomagnetic storms) shows that geomagnetic disturbances degrade the predictive performance of all models, and that Multichannel ED-PredRNN is the least affected.
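
The relative reductions quoted in the first conclusion can be checked against the RMSE values reported in the overall comparison table. The following is a minimal sketch, assuming that "decrease" means (baseline − proposed) / baseline; the names `RMSE`, `relative_reduction`, and `reductions_for_year` are illustrative and do not come from the paper.

```python
# RMSE values (TECU) copied from the overall comparison table of this paper.
RMSE = {
    2015: {"COPG": 4.227, "ConvLSTM": 3.563, "ConvGRU": 3.757,
           "Multichannel ED-PredRNN": 3.374},
    2019: {"COPG": 1.618, "ConvLSTM": 1.559, "ConvGRU": 1.561,
           "Multichannel ED-PredRNN": 1.483},
}


def relative_reduction(baseline: float, proposed: float) -> float:
    """Percentage by which `proposed` is lower than `baseline` (assumed definition)."""
    return 100.0 * (baseline - proposed) / baseline


def reductions_for_year(year: int,
                        proposed: str = "Multichannel ED-PredRNN") -> dict:
    """Relative RMSE reduction of the proposed model versus every other model."""
    scores = RMSE[year]
    return {
        model: relative_reduction(value, scores[proposed])
        for model, value in scores.items()
        if model != proposed
    }


if __name__ == "__main__":
    for year in RMSE:
        for model, pct in reductions_for_year(year).items():
            print(f"{year} vs {model}: {pct:.2f}% lower RMSE")
```

Under this definition the computed percentages agree with the figures stated in the conclusion to two decimal places.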

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Set | Solar Activity (Years) | Number of Samples | Total |
|---|---|---|---|
| Training Set | High (2013, 2014) | 723 | 1446 |
| Training Set | Low (2017, 2018) | 723 | 1446 |
| Test Set | High (2015) | 365 | 730 |
| Test Set | Low (2019) | 365 | 730 |

| Model | Hyper-Parameter Setting: Filter | Hyper-Parameter Setting: Kernel Size |
|---|---|---|
| Multichannel ED-PredRNN | 9 | 9 |
| ConvLSTM | 16 | 3 |
| ConvGRU | 12 | 3 |

| Year and Solar Activity | Model | RMSE (TECU) | R² | MAPE (%) |
|---|---|---|---|---|
| 2015, high solar activity | COPG | 4.227 | 0.9321 | 19.89 |
| 2015, high solar activity | ConvLSTM | 3.563 | 0.9518 | 15.32 |
| 2015, high solar activity | ConvGRU | 3.757 | 0.9464 | 15.59 |
| 2015, high solar activity | Multichannel ED-PredRNN | 3.374 | 0.9567 | 14.22 |
| 2019, low solar activity | COPG | 1.618 | 0.9268 | 19.32 |
| 2019, low solar activity | ConvLSTM | 1.559 | 0.9320 | 17.29 |
| 2019, low solar activity | ConvGRU | 1.561 | 0.9319 | 17.96 |
| 2019, low solar activity | Multichannel ED-PredRNN | 1.483 | 0.9385 | 15.76 |
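
The three metrics in the table above (RMSE, R², and MAPE) can be sketched in a few lines. The definitions below are the standard textbook forms and are an assumption here, since the exact formulas used in the paper are not reproduced in this text; in particular, R² is taken as the coefficient of determination, and MAPE assumes that no true value is zero. Function names are illustrative.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error, in the same unit as the inputs (e.g. TECU)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot (assumed definition)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; true values must be nonzero."""
    n = len(y_true)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n


if __name__ == "__main__":
    # Toy values for illustration only; these are not TEC data from the paper.
    observed = [10.0, 20.0, 30.0]
    predicted = [12.0, 18.0, 33.0]
    print(rmse(observed, predicted))
    print(r_squared(observed, predicted))
    print(mape(observed, predicted))
```

For a global TEC map, the inputs would be the flattened grid values of the observed and predicted maps.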

| Solar Activity | | |
|---|---|---|
| High (2015) | 96.06% | |
| High (2015) | 98.71% | |
| High (2015) | 90.18% | |
| Low (2019) | 92.07% | |
| Low (2019) | 80.28% | |
| Low (2019) | 71.87% | |

| Pearson Correlation Coefficient | COPG | ConvLSTM | ConvGRU | Multichannel ED-PredRNN |
|---|---|---|---|---|
| 2015 | 0.9397 | 0.9517 | 0.9651 | 0.9555 |
| 2019 | 0.9284 | 0.6921 | 0.5285 | 0.7363 |
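
A Pearson correlation coefficient such as those in the table above measures how linearly two series vary together, for example a model's monthly error and the monthly mean TEC. The sketch below shows the computation; pairing monthly RMSE with monthly mean TEC is an assumption, and the sample numbers are hypothetical placeholders, not data from the paper.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


if __name__ == "__main__":
    # Hypothetical monthly values for illustration only (not from the paper).
    monthly_mean_tec = [10.0, 12.0, 15.0, 20.0]
    monthly_rmse = [1.0, 1.3, 1.4, 2.1]
    print(f"r = {pearson_r(monthly_mean_tec, monthly_rmse):.4f}")
```

A value close to 1 indicates that the error grows almost linearly with the mean TEC level, while lower values indicate a weaker linear relationship.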

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, H.; Ma, Y.; Le, H.; Li, L.; Zhou, R.; Xiao, J.; Shan, W.; Wu, Z.; Li, Y. Global Ionospheric TEC Map Prediction Based on Multichannel ED-PredRNN. Atmosphere 2025, 16, 422. https://doi.org/10.3390/atmos16040422