Deep Learning for Atmospheric Modeling: A Proof of Concept Using a Fourier Neural Operator on WRF Data to Accelerate Transient Wind Forecasting at Multiple Altitudes

Abstract

1. Introduction

2. Literature Review

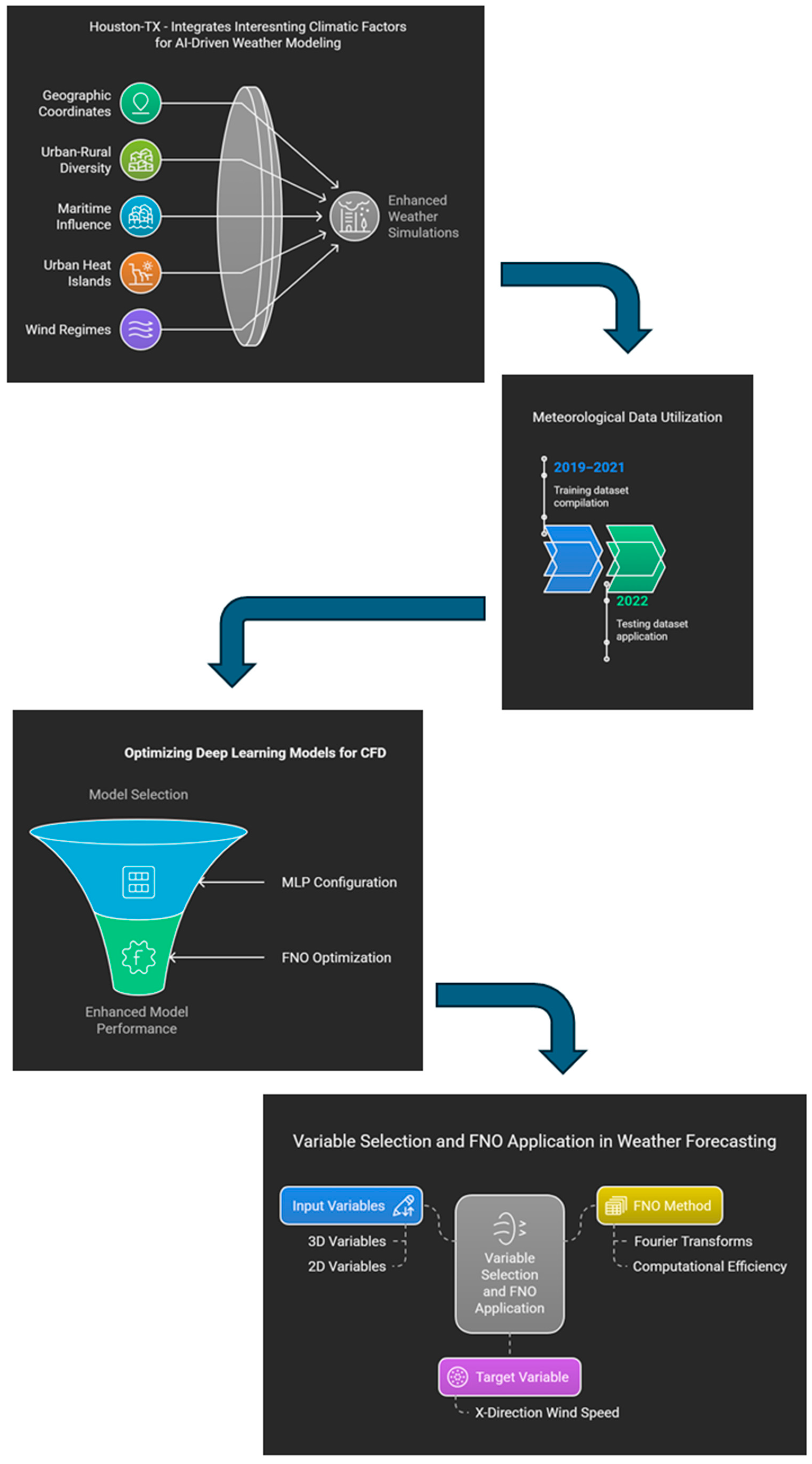

3. Methodology

3.1. The Study Area

3.2. Data Specifications and Variable Definitions

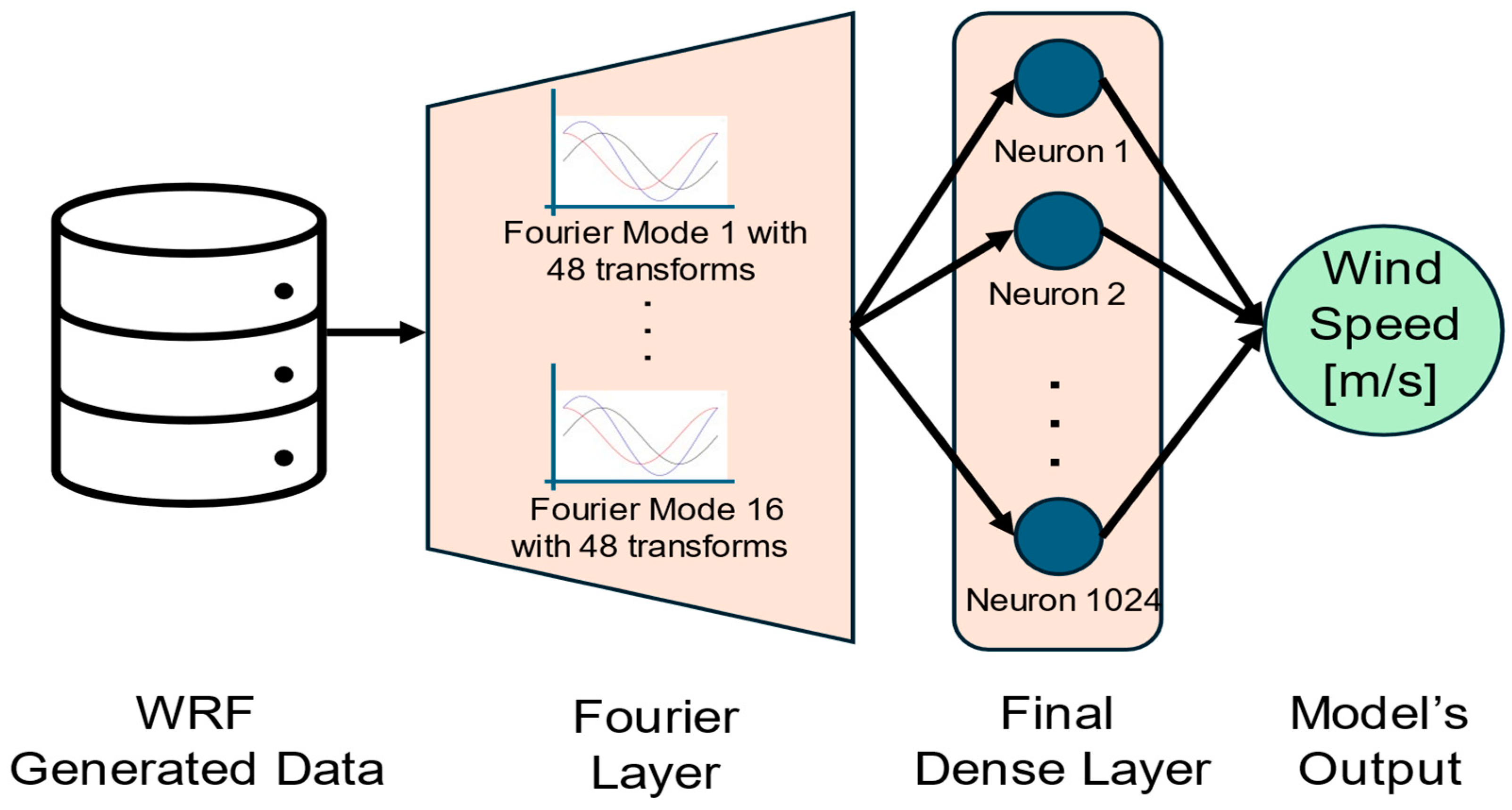

3.3. Applied Deep Learning Models

- The size of the final hidden layer;

- The number of Fourier modes, in powers of 2 (from 1 to 16);

- The width, defined as the number of transforms applied to the Fourier modes;

- The number of stacked Fourier layers, also in powers of 2 (from 1 to 16).
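The Fourier modes, width, and layer count listed above can be made concrete with a minimal NumPy sketch of a one-dimensional Fourier layer. It is not the authors' implementation: the names `spectral_conv_1d`, `fourier_layer`, and `fno_forward` are hypothetical, the weights are random rather than trained, and `width` is interpreted here in the usual FNO sense, as the number of channels carried through each layer, which is an assumption.

```python
import numpy as np

def spectral_conv_1d(x, weights, modes):
    """Spectral convolution: x is (width, n_points) real,
    weights is (width, width, modes) complex (hypothetical layout)."""
    n_points = x.shape[-1]
    x_hat = np.fft.rfft(x, axis=-1)           # (width, n_points // 2 + 1)
    out_hat = np.zeros_like(x_hat)
    # Keep only the lowest `modes` frequencies and mix channels per mode.
    out_hat[:, :modes] = np.einsum("im,iom->om", x_hat[:, :modes], weights)
    return np.fft.irfft(out_hat, n=n_points, axis=-1)

def fourier_layer(x, weights, pointwise, modes):
    """One Fourier layer: spectral path plus pointwise linear path, then ReLU."""
    return np.maximum(spectral_conv_1d(x, weights, modes) + pointwise @ x, 0.0)

def fno_forward(x, params, modes):
    """Apply the stacked Fourier layers in sequence."""
    for weights, pointwise in params:
        x = fourier_layer(x, weights, pointwise, modes)
    return x

# Illustrative values only; modes and layer count are powers of 2.
rng = np.random.default_rng(0)
width, modes, n_layers, n_points = 8, 4, 2, 32
params = [
    (
        rng.normal(size=(width, width, modes))
        + 1j * rng.normal(size=(width, width, modes)),
        rng.normal(size=(width, width)),
    )
    for _ in range(n_layers)
]
x = rng.normal(size=(width, n_points))
y = fno_forward(x, params, modes)
print(y.shape)  # (8, 32)
```

In this sketch, increasing `modes` lets the spectral path represent finer spatial structure, while `n_layers` controls how many times the spectral and pointwise paths are composed.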

- 3D variables: U, V, and W (wind speed components in X, Y, and Z directions) and T (temperature);

- 2D variables: latitude and longitude, together with U10 and V10 (wind speed components at 10 m above the surface), which were used to provide information on surface conditions.
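One way to combine the 3D and 2D variables listed above into a single model input is sketched below. This is an assumption about the preprocessing, not a description of the authors' pipeline: the helper names `destagger` and `build_input` are hypothetical, the arrays are synthetic, and the 2D fields are simply repeated at every vertical level. The sketch relies only on the fact that WRF stores U, V, and W on grids staggered in the X, Y, and Z directions, respectively.

```python
import numpy as np

def destagger(field, axis):
    """Average neighbouring staggered points onto the collocated grid."""
    lo = [slice(None)] * field.ndim
    hi = [slice(None)] * field.ndim
    lo[axis] = slice(0, -1)
    hi[axis] = slice(1, None)
    return 0.5 * (field[tuple(lo)] + field[tuple(hi)])

def build_input(u, v, w, t, xlat, xlong, u10, v10):
    """Stack 3D fields and broadcast 2D fields into (channels, nz, ny, nx)."""
    u_m = destagger(u, axis=2)   # (nz, ny, nx + 1) -> (nz, ny, nx)
    v_m = destagger(v, axis=1)   # (nz, ny + 1, nx) -> (nz, ny, nx)
    w_m = destagger(w, axis=0)   # (nz + 1, ny, nx) -> (nz, ny, nx)
    nz = t.shape[0]
    # Repeat each 2D field at every vertical level (assumed design choice).
    surface = [np.broadcast_to(f, (nz,) + f.shape) for f in (xlat, xlong, u10, v10)]
    return np.stack([u_m, v_m, w_m, t, *surface], axis=0)

# Synthetic example with a tiny grid.
rng = np.random.default_rng(1)
nz, ny, nx = 3, 4, 5
sample = build_input(
    u=rng.normal(size=(nz, ny, nx + 1)),
    v=rng.normal(size=(nz, ny + 1, nx)),
    w=rng.normal(size=(nz + 1, ny, nx)),
    t=rng.normal(size=(nz, ny, nx)),
    xlat=rng.normal(size=(ny, nx)),
    xlong=rng.normal(size=(ny, nx)),
    u10=rng.normal(size=(ny, nx)),
    v10=rng.normal(size=(ny, nx)),
)
print(sample.shape)  # (8, 3, 4, 5)
```

Channels 0 to 3 hold U, V, W, and T; channels 4 to 7 hold latitude, longitude, U10, and V10.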

3.4. Variable Selection

4. Results and Discussion

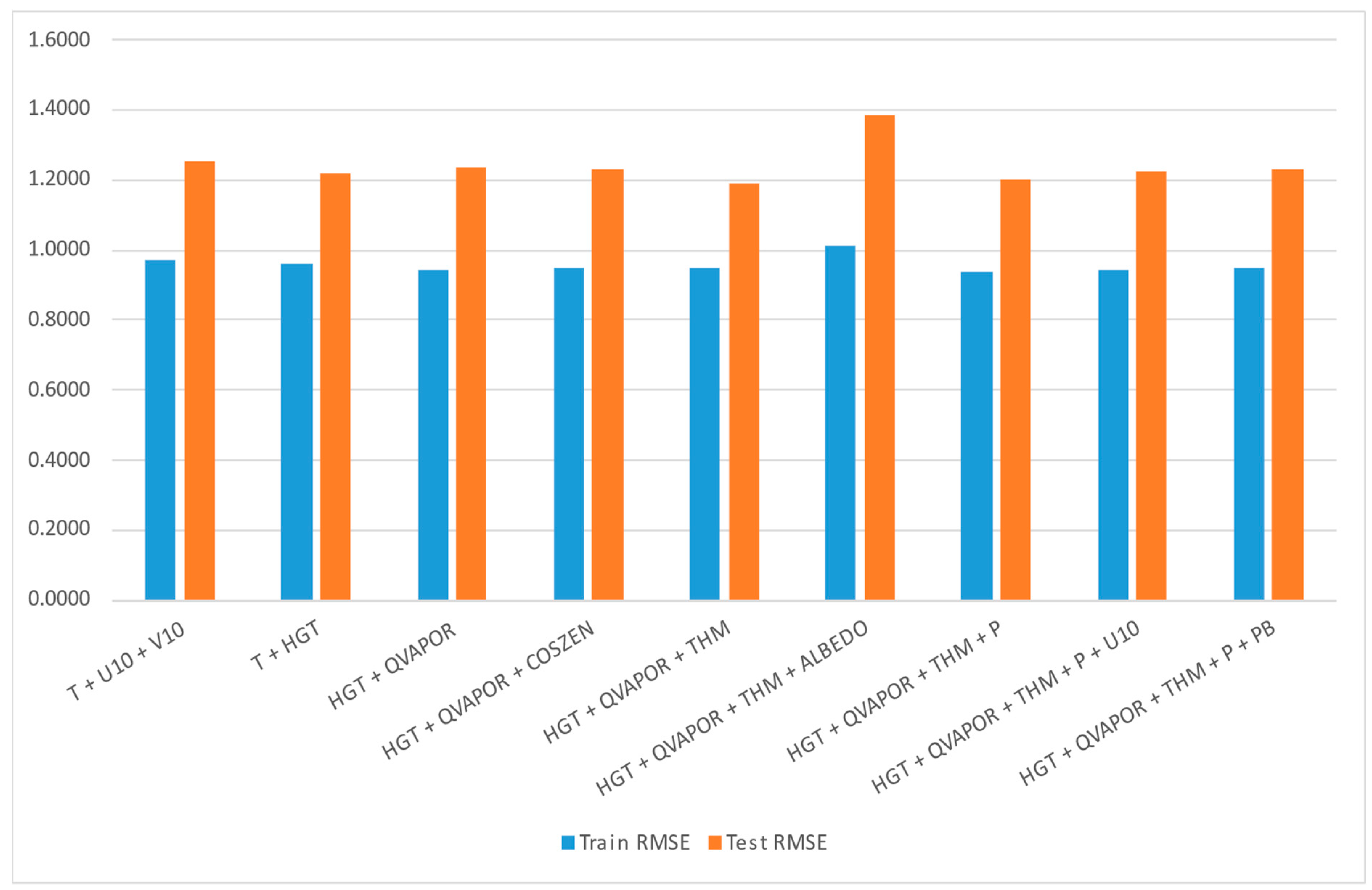

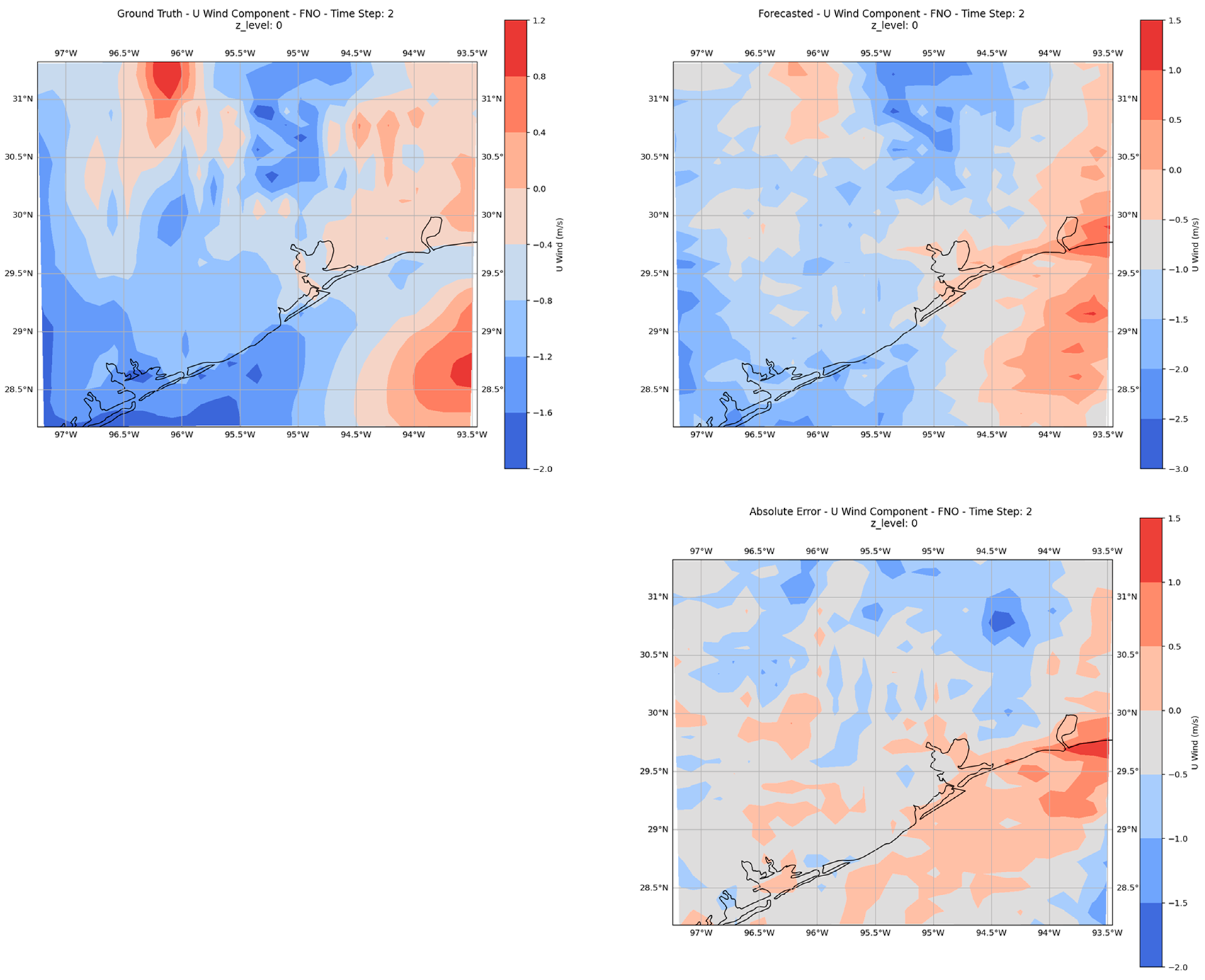

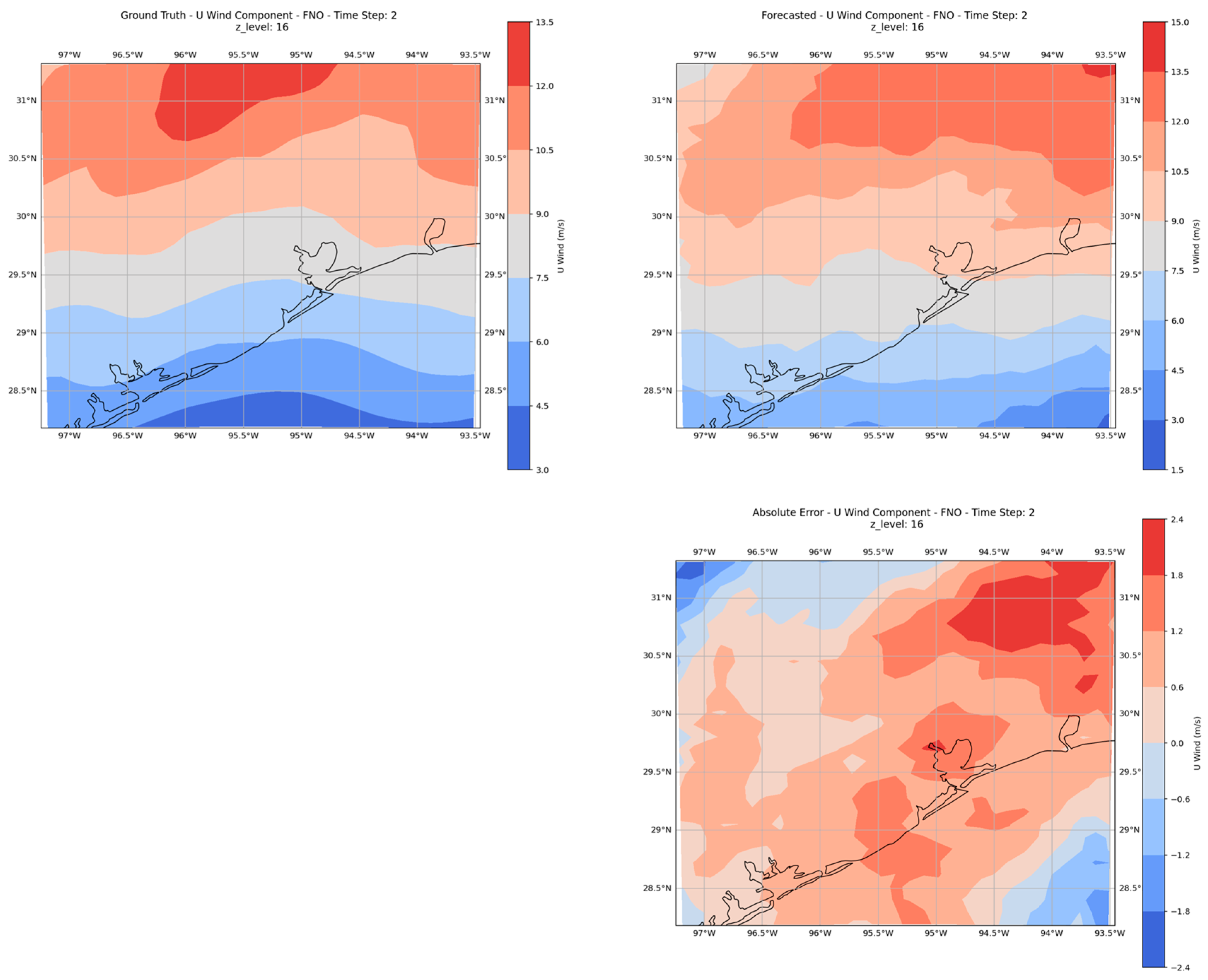

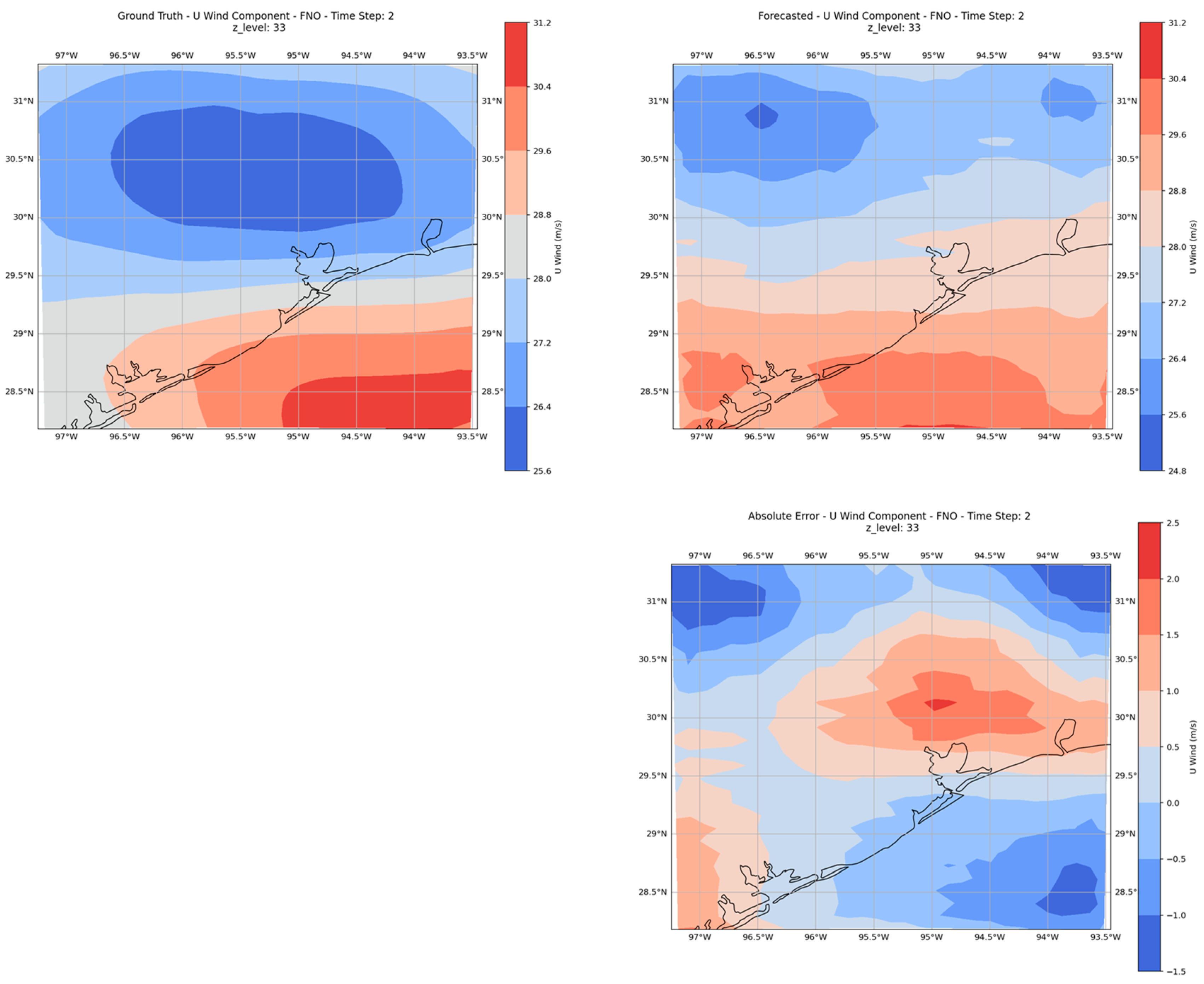

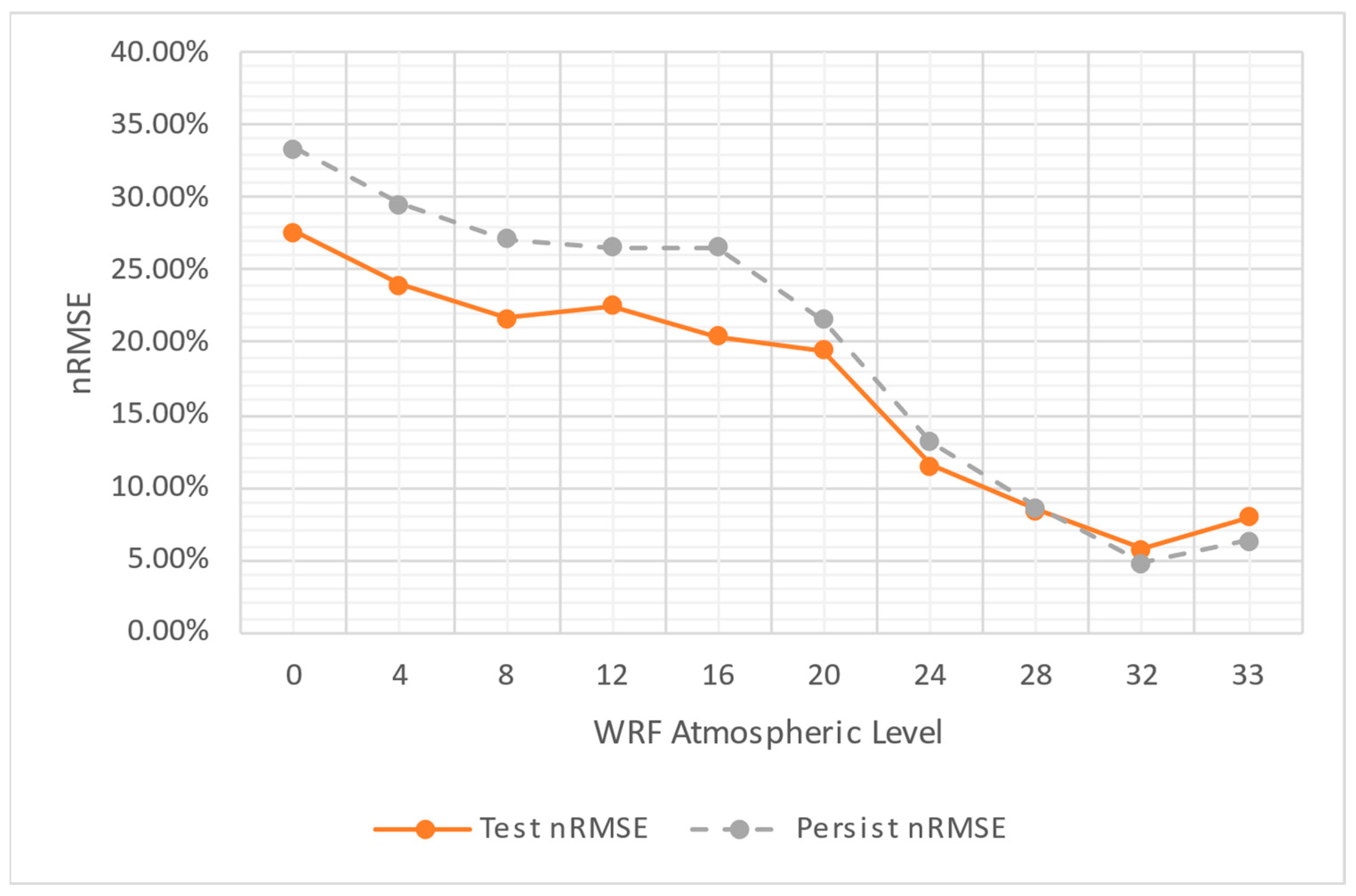

4.1. Global Model Performance

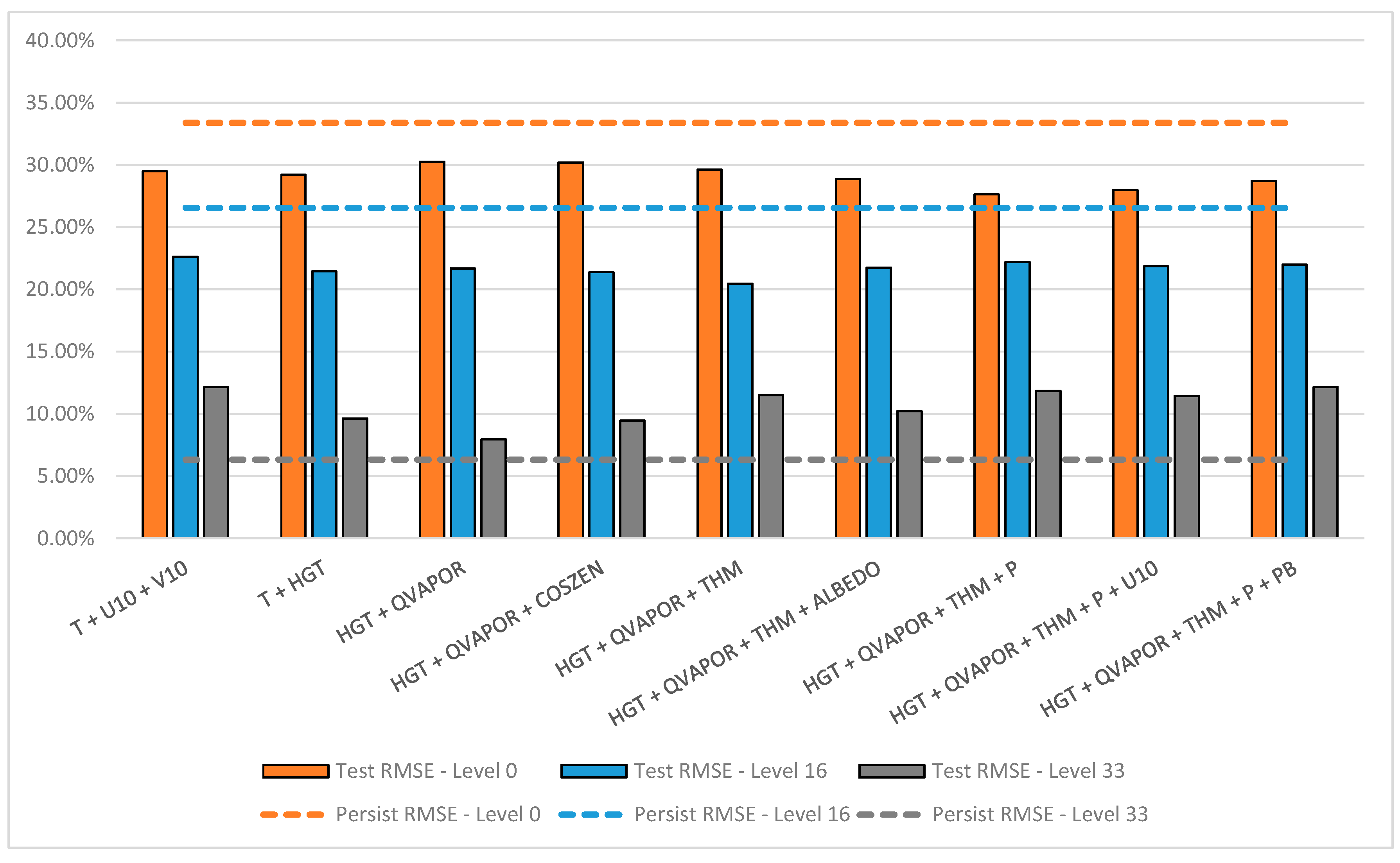

- Lack of height-specific modeling: The persistence model benefits from the natural continuity of the atmospheric flow, whereas the deep learning model needs to generalize across all heights. Studies such as [58] suggest that stratified modeling, in which different models are trained for different atmospheric layers, can significantly enhance predictive performance.

- Boundary layer effects: Near-surface wind flows are influenced by topography, land use, and temperature variations, which cause strong local gradients and turbulence [59]. The inability of the global model to properly learn these effects might be a reason why it underperformed compared to persistence at Z-level 0.

- Predictability of atmospheric layers: The results at Z-level 16 suggest that the atmosphere is more predictable at mid-altitudes, in line with previous findings of lower turbulence and more stable wind regimes at these heights [60]. This would explain why persistence and the global model performed similarly at this level.

- Impact of training data and physical constraints: The FNO model was trained using PINN principles, yet its ability to fully integrate the governing physics of the atmosphere might still be limited compared to conventional numerical weather prediction (NWP) models. Hybrid approaches that combine FNO architectures with physics-based constraints have shown promise in recent literature [61].
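The comparison against persistence at individual Z-levels discussed above can be illustrated with a short sketch. The function names are hypothetical and the data are synthetic. The normalization of the RMSE by the range of the observations at each level is an assumption, since the exact nRMSE definition used in the study is not reproduced here.

```python
import numpy as np

def persistence_forecast(series, lead):
    """Persistence: the forecast for time t + lead is the field at time t.
    Returns aligned (prediction, observation) arrays."""
    return series[:-lead], series[lead:]

def nrmse_per_level(pred, obs):
    """nRMSE for each Z-level; arrays are (time, nz, ny, nx).
    Normalized by the observed range at each level (assumed definition)."""
    axes = (0, 2, 3)
    rmse = np.sqrt(np.mean((pred - obs) ** 2, axis=axes))
    span = obs.max(axis=axes) - obs.min(axis=axes)
    return rmse / span

# Synthetic series: level 0 flips sign every step, level 1 drifts slowly.
n_times, ny, nx = 5, 2, 2
steps = np.arange(n_times, dtype=float)
series = np.empty((n_times, 2, ny, nx))
series[:, 0] = ((-1.0) ** steps)[:, None, None]
series[:, 1] = (0.1 * steps)[:, None, None]

pred, obs = persistence_forecast(series, lead=1)
scores = nrmse_per_level(pred, obs)
print(scores)
```

In this toy case, persistence scores far worse at the rapidly changing level than at the slowly varying one, which mirrors the qualitative argument that a persistence baseline is hardest to beat where the flow evolves smoothly.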

4.2. Specific Z-Levels Ad Hoc Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

List of Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| CFD | computational fluid dynamics |
| DL | deep learning |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| ERA5 | ECMWF Reanalysis v5 |
| FNO | Fourier neural operator |
| ML | machine learning |
| MLP | multilayer perceptron |
| NCAR | National Center for Atmospheric Research |
| nRMSE | normalized root mean squared error |
| NWP | numerical weather prediction |
| ODE | ordinary differential equation |
| OpenFOAM | open field operation and manipulation |
| PDE | partial differential equation |
| PINN | physics-informed neural network |
| RMSE | root mean squared error |
| WRF | weather research and forecasting |

References

1. Cabrera, D.; Quinteros, M.; Cerrada, M.; Sánchez, R.-V.; Guallpa, M.; Sancho, F.; Li, C. Rainfall Forecasting Using a Bayesian Framework and Long Short-Term Memory Multi-Model Estimation Based on an Hourly Meteorological Monitoring Network. Case of Study: Andean Ecuadorian Tropical City. Earth Sci. Inform. 2023, 16, 1373–1388.

2. Tahir Bahadur, F.; Rasool Shah, S.; Rao Nidamanuri, R. Air Pollution Monitoring, and Modelling: An Overview. Environ. Forensics 2024, 25, 309–336.

3. Roy, C.; Rahman, M.R.; Ghosh, M.K.; Biswas, S. Tropical Cyclone Intensity Forecasting in the Bay of Bengal Using a Biologically Inspired Computational Model. Model. Earth Syst. Environ. 2024, 10, 523–537.

4. Singh, H.; Ang, L.-M.; Lewis, T.; Paudyal, D.; Acuna, M.; Srivastava, P.K.; Srivastava, S.K. Trending and Emerging Prospects of Physics-Based and ML-Based Wildfire Spread Models: A Comprehensive Review. J. For. Res. 2024, 35, 135.

5. Kumar, V.; Sharma, K.V.; Caloiero, T.; Mehta, D.J.; Singh, K. Comprehensive Overview of Flood Modeling Approaches: A Review of Recent Advances. Hydrology 2023, 10, 141.

6. Wu, J.; Wang, Z.; Dong, J.; Cui, X.; Tao, S.; Chen, X. Robust Runoff Prediction with Explainable Artificial Intelligence and Meteorological Variables From Deep Learning Ensemble Model. Water Resour. Res. 2023, 59, e2023WR035676.

7. Mitreska Jovanovska, E.; Batz, V.; Lameski, P.; Zdravevski, E.; Herzog, M.A.; Trajkovik, V. Methods for Urban Air Pollution Measurement and Forecasting: Challenges, Opportunities, and Solutions. Atmosphere 2023, 14, 1441.

8. Kalajdjieski, J.; Trivodaliev, K.; Mirceva, G.; Kalajdziski, S.; Gievska, S. A Complete Air Pollution Monitoring and Prediction Framework. IEEE Access 2023, 11, 88730–88744.

9. Federico, S.; Torcasio, R.C.; Popova, J.; Sokol, Z.; Pop, L.; Lagasio, M.; Lynn, B.H.; Puca, S.; Dietrich, S. Improving the Lightning Forecast with the WRF Model and Lightning Data Assimilation: Results of a Two-Seasons Numerical Experiment over Italy. Atmos. Res. 2024, 304, 107382.

10. Paul, D.J.; Janani, S.P.; Ancy Jenifer, J. Advanced Weather Monitoring and Disaster Mitigation System. In Proceedings of the 2024 International Conference on Cognitive Robotics and Intelligent Systems (ICC-ROBINS), Coimbatore, India, 17–19 April 2024; pp. 540–546.

11. Leung, X.Y.; Islam, R.M.; Adhami, M.; Ilic, D.; McDonald, L.; Palawaththa, S.; Diug, B.; Munshi, S.U.; Karim, M.N. A Systematic Review of Dengue Outbreak Prediction Models: Current Scenario and Future Directions. PLoS Negl. Trop. Dis. 2023, 17, e0010631.

12. Dieng, M.D.B.; Tompkins, A.M.; Arnault, J.; Sié, A.; Fersch, B.; Laux, P.; Schwarz, M.; Zabré, P.; Munga, S.; Khagayi, S.; et al. Process-Based Atmosphere-Hydrology-Malaria Modeling: Performance for Spatio-Temporal Malaria Transmission Dynamics in Sub-Saharan Africa. Water Resour. Res. 2024, 60, e2023WR034975.

13. Abrego-Perez, A.L.; Pacheco-Carvajal, N.; Diaz-Jimenez, M.C. Forecasting Agricultural Financial Weather Risk Using PCA and SSA in an Index Insurance Model in Low-Income Economies. Appl. Sci. 2023, 13, 2425.

14. Costa Rocha, P.A.; Oliveira Santos, V.; Scott, J.; Van Griensven Thé, J.; Gharabaghi, B. Application of Graph Neural Networks to Forecast Urban Flood Events: The Case Study of the 2013 Flood of the Bow River, Calgary, Canada. Int. J. River Basin Manag. 2024, 1–18.

15. Luo, Z.; Liu, J.; Zhang, S.; Shao, W.; Zhang, L. Research on Climate Change in Qinghai Lake Basin Based on WRF and CMIP6. Remote Sens. 2023, 15, 4379.

16. Kadaverugu, R. A Comparison between WRF-Simulated and Observed Surface Meteorological Variables across Varying Land Cover and Urbanization in South-Central India. Earth Sci. Inform. 2023, 16, 147–163.

17. Chen, L.; Chen, Z.; Zhang, Y.; Liu, Y.; Osman, A.I.; Farghali, M.; Hua, J.; Al-Fatesh, A.; Ihara, I.; Rooney, D.W.; et al. Artificial Intelligence-Based Solutions for Climate Change: A Review. Environ. Chem. Lett. 2023, 21, 2525–2557.

18. Powers, J.G.; Klemp, J.B.; Skamarock, W.C.; Davis, C.A.; Dudhia, J.; Gill, D.O.; Coen, J.L.; Gochis, D.J.; Ahmadov, R.; Peckham, S.E.; et al. The Weather Research and Forecasting Model: Overview, System Efforts, and Future Directions. Bull. Am. Meteorol. Soc. 2017, 98, 1717–1737.

19. Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.M.; Duda, M.G.; Huang, X.-Y.; Wang, W.; Powers, J.G. A Description of the Advanced Research WRF Version 3. NCAR Tech. Note 2008, 475, 10–5065.

20. Sosa-Tinoco, I.; Prósper, M.A.; Miguez-Macho, G. Development of a Solar Energy Forecasting System for Two Real Solar Plants Based on WRF Solar with Aerosol Input and a Solar Plant Model. Sol. Energy 2022, 240, 329–341.

21. Sarvestan, R.; Karami, M.; Javidi Sabbaghian, R. Evaluation of Meteorological Microphysical Schemas Based on the WRF Model for Simulation of Rainfall in the Northeastern Region of Iran. J. Hydrol. Reg. Stud. 2023, 50, 101524.

22. Stathopoulos, C.; Chaniotis, I.; Patlakas, P. Assimilating Aeolus Satellite Wind Data on a Regional Level: Application in a Mediterranean Cyclone Using the WRF Model. Atmosphere 2023, 14, 1811.

23. Hallaji, H.; Bohloul, M.R.; Peyghambarzadeh, S.M.; Azizi, S. Measurement of Air Pollutants Concentrations from Stacks of Petrochemical Company and Dispersion Modeling by AERMOD Coupled with WRF Model. Int. J. Environ. Sci. Technol. 2023, 20, 7217–7236.

24. López-Noreña, A.I.; Berná, L.; Tames, M.F.; Millán, E.N.; Puliafito, S.E.; Fernandez, R.P. Influence of Emission Inventory Resolution on the Modeled Spatio-Temporal Distribution of Air Pollutants in Buenos Aires, Argentina, Using WRF-Chem. Atmos. Environ. 2022, 269, 118839.

25. Qi, Q.; Wang, S.; Zhao, H.; Kota, S.H.; Zhang, H. Rice Yield Losses Due to O3 Pollution in China from 2013 to 2020 Based on the WRF-CMAQ Model. J. Clean. Prod. 2023, 401, 136801.

26. Carneiro, F.O.M.; Moura, L.F.M.; Costa Rocha, P.A.; Pontes Lima, R.J.; Ismail, K.A.R. Application and Analysis of the Moving Mesh Algorithm AMI in a Small Scale HAWT: Validation with Field Test’s Results against the Frozen Rotor Approach. Energy 2019, 171, 819–829.

27. Ajani, C.K.; Zhu, Z.; Sun, D.-W. Recent Advances in Multiscale CFD Modelling of Cooling Processes and Systems for the Agrifood Industry. Crit. Rev. Food Sci. Nutr. 2021, 61, 2455–2470.

28. Costa Rocha, P.A.; Johnston, S.J.; Oliveira Santos, V.; Aliabadi, A.A.; Thé, J.V.G.; Gharabaghi, B. Deep Neural Network Modeling for CFD Simulations: Benchmarking the Fourier Neural Operator on the Lid-Driven Cavity Case. Appl. Sci. 2023, 13, 3165.

29. Kehrein, P.; Van Loosdrecht, M.; Osseweijer, P.; Posada, J.; Dewulf, J. The SPPD-WRF Framework: A Novel and Holistic Methodology for Strategical Planning and Process Design of Water Resource Factories. Sustainability 2020, 12, 4168.

30. Pasche, O.C.; Wider, J.; Zhang, Z.; Zscheischler, J.; Engelke, S. Validating Deep Learning Weather Forecast Models on Recent High-Impact Extreme Events. Artif. Intell. Earth Syst. 2025, 4, e240033.

31. Zhang, H.; Liu, Y.; Zhang, C.; Li, N. Machine Learning Methods for Weather Forecasting: A Survey. Atmosphere 2025, 16, 82.

32. Bonavita, M. On Some Limitations of Current Machine Learning Weather Prediction Models. Geophys. Res. Lett. 2024, 51, e2023GL107377.

33. Cai, S.; Wang, Z.; Wang, S.; Perdikaris, P.; Karniadakis, G.E. Physics-Informed Neural Networks for Heat Transfer Problems. J. Heat Transf. 2021, 143, 060801.

34. Osorio, J.D.; Florio, M.D.; Hovsapian, R.; Chryssostomidis, C.; Karniadakis, G.E. Physics-Informed Machine Learning for Solar-Thermal Power Systems. Energy Convers. Manag. 2025, 327, 119542.

35. McClenny, L.D.; Braga-Neto, U.M. Self-Adaptive Physics-Informed Neural Networks. J. Comput. Phys. 2023, 474, 111722.

36. Zou, Z.; Meng, X.; Karniadakis, G.E. Uncertainty Quantification for Noisy Inputs–Outputs in Physics-Informed Neural Networks and Neural Operators. Comput. Methods Appl. Mech. Eng. 2025, 433, 117479.

37. Fang, Z. A High-Efficient Hybrid Physics-Informed Neural Networks Based on Convolutional Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 5514–5526.

38. Beck, H.E.; Zimmermann, N.E.; McVicar, T.R.; Vergopolan, N.; Berg, A.; Wood, E.F. Present and Future Köppen-Geiger Climate Classification Maps at 1-Km Resolution. Sci. Data 2018, 5, 180214.

39. Kyropoulou, M. Thermal Comfort and Indoor Air Quality in Higher Education: A Case Study in Houston, TX, during Mid-Season. J. Phys. Conf. Ser. 2023, 2600, 102022.

40. Google LLC Google Earth. Available online: https://earth.google.com/earth/d/1QR_q78kghHqoVdYX6M2lsY-F3mK0wmkp?usp=sharing (accessed on 28 January 2025).

41. Li, X.; Fu, D.; Nielsen-Gammon, J.; Gangrade, S.; Kao, S.-C.; Chang, P.; Morales Hernández, M.; Voisin, N.; Zhang, Z.; Gao, H. Impacts of Climate Change on Future Hurricane Induced Rainfall and Flooding in a Coastal Watershed: A Case Study on Hurricane Harvey. J. Hydrol. 2023, 616, 128774.

42. Chang, H.; Ross, A.R. Climate Change, Urbanization, and Water Resources: Towards Resilient Urban Water Resource Management; Springer International Publishing: Cham, Switzerland, 2024; ISBN 978-3-031-49629-5.

43. Chakraborty, T.; Hsu, A.; Manya, D.; Sheriff, G. Disproportionately Higher Exposure to Urban Heat in Lower-Income Neighborhoods: A Multi-City Perspective. Environ. Res. Lett. 2019, 14, 105003.

44. Mohammad Harmay, N.S.; Choi, M. The Urban Heat Island and Thermal Heat Stress Correlate with Climate Dynamics and Energy Budget Variations in Multiple Urban Environments. Sustain. Cities Soc. 2023, 91, 104422.

45. Darby, L.S. Cluster Analysis of Surface Winds in Houston, Texas, and the Impact of Wind Patterns on Ozone. J. Appl. Meteorol. 2005, 44, 1788–1806.

46. Oliveira Santos, V.; Costa Rocha, P.A.; Scott, J.; Van Griensven Thé, J.; Gharabaghi, B. Spatiotemporal Air Pollution Forecasting in Houston-TX: A Case Study for Ozone Using Deep Graph Neural Networks. Atmosphere 2023, 14, 308.

47. Patel, Y. Levelized Cost of Repurposing Oil and Gas Infrastructure for Clean Energy in the Gulf of Mexico. Renew. Sustain. Energy Rev. 2025, 209, 115115.

48. Lamer, K.; Mages, Z.; Treserras, B.P.; Walter, P.; Zhu, Z.; Rapp, A.D.; Nowotarski, C.J.; Brooks, S.D.; Flynn, J.; Sharma, M.; et al. Spatially Distributed Atmospheric Boundary Layer Properties in Houston—A Value-Added Observational Dataset. Sci. Data 2024, 11, 661.

49. Oliveira Santos, V.; Costa Rocha, P.A.; Thé, J.V.G.; Gharabaghi, B. Optimizing the Architecture of a Quantum–Classical Hybrid Machine Learning Model for Forecasting Ozone Concentrations: Air Quality Management Tool for Houston, Texas. Atmosphere 2025, 16, 255.

50. Baïle, R.; Muzy, J.-F. Leveraging Data from Nearby Stations to Improve Short-Term Wind Speed Forecasts. Energy 2023, 263, 125644.

51. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009; ISBN 978-0-387-84858-7.

52. Deisenroth, M.P.; Faisal, A.A.; Ong, C.S. Mathematics for Machine Learning; Cambridge University Press: Cambridge, UK, 2020; ISBN 978-1-108-47004-9.

53. Bodnar, C.; Bruinsma, W.P.; Lucic, A.; Stanley, M.; Vaughan, A.; Brandstetter, J.; Garvan, P.; Riechert, M.; Weyn, J.A.; Dong, H.; et al. A Foundation Model for the Earth System. arXiv 2024.

54. James, G.; Witten, D.; Hastie, T.; Tibshirani, R.; Taylor, J. An Introduction to Statistical Learning: With Applications in Python; Springer International Publishing: Berlin/Heidelberg, Germany, 2023; ISBN 978-3-031-38746-3.

55. Holton, J.R. An Introduction to Dynamic Meteorology. Introd. Dyn. Meteorol. 1992, 48, 1–497.

56. Johnson, P.L.; Wilczek, M. Multiscale Velocity Gradients in Turbulence. Annu. Rev. Fluid Mech. 2024, 56, 463–490.

57. Holton, J.R. An Introduction to Dynamic Meteorology; Academic Press: Cambridge, MA, USA, 2004; ISBN 978-0-12-354015-7.

58. Ranade, R.; Hill, C.; Pathak, J. DiscretizationNet: A Machine-Learning Based Solver for Navier–Stokes Equations Using Finite Volume Discretization. Comput. Methods Appl. Mech. Eng. 2021, 378, 113722.

59. Cant, S. S. B. Pope, Turbulent Flows, Cambridge University Press, Cambridge, U.K., 2000, 771 pp. Combust. Flame 2001, 125, 1361–1362.

60. Stevens, B.; Bony, S. What Are Climate Models Missing? Science 2013, 340, 1053–1054.

61. Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-Informed Machine Learning. Nat. Rev. Phys. 2021, 3, 422–440.

62. Bochenek, B.; Ustrnul, Z. Machine Learning in Weather Prediction and Climate Analyses—Applications and Perspectives. Atmosphere 2022, 13, 180.

63. Hoolohan, V.; Tomlin, A.S.; Cockerill, T. Improved near Surface Wind Speed Predictions Using Gaussian Process Regression Combined with Numerical Weather Predictions and Observed Meteorological Data. Renew. Energy 2018, 126, 1043–1054.

64. Gentine, P.; Pritchard, M.; Rasp, S.; Reinaudi, G.; Yacalis, G. Could Machine Learning Break the Convection Parameterization Deadlock? Geophys. Res. Lett. 2018, 45, 5742–5751.

65. Chen, C.; Tian, G.; Qin, S.; Yang, S.; Geng, D.; Zhan, D.; Yang, J.; Vidal, D.; Wang, L.L. Generalization of Urban Wind Environment Using Fourier Neural Operator Across Different Wind Directions and Cities. arXiv 2025, arXiv:2501.05499.

66. Valsaraj, P.; Thumba, D.A.; Kumar, S. Machine Learning-Based Simplified Methods Using Shorter Wind Measuring Masts for the Time Ahead Wind Forecasting at Higher Altitude for Wind Energy Applications. Renew. Energy Environ. Sustain. 2022, 7, 24.

67. Pathak, J.; Subramanian, S.; Harrington, P.; Raja, S.; Chattopadhyay, A.; Mardani, M.; Kurth, T.; Hall, D.; Li, Z.; Azizzadenesheli, K.; et al. FourCastNet: A Global Data-Driven High-Resolution Weather Model Using Adaptive Fourier Neural Operators. arXiv 2022, arXiv:2202.11214.

68. Qin, S.; Zhan, D.; Geng, D.; Peng, W.; Tian, G.; Shi, Y.; Gao, N.; Liu, X.; Wang, L.L. Modeling Multivariable High-Resolution 3D Urban Microclimate Using Localized Fourier Neural Operator. Build. Environ. 2025, 273, 112668.

| WRF Name | Units | Description |
|---|---|---|
| “Times” | hh:mm:ss | Time |
| “XLAT” | degree | Latitude of the collocated grid |
| “XLONG” | degree | Longitude of the collocated grid |
| “XLAT_U” | degree | Latitude of the staggered grid in the X direction |
| “XLONG_U” | degree | Longitude of the staggered grid in the X direction |
| “XLAT_V” | degree | Latitude of the staggered grid in the Y direction |
| “XLONG_V” | degree | Longitude of the staggered grid in the Y direction |
| “COSZEN” | dimensionless | Cosine of the zenith angle of the Sun |
| “PSFC” | Pa | Surface pressure |
| “T2” | K | 2-m temperature |
| “Q2” | kg water vapor/kg air | 2-m specific humidity |
| “U10” | m/s | 10-m U-component of wind |
| “V10” | m/s | 10-m V-component of wind |
| “SST” | K | Sea surface temperature |
| “TSK” | K | Skin temperature |
| “TSLB” | K | Soil temperature at specified layers |
| “HGT” | m | Geopotential height |
| “ALBEDO” | Fraction (0–1) | Surface albedo |

| WRF Name | Units | Description |
|---|---|---|
| “U” | m/s | U-component of wind |
| “V” | m/s | V-component of wind |
| “W” | m/s | W-component of wind |
| “T” | K | Air temperature |
| “THM” | K | Moist potential temperature |
| “QVAPOR” | kg water/kg dry air | Water vapor mixing ratio |
| “QCLOUD” | kg water/kg air | Cloud water mixing ratio |
| “QRAIN” | kg rainwater/kg air | Rainwater mixing ratio |
| “P” | Pa | Perturbation pressure |
| “P_HYD” | Pa | Hydrostatic pressure |
| “PB” | Pa | Base-state pressure |

| Model | Methodology | References |
|---|---|---|
| FNO | Implementation of an FNO model for forecasting wind in different urban configurations and wind scenarios. | [65] |
| Support Vector Machine, K-Nearest Neighbor, and Gradient Boosting Machine | Implementation of different traditional machine learning approaches for wind speed forecasting using data collected from different altitudes. | [66] |
| FourCastNet | Implementation of a Fourier-based approach to forecast atmospheric variables, including wind speed, precipitation, and water vapor, using deep learning. | [67] |
| Local-FNO | Precise modeling of urban microclimates, including wind velocity and temperature. | [68] |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Costa Rocha, P.A.; Thé, J.V.G.; Oliveira Santos, V.; Gharabaghi, B. Deep Learning for Atmospheric Modeling: A Proof of Concept Using a Fourier Neural Operator on WRF Data to Accelerate Transient Wind Forecasting at Multiple Altitudes. Atmosphere 2025, 16, 394. https://doi.org/10.3390/atmos16040394
