1. Introduction

Visibility refers to the maximum distance at which a target object can be clearly seen by the human eye under specific atmospheric conditions. According to the definition provided by the International Civil Aviation Organization (ICAO), this concept specifically refers to the visibility of a black object of suitable size against a uniform sky background during daylight or moonless nights. In foggy conditions, airborne water droplets scatter light, reducing the contrast and brightness of distant objects and thus degrading visibility. Similarly, haze and particulate matter also degrade visual clarity through both absorption and scattering effects.

Haze and fog significantly impact the transportation industry by reducing visibility. Under such conditions, the risk of traffic accidents increases substantially. In particular, on highways, airport runways, and during maritime navigation, drivers and operators often struggle to perceive obstacles or road conditions ahead, leading to a heightened risk of severe collisions [1]. The Federal Highway Administration (FHWA) estimated that more than 38,700 road accidents occur each year due to foggy conditions, injuring over 16,300 people and causing more than 600 fatalities annually [2]. Statistical data indicate that fog-related accidents constitute a considerable proportion of all accidents each year, posing serious challenges to public safety.

In this context, accurate visibility estimation becomes particularly crucial. It enables authorities to issue timely warnings and take preventive actions to reduce the risk of accidents. For instance, in the aviation industry, airlines can adjust flight schedules based on the latest visibility predictions to ensure flight safety. Traffic management authorities, in turn, can use real-time data to modify speed limits or implement temporary road closures, thus preventing traffic accidents [3]. In addition to enhancing safety, accurate visibility estimation also improves the overall efficiency of transportation systems [4]. For instance, in logistics and freight delivery, knowledge of visibility conditions enables more effective route planning, allowing vehicles to avoid areas with reduced visibility and increasing delivery speed. Similarly, ports and airports can leverage visibility forecasts to optimize scheduling and keep operations running smoothly when conditions are favorable [5].

Given this importance, researchers have continuously explored new approaches to improve the accuracy of visibility estimation in recent years [6]. Early methods primarily relied on statistical analysis, estimating visibility by investigating the relationships between image features and visibility levels, for example, techniques based on the dark channel prior [7,8,9,10,11]. With advances in computer vision and deep learning, models based on convolutional neural networks (CNNs) [12,13,14,15,16,17,18,19,20] and transformer blocks [21,22,23] have gradually become dominant. These methods extract complex features from input images and learn the intricate relationships between these features and visibility levels, thereby achieving more accurate and robust estimates.

However, most existing methods rely solely on monocular RGB images as input and therefore lack access to accurate scene geometry [6]. From the perspective of Koschmieder’s haze model [24], estimating precise visibility values fundamentally requires knowledge of scene depth, which is inherently missing in monocular setups. Current monocular approaches either estimate only the transmittance map without providing explicit visibility values, or attempt to infer depth through deep learning. However, recovering metric scene depth from a single RGB image is inherently ill-posed, and the resulting depth maps often lack the reliability needed for accurate visibility estimation.

To address this limitation, we propose a two-stage, transformer-based network that takes binocular RGB images as input. The stereo images provide sufficient and reliable scene scale information, enabling more robust and accurate visibility estimation. In the first stage, the network uses a recurrent stereo matching network to derive the depth of the scene. In the second stage, we propose a bidirectional cross-attention block (BCB) to align the RGB texture features with the depth information. After feature alignment and multi-scale feature fusion, the network produces a comprehensive feature map. Finally, a head layer is attached to compute the pixel-wise visibility map.

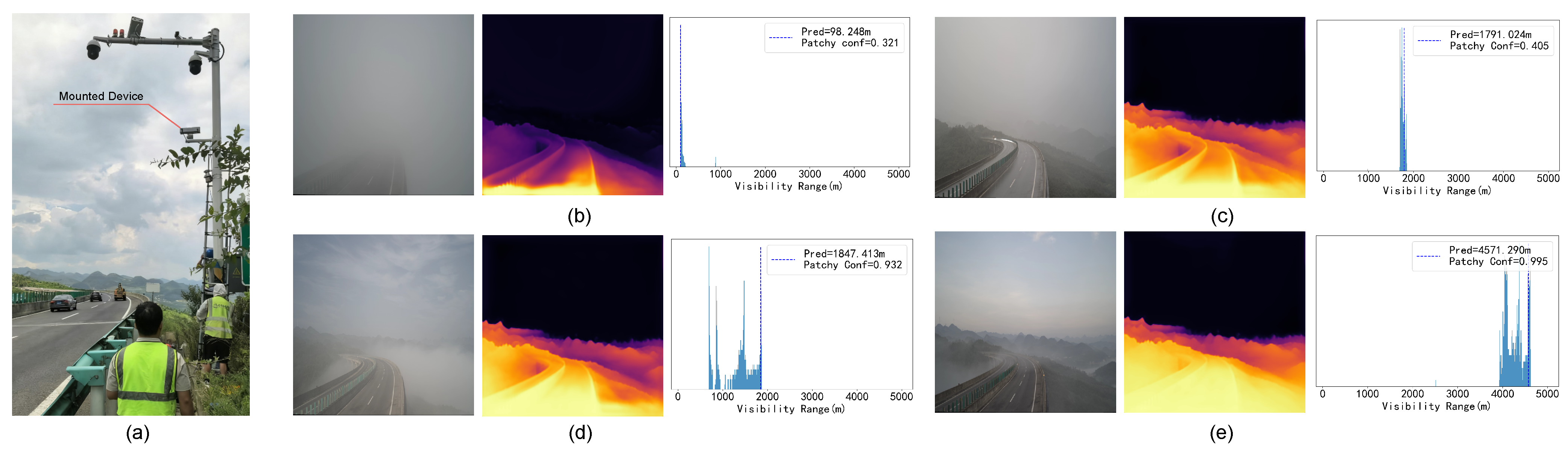

After obtaining the pixel-wise visibility estimation from the network, we design a mechanism that detects the presence of non-uniform patchy fog by analyzing the spatial distribution differences of the estimated visibility values. This mechanism generates a confidence score indicating the likelihood of uneven fog occurring in the scene. When the confidence score exceeds a predefined threshold, it can be determined that non-uniform patchy fog is present. This detection capability is of significant importance for transportation systems, as patchy fog is a critical factor affecting traffic safety.

To facilitate more effective model training, we propose an automatic pipeline for constructing synthetic foggy images to augment real-world collections. First, we collect RGB images captured under clear-weather conditions from various sources, including web images, public datasets, the CARLA simulator [25], and highway or urban traffic cameras. Next, we employ stereo depth estimation models such as Monster [26] to obtain the corresponding scene depth. Finally, guided by Koschmieder’s haze model [24], we use the estimated depth maps together with the clear-weather RGB images to synthesize foggy images with specified visibility levels. In addition, we introduce Gaussian perturbations during the generation of uniform fog to synthesize patchy fog images. Benefiting from the diversity of the data sources, the proposed pipeline enables efficient, automatic generation of the large-scale foggy image datasets required for training and evaluation.
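The synthesis step can be illustrated with a minimal NumPy sketch, assuming the clear image is an `(H, W, 3)` array in `[0, 1]`, depth is metric, and the extinction coefficient follows from the target visibility with the 5% contrast threshold used in the Problem Setup. The "Gaussian perturbation" for patchy fog is modeled here, purely as an assumption, by multiplying the extinction coefficient with a smooth field of random 2D Gaussian blobs; the function names and the default atmospheric light are hypothetical, not the authors' pipeline.

```python
import numpy as np

CONTRAST_THRESHOLD = 0.05  # contrast threshold used to define visibility


def extinction_from_visibility(visibility_m):
    """beta = -ln(0.05) / V, so a black object's contrast drops to 5% at distance V."""
    return -np.log(CONTRAST_THRESHOLD) / visibility_m


def gaussian_blob_field(shape, rng, num_blobs=3, rel_sigma=0.25):
    """Smooth, strictly positive field built from random 2D Gaussian blobs (hypothetical)."""
    h, w = shape
    yy, xx = np.mgrid[0:h, 0:w]
    sigma = rel_sigma * max(h, w)
    field = np.zeros(shape)
    for _ in range(num_blobs):
        cy, cx = rng.uniform(0, h), rng.uniform(0, w)
        field += np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2.0 * sigma ** 2))
    return field


def synthesize_fog(clear_rgb, depth_m, visibility_m, airlight=0.9,
                   patch_strength=0.0, rng=None):
    """Koschmieder-style fog: I = J * t + A * (1 - t), with t = exp(-beta * d).

    clear_rgb: (H, W, 3) floats in [0, 1]; depth_m: (H, W) metric depth.
    patch_strength > 0 modulates beta spatially to mimic patchy fog.
    Returns the foggy image, the transmittance map and the extinction map.
    """
    beta = np.full(depth_m.shape, extinction_from_visibility(visibility_m))
    if patch_strength > 0:
        rng = np.random.default_rng() if rng is None else rng
        beta = beta * (1.0 + patch_strength * gaussian_blob_field(depth_m.shape, rng))
    t = np.exp(-beta * depth_m)
    foggy = clear_rgb * t[..., None] + airlight * (1.0 - t[..., None])
    return foggy, t, beta
```

As a sanity check, a black pixel (`J = 0`) placed exactly at the target visibility distance ends up with transmittance 0.05, i.e. a contrast of 5% against the airlight. Returning the transmittance and extinction maps alongside the image is convenient because they can serve directly as ground truth for the auxiliary supervision described in the Method section.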

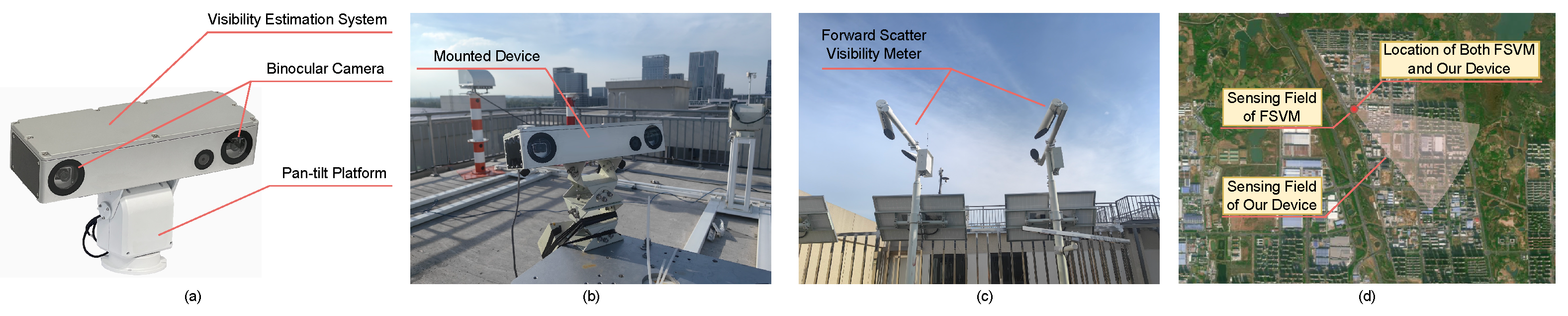

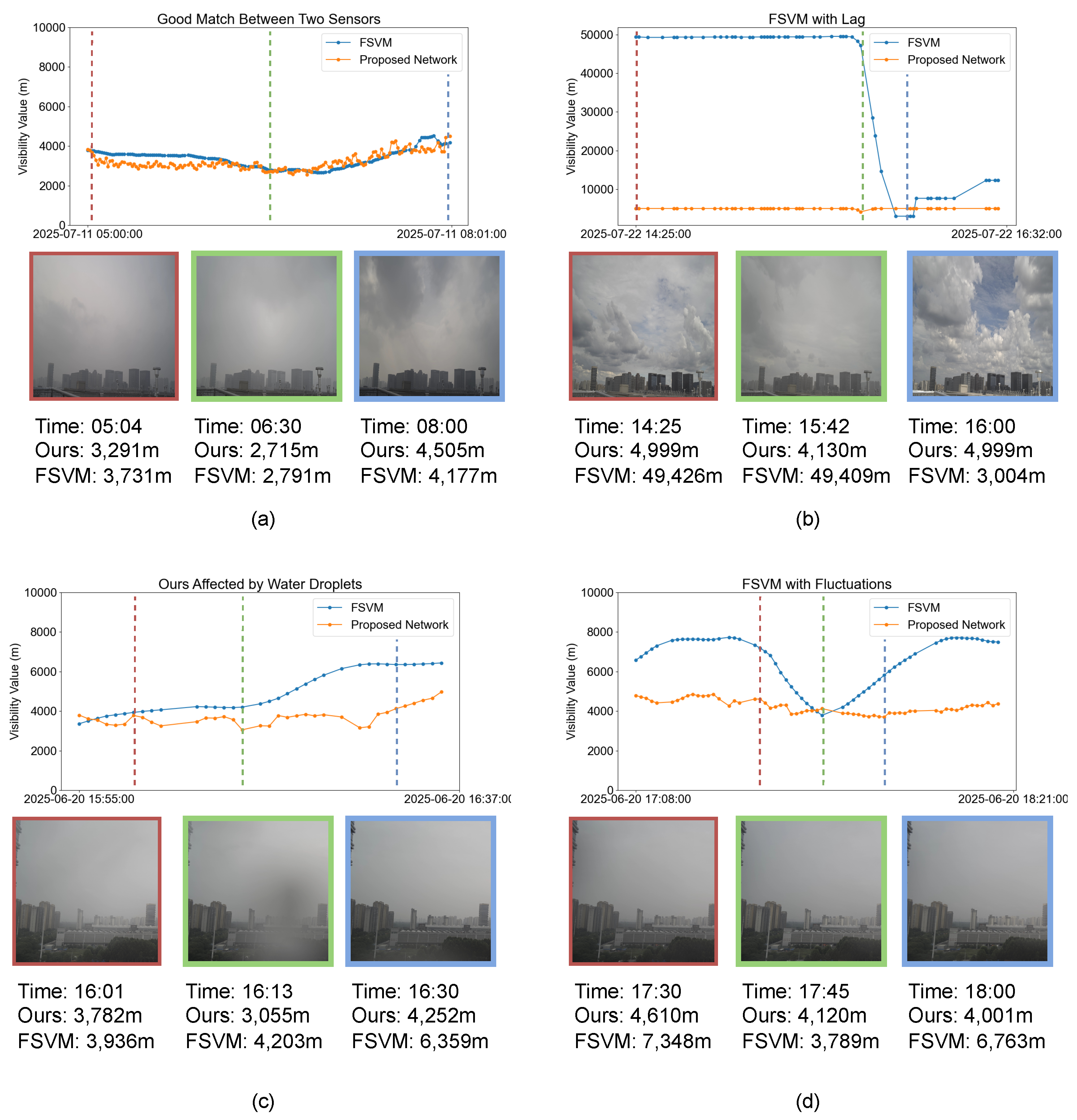

To evaluate the effectiveness of our method, we conduct both comparative and ablation studies on a synthetic fog dataset. Moreover, we build a real-world system equipped with a binocular camera unit and a pan-tilt unit. We compare our estimates against measurements from a forward scatter visibility meter (FSVM) and validate the system using real-world image sequences.

To sum up, our contributions are as follows:

1. We propose a two-stage network that uses stereo image inputs and a physically grounded, progressive training paradigm to achieve accurate pixel-wise visibility estimation.
2. We introduce a mechanism for detecting patchy fog by analyzing the spatial variation in the estimated visibility.
3. We present an automatic technique for generating foggy images and a pipeline for constructing foggy image datasets.
4. Experimental results on both the synthetic dataset and real-world environments demonstrate that the proposed methods can accurately estimate visibility across diverse scenes and detect the presence of fog patches within images.

Our work can be found at https://github.com/HexaWarriorW/Pixel-Wise-Visibility (accessed on 16 September 2025). The remainder of this paper is organized as follows. Section 2 presents related work in key areas relevant to this study. Section 3 introduces the problem setup investigated in this paper, along with the associated mathematical models. Section 4 describes the construction methodology of the foggy image dataset we built. Section 5 details the architecture of our proposed network and the loss functions used during training. Section 6 covers the experimental setup, including comprehensive experiments and ablation studies conducted on both synthetic datasets and real-world scenarios to evaluate the model’s performance. Finally, Section 7 summarizes the main findings of this work and provides an outlook on potential future research directions.

3. Problem Setup

Visibility is defined as the maximum distance at which a person with normal visual acuity can detect and recognize a target object (black and of moderate size) against its background under given atmospheric conditions. Following the International Commission on Illumination (CIE), the contrast threshold of human vision under normal viewing conditions is taken as 0.05.

The imaging process of an outdoor scene under foggy conditions during daytime is illustrated in Figure 1. In such scenes, sunlight serves as the primary illumination source under both clear and overcast conditions. Consequently, in hazy or foggy weather, the scattering of sunlight dominates the degradation of image quality. Fog exerts both attenuation and scattering effects on light. A foggy image can be modeled as the superposition of the attenuated reflected light from the scene and the atmospheric light generated by scattering.

According to Koschmieder’s haze model [24], let $l_0$ be the original light emitted by the scene object, $l_a$ be the atmospheric light intensity, $\beta$ be the extinction coefficient, and $d$ be the distance between the object and the observer. The light $l$ reaching the observer after being scattered by fog or haze can be calculated using the following equation:

$$l = l_0\, e^{-\beta d} + l_a\left(1 - e^{-\beta d}\right) \tag{1}$$

Through derivation, it can be shown that the contrast $c$ under the influence of haze is related to the original contrast $c_0$ as follows:

$$c = c_0\, e^{-\beta d} \tag{2}$$
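The derivation behind the contrast attenuation is short. Assuming, as is standard for Koschmieder’s law, that contrast is measured against the sky background, whose luminance equals the atmospheric light, and writing $l_0$ for the object light, $l_a$ for the atmospheric light, and $\beta$ for the extinction coefficient (notation assumed here), substituting the observed light from the haze model into the contrast definition gives:

$$
\begin{aligned}
c &= \frac{l - l_a}{l_a}
   = \frac{l_0 e^{-\beta d} + l_a\left(1 - e^{-\beta d}\right) - l_a}{l_a} \\
  &= \frac{l_0 - l_a}{l_a}\, e^{-\beta d}
   = c_0\, e^{-\beta d}
\end{aligned}
$$

For a black object, $l_0 = 0$, so $c_0 = -1$, which is why the original contrast can be taken as 1 in magnitude.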

Given that the contrast of a black object against the sky background can be considered as 1 in magnitude, setting $|c| = 0.05$ at $d = v$ yields the relationship between the visibility value $v$ (the distance at which the contrast decreases to 0.05 under haze conditions) and the extinction coefficient:

$$v = \frac{-\ln 0.05}{\beta} \approx \frac{3.0}{\beta} \tag{3}$$
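Equation (3) follows from solving $e^{-\beta v} = 0.05$ for $v$. A minimal Python sketch of the conversion in both directions, as used when turning predicted extinction coefficients into visibility; the function names are hypothetical and units are assumed to be metres and inverse metres:

```python
import math

CONTRAST_THRESHOLD = 0.05  # threshold contrast from the Problem Setup


def visibility_from_extinction(beta_per_m: float) -> float:
    """v = -ln(0.05) / beta, from solving 0.05 = exp(-beta * v)."""
    if beta_per_m <= 0:
        raise ValueError("extinction coefficient must be positive")
    return -math.log(CONTRAST_THRESHOLD) / beta_per_m


def extinction_from_visibility(visibility_m: float) -> float:
    """Inverse mapping: beta = -ln(0.05) / v."""
    if visibility_m <= 0:
        raise ValueError("visibility must be positive")
    return -math.log(CONTRAST_THRESHOLD) / visibility_m


# Example: beta = 0.003 1/m corresponds to a visibility of roughly 1 km.
print(round(visibility_from_extinction(0.003), 1))
```

With a 2% threshold instead of 5%, the constant would be $-\ln 0.02 \approx 3.912$, the value often quoted for Koschmieder’s original formulation; the 0.05 threshold used here gives $\ln 20 \approx 2.996$.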

Our proposed end-to-end model

F is inspired by the atmospheric scattering model, which establishes a physical relationship between scene visibility, depth, and image degradation under foggy conditions. The model utilizes both the left image and the right image

from a binocular camera as input. Through extinction coefficient regression informed by depth context guided by physically meaningful supervision, it produces a visibility map

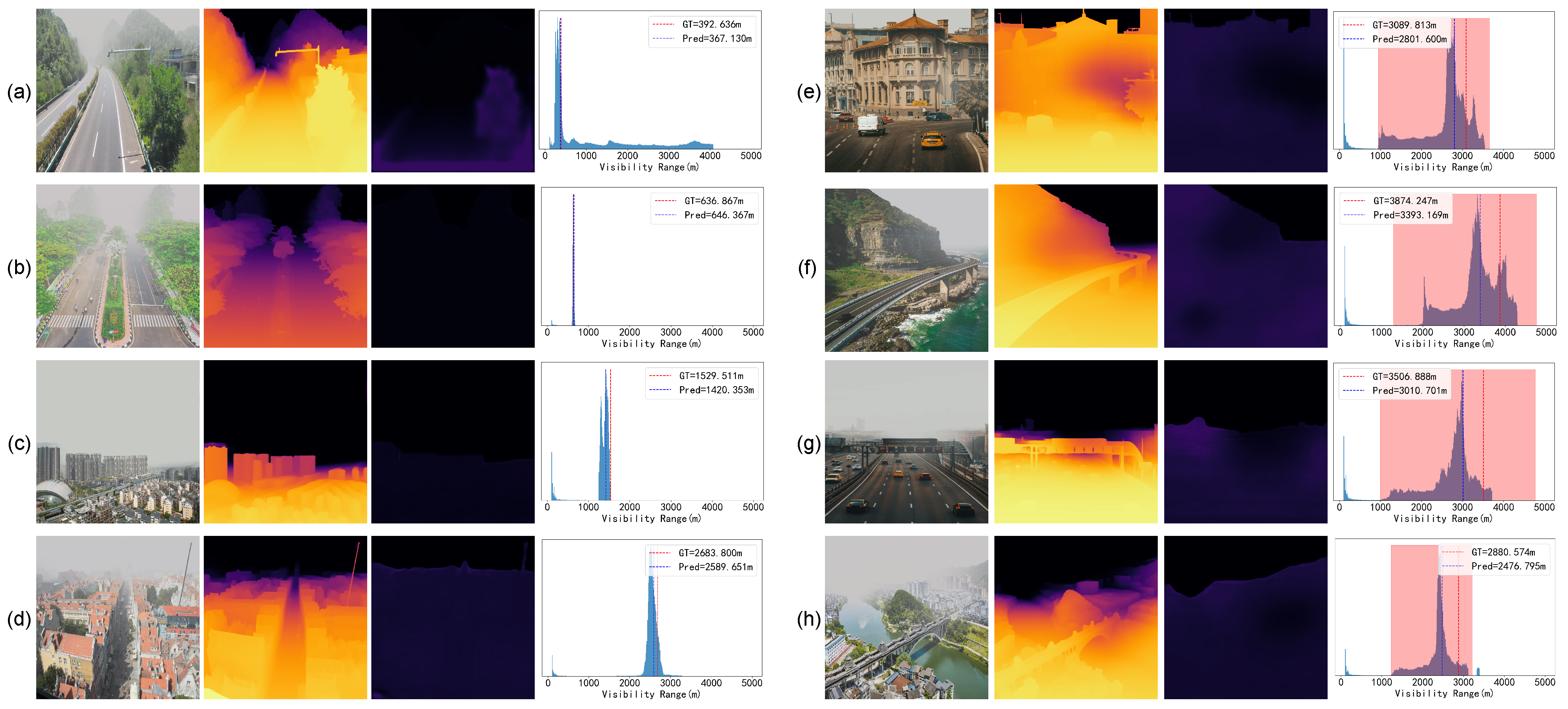

with the same resolution as the input images, effectively estimating the scene’s visibility in hazy or foggy condition.

The estimated visibility map is subsequently fed into our custom-designed mechanism $M$ for detecting patchy fog. By analyzing spatial variations in visibility across different regions of the map, the system generates a confidence score that quantifies the likelihood of non-uniform, localized fog within the scene.

5. Method

In this section, we present the overall network architecture, followed by a detailed description of the key modules in the visibility estimation process. In addition, we introduce an algorithm that identifies whether dense fog patches are present in the scene based on the pixel-wise visibility output. Finally, we present the loss functions designed for network training.

5.1. Overall

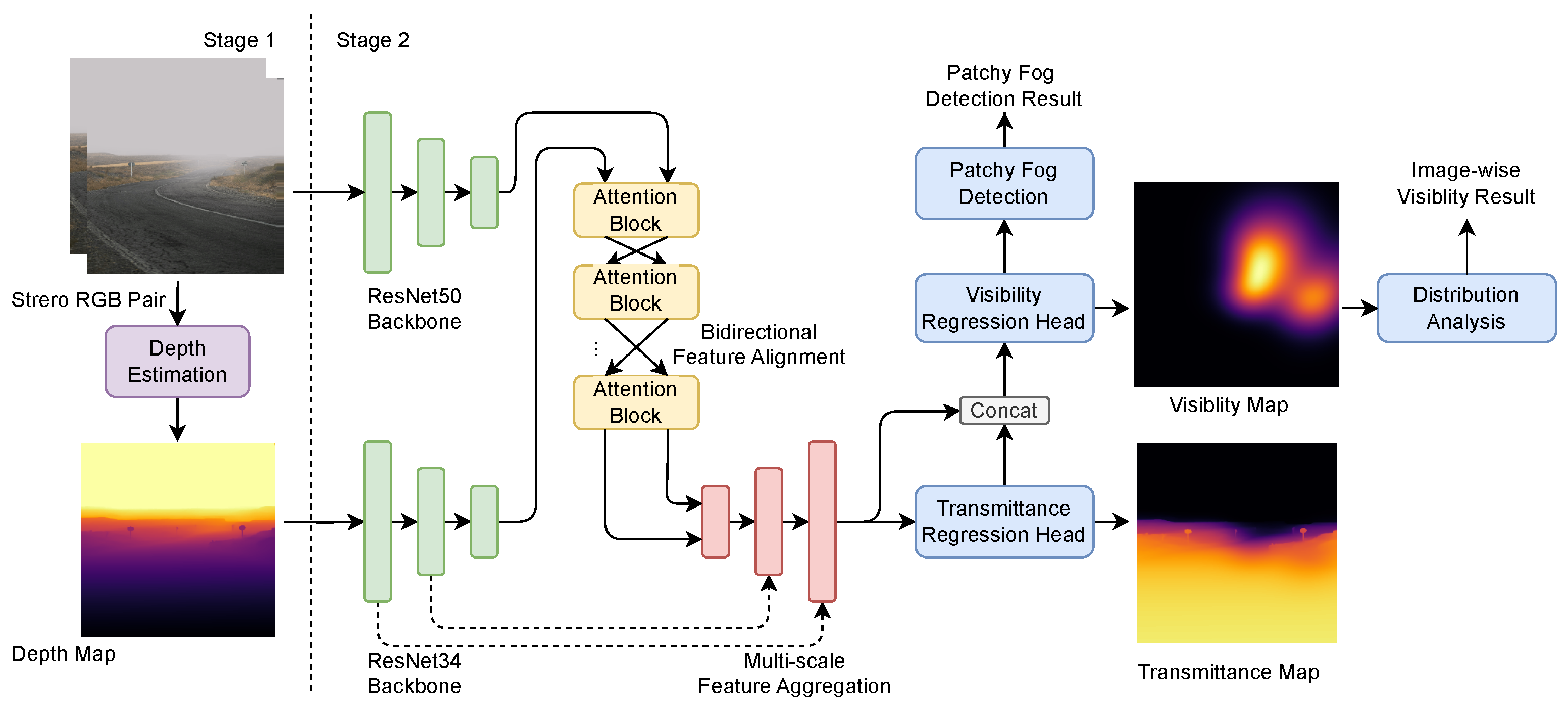

As shown in Figure 4, the proposed network is divided into two stages. The first stage is the depth estimation module. To prevent fog from degrading the depth estimates, this module takes stereo images captured under clear-weather conditions as input and generates a disparity map. The corresponding depth map is then calculated from the disparity using the intrinsic and extrinsic parameters of the camera. The second stage performs visibility estimation. It takes the depth map generated in the first stage and the foggy RGB image as inputs, which are processed through feature extraction and feature fusion. Ultimately, this stage outputs a pixel-wise visibility map for the entire scene after multi-scale feature aggregation and regression.
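For a rectified stereo pair, the disparity-to-depth conversion reduces to $Z = fB/d$, where $f$ is the focal length in pixels (intrinsics) and $B$ the baseline (extrinsics). A minimal NumPy sketch under that rectification assumption; the function name and the invalid-disparity threshold are hypothetical:

```python
import numpy as np


def disparity_to_depth(disparity_px, focal_px, baseline_m, min_disparity=1e-3):
    """Depth Z = f * B / d for a rectified stereo pair.

    Pixels with (near-)zero disparity are treated as infinitely far away.
    """
    disparity = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > min_disparity
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth
```

Mapping zero disparity to infinity rather than clipping keeps sky pixels physically meaningful: under the haze model their transmittance becomes zero, so they show pure atmospheric light.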

Additionally, after the two-stage network, we add a patchy fog discriminator which analyzes the features from the previously generated pixel-wise visibility map to determine whether patchy fog is present in the scene.

5.2. Stage I: Scene Depth Perception

Inspired by the design of [43] and considering hardware efficiency, we adopt a hybrid design that combines a traditional stereo matching algorithm with a GRU-based cascaded recurrent stereo matching network for the depth estimation module. This stereo matching architecture leverages rich semantic information while relying on geometric cues to ensure accurate scale, thereby addressing the challenge of estimating accurate disparity maps in diverse real-world scenarios.

We first employ a traditional method such as SGM [44] to perform cost construction and aggregation on features extracted by a CNN, obtaining a coarse initial disparity. Then, a hierarchical network progressively refines the disparity map in a coarse-to-fine manner, using a GRU-based recurrent update module to iteratively refine the predictions across different scales. This design not only helps recover fine-grained depth details but also handles high-resolution inputs effectively. To mitigate the negative effects of imperfect rectification, we use the adaptive group correlation layer introduced by CREStereo [43], which performs feature matching within local windows and uses learned offsets to adaptively adjust the search region. It effectively handles real-world imperfections such as camera module misalignment and slight rotational deviations. This design improves the network’s handling of small objects and complex scenes, significantly improving the quality of the final depth map.
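The core of such a correlation layer can be illustrated with a simplified NumPy sketch: features are split into channel groups, and each left-image pixel is correlated with right-image features inside a fixed local window. This is an assumption-laden simplification, not CREStereo’s implementation: the learned offsets, the alternating 1D/2D search, and attention are omitted, and all names are hypothetical.

```python
import numpy as np


def group_local_correlation(feat_l, feat_r, num_groups=4, radius_x=4, radius_y=0):
    """Group-wise correlation over a fixed local search window.

    feat_l, feat_r: (C, H, W) feature maps. Returns an array of shape
    (G, K, H, W), with K = (2*radius_y + 1) * (2*radius_x + 1) offsets,
    plus the list of (dy, dx) offsets in the same order.
    """
    c, h, w = feat_l.shape
    if c % num_groups:
        raise ValueError("channels must be divisible by num_groups")
    gl = feat_l.reshape(num_groups, c // num_groups, h, w)
    gr = feat_r.reshape(num_groups, c // num_groups, h, w)
    # Zero-pad the right features so every window position is defined.
    pad = np.pad(gr, ((0, 0), (0, 0), (radius_y, radius_y), (radius_x, radius_x)))
    offsets = [(dy, dx) for dy in range(-radius_y, radius_y + 1)
               for dx in range(-radius_x, radius_x + 1)]
    out = np.empty((num_groups, len(offsets), h, w))
    for k, (dy, dx) in enumerate(offsets):
        # shifted[..., y, x] == gr[..., y + dy, x + dx] (zero outside the image)
        shifted = pad[:, :, radius_y + dy: radius_y + dy + h,
                      radius_x + dx: radius_x + dx + w]
        out[:, k] = (gl * shifted).mean(axis=1)  # per-group mean dot product
    return out, offsets
```

Correlating per group rather than over all channels keeps several independent similarity cues per offset, which the subsequent GRU update can weigh; restricting the search to a small window is what keeps the cost manageable at high resolution.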

During inference, to achieve a balance between speed and accuracy, initial depth estimation is performed at scale of the original resolution with a disparity range of 256, followed by iterative optimization conducted 4, 10, and 20 times at , , and scales of the original resolutions, respectively. Finally, based on the camera setup and intrinsic parameters, we convert the disparity map into a depth map.

5.3. Stage II: Pixel-Wise Visibility Measurement

In this section, we present the visibility estimation framework, which integrates RGB texture information and depth cues through a hybrid architecture combining convolutional neural networks and transformer-based attention mechanisms [45]. As illustrated in Figure 4, our design consists of four key components: (1) a dual-branch feature extractor based on ResNet [46], (2) a bidirectional multi-modal feature alignment module, (3) a multi-scale feature aggregation module, and (4) a regression head for pixel-wise visibility map generation. This design enables comprehensive feature learning and precise visibility estimation by leveraging both local texture details and global contextual information.

5.3.1. Dual-Branch Feature Extraction

Our network utilizes two separate feature extractors to process RGB images and depth maps independently. The RGB branch is built upon a pre-trained ResNet50 architecture, while the depth branch adopts a pre-trained ResNet34 network modified to accept single-channel input, enabling it to effectively process depth maps. To ensure that the generated visibility maps are more consistent with the semantic and structural distributions, we utilize the feature outputs from the first to third layers of the depth branch to capture multi-scale spatial features.

5.3.2. Bidirectional Multi-Modal Feature Alignment

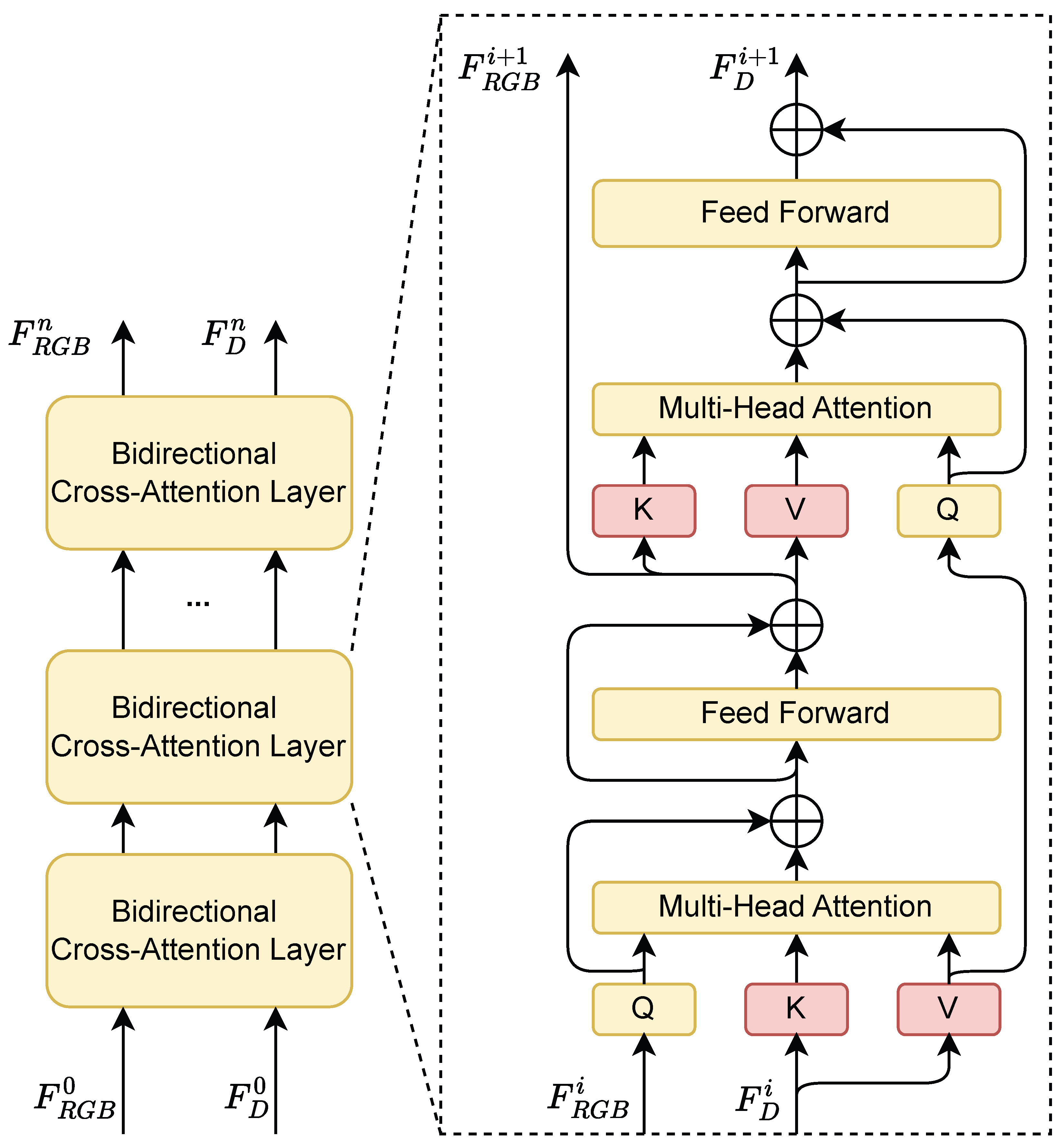

To integrate RGB and depth features, we introduce a feature alignment module based on a cross-attention mechanism that fuses the structural and semantic information of the scene, establishing a relationship between transmittance and depth. As illustrated in Figure 5, to better aggregate multi-modal features, we use a stacked BCB structure that allows RGB semantic features and depth structural features to alternately query information from the other modality. The process at stage $i$ is described by Equation (7):

$$
\begin{aligned}
F^{i}_{rgb} &= \mathrm{MLP}\left(\mathrm{MHA}\left(F^{i-1}_{rgb},\, F^{i-1}_{d},\, F^{i-1}_{d}\right)\right), \\
F^{i}_{d} &= \mathrm{MLP}\left(\mathrm{MHA}\left(F^{i-1}_{d},\, F^{i}_{rgb},\, F^{i}_{rgb}\right)\right),
\end{aligned}
\tag{7}
$$

where $F_{rgb}$ and $F_{d}$ denote the RGB and depth features, and $\mathrm{MHA}(\cdot,\cdot,\cdot)$ denotes multi-head attention whose arguments act as the query, key, and value, respectively.

Specifically, the fusion proceeds in two phases. In the first phase, the RGB feature is mapped to the query, while the depth feature is mapped to the key and the value. Multi-head attention followed by an MLP is applied after this mapping, producing RGB features enriched with depth information. In the second phase, the depth feature serves as the query, and the updated RGB features serve as the key and the value. Another round of attention computation produces depth features enhanced with RGB semantic information. This bidirectional interaction is repeated in a stacked manner, enabling progressive mutual refinement of both modalities and yielding more robust, context-aware feature representations.
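The two-phase interaction can be sketched in NumPy, assuming tokens are the flattened spatial positions of a feature map (shape `(H*W, D)`). The residual connections, ReLU MLP, and random initialization are assumptions added for illustration; layer normalization and the block’s exact configuration are not specified in the text, and all names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention(query_tokens, context_tokens, weights, num_heads):
    """Scaled dot-product attention; context tokens supply keys and values."""
    w_q, w_k, w_v, w_o = weights
    n_q, dim = query_tokens.shape
    d_head = dim // num_heads

    def split(x):  # (N, D) -> (heads, N, d_head)
        return x.reshape(x.shape[0], num_heads, d_head).transpose(1, 0, 2)

    q = split(query_tokens @ w_q)
    k = split(context_tokens @ w_k)
    v = split(context_tokens @ w_v)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))  # (heads, Nq, Nk)
    out = (attn @ v).transpose(1, 0, 2).reshape(n_q, dim)
    return out @ w_o


def mlp(x, w1, w2):
    return np.maximum(x @ w1, 0.0) @ w2  # two linear layers with ReLU


def bcb(f_rgb, f_depth, p, num_heads=4):
    """One bidirectional cross-attention block (residuals are an assumption)."""
    # Phase 1: RGB tokens query the depth tokens (depth gives keys/values).
    f_rgb = f_rgb + multi_head_attention(f_rgb, f_depth, p["attn_rgb"], num_heads)
    f_rgb = f_rgb + mlp(f_rgb, *p["mlp_rgb"])
    # Phase 2: depth tokens query the *updated* RGB tokens.
    f_depth = f_depth + multi_head_attention(f_depth, f_rgb, p["attn_depth"], num_heads)
    f_depth = f_depth + mlp(f_depth, *p["mlp_depth"])
    return f_rgb, f_depth


def init_bcb(dim, rng, hidden_mult=2):
    def lin(n_in, n_out):
        return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))

    return {
        "attn_rgb": [lin(dim, dim) for _ in range(4)],
        "mlp_rgb": [lin(dim, hidden_mult * dim), lin(hidden_mult * dim, dim)],
        "attn_depth": [lin(dim, dim) for _ in range(4)],
        "mlp_depth": [lin(dim, hidden_mult * dim), lin(hidden_mult * dim, dim)],
    }
```

Stacking is then a loop over independently initialized blocks, e.g. `for p in blocks: f_rgb, f_depth = bcb(f_rgb, f_depth, p)`, so that each modality is refined with the other’s latest state.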

5.3.3. Multi-Scale Feature Aggregation

Following the feature alignment module, we progressively merge the dual-branch features while gradually restoring the spatial resolution. Because visibility is strongly correlated with metric depth, we further incorporate shallow-level features from the depth branch during fusion. To align the feature dimensions for aggregation, we apply convolution layers to project both RGB and depth features into a shared embedding space of dimension 512. This integration provides structural constraints on the visibility output, enhancing its geometric consistency. As illustrated in Figure 6, the entire fusion process is accomplished through multiple fusion blocks. Each block consists of feature addition, convolution with skip connections, and upsampling, enabling effective multi-scale feature refinement and facilitating the generation of accurate, spatially coherent visibility maps. Finally, we employ a ConvBlock consisting of multiple 2D convolution layers to further refine and aggregate the features.
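A single fusion block can be sketched in NumPy as follows. The projection kernel size is not given in the text; this sketch assumes pointwise (1×1) projections, uses a 1×1 refinement convolution in place of a spatial one, and upsamples by nearest-neighbor interpolation. The shared dimension is shrunk from 512 for the demo, and all names are hypothetical.

```python
import numpy as np


def conv1x1(x, w, b):
    """Pointwise convolution. x: (C_in, H, W), w: (C_out, C_in), b: (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def make_params(c_rgb, c_depth, dim, rng):
    def layer(c_in, c_out):
        return rng.normal(0.0, 1.0 / np.sqrt(c_in), (c_out, c_in)), np.zeros(c_out)

    return {"proj_rgb": layer(c_rgb, dim), "proj_depth": layer(c_depth, dim),
            "refine": layer(dim, dim)}


def fusion_block(prev, rgb_feat, depth_feat, params):
    """Project to a shared dim, add, refine with a residual conv, upsample by 2.

    prev is the output of the coarser block (or None for the first block).
    """
    rgb = conv1x1(rgb_feat, *params["proj_rgb"])
    depth = conv1x1(depth_feat, *params["proj_depth"])
    fused = rgb + depth if prev is None else prev + rgb + depth  # feature addition
    refined = fused + np.maximum(conv1x1(fused, *params["refine"]), 0.0)  # skip
    return upsample2x(refined)
```

Chaining blocks from coarse to fine, each receiving the previous block’s upsampled output plus the RGB and depth features of the next resolution, reproduces the progressive merge-and-upsample pattern described above.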

5.3.4. Pixel-Wise Visibility Map Regression

At the final stage of the network, we design a regression head to estimate visibility. Because of its bounded range and invertible relationship with visibility, the extinction coefficient is a more suitable regression target than visibility itself. Therefore, we supervise the network with pixel-wise extinction coefficient predictions, which are converted back to visibility values during evaluation.

Furthermore, to impose stronger physical constraints on the network output, we introduce an auxiliary regression head that predicts a transmittance map. The transmittance map derived from the intermediate features is also fed into the extinction coefficient regression head. During training, this transmittance prediction is supervised with ground-truth transmittance, providing auxiliary supervision that regularizes the learning process and enhances the physical consistency of the estimated visibility. This multi-task design encourages the network to learn representations consistent with the atmospheric scattering model, thereby improving both accuracy and interpretability.

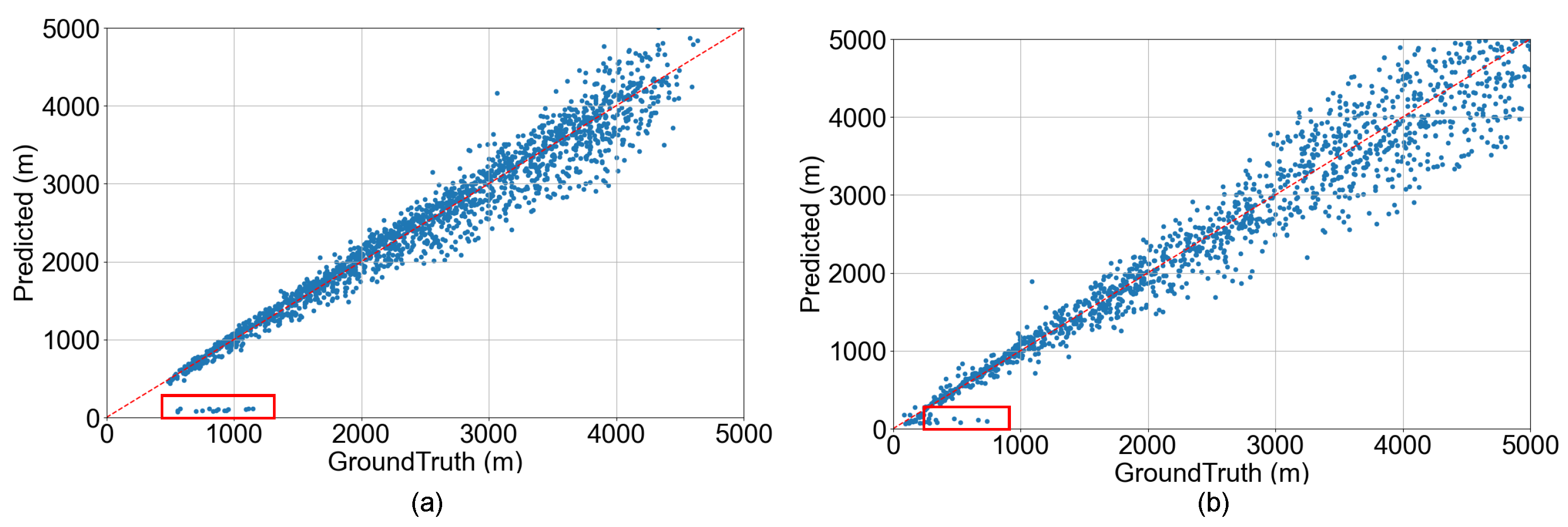

5.3.5. Visibility Map Distribution Analysis

To evaluate the pixel-wise visibility results, we convert the extinction coefficient $B$ predicted by the network into visibility $V$ following Equation (3). Additionally, to facilitate a more direct comparison with scalar visibility estimates from other methods and physical sensors, we convert our pixel-wise visibility results into image-wise visibility values. Unlike DMRVisNet [20], which directly uses a predefined data distribution to set thresholds, we analyze the distribution of the visibility results. Specifically, we compute a 50-bin histogram of the visibility map. Bins containing fewer than 1% of the total pixels are considered outliers and are filtered out. We then sort the remaining bins by count and select the farthest (largest) visibility value among the three most populated bins as the final result. This method provides a more accurate representation of scene visibility by mitigating the impact of nearby objects, which carry little transmittance information and could distort the visibility estimate. Finally, we clip visibility values exceeding 5000 m to keep the output within a physically meaningful and practically relevant range.
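The histogram-based reduction can be sketched in NumPy as follows. Two details are assumptions not fixed by the text: a bin’s "value" is taken as its center, and the 5000 m clip is applied to the final scalar rather than to the map. The function name is hypothetical.

```python
import numpy as np


def image_visibility(vis_map, bins=50, min_frac=0.01, top_k=3, max_vis=5000.0):
    """Collapse a pixel-wise visibility map (metres) to one image-wise value."""
    values = np.asarray(vis_map, dtype=np.float64).ravel()
    counts, edges = np.histogram(values, bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    # Drop sparse bins (< 1% of pixels) as outliers. With 50 bins the most
    # populated bin always holds >= 2% of pixels, so at least one survives.
    keep = counts >= min_frac * values.size
    counts, centers = counts[keep], centers[keep]
    # Indices of the top-k most populated remaining bins.
    top = np.argsort(counts, kind="stable")[::-1][:top_k]
    # Farthest (largest) visibility among them, then clip to the valid range.
    return float(min(centers[top].max(), max_vis))
```

For example, if 60% of pixels read about 800 m (nearby structures), 30% about 1200 m, and a small cluster sits near 300 m, the result lands in the ~1200 m bin rather than at the 800 m mode, which is the intended suppression of close objects.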

5.4. Patchy Fog Detection

In addition to generating a pixel-wise visibility map, we further propose a CNN-based patchy fog discriminator to determine whether patchy fog exists in the scene. Specifically, the discriminator takes the previously estimated visibility map combined with the transmittance map as input, which captures spatial variations in visibility across the scene. We design a lightweight CNN backbone to extract the representation indicative of fog distribution. To further enhance the model’s discriminative capability, we incorporate a global max pooling layer after each convolution block. Finally, we employ two fully connected layers to map the features to a binary classification indicating the presence or absence of patchy fog. This patchy fog detection module enables the system to not only estimate visibility quantitatively but also qualitatively perceive complex real-world fog distributions, improving situational awareness in practical transportation scenarios.
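A toy forward pass of such a discriminator is sketched below in NumPy. The layer sizes, the visibility normalization by 5000 m, the interpretation of "global max pooling after each convolution block" as concatenating a globally max-pooled vector from every block, and the random weights are all assumptions; all names are hypothetical and the network is untrained.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv3x3(x, w, b):
    """'Same' 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    windows = sliding_window_view(xp, (3, 3), axis=(1, 2))  # (C_in, H, W, 3, 3)
    return np.einsum("chwij,ocij->ohw", windows, w) + b[:, None, None]


def max_pool2x2(x):
    c, h, w = x.shape
    x = x[:, : h - h % 2, : w - w % 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def init_params(rng, channels=(2, 8, 16), hidden=16):
    convs = []
    for c_in, c_out in zip(channels[:-1], channels[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / (9 * c_in)), size=(c_out, c_in, 3, 3))
        convs.append((w, np.zeros(c_out)))
    feat_dim = sum(channels[1:])  # one pooled vector per conv block
    return {
        "convs": convs,
        "fc1": (rng.normal(0.0, 1.0 / np.sqrt(feat_dim), (hidden, feat_dim)), np.zeros(hidden)),
        "fc2": (rng.normal(0.0, 1.0 / np.sqrt(hidden), (1, hidden)), np.zeros(1)),
    }


def patchy_fog_probability(vis_map, trans_map, params, vis_scale=5000.0):
    """Visibility + transmittance -> probability that patchy fog is present."""
    x = np.stack([vis_map / vis_scale, trans_map])  # 2-channel input
    pooled = []
    for w, b in params["convs"]:
        x = np.maximum(conv3x3(x, w, b), 0.0)
        pooled.append(x.max(axis=(1, 2)))  # global max pooling after the block
        x = max_pool2x2(x)
    feat = np.concatenate(pooled)
    w1, b1 = params["fc1"]
    w2, b2 = params["fc2"]
    hidden = np.maximum(w1 @ feat + b1, 0.0)  # first fully connected layer
    logit = (w2 @ hidden + b2)[0]              # second fully connected layer
    return float(1.0 / (1.0 + np.exp(-logit)))
```

The binary decision then compares this probability with a predefined threshold, e.g. `is_patchy = p > 0.5` (the 0.5 value is a placeholder). Global max pooling suits the task because a single dense patch anywhere in the frame should be enough to trigger a detection.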

5.5. Loss Function

Considering that our network involves three distinct tasks (depth estimation, visibility estimation, and patchy fog discrimination), we adopt a correspondingly multi-stage training strategy. In the first stage, we train the depth estimation module by feeding it RGB image pairs without fog augmentation and supervising it with ground-truth depth. For the depth estimation loss, we follow [43] and use ground-truth depth to supervise the multi-scale depth outputs of the network, as given by Equation (8). Specifically, for each scale, we resize the sequence of outputs from the different iterations $n$ to the full prediction resolution. We then compute the L1 loss of these multi-scale depth outputs with respect to the ground truth and obtain the final loss through a weighted summation.
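A minimal NumPy sketch of such a multi-scale sequence loss, assuming RAFT/CREStereo-style exponential weighting in which later iterations receive larger weights. The decay `gamma`, the per-scale weights, and nearest-neighbor resizing are assumptions, not values from the paper; all names are hypothetical.

```python
import numpy as np


def upsample_nearest(x, out_hw):
    """Nearest-neighbour resize of a 2D map to (H, W)."""
    h, w = x.shape
    out_h, out_w = out_hw
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return x[rows][:, cols]


def multiscale_sequence_l1(preds_per_scale, gt, scale_weights, gamma=0.9):
    """Weighted L1 over every refinement iteration at every scale.

    preds_per_scale: one list per scale of 2D depth predictions, ordered from
    first to last iteration; the last iteration gets weight 1, earlier ones decay.
    """
    total = 0.0
    for preds, w_scale in zip(preds_per_scale, scale_weights):
        n = len(preds)
        for i, pred in enumerate(preds):
            up = upsample_nearest(pred, gt.shape)
            total += w_scale * gamma ** (n - i - 1) * float(np.mean(np.abs(up - gt)))
    return total
```

Because the outputs here are depths, resizing leaves their values unchanged; if the low-resolution outputs were disparities instead, they would also have to be multiplied by the upsampling factor before comparison.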

In the second stage, we freeze the depth estimation component and train the network for visibility estimation. For samples with stereo image pairs, we use the depth inferred by the first-stage model as input to the depth branch; for the remaining monocular samples, we use the ground-truth depth provided by the dataset. Since the proposed network produces multiple outputs, including a transmittance map, a pixel-wise visibility map, and a binary decision on the presence of patchy fog, we design a multi-task, weighted composite loss function that jointly optimizes all these objectives. Each component is normalized and scaled by a learnable or empirically tuned weight to balance its contribution during training.

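Specifically, the overall loss function is formulated as a weighted sum of these individual loss terms, as shown in Equation (9):

$$\mathcal{L} = \lambda_{vis}\,\mathcal{L}_{vis} + \lambda_{pf}\,\mathcal{L}_{pf} \tag{9}$$

where $\mathcal{L}_{vis}$ and $\mathcal{L}_{pf}$ denote the visibility regression loss and the patchy fog classification loss, respectively, and $\lambda_{vis}$ and $\lambda_{pf}$ are empirically tuned weights that balance the contribution of each task during training.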

For visibility estimation, we observe a significant correlation among the extinction coefficient map $B$, the depth map $D$, and the transmittance map $T$, which under Koschmieder’s model are linked per pixel by $T = e^{-B \odot D}$. Moreover, the transmittance map plays a crucial role in dehazing foggy images. To leverage these physical relationships, we introduce a semi-supervised loss function with strong physical constraints. The overall objective is formulated as a weighted sum of the extinction coefficient regression loss, the transmittance regression loss, the transmittance reconstruction loss, and the dehazed image reconstruction loss. The L1 loss is used for every term to measure the discrepancy between the estimated results and the corresponding ground truths. The loss function is defined as Equation (10):

where the weighting coefficients are hyperparameters that balance the transmittance loss and the visibility loss and vary dynamically during training. To align with the distribution used during dataset generation and enhance model generalization, we randomly sample the value of

from the same interval as used in data generation step to introduce small perturbations during training.
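The four physically motivated terms can be sketched in NumPy as below. Exactly what each term compares is an assumption here: the reconstruction term checks $e^{-B \odot D}$ against ground-truth transmittance, and the dehazing term inverts $I = J t + A(1 - t)$ with the predicted transmittance. The sketch takes the atmospheric light as a plain argument and uses fixed placeholder weights instead of the dynamic schedule; all names are hypothetical.

```python
import numpy as np


def l1(a, b):
    return float(np.mean(np.abs(a - b)))


def visibility_loss(beta_pred, t_pred, depth, foggy, clear, beta_gt, t_gt,
                    airlight, weights=(1.0, 1.0, 1.0, 1.0), t_min=0.05):
    """Weighted sum of four L1 terms (term definitions are assumptions).

    beta_*, t_*, depth: (H, W); foggy, clear: (H, W, 3); airlight: scalar.
    """
    w_beta, w_t, w_rec, w_dehaze = weights
    loss_beta = l1(beta_pred, beta_gt)  # extinction coefficient regression
    loss_t = l1(t_pred, t_gt)           # transmittance regression
    # Transmittance reconstructed from predicted extinction and depth.
    loss_rec = l1(np.exp(-beta_pred * depth), t_gt)
    # Dehaze by inverting I = J * t + A * (1 - t); clamp t to avoid blow-up.
    t = np.clip(t_pred, t_min, 1.0)[..., None]
    dehazed = (foggy - airlight * (1.0 - t)) / t
    loss_dehaze = l1(dehazed, clear)
    return (w_beta * loss_beta + w_t * loss_t
            + w_rec * loss_rec + w_dehaze * loss_dehaze)
```

Since the reconstruction and dehazing terms need no labels beyond depth and the clear image, they tie the extinction and transmittance heads to the scattering model even where direct supervision is weak, which is the sense in which the loss is semi-supervised.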

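For the patchy fog classification loss $\mathcal{L}_{pf}$, we employ the binary cross-entropy loss over a batch of $N$ samples:

$$\mathcal{L}_{pf} = -\frac{1}{N}\sum_{j=1}^{N}\left[y_j \log \hat{y}_j + \left(1 - y_j\right)\log\left(1 - \hat{y}_j\right)\right]$$

where $y_j$ denotes the ground-truth label of sample $j$, and $\hat{y}_j$ represents the model’s predicted probability that patchy fog is present in that sample.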
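A plain-Python sketch of the binary cross-entropy term and its combination with the visibility loss in the form of Eq. (9). The clamping epsilon and the default weights are placeholders, not the paper’s tuned values, and the function names are hypothetical.

```python
import math


def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean BCE over a batch; probabilities are clamped away from 0 and 1."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def total_loss(loss_vis, loss_pf, lambda_vis=1.0, lambda_pf=1.0):
    """Weighted sum of visibility and patchy-fog losses (placeholder weights)."""
    return lambda_vis * loss_vis + lambda_pf * loss_pf
```

A maximally uncertain prediction of 0.5 costs $\ln 2 \approx 0.693$ per sample regardless of the label, which gives a convenient reference for judging whether the classifier has learned anything.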