Abstract

With rapid urbanization, ambient air pollution is becoming increasingly serious. Among the many pollutants, fine particulate matter (PM2.5) affects the urban atmospheric environment to the greatest extent, so predicting its concentration is of great significance to human health and environmental protection. This paper proposes a CNN-SSA-DBiLSTM-attention deep learning fusion model. Meteorological observation data and pollutant data from eight stations in Bijie from 1 January 2015 to 31 December 2022 were used as the sample data for training and testing. First, missing values were filled in and a correlation analysis was performed on the data. Second, a convolutional neural network (CNN) was used for feature extraction. A deep bidirectional long short-term memory network (DBiLSTM) was then used to model the relationship between the input and actual output sequences, and an attention mechanism was added to enhance the impact of the relevant information. The number of units in the DBiLSTM and the number of training epochs of the whole network were optimized using the sparrow search algorithm (SSA), and the prediction was output after optimization. PM2.5 concentration was predicted over different time spans and seasons, and the results were compared with those of the CNN-DBiLSTM, BiLSTM, and LSTM models. The CNN-SSA-DBiLSTM-attention model had the best prediction performance, its accuracy improved as the prediction time span increased, and its coefficient of determination (R2) was stable at about 0.95. These results indicate that the proposed CNN-SSA-DBiLSTM-attention framework is a reliable and accurate method for predicting PM2.5 concentration. The proposed method could inspire researchers to develop even more effective methods for atmospheric environment pollution modeling.

1. Introduction

In recent years, with the continuous acceleration of industrialization [1], China’s ecological and environmental pollution problems have become prominent [1,2]. Air pollution is particularly severe: air pollutants such as PM2.5 have accumulated in large amounts [3] and have received significant attention across society [4]. PM2.5 is fine particulate matter with an aerodynamic diameter of <2.5 μm [5]; it is one of the main sources of air pollution in urban areas [6,7]. The sources of PM2.5 include direct sources [8] and indirect sources [9]. Direct sources emit particulate matter themselves, such as smoke, particles from automobile exhaust, and sand and soil raised by the wind. Indirect sources produce secondary pollution through complex reactions in the air of gases emitted by pollution sources; for example, H2S and SO2 discharged from a boiler are oxidized in the atmosphere to form sulfate particles. With the implementation of the “Air Pollution Prevention and Control Action Plan”, the PM2.5 pollution problem in most cities in China has been alleviated [10], but PM2.5 concentrations have not yet reached the national standard [11]. Therefore, predicting PM2.5 concentration in advance could help to prevent and reduce its harm to human beings.

At present, numerical prediction, statistical prediction, and artificial intelligence prediction are the three main methods for predicting pollutants [12,13]. The first, numerical prediction, simulates the space–time distribution of air pollutants from pollution source data and meteorological data; for example, the WRF-Chem model has been used to predict surface air pollutants in East China [14,15]. Li et al. [16] introduced the idea of regional chemical transport on the basis of the CMAQ model to forecast the air quality in Urumqi. Although WRF and CMAQ have improved the prediction accuracy to a certain extent, complex chemical reactions and geographical conditions cannot be easily simulated, so the performance of numerical prediction remains limited. The second method uses statistical models to predict PM2.5 concentration. In 2021, Xu Dong et al. [17] took basic monitoring index data on temperature, inhalable particulate matter (PM10), and CO in Chengdu as the research object and built a multivariate linear regression (MLR) model for PM2.5 concentration. In the same year, Xu Yixin et al. [18] combined wavelet analysis with a regression model and used the resulting W-MLR model to analyze PM2.5. The prediction accuracy was improved, but this method needs to construct the mapping relationship between variables and results, and it is difficult to test.

The last method, artificial intelligence (AI), is currently the most popular. It includes traditional machine learning and deep learning. In terms of traditional machine learning, in 2019 Ren et al. [19] introduced an undersampling method based on the random forest (RF) to reduce the impact of class imbalance on the results. In 2021, Guo et al. [20] used the RF model to integrate GNSS meteorological parameters and predict concentrations; their experimental results show that increasing the prediction time does not have a significant impact on the prediction accuracy of the RF model. Zhao et al. [21] used an improved support vector machine to predict PM2.5 concentration and verified that the new model had better generalization ability and performance than other models.

In terms of deep learning, models such as back-propagation (BP) neural networks, the multi-layer perceptron (MLP), and long short-term memory (LSTM) are widely used for the prediction of air quality [22,23,24]. For example, BP [25] and LSTM neural networks [26] have been used to predict PM2.5 concentration, and convolutional neural networks (CNNs) are increasingly being used to predict hourly PM2.5 concentrations [27]. However, the prediction accuracy of a single model is limited. In order to improve the prediction accuracy, models that combine multiple neural networks have been developed. One model [28] is based on a recurrent neural network (RNN) with an attention mechanism, in which attention weights are allocated proportionally to the time-series input features. The experimental results show that the prediction accuracy of this model is higher than that of other general neural network models [29]. In addition, the combination of CNNs and BP neural networks has achieved significant results for multi-region and multi-line-of-sight concentration prediction [30]. In PM2.5 prediction, some scholars compared an MLP neural network and RF with an LSTM neural network and found that LSTM performed better [31]. LSTM is able to better capture the characteristics of time-series data [32] and effectively avoids the vanishing gradient problem in time-series prediction [33]. At present, many air quality prediction models based on LSTM have been proposed, for example, LSTM extension (LSTME) [34], convolution LSTM extension (C-LSTME) [35], graph convolution LSTM (GC-LSTM) [36], and deep multi-output LSTM (DM-LSTM) [37]. Although neural networks have been successfully used to predict data series, shortcomings remain, such as over-fitting, convergence to local optima, and difficult parameter optimization [38].

This paper constructs a CNN-SSA-DBiLSTM-attention model for time series prediction. The DBiLSTM neural network is built on the LSTM neural network; it is used for prediction, and an attention mechanism is introduced to enhance the impact of key information. To address the problem of local optima in the selection of model network parameters, the sparrow search algorithm is used to optimize the model parameters. The hourly meteorological observation data and pollutant data of eight stations in Bijie, Guizhou, from 1 January 2015 to 31 December 2022 are used as input data, and the multiple imputation method is used to fill in the missing values. Following the normalization of the data, a correlation analysis is carried out to remove variables with low correlation in order to reduce the data dimensions and improve prediction accuracy. Finally, the CNN-SSA-DBiLSTM-attention model is used to predict PM2.5 concentration and is compared with three other models: CNN-BiLSTM, BiLSTM, and LSTM. The final results show that the proposed CNN-SSA-DBiLSTM-attention model has better prediction results, and that its accuracy increases as the prediction time increases. The model thus effectively addresses the decline in prediction accuracy over long time series.

2. Materials and Methods

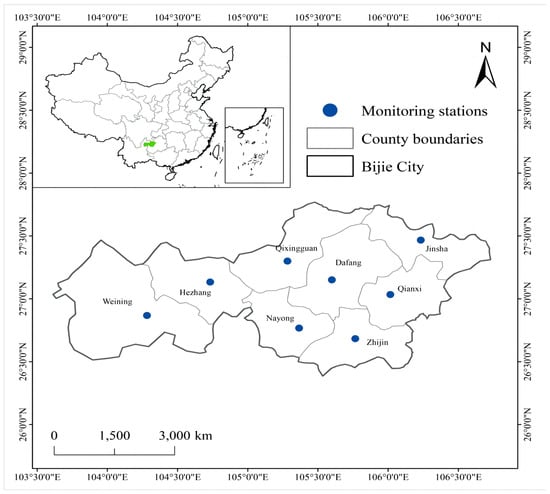

There are eight pollutant monitoring stations in Bijie District; they are located in Qixingguan District, Dafang County, Qianxi County, Jinsha County, Zhijin County, Nayong County, Weining Yi Autonomous County, and Hezhang County. Each region has a monitoring station, so the average data of the eight regional monitoring stations were selected as the experimental data. The study area and site distribution are shown in Figure 1.

Figure 1.

Regional division and site distribution, Bijie, China.

The data cover the period from 1 January 2015 to 31 December 2022. The meteorological data are from the National Meteorological Administration, and the observed meteorological variables were provided by eight district and county weather stations. The data samples are hourly observations. Each sample contains 14 characteristic elements, including temperature, pressure, precipitation, relative humidity, wind speed, wind direction, dew point temperature, and visibility. The environmental monitoring stations provide six basic pollutant variables, namely PM2.5, PM10, NO2, SO2, O3, and CO, together with the air quality index (AQI) (Table 1).

Table 1.

Variable description.

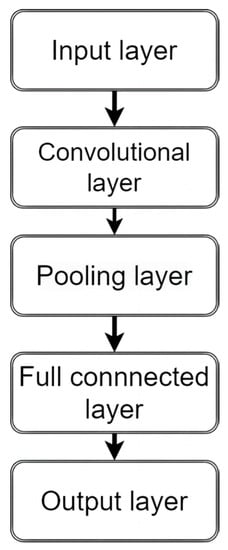

2.1. Convolutional Neural Network (CNN)

In order to recognize and make better use of existing features, this study uses a CNN to extract features. Through local perception, weight sharing, and pooling, a CNN extracts the overall characteristics of the input data within a certain range and eliminates redundant data before passing the result to the subsequent model. This greatly improves the recognition performance of subsequent models. The convolutional layers extract features across multiple hidden layers and share the convolution kernel. The CNN can easily handle multidimensional data [39]. The convolutional neural network structure is shown in Figure 2.

Figure 2.

CNN structure.

The input layer is used to obtain input data.

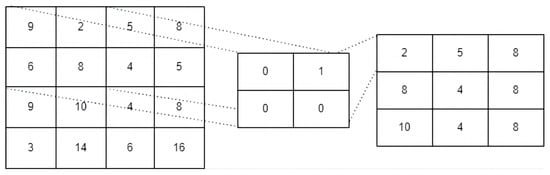

The convolutional layer is used for the convolution of input layer data. The so-called convolution is the inner product of the original data and the intermediate filter matrix to obtain a new characteristic matrix, as shown in Figure 3.

Figure 3.

Schematic diagram of convolutional layer intermediate filter.

The corresponding formula is as follows:

$$
y = f(w \otimes x + b)
$$

where $y$ is the convolution output, $f$ is the activation function, $b$ is the bias term, $w$ is the convolution kernel, $x$ is the input, and $\otimes$ represents the inner product.

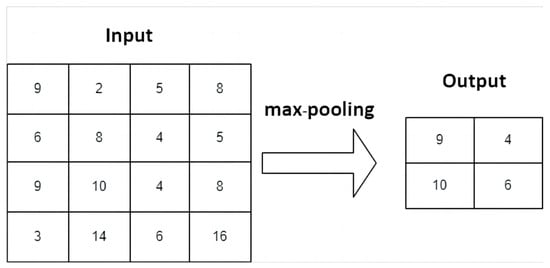

The pooling layer is used to optimize the output of the convolutional layer, reduce the feature dimensions and improve the calculation speed. The pooled data have certain translation and rotation invariance, as shown in Figure 4.

Figure 4.

Schematic diagram of the pooling layer.

The fully connected layer maps all pooled data to the sample label space for the later output layer.

This paper uses a CNN to extract the features of the input data, and then uses DBiLSTM to predict the data.
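The convolution and pooling steps described above can be illustrated with a minimal sketch. It is not the network used in this paper: it assumes a single one-dimensional input series, a ReLU activation, and hand-picked kernels, and the function names `conv1d` and `max_pool1d` are hypothetical.

```python
def conv1d(x, kernel, bias=0.0):
    """Valid 1-D convolution (sliding inner product) followed by ReLU."""
    k = len(kernel)
    out = []
    for start in range(len(x) - k + 1):
        window = x[start:start + k]
        # Inner product of the window with the kernel, plus the bias term.
        s = sum(w * v for w, v in zip(kernel, window)) + bias
        out.append(max(0.0, s))  # ReLU activation (an assumption)
    return out


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


# Illustrative values only, not data from the study.
signal = [1.0, 3.0, 2.0, 5.0, 4.0, 6.0]
features = conv1d(signal, kernel=[1.0, -1.0])   # keeps only decreases x[t] - x[t+1]
smoothed = conv1d(signal, kernel=[0.5, 0.5])    # two-point moving average
pooled = max_pool1d(smoothed, size=2)           # halves the feature length
```

Pooling reduces the five smoothed values to two, which is the dimension reduction the pooling layer provides before the features reach the recurrent layers.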

2.2. Deep Bidirectional Long Short-Term Memory (DBi-LSTM)

2.2.1. BiLSTM

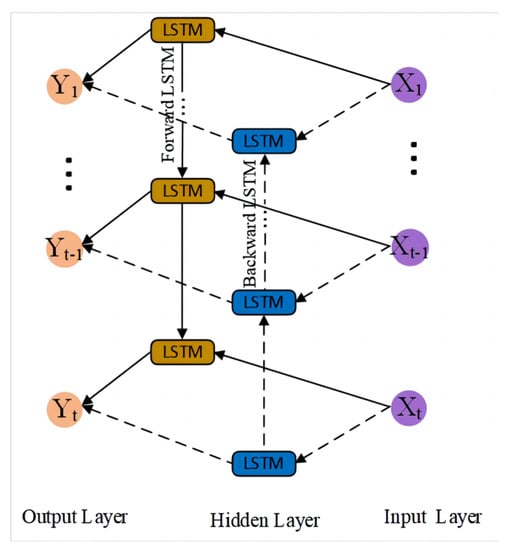

The bidirectional long short-term memory network (BiLSTM) is a bidirectional recurrent network that is trained on both the forward time series and the reverse time series, so its output contains information on the entire time series [40]. The BiLSTM network was proposed because the long short-term memory network (LSTM) lacks a connection between earlier and later data. In essence, both the BiLSTM network and the LSTM network are RNNs.

The LSTM network can only use the input information from before a given time to predict the results, so introducing the BiLSTM network produces better results [41]. A BiLSTM network runs two LSTMs over the input sequence, one in the forward direction and one in the reverse direction, so that the output at the current time node can use information from both directions. The input at each time node is sent to both the forward and reverse LSTM units, and each unit produces an output according to its own state. These two outputs are then combined at the output node to form the final output. The BiLSTM network structure is shown in Figure 5.

Figure 5.

BILSTM network structure.

2.2.2. DBi-LSTM

The DBiLSTM network is composed of an input layer, a hidden layer, a dense layer, and an output layer. The hidden layer is composed of n BiLSTM layers. Each BiLSTM layer contains one forward LSTM network and one reverse LSTM network, which enables every layer to obtain information from both directions at the same time. The first n − 1 layers return their full output sequences, which are fused through an adder and then passed to the next layer. The nth layer returns only the last time step of its output sequence, and the prediction result is output through one dense layer (Figure 6). The calculation process is as follows.

Figure 6.

Structure diagram of the DBILSTM neural network.

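Suppose the $i$th input sequence of the DBiLSTM network is $x_i = [x_1, x_2, \ldots, x_m]$; then, the output of the first layer can be expressed as

$$
h_i^{1} = f\left(\overrightarrow{W}^{1} x_i\right) \oplus f\left(\overleftarrow{W}^{1} x_i\right)
$$

where $f$ is the activation function of the BiLSTM network; $\overrightarrow{W}^{1}$ and $\overleftarrow{W}^{1}$ are the forward and reverse weight matrices, respectively; the superscript 1 indicates the first layer; and ⊕ is the addition calculation. The output sequence of layer $v$ can be expressed as

$$
h_i^{v} = f\left(\overrightarrow{W}^{v} h_i^{v-1}\right) \oplus f\left(\overleftarrow{W}^{v} h_i^{v-1}\right)
$$

The final output can be expressed as

$$
\hat{y}_i = W_o \, g\left(W_d h_i^{n} + b_d\right)
$$

where $g(\cdot)$ is the activation function of the dense layer, usually a ReLU function; $W_d$ and $W_o$ are the weight parameters of the dense layer and the output layer, respectively [42]; and $b_d$ is the offset of the dense layer.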
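The layer-by-layer calculation described above can be sketched in a few lines. This is a minimal illustration under strong assumptions, not the trained network of this paper: the hidden size is 1 so that every weight is a scalar, the input is a univariate series, the weights are fixed by hand rather than learned, and the names `lstm_pass`, `bilstm_layer`, and `dbilstm` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_pass(seq, p):
    """Run a hidden-size-1 LSTM over seq; return the hidden state at every step.

    p maps each gate name ("i", "f", "o", "g") to scalars (w_x, w_h, b).
    """
    h, c, outputs = 0.0, 0.0, []
    for x in seq:
        gate = {k: w_x * x + w_h * h + b for k, (w_x, w_h, b) in p.items()}
        i, f, o = sigmoid(gate["i"]), sigmoid(gate["f"]), sigmoid(gate["o"])
        g = math.tanh(gate["g"])
        c = f * c + i * g        # cell state update
        h = o * math.tanh(c)     # hidden state
        outputs.append(h)
    return outputs


def bilstm_layer(seq, p_fwd, p_bwd):
    """Forward and reverse passes fused by element-wise addition."""
    fwd = lstm_pass(seq, p_fwd)
    bwd = lstm_pass(seq[::-1], p_bwd)[::-1]  # re-align to forward time order
    return [a + b for a, b in zip(fwd, bwd)]


def dbilstm(seq, layers, w_dense, b_dense, w_out):
    """Stack BiLSTM layers; only the last time step of the top layer is used."""
    for p_fwd, p_bwd in layers:
        seq = bilstm_layer(seq, p_fwd, p_bwd)   # full sequence passed upward
    dense = max(0.0, w_dense * seq[-1] + b_dense)  # ReLU dense layer
    return w_out * dense


# Hand-picked illustrative weights, shared by both directions and both layers.
params = {"i": (0.5, 0.1, 0.0), "f": (0.5, 0.1, 1.0),
          "o": (0.5, 0.1, 0.0), "g": (1.0, 0.1, 0.0)}
layer_out = bilstm_layer([0.2, 0.5, 0.2], params, params)
pred = dbilstm([0.1, 0.4, 0.3, 0.8], [(params, params)] * 2,
               w_dense=1.0, b_dense=0.0, w_out=1.0)
```

Because the reverse pass is flipped back before the addition, each fused value at time step t combines what the forward LSTM has seen up to t with what the reverse LSTM has seen from t onward.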

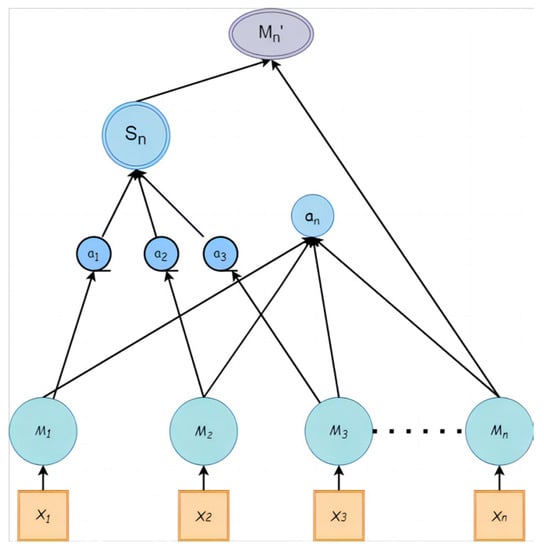

2.3. Attention Mechanism

An attention mechanism is a special structure embedded in a machine-learning model and has a large number of applications in many fields. In essence, it redistributes the focus of the model so that information irrelevant to the target is reduced or ignored and the important information is amplified [43]. The attention mechanism makes the model assign different weights to the input feature vectors, which adjusts the proportion of each input feature, highlights the influential feature vectors, and suppresses the useless ones. This optimizes model learning and leads to better choices without increasing the computational cost of the model. The structure is shown in Figure 7.

Figure 7.

Attention mechanism structure.
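As a rough illustration of how attention weights re-balance the hidden states, the sketch below scores each time step against a query vector, normalizes the scores with a softmax, and forms a weighted sum. The dot-product scoring function, the `query` vector, and the function name `attention_pool` are assumptions made for this example; the text does not specify the scoring function used in the model.

```python
import math


def softmax(scores):
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Weight each time step's hidden vector by its softmax-normalized score."""
    scores = [sum(q * h for q, h in zip(query, h_t)) for h_t in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [sum(w * h_t[d] for w, h_t in zip(weights, hidden_states))
               for d in range(dim)]
    return weights, context


# Three time steps with two hidden features each (illustrative values).
states = [[0.1, 0.0], [0.9, 0.2], [0.3, 0.1]]
weights, context = attention_pool(states, query=[2.0, 1.0])
```

The second time step receives the largest weight because it is the most similar to the query, so it dominates the context vector that is passed on.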

2.4. Sparrow Search Algorithm (SSA)

The sparrow search algorithm (SSA) is a swarm intelligence optimization algorithm that models the foraging and anti-predation behavior of sparrows. The population is divided into three roles: discoverers, enrollees, and watchers. Discoverers have a broad search range and guide the population toward food. Enrollees follow the discoverers in order to find food and improve their own fitness. When natural enemies threaten the population, the watchers raise the alarm and the population immediately engages in anti-predation behavior. In general, discoverers make up 10% to 20% of the population. Their positions are updated as follows:

$$
X_{i,j}^{t+1} =
\begin{cases}
X_{i,j}^{t} \cdot \exp\left(\dfrac{-i}{\alpha \cdot T}\right), & R_2 < ST \\
X_{i,j}^{t} + Q \cdot L, & R_2 \ge ST
\end{cases}
$$

where $t$ denotes the current iteration count, $X_{i,j}^{t}$ is the position of the $i$th sparrow in the $j$th dimension at iteration $t$, $T$ is the maximum number of iterations, $Q$ is a random number that follows the standard normal distribution, and $\alpha$ is a uniform random number within (0,1) [44]. $L$ is a 1 × d matrix in which every element is 1, $R_2$ is the warning value, and $ST$ is the safety threshold.

If $R_2 < ST$, the warning threshold has not been reached. When $R_2 \ge ST$, the warning threshold has been reached.

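The remaining sparrows, except the discoverers, are all enrollees. They use the following formula to update their positions:

$$
X_{i,j}^{t+1} =
\begin{cases}
Q \cdot \exp\left(\dfrac{X_{\mathrm{worst}}^{t} - X_{i,j}^{t}}{i^{2}}\right), & i > n/2 \\
X_{P}^{t+1} + \left|X_{i,j}^{t} - X_{P}^{t+1}\right| \cdot A^{+} \cdot L, & i \le n/2
\end{cases}
$$

where $X_{\mathrm{worst}}^{t}$ stands for the worst position in the d-dimensional space at iteration $t$, and $X_{P}^{t+1}$ stands for the best position, occupied by a discoverer, at iteration $t+1$ [45]. $A$ is a 1 × d matrix whose elements are randomly 1 or −1, with $A^{+} = A^{\mathrm{T}}(AA^{\mathrm{T}})^{-1}$, and $n$ is the population size. If $i > n/2$, the $i$th enrollee has a low level of fitness. The $i$th enrollee has a reasonably high level of fitness when $i \le n/2$. Between 10% and 20% of the population typically act as watchers for reconnaissance and early warning, and their locations are updated as follows:

$$
X_{i,j}^{t+1} =
\begin{cases}
X_{\mathrm{best}}^{t} + \beta \cdot \left|X_{i,j}^{t} - X_{\mathrm{best}}^{t}\right|, & f_i > f_g \\
X_{i,j}^{t} + K \cdot \left(\dfrac{\left|X_{i,j}^{t} - X_{\mathrm{worst}}^{t}\right|}{(f_i - f_w) + \varepsilon}\right), & f_i = f_g
\end{cases}
$$

where $X_{\mathrm{best}}^{t}$ is the current global best position; $K$ is a random number between −1 and 1; $\beta$ is a random number produced by a normal distribution with a mean value of 0 and a variance of 1; $f_i$ is the fitness of the $i$th sparrow; $f_g$ and $f_w$ are the best and worst fitness values of the current sparrow population [46]; and $\varepsilon$ is a very small number that avoids a zero denominator.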
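The three position-update rules for discoverers, enrollees, and watchers can be sketched as follows. This is a minimal illustration that follows the commonly published form of SSA, not the configuration used in this paper: it minimizes a toy sphere function rather than a validation error over DBiLSTM units and epochs, positions are clipped to the search bounds (an added assumption), and the function name `ssa_minimize` and all default parameter values are hypothetical.

```python
import math
import random


def sphere(p):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in p)


def ssa_minimize(fitness, dim, lo, hi, n=20, iters=50,
                 pd=0.2, sd=0.2, st=0.8, seed=0):
    rng = random.Random(seed)
    eps = 1e-12

    def clip(v):
        return min(hi, max(lo, v))

    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    best = min(pop, key=fitness)[:]
    n_disc = max(1, int(pd * n))      # number of discoverers
    n_watch = max(1, int(sd * n))     # number of watchers

    for _ in range(iters):
        pop.sort(key=fitness)         # lowest fitness (best) first
        worst = pop[-1][:]
        r2 = rng.random()             # warning value R2

        # Discoverers: shrink while it is safe, otherwise take a random step.
        for i in range(n_disc):
            if r2 < st:
                alpha = 1.0 - rng.random()            # uniform in (0, 1]
                scale = math.exp(-(i + 1) / (alpha * iters))
                pop[i] = [clip(x * scale) for x in pop[i]]
            else:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(x + q) for x in pop[i]]
        leader = min(pop[:n_disc], key=fitness)[:]

        # Enrollees: the worse half relocates, the rest move near the leader.
        for i in range(n_disc, n):
            if i + 1 > n / 2:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(q * math.exp((w - x) / (i + 1) ** 2))
                          for w, x in zip(worst, pop[i])]
            else:
                # For a 1 x d row A of random +/-1, A+ = A^T / d, so the
                # step is a single scalar shared by every dimension.
                step = sum(rng.choice((-1.0, 1.0)) * abs(x - p)
                           for x, p in zip(pop[i], leader)) / dim
                pop[i] = [clip(p + step) for p in leader]

        # Watchers: a random subset reacts to danger.
        fits = [fitness(p) for p in pop]
        f_best, f_worst = min(fits), max(fits)
        g_best = pop[fits.index(f_best)][:]
        g_worst = pop[fits.index(f_worst)][:]
        for i in rng.sample(range(n), n_watch):
            if fits[i] > f_best:
                beta = rng.gauss(0.0, 1.0)
                pop[i] = [clip(g + beta * abs(x - g))
                          for g, x in zip(g_best, pop[i])]
            else:
                k = rng.uniform(-1.0, 1.0)
                denom = (fits[i] - f_worst) + eps
                pop[i] = [clip(x + k * abs(x - w) / denom)
                          for x, w in zip(pop[i], g_worst)]

        cand = min(pop, key=fitness)
        if fitness(cand) < fitness(best):
            best = cand[:]
    return best, fitness(best)


best, best_fit = ssa_minimize(sphere, dim=2, lo=-5.0, hi=5.0)
```

To tune a network in this way, the fitness function would instead train the model with the candidate hyperparameters and return a validation error, with each position rounded to valid integer values before use.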

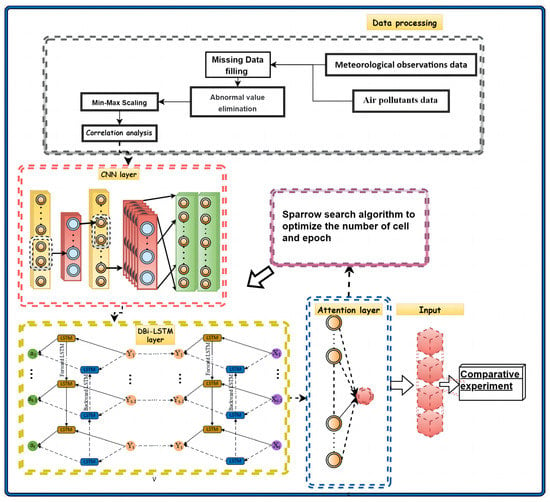

2.5. CNN-SSA-DBiLSTM-Attention Model

First, a deep bidirectional long short-term memory network was established. On the basis of LSTM, a reverse LSTM layer was added to form the bidirectional long short-term memory network (BiLSTM), and multiple BiLSTM layers were stacked to form the deep bidirectional long short-term memory network (DBiLSTM). Then, a convolutional neural network (CNN) consisting of two convolutional layers, two pooling layers, and two fully connected layers was established to improve the utilization of the feature data. An attention mechanism was introduced which, by mapping weights and learning parameter matrices, assigns different weights to the hidden states of the DBiLSTM in order to capture important features of long time-series data and enhance the impact of key information. To address the problem of local optima in the selection of model network parameters, the sparrow search algorithm was used to optimize the model parameters and improve the prediction accuracy of the model, after which the prediction results were output. The whole process and model structure are shown in Figure 8.

Figure 8.

The overall framework of the CNN-SSA-DBiLSTM-attention neural network model.

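In this experiment, four evaluation indicators, root mean square error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and the coefficient of determination (R2), were used to evaluate the model prediction results. The coefficient of determination reflects the goodness of fit, the mean absolute error reflects the magnitude of the model error, the mean absolute percentage error expresses the error relative to the true values, and the root mean square error reflects the overall stability of the model error. The formulas are as follows:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^{2}}
$$

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|
$$

$$
\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\hat{y}_i - y_i}{y_i}\right|
$$

$$
R^{2} = 1 - \frac{\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^{2}}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^{2}}
$$

where $\hat{y}_i$ is the $i$th predicted value and $y_i$ is the $i$th true value; $n$ is the total number of data in the test set and $\bar{y}$ is the average of the true values.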
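The four indicators can be computed directly from paired true and predicted values, as in the short sketch below. It uses the standard definitions of RMSE, MAE, MAPE (in percent), and R2; the function name `regression_metrics` and the sample values are illustrative and are not results from this study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, MAPE (in percent), and R2 for paired values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    return {
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(e) for e in errors) / n,
        # MAPE is undefined when a true value is zero.
        "MAPE": 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
        "R2": 1.0 - ss_res / ss_tot,
    }


metrics = regression_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0])
```

For these sample values the errors are 2, −2, 3, and −3, which gives an MAE of 2.5, an RMSE of about 2.55, a MAPE of 11.875, and an R2 of 0.948.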

2.6. Data Preprocessing

Missing Value Processing

This study used data from eight stations in Bijie City, with a resolution of 1 h, from 1 January 2015 to 31 December 2022. Owing to the long observation period, damage to the sensors, or the impact of the environment, the dataset contains missing and abnormal values. In order to improve the accuracy of the model, data cleaning is required. The numbers of missing air pollutant and meteorological observation records are shown in Table 2. Missing data were filled in by multiple imputation, using the mice package in the R language.

Table 2.

Missing data.

It can be seen from the table that the meteorological data loss is more serious. This is because, in addition to computer failure and monitoring software failure, sometimes the collector, sensor, and other measuring and reporting instruments are hit by lightning when thundery weather occurs. This inevitably leads to instrument damage and failure, resulting in the lack of measurements for all surface meteorological observation data. At the same time, because of the interference of certain complex weather events, the instrument’s collection and transmission of meteorological elements can be affected, resulting in the occurrence of data anomalies. For example, when a rainstorm occurs, the filter covers the temperature and humidity sensor, and the ground temperature sensor is likely to be soaked with rain, resulting in abnormal phenomena, such as missing measurements of temperature and humidity data.

Owing to the influence of different units, the data scale of each influencing factor may vary greatly. In order to make each influencing factor comparable, we need to normalize them. Moreover, for LSTM prediction, the value after the activation function is between −1 and 1, so the data must be normalized. If the data are not normalized, the training speed of the model will slow down, and the final prediction result will be adversely affected.
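A common way to put variables with different units on the same scale is min-max normalization, sketched below together with the inverse transform needed to convert predictions back to concentrations. The text does not state which normalization was used, so the choice of min-max scaling to [0, 1], the function names, and the sample values are assumptions.

```python
def minmax_fit(values):
    """Return the (min, max) pair used for scaling."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return lo, hi


def minmax_scale(values, lo, hi):
    """Map values linearly so that lo -> 0 and hi -> 1."""
    return [(v - lo) / (hi - lo) for v in values]


def minmax_inverse(scaled, lo, hi):
    """Undo minmax_scale, e.g. to report predictions in original units."""
    return [s * (hi - lo) + lo for s in scaled]


# Illustrative PM2.5 values, not data from the study.
pm25 = [12.0, 35.0, 58.0, 104.0]
lo, hi = minmax_fit(pm25)
scaled = minmax_scale(pm25, lo, hi)
restored = minmax_inverse(scaled, lo, hi)
```

In practice the minimum and maximum would be fitted on the training set only and then reused for the test set, so that no information from the test period leaks into training.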

2.7. Correlation Analysis

For the influencing factors that were initially screened according to experience, we used the collected data to analyze the correlation between each factor and the PM2.5 concentration.

The correlation between each explanatory variable and the dependent variable (PM2.5) was calculated, and the correlation coefficients are shown in Table 3. The correlation analysis shows that PM2.5 has a strong positive correlation with PM10 and the air quality index (AQI), with correlation coefficients of 0.95 and 0.92, respectively, illustrating the importance of PM10 and AQI as input variables. Following PM10 and AQI, the order of correlation coefficients is NO2 (0.53), SO2 (0.48), and CO (0.36). O3, although also an environmental pollutant, has a weak negative correlation with PM2.5. This is because the main source of PM2.5 is anthropogenic emissions, such as the combustion of fossil fuels and automobile exhaust, and the NO2, SO2, and CO contained in these emissions generate PM2.5 through a variety of chemical and physical processes in the atmosphere. PM2.5 is also one of the variables used to calculate the air quality index. By contrast, O3 in the near-surface (tropospheric) atmosphere is mainly produced by photochemical reactions, so its source and mode of generation differ from those of PM2.5.

Table 3.

Correlation coefficient between various factors and PM2.5.

Among the meteorological variables, visibility has the strongest negative correlation with PM2.5. The correlation coefficient is −0.49, indicating that visibility decreases when the concentration of PM2.5 increases. The negative correlation with dew point temperature is second only to that with visibility, with a correlation coefficient of −0.26. Other meteorological variables, including wind direction, temperature, precipitation, relative humidity, and wind speed, are also negatively correlated with PM2.5. It can also be seen from Table 3 that the correlation between these variables and PM2.5 is not high, and the correlation coefficient between pressure and PM2.5 is only 0.01, indicating that there is almost no linear relationship between pressure and PM2.5. In order to reduce the data dimensions and improve the prediction accuracy, we removed pressure, which had the lowest correlation coefficient.
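The screening step can be illustrated with a small sketch that computes the Pearson correlation of each candidate variable with PM2.5 and drops variables whose absolute correlation falls below a cutoff. The cutoff of 0.05, the function names, and the tiny synthetic series are assumptions for illustration only; they are not the data or the threshold used in this study.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def screen_features(features, target, threshold=0.05):
    """Keep features whose |r| with the target is at least threshold."""
    scores = {name: pearson(col, target) for name, col in features.items()}
    kept = [name for name, r in scores.items() if abs(r) >= threshold]
    return scores, kept


# Synthetic series chosen so that one variable is uncorrelated with the target.
pm25 = [10.0, 20.0, 30.0, 40.0, 50.0]
features = {
    "pm10": [15.0, 32.0, 41.0, 62.0, 70.0],
    "visibility": [9.0, 8.0, 6.0, 5.0, 2.0],
    "pressure": [1.0, -1.0, 0.0, -1.0, 1.0],
}
scores, kept = screen_features(features, pm25)
```

Here the synthetic pressure series has zero correlation with the target and is dropped, while the strongly positive and strongly negative variables are both kept, because the screening uses the absolute value of the coefficient.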

3. Results and Discussions

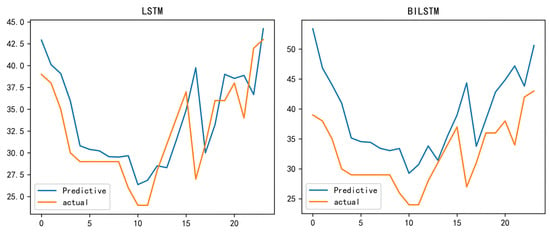

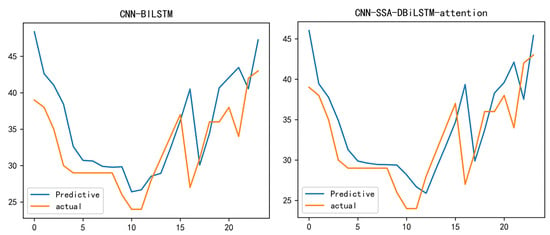

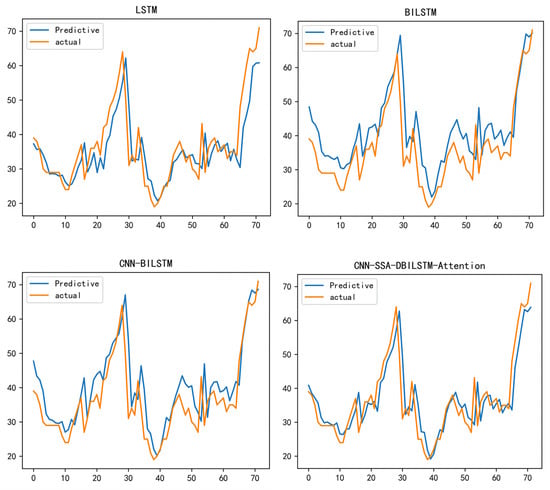

In order to study the prediction performance of the proposed hybrid model, the prediction results for Bijie City at 24 h, 72 h, 168 h, and 720 h were analyzed using the CNN-SSA-DBiLSTM-attention model and compared with those of three baseline models: CNN-BiLSTM-attention, LSTM, and BiLSTM. CNN-SSA-DBiLSTM-attention shows good prediction results at 24 h, 72 h, 168 h, and 720 h. We forecasted and compared the 2021 winter data. The winter data from 2021 were chosen because PM2.5 fluctuated greatly during this period, with the difference between the lowest and highest concentration values exceeding 60; this requires a model with high prediction accuracy.

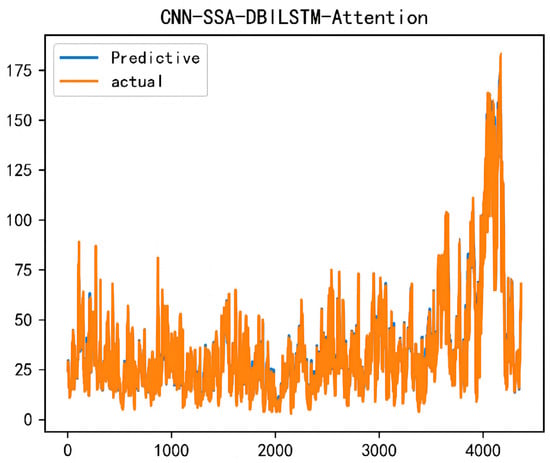

According to the short-term prediction results for 24 h and 72 h, CNN-SSA-DBiLSTM-attention shows good performance in short-term PM2.5 prediction. In the 24 h prediction, the coefficient of determination (R2) of CNN-SSA-DBiLSTM-attention is 0.95, which is higher than the 0.92 and 0.89 of CNN-BiLSTM and BiLSTM and far higher than the 0.82 of LSTM. In terms of the root mean square error (RMSE), mean absolute percentage error (MAPE), and mean absolute error (MAE), the CNN-SSA-DBiLSTM-attention model is also better than the other three models. From Figure 9, it can be seen that CNN-SSA-DBiLSTM-attention has the best prediction performance: compared with the other models, its predicted values are more in line with the actual values. The worst result in the graph is that of BiLSTM. For the 72 h forecast, the evaluation values of the CNN-SSA-DBiLSTM-attention model are RMSE 10.29, MAPE 9.44, MAE 6.41, and R2 0.94, which are the best among the four models. The LSTM model, which has the worst performance, has evaluation values of RMSE 18.95, MAPE 14.80, MAE 11.24, and R2 0.82. From Figure 10, it can be seen that the consistency between the true and predicted values of the CNN-SSA-DBiLSTM-attention model is much higher than that of the other three models, and its predicted values are basically consistent with the trend of the actual values. Overall, the CNN-SSA-DBiLSTM-attention model proposed in this paper is the most accurate for both the 24 h and 72 h predictions (Table 4 and Table 5).

Figure 9.

Actual and predicted values of PM2.5 at 24 h. The x-axis represents the predicted time and the y-axis represents the PM2.5 concentration.

Figure 10.

Actual and predicted values of PM2.5 at 72 h. The x-axis represents the predicted time and the y-axis represents the PM2.5 concentration.

Table 4.

PM2.5 prediction (24/72 h) results. The left values are the 24 h data and the right values are the 72 h data.

Table 5.

PM2.5 prediction (168 h/720 h) results. The left shows 168 h data and the right shows 720 h data.

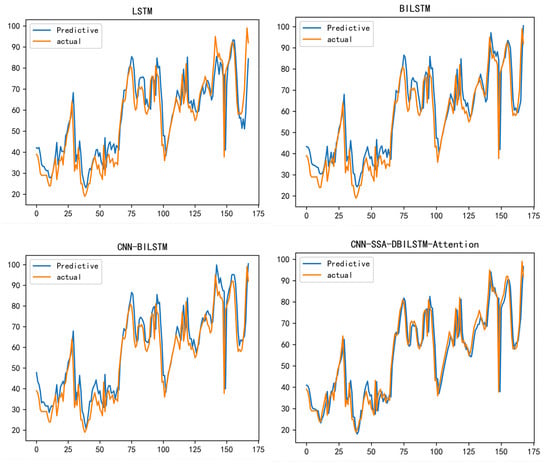

According to the short-term prediction results for one week (168 h) and one month (720 h), the accuracy of the CNN-SSA-DBiLSTM-attention model proposed in this paper is significantly higher than that of the other three comparison models, with RMSE 9.05/10.14, MAPE 10.28/9.68, MAE 5.78/6.22, and R2 0.96/0.95. Each evaluation value is superior to those of the other three models. Comparing the real and predicted values, when the time span is one week the two curves are basically the same and coincide closely; when the time span is one month, the curves coincide almost completely. Overall, the proposed model exhibits good predictive performance at 24 h, 72 h, 168 h, and 720 h. The R2 of the CNN-SSA-DBiLSTM-attention model is stable at about 0.95, which is much better than that of the other three models, and its MAPE, RMSE, and MAE values are also better. The LSTM model has the worst overall performance, while CNN-BiLSTM and BiLSTM perform well. The CNN-SSA-DBiLSTM-attention model performs 15% better than the LSTM model. It can also be seen from the figures that the degree of coincidence between the predicted and true value curves increases significantly as the time span increases. This reflects the excellent performance of the proposed model in short-term prediction (Figure 11 and Figure 12).

Figure 11.

Actual and predicted values of PM2.5 at 168 h. The x-axis represents the predicted time and the y-axis represents the PM2.5 concentration.

Figure 12.

Actual and predicted values of PM2.5 at 720 h. The x-axis represents the predicted time and the y-axis represents the PM2.5 concentration.

In order to further verify the accuracy of the model for long-time-span prediction, this paper used the CNN-SSA-DBiLSTM-attention model to predict PM2.5 concentration over half a year. The prediction results are shown in Figure 13. It can be seen from the figure that the predicted and true values fit almost completely: RMSE is 6.20, MAPE is 11.93, MAE is 4.08, and R2 is 0.96. Compared with the short-term PM2.5 prediction results, the prediction accuracy over a time span of six months remains stable. This indicates that the proposed model also maintains good prediction accuracy for long-time-span prediction.

Figure 13.

Six-month actual PM2.5 and predicted values.

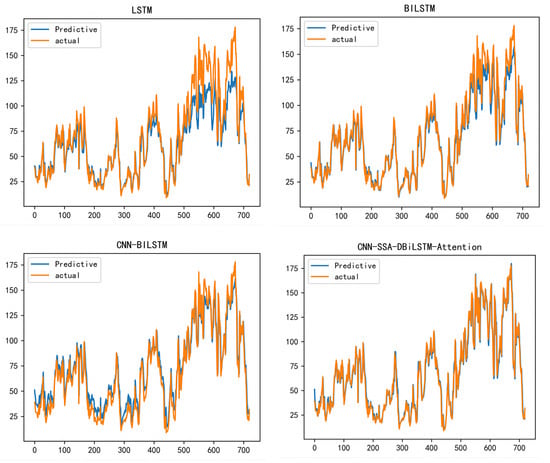

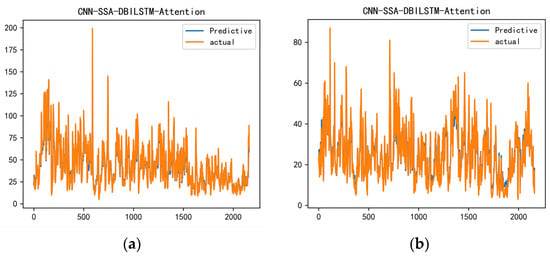

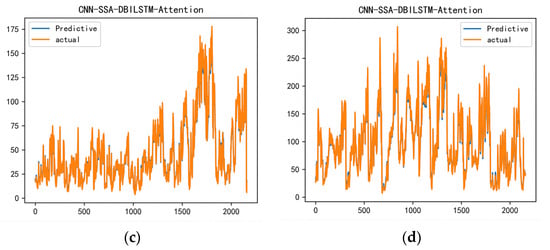

It is beneficial to study the applicability of the model in different seasons and the impact of different seasons on the model’s accuracy. This paper also forecasted and analyzed data from the four seasons, namely spring, summer, autumn, and winter. The results are shown in Table 6. According to the results in the table, the model proposed in this paper is better than the other three models, in terms of R2, MAPE, RMSE, and MAE values, in spring, summer, autumn, and winter. In spring, RMSE is 5.247, MAPE is 12.288, MAE is 3.424, and R2 is 0.94. In summer, RMSE is 3.902, MAPE is 10.128, MAE is 4.033, and R2 is 0.95. In autumn, RMSE is 7.642, MAPE is 12.096, MAE is 4.629, and R2 is 0.95. In winter, RMSE is 8.871, MAPE is 9.890, MAE is 6.579, and R2 is 0.96. The prediction results are shown in Figure 14.

Table 6.

PM2.5 prediction (spring, summer, autumn, and winter) results.

Figure 14.

Predicted values for the four seasons. (a) Spring predicted values; (b) summer predicted values; (c) autumn predicted values; and (d) winter predicted values.

4. Conclusions

Based on the hourly air quality historical data and meteorological dataset of Bijie City from 1 January 2015 to 31 December 2022, this paper conducted empirical research and drew the following conclusions:

- The charts in the text show multiple cases in which PM2.5 exceeded 100, which indicates that atmospheric conditions in the Bijie area are poor.

- The correlation analysis shows that PM10 is the most important input variable, followed by the air quality index. Their correlation coefficients with PM2.5 are 0.95 and 0.92, respectively, so both have an important impact on PM2.5. NO2, SO2, CO, and other factors also have an impact on PM2.5. O3 is negatively correlated with PM2.5 and has an inhibiting effect on it, as do temperature, rainfall, wind speed, and humidity. Among the meteorological factors, visibility is the most important and has a strong negative correlation with PM2.5; precipitation and dew point temperature are also relatively important variables. Overall, however, meteorological factors have little impact on PM2.5.

- Four models were used to predict the PM2.5 concentration. The CNN-SSA-DBiLSTM-attention model achieved the highest prediction accuracy, with a coefficient of determination (R2) stable at about 0.95. In contrast, the prediction accuracy of the LSTM model was poor, with an R2 of 0.84. The CNN-DBILSTM and BILSTM models did not perform as well as the CNN-SSA-DBiLSTM-attention model, although they also achieved good prediction results.

- Notably, the proposed model is highly accurate in both short-term and long-term predictions. It also maintains high accuracy across seasons. The RMSE and MAPE values do fluctuate from season to season, but this reflects the different PM2.5 concentrations in different seasons.

These results demonstrate the effectiveness of the proposed model and show that a hybrid model can effectively improve prediction accuracy. Using hybrid models to improve the accuracy of PM2.5 prediction is a promising research direction, and future research should make greater use of fusion models and optimize their structures.
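As a rough illustration of the quantities cited in these conclusions, the sketch below computes a Pearson correlation coefficient, the coefficient of determination (R2), and the root-mean-square error (RMSE) in plain Python. The function names and the toy PM2.5 and PM10 values are hypothetical; they are not taken from the Bijie dataset or from the authors' code. The formulas are the standard textbook definitions, which this paper is assumed to follow.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def r2_score(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rmse(observed, predicted):
    """Root-mean-square error, in the same units as the target variable."""
    squared_errors = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Toy values invented for illustration only (not measurements from Bijie).
pm25_observed = [35.0, 50.0, 80.0, 120.0, 60.0]
pm25_predicted = [38.0, 47.0, 85.0, 112.0, 63.0]
pm10_observed = [60.0, 82.0, 125.0, 180.0, 95.0]

print("Pearson r (PM10 vs PM2.5):", round(pearson_r(pm10_observed, pm25_observed), 3))
print("R2:", round(r2_score(pm25_observed, pm25_predicted), 3))
print("RMSE:", round(rmse(pm25_observed, pm25_predicted), 3))
```

Because RMSE is expressed in the units of the target variable, it can grow in seasons with higher PM2.5 concentrations even when R2 barely changes, which is one way to read the seasonal fluctuation of the error metrics noted above.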

Author Contributions

Conceptualization, X.L. and N.Z.; methodology, X.L.; software, X.L.; validation, X.L. and N.Z.; formal analysis, X.L. and Z.W.; investigation, X.L.; resources, X.L.; data curation, X.L.; writing – original draft preparation, X.L.; writing – review and editing, X.L., N.Z., and Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the 2022 Bijie City Science and Technology Bureau’s "Unveiling and Leading" Project of Bikehe Major Special Project (grant number: 2022 No. 4).

Institutional Review Board Statement

The study was approved by Dalian Polytechnic University.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The first author is grateful for the support of Qipeng He.

Conflicts of Interest

The authors declare no conflict of interest.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).