High-Speed Visualization of Very Large High-Resolution Simulations for Air Hazard Transport and Dispersion

Abstract

1. Introduction

2. The EMERGENCIES and EMED Projects

2.1. Overview of the Projects

2.1.1. Modeling and Computing Capabilities

- Flow and turbulence are computed each day in advance for the following day, over the whole domain, starting from mesoscale meteorological forecasts produced with the WRF model [31];

- Dispersion is computed on-demand when a situation occurs.

2.1.2. Experimental Setting for the EMERGENCIES Project

2.1.3. Experimental Setting for the EMED Project

2.2. Data Produced

- First, the flow and turbulence data (FT data), on every tile of the domain;

- Then, the concentration data (C data), only on the tiles reached by the plumes generated by the releases.

- 200 GB per timeframe for the domain covering Paris;

- 668 GB per timeframe for the domain covering Marseille.

- The area covered by the plume: the larger the plume, the larger the number of tiles that contain C data;

- The duration for which the plume persists in the domain;

- The averaging period used.
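The volumes and factors listed above lend themselves to a quick storage estimate. The sketch below is a simplified model under stated assumptions, not the projects' own sizing method: it assumes that the FT volume scales linearly with the number of timeframes, and that the C volume is proportional to the number of tiles reached by the plume times the number of stored averaged fields (plume persistence divided by the averaging period, rounded up). The scenario values and the per-tile size parameter `gb_per_tile_field` are hypothetical.

```python
import math

# Per-timeframe FT data volumes (GB) quoted in the text.
FT_GB_PER_TIMEFRAME = {"Paris": 200.0, "Marseille": 668.0}


def ft_volume_gb(domain: str, n_timeframes: int) -> float:
    """FT data cover every tile, so their volume only grows with the number of timeframes."""
    return FT_GB_PER_TIMEFRAME[domain] * n_timeframes


def c_volume_gb(n_tiles_reached: int, gb_per_tile_field: float,
                persistence_min: float, averaging_min: float) -> float:
    """C data exist only on tiles reached by the plume (simplified, assumed model).

    Number of stored fields = plume persistence / averaging period, rounded up,
    so a longer averaging period yields fewer stored concentration fields.
    """
    n_fields = math.ceil(persistence_min / averaging_min)
    return n_tiles_reached * gb_per_tile_field * n_fields


if __name__ == "__main__":
    # Hypothetical scenario: 24 timeframes; a plume reaching 100 tiles for 2 h,
    # averaged over 15 min, with 0.5 GB per tile and per stored field.
    print(ft_volume_gb("Paris", 24))             # 4800.0
    print(c_volume_gb(100, 0.5, 120.0, 15.0))    # 400.0
```

Under this model, the FT volume is fixed by the domain, while the C volume depends on the release scenario through the three factors listed above.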

3. Treatment and Visualization of the Data

3.1. Initial Attempts

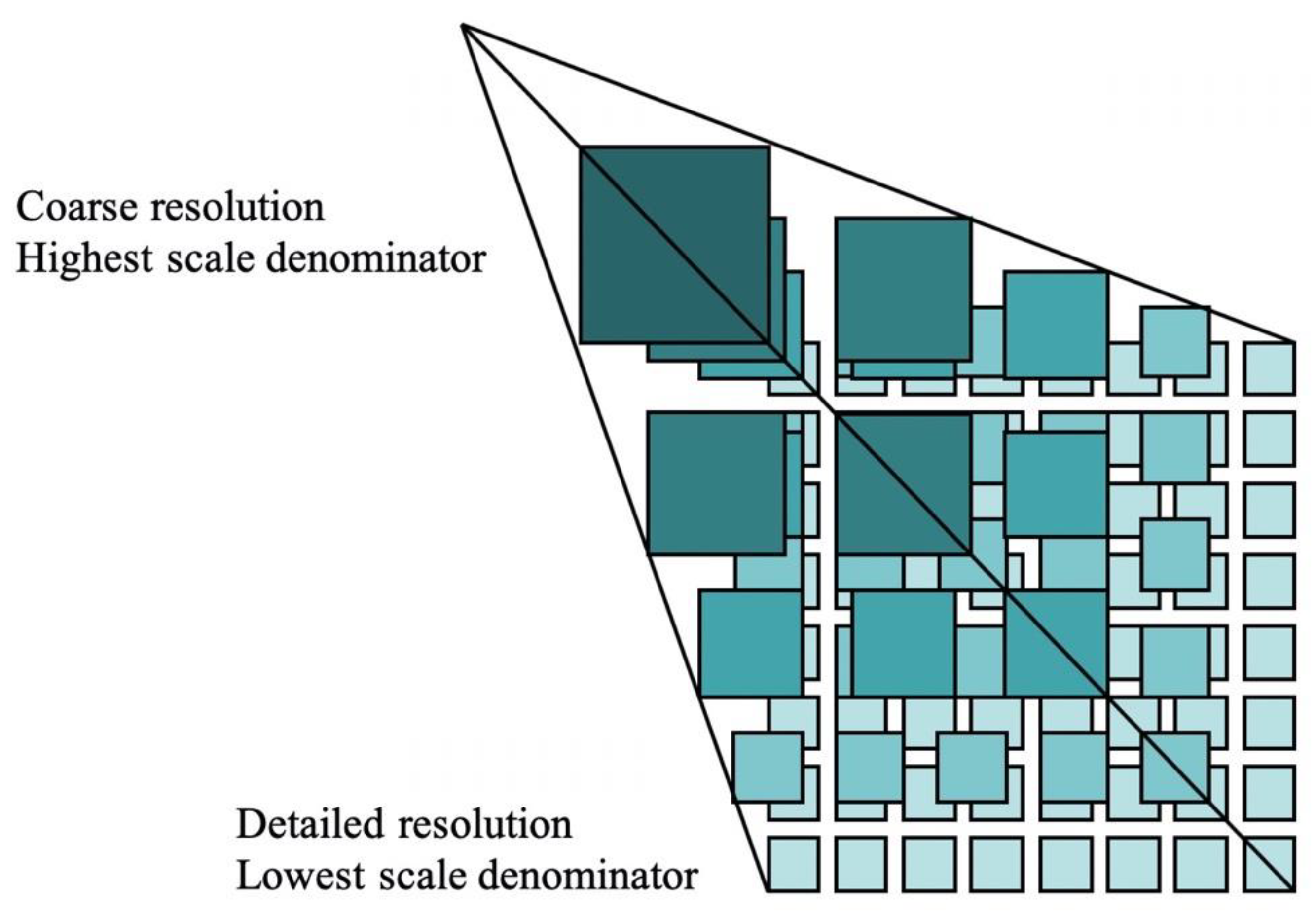

3.2. Introduction to the Methodology

3.3. Details on the Multilevel Tiling Implementation

3.3.1. Parallel Scheme

- First step, generation of the Tiff files:

    - Distribution of the analysis of the available bin files among the available cores, to retrieve the available fields, the domain coordinates, and the available time steps;

    - Calculation, by the master core, of the footprint of the large domain, of the tile coordinates required for each zoom level, and of the time steps to extract;

    - Generation of Tiff files for each FT or C data file, and for each selected vertical level and time step. The files are generated using the Google Mercator projection;

    - Creation of a virtual Tiff stack encompassing the whole computation domain, whether or not the domain is decomposed into multiple computation tiles of arbitrary dimensions;

- Second step, generation of the tiles from the Tiff files:

    - Loop over the zoom levels to be processed, starting from the largest zoom level;

    - Distribution of each tile, from the total pool of tiles combining field name, time step, and vertical level, to a core for generation.
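The tile bookkeeping in the two steps above can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: it assumes 256-pixel tiles in the Google (Web) Mercator tiling scheme with the origin at the top-left corner, approximates the domain footprint by a longitude/latitude bounding box, derives the finest zoom level from a hypothetical model grid spacing, and uses a static round-robin assignment of work items to cores in place of whatever scheduler the actual tool uses. The field names, bounding box, and grid spacing are hypothetical.

```python
import math
from itertools import product

EARTH_RADIUS_M = 6378137.0  # sphere radius used by the Google (Web) Mercator projection
TILE_PX = 256               # assumed tile size in pixels


def ground_resolution(lat_deg, zoom):
    """Metres per pixel of a Web Mercator tile at a given latitude and zoom level."""
    return 2 * math.pi * EARTH_RADIUS_M * math.cos(math.radians(lat_deg)) / (TILE_PX * 2 ** zoom)


def finest_zoom(grid_spacing_m, lat_deg):
    """Smallest zoom level whose pixels are no coarser than the model grid spacing."""
    zoom = 0
    while ground_resolution(lat_deg, zoom) > grid_spacing_m:
        zoom += 1
    return zoom


def lonlat_to_tile(lon_deg, lat_deg, zoom):
    """Google/XYZ tile indices (origin at the top-left corner) containing a point."""
    n = 2 ** zoom
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat_deg))) / math.pi) / 2.0 * n)
    # Clamp so that points on the eastern or southern edge stay in range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def footprint_tiles(bbox, zoom):
    """All (zoom, x, y) tiles covering bbox = (lon_min, lat_min, lon_max, lat_max)."""
    lon_min, lat_min, lon_max, lat_max = bbox
    x0, y0 = lonlat_to_tile(lon_min, lat_max, zoom)  # north-west corner
    x1, y1 = lonlat_to_tile(lon_max, lat_min, zoom)  # south-east corner
    return [(zoom, x, y) for x in range(x0, x1 + 1) for y in range(y0, y1 + 1)]


def build_pool(fields, time_steps, levels, tiles):
    """Pool of work items: every combination of field, time step, level and tile."""
    return list(product(fields, time_steps, levels, tiles))


def round_robin(pool, n_cores):
    """Static distribution: work item i is assigned to core i % n_cores."""
    return [pool[rank::n_cores] for rank in range(n_cores)]


if __name__ == "__main__":
    bbox = (2.22, 48.81, 2.47, 48.91)   # hypothetical box around Paris
    z_max = finest_zoom(3.0, 48.86)     # hypothetical 3 m grid spacing
    for zoom in range(z_max, z_max - 3, -1):  # highest (finest) zoom level first
        pool = build_pool(["U", "V"], [0, 1], [0], footprint_tiles(bbox, zoom))
        per_core = round_robin(pool, 4)
        print(zoom, len(pool), [len(items) for items in per_core])
```

A static round-robin split needs no further communication once the master core has built the pool, which suits independent tile generation; a dynamic work queue would balance the load better if tile costs were very uneven.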

3.3.2. Specificity for Vector Fields

4. Results

4.1. Visualization

4.1.1. Flow and Turbulence Data

4.1.2. Concentration Data

4.2. Performance

4.2.1. Post-processing Step

4.2.2. Navigation

- Data are accessible within a few seconds, whatever the overall size of the domain;

- Changes in location use the tiling capability, with additional tiles loaded when required;

- Changes in zoom allow both large-scale and small-scale features to be monitored.
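The navigation behavior described above can be illustrated with a small client-side sketch. It assumes that tiles are addressed by a hypothetical `{field}/{step}/{z}/{x}/{y}` URL and that the client keeps track of the tiles it already holds; these names are illustrative and do not describe the actual service. In this model, the number of tiles fetched depends on the size of the viewport rather than on the size of the domain, which is consistent with access times that do not grow with the domain, and each zoom step replaces one tile by four tiles covering the same area.

```python
from typing import List, Set, Tuple

# Hypothetical URL template using the common {z}/{x}/{y} tile layout.
URL_TEMPLATE = "https://tiles.example.org/{field}/{step}/{z}/{x}/{y}.png"


def viewport_tiles(zoom, x_min, x_max, y_min, y_max):
    """Tiles (zoom, x, y) intersecting a viewport given as a tile index range."""
    return {(zoom, x, y) for x in range(x_min, x_max + 1) for y in range(y_min, y_max + 1)}


def zoom_children(tile):
    """The four tiles one zoom level deeper that cover the same area as `tile`."""
    z, x, y = tile
    return {(z + 1, 2 * x + dx, 2 * y + dy) for dx in (0, 1) for dy in (0, 1)}


class TileCache:
    """Remembers which tiles the client already holds and requests only new ones."""

    def __init__(self) -> None:
        # Keys include field and time step: the same (z, x, y) differs per field/step.
        self.loaded: Set[Tuple[str, int, int, int, int]] = set()

    def request(self, field: str, step: int, tiles) -> List[str]:
        """Return the URLs of tiles in `tiles` that have not been downloaded yet."""
        keys = {(field, step, z, x, y) for z, x, y in tiles}
        missing = sorted(keys - self.loaded)
        self.loaded |= keys
        return [URL_TEMPLATE.format(field=f, step=s, z=z, x=x, y=y)
                for f, s, z, x, y in missing]


if __name__ == "__main__":
    cache = TileCache()
    print(len(cache.request("C", 0, viewport_tiles(14, 0, 1, 0, 1))))  # 4 new tiles
    print(len(cache.request("C", 0, viewport_tiles(14, 1, 2, 0, 1))))  # pan east: 2 new tiles
```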

4.3. Discussion for Crisis Management

- Make the most of the computing infrastructure during the modeling phase by preparing the output;

- Enable very efficient navigation in, and consultation of, the results during the exploitation of the simulation, even by users who are not modeling experts, thanks to the very wide adoption of web mapping tools.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Armand, P.; Bartzis, J.; Baumann-Stanzer, K.; Bemporad, E.; Evertz, S.; Gariazzo, C.; Gerbec, M.; Herring, S.; Karppinen, A.; Lacome, J.M.; et al. Best Practice Guidelines for the Use of the Atmospheric Dispersion Models in Emergency Response Tools at Local-Scale in Case of Hazmat Releases into the Air; Tech. Rep., COST Action ES 1006; 2015. Available online: http://elizas.eu/images/Documents/Best%20Practice%20Guidelines_web.pdf (accessed on 16 July 2021).

- Biltoft, C.A. Customer Report for Mock Urban Setting Test; DPG Document Number 8-CO-160-000-052; Defense Threat Reduction Agency: Alexandria, VA, USA, 2001.

- Warner, S.; Platt, N.; Heagy, J.F.; Jordan, J.E.; Bieberbach, G. Comparisons of transport and dispersion model predictions of the mock urban setting test field experiment. J. Appl. Meteorol. Climatol. 2006, 45, 1414–1428.

- Allwine, K.J.; Flaherty, J. Joint Urban 2003: Study Overview and Instrument Locations; PNNL-15967; Pacific Northwest National Lab.: Richland, WA, USA, 2006.

- Hernández-Ceballos, M.A.; Hanna, S.; Bianconi, R.; Bellasio, R.; Mazzola, T.; Chang, J.; Andronopoulos, S.; Armand, P.; Benbouta, N.; Čarný, P.; et al. UDINEE: Evaluation of multiple models with data from the JU2003 puff releases in Oklahoma City. Part I: Comparison of observed and predicted concentrations. Bound. Layer Meteorol. 2019, 171, 323–349.

- Hernández-Ceballos, M.A.; Hanna, S.; Bianconi, R.; Bellasio, R.; Chang, J.; Mazzola, T.; Andronopoulos, S.; Armand, P.; Benbouta, N.; Čarný, P.; et al. UDINEE: Evaluation of multiple models with data from the JU2003 puff releases in Oklahoma City. Part II: Simulation of puff parameters. Bound. Layer Meteorol. 2019, 171, 351–376.

- EPA; NOAA. Area Locations of Hazardous Atmospheres (ALOHA); User’s Manual; US Environmental Protection Agency (USEPA) and the National Oceanic and Atmospheric Administration (NOAA): Washington, DC, USA, 1999.

- Sykes, R.I.; Parker, S.F.; Henn, D.S.; Cerasoli, C.P.; Santos, L.P. PC-SCIPUFF Version 1.3 Technical Documentation; ARAP Report No. 725; Titan Corporation, ARAP Group: Princeton, NJ, USA, 2000.

- Chang, J.C.; Hanna, S.R.; Boybeyi, Z.; Franz, P. Use of Salt Lake City URBAN 2000 field data to evaluate the urban hazard prediction assessment capability (HPAC) dispersion model. J. Appl. Meteorol. 2005, 44, 485–501.

- Milliez, M.; Carissimo, B. Numerical simulations of pollutant dispersion in an idealized urban area for different meteorological conditions. Bound. Layer Meteorol. 2007, 122, 321–342.

- Gowardhan, A.A.; Pardyjak, E.R.; Senocak, I.; Brown, M.J. A CFD-based wind solver for urban response transport and dispersion model. Environ. Fluid Mech. 2011, 11, 439–464.

- Yee, E.; Lien, F.S.; Ji, H. A Building-Resolved Wind Field Library for Vancouver: Facilitating CBRN Emergency Response for the 2010 Winter Olympic Games; Defense Research and Development: Suffield, AB, Canada, 2010.

- Armand, P.; Duchenne, C.; Patryl, L. Is it now possible to use advanced dispersion modelling for emergency response? The example of a CBRN-E exercise in Paris. In Air Pollution Modeling and its Application XXIV; Springer: Cham, Switzerland, 2016; pp. 433–446.

- Oldrini, O.; Armand, P.; Duchenne, C.; Perdriel, S.; Nibart, M. Accelerated Time and High-Resolution 3D Modeling of the Flow and Dispersion of Noxious Substances over a Gigantic Urban Area—The EMERGENCIES Project. Atmosphere 2021, 12, 640.

- Armand, P.; Duchenne, C.; Oldrini, O.; Perdriel, S. Emergencies Mediterranean—A Prospective High-Resolution Modelling and Decision-Support System in Case of Adverse Atmospheric Releases Applied to the French Mediterranean Coast. In Proceedings of the 18th International Conference on Harmonisation within Atmospheric Dispersion Modeling for Regulatory Purposes, Bologna, Italy, 9–12 October 2017; pp. 9–12.

- OSGEO. Tile Map Service Specification. Available online: https://wiki.osgeo.org/wiki/Tile_Map_Service_Specification (accessed on 16 July 2021).

- Oldrini, O.; Olry, C.; Moussafir, J.; Armand, P.; Duchenne, C. Development of PMSS, the Parallel Version of Micro SWIFT SPRAY. In Proceedings of the 14th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Kos, Greece, 2–6 October 2011; pp. 443–447.

- Oldrini, O.; Armand, P.; Duchenne, C.; Olry, C.; Tinarelli, G. Description and preliminary validation of the PMSS fast response parallel atmospheric flow and dispersion solver in complex built-up areas. Environ. Fluid Mech. 2017, 17, 1–18.

- Oldrini, O.; Armand, P.; Duchenne, C.; Perdriel, S. Parallelization Performances of PMSS Flow and Dispersion Modelling System over a Huge Urban Area. Atmosphere 2019, 10, 404.

- Oldrini, O.; Armand, P. Validation and sensitivity study of the PMSS modelling system for puff releases in the Joint Urban 2003 field experiment. Bound. Layer Meteorol. 2019, 171, 513–535.

- Moussafir, J.; Oldrini, O.; Tinarelli, G.; Sontowski, J.; Dougherty, C. A new operational approach to deal with dispersion around obstacles: The MSS (Micro-Swift-Spray) software suite. In Proceedings of the 9th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Garmisch-Partenkirchen, Germany, 1–4 June 2004; Volume 2, pp. 114–118.

- Tinarelli, G.; Brusasca, G.; Oldrini, O.; Anfossi, D.; Trini Castelli, S.; Moussafir, J. Micro-Swift-Spray (MSS) a new modelling system for the simulation of dispersion at microscale. General description and validation. In Air Pollution Modelling and Its Applications XVII; Borrego, C., Norman, A.N., Eds.; Springer: Boston, MA, USA, 2007; pp. 449–458.

- Oldrini, O.; Nibart, M.; Armand, P.; Olry, C.; Moussafir, J.; Albergel, A. Introduction of Momentum Equations in Micro-SWIFT. In Proceedings of the 15th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Varna, Bulgaria, 8–11 September 2014.

- Oldrini, O.; Nibart, M.; Duchenne, C.; Armand, P.; Moussafir, J. Development of the parallel version of a CFD–RANS flow model adapted to the fast response in built-up environments. In Proceedings of the 17th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Budapest, Hungary, 9–12 May 2016.

- Röckle, R. Bestimmung der Strömungsverhältnisse im Bereich Komplexer Bebauungs-Strukturen. Doctoral Thesis, Technical University Darmstadt, Darmstadt, Germany, 1990.

- Anfossi, D.; Desiato, F.; Tinarelli, G.; Brusasca, G.; Ferrero, E.; Sacchetti, D. TRANSALP 1989 experimental campaign—II. Simulation of a tracer experiment with Lagrangian particle models. Atmos. Environ. 1998, 32, 1157–1166.

- Tinarelli, G.; Mortarini, L.; Trini Castelli, S.; Carlino, G.; Moussafir, J.; Olry, C.; Armand, P.; Anfossi, D. Review and validation of Micro-Spray, a Lagrangian particle model of turbulent dispersion. In Lagrangian Modelling of the Atmosphere, Geophysical Monograph; American Geophysical Union (AGU): Washington, DC, USA, May 2013; Volume 200, pp. 311–327.

- Rodean, H.C. Stochastic Lagrangian Models of Turbulent Diffusion; American Meteorological Society: Boston, MA, USA, 1996; Volume 45.

- Thomson, D.J. Criteria for the selection of stochastic models of particle trajectories in turbulent flows. J. Fluid Mech. 1987, 180, 529–556.

- Archambeau, F.; Méchitoua, N.; Sakiz, M. Code Saturne: A finite volume code for the computation of turbulent incompressible flows - Industrial applications. Int. J. Finite Vol. 2004, 1, 1.

- Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.M.; Duda, M.G.; Huang, X.-Y.; Wang, W.; Powers, J.G. A Description of the Advanced Research WRF Version 3; NCAR Tech. Note NCAR/TN-475+STR; UCAR Communications: Boulder, CO, USA, 2008.

- Ahrens, J.; Geveci, B.; Law, C. ParaView: An End-User Tool for Large Data Visualization; Visualization Handbook; Elsevier: Amsterdam, The Netherlands, 2005; ISBN 978-0123875822.

- Adobe Developers Association. TIFF Revision 6.0, 3 June 1992; Adobe Systems Incorporated: Mountain View, CA, USA, 1992.

- Warmerdam, F. The geospatial data abstraction library. In Open Source Approaches in Spatial Data Handling; Springer: Berlin/Heidelberg, Germany, 2008; pp. 87–104.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Oldrini, O.; Perdriel, S.; Armand, P.; Duchenne, C. High-Speed Visualization of Very Large High-Resolution Simulations for Air Hazard Transport and Dispersion. Atmosphere 2021, 12, 920. https://doi.org/10.3390/atmos12070920
