Abstract

From an analysis of the priors used in state-of-the-art algorithms for single image defogging, a new prior is proposed to obtain better atmospheric veil removal. Our hypothesis is based on a physical model, considering that fog appears denser near the horizon than close to the camera. It leads to stronger restoration where the fog is deeper, for a more natural rendering. For this purpose, the Naka–Rushton function is used to modulate the atmospheric veil according to empirical observations on synthetic foggy images. The parameters of this function are set from features of the input image. This method also prevents over-restoration and thus preserves the sky from artifacts and noise. The algorithm generalizes to different kinds of fog, airborne particles, and illumination conditions. The proposed method is extended to nighttime and underwater images by computing the atmospheric veil on each color channel. Qualitative and quantitative evaluations show the benefit of the proposed algorithm. The quantitative evaluation shows the efficiency of the algorithm on four databases with different types of fog, which demonstrates the broad generalization allowed by the proposed algorithm, in contrast with most of the currently available deep learning techniques.

1. Introduction

Visibility restoration of outdoor images is a well-known problem in both computer vision applications and digital photography, particularly in adverse weather conditions such as fog, haze, rain, and snow. Such weather conditions cause visual artifacts in the images such as loss of contrast and color shift, which reduce scene visibility. The lack of visibility can be detrimental to the performance of automated systems based on image segmentation [1] and object detection [2], and thus requires visibility restoration as a pre-processing step [3]. With fog or haze, contrast reduction is caused by the atmospheric veil. With rain or snow, it is caused by the occlusion of the distant background by raindrops or snowflakes.

This paper proposes a single image dehazing method based on the Naka–Rushton function to treat the fog present near the horizon in real images and to generalize to the different kinds of fog represented in the databases of the evaluation section. In addition, a simple solution is added to process nighttime and underwater images, allowing further generalization to different kinds of particles and illumination conditions. Some of these contributions were first proposed in [4]. This paper makes three contributions:

- The use of a Naka–Rushton function in the inference of the atmospheric veil to restore the fog near the horizon without restoring the part near the camera. The parameters of this function are estimated from the characteristics of the input foggy image.

- The proposed algorithm also addresses spatially uniform veils, and generalizes to different kinds of fog, with the help of an interpolation function.

- Applying our method on each color channel addresses the local variations of the color of the fog. This allows for restoring images with color distortions due to dust and pollution, as well as nighttime and underwater images.

2. Related Works

2.1. Fog Visual Effect

Koschmieder’s law is a straightforward optical model that describes the visual effects of the scattering of daylight by the particles fog is made of. When fog and illumination are homogeneous along a light path going through x, the model is:

$$I(x) = I_0(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \qquad (1)$$

where $I(x)$ is the foggy image, $I_0(x)$ the fog-free image, A the sky intensity, and x denotes the pixel coordinates. The transmittance $t(x)$ describes the percentage of light that is not scattered:

$$t(x) = e^{-k\,d(x)} \qquad (2)$$

where k is the extinction coefficient that is related to the density of fog, and $d(x)$ is the distance between the camera and the objects in the scene. Equations (1) and (2) are valid only together. The atmospheric veil is the second term in (1).
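As an illustration of Koschmieder’s law, the following minimal sketch synthesizes fog on a toy scene. The function name `add_fog`, the toy depths, and the value of k are illustrative assumptions, with intensities normalized to [0, 1].

```python
import numpy as np

def add_fog(clear, depth, k, sky=1.0):
    """Apply Koschmieder's law: I = I0 * t + A * (1 - t), with t = exp(-k * d)."""
    t = np.exp(-k * depth)            # transmittance, Equation (2)
    veil = sky * (1.0 - t)            # atmospheric veil: second term of Equation (1)
    return clear * t + veil, veil

# Toy scene (assumed values): a dark object seen at increasing distances.
clear = np.full(4, 0.2)
depth = np.array([0.0, 10.0, 50.0, 1000.0])
foggy, veil = add_fog(clear, depth, k=0.05)
```

With no depth the pixel is unchanged, and at large depth the foggy intensity tends to the sky intensity, which is why the veil is strongest near the horizon.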

2.2. Daytime Image Defogging

Single image defogging algorithms can be divided into two categories. Image enhancement algorithms use ad-hoc techniques to improve the image contrast, such as histogram equalization and retinex, but they do not account for scene depth. Visibility restoration algorithms, on which we focus, are model-based and use Koschmieder’s law. Since depth is unknown, the problem is an ill-posed inverse problem that requires priors to be solved. Priors may be introduced as constraints or using a learning dataset.

He et al. [5,6] introduced the Dark Channel Prior (DCP) for a method dedicated to color images. The idea is that fog-free outdoor images contain pixels with very low intensity in at least one of the three color channels at any location. It was also proposed to refine the transmission map with a filter guided by the input image to avoid halos in the final result [7,8]. Many works were inspired by the DCP method and brought some improvements [9,10,11,12,13,14]. Li et al. [11] proposed an edge preservation technique to improve the transmission map estimation. They split the dark channel of the foggy image into two layers with a guided filter. This method reduces artifacts and noise in the restored images. Zhu et al. [12] developed a method to estimate the transmission map by minimizing an energy function, in order to fully exploit the DCP. This method combines the DCP with piecewise smoothness. Recently, Jackson et al. [13] proposed a fast dehazing method based on Rayleigh’s scattering theory, in order to estimate the transmission map with a fast guided filter. This method reduces the computational time and provides good results. Zhang et al. [14] proposed a criterion for segmenting the transmission map into foreground and background regions, in order to reduce halo artifacts and computational complexity.

Tarel et al. [15] proposed two priors: the fog is white, and locally smooth. As pointed out in [16], the first prior leads to the use of the channel with the minimum intensity among the color channels, as in [6]. The second prior leads to the use of a local filter, preferably one that preserves both edges and corners. This fast algorithm allows restoration of color and gray-scale images. In [16], a flat terrain assumption was introduced in order to avoid over-restoration in the bottom part of the image. It works well on objects close to the camera but requires the height of the horizon line to be approximately known. To tackle the same difficulty, a modulation function of the atmospheric veil was introduced to deal with road traffic scenes [17]. This modulation function is a function of the pixel position, and thus its parameters must be tuned according to the observed scene.

Ancuti et al. [18,19] proposed a fusion-based approach to estimate the atmospheric veil. It combines several feature images and weight maps derived from the original input image. The prior lies implicit in the way the fusion is achieved. Wang et al. [20] proposed a method based on a so-called color attenuation prior, where the atmospheric veil is estimated using a linear combination of features extracted locally in the input foggy image.

Zheng et al. [21] proposed an adaptive structure decomposition method integrated with multi-exposure image fusion for single image dehazing. A set of underexposed images is extracted from the input using different gamma corrections and fused into a haze-free image. This method avoids using a physical model and estimating priors. An extension of this research is proposed by Zhu et al. [22] with the aim of reducing over-exposed areas located in hazy regions, by balancing image luminance and saturation, and preserving details and the overall structure of the restored image.

In the last five years, learning-based methods have been proposed for defogging, usually based on Convolutional Neural Networks (CNN) with supervised training [23,24,25,26,27]. Cai et al. [23] proposed a trainable model to estimate the transmission map and recover clear images using Koschmieder’s model. However, the intermediate steps for estimating the parameters can generate reconstruction errors and provide inaccurate transmission maps. To overcome this issue, Li et al. [26] proposed a fully end-to-end model to directly generate clear images from foggy images with a joint estimation of the transmission map and the atmospheric veil. Fog is a rather unpredictable phenomenon, so building a large and representative training dataset with pairs of images with and without fog is very difficult. This leads to generalization problems. More recently, Generative Adversarial Networks (GANs) have been used [28,29], with partially supervised training databases, but controlling the learning process is complicated. Since fog removal is a pre-processing step, fast and computationally inexpensive algorithms are usually required. We thus focus here on algorithms with very few parameters to be learned.

2.3. Hidden Priors in the DCP Method

In DCP [5,6], a widely used parameter called ω was clearly introduced in the transmission map computation:

$$t(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^c(y)}{A^c} \right) \qquad (3)$$

where $\Omega(x)$ is the local patch centered on x, c the color channel, A the sky intensity, and I the image intensity. This parameter was introduced to mitigate over-restoration, and it is usually set to 0.95. According to [5], it maintains a small amount of haze in the distance, producing more natural results.
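The DCP transmission estimate can be sketched in a few lines. This is a simplified illustration rather than the reference implementation of [5,6]: the helper names, the edge padding, and the 15-pixel patch are assumptions, and the guided-filter refinement is left out.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def min_filter(img, size):
    """Local minimum over a size x size patch (size must be odd), edge-padded."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    return sliding_window_view(padded, (size, size)).min(axis=(-2, -1))

def dcp_transmission(image, sky, omega=0.95, patch=15):
    """t(x) = 1 - omega * min over the patch of min over channels of I^c / A^c."""
    normalized = image / sky                       # broadcast over the channel axis
    dark_channel = min_filter(normalized.min(axis=2), patch)
    return 1.0 - omega * dark_channel

# Toy input (assumed): a uniform light-gray image with one dark blue-channel pixel.
image = np.full((20, 20, 3), 0.8)
image[10, 10, 2] = 0.0
t = dcp_transmission(image, sky=np.array([1.0, 1.0, 1.0]))
```

The single dark pixel drives the dark channel to zero over its whole patch, so the transmission there is 1 (no fog assumed), while the rest of the image gets 1 - 0.95 × 0.8.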

This parameter is actually a prior and needs to be made explicit. First, let us propose a different interpretation of ω. The double-minimum term in the transmittance Equation (3), $\min_{y \in \Omega(x)} \min_c I^c(y)/A^c$, is a first estimation of the atmospheric veil, based on the white fog and the locally smooth fog priors. The result is a mixture between the actual atmospheric veil and the luminance of objects in the scene. Let us name it the “pre-veil”. The percentage of the pre-veil which corresponds to the real atmospheric veil is unknown; it is assumed to be constant and equal to ω across the image. Therefore, ω can be explicitly described as a prior parameter: it is the assumed constant percentage of the atmospheric veil in the pre-veil map. We test the validity of this prior in the next section.

3. Single Image Atmospheric Veil Removal

3.1. Algorithm Flowchart

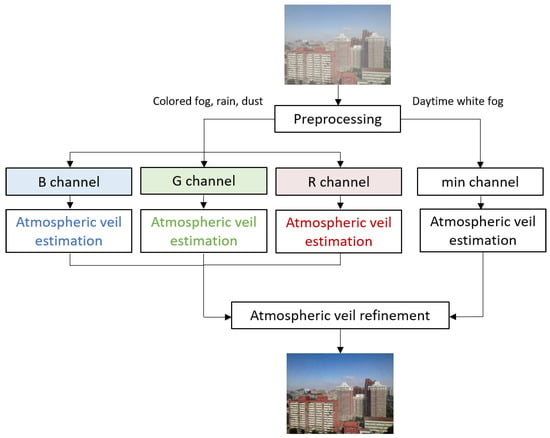

The proposed algorithm first needs a prior about the nature of the observed scene. Two categories are considered:

- Daytime white fog: the pre-veil is computed from the color channel that has the minimum intensity. The fog is assumed to be homogeneous or may vary slowly in density from one pixel to another, but not in the viewing frustum of a pixel.

- Colored fog, rain, smoke, dust: the airlight cannot be assumed to be pure white, so color channels are processed separately by simply splitting the images into RGB channels. Then, the atmospheric veil is computed on each color channel. The density of airborne particles may vary slowly from one pixel to another.

The flowchart of the proposed algorithm is shown in Figure 1. From a pre-veil step, the atmospheric veil is obtained with the Naka–Rushton function, with parameters computed from the input image features. The atmospheric veil is then refined by applying a filter guided by the input image. The final step consists of computing the restored image by inverting Koschmieder’s law on the input image with the previously obtained atmospheric veil map.
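The final step of the flowchart, inverting Koschmieder’s law once a veil map is known, can be sketched as follows. The function name `restore` and the lower bound `t_min` on the transmittance are assumptions added here to keep the division stable, and intensities are taken in [0, 1].

```python
import numpy as np

def restore(foggy, veil, sky=1.0, t_min=0.05):
    """Invert I = I0 * t + V with t = 1 - V / A, i.e. I0 = (I - V) / t."""
    t = np.maximum(1.0 - veil / sky, t_min)   # assumed clamp, avoids division by ~0
    return np.clip((foggy - veil) / t, 0.0, 1.0)

# Round trip on a toy pixel (assumed values): I0 = 0.3, t = 0.5, A = 1.
veil = np.array([0.5])
foggy = 0.3 * 0.5 + veil
restored = restore(foggy, veil)
```

If the veil is overestimated, the numerator becomes negative and the result is clipped to black, which is the over-restoration the modulation function is designed to avoid.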

Figure 1.

Flowchart of the proposed algorithm.

3.2. Is the Use of ω a Valid Prior?

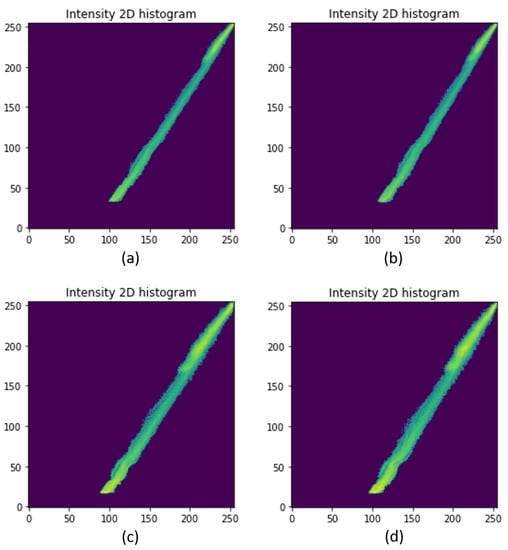

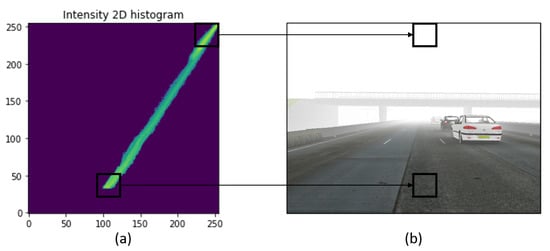

The usual way to compute the atmospheric veil from the pre-veil is to apply the parameter ω. To test the validity of this prior, we have to look at the link between the intensities in the true veil and in the pre-veil images. This can only be achieved with a synthetic image database; for this purpose, we used the generator of the FRIDA dataset [16]. In these synthetic images, the veil is computed using Koschmieder’s law from the scene depth map. Thus, the atmospheric veil map can be computed for each generated foggy image. An advantage is that it is possible to generate images with homogeneous or heterogeneous fog, and homogeneous or heterogeneous illumination. Figure 2 shows the histograms of fifty such foggy images, with the pre-veil image intensities on the horizontal axis, and the intensities of the ground truth atmospheric veil on the vertical axis. Figure 2 and Figure 3a show that the link between foggy pixel intensities and associated veil intensities is roughly affine. The intensity of the atmospheric veil is high in the sky region, and it is low in the ground region that is closer to the camera. Since this relation is affine rather than proportional, it cannot be modeled well with a constant multiplicative parameter such as ω. A function would be more relevant.

Figure 2.

Histograms showing the link between pre-veil and veil pixel intensities (mean of fifty images from the FRIDA dataset [16]). (a) homogeneous fog and illumination, (b) homogeneous fog and heterogeneous illumination, (c) heterogeneous fog and homogeneous illumination, (d) heterogeneous fog and illumination.

Figure 3.

Foggy pixels and veil pixels intensities: (a) histogram showing the link between pre-veil and veil pixel intensities (mean of fifty images from the FRIDA dataset [16]), (b) input foggy image.

3.3. Modulation Function as a Prior

To avoid over-restoration at the bottom of the image while ensuring that the restoration is maximum at the top of the image, a modulation function f is necessary to compute the atmospheric veil from the pre-veil. This function should be smooth to avoid visual artifacts in the restored image.

From Figure 3a, the following constraints are proposed to choose an appropriate function f which will appropriately modulate the pre-veil:

- The function f should be roughly linear on a large range of intensities. This range is denoted . We introduce here the slope a of f at , i.e., .

- The function is close to zero on the intensity range , i.e., for the intensities of pixels looking at objects that are close to the camera.

- The function and the restored image must not be negative.

- is the intensity of the clearest (sky) region. To avoid too dark values in the corresponding areas, should be a little lower than .We thus introduce a parameter such that in order to preserve the sky. is smoothly estimated as a function of .= 0.3 when is high ( > 0.8), = 0.05 when is low ( < 0.6). Between these values,

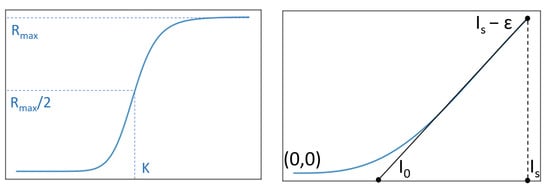

Among the different functions we tested, the Naka–Rushton [30] function was the easiest to tune. This function was first introduced to describe the biological response of a neuron, and was further used in computer graphics for the tone-mapping problem. It is defined as:

$$R(I) = R_{max}\,\frac{I^n}{I^n + K^n} \qquad (4)$$

where $R_{max}$ is the upper-bound of the Naka–Rushton function, K is the horizontal position of the inflection point, and n is related to the slope at the inflection point (see Figure 4). The shape of the first part of the curve in Figure 4 (left) fits our needs, as shown in Figure 4 (right). The inflection point with coordinates $(K, R_{max}/2)$ should correspond to the modulation function f at . Indeed, is the maximum of the pixel intensities in the input image. Then, the constraint f() = is used to avoid artifacts in the sky region. From this, the value of K was set to .

Figure 4.

(Left): the Naka–Rushton function with parameters , K and n. (Right): the modulation function, shaped like the left-hand side of the Naka–Rushton function, showing parameters , and .

3.4. Naka–Rushton Function Parameters

, K, and n are the parameters of the Naka–Rushton function, whereas the parameters of the modulation function f are , , and . In the previous section, K was set to . Following constraint 4 in Section 3.3, is set to . Thus, . The slope at is set to a. This slope in the Naka–Rushton function being (obtained by deriving the Naka–Rushton function), we have . It follows that . The parameter a was calculated as the slope between the points of coordinates (, 0) and (, ) (see the right image in Figure 4).
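The slope expression quoted above can be checked by differentiating the standard Naka–Rushton form. The following is a short derivation sketch; writing the function as $R(I)$ and its upper bound as $R_{max}$ is a notational assumption.

```latex
\begin{aligned}
R(I)  &= R_{max}\,\frac{I^{n}}{I^{n}+K^{n}}, \\
R'(I) &= R_{max}\,\frac{n\,I^{n-1}K^{n}}{\left(I^{n}+K^{n}\right)^{2}}, \\
R'(K) &= R_{max}\,\frac{n\,K^{2n-1}}{4\,K^{2n}} = \frac{n\,R_{max}}{4K}, \\
a = R'(K) \;&\Longrightarrow\; n = \frac{4\,K\,a}{R_{max}}.
\end{aligned}
```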

Finally, the proposed modulation function f is:

where , , and . This modulation function has only three parameters: , , and . The last one must be set to a small value, and the other two can be computed from the input image.

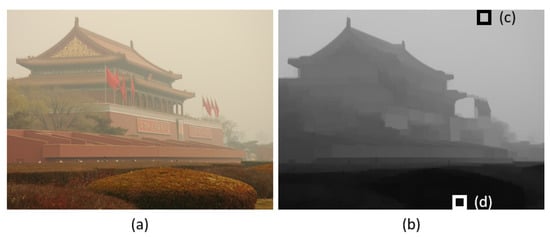

is the intensity of the sky and is the intensity of the ground close to the camera. We have investigated how and can be best estimated (see Figure 5). Take the maximum of the image intensities for , and the minimum for is too sensitive to noise. Therefore, and are computed by taking, respectively, the minimum and the maximum of the input foggy image after applying a morphological closing followed by a morphological opening. Figure 6 schematizes the process of estimating atmospheric veil.
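The noise-robust estimation of the two extreme intensities can be sketched as below: a gray-level morphological closing followed by an opening, then the minimum and maximum of the result. The flat square structuring element, its size, and the helper names are assumptions made for this illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _window_reduce(img, size, reducer):
    """Apply reducer (np.min or np.max) over size x size windows, edge-padded."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    return reducer(sliding_window_view(padded, (size, size)), axis=(-2, -1))

def erode(img, size):
    return _window_reduce(img, size, np.min)

def dilate(img, size):
    return _window_reduce(img, size, np.max)

def robust_extremes(img, size=5):
    """Min and max of the image after a closing followed by an opening."""
    closed = erode(dilate(img, size), size)      # closing removes small dark spots
    opened = dilate(erode(closed, size), size)   # opening removes small bright spots
    return float(opened.min()), float(opened.max())

# Toy image (assumed): horizontal gradient with one dark and one bright outlier.
img = np.tile(np.linspace(0.2, 0.8, 32), (32, 1))
img[10, 10], img[20, 20] = 0.0, 1.0
low, high = robust_extremes(img)
```

The raw minimum and maximum of this toy image are the two outliers (0 and 1), whereas the filtered extremes stay within the range of the underlying gradient.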

Figure 5.

Estimating and : (a) input image, (b) filtered image using a morphological opening, (c) pixels where the intensity is maximum, (d) pixels where the intensity is minimum.

Figure 6.

Atmospheric veil estimation from the morphologically filtered pre-veil using Naka–Rushton as a modulation function.

3.5. Interpolation between Two Models

Several analyses carried out on different kinds of databases have shown that the proposed algorithm is less efficient when the veil is spatially close to uniform. Although these types of images are not very realistic, our main algorithm has been modified in order to deal with veils spatially close to uniform with the aim of generalizing the proposed method. To take this class of images into account, we propose a new version of our algorithm, which is improved for images with a spatially uniform veil, and roughly unchanged for a depth-dependent fog.

When the veil is spatially close to uniform, as with satellite images, the most suitable function to use for restoration is a constant, as the fog has a nearly constant depth. To address this situation, we define , which estimates this constant function.

is set to the minimum value in the pre-veil (see Figure 5), so that it gives the intensity of the darker areas in the filtered image. In “normal” fog, this value is expected to be low, representative of close-by objects without much fog. The veil is considered to be spatially constant on the image when is greater than a fixed threshold (0.2 in the following).

We have considered several methods to take uniform veils into account: their common feature is that they interpolate between two functions, f and g. Two functions have been tested for f: the Naka–Rushton function from Equation (5), named in the following, and a function matching the affine part of our Naka–Rushton-like function: , which is simpler and close to the shape of the histogram (Figure 3).

The interpolation takes the form:

The free parameters (m and p) allow for tuning the weighting of the two functions. High values of p lead to a sharp switch between and g; preliminary tests showed that the best values for these parameters were and .

We compared five interpolation methods in terms of the SSIM and PSNR indexes on the same datasets (Table 1):

- Our modulation function: ;

- An interpolated function between and ;

- An interpolated function between and ;

- A switch between and at the threshold ;

- A switch between and at the threshold .

Table 1.

Comparison of the SSIM and PSNR indexes on the four datasets with the five interpolation functions listed in the text before the table. I is for Interpolation, S for Switch. The two best results are in bold.

Table 1 shows that alone performs well on both the FRIDA and the NTIRE20 datasets, but the interpolated functions and provide better results on the SOTS and O-HAZE datasets. Using instead of does not improve the algorithm’s performance, nor does using a sharp switch between and g. The results on the datasets where the veil is spatially close to uniform (SOTS and O-HAZE) show that the constant function is a good alternative for this type of fog. The modulation function interpolated between a constant and our Naka–Rushton-like function deals with several types of fog, and it is competitive with the other algorithms listed in the Section 4.1 on all datasets. In the evaluation part, this interpolated function is called I.

3.6. Beyond the White Fog Prior

Koschmieder’s law applies along light paths when the fog and the illumination are homogeneous. In situations that do not meet these conditions, Koschmieder’s law is no longer valid. In nighttime images, for instance, scattering causes luminous halos around light sources, which makes the atmospheric veil non-uniform even in homogeneous fog. Underwater images also suffer from large illumination variations due to light absorption with depth. Several works override these theoretical limits and apply Koschmieder’s law to nighttime and underwater images.

Many algorithms have been proposed for nighttime haze removal. Li et al. [31] proposed an algorithm to remove the glow by splitting it from the rest of the image. In [32], defogging being a local process, a multi-scale fusion approach was proposed to restore foggy nighttime images. Zhang et al. [33] proposed a maximum reflectance prior (MRP) method to estimate ambient illuminance based on the following assumption: for most daytime haze-free image patches, each color channel has a very high intensity at certain pixels. Very recently, Lou et al. [34] proposed a novel color correction technique based on MRP and used an inverse correlation between the transmittance and haze density to estimate the transmission map.

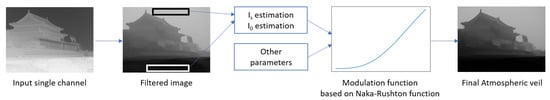

Even though interesting results can be obtained outside the validity domain of Koschmieder’s law, the white fog prior is not valid for nighttime and underwater images. Indeed, nighttime fog halos are usually the same color as the artificial light sources, such as street lamps or car lights, see Figure 7. In underwater images, the absorption varies drastically with the light wavelength, depending on the chemical content of the water. Usually, there is an important blue or green shift of the scene colors. The limits of the white fog prior can also be observed in other situations such as haze in shadows, rain at far distances, smoke, and dust.

Figure 7.

Nighttime foggy images. Reprinted with permission from ref. [35]. Copyright 2021 Mark Broyer.

Figure 8a shows the pixel values on the three color channels of a white fog image. The three values of the pixels in the center of the black squares are very close to each other. Therefore, it is possible to estimate the pre-veil from the color channel that has the minimum intensity. As shown in the histogram (Figure 8b), the intensity of the pixels on each channel is approximately the same. In contrast, Figure 8c shows that the pixel values in the middle of the black squares are different across channels, and depend on the area of the image. The RGB values are R = 223, G = 165, and B = 84 in the yellow fog, and R = 9, G = 75 and B = 109 in the blue fog. These intensity differences between the three RGB channels are illustrated in Figure 8d. Thus, the pre-veil estimation technique for daytime white fog does not work with nighttime colored fog: a colored pre-veil must be estimated (see Figure 9c).

Figure 8.

RGB values comparison: (a) daylight white fog image from the RESIDE dataset [36], (b) histogram of the daylight white fog image, (c) colored fog image (reprinted with permission from ref. [35]. Copyright 2021 Mark Broyer), (d) histogram of the colored fog image. This figure shows the RGB values of the pixel in the center of the black squares. Pixel intensity is between 0 and 255.

Figure 9.

Atmospheric veils before the refinement step: (a) atmospheric veil estimation of a white fog image, (b) atmospheric veil estimation of a colored foggy image from the color channel with the minimum intensity, (c) atmospheric veil estimation of a colored foggy image from each color channel (adapted with permission from ref. [35]. Copyright 2021 Mark Broyer).

In order to handle a colored atmospheric veil, we propose a simple method: processing each color channel separately with our algorithm. Figure 9 shows examples of the atmospheric veil estimated from the color channel that has the minimum intensity (Figure 9a,b) and an example of the atmospheric veil estimated on each color channel (Figure 9c). This is possible only because the proposed atmospheric veil removal method is able to process gray-level images thanks to the use of the modulation function prior. By processing each color channel separately, is estimated on each channel and thus the color of the veil is inferred.
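The difference between the white fog pre-veil and the per-channel processing can be sketched with the two pixels quoted above. The helper names are hypothetical, and the lambda is only a stand-in for the gray-level veil estimation, not the actual algorithm.

```python
import numpy as np

def white_fog_pre_veil(image):
    """White fog prior: a single gray pre-veil from the minimum color channel."""
    return image.min(axis=-1)

def per_channel(process_gray, image):
    """Run a gray-level routine on each color channel and restack the results."""
    channels = [process_gray(image[..., c]) for c in range(image.shape[-1])]
    return np.stack(channels, axis=-1)

# The yellow-fog and blue-fog pixels quoted in the text, scaled to [0, 1].
pixels = np.array([[[223, 165, 84], [9, 75, 109]]], dtype=float) / 255.0

gray_veil = white_fog_pre_veil(pixels)
# Hypothetical stand-in for the gray-level veil estimation.
color_veil = per_channel(lambda channel: 0.9 * channel, pixels)
```

The gray pre-veil keeps only 84 and 9 for the two pixels, discarding the color of the fog, whereas the per-channel result preserves one value per channel.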

4. Experimental Results

The proposed algorithm is compared to eight state-of-the-art algorithms, including three prior-based methods (DCP [6], NBPC [15], Zhu et al. [12]), one fusion-based method (Zhu et al. [22]) and four learning-based methods (AOD-Net [26], Dehaze-Net [23], GCA-Net [27] and FFA-Net [37]). We selected algorithms whose code was publicly available. For each algorithm, we optimized all the input parameters, except the parameter ω in the DCP, which we set to 0.95 as in the original paper. We tested different values of each parameter on four datasets using the Peak Signal-to-Noise Ratio (PSNR), the Structural Similarity Index Measure (SSIM) [38], and the Feature Similarity Index for color images (FSIMc) [39] as comparison criteria. The FSIMc metric compares both the luminance and chromatic information of the images. We first present a quantitative comparison on synthetic images from the public FRIDA dataset [16], the Synthetic Objective Testing Set (SOTS) from the RESIDE dataset [36], the NTIRE20 dataset, and the O-HAZE dataset [40]. Then, we make a qualitative comparison on real world images. In the following results, the standard deviation is given in parentheses. For the FRIDA synthetic dataset, . For the NTIRE20 and O-HAZE dataset, .
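As a reminder of how the simplest of these criteria is computed, here is a minimal PSNR sketch following the standard definition. The function name and the assumption that images are scaled to [0, 1] (hence `peak=1.0`) are choices made for this example.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    """Peak Signal-to-Noise Ratio in dB between a reference and a restored image."""
    reference = np.asarray(reference, dtype=float)
    restored = np.asarray(restored, dtype=float)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

# Toy check (assumed values): a constant error of 0.1 gives an MSE of 0.01.
reference = np.zeros((4, 4))
restored = np.full((4, 4), 0.1)
score = psnr(reference, restored)
```

Because the error is averaged over the whole image, two restorations that fail in different regions can receive nearly the same score.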

4.1. Quantitative Evaluation

4.1.1. Evaluation on Standard Metrics

Table 2 and Table 3 show that all methods are competitive, but our method (W) outperforms the others for both criteria on the FRIDA, NTIRE20, and O-HAZE datasets. On the O-HAZE dataset, the color version of our method (C) shows better performance: the color distortions are attenuated. The results on the SOTS dataset show that the proposed algorithm is less efficient on images where the veil is spatially close to uniform, as described in Section 3.5. Our method (I) deals with constant veils and provides better results on the SOTS dataset in terms of SSIM and PSNR. However, its performance decreases slightly on the three other databases compared to the two other versions of our method (W and C). The main result is that I generalizes to more types of fog. Overall, the interpolated method therefore performs better than the other algorithms.

Table 2.

Comparison of the PSNR index on four datasets: fifty images from the FRIDA and the SOTS datasets, forty-five images from the NTIRE20 and the O-HAZE datasets. W corresponds to our main algorithm assuming white fog, C is the color version (see Section 3.6), and I the interpolated version (see Section 3.5). The best results are in bold.

Table 3.

Comparison of the SSIM index on four datasets: fifty images from the FRIDA and the SOTS datasets, forty-five images from the NTIRE20 and the O-HAZE datasets. W corresponds to our main algorithm assuming white fog, C is the color version (see Section 3.6), and I the interpolated version (see Section 3.5). The best results are in bold.

Table 4 shows that our algorithms are competitive on the four datasets. The results obtained with the FSIMc metric help illustrate the genericity of our method. In particular, Algorithm I competes with deep learning algorithms such as Dehaze-Net and FFA-Net on the SOTS dataset. On the FRIDA dataset, our method and the DCP method perform best. The GCA-Net and AOD-Net algorithms seem to outperform the others on the NTIRE20 datasets; however, the qualitative evaluation shows severe color distortions and darkening. Our methods provide very good results on these two datasets. Moreover, the color version (C) of our algorithm seems to play a role in improving the performance on the O-HAZE and NTIRE20 datasets. Indeed, the color version is better than the others at handling the blue-shift distortions.

Table 4.

Comparison of the FSIMc index on four datasets: fifty images from the FRIDA and the SOTS datasets, forty-five images from the NTIRE20 and the O-HAZE datasets. W corresponds to our main algorithm assuming white fog, C is the color version (see Section 3.6), and I the interpolated version (see Section 3.5). The best results are in bold.

4.1.2. Evaluation on Additional Metrics

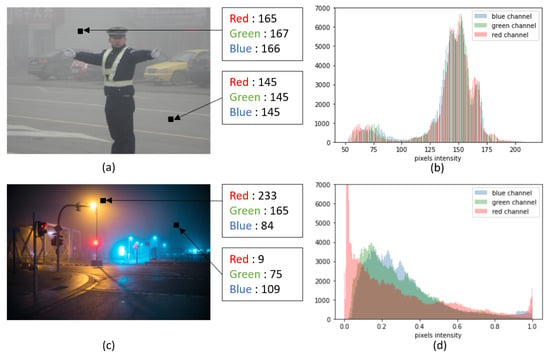

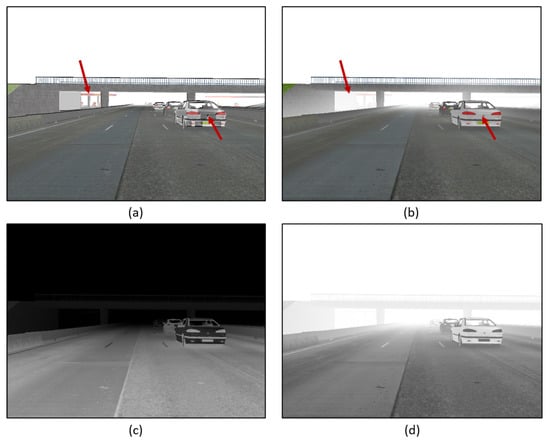

PSNR, SSIM, and FSIMc are useful and broadly used criteria, but they are not sufficient to rate the quality of image restoration. Figure 10a gives an example where the restoration succeeds for objects in the background (see the arrow on the left), whereas the car in the foreground is degraded (arrow on the right). Conversely, the restoration in Figure 10b preserves the foreground but degrades the background. However, the results of the SSIM and PSNR metrics are approximately the same:

Figure 10.

FRIDA images: (a) restored image; SSIM = 0.89 and PSNR = 17.9; (b) restored image; SSIM = 0.89, PSNR = 17.7, (c) weight map focusing on the bottom part of the input foggy image, and (d) weight map focusing on the foggy part of the input foggy image.

This example reveals that global quality indexes such as the PSNR, FSIMc, and SSIM may be inadequate: a similar score can result either from an inefficient restoration of the foggy areas, or from an over-restoration of contrast in the areas where fog is absent or almost absent. In addition to these global metrics, we propose to estimate the quality of the restored images with two weight maps (Figure 10c,d), which are dense in areas where fog is respectively thin or thick.

Moreover, depending on the application, one may wish to preserve various features of the image. We have considered three important features in the following evaluation: image intensity, intensity of the gradient images, and image structure similarity (SSIM). In the following, the performance of the three proposed algorithms, named W (white fog), C (color), and I (interpolation), is compared with these new indexes (see Figure 11):

Figure 11.

(a) SSIM map of a restored image from the FRIDA dataset, (b) gradient map, (c) intensity map.

- d1: Weighted distance between the SSIM of the ground truth and the SSIM of the restored images (Figure 11a).

- d2: Weighted distance between the gradient of the ground truth and the gradient of the restored images (Figure 11b).

- d3: Weighted distance between the ground truth and the restored images (Figure 11c).

The weighted distance calculations are defined as:

where p is a weight map roughly associated with foggy regions in the image (we use a normalized version of the pre-veil), G the ground truth, R the restored image, and F an intensity compensation factor between the restored image and the ground truth. emphasizes the parts of the image close to the camera (Figure 10c), whereas emphasizes the foggy part (Figure 10d).

Table 5 and Table 6 show that the Interpolation method outperforms the W and C versions, as expected, on the SOTS dataset (since these are distance calculations, smaller values are better). It provides good results on the three proposed metrics, and it is competitive with previous algorithms, both model-based and learning-based.

Table 5.

Comparison of three distance indexes d1, d2 and d3 on fifty images from the SOTS dataset, with the index (in the fog area). W corresponds to our main algorithm assuming white fog, C to the color version, and I to the interpolation version. The two best results are in bold. The best result is also underlined.

Table 6.

Comparison of three distance indexes d1, d2, and d3 on fifty images from the SOTS dataset, with the index (in the no-fog area). W corresponds to our main algorithm assuming white fog, C to the color version, and I to the interpolation version. The two best results are in bold. The best result is also underlined.

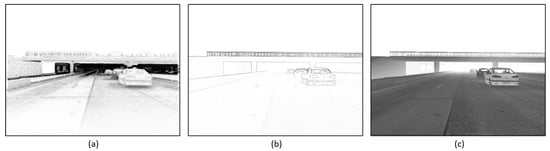

In addition to these metrics, an edge-based criterion was considered. It consists of applying an edge filter, such as the Canny edge detector [41], to both the ground truth and the restored images, and comparing the two binary edge maps in order to assess the benefit of restoration in terms of edge visibility. This criterion may be useful, for instance, to assess restoration for detection algorithms for onboard cameras. Figure 12 shows the edge maps obtained with the Canny filter on the same scene without fog, with fog, and after fog removal.

Figure 12.

Edge maps after application of the Canny filter on a FRIDA image: (a) without fog, (b) with fog, (c) after restoration. The min and max thresholds of the edge detector are set to 120 and 180.

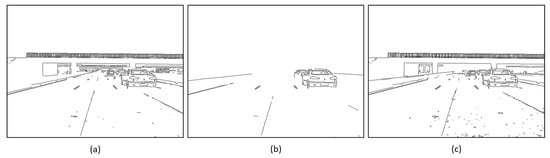

The two binary edge maps are compared with a Receiver Operating Characteristic (ROC) curve, obtained by varying the min threshold between 0 and the max threshold value (Figure 13). The left image in Figure 13 shows that Zhu et al. [12], our W algorithm, the DCP, and the multi-exposure method [22] perform better than the others in terms of edge visibility restoration. Our Interpolation algorithm, the NBPC, and the GCA-Net are also competitive in terms of edge restoration, although with lower performance. The best-rated algorithms tend to be more successful at restoring edges at large distances.

Figure 13.

ROC curves comparison for different algorithms. (Left): ROC curves obtained from fifty images of the FRIDA dataset. (Right): ROC curve from fifty images of the SOTS dataset. The max threshold of the Canny filter is set to 180. The legend Multiexposure corresponds to Zhu et al.’s [22] method.
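The comparison of binary edge maps described above can be sketched as follows. This is a minimal illustration rather than the evaluation code used for Figure 13: it assumes the edge maps have already been computed (for instance with a Canny implementation such as OpenCV's `cv2.Canny`), stores them as flat lists of 0/1 values, and uses hypothetical function names. Each min threshold yields one point (false positive rate, true positive rate) of the ROC curve.

```python
def roc_point(reference_edges, test_edges):
    """Return (false_positive_rate, true_positive_rate) for two binary edge maps.

    Both maps are flat sequences of 0/1 values of the same length; the
    reference is the edge map of the fog-free ground truth.
    """
    tp = fp = tn = fn = 0
    for ref, test in zip(reference_edges, test_edges):
        if ref and test:
            tp += 1
        elif test:
            fp += 1
        elif ref:
            fn += 1
        else:
            tn += 1
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return fpr, tpr


def roc_curve(reference_edges, edges_by_threshold):
    """Build ROC points from edge maps of the restored image.

    `edges_by_threshold` maps each min threshold to the binary edge map
    obtained with that threshold (a hypothetical container for this sketch).
    """
    return [
        roc_point(reference_edges, edges_by_threshold[t])
        for t in sorted(edges_by_threshold)
    ]


# Toy example with 8 pixels: a lower min threshold keeps more edge pixels.
reference = [1, 1, 0, 0, 1, 0, 0, 0]
restored_edges = {
    0:   [1, 1, 1, 0, 1, 1, 0, 0],  # permissive: all true edges, two false ones
    90:  [1, 1, 0, 0, 1, 1, 0, 0],
    180: [1, 0, 0, 0, 0, 0, 0, 0],  # strict: one true edge only
}
curve = roc_curve(reference, restored_edges)
```

A curve that stays closer to the top-left corner indicates that the restored image recovers more of the ground-truth edges while introducing fewer spurious ones.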

The right image in Figure 13 shows that, while FFA-Net outperforms the other methods in terms of edge visibility on the SOTS dataset, it gives the worst results on the FRIDA dataset. This algorithm has been trained to be very efficient on images with a spatially uniform veil (as in the RESIDE/SOTS datasets). The quantitative evaluation shows that it does not generalize to different types of fog, whereas such generalization is the goal of our method. After FFA-Net, our Interpolation algorithm and Zhu et al. [12] provide very good results, whereas DCP and Dehaze-Net are competitive on the SOTS dataset. Our White fog algorithm gives better results in terms of edge visibility than the Interpolation version on the FRIDA dataset, whereas the opposite holds on the SOTS dataset. This supports the idea that the Interpolation version gives better results with spatially uniform fog.

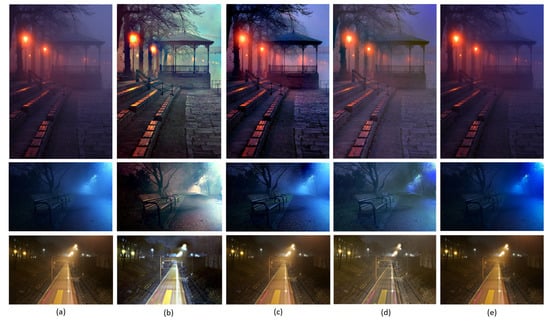

4.2. Qualitative Evaluation

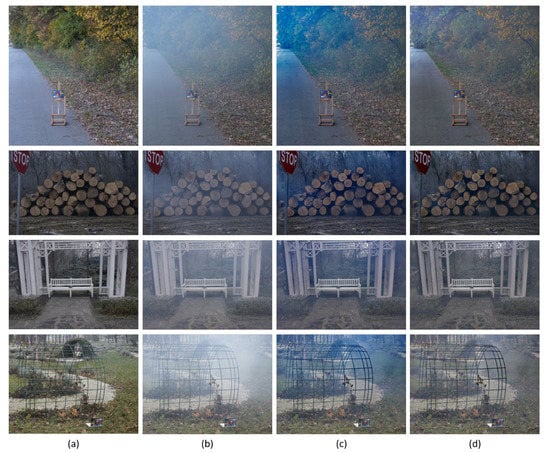

Real-world images from previous works on single image fog removal have been used for a qualitative comparison. The DCP, NBPC, and Zhu et al. [12] algorithms remove the fog with good results (Figure 14). However, DCP images are bright and contrasted, whereas NBPC and Zhu et al.’s [12] results are darker and more faded. Zhu et al.’s [22] method provides very contrasted and saturated results, which is particularly notable in the pumpkins and the mountain images. The AOD-Net learning-based method provides faded and dark results but with fewer halos. The tree and the buildings images show that the Dehaze-Net method retains distant haze. It works better with colored sky regions, avoiding the artifacts and blue-shift distortions found with most algorithms. FFA-Net seems to retain distant haze more than Dehaze-Net. This effect is particularly significant in images with sky regions, as in the tree and the buildings images. GCA-Net produces artifacts in the sky of the tree image and of the images in the first two rows, but provides good and colorful results in other images. The White fog version of our algorithm provides light images and removes the haze over the entire image. The color version reduces color distortions.

Figure 14.

Comparison of fog removal results on real-world images: (a) input foggy images, (b) DCP [6], (c) NBPC [15], (d) Zhu et al. [12], (e) Zhu et al. [22], (f) Dehaze-Net [23], (g) AOD-Net [26], (h) GCA-Net [27], (i) FFA-Net [37], (j) White fog, and (k) Color algorithm.

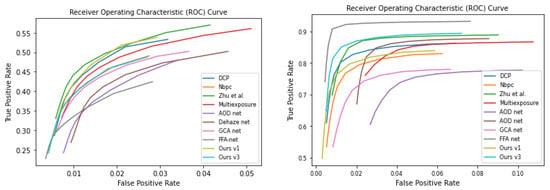

Figure 15 shows results on images from the O-HAZE dataset with two versions of our algorithm. The results of the White fog version of the algorithm (Figure 15c) are blue-shifted and noisy. The color version of the algorithm succeeds in attenuating the blue-shift effect (see Figure 15d) and contributes to improving the criterion described in Section 4.1.

Figure 15.

O-HAZE images [40]: (a) without fog, (b) with fog, (c) images restored with the White fog version of our algorithm (W), (d) images restored with the color version of our algorithm (C).

4.3. Robustness of the Proposed Algorithm

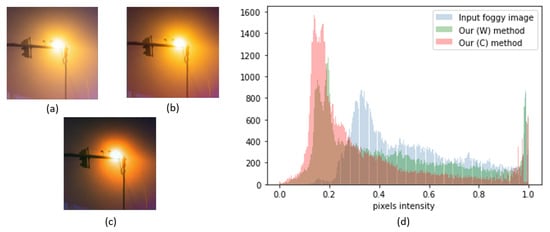

Figure 16 shows sample results of our algorithm on nighttime images. To test the relevance of our algorithm for nighttime images, a comparison was conducted with two state-of-the-art algorithms, Li et al. [31] and Yu et al. [42], on previously used nighttime images. Figure 16 shows that the algorithm of Li et al. succeeds in attenuating halos, but the images are color-shifted and noisy, particularly in sky regions. In contrast, the algorithm of Yu et al. produces light and contrasted images with color consistency and natural rendering, but halos seem emphasized, particularly in the bottom part of the image. The color version of our algorithm succeeds in attenuating halos and maintaining a natural color rendering. This is particularly notable in the bottom part of the image; in the top part of the image, however, distant objects are not contrasted enough.

Figure 16.

Comparison on nighttime images from [33]: (a) foggy images, (b) Li et al. [31], (c) Yu et al. [42], (d) color version (C), (e) white version (W).

Figure 17 shows a comparison between the white version (W) and the colored version (C) of our algorithm on an image showing a halo in orange fog. Figure 17b shows that W seems to accentuate the glow effect and does not remove the colored fog. In contrast, C (Figure 17c) succeeds in attenuating the halo effect and the orange shift. The histogram in Figure 17d shows the intensity distribution of each image. The blue part corresponds to the input foggy image. It is evenly distributed over the intensity range [0.2, 1.0], which represents the foggy part of the image. Therefore, ideally, the histogram of the restored image should peak towards the left side of the histogram, that is, at low intensities.

Figure 17.

Comparison on a glow patch: (a) input foggy image, (b) restored patch with our white fog algorithm, (c) restored patch with our colored fog algorithm, (d) histogram of the intensity of each glow patch (glow images are reprinted with permission from ref. [35]. Copyright 2021 Mark Broyer).

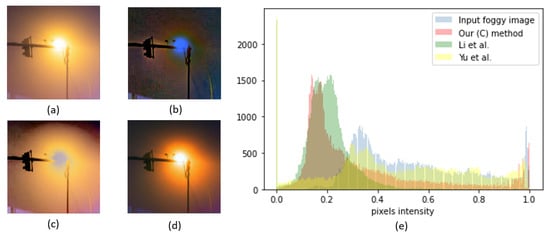

Figure 18 shows a comparison between the colored version (C) of our algorithm and the two state-of-the-art algorithms compared in Figure 16. Figure 18b shows that Li et al.’s result succeeds in eliminating the glow effect, but introduces color distortions. Yu et al.’s result (Figure 18c) seems to slightly attenuate the colored fog. However, the histogram distribution (the yellow part) shows that the fog around the artificial source is not completely removed. A peak on the left side of the histogram seems to be a good indicator of night fog removal (our C and Li et al. [31]).
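The histogram-based indicator discussed for the glow patches can be illustrated with a short sketch. It is only an assumed way of quantifying "a peak on the left side": it builds an intensity histogram and measures the fraction of pixels below a threshold. The threshold of 0.2 is borrowed from the lower bound of the foggy intensity range mentioned for Figure 17, and the function names and pixel values are hypothetical.

```python
def intensity_histogram(intensities, bins=10):
    """Count intensities in [0, 1] into `bins` equal-width bins."""
    counts = [0] * bins
    for value in intensities:
        index = min(int(value * bins), bins - 1)  # value 1.0 goes in the last bin
        counts[index] += 1
    return counts


def dark_fraction(intensities, threshold=0.2):
    """Fraction of pixels darker than `threshold` (assumed indicator)."""
    return sum(1 for value in intensities if value < threshold) / len(intensities)


# Toy glow patches (hypothetical values): fog spreads intensities over
# [0.2, 1.0], whereas a well-restored night patch is mostly dark.
foggy_patch = [0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]
restored_patch = [0.05, 0.05, 0.08, 0.12, 0.15, 0.3, 0.6, 0.9]

foggy_dark = dark_fraction(foggy_patch)
restored_dark = dark_fraction(restored_patch)
restored_hist = intensity_histogram(restored_patch)
peak_bin = restored_hist.index(max(restored_hist))  # 0 means the leftmost bin
```

With these toy values, no pixel of the foggy patch falls below the threshold, whereas most pixels of the restored patch do, and the histogram of the restored patch peaks in its leftmost bin.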

Figure 18.

Comparison on a glow patch: (a) foggy image, (b) Li et al. [31], (c) Yu et al. [42], (d) restored image with the color version of our algorithm, (e) histogram of the intensity of each glow patch (glow images are adapted with permission from ref. [35]. Copyright 2021 Mark Broyer).

Table 7 shows a small quantitative evaluation on a database of twenty synthetic nighttime images created by Zhang et al. [43] from the Middlebury dataset (see an example in Figure 19). The results show that our method scores slightly lower than, but remains competitive with, methods dedicated to nighttime image restoration. They also show that the simple approach of applying our idea to each color channel gives promising results.

Table 7.

Comparison of the SSIM and PSNR indexes on twenty synthetic nighttime images.
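For reference, the PSNR index used in this comparison follows the standard definition, 10 log10(MAX² / MSE). The sketch below is a minimal illustration on flat lists of intensities in [0, 1]; the function name and the toy values are assumptions, and SSIM [38] is not reproduced here.

```python
import math


def psnr(reference, restored, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 4-pixel example: two pixels differ by 0.1, so MSE = 0.005.
reference = [0.0, 0.5, 1.0, 0.5]
restored = [0.1, 0.5, 0.9, 0.5]
score = psnr(reference, restored)  # about 23.0 dB
```

Higher PSNR values indicate a restored image closer to the ground truth.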

Figure 19.

Image from the Middlebury dataset [44]: (a) input image, (b) nighttime synthetic image [43]. In the context of synthetic images, the size of the opening filter is set to (30,30) instead of (10,10).

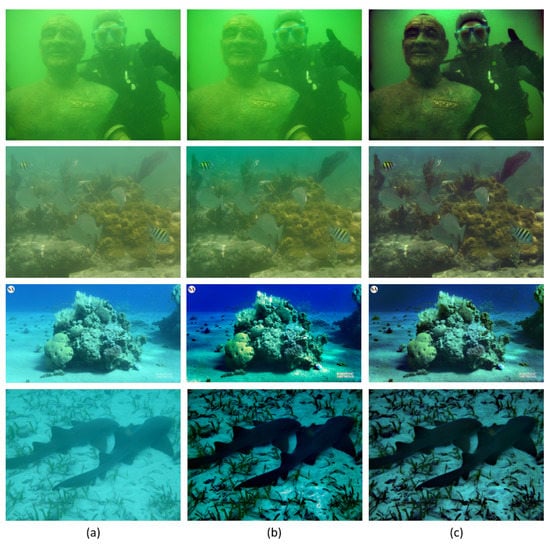

Figure 20 illustrates that applying our algorithm on each color channel may also be promising for underwater visibility restoration.

Figure 20.

Underwater images from [45]: (a) input images, (b) images restored with our White fog algorithm (W), (c) images restored with the color version of our algorithm (C).

5. Discussion

We have reinterpreted the DCP method in terms of three priors. We propose to improve the third prior by using a smooth modulation function to estimate the atmospheric veil from the pre-veil. The input parameters of this function are automatically estimated from the input image pixel intensities in light (sky) and dark (ground) regions, after filtering. In addition, our method generalizes to different types of fog, both depth-dependent fog and spatially uniform fog. The evaluation on databases with different types of fog supports the generalizability of our algorithm. The proposed method provides good results on both synthetic and real-world images for objects at all distances. To extend the proposed algorithm to smoke, dust, and other colored airborne particles, we process each color channel separately, in order to remove colored components. This allows the algorithm to be applied to nighttime images as well as underwater images. An interpolation is needed between our Naka–Rushton algorithm and a constant veil algorithm in order to cope with images with a more or less uniform veiling luminance. The proposed algorithms should be carefully evaluated for various applications in daylight, nighttime, and possibly underwater.
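The three ingredients summarized above (a smooth modulation of the pre-veil, an interpolation with a constant veil, and per-channel processing) can be illustrated with the following sketch. It is not the implementation evaluated in this paper: it uses the generic Naka–Rushton form r_max · xⁿ / (xⁿ + σⁿ), a linear blend controlled by a hypothetical weight `alpha`, and hand-picked parameters instead of values estimated from the image. All function and parameter names are assumptions.

```python
def naka_rushton(x, sigma, n=1.0, r_max=1.0):
    """Generic Naka-Rushton response: r_max * x^n / (x^n + sigma^n)."""
    if x <= 0.0:
        return 0.0
    xn = x ** n
    return r_max * xn / (xn + sigma ** n)


def modulated_veil(pre_veil, sigma, r_max, alpha=1.0, constant_veil=0.0):
    """Blend a Naka-Rushton modulated veil with a constant veil.

    alpha = 1 keeps only the modulated veil, alpha = 0 only the constant one.
    The linear blend is an assumption of this sketch.
    """
    return [
        alpha * naka_rushton(v, sigma, r_max=r_max) + (1.0 - alpha) * constant_veil
        for v in pre_veil
    ]


def per_channel_veil(pre_veil_rgb, params_rgb):
    """Apply the modulation independently to each color channel."""
    return [
        modulated_veil(channel, **params)
        for channel, params in zip(pre_veil_rgb, params_rgb)
    ]


# Toy pre-veil values for three pixels, from near (0.0) to far (1.0).
pre_veil = [0.0, 0.5, 1.0]
veil = modulated_veil(pre_veil, sigma=0.5, r_max=0.9)
blended = modulated_veil(pre_veil, sigma=0.5, r_max=0.9, alpha=0.5, constant_veil=0.4)
rgb_veil = per_channel_veil([pre_veil] * 3, [{"sigma": 0.5, "r_max": 0.9}] * 3)
```

The modulated veil grows smoothly with the pre-veil and saturates below `r_max`, which is the qualitative behavior expected from a function that restores more where the fog is deeper while limiting over-restoration.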

Author Contributions

Conceptualization, R.B., J.-P.T. and A.D.; methodology, A.D.; software, A.D.; validation, A.D.; formal analysis, A.D.; investigation, R.B., J.-P.T. and A.D.; resources, R.B. and J.-P.T.; data curation, A.D.; writing—original draft preparation, R.B., J.-P.T. and A.D.; writing—review and editing, R.B. and J.-P.T.; visualization, A.D.; supervision, R.B. and J.-P.T.; project administration, R.B., J.-P.T. and A.D.; funding acquisition, R.B. and J.-P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

FRIDA dataset: http://perso.lcpc.fr/tarel.jean-philippe/bdd/frida.html, accessed on 15 June 2021; RESIDE/SOTS dataset: https://sites.google.com/view/reside-dehaze-datasets/reside-v0, accessed on 15 June 2021; O-HAZE dataset: https://data.vision.ee.ethz.ch/cvl/ntire18//o-haze/, accessed on 13 May 2020; NTIRE20 dataset: https://data.vision.ee.ethz.ch/cvl/ntire20/, accessed on 4 June 2020; [15] code: http://perso.lcpc.fr/tarel.jean-philippe/, accessed on 30 October 2020; [6] code: https://github.com/sjtrny/Dark-Channel-Haze-Removal, accessed on 24 July 2020; [12] code: https://github.com/Lilin2015/Author—Single-Image-Dehazing-based-on-Dark-Channel-Prior-and-Energy-Minimization, accessed on 17 December 2020; [26] code: https://github.com/MayankSingal/PyTorch-Image-Dehazing, accessed on 30 June 2020; [23] code: https://github.com/caibolun/DehazeNet, accessed on 3 July 2020; [27] code: https://github.com/cddlyf/GCANet, accessed on 8 December 2020; [31] code: https://github.com/yu-li/yu-li.github.io/blob/master/index.html, accessed on 7 July 2020; [42] code: https://github.com/yuteng/nighttime-dehazing, accessed on 7 July 2020; [37] code: https://github.com/zhilin007/FFA-Net, accessed on 11 May 2021; [22] code: https://github.com/zhiqinzhu123/Fast-multi-exposure-fusion-for-image-dehazing, accessed on 11 May 2021.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Tremblay, M.; Halder, S.; De Charette, R.; Lalonde, J.F. Rain Rendering for Evaluating and Improving Robustness to Bad Weather. Int. J. Comput. Vis. 2020, 129, 341–360.

2. Huang, S.C.; Le, T.H.; Jaw, D.W. DSNet: Joint Semantic Learning for Object Detection in Inclement Weather Conditions. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 1.

3. Hautière, N.; Tarel, J.P.; Aubert, D. Towards Fog-Free In-Vehicle Vision Systems through Contrast Restoration. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

4. Duminil, A.; Tarel, J.P.; Brémond, R. Single Image Atmospheric Veil Removal Using New Priors. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021.

5. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 1956–1963.

6. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

7. He, K.; Sun, J.; Tang, X. Guided image filtering. In Proceedings of the 2010 European Conference on Computer Vision (ECCV), Crete, Greece, 5–11 September 2010; pp. 1–14.

8. Caraffa, L.; Tarel, J.P.; Charbonnier, P. The Guided Bilateral Filter: When the Joint/Cross Bilateral Filter Becomes Robust. IEEE Trans. Image Process. 2015, 24, 1199–1208.

9. Xu, H.; Guo, J.; Liu, Q.; Ye, L. Fast image dehazing using improved dark channel prior. In Proceedings of the 2012 IEEE International Conference on Information Science and Technology, Wuhan, China, 23–25 March 2012; pp. 663–667.

10. Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient Image Dehazing with Boundary Constraint and Contextual Regularization. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 617–624.

11. Li, Z.; Zheng, J. Edge-Preserving Decomposition-Based Single Image Haze Removal. IEEE Trans. Image Process. 2015, 24, 5432–5441.

12. Zhu, M.; He, B.; Wu, Q. Single Image Dehazing Based on Dark Channel Prior and Energy Minimization. IEEE Signal Process. Lett. 2018, 25, 174–178.

13. Jackson, J.; Kun, S.; Agyekum, K.O.; Oluwasanmi, A.; Suwansrikham, P. A Fast Single-Image Dehazing Algorithm Based on Dark Channel Prior and Rayleigh Scattering. IEEE Access 2020, 8, 73330–73339.

14. Zhang, C.; Zhang, Y.; Lu, J. Single image dehazing using a novel criterion based segmenting dark channel prior. In Proceedings of the 2019 Eleventh International Conference on Graphics and Image Processing (ICGIP); Pan, Z., Wang, X., Eds.; SPIE: Hangzhou, China, 2020; p. 121.

15. Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208.

16. Tarel, J.P.; Hautiere, N.; Caraffa, L.; Cord, A.; Halmaoui, H.; Gruyer, D. Vision Enhancement in Homogeneous and Heterogeneous Fog. IEEE Intell. Transp. Syst. Mag. 2012, 4, 6–20.

17. Negru, M.; Nedevschi, S.; Peter, R.I. Exponential Contrast Restoration in Fog Conditions for Driving Assistance. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2257–2268.

18. Ancuti, C.O.; Ancuti, C.; Bekaert, P. Effective single image dehazing by fusion. In Proceedings of the 2010 IEEE International Conference on Image Processing (ICIP), Hong Kong, China, 26–29 September 2010; pp. 3541–3544.

19. Ancuti, C.O.; Ancuti, C. Single Image Dehazing by Multi-Scale Fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282.

20. Wang, Q.; Zhao, L.; Tang, G.; Zhao, H.; Zhang, X. Single-Image Dehazing Using Color Attenuation Prior Based on Haze-Lines. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 5080–5087.

21. Zheng, M.; Qi, G.; Zhu, Z.; Li, Y.; Wei, H.; Liu, Y. Image Dehazing by an Artificial Image Fusion Method Based on Adaptive Structure Decomposition. IEEE Sens. J. 2020, 20, 8062–8072.

22. Zhu, Z.; Wei, H.; Hu, G.; Li, Y.; Qi, G.; Mazur, N. A Novel Fast Single Image Dehazing Algorithm Based on Artificial Multiexposure Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 1–23.

23. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

24. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single Image Dehazing via Multi-scale Convolutional Neural Networks. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; pp. 154–169.

25. Meinhardt, T.; Moeller, M.; Hazirbas, C.; Cremers, D. Learning Proximal Operators: Using Denoising Networks for Regularizing Inverse Imaging Problems. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1799–1808.

26. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. An All-in-One Network for Dehazing and Beyond. arXiv 2017, arXiv:1707.06543.

27. Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated Context Aggregation Network for Image Dehazing and Deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1375–1383, ISSN 1550-5790.

28. Engin, D.; Genc, A.; Ekenel, H.K. Cycle-Dehaze: Enhanced CycleGAN for Single Image Dehazing. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 938–9388.

29. Zhu, H.; Peng, X.; Chandrasekhar, V.; Li, L.; Lim, J.H. DehazeGAN: When Image Dehazing Meets Differential Programming. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018; p. 7.

30. Naka, K.I.; Rushton, W.A.H. S-potentials from color units in the retina of fish (Cyprinidae). J. Physiol. 1966, 185.

31. Li, Y.; Tan, R.T.; Brown, M.S. Nighttime Haze Removal with Glow and Multiple Light Colors. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 226–234.

32. Ancuti, C.; Ancuti, C.O.; De Vleeschouwer, C.; Bovik, A.C. Night-time dehazing by fusion. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2256–2260.

33. Zhang, J.; Cao, Y.; Fang, S.; Kang, Y.; Chen, C.W. Fast Haze Removal for Nighttime Image Using Maximum Reflectance Prior. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7016–7024.

34. Lou, W.; Li, Y.; Yang, G.; Chen, C.; Yang, H.; Yu, T. Integrating Haze Density Features for Fast Nighttime Image Dehazing. IEEE Access 2020, 8, 113318–113330.

35. Petzold, D. What the Fog-Street Photography by Mark Broyer. WE AND THE COLOR. 2017. Available online: https://weandthecolor.com/what-the-fog-street-photography-mark-broyer/92328 (accessed on 29 April 2021).

36. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking Single-Image Dehazing and Beyond. IEEE Trans. Image Process. 2019, 28, 492–505.

37. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. Proc. AAAI Conf. Artif. Intell. 2020, 34, 11908–11915.

38. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

39. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

40. Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-HAZE: A Dehazing Benchmark With Real Hazy and Haze-Free Outdoor Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 754–762.

41. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

42. Yu, T.; Song, K.; Miao, P.; Yang, G.; Yang, H.; Chen, C. Nighttime Single Image Dehazing via Pixel-Wise Alpha Blending. IEEE Access 2019, 7, 114619–114630.

43. Zhang, J.; Cao, Y.; Zha, Z.J.; Tao, D. Nighttime Dehazing with a Synthetic Benchmark. In Proceedings of the 28th ACM International Conference on Multimedia (ICM), Seattle, WA, USA, 12–16 October 2020; pp. 2355–2363.

44. Hirschmüller, H.; Scharstein, D. Evaluation of cost functions for stereo matching. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2007), Minneapolis, MN, USA, 17–22 June 2007.

45. Wang, Y.; Song, W.; Fortino, G.; Qi, L.Z.; Zhang, W.; Liotta, A. An Experimental-Based Review of Image Enhancement and Image Restoration Methods for Underwater Imaging. IEEE Access 2019, 7, 140233–140251.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).