Description and Evaluation of the Fine Particulate Matter Forecasts in the NCAR Regional Air Quality Forecasting System

Abstract

1. Introduction

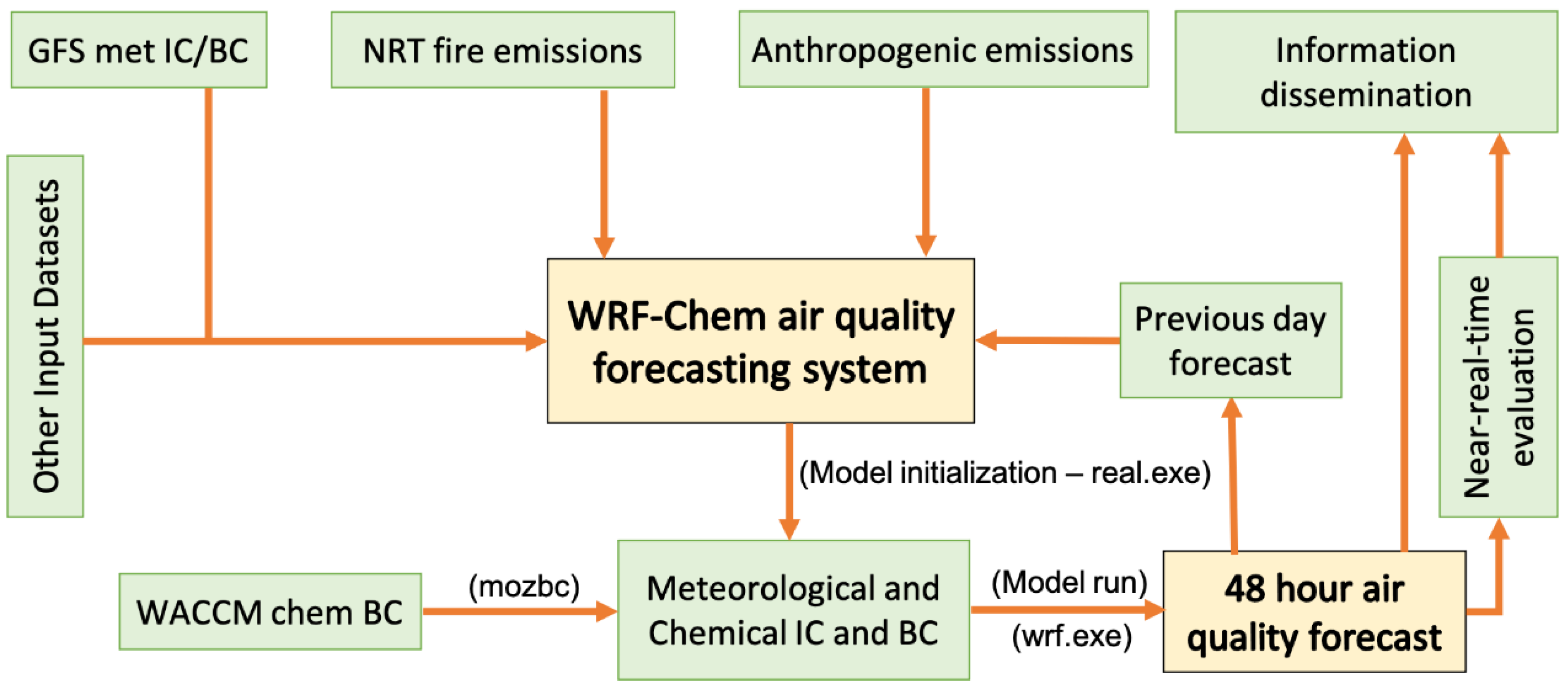

2. Description of the NCAR Air Quality Forecasting System

2.1. The Model Configuration

2.2. Challenges and Mitigation Strategies

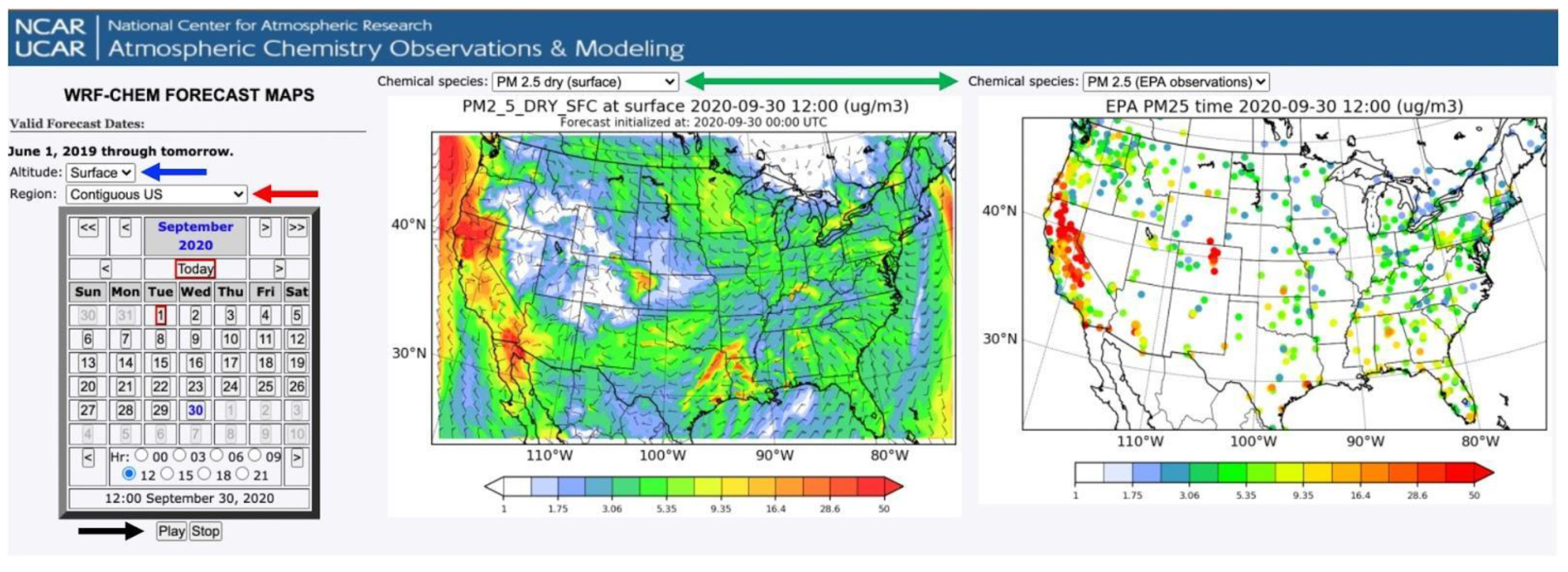

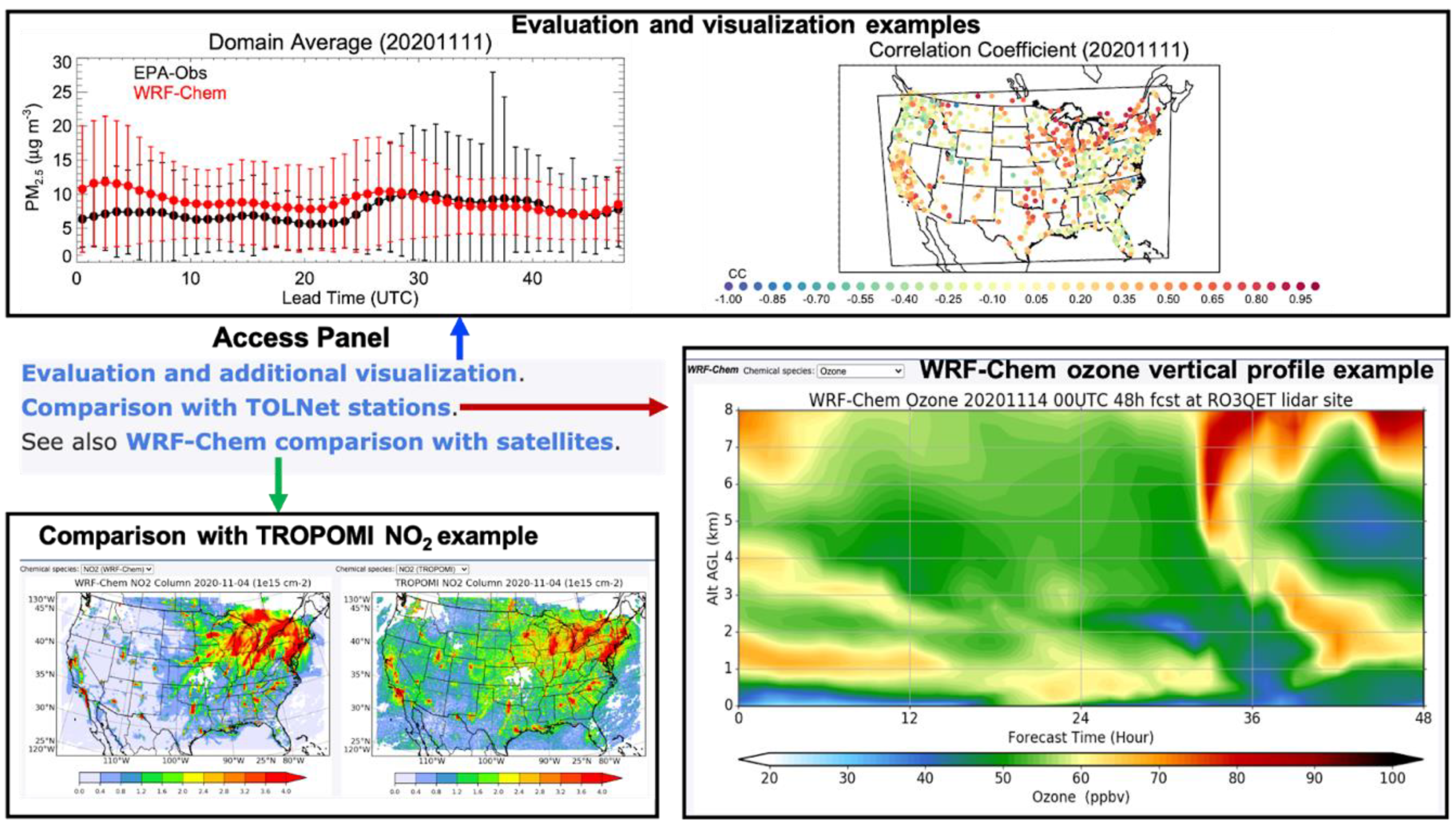

2.3. Information Dissemination System

3. Observations

4. Results and Discussion

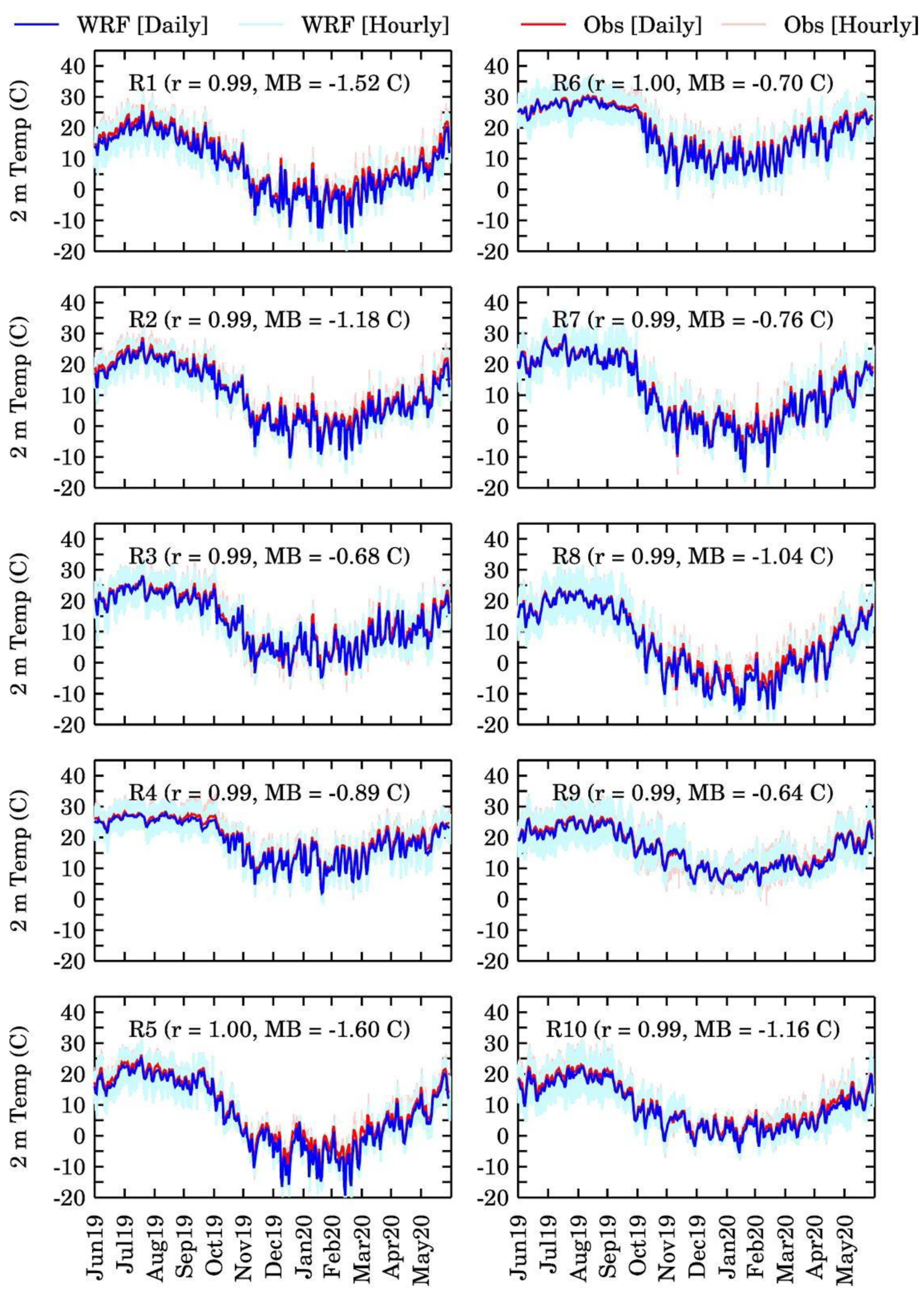

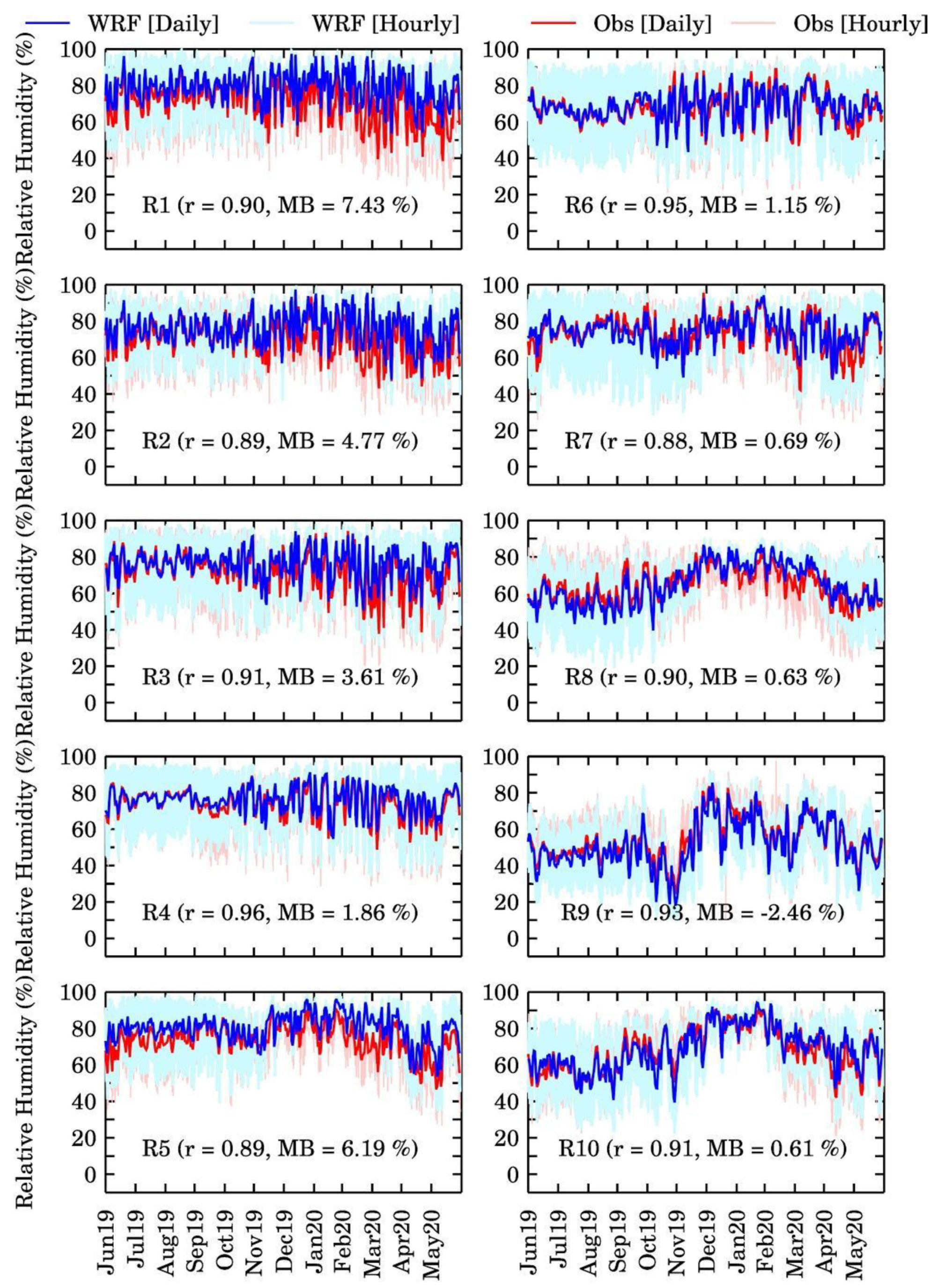

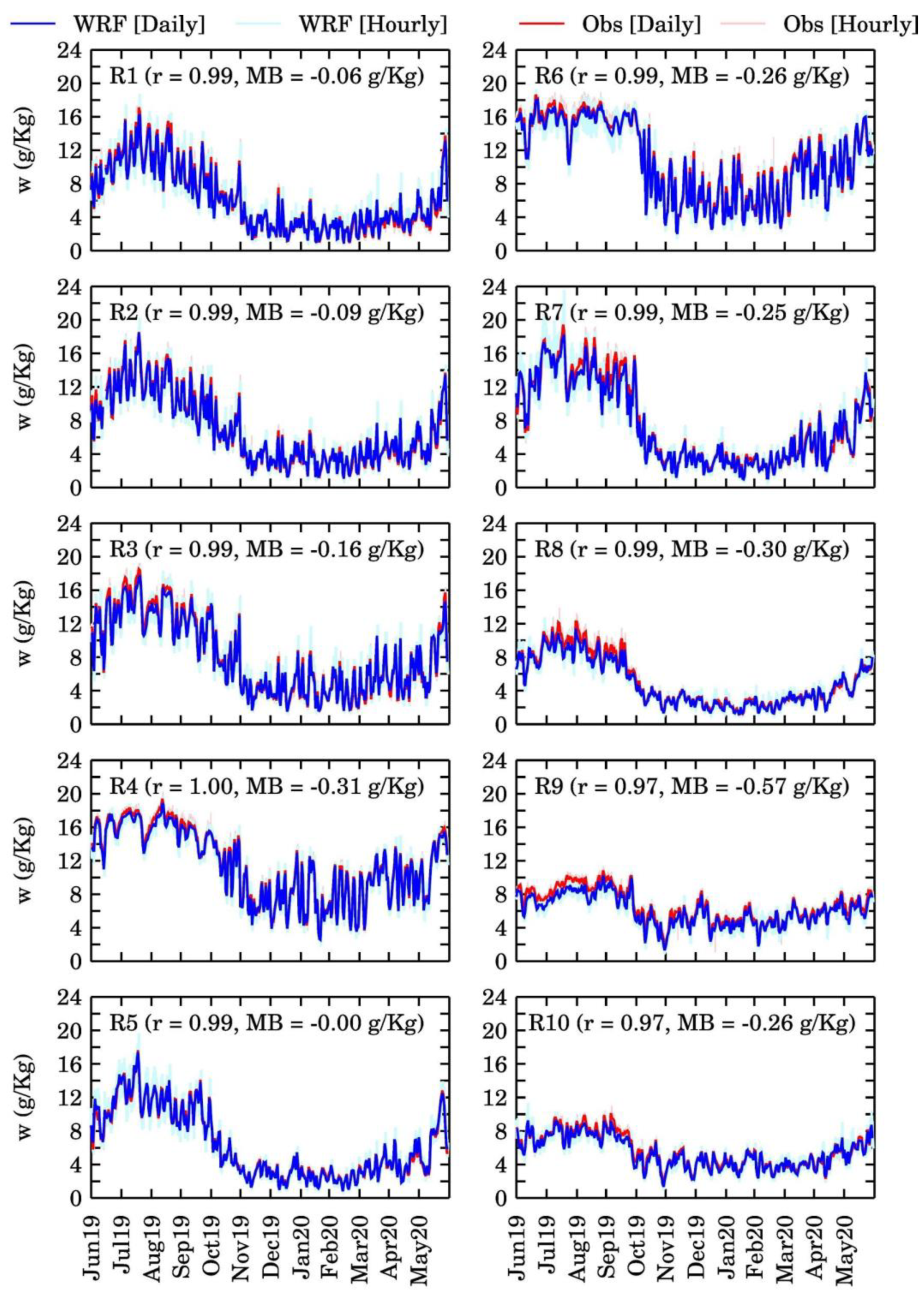

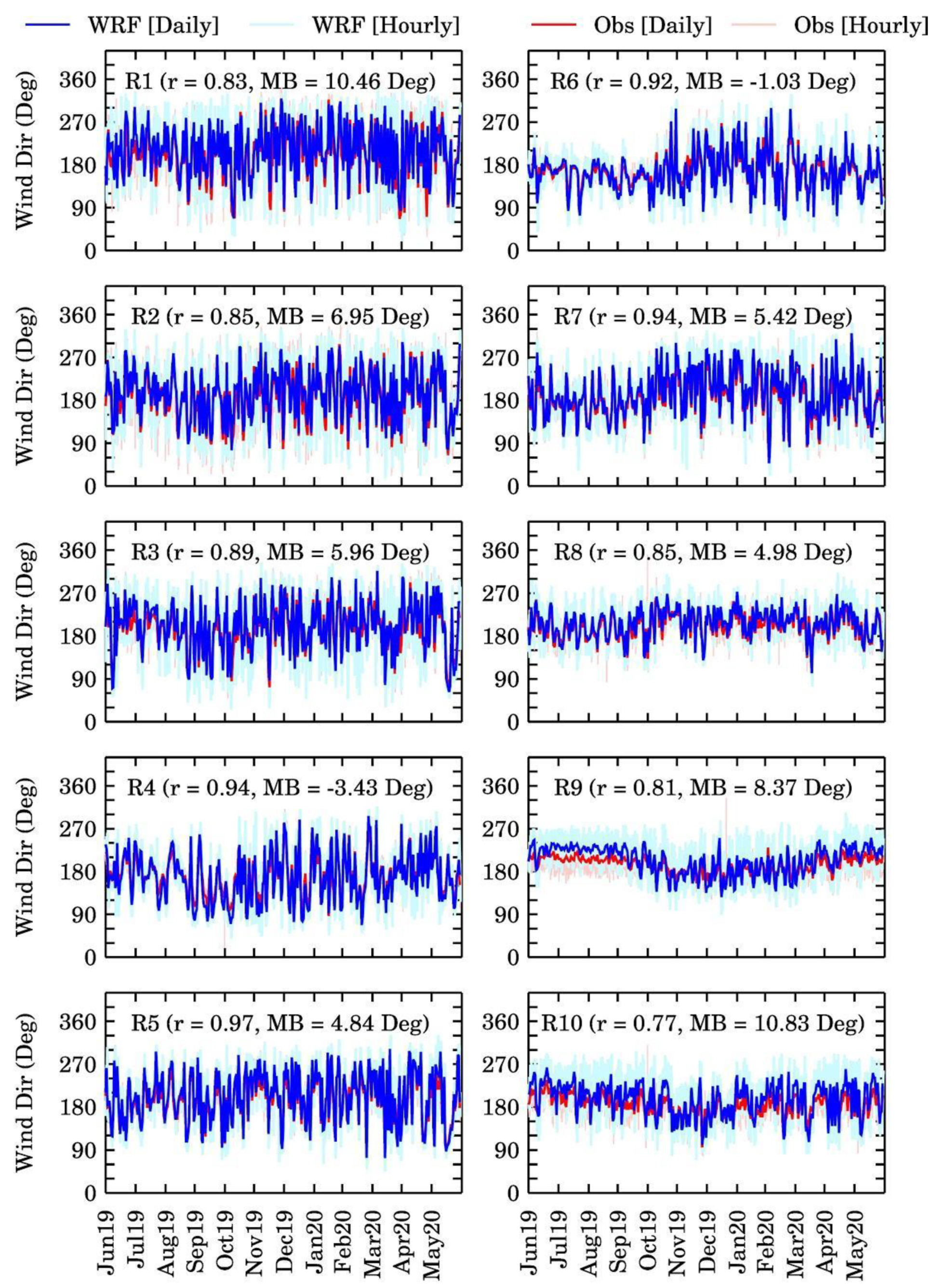

4.1. Meteorological Evaluation

$$\cdots \times 10^{-4}\left(1 - 10^{-8.29692\,(T_2/273.15 - 1)}\right) + 0.42873 \times 10^{-3}\left(10^{4.76955\,(1 - 273.15/T_2)} - 1\right) - 2.2195983$$

$$\cdots \times 10^{-4}\left(1 - 10^{-8.29692\,(T_d/273.15 - 1)}\right) + 0.42873 \times 10^{-3}\left(10^{4.76955\,(1 - 273.15/T_d)} - 1\right) - 2.2195983$$
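The fragments above appear to be two evaluations of a Goff-type saturation vapor pressure expression, one at the 2 m temperature T2 and one at the dew point Td, which is the usual route to relative humidity, RH = 100 × e(Td)/e(T2). The leading coefficients are lost from this text, so the minimal sketch below substitutes the WMO form of the Goff (1957) equation (reference temperature 273.16 K, result in hPa). Its constants differ slightly from the fragments (for example 8.2969 rather than 8.29692). The constant offset (−2.2195983 above, which appears to correspond to pressure in atmospheres) cancels in the ratio. The helper names `saturation_vapor_pressure` and `relative_humidity` are hypothetical.

```python
import math

# ASSUMPTION: WMO form of the Goff (1957) saturation vapor pressure over
# liquid water. These constants come from the WMO guide, not from the paper,
# whose leading coefficients are missing from the extracted text.
T_REF = 273.16  # K, triple point of water used by the WMO form


def saturation_vapor_pressure(t_kelvin: float) -> float:
    """Saturation vapor pressure over liquid water in hPa (hypothetical helper)."""
    ratio = T_REF / t_kelvin
    log10_e = (
        10.79574 * (1.0 - ratio)
        - 5.02800 * math.log10(t_kelvin / T_REF)
        + 1.50475e-4 * (1.0 - 10.0 ** (-8.2969 * (t_kelvin / T_REF - 1.0)))
        + 0.42873e-3 * (10.0 ** (4.76955 * (1.0 - ratio)) - 1.0)
        + 0.78614  # offset for hPa; it cancels in the RH ratio below
    )
    return 10.0 ** log10_e


def relative_humidity(t2_kelvin: float, td_kelvin: float) -> float:
    """RH (%) from 2 m temperature and dew point: 100 * e_s(Td) / e_s(T2)."""
    return 100.0 * saturation_vapor_pressure(td_kelvin) / saturation_vapor_pressure(t2_kelvin)


if __name__ == "__main__":
    # Example: T2 = 25 °C, Td = 15 °C
    print(round(relative_humidity(298.15, 288.15), 1))
```

Because only the ratio of the two pressures enters RH, the choice of pressure unit (hPa here, atmospheres in the fragments) does not change the result.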

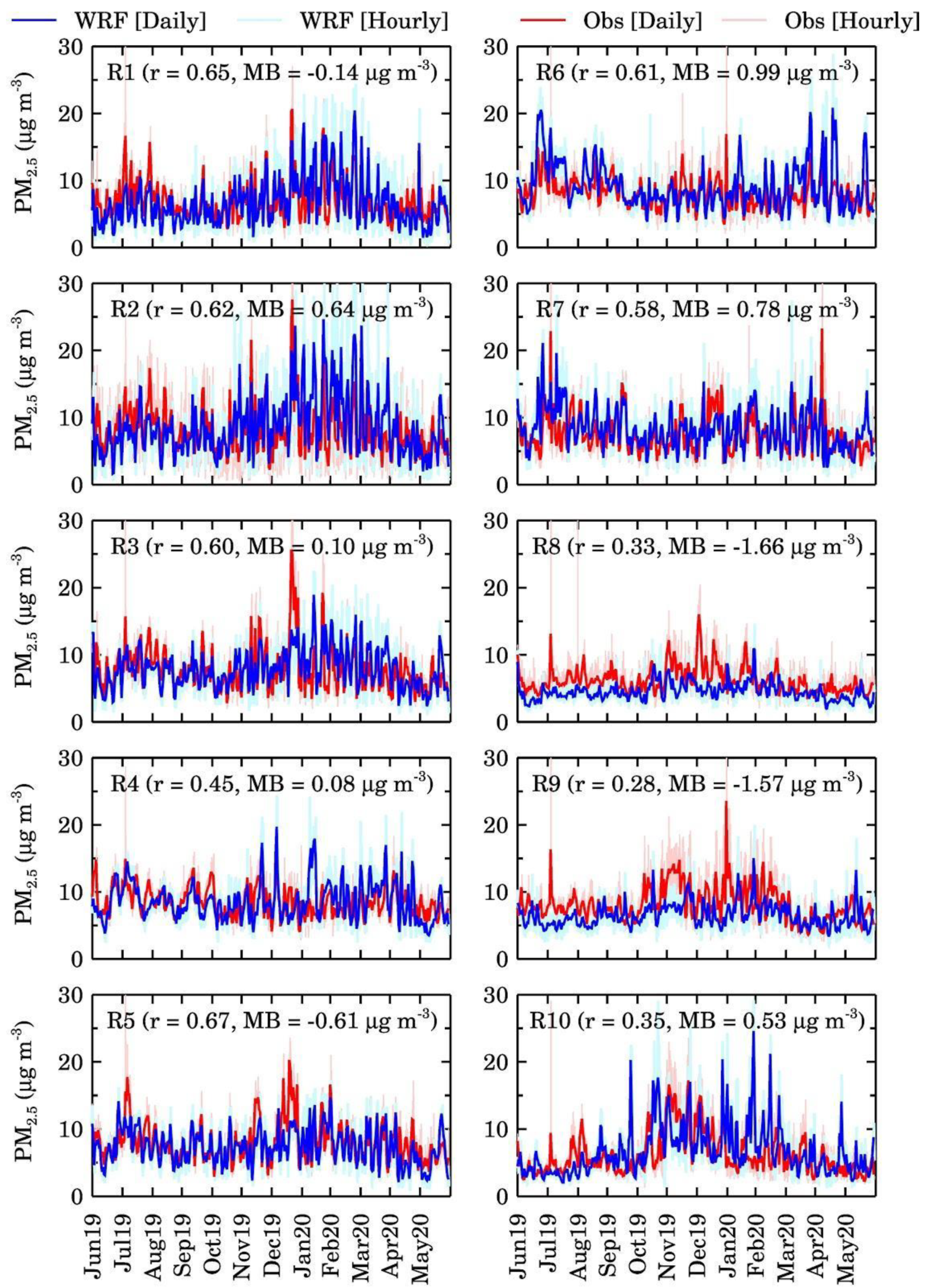

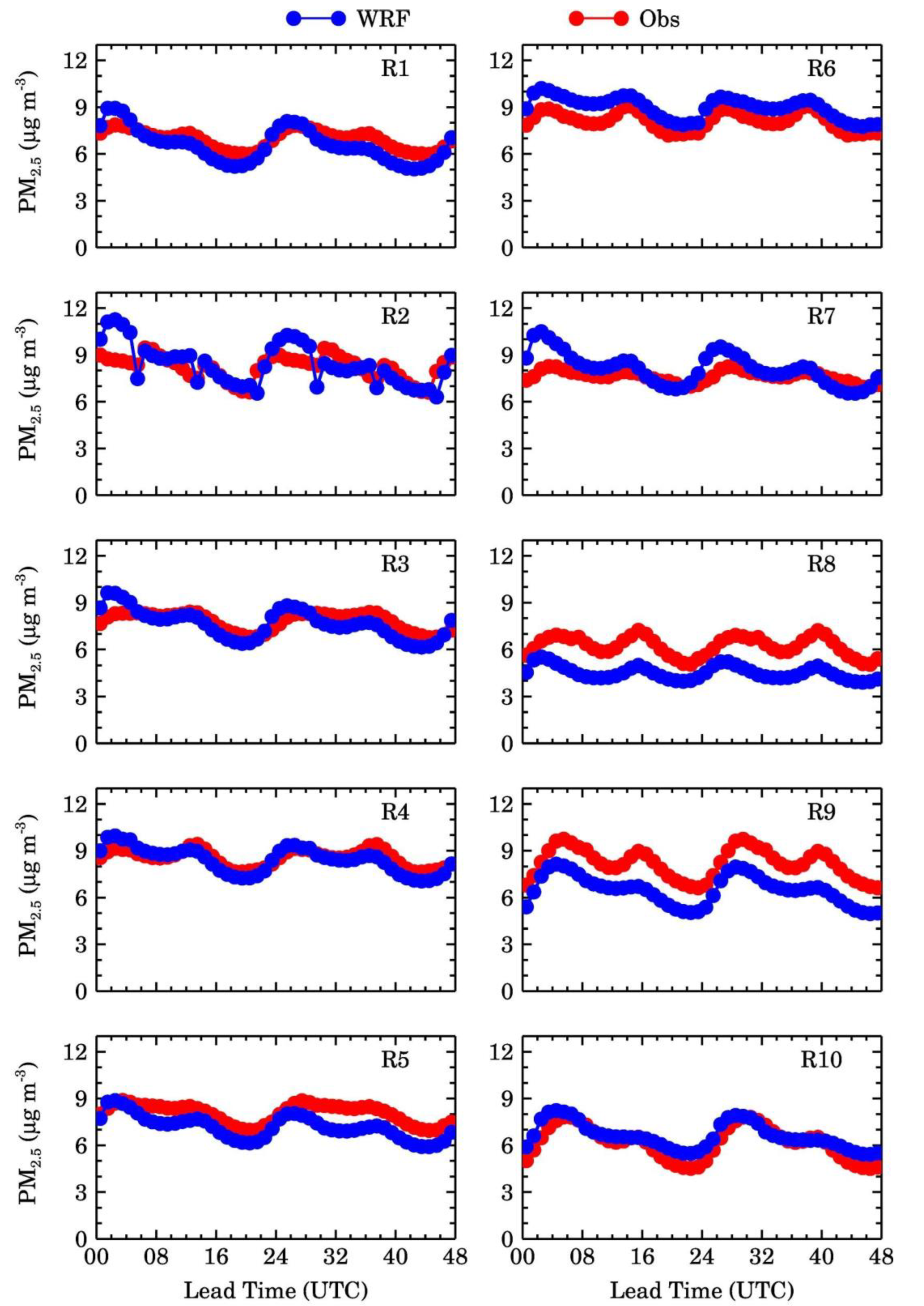

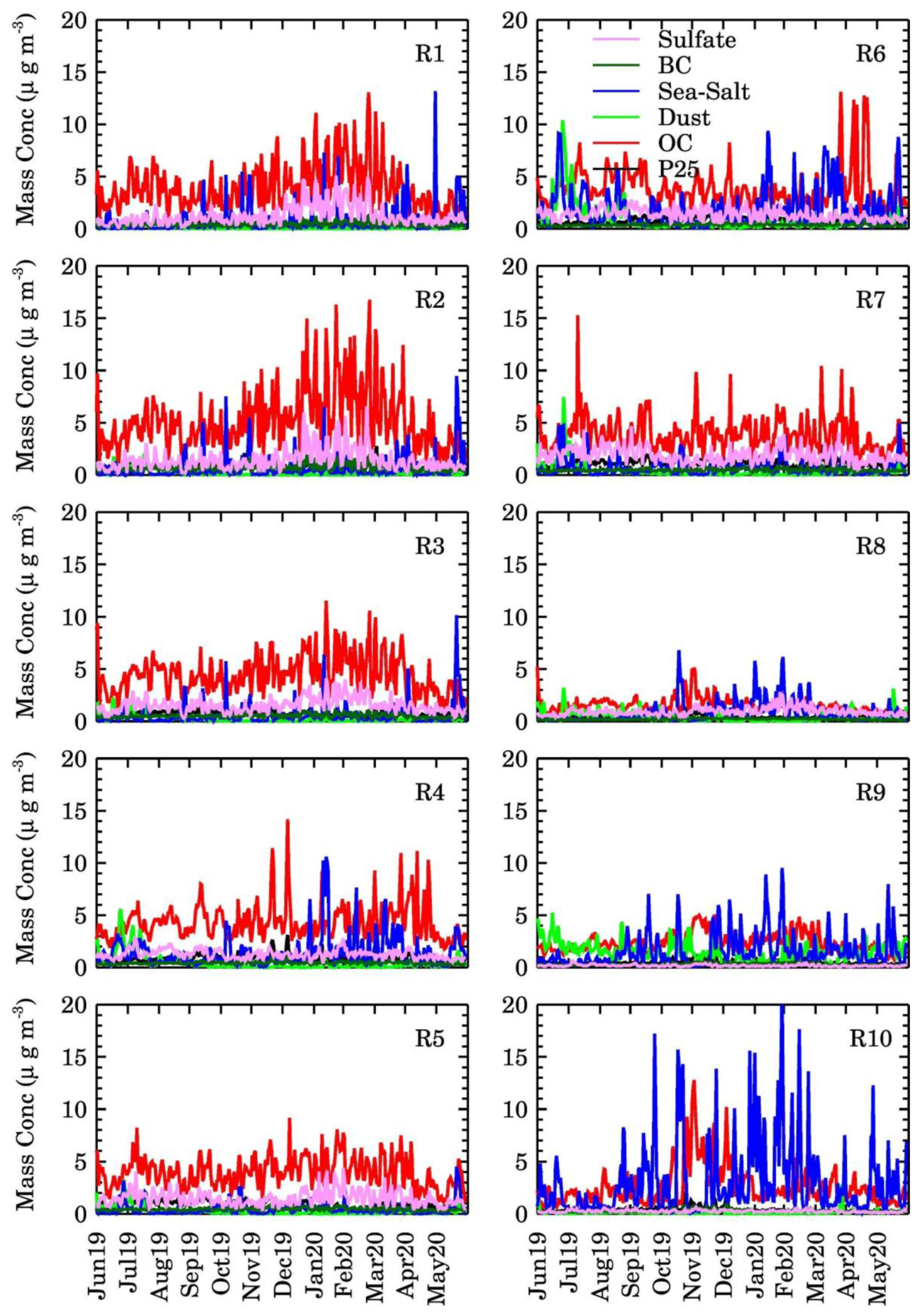

4.2. Surface PM2.5 Evaluation

$$\cdots + 0.942 \times \mathrm{SEAS2} + 1.375 \times \mathrm{SULF} + \mathrm{P25}$$
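The surviving terms of the PM2.5 diagnostic show a weighted sum of aerosol tracers: SEAS2 scaled by 0.942, SULF by 1.375, and P25 added unscaled. A minimal sketch of such a weighted sum follows. Only these three weights come from the text. The rest of the equation is missing, so the hypothetical helper `pm25_concentration` accepts any further weights through a hypothetical `extra_weights` argument rather than guessing them. The sketch also assumes, as is typical for GOCART tracers in WRF-Chem, that inputs are mass mixing ratios in µg/kg of air converted to µg m⁻³ by multiplying by air density. The 1.375 factor is commonly interpreted as the ammonium sulfate to sulfate molar-mass ratio (132.14/96.06).

```python
from typing import Mapping, Optional

# Weights recoverable from the extracted equation. Other GOCART terms are
# missing from the text and must be supplied by the caller (ASSUMPTION).
KNOWN_WEIGHTS = {
    "SEAS2": 0.942,  # presumably the PM2.5 fraction of sea-salt bin 2
    "SULF": 1.375,   # sulfate scaled to ammonium sulfate mass (132.14 / 96.06)
    "P25": 1.0,      # unspeciated primary PM2.5, added as-is
}


def pm25_concentration(
    mixing_ratios_ug_per_kg: Mapping[str, float],
    air_density_kg_per_m3: float,
    extra_weights: Optional[Mapping[str, float]] = None,
) -> float:
    """Weighted sum of aerosol tracers converted to µg m^-3 (hypothetical helper)."""
    weights = dict(KNOWN_WEIGHTS)
    if extra_weights:
        weights.update(extra_weights)
    # Species absent from the input contribute zero.
    total = sum(w * mixing_ratios_ug_per_kg.get(name, 0.0) for name, w in weights.items())
    # µg per kg of air times kg of air per m^3 gives µg per m^3.
    return total * air_density_kg_per_m3


if __name__ == "__main__":
    tracers = {"SEAS2": 10.0, "SULF": 4.0, "P25": 2.0}
    print(pm25_concentration(tracers, air_density_kg_per_m3=1.2))
```

Keeping the weights in a dictionary makes it straightforward to add the missing species terms once their coefficients are confirmed from the full paper.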

5. Conclusions and Outlook

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Burnett, R.T.; Pope, C.A.; Ezzati, M.; Olives, C.; Lim, S.S.; Mehta, S.; Shin, H.H.; Singh, G.; Hubbell, B.; Brauer, M.; et al. An Integrated Risk Function for Estimating the Global Burden of Disease Attributable to Ambient Fine Particulate Matter Exposure. Environ. Health Perspect. 2014, 122, 397–403.

- Fann, N.; Lamson, A.D.; Anenberg, S.C.; Wesson, K.; Risley, D.; Hubbell, B.J. Estimating the National Public Health Burden Associated with Exposure to Ambient PM2.5 and Ozone. Risk Anal. 2012, 32, 81–95.

- Di, Q.; Wang, Y.; Zanobetti, A.; Wang, Y.; Koutrakis, P.; Choirat, C.; Dominici, F.; Schwartz, J.D. Air Pollution and Mortality in the Medicare Population. New Engl. J. Med. 2017, 376, 2513–2522.

- Im, U.; Brandt, J.; Geels, C.; Hansen, K.M.; Christensen, J.H.; Andersen, M.S.; Solazzo, E.; Kioutsioukis, I.; Alyuz, U.; Balzarini, A.; et al. Assessment and Economic Valuation of Air Pollution Impacts on Human Health over Europe and the United States as Calculated by a Multi-Model Ensemble in the Framework of AQMEII3. Atmos. Chem. Phys. 2018, 18, 5967–5989.

- Lee, P.; McQueen, J.; Stajner, I.; Huang, J.; Pan, L.; Tong, D.; Kim, H.; Tang, Y.; Kondragunta, S.; Ruminski, M.; et al. NAQFC Developmental Forecast Guidance for Fine Particulate Matter (PM2.5). Weather Forecast. 2017, 32, 343–360.

- Chouza, F.; Leblanc, T.; Brewer, M.; Wang, P.; Piazzolla, S.; Pfister, G.; Kumar, R.; Drews, C.; Tilmes, S.; Emmons, L. The Impact of Los Angeles Basin Pollution and Stratospheric Intrusions on the Surrounding San Gabriel Mountains as Seen by Surface Measurements, Lidar, and Numerical Models. Atmos. Chem. Phys. Discuss. 2020, 1–29.

- Grell, G.A.; Peckham, S.E.; Schmitz, R.; McKeen, S.A.; Frost, G.; Skamarock, W.C.; Eder, B. Fully Coupled “Online” Chemistry within the WRF Model. Atmos. Environ. 2005, 39, 6957–6975.

- Powers, J.G.; Klemp, J.B.; Skamarock, W.C.; Davis, C.A.; Dudhia, J.; Gill, D.O.; Coen, J.L.; Gochis, D.J.; Ahmadov, R.; Peckham, S.E.; et al. The Weather Research and Forecasting Model: Overview, System Efforts, and Future Directions. Bull. Am. Meteorol. Soc. 2017, 98, 1717–1737.

- Marsh, D.R.; Mills, M.J.; Kinnison, D.E.; Lamarque, J.-F.; Calvo, N.; Polvani, L.M. Climate Change from 1850 to 2005 Simulated in CESM1(WACCM). J. Clim. 2013, 26, 7372–7391.

- Wiedinmyer, C.; Akagi, S.K.; Yokelson, R.J.; Emmons, L.K.; Al-Saadi, J.A.; Orlando, J.J.; Soja, A.J. The Fire INventory from NCAR (FINN): A High Resolution Global Model to Estimate the Emissions from Open Burning. Geosci. Model. Dev. 2011, 4, 625–641.

- Freitas, S.R.; Longo, K.M.; Chatfield, R.; Latham, D.; Silva Dias, M.A.F.; Andreae, M.O.; Prins, E.; Santos, J.C.; Gielow, R.; Carvalho, J.A., Jr. Including the Sub-Grid Scale Plume Rise of Vegetation Fires in Low Resolution Atmospheric Transport Models. Atmos. Chem. Phys. 2007, 7, 3385–3398.

- Kumar, R.; Ghude, S.D.; Biswas, M.; Jena, C.; Alessandrini, S.; Debnath, S.; Kulkarni, S.; Sperati, S.; Soni, V.K.; Nanjundiah, R.S.; et al. Enhancing Accuracy of Air Quality and Temperature Forecasts During Paddy Crop Residue Burning Season in Delhi Via Chemical Data Assimilation. J. Geophys. Res. Atmos. 2020, 125, e2020JD033019.

- Guenther, A.B.; Jiang, X.; Heald, C.L.; Sakulyanontvittaya, T.; Duhl, T.; Emmons, L.K.; Wang, X. The Model of Emissions of Gases and Aerosols from Nature Version 2.1 (MEGAN2.1): An Extended and Updated Framework for Modeling Biogenic Emissions. Geosci. Model. Dev. 2012, 5, 1471–1492.

- Guenther, A.; Karl, T.; Harley, P.; Wiedinmyer, C.; Palmer, P.I.; Geron, C. Estimates of Global Terrestrial Isoprene Emissions Using MEGAN (Model of Emissions of Gases and Aerosols from Nature). Atmos. Chem. Phys. 2006, 6, 3181–3210.

- Ginoux, P.; Chin, M.; Tegen, I.; Prospero, J.M.; Holben, B.; Dubovik, O.; Lin, S.-J. Sources and Distributions of Dust Aerosols Simulated with the GOCART Model. J. Geophys. Res. Atmos. 2001, 106, 20255–20273.

- Gong, S.L.; Barrie, L.A.; Blanchet, J.-P. Modeling Sea-Salt Aerosols in the Atmosphere: 1. Model Development. J. Geophys. Res. Atmos. 1997, 102, 3805–3818.

- Emmons, L.K.; Walters, S.; Hess, P.G.; Lamarque, J.-F.; Pfister, G.G.; Fillmore, D.; Granier, C.; Guenther, A.; Kinnison, D.; Laepple, T.; et al. Description and Evaluation of the Model for Ozone and Related Chemical Tracers, Version 4 (MOZART-4). Geosci. Model. Dev. 2010, 3, 43–67.

- Chin, M.; Ginoux, P.; Kinne, S.; Torres, O.; Holben, B.N.; Duncan, B.N.; Martin, R.V.; Logan, J.A.; Higurashi, A.; Nakajima, T. Tropospheric Aerosol Optical Thickness from the GOCART Model and Comparisons with Satellite and Sun Photometer Measurements. J. Atmos. Sci. 2002, 59, 461–483.

- Chin, M.; Rood, R.B.; Lin, S.-J.; Müller, J.-F.; Thompson, A.M. Atmospheric Sulfur Cycle Simulated in the Global Model GOCART: Model Description and Global Properties. J. Geophys. Res. Atmos. 2000, 105, 24671–24687.

- Thompson, G.; Field, P.R.; Rasmussen, R.M.; Hall, W.D. Explicit Forecasts of Winter Precipitation Using an Improved Bulk Microphysics Scheme. Part II: Implementation of a New Snow Parameterization. Mon. Weather Rev. 2008, 136, 5095–5115.

- Iacono, M.J.; Delamere, J.S.; Mlawer, E.J.; Shephard, M.W.; Clough, S.A.; Collins, W.D. Radiative Forcing by Long-Lived Greenhouse Gases: Calculations with the AER Radiative Transfer Models. J. Geophys. Res. Atmos. 2008, 113.

- Janjic, Z.I. The step-mountain Eta coordinate model: Further developments of the convection, viscous sublayer and turbulence closure schemes. Mon. Weather Rev. 1994, 122, 927–945.

- Niu, G.-Y.; Yang, Z.-L.; Mitchell, K.E.; Chen, F.; Ek, M.B.; Barlage, M.; Kumar, A.; Manning, K.; Niyogi, D.; Rosero, E.; et al. The Community Noah Land Surface Model with Multiparameterization Options (Noah-MP): 1. Model Description and Evaluation with Local-Scale Measurements. J. Geophys. Res. Atmos. 2011, 116.

- Hong, S.-Y.; Noh, Y.; Dudhia, J. A New Vertical Diffusion Package with an Explicit Treatment of Entrainment Processes. Mon. Weather Rev. 2006, 134, 2318–2341.

- Grell, G.A.; Freitas, S.R. A Scale and Aerosol Aware Stochastic Convective Parameterization for Weather and Air Quality Modeling. Atmos. Chem. Phys. 2014, 14, 5233–5250.

- Wesely, M.L. Parameterization of Surface Resistances to Gaseous Dry Deposition in Regional-Scale Numerical Models. Atmos. Environ. 1989, 23, 1293–1304.

- Neu, J.L.; Prather, M.J. Toward a More Physical Representation of Precipitation Scavenging in Global Chemistry Models: Cloud Overlap and Ice Physics and Their Impact on Tropospheric Ozone. Atmos. Chem. Phys. 2012, 12, 3289–3310.

- UCAR. WRF-Chem Tracers for FIREX-AQ. 2021. Available online: https://www.acom.ucar.edu/firex-aq/tracers.shtml (accessed on 20 February 2021).

- UCAR. WRF-Chem Forecast maps. 2021. Available online: https://www.acom.ucar.edu/firex-aq/forecast.shtml (accessed on 20 February 2021).

- Goff, J.A. Saturation Pressure of Water on the New Kelvin Scale. In Humidity and Moisture: Measurement and Control in Science and Industry; Reinhold Publishing: New York, NY, USA, 1965.

- Mass, C.; Ovens, D. WRF Model Physics: Progress, Problems and Perhaps Some Solutions. In Proceedings of the 11th WRF Users’ Workshop, Boulder, CO, USA, 21 June 2010.

- Cheng, W.Y.Y.; Steenburgh, W.J. Evaluation of Surface Sensible Weather Forecasts by the WRF and the Eta Models over the Western United States. Weather Forecast. 2005, 20, 812–821.

- Kumar, R.; Delle Monache, L.; Bresch, J.; Saide, P.E.; Tang, Y.; Liu, Z.; da Silva, A.M.; Alessandrini, S.; Pfister, G.; Edwards, D.; et al. Toward Improving Short-Term Predictions of Fine Particulate Matter Over the United States Via Assimilation of Satellite Aerosol Optical Depth Retrievals. J. Geophys. Res. Atmos. 2019, 124, 2753–2773.

- Tang, Y.; Pagowski, M.; Chai, T.; Pan, L.; Lee, P.; Baker, B.; Kumar, R.; Delle Monache, L.; Tong, D.; Kim, H.-C. A Case Study of Aerosol Data Assimilation with the Community Multi-Scale Air Quality Model over the Contiguous United States Using 3D-Var and Optimal Interpolation Methods. Geosci. Model. Dev. 2017, 10, 4743–4758.

- Gan, C.-M.; Hogrefe, C.; Mathur, R.; Pleim, J.; Xing, J.; Wong, D.; Gilliam, R.; Pouliot, G.; Wei, C. Assessment of the Effects of Horizontal Grid Resolution on Long-Term Air Quality Trends Using Coupled WRF-CMAQ Simulations. Atmos. Environ. 2016, 132, 207–216.

- Knote, C.; Hodzic, A.; Jimenez, J.L. The Effect of Dry and Wet Deposition of Condensable Vapors on Secondary Organic Aerosols Concentrations over the Continental US. Atmos. Chem. Phys. 2015, 15, 12413–12443.

- Hodzic, A.; Aumont, B.; Knote, C.; Lee-Taylor, J.; Madronich, S.; Tyndall, G. Volatility Dependence of Henry’s Law Constants of Condensable Organics: Application to Estimate Depositional Loss of Secondary Organic Aerosols. Geophys. Res. Lett. 2014, 41, 4795–4804.

| Atmospheric Process | Parameterization |
|---|---|
| Cloud Microphysics | Thompson scheme [20] |
| Short- and Long-wave Radiation | Rapid Radiative Transfer Model for GCMs [21] |
| Surface Layer | Eta Similarity [22] |
| Land Surface Model | Unified Noah Land Surface Model [23] |
| Planetary Boundary Layer | Yonsei University Scheme (YSU) [24] |
| Cumulus | Grell-Freitas ensemble scheme [25] |
| Dry Deposition | Wesely [26] |
| Wet Deposition | Neu and Prather [27] |
| Photolysis | Troposphere Ultraviolet Visible (TUV) model |
| Fire Plume Rise | Freitas et al. [11] |
| Soil NOx Emissions | MEGAN v2.0.4 [13,14] |

| Variable | Forecast Day | r | Mean Bias | Root Mean Squared Error |
|---|---|---|---|---|
| Temperature (°C) | Day-1 | 0.99 to 1.00 | −1.52 to −0.64 | 0.88 to 1.74 |
| | Day-2 | 0.99 to 1.00 | −1.79 to −0.62 | 0.84 to 1.73 |
| Relative humidity (%) | Day-1 | 0.88 to 0.96 | 0.61 to 7.43 | 3.39 to 9.21 |
| | Day-2 | 0.88 to 0.95 | 0.62 to 7.98 | 2.98 to 8.71 |
| Water vapor mixing ratio (g/kg) | Day-1 | 0.97 to 1.00 | −0.57 to −0.01 | 0.32 to 0.68 |
| | Day-2 | 0.97 to 0.99 | −0.71 to −0.04 | 0.35 to 0.90 |
| Surface Pressure (hPa) | Day-1 | 0.63 to 1.00 | −7.55 to 0.01 | 0.34 to 7.61 |
| | Day-2 | 0.62 to 0.99 | −7.67 to 0.05 | 0.61 to 7.74 |
| Wind Speed (m/s) | Day-1 | 0.64 to 0.92 | 0.36 to 1.25 | 0.53 to 1.33 |
| | Day-2 | 0.32 to 0.74 | 0.25 to 1.36 | 0.49 to 1.45 |
| Wind Direction (degrees) | Day-1 | 0.77 to 0.97 | −3.43 to 10.83 | 10.66 to 25.79 |
| | Day-2 | 0.76 to 0.95 | −5.83 to 14.93 | 13.02 to 26.90 |
| PM2.5 (µg m⁻³), all sites | Day-1 | 0.28 to 0.67 | −1.66 to 0.99 | 2.31 to 3.84 |
| | Day-2 | 0.29 to 0.67 | −1.66 to 0.99 | 2.44 to 3.77 |
| PM2.5 (µg m⁻³), urban sites | Day-1 | 0.24 to 0.64 | −1.88 to 1.06 | 2.43 to 4.06 |
| | Day-2 | 0.25 to 0.64 | −1.85 to 1.04 | 2.57 to 4.01 |
| PM2.5 (µg m⁻³), suburban sites | Day-1 | 0.24 to 0.67 | −2.08 to 1.00 | 2.40 to 3.74 |
| | Day-2 | 0.25 to 0.67 | −2.07 to 0.99 | 2.53 to 3.66 |
| PM2.5 (µg m⁻³), rural sites | Day-1 | 0.24 to 0.67 | −2.08 to 1.00 | 2.31 to 4.02 |
| | Day-2 | 0.25 to 0.67 | −2.07 to 0.99 | 2.33 to 4.03 |
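The three statistics in the evaluation table (correlation r, mean bias, and RMSE) can be computed for each site and forecast day along the lines of the sketch below. It assumes that mean bias is defined as model minus observation, so negative values indicate underprediction, and that wind-direction differences are wrapped into [−180°, 180°) before averaging. Neither convention is stated in this section, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def wrap_direction_difference(diff_deg: float) -> float:
    """Map a wind-direction difference into [-180, 180) degrees."""
    return (diff_deg + 180.0) % 360.0 - 180.0


def evaluation_stats(
    model: Sequence[float], obs: Sequence[float], circular: bool = False
) -> Tuple[float, float, float]:
    """Return (Pearson r, mean bias, RMSE) for paired model/observation values.

    ASSUMPTION: bias = mean(model - obs); set circular=True for wind direction.
    """
    if len(model) != len(obs) or len(model) < 2:
        raise ValueError("need at least two paired values")
    n = len(model)
    diffs = [m - o for m, o in zip(model, obs)]
    if circular:
        diffs = [wrap_direction_difference(d) for d in diffs]
    mean_bias = sum(diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)

    # Pearson correlation on the raw values. For wind direction a circular
    # correlation would be more rigorous; this is a simplification.
    mean_m = sum(model) / n
    mean_o = sum(obs) / n
    cov = sum((m - mean_m) * (o - mean_o) for m, o in zip(model, obs))
    var_m = sum((m - mean_m) ** 2 for m in model)
    var_o = sum((o - mean_o) ** 2 for o in obs)
    if var_m == 0.0 or var_o == 0.0:
        raise ValueError("correlation undefined for a constant series")
    r = cov / math.sqrt(var_m * var_o)
    return r, mean_bias, rmse


if __name__ == "__main__":
    print(evaluation_stats([2.0, 3.0, 4.0], [1.0, 2.0, 3.0]))  # → (1.0, 1.0, 1.0)
```

The example shows why the table reports all three statistics: a constant +1 offset gives perfect correlation (r = 1) while the mean bias and RMSE are both 1.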

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kumar, R.; Bhardwaj, P.; Pfister, G.; Drews, C.; Honomichl, S.; D’Attilo, G. Description and Evaluation of the Fine Particulate Matter Forecasts in the NCAR Regional Air Quality Forecasting System. Atmosphere 2021, 12, 302. https://doi.org/10.3390/atmos12030302

Kumar R, Bhardwaj P, Pfister G, Drews C, Honomichl S, D’Attilo G. Description and Evaluation of the Fine Particulate Matter Forecasts in the NCAR Regional Air Quality Forecasting System. Atmosphere. 2021; 12(3):302. https://doi.org/10.3390/atmos12030302

Chicago/Turabian Style: Kumar, Rajesh, Piyush Bhardwaj, Gabriele Pfister, Carl Drews, Shawn Honomichl, and Garth D’Attilo. 2021. "Description and Evaluation of the Fine Particulate Matter Forecasts in the NCAR Regional Air Quality Forecasting System" Atmosphere 12, no. 3: 302. https://doi.org/10.3390/atmos12030302

APA Style: Kumar, R., Bhardwaj, P., Pfister, G., Drews, C., Honomichl, S., & D’Attilo, G. (2021). Description and Evaluation of the Fine Particulate Matter Forecasts in the NCAR Regional Air Quality Forecasting System. Atmosphere, 12(3), 302. https://doi.org/10.3390/atmos12030302