Single Image Dehazing Using Sparse Contextual Representation

Abstract

:1. Introduction

2. Hazy Image Formulation

3. Our Method

3.1. Piecewise-Smooth Assumption

3.2. The Lower Bound of Transmission Map

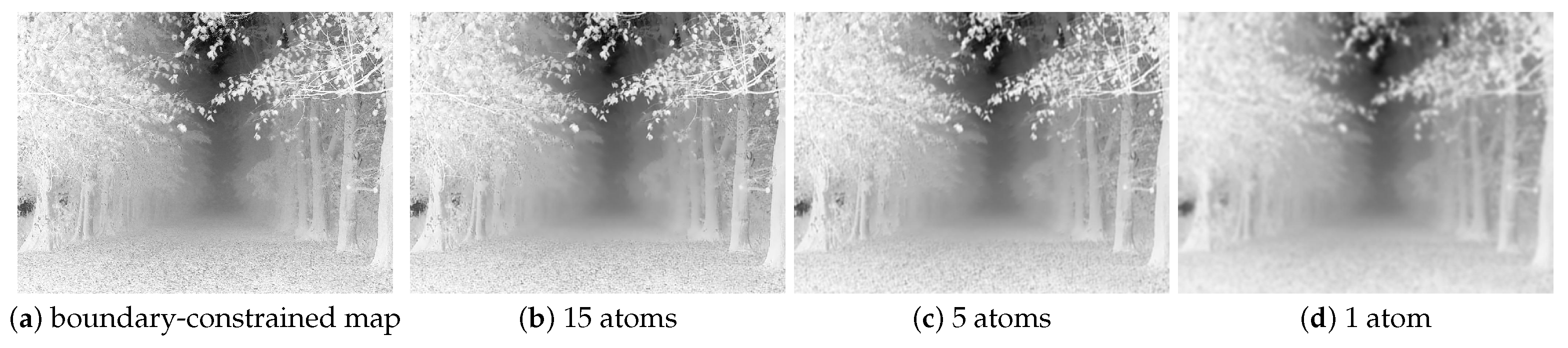

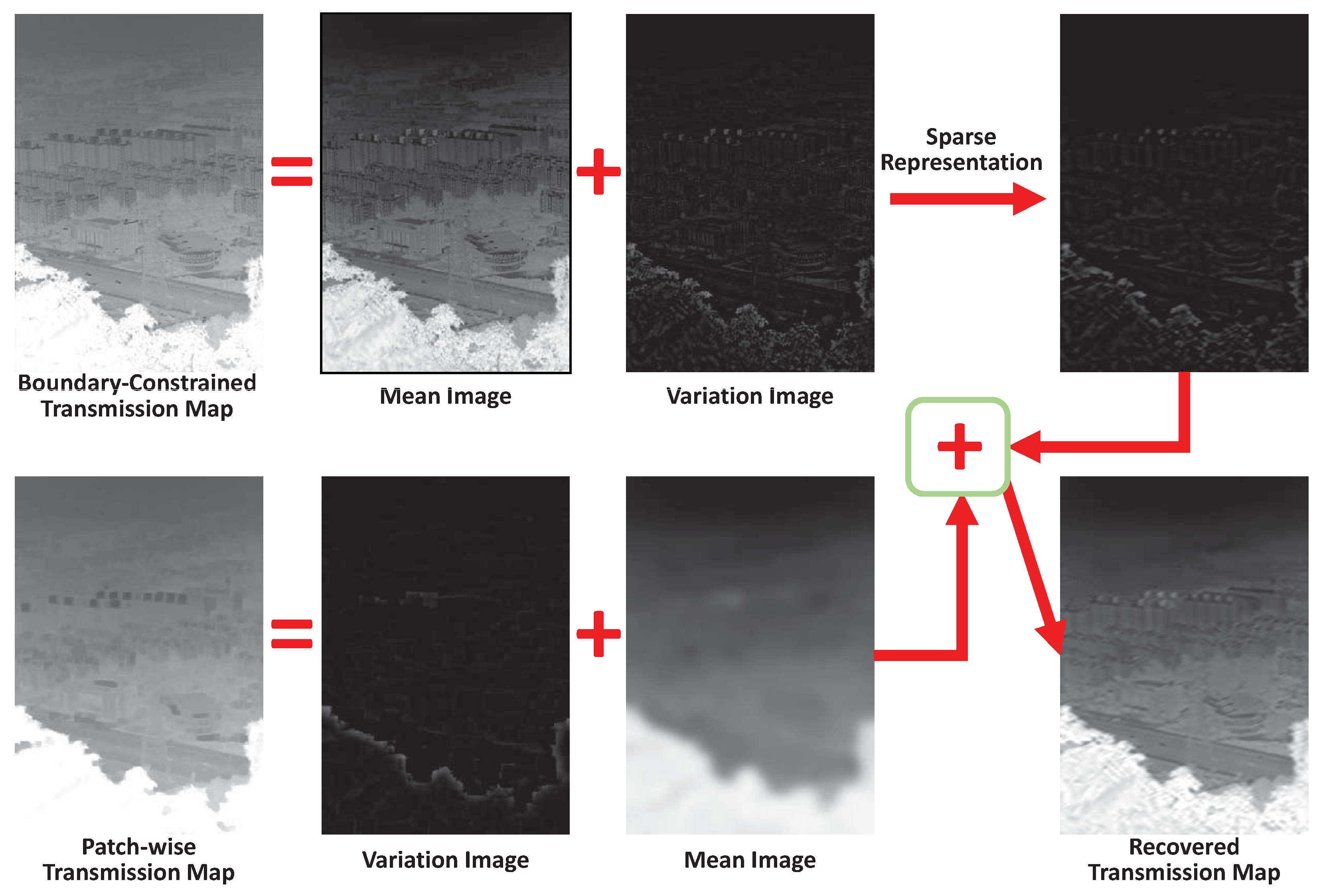

3.3. Contextual Regularization Using Sparse Representation

3.4. Atmospheric Light Estimation

4. Experimental Results

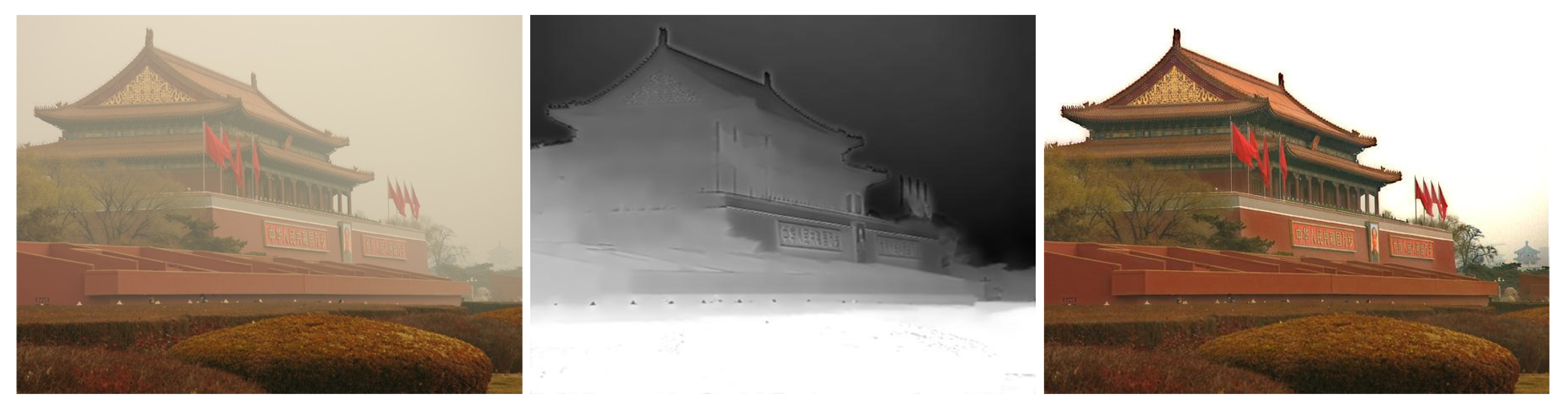

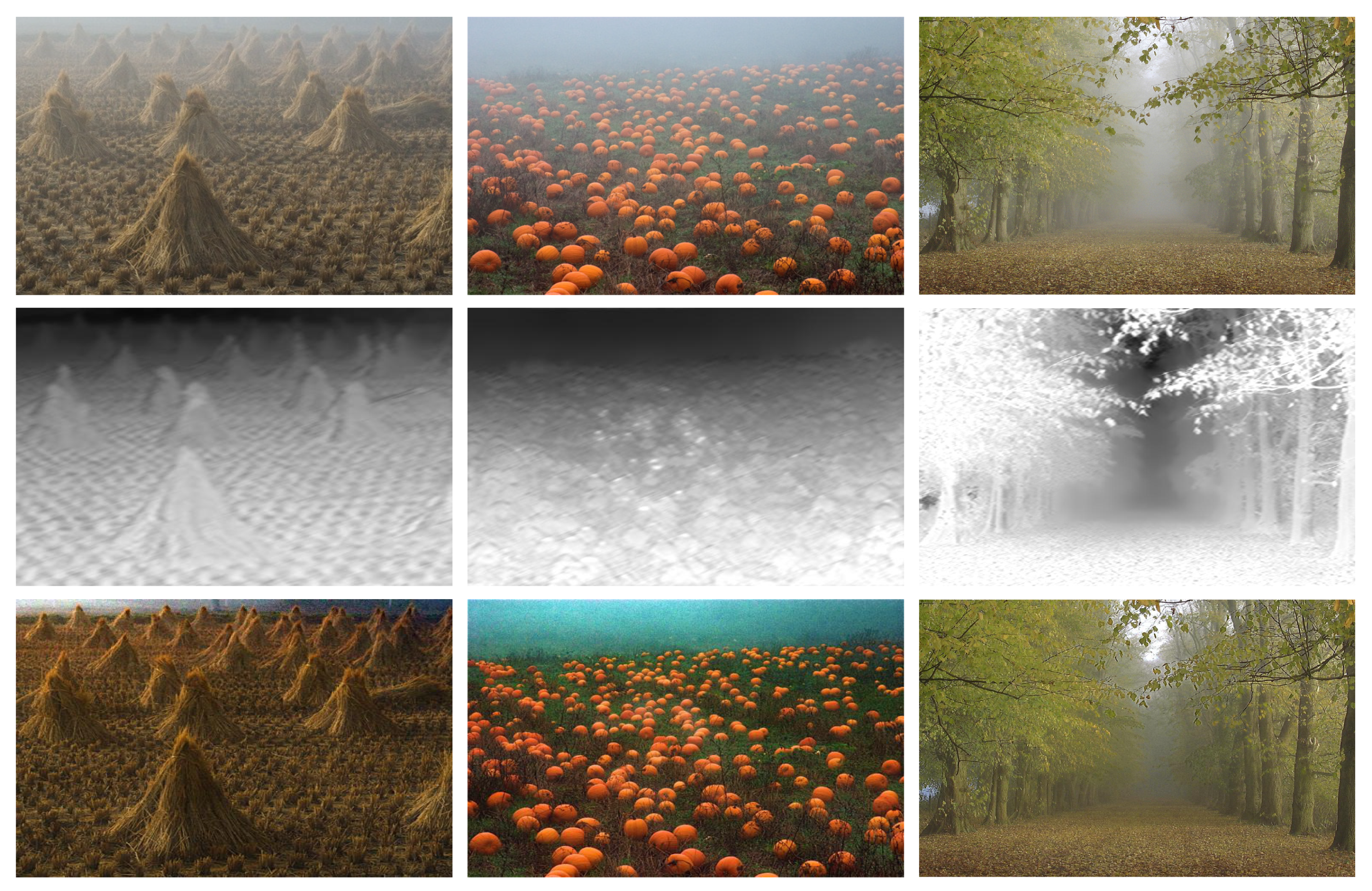

4.1. Tests on Real-World Images

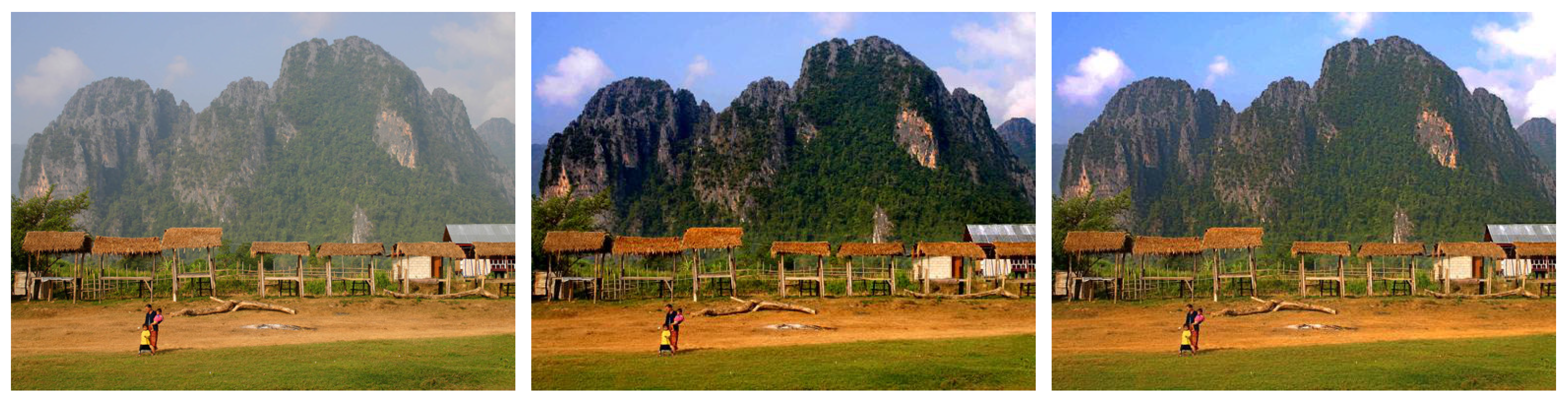

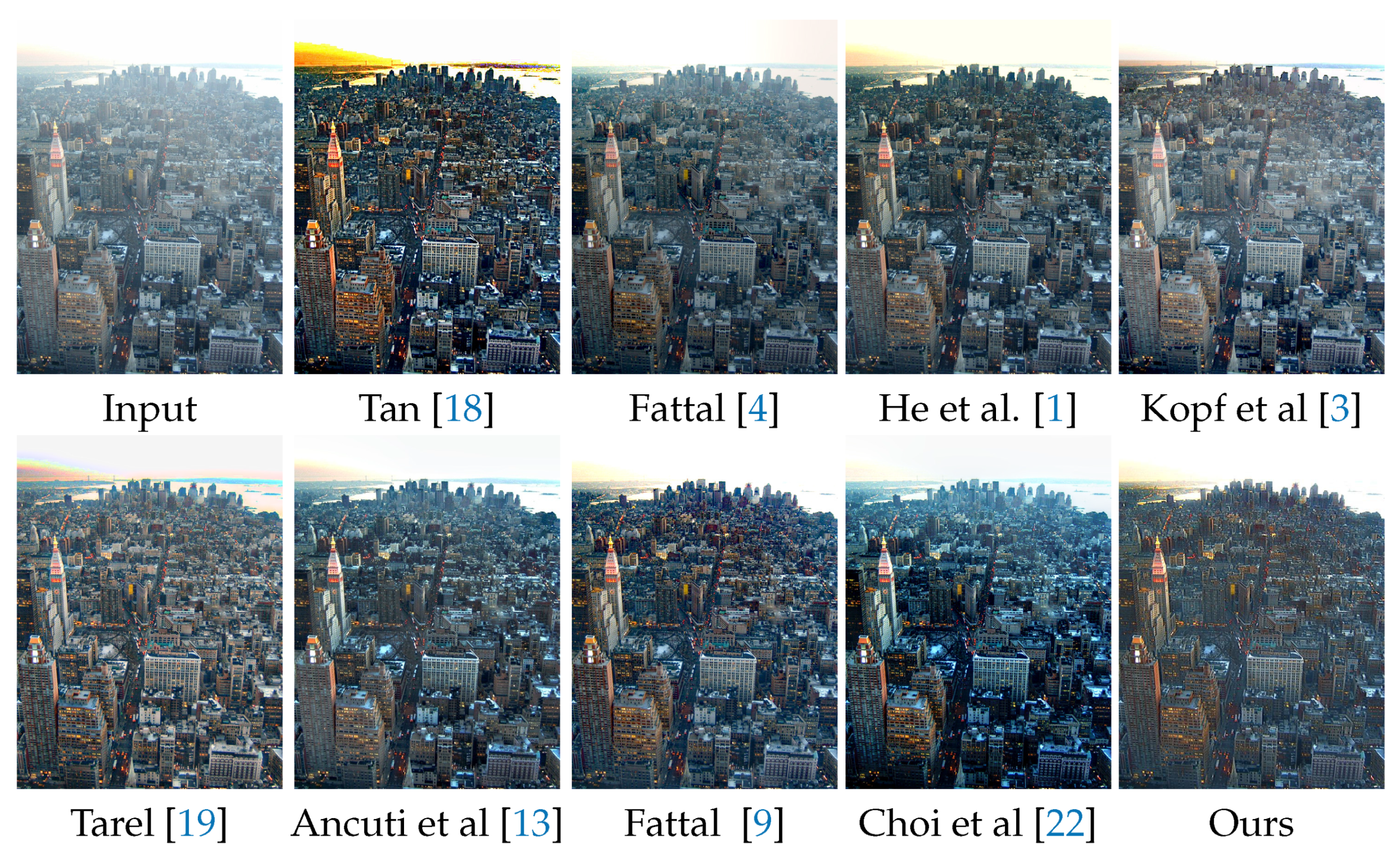

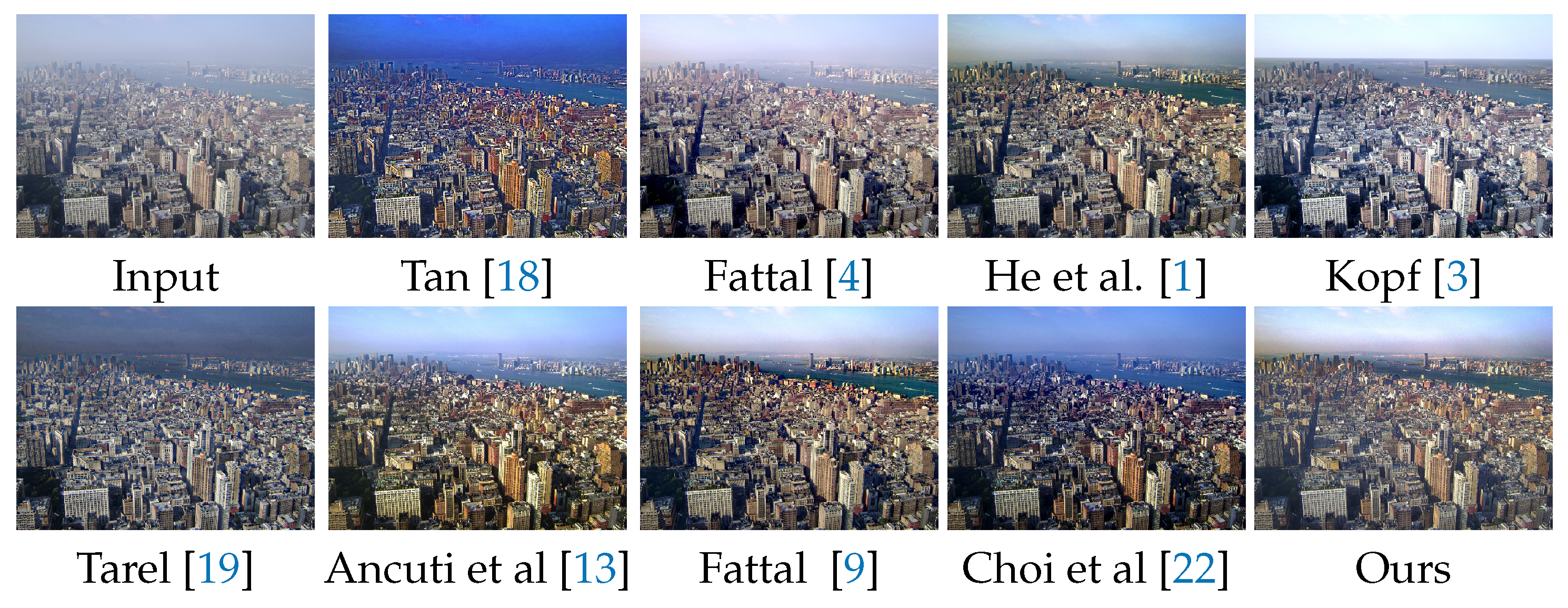

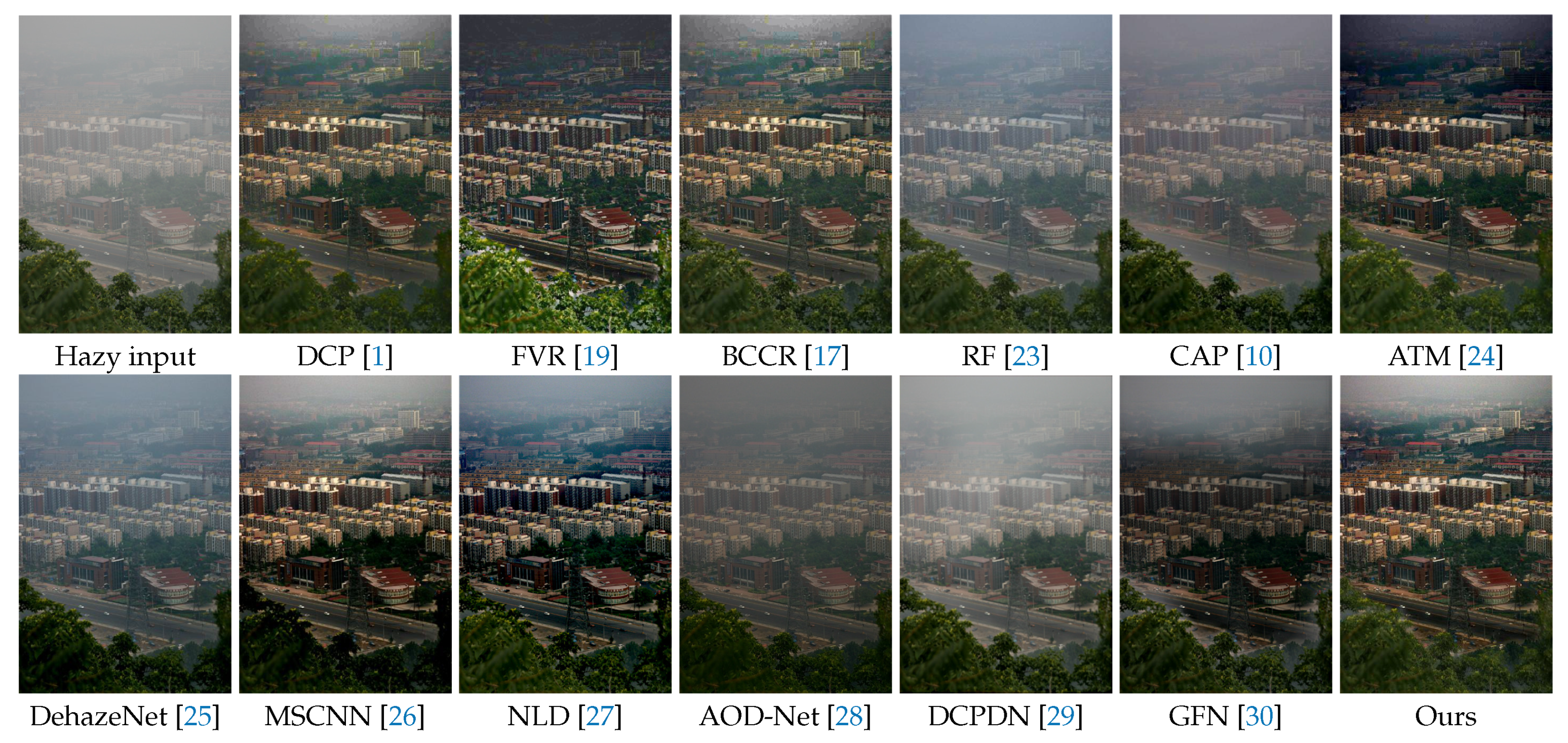

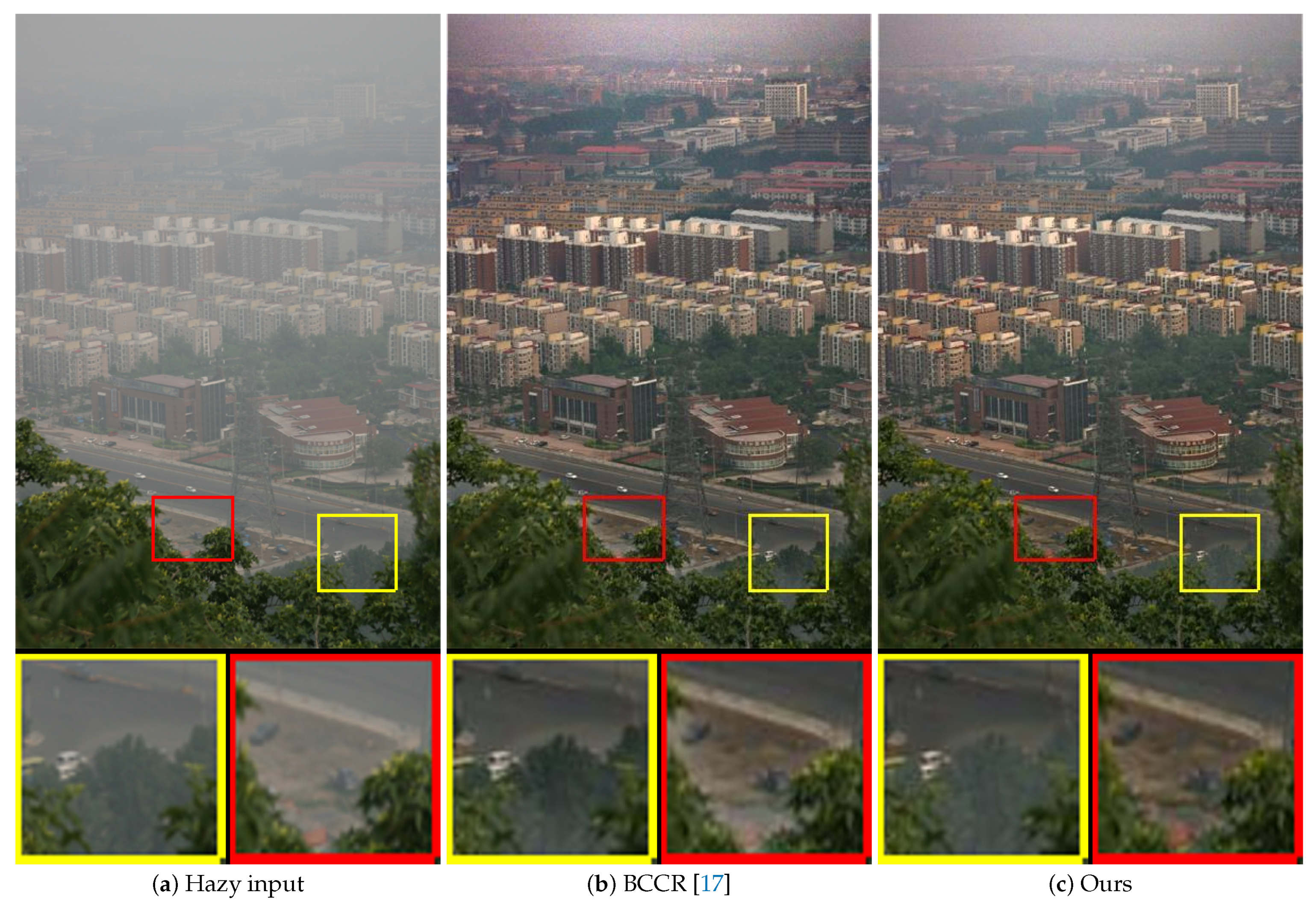

4.2. Visual Comparison

4.3. Quantitative Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353. [Google Scholar] [PubMed]

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Instant dehazing of images using polarization. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. CVPR 2001, 1, 325–332. [Google Scholar]

- Kopf, J.; Neubert, B.; Chen, B.; Cohen, M.; Cohen-Or, D.; Deussen, O.; Uyttendaele, M.; Lischinski, D. Deep photo: Model-based photograph enhancement and viewing. ACM Trans. Graph. TOG 2008, 27, 32–39. [Google Scholar]

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9. [Google Scholar] [CrossRef]

- Kratz, L.; Nishino, K. Factorizing Scene Albedo and Depth from a Single Foggy Image. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 1701–1708. [Google Scholar]

- Shanmugapriya, S.; Valarmathi, A. Efficient fuzzy c-means based multilevel image segmentation for brain tumor detection in MR images. In Design Automation for Embedded Systems; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Versaci, M.; Calcagno, S.; Morabito, F. Fuzzy geometrical approach based on unit hyper-cubes for image contrast enhancement. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015. [Google Scholar]

- Gibson, K.B.; Nguyen, T.Q. An analysis of single image defogging methods using a color ellipsoid framework. EURASIP J. Image Video Process. 2013, 2013, 1–14. [Google Scholar] [CrossRef] [Green Version]

- Fattal, R. Dehazing using color-lines. ACM Trans. Graph. TOG 2014, 34, 1–14. [Google Scholar] [CrossRef]

- Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533. [Google Scholar] [PubMed] [Green Version]

- Li, Z.; Zheng, J. Edge-Preserving Decomposition-Based Single Image Haze Removal. IEEE Trans. Image Process. 2015, 24, 5432–5441. [Google Scholar] [CrossRef] [PubMed]

- Ancuti, C.O.; Ancuti, C.; Hermans, C.; Bekaert, P. A fast semi-inverse approach to detect and remove the haze from a single image. In Computer Vision–ACCV 2010; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; pp. 501–514. [Google Scholar]

- Ancuti, C.O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282. [Google Scholar] [CrossRef] [PubMed]

- Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745. [Google Scholar] [CrossRef] [PubMed]

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873. [Google Scholar] [CrossRef] [PubMed]

- Koschmieder, H. Theorie der horizontalen Sichtweite, Beiträge zur Physik der freien Atmosphäre. Meteorol. Z 1924, 12, 33–53. [Google Scholar]

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 617–624. [Google Scholar]

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 24–26 June 2008; pp. 1–8. [Google Scholar]

- Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208. [Google Scholar]

- Nishino, K.; Kratz, L.; Lombardi, S. Bayesian defogging. Int. J. Comput. Vis. 2012, 98, 263–278. [Google Scholar] [CrossRef]

- Wang, Y.K.; Fan, C.T. Single Image Defogging by Multiscale Depth Fusion. IEEE Trans. Image Process. 2014, 23, 4826–4837. [Google Scholar] [CrossRef] [PubMed]

- Choi, L.K.; You, J.; Bovik, A.C. Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901. [Google Scholar] [CrossRef] [PubMed]

- Tang, K.; Yang, J.; Wang, J. Investigating haze-relevant features in a learning framework for image dehazing. In Proceedings of the CVPR, Columbus, OH, USA, 24–27 June 2014; pp. 2995–3000. [Google Scholar]

- Sulami, M.; Glatzer, I.; Fattal, R.; Werman, M. Automatic recovery of the atmospheric light in hazy images. In Proceedings of the ICCP, Cluj-Napoca, Romania, 4–6 September 2014. [Google Scholar]

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. TIP 2016, 25, 5187–5198. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the ECCV, Amsterdam, The Netherlands, 8–16 October 2016. [Google Scholar]

- Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. An All-in-One Network for Dehazing and Beyond. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Zhang, H.; Patel, V.M. Densely Connected Pyramid Dehazing Network. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated Fusion Network for Single Image Dehazing. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Hautière, N.; Tarel, J.P.; Aubert, D.; Dumont, E. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. J. 2008, 27, 87–95. [Google Scholar] [CrossRef]

| Image | Tan | Fatta | Kopf et al. | He et al. | Tarrel et al. | Ancuti et al. | Choi et al. | Ours | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| e | ∑ | e | ∑ | e | ∑ | e | ∑ | e | ∑ | e | ∑ | e | ∑ | e | ∑ | |||||||||

| ny12 | −0.14 | 0.02 | 2.34 | −0.06 | 0.09 | 1.32 | 0.05 | 0.00 | 1.42 | 0.06 | 0.00 | 1.42 | 0.07 | 0.0 | 1.88 | 0.02 | 0.00 | 1.49 | 0.09 | 0.00 | 1.56 | 0.26 | 0.00 | 1.42 |

| ny17 | −0.06 | 0.01 | 2.22 | −0.12 | 0.02 | 1.56 | 0.01 | 0.01 | 1.62 | 0.01 | 0.00 | 1.65 | −0.01 | 0.0 | 1.87 | 0.12 | 0.00 | 1.54 | 0.03 | 0.00 | 1.49 | 0.15 | 0.00 | 1.59 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qin, J.; Chen, L.; Xu, J.; Ren, W. Single Image Dehazing Using Sparse Contextual Representation. Atmosphere 2021, 12, 1266. https://doi.org/10.3390/atmos12101266

Qin J, Chen L, Xu J, Ren W. Single Image Dehazing Using Sparse Contextual Representation. Atmosphere. 2021; 12(10):1266. https://doi.org/10.3390/atmos12101266

Chicago/Turabian StyleQin, Jing, Liang Chen, Jian Xu, and Wenqi Ren. 2021. "Single Image Dehazing Using Sparse Contextual Representation" Atmosphere 12, no. 10: 1266. https://doi.org/10.3390/atmos12101266

APA StyleQin, J., Chen, L., Xu, J., & Ren, W. (2021). Single Image Dehazing Using Sparse Contextual Representation. Atmosphere, 12(10), 1266. https://doi.org/10.3390/atmos12101266