Wind Speed Forecast Based on Post-Processing of Numerical Weather Predictions Using a Gradient Boosting Decision Tree Algorithm

Abstract

1. Introduction

2. Experiments

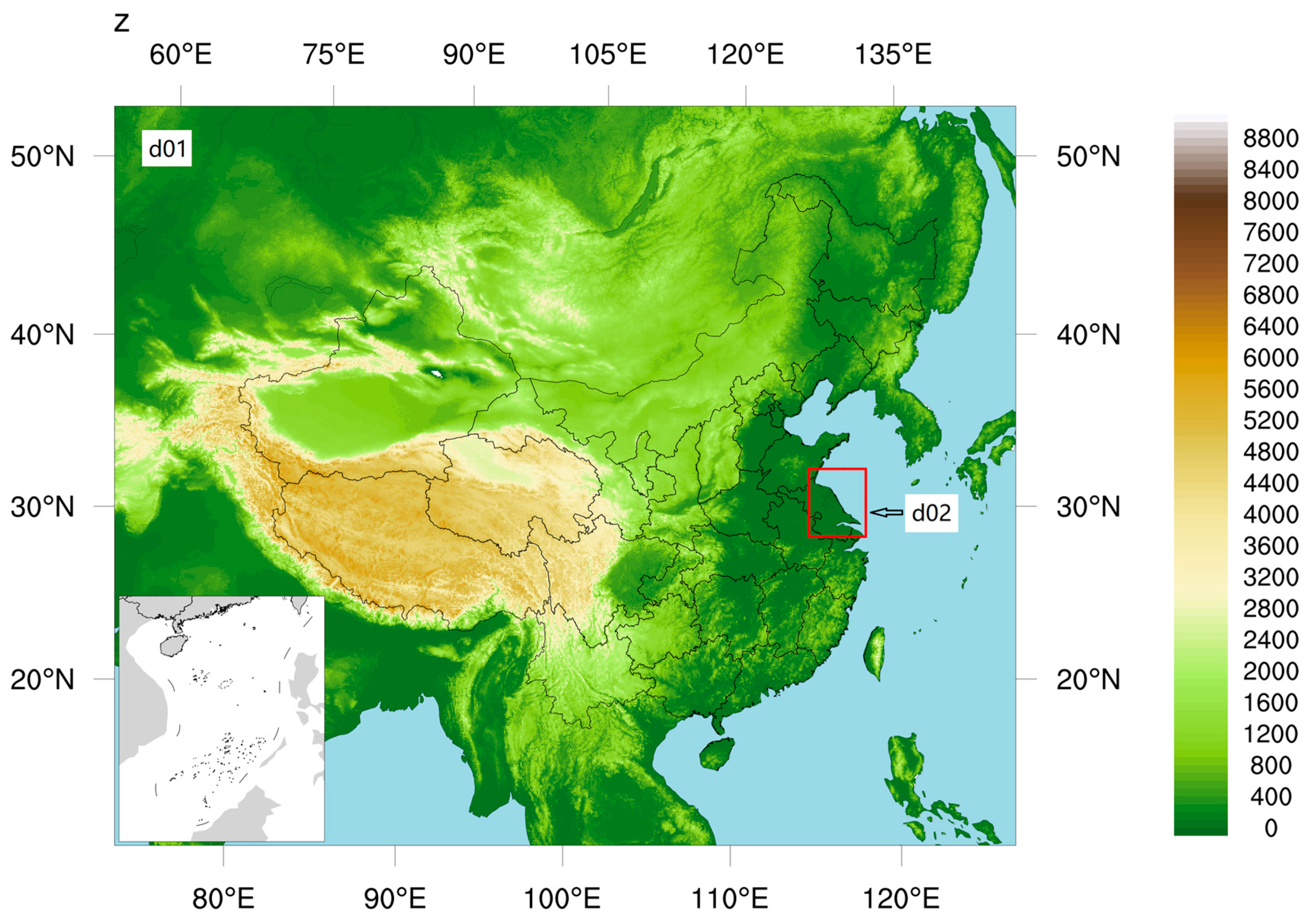

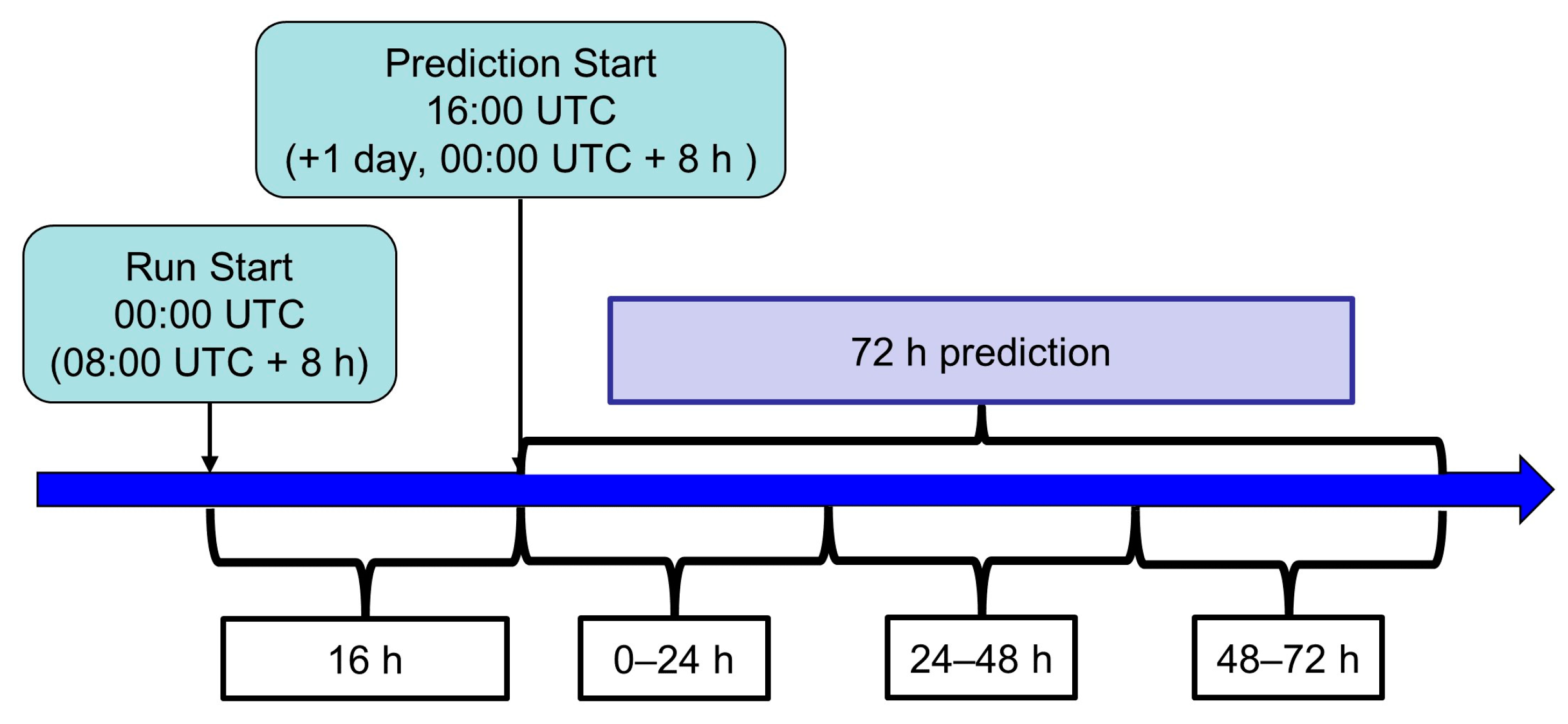

2.1. Numerical Weather Model

2.2. Wind Observation Data

2.3. Results Measurements

2.3.1. Root Mean Square Error

2.3.2. Index of Agreement

2.3.3. Correlation Coefficient

2.3.4. Nash–Sutcliffe Efficiency Coefficient
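The four scores named in Sections 2.3.1 to 2.3.4 can be sketched in a few lines. This is a minimal illustration that assumes the standard textbook definitions (Willmott's original index of agreement, Pearson's correlation coefficient, and the usual Nash–Sutcliffe form); the function names and the sample values are hypothetical and are not taken from the study.

```python
import math


def rmse(obs, pred):
    """Root mean square error between observations and predictions."""
    return math.sqrt(sum((p - o) ** 2 for o, p in zip(obs, pred)) / len(obs))


def index_of_agreement(obs, pred):
    """Index of agreement (assumed: Willmott's original form); 1 is a perfect match."""
    o_mean = sum(obs) / len(obs)
    num = sum((p - o) ** 2 for o, p in zip(obs, pred))
    den = sum((abs(p - o_mean) + abs(o - o_mean)) ** 2 for o, p in zip(obs, pred))
    return 1.0 - num / den


def correlation(obs, pred):
    """Pearson correlation coefficient R."""
    o_mean = sum(obs) / len(obs)
    p_mean = sum(pred) / len(pred)
    cov = sum((o - o_mean) * (p - p_mean) for o, p in zip(obs, pred))
    var_o = sum((o - o_mean) ** 2 for o in obs)
    var_p = sum((p - p_mean) ** 2 for p in pred)
    return cov / math.sqrt(var_o * var_p)


def nse(obs, pred):
    """Nash-Sutcliffe efficiency; 1 is perfect, 0 matches the observed mean."""
    o_mean = sum(obs) / len(obs)
    num = sum((p - o) ** 2 for o, p in zip(obs, pred))
    den = sum((o - o_mean) ** 2 for o in obs)
    return 1.0 - num / den


# Hypothetical wind speeds (m/s): the forecast is biased high by 1 m/s.
observed = [1.0, 2.0, 3.0, 4.0]
forecast = [2.0, 3.0, 4.0, 5.0]
print(rmse(observed, forecast))                # about 1.0
print(index_of_agreement(observed, forecast))  # about 0.84
print(correlation(observed, forecast))         # about 1.0
print(nse(observed, forecast))                 # about 0.2
```

The sample shows why the scores are reported together: a constant bias leaves R at 1 while RMSE, IA, and NSE all register the error.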

2.4. Gradient Boosting Decision Tree

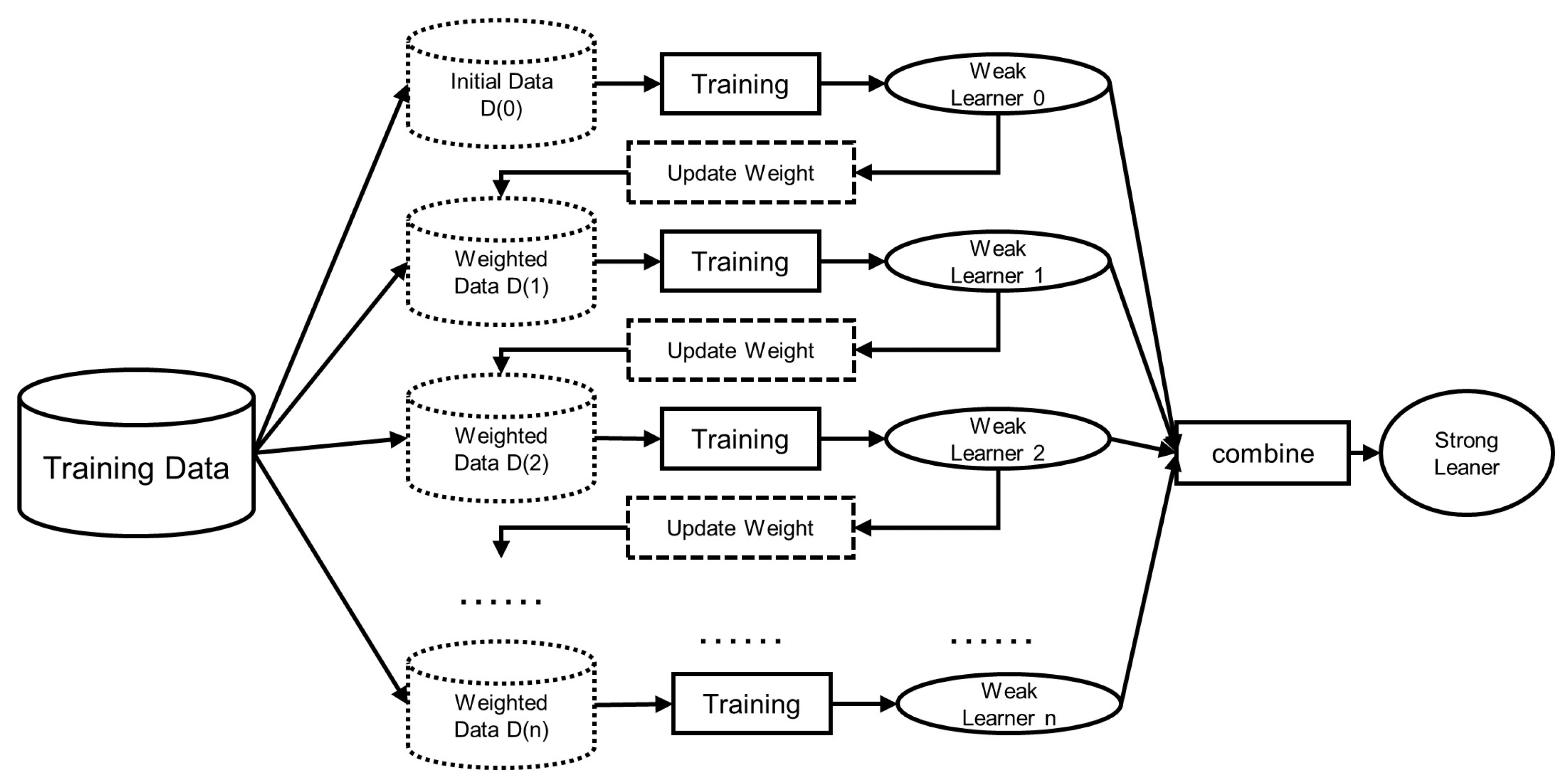

2.4.1. Ensemble Learning Approach: Boosting

2.4.2. Classification and Regression Tree (CART)

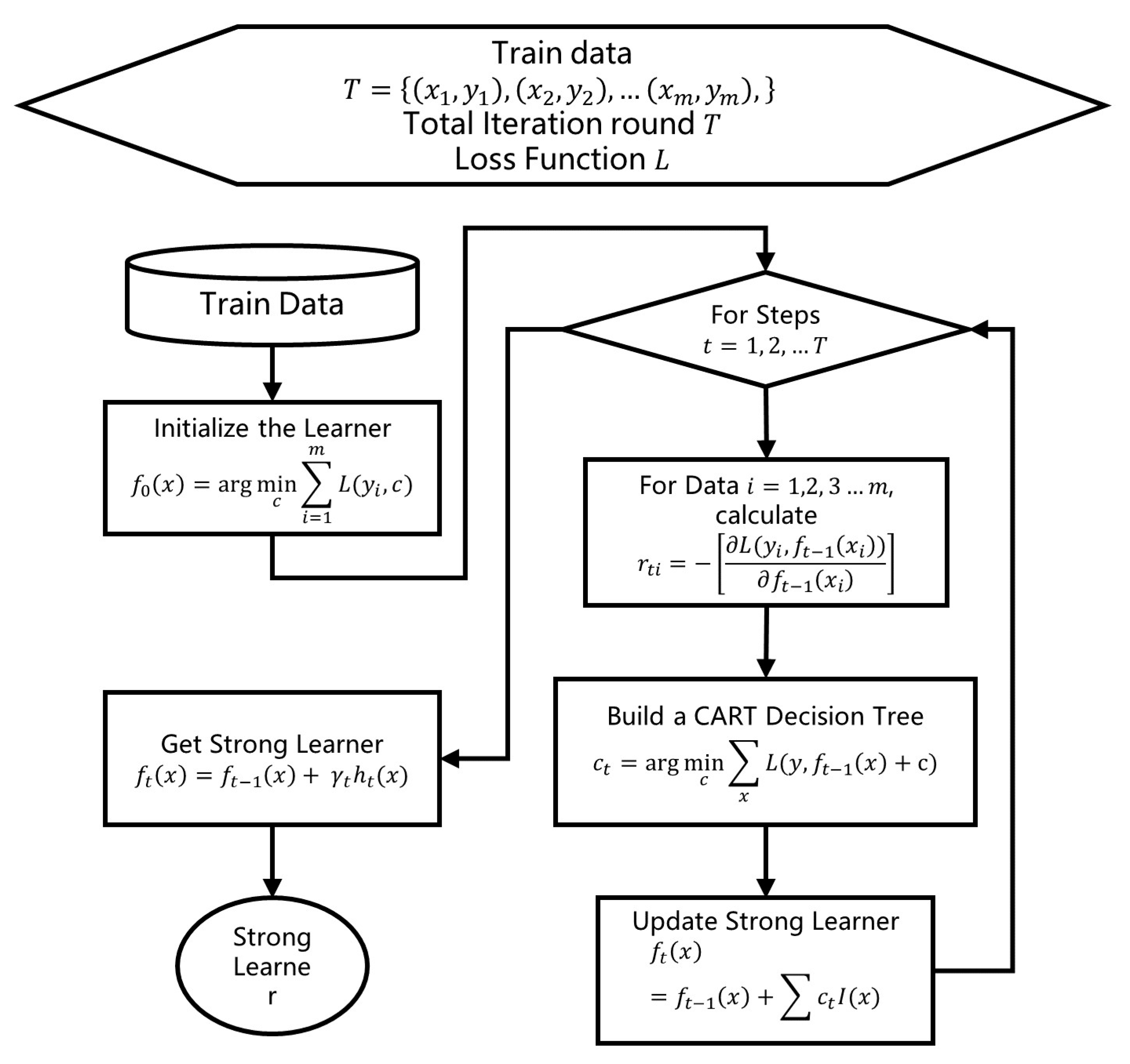

2.4.3. Training Process of GBDT

2.4.4. Feature Importance of GBDT

2.5. Features Selection and Parameters Setting of GBDT

2.6. Models Used for Comparison

2.7. Significance Test

- Whether the statistical metrics (RMSE, IA, R, NSE) of the GBDT results differ significantly from those of the WRF results.

- Whether the statistical metrics (RMSE, IA, R, NSE) of the GBDT results differ significantly from those of the comparison models (DTR, MLPR).
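One way to frame such a comparison is a paired test over the 14 towers. The sketch below is an assumption, not the test used in the study (this section does not name one): it runs an exact two-sided sign-flip permutation test on per-tower differences. The function name `paired_sign_flip_pvalue` is hypothetical, and the sample values are the 10 m WRF and GBDT columns of the first table in Appendix A, used here only as paired input.

```python
from itertools import product


def paired_sign_flip_pvalue(a, b):
    """Exact two-sided sign-flip permutation test on paired samples.

    Under the null hypothesis of no systematic difference, the sign of each
    paired difference is equally likely to be positive or negative, so every
    sign pattern is enumerated (2**n patterns for n pairs).
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    observed = abs(sum(diffs) / n)
    hits = 0
    total = 0
    for signs in product((1, -1), repeat=n):
        stat = abs(sum(s * d for s, d in zip(signs, diffs)) / n)
        # Small tolerance guards against floating-point ties.
        if stat >= observed - 1e-12:
            hits += 1
        total += 1
    return hits / total


# 10 m columns of the first Appendix A table, towers 1 to 14.
wrf = [3.00, 2.64, 2.82, 2.28, 2.69, 3.41, 3.45,
       3.04, 2.93, 2.30, 2.93, 2.39, 2.87, 3.49]
gbdt = [1.30, 1.29, 1.20, 1.35, 1.52, 1.17, 1.18,
        1.35, 1.41, 1.51, 1.29, 1.25, 1.06, 1.11]

p_value = paired_sign_flip_pvalue(wrf, gbdt)
print(f"p = {p_value:.6f}")
```

Because every tower's difference has the same sign in this sample, only the all-positive and all-negative sign patterns reach the observed mean difference, giving the smallest p-value the test can produce for 14 pairs (2 / 2**14). Exhaustive enumeration keeps the result deterministic; for many more pairs, random sampling of sign patterns would be the practical substitute.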

3. Results

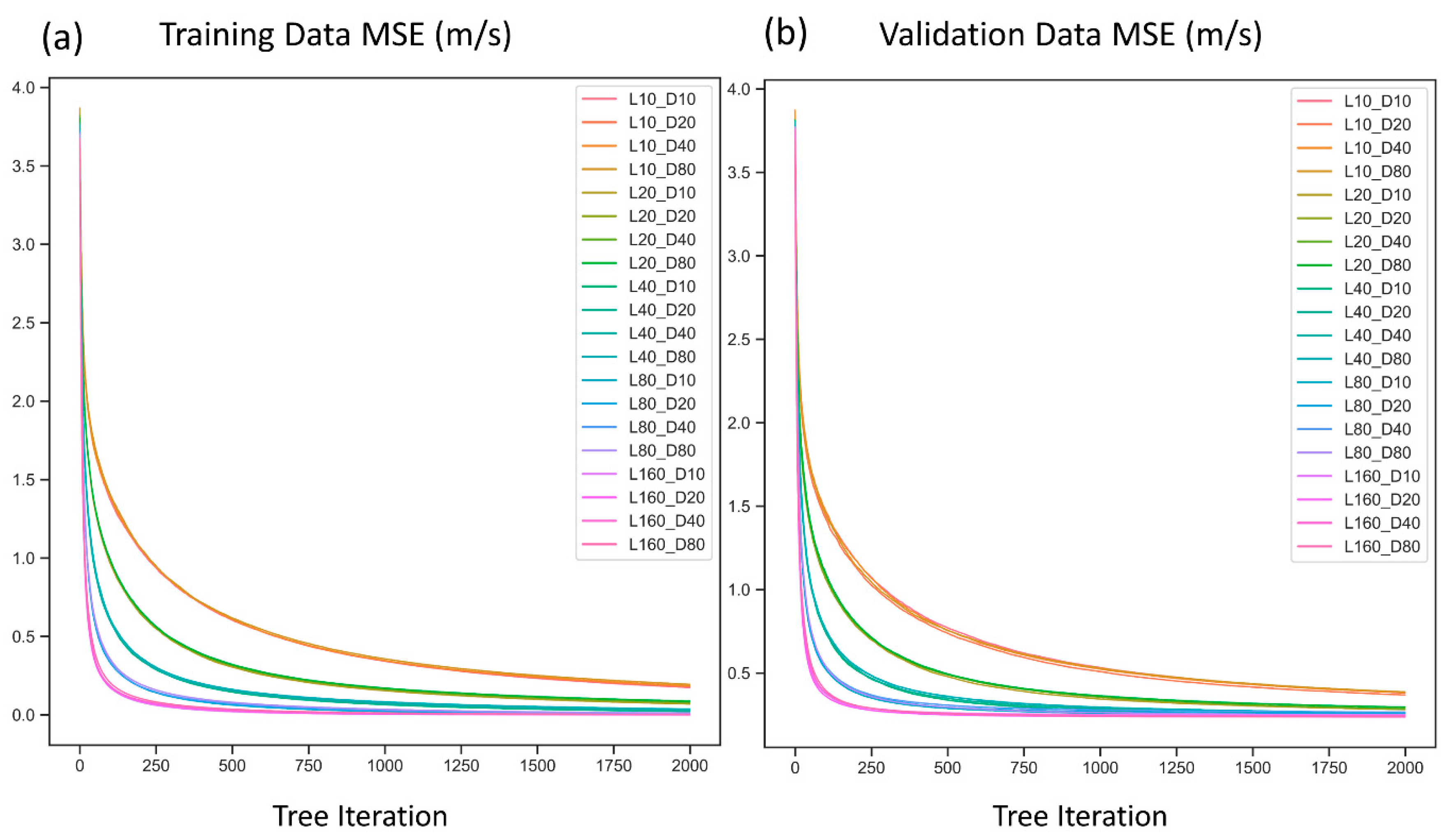

3.1. GBDT Parameters Tuning Results

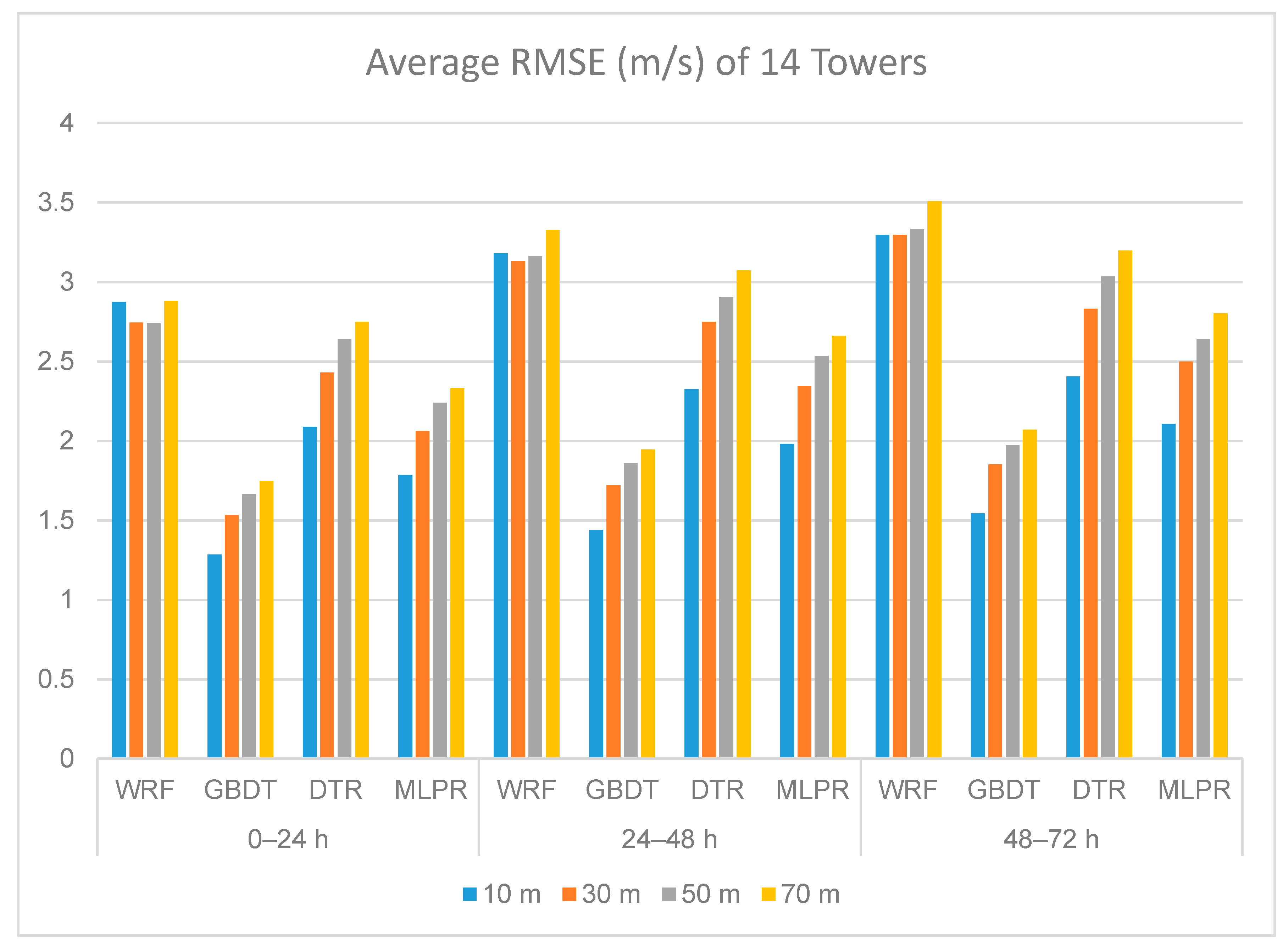

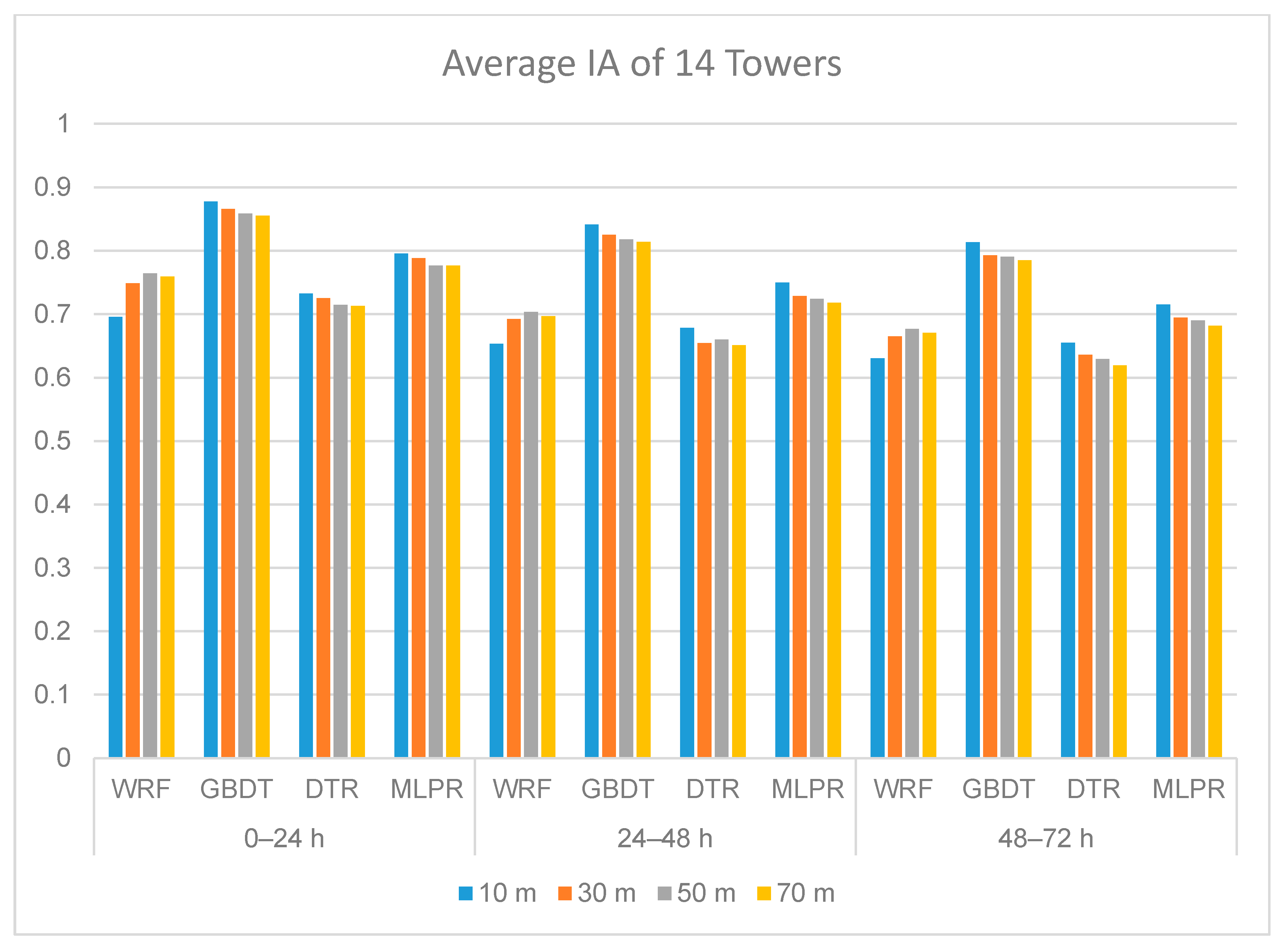

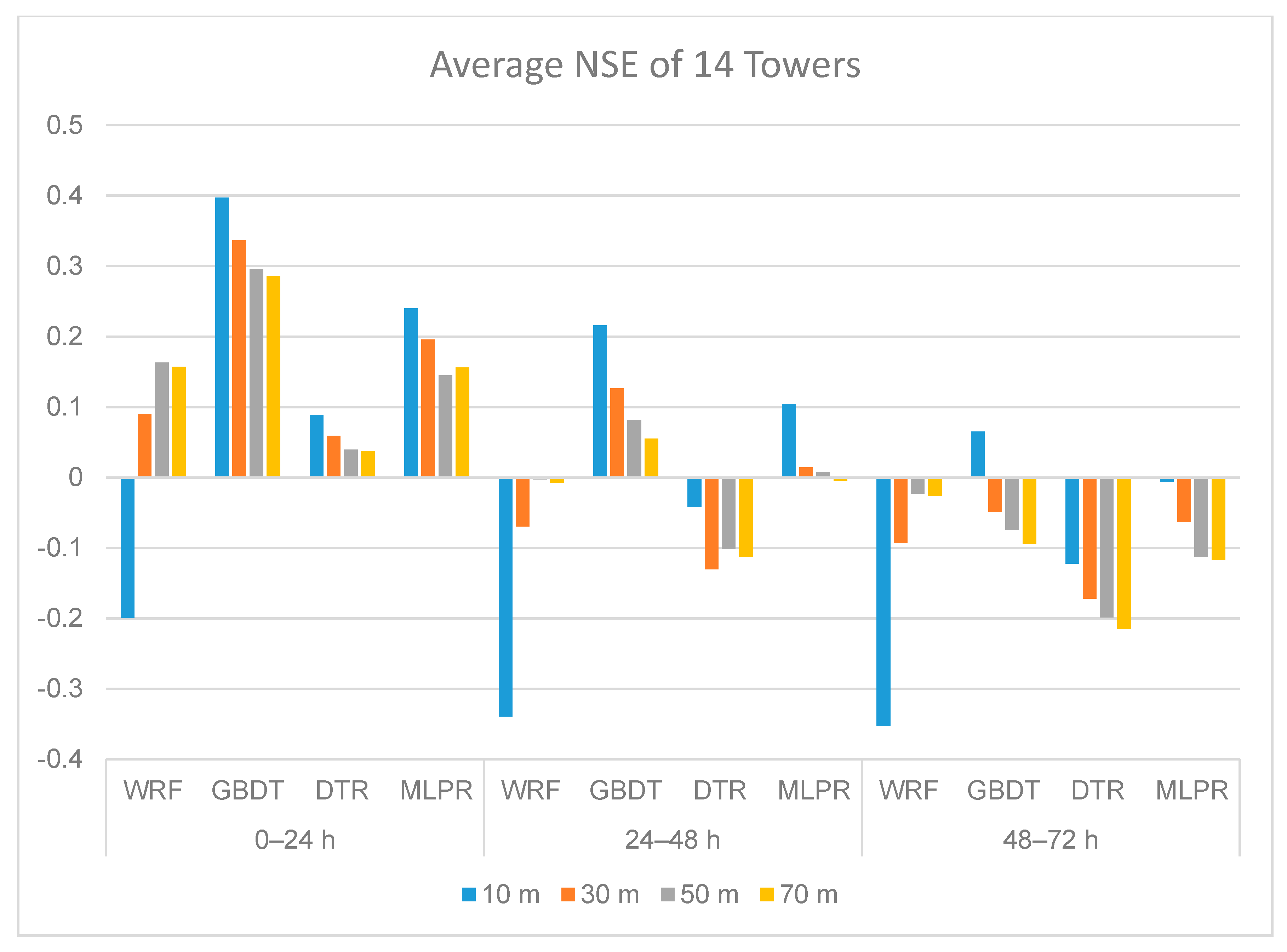

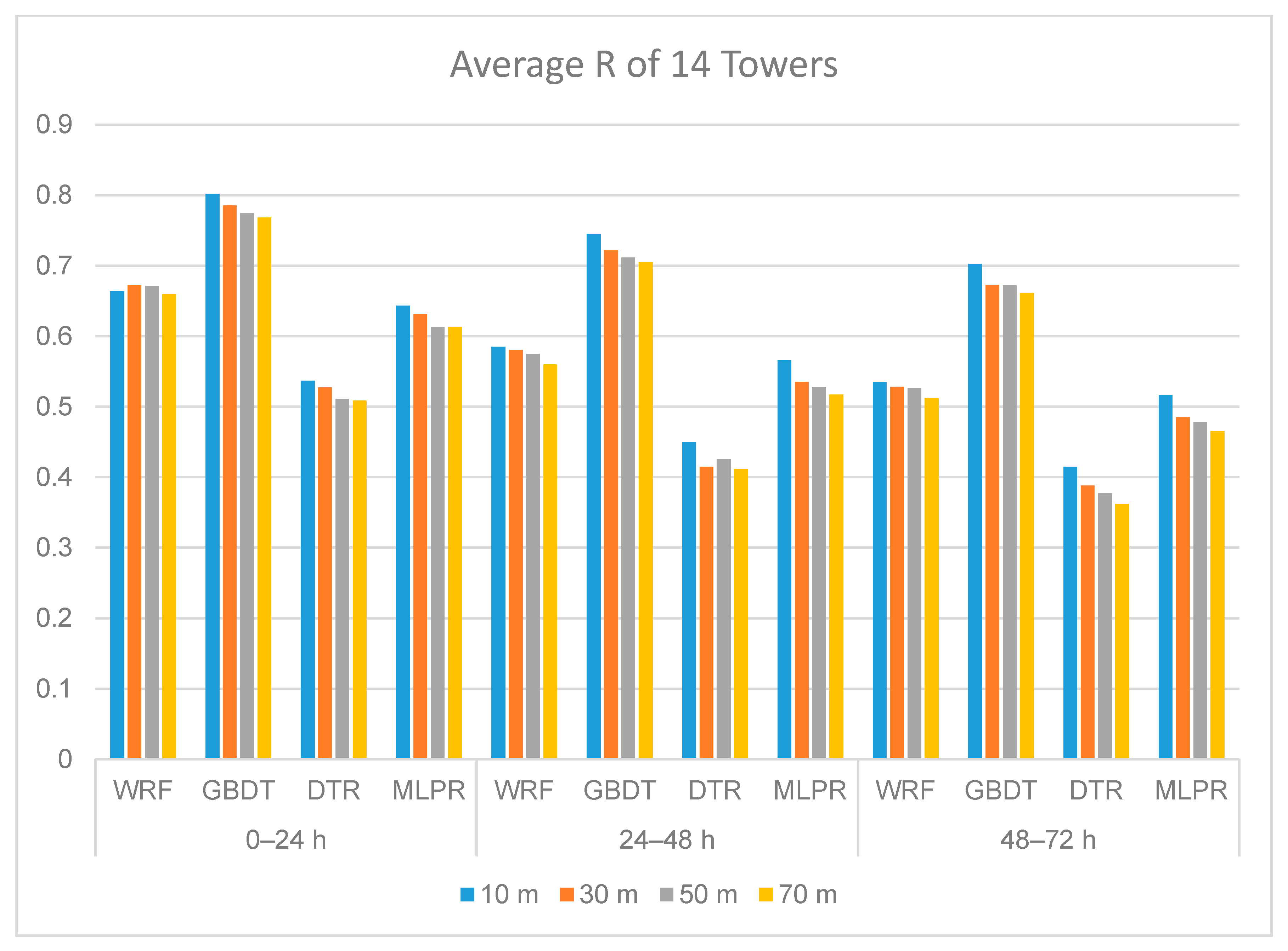

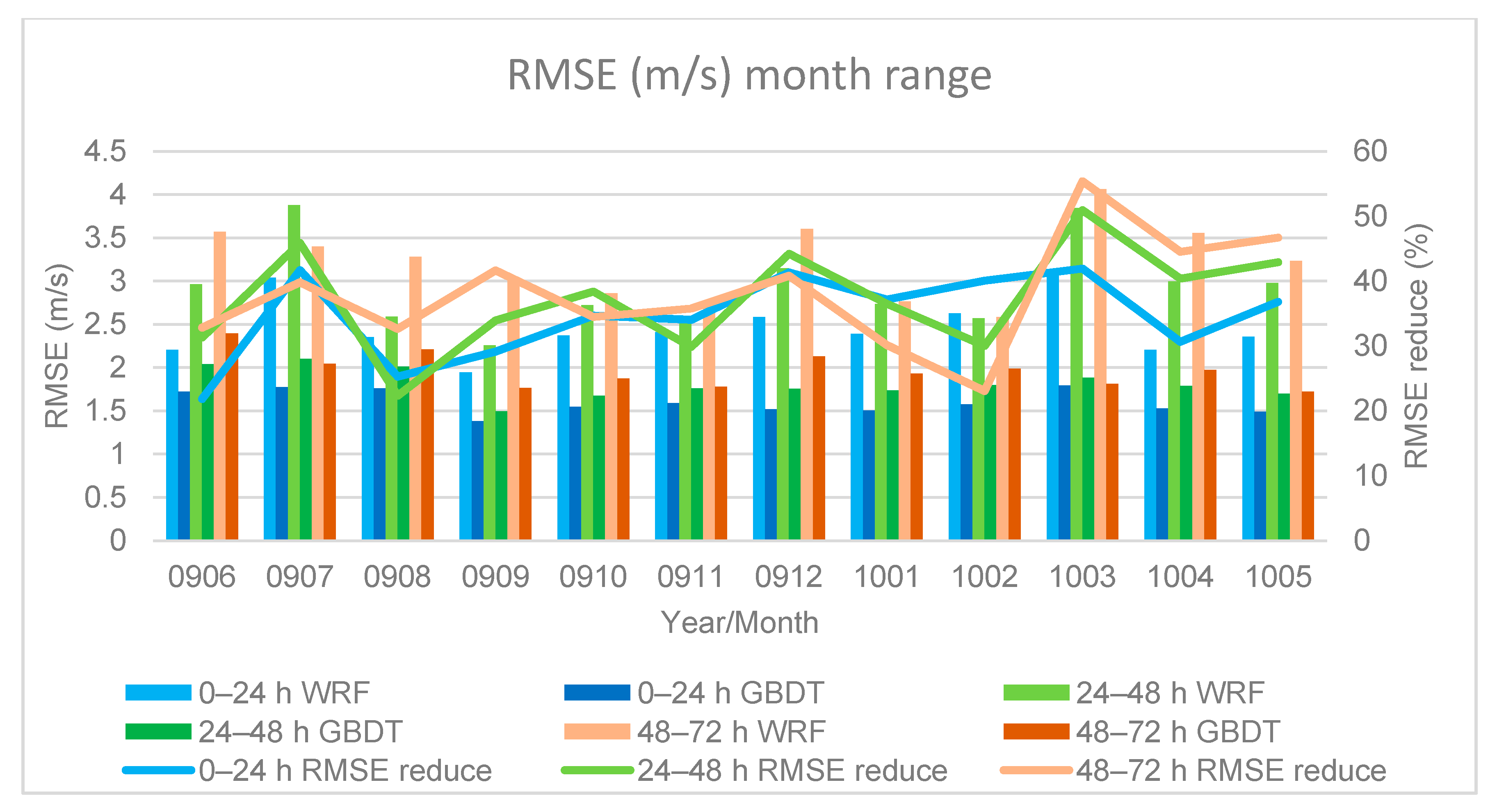

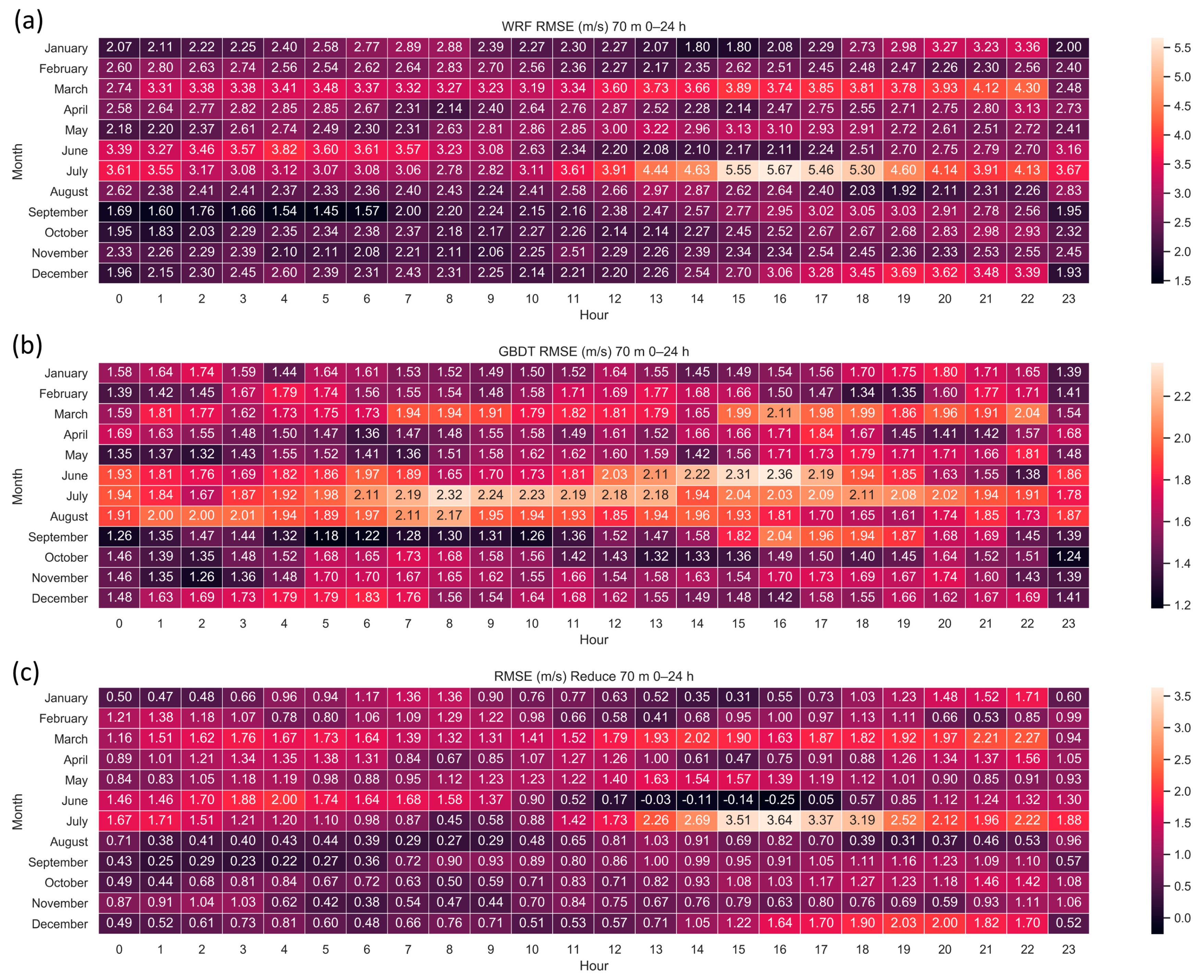

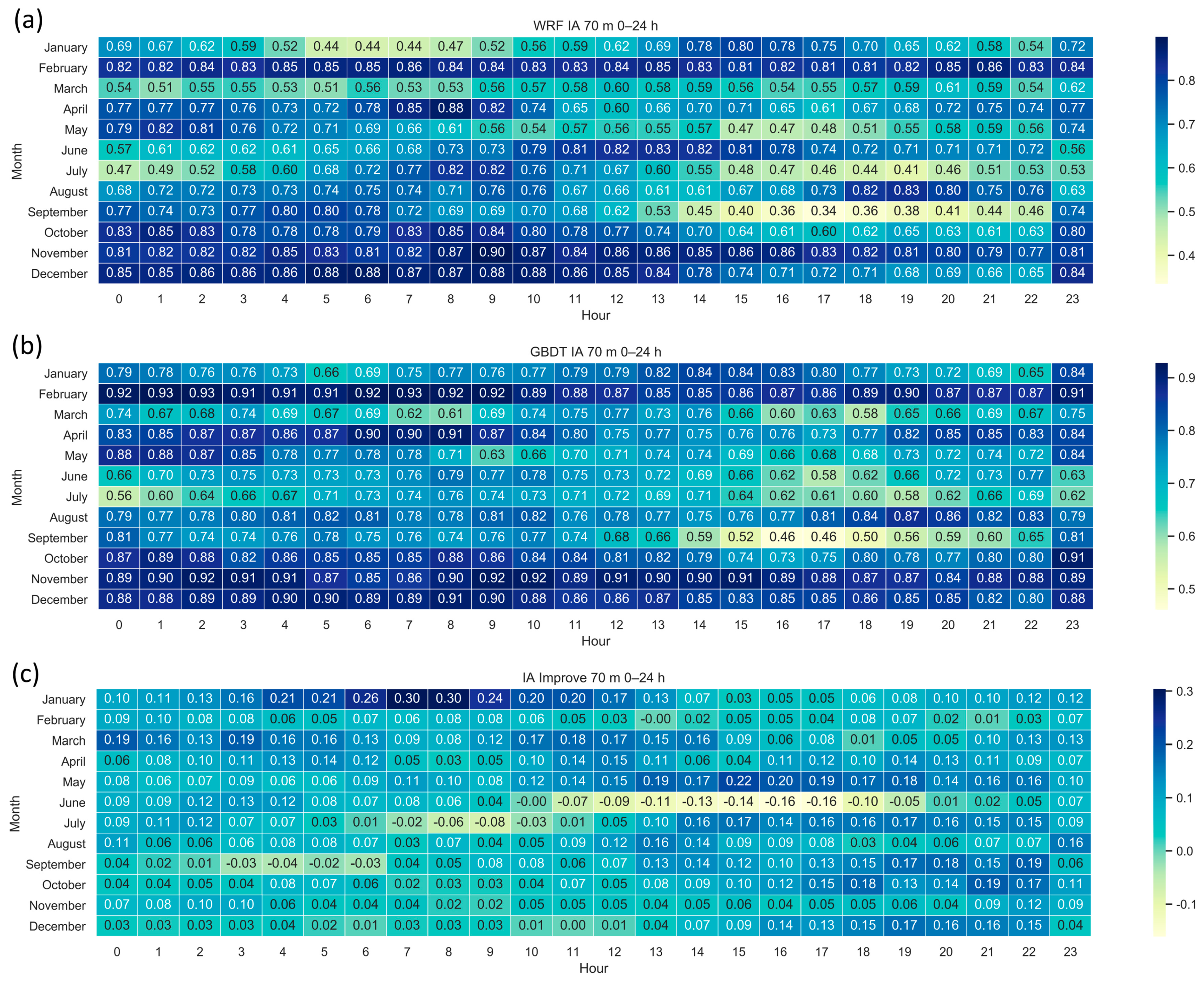

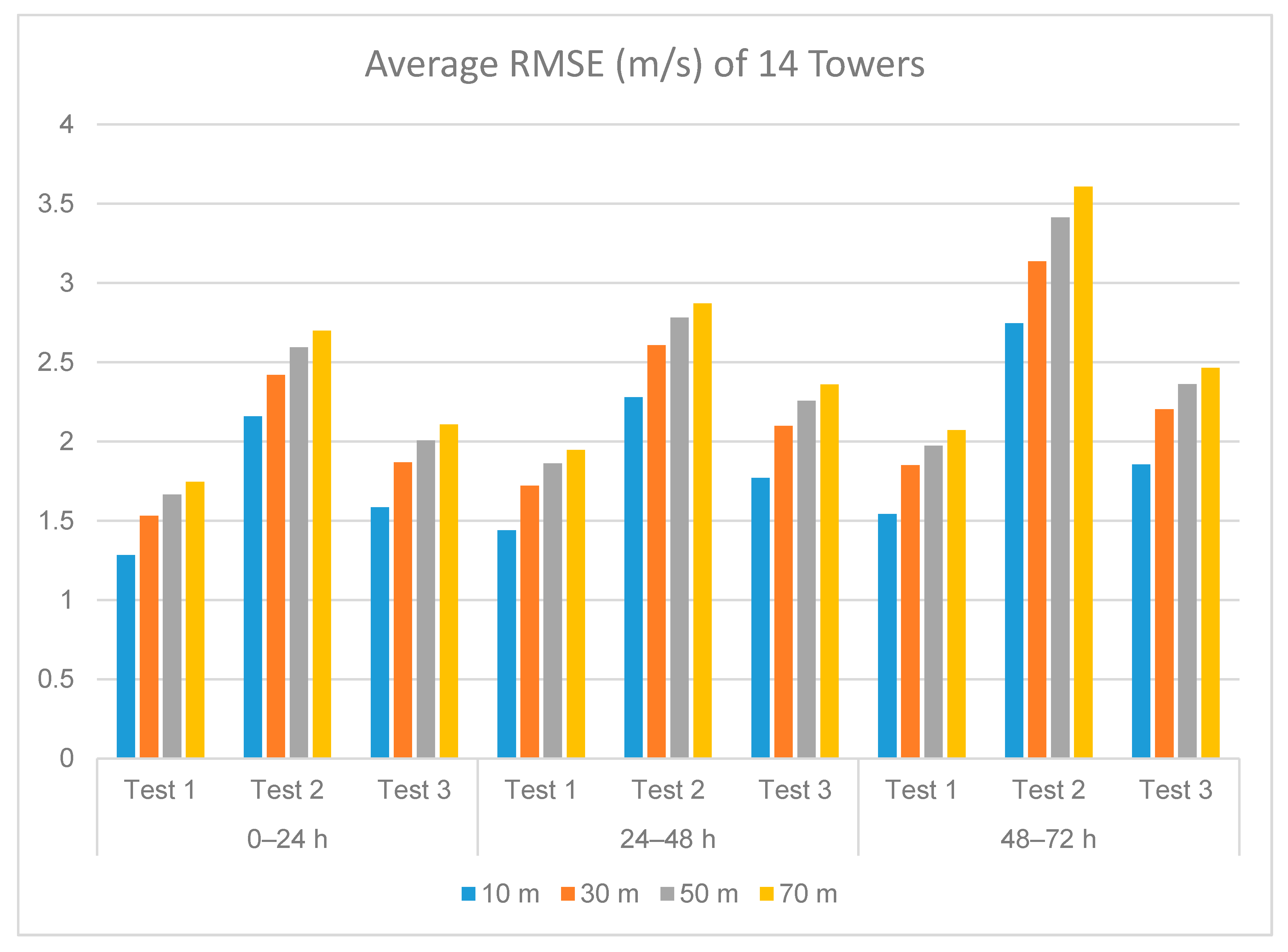

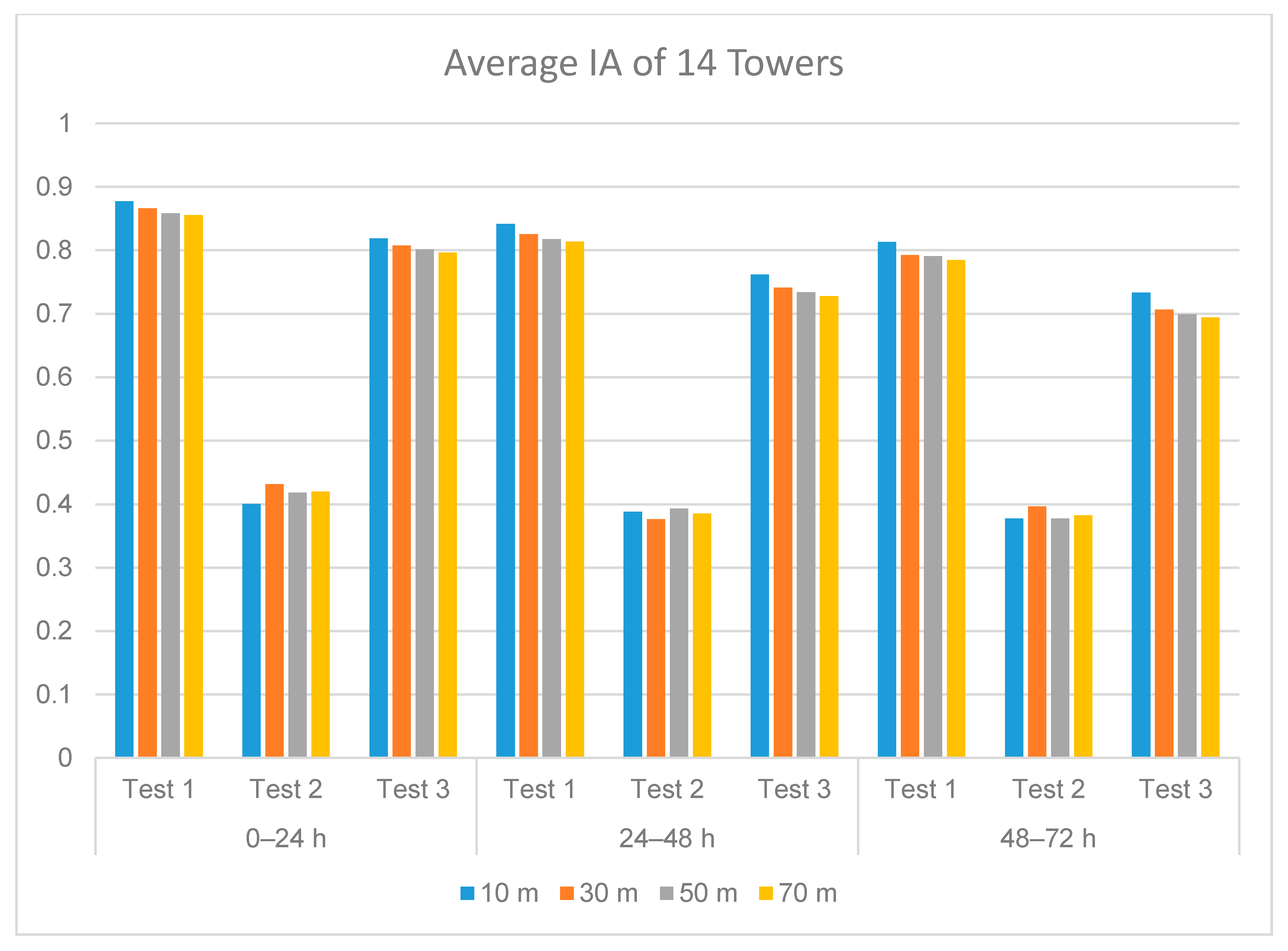

3.2. Post-Processing Results

3.3. Weibull Distributions

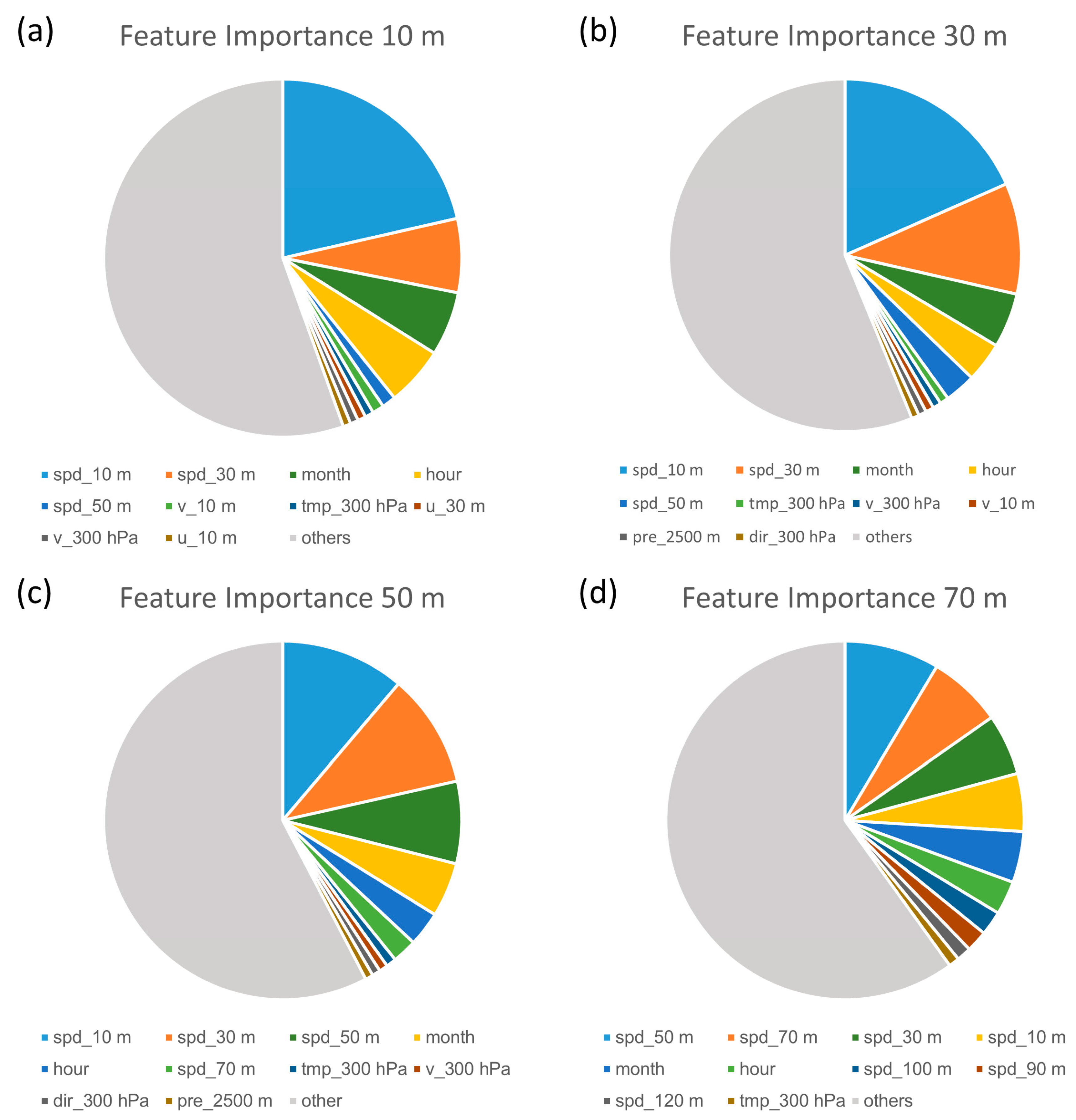

3.4. GBDT Feature Importance Results

3.5. Feature Importance Sensitivity Tests

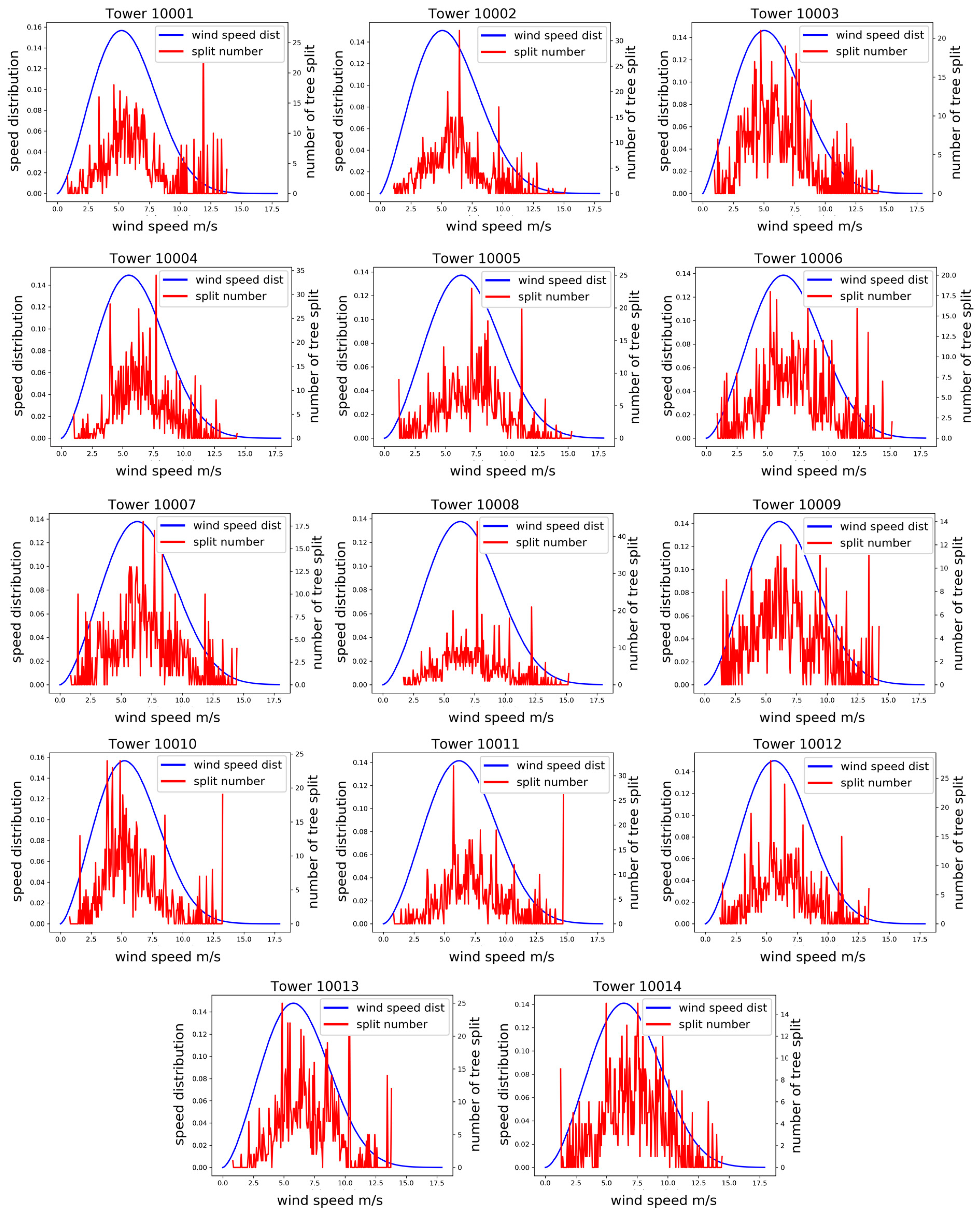

3.6. GBDT Feature Split Value Distributions

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 3.00 | 1.30 | 2.08 | 1.78 | 3.10 | 1.46 | 2.30 | 1.99 | 3.33 | 1.69 | 2.67 | 2.37 | 3.36 | 1.62 | 2.58 | 2.23 |

| 2 | 2.64 | 1.29 | 2.20 | 1.92 | 2.73 | 1.53 | 2.41 | 2.19 | 2.83 | 1.65 | 2.65 | 2.19 | 3.08 | 1.71 | 2.76 | 2.41 |

| 3 | 2.82 | 1.20 | 2.01 | 1.65 | 2.70 | 1.50 | 2.25 | 2.08 | 2.70 | 1.66 | 2.76 | 2.16 | 2.89 | 1.78 | 2.73 | 2.37 |

| 4 | 2.28 | 1.35 | 2.17 | 1.89 | 2.42 | 1.65 | 2.54 | 2.15 | 2.57 | 1.79 | 2.70 | 2.34 | 2.76 | 1.91 | 2.88 | 2.59 |

| 5 | 2.69 | 1.52 | 2.31 | 2.00 | 2.63 | 1.72 | 2.67 | 2.27 | 2.65 | 1.83 | 2.80 | 2.53 | 2.75 | 1.92 | 2.94 | 2.59 |

| 6 | 3.41 | 1.17 | 1.88 | 1.68 | 2.82 | 1.54 | 2.41 | 2.09 | 2.76 | 1.67 | 2.72 | 2.25 | 2.94 | 1.76 | 2.68 | 2.41 |

| 7 | 3.45 | 1.18 | 1.97 | 1.69 | 2.80 | 1.59 | 2.63 | 2.16 | 2.72 | 1.75 | 2.70 | 2.28 | 2.83 | 1.83 | 2.85 | 2.38 |

| 8 | 3.04 | 1.35 | 2.14 | 1.87 | 2.87 | 1.68 | 2.60 | 2.27 | 2.76 | 1.83 | 2.73 | 2.48 | 3.03 | 1.91 | 2.93 | 2.53 |

| 9 | 2.93 | 1.41 | 2.37 | 2.13 | 2.72 | 1.57 | 2.57 | 2.07 | 2.72 | 1.72 | 2.62 | 2.32 | 2.81 | 1.80 | 3.04 | 2.39 |

| 10 | 2.30 | 1.51 | 2.37 | 2.02 | 2.47 | 1.62 | 2.51 | 2.10 | 2.64 | 1.71 | 2.74 | 2.23 | 2.98 | 1.81 | 2.79 | 2.39 |

| 11 | 2.93 | 1.29 | 2.16 | 1.71 | 2.82 | 1.43 | 2.39 | 1.92 | 2.82 | 1.51 | 2.50 | 2.03 | 2.91 | 1.58 | 2.66 | 2.03 |

| 12 | 2.39 | 1.25 | 1.97 | 1.65 | 2.38 | 1.46 | 2.37 | 1.99 | 2.36 | 1.49 | 2.53 | 2.14 | 2.43 | 1.57 | 2.65 | 2.18 |

| 13 | 2.87 | 1.06 | 1.75 | 1.42 | 2.76 | 1.35 | 2.20 | 1.78 | 2.65 | 1.55 | 2.44 | 1.95 | 2.76 | 1.63 | 2.53 | 2.10 |

| 14 | 3.49 | 1.11 | 1.89 | 1.58 | 3.22 | 1.35 | 2.15 | 1.80 | 2.85 | 1.47 | 2.44 | 2.09 | 2.81 | 1.63 | 2.48 | 2.06 |

| Ave | 2.87 | 1.28 | 2.09 | 1.78 | 2.75 | 1.53 | 2.43 | 2.06 | 2.74 | 1.66 | 2.64 | 2.24 | 2.88 | 1.75 | 2.75 | 2.33 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 3.13 | 1.34 | 2.24 | 2.00 | 3.26 | 1.50 | 2.36 | 2.18 | 3.50 | 1.70 | 2.79 | 2.40 | 3.54 | 1.66 | 2.80 | 2.45 |

| 2 | 2.78 | 1.40 | 2.34 | 2.01 | 2.92 | 1.61 | 2.80 | 2.36 | 3.05 | 1.77 | 2.93 | 2.43 | 3.27 | 1.85 | 2.97 | 2.63 |

| 3 | 3.03 | 1.26 | 2.15 | 1.78 | 2.94 | 1.60 | 2.56 | 2.30 | 2.98 | 1.75 | 2.87 | 2.68 | 3.19 | 1.84 | 3.11 | 2.72 |

| 4 | 2.61 | 1.48 | 2.40 | 2.06 | 2.82 | 1.87 | 3.07 | 2.42 | 3.00 | 2.02 | 3.07 | 2.68 | 3.23 | 2.14 | 3.20 | 2.84 |

| 5 | 3.01 | 1.62 | 2.50 | 2.17 | 3.03 | 1.91 | 2.88 | 2.53 | 3.07 | 2.03 | 3.22 | 2.70 | 3.20 | 2.13 | 3.39 | 2.76 |

| 6 | 3.82 | 1.39 | 2.19 | 1.90 | 3.37 | 1.80 | 2.74 | 2.40 | 3.33 | 1.94 | 2.96 | 2.60 | 3.54 | 2.05 | 3.18 | 2.74 |

| 7 | 3.83 | 1.39 | 2.23 | 1.90 | 3.32 | 1.85 | 2.88 | 2.45 | 3.28 | 2.01 | 3.02 | 2.72 | 3.42 | 2.06 | 3.19 | 2.77 |

| 8 | 3.47 | 1.53 | 2.40 | 2.06 | 3.37 | 1.89 | 2.93 | 2.55 | 3.31 | 2.03 | 2.99 | 2.66 | 3.58 | 2.15 | 3.54 | 2.87 |

| 9 | 3.33 | 1.64 | 2.46 | 2.24 | 3.19 | 1.83 | 2.76 | 2.39 | 3.24 | 1.94 | 3.05 | 2.55 | 3.35 | 2.03 | 3.00 | 2.81 |

| 10 | 2.57 | 1.69 | 2.79 | 2.26 | 2.85 | 1.80 | 2.80 | 2.46 | 3.09 | 1.93 | 2.83 | 2.60 | 3.47 | 2.07 | 3.18 | 2.85 |

| 11 | 3.33 | 1.46 | 2.29 | 2.02 | 3.28 | 1.61 | 2.72 | 2.19 | 3.30 | 1.71 | 2.71 | 2.35 | 3.40 | 1.82 | 2.92 | 2.45 |

| 12 | 2.77 | 1.46 | 2.24 | 1.96 | 2.84 | 1.73 | 2.93 | 2.42 | 2.86 | 1.80 | 2.90 | 2.45 | 2.97 | 1.85 | 2.86 | 2.47 |

| 13 | 3.15 | 1.23 | 1.95 | 1.62 | 3.10 | 1.51 | 2.45 | 2.13 | 3.03 | 1.71 | 2.64 | 2.34 | 3.18 | 1.76 | 2.72 | 2.41 |

| 14 | 3.69 | 1.26 | 2.37 | 1.76 | 3.54 | 1.59 | 2.62 | 2.06 | 3.26 | 1.73 | 2.69 | 2.32 | 3.26 | 1.85 | 2.97 | 2.47 |

| Ave | 3.18 | 1.44 | 2.33 | 1.98 | 3.13 | 1.72 | 2.75 | 2.35 | 3.16 | 1.86 | 2.91 | 2.53 | 3.33 | 1.95 | 3.07 | 2.66 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 3.35 | 1.44 | 2.34 | 2.08 | 3.51 | 1.60 | 2.62 | 2.33 | 3.70 | 1.75 | 2.74 | 2.48 | 3.81 | 1.77 | 2.76 | 2.49 |

| 2 | 3.00 | 1.46 | 2.37 | 2.19 | 3.19 | 1.70 | 2.59 | 2.43 | 3.32 | 1.81 | 2.91 | 2.52 | 3.57 | 1.90 | 3.04 | 2.74 |

| 3 | 3.31 | 1.38 | 2.10 | 1.87 | 3.33 | 1.74 | 2.76 | 2.42 | 3.37 | 1.87 | 2.91 | 2.57 | 3.59 | 2.01 | 2.96 | 2.79 |

| 4 | 2.80 | 1.61 | 2.44 | 2.18 | 3.07 | 1.98 | 2.97 | 2.60 | 3.24 | 2.07 | 3.20 | 2.66 | 3.47 | 2.19 | 3.37 | 2.89 |

| 5 | 3.10 | 1.66 | 2.69 | 2.21 | 3.16 | 1.95 | 3.02 | 2.64 | 3.22 | 2.06 | 3.13 | 2.54 | 3.35 | 2.11 | 3.48 | 2.84 |

| 6 | 3.83 | 1.53 | 2.39 | 1.99 | 3.42 | 2.00 | 2.88 | 2.79 | 3.39 | 2.16 | 3.16 | 2.74 | 3.59 | 2.25 | 3.43 | 2.84 |

| 7 | 3.83 | 1.49 | 2.33 | 1.93 | 3.39 | 2.05 | 3.26 | 2.58 | 3.37 | 2.19 | 3.24 | 2.77 | 3.51 | 2.31 | 3.42 | 2.98 |

| 8 | 3.51 | 1.68 | 2.50 | 2.24 | 3.46 | 2.07 | 3.03 | 2.75 | 3.40 | 2.21 | 3.37 | 2.90 | 3.64 | 2.33 | 3.45 | 3.07 |

| 9 | 3.34 | 1.69 | 2.54 | 2.36 | 3.23 | 1.89 | 2.89 | 2.64 | 3.26 | 2.01 | 3.17 | 2.70 | 3.37 | 2.11 | 3.36 | 2.92 |

| 10 | 2.68 | 1.93 | 2.99 | 2.59 | 2.95 | 2.03 | 2.97 | 2.61 | 3.18 | 2.02 | 3.07 | 2.77 | 3.53 | 2.18 | 3.22 | 3.00 |

| 11 | 3.36 | 1.53 | 2.36 | 2.05 | 3.35 | 1.71 | 2.67 | 2.24 | 3.38 | 1.79 | 2.78 | 2.45 | 3.49 | 1.95 | 3.00 | 2.59 |

| 12 | 2.89 | 1.55 | 2.42 | 2.24 | 3.01 | 1.81 | 2.77 | 2.55 | 3.06 | 1.92 | 3.14 | 2.74 | 3.16 | 1.96 | 3.12 | 2.85 |

| 13 | 3.26 | 1.34 | 2.01 | 1.76 | 3.30 | 1.68 | 2.51 | 2.17 | 3.25 | 1.87 | 2.77 | 2.58 | 3.45 | 1.92 | 2.96 | 2.67 |

| 14 | 3.90 | 1.32 | 2.21 | 1.82 | 3.79 | 1.69 | 2.73 | 2.25 | 3.55 | 1.87 | 2.94 | 2.57 | 3.58 | 1.99 | 3.20 | 2.57 |

| Ave | 3.30 | 1.54 | 2.41 | 2.11 | 3.30 | 1.85 | 2.83 | 2.50 | 3.34 | 1.97 | 3.04 | 2.64 | 3.51 | 2.07 | 3.20 | 2.80 |

Appendix B

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.59 | 0.83 | 0.66 | 0.74 | 0.62 | 0.80 | 0.65 | 0.69 | 0.62 | 0.77 | 0.61 | 0.65 | 0.62 | 0.78 | 0.64 | 0.69 |

| 2 | 0.67 | 0.85 | 0.69 | 0.73 | 0.70 | 0.83 | 0.68 | 0.71 | 0.70 | 0.82 | 0.66 | 0.75 | 0.68 | 0.82 | 0.65 | 0.71 |

| 3 | 0.65 | 0.85 | 0.68 | 0.76 | 0.73 | 0.85 | 0.75 | 0.78 | 0.75 | 0.85 | 0.68 | 0.79 | 0.74 | 0.85 | 0.71 | 0.77 |

| 4 | 0.78 | 0.88 | 0.74 | 0.79 | 0.80 | 0.86 | 0.73 | 0.79 | 0.79 | 0.85 | 0.72 | 0.77 | 0.77 | 0.84 | 0.71 | 0.75 |

| 5 | 0.74 | 0.86 | 0.72 | 0.79 | 0.78 | 0.86 | 0.70 | 0.77 | 0.80 | 0.85 | 0.72 | 0.75 | 0.79 | 0.85 | 0.71 | 0.78 |

| 6 | 0.65 | 0.89 | 0.76 | 0.79 | 0.76 | 0.86 | 0.73 | 0.79 | 0.78 | 0.86 | 0.70 | 0.77 | 0.77 | 0.86 | 0.75 | 0.78 |

| 7 | 0.64 | 0.88 | 0.74 | 0.79 | 0.76 | 0.87 | 0.69 | 0.80 | 0.79 | 0.86 | 0.73 | 0.80 | 0.78 | 0.86 | 0.71 | 0.79 |

| 8 | 0.69 | 0.86 | 0.71 | 0.80 | 0.75 | 0.85 | 0.71 | 0.77 | 0.78 | 0.84 | 0.71 | 0.75 | 0.75 | 0.84 | 0.69 | 0.74 |

| 9 | 0.70 | 0.87 | 0.72 | 0.77 | 0.76 | 0.87 | 0.72 | 0.79 | 0.77 | 0.86 | 0.72 | 0.78 | 0.78 | 0.86 | 0.68 | 0.79 |

| 10 | 0.80 | 0.91 | 0.80 | 0.86 | 0.80 | 0.89 | 0.76 | 0.84 | 0.78 | 0.88 | 0.74 | 0.81 | 0.75 | 0.87 | 0.73 | 0.80 |

| 11 | 0.71 | 0.89 | 0.74 | 0.82 | 0.75 | 0.89 | 0.73 | 0.81 | 0.76 | 0.89 | 0.74 | 0.81 | 0.77 | 0.89 | 0.74 | 0.82 |

| 12 | 0.78 | 0.91 | 0.81 | 0.86 | 0.82 | 0.91 | 0.80 | 0.84 | 0.83 | 0.91 | 0.78 | 0.81 | 0.83 | 0.90 | 0.76 | 0.82 |

| 13 | 0.70 | 0.91 | 0.80 | 0.85 | 0.76 | 0.89 | 0.76 | 0.84 | 0.79 | 0.88 | 0.74 | 0.83 | 0.79 | 0.87 | 0.73 | 0.80 |

| 14 | 0.63 | 0.88 | 0.71 | 0.78 | 0.70 | 0.88 | 0.75 | 0.81 | 0.77 | 0.89 | 0.75 | 0.80 | 0.79 | 0.88 | 0.77 | 0.84 |

| Ave | 0.70 | 0.88 | 0.73 | 0.80 | 0.75 | 0.87 | 0.73 | 0.79 | 0.76 | 0.86 | 0.71 | 0.78 | 0.76 | 0.86 | 0.71 | 0.78 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.56 | 0.81 | 0.64 | 0.67 | 0.59 | 0.78 | 0.61 | 0.62 | 0.58 | 0.76 | 0.56 | 0.62 | 0.59 | 0.75 | 0.56 | 0.62 |

| 2 | 0.65 | 0.82 | 0.63 | 0.71 | 0.67 | 0.80 | 0.60 | 0.67 | 0.67 | 0.79 | 0.59 | 0.70 | 0.66 | 0.78 | 0.60 | 0.66 |

| 3 | 0.62 | 0.83 | 0.63 | 0.72 | 0.69 | 0.83 | 0.66 | 0.70 | 0.71 | 0.83 | 0.66 | 0.71 | 0.70 | 0.83 | 0.63 | 0.70 |

| 4 | 0.73 | 0.85 | 0.70 | 0.77 | 0.74 | 0.81 | 0.60 | 0.74 | 0.72 | 0.79 | 0.62 | 0.71 | 0.70 | 0.79 | 0.63 | 0.70 |

| 5 | 0.70 | 0.84 | 0.69 | 0.75 | 0.73 | 0.82 | 0.67 | 0.76 | 0.74 | 0.82 | 0.63 | 0.74 | 0.74 | 0.82 | 0.66 | 0.75 |

| 6 | 0.60 | 0.84 | 0.68 | 0.74 | 0.68 | 0.81 | 0.66 | 0.72 | 0.70 | 0.81 | 0.67 | 0.74 | 0.68 | 0.81 | 0.66 | 0.72 |

| 7 | 0.60 | 0.84 | 0.69 | 0.76 | 0.69 | 0.82 | 0.67 | 0.74 | 0.71 | 0.82 | 0.68 | 0.72 | 0.70 | 0.82 | 0.66 | 0.74 |

| 8 | 0.63 | 0.82 | 0.67 | 0.73 | 0.68 | 0.81 | 0.64 | 0.74 | 0.71 | 0.80 | 0.68 | 0.72 | 0.68 | 0.78 | 0.58 | 0.71 |

| 9 | 0.64 | 0.83 | 0.68 | 0.72 | 0.69 | 0.82 | 0.67 | 0.72 | 0.70 | 0.82 | 0.67 | 0.74 | 0.70 | 0.82 | 0.69 | 0.71 |

| 10 | 0.77 | 0.88 | 0.74 | 0.81 | 0.75 | 0.86 | 0.73 | 0.79 | 0.72 | 0.84 | 0.72 | 0.76 | 0.69 | 0.82 | 0.66 | 0.74 |

| 11 | 0.66 | 0.86 | 0.70 | 0.78 | 0.69 | 0.85 | 0.64 | 0.75 | 0.70 | 0.84 | 0.68 | 0.75 | 0.70 | 0.85 | 0.69 | 0.76 |

| 12 | 0.72 | 0.87 | 0.74 | 0.79 | 0.75 | 0.86 | 0.69 | 0.76 | 0.76 | 0.86 | 0.69 | 0.76 | 0.76 | 0.85 | 0.70 | 0.79 |

| 13 | 0.67 | 0.87 | 0.74 | 0.81 | 0.71 | 0.86 | 0.70 | 0.76 | 0.73 | 0.84 | 0.70 | 0.75 | 0.73 | 0.84 | 0.71 | 0.72 |

| 14 | 0.60 | 0.83 | 0.55 | 0.73 | 0.64 | 0.82 | 0.61 | 0.73 | 0.70 | 0.83 | 0.69 | 0.73 | 0.72 | 0.83 | 0.67 | 0.73 |

| Ave | 0.65 | 0.84 | 0.68 | 0.75 | 0.69 | 0.83 | 0.65 | 0.73 | 0.70 | 0.82 | 0.66 | 0.72 | 0.70 | 0.81 | 0.65 | 0.72 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.56 | 0.79 | 0.62 | 0.66 | 0.58 | 0.77 | 0.58 | 0.64 | 0.59 | 0.76 | 0.59 | 0.60 | 0.59 | 0.73 | 0.59 | 0.60 |

| 2 | 0.65 | 0.81 | 0.63 | 0.68 | 0.66 | 0.79 | 0.62 | 0.64 | 0.66 | 0.78 | 0.61 | 0.65 | 0.65 | 0.78 | 0.59 | 0.65 |

| 3 | 0.59 | 0.80 | 0.66 | 0.70 | 0.65 | 0.80 | 0.63 | 0.69 | 0.67 | 0.81 | 0.65 | 0.71 | 0.66 | 0.80 | 0.67 | 0.68 |

| 4 | 0.71 | 0.82 | 0.68 | 0.74 | 0.71 | 0.80 | 0.63 | 0.72 | 0.70 | 0.80 | 0.61 | 0.71 | 0.69 | 0.79 | 0.62 | 0.69 |

| 5 | 0.69 | 0.83 | 0.64 | 0.76 | 0.72 | 0.81 | 0.66 | 0.71 | 0.73 | 0.81 | 0.68 | 0.76 | 0.72 | 0.81 | 0.62 | 0.72 |

| 6 | 0.58 | 0.80 | 0.63 | 0.72 | 0.66 | 0.76 | 0.62 | 0.64 | 0.67 | 0.76 | 0.63 | 0.68 | 0.66 | 0.77 | 0.58 | 0.69 |

| 7 | 0.58 | 0.81 | 0.65 | 0.73 | 0.66 | 0.77 | 0.58 | 0.70 | 0.68 | 0.78 | 0.62 | 0.70 | 0.68 | 0.77 | 0.61 | 0.67 |

| 8 | 0.61 | 0.78 | 0.64 | 0.67 | 0.65 | 0.76 | 0.60 | 0.65 | 0.68 | 0.76 | 0.58 | 0.68 | 0.66 | 0.74 | 0.59 | 0.64 |

| 9 | 0.62 | 0.81 | 0.64 | 0.68 | 0.67 | 0.79 | 0.64 | 0.70 | 0.68 | 0.79 | 0.62 | 0.68 | 0.68 | 0.79 | 0.61 | 0.68 |

| 10 | 0.73 | 0.83 | 0.65 | 0.73 | 0.72 | 0.81 | 0.67 | 0.74 | 0.69 | 0.80 | 0.65 | 0.69 | 0.66 | 0.79 | 0.64 | 0.68 |

| 11 | 0.63 | 0.83 | 0.70 | 0.74 | 0.65 | 0.81 | 0.67 | 0.74 | 0.67 | 0.81 | 0.66 | 0.72 | 0.67 | 0.81 | 0.65 | 0.72 |

| 12 | 0.69 | 0.84 | 0.70 | 0.73 | 0.71 | 0.84 | 0.72 | 0.73 | 0.71 | 0.82 | 0.63 | 0.70 | 0.72 | 0.82 | 0.66 | 0.72 |

| 13 | 0.63 | 0.84 | 0.70 | 0.76 | 0.67 | 0.81 | 0.68 | 0.73 | 0.69 | 0.79 | 0.66 | 0.67 | 0.68 | 0.80 | 0.63 | 0.67 |

| 14 | 0.56 | 0.80 | 0.62 | 0.71 | 0.60 | 0.78 | 0.59 | 0.70 | 0.65 | 0.79 | 0.62 | 0.69 | 0.67 | 0.79 | 0.61 | 0.72 |

| Ave | 0.63 | 0.81 | 0.66 | 0.72 | 0.66 | 0.79 | 0.64 | 0.69 | 0.68 | 0.79 | 0.63 | 0.69 | 0.67 | 0.78 | 0.62 | 0.68 |

Appendix C

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | −0.67 | 0.11 | −0.09 | 0.11 | −0.46 | −0.01 | −0.07 | −0.12 | −0.51 | −0.19 | −0.14 | −0.21 | −0.39 | −0.20 | −0.07 | −0.04 |

| 2 | −0.20 | 0.28 | 0.01 | 0.05 | −0.06 | 0.18 | −0.01 | −0.02 | −0.05 | 0.13 | −0.02 | 0.14 | −0.13 | 0.16 | −0.05 | 0.03 |

| 3 | −0.22 | 0.24 | −0.02 | 0.13 | 0.07 | 0.26 | 0.16 | 0.23 | 0.14 | 0.28 | −0.02 | 0.21 | 0.10 | 0.29 | 0.07 | 0.18 |

| 4 | 0.18 | 0.43 | 0.06 | 0.22 | 0.26 | 0.33 | 0.07 | 0.22 | 0.24 | 0.30 | 0.03 | 0.13 | 0.22 | 0.24 | 0.04 | 0.09 |

| 5 | 0.04 | 0.31 | 0.04 | 0.18 | 0.23 | 0.30 | −0.05 | 0.08 | 0.28 | 0.28 | 0.03 | 0.03 | 0.29 | 0.30 | 0.01 | 0.23 |

| 6 | −0.49 | 0.46 | 0.15 | 0.16 | 0.14 | 0.28 | 0.04 | 0.22 | 0.23 | 0.28 | −0.02 | 0.08 | 0.20 | 0.30 | 0.12 | 0.12 |

| 7 | −0.51 | 0.43 | 0.16 | 0.21 | 0.14 | 0.34 | −0.10 | 0.26 | 0.27 | 0.31 | 0.06 | 0.18 | 0.26 | 0.30 | −0.08 | 0.15 |

| 8 | −0.17 | 0.31 | −0.02 | 0.29 | 0.11 | 0.25 | −0.04 | 0.14 | 0.25 | 0.18 | −0.08 | 0.03 | 0.16 | 0.15 | −0.03 | −0.09 |

| 9 | −0.16 | 0.37 | 0.04 | 0.24 | 0.16 | 0.37 | 0.04 | 0.18 | 0.22 | 0.26 | −0.02 | 0.16 | 0.23 | 0.29 | −0.05 | 0.21 |

| 10 | 0.11 | 0.58 | 0.22 | 0.45 | 0.19 | 0.49 | 0.08 | 0.34 | 0.17 | 0.43 | 0.11 | 0.25 | 0.05 | 0.40 | 0.02 | 0.19 |

| 11 | −0.16 | 0.47 | 0.06 | 0.27 | 0.09 | 0.46 | 0.08 | 0.24 | 0.17 | 0.45 | 0.15 | 0.28 | 0.19 | 0.45 | 0.14 | 0.25 |

| 12 | 0.13 | 0.56 | 0.37 | 0.45 | 0.31 | 0.57 | 0.32 | 0.35 | 0.38 | 0.56 | 0.24 | 0.21 | 0.40 | 0.55 | 0.18 | 0.29 |

| 13 | −0.16 | 0.58 | 0.26 | 0.42 | 0.13 | 0.49 | 0.16 | 0.36 | 0.26 | 0.39 | 0.07 | 0.31 | 0.28 | 0.35 | 0.01 | 0.19 |

| 14 | −0.50 | 0.43 | 0.01 | 0.17 | −0.05 | 0.42 | 0.13 | 0.28 | 0.24 | 0.48 | 0.17 | 0.23 | 0.32 | 0.43 | 0.22 | 0.39 |

| Ave | −0.20 | 0.40 | 0.09 | 0.24 | 0.09 | 0.34 | 0.06 | 0.20 | 0.16 | 0.29 | 0.04 | 0.15 | 0.16 | 0.29 | 0.04 | 0.16 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | −0.76 | −0.04 | −0.07 | −0.13 | −0.57 | −0.22 | −0.26 | −0.39 | −0.63 | −0.36 | −0.33 | −0.40 | −0.50 | −0.44 | −0.26 | −0.27 |

| 2 | −0.22 | 0.14 | −0.13 | 0.01 | −0.09 | 0.05 | −0.16 | −0.12 | −0.10 | −0.05 | −0.20 | −0.03 | −0.15 | −0.07 | −0.18 | −0.08 |

| 3 | −0.31 | 0.10 | −0.16 | 0.02 | −0.02 | 0.19 | −0.11 | −0.07 | 0.04 | 0.15 | −0.08 | 0.11 | 0.00 | 0.17 | −0.16 | 0.04 |

| 4 | 0.02 | 0.22 | 0.02 | 0.16 | 0.08 | 0.01 | −0.35 | 0.08 | 0.07 | −0.04 | −0.31 | −0.01 | 0.03 | −0.09 | −0.19 | −0.08 |

| 5 | −0.11 | 0.24 | −0.02 | 0.10 | 0.07 | 0.12 | −0.11 | 0.16 | 0.12 | 0.14 | −0.21 | 0.09 | 0.14 | 0.15 | −0.00 | 0.16 |

| 6 | −0.71 | 0.26 | −0.01 | 0.07 | −0.10 | 0.08 | −0.14 | −0.05 | 0.01 | 0.10 | −0.06 | 0.10 | −0.03 | 0.05 | −0.06 | 0.00 |

| 7 | −0.69 | 0.27 | 0.06 | 0.18 | −0.07 | 0.14 | 0.02 | 0.07 | 0.05 | 0.12 | −0.02 | −0.02 | 0.05 | 0.11 | −0.15 | 0.08 |

| 8 | −0.38 | 0.12 | −0.08 | 0.04 | −0.09 | 0.08 | −0.18 | 0.13 | 0.03 | −0.02 | −0.05 | −0.07 | −0.04 | −0.15 | −0.23 | −0.02 |

| 9 | −0.34 | 0.16 | −0.12 | −0.02 | −0.04 | 0.12 | −0.05 | −0.09 | 0.02 | 0.15 | −0.04 | 0.07 | 0.03 | 0.12 | −0.03 | −0.05 |

| 10 | 0.03 | 0.41 | 0.15 | 0.25 | 0.07 | 0.32 | 0.08 | 0.26 | 0.02 | 0.18 | 0.03 | 0.16 | −0.11 | 0.12 | −0.20 | 0.11 |

| 11 | −0.30 | 0.31 | −0.02 | 0.22 | −0.06 | 0.25 | −0.20 | 0.09 | 0.01 | 0.21 | −0.09 | 0.09 | 0.03 | 0.24 | 0.00 | 0.09 |

| 12 | −0.04 | 0.36 | 0.14 | 0.24 | 0.13 | 0.35 | 0.03 | 0.12 | 0.20 | 0.30 | −0.04 | 0.09 | 0.21 | 0.29 | −0.01 | 0.25 |

| 13 | −0.31 | 0.37 | 0.10 | 0.29 | −0.04 | 0.26 | −0.04 | 0.10 | 0.10 | 0.17 | −0.02 | 0.05 | 0.11 | 0.16 | 0.02 | −0.20 |

| 14 | −0.63 | 0.11 | −0.44 | 0.03 | −0.24 | 0.01 | −0.36 | −0.10 | 0.03 | 0.09 | −0.00 | −0.12 | 0.12 | 0.11 | −0.14 | −0.10 |

| Ave | −0.34 | 0.22 | −0.04 | 0.10 | −0.07 | 0.13 | −0.13 | 0.01 | −0.00 | 0.08 | −0.10 | 0.01 | −0.01 | 0.05 | −0.11 | −0.01 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | −0.57 | −0.00 | −0.13 | −0.12 | −0.40 | −0.14 | −0.21 | −0.12 | −0.45 | −0.26 | −0.22 | −0.40 | −0.34 | −0.36 | −0.17 | −0.29 |

| 2 | −0.11 | 0.13 | −0.14 | 0.01 | −0.01 | 0.02 | −0.19 | −0.18 | −0.00 | −0.01 | −0.11 | −0.15 | −0.06 | −0.02 | −0.21 | −0.06 |

| 3 | −0.19 | 0.01 | −0.09 | −0.01 | 0.01 | 0.05 | −0.16 | −0.05 | 0.07 | 0.09 | −0.11 | 0.05 | 0.02 | 0.04 | −0.05 | −0.07 |

| 4 | 0.06 | 0.13 | −0.08 | 0.13 | 0.11 | 0.05 | −0.22 | 0.07 | 0.11 | 0.06 | −0.21 | −0.04 | 0.09 | 0.01 | −0.16 | −0.07 |

| 5 | −0.04 | 0.15 | −0.18 | 0.16 | 0.11 | 0.05 | −0.06 | −0.02 | 0.16 | 0.07 | −0.01 | 0.12 | 0.17 | 0.11 | −0.16 | 0.04 |

| 6 | −0.72 | 0.03 | −0.11 | 0.01 | −0.13 | −0.25 | −0.23 | −0.18 | −0.02 | −0.19 | −0.17 | −0.11 | −0.05 | −0.12 | −0.36 | −0.13 |

| 7 | −0.71 | 0.09 | −0.10 | 0.03 | −0.11 | −0.18 | −0.23 | −0.06 | 0.00 | −0.11 | −0.30 | −0.13 | 0.01 | −0.10 | −0.29 | −0.22 |

| 8 | −0.44 | −0.13 | −0.13 | −0.20 | −0.15 | −0.27 | −0.39 | −0.28 | −0.02 | −0.29 | −0.35 | −0.11 | −0.08 | −0.39 | −0.29 | −0.26 |

| 9 | −0.39 | 0.05 | −0.25 | −0.16 | −0.09 | −0.09 | −0.16 | 0.01 | −0.03 | −0.09 | −0.23 | −0.20 | −0.00 | −0.11 | −0.23 | −0.16 |

| 10 | −0.12 | 0.12 | −0.28 | −0.08 | −0.03 | 0.03 | −0.18 | 0.05 | −0.08 | −0.08 | −0.21 | −0.18 | −0.19 | −0.16 | −0.23 | −0.14 |

| 11 | −0.40 | 0.08 | −0.01 | 0.00 | −0.15 | 0.03 | −0.08 | 0.04 | −0.07 | −0.04 | −0.16 | −0.00 | −0.04 | −0.05 | −0.18 | −0.01 |

| 12 | −0.15 | 0.20 | 0.03 | 0.08 | 0.01 | 0.16 | 0.11 | 0.02 | 0.07 | 0.08 | −0.27 | −0.06 | 0.11 | 0.08 | −0.07 | 0.11 |

| 13 | −0.40 | 0.13 | −0.05 | 0.09 | −0.14 | 0.01 | −0.07 | −0.08 | 0.00 | −0.14 | −0.17 | −0.27 | −0.02 | −0.10 | −0.30 | −0.32 |

| 14 | −0.75 | −0.06 | −0.19 | −0.04 | −0.34 | −0.17 | −0.34 | −0.11 | −0.07 | −0.13 | −0.26 | −0.10 | 0.01 | −0.14 | −0.29 | −0.06 |

| Ave | −0.35 | 0.07 | −0.12 | −0.01 | −0.09 | −0.05 | −0.17 | −0.06 | −0.02 | −0.07 | −0.20 | −0.11 | −0.03 | −0.09 | −0.21 | −0.12 |

Appendix D

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.48 * | 0.73 * | 0.43 * | 0.56 * | 0.52 * | 0.69 * | 0.41 * | 0.49 * | 0.52 * | 0.64 * | 0.36 * | 0.41 * | 0.52 * | 0.66 * | 0.39 * | 0.47 * |

| 2 | 0.60 * | 0.76 * | 0.45 * | 0.53 * | 0.61 * | 0.71 * | 0.45 * | 0.50 * | 0.60 * | 0.71 * | 0.43 * | 0.56 * | 0.58 * | 0.70 * | 0.41 * | 0.50 * |

| 3 | 0.59 * | 0.76 * | 0.45 * | 0.59 * | 0.63 * | 0.76 * | 0.55 * | 0.61 * | 0.64 * | 0.76 * | 0.45 * | 0.63 * | 0.63 * | 0.74 * | 0.50 * | 0.60 * |

| 4 | 0.72 * | 0.81 * | 0.54 * | 0.64 * | 0.69 * | 0.78 * | 0.53 * | 0.64 * | 0.67 * | 0.76 * | 0.51 * | 0.60 * | 0.65 * | 0.74 * | 0.51 * | 0.57 * |

| 5 | 0.66 * | 0.77 * | 0.53 * | 0.63 * | 0.68 * | 0.78 * | 0.50 * | 0.61 * | 0.68 * | 0.77 * | 0.52 * | 0.58 * | 0.67 * | 0.76 * | 0.50 * | 0.62 * |

| 6 | 0.70 * | 0.82 * | 0.58 * | 0.64 * | 0.71 * | 0.80 * | 0.53 * | 0.64 * | 0.70 * | 0.79 * | 0.49 * | 0.61 * | 0.67 * | 0.79 * | 0.56 * | 0.61 * |

| 7 | 0.67 * | 0.82 * | 0.56 * | 0.64 * | 0.69 * | 0.79 * | 0.47 * | 0.64 * | 0.69 * | 0.79 * | 0.54 * | 0.65 * | 0.68 * | 0.79 * | 0.50 * | 0.64 * |

| 8 | 0.68 * | 0.79 * | 0.51 * | 0.65 * | 0.67 * | 0.78 * | 0.50 * | 0.61 * | 0.68 * | 0.76 * | 0.50 * | 0.57 * | 0.65 * | 0.75 * | 0.48 * | 0.56 * |

| 9 | 0.62 * | 0.80 * | 0.51 * | 0.61 * | 0.67 * | 0.79 * | 0.51 * | 0.65 * | 0.68 * | 0.78 * | 0.52 * | 0.61 * | 0.68 * | 0.78 * | 0.46 * | 0.63 * |

| 10 | 0.70 * | 0.85 * | 0.64 * | 0.74 * | 0.70 * | 0.83 * | 0.58 * | 0.71 * | 0.68 * | 0.80 * | 0.55 * | 0.67 * | 0.65 * | 0.80 * | 0.54 * | 0.64 * |

| 11 | 0.69 * | 0.83 * | 0.54 * | 0.69 * | 0.70 * | 0.81 * | 0.53 * | 0.67 * | 0.70 * | 0.81 * | 0.56 * | 0.67 * | 0.69 * | 0.82 * | 0.55 * | 0.70 * |

| 12 | 0.75 * | 0.85 * | 0.67 * | 0.74 * | 0.74 * | 0.85 * | 0.65 * | 0.71 * | 0.74 * | 0.85 * | 0.60 * | 0.67 * | 0.74 * | 0.83 * | 0.58 * | 0.68 * |

| 13 | 0.75 * | 0.85 * | 0.63 * | 0.74 * | 0.73 * | 0.83 * | 0.58 * | 0.71 * | 0.72 * | 0.81 * | 0.55 * | 0.70 * | 0.72 * | 0.80 * | 0.54 * | 0.66 * |

| 14 | 0.68 * | 0.80 * | 0.49 * | 0.61 * | 0.67 * | 0.81 * | 0.56 * | 0.67 * | 0.70 * | 0.82 * | 0.57 * | 0.65 * | 0.72 * | 0.81 * | 0.61 * | 0.71 * |

| Ave | 0.66 | 0.80 | 0.54 | 0.64 | 0.67 | 0.79 | 0.53 | 0.63 | 0.67 | 0.77 | 0.51 | 0.61 | 0.66 | 0.77 | 0.51 | 0.61 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.46 * | 0.70 * | 0.40 * | 0.44 * | 0.48 * | 0.66 * | 0.34 * | 0.36 * | 0.47 * | 0.63 * | 0.27 * | 0.36 * | 0.46 * | 0.63 * | 0.28 * | 0.36 * |

| 2 | 0.56 * | 0.71 * | 0.38 * | 0.50 * | 0.56 * | 0.68 * | 0.33 * | 0.43 * | 0.54 * | 0.66 * | 0.32 * | 0.47 * | 0.54 * | 0.64 * | 0.33 * | 0.43 * |

| 3 | 0.56 * | 0.73 * | 0.37 * | 0.53 * | 0.58 * | 0.72 * | 0.42 * | 0.49 * | 0.59 * | 0.73 * | 0.42 * | 0.51 * | 0.57 * | 0.72 * | 0.37 * | 0.50 * |

| 4 | 0.65 * | 0.76 * | 0.48 * | 0.59 * | 0.60 * | 0.71 * | 0.33 * | 0.56 * | 0.57 * | 0.68 * | 0.36 * | 0.51 * | 0.54 * | 0.66 * | 0.38 * | 0.48 * |

| 5 | 0.59 * | 0.74 * | 0.47 * | 0.57 * | 0.59 * | 0.71 * | 0.44 * | 0.58 * | 0.60 * | 0.71 * | 0.38 * | 0.55 * | 0.59 * | 0.70 * | 0.44 * | 0.57 * |

| 6 | 0.57 * | 0.74 * | 0.46 * | 0.55 * | 0.56 * | 0.70 * | 0.42 * | 0.52 * | 0.56 * | 0.70 * | 0.44 * | 0.55 * | 0.53 * | 0.70 * | 0.43 * | 0.52 * |

| 7 | 0.57 * | 0.73 * | 0.48 * | 0.58 * | 0.56 * | 0.71 * | 0.46 * | 0.55 * | 0.56 * | 0.71 * | 0.46 * | 0.52 * | 0.55 * | 0.72 * | 0.42 * | 0.55 * |

| 8 | 0.56 * | 0.72 * | 0.43 * | 0.54 * | 0.55 * | 0.70 * | 0.40 * | 0.55 * | 0.56 * | 0.69 * | 0.46 * | 0.51 * | 0.53 * | 0.67 * | 0.32 * | 0.50 * |

| 9 | 0.52 * | 0.72 * | 0.45 * | 0.52 * | 0.56 * | 0.71 * | 0.45 * | 0.53 * | 0.56 * | 0.71 * | 0.44 * | 0.55 * | 0.56 * | 0.71 * | 0.47 * | 0.51 * |

| 10 | 0.64 * | 0.81 * | 0.55 * | 0.66 * | 0.62 * | 0.78 * | 0.53 * | 0.63 * | 0.59 * | 0.74 * | 0.51 * | 0.59 * | 0.54 * | 0.72 * | 0.42 * | 0.55 * |

| 11 | 0.60 * | 0.77 * | 0.49 * | 0.61 * | 0.60 * | 0.76 * | 0.39 * | 0.58 * | 0.60 * | 0.75 * | 0.46 * | 0.57 * | 0.59 * | 0.75 * | 0.47 * | 0.58 * |

| 12 | 0.67 * | 0.78 * | 0.55 * | 0.64 * | 0.65 * | 0.77 * | 0.48 * | 0.59 * | 0.64 * | 0.76 * | 0.47 * | 0.58 * | 0.63 * | 0.76 * | 0.49 * | 0.62 * |

| 13 | 0.68 * | 0.80 * | 0.55 * | 0.67 * | 0.65 * | 0.78 * | 0.48 * | 0.58 * | 0.63 * | 0.76 * | 0.49 * | 0.57 * | 0.62 * | 0.76 * | 0.50 * | 0.54 * |

| 14 | 0.59 * | 0.73 * | 0.23 * | 0.53 * | 0.56 * | 0.72 * | 0.33 * | 0.54 * | 0.58 * | 0.74 * | 0.48 * | 0.54 * | 0.60 * | 0.75 * | 0.43 * | 0.55 * |

| Ave | 0.59 | 0.75 | 0.45 | 0.57 | 0.58 | 0.72 | 0.41 | 0.54 | 0.57 | 0.71 | 0.43 | 0.53 | 0.56 | 0.70 | 0.41 | 0.52 |

| | 10 m | | | | 30 m | | | | 50 m | | | | 70 m | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tower | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR | WRF | GBDT | DTR | MLPR |

| 1 | 0.44 * | 0.66 * | 0.36 * | 0.43 * | 0.47 * | 0.62 * | 0.30 * | 0.40 * | 0.46 * | 0.61 * | 0.32 * | 0.34 * | 0.45 * | 0.58 * | 0.32 * | 0.35 * |

| 2 | 0.57 * | 0.68 * | 0.37 * | 0.47 * | 0.56 * | 0.65 * | 0.36 * | 0.40 * | 0.55 * | 0.64 * | 0.36 * | 0.42 * | 0.53 * | 0.62 * | 0.31 * | 0.41 * |

| 3 | 0.50 * | 0.67 * | 0.41 * | 0.49 * | 0.52 * | 0.66 * | 0.37 * | 0.46 * | 0.53 * | 0.68 * | 0.40 * | 0.51 * | 0.51 * | 0.66 * | 0.43 * | 0.45 * |

| 4 | 0.60 * | 0.72 * | 0.46 * | 0.56 * | 0.56 * | 0.67 * | 0.38 * | 0.52 * | 0.54 * | 0.66 * | 0.36 * | 0.50 * | 0.52 * | 0.65 * | 0.37 * | 0.48 * |

| 5 | 0.57 * | 0.72 * | 0.39 * | 0.58 * | 0.57 * | 0.70 * | 0.43 * | 0.51 * | 0.57 * | 0.70 * | 0.45 * | 0.59 * | 0.56 * | 0.70 * | 0.37 * | 0.53 * |

| 6 | 0.52 * | 0.67 * | 0.40 * | 0.52 * | 0.52 * | 0.62 * | 0.37 * | 0.41 * | 0.52 * | 0.62 * | 0.37 * | 0.47 * | 0.50 * | 0.63 * | 0.30 * | 0.48 * |

| 7 | 0.52 * | 0.69 * | 0.41 * | 0.54 * | 0.51 * | 0.63 * | 0.31 * | 0.49 * | 0.52 * | 0.64 * | 0.36 * | 0.49 * | 0.51 * | 0.63 * | 0.35 * | 0.45 * |

| 8 | 0.51 * | 0.65 * | 0.40 * | 0.45 * | 0.50 * | 0.63 * | 0.33 * | 0.42 * | 0.51 * | 0.62 * | 0.31 * | 0.46 * | 0.49 * | 0.60 * | 0.32 * | 0.41 * |

| 9 | 0.47 * | 0.70 * | 0.40 * | 0.47 * | 0.51 * | 0.68 * | 0.40 * | 0.49 * | 0.52 * | 0.68 * | 0.36 * | 0.47 * | 0.52 * | 0.68 * | 0.35 * | 0.46 * |

| 10 | 0.58 * | 0.74 * | 0.41 * | 0.54 * | 0.56 * | 0.71 * | 0.44 * | 0.56 * | 0.52 * | 0.71 * | 0.41 * | 0.49 * | 0.49 * | 0.68 * | 0.40 * | 0.47 * |

| 11 | 0.54 * | 0.74 * | 0.48 * | 0.56 * | 0.53 * | 0.71 * | 0.44 * | 0.56 * | 0.53 * | 0.72 * | 0.42 * | 0.53 * | 0.52 * | 0.71 * | 0.41 * | 0.53 * |

| 12 | 0.57 * | 0.75 * | 0.49 * | 0.54 * | 0.56 * | 0.75 * | 0.52 * | 0.54 * | 0.55 * | 0.72 * | 0.37 * | 0.50 * | 0.55 * | 0.72 * | 0.43 * | 0.53 * |

| 13 | 0.59 * | 0.75 * | 0.49 * | 0.59 * | 0.55 * | 0.72 * | 0.46 * | 0.54 * | 0.55 * | 0.70 * | 0.43 * | 0.46 * | 0.52 * | 0.70 * | 0.37 * | 0.45 * |

| 14 | 0.50 * | 0.70 * | 0.35 * | 0.50 * | 0.47 * | 0.68 * | 0.31 * | 0.49 * | 0.50 * | 0.68 * | 0.36 * | 0.48 * | 0.52 * | 0.69 * | 0.33 * | 0.52 * |

| Ave | 0.53 | 0.70 | 0.41 | 0.52 | 0.53 | 0.67 | 0.39 | 0.49 | 0.53 | 0.67 | 0.38 | 0.48 | 0.51 | 0.66 | 0.36 | 0.47 |


- Stensrud, D.J.; Yussouf, N. Short-range ensemble predictions of 2-m temperature and dewpoint temperature over New England. Mon. Weather Rev. 2003, 131, 2510–2524. [Google Scholar] [CrossRef]

- Eckel, F.A.; Mass, C.F. Aspects of effective mesoscale, short-range ensemble forecasting. Weather Forecast. 2005, 20, 328–350. [Google Scholar] [CrossRef]

- Hacker, J.P.; Rife, D.L. A practical approach to sequential estimation of systematic error on near-surface mesoscale grids. Weather Forecast. 2007, 22, 1257–1273. [Google Scholar] [CrossRef]

- Homleid, M. Diurnal corrections of short-term surface temperature forecasts using the Kalman filter. Weather Forecast. 1995, 10, 689–707. [Google Scholar] [CrossRef]

- Roeger, C.; Stull, R.; McClung, D.; Hacker, J.; Deng, X.; Modzelewski, H. Verification of mesoscale numerical weather forecasts in mountainous terrain for application to avalanche prediction. Weather Forecast. 2003, 18, 1140–1160. [Google Scholar] [CrossRef]

- McCollor, D.; Stull, R. Hydrometeorological accuracy enhancement via postprocessing of numerical weather forecasts in complex terrain. Weather Forecast. 2008, 23, 131–144. [Google Scholar] [CrossRef]

- Delle Monache, L.; Nipen, T.; Liu, Y.; Roux, G.; Stull, R. Kalman filter and analog schemes to postprocess numerical weather predictions. Mon. Weather Rev. 2011, 139, 3554–3570. [Google Scholar] [CrossRef]

- Cassola, F.; Burlando, M. Wind speed and wind energy forecast through Kalman filtering of Numerical Weather Prediction model output. Appl. Energy 2012, 99, 154–166. [Google Scholar] [CrossRef]

- Li, G.; Shi, J. On comparing three artificial neural networks for wind speed forecasting. Appl. Energy 2010, 87, 2313–2320. [Google Scholar] [CrossRef]

- Ishak, A.M.; Remesan, R.; Srivastava, P.K.; Islam, T.; Han, D. Error correction modelling of wind speed through hydro-meteorological parameters and mesoscale model: A hybrid approach. Water Resour. Manag. 2013, 27, 1–23. [Google Scholar] [CrossRef]

- Sweeney, C.P.; Lynch, P.; Nolan, P. Reducing errors of wind speed forecasts by an optimal combination of post-processing methods. Meteorol. Appl. 2013, 20, 32–40. [Google Scholar] [CrossRef]

- Zjavka, L. Wind speed forecast correction models using polynomial neural networks. Renew. Energy 2015, 83, 998–1006. [Google Scholar] [CrossRef]

- Zhao, J.; Guo, Z.; Su, Z.; Zhao, Z.; Xiao, X.; Liu, F. An improved multi-step forecasting model based on WRF ensembles and creative fuzzy systems for wind speed. Appl. Energy 2016, 162, 808–826. [Google Scholar] [CrossRef]

- Zhao, X.; Liu, J.; Yu, D.; Chang, J. One-day-ahead probabilistic wind speed forecast based on optimized numerical weather prediction data. Energy Convers. Manag. 2018, 164, 560–569. [Google Scholar] [CrossRef]

- Papayiannis, G.I.; Galanis, G.N.; Yannacopoulos, A.N. Model aggregation using optimal transport and applications in wind speed forecasting. Environmetrics 2018, 29, e2531. [Google Scholar] [CrossRef]

- Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.M.; Wang, W.; Powers, J.G. A Description of the Advanced Research WRF Version 3. NCAR Technical Note-475+ STR. 2008. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.484.3656 (accessed on 11 November 2019).

- National Centers for Environmental Prediction/National Weather Service/NOAA/U.S. Department of Commerce. NCEP FNL Operational Model Global Tropospheric Analyses, Continuing from July 1999. Research Data Archive at the National Center for Atmospheric Research, Computational and Information Systems Laboratory. 2000. Available online: https://doi.org/10.5065/D6M043C6 (accessed on 11 December 2018).

- Willmott, C.J. On the validation of models. Phys. Geogr. 1981, 2, 184–194. [Google Scholar] [CrossRef]

- Willmott, C.J. On the evaluation of model performance in physical geography. In Spatial Statistics and Models; Gaile, G.L., Willmott, C.J., Eds.; Springer: Dordrecht, The Netherlands, 1984; pp. 443–460. [Google Scholar]

- Willmott, C.J.; Ackleson, S.G.; Davis, R.E.; Feddema, J.J.; Klink, K.M.; Legates, D.R.; O’Donnell, J.; Rowe, C.M. Statistics for the evaluation and comparison of models. J. Geophys. Res. Oceans 1985, 90, 8995–9005. [Google Scholar] [CrossRef]

- Legates, D.R.; McCabe, G.J., Jr. Evaluating the use of “goodness-of-fit” measures in hydrologic and hydroclimatic model validation. Water Resour. Res. 1999, 35, 233–241. [Google Scholar] [CrossRef]

- Nash, J.E.; Sutcliffe, J.V. River flow forecasting through conceptual models part I—A discussion of principles. J. Hydrol. 1970, 10, 282–290. [Google Scholar] [CrossRef]

- Zhou, Z. Ensemble Methods: Foundations and Algorithms, 1st ed.; Chapman & Hall/CRC: New York, NY, USA, 2012. [Google Scholar]

- Kearns, M.J.; Valiant, L.G. Cryptographic limitations on learning Boolean formulae and finite automata. In Machine Learning: From Theory to Applications; Hanson, S.J.E.A., Ed.; Springer: Berlin/Heidelberg, Germany, 1993; pp. 29–49. [Google Scholar]

- Schapire, R.E. The Strength of Weak Learnability. Mach. Learn. 1990, 5, 197–227. [Google Scholar] [CrossRef]

- Friedman, J.H.; Hastie, T.; Tibshirani, R. Additive logistic regression: A statistical view of boosting. Ann. Stat. 2000, 28, 337–407. [Google Scholar] [CrossRef]

- Kegl, B. The return of AdaBoost.MH: Multi-class Hamming trees. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014. [Google Scholar]

- Quinlan, J.R. Induction of Decision Trees. Mach. Learn. 1986, 1, 81–106. [Google Scholar] [CrossRef]

- Quinlan, J.R. Improved Use of Continuous Attributes in C4.5. J. Artif. Int. Res. 1996, 4, 77–90. [Google Scholar] [CrossRef]

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth & Brooks/Cole Advanced Books & Software: Monterey, CA, USA, 1984. [Google Scholar]

- Mason, L.; Baxter, J.; Bartlett, P.L.; Frean, M. Boosting Algorithms as Gradient Descent. Adv. Neural Inf. Process. Syst. 1999, 512–518. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 3146–3154. [Google Scholar]

- Lun, I.Y.F.; Lam, J.C. A study of Weibull parameters using long-term wind observations. Renew. Energy 2000, 20, 145–153. [Google Scholar] [CrossRef]

| Domain | 01 | 02 |
|---|---|---|
| Grid number | 252 × 207 | 81 × 96 |
| Grid resolution | 25 km | 5 km |
| Vertical levels | 41 | 41 |
| Microphysics | Thompson graupel | |
| Longwave radiation | RRTMG | |
| Shortwave radiation | RRTMG | |
| Land-surface | Noah | |
| Cumulus convection | Kain–Fritsch | |
| PBL | YSU | |

| Tower ID | Terrain Height (m) | Longitude (E) | Latitude (N) | Sampling Frequency | Sensor Bias |
|---|---|---|---|---|---|
| 10001 | 1 | 119.2167 | 35.0175 | 1 s | |
| 10002 | 1 | 119.2044 | 34.7666 | 1 s | |
| 10003 | 1 | 119.7784 | 34.4695 | 1 s | |
| 10004 | 2 | 120.3096 | 34.142 | 1 s | |
| 10005 | 1 | 120.5754 | 33.6442 | 1 s | |
| 10006 | 0.5 | 120.8807 | 33.0107 | 1 s | |
| 10007 | 0.5 | 120.8904 | 33.0131 | 1 s | |
| 10008 | 0.5 | 120.8955 | 33.0145 | 1 s | |
| 10009 | 2 | 120.9377 | 32.6452 | 1 s | |
| 10010 | 1 | 121.1993 | 32.47 | 1 s | |
| 10011 | 1 | 121.4183 | 32.2547 | 1 s | |
| 10012 | 2 | 121.5318 | 32.1059 | 1 s | |
| 10013 | 2 | 121.7346 | 32.0139 | 1 s | |
| 10014 | 1.5 | 121.8894 | 31.7003 | 1 s | |

| Variables | Pressure Layers |
|---|---|
| Wind speed, wind direction, temperature, height, absolute vorticity (avo), potential vorticity (pvo) | 850 hPa, 700 hPa, 500 hPa, 300 hPa |

| Variables | Height Levels |
|---|---|
| Wind speed, wind direction, temperature, pressure, absolute vorticity (avo), potential vorticity (pvo) | 10 m, 30 m, 50 m, 70 m, 90 m, 100 m, 120 m, 150 m, 200 m, 250 m, 300 m, 350 m, 400 m, 450 m, 500 m, 600 m, 700 m, 800 m, 1000 m, 1250 m, 1500 m, 1750 m, 2000 m, 2500 m, 3000 m, 3500 m, 4000 m, 4500 m, 5000 m |

| Feature | Categories |
|---|---|
| Month | January, February, March, …, December |
| Hour | 1, 2, 3, …, 24 |
| Wind direction | N, S, E, W, NW, NE, SW, SE |
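
The categorical features in the table above can be turned into model inputs in several ways. The following is a minimal sketch of one option, one-hot encoding. It is an assumption, not a description of the authors' pipeline: the function names are hypothetical, and the 45° sector boundaries centred on each compass point are a common convention that the table does not specify.

```python
# Sketch only: helper names and the 45-degree sector boundaries are assumptions.
SECTORS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]
MONTHS = list(range(1, 13))   # January .. December
HOURS = list(range(1, 25))    # 1 .. 24, as listed in the table


def direction_to_sector(degrees: float) -> str:
    """Map a wind direction (0 = north, clockwise) to one of 8 sectors."""
    index = int(((degrees % 360) + 22.5) // 45) % 8
    return SECTORS[index]


def one_hot(value, categories):
    """Return a 0/1 list with a single 1 at the position of `value`."""
    return [1 if value == c else 0 for c in categories]


def encode(month: int, hour: int, direction_deg: float):
    """Concatenate the one-hot vectors for month, hour, and wind sector."""
    sector = direction_to_sector(direction_deg)
    return one_hot(month, MONTHS) + one_hot(hour, HOURS) + one_hot(sector, SECTORS)


if __name__ == "__main__":
    vector = encode(month=7, hour=13, direction_deg=225.0)
    print(direction_to_sector(225.0), len(vector))
```

Tree libraries that accept integer category codes directly would make the one-hot step unnecessary; the sector mapping would stay the same.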

| Date Used as Train Data | Date Used as Test Data |
|---|---|
| 1, 2, 4, 5, 6, 8, 9, 10, 12, 13, 14, 16, 17, 18, 20, 21, 22, 24, 25, 26, 28, (29), (30), (31) | 3, 7, 11, 15, 19, 23, 27 |
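
The split in the table above holds out every fourth day of the month, from day 3 to day 27, as test data. A minimal sketch of that rule follows; the function name and the example year are hypothetical, and the parenthesised days 29 to 31 are handled by asking the calendar how long each month is.

```python
import calendar

# Days of the month held out for testing, copied from the table.
TEST_DAYS = {3, 7, 11, 15, 19, 23, 27}


def split_month(year: int, month: int):
    """Return (train_days, test_days) for one calendar month."""
    n_days = calendar.monthrange(year, month)[1]
    days = range(1, n_days + 1)
    train = [d for d in days if d not in TEST_DAYS]
    test = [d for d in days if d in TEST_DAYS]
    return train, test


if __name__ == "__main__":
    # The year is an arbitrary example; the table does not state one.
    train, test = split_month(2015, 2)
    print(len(train), len(test))
```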

| Param Name | Value/Value Range |
|---|---|
| Number of iterations | 2000 |
| Learning rate | 0.1 |
| Number of leaves | 10, 20, 40, 80, 160 |
| Minimum data in leaf | 10, 20, 40, 80 |
| Bagging fraction | 0.8 |
| Bagging frequency | 5 |
| Feature fraction | 0.9 |
| Metric | Mean square error |
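
The two parameters given as ranges in the table above define a 5 × 4 search grid, while the others are fixed. The sketch below enumerates that grid as plain dictionaries. The keys use LightGBM-style parameter names, which is an assumption about the library; the `objective` entry is also an assumption, since the table lists only the metric.

```python
from itertools import product

# Fixed settings from the table, written with LightGBM-style keys (assumed).
BASE_PARAMS = {
    "objective": "regression",   # assumption: not listed in the table
    "num_iterations": 2000,
    "learning_rate": 0.1,
    "bagging_fraction": 0.8,
    "bagging_freq": 5,
    "feature_fraction": 0.9,
    "metric": "mse",
}

# Tuned settings from the table.
NUM_LEAVES = [10, 20, 40, 80, 160]
MIN_DATA_IN_LEAF = [10, 20, 40, 80]


def param_grid():
    """Yield one parameter dictionary per (leaves, min-data) combination."""
    for leaves, min_data in product(NUM_LEAVES, MIN_DATA_IN_LEAF):
        yield {**BASE_PARAMS, "num_leaves": leaves, "min_data_in_leaf": min_data}


if __name__ == "__main__":
    grid = list(param_grid())
    print(len(grid), grid[0]["num_leaves"], grid[0]["min_data_in_leaf"])
```

Each dictionary could then be passed to a training call and scored on a validation set.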

| Parameter Name | Value |
|---|---|
| Hidden layer sizes | 100 |
| Activation function | ReLU |
| Optimization method | Adam |
| Iterations | 200 |
| Loss function | Mean Square Error (MSE) |
| Learning rate init | 0.001 |

| Parameter Name | Value |
|---|---|
| Criterion | Mean Square Error (MSE) |
| Split method | Best split |
| Max depth | No limit |
| Iterations | 200 |
| Loss function | Mean Square Error (MSE) |
| Learning rate init | 0.001 |

| L | D = 10 (Train) | D = 10 (Val) | D = 20 (Train) | D = 20 (Val) | D = 40 (Train) | D = 40 (Val) | D = 80 (Train) | D = 80 (Val) |
|---|---|---|---|---|---|---|---|---|
| 10 | 0.174 | 0.380 | 0.179 | 0.369 | 0.185 | 0.382 | 0.195 | 0.387 |
| 20 | 0.069 | 0.281 | 0.074 | 0.285 | 0.080 | 0.287 | 0.088 | 0.296 |
| 40 | 0.022 | 0.248 | 0.025 | 0.257 | 0.029 | 0.263 | 0.037 | 0.264 |
| 80 | 0.004 | 0.250 | 0.005 | 0.255 | 0.007 | 0.266 | 0.011 | 0.250 |
| 160 | 0.000 | 0.249 | 0.001 | 0.237 | 0.001 | 0.243 | 0.002 | 0.238 |
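
One way to read the tuning table above is to pick the cell with the lowest validation score. The sketch below does that with the validation values copied from the table. It assumes that lower is better and that L and D stand for the number of leaves and the minimum data in leaf, which matches the tuned value ranges but is not stated in the table itself. It does not claim to reproduce the authors' final choice.

```python
# Validation scores copied from the table: VAL_SCORES[L][D].
VAL_SCORES = {
    10:  {10: 0.380, 20: 0.369, 40: 0.382, 80: 0.387},
    20:  {10: 0.281, 20: 0.285, 40: 0.287, 80: 0.296},
    40:  {10: 0.248, 20: 0.257, 40: 0.263, 80: 0.264},
    80:  {10: 0.250, 20: 0.255, 40: 0.266, 80: 0.250},
    160: {10: 0.249, 20: 0.237, 40: 0.243, 80: 0.238},
}


def best_setting(scores):
    """Return the (L, D) pair with the smallest validation score."""
    pairs = [(l, d) for l in scores for d in scores[l]]
    return min(pairs, key=lambda ld: scores[ld[0]][ld[1]])


if __name__ == "__main__":
    l, d = best_setting(VAL_SCORES)
    print(l, d, VAL_SCORES[l][d])
```

The training scores in the same table fall towards zero as L grows while the validation scores level off, so a selection rule might also weigh the train/validation gap rather than the validation score alone.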

| Indices | Period | 10 m: WRF and GBDT | 10 m: DTR and GBDT | 10 m: MLPR and GBDT | 30 m: WRF and GBDT | 30 m: DTR and GBDT | 30 m: MLPR and GBDT | 50 m: WRF and GBDT | 50 m: DTR and GBDT | 50 m: MLPR and GBDT | 70 m: WRF and GBDT | 70 m: DTR and GBDT | 70 m: MLPR and GBDT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RMSE | 0–24 h | 1.8 × 10⁻¹⁰ | 3.1 × 10⁻¹² | 5.32 × 10⁻⁸ | 4.09 × 10⁻¹³ | 1.76 × 10⁻¹⁴ | 2.31 × 10⁻¹⁰ | 2.17 × 10⁻¹³ | 3.26 × 10⁻¹⁸ | 1.51 × 10⁻¹⁰ | 4.15 × 10⁻¹⁴ | 1.47 × 10⁻¹⁵ | 1.23 × 10⁻⁹ |
| RMSE | 24–48 h | 9.96 × 10⁻¹¹ | 8.1 × 10⁻¹³ | 4.32 × 10⁻⁹ | 4.55 × 10⁻¹⁵ | 3.67 × 10⁻¹⁴ | 2.11 × 10⁻¹¹ | 1.87 × 10⁻¹⁷ | 3.57 × 10⁻¹⁶ | 1.64 × 10⁻¹² | 3.19 × 10⁻¹⁸ | 1.24 × 10⁻¹³ | 1.53 × 10⁻¹¹ |
| RMSE | 48–72 h | 8.39 × 10⁻¹² | 1.01 × 10⁻¹⁰ | 8.8 × 10⁻⁸ | 2.4 × 10⁻¹⁶ | 3.45 × 10⁻¹³ | 7.56 × 10⁻¹⁰ | 7.03 × 10⁻¹⁹ | 1.56 × 10⁻¹⁴ | 2.39 × 10⁻¹² | 4.79 × 10⁻¹⁹ | 1.56 × 10⁻¹³ | 1.24 × 10⁻¹¹ |
| IA | 0–24 h | 1.68 × 10⁻⁸ | 1.18 × 10⁻⁹ | 1.57 × 10⁻⁶ | 2.42 × 10⁻⁷ | 6.31 × 10⁻¹¹ | 8.75 × 10⁻⁶ | 7.36 × 10⁻⁶ | 3.02 × 10⁻¹⁰ | 1.09 × 10⁻⁵ | 4.85 × 10⁻⁶ | 7.99 × 10⁻¹¹ | 9.79 × 10⁻⁶ |
| IA | 24–48 h | 4.54 × 10⁻⁹ | 4.89 × 10⁻⁹ | 3 × 10⁻⁷ | 3.98 × 10⁻⁹ | 4.74 × 10⁻¹² | 3.82 × 10⁻⁷ | 1.48 × 10⁻⁸ | 3.21 × 10⁻¹⁰ | 4.22 × 10⁻⁸ | 5.23 × 10⁻⁹ | 1.05 × 10⁻¹⁰ | 4.03 × 10⁻⁷ |
| IA | 48–72 h | 3.6 × 10⁻⁹ | 3.65 × 10⁻¹⁴ | 4.78 × 10⁻⁹ | 1.27 × 10⁻⁹ | 6.3 × 10⁻¹¹ | 3.43 × 10⁻⁸ | 2.14 × 10⁻¹⁰ | 1.37 × 10⁻¹⁵ | 1.01 × 10⁻⁸ | 1.7 × 10⁻¹⁰ | 3 × 10⁻¹⁵ | 3.77 × 10⁻⁹ |
| R | 0–24 h | 2.87 × 10⁻⁶ | 1.18 × 10⁻¹⁰ | 1.2 × 10⁻⁷ | 3.35 × 10⁻⁶ | 1.78 × 10⁻¹² | 3.79 × 10⁻⁷ | 2.63 × 10⁻⁵ | 6.68 × 10⁻¹² | 4.53 × 10⁻⁷ | 1.28 × 10⁻⁵ | 2.84 × 10⁻¹² | 4.77 × 10⁻⁷ |
| R | 24–48 h | 2.14 × 10⁻⁸ | 1.28 × 10⁻⁹ | 1.43 × 10⁻⁸ | 1.17 × 10⁻⁹ | 1.1 × 10⁻¹² | 1.92 × 10⁻⁸ | 1.38 × 10⁻⁹ | 1.06 × 10⁻¹¹ | 1.67 × 10⁻⁹ | 1.86 × 10⁻⁹ | 1.81 × 10⁻¹² | 7.65 × 10⁻⁹ |
| R | 48–72 h | 1.57 × 10⁻¹⁰ | 1.04 × 10⁻¹⁵ | 7.08 × 10⁻¹¹ | 2.31 × 10⁻¹⁰ | 2.08 × 10⁻¹² | 1.05 × 10⁻⁹ | 3.28 × 10⁻¹¹ | 5.16 × 10⁻¹⁷ | 2.25 × 10⁻¹⁰ | 2.42 × 10⁻¹⁰ | 2.08 × 10⁻¹⁶ | 7.3 × 10⁻¹¹ |
| NSE | 0–24 h | 3.76 × 10⁻⁷ | 1.44 × 10⁻⁶ | 0.003782 | 0.000748 | 9.14 × 10⁻⁶ | 0.01537 | 0.0939 | 0.000184 | 0.021304 | 0.086145 | 0.000173 | 0.036681 |
| NSE | 24–48 h | 1.5 × 10⁻⁶ | 4.24 × 10⁻⁵ | 0.024931 | 0.00354 | 5.56 × 10⁻⁵ | 0.067188 | 0.221716 | 0.001957 | 0.207006 | 0.366434 | 0.007758 | 0.347349 |
| NSE | 48–72 h | 4.62 × 10⁻⁵ | 5.15 × 10⁻⁶ | 0.063338 | 0.410048 | 0.016471 | 0.757702 | 0.311537 | 0.00555 | 0.435889 | 0.198474 | 0.014306 | 0.65035 |

| Tower | Observation: K (Shape) | Observation: Lambda (Scale) | WRF: K (Shape) | WRF: Lambda (Scale) | GBDT: K (Shape) | GBDT: Lambda (Scale) | DTR: K (Shape) | DTR: Lambda (Scale) | MLPR: K (Shape) | MLPR: Lambda (Scale) |
|---|---|---|---|---|---|---|---|---|---|---|
| 10001 | 2.07 | 4.21 | 2.66 | 6.52 | 1.95 | 4.21 | 2.37 | 4.30 | 2.92 | 4.16 |
| 10002 | 2.17 | 4.61 | 2.55 | 6.55 | 1.88 | 4.48 | 2.38 | 4.55 | 2.73 | 4.46 |
| 10003 | 2.27 | 4.44 | 2.43 | 6.58 | 2.00 | 4.23 | 2.14 | 4.17 | 3.02 | 4.33 |
| 10004 | 2.14 | 5.22 | 2.53 | 6.77 | 2.01 | 5.05 | 2.10 | 4.85 | 2.63 | 5.01 |
| 10005 | 2.26 | 5.77 | 2.60 | 7.56 | 2.24 | 5.75 | 2.71 | 5.94 | 2.95 | 5.66 |
| 10006 | 2.02 | 4.44 | 2.54 | 7.52 | 1.93 | 4.49 | 2.30 | 4.82 | 2.65 | 4.42 |
| 10007 | 2.05 | 4.47 | 2.54 | 7.55 | 1.94 | 4.48 | 2.33 | 4.79 | 2.66 | 4.43 |
| 10008 | 2.20 | 5.11 | 2.54 | 7.58 | 2.22 | 5.04 | 2.62 | 5.38 | 2.95 | 5.05 |
| 10009 | 2.05 | 5.15 | 2.51 | 7.27 | 2.03 | 5.15 | 2.38 | 5.34 | 2.75 | 5.16 |
| 10010 | 1.76 | 5.37 | 2.50 | 6.44 | 1.85 | 5.34 | 1.94 | 5.37 | 2.19 | 5.42 |
| 10011 | 2.05 | 4.99 | 2.57 | 7.38 | 2.08 | 4.95 | 2.19 | 5.01 | 2.63 | 4.89 |
| 10012 | 1.97 | 4.93 | 2.50 | 6.77 | 1.91 | 5.05 | 2.04 | 4.92 | 2.49 | 4.94 |
| 10013 | 2.03 | 4.42 | 2.47 | 6.96 | 1.94 | 4.37 | 2.37 | 4.46 | 2.51 | 4.35 |
| 10014 | 2.32 | 4.56 | 2.57 | 7.69 | 2.12 | 4.47 | 2.47 | 4.50 | 2.92 | 4.45 |
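
To make the shape and scale values in the table above easier to interpret, the sketch below evaluates the standard two-parameter Weibull density and its mean, λ·Γ(1 + 1/k). The function names are hypothetical, and the example uses the observation and WRF entries for tower 10001. The density helper assumes k ≥ 1, which holds for every row of the table.

```python
import math


def weibull_pdf(v: float, k: float, lam: float) -> float:
    """Two-parameter Weibull density at wind speed v (assumes k >= 1)."""
    if v < 0:
        return 0.0
    return (k / lam) * (v / lam) ** (k - 1) * math.exp(-((v / lam) ** k))


def weibull_mean(k: float, lam: float) -> float:
    """Mean of a Weibull distribution: lam * Gamma(1 + 1/k)."""
    return lam * math.gamma(1.0 + 1.0 / k)


if __name__ == "__main__":
    # Tower 10001, values copied from the table.
    obs_mean = weibull_mean(k=2.07, lam=4.21)
    wrf_mean = weibull_mean(k=2.66, lam=6.52)
    print(round(obs_mean, 2), round(wrf_mean, 2))
```

For tower 10001 this gives a fitted mean of roughly 3.7 for the observations and roughly 5.8 for WRF, in the same units as Lambda. This is consistent with the larger WRF scale parameters throughout the table.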

| Test | Features |
|---|---|
| Test 1 | All features |
| Test 2 | ‘Other’ features |
| Test 3 | 10 m speed, 30 m speed, 50 m speed, 70 m speed, hour, month |

| Indices | Period | 10 m: Tests 1 and 2 | 10 m: Tests 1 and 3 | 30 m: Tests 1 and 2 | 30 m: Tests 1 and 3 | 50 m: Tests 1 and 2 | 50 m: Tests 1 and 3 | 70 m: Tests 1 and 2 | 70 m: Tests 1 and 3 |
|---|---|---|---|---|---|---|---|---|---|
| RMSE | 0–24 h | 4.35 × 10⁻⁷ | 3.81 × 10⁻⁵ | 2.48 × 10⁻⁸ | 3.43 × 10⁻⁸ | 1.62 × 10⁻¹⁰ | 1.58 × 10⁻⁸ | 3.49 × 10⁻⁸ | 1.13 × 10⁻⁷ |
| RMSE | 24–48 h | 6.23 × 10⁻⁶ | 5.28 × 10⁻⁵ | 3.43 × 10⁻⁵ | 2.34 × 10⁻⁶ | 1.73 × 10⁻⁶ | 2.8 × 10⁻⁷ | 4.01 × 10⁻⁶ | 3.74 × 10⁻⁶ |
| RMSE | 48–72 h | 9.36 × 10⁻⁶ | 0.000277 | 5.95 × 10⁻⁵ | 1.58 × 10⁻⁵ | 1.84 × 10⁻⁶ | 7.75 × 10⁻⁷ | 6.45 × 10⁻⁵ | 5.63 × 10⁻⁶ |
| IA | 0–24 h | 1.92 × 10⁻¹³ | 6.21 × 10⁻⁷ | 3.55 × 10⁻¹³ | 2.43 × 10⁻⁶ | 4.01 × 10⁻¹⁶ | 4.17 × 10⁻⁵ | 4.78 × 10⁻¹⁵ | 2.49 × 10⁻⁵ |
| IA | 24–48 h | 1.44 × 10⁻¹² | 6.21 × 10⁻⁹ | 1.51 × 10⁻¹² | 1.22 × 10⁻⁸ | 1.2 × 10⁻¹³ | 5.85 × 10⁻⁹ | 3.82 × 10⁻¹⁷ | 8.59 × 10⁻⁸ |
| IA | 48–72 h | 8.02 × 10⁻¹² | 8.47 × 10⁻¹¹ | 4.87 × 10⁻¹⁰ | 3.24 × 10⁻¹⁰ | 9.33 × 10⁻¹⁰ | 3.25 × 10⁻¹¹ | 6.24 × 10⁻⁹ | 8.31 × 10⁻¹⁰ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, W.; Ning, L.; Luo, Y. Wind Speed Forecast Based on Post-Processing of Numerical Weather Predictions Using a Gradient Boosting Decision Tree Algorithm. Atmosphere 2020, 11, 738. https://doi.org/10.3390/atmos11070738