Abstract

Real-time and effective detection of human thermal discomfort plays a critical role in the energy-efficient control of human-centered intelligent buildings, because the estimation results can provide effective feedback signals to heating, ventilation and air conditioning (HVAC) systems. Detecting occupant thermal discomfort remains a challenge, and contact or semi-contact perception methods are inconvenient in practical applications. This paper therefore proposes a vision-based contactless method for estimating human thermal discomfort poses. First, human pose data were captured with a vision-based sensor, and the corresponding human skeleton information was extracted. Five thermal discomfort-related human poses were then analyzed, and corresponding algorithms were constructed. To verify the effectiveness of the algorithms, 16 subjects were invited to participate in physiological experiments. The validation results show that the proposed algorithms can recognize the five human poses of thermal discomfort.

1. Introduction

Global energy consumption is increasing rapidly, and commercial and residential buildings account for 21% of the world's total energy consumption [1]. Fifty percent of building energy consumption is related to heating, ventilation and air conditioning (HVAC) systems [2]. The main method currently adopted in the air conditioning industry is to provide a constant environment for buildings in accordance with international standards (ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) Standard 55 [3], ASHRAE Standard 62.1 [4]). This method does not take into account individual differences in thermal comfort among building occupants or the way thermal comfort varies over time. Studies have shown that even small adjustments to room temperature (such as 1 °C) can have a large impact on the energy consumption of the entire building [5,6]. If energy distribution can be adapted to the specific environment, it can both meet individual thermal comfort requirements and achieve energy efficiency goals.

ASHRAE Standard 55 and ISO (International Organization for Standardization) Standard 7730 define thermal comfort as follows: in an indoor space, at least 80% of building occupants are psychologically satisfied with the current temperature range of the thermal environment [3,7]. Human thermal comfort is therefore a subjective feeling that often needs to be tested for each individual. Sim [8] used a wristband to detect human skin temperature and invited eight subjects to participate under different thermal conditions. A human body thermal comfort model was constructed based on parameters such as average skin temperature, temperature gradient and temperature time difference. Dai [9] proposed a thermal comfort prediction method based on a support vector machine (SVM), which used skin temperature as an input and improved the model by combining the skin temperatures of different body parts. The prediction accuracy reached 90%. Ghahramani [10] proposed a skin temperature detection method based on infrared thermal imaging. By installing sensors on glasses, the skin temperature of the face is detected to predict human thermal comfort. Nkurikiyeyezu [11] used human heart rate variability (HRV) to estimate human thermal comfort. The subjects’ electrocardiogram (ECG) signals were used to calculate the HRV index with HRV analysis software developed by the authors. The reported accuracy of predicting human thermal comfort from the HRV index reached 93.7%. Barrios [12] proposed an architecture called Comfstat, which uses smart watches and Bluetooth chest straps to obtain human heart rate data and uses machine learning methods to combine heart rate data with environmental data to predict thermal comfort. Kawakami [13] used photoplethysmography (PPG) to monitor a blood circulation index and evaluate human thermal comfort.
Chaudhuri [14] used a wearable device to obtain human physiological characteristics, such as skin temperature, pulse rate and blood pressure, and combined a convolutional neural network and an SVM kernel method to assess the thermal state of the subject; the accuracy of the two methods reached 93.33% and 90.6%, respectively. Salamone [15] proposed an IoT (Internet of Things) platform with integrated machine learning algorithms to obtain the thermal comfort of occupants. Wearable devices, such as wristbands, were used to obtain the occupants’ physiological parameters, while sensors were used to obtain indoor environmental parameters. Machine learning algorithms were then used to predict the occupants’ thermal comfort. The above methods can detect human thermal comfort by capturing physiological parameters of the human body. However, they require sensors to be installed directly or indirectly on the corresponding body parts to obtain these parameters.

To solve the above problems, some researchers have used non-contact methods to study thermal comfort. Combining video magnification and deep learning, Cheng [16,17,18] tried to establish the relationship between skin changes and skin temperature under different conditions, and proposed non-contact skin temperature detection methods to assess human thermal comfort.

From the perspective of human posture, we propose a contactless method to detect the thermal comfort state of the human body. We used OpenPose, a deep learning-based open-source library, to recognize human body postures and determine thermal comfort. Meier [19] built a library of postures related to thermal discomfort by searching public image libraries and observing such postures. The library introduces postures that represent most people’s reactions when they feel too cold or too hot and describes these postures in detail. We selected five postures from the library for our pose estimation algorithm: splayed posture, hair movement, rubbing hands, buttoning up and shivering.

The main contributions of this paper are as follows. Building on Yang [20], five sub-algorithms were constructed for five of the thermal discomfort poses proposed by Meier [19]. Sixteen subjects were invited to provide data and verify the proposed algorithms.

The structure of the paper is as follows: Section 2 explains the theoretical basis and implementation process of the algorithm; Section 3 uses data captured in real time to verify the utility of the proposed algorithm; Sections 4 and 5 discuss the results, give the conclusions of the paper and point out follow-up research directions.

2. Methodology

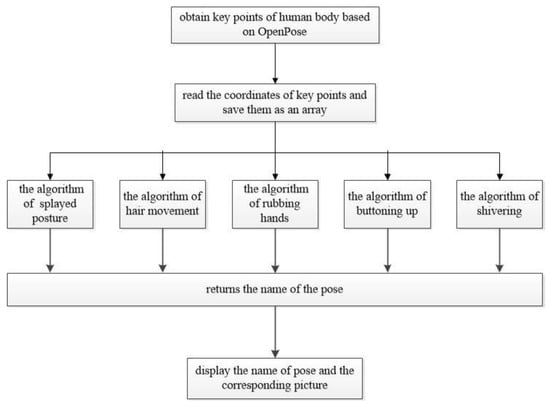

The algorithm proposed in this paper was implemented in Python, using Spyder, an open-source interactive development environment for Python. The overall algorithm includes four parts: perception of human body key points, extraction of key point coordinate information, estimation of poses and display of the poses. The specific algorithm process is shown in Figure 1.

Figure 1. Flow chart of the human pose estimation algorithm.

2.1. Perception of Human Body Key Points

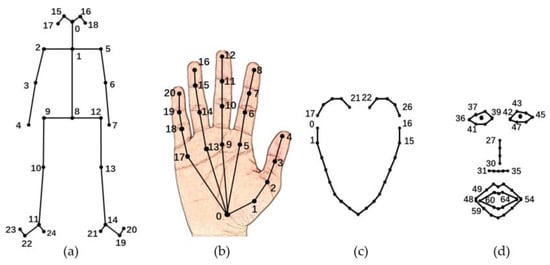

The algorithm in this paper is based on OpenPose [21,22,23], a real-time multi-person key point detection library. It is an open-source library built on Caffe that can detect the key points of the face, hands and body from images, videos or live camera streams [24]. The distribution of key points is shown in Figure 2. There are 25 key points on the body, which form the basic human body skeleton, 42 key points on the hands (21 for each hand) and 70 key points on the face. Using the corresponding commands, frames can be captured from the camera and the key point coordinates of each frame can be saved.

Figure 2. Key points of the human body [21,22,23].

2.2. Extraction of Key Points

Pose estimation relies mainly on the relationships among the body key points. While OpenPose detects the human body key points, the algorithm reads the pictures captured by OpenPose and the files in which the key points are saved. The key point coordinates are read from these files and stored as arrays.
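The reading step can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the key point files are the JSON files that OpenPose can write, in which each detected person carries a flat `pose_keypoints_2d` list of (x, y, confidence) triples. The helper names `parse_keypoints` and `load_body_keypoints` and the sample values are hypothetical.

```python
import json

# A few BODY_25 indices in OpenPose's ordering, enough for the poses in this section.
BODY_25 = {"nose": 0, "neck": 1, "r_shoulder": 2, "r_elbow": 3, "r_wrist": 4,
           "l_shoulder": 5, "l_elbow": 6, "l_wrist": 7, "mid_hip": 8,
           "r_ear": 17, "l_ear": 18}


def parse_keypoints(flat):
    """Group a flat [x0, y0, c0, x1, y1, c1, ...] list into (x, y, c) triples."""
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat) - 2, 3)]


def load_body_keypoints(json_text, person=0):
    """Return the body key points of one detected person, or [] if none was found."""
    people = json.loads(json_text).get("people", [])
    if len(people) <= person:
        return []
    return parse_keypoints(people[person]["pose_keypoints_2d"])


# Made-up frame containing only two key points, to show the call pattern.
sample = '{"people": [{"pose_keypoints_2d": [320.0, 110.5, 0.93, 318.2, 190.0, 0.88]}]}'
body = load_body_keypoints(sample)
print(body[BODY_25["neck"]])
```

Keeping the confidence value alongside the coordinates makes it possible to skip key points that OpenPose could not locate reliably before any distance is computed.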

2.3. Pose Estimation

Each point consists of three values, where the first two values represent its coordinates and the third value represents the confidence. Each pose is primarily judged by the positional relationship between points and the Euclidean distance between points. The Euclidean distance between the two points is calculated by Equation (1).

For the same posture, since the body type of each person may be different, the Euclidean distance between the calculated points is also different, which may lead to incorrect pose estimation. To avoid this problem, the Euclidean distance from the wrist to the elbow of the subject is used as the reference distance through Equation (2).

The subscripts in Equation (2) correspond to the right elbow and right wrist of the human body. Based on Equation (3), the distance between points is converted to the ratio of the distance between points and the reference distance ρ.
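Equations (1)–(3) are not reproduced in this text. Under the definitions given above, a plausible reconstruction is the following, where the point notation $P_i = (x_i, y_i)$, the subscripts $e$ and $w$ (right elbow and right wrist) and the hat notation are assumptions rather than the original symbols:

$$
d(P_i, P_j) = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2} \tag{1}
$$

$$
\rho = \sqrt{(x_e - x_w)^2 + (y_e - y_w)^2} \tag{2}
$$

$$
\hat{d}(P_i, P_j) = \frac{d(P_i, P_j)}{\rho} \tag{3}
$$

The effect of the normalization can be checked with a short sketch. The function names and pixel coordinates below are illustrative only; the same pose is evaluated at two image scales to show that the ratio does not change.

```python
import math


def euclidean(p, q):
    """Equation (1): distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def reference_distance(r_elbow, r_wrist):
    """Equation (2): forearm length rho, from right elbow to right wrist."""
    return euclidean(r_elbow, r_wrist)


def normalized(p, q, rho):
    """Equation (3): distance between p and q expressed in units of rho."""
    if rho <= 0:
        raise ValueError("reference distance must be positive")
    return euclidean(p, q) / rho


# Made-up pixel coordinates: one pose, seen at full and at half image scale.
near = {"r_elbow": (400, 300, 0.9), "r_wrist": (400, 400, 0.9), "mid_hip": (340, 480, 0.9)}
far = {k: (x / 2, y / 2, c) for k, (x, y, c) in near.items()}

for kp in (near, far):
    rho = reference_distance(kp["r_elbow"], kp["r_wrist"])
    print(round(normalized(kp["r_wrist"], kp["mid_hip"], rho), 3))
```

Both iterations print the same ratio, which is the property the reference distance is meant to provide across subjects of different body sizes and camera distances.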

Based on the five thermal discomfort poses defined by Meier [19], we designed the following five algorithms. The array containing the key point coordinates is passed to each algorithm, and when the calculation result meets the conditions of a defined pose, the name of that pose is returned. Table 1 shows the pose estimation algorithm of the splayed posture. The pose is estimated by calculating the distance from the wrist to the hip and comparing the x-coordinate values of the wrist and elbow.

Table 1. Pose estimation algorithm of the splayed posture.
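The contents of Table 1 are not reproduced in this text. The check described above might look like the following sketch, which is not the authors' implementation. It assumes BODY_25 key point indices, a subject facing the camera, and a hypothetical threshold `MAX_WRIST_HIP_RATIO` expressed in units of the reference distance ρ.

```python
import math

# BODY_25 indices (OpenPose ordering)
R_ELBOW, R_WRIST, L_ELBOW, L_WRIST, R_HIP, L_HIP = 3, 4, 6, 7, 9, 12
MAX_WRIST_HIP_RATIO = 0.8  # hypothetical threshold, in units of rho


def dist(p, q):
    """Euclidean distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def is_splayed(kp, max_ratio=MAX_WRIST_HIP_RATIO):
    """Return "splayed posture" if both hands rest on the waist, else None."""
    rho = dist(kp[R_ELBOW], kp[R_WRIST])  # reference distance
    if rho == 0:
        return None
    wrists_on_hips = (dist(kp[R_WRIST], kp[R_HIP]) / rho < max_ratio
                      and dist(kp[L_WRIST], kp[L_HIP]) / rho < max_ratio)
    # Facing the camera, the subject's right side has the smaller x values,
    # so with hands on the waist each elbow lies outside its wrist.
    elbows_out = (kp[R_ELBOW][0] < kp[R_WRIST][0]
                  and kp[L_ELBOW][0] > kp[L_WRIST][0])
    return "splayed posture" if wrists_on_hips and elbows_out else None


# Made-up key points for two situations.
hands_on_waist = {R_ELBOW: (200, 300, 0.9), R_WRIST: (260, 380, 0.9), R_HIP: (290, 400, 0.9),
                  L_ELBOW: (440, 300, 0.9), L_WRIST: (380, 380, 0.9), L_HIP: (350, 400, 0.9)}
arms_hanging = {R_ELBOW: (280, 300, 0.9), R_WRIST: (275, 400, 0.9), R_HIP: (290, 400, 0.9),
                L_ELBOW: (360, 300, 0.9), L_WRIST: (365, 400, 0.9), L_HIP: (350, 400, 0.9)}
print(is_splayed(hands_on_waist), is_splayed(arms_hanging))
```

The second example shows why the x-coordinate comparison is needed: with the arms hanging at the sides the wrists are also close to the hips, and only the elbow position separates the two cases.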

Table 2 shows the pose estimation algorithm of hair movement. This pose is a dynamic process, so a continuous sequence of images is needed as the input of the algorithm. In this paper, the sequence contains 60 images. Consecutive images are compared pairwise in a loop: for each pair, we calculate the distance between the positions of the ear point in the earlier and the later image. To rule out point changes caused by movement of the whole body, we also calculate the distance between the positions of the nose point in the two images. Because the pose is a continuous action, the conditions may not be met by every pair of images. The algorithm therefore counts the pairs whose calculation result meets the conditions and compares the final count with 60. If the result of the comparison exceeds a defined threshold, the name of the pose is returned.

Table 2. Pose estimation algorithm of hair movement.
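The contents of Table 2 are not reproduced in this text, so the exact conditions are unknown. The counting scheme described above can be sketched as follows, under stated assumptions: a pair of consecutive images counts when the ear point moves by more than `ear_min` while the nose point moves by less than `nose_max`, and the pose is reported when the counted fraction exceeds `min_fraction`. All three thresholds are hypothetical, as is the choice of the right ear.

```python
import math

NOSE, R_EAR = 0, 17  # BODY_25 indices (OpenPose ordering)
WINDOW = 60          # number of images, as stated in the text


def dist(p, q):
    """Euclidean distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def detect_hair_movement(frames, rho, ear_min=0.05, nose_max=0.15, min_fraction=0.3):
    """frames: list of dicts mapping key point index -> (x, y, confidence).

    Displacements are divided by the reference distance rho before being
    compared with the (hypothetical) thresholds.
    """
    if len(frames) < 2 or rho <= 0:
        return None
    hits = 0
    for prev, cur in zip(frames, frames[1:]):  # each image against the next one
        ear_shift = dist(prev[R_EAR], cur[R_EAR]) / rho
        nose_shift = dist(prev[NOSE], cur[NOSE]) / rho
        if ear_shift > ear_min and nose_shift < nose_max:
            hits += 1
    return "hair movement" if hits / len(frames) > min_fraction else None


# Made-up sequences: oscillating head, motionless head, whole body drifting sideways.
shaking = [{NOSE: (300 + 10 * (i % 2), 200, 0.9), R_EAR: (270 + 20 * (i % 2), 205, 0.9)}
           for i in range(WINDOW)]
still = [{NOSE: (300, 200, 0.9), R_EAR: (270, 205, 0.9)} for _ in range(WINDOW)]
drifting = [{NOSE: (300 + 20 * i, 200, 0.9), R_EAR: (270 + 20 * i, 205, 0.9)}
            for i in range(WINDOW)]
print(detect_hair_movement(shaking, rho=100.0))
```

The drifting sequence illustrates the role of the nose point: the ear moves in every pair, but so does the nose, so no pair is counted.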

Table 3 shows the pose estimation algorithm of rubbing hands. First, the distance between the left and right wrist points is calculated to ensure that the hands are held together. Second, the distance between the positions of the wrist point in consecutive pictures is calculated, and the posture is judged with the counting method described for the previous algorithm. Finally, the name of the pose is returned.

Table 3. Pose estimation algorithm of rubbing hands.
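The contents of Table 3 are not reproduced in this text. The two steps described above could be combined as in the following sketch, which is an illustration rather than the authors' code. The thresholds `together_max`, `move_min` and `min_fraction` are hypothetical, and only the right wrist is tracked between images.

```python
import math

R_ELBOW, R_WRIST, L_WRIST = 3, 4, 7  # BODY_25 indices (OpenPose ordering)


def dist(p, q):
    """Euclidean distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def detect_rubbing_hands(frames, together_max=0.5, move_min=0.05, min_fraction=0.3):
    """frames: list of dicts mapping key point index -> (x, y, confidence)."""
    if len(frames) < 2:
        return None
    hits = 0
    for prev, cur in zip(frames, frames[1:]):
        rho = dist(cur[R_ELBOW], cur[R_WRIST])  # reference distance for this image
        if rho == 0:
            continue
        # Step 1: the two wrists must be close to each other.
        hands_together = dist(cur[R_WRIST], cur[L_WRIST]) / rho < together_max
        # Step 2: the wrist must have moved since the previous image.
        wrist_moved = dist(prev[R_WRIST], cur[R_WRIST]) / rho > move_min
        if hands_together and wrist_moved:
            hits += 1
    return "rubbing hands" if hits / len(frames) > min_fraction else None


def make_frames(left_wrist_x):
    """Made-up 60-image sequence in which the right wrist oscillates by 8 pixels."""
    return [{R_ELBOW: (250, 300, 0.9),
             R_WRIST: (320 + 8 * (i % 2), 360, 0.9),
             L_WRIST: (left_wrist_x - 8 * (i % 2), 362, 0.9)} for i in range(60)]


rubbing = make_frames(left_wrist_x=340)  # wrists next to each other
apart = make_frames(left_wrist_x=500)    # same motion, hands far apart
print(detect_rubbing_hands(rubbing), detect_rubbing_hands(apart))
```

Checking the wrist distance first keeps an arm that merely swings from being counted as rubbing.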

Table 4 shows the pose estimation algorithm of buttoning up. Similar to the previous pose estimation algorithm of the splayed posture, it is necessary to compare the position of the wrist and shoulder points and calculate the distance between the wrist and neck points to achieve the pose estimation.

Table 4. Pose estimation algorithm of buttoning up.
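The contents of Table 4 are not reproduced in this text. One way to express the two conditions described above is sketched below. It assumes that "comparing the position of the wrist and shoulder points" means the wrist lies horizontally between the two shoulders; that interpretation and the threshold `max_ratio` are assumptions, not the published conditions.

```python
import math

# BODY_25 indices (OpenPose ordering)
NECK, R_SHOULDER, R_ELBOW, R_WRIST, L_SHOULDER, L_WRIST = 1, 2, 3, 4, 5, 7


def dist(p, q):
    """Euclidean distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def is_buttoning_up(kp, max_ratio=0.6):
    """Return "buttoning up" if either hand is held at the neckline, else None."""
    rho = dist(kp[R_ELBOW], kp[R_WRIST])  # reference distance
    if rho == 0:
        return None
    lo, hi = sorted((kp[R_SHOULDER][0], kp[L_SHOULDER][0]))
    for wrist in (R_WRIST, L_WRIST):  # the left or the right hand may be used
        between_shoulders = lo < kp[wrist][0] < hi
        near_neck = dist(kp[wrist], kp[NECK]) / rho < max_ratio
        if between_shoulders and near_neck:
            return "buttoning up"
    return None


# Made-up key points: right hand at the neckline, then both arms at rest.
holding_neckline = {NECK: (320, 200, 0.9), R_SHOULDER: (260, 205, 0.9),
                    L_SHOULDER: (380, 205, 0.9), R_ELBOW: (250, 300, 0.9),
                    R_WRIST: (310, 230, 0.9), L_WRIST: (390, 400, 0.9)}
at_rest = dict(holding_neckline)
at_rest[R_WRIST] = (255, 400, 0.9)
print(is_buttoning_up(holding_neckline), is_buttoning_up(at_rest))
```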

The posture of shivering differs from the first four poses because it uses the key points of the face. There are 70 key points on the face, distributed along the contours of the face, lips, nose and eyes. When a person is shivering, the lips move more than the rest of the face, so lip points are selected to detect this posture. Table 5 shows the estimation algorithm of shivering. The detection mechanism is similar to that of hair movement: the pose is estimated by calculating, for three lip points, the distance between their positions in consecutive pictures.

Table 5. Pose estimation algorithm of shivering.
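The contents of Table 5 are not reproduced in this text, and the three lip points used by the authors are not named. The sketch below therefore picks three points on the lower lip of the 70-point face layout (indices 56, 57 and 58) purely for illustration; that choice and the thresholds `move_min` and `min_fraction` are hypothetical.

```python
import math

LIP_POINTS = (56, 57, 58)  # hypothetical choice of three lower-lip face key points


def dist(p, q):
    """Euclidean distance between two (x, y, confidence) key points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def detect_shivering(face_frames, rho, move_min=0.01, min_fraction=0.3):
    """face_frames: list of dicts mapping face key point index -> (x, y, confidence).

    rho is the wrist-to-elbow reference distance used to normalize displacements.
    """
    if len(face_frames) < 2 or rho <= 0:
        return None
    hits = 0
    for prev, cur in zip(face_frames, face_frames[1:]):
        # Count the pair only if all three lip points moved between the images.
        if all(dist(prev[i], cur[i]) / rho > move_min for i in LIP_POINTS):
            hits += 1
    return "shivering" if hits / len(face_frames) > min_fraction else None


def make_face_frames(jitter):
    """Made-up 60-image sequence in which the lip points move vertically by `jitter` pixels."""
    return [{56: (310, 260 + jitter * (i % 2), 0.9),
             57: (320, 262 + jitter * (i % 2), 0.9),
             58: (330, 260 + jitter * (i % 2), 0.9)} for i in range(60)]


chattering = make_face_frames(jitter=3)
calm = make_face_frames(jitter=0)
print(detect_shivering(chattering, rho=100.0), detect_shivering(calm, rho=100.0))
```

Because lip displacements are small compared with the forearm length, the movement threshold here is much lower than the ones sketched for the body poses.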

2.4. Display of the Poses

When the pose estimation is completed, the pose name and the corresponding picture are displayed on the screen through a window.

3. Results

The proposed algorithm was used to process real-time video captured by an ordinary camera. Sixteen subjects, all of them students, were invited to take part in the experiments, which were conducted in a temperature-controlled laboratory. Each subject performed the poses in front of the camera, and the algorithm recognized and displayed them. Table 6 gives the basic information of the subjects, such as gender, age, height and weight.

Table 6. Subject information.

Our algorithm detects five poses. The names of the five postures and the cold or hot states they represent are given in Table 7.

Table 7. Information on five postures.
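The contents of Table 7 are not reproduced in this text. The pairing of postures with hot or cold states can be recovered from the descriptions of the individual postures in this section and written as a small lookup; the name `thermal_state` and the `"unknown"` fallback are illustrative choices.

```python
# Posture name -> thermal state, following the posture descriptions in this section.
POSE_TO_STATE = {
    "splayed posture": "hot",
    "hair movement": "hot",
    "rubbing hands": "cold",
    "buttoning up": "cold",
    "shivering": "cold",
}


def thermal_state(pose_name):
    """Return "hot" or "cold" for a recognized pose name, "unknown" otherwise."""
    return POSE_TO_STATE.get(pose_name, "unknown")


print(thermal_state("rubbing hands"))
```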

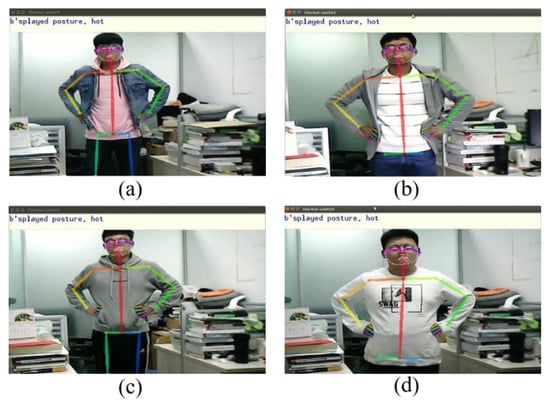

The detection results of the algorithm are shown below. Five figures show the detection results of the five poses. Each figure contains four panels showing the test results of four subjects. Each panel contains two boxes: the text box at the top shows the name of the current pose, and the pose picture detected by the algorithm is shown below it. The height of the subjects decreases in order across the four panels.

When people feel hot, they try to stretch their limbs to dissipate heat. For example, people often place their hands on their waists to keep their bodies and arms as far apart as possible. Therefore, we asked the subjects to place their hands on the waist so that our algorithm could detect this splayed posture. Figure 3 shows the splayed posture recognized by the algorithm.

Figure 3. Recognition results of splayed posture. Panels (a)–(d) show the same posture for different subjects, whose heights decrease in order.

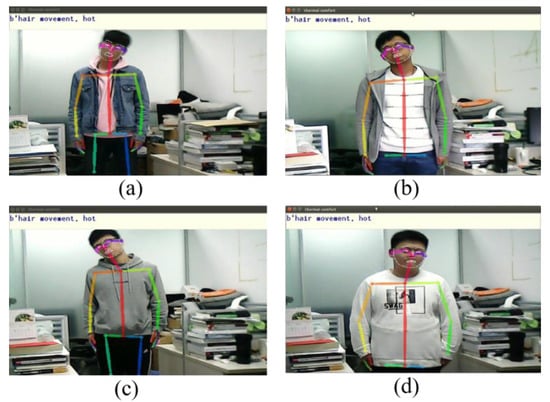

People with long hair are more sensitive to the weather. When they feel hot, they may shake their hair to prevent it from clinging to the skin. In the experiment, we used shaking of the head in place of movement of the hair because the two often occur together. Figure 4 shows the posture of hair movement identified by the algorithm. The subject shakes his head, and the algorithm completes the pose estimation.

Figure 4. Recognition results of hair movement. Panels (a)–(d) show the results of different subjects for the same posture; their heights are in descending order.

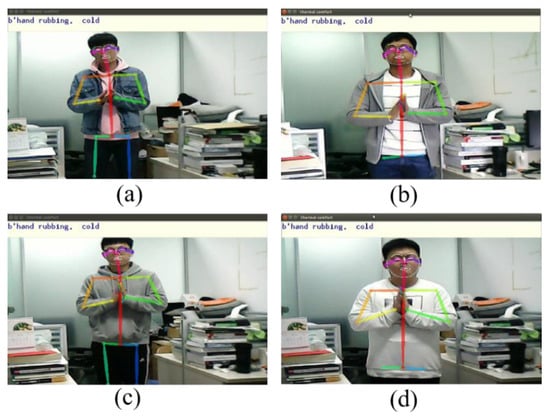

When the weather is cold, people often rub their hands rapidly to generate frictional heat that warms the body. Figure 5 shows the posture of rubbing hands identified by the algorithm. The subject rubs his hands together, and the algorithm estimates the pose based on the subject’s continuous action.

Figure 5. Recognition results of rubbing hands. Panels (a)–(d) show the results of different subjects for this posture; their body sizes differ and their heights decrease in order.

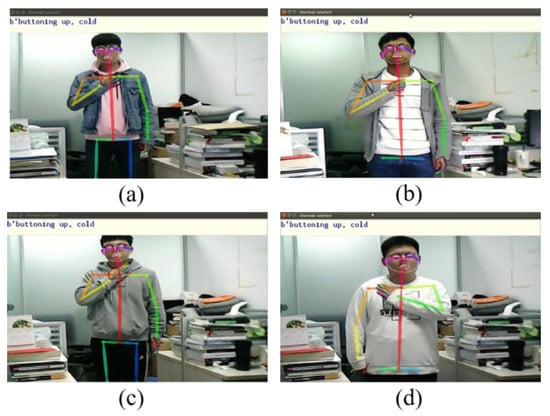

In cold weather, people will fasten the top button of the clothes or press the neckline of the clothes to reduce the air circulation between the clothes and the chest. Figure 6 shows the posture of buttoning up recognized by the algorithm. The subject uses the left or right hand to hold the neckline of the clothes, and the algorithm estimates the current posture.

Figure 6. Recognition results of buttoning up. Panels (a)–(d) show the recognition results of different subjects, who have different heights, for the same posture.

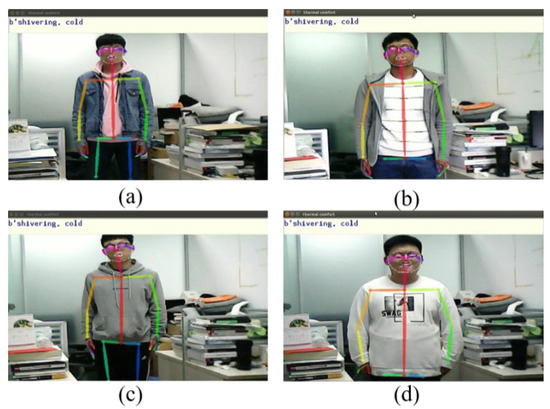

People tend to shiver involuntarily in cold weather as a physiological reaction. The most visible sign is the chattering of the teeth, so we tried to recognize this pose. Figure 7 shows the posture of shivering identified by the algorithm. The subject chatters his teeth quickly, and the algorithm completes the pose detection.

Figure 7. Recognition results of shivering. Panels (a)–(d) show the results of four subjects with different heights for the same posture.

4. Discussion

The purpose of this study is to detect human posture in a contactless way, so as to determine the thermal comfort state of the human body without disturbing occupants. The recognition results are intended to be fed back to the air-conditioning system to realize automatic adjustment of the indoor environment.

Each person’s body size is different, so using the raw distance between points as the basis for judgment may lead to detection errors. In the algorithm, we therefore use the distance from the wrist to the elbow of the subject as the reference distance and rely on proportional relationships to detect the posture. We also selected subjects of different heights for testing. The results show that the algorithm can correctly detect the same pose when it is performed by people of different body sizes.

However, the proposed algorithm also has certain limitations. Except for shivering, the poses considered in this paper are macro poses. In daily life, some micro poses produced by the body in hot and cold states are also of great research value. In addition, because we focused on the influence of body size on the algorithm, most of the selected subjects were males of different body sizes.

5. Conclusions

The pose estimation algorithm proposed in this paper extracts the coordinates of the key points of the body with OpenPose and then uses the relationships between the points to represent the features of each pose. The validation experiments show that the algorithm can detect these postures.

In follow-up studies, while improving the performance of the current algorithm, we will also study micro postures of the body. Combining these two types of poses can better achieve thermal comfort detection. We will also conduct more experiments on different groups of people.

Author Contributions

Conceptualization, X.C., J.Q.; methodology, X.C., B.Y.; software, J.Q., Z.L.; validation, X.C., J.R.; formal analysis, X.C.; investigation, X.C.; resources, X.C.; data curation, X.C.; writing (original draft preparation), X.C., J.Q.; writing (review and editing), B.Y., Z.L.; visualization, X.C.; supervision, T.O., H.L.; project administration, X.C.; funding acquisition, X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the International Postdoctoral Fellowship Program from China Postdoctoral Council, grant number 20160022; the National Natural Science Foundation of China, grant number 61401236; the Jiangsu Postdoctoral Science Foundation, grant number 1601039B; the Key Research Project of Jiangsu Science and Technology Department, grant number BE2016001-3.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- U.S. Energy Information Administration. International Energy Outlook 2017. Available online: https://www.eia.gov/outlooks/archive/ieo17/pdf/0484(2017).pdf (accessed on 11 August 2019).

- Pérez-Lombard, L.; Ortiz, J.; Pout, C. A review on buildings energy consumption information. Energy Build. 2008, 40, 394–398.

- ASHRAE. ASHRAE Standard 55: 2017, Thermal Environmental Conditions for Human Occupancy; American Society of Heating, Refrigerating and Air-Conditioning Engineers: Atlanta, GA, USA, 2017.

- ASHRAE. Standard 62.1: 2016, Ventilation for Acceptable Indoor Air Quality; American Society of Heating, Refrigerating and Air-Conditioning Engineers: Atlanta, GA, USA, 2016.

- Ghahramani, A.; Zhang, K.; Dutta, K.; Yang, Z.; Becerik-Gerber, B. Energy savings from temperature setpoints and deadband: Quantifying the influence of building and system properties on savings. Appl. Energy 2016, 165, 930–942.

- Ghahramani, A.; Dutta, K.; Yang, Z.; Ozcelik, G.; Becerik-Gerber, B. Quantifying the influence of temperature setpoints, building and system features on energy consumption. In Proceedings of the 2015 Winter Simulation Conference (WSC), Huntington Beach, CA, USA, 6–9 December 2015; pp. 1000–1011.

- ISO. ISO Standard 7730. Ergonomics of the Thermal Environment - Analytical Determination and Interpretation of Thermal Comfort Using Calculation of the PMV and PPD Indices and Local Thermal Comfort Criteria; International Organization for Standardization: Geneva, Switzerland, 2005.

- Sim, S.Y.; Koh, M.J.; Joo, K.M.; Noh, S.; Park, S.; Kim, Y.H.; Park, K.S. Estimation of thermal sensation based on wrist skin temperatures. Sensors 2016, 16, 420.

- Dai, C.; Zhang, H.; Arens, E.; Lian, Z. Machine learning approaches to predict thermal demands using skin temperatures: Steady-state conditions. Build. Environ. 2017, 114, 1–10.

- Ghahramani, A.; Castro, G.; Becerik-Gerber, B.; Yu, X. Infrared thermography of human face for monitoring thermoregulation performance and estimating personal thermal comfort. Build. Environ. 2016, 109, 1–11.

- Nkurikiyeyezu, K.N.; Suzuki, Y.; Tobe, Y.; Lopez, G.F.; Itao, K. Heart rate variability as an indicator of thermal comfort state. In Proceedings of the 2017 56th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Kanazawa, Japan, 19–22 September 2017; pp. 1510–1512.

- Barrios, L.; Kleiminger, W. The Comfstat-automatically sensing thermal comfort for smart thermostats. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications (PerCom), Kona, HI, USA, 13–17 March 2017; pp. 257–266.

- Kawakami, K.; Ogawa, T.; Haseyama, M. Blood circulation based on PPG signals for thermal comfort evaluation. In Proceedings of the 2018 IEEE 7th Global Conference on Consumer Electronics (GCCE), Nara, Japan, 9–12 October 2018; pp. 194–195.

- Chaudhuri, T.; Zhai, D.; Soh, Y.C.; Li, H.; Xie, L.; Ou, X. Convolutional neural network and kernel methods for occupant thermal state detection using wearable technology. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio, Brazil, 8–13 July 2018; pp. 1–8.

- Salamone, F.; Belussi, L.; Currò, C.; Danza, L.; Ghellere, M.; Guazzi, G.; Lenzi, B.; Megale, V.; Meroni, I. Integrated method for personal thermal comfort assessment and optimization through users’ feedback, IoT and machine learning: A case study. Sensors 2018, 18, 1602.

- Cheng, X.; Yang, B.; Olofsson, T.; Liu, G.; Li, H. A pilot study of online non-invasive measuring technology based on video magnification to determine skin temperature. Build. Environ. 2017, 121, 1–10.

- Cheng, X.; Yang, B.; Hedman, A.; Olofsson, T.; Li, H.; Van Gool, L. NIDL: A pilot study of contactless measurement of skin temperature for intelligent building. Energy Build. 2019, 198, 340–352.

- Cheng, X.; Yang, B.; Tan, K.; Isaksson, E.; Li, L.; Hedman, A.; Olofsson, T.; Li, H. A contactless measuring method of skin temperature based on the skin sensitivity index and deep learning. Appl. Sci. 2019, 9, 1375.

- Meier, A.; Cheng, X.; Dyer, W.; Chris, G.; Olofsson, T.; Yang, B. Non-invasive assessments of thermal discomfort in real time. In Proceedings of the 1st International Conference on Comfort at the Extremes: Energy, Economy and Climate, Heriot Watt University, Dubai, United Arab Emirates, 10–11 April 2019; pp. 692–707.

- Yang, B.; Cheng, X.; Dai, D.; Olofsson, T.; Li, H.; Meier, A. Macro pose based non-invasive thermal comfort perception for energy efficiency. arXiv 2018, arXiv:1811.07690.

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

- Simon, T.; Joo, H.; Matthews, I.; Sheikh, Y. Hand keypoint detection in single images using multiview bootstrapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1145–1153.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Qiao, S.; Wang, Y.; Li, J. Real-time human gesture grading based on OpenPose. In Proceedings of the 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 14–16 October 2017; pp. 1–6.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).