Artificial Intelligence Based Ensemble Modeling for Multi-Station Prediction of Precipitation

Abstract

1. Introduction

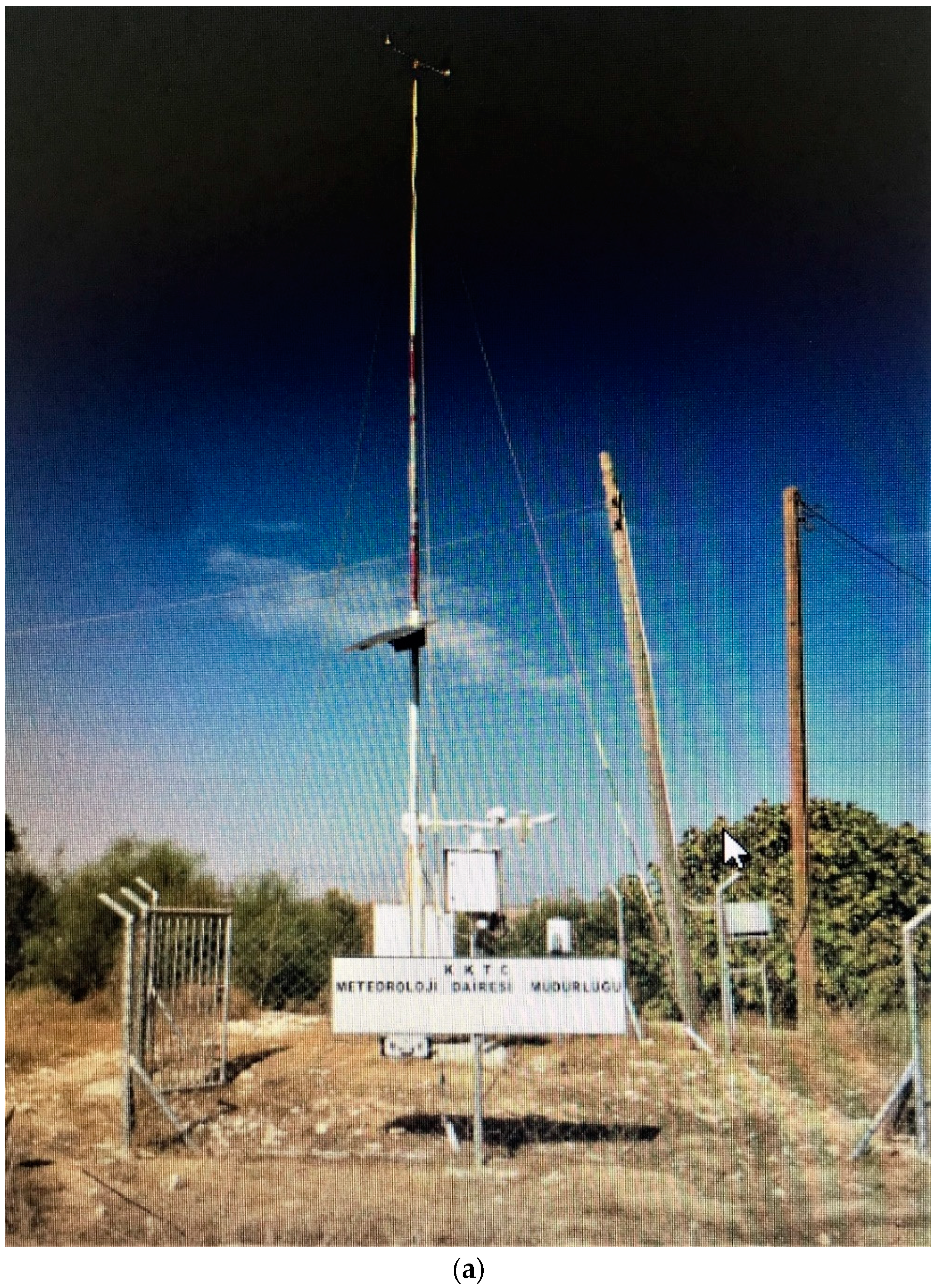

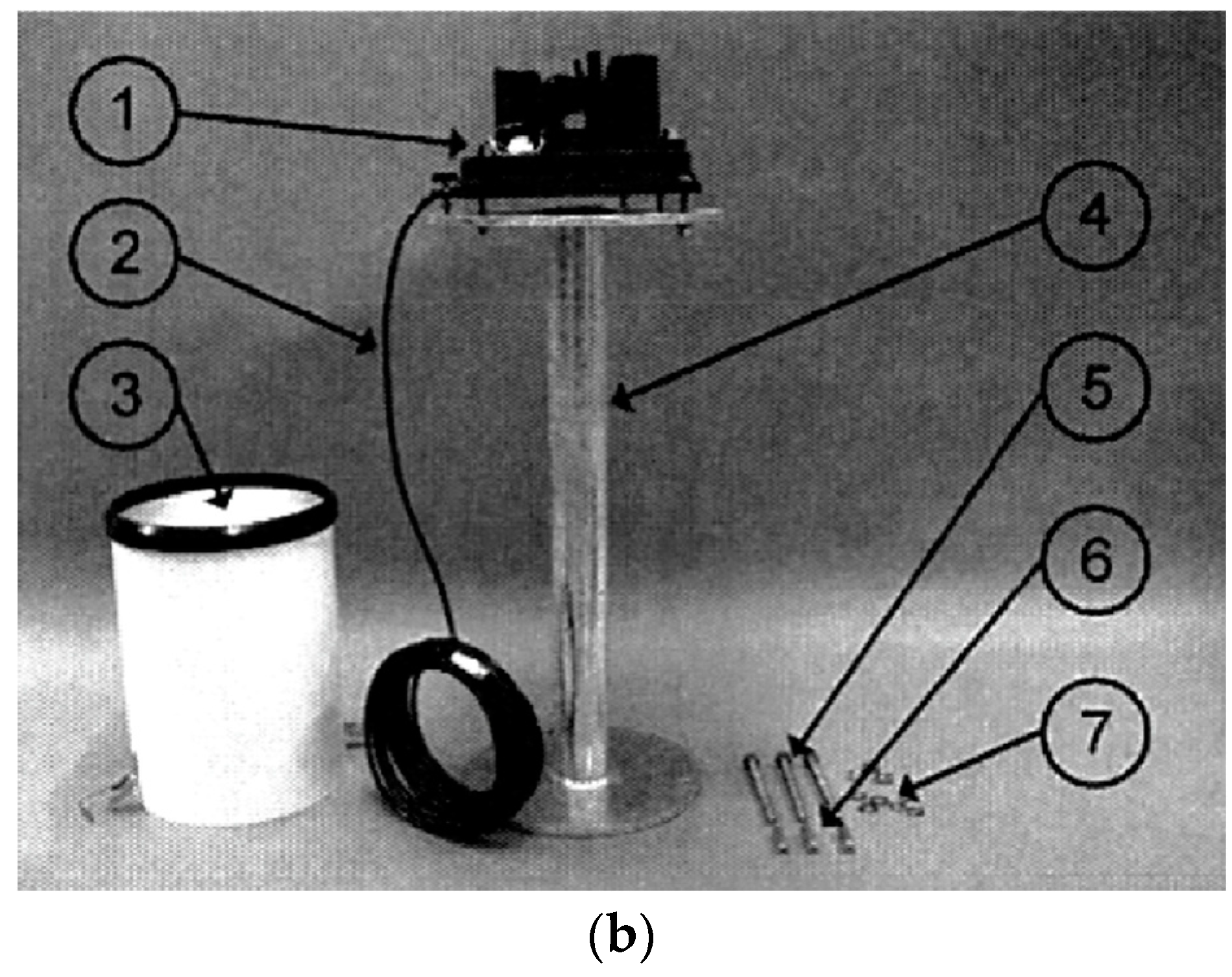

2. Experiments

2.1. Used Data and Efficiency Criteria

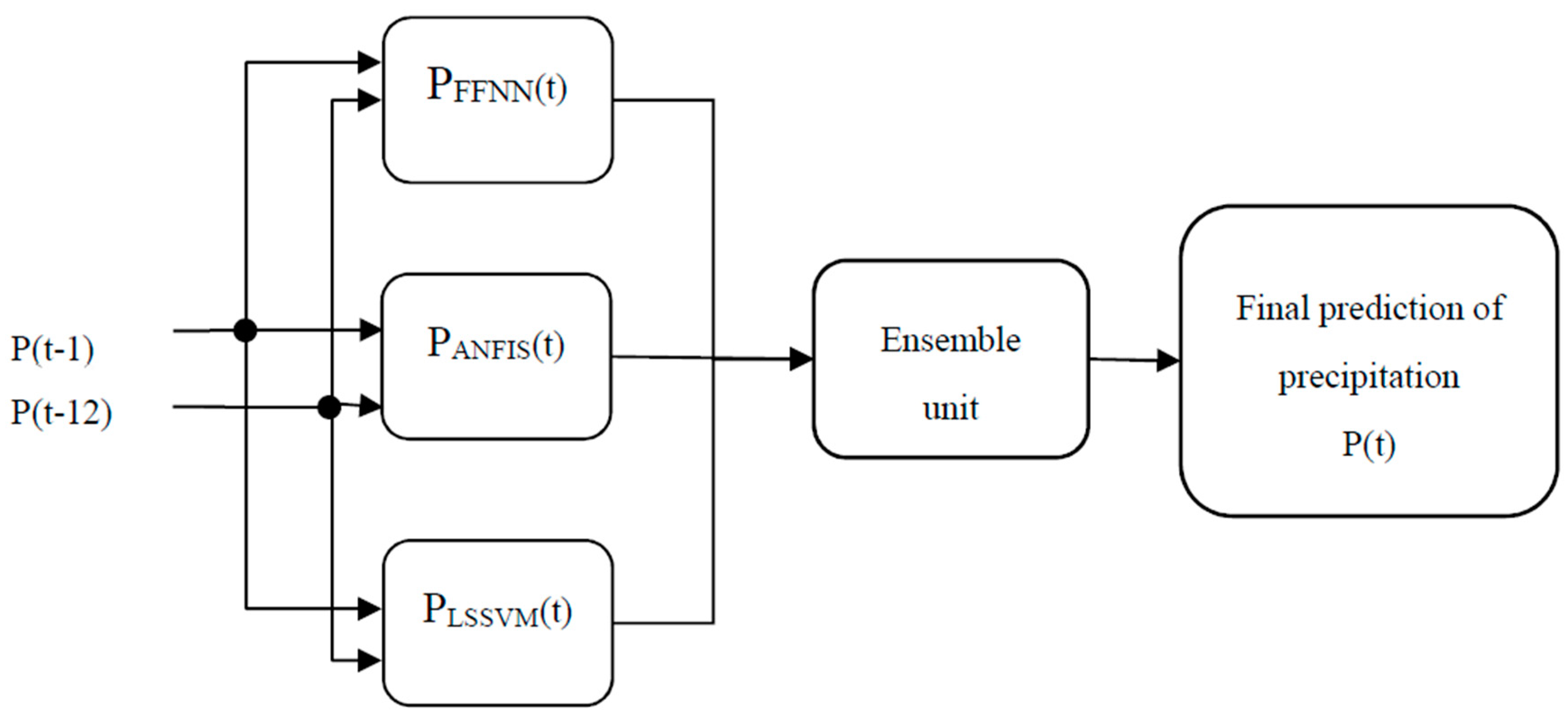

2.2. Proposed Methodology

2.2.1. First Scenario

2.2.2. Second Scenario

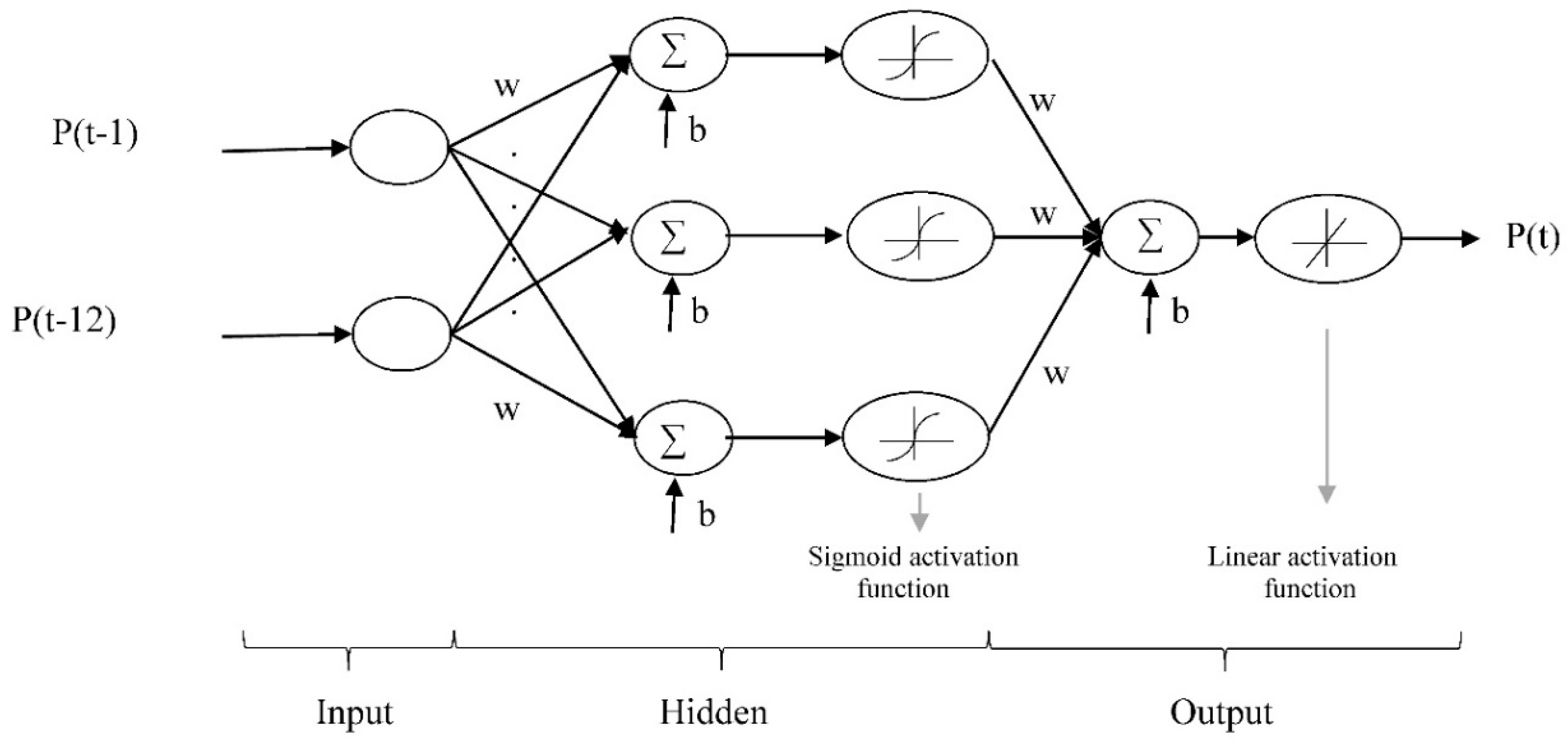

2.3. Feed Forward Neural Network (FFNN) Concept

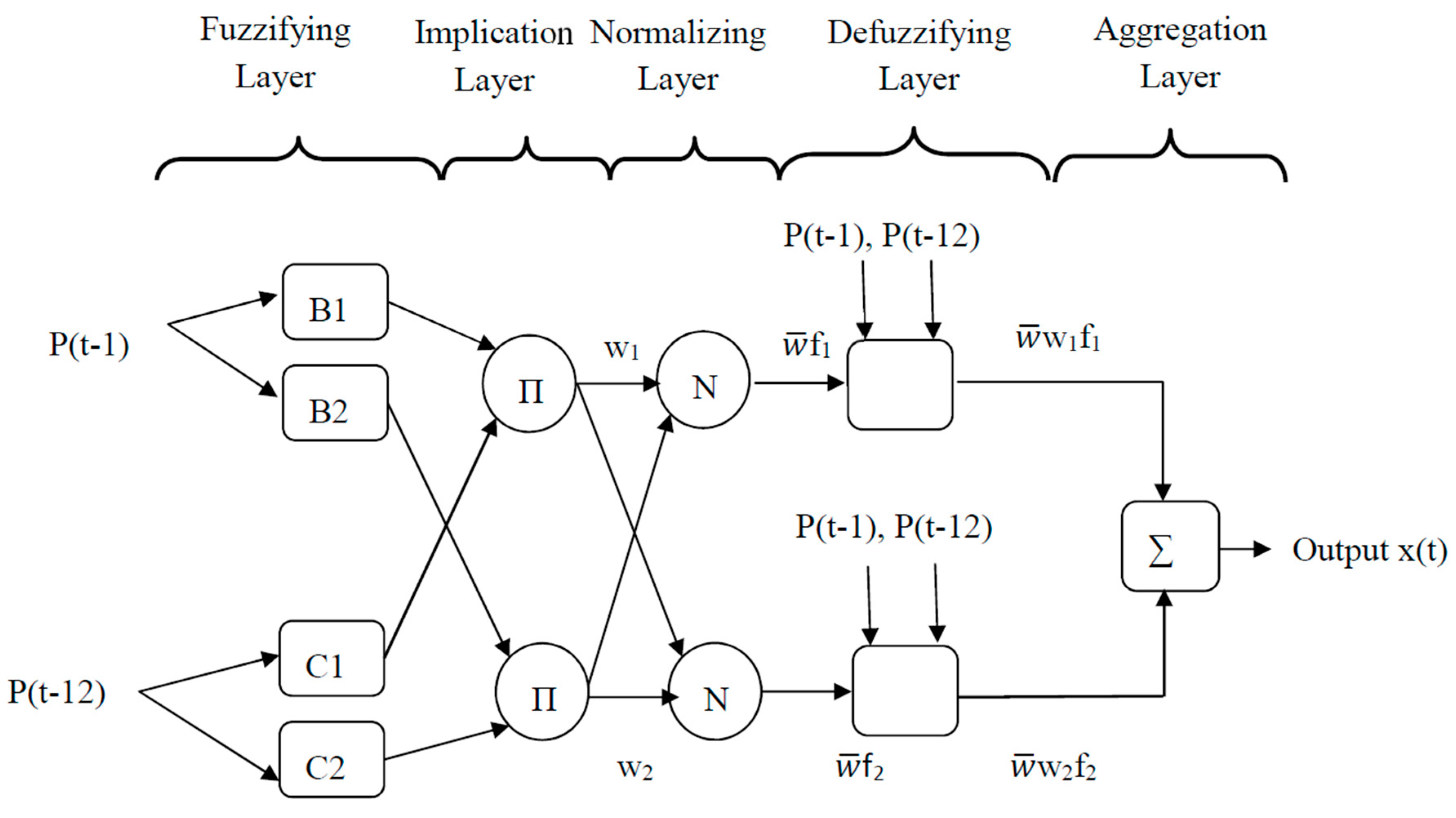

2.4. Adaptive Neural Fuzzy Inference System (ANFIS) Concept

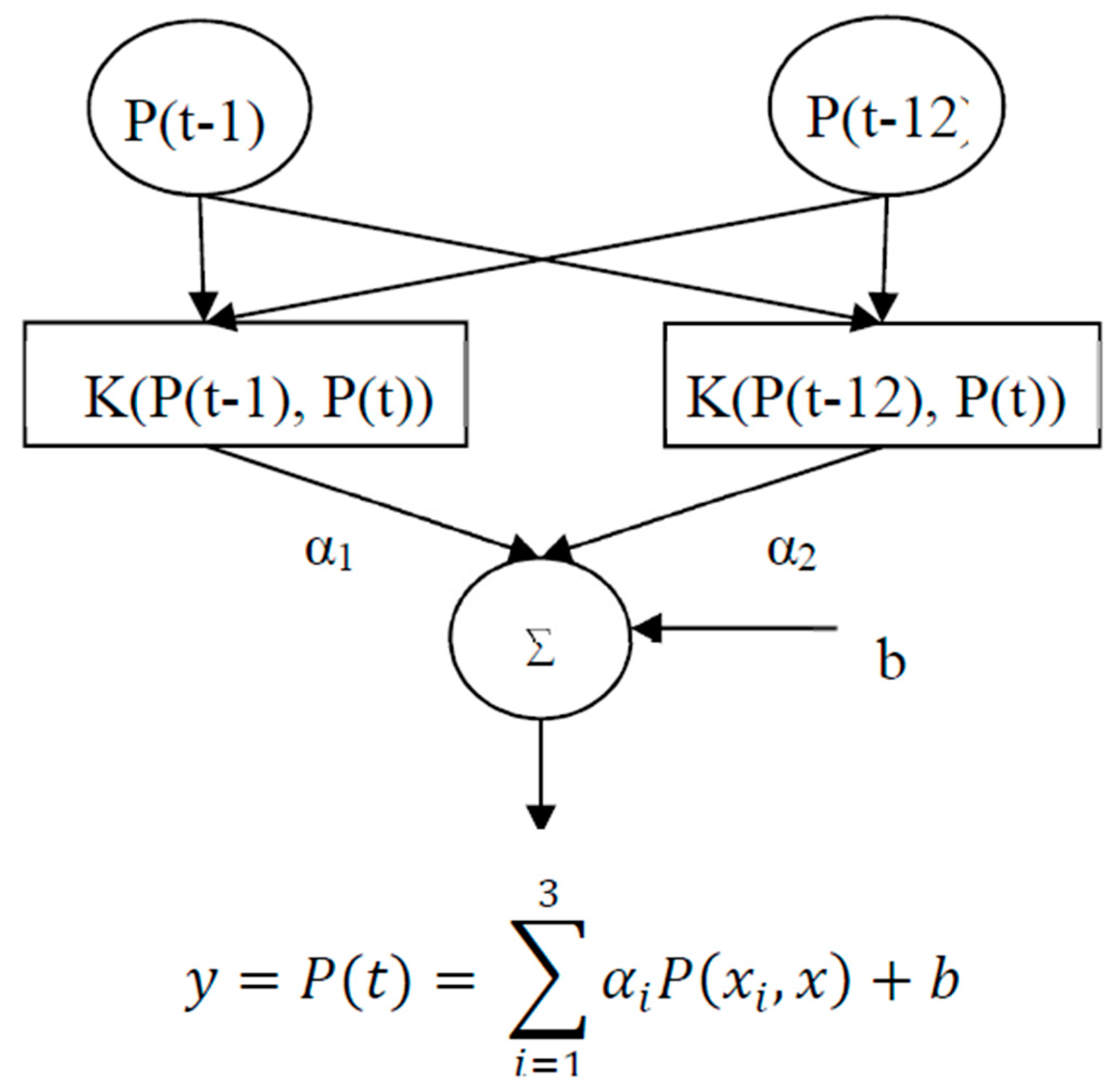

2.5. Least Square Support Vector Machine (LSSVM) Concept

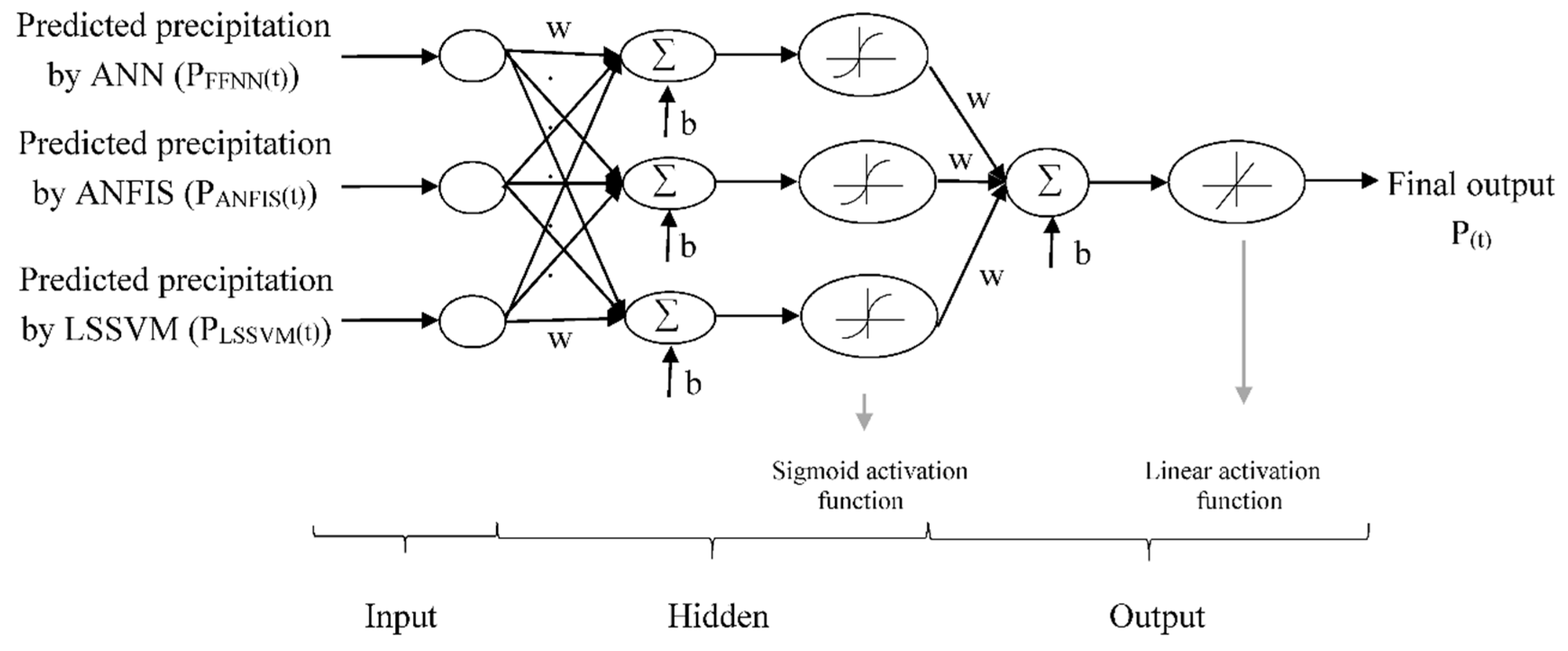

2.6. Ensemble Unit

3. Results and Discussion

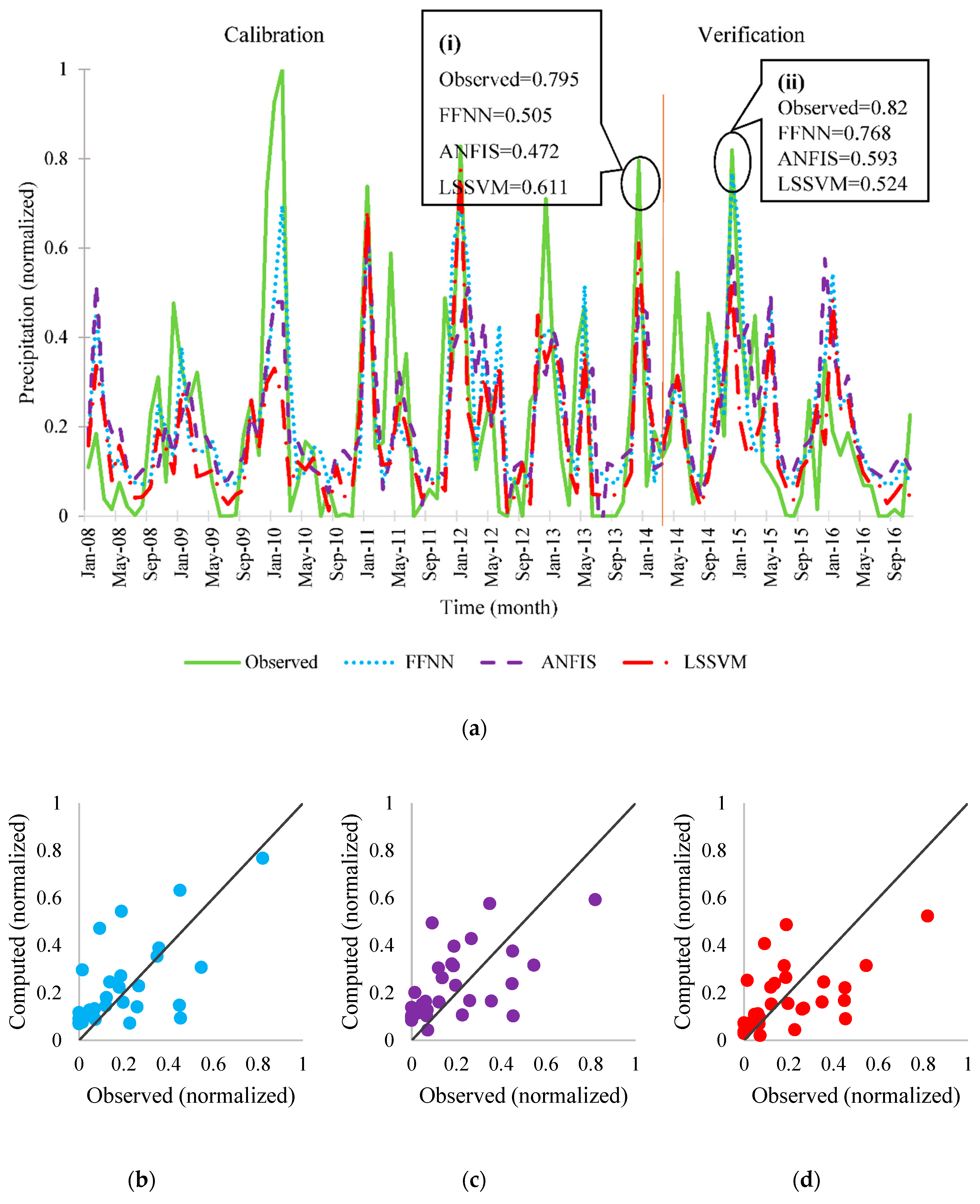

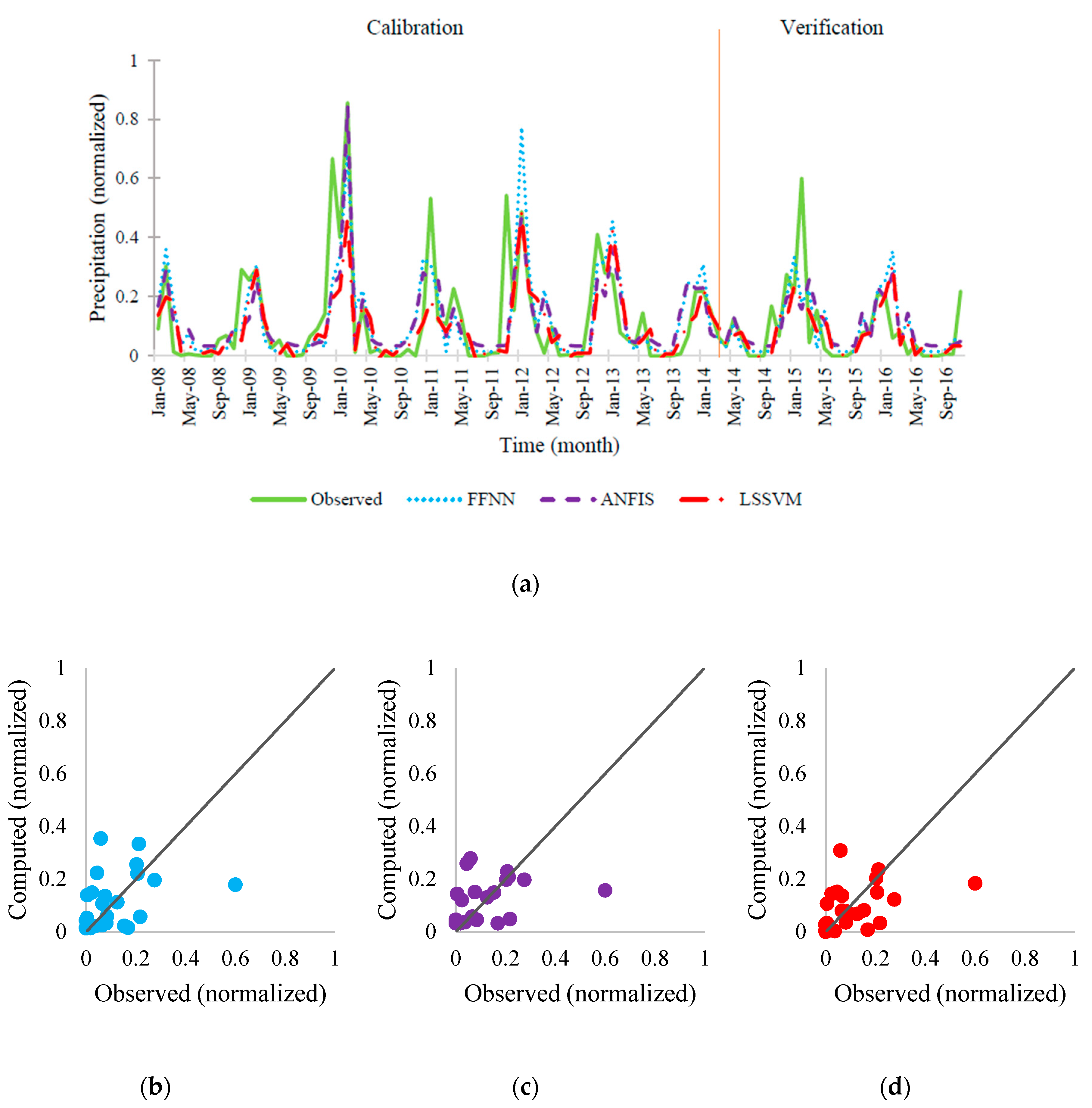

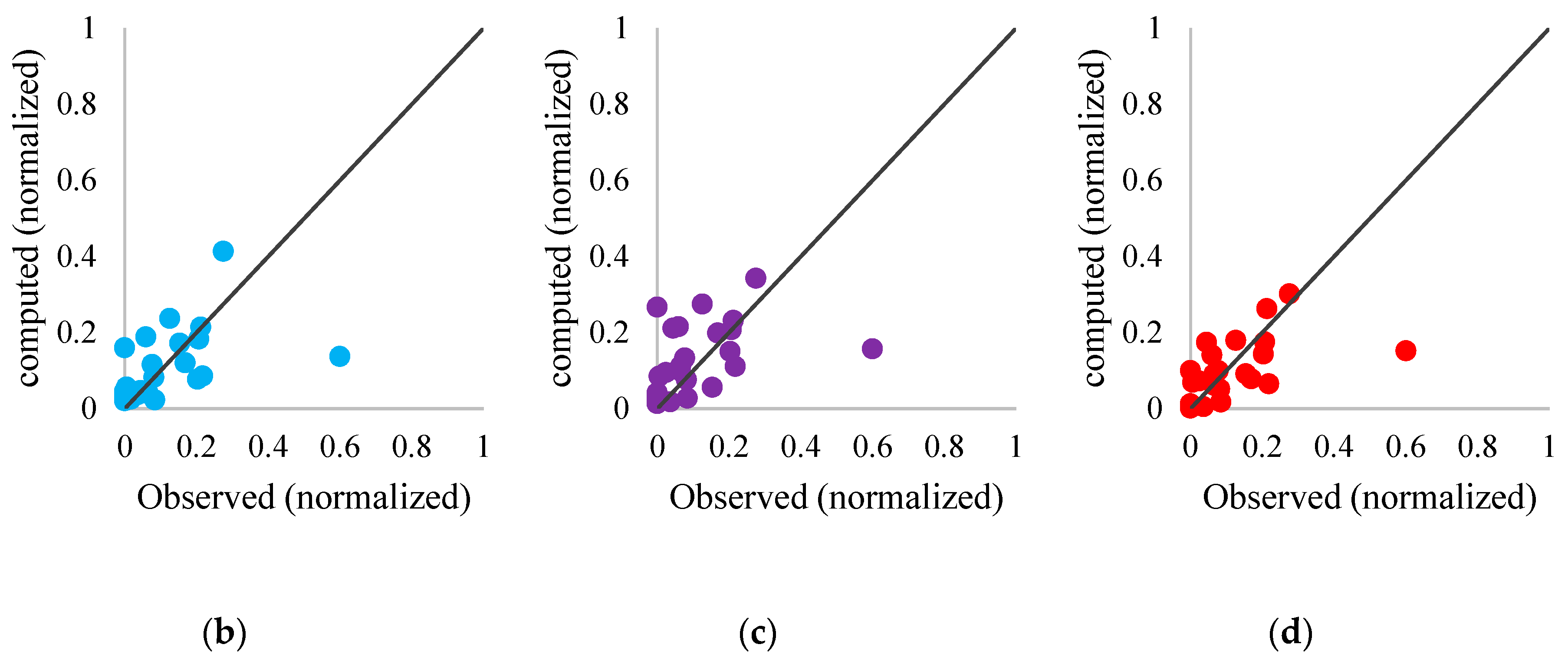

3.1. Results of Single AI Models

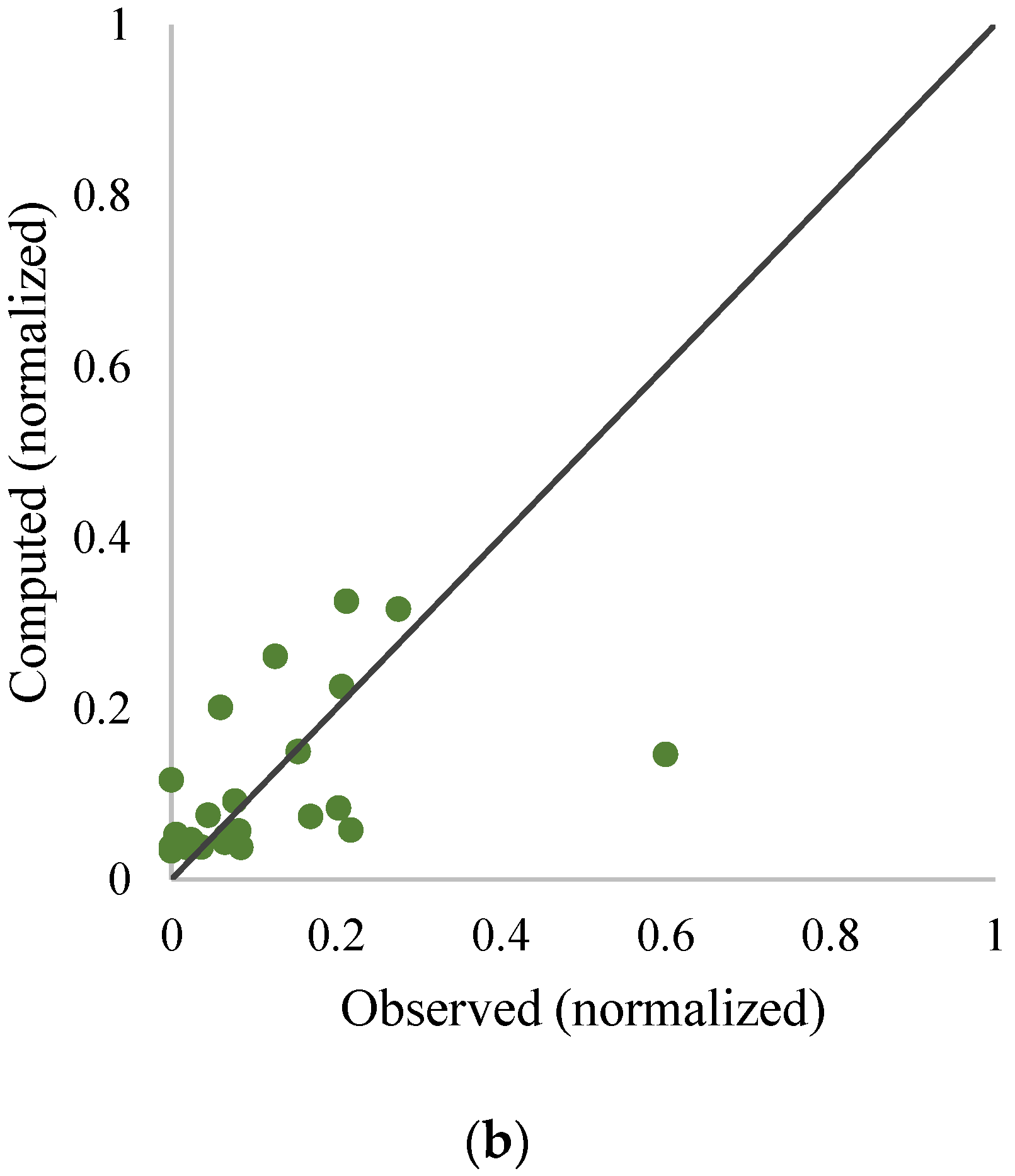

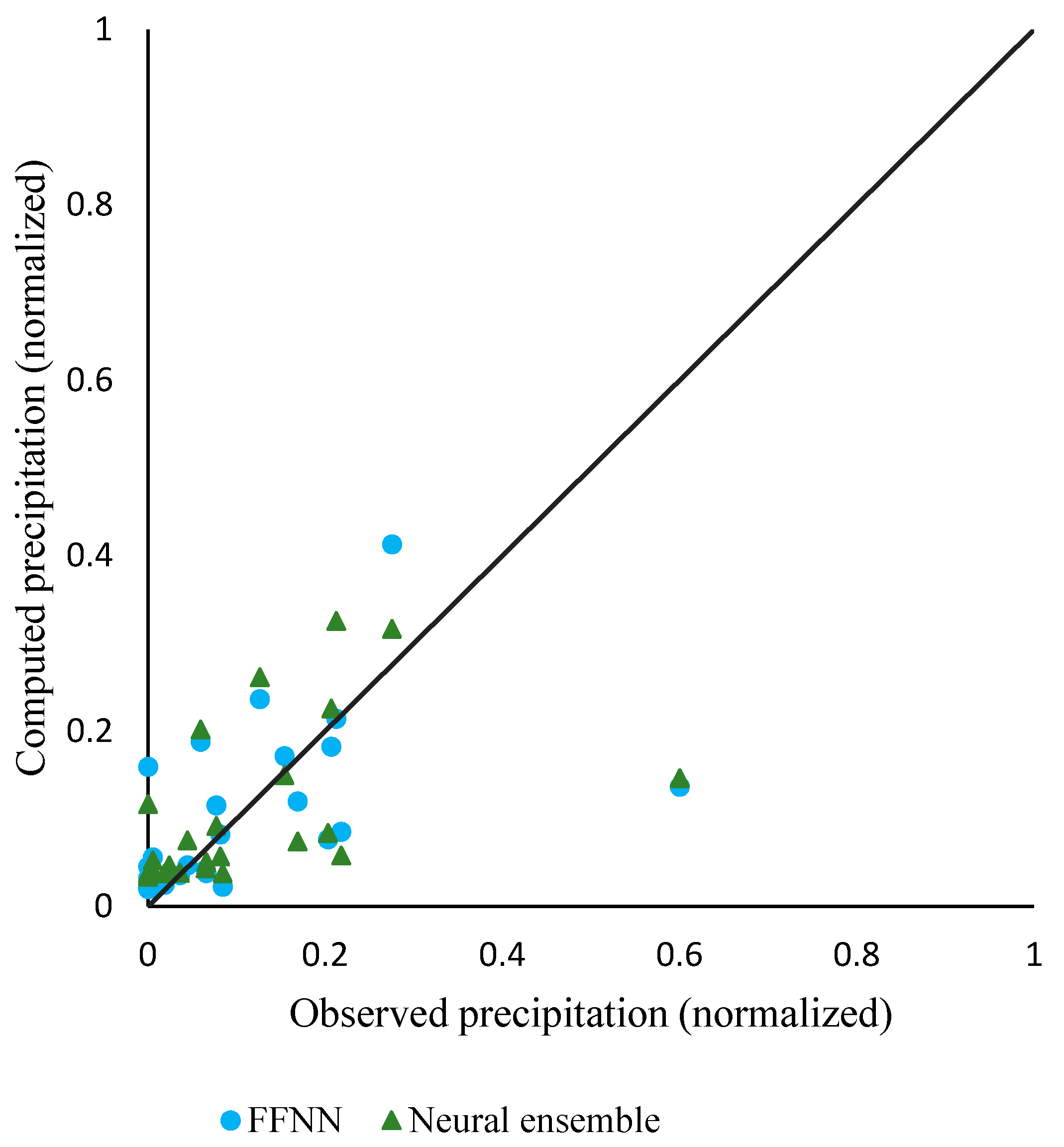

3.2. Results of Ensemble Modeling

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| A | measure of accuracy |
| B | membership functions parameter |
| b | bias |
| C | membership functions parameter |
| ei | slack variable |
| H(X) | entropy of X |
| H(X,Y) | joint entropy of X and Y |
| N | number of single models |
| n | number of data points |
| p | outlet function variable |
| Pobs | monthly observed precipitation (mm/month) |
| Pcom | monthly calculated precipitation (mm/month) |
| P(max)t | max value of monthly observed precipitation (mm/month) |
| P(min)t | min value of monthly observed precipitation (mm/month) |
| Pnorm | normalized value of monthly observed precipitation |
| Pi(t) | precipitation of station i at time t (mm/month) |
| P(t-α) | previous monthly precipitation value, α months earlier (mm/month) |
| PErcan(t) | monthly precipitation of Ercan station at time t (mm/month) |
| P(t) | monthly precipitation data (mm/month) |
| q | outlet function variable |
| r | outlet function variable |
| t | time (month) |
| w | weight |
| α | Lagrange multiplier |
| γ | margin parameter |
| λ | kernel parameter |
| ϕ | kernel function |
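
The symbols Pobs, Pcom, Pnorm, P(max)t and P(min)t suggest min-max scaling of the precipitation series and goodness-of-fit measures computed from observed and calculated values. The following is a minimal sketch under that assumption: it takes DC in the common Nash-Sutcliffe form and RMSE as the root of the mean squared error. The function names and the sample series are illustrative and do not come from the article.

```python
import math

def normalize(values, p_min, p_max):
    """Min-max scale values to [0, 1] using the observed minimum and maximum (assumed form)."""
    return [(v - p_min) / (p_max - p_min) for v in values]

def determination_coefficient(p_obs, p_com):
    """DC = 1 - sum((Pobs - Pcom)^2) / sum((Pobs - mean(Pobs))^2) (assumed Nash-Sutcliffe form)."""
    mean_obs = sum(p_obs) / len(p_obs)
    sse = sum((o - c) ** 2 for o, c in zip(p_obs, p_com))
    sst = sum((o - mean_obs) ** 2 for o in p_obs)
    return 1.0 - sse / sst

def rmse(p_obs, p_com):
    """Root mean square error over n data points."""
    sse = sum((o - c) ** 2 for o, c in zip(p_obs, p_com))
    return math.sqrt(sse / len(p_obs))

# Hypothetical monthly totals (mm/month), for illustration only.
observed = [0.0, 12.5, 40.0, 80.0, 25.0]
computed = [5.0, 10.0, 35.0, 70.0, 30.0]

# Scale both series with the bounds of the observed series.
p_min, p_max = min(observed), max(observed)
obs_norm = normalize(observed, p_min, p_max)
com_norm = normalize(computed, p_min, p_max)

print("DC:", determination_coefficient(obs_norm, com_norm))
print("RMSE (normalized):", rmse(obs_norm, com_norm))
```

Because both series are scaled with the same bounds, DC is unchanged by the normalization, while RMSE is expressed in normalized units.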


| Property | Description/Value |
|---|---|
| Sensor/Transducer type | Tipping bucket/Reed switch |
| Precipitation type | Liquid |
| Accuracy | ±2% |
| Sensitivity | 0.2 mm |
| Closure time | <100 ms (for 0.2 mm of rain) |
| Capacity | Unlimited |
| Funnel diameter | 225 mm |
| Standard | 400 cm² |
| With expander unit | 1000 cm² |
| Max. current rating | 500 mA |
| Breakdown voltage | 400 VDC |
| Capacity open contacts | 0.2 pF |
| Life (operations) | 10⁸ closures |
| Material | Non-corrosive aluminum alloy LM25 |
| Dimensions | 390 (h) × 300 (Ø) mm |
| Weight | 2.5 kg |
| Temperature range (operating) | 0 to +85 °C |

| Station | Altitude (m) | Longitude | Latitude | Max Precipitation (mm/month) | Mean Precipitation (mm/month) | Std. Deviation of Precipitation (mm/month) |
|---|---|---|---|---|---|---|
| Ercan | 123 | 33°29′59.99″ E | 35°09′21.00″ N | 2130.0 | 25.2 | 29.1 |
| Gazimağusa | 1.8 | 33°56′20.18″ E | 35°7′13.94″ N | 3141.0 | 27.9 | 38.1 |
| Geçitkale | 44 | 33°23′15″ E | 34°49′30″ N | 2100.0 | 27 | 33.6 |
| Girne | 0 | 33°19′2.24″ E | 35°20′10.82″ N | 4260.0 | 38.4 | 58.5 |
| Güzelyurt | 65 | 32°59′36.17″ E | 35°11′55.28″ N | 3021.0 | 23.7 | 30 |
| Lefkoşa | 220 | 33°21′51.12″ E | 35°10′31.12″ N | 1986.0 | 22.8 | 27.6 |
| Yeni Erenköy | 22 | 34°11′30″ E | 35°31′60″ N | 2280.0 | 33.3 | 43.8 |

| Station | Ercan | Gazimağusa | Geçitkale | Girne | Güzelyurt | Lefkoşa | Yeni Erenköy |
|---|---|---|---|---|---|---|---|
| Ercan | 1.468 | - | - | - | - | - | - |
| Gazimağusa | 0.993 | 1.269 | - | - | - | - | - |
| Geçitkale | 1.038 | 0.939 | 1.294 | - | - | - | - |
| Girne | 1.085 | 0.893 | 0.868 | 1.204 | - | - | - |
| Güzelyurt | 0.958 | 0.964 | 0.908 | 0.911 | 1.281 | - | - |
| Lefkoşa | 1.074 | 0.971 | 0.974 | 0.949 | 0.983 | 1.3265 | - |
| Yeni Erenköy | 0.992 | 0.941 | 0.925 | 0.876 | 0.947 | 0.9673 | 1.278 |
| Mean MI | 1.02 | 0.95 | 0.942 | 0.931 | 0.945 | 0.986 | 0.941 |
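
The mutual information (MI) matrix above can be related to the entropies H(X) and H(X,Y) listed in the Nomenclature. The sketch below assumes the standard identity MI(X,Y) = H(X) + H(Y) − H(X,Y) applied to discretized series; the binning and logarithm base used in the article are not stated here, so the estimator is illustrative only. The second part reproduces the "Mean MI" entry for Ercan as the average of its six off-diagonal values from the table.

```python
import math
from collections import Counter

def entropy(samples):
    """Shannon entropy (natural log) of a sequence of discrete symbols."""
    n = len(samples)
    return -sum((c / n) * math.log(c / n) for c in Counter(samples).values())

def mutual_information(x, y):
    """MI(X, Y) = H(X) + H(Y) - H(X, Y) for paired discrete samples."""
    return entropy(x) + entropy(y) - entropy(list(zip(x, y)))

# Off-diagonal MI values between Ercan and the six other stations (from the table).
ercan_pairs = {
    "Gazimağusa": 0.993,
    "Geçitkale": 1.038,
    "Girne": 1.085,
    "Güzelyurt": 0.958,
    "Lefkoşa": 1.074,
    "Yeni Erenköy": 0.992,
}
mean_mi_ercan = sum(ercan_pairs.values()) / len(ercan_pairs)
print(round(mean_mi_ercan, 2))  # 1.02, matching the "Mean MI" row for Ercan
```

Under this identity MI(X,X) equals H(X), so the diagonal of the matrix can be read as each station's own entropy.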

| Station | Scenario | Epoch | Network Structure ᵃ | DC (Calibration) | DC (Verification) | RMSE, Normalized (Calibration) | RMSE, Normalized (Verification) |
|---|---|---|---|---|---|---|---|
| Ercan | 1 | 20 | (3.14.1) | 0.670 | 0.637 | 0.176 | 0.157 |
| Gazimağusa | 1 | 60 | (3.2.1) | 0.547 | 0.538 | 0.183 | 0.120 |
| Gazimağusa | 2 | 10 | (4.10.1) | 0.766 | 0.684 | 0.144 | 0.106 |
| Geçitkale | 1 | 90 | (3.4.1) | 0.673 | 0.503 | 0.147 | 0.099 |
| Geçitkale | 2 | 20 | (4.11.1) | 0.845 | 0.677 | 0.108 | 0.087 |
| Girne | 1 | 30 | (3.16.1) | 0.729 | 0.511 | 0.119 | 0.169 |
| Girne | 2 | 10 | (4.12.1) | 0.800 | 0.728 | 0.101 | 0.135 |
| Güzelyurt | 1 | 10 | (3.5.1) | 0.723 | 0.423 | 0.150 | 0.145 |
| Güzelyurt | 2 | 10 | (4.18.1) | 0.854 | 0.664 | 0.114 | 0.131 |
| Lefkoşa | 1 | 20 | (3.17.1) | 0.585 | 0.545 | 0.172 | 0.138 |
| Lefkoşa | 2 | 10 | (4.3.1) | 0.768 | 0.621 | 0.142 | 0.124 |
| Yeni Erenköy | 1 | 40 | (3.4.1) | 0.570 | 0.474 | 0.134 | 0.079 |
| Yeni Erenköy | 2 | 20 | (4.11.1) | 0.835 | 0.692 | 0.090 | 0.069 |

| Station | Scenario | Epoch | Network Structure ᵃ | DC (Calibration) | DC (Verification) | RMSE, Normalized (Calibration) | RMSE, Normalized (Verification) |
|---|---|---|---|---|---|---|---|
| Ercan | 1 | 5 | trimf-2 | 0.591 | 0.582 | 0.195 | 0.162 |
| Gazimağusa | 1 | 35 | trimf-2 | 0.510 | 0.479 | 0.188 | 0.126 |
| Gazimağusa | 2 | 80 | pimf-2 | 0.823 | 0.633 | 0.104 | 0.117 |
| Geçitkale | 1 | 10 | trimf-3 | 0.728 | 0.483 | 0.127 | 0.102 |
| Geçitkale | 2 | 5 | gauss2mf-2 | 0.840 | 0.630 | 0.107 | 0.099 |
| Girne | 1 | 75 | trimf-2 | 0.716 | 0.439 | 0.111 | 0.180 |
| Girne | 2 | 5 | trimf-2 | 0.876 | 0.678 | 0.075 | 0.158 |
| Güzelyurt | 1 | 95 | trimf-2 | 0.700 | 0.418 | 0.128 | 0.148 |
| Güzelyurt | 2 | 5 | trimf-2 | 0.893 | 0.645 | 0.071 | 0.144 |
| Lefkoşa | 1 | 100 | trimf-2 | 0.554 | 0.515 | 0.176 | 0.137 |
| Lefkoşa | 2 | 5 | trimf-2 | 0.869 | 0.609 | 0.092 | 0.135 |
| Yeni Erenköy | 1 | 15 | gaussmf-2 | 0.617 | 0.447 | 0.127 | 0.082 |
| Yeni Erenköy | 2 | 10 | gaussmf-2 | 0.899 | 0.617 | 0.071 | 0.074 |

| Station | Scenario | Network Structure ᵃ | DC (Calibration) | DC (Verification) | RMSE, Normalized (Calibration) | RMSE, Normalized (Verification) |
|---|---|---|---|---|---|---|
| Ercan | 1 | (10,2,0.1) | 0.655 | 0.556 | 0.184 | 0.162 |
| Gazimağusa | 1 | (10,0.3,0.3333) | 0.550 | 0.497 | 0.185 | 0.123 |
| Gazimağusa | 2 | (10,0.3,0.3333) | 0.816 | 0.680 | 0.129 | 0.115 |
| Geçitkale | 1 | (20,0.1,1) | 0.676 | 0.507 | 0.150 | 0.102 |
| Geçitkale | 2 | (20,0.1,1) | 0.866 | 0.654 | 0.089 | 0.110 |
| Girne | 1 | (50,0.01,0.3333) | 0.704 | 0.502 | 0.123 | 0.177 |
| Girne | 2 | (50,0.01,0.3333) | 0.876 | 0.701 | 0.085 | 0.148 |
| Güzelyurt | 1 | (1,0.2,0.3333) | 0.727 | 0.415 | 0.156 | 0.143 |
| Güzelyurt | 2 | (1,0.2,0.3333) | 0.894 | 0.657 | 0.109 | 0.122 |
| Lefkoşa | 1 | (60,0.2,0.5) | 0.582 | 0.530 | 0.173 | 0.142 |
| Lefkoşa | 2 | (60,0.2,0.5) | 0.769 | 0.588 | 0.098 | 0.139 |
| Yeni Erenköy | 1 | (60,0.01,0.3333) | 0.619 | 0.473 | 0.132 | 0.084 |
| Yeni Erenköy | 2 | (60,0.01,0.3333) | 0.852 | 0.670 | 0.086 | 0.068 |

| Station | Ensemble Method | Model Structure ᵃ | DC (Calibration) | DC (Verification) | RMSE, Normalized (Calibration) | RMSE, Normalized (Verification) |
|---|---|---|---|---|---|---|
| Ercan | Simple linear averaging | - | 0.678 | 0.643 | 0.177 | 0.149 |
| Ercan | Weighted averaging | 0.357-0.331-0.312 | 0.680 | 0.644 | 0.177 | 0.149 |
| Ercan | Non-linear averaging | (3,16,1) | 0.786 | 0.677 | 0.148 | 0.146 |
| Gazimağusa | Simple linear averaging | - | 0.560 | 0.520 | 0.182 | 0.121 |
| Gazimağusa | Weighted averaging | 0.347-0.320-0.333 | 0.559 | 0.521 | 0.182 | 0.121 |
| Gazimağusa | Non-linear averaging | (3,3,1) | 0.702 | 0.540 | 0.155 | 0.126 |
| Geçitkale | Simple linear averaging | - | 0.7431 | 0.650 | 0.134 | 0.094 |
| Geçitkale | Weighted averaging | 0.337-0.323-0.340 | 0.741 | 0.651 | 0.135 | 0.094 |
| Geçitkale | Non-linear averaging | (3,12,1) | 0.765 | 0.670 | 0.128 | 0.092 |
| Girne | Simple linear averaging | - | 0.753 | 0.516 | 0.111 | 0.173 |
| Girne | Weighted averaging | 0.352-0.302-0.346 | 0.750 | 0.522 | 0.112 | 0.173 |
| Girne | Non-linear averaging | (3,11,1) | 0.825 | 0.678 | 0.095 | 0.157 |
| Güzelyurt | Simple linear averaging | - | 0.779 | 0.432 | 0.139 | 0.143 |
| Güzelyurt | Weighted averaging | 0.337-0.333-0.330 | 0.776 | 0.433 | 0.140 | 0.143 |
| Güzelyurt | Non-linear averaging | (3,4,1) | 0.774 | 0.447 | 0.137 | 0.143 |
| Lefkoşa | Simple linear averaging | - | 0.594 | 0.561 | 0.171 | 0.138 |
| Lefkoşa | Weighted averaging | 0.343-0.324-0.333 | 0.592 | 0.564 | 0.171 | 0.138 |
| Lefkoşa | Non-linear averaging | (3,2,1) | 0.706 | 0.585 | 0.150 | 0.138 |
| Yeni Erenköy | Simple linear averaging | - | 0.628 | 0.489 | 0.128 | 0.081 |
| Yeni Erenköy | Weighted averaging | 0.340-0.321-0.339 | 0.623 | 0.489 | 0.129 | 0.080 |
| Yeni Erenköy | Non-linear averaging | (3,13,1) | 0.690 | 0.491 | 0.118 | 0.079 |

| Station | Ensemble Method | Model Structure ᵃ | DC (Calibration) | DC (Verification) | RMSE, Normalized (Calibration) | RMSE, Normalized (Verification) |
|---|---|---|---|---|---|---|
| Gazimağusa | Simple linear averaging | - | 0.851 | 0.699 | 0.116 | 0.107 |
| Gazimağusa | Weighted averaging | 0.336-0.320-0.344 | 0.847 | 0.699 | 0.118 | 0.107 |
| Gazimağusa | Non-linear averaging | (3,20,1) | 0.900 | 0.722 | 0.095 | 0.102 |
| Geçitkale | Simple linear averaging | - | 0.880 | 0.681 | 0.096 | 0.096 |
| Geçitkale | Weighted averaging | 0.345-0.321-0.334 | 0.873 | 0.691 | 0.099 | 0.093 |
| Geçitkale | Non-linear averaging | (3,5,1) | 0.883 | 0.727 | 0.100 | 0.086 |
| Girne | Simple linear averaging | - | 0.889 | 0.734 | 0.079 | 0.144 |
| Girne | Weighted averaging | 0.345-0.322-0.333 | 0.884 | 0.744 | 0.080 | 0.142 |
| Girne | Non-linear averaging | (3,16,1) | 0.947 | 0.813 | 0.090 | 0.122 |
| Güzelyurt | Simple linear averaging | - | 0.923 | 0.686 | 0.089 | 0.124 |
| Güzelyurt | Weighted averaging | 0.338-0.328-0.334 | 0.913 | 0.681 | 0.096 | 0.123 |
| Güzelyurt | Non-linear averaging | (3,18,1) | 0.885 | 0.668 | 0.106 | 0.121 |
| Lefkoşa | Simple linear averaging | - | 0.895 | 0.627 | 0.101 | 0.127 |
| Lefkoşa | Weighted averaging | 0.342-0.335-0.323 | 0.884 | 0.633 | 0.107 | 0.125 |
| Lefkoşa | Non-linear averaging | (3,17,1) | 0.953 | 0.691 | 0.064 | 0.123 |
| Yeni Erenköy | Simple linear averaging | - | 0.884 | 0.690 | 0.077 | 0.067 |
| Yeni Erenköy | Weighted averaging | 0.350-0.312-0.338 | 0.880 | 0.690 | 0.078 | 0.067 |
| Yeni Erenköy | Non-linear averaging | (3,19,1) | 0.929 | 0.787 | 0.060 | 0.059 |
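
The two linear ensemble methods in the tables above can be illustrated with a short sketch. It assumes that simple linear averaging is the arithmetic mean of the N single-model outputs and that weighted averaging is a weighted sum with the three listed weights. The weight order (FFNN, ANFIS, LSSVM) and the sample model outputs are assumptions made for illustration; how the weights were derived is not shown here. The non-linear ensemble, which the tables describe by a network structure such as (3,16,1), is not sketched.

```python
def simple_average(predictions):
    """Arithmetic mean of the single-model outputs for one time step."""
    return sum(predictions) / len(predictions)

def weighted_average(predictions, weights):
    """Weighted sum of single-model outputs; weights are renormalized to sum to 1."""
    total = sum(weights)
    return sum(w * p for w, p in zip(weights, predictions)) / total

# Hypothetical normalized outputs of three single models for one month
# (assumed order: FFNN, ANFIS, LSSVM).
single_model_outputs = [0.42, 0.38, 0.45]

# Weights listed for Ercan under "Weighted averaging" in the table.
ercan_weights = [0.357, 0.331, 0.312]

print("simple:  ", simple_average(single_model_outputs))
print("weighted:", weighted_average(single_model_outputs, ercan_weights))
```

Since the listed weights are all close to 1/3, the two linear methods give nearly identical results, which agrees with the near-equal DC and RMSE values reported for them.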

| Station | Scenario | Reference Model | Skill Score (%): Simple Linear Averaging, Calibration | Skill Score (%): Simple Linear Averaging, Verification | Skill Score (%): Weighted Averaging, Calibration | Skill Score (%): Weighted Averaging, Verification | Skill Score (%): Non-Linear Averaging, Calibration | Skill Score (%): Non-Linear Averaging, Verification |
|---|---|---|---|---|---|---|---|---|
| Ercan | 1 | FFNN | 2.42 | 1.65 | 3.03 | 1.93 | 35.152 | 11.02 |
| Ercan | 1 | ANFIS | 21.27 | 14.59 | 21.76 | 14.83 | 47.68 | 22.73 |
| Ercan | 1 | LSSVM | 6.67 | 19.59 | 7.25 | 19.82 | 37.97 | 27.25 |
| Gazimağusa | 1 | FFNN | 2.87 | −3.90 | 2.65 | −3.68 | 34.22 | 0.43 |
| Gazimağusa | 1 | ANFIS | 10.20 | 7.87 | 10.00 | 8.06 | 39.18 | 11.71 |
| Gazimağusa | 1 | LSSVM | 2.22 | 4.57 | 2.00 | 4.77 | 33.78 | 8.55 |
| Gazimağusa | 2 | FFNN | 36.32 | 4.75 | 34.62 | 4.75 | 57.26 | 12.03 |
| Gazimağusa | 2 | ANFIS | 15.82 | 17.98 | 13.56 | 17.98 | 43.50 | 24.25 |
| Gazimağusa | 2 | LSSVM | 19.02 | 5.94 | 16.85 | 5.94 | 45.65 | 13.13 |
| Geçitkale | 1 | FFNN | 21.44 | 29.58 | 20.80 | 29.78 | 28.13 | 33.60 |
| Geçitkale | 1 | ANFIS | 5.55 | 32.30 | 4.78 | 32.50 | 13.60 | 36.17 |
| Geçitkale | 1 | LSSVM | 20.71 | 29.01 | 20.06 | 29.21 | 27.47 | 33.06 |
| Geçitkale | 2 | FFNN | 22.58 | 1.24 | 18.06 | 4.33 | 24.52 | 15.48 |
| Geçitkale | 2 | ANFIS | 25.00 | 13.78 | 20.63 | 16.49 | 26.88 | 26.22 |
| Geçitkale | 2 | LSSVM | 10.45 | 7.80 | 5.22 | 10.69 | 12.69 | 21.10 |
| Girne | 1 | FFNN | 8.86 | 1.02 | 7.75 | 2.25 | 35.42 | 34.15 |
| Girne | 1 | ANFIS | 13.03 | 13.73 | 11.97 | 14.80 | 38.38 | 42.60 |
| Girne | 1 | LSSVM | 16.55 | 2.81 | 15.54 | 4.02 | 40.88 | 35.34 |
| Girne | 2 | FFNN | 44.50 | 2.21 | 42.00 | 5.88 | 73.50 | 31.25 |
| Girne | 2 | ANFIS | 10.48 | 17.39 | 6.45 | 20.50 | 57.26 | 41.93 |
| Girne | 2 | LSSVM | 10.48 | 11.04 | 6.45 | 14.38 | 57.26 | 37.46 |
| Güzelyurt | 1 | FFNN | 20.22 | 1.56 | 19.13 | 1.73 | 18.41 | 4.16 |
| Güzelyurt | 1 | ANFIS | 26.33 | 2.41 | 25.33 | 2.58 | 24.67 | 4.98 |
| Güzelyurt | 1 | LSSVM | 19.05 | 2.91 | 17.95 | 3.08 | 17.22 | 5.47 |
| Güzelyurt | 2 | FFNN | 47.26 | 6.55 | 40.41 | 5.06 | 21.23 | 1.19 |
| Güzelyurt | 2 | ANFIS | 28.04 | 11.55 | 18.69 | 10.14 | −7.48 | 6.48 |
| Güzelyurt | 2 | LSSVM | 27.36 | 8.45 | 17.92 | 7.00 | −8.49 | 3.21 |
| Lefkoşa | 1 | FFNN | 2.17 | 3.52 | 1.69 | 4.18 | 29.16 | 8.79 |
| Lefkoşa | 1 | ANFIS | 8.97 | 9.48 | 8.52 | 10.10 | 34.08 | 14.43 |
| Lefkoşa | 1 | LSSVM | 2.87 | 6.60 | 2.39 | 7.23 | 29.67 | 11.70 |
| Lefkoşa | 2 | FFNN | 54.74 | 1.58 | 50.00 | 3.17 | 79.74 | 18.47 |
| Lefkoşa | 2 | ANFIS | 19.85 | 4.60 | 11.45 | 6.14 | 64.12 | 20.97 |
| Lefkoşa | 2 | LSSVM | 54.55 | 9.47 | 49.78 | 10.92 | 79.65 | 25.00 |
| Yeni Erenköy | 1 | FFNN | 13.49 | 2.85 | 12.33 | 2.85 | 27.91 | 3.23 |
| Yeni Erenköy | 1 | ANFIS | 2.87 | 7.59 | 1.57 | 7.59 | 19.06 | 7.96 |
| Yeni Erenköy | 1 | LSSVM | 2.36 | 3.04 | 1.05 | 3.04 | 18.64 | 3.42 |
| Yeni Erenköy | 2 | FFNN | 29.70 | −0.65 | 27.27 | −0.65 | 56.97 | 30.84 |
| Yeni Erenköy | 2 | ANFIS | −14.85 | 19.06 | −18.81 | 19.06 | 29.70 | 44.39 |
| Yeni Erenköy | 2 | LSSVM | 21.62 | 6.06 | 18.92 | 6.06 | 52.03 | 35.45 |
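
The skill scores for Ercan can be reproduced from the DC values reported in the single-model and ensemble tables if the skill score is taken as the ensemble's DC gain over a reference model, relative to the gap between the reference DC and a perfect score of 1. The formula below is inferred from the tabulated numbers rather than quoted from the article, so it should be read as an assumption that happens to match the table; the function name is illustrative.

```python
def skill_score(dc_ensemble, dc_reference):
    """Skill score in percent: 100 * (DC_ens - DC_ref) / (1 - DC_ref) (inferred form)."""
    return 100.0 * (dc_ensemble - dc_reference) / (1.0 - dc_reference)

# Ercan, simple linear averaging (DC 0.678 calibration, 0.643 verification)
# against the single FFNN (DC 0.670 calibration, 0.637 verification).
print(round(skill_score(0.678, 0.670), 2))  # 2.42
print(round(skill_score(0.643, 0.637), 2))  # 1.65

# Same ensemble against the single ANFIS (DC 0.591 in calibration).
print(round(skill_score(0.678, 0.591), 2))  # 21.27
```

A negative score, as in a few rows of the table, means the ensemble's DC is lower than that of the reference model for that data split.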

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nourani, V.; Uzelaltinbulat, S.; Sadikoglu, F.; Behfar, N. Artificial Intelligence Based Ensemble Modeling for Multi-Station Prediction of Precipitation. Atmosphere 2019, 10, 80. https://doi.org/10.3390/atmos10020080
