Sequence-Based Protein–Protein Interaction Prediction and Its Applications in Drug Discovery

Abstract

1. Introduction

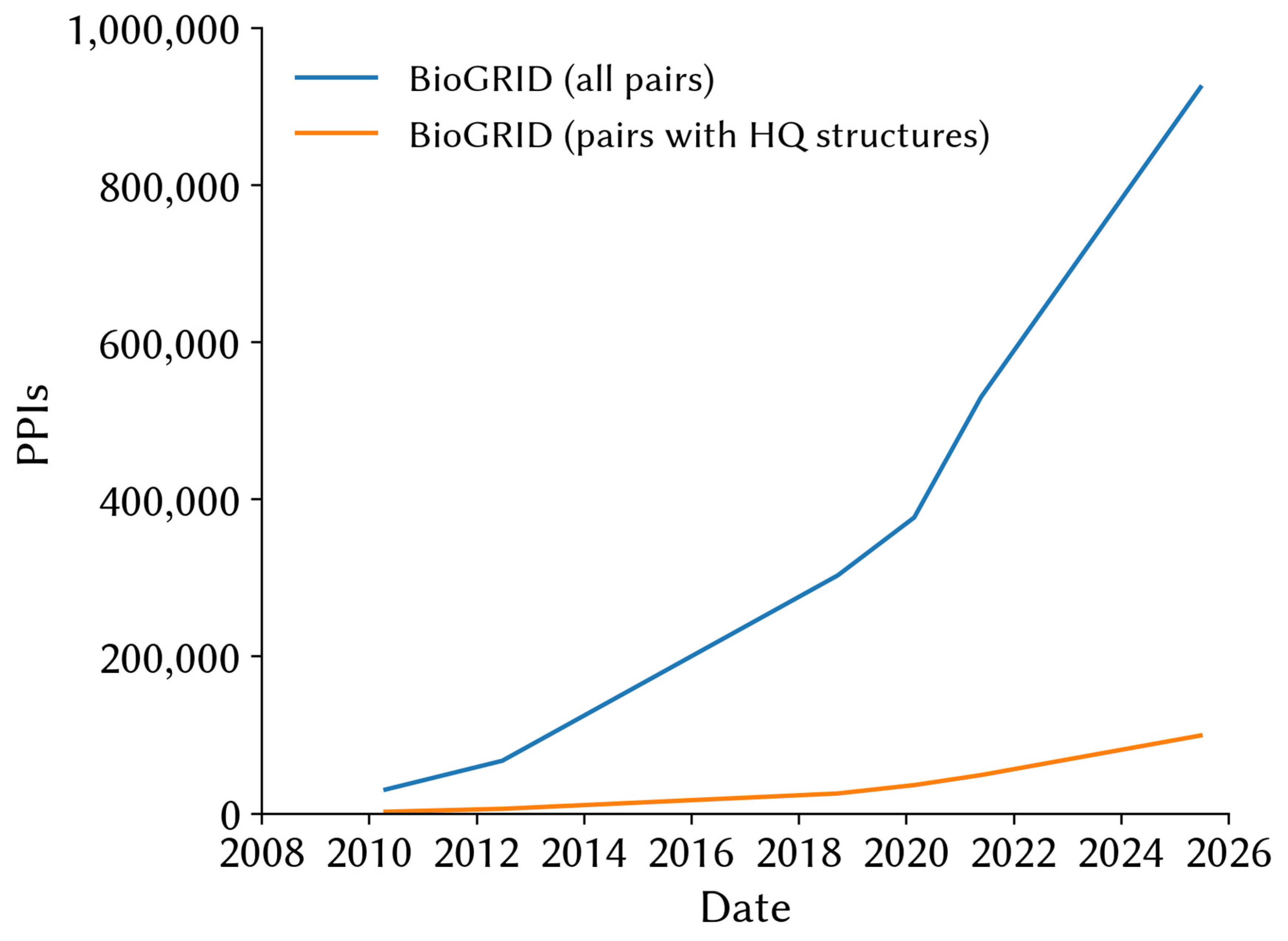

2. The Case for Sequence-Based PPI Predictors: Advantages over Structure-Based Prediction

3. How PPI Predictors Work: Machine Learning Methods and Evaluation Metrics

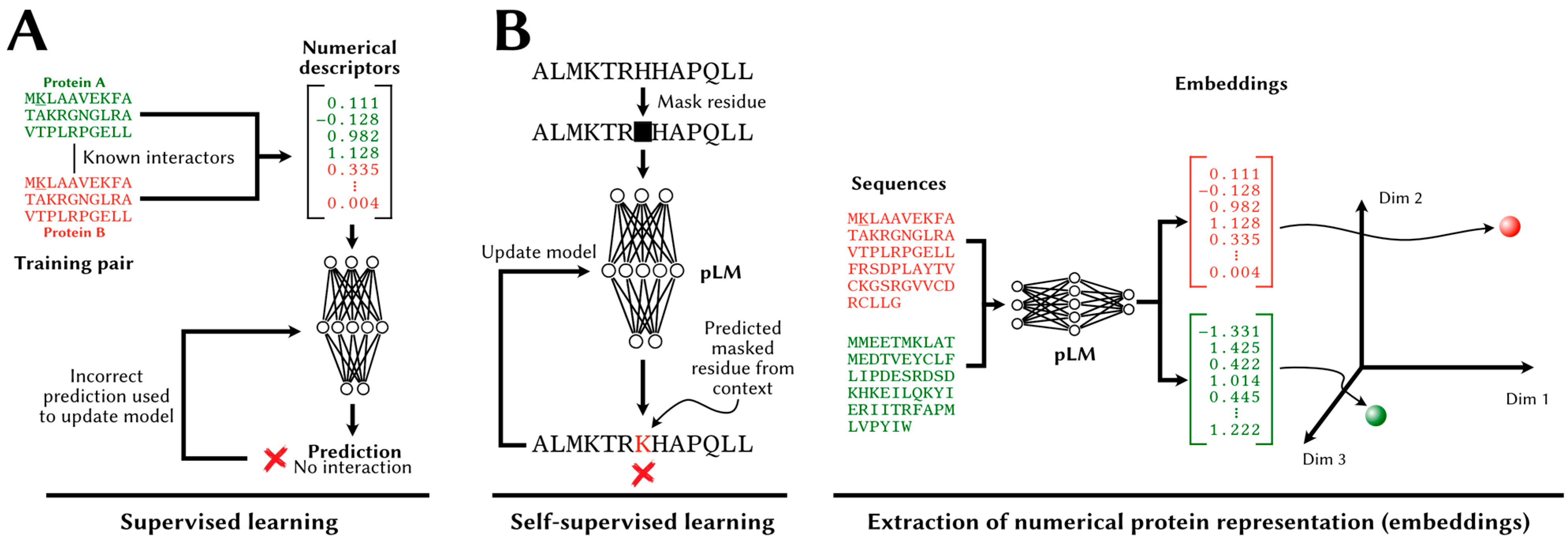

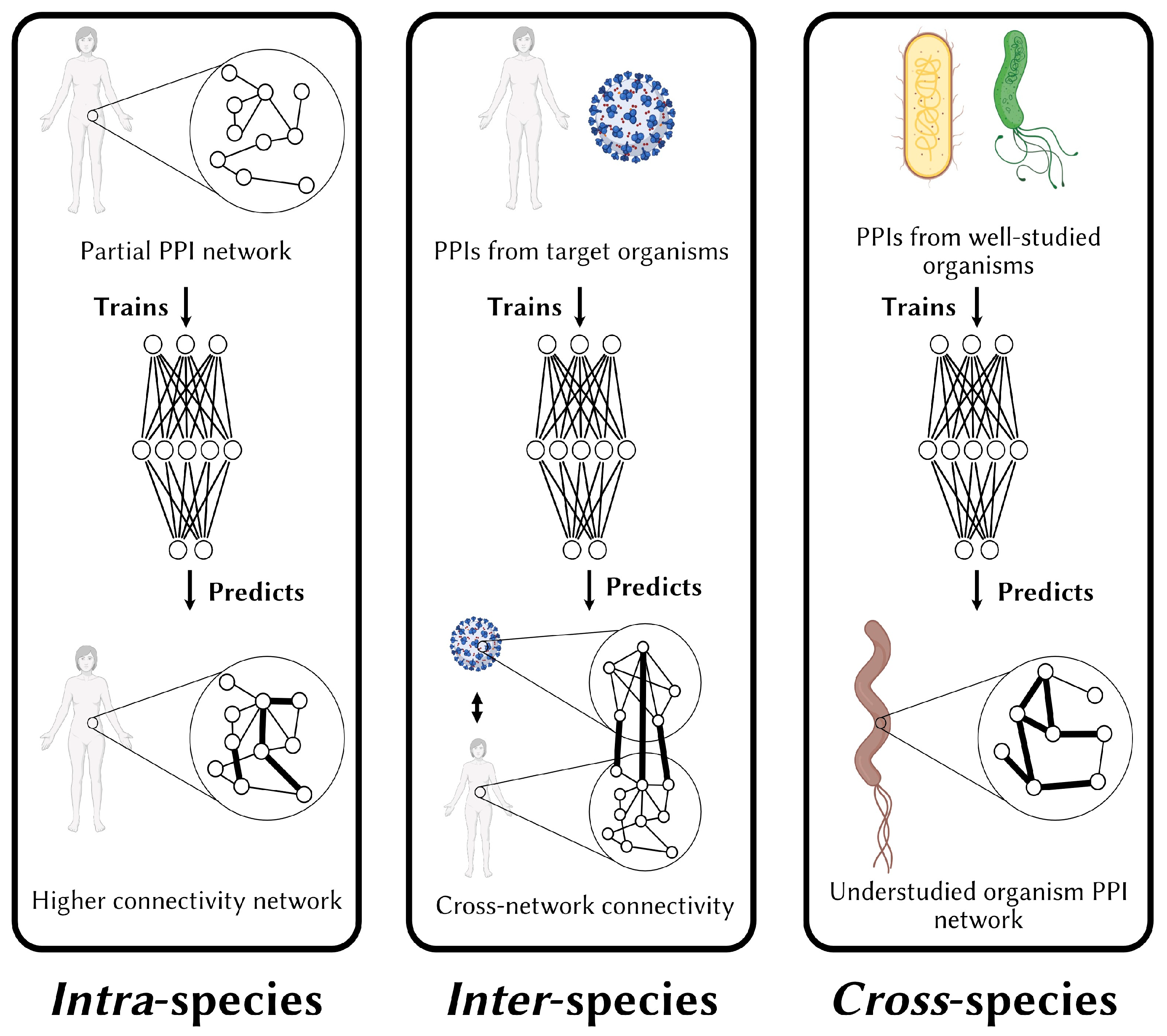

3.1. Paradigms

3.2. Methodology

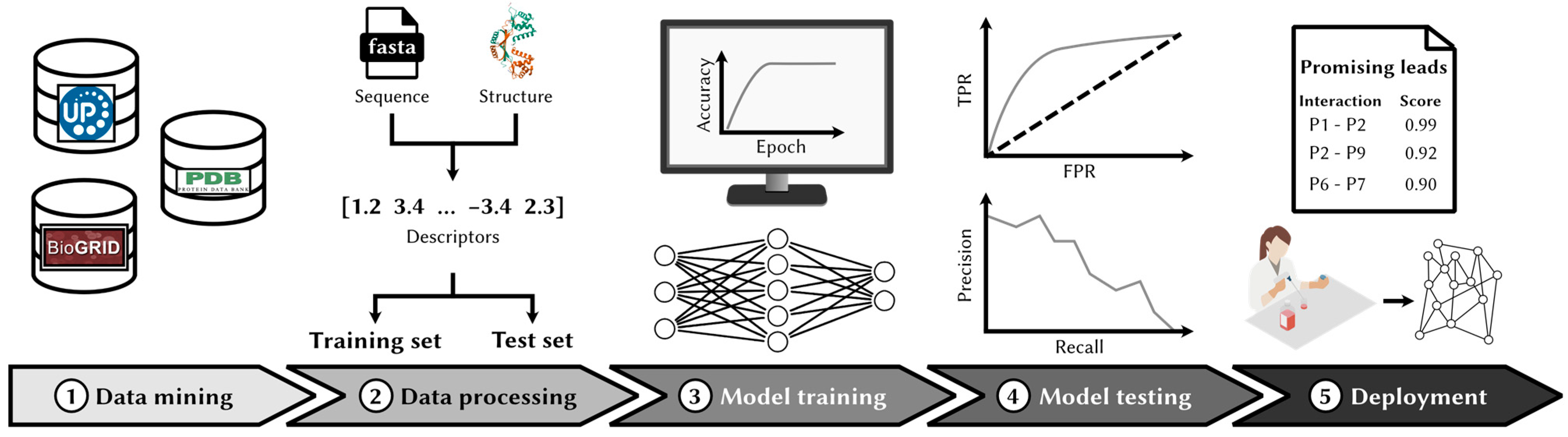

3.2.1. Data Curation

3.2.2. Feature Engineering and Data Splitting

3.2.3. Model Training

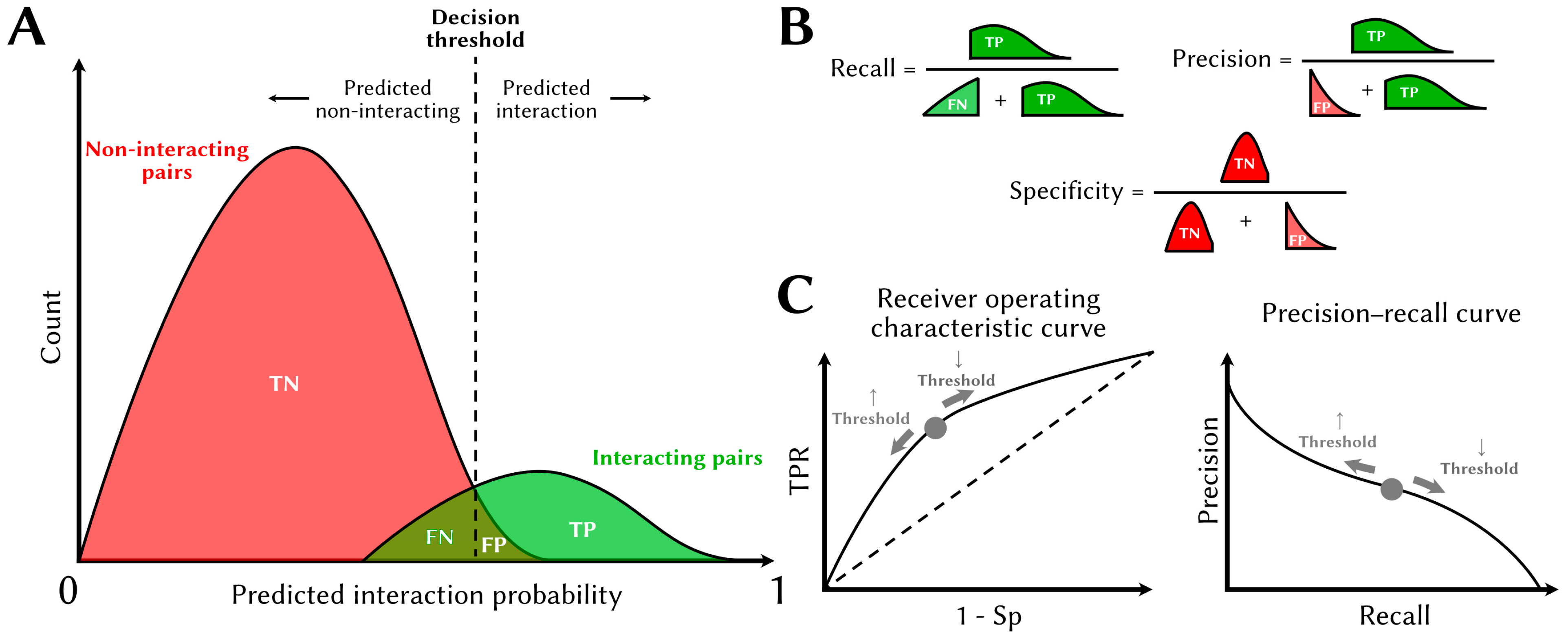

3.2.4. Model Evaluation

4. Generalizing Beyond Model Systems: Challenges and Solutions in Cross-Species PPI Prediction

5. The Class Imbalance Problem in PPI Prediction

6. Old but Gold: Sequence-Based Protein–Protein and Peptide–Protein Predictors

6.1. Machine Learning-Based Approaches

6.2. Protein Language Model-Based Approaches

6.3. Similarity-Based Approaches

7. Protein–Protein Interaction Prediction for Drug Development

7.1. Identification of Drug Targets with PPI Network Analysis

7.2. Targeting PPIs with Peptide Binders

7.3. Antibody Design

8. Summary and Future Trends

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Elhabashy, H.; Merino, F.; Alva, V.; Kohlbacher, O.; Lupas, A.N. Exploring Protein-Protein Interactions at the Proteome Level. Structure 2022, 30, 462–475.

- Nooren, I.M.A.; Thornton, J.M. Diversity of Protein–Protein Interactions. EMBO J. 2003, 22, 3486–3492.

- Friedhoff, P.; Li, P.; Gotthardt, J. Protein-Protein Interactions in DNA Mismatch Repair. DNA Repair 2016, 38, 50–57.

- Guarracino, D.A.; Bullock, B.N.; Arora, P.S. Protein-Protein Interactions in Transcription: A Fertile Ground for Helix Mimetics. Biopolymers 2011, 95, 1–7.

- Jia, X.; He, X.; Huang, C.; Li, J.; Dong, Z.; Liu, K. Protein Translation: Biological Processes and Therapeutic Strategies for Human Diseases. Signal Transduct. Target. Ther. 2024, 9, 44.

- Westermarck, J.; Ivaska, J.; Corthals, G.L. Identification of Protein Interactions Involved in Cellular Signaling. Mol. Cell. Proteomics MCP 2013, 12, 1752–1763.

- Buchner, J. Molecular Chaperones and Protein Quality Control: An Introduction to the JBC Reviews Thematic Series. J. Biol. Chem. 2019, 294, 2074–2075.

- Gonzalez, M.W.; Kann, M.G. Chapter 4: Protein Interactions and Disease. PLoS Comput. Biol. 2012, 8, e1002819.

- Cheng, F.; Zhao, J.; Wang, Y.; Lu, W.; Liu, Z.; Zhou, Y.; Martin, W.R.; Wang, R.; Huang, J.; Hao, T.; et al. Comprehensive Characterization of Protein–Protein Interactions Perturbed by Disease Mutations. Nat. Genet. 2021, 53, 342–353.

- Greenblatt, J.F.; Alberts, B.M.; Krogan, N.J. Discovery and Significance of Protein-Protein Interactions in Health and Disease. Cell 2024, 187, 6501–6517.

- Knopman, D.S.; Amieva, H.; Petersen, R.C.; Chételat, G.; Holtzman, D.M.; Hyman, B.T.; Nixon, R.A.; Jones, D.T. Alzheimer Disease. Nat. Rev. Dis. Primers 2021, 7, 33.

- Janssens, J.; Van Broeckhoven, C. Pathological Mechanisms Underlying TDP-43 Driven Neurodegeneration in FTLD–ALS Spectrum Disorders. Hum. Mol. Genet. 2013, 22, R77–R87.

- Bloem, B.R.; Okun, M.S.; Klein, C. Parkinson’s Disease. Lancet 2021, 397, 2284–2303.

- Huang, L.; Guo, Z.; Wang, F.; Fu, L. KRAS Mutation: From Undruggable to Druggable in Cancer. Signal Transduct. Target. Ther. 2021, 6, 386.

- Tabar, M.S.; Parsania, C.; Chen, H.; Su, X.-D.; Bailey, C.G.; Rasko, J.E.J. Illuminating the Dark Protein-Protein Interactome. Cell Rep. Methods 2022, 2, 100275.

- Kim, M.; Park, J.; Bouhaddou, M.; Kim, K.; Rojc, A.; Modak, M.; Soucheray, M.; McGregor, M.J.; O’Leary, P.; Wolf, D.; et al. A Protein Interaction Landscape of Breast Cancer. Science 2021, 374, eabf3066.

- Fu, H.; Mo, X.; Ivanov, A.A. Decoding the Functional Impact of the Cancer Genome through Protein–Protein Interactions. Nat. Rev. Cancer 2025, 25, 189–208.

- Dunham, W.H.; Mullin, M.; Gingras, A. Affinity-purification Coupled to Mass Spectrometry: Basic Principles and Strategies. Proteomics 2012, 12, 1576–1590.

- Sidhu, S.S.; Fairbrother, W.J.; Deshayes, K. Exploring Protein–Protein Interactions with Phage Display. ChemBioChem 2003, 4, 14–25.

- Zhou, M.; Li, Q.; Wang, R. Current Experimental Methods for Characterizing Protein–Protein Interactions. ChemMedChem 2016, 11, 738–756.

- Brückner, A.; Polge, C.; Lentze, N.; Auerbach, D.; Schlattner, U. Yeast Two-Hybrid, a Powerful Tool for Systems Biology. Int. J. Mol. Sci. 2009, 10, 2763–2788.

- Akbarzadeh, S.; Coşkun, Ö.; Günçer, B. Studying Protein–Protein Interactions: Latest and Most Popular Approaches. J. Struct. Biol. 2024, 216, 108118.

- Pitre, S.; Alamgir, M.; Green, J.R.; Dumontier, M.; Dehne, F.; Golshani, A. Computational Methods for Predicting Protein–Protein Interactions. In Protein–Protein Interaction; Werther, M., Seitz, H., Eds.; Advances in Biochemical Engineering/Biotechnology; Springer: Berlin/Heidelberg, Germany, 2008; Volume 110, pp. 247–267. ISBN 978-3-540-68817-4.

- Li, Y.; Ilie, L. SPRINT: Ultrafast Protein-Protein Interaction Prediction of the Entire Human Interactome. BMC Bioinform. 2017, 18, 485.

- Dick, K.; Samanfar, B.; Barnes, B.; Cober, E.R.; Mimee, B.; Tan, L.H.; Molnar, S.J.; Biggar, K.K.; Golshani, A.; Dehne, F.; et al. PIPE4: Fast PPI Predictor for Comprehensive Inter- and Cross-Species Interactomes. Sci. Rep. 2020, 10, 1390.

- Bernett, J.; Blumenthal, D.B.; List, M. Cracking the Black Box of Deep Sequence-Based Protein–Protein Interaction Prediction. Brief. Bioinform. 2024, 25, bbae076.

- Andrei, S.A.; Sijbesma, E.; Hann, M.; Davis, J.; O’Mahony, G.; Perry, M.W.D.; Karawajczyk, A.; Eickhoff, J.; Brunsveld, L.; Doveston, R.G.; et al. Stabilization of Protein-Protein Interactions in Drug Discovery. Expert Opin. Drug Discov. 2017, 12, 925–940.

- Macalino, S.J.Y.; Basith, S.; Clavio, N.A.B.; Chang, H.; Kang, S.; Choi, S. Evolution of In Silico Strategies for Protein-Protein Interaction Drug Discovery. Molecules 2018, 23, 1963.

- Wang, X.; Ni, D.; Liu, Y.; Lu, S. Rational Design of Peptide-Based Inhibitors Disrupting Protein-Protein Interactions. Front. Chem. 2021, 9, 682675.

- wwPDB consortium. Protein Data Bank: The Single Global Archive for 3D Macromolecular Structure Data. Nucleic Acids Res. 2019, 47, D520–D528.

- Oughtred, R.; Rust, J.; Chang, C.; Breitkreutz, B.-J.; Stark, C.; Willems, A.; Boucher, L.; Leung, G.; Kolas, N.; Zhang, F.; et al. The BioGRID Database: A Comprehensive Biomedical Resource of Curated Protein, Genetic, and Chemical Interactions. Protein Sci. 2021, 30, 187–200.

- Rose, P.W.; Prlić, A.; Altunkaya, A.; Bi, C.; Bradley, A.R.; Christie, C.H.; Costanzo, L.D.; Duarte, J.M.; Dutta, S.; Feng, Z.; et al. The RCSB Protein Data Bank: Integrative View of Protein, Gene and 3D Structural Information. Nucleic Acids Res. 2017, 45, D271–D281.

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly Accurate Protein Structure Prediction with AlphaFold. Nature 2021, 596, 583–589.

- Abramson, J.; Adler, J.; Dunger, J.; Evans, R.; Green, T.; Pritzel, A.; Ronneberger, O.; Willmore, L.; Ballard, A.J.; Bambrick, J.; et al. Accurate Structure Prediction of Biomolecular Interactions with AlphaFold 3. Nature 2024, 630, 493–500.

- Lin, Z.; Akin, H.; Rao, R.; Hie, B.; Zhu, Z.; Lu, W.; Smetanin, N.; Verkuil, R.; Kabeli, O.; Shmueli, Y.; et al. Evolutionary-Scale Prediction of Atomic-Level Protein Structure with a Language Model. Science 2023, 379, 1123–1130.

- Chai Discovery; Boitreaud, J.; Dent, J.; McPartlon, M.; Meier, J.; Reis, V.; Rogozhnikov, A.; Wu, K. Chai-1: Decoding the Molecular Interactions of Life. bioRxiv 2024.

- Wohlwend, J.; Corso, G.; Passaro, S.; Reveiz, M.; Leidal, K.; Swiderski, W.; Portnoi, T.; Chinn, I.; Silterra, J.; Jaakkola, T.; et al. Boltz-1 Democratizing Biomolecular Interaction Modeling. bioRxiv 2024.

- Passaro, S.; Corso, G.; Wohlwend, J.; Reveiz, M.; Thaler, S.; Somnath, V.R.; Getz, N.; Portnoi, T.; Roy, J.; Stark, H.; et al. Boltz-2: Towards Accurate and Efficient Binding Affinity Prediction. bioRxiv 2025.

- Terwilliger, T.C.; Liebschner, D.; Croll, T.I.; Williams, C.J.; McCoy, A.J.; Poon, B.K.; Afonine, P.V.; Oeffner, R.D.; Richardson, J.S.; Read, R.J.; et al. AlphaFold Predictions Are Valuable Hypotheses and Accelerate but Do Not Replace Experimental Structure Determination. Nat. Methods 2023, 21, 110–116.

- Verburgt, J.; Zhang, Z.; Kihara, D. Multi-Level Analysis of Intrinsically Disordered Protein Docking Methods. Methods 2022, 204, 55–63.

- Kibar, G.; Vingron, M. Prediction of Protein–Protein Interactions Using Sequences of Intrinsically Disordered Regions. Proteins Struct. Funct. Bioinform. 2023, 91, 980–990.

- Lee, C.Y.; Hubrich, D.; Varga, J.K.; Schäfer, C.; Welzel, M.; Schumbera, E.; Djokic, M.; Strom, J.M.; Schönfeld, J.; Geist, J.L.; et al. Systematic Discovery of Protein Interaction Interfaces Using AlphaFold and Experimental Validation. Mol. Syst. Biol. 2024, 20, 75–97.

- Orand, T.; Jensen, M.R. Binding Mechanisms of Intrinsically Disordered Proteins: Insights from Experimental Studies and Structural Predictions. Curr. Opin. Struct. Biol. 2025, 90, 102958.

- Luppino, F.; Lenz, S.; Chow, C.F.W.; Toth-Petroczy, A. Deep Learning Tools Predict Variants in Disordered Regions with Lower Sensitivity. BMC Genom. 2025, 26, 367.

- Yuan, R.; Zhang, J.; Zhou, J.; Cong, Q. Recent Progress and Future Challenges in Structure-Based Protein-Protein Interaction Prediction. Mol. Ther. 2025, 33, 2252–2268.

- Raisinghani, N.; Parikh, V.; Foley, B.; Verkhivker, G. Assessing Structures and Conformational Ensembles of Apo and Holo Protein States Using Randomized Alanine Sequence Scanning Combined with Shallow Subsampling in AlphaFold2: Insights and Lessons from Predictions of Functional Allosteric Conformations. bioRxiv 2024.

- Chen, L.T.; Quinn, Z.; Dumas, M.; Peng, C.; Hong, L.; Lopez-Gonzalez, M.; Mestre, A.; Watson, R.; Vincoff, S.; Zhao, L.; et al. Target Sequence-Conditioned Design of Peptide Binders Using Masked Language Modeling. Nat. Biotechnol. 2025, 1–13.

- Watson, J.L.; Juergens, D.; Bennett, N.R.; Trippe, B.L.; Yim, J.; Eisenach, H.E.; Ahern, W.; Borst, A.J.; Ragotte, R.J.; Milles, L.F.; et al. De Novo Design of Protein Structure and Function with RFdiffusion. Nature 2023, 620, 1089–1100.

- Mitchell, T. Machine Learning; McGraw-Hill Series in Computer Science; McGraw-Hill Professional: New York, NY, USA, 1997.

- Yugandhar, K.; Gromiha, M.M. Protein–Protein Binding Affinity Prediction from Amino Acid Sequence. Bioinformatics 2014, 30, 3583–3589.

- Abbasi, W.A.; Yaseen, A.; Hassan, F.U.; Andleeb, S.; Minhas, F.U.A.A. ISLAND: In-Silico Proteins Binding Affinity Prediction Using Sequence Information. BioData Min. 2020, 13, 20.

- Guo, Z.; Yamaguchi, R. Machine Learning Methods for Protein-Protein Binding Affinity Prediction in Protein Design. Front. Bioinform. 2022, 2, 1065703.

- Romero-Molina, S.; Ruiz-Blanco, Y.B.; Mieres-Perez, J.; Harms, M.; Münch, J.; Ehrmann, M.; Sanchez-Garcia, E. PPI-Affinity: A Web Tool for the Prediction and Optimization of Protein–Peptide and Protein–Protein Binding Affinity. J. Proteome Res. 2022, 21, 1829–1841.

- Ofran, Y.; Rost, B. Predicted Protein–Protein Interaction Sites from Local Sequence Information. FEBS Lett. 2003, 544, 236–239.

- Ezkurdia, I.; Bartoli, L.; Fariselli, P.; Casadio, R.; Valencia, A.; Tress, M.L. Progress and Challenges in Predicting Protein–Protein Interaction Sites. Brief. Bioinform. 2009, 10, 233–246.

- Zhang, J.; Kurgan, L. Review and Comparative Assessment of Sequence-Based Predictors of Protein-Binding Residues. Brief. Bioinform. 2018, 19, 821–837.

- Li, Y.; Golding, G.B.; Ilie, L. DELPHI: Accurate Deep Ensemble Model for Protein Interaction Sites Prediction. Bioinformatics 2021, 37, 896–904.

- Szklarczyk, D.; Kirsch, R.; Koutrouli, M.; Nastou, K.; Mehryary, F.; Hachilif, R.; Gable, A.L.; Fang, T.; Doncheva, N.T.; Pyysalo, S.; et al. The STRING Database in 2023: Protein-Protein Association Networks and Functional Enrichment Analyses for Any Sequenced Genome of Interest. Nucleic Acids Res. 2023, 51, D638–D646.

- del Toro, N.; Shrivastava, A.; Ragueneau, E.; Meldal, B.; Combe, C.; Barrera, E.; Perfetto, L.; How, K.; Ratan, P.; Shirodkar, G.; et al. The IntAct Database: Efficient Access to Fine-Grained Molecular Interaction Data. Nucleic Acids Res. 2022, 50, D648–D653.

- Licata, L.; Briganti, L.; Peluso, D.; Perfetto, L.; Iannuccelli, M.; Galeota, E.; Sacco, F.; Palma, A.; Nardozza, A.P.; Santonico, E.; et al. MINT, the Molecular Interaction Database: 2012 Update. Nucleic Acids Res. 2012, 40, D857–D861.

- Martins, P.; Mariano, D.; Carvalho, F.C.; Bastos, L.L.; Moraes, L.; Paixão, V.; Cardoso de Melo-Minardi, R. Propedia v2.3: A Novel Representation Approach for the Peptide-Protein Interaction Database Using Graph-Based Structural Signatures. Front. Bioinform. 2023, 3, 1103103.

- Guo, Y.; Yu, L.; Wen, Z.; Li, M. Using Support Vector Machine Combined with Auto Covariance to Predict Protein–Protein Interactions from Protein Sequences. Nucleic Acids Res. 2008, 36, 3025–3030.

- Ben-Hur, A.; Noble, W.S. Choosing Negative Examples for the Prediction of Protein-Protein Interactions. BMC Bioinform. 2006, 7, S2.

- Romero-Molina, S.; Ruiz-Blanco, Y.B.; Harms, M.; Münch, J.; Sanchez-Garcia, E. PPI-Detect: A Support Vector Machine Model for Sequence-Based Prediction of Protein-Protein Interactions. J. Comput. Chem. 2019, 40, 1233–1242.

- Smialowski, P.; Pagel, P.; Wong, P.; Brauner, B.; Dunger, I.; Fobo, G.; Frishman, G.; Montrone, C.; Rattei, T.; Frishman, D.; et al. The Negatome Database: A Reference Set of Non-Interacting Protein Pairs. Nucleic Acids Res. 2010, 38, D540–D544.

- Blohm, P.; Frishman, G.; Smialowski, P.; Goebels, F.; Wachinger, B.; Ruepp, A.; Frishman, D. Negatome 2.0: A Database of Non-Interacting Proteins Derived by Literature Mining, Manual Annotation and Protein Structure Analysis. Nucleic Acids Res. 2014, 42, D396–D400.

- The UniProt Consortium. UniProt: The Universal Protein Knowledgebase in 2025. Nucleic Acids Res. 2025, 53, D609–D617.

- Schaefer, M.H.; Serrano, L.; Andrade-Navarro, M.A. Correcting for the Study Bias Associated with Protein–Protein Interaction Measurements Reveals Differences between Protein Degree Distributions from Different Cancer Types. Front. Genet. 2015, 6, 260.

- Luck, K.; Kim, D.-K.; Lambourne, L.; Spirohn, K.; Begg, B.E.; Bian, W.; Brignall, R.; Cafarelli, T.; Campos-Laborie, F.J.; Charloteaux, B.; et al. A Reference Map of the Human Binary Protein Interactome. Nature 2020, 580, 402–408.

- Sikic, K.; Carugo, O. Protein Sequence Redundancy Reduction: Comparison of Various Method. Bioinformation 2010, 5, 234–239.

- Fu, L.; Niu, B.; Zhu, Z.; Wu, S.; Li, W. CD-HIT: Accelerated for Clustering the next-Generation Sequencing Data. Bioinformatics 2012, 28, 3150–3152.

- Chen, M.; Ju, C.J.-T.; Zhou, G.; Chen, X.; Zhang, T.; Chang, K.-W.; Zaniolo, C.; Wang, W. Multifaceted Protein–Protein Interaction Prediction Based on Siamese Residual RCNN. Bioinformatics 2019, 35, i305–i314.

- Sledzieski, S.; Singh, R.; Cowen, L.; Berger, B. D-SCRIPT Translates Genome to Phenome with Sequence-Based, Structure-Aware, Genome-Scale Predictions of Protein-Protein Interactions. Cell Syst. 2021, 12, 969–982.e6.

- Yu, B.; Chen, C.; Zhou, H.; Liu, B.; Ma, Q. GTB-PPI: Predict Protein–Protein Interactions Based on L1-Regularized Logistic Regression and Gradient Tree Boosting. Genom. Proteom. Bioinform. 2020, 18, 582–592.

- Steinegger, M.; Söding, J. MMseqs2 Enables Sensitive Protein Sequence Searching for the Analysis of Massive Data Sets. Nat. Biotechnol. 2017, 35, 1026–1028.

- Chen, C.; Zhang, Q.; Yu, B.; Yu, Z.; Lawrence, P.J.; Ma, Q.; Zhang, Y. Improving Protein-Protein Interactions Prediction Accuracy Using XGBoost Feature Selection and Stacked Ensemble Classifier. Comput. Biol. Med. 2020, 123, 103899.

- Liu, D.; Young, F.; Lamb, K.D.; Quiros, A.C.; Pancheva, A.; Miller, C.; Macdonald, C.; Robertson, D.L.; Yuan, K. PLM-Interact: Extending Protein Language Models to Predict Protein-Protein Interactions. bioRxiv 2024.

- Zheng, X.; Du, H.; Xu, F.; Li, J.; Liu, Z.; Wang, W.; Chen, T.; Ouyang, W.; Li, S.Z.; Lu, Y.; et al. PRING: Rethinking Protein-Protein Interaction Prediction from Pairs to Graphs. arXiv 2025, arXiv:2507.05101.

- Park, Y.; Marcotte, E.M. Flaws in Evaluation Schemes for Pair-Input Computational Predictions. Nat. Methods 2012, 9, 1134–1136.

- Dunham, B.; Ganapathiraju, M.K. Benchmark Evaluation of Protein–Protein Interaction Prediction Algorithms. Molecules 2021, 27, 41.

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification, 2nd ed.; Wiley: New York, NY, USA, 2001; ISBN 978-0-471-05669-0.

- Bishop, C.M. Pattern Recognition and Machine Learning; Information Science and Statistics; Springer: New York, NY, USA, 2006; ISBN 978-0-387-31073-2.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; Adaptive Computation and Machine Learning; The MIT Press: Cambridge, MA, USA, 2016; ISBN 978-0-262-03561-3.

- Dick, K.; Chopra, A.; Biggar, K.K.; Green, J.R. Multi-Schema Computational Prediction of the Comprehensive SARS-CoV-2 vs. Human Interactome. PeerJ 2021, 9, e11117.

- Yang, X.; Yang, S.; Li, Q.; Wuchty, S.; Zhang, Z. Prediction of Human-Virus Protein-Protein Interactions through a Sequence Embedding-Based Machine Learning Method. Comput. Struct. Biotechnol. J. 2020, 18, 153–161.

- Tsukiyama, S.; Hasan, M.M.; Fujii, S.; Kurata, H. LSTM-PHV: Prediction of Human-Virus Protein–Protein Interactions by LSTM with Word2vec. Brief. Bioinform. 2021, 22, bbab228.

- Dong, T.N.; Brogden, G.; Gerold, G.; Khosla, M. A Multitask Transfer Learning Framework for the Prediction of Virus-Human Protein–Protein Interactions. BMC Bioinform. 2021, 22, 572.

- Nissan, N.; Hooker, J.; Arezza, E.; Dick, K.; Golshani, A.; Mimee, B.; Cober, E.; Green, J.; Samanfar, B. Large-Scale Data Mining Pipeline for Identifying Novel Soybean Genes Involved in Resistance against the Soybean Cyst Nematode. Front. Bioinform. 2023, 3, 1199675.

- Barnes, B.; Karimloo, M.; Schoenrock, A.; Burnside, D.; Cassol, E.; Wong, A.; Dehne, F.; Golshani, A.; Green, J.R. Predicting Novel Protein-Protein Interactions between the HIV-1 Virus and Homo sapiens. In Proceedings of the 2016 IEEE EMBS International Student Conference (ISC), Ottawa, ON, Canada, 29–31 May 2016; pp. 1–4.

- Singh, R.; Devkota, K.; Sledzieski, S.; Berger, B.; Cowen, L. Topsy-Turvy: Integrating a Global View into Sequence-Based PPI Prediction. Bioinformatics 2022, 38, i264–i272.

- Szymborski, J.; Emad, A. INTREPPPID—An Orthologue-Informed Quintuplet Network for Cross-Species Prediction of Protein–Protein Interaction. Brief. Bioinform. 2024, 25, bbae405.

- James, K.; Wipat, A.; Cockell, S.J. Expanding Interactome Analyses beyond Model Eukaryotes. Brief. Funct. Genom. 2022, 21, 243–269.

- Volzhenin, K.; Bittner, L.; Carbone, A. SENSE-PPI Reconstructs Interactomes within, across, and between Species at the Genome Scale. iScience 2024, 27, 110371.

- Ajila, V.; Colley, L.; Ste-Croix, D.T.; Nissan, N.; Cober, E.R.; Mimee, B.; Samanfar, B.; Green, J.R. Species-Specific microRNA Discovery and Target Prediction in the Soybean Cyst Nematode. Sci. Rep. 2023, 13, 17657.

- Biggar, K.K.; Charih, F.; Liu, H.; Ruiz-Blanco, Y.B.; Stalker, L.; Chopra, A.; Connolly, J.; Adhikary, H.; Frensemier, K.; Hoekstra, M.; et al. Proteome-Wide Prediction of Lysine Methylation Leads to Identification of H2BK43 Methylation and Outlines the Potential Methyllysine Proteome. Cell Rep. 2020, 32, 107896.

- Wang, G.; Vaisman, I.I.; van Hoek, M.L. Machine Learning Prediction of Antimicrobial Peptides. Methods Mol. Biol. Clifton NJ 2022, 2405, 1–37.

- Brzezinski, D.; Minku, L.L.; Pewinski, T.; Stefanowski, J.; Szumaczuk, A. The Impact of Data Difficulty Factors on Classification of Imbalanced and Concept Drifting Data Streams. Knowl. Inf. Syst. 2021, 63, 1429–1469.

- Liu, Y.; Li, Y.; Xie, D. Implications of Imbalanced Datasets for Empirical ROC-AUC Estimation in Binary Classification Tasks. J. Stat. Comput. Simul. 2024, 94, 183–203.

- Richardson, E.; Trevizani, R.; Greenbaum, J.A.; Carter, H.; Nielsen, M.; Peters, B. The Receiver Operating Characteristic Curve Accurately Assesses Imbalanced Datasets. Patterns 2024, 5, 100994.

- Langote, M.; Zade, N.; Gundewar, S. Addressing Data Imbalance in Machine Learning: Challenges and Approaches. In Proceedings of the 2025 6th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Goathgaun, Nepal, 7–8 January 2025; pp. 1745–1749.

- Dick, K.; Green, J.R. Reciprocal Perspective for Improved Protein-Protein Interaction Prediction. Sci. Rep. 2018, 8, 11694.

- Zheng, W.; Wuyun, Q.; Cheng, M.; Hu, G.; Zhang, Y. Two-Level Protein Methylation Prediction Using Structure Model-Based Features. Sci. Rep. 2020, 10, 6008.

- Baranwal, M.; Magner, A.; Saldinger, J.; Turali-Emre, E.S.; Elvati, P.; Kozarekar, S.; VanEpps, J.S.; Kotov, N.A.; Violi, A.; Hero, A.O. Struct2Graph: A Graph Attention Network for Structure Based Predictions of Protein–Protein Interactions. BMC Bioinform. 2022, 23, 370.

- Peace, R.J.; Biggar, K.K.; Storey, K.B.; Green, J.R. A Framework for Improving microRNA Prediction in Non-Human Genomes. Nucleic Acids Res. 2015, 43, e138.

- Venkatesan, K.; Rual, J.-F.; Vazquez, A.; Stelzl, U.; Lemmens, I.; Hirozane-Kishikawa, T.; Hao, T.; Zenkner, M.; Xin, X.; Goh, K.-I.; et al. An Empirical Framework for Binary Interactome Mapping. Nat. Methods 2009, 6, 83–90.

- Zhang, J.; Humphreys, I.R.; Pei, J.; Kim, J.; Choi, C.; Yuan, R.; Durham, J.; Liu, S.; Choi, H.-J.; Baek, M.; et al. Computing the Human Interactome. bioRxiv 2024.

- Stumpf, M.P.H.; Thorne, T.; de Silva, E.; Stewart, R.; An, H.J.; Lappe, M.; Wiuf, C. Estimating the Size of the Human Interactome. Proc. Natl. Acad. Sci. USA 2008, 105, 6959–6964.

- Vidal, M. How Much of the Human Protein Interactome Remains to Be Mapped? Sci. Signal. 2016, 9, eg7.

- Rolland, T.; Taşan, M.; Charloteaux, B.; Pevzner, S.J.; Zhong, Q.; Sahni, N.; Yi, S.; Lemmens, I.; Fontanillo, C.; Mosca, R.; et al. A Proteome-Scale Map of the Human Interactome Network. Cell 2014, 159, 1212–1226.

- Schoenrock, A.; Samanfar, B.; Pitre, S.; Hooshyar, M.; Jin, K.; Phillips, C.A.; Wang, H.; Phanse, S.; Omidi, K.; Gui, Y.; et al. Efficient Prediction of Human Protein-Protein Interactions at a Global Scale. BMC Bioinform. 2014, 15, 383.

- Chou, K.-C. Prediction of Protein Cellular Attributes Using Pseudo-Amino Acid Composition. Proteins Struct. Funct. Bioinform. 2001, 43, 246–255.

- Shen, J.; Zhang, J.; Luo, X.; Zhu, W.; Yu, K.; Chen, K.; Li, Y.; Jiang, H. Predicting Protein–Protein Interactions Based Only on Sequences Information. Proc. Natl. Acad. Sci. USA 2007, 104, 4337–4341.

- Govindan, G.; Nair, A.S. Composition, Transition and Distribution (CTD)—A Dynamic Feature for Predictions Based on Hierarchical Structure of Cellular Sorting. In Proceedings of the 2011 Annual IEEE India Conference, Hyderabad, India, 16–18 December 2011; pp. 1–6.

- Altschul, S.F.; Madden, T.L.; Schäffer, A.A.; Zhang, J.; Zhang, Z.; Miller, W.; Lipman, D.J. Gapped BLAST and PSI-BLAST: A New Generation of Protein Database Search Programs. Nucleic Acids Res. 1997, 25, 3389–3402.

- Ruiz-Blanco, Y.B.; Paz, W.; Green, J.; Marrero-Ponce, Y. ProtDCal: A Program to Compute General-Purpose-Numerical Descriptors for Sequences and 3D-Structures of Proteins. BMC Bioinform. 2015, 16, 162.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Yao, Y.; Du, X.; Diao, Y.; Zhu, H. An Integration of Deep Learning with Feature Embedding for Protein–Protein Interaction Prediction. PeerJ 2019, 7, e7126.

- Hashemifar, S.; Neyshabur, B.; Khan, A.A.; Xu, J. Predicting Protein–Protein Interactions through Sequence-Based Deep Learning. Bioinformatics 2018, 34, i802–i810.

- Hu, X.; Feng, C.; Zhou, Y.; Harrison, A.; Chen, M. DeepTrio: A Ternary Prediction System for Protein–Protein Interaction Using Mask Multiple Parallel Convolutional Neural Networks. Bioinformatics 2022, 38, 694–702.

- Soleymani, F.; Paquet, E.; Viktor, H.L.; Michalowski, W.; Spinello, D. ProtInteract: A Deep Learning Framework for Predicting Protein–Protein Interactions. Comput. Struct. Biotechnol. J. 2023, 21, 1324–1348.

- Hu, J.; Li, Z.; Rao, B.; Thafar, M.A.; Arif, M. Improving Protein-Protein Interaction Prediction Using Protein Language Model and Protein Network Features. Anal. Biochem. 2024, 693, 115550.

- Dang, T.H.; Vu, T.A. xCAPT5: Protein–Protein Interaction Prediction Using Deep and Wide Multi-Kernel Pooling Convolutional Neural Networks with Protein Language Model. BMC Bioinform. 2024, 25, 106.

- Eid, F.-E.; ElHefnawi, M.; Heath, L.S. DeNovo: Virus-Host Sequence-Based Protein–Protein Interaction Prediction. Bioinformatics 2016, 32, 1144–1150.

- Li, X.; Han, P.; Wang, G.; Chen, W.; Wang, S.; Song, T. SDNN-PPI: Self-Attention with Deep Neural Network Effect on Protein-Protein Interaction Prediction. BMC Genom. 2022, 23, 474.

- Gao, H.; Chen, C.; Li, S.; Wang, C.; Zhou, W.; Yu, B. Prediction of Protein-Protein Interactions Based on Ensemble Residual Convolutional Neural Network. Comput. Biol. Med. 2023, 152, 106471.

- Yang, K.K.; Fusi, N.; Lu, A.X. Convolutions Are Competitive with Transformers for Protein Sequence Pretraining. Cell Syst. 2024, 15, 286–294.e2.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

- Gemini Team; Georgiev, P.; Lei, V.I.; Burnell, R.; Bai, L.; Gulati, A.; Tanzer, G.; Vincent, D.; Pan, Z.; Wang, S.; et al. Gemini 1.5: Unlocking Multimodal Understanding across Millions of Tokens of Context. arXiv 2024, arXiv:2403.05530.

- Krogh, A.; Brown, M.; Mian, I.S.; Sjölander, K.; Haussler, D. Hidden Markov Models in Computational Biology: Applications to Protein Modeling. J. Mol. Biol. 1994, 235, 1501–1531.

- Martelli, P.L.; Fariselli, P.; Krogh, A.; Casadio, R. A Sequence-Profile-Based HMM for Predicting and Discriminating β Barrel Membrane Proteins. Bioinformatics 2002, 18, S46–S53.

- Söding, J. Protein Homology Detection by HMM–HMM Comparison. Bioinformatics 2005, 21, 951–960.

- Bepler, T.; Berger, B. Learning the Protein Language: Evolution, Structure, and Function. Cell Syst. 2021, 12, 654–669.e3.

- Suzek, B.E.; Wang, Y.; Huang, H.; McGarvey, P.B.; Wu, C.H.; The UniProt Consortium. UniRef Clusters: A Comprehensive and Scalable Alternative for Improving Sequence Similarity Searches. Bioinformatics 2015, 31, 926–932.

- Steinegger, M.; Söding, J. Clustering Huge Protein Sequence Sets in Linear Time. Nat. Commun. 2018, 9, 2542.

- Medina-Ortiz, D.; Contreras, S.; Fernández, D.; Soto-García, N.; Moya, I.; Cabas-Mora, G.; Olivera-Nappa, Á. Protein Language Models and Machine Learning Facilitate the Identification of Antimicrobial Peptides. Int. J. Mol. Sci. 2024, 25, 8851. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Xiong, S.; Xu, L.; Liang, J.; Zhao, X.; Zhang, H.; Tan, X. Leveraging Protein Language Models for Robust Antimicrobial Peptide Detection. Methods 2025, 238, 19–26. [Google Scholar] [CrossRef] [PubMed]

- Elnaggar, A.; Heinzinger, M.; Dallago, C.; Rehawi, G.; Wang, Y.; Jones, L.; Gibbs, T.; Feher, T.; Angerer, C.; Steinegger, M.; et al. ProtTrans: Towards Cracking the Language of Lifes Code Through Self-Supervised Deep Learning and High Performance Computing. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7112–7127. [Google Scholar] [CrossRef] [PubMed]

- Elnaggar, A.; Essam, H.; Salah-Eldin, W.; Moustafa, W.; Elkerdawy, M.; Rochereau, C.; Rost, B. Ankh: Optimized Protein Language Model Unlocks General-Purpose Modelling. bioRxiv 2023.

- Ko, Y.S.; Parkinson, J.; Liu, C.; Wang, W. TUnA: An Uncertainty-Aware Transformer Model for Sequence-Based Protein–Protein Interaction Prediction. Brief. Bioinform. 2024, 25, bbae359.

- Schoenrock, A.; Dehne, F.; Green, J.R.; Golshani, A.; Pitre, S. MP-PIPE: A Massively Parallel Protein-Protein Interaction Prediction Engine. In Proceedings of the International Conference on Supercomputing—ICS ’11, Tucson, AZ, USA, 31 May–4 June 2011; ACM Press: Tucson, AZ, USA, 2011; p. 327.

- Dayhoff, M.; Schwartz, R.; Orcutt, B. A Model of Evolutionary Change in Proteins. Atlas Protein Seq. Struct. 1978, 5, 345–352.

- Pitre, S.; North, C.; Alamgir, M.; Jessulat, M.; Chan, A.; Luo, X.; Green, J.R.; Dumontier, M.; Dehne, F.; Golshani, A. Global Investigation of Protein–Protein Interactions in Yeast Saccharomyces cerevisiae Using Re-Occurring Short Polypeptide Sequences. Nucleic Acids Res. 2008, 36, 4286–4294.

- Bell, E.W.; Schwartz, J.H.; Freddolino, P.L.; Zhang, Y. PEPPI: Whole-Proteome Protein-Protein Interaction Prediction through Structure and Sequence Similarity, Functional Association, and Machine Learning. J. Mol. Biol. 2022, 434, 167530.

- Feng, Y.; Wang, Q.; Wang, T. Drug Target Protein-Protein Interaction Networks: A Systematic Perspective. BioMed Res. Int. 2017, 2017, 1289259.

- Harrold, J.M.; Ramanathan, M.; Mager, D.E. Network-Based Approaches in Drug Discovery and Early Development. Clin. Pharmacol. Ther. 2013, 94, 651–658.

- Kim, Y.-A.; Wuchty, S.; Przytycka, T.M. Identifying Causal Genes and Dysregulated Pathways in Complex Diseases. PLoS Comput. Biol. 2011, 7, e1001095.

- Basar, M.A.; Hosen, M.F.; Kumar Paul, B.; Hasan, M.R.; Shamim, S.M.; Bhuyian, T. Identification of Drug and Protein-Protein Interaction Network among Stress and Depression: A Bioinformatics Approach. Inform. Med. Unlocked 2023, 37, 101174.

- Peng, Q.; Schork, N.J. Utility of Network Integrity Methods in Therapeutic Target Identification. Front. Genet. 2014, 5, 12.

- Li, Z.-C.; Zhong, W.-Q.; Liu, Z.-Q.; Huang, M.-H.; Xie, Y.; Dai, Z.; Zou, X.-Y. Large-Scale Identification of Potential Drug Targets Based on the Topological Features of Human Protein-Protein Interaction Network. Anal. Chim. Acta 2015, 871, 18–27.

- Gordon, D.E.; Jang, G.M.; Bouhaddou, M.; Xu, J.; Obernier, K.; White, K.M.; O’Meara, M.J.; Rezelj, V.V.; Guo, J.Z.; Swaney, D.L.; et al. A SARS-CoV-2 Protein Interaction Map Reveals Targets for Drug Repurposing. Nature 2020, 583, 459–468.

- Agamah, F.E.; Mazandu, G.K.; Hassan, R.; Bope, C.D.; Thomford, N.E.; Ghansah, A.; Chimusa, E.R. Computational/in Silico Methods in Drug Target and Lead Prediction. Brief. Bioinform. 2019, 21, 1663–1675.

- Zhang, X.; Wu, F.; Yang, N.; Zhan, X.; Liao, J.; Mai, S.; Huang, Z. In Silico Methods for Identification of Potential Therapeutic Targets. Interdiscip. Sci. Comput. Life Sci. 2022, 14, 285–310.

- Wang, L.; Wang, N.; Zhang, W.; Cheng, X.; Yan, Z.; Shao, G.; Wang, X.; Wang, R.; Fu, C. Therapeutic Peptides: Current Applications and Future Directions. Signal Transduct. Target. Ther. 2022, 7, 48.

- Rosson, E.; Lux, F.; David, L.; Godfrin, Y.; Tillement, O.; Thomas, E. Focus on Therapeutic Peptides and Their Delivery. Int. J. Pharm. 2025, 675, 125555.

- Sivasankaran, R.P.; Snell, K.; Kunkel, G.; Georgiou, P.G.; Puente, E.G.; Maynard, H.D. Polymer-Mediated Protein/Peptide Therapeutic Stabilization: Current Progress and Future Directions. Prog. Polym. Sci. 2024, 156, 101867.

- Nicze, M.; Borówka, M.; Dec, A.; Niemiec, A.; Bułdak, Ł.; Okopień, B. The Current and Promising Oral Delivery Methods for Protein- and Peptide-Based Drugs. Int. J. Mol. Sci. 2024, 25, 815.

- Xiao, W.; Jiang, W.; Chen, Z.; Huang, Y.; Mao, J.; Zheng, W.; Hu, Y.; Shi, J. Advance in Peptide-Based Drug Development: Delivery Platforms, Therapeutics and Vaccines. Signal Transduct. Target. Ther. 2025, 10, 74.

- Cabri, W.; Cantelmi, P.; Corbisiero, D.; Fantoni, T.; Ferrazzano, L.; Martelli, G.; Mattellone, A.; Tolomelli, A. Therapeutic Peptides Targeting PPI in Clinical Development: Overview, Mechanism of Action and Perspectives. Front. Mol. Biosci. 2021, 8, 697586.

- Coin, I.; Beyermann, M.; Bienert, M. Solid-Phase Peptide Synthesis: From Standard Procedures to the Synthesis of Difficult Sequences. Nat. Protoc. 2007, 2, 3247–3256.

- Charih, F.; Biggar, K.K.; Green, J.R. Assessing Sequence-Based Protein–Protein Interaction Predictors for Use in Therapeutic Peptide Engineering. Sci. Rep. 2022, 12, 9610.

- Schoenrock, A.; Burnside, D.; Moteshareie, H.; Wong, A.; Golshani, A.; Dehne, F.; Green, J.R. Engineering Inhibitory Proteins with InSiPS: The in-Silico Protein Synthesizer. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis on—SC ’15, Austin, TX, USA, 15–20 November 2015; ACM Press: Austin, TX, USA, 2015; pp. 1–11.

- Burnside, D.; Schoenrock, A.; Moteshareie, H.; Hooshyar, M.; Basra, P.; Hajikarimlou, M.; Dick, K.; Barnes, B.; Kazmirchuk, T.; Jessulat, M.; et al. In Silico Engineering of Synthetic Binding Proteins from Random Amino Acid Sequences. iScience 2019, 11, 375–387.

- Hajikarimlou, M.; Hooshyar, M.; Moutaoufik, M.T.; Aly, K.A.; Azad, T.; Takallou, S.; Jagadeesan, S.; Phanse, S.; Said, K.B.; Samanfar, B.; et al. A Computational Approach to Rapidly Design Peptides That Detect SARS-CoV-2 Surface Protein S. NAR Genom. Bioinform. 2022, 4, lqac058.

- Lei, Y.; Li, S.; Liu, Z.; Wan, F.; Tian, T.; Li, S.; Zhao, D.; Zeng, J. A Deep-Learning Framework for Multi-Level Peptide–Protein Interaction Prediction. Nat. Commun. 2021, 12, 5465.

- Palepu, K.; Ponnapati, M.; Bhat, S.; Tysinger, E.; Stan, T.; Brixi, G.; Koseki, S.R.T.; Chatterjee, P. Design of Peptide-Based Protein Degraders via Contrastive Deep Learning. bioRxiv 2022.

- Bhat, S.; Palepu, K.; Hong, L.; Mao, J.; Ye, T.; Iyer, R.; Zhao, L.; Chen, T.; Vincoff, S.; Watson, R.; et al. De Novo Design of Peptide Binders to Conformationally Diverse Targets with Contrastive Language Modeling. Sci. Adv. 2025, 11, eadr8638.

- Ruffolo, J.A.; Gray, J.J.; Sulam, J. Deciphering Antibody Affinity Maturation with Language Models and Weakly Supervised Learning. arXiv 2021, arXiv:2112.07782.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Leem, J.; Mitchell, L.S.; Farmery, J.H.R.; Barton, J.; Galson, J.D. Deciphering the Language of Antibodies Using Self-Supervised Learning. Patterns 2022, 3, 100513.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Kenlay, H.; Dreyer, F.A.; Kovaltsuk, A.; Miketa, D.; Pires, D.; Deane, C.M. Large Scale Paired Antibody Language Models. PLoS Comput. Biol. 2024, 20, e1012646.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Olsen, T.H.; Moal, I.H.; Deane, C.M. AbLang: An Antibody Language Model for Completing Antibody Sequences. Bioinform. Adv. 2022, 2, vbac046.

- Shuai, R.W.; Ruffolo, J.A.; Gray, J.J. IgLM: Infilling Language Modeling for Antibody Sequence Design. Cell Syst. 2023, 14, 979–989.e4.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. OpenAI Blog [Online]. 2019. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 5 July 2025).

- Kovaltsuk, A.; Leem, J.; Kelm, S.; Snowden, J.; Deane, C.M.; Krawczyk, K. Observed Antibody Space: A Resource for Data Mining Next-Generation Sequencing of Antibody Repertoires. J. Immunol. 2018, 201, 2502–2509.

- Hie, B.L.; Shanker, V.R.; Xu, D.; Bruun, T.U.J.; Weidenbacher, P.A.; Tang, S.; Wu, W.; Pak, J.E.; Kim, P.S. Efficient Evolution of Human Antibodies from General Protein Language Models. Nat. Biotechnol. 2024, 42, 275–283.

- Rives, A.; Meier, J.; Sercu, T.; Goyal, S.; Lin, Z.; Liu, J.; Guo, D.; Ott, M.; Zitnick, C.L.; Ma, J.; et al. Biological Structure and Function Emerge from Scaling Unsupervised Learning to 250 Million Protein Sequences. Proc. Natl. Acad. Sci. USA 2021, 118, e2016239118.

- Meier, J.; Rao, R.; Verkuil, R.; Liu, J.; Sercu, T.; Rives, A. Language Models Enable Zero-Shot Prediction of the Effects of Mutations on Protein Function. bioRxiv 2021.

- Boshar, S.; Trop, E.; de Almeida, B.P.; Copoiu, L.; Pierrot, T. Are Genomic Language Models All You Need? Exploring Genomic Language Models on Protein Downstream Tasks. Bioinformatics 2024, 40, btae529.

- Consens, M.E.; Li, B.; Poetsch, A.R.; Gilbert, S. Genomic Language Models Could Transform Medicine but Not Yet. npj Digit. Med. 2025, 8, 212.

- Ali, S.; Qadri, Y.A.; Ahmad, K.; Lin, Z.; Leung, M.-F.; Kim, S.W.; Vasilakos, A.V.; Zhou, T. Large Language Models in Genomics—A Perspective on Personalized Medicine. Bioengineering 2025, 12, 440.

- Li, Y.; Qiao, G.; Wang, G. scKEPLM: Knowledge Enhanced Large-Scale Pre-Trained Language Model for Single-Cell Transcriptomics. bioRxiv 2024.

- Zeng, Y.; Xie, J.; Shangguan, N.; Wei, Z.; Li, W.; Su, Y.; Yang, S.; Zhang, C.; Zhang, J.; Fang, N.; et al. CellFM: A Large-Scale Foundation Model Pre-Trained on Transcriptomics of 100 Million Human Cells. Nat. Commun. 2025, 16, 4679.

- Fournier, Q.; Vernon, R.M.; Van Der Sloot, A.; Schulz, B.; Chandar, S.; Langmead, C.J. Protein Language Models: Is Scaling Necessary? bioRxiv 2024.

- Smietana, K.; Siatkowski, M.; Møller, M. Trends in Clinical Success Rates. Nat. Rev. Drug Discov. 2016, 15, 379–380.

- Mullard, A. Parsing Clinical Success Rates. Nat. Rev. Drug Discov. 2016, 15, 447.

- Yamaguchi, S.; Kaneko, M.; Narukawa, M. Approval Success Rates of Drug Candidates Based on Target, Action, Modality, Application, and Their Combinations. Clin. Transl. Sci. 2021, 14, 1113–1122.

- Wang, M.; Zhang, Z.; Bedi, A.S.; Velasquez, A.; Guerra, S.; Lin-Gibson, S.; Cong, L.; Qu, Y.; Chakraborty, S.; Blewett, M.; et al. A Call for Built-in Biosecurity Safeguards for Generative AI Tools. Nat. Biotechnol. 2025, 43, 845–847.

- de Lima, R.C.; Sinclair, L.; Megger, R.; Maciel, M.A.G.; Vasconcelos, P.F.d.C.; Quaresma, J.A.S. Artificial Intelligence Challenges in the Face of Biological Threats: Emerging Catastrophic Risks for Public Health. Front. Artif. Intell. 2024, 7, 1382356.

- Wheeler, N.E. Responsible AI in Biotechnology: Balancing Discovery, Innovation and Biosecurity Risks. Front. Bioeng. Biotechnol. 2025, 13, 1537471.

- Brent, R.; McKelvey, T.G., Jr. Contemporary AI Foundation Models Increase Biological Weapons Risk. arXiv 2025, arXiv:2506.13798.

- OpenAI. Preparing for Future AI Capabilities in Biology. Available online: https://openai.com/index/preparing-for-future-ai-capabilities-in-biology/ (accessed on 29 August 2025).

| Database | URL | Human Interactions |
|---|---|---|
| BioGRID [31] | https://thebiogrid.org (accessed on 1 August 2025) | 1,890,522 |
| STRING [58] | https://string-db.org (accessed on 1 August 2025) | 2,219,787 |
| IntAct [59] | https://www.ebi.ac.uk/intact (accessed on 1 August 2025) | 1,702,367 |
| MINT [60] | https://mint.bio.uniroma2.it (accessed on 1 August 2025) | 139,901 |
| Propedia [61] | http://bioinfo.dcc.ufmg.br/propedia (accessed on 1 August 2025) | 19,813 |

| Metric | Summary | Comment |
|---|---|---|
| Accuracy (Ac) | Fraction of correctly classified pairs | Not useful in the context of PPI prediction, as it is dominated by the correct classification of non-interacting pairs, which vastly outnumber interacting pairs (high class imbalance) |
| Recall (Re) | Fraction of interacting pairs that are predicted to interact | |
| Precision (Pr) | Fraction of pairs predicted to interact that truly interact | Useful under high class imbalance; allows estimation of the number of experiments required to identify a fixed number of new interacting pairs |
| Specificity (Sp) | Fraction of non-interacting pairs that are predicted not to interact | Usually not particularly relevant in the context of PPI prediction |
| F1-score | Harmonic mean of precision and recall | |
| Prevalence-corrected precision (PCPr) | Precision expressed as a function of recall, specificity, and the class imbalance ratio | Particularly useful, as it allows the expected precision to be estimated for different hypothetical imbalance ratios (the true ratio is often unknown) |
| Area under the receiver operating characteristic curve (AUROC) | Average recall–specificity tradeoff over the range of operating thresholds | Insensitive to class imbalance; likely to lead to overoptimistic performance estimates |
| Area under the precision–recall curve (AUPRC) | Average recall–precision tradeoff over the range of operating thresholds | Sensitive to class imbalance; captures precision, a highly relevant metric in the context of PPI prediction |
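
The threshold-dependent rows of this table can be made concrete with a short plain-Python sketch. The prevalence-corrected precision function assumes the form obtained by rewriting precision in terms of recall, specificity, and an imbalance ratio r (non-interacting pairs per interacting pair): with P interacting pairs, TP = Re·P and FP = (1 − Sp)·r·P, so PCPr = Re / (Re + r(1 − Sp)) and P cancels out. The counts and the pairwise `auroc` helper (a Mann–Whitney style estimate) are illustrative assumptions, not the evaluation code of any cited study.

```python
def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Threshold-dependent metrics from confusion-matrix counts of protein pairs."""
    recall = tp / (tp + fn)          # interacting pairs recovered
    precision = tp / (tp + fp)       # positive calls that truly interact
    specificity = tn / (tn + fp)     # non-interacting pairs correctly rejected
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"Ac": accuracy, "Re": recall, "Pr": precision, "Sp": specificity, "F1": f1}


def prevalence_corrected_precision(recall: float, specificity: float, ratio: float) -> float:
    """Expected precision with `ratio` non-interacting pairs per interacting pair.

    Derived from TP = Re * P and FP = (1 - Sp) * ratio * P; P cancels out.
    """
    return recall / (recall + ratio * (1.0 - specificity))


def auroc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """Fraction of (interacting, non-interacting) pairs ranked correctly; ties count 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical test set: 100 interacting and 10,000 non-interacting pairs (1:100).
    m = confusion_metrics(tp=50, fp=100, tn=9_900, fn=50)
    print(m)  # accuracy is high even though two of three positive calls are wrong
    for r in (1, 10, 100):
        print(r, round(prevalence_corrected_precision(m["Re"], m["Sp"], r), 3))

    pos, neg = [0.9, 0.8, 0.4], [0.7, 0.3, 0.2, 0.1]
    print(auroc(pos, neg), auroc(pos, neg * 10))  # identical: AUROC ignores the ratio
```

With recall 0.5 and specificity 0.99, PCPr is about 0.98 at a 1:1 ratio but only about 0.33 at 1:100, which matches the directly computed precision of the hypothetical 1:100 test set; duplicating every negative score leaves AUROC unchanged.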

| Feature Set | Input | Dimension | Description |
|---|---|---|---|
| Amino acid composition (AAC) | Amino acid sequence | 20 | Frequency of each amino acid within the sequence of interest; limited information content (no evolutionary or structural information, etc.) |
| Conjoint triad method (CT) | Amino acid sequence and a reduced letter code built from shared physicochemical features | 343 (for a 7-letter code) | The sequence is rewritten in a reduced alphabet (usually 7 letters), with each amino acid assigned to one letter based on its physicochemical properties; the counts of each possible triplet of letters form the feature vector |
| Composition, transition and distribution (CTD) | Amino acid sequence and 3 amino acid groups defined for each of 7 physicochemical properties | 441 | Residues are divided into 3 groups for each of 7 physicochemical properties. Composition (C): proportion of residues in the sequence belonging to each of the 3 groups; Transition (T): number of transitions between each pair of groups (in either direction); Distribution (D): fraction of the chain length within which the first residue and the first 25%, 50%, 75%, and 100% of the residues of a group are located. C, T, and D are computed for all 7 properties on each of three equal thirds of the protein and concatenated |
| Pseudo amino acid composition (PseAAC) | Amino acid sequence and a set of physicochemical properties | 20 + λ | Amino acid composition descriptors augmented with λ correlation factors that capture correlations in hydrophobicity, hydrophilicity, and side-chain mass between residues up to λ positions apart |
| Position-specific scoring matrix (PSSM) | Amino acid sequence and a reference protein database | 20 × L (matrix) | Matrix giving, for each of the L positions in the sequence, the likelihood of substitution by each of the 20 amino acids over evolution; rich in evolutionary/phylogenetic information |
| Autocorrelation of physicochemical properties (AC) | Amino acid sequence, physicochemical properties, and a “lag” parameter defining the window size within which properties are aggregated | 14 (for 7 properties) | Autocorrelation of physicochemical property values between residues within neighborhoods whose size is set by the “lag” |
| ProtDCal-extracted features | Amino acid sequence and a list of physicochemical properties and aggregators | >10,000 (variable) | Ensemble of grouping schemes, weights, and aggregation operations applied to the physicochemical properties of the amino acids in a sequence |
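
Two of the simplest rows of this table, AAC and the conjoint triad, can be sketched in a few lines of plain Python. The 7-letter grouping in `CT_GROUPS` is an assumption (one grouping used in the conjoint triad literature, based on side-chain dipole and volume); published implementations may differ in grouping, triplet ordering, and normalization. Following the table, the sketch returns raw triplet counts rather than normalized frequencies.

```python
from itertools import product

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Assumed 7-class grouping (side-chain dipole and volume); other groupings exist.
CT_GROUPS = ["AGV", "ILFP", "YMTS", "HNQW", "RK", "DE", "C"]
CT_CLASS = {aa: str(k) for k, group in enumerate(CT_GROUPS) for aa in group}
# Fixed order of the 7**3 = 343 possible class triplets: "000", "001", ..., "666".
TRIPLET_INDEX = {"".join(t): k for k, t in enumerate(product("0123456", repeat=3))}


def aac(seq: str) -> list[float]:
    """Amino acid composition: relative frequency of each of the 20 standard residues."""
    return [seq.count(aa) / len(seq) for aa in AMINO_ACIDS]


def conjoint_triad(seq: str) -> list[int]:
    """Counts of every overlapping class triplet after recoding the sequence (343-D).

    Assumes only the 20 standard residues; other symbols (e.g. X, U) raise KeyError.
    """
    reduced = "".join(CT_CLASS[aa] for aa in seq)
    counts = [0] * len(TRIPLET_INDEX)
    for i in range(len(reduced) - 2):
        counts[TRIPLET_INDEX[reduced[i:i + 3]]] += 1
    return counts


if __name__ == "__main__":
    print(aac("MKTAYIAK"))
    triad = conjoint_triad("MKTAYIAKQR")
    print(len(triad), sum(triad))  # 343 features; 8 overlapping triplets in 10 residues
```

Because the reduced alphabet has only 7 letters, the vector length stays at 343 regardless of protein length, which is what makes CT convenient as a fixed-size input to classical classifiers.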

| Model | Embedding Dimension | Parameters (Approx.) | Training Strategy | Training Data |
|---|---|---|---|---|
| ProtT5 [138] | 1024 | 3B | 1-gram random masking with demasking | BFD (pre-training; ~2.1B sequences) and UniRef50 (finetuning; ~45M sequences) |
| Ankh [139] | 1536 | 1B | 1-gram random masking with full sequence reconstruction | UniRef50 (~45M sequences) |
| ESM-2 [35] | 1280 | 650M | 1-gram random masking with demasking | UniRef50+90 (~65M sequences) |
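
The models in this table return one embedding per residue (an L × d matrix, with d given by the embedding dimension column), whereas most pair classifiers expect fixed-length inputs. A common approach, assumed here rather than taken from any particular predictor, is to mean-pool over residues and then combine the two protein vectors symmetrically so that the score does not depend on the order of the pair. The toy 2-dimensional vectors stand in for real model outputs, and `pair_features` is a hypothetical helper.

```python
def mean_pool(residue_embeddings: list[list[float]]) -> list[float]:
    """Average an L x d matrix of per-residue embeddings into a single d-dimensional vector."""
    length = len(residue_embeddings)
    dim = len(residue_embeddings[0])
    return [sum(row[j] for row in residue_embeddings) / length for j in range(dim)]


def pair_features(emb_a: list[float], emb_b: list[float]) -> list[float]:
    """Symmetric 2d-dimensional pair vector: element-wise product, then absolute difference."""
    if len(emb_a) != len(emb_b):
        raise ValueError("both proteins must be embedded by the same model")
    prod = [a * b for a, b in zip(emb_a, emb_b)]
    abs_diff = [abs(a - b) for a, b in zip(emb_a, emb_b)]
    return prod + abs_diff


if __name__ == "__main__":
    # Toy per-residue embeddings (d = 2) standing in for real outputs (d = 1024 to 1536).
    protein_a = mean_pool([[1.0, 2.0], [3.0, 4.0]])
    protein_b = mean_pool([[0.0, 1.0], [2.0, 1.0], [4.0, 1.0]])
    print(pair_features(protein_a, protein_b))
    print(pair_features(protein_a, protein_b) == pair_features(protein_b, protein_a))
```

Simply concatenating the two pooled vectors would also work, but it makes the representation order-dependent, so the pairs (A, B) and (B, A) could receive different scores; the product/difference combination avoids this at the cost of a 2d-dimensional input (2560 for ESM-2 650M).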

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Charih, F.; Green, J.R.; Biggar, K.K. Sequence-Based Protein–Protein Interaction Prediction and Its Applications in Drug Discovery. Cells 2025, 14, 1449. https://doi.org/10.3390/cells14181449
