Abstract

The production of facility vegetables is of great significance, but it still faces limitations in yield and quality. Optical sensing technology offers a rapid, non-destructive alternative to traditional destructive methods of phenotypic analysis. This article reviews nine optical sensing technologies, including RGB imaging, and introduces how various algorithms are combined with their detection principles across the entire growth cycle and for the key phenotypic traits of facility vegetables. Each technology has its own advantages. For example, RGB imaging and multispectral/hyperspectral imaging are the most frequently used, while thermal imaging is particularly suitable for early detection of abiotic and biotic stress responses; together, these technologies can effectively obtain physiological, biochemical, yield, and quality information about crops. However, current research mainly focuses on laboratory verification, and a significant gap remains before practical production use. Future progress will depend on the integration of multiple sensing technologies, data analysis based on artificial intelligence, and improvements in model interpretability. These developments will be crucial for achieving precise breeding and intelligent greenhouse management, and will drive the field's gradual transition from basic phenotypic analysis to comprehensive decision support systems.

1. Introduction

Facility vegetables refer to vegetable varieties cultivated in artificially controlled environments, such as greenhouses and plastic tunnels, to enable year-round production. They include common crops such as tomatoes, cucumbers, and lettuce. Stable production of protected-culture vegetables plays a vital role in global agriculture, alleviating seasonal supply gaps and ensuring consistent vegetable availability throughout the year. According to the “National Modern Facility Agriculture Construction Plan (2023–2030)” issued by China's Ministry of Agriculture and Rural Affairs, China's facility planting area reached about 2.67 million hectares as of 2021, of which facility vegetables accounted for more than 80%, ranking China first in the world. Facility vegetable production reached 230 million tons, accounting for 30% of total vegetable production. However, the development of facility vegetables still faces the problems of insufficient total output and low quality [1].

With population growth and resource constraints, improving the yield and quality of facility vegetables has become a key challenge. Plant phenotyping is essential for measuring and evaluating complex traits related to plant growth, yield, and other important agricultural characteristics [2]; these traits reflect physiological, biochemical, yield, and quality characteristics, as well as morphological and structural characteristics, during plant growth [3]. By analyzing large amounts of crop phenotypic data, it is possible to gain insight into how genes regulate the expression of crop traits and how environmental factors affect crop growth and development, which in turn can inform crop genetic improvement and molecular design breeding [4,5]. Therefore, accurately acquiring phenotypic information about vegetables can help breed high-yielding, high-quality vegetable germplasm, which not only helps ensure food security but also promotes the development of the facility vegetable industry [6].

Traditionally, vegetable traits have been assessed by visual observation and manual measurement, which is not only time-consuming and laborious but also highly subjective [6]. High-performance liquid chromatography (HPLC) [7] and electrochemical analysis [8] can provide accurate physiological and biochemical parameters for vegetables, but because they require destructive sampling, they cannot support continuous in situ detection and offer poor real-time performance. Optical sensing technologies, including RGB imaging, multispectral imaging (MSI), hyperspectral imaging (HSI), thermal imaging, chlorophyll fluorescence imaging (CFI), Raman spectroscopy (RS), terahertz (THz) imaging, X-ray imaging (including computed tomography, CT), optical coherence tomography (OCT), and other optical detection methods, are rapid and non-destructive and have been widely used in crop phenotyping research [9]. By analyzing the interactions between light and plant tissues, these technologies can obtain physiological, biochemical, yield, quality, internal, external, and other characteristic information about facility vegetables.

RGB imaging records how plants reflect or absorb light of different colors under illumination, converts these signals into electrical and then digital signals to form color images, and enables extraction of the external morphology of facility vegetables [10]. Multispectral and hyperspectral imaging reflect the composition and content of chemical constituents within facility vegetables, such as nitrogen, phosphorus, and potassium, through differences in the light absorption, reflection, and transmission characteristics of different substances [11,12]. Thermal imaging, based on infrared radiation theory and highly sensitive to temperature, can rapidly obtain high-precision heat distribution maps of the measured area, such as the canopy and leaf temperatures of facility vegetables [13], to reveal their physiological and water stress status [14]. Chlorophyll fluorescence imaging (CFI) can assess the photosynthetic activity of facility vegetables by capturing the spatial and temporal heterogeneity of fluorescence emission patterns within cells, leaves, or whole plants [15]. Raman spectroscopy uses the Raman scattering produced by the interaction of light with molecules to analyze the chemical composition of facility vegetables [16]. Terahertz technology uses the interaction of terahertz waves with matter to detect the internal structure of facility vegetables; because terahertz waves are highly penetrating and sensitive to moisture, they can pass through the surface layer of plants and detect the moisture content of plant tissues [17]. X-rays penetrate vegetables, and because different tissues absorb X-rays to different degrees, images of the interior of facility vegetables can be generated and used to check for internal defects [18].

From planting to consumption, facility vegetables pass through critical stages including seedling growth, vegetative growth, flowering and fruit set, fruit enlargement and ripening, harvesting and grading, and transportation and storage. Because their principles and characteristics differ, the various optical sensing technologies have distinct application scenarios and value across these stages. At the same time, the four core phenotypic traits of facility vegetables (biochemical, physiological, yield, and quality) are directly related to growth status assessment and economic value. Optical sensing technology is the core means of detecting these traits non-destructively and efficiently. On this basis, this article systematically reviews the current applications of nine mainstream optical sensing technologies across the entire growth cycle of protected-culture vegetables. It analyzes the operating mechanisms and practical effectiveness of each technology in detecting the four types of phenotypic traits, identifies the bottlenecks currently limiting their application, and outlines future development directions. The aim is to provide theoretical support for technology selection and the translation of research results in protected-culture vegetable phenotyping, thereby advancing the industry toward precision and intelligence.

2. Overview of the Application of Optical Sensing Technology in Different Growth Cycles of Facility Vegetables

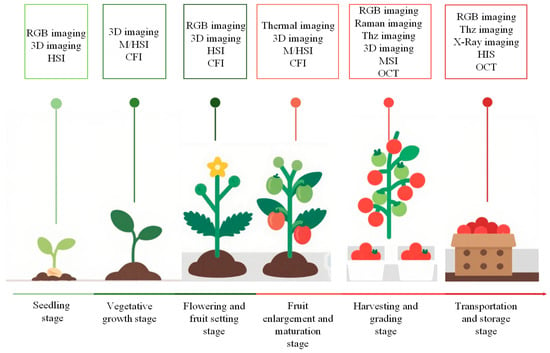

This section provides an overview of the application scenarios of the nine technologies across six stages, from seedling growth to transportation and storage. The sensors applied at each growth stage are shown in Figure 1.

Figure 1. Application of optical sensing technology in different growth cycles of facility vegetables.

2.1. RGB Imaging

RGB imaging captures reflected or transmitted light signals from an object in the visible band (380–780 nm) and converts them into digital image data through optoelectronic conversion and digital signal processing [11]. In agriculture, RGB imaging is widely applied and commonly used for crop phenotype extraction [19], biomass estimation [20], maturity detection [21], and so on.

Detecting the growth stage of seedlings is a key issue in plant science. It involves identifying key milestones, such as seedling emergence from the soil, cotyledon unfolding, and the appearance of the first leaf, which represent the initial stages of plant development. The success or failure of these developmental stages, and the rate at which they progress, can significantly affect subsequent plant development [22]. RGB imaging plays an important role in measuring seed germination rates: by analyzing seed images, germinated and non-germinated seeds can be accurately identified and the germination rate calculated. Image recognition algorithms can detect morphological features of seeds, such as rupture of the seed coat and protrusion of the radicle, to determine whether a seed has germinated [23]. RGB imaging also shows unique advantages in assessing seedling vigor. By analyzing the color, shape, and size of a seedling, its growth and health can be determined: healthy seedlings usually have bright green leaves, while yellowing or wilting leaves may indicate growth problems. Seedling growth can be assessed by measuring morphological parameters such as stem thickness and leaf area [24].
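To make the color-based seedling assessment concrete, the sketch below computes the Excess Green index (ExG = 2g − r − b on sum-normalized RGB values), a common vegetation index that the cited studies do not necessarily use, and reports the fraction of pixels classified as green tissue. The pixel values and the 0.1 threshold are hypothetical; a real pipeline would read images with an imaging library and calibrate the threshold for its camera and lighting.

```python
def excess_green(r: float, g: float, b: float) -> float:
    """Excess Green index ExG = 2g - r - b on sum-normalized (chromatic) RGB."""
    total = r + g + b
    if total == 0:
        return 0.0  # pure black pixel: treat as background
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn


def green_cover_fraction(pixels, threshold: float = 0.1) -> float:
    """Share of pixels whose ExG exceeds a (hypothetical) vegetation threshold."""
    if not pixels:
        return 0.0
    green = sum(1 for r, g, b in pixels if excess_green(r, g, b) > threshold)
    return green / len(pixels)


# Hypothetical 8-bit pixels: two leaf-green, one soil-brown, one black.
pixels = [(40, 180, 40), (120, 100, 80), (0, 0, 0), (30, 150, 60)]
print(green_cover_fraction(pixels))  # 0.5
```

Normalizing by the channel sum makes the index less sensitive to overall brightness, which is why chromatic coordinates are often preferred over raw RGB values for this kind of segmentation.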

During the flowering and fruiting stage of facility vegetables, RGB imaging's sensitivity to color and morphology makes it important for counting flowers and monitoring their status. When plant images are processed and analyzed, image recognition algorithms can accurately identify and count flowers based on differences in color and morphology between flowers and leaves, stems, and other organs. For instance, the “bwconncomp” function in the MATLAB Image Processing Toolbox groups connected foreground pixels of a binary image into components, which can be used to determine whether pixels belong to the same flower [25]. By analyzing features such as the color, shape, and texture of flowers, their developmental stage and health can be determined. Fully open flowers are brightly colored with spreading petals, while stunted flowers or those affected by pests and diseases may be dull in color or have misshapen petals or disease spots. Studies have shown that quantitative analysis of flower color can be used to assess the physiological status of flowers and predict their potential for fruit set [26].
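MATLAB's “bwconncomp” labels connected foreground pixels in a binary image, using 8-connectivity by default for 2-D images. The Python sketch below reproduces that grouping with a breadth-first flood fill, assuming a flower mask has already been produced (for example, by color thresholding). The helper name flower_regions and its min_pixels noise filter are illustrative choices, not part of the cited method.

```python
from collections import deque


def flower_regions(mask, min_pixels: int = 1):
    """Return sizes of 8-connected foreground regions in a binary mask.

    mask is a list of equal-length rows containing 0/1 values. Regions smaller
    than min_pixels (a hypothetical noise filter) are discarded.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Flood-fill a new region breadth-first over its 8 neighbours.
            seen[r0][c0] = True
            queue = deque([(r0, c0)])
            size = 0
            while queue:
                r, c = queue.popleft()
                size += 1
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and mask[nr][nc] and not seen[nr][nc]):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            if size >= min_pixels:
                sizes.append(size)
    return sizes


mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 1, 1],
]
print(flower_regions(mask))                     # [5, 1, 1, 2]
print(len(flower_regions(mask, min_pixels=2)))  # 2
```

Note that the pixel at row 2, column 2 joins the top-left block only through a diagonal neighbour; with 4-connectivity it would be counted as a separate flower, so the connectivity choice directly affects the count.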

Accurate flower counts and condition monitoring are important for predicting fruit set. Flower number is directly related to the potential number of fruits set, while flower condition affects the success of pollination and fertilization [27]. Through long-term image monitoring and data analysis, the relationship between flower number, flower condition, and fruit set rate can be modeled to give growers predictive information on fruit set, helping them make production management decisions in advance, such as adjusting fertilization, irrigation, and pest control measures to improve fruit set rate and fruit yield [28].

During the harvesting and grading stage of facility vegetables, RGB imaging is mainly used for appearance inspection. An RGB imaging system captures color images of the produce, and image recognition algorithms such as SOLO can analyze the position of each fruit, facilitating harvesting [29].

2.2. Three-Dimensional Imaging

3D imaging is based on principles such as optical triangulation, time of flight, or interferometry, implemented through sensing systems such as LiDAR, structured light, or stereo vision. These systems actively emit or passively receive light signals and, through point cloud processing and 3D reconstruction algorithms, ultimately obtain three-dimensional information about the measured object, including its spatial coordinates, surface topography, and structural characteristics [30].
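Two of the ranging principles named above reduce to short formulas: a time-of-flight sensor halves the round-trip light path (d = c·Δt/2), and a rectified stereo pair triangulates depth as Z = f·B/disparity, with focal length f in pixels and baseline B in metres. The sketch below encodes both; the numeric inputs in the usage lines are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, vacuum value (close enough in air here)


def tof_distance(round_trip_s: float) -> float:
    """Time of flight: light goes to the target and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Rectified stereo triangulation: Z = f * B / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


print(tof_distance(10e-9))          # about 1.499 m for a 10 ns round trip
print(stereo_depth(800, 0.06, 40))  # about 1.2 m
```

The stereo formula also shows why depth precision drops with distance: a one-pixel disparity error matters far more when the disparity itself is small.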

During the nursery stage of facility vegetables, 3D imaging can obtain three-dimensional morphological information about seedlings, providing more comprehensive data for studying seedling growth and development. It can measure key phenotypic parameters such as plant height, stem thickness, leaf area, and leaf inclination without contact, quantify seedling growth and population uniformity, and accurately identify weak, overgrown, or lagging areas, thus guiding precise seedling spacing, replanting of missing seedlings, or adjustment of environmental control strategies [31]. It can also be combined with multispectral or thermal imaging data to detect the early stages of pest infestation, disease, water stress, or nutrient deficiency through three-dimensional changes in leaf morphology (e.g., wilting, curling) or abnormalities in canopy temperature [32]. In addition, 3D imaging can assist automated nursery equipment, for example by guiding transplanting robots to grasp seedlings accurately without mechanical damage, or by enabling supplemental lighting systems to adjust light angle and intensity dynamically according to canopy structure to ensure uniform growth [33]. By continuously monitoring growth dynamics, the technology provides data support for precise agricultural management during the nursery stage, significantly improving seedling quality and production efficiency [34,35].
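As an illustration of extracting plant height from 3D data, the sketch below takes a seedling point cloud as (x, y, z) tuples in metres, assumes the tray or ground surface height is already known, and uses a high percentile of z rather than the maximum so that a few stray points do not inflate the estimate. The default 99th percentile and the sample cloud are assumptions, not values from the cited studies.

```python
def percentile(values, q: float) -> float:
    """Linearly interpolated percentile, q in [0, 100], of a non-empty sequence."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def plant_height(points, ground_z: float, top_q: float = 99.0) -> float:
    """Height of the canopy top above ground from (x, y, z) points in metres.

    A high percentile of z, rather than the maximum, limits the effect of
    isolated noise points; the default of 99 is an assumption.
    """
    zs = [z for _, _, z in points]
    return percentile(zs, top_q) - ground_z


# Hypothetical seedling cloud: tray surface at z = 0.02 m.
cloud = [(0.0, 0.0, 0.02), (0.01, 0.0, 0.05), (0.0, 0.01, 0.09),
         (0.01, 0.01, 0.12), (0.0, 0.02, 0.14)]
print(round(plant_height(cloud, ground_z=0.02, top_q=100.0), 3))  # 0.12
```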

Three-dimensional imaging provides a new technical means for fine-grained management of facility vegetables during the flowering and fruiting stage [36]. Using methods such as LiDAR and structured light, it can accurately obtain the three-dimensional spatial structure of plants and construct high-precision 3D models of them. These models clearly present the spatial distribution of flowers on the plant, including key parameters such as position, opening angle, and growth orientation, which are crucial for morphometrics, mechanical performance research, and the development of mechanized equipment [37]. In strawberry cultivation, for example, 3D imaging can visualize the distribution density and growth status of flowers at different canopy locations, helping growers assess light uniformity and plant growth and providing a scientific basis for optimizing planting density and light management, thus improving productivity [38].

2.3. Multispectral and Hyperspectral Imaging

Multispectral imaging acquires images of a target in multiple wavelength bands [39], so it yields richer information than monochrome or color images. Compared with hyperspectral imaging, multispectral imaging has a lower spectral resolution: about 30–50 nm in the visible and near-infrared bands, whereas the spectral resolution of hyperspectral imaging is usually below 10 nm [40]. Hyperspectral imaging acquires a series of images of the sample in contiguous wavelength bands under the same conditions, producing a hyperspectral data cube. This data cube combines the spatial and spectral information of the sample, which is extremely helpful for further analysis [41].
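The hyperspectral data cube can be pictured as a rows × cols × bands array: fixing a pixel gives its spectrum, and fixing a band gives an ordinary grayscale image. The minimal sketch below uses nested Python lists and a hypothetical synthetic "reflectance" function purely to show the two slicing directions; real cubes are usually held in array libraries.

```python
def make_cube(rows, cols, wavelengths_nm, reflectance):
    """Build a rows x cols x bands cube as nested lists: cube[r][c][band]."""
    return [[[reflectance(r, c, wl) for wl in wavelengths_nm]
             for c in range(cols)]
            for r in range(rows)]


def pixel_spectrum(cube, r, c):
    """Spectral dimension: every band at one spatial location."""
    return list(cube[r][c])


def band_image(cube, band):
    """Spatial dimensions: one band across the whole scene (a grayscale image)."""
    return [[pixel[band] for pixel in row] for row in cube]


wavelengths = [450, 550, 650, 750]  # hypothetical band centres, nm
# Hypothetical integer "reflectance" that encodes its own position for clarity.
cube = make_cube(2, 3, wavelengths, lambda r, c, wl: r * 10000 + c * 1000 + wl)
print(pixel_spectrum(cube, 1, 2))  # [12450, 12550, 12650, 12750]
print(band_image(cube, 0))         # [[450, 1450, 2450], [10450, 11450, 12450]]
```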

During the vegetative growth stage of facility vegetables, these techniques can accurately monitor growth dynamics [42]. For example, leaf nutrient content can be estimated from multispectral indices using inversion models built with ordinary linear regression (OLR), multiple stepwise regression (MSR), and ridge regression (RR), providing a basis for precise fertilization [43]. The techniques can also efficiently diagnose deficiency symptoms, such as abnormal reflectance in the red and near-infrared bands under nitrogen deficiency and changes in blue–green reflectance characteristics under phosphorus deficiency. Studies have confirmed that the accuracy of cucumber nutrient-deficiency diagnosis based on hyperspectral imaging can exceed 90% [44], which significantly improves the rigor and accuracy of facility vegetable production management and provides reliable technical support for high quality and high yield.
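The index-plus-regression workflow can be sketched as follows, under clearly stated assumptions: NDVI = (NIR − Red)/(NIR + Red) stands in for the multispectral indices of the cited work, and ridge regression with two predictors is solved in closed form on centered data, (XᵀX + λI)β = Xᵀy, with the intercept left unpenalized. The predictor values, the response values, and λ are hypothetical; in practice λ would be chosen by cross-validation.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def ridge_fit_2(x1, x2, y, lam: float):
    """Ridge regression y ~ b0 + b1*x1 + b2*x2, solved in closed form.

    Data are centred so the intercept b0 is not penalised; the 2x2 system
    (X^T X + lam*I) b = X^T y is inverted directly.
    """
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    a = [v - m1 for v in x1]
    b = [v - m2 for v in x2]
    c = [v - my for v in y]
    s11 = sum(p * p for p in a) + lam
    s22 = sum(q * q for q in b) + lam
    s12 = sum(p * q for p, q in zip(a, b))
    t1 = sum(p * r for p, r in zip(a, c))
    t2 = sum(q * r for q, r in zip(b, c))
    det = s11 * s22 - s12 * s12
    b1 = (s22 * t1 - s12 * t2) / det
    b2 = (s11 * t2 - s12 * t1) / det
    return my - b1 * m1 - b2 * m2, b1, b2


print(round(ndvi(0.5, 0.1), 3))  # 0.667

# Hypothetical predictors (two spectral indices) and leaf nutrient values.
x1 = [0.0, 1.0, 0.0, 1.0]
x2 = [0.0, 0.0, 1.0, 1.0]
y = [1.0, 3.0, 4.0, 6.0]
print(ridge_fit_2(x1, x2, y, lam=0.0))  # (1.0, 2.0, 3.0): ordinary least squares
print(ridge_fit_2(x1, x2, y, lam=1.0))  # (2.25, 1.0, 1.5): slopes shrunk toward 0
```

With λ = 0 the fit reduces to ordinary least squares; increasing λ shrinks the slopes, which is what stabilizes ridge models when several vegetation indices are strongly correlated.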

In addition, near-infrared hyperspectral imaging has been widely used during the fruit expansion and ripening stage of facility vegetables. The core principle is that different chemical components have characteristic absorption spectra in the near-infrared band (780–2500 nm), where key quality components such as sugars, organic acids, and water produce specific molecular vibrational absorption peaks [45]. By collecting diffuse reflectance or transmission spectra from the fruit surface and using multivariate statistical methods (e.g., partial least squares regression, principal component analysis) to model the quantitative relationship between spectral features and quality parameters, non-destructive testing can be achieved [46]. For tomato fruit Brix detection, near-infrared spectroscopy combined with PLS modeling achieves a prediction accuracy above 90% [47]. This approach provides multidimensional data support for fruit harvesting, irrigation control, and quality grading, and significantly improves the accuracy and efficiency of fruit and vegetable quality management in facilities.

2.4. Chlorophyll Fluorescence Imaging

Chlorophyll fluorescence emission is closely linked to photochemical reactions, so it can be used to probe photosynthesis, plant physiology, and environmental stress. Chlorophyll fluorescence detection plays an important role in studying plant tissues such as leaves at the macro level and chloroplasts and other organelles at the micro level, without damaging the plant. Chlorophyll fluorescence measurement is therefore regarded as a fast, non-destructive probe of plant photosynthetic function [48].

Currently, chlorophyll fluorescence techniques are mainly based on Butler's energy competition model of photosynthesis. The model holds that light energy absorbed by chlorophyll molecules is dissipated through three competing pathways: photochemical reactions in the photosynthetic system, heat dissipation, and chlorophyll fluorescence. Measuring chlorophyll fluorescence can therefore indirectly reflect how light energy is absorbed, transferred, dissipated, and distributed in the plant photosynthetic system [49].

During the vegetative growth stage of facility vegetables, chlorophyll fluorescence imaging assesses photosynthetic performance by quantifying core parameters such as the maximum photochemical efficiency (Fv/Fm) and the actual photochemical efficiency (ΦPSII); in healthy plants, Fv/Fm is typically about 0.8 [50]. It enables early diagnosis of abiotic stresses, such as water deficit, which causes abnormal fluorescence parameters [51], and of biotic stresses, such as the changes in fluorescence characteristics at the early stage of cucumber downy mildew infection [52]. Its spatially resolved images can localize where stress begins, providing a visual basis for precise control measures (e.g., targeted irrigation, early disease prevention and control) [53]. By visualizing and quantifying photosynthetic physiological responses, this approach significantly improves the timeliness of stress warnings and the accuracy of management measures in facility vegetable production [54].
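The two fluorescence parameters above follow standard definitions: Fv/Fm = (Fm − F0)/Fm for a dark-adapted leaf, where F0 and Fm are the minimal and maximal fluorescence, and ΦPSII = (Fm′ − Fs)/Fm′ under illumination, where Fs is steady-state and Fm′ light-adapted maximal fluorescence. The sketch below computes both and flags pixels of an Fv/Fm map that fall below a threshold; the 0.75 cutoff is a hypothetical choice motivated by the typical healthy value of about 0.8, not a value from the cited studies.

```python
def fv_fm(f0: float, fm: float) -> float:
    """Maximum PSII photochemical efficiency of a dark-adapted leaf."""
    return (fm - f0) / fm


def phi_psii(fs: float, fm_prime: float) -> float:
    """Effective PSII efficiency in the light-adapted state."""
    return (fm_prime - fs) / fm_prime


def stress_mask(f0_img, fm_img, threshold: float = 0.75):
    """Per-pixel flag: True where Fv/Fm falls below the (hypothetical) threshold."""
    return [[fv_fm(f0, fm) < threshold for f0, fm in zip(row0, rowm)]
            for row0, rowm in zip(f0_img, fm_img)]


# Hypothetical 1 x 3 fluorescence images (arbitrary units).
f0_img = [[200.0, 250.0, 400.0]]
fm_img = [[1000.0, 1000.0, 1000.0]]
print(stress_mask(f0_img, fm_img))  # [[False, False, True]]
```

Computing the parameter per pixel, rather than per leaf, is what gives fluorescence imaging its ability to localize where stress begins.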

2.5. Thermal Imaging

Thermal imaging is based mainly on infrared radiation thermometry: it detects the infrared energy spontaneously emitted from an object's surface to obtain its temperature distribution. Thermal imaging sensors convert infrared radiation into electrical signals, which are processed into intuitive thermal images in which color gradients reflect temperature variations across the object under test [13].

During the fruit expansion and ripening stage of facility vegetables, this technology is applied mainly through non-contact monitoring of crop surface temperature distribution, which provides a scientific basis for precision agricultural management [55]. In water management, for example, leaf temperature changes reveal the transpiration status of the plant, so that irrigation strategies can be optimized to prevent water stress from affecting fruit expansion [56]. Thermal imaging can also identify localized temperature anomalies caused by diseases or nutrient deficiencies, providing early warning of diseases and guiding precise control [57]. For fruit ripening, the technology can capture subtle temperature changes caused by metabolic activity; moreover, because different objects have different specific heat capacities at different temperatures, their thermal images can serve as a clear indicator of maturity, helping to determine the optimal harvest time [58]. For light management, thermal imaging can detect leaf overheating under strong light and help adjust supplemental lighting or shading schedules to avoid photoinhibition that would reduce photosynthetic efficiency [59]. In the future, deeper integration with the Internet of Things and the spread of low-cost equipment are expected to allow thermal imaging to further improve the yield and quality of facility vegetables.
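The text does not name a specific index for judging transpiration from leaf temperature; one widely used option is the crop water stress index, CWSI = (Tc − Twet)/(Tdry − Twet), where Tc is the canopy temperature and Twet and Tdry are reference temperatures of a fully transpiring and a non-transpiring surface. The sketch below assumes those reference temperatures are available and clamps the result to [0, 1]; values near 1 indicate severe water stress. The temperatures in the usage loop are hypothetical.

```python
def cwsi(t_canopy: float, t_wet: float, t_dry: float) -> float:
    """Crop water stress index, clamped to [0, 1].

    0 means the canopy is as cool as a fully transpiring reference,
    1 means it is as warm as a non-transpiring reference.
    """
    if t_dry <= t_wet:
        raise ValueError("t_dry must exceed t_wet")
    index = (t_canopy - t_wet) / (t_dry - t_wet)
    return min(1.0, max(0.0, index))


# Hypothetical leaf temperatures (deg C) read from a thermal image.
for t_leaf in (24.5, 28.0, 33.0):
    print(t_leaf, cwsi(t_leaf, t_wet=24.0, t_dry=32.0))
```

Normalizing against the two references makes the index less dependent on air temperature and humidity than raw leaf temperature, which is why such indices are preferred for irrigation decisions.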

2.6. Raman Imaging

Raman imaging is a molecular spectroscopic method based on the Raman scattering effect. When laser light interacts with sample molecules, some photons are scattered inelastically, exchanging energy with the vibrational levels of the molecules; the resulting frequency shift of the scattered light produces a characteristic spectrum containing information about molecular structure [60]. Because the vibrational modes of different chemical bonds are specific, each chemical component exhibits unique Raman spectral features, which makes the technique capable of both qualitative and quantitative analysis [61].
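The frequency change described above is usually reported as a Raman shift in wavenumbers: shift (cm⁻¹) = 10⁷/λ₀ − 10⁷/λs, with the excitation wavelength λ₀ and scattered wavelength λs in nm. The sketch below converts between the two; the 785 nm excitation and the 1000 cm⁻¹ band in the usage lines are only illustrative values, not settings taken from the cited studies.

```python
def raman_shift_cm1(excitation_nm: float, scattered_nm: float) -> float:
    """Raman shift in cm^-1; positive values are Stokes (red-shifted) lines."""
    return 1e7 / excitation_nm - 1e7 / scattered_nm


def scattered_nm(excitation_nm: float, shift_cm1: float) -> float:
    """Wavelength (nm) at which a band with the given Raman shift appears."""
    return 1e7 / (1e7 / excitation_nm - shift_cm1)


# A hypothetical band at 1000 cm^-1 under 785 nm excitation.
lam_s = scattered_nm(785.0, 1000.0)
print(round(lam_s, 1))                          # 851.9
print(round(raman_shift_cm1(785.0, lam_s), 6))  # 1000.0
```

Reporting the shift rather than the absolute wavelength is what makes a component's "fingerprint" comparable across instruments that use different laser lines.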

Raman imaging plays an important role in the harvesting and grading of facility vegetables, combining the molecular fingerprinting capability of Raman spectroscopy with the spatial analysis capability of high-resolution imaging to achieve non-destructive, rapid detection and accurate grading [62]. Raman imaging can non-destructively map the distribution of chemical components on vegetable surfaces, such as sugars, organic acids, vitamins, pigments, and pesticide residues, to assess maturity, nutritional value, and safety [63]. In automated sorting systems, Raman imaging can classify vegetables in real time based on preset criteria (e.g., sugar–acid ratio, carotenoid content) [64] and identify moldy or diseased areas [65], which improves quality, reduces waste, and provides reliable technical support for intelligent harvesting and quality control in facility agriculture.

In addition, during the transportation and storage of facility vegetables, Raman imaging can accurately assess quality changes by dynamically monitoring the chemical composition of the produce. The mechanism is as follows: over time, vegetable tissues undergo a series of biochemical reactions, such as degradation of polysaccharides, oxidative decomposition of antioxidants such as ascorbic acid, and metabolic transformation of cell wall components [66]. These compositional changes directly alter molecular vibrational modes, which in turn causes systematic changes in the intensity, position, and other parameters of characteristic Raman peaks. The technique can also detect external damage to fruits and vegetables incurred during harvesting, transportation, storage, sorting, packaging, and marketing, as well as internal damage that occurs during growth [67]. These findings provide a reliable technical solution for intelligent quality monitoring in the cold chain logistics of vegetables.

2.7. Terahertz Imaging

Terahertz (THz) radiation refers to electromagnetic waves in the frequency range 0.1–10 THz (wavelengths of 30 μm–3 mm), lying between microwaves and infrared light. Terahertz waves can penetrate deep into the medium, and their coherent detection helps to determine the refractive index and absorption coefficient of a given sample. Because the radiation is transmissive, terahertz spectroscopy can be used to analyze macromolecules and constituents within crops, making it uniquely suited to biological detection applications [68].
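Two small calculations connect the quantities above. The frequency range maps to wavelength through λ = c/f, and in terahertz time-domain measurements a common first approximation for a slab sample of thickness d is n ≈ 1 + c·Δt/d, where Δt is the pulse delay relative to a reference path without the sample. The sketch below encodes both; it ignores dispersion and multiple reflections, and the sample thickness and delay are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def thz_wavelength_um(freq_thz: float) -> float:
    """Free-space wavelength in micrometres for a frequency in THz."""
    return SPEED_OF_LIGHT / (freq_thz * 1e12) * 1e6


def refractive_index_from_delay(delay_ps: float, thickness_mm: float) -> float:
    """First-order estimate n = 1 + c * dt / d from the pulse delay of a slab.

    Ignores dispersion, absorption-induced pulse reshaping and etalon echoes.
    """
    return 1.0 + SPEED_OF_LIGHT * (delay_ps * 1e-12) / (thickness_mm * 1e-3)


print(round(thz_wavelength_um(0.1)))   # 2998 (about 3 mm)
print(round(thz_wavelength_um(10.0)))  # 30
# Hypothetical sample: 1 mm thick, pulse delayed by 2 ps.
print(round(refractive_index_from_delay(2.0, 1.0), 3))  # 1.6
```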

Terahertz imaging, which penetrates the skin of vegetables to obtain internal information, plays an important role in the harvesting and grading stage of facility vegetables, enabling non-destructive quality detection and automated grading [69]. Terahertz imaging can detect the internal water distribution and ripeness of fruits [70], and its spectral features can distinguish key indicators such as sugar [71] and starch content [72], allowing fruits to be evaluated and graded from multiple perspectives. Compared with conventional visible or near-infrared techniques, terahertz imaging penetrates vegetables wrapped in multiple layers of leaves better and is not disturbed by surface humidity, significantly raising the level of intelligence in facility agriculture and reducing post-harvest losses. As portable terahertz equipment develops, the technology is expected to become key to the post-harvest handling of facility vegetables, improving the rigor and accuracy of grading and meeting market demand for vegetables of different quality grades.

Terahertz imaging also plays an important role in quality monitoring and safety assurance during the transportation and storage of facility vegetables. By analyzing the absorption and scattering of electromagnetic waves in the 0.2–1.2 THz band by vegetable tissues, changes in cellular water content can be accurately quantified to determine the freshness of vegetables [73]. During storage, terahertz imaging can identify early signs of spoilage that are difficult to detect by traditional methods and, based on the characteristic absorption peaks of spoilage-related metabolites (e.g., ethylene), enable early diagnosis of deterioration and shelf-life prediction, thereby preventing further tissue deterioration and the greater economic losses caused by improper storage [74]. The technology can also detect surface corrosion of vegetables [74] and crop residues [75], which helps guarantee the quality and safety of agricultural products, provides an innovative solution for intelligent cold chain management of agricultural products, and promotes the development of the agricultural economy.

2.8. X-Ray Imaging

X-ray imaging is based on the penetration and material-dependent attenuation of X-rays. When high-energy X-rays pass through an object, substances of different densities absorb them to different degrees; the transmitted X-rays are received by a detector as signals of different intensities, which are then converted into grayscale images by computer processing [76].
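The attenuation described above follows the Beer–Lambert law, I = I₀·exp(−Σ μᵢxᵢ), summed over the materials along a ray. The sketch below computes the transmitted fraction for a stack of layers and maps it linearly to an 8-bit gray level; the attenuation coefficients and thicknesses are hypothetical. It shows why an internal void, whose attenuation is close to zero, transmits more X-rays than sound tissue of the same total thickness, which is what makes such defects visible in a radiograph.

```python
import math


def transmitted_fraction(layers) -> float:
    """Beer-Lambert transmission I/I0 through (mu_per_cm, thickness_cm) layers."""
    return math.exp(-sum(mu * x for mu, x in layers))


def to_gray(fraction: float) -> int:
    """Map a transmitted fraction in [0, 1] linearly to an 8-bit gray level."""
    return round(255 * min(1.0, max(0.0, fraction)))


# Hypothetical attenuation coefficients: sound tissue vs. an internal void.
sound = [(0.2, 5.0)]               # 5 cm of sound tissue
hollow = [(0.2, 4.0), (0.0, 1.0)]  # 4 cm tissue + 1 cm air-filled cavity
print(to_gray(transmitted_fraction(sound)))   # 94
print(to_gray(transmitted_fraction(hollow)))  # 115
```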

During the transportation and storage of facility vegetables, X-ray imaging improves quality management and storage efficiency mainly through non-destructive testing [77]. Because X-rays are unaffected by magnetic fields, penetrate tissue, and can expose photographic film, X-ray imaging is an effective tool for internal quality assessment and histological analysis of fresh fruits and vegetables, making it possible to examine multiple samples in greater detail in a shorter time [78]. During storage, the technology can rapidly detect internal defects in vegetables [79], such as mold [80] and root block damage [81], as well as mechanical damage to fruits and vegetables during transportation [82,83], ensuring that only high-quality products enter the distribution chain. In addition, studies have shown that X-ray irradiation can also extend shelf life and maintain quality, which is of great commercial significance for food safety and preservation [84].

Applied during the transportation and storage of facility vegetables, X-ray imaging can detect quality problems promptly so that corrective measures can be taken, prolonging storage life, reducing losses, and providing key technical support for intelligent quality control in the facility vegetable supply chain.

2.9. Optical Coherence Tomography

Optical coherence tomography (OCT) enables non-invasive, high-resolution imaging of biological tissues and materials [85]. It is based on low-coherence interferometry: light from a low-coherence source is split into two beams, one directed to the sample (sample arm) and the other along a reference path (reference arm). Light reflected from different depths within the sample interferes with the reference light, and by detecting and analyzing this interference signal, OCT reconstructs cross-sectional images of the sample with resolution on the order of micrometers [86].
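The micrometer-scale resolution can be estimated with the standard expression for the axial resolution of OCT with a Gaussian source spectrum, Δz = (2 ln 2/π)·λ₀²/Δλ, divided by the group refractive index when imaging inside tissue. The sketch below evaluates it; the 1310 nm center wavelength, 100 nm bandwidth, and tissue index of 1.4 are hypothetical example values.

```python
import math


def oct_axial_resolution_um(center_nm: float, bandwidth_nm: float,
                            n_medium: float = 1.0) -> float:
    """Axial (depth) resolution for a Gaussian source spectrum, in micrometres.

    dz = (2 ln 2 / pi) * lambda0^2 / dlambda, divided by the group index of
    the medium when imaging inside tissue.
    """
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_nm ** 2 / bandwidth_nm
    return dz_nm / n_medium / 1000.0


print(round(oct_axial_resolution_um(1310, 100), 2))       # 7.57 (in air)
print(round(oct_axial_resolution_um(1310, 100, 1.4), 2))  # 5.41 (in tissue)
```

The inverse dependence on bandwidth is why broadband sources are favored when finer depth detail, such as individual mesophyll cell layers, is needed.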

In the study of facility vegetables, OCT has significant application potential. During the vegetative growth stage, OCT can provide detailed images of internal leaf structure, including the arrangement of palisade and spongy mesophyll cells; changes in these structures can indicate the physiological status of the plant, such as water stress or nutrient deficiency [87]. At the harvest stage, OCT can be used to evaluate fruit quality by detecting subtle defects beneath the fruit surface, such as incipient bruising or internal structural abnormalities, which are difficult to identify with other optical techniques [88]. Compared with other imaging methods, OCT offers a unique combination of high resolution and non-invasiveness, making it a valuable complement to existing optical sensing technologies in facility vegetable research. However, its application in this field is still exploratory, and it is limited by a relatively shallow imaging depth (typically a few millimeters) and higher equipment costs than conventional techniques such as RGB imaging.

In conclusion, the nine optical sensing technologies reviewed in this chapter are functionally complementary across the entire growth cycle of facility vegetables. RGB and 3D imaging, being low-cost, easy to operate, and applicable throughout the cycle, have become the core technologies for morphological monitoring at the seedling stage, plant architecture analysis during vegetative growth, and appearance inspection at harvest. Multispectral and hyperspectral imaging, leveraging their spectral resolution, perform well in nutrient diagnosis during vegetative growth (e.g., nitrogen and phosphorus content) and in retrieving quality components (e.g., sugar content and pigments) during fruit expansion and ripening. Thermal and chlorophyll fluorescence imaging focus on dynamic physiological monitoring, providing early warning of water stress, pests and diseases, and responses to extreme environments throughout the cycle through temperature anomalies and changes in photosynthetic parameters. Raman spectroscopy, terahertz imaging, and X-ray imaging, owing to their high specificity or strong penetration, are used mostly for post-harvest quality grading and detection of internal defects during storage. OCT, although still exploratory, offers a new route to micrometer-resolution analysis of leaf microstructure and identification of hidden internal damage. These differing application scenarios provide a diverse set of technical options for precise management at each growth stage of facility vegetables.

3. Application of Optical Sensing Technology in Phenotyping of Facility Vegetables

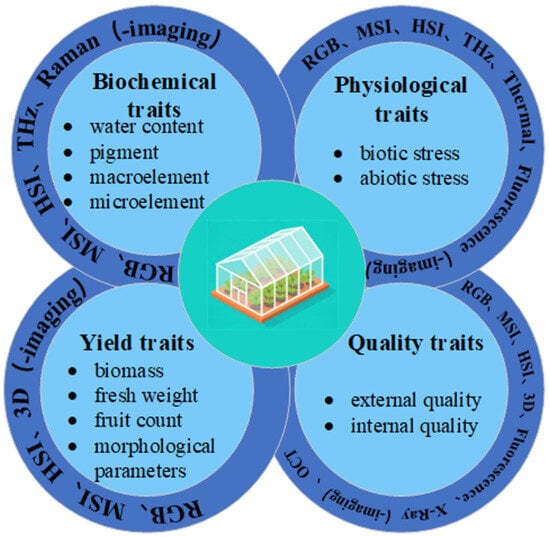

We categorize the phenotypic traits of protected-culture vegetables into biochemical, physiological, yield, and quality traits, covering key indicators from basal metabolism across the entire growth cycle to final economic value. We also outline how technologies such as RGB, multi-/hyperspectral, and thermal imaging are applied to detect each of these trait categories, as shown in Figure 2.

Figure 2.

Application of optical sensing technology in the phenotypic study of facility vegetables.

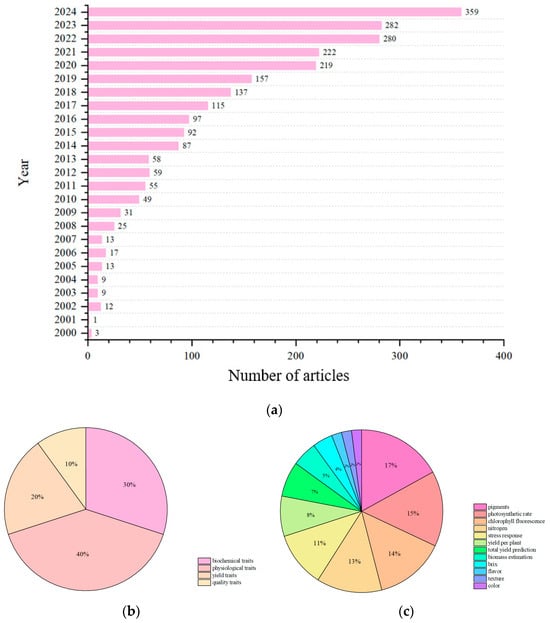

Using Web of Science, we counted the articles published each year since 2000 on the application of optical sensors to facility vegetables. The literature was retrieved with the query combination “optical technology type + facility vegetables + phenotypic research”, excluding irrelevant scenarios such as open-field cultivation. The records were classified with the following framework: biochemical traits (subdivided by metabolic function into photosynthetic pigments, enzyme activities, etc.), physiological traits (abiotic/biotic stress responses and activities, photosynthetic efficiency, etc.), yield traits (subdivided by formation indicators into fruit number, biomass, etc.), and quality traits (subdivided by value into external color/shape and internal soluble solids/freshness). Within each category, the corresponding detection capabilities of the optical technologies were matched to achieve comprehensive coverage (Figure 3).

Figure 3.

Statistics on the application of optical sensing technology in phenotyping studies of facility vegetables from 2000 to 2024: (a) number of relevant articles published per year since 2000; (b) percentage of studies on four phenotypic traits; and (c) percentage of studies on common traits in phenotypic studies on facility vegetables.

3.1. Biochemical Traits

RGB imaging, multi/hyperspectral imaging, terahertz imaging, and Raman imaging are commonly used to detect biochemical traits in facility vegetables. These traits (e.g., photosynthetic pigments and nutrient elements) form the material basis of physiological functions and directly affect vegetables' stress resistance, nutritional quality, and flavor formation [89,90]. For example, water content, an important biochemical trait of lettuce, directly influences its yield and quality [91], and multispectral technology is widely applied to estimate it by analyzing the characteristic spectral response of lettuce leaves in the visible and near-infrared bands [92]. When light strikes a lettuce leaf, differences in water content alter how strongly each band is absorbed and reflected, so water content can be inferred from the intensity of reflected or transmitted light across the bands. This provides a basis for optimizing refrigeration, maintaining freshness, and extending shelf life [93].
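The band-intensity reasoning above is often reduced to a normalized-difference index between a reference band and a water-sensitive band, followed by a calibration against laboratory-measured water content. The minimal sketch below assumes a reference band near 900 nm and the water absorption feature near 970 nm, and a simple linear calibration; the band choice, the linear model, and all numbers are illustrative assumptions, not values from the cited studies.

```python
import numpy as np

def normalized_difference(band_a, band_b):
    """Element-wise (a - b) / (a + b) for two reflectance bands."""
    band_a = np.asarray(band_a, dtype=float)
    band_b = np.asarray(band_b, dtype=float)
    return (band_a - band_b) / (band_a + band_b)

def fit_linear_calibration(index, reference):
    """Least-squares fit of reference ≈ slope * index + intercept."""
    slope, intercept = np.polyfit(index, reference, deg=1)
    return slope, intercept

# Synthetic illustration only: five hypothetical lettuce leaves.
r_ref = np.array([0.52, 0.50, 0.49, 0.47, 0.45])    # reflectance near 900 nm
r_water = np.array([0.40, 0.41, 0.42, 0.43, 0.44])  # reflectance near 970 nm
water_content = np.array([95.0, 94.0, 93.1, 92.0, 90.8])  # % fresh weight

idx = normalized_difference(r_ref, r_water)
slope, intercept = fit_linear_calibration(idx, water_content)
predicted = slope * idx + intercept  # calibrated estimates for the same leaves
```

In practice the calibration would be validated on independent samples, and multivariate models are often used instead of a single index.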

Hyperspectral imaging shows particular advantages in detecting pigments in facility vegetables. Chlorophyll and carotenoid contents are important biochemical characteristics that affect the photosynthetic capacity of vegetation and govern crop productivity [94]. Chlorophyll has strong absorption peaks near 660 nm and 450 nm, whereas carotenoids absorb mainly around 450 nm; pigment content can therefore be estimated accurately by acquiring continuous spectra and analyzing changes in reflectance or absorbance at these characteristic wavelengths [95]. In cucumber, for example, hyperspectral images of leaves acquired with a hyperspectral imager, combined with pixel-level spectral analysis and suitable algorithms, can both quantify pigment content and show its spatial distribution across the leaf [96].
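Pixel-level analysis of this kind can be sketched as computing a spectral index for every pixel of a hyperspectral cube. The example below assumes a reflectance cube of shape (rows, cols, bands) and uses a simple ratio R(800 nm)/R(660 nm) as a chlorophyll-sensitive index; the index, band positions, and synthetic data are illustrative assumptions, and converting the index to pigment content would still require a calibration model such as those in the cited work.

```python
import numpy as np

def band_index(wavelengths_nm, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths_nm) - target_nm)))

def simple_ratio_map(cube, wavelengths_nm, nir_nm=800.0, red_nm=660.0):
    """Pixel-wise R(nir) / R(red) for a (rows, cols, bands) reflectance cube.

    Stronger chlorophyll absorption near 660 nm lowers R(red) and raises the ratio.
    """
    nir = cube[:, :, band_index(wavelengths_nm, nir_nm)]
    red = cube[:, :, band_index(wavelengths_nm, red_nm)]
    return nir / np.clip(red, 1e-6, None)  # guard against division by zero

# Synthetic 2 x 2 image with bands at 450, 550, 660 and 800 nm.
wl = np.array([450.0, 550.0, 660.0, 800.0])
cube = np.array([
    [[0.05, 0.12, 0.04, 0.50], [0.06, 0.13, 0.08, 0.48]],
    [[0.05, 0.11, 0.05, 0.52], [0.07, 0.15, 0.12, 0.45]],
])
ratio = simple_ratio_map(cube, wl)  # one index value per pixel: a spatial map
```

Displaying `ratio` as an image gives the kind of within-leaf distribution map described above.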

In addition, the rich spectral information in hyperspectral images allows high-precision assessment of crop nutrient status, which can inform fertilizer treatments for optimal crop production [97]. For example, leaf nitrogen content strongly influences canopy light-use efficiency and canopy photosynthetic rate [98], and hyperspectral images have been used to estimate the nitrogen content of different crop types and thereby gauge their growth status.

These methods provide an important means of studying the mechanisms of plant photosynthesis, growth and development, and responses to environmental stress. They also help growers identify problems such as nutrient deficiencies, pests, and diseases in time to implement precise interventions and safeguard the healthy growth of facility vegetables. Table 1 lists the applications of optical sensing technology to the biochemical traits of facility vegetables in detail.

Table 1.

Application of optical sensing technology in biochemical traits of facility vegetables.

3.2. Physiological Traits

Physiological traits are the dynamic functional properties that plants exhibit during growth, such as photosynthesis, respiration, transpiration, and water and nutrient uptake and utilization [90]. These traits directly affect plant growth, development, and stress tolerance, and ultimately the formation of yield and quality [114]. Chlorophyll fluorescence imaging, thermal imaging, and multispectral and hyperspectral imaging are commonly used to monitor abiotic and biotic stress responses in facility vegetables [115,116].

Abiotic stresses, such as drought, high or low temperature, salinity, and nutrient deficiency, and biotic stresses, such as diseases and pests, can significantly disturb the physiological metabolism of facility vegetables and thereby reduce yield and quality. For abiotic stress, chlorophyll fluorescence imaging has been used to grade cold damage in tomato seedlings under low-temperature stress from six fluorescence parameters, Y(II), qP, qL, Y(NPQ), Y(NO), and Fv/Fm; the approach performed well and shows considerable promise for non-destructive diagnosis of low-temperature injury in plants [117]. The chlorophyll a fluorescence OJIP transient offers a non-destructive, simple, and rapid technique for assessing salt stress in tomato leaves and fruits [118] and has also been used to characterize their responses to high- and low-temperature stress [119]. Under drought stress, leaf water content decreases and stomata close, reducing transpirational cooling and raising leaf temperature, so thermography can be used to estimate transpiration rates and assess plant water status [120]. Multispectral imaging can assess the effects of drought stress on vegetable growth by analyzing changes in leaf spectral features and in parameters derived from them, such as leaf area index and leaf nitrogen content [121].
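The six fluorescence parameters named above are computed from a handful of measured fluorescence levels. The sketch below uses the definitions commonly found in the fluorescence literature (qL from the "lake" model, and the Klughammer–Schreiber partitioning for Y(NPQ) and Y(NO), under which Y(II) + Y(NPQ) + Y(NO) = 1); the `FluorescenceReadings` container and the example values are hypothetical, and a given instrument or the cited study may use variant formulas.

```python
from dataclasses import dataclass

@dataclass
class FluorescenceReadings:
    f0: float        # minimal fluorescence, dark-adapted
    fm: float        # maximal fluorescence, dark-adapted
    fs: float        # steady-state fluorescence under actinic light
    fm_prime: float  # maximal fluorescence, light-adapted (saturating pulse)
    f0_prime: float  # minimal fluorescence, light-adapted

def fluorescence_parameters(r: FluorescenceReadings) -> dict:
    """Common PSII fluorescence parameters from one set of readings."""
    fv_fm = (r.fm - r.f0) / r.fm                          # maximum PSII efficiency
    y_ii = (r.fm_prime - r.fs) / r.fm_prime               # effective PSII yield
    qp = (r.fm_prime - r.fs) / (r.fm_prime - r.f0_prime)  # photochemical quenching
    ql = qp * r.f0_prime / r.fs                           # fraction of open PSII centres
    y_npq = r.fs / r.fm_prime - r.fs / r.fm               # regulated non-photochemical loss
    y_no = r.fs / r.fm                                    # non-regulated energy loss
    return {"Fv/Fm": fv_fm, "Y(II)": y_ii, "qP": qp, "qL": ql,
            "Y(NPQ)": y_npq, "Y(NO)": y_no}

# Hypothetical readings in arbitrary fluorescence units.
params = fluorescence_parameters(
    FluorescenceReadings(f0=0.2, fm=1.0, fs=0.3, fm_prime=0.6, f0_prime=0.18))
```

Applying the same functions to each pixel of fluorescence images yields parameter maps, which is how imaging systems localize cold or salt injury on a leaf.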

In biotic stress monitoring, pest or pathogen attack triggers a series of physiological changes in facility vegetables that alter leaf temperature. Thermal imaging reflects the stress condition of plants by detecting these changes in leaf surface temperature [14]. For example, when cucumber plants are infected with downy mildew, the lesions differ in temperature from healthy tissue, and thermal imaging clearly shows these abnormal temperature regions, helping growers detect the disease in time [122]. Multispectral imaging, in contrast, monitors disease by analyzing changes in leaf spectral reflectance at multiple specific wavelengths [123]. Hyperspectral imaging has been used to measure chlorophyll (Chl) and carotenoid (Car) contents in cucumber leaves to determine whether the leaves were infected with angular leaf spot [96]. Table 2 lists the applications of optical sensing technology to the physiological traits of facility vegetables in detail.

Table 2.

Application of optical sensing technology in physiological traits of facility vegetables.

3.3. Yield Traits

Yield traits are important indicators for evaluating the productivity of facility vegetables. RGB imaging, multi/hyperspectral imaging, and chlorophyll fluorescence imaging are commonly used non-destructive methods for measuring them. RGB imaging plays an important role in monitoring and evaluating yield traits, because high-resolution imaging yields plant phenotypic information quickly and non-destructively, providing data support for precision agriculture management. Specifically, the visible-band (red, green, and blue) images captured by RGB cameras can resolve the growth status of vegetable crops, such as plant height, leaf area, and canopy cover [138], and physiological characteristics, such as leaf color change and fruit coloration [139]. Combined with image processing algorithms, these images allow key yield-related parameters such as fruit number and size distribution to be quantified [140]. In large-scale facility production, combining RGB imaging with automated systems enables high-throughput phenotyping of yield traits, providing a scientific basis for variety selection and cultivation decisions.
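One simple way to turn an RGB image into fruit number and size distribution is to segment fruit pixels (for example, by a color threshold) and then count connected regions. The sketch below assumes a binary fruit mask has already been produced by such a step and counts 4-connected regions with a breadth-first flood fill; it is an illustration of the counting idea, not the method of the cited studies, and touching or occluded fruits would merge into one region without further processing.

```python
from collections import deque

def count_fruits(mask):
    """Count 4-connected foreground regions in a binary mask (list of lists of 0/1).

    Returns the number of regions and their pixel areas, as rough proxies for
    fruit number and fruit size distribution.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Breadth-first flood fill of one connected region.
                queue = deque([(r, c)])
                seen[r][c] = True
                area = 0
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                areas.append(area)
    return len(areas), areas

# Hypothetical mask with two separate fruit regions.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1],
]
n, areas = count_fruits(mask)  # two regions of four pixels each
```

Pixel areas become physical sizes only after a camera-to-object scale calibration.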

Multispectral and hyperspectral imaging have significant advantages for monitoring yield traits of facility vegetables. Multispectral imaging captures vegetation reflectance in multiple discrete bands to assess chlorophyll content [141], biomass accumulation, and water status [142], while the fine spectral data from contiguous narrow bands in hyperspectral imaging make it possible to identify yield-related biochemical components [143] and early stress responses [144] in greater depth. With this richer spectral information, hyperspectral imaging can analyze crop physiological status and yield potential: spectral reflectance parameters related to biomass, such as the Normalized Difference Vegetation Index (NDVI) and the Photochemical Reflectance Index (PRI), are extracted, and a regression model is established between the hyperspectral data and biomass [39]. Monitoring changes in biomass reveals the growth dynamics of facility vegetables and the relationship between biomass and yield, providing scientific guidance for optimizing planting management and improving yield.
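The index-plus-regression workflow can be sketched as follows. NDVI is (R_NIR − R_red)/(R_NIR + R_red) and PRI is (R531 − R570)/(R531 + R570); the example then fits biomass ≈ b0 + b1·NDVI + b2·PRI by ordinary least squares. The two-index linear model and the synthetic data (generated from known coefficients so the fit can be checked) are assumptions for illustration; the cited study may use other indices or regression methods.

```python
import numpy as np

def ndvi(r_nir, r_red):
    """Normalized Difference Vegetation Index."""
    r_nir, r_red = np.asarray(r_nir, float), np.asarray(r_red, float)
    return (r_nir - r_red) / (r_nir + r_red)

def pri(r531, r570):
    """Photochemical Reflectance Index from reflectance at 531 and 570 nm."""
    r531, r570 = np.asarray(r531, float), np.asarray(r570, float)
    return (r531 - r570) / (r531 + r570)

def fit_biomass_model(features, biomass):
    """OLS fit biomass ≈ b0 + b1*feature1 + b2*feature2; returns [b0, b1, b2]."""
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, biomass, rcond=None)
    return coef

# Synthetic reflectances for five hypothetical plants.
features = np.column_stack([
    ndvi([0.45, 0.50, 0.55, 0.60, 0.48], [0.08, 0.06, 0.05, 0.04, 0.07]),
    pri([0.050, 0.052, 0.049, 0.051, 0.053], [0.055, 0.053, 0.054, 0.050, 0.052]),
])
biomass = 10.0 + 50.0 * features[:, 0] + 20.0 * features[:, 1]  # synthetic target
coef = fit_biomass_model(features, biomass)  # recovers about [10, 50, 20]
```

With real data the model would be evaluated on held-out plants, since a handful of indices can overfit small calibration sets.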

Chlorophyll fluorescence imaging provides visual data on key physiological processes in yield formation by rapidly and non-destructively measuring the efficiency of light energy absorption, transfer, and conversion in the plant photosynthetic system [145], reflecting in real time the plant's photosynthetic status and its response to environmental stress [146]. In facility environments, this technique can accurately map the spatial distribution and dynamics of leaf photosynthetic activity (e.g., the maximum photochemical efficiency of PSII, Fv/Fm, and the effective photochemical efficiency, ΦPSII). It can also diagnose, at an early stage, photosynthetic inhibition caused by stresses such as pigment deficiency, drought [147], and disease [148]. This provides a basis for optimizing facility environment regulation and for timely intervention to reduce yield loss, ultimately increasing vegetable yields by enhancing photosynthetic productivity. Table 3 lists the applications of optical sensing technology to the yield traits of facility vegetables in detail.
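The spatial mapping described above can be illustrated by computing Fv/Fm = (Fm − F0)/Fm pixel by pixel from dark-adapted F0 and Fm images, then reporting the share of leaf area below a stress threshold. Unstressed leaves of many species commonly show Fv/Fm values around 0.8; the 0.75 threshold, the leaf mask, and the tiny synthetic images below are illustrative assumptions rather than values from the cited studies.

```python
import numpy as np

def fv_fm_map(f0_img, fm_img):
    """Pixel-wise Fv/Fm = (Fm - F0) / Fm from dark-adapted fluorescence images."""
    f0 = np.asarray(f0_img, dtype=float)
    fm = np.clip(np.asarray(fm_img, dtype=float), 1e-9, None)  # avoid division by zero
    return (fm - f0) / fm

def stressed_fraction(fv_fm, leaf_mask, threshold=0.75):
    """Share of leaf pixels whose Fv/Fm falls below a (hypothetical) stress threshold."""
    leaf_values = np.asarray(fv_fm)[np.asarray(leaf_mask, dtype=bool)]
    if leaf_values.size == 0:
        return 0.0
    return float(np.mean(leaf_values < threshold))

# Synthetic 2 x 2 images; the bottom-right pixel is background, not leaf.
f0 = np.array([[0.2, 0.2], [0.3, 0.5]])
fm = np.array([[1.0, 1.0], [1.0, 1.0]])
leaf = np.array([[True, True], [True, False]])
fvfm = fv_fm_map(f0, fm)
frac = stressed_fraction(fvfm, leaf)  # one of three leaf pixels is below 0.75
```

Tracking `frac` over successive imaging sessions is one simple way to flag a decline before visible symptoms appear.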

Table 3.

Application of optical sensing technology in yield traits of facility vegetables.

3.4. Quality Traits

RGB imaging, Raman imaging, and multispectral and hyperspectral imaging are commonly used for non-destructive testing of quality traits in facility vegetables [158,159]. These traits, covering external (color, shape), textural (hardness, crispness), and internal (soluble solids, vitamins) characteristics, directly determine commercial value and nutritional function. Color, for example, is a critical quality trait that not only affects consumers' purchase intent but also reflects fruit ripeness; RGB imaging quantifies fruit color through color space conversion and image processing algorithms [160]. Combined with deep learning architectures such as Transformer, ResNet, and MobileNetV3, RGB imaging can be used to assess the freshness of fruits and vegetables such as apples and lettuce [161]. For shape analysis, RGB imaging extracts the external contour and describes the shape with morphological parameters; shape indices such as roundness and aspect ratio indicate whether a shape is regular and uniform [162]. Because regular, uniform shapes usually correspond to better quality and market value, shape analysis allows products with desirable traits to be screened out, raising the marketable proportion of the product. RGB imaging can also detect surface defects of fruits, such as lesions and wounds, so that quality problems are identified promptly and product quality is guaranteed [163].
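Roundness and aspect ratio can be computed directly from an extracted contour. The sketch below assumes the contour is an N × 2 array of (x, y) vertices (for example, the output of OpenCV's `cv2.findContours` reshaped to N × 2); it uses roundness = 4πA/P², which equals 1 for a circle, and an aspect ratio from the principal axes of the vertex cloud. The principal-axis estimate assumes reasonably uniform vertex spacing along the contour; other definitions (e.g., minimum bounding rectangle) are equally common.

```python
import math
import numpy as np

def shape_indices(contour):
    """Area, perimeter, roundness and aspect ratio of a closed polygonal contour."""
    contour = np.asarray(contour, dtype=float)
    x, y = contour[:, 0], contour[:, 1]
    # Shoelace formula for the enclosed polygon area.
    area = 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))
    # Perimeter: sum of edge lengths, closing back to the first vertex.
    perimeter = float(np.sum(np.hypot(np.roll(x, -1) - x, np.roll(y, -1) - y)))
    roundness = 4 * math.pi * area / perimeter ** 2  # 1.0 for a perfect circle
    # Aspect ratio: sqrt of the ratio of largest to smallest principal variance.
    eigvals = np.linalg.eigvalsh(np.cov(contour.T))  # ascending order
    aspect_ratio = math.sqrt(eigvals[-1] / eigvals[0])
    return {"area": area, "perimeter": perimeter,
            "roundness": roundness, "aspect_ratio": aspect_ratio}

# A near-circular contour (360 vertices) and a 2 x 1 rectangle.
theta = np.linspace(0, 2 * np.pi, 360, endpoint=False)
circle = shape_indices(np.column_stack([np.cos(theta), np.sin(theta)]))
rect = shape_indices([[0.0, 0.0], [2.0, 0.0], [2.0, 1.0], [0.0, 1.0]])
```

Here the circle scores a roundness close to 1 and an aspect ratio of 1, while the rectangle has roundness 8π/36 ≈ 0.70 and an aspect ratio of 2.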

Moisture, ripeness, internal defects, and soluble solids content (SSC), including sugars, acids, and minerals, are important internal quality characteristics of facility vegetables and major research topics. In tomato fruit quality assessment, an RGB imaging system captures images of the fruit, which are converted from the RGB color space to the HSV (hue, saturation, and value) color space; ripeness can then be determined from changes in the hue (H) value [164]. As tomatoes ripen, hue changes systematically, with higher hue values for unripe fruit and lower values for ripe fruit [165], so an appropriate hue threshold allows ripeness to be judged accurately and informs the choice of picking time. Raman spectroscopy is based on the inelastic scattering of light. When light strikes vegetable tissue, the molecules scatter it; the inelastically scattered (Raman) component carries information on molecular vibrations and rotations, and each molecule has a characteristic Raman spectrum. Analyzing Raman spectra therefore allows internal components of vegetables, such as carotenoids [166] and nitrate [167], to be identified and quantified. A multispectral imager can also be used to predict tomato ripeness: the spectra and pigmentation of mature-green tomatoes were measured and used as indicators to classify the first day's spectral data as ripe or unripe [168]. Defects in pepper fruits can be identified and classified accurately and reliably by extracting spectral data from regions of interest (ROIs) of pepper samples and combining hyperspectral imaging with partial least squares discriminant analysis, band selection methods, ANOVA-based classification, and improved weighted spectral analysis [169].
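The hue-threshold rule for tomato ripeness can be sketched with Python's standard `colorsys` module. The example assumes fruit pixels have already been segmented from the background and averages their hue; because red sits at the 0°/360° boundary, hues above 180° are wrapped to negative angles so that slightly bluish reds and slightly orange reds average sensibly. The 30° threshold and the pixel values are hypothetical; a working system would calibrate the threshold per cultivar and lighting setup.

```python
import colorsys

def mean_hue_degrees(pixels):
    """Mean hue (degrees) of RGB pixels given as 0-255 tuples.

    Hues above 180 degrees are wrapped to negative values, assuming fruit
    pixels lie in the red-to-green range (no genuinely blue or purple pixels).
    """
    hues = []
    for r, g, b in pixels:
        h, _, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        deg = h * 360
        hues.append(deg - 360 if deg > 180 else deg)
    return sum(hues) / len(hues)

def classify_ripeness(pixels, hue_threshold=30.0):
    """Label a fruit 'ripe' if its mean hue is below a (hypothetical) threshold."""
    return "ripe" if mean_hue_degrees(pixels) < hue_threshold else "unripe"

# Hypothetical segmented fruit pixels.
red_fruit = [(200, 30, 25), (210, 40, 35), (190, 25, 40)]
green_fruit = [(90, 160, 60), (80, 150, 55), (100, 170, 70)]
labels = (classify_ripeness(red_fruit), classify_ripeness(green_fruit))
```

Intermediate ripening stages (breaker, turning, pink) could be separated in the same way with several hue thresholds instead of one.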
Because of its relatively limited penetration depth, OCT is mainly applicable to microstructural characterization of superficial tissue layers. A key advantage, however, is that it does not compress or deform cells or intercellular spaces, so it depicts near-surface features with higher accuracy. By monitoring changes in fruit cell arrangement and tissue density, OCT can therefore detect variations in internal moisture content and determine the drying level of fruit [170]. Furthermore, its depth-resolved images with high axial resolution allow changes in the healthy cell layers of infected fruit to be detected, enabling early visualization of internal defects caused by pathogen infection [171]. Although both OCT and X-ray imaging penetrate biological samples, OCT contrast degrades more rapidly with depth than X-ray contrast; X-ray imaging is therefore better suited to reconstructing and analyzing the 3D microstructure of fruits [85]. Table 4 lists the applications of optical sensing technology to the quality traits of facility vegetables in detail.

Table 4.

Application of optical sensing technology in quality traits of facility vegetables.

This section has reviewed the application of optical sensing technologies to detecting four key phenotypic characteristics (biochemical, physiological, yield, and quality) of facility vegetables. Comparing their working principles reveals functional complementarity for specific phenotypic characteristics, but the technologies still differ significantly in maturity and readiness for large-scale application, as shown in Table 5. Hyperspectral and Raman imaging excel at quantifying biochemical components such as water, pigments, and nutrients. Thermal and chlorophyll fluorescence imaging have distinct advantages for non-invasive monitoring of physiological states, enabling early stress detection through canopy temperature and photosynthetic efficiency signals. Yield estimation relies mainly on RGB and 3D imaging to extract structural parameters, including plant height, canopy width, leaf area, and fruit number. For quality assessment, a multi-scale framework has been established: RGB imaging evaluates appearance, X-ray imaging and OCT examine the integrity of internal structures (such as tissue density, internal defects, and morphological disorders), and Raman spectroscopy interprets molecular-level properties, including sugar accumulation and pesticide residues. In practice, however, the performance differences among technologies, environmental conditions, and crop varieties still need to be quantified. This is essential for turning sensing methods from experimental concepts into reliable, integrated phenotyping systems that support precision agriculture in real production environments.

Table 5.

Comparison of Different Optical Sensing Technologies. The color bar indicates the score of each sensing technology on each specification; darker colors indicate higher scores.

4. Challenges and Perspectives of Optical Sensing Technology in Facility-Based Vegetable Phenotyping Research

4.1. Problems in Reality

Despite the significant progress of optical sensing technology in laboratory-based facility vegetable phenotyping, its translation to large-scale practical production is hindered by three core bottlenecks that limit its popularization among small and medium-sized growers and integration into existing greenhouse management systems.

(i) Complexity of data processing: The multi-dimensional data generated by optical sensors require specialized processing before they can be fused effectively and used for artificial intelligence modeling [191]. The core challenge is to evaluate and standardize appropriate processing methods, which is crucial for data reliability and model consistency. Data from different sensors (such as multispectral cameras from different manufacturers) may be processed with different spectral normalization techniques, making the results incompatible, undermining fusion, and preventing comprehensive phenotypic analysis.

Growing interest in deep learning has produced innovative algorithms that address some of these problems: they reduce preprocessing steps, retain original features, and learn automatically from the data, improving the accuracy and efficiency with which vegetable phenotypic features are detected. However, collecting, constructing, and annotating the large-scale datasets these algorithms require is time-consuming and prone to subjectivity [100]. Even with effective data and models, the reliability of some indirect phenotypic indicators used to characterize facility vegetables remains limited by environmental factors. In particular, optical sensors are highly sensitive to light intensity and temperature, and this interference makes it difficult to compare data across time points or between greenhouses, further complicating data processing and fusion.
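Agreeing on a shared preprocessing step is one practical response to the normalization mismatch described in item (i). Standard normal variate (SNV) is a widely used option: each spectrum is centred on its own mean and scaled by its own standard deviation, which removes additive offsets and multiplicative scaling such as those caused by illumination changes. The sketch below shows SNV on a hypothetical spectrum recorded under two illumination levels; it is one possible choice, not the method prescribed by the cited studies.

```python
import numpy as np

def standard_normal_variate(spectra):
    """Row-wise SNV: subtract each spectrum's mean and divide by its standard deviation."""
    spectra = np.asarray(spectra, dtype=float)
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - mean) / std

# The same hypothetical leaf spectrum under two illumination levels:
# the second row is a scaled and offset copy of the first.
base = np.array([0.05, 0.08, 0.12, 0.40, 0.45])
spectra = np.vstack([base, 1.3 * base + 0.02])
snv = standard_normal_variate(spectra)  # both rows become identical
```

Because SNV works per spectrum, it needs no reference data, but it also removes genuine overall brightness differences, which matters if absolute reflectance carries information.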

(ii) Sensor cost and robustness: The high cost of high-precision optical sensors remains a primary barrier to widespread adoption, particularly for multispectral imaging. For example, commercial mid-range multispectral cameras with 5–8 spectral bands (covering the visible, near-infrared, and red-edge bands that are essential for traits such as leaf nitrogen content and chlorophyll) typically cost 30,000–50,000 RMB (≈4200–7000 USD). Even entry-level multispectral sensors with 3–4 spectral bands cost 15,000–20,000 RMB (≈2100–2800 USD), which exceeds what most smallholders can afford [192]; high development and production costs thus put these sensors out of reach for many small-scale facility vegetable growers [193].

Furthermore, greenhouse humidity is relatively high, temperature fluctuates frequently, and sensors may occasionally be exposed to pesticide fog or dust [194], all of which challenge sensor robustness. Changes in instrument temperature and in the external environment during operation can cause spectral drift; the resulting shifts in the characteristic peak positions of elements make the retrieved material composition inaccurate [195]. To keep measurements accurate and reliable, sensors must be calibrated and maintained regularly, which requires specialized technicians and equipment and incurs additional costs. Some high-end optical sensors must be calibrated under specific environmental conditions, which further increases the difficulty and cost of calibration [196].
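Routine radiometric calibration is commonly done with a dark reference (shutter closed) and a white reference panel: reflectance = (raw − dark)/(white − dark) × panel reflectance. Recapturing both references frequently absorbs slow drift in dark current and illumination. The sketch below assumes a nominal panel reflectance of 0.99 and uses hypothetical counts; note that it does not correct wavelength-axis shifts of characteristic peaks, which require separate wavelength calibration against known spectral lines.

```python
import numpy as np

def to_reflectance(raw, dark, white, white_reflectance=0.99):
    """Convert raw sensor counts to relative reflectance with dark and white references.

    reflectance = (raw - dark) / (white - dark) * white_reflectance
    The 0.99 default panel reflectance is an assumption; use the panel's certificate value.
    """
    raw, dark, white = (np.asarray(a, dtype=float) for a in (raw, dark, white))
    denom = np.clip(white - dark, 1e-9, None)  # avoid division by zero in dead bands
    return (raw - dark) / denom * white_reflectance

# Hypothetical counts for two bands, with an ideal (100%) panel for clarity.
refl = to_reflectance([1100, 2100], [100, 100], [2100, 4100], white_reflectance=1.0)
```

Here both bands come out at 0.5 reflectance even though their raw counts differ by a factor of about two.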

(iii) Poor integration with greenhouse systems: Most optical sensors operate as standalone devices with limited integration into existing intelligent greenhouse systems (e.g., irrigation controllers, fertilization machines, and environmental regulators) [197]. For example, hyperspectral sensors can detect nitrogen deficiency in cucumber leaves and output recommended fertilization rates, but most existing greenhouse fertilization systems (e.g., drip fertigation machines) lack data interfaces to receive these recommendations, so growers must enter parameters manually, which delays action and introduces human error. Similarly, thermal imaging-based crop water stress index (CWSI) data cannot be transmitted directly to irrigation controllers, which delays irrigation adjustment after stress is detected [198].

In summary, resolving real-world application bottlenecks requires cross-disciplinary collaboration between sensor hardware engineers, software developers, and agricultural extension personnel. Future research should prioritize low-cost, robust sensor development and user-centric system integration to bridge the gap between laboratory innovation and practical production.

4.2. Prospects for Future Applications

4.2.1. Data Fusion and AI Applications

Data acquired by different types of optical sensors have distinct characteristics, which makes data fusion difficult. For example, RGB imaging data mainly convey visual information such as object color and shape [199], multispectral imaging data contain rich spectral features that can be used to analyze chemical composition [39], and thermal imaging data reflect the temperature distribution of an object [13]. These data differ not only in dimensionality but also in format, resolution, and sampling frequency [200]. Because sensors also differ in installation position and resolution, fused data may represent fine detail with bias and fail to reflect the true characteristics of the object accurately [201].

Integrating different sensing data exploits the strengths of multi-source sensing to compensate for the limitations of any single sensor, thereby improving assessment accuracy [202,203]. However, fusion suffers from data redundancy and from difficulty in mining complementary information. Data from different sensors may partially overlap, creating redundancy that increases the burden and cost of processing. Mining truly complementary information from such complex data requires a deep understanding of the intrinsic relationships and characteristics of each sensor's data, together with advanced processing algorithms and models [202]. Research in this area is not yet mature, and effective data fusion methods still need to be explored and improved.
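The redundancy problem can be made concrete with a minimal early (feature-level) fusion sketch: each sensor's feature block is standardized so that units and scales are comparable, the blocks are concatenated, and any column almost perfectly correlated with a column already kept is dropped. The 0.95 correlation threshold and the synthetic RGB, thermal, and multispectral features are assumptions; correlation pruning only catches linear redundancy and is far simpler than the fusion models discussed in the literature.

```python
import numpy as np

def zscore(features):
    """Column-wise standardization; constant columns become all zeros."""
    features = np.asarray(features, dtype=float)
    std = features.std(axis=0)
    return (features - features.mean(axis=0)) / np.where(std > 0, std, 1.0)

def fuse_features(blocks, corr_threshold=0.95):
    """Standardize each sensor block, concatenate, and drop redundant columns.

    Returns the fused matrix and the indices of the kept columns.
    """
    fused = np.hstack([zscore(b) for b in blocks])
    kept = []
    for j in range(fused.shape[1]):
        column = fused[:, j]
        if np.std(column) == 0:
            continue  # constant columns carry no information
        if all(abs(np.corrcoef(column, fused[:, k])[0, 1]) < corr_threshold for k in kept):
            kept.append(j)
    return fused[:, kept], kept

# Synthetic features for four hypothetical plants.
rgb = np.array([[0.3, 0.5], [0.4, 0.6], [0.5, 0.4], [0.6, 0.7]])
thermal = np.array([[25.0], [24.0], [26.5], [25.5]])
multispectral = 2.0 * rgb[:, :1] + 1.0  # duplicates RGB column 0 (redundant)
fused, kept = fuse_features([rgb, thermal, multispectral])  # keeps columns 0, 1, 2
```

Standardizing first matters: without it, the thermal column (tens of degrees) would dominate distance-based models over reflectance-scale features.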

Because a single data source has substantial limitations, progress requires not only multi-sensor data but also artificial intelligence-driven analysis. With its rapid development, artificial intelligence shows great potential for processing and analyzing optical sensor data [204]. Machine learning algorithms can efficiently process large volumes of optical sensor data; by learning from historical data, machine learning models can capture complex relationships and accurately predict the growth status of facility vegetables [205]. Deep learning algorithms excel at image recognition and classification. Since the quality of vegetables stored for different periods is difficult to judge by the senses alone, combining hyperspectral imaging with deep learning to identify vegetable freshness allows quality to be evaluated accurately [206]. Applied to RGB imaging data, deep learning models can accurately recognize vegetable variety, growth stage, and pest and disease symptoms. Deep convolutional neural networks can identify classes of vegetable pests and diseases, usually before symptoms are visible to the human eye [207], with accuracy exceeding 90% and an average detection time of under 1 s, which strongly supports timely disease control [208]. With federated learning (FL), emerging artificial intelligence models are deployed on edge devices and trained for tasks such as crop classification and yield prediction, a significant step in the transition from traditional to smart agriculture [209]. Meanwhile, existing studies have used artificial intelligence (AI) and explainable artificial intelligence (XAI) to predict crop yields and assess the impact of climate change on agriculture [210].
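The core of federated learning is that edge devices train locally and share only model parameters, never raw images. A common aggregation rule is federated averaging (FedAvg), which weights each client's parameters by its number of local training samples. The sketch below shows only this aggregation step for hypothetical greenhouse clients; local training is omitted, and the cited work may use a different aggregation scheme.

```python
import numpy as np

def federated_average(client_weights, client_sizes):
    """FedAvg aggregation.

    client_weights: one list of parameter arrays per client (same shapes across clients).
    client_sizes: number of local training samples per client.
    Returns the sample-weighted average of each parameter array.
    """
    total = float(sum(client_sizes))
    return [
        sum(w[i] * (n / total) for w, n in zip(client_weights, client_sizes))
        for i in range(len(client_weights[0]))
    ]

# Two hypothetical greenhouses, each holding a tiny two-layer model.
clients = [
    [np.array([1.0, 2.0]), np.array([0.5])],  # greenhouse A, 100 samples
    [np.array([3.0, 4.0]), np.array([1.5])],  # greenhouse B, 300 samples
]
sizes = [100, 300]
global_model = federated_average(clients, sizes)  # weights 0.25 and 0.75
```

The aggregated parameters would then be sent back to each device as the starting point for the next round of local training.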

In conclusion, combining artificial intelligence with data fusion, and analyzing the fused data in depth to extract more valuable information, can further raise the level of intelligence in facility vegetable production management [202].

4.2.2. Construction of Low-Cost and Reliable Sensor Networks

Developing low-cost, reliable sensor networks for environmental control in settings such as greenhouses is necessary [211], as it addresses the critical gap between advanced optical sensing technology and its economic feasibility for small- and medium-scale growers. Technically, the key issue is how to preserve sensor performance and stability while reducing cost [212]. Low-cost sensors, often built from consumer-grade components (e.g., off-the-shelf CMOS cameras, inexpensive narrow-band filters, and open-source hardware platforms such as Arduino microcontrollers or Raspberry Pi single-board computers) [213], may show lower accuracy, spectral resolution, or temporal stability than their high-end counterparts, so balancing cost and performance through technological innovation and design optimization remains a challenge. One study applied wireless sensor network (WSN) principles and low-cost non-dispersive infrared (NDIR) CO2 sensors to measure greenhouse soil gas emissions in situ, achieving an R² of 0.96 with no significant difference from the 1:1 relationship at a significance level of α = 0.05 [214]. However, designing and integrating large numbers of low-cost optical sensors and applying them reliably to greenhouse data collection remains a significant challenge.
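The validation criteria quoted above (R² and agreement with the 1:1 line) can be computed as follows. The sketch fits sensor ≈ slope × reference + intercept by ordinary least squares, reports R², and forms the F statistic for the joint hypothesis slope = 1 and intercept = 0 by comparing the fitted model with the restricted model sensor = reference. The statistic would then be compared with the critical value of F(2, n − 2) at α = 0.05 (obtainable, for example, from `scipy.stats.f.ppf(0.95, 2, n - 2)`). The readings are hypothetical, and the cited study may have used a different test.

```python
import numpy as np

def validate_against_reference(reference, sensor):
    """Compare low-cost sensor readings with reference measurements.

    Returns R^2 of the OLS fit sensor ~ reference, its slope and intercept, and the
    F statistic for the joint hypothesis slope = 1, intercept = 0 (the 1:1 line).
    """
    x = np.asarray(reference, dtype=float)
    y = np.asarray(sensor, dtype=float)
    n = len(x)
    slope, intercept = np.polyfit(x, y, deg=1)
    sse_full = float(np.sum((y - (slope * x + intercept)) ** 2))
    sst = float(np.sum((y - y.mean()) ** 2))
    r2 = 1.0 - sse_full / sst
    sse_one_to_one = float(np.sum((y - x) ** 2))  # restricted model: y = x
    f_stat = ((sse_one_to_one - sse_full) / 2) / (sse_full / (n - 2))
    return {"r2": r2, "slope": float(slope), "intercept": float(intercept), "f_stat": f_stat}

# Hypothetical CO2 readings (ppm): reference analyser vs. low-cost NDIR sensor.
reference = [400, 600, 800, 1000, 1200]
sensor = [410, 590, 815, 990, 1210]
result = validate_against_reference(reference, sensor)
```

A high R² alone does not establish agreement: a sensor with a constant offset can correlate perfectly yet fail the 1:1 test, which is why both criteria are reported.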

For adoption, growers' acceptance of low-cost sensor networks is also an important factor. Many growers have limited understanding of, and trust in, the new technology and are concerned about the reliability and practicality of low-cost sensor networks, so outreach and training are needed to improve their understanding and their ability to apply it. A comprehensive after-sales service system that provides timely technical support and maintenance is also necessary to address these concerns [215].

4.2.3. Intelligent Sensing System and Precision Agriculture

Future intelligent sensing systems for facility vegetables are expected to be highly integrated and intelligent. One direction is the systematic integration of multiple advanced optical sensors, such as thermal imaging, fluorescence imaging, and hyperspectral sensors, to monitor the growth environment and growth status of facility vegetables comprehensively and in real time; this kind of integration has already been demonstrated in research on cereal crops [216]. Data collected by the sensors are transmitted wirelessly in real time to cloud servers for storage and analysis, where big data analytics and artificial intelligence algorithms mine the massive datasets to enable accurate prediction and intelligent regulation of the growth process. When the system detects symptoms of pests or diseases, it can automatically analyze their type and severity, issue timely warnings, and suggest prevention and control measures. At the same time, it can automatically adjust the operating parameters of irrigation, fertilization, ventilation, and other equipment according to vegetable growth status and environmental conditions, enabling automated and intelligent management of facility vegetable production [217].

The development of intelligent perception systems will greatly advance the precise planting and management of facility vegetables. For precision planting, real-time monitoring and analysis of the growing environment and growth status allow growers to match irrigation, fertilization, and pest control to the actual needs of the vegetables. This avoids resource waste and environmental pollution while improving yield and quality [218]. For management, such systems can provide growers with comprehensive production data and decision support. This helps growers optimize planting and production schedules and improve production efficiency and economic returns. Intelligent sensing systems also enable remote monitoring and management. Growers can check the growth of facility vegetables anytime and anywhere via mobile phones, computers, or other terminal devices and operate equipment remotely, which makes management more convenient and flexible [219].

5. Conclusions

This review has surveyed and analyzed the application of various optical sensing technologies across the entire growth cycle of facility vegetables, covering their performance in assessing biochemical, physiological, yield, and quality traits.

RGB imaging is the most widely applied technology, covering most of the crop growth cycle and most phenotypic traits. Hyperspectral and multispectral imaging are widely used to evaluate biochemical components and physiological status. Fluorescence and thermal imaging are highly effective for precise, early detection of plant stress. For yield-related traits, 3D and RGB imaging provide reliable morphological data, while Raman imaging, X-ray imaging, and optical coherence tomography show great potential for non-destructive assessment of internal quality after harvest.

The current challenge lies in translating laboratory-validated results into reliable, integrated systems suited to commercial greenhouse environments. Achieving this requires addressing sensor costs, data complexity, and the reliability of hardware under real production conditions.

Several important trends are expected to shape future development. First, fusing complementary sensor types can provide more comprehensive phenotypic information and overcome the limitations of any single technology. Second, artificial intelligence, especially deep learning, will be crucial for automatically analyzing complex, multi-dimensional sensor data, improving prediction accuracy, and building scalable models. Third, sensor data must be closely linked to plant physiological functions and metabolic processes so that the information becomes more valuable and actionable for growers.
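The first two trends can be illustrated with a minimal sketch of feature-level (early) fusion. The example uses synthetic data only. Each simulated plant has two hypothetical RGB-derived features and one hypothetical thermal feature, and the target depends on both sensor types. The code standardizes each sensor block, concatenates the blocks, and fits a closed-form ridge regression with NumPy. Here, ridge regression stands in for the deep learning models discussed in the text. The feature meanings, coefficients, and the penalty `alpha=1.0` are assumptions chosen for illustration.

```python
import numpy as np


def zscore(x: np.ndarray) -> np.ndarray:
    """Standardize each column so features from different sensors share a common scale."""
    std = x.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant columns
    return (x - x.mean(axis=0)) / std


def fuse(*blocks: np.ndarray) -> np.ndarray:
    """Feature-level fusion: standardize each sensor block, then stack the columns."""
    return np.hstack([zscore(b) for b in blocks])


def ridge_fit(x: np.ndarray, y: np.ndarray, alpha: float = 1.0):
    """Closed-form ridge regression; the intercept is not penalized."""
    x_mean, y_mean = x.mean(axis=0), y.mean()
    xc, yc = x - x_mean, y - y_mean
    w = np.linalg.solve(xc.T @ xc + alpha * np.eye(x.shape[1]), xc.T @ yc)
    return w, y_mean - x_mean @ w


def r2(y: np.ndarray, y_hat: np.ndarray) -> float:
    """Coefficient of determination."""
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)


# Synthetic plants: 2 RGB features (e.g. projected leaf area, greenness) and
# 1 thermal feature (e.g. canopy-air temperature difference). Purely illustrative.
rng = np.random.default_rng(0)
n = 200
rgb = rng.normal(size=(n, 2))
thermal = rng.normal(size=(n, 1))
y = 1.5 * rgb[:, 0] + 0.5 * rgb[:, 1] - 1.0 * thermal[:, 0] + rng.normal(scale=0.3, size=n)

scores = {}
for name, x in [("RGB only", fuse(rgb)), ("RGB + thermal", fuse(rgb, thermal))]:
    w, b = ridge_fit(x, y)
    scores[name] = r2(y, x @ w + b)

print({k: round(float(v), 3) for k, v in scores.items()})
```

The synthetic target depends on the thermal feature, so the fused model explains noticeably more variance than the RGB-only model. These scores are in-sample. A real evaluation would compute the standardization statistics on training data only and report performance on held-out plants.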

The continuous development of optical sensing technology is giving greater impetus to more integrated and intelligent decision support systems in greenhouse vegetable production. However, most applications remain confined to controlled experimental settings. The transition to practical use still requires overcoming key challenges such as multi-sensor data fusion, environmental adaptability, and better integration with greenhouse systems. Continuing to narrow the gap between optical sensing technology and practical production management will move facility agriculture toward greater adaptability, efficiency, and sustainability.

Author Contributions

Conceptualization, Z.L. (Zonghua Leng), X.Z., X.W., and S.T.; methodology, Z.L. (Zonghua Leng), X.Z., X.W., and Y.Z.; validation, S.T. and Y.Z.; formal analysis, Y.Z., X.H., and Z.L. (Zhaowei Li); investigation, X.H. and Z.L. (Zhaowei Li); writing—original draft preparation, Z.L. (Zonghua Leng) and X.W.; writing—review and editing, X.H. and Z.L. (Zhaowei Li); supervision, X.Z. and S.T.; funding acquisition, X.Z., S.T., and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant No. 2022YFD2002302); the Jiangsu Province Industry Forward-looking Program Project (Grant No. BE2023017); the National Key Research and Development Program for Young Scientists (Grant No. 2022YFD2000200); and the Agricultural Equipment Department of Jiangsu University (Grant No. NZXB20210106).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article. The data presented in this study can be requested from the authors.

Acknowledgments

The authors express their deep gratitude to the Agricultural Engineering College of Jiangsu University for its support. The authors also thank the reviewers for their valuable feedback. During the preparation of this manuscript, the authors used [Doubao, seed-1.6, ByteDance Ltd.] for preparatory composition assistance. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

This literature review used generative artificial intelligence (AI), specifically the large language model [Doubao, seed-1.6, ByteDance Ltd.], as an auxiliary tool to improve research efficiency during specific, non-interpretive stages of manuscript preparation. The use of AI was strictly limited to preparatory and editing assistance. It was not used to generate any scientific interpretations, conclusions, or original data analysis. The authors are solely responsible for the entire intellectual content, critical analysis, and final synthesis presented in this work. AI was applied in the following phases, with explicit oversight:

Ideation and Outline Structuring (Phase 1): In the initial exploratory phase, AI was prompted to generate potential high-level structures and thematic headings for a literature review on optical sensing in facility vegetables. The authors critically evaluated all AI-suggested outlines, selecting and substantially modifying a logical framework that served as a preliminary scaffold. This scaffold was then entirely restructured, expanded, and refined based on the authors’ deep domain knowledge and the actual literature findings.

Literature Sifting and Initial Summarization (Phase 2): For a subset of the collected papers, AI was used to generate very brief initial summaries of abstracts or specific sections (e.g., methodology) to aid rapid triage. Every AI-generated summary was rigorously fact-checked against the full text of the original source, then expanded upon and critically integrated by the authors. No summary was adopted without full author validation and synthesis.

Language Polishing and Editing (Phase 3): In the final editing stage, AI was used as an advanced grammar and syntax checker to improve the clarity and fluency of sentences originally drafted by the authors. It was also used to suggest alternative wordings for specific phrases. All suggestions were accepted, rejected, or edited at the sole discretion of the authors to ensure that they accurately reflected the intended scientific meaning.

The core academic contributions are exclusively those of the authors. These include the formulation of the research scope, the critical analysis and interpretation of the reviewed literature, the identification of research gaps, the drawing of conclusions, and the crafting of all original arguments. The AI tool functioned purely as an assistive instrument, analogous to an advanced search or editing tool. All prompts, inputs, and, most importantly, the final output were under the complete control and judgment of the human authors. The manuscript in its entirety represents the authors’ original scholarship and critical thinking.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zuo, L. Analysis and Suggestions on High-quality Development of China’s Modern Facility Animal Husbandry. Agric. Outlook 2024, 20, 3–6.

- Zhu, B.; Zhang, Y.; Sun, Y.; Shi, Y.; Ma, Y.; Guo, Y. Quantitative estimation of organ-scale phenotypic parameters of field crops through 3D modeling using extremely low altitude UAV images. Comput. Electron. Agric. 2023, 210, 107910.

- Xiao, Q.; Bai, X.; Zhang, C.; He, Y. Advanced high-throughput plant phenotyping techniques for genome-wide association studies: A review. J. Adv. Res. 2022, 35, 215–230.

- Zhang, H.; Zhou, J.; Zheng, X.; Zhang, Z.; Wang, Z.; Tan, X. Characterization of a Desiccation Stress Induced Lipase Gene from Brassica napus L. J. Agric. Sci. Technol. 2016, 18, 1129–1141.

- Movahedi, A.; Aghaei-Dargiri, S.; Barati, B.; Kadkhodaei, S.; Wei, H.; Sangari, S.; Yang, L.; Xu, C. Plant immunity is regulated by biological, genetic, and epigenetic factors. Agronomy 2022, 12, 2790.

- Du, J.; Fan, J.; Wang, C.; Lu, X.; Zhang, Y.; Wen, W.; Liao, S.; Yang, X.; Guo, X.; Zhao, C. Greenhouse-based vegetable high-throughput phenotyping platform and trait evaluation for large-scale lettuces. Comput. Electron. Agric. 2021, 186, 106193.

- Shi, J.; Wang, Y.; Li, Z.; Huang, X.; Shen, T.; Zou, X. Simultaneous and nondestructive diagnostics of nitrogen/magnesium/potassium-deficient cucumber leaf based on chlorophyll density distribution features. Biosyst. Eng. 2021, 212, 458–467.

- Zhu, W.; Li, L.; Zhou, Z.; Yang, X.; Hao, N.; Guo, Y.; Wang, K. A colorimetric biosensor for simultaneous ochratoxin A and aflatoxins B1 detection in agricultural products. Food Chem. 2020, 319, 126544.

- Liu, F.; Yang, R.; Chen, R.; Guindo, M.L.; He, Y.; Zhou, J.; Lu, X.; Chen, M.; Yang, Y.; Kong, W. Digital techniques and trends for seed phenotyping using optical sensors. J. Adv. Res. 2024, 63, 1–16.

- Zhao, G.; Cai, W.; Wang, Z.; Wu, H.; Peng, Y.; Cheng, L. Phenotypic parameters estimation of plants using deep learning-based 3-D reconstruction from single RGB image. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zhang, L.; Song, X.; Niu, Y.; Zhang, H.; Wang, A.; Zhu, Y.; Zhu, X.; Chen, L.; Zhu, Q. Estimating winter wheat plant nitrogen content by combining spectral and texture features based on a low-cost UAV RGB system throughout the growing season. Agriculture 2024, 14, 456.

- Zhou, X.; Sun, J.; Mao, H.; Wu, X.; Zhang, X.; Yang, N. Visualization research of moisture content in leaf lettuce leaves based on WT-PLSR and hyperspectral imaging technology. J. Food Process Eng. 2018, 41, e12647.

- Vadivambal, R.; Jayas, D.S. Applications of thermal imaging in agriculture and food industry—A review. Food Bioprocess Technol. 2011, 4, 186–199.

- Wen, T.; Li, J.-H.; Wang, Q.; Gao, Y.-Y.; Hao, G.-F.; Song, B.-A. Thermal imaging: The digital eye facilitates high-throughput phenotyping traits of plant growth and stress responses. Sci. Total Environ. 2023, 899, 165626.

- Qiu, X.; Wu, M.; Mukai, K.; Shimasaki, Y.; Oshima, Y. Effects of elevated irradiance, temperature, and rapid shifts of salinity on the chlorophyll a fluorescence (OJIP) transient of Chattonella marina var. antiqua. J. Fac. Agric. Kyushu Univ. 2019, 64, 293–300.

- Guo, Z.; Wu, X.; Jayan, H.; Yin, L.; Xue, S.; El-Seedi, H.R.; Zou, X. Recent developments and applications of surface enhanced Raman scattering spectroscopy in safety detection of fruits and vegetables. Food Chem. 2024, 434, 137469.

- Roitsch, T.; Cabrera-Bosquet, L.; Fournier, A.; Ghamkhar, K.; Jiménez-Berni, J.; Pinto, F.; Ober, E.S. New sensors and data-driven approaches—A path to next generation phenomics. Plant Sci. 2019, 282, 2–10.

- Du, Z.; Hu, Y.; Ali Buttar, N.; Mahmood, A. X-ray computed tomography for quality inspection of agricultural products: A review. Food Sci. Nutr. 2019, 7, 3146–3160.

- Songtao, H.; Ruifang, Z.; Yinghua, W.; Zhi, L.; Jianzhong, Z.; He, R.; Wanneng, Y.; Peng, S. Extraction of potato plant phenotypic parameters based on multi-source data. Smart Agric. 2023, 5, 132.

- Wei, L.; Yang, H.; Niu, Y.; Zhang, Y.; Xu, L.; Chai, X. Wheat biomass, yield, and straw-grain ratio estimation from multi-temporal UAV-based RGB and multispectral images. Biosyst. Eng. 2023, 234, 187–205.

- Sun, Y.; Luo, Y.; Zhang, Q.; Xu, L.; Wang, L.; Zhang, P. Estimation of crop height distribution for mature rice based on a moving surface and 3D point cloud elevation. Agronomy 2022, 12, 836.

- Hong, F.; Qu, C.; Wang, L. Cerium improves growth of maize seedlings via alleviating morphological structure and oxidative damages of leaf under different stresses. J. Agric. Food Chem. 2017, 65, 9022–9030.

- Garbouge, H.; Rasti, P.; Rousseau, D. Enhancing the tracking of seedling growth using RGB-Depth fusion and deep learning. Sensors 2021, 21, 8425.

- Yamaguchi, N.; Fukuda, O.; Okumura, H.; Yeoh, W.L.; Tanaka, M. Estimating tomato plant leaf area in greenhouse environment using multiple RGB-D cameras. In Proceedings of the 2024 Twelfth International Symposium on Computing and Networking Workshops (CANDARW), Naha, Japan, 26–29 November 2024; pp. 179–182.

- Diago, M.P.; Sanz-Garcia, A.; Millan, B.; Blasco, J.; Tardaguila, J. Assessment of flower number per inflorescence in grapevine by image analysis under field conditions. J. Sci. Food Agric. 2014, 94, 1981–1987.

- Bhattarai, U.; Karkee, M. A weakly-supervised approach for flower/fruit counting in apple orchards. Comput. Ind. 2022, 138, 103635.