Current Research Status and Development Trends of Key Technologies for Pear Harvesting Robots

Abstract

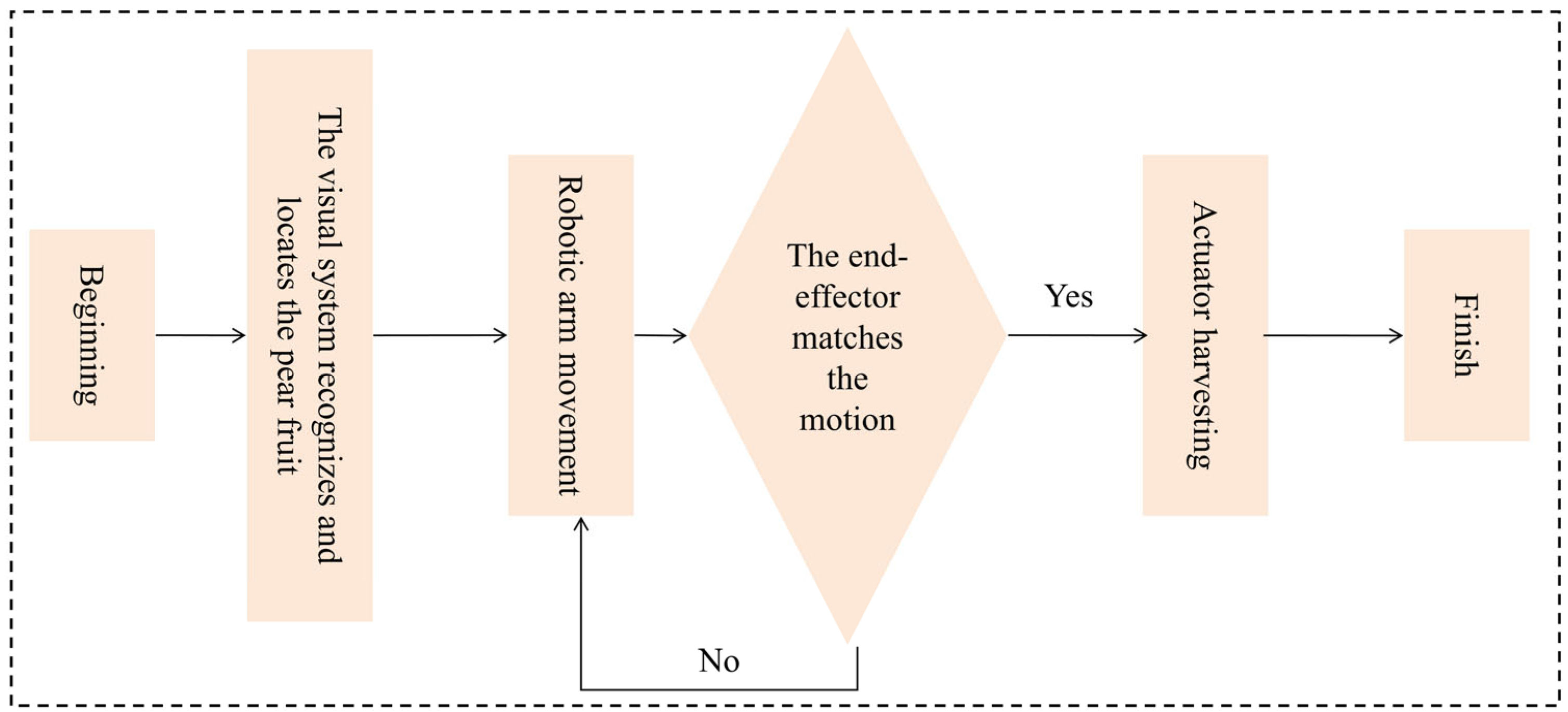

1. Introduction

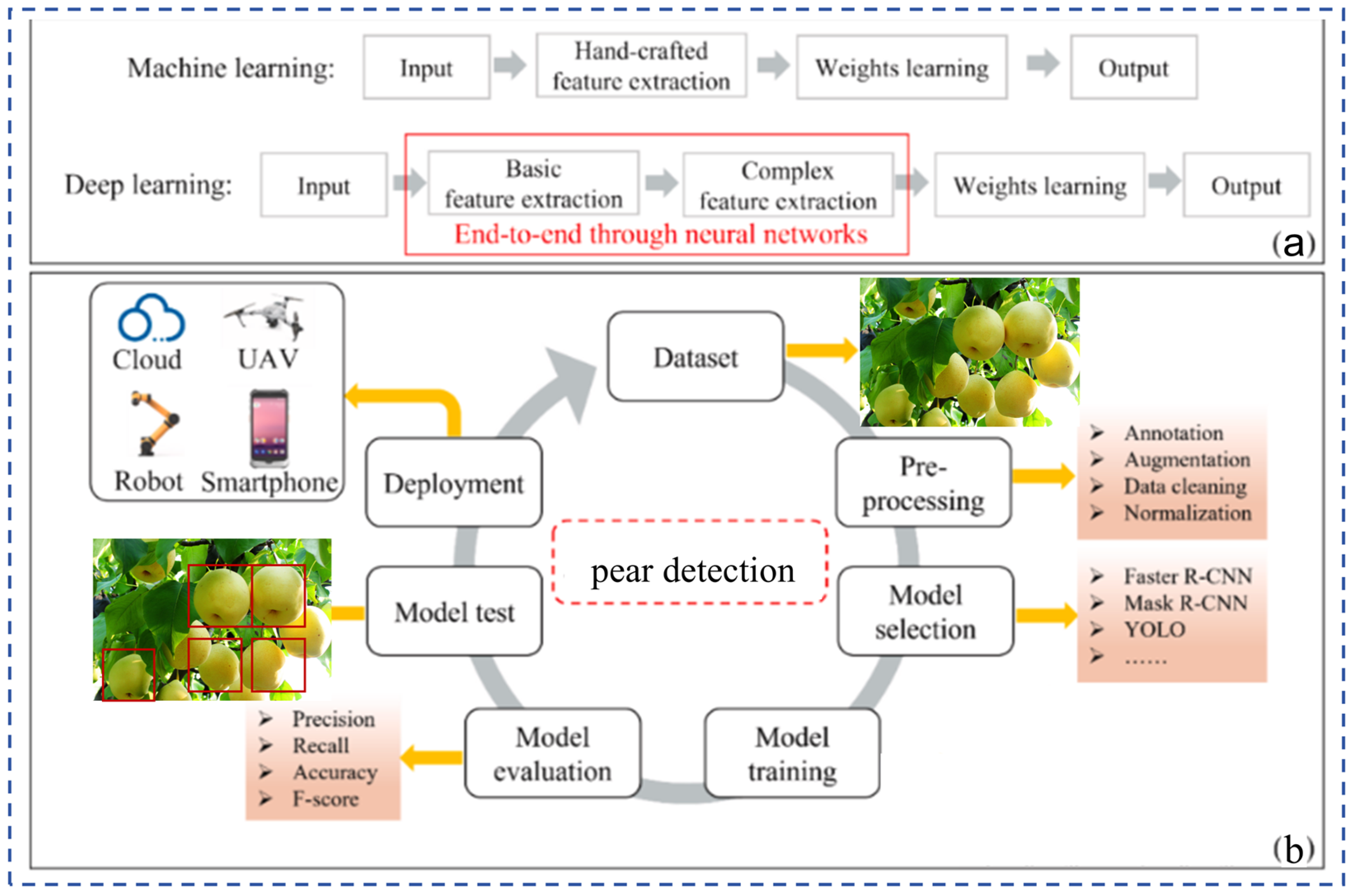

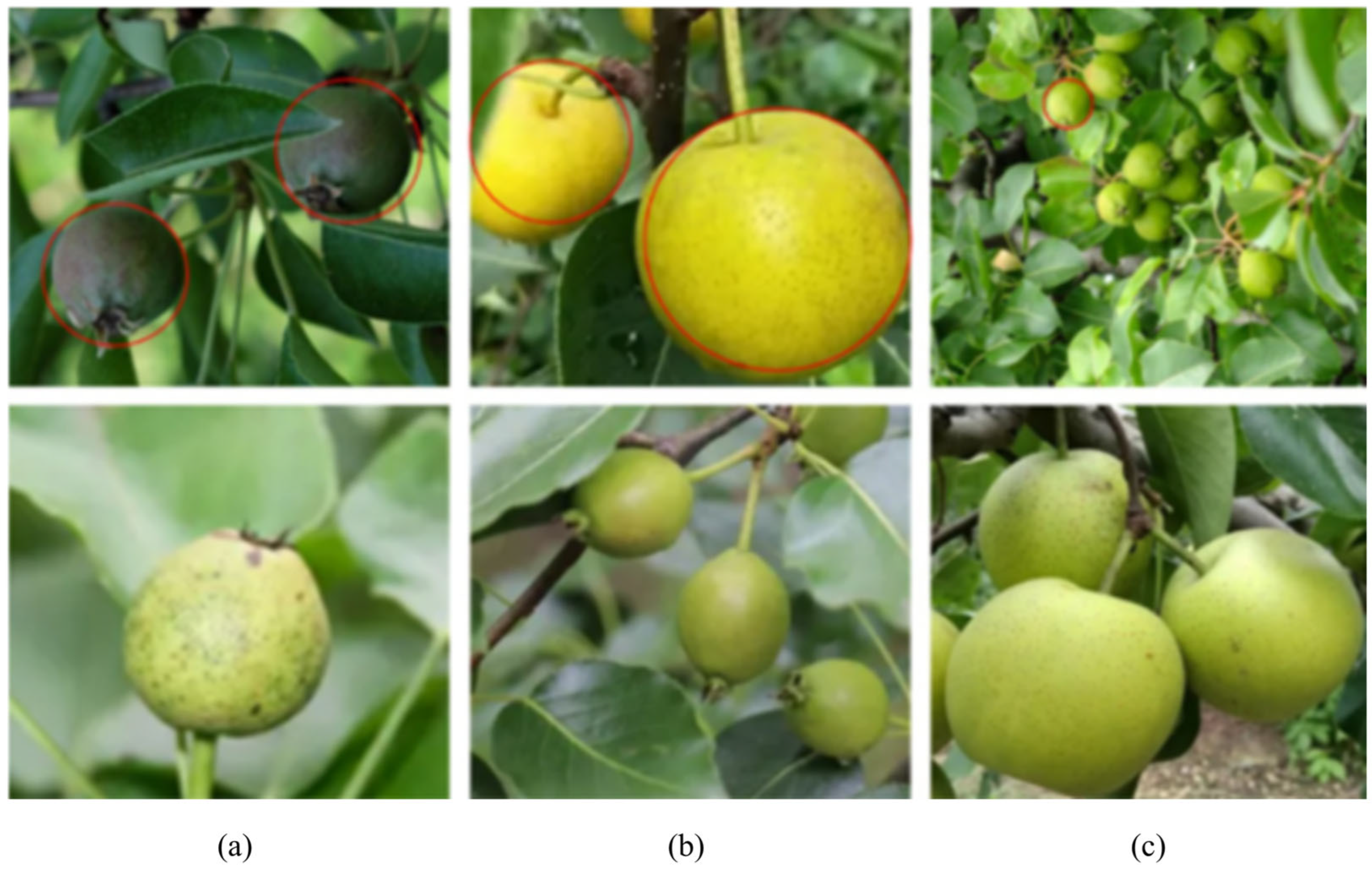

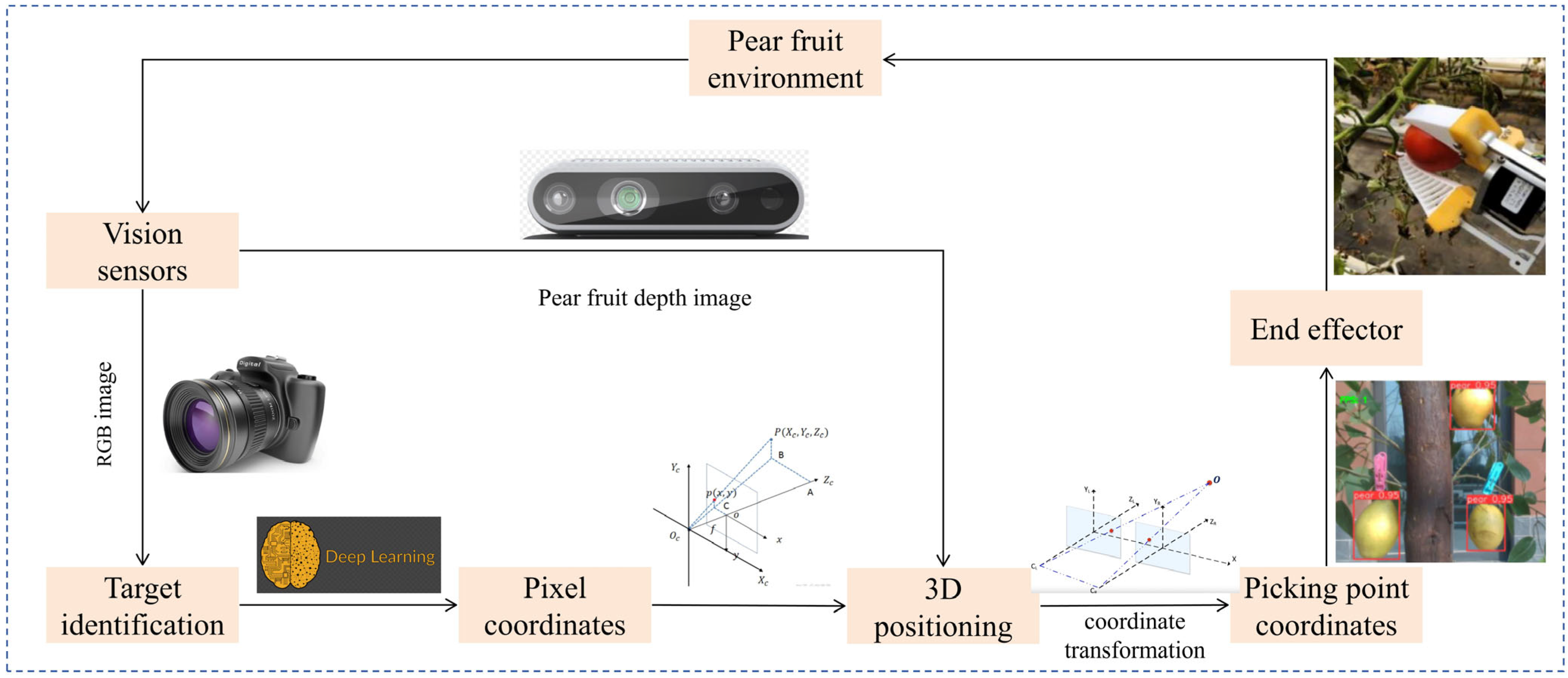

2. Recognition Technology for Pear Harvesting Robots

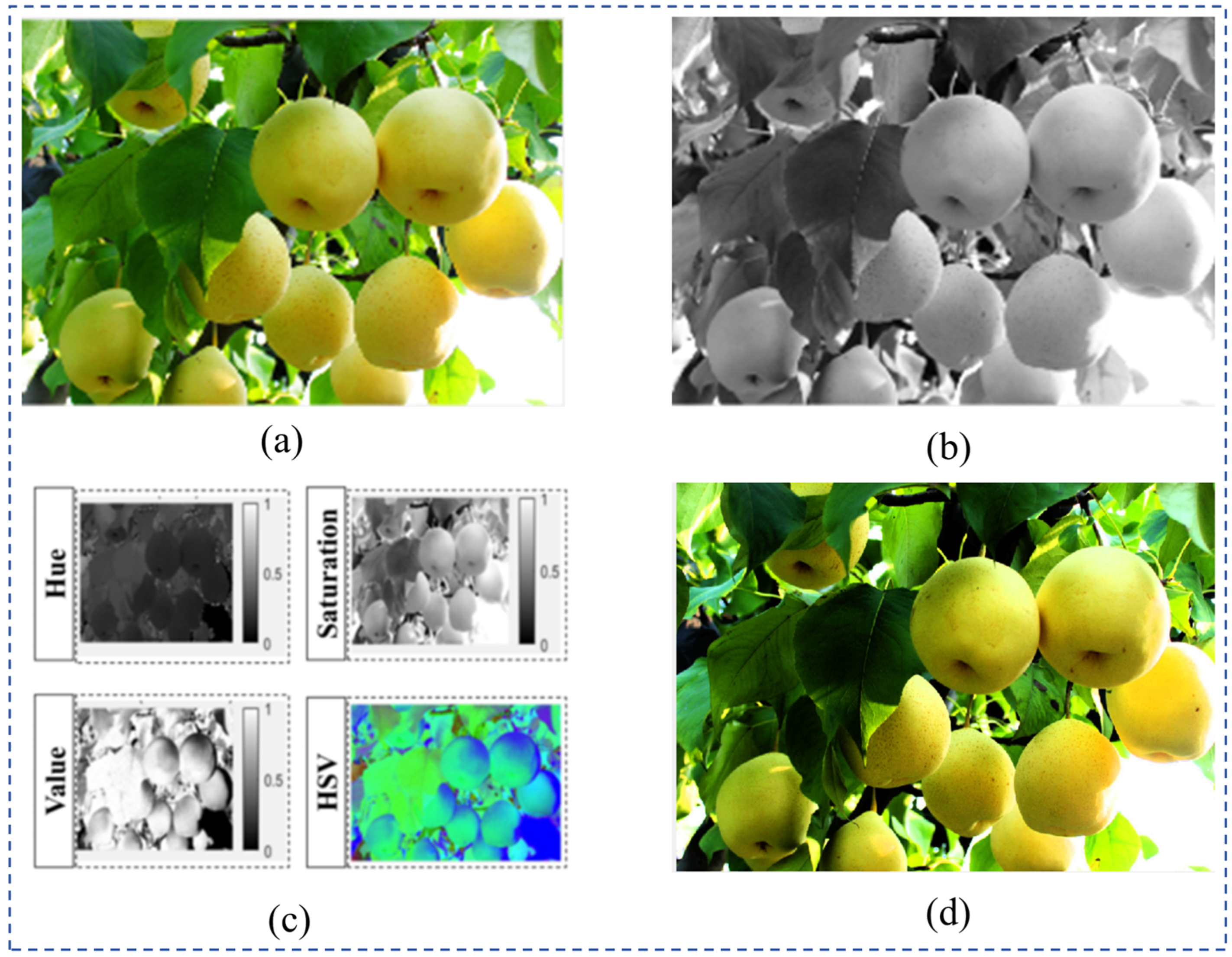

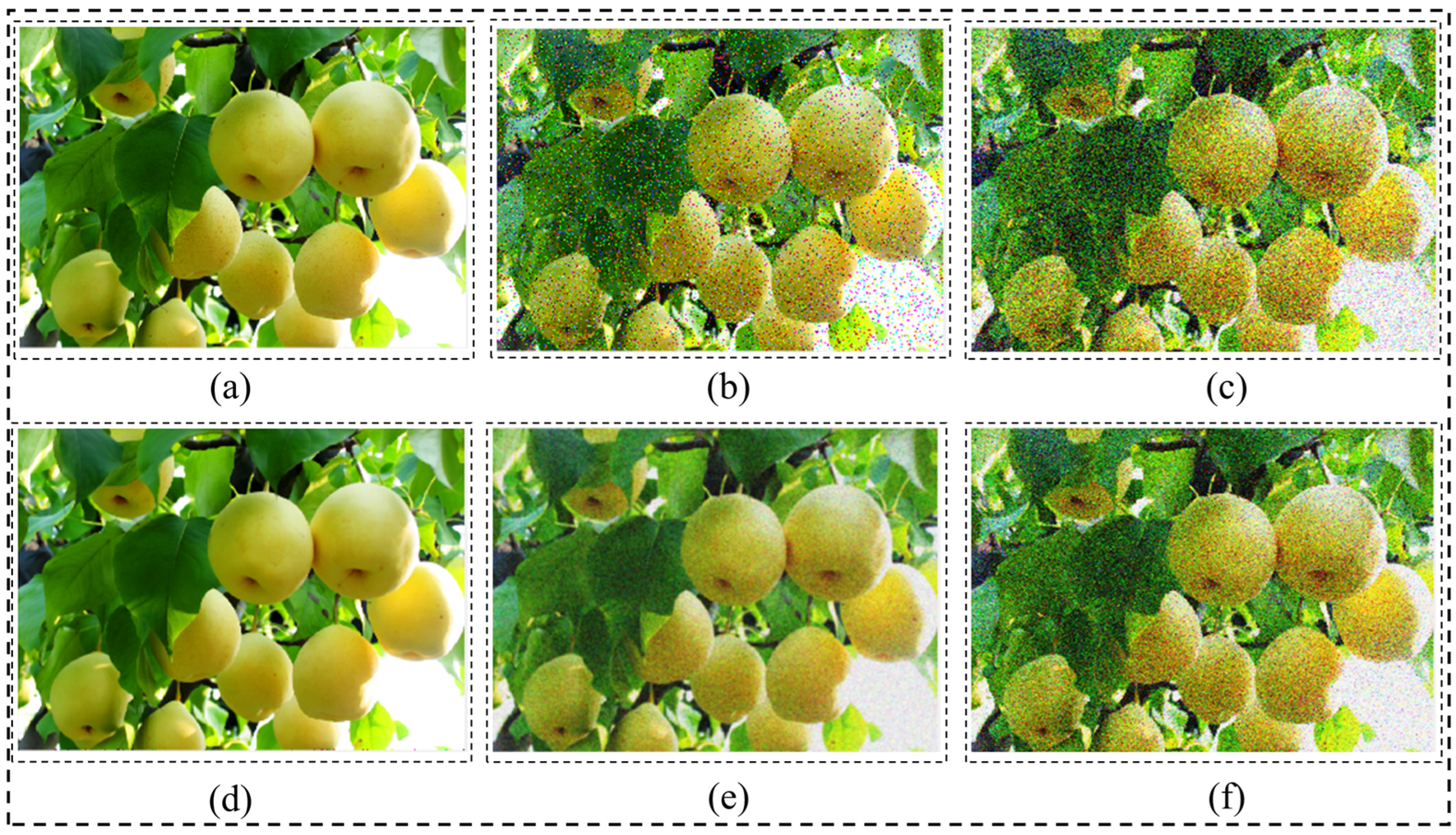

2.1. Traditional Image Recognition Methods

2.2. Deep Learning Recognition Methods

3. Localization of Pear Harvesting Robots

3.1. Two-Dimensional Information Acquisition

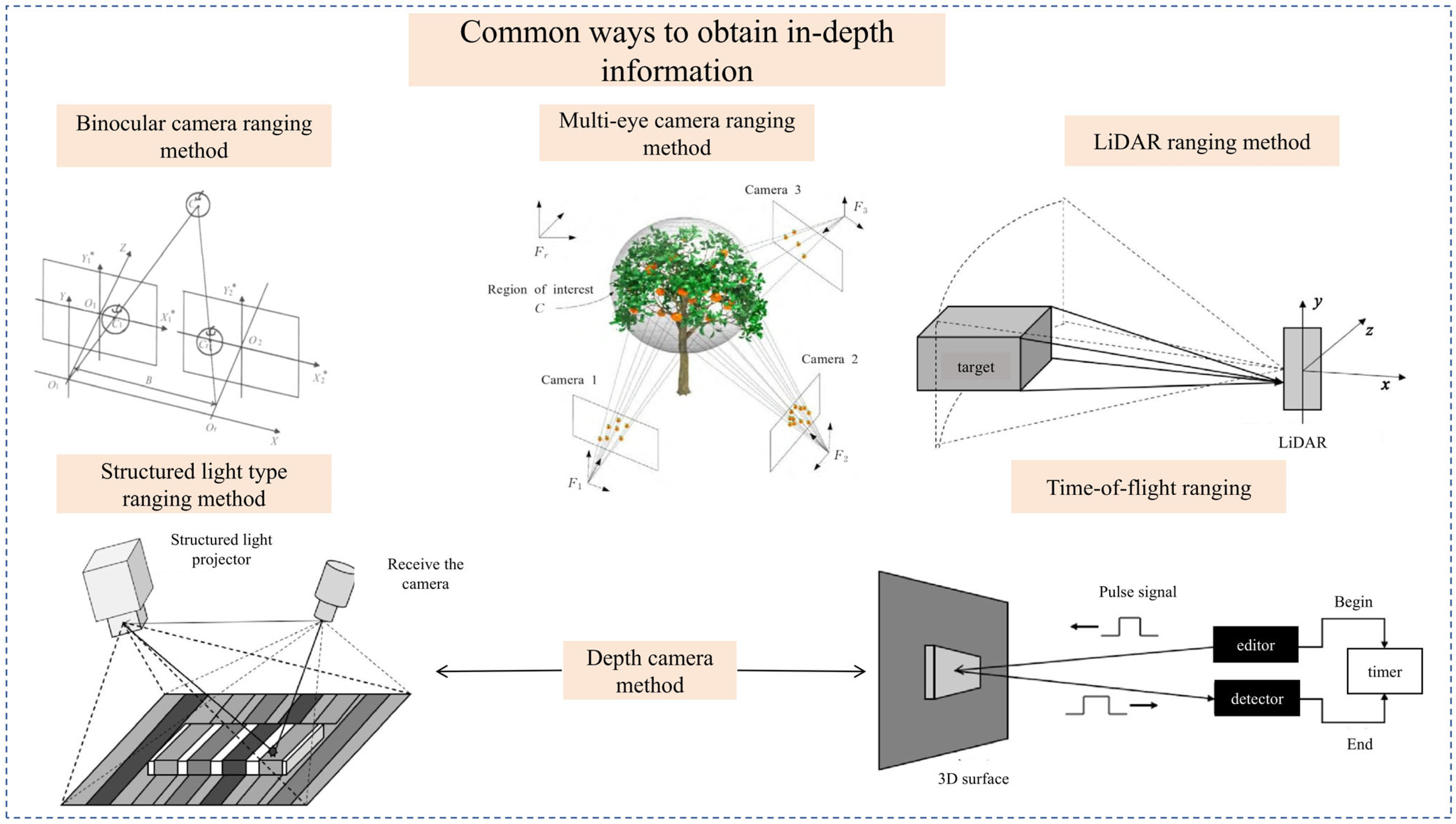

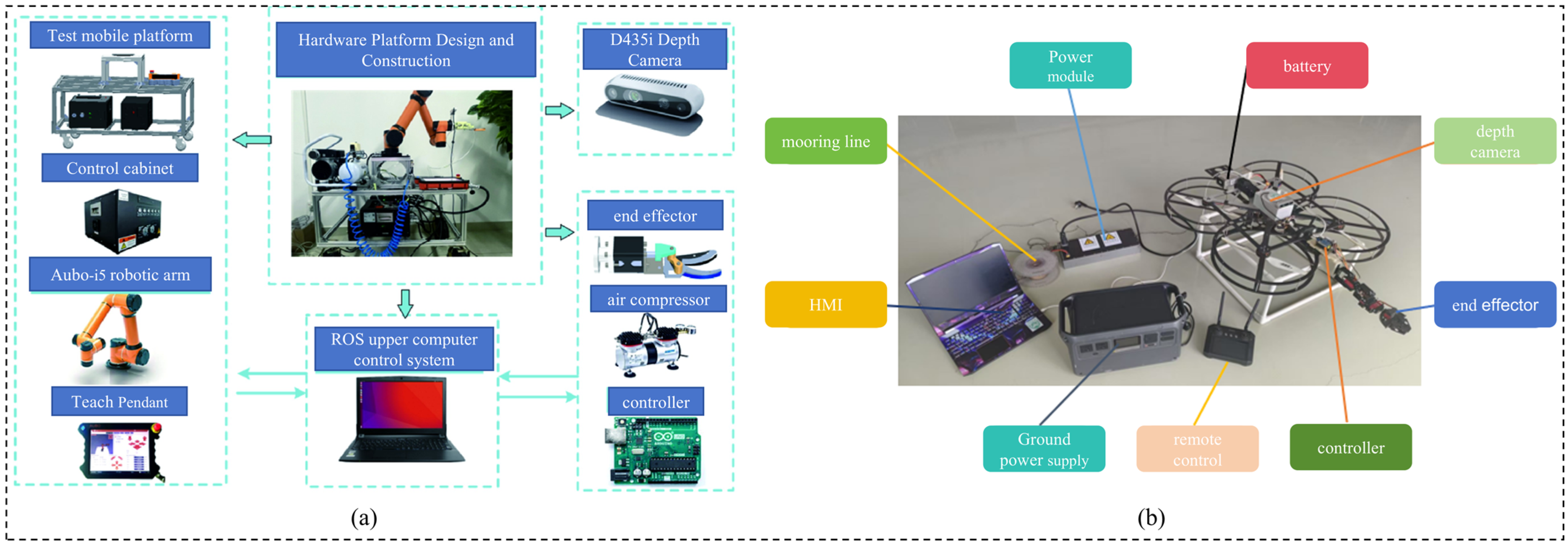

3.2. Depth Information Acquisition

3.3. Pose Acquisition

3.4. Pear Fruit Vibration Problem

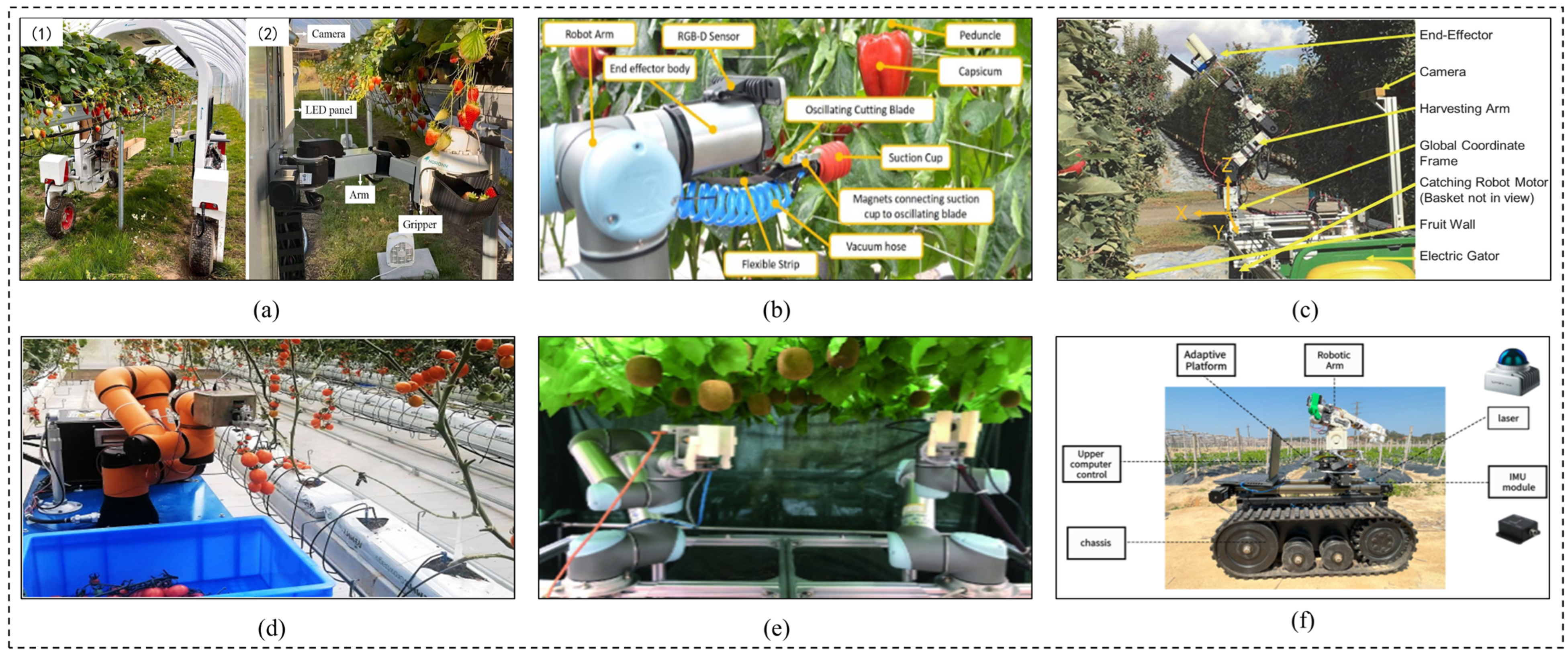

4. End Effector of the Pear Harvesting Robot

4.1. Harvesting Methods

4.2. End Effector Drive Methods

5. Discussion and Future Perspectives

5.1. Discussion

5.1.1. The Gap Between High-Precision Perception and Low-Success-Rate Execution

5.1.2. Intrinsic Trade-Offs in Technical Pathways and Compatibility Conflicts with Agricultural Scenarios

5.1.3. Core Conflict: Environmental Unstructuredness vs. Algorithmic Adaptability

5.2. Future Perspectives

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Food and Agriculture Organization of the United Nations. “Production (t)” [Dataset]. Food and Agriculture Organization of the United Nations (2025) [Original Data]. 2025. Available online: https://ourworldindata.org/explorers/global-food?tab=chart&pickerSort=desc&pickerMetric=production__tonnes&Food=Pears&Metric=Production&Percapita=false&country=CHN~OWID_WRL (accessed on 27 March 2025).

2. Zhao, C.; Fan, B.; Li, J.; Feng, Q. Agricultural robots: Technology progress, challenges and trends. Smart Agric. 2023, 5, 1–15.

3. Xiong, Y.; Ge, Y.; From, P.J. An improved obstacle separation method using deep learning for object detection and tracking in a hybrid visual control loop for fruit picking in clusters. Comput. Electron. Agric. 2021, 191, 106508.

4. Cui, Y.; Ma, L.; He, Z.; Zhu, Y.; Wang, Y.; Li, K. Design and Experiment of Dual Manipulators Parallel Harvesting Platform for Kiwifruit Based on Optimal Space. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2022, 53, 132–143.

5. An Israeli company has developed drones for fruit harvesting. Sens. World 2022, 28, 1.

6. Lv, J.; Zhong, X.; Peng, Y. Design of a Fruit-Picking Drone Based on YOLOv5. China South. Agric. Mach. 2025, 56, 4.

7. Goswami, P.; Vaishnav, R.; Anand, T.; Dayal, P. A Comprehensive Review on LiDAR Based 3D Deep Learning Object Detection Algorithms. In Proceedings of the 2025 International Conference on Computer, Electrical and Communication Engineering, ICCECE 2025, Kolkata, India, 7–8 February 2025.

8. Song, J.; Zhang, T.; Xu, L.; Tang, X. Research actuality and prospect of picking robot for fruits and vegetables. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2006, 37, 158–162.

9. Wang, B. Research on Key Technologies for Pear Harvesting Robots Based on ROS and YOLOv5. Master’s Thesis, Hebei University, Baoding, China, 2024.

10. Meng, Y.; Wang, J.; Tian, E.; Yu, Y. Vision System Design of Picking Robot Based on Image Edge Detection Technology. J. Agric. Mech. Res. 2020, 42, 245–248.

11. Teng, J.; Xu, H.; Wang, Y.; Zhang, Z. Design and Simulation of Motion Trajectory Planning for Manipulator of Picking Robot. Comput. Simul. 2017, 34, 362–367.

12. Lehnert, C.; English, A.; McCool, C.; Tow, A.W.; Perez, T. Autonomous Sweet Pepper Harvesting for Protected Cropping Systems. IEEE Robot. Autom. Lett. 2017, 2, 872–879.

13. Hohimer, C.J.; Wang, H.; Bhusal, S.; Miller, J.; Mo, C.; Karkee, M. Design and field evaluation of a robotic apple harvesting system with a 3d-printed soft-robotic end-effector. Trans. ASABE 2019, 62, 405–414.

14. Zhang, Q.; Liu, F.; Jiang, X.; Xiong, Z.; Xu, C. Motion planning method and experiments of tomato bunch harvesting manipulator. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2021, 37, 149–156.

15. Han, W.K.; Luo, J.L.; Wang, J.T.; Gu, Q.H.; Lin, L.J.; Gao, Y.; Chen, H.R.; Luo, K.Y.; Zeng, Z.X.; He, J. Design of a Chili Pepper Harvesting Device for Hilly Chili Fields. Agronomy 2025, 15, 1118.

16. Jinyang, H.; Chenghai, Y.; Kairan, L. Design and experiment of mango picking robot. J. Shihezi Univ. (Nat. Sci.) 2025, 43, 152–159.

17. Jin, S.; Zhou, H.; Jiang, H.; Sun, M. Research progress on visual system of picking robot. Jiangsu J. Agric. Sci. 2023, 39, 582–595.

18. Qin, Z. A Discussion on the Application of Computer Technology in Image Morphological Processing. J. Pu’er Univ. 2019, 35, 24–26.

19. Wang, J.; Wang, H. An edge detection algorithm for noisy images based on OTSU adaptive threshold segmentation. Pract. Electron. 2025, 33, 42–45.

20. Zhang, H.; Zhang, Y. K-means clustering method for color image segmentation based on Lab space. J. Gannan Norm. Univ. 2019, 40, 44–48.

21. Chen, J. Large Sample Iterative Training Algorithm for Support Vector Machine. Mod. Inf. Technol. 2025, 9, 85–91.

22. Patel, K.K.; Kar, A.; Jha, S.N.; Khan, M.A. Machine vision system: A tool for quality inspection of food and agricultural products. J. Food Sci. Technol. 2012, 49, 123–141.

23. He, Z.; Sun, L.; Rui, Y. Detection of Small Surface Defects Based on Machine Vision. J. Appl. Sci. 2012, 30, 531–537.

24. Zhao, D. Research on Rapid Nondestructive Testing Method and Grading Equipment of Pear External Quality Based on Machine. Master’s Thesis, Hebei Agricultural University, Baoding, China, 2023.

25. Zhao, Y. Research on the Detection and Harvesting Location of Prickly Pear Based on Machine Vision. Master’s Thesis, Guizhou University, Guiyang, China, 2020.

26. Niu, B.; Zhang, X. Research on Digital Image Processing Methods Based on Computer Vision: A Case Study of Pear Fruit Detection and Classification. Inf. Rec. Mater. 2021, 22, 195–197.

27. Hosseini, S.; Zade, B.M.H. New hybrid method for attack detection using combination of evolutionary algorithms, SVM, and ANN. Comput. Netw. 2020, 173, 107168.

28. Li, Z. Intelligent Classification Methods and Applications of Weili Pear Quality. Master’s Thesis, Hebei University of Technology, Tianjin, China, 2023.

29. Zhang, Y.; Wu, L. Classification of Fruits Using Computer Vision and a Multiclass Support Vector Machine. Sensors 2012, 12, 12489–12505.

30. Lei, H.; Jiao, Z.; Ma, J.; Wu, L.; Zhong, Z. Fast Recognition Algorithm of Apple Varieties Based on Multi Feature Fusion and SVM. Autom. Inf. Eng. 2020, 41, 13–17.

31. Jiao, Y.; Luo, R.; Li, Q.; Deng, X.; Yin, X.; Ruan, C.; Jia, W. Detection and Localization of Overlapped Fruits Application in an Apple Harvesting Robot. Electronics 2020, 9, 1023.

32. Zhao, P.; Cai, W.; Zhou, W.; Li, N. Revolutionizing automated pear picking using Mamba architecture. Plant Methods 2024, 20, 167.

33. Zhang, R. Research and Implementation of Key Technology for Real-Time Video Streaming Object Detection. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2021.

34. Wang, N.; Zhi, M. Review of One-Stage Universal Object Detection Algorithms in Deep Learning. J. Front. Comput. Sci. Technol. 2025, 19, 1115–1140.

35. Chen, Y.; Li, W.; Weng, H.; Zheng, J.; Lun, J. Overview of Two-Stage Object Detection Algorithms Based on Deep Learning. Inf. Comput. 2023, 14, 3.

36. Wang, H.; Mou, Q.; Yue, Y.; Zhao, H. Research on universal detection model of fruit picking based on YOLOv3. China Sci. 2021, 16, 336–342.

37. Ma, S. Research on Pear Fruit Recognition Based on Improved YOLOv4 and Yield Prediction Model. Master’s Thesis, Hebei Agricultural University, Baoding, China, 2022.

38. Li, Y.; Rao, Y.; Jin, X.; Jiang, Z.; Wang, Y.; Wang, T.; Wang, F.; Luo, Q.; Liu, L. YOLOv5s-FP: A Novel Method for In-Field Pear Detection Using a Transformer Encoder and Multi-Scale Collaboration Perception. Sensors 2023, 23, 30.

39. Parico, A.I.; Ahamed, T. Real Time Pear Fruit Detection and Counting Using YOLOv4 Models and Deep SORT. Sensors 2021, 21, 4803.

40. Zheng, W.; Yang, Y. Target detection method for fragrant pears at mature stage based on improved lightweight YOLO v7. Jiangsu Agric. Sci. 2024, 52, 121–128.

41. Tan, H.; Ma, W.; Tian, Y.; Zhang, Q.; Li, M.; Li, M.; Yang, X. Improved YOLOv8n object detection of fragrant pears. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2024, 40, 178–185.

42. Zhao, P.; Zhou, W.; Na, L. High-precision object detection network for automate pear picking. Sci. Rep. 2024, 14, 14965.

43. Jia, J.; Cui, J. Application Research of Intelligent Robot in the Field of Agricultural Automation. J. Kaifeng Univ. 2023, 4, 6.

44. Yan, J.; Zhao, Y.; Zhang, L.; Su, X.; Liu, H.; Zhang, F.; Fan, W.; He, L. Recognition of Rosa roxbunghii in natural environment based on improved Faster RCNN. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2019, 35, 143–150.

45. Meng, X.; Alifu, K.; Lv, Q.; Zhou, L. Research on Fragrant Pear Target Recognition in Natural Environment Based on Transfer Learning. J. Xinjiang Univ. (Nat. Sci. Ed. Chin. Engl.) 2019, 4, 7.

46. Zhu, Y. Balsam Pear Segmentation and Picking Point Study Based on Improved Mask R-C. Master’s Thesis, Tarim University, Aral, China, 2024.

47. Pan, S.; Ahamed, T. Pear Recognition in an Orchard from 3D Stereo Camera Datasets to Develop a Fruit Picking Mechanism Using Mask R-CNN. Sensors 2022, 22, 4187.

48. Montoya-Cavero, L.-E.; Díaz de León Torres, R.; Gómez-Espinosa, A.; Cabello, J.A.E. Vision systems for harvesting robots: Produce detection and localization. Comput. Electron. Agric. 2022, 192, 106562.

49. Shi, G.; Zhang, F.; Gou, Y.; Zheng, L.; Cai, J.; Feng, C. Research progress on target recognition and picking point localization of fruit picking robots. J. Chin. Agric. Mech. 2025, 46, 115–124.

50. Cheng, F.; Wu, W.; He, H.; Huang, Y.; Fu, J. Research on Target Recognition and Localization Methods for Citrus Harvesting Robots. Sci. Technol. Inf. 2019, 17, 30–31.

51. Feng, J.; Wang, S.; Liu, G.; Zeng, L. A Separating Method of Adjacent Apples Based on Machine Vision and Chain Code Information. In Proceedings of the Computer and Computing Technologies in Agriculture V, Zhangjiajie, China, 19–21 October 2012; pp. 258–267.

52. Niu, L.; Zhou, W.; Wang, D.; He, D.; Zhang, H.; Song, H. Extracting the symmetry axes of partially occluded single apples in natural scene using convex hull theory and shape context algorithm. Multimed. Tools Appl. 2017, 76, 14075–14089.

53. Ren, R.; Sun, H.; Zhang, S.; Wang, N.; Lu, X.; Jing, J.; Xin, M.; Cui, T. Intelligent Detection of Lightweight “Yuluxiang” Pear in Non-Structural Environment Based on YOLO-GEW. Agronomy 2023, 13, 2418.

54. Li, Y.; He, L.; Jia, J.; Lv, J.; Chen, J.; Qiao, X.; Wu, C. In-field tea shoot detection and 3D localization using an RGB-D camera. Comput. Electron. Agric. 2021, 185, 106149.

55. Gou, Y.; Yan, J.; Zhang, F.; Sun, C.; Xu, Y. Research Progress on Vision System and Manipulator of Fruit Picking Robot. Comput. Eng. Appl. 2023, 59, 13–26.

56. Liu, Y.; Liu, S.; Yang, C.; Wang, K.; Xie, N. Three-dimensional Spatial Localization of Overlapping Citrus Based on Binocular Stereo Vision. J. Agric. Sci. Technol. 2020, 22, 104–112.

57. Li, Y.; Feng, Q.; Li, T.; Xie, F.; Liu, C.; Xiong, Z. Advance of Target Visual Information Acquisition Technology for Fresh Fruit Robotic Harvesting: A Review. Agronomy 2022, 12, 1336.

58. Zhou, G.; Zhu, Y.; Zhang, P. Autonomous Obstacle Avoidance Vehicle Based on the Combination of LiDAR and Depth Camera. Sci. Technol. Innov. 2025, 5, 1–5.

59. Liu, J.; Zhou, D.; Li, Y.; Li, D.; Li, Y.; Rana, R. Monocular distance measurement algorithm for pomelo fruit based on target pixels change. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2021, 37, 183–191.

60. Chen, C.; Li, B.; Liu, J.; Bao, T.; Ren, N. Monocular positioning of sweet peppers: An instance segmentation approach for harvest robots. Biosyst. Eng. 2020, 196, 15–28.

61. Xiong, J.; Lin, R.; Liu, Z.; He, Z.; Tang, L.; Yang, Z.; Zou, X. The recognition of litchi clusters and the calculation of picking point in a nocturnal natural environment. Biosyst. Eng. 2018, 166, 44–57.

62. Ling, X.; Zhao, Y.; Gong, L.; Liu, C.; Wang, T. Dual-arm cooperation and implementing for robotic harvesting tomato using binocular vision. Robot. Auton. Syst. 2019, 114, 134–143.

63. Si, Y.; Liu, G.; Feng, J. Location of apples in trees using stereoscopic vision. Comput. Electron. Agric. 2015, 112, 68–74.

64. Chen, M.; Tang, Y.; Zou, X.; Huang, K.; Huang, Z.; Zhou, H.; Lian, G. Three-dimensional perception of orchard banana central stock enhanced by adaptive multi-vision technology. Comput. Electron. Agric. 2020, 174, 105508.

65. Neupane, C.; Koirala, A.; Wang, Z.; Walsh, K.B. Evaluation of Depth Cameras for Use in Fruit Localization and Sizing: Finding a Successor to Kinect v2. Agronomy 2021, 11, 1780.

66. Kang, H.; Wang, X.; Chen, C. Accurate fruit localisation using high resolution LiDAR-camera fusion and instance segmentation. Comput. Electron. Agric. 2022, 203, 107450.

67. Furukawa, Y.; Hernandez, C. Multi-view stereo: A tutorial. Found. Trends Comput. Graph. Vision 2015, 9, 1–148.

68. Giancola, S.; Valenti, M.; Sala, R. A survey on 3D cameras: Metrological comparison of time-of-flight, structured-light and active stereoscopy technologies. In SpringerBriefs in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; pp. 89–90.

69. Zanuttigh, P.; Marin, G.; Dal Mutto, C.; Dominio, F.; Minto, L.; Cortelazzo, G.M. Time-of-Flight and Structured Light Depth Cameras: Technology and Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 1–355.

70. Li, M.; Liu, P. A bionic adaptive end-effector with rope-driven fingers for pear fruit harvesting. Comput. Electron. Agric. 2023, 211, 107952.

71. Shi, Y.; Zhang, W.; Li, Z.; Wang, Y.; Liu, L.; Cui, Y. A “Global–Local” Visual Servo System for Picking Manipulators. Sensors 2020, 20, 3366.

72. Gao, R.; Zhou, Q.; Cao, S.; Jiang, Q. An Algorithm for Calculating Apple Picking Direction Based on 3D Vision. Agriculture 2022, 12, 1170.

73. Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of Fruit-Bearing Branches and Localization of Litchi Clusters for Vision-Based Harvesting Robots. IEEE Access 2020, 8, 117746–117758.

74. Yang, Q.; Chen, C.; Dai, J.; Xun, Y.; Bao, G. Tracking and recognition algorithm for a robot harvesting oscillating apples. Int. J. Agric. Biol. Eng. 2020, 13, 163–170.

75. Guo, Z.; Yin, C.; Wu, X.; Chen, Q.; Wang, J.; Zhou, H. Research status and prospect of key technologies of fruit picking manipulator. Jiangsu J. Agric. Sci. 2024, 6, 1142–1152.

76. Li, G.; Ji, C.; Zhai, L. Research progress and analysis of end-effector for fruits and vegetables picking robot. J. Chin. Agric. Mech. 2014, 5, 7.

77. Peng, Y.; Liu, Y.; Yang, Y.; Yang, Y.; Liu, N.; Sun, Y. Research progress on application of soft robotic gripper in fruit and vegetable picking. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2018, 34, 11–20.

78. Yang, X.; Yang, Q.; Liu, L. Design and Experiment of End Effector for Facility Tomato Harvesting Robot. J. Agric. Mech. Res. 2025, 47, 126–134.

79. Chen, T.; Zhang, S.; Fu, G.; Chen, J.; Zhu, L. Review of Research Progress on Tropical Fruit Harvesting Robots. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2025, 56, 184–201.

80. Liu, J.; Xu, X.; Li, P. Analysis and experiment on laser cutting of fruit peduncles. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2014, 45, 59–64.

81. Bulanon, D.M.; Kataoka, T. Fruit detection system and an end effector for robotic harvesting of Fuji apples. Agric. Eng. Int. CIGR J. 2010, 12, 203–210.

82. Zhang, H.; Li, X.; Wang, L.; Liu, D.; Wang, S. Construction and Optimization of a Collaborative Harvesting System for Multiple Robotic Arms and an End-Picker in a Trellised Pear Orchard Environment. Agronomy 2024, 14, 80.

83. Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

84. Wang, S. Development and Test of Mechanical Picking Device for Pear Fruit. Master’s Thesis, Shandong Agricultural University, Tai’an, China, 2023.

85. Liu, Y.; Dong, J.; Peng, Y.; Lan, H.; Li, P. Development and Experiment of Korla Fragrant Pear Picking End-effector with Controlled Gripping Pressure. J. Agric. Mech. Res. 2020, 42, 33–39.

86. Gao, Z.; Pang, G.; Li, L.; Zhao, K.; Wang, X.; Ji, C. Design of hand-operated piggyback jaw gripper type simplified picker for pear. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2019, 35, 39–45.

87. Xu, L.; Liu, X.; Zhang, K.; Xing, J.; Yuan, Q.; Chen, J.; Duan, Z.; Ma, S.; Yu, C. Design and test of end-effector for navel orange picking robot. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2018, 34, 53–61.

88. Baeten, J.; Donné, K.; Boedrij, S.; Beckers, W.; Claesen, E. Autonomous Fruit Picking Machine: A Robotic Apple Harvester. In Field and Service Robotics: Results of the 6th International Conference; Laugier, C., Siegwart, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 531–539.

89. Wang, X.; Wu, P.; Feng, Q.; Wang, G. Design and Test of Tomatoes Harvesting Robot. J. Agric. Mech. Res. 2016, 38, 94–98.

90. Guo, H.; Ma, R.; Zhang, Y.; Li, Z. Design and Simulation Analysis of Ya Shaped Underactuated Korla Fragrant Pear Picking Manipulator. J. Agric. Mech. Res. 2023, 45, 110–117.

91. Li, G.; Ji, C.; Gu, B.; Xu, W.; Dong, M. Kinematics analysis and experiment of apple harvesting robot manipulator with multiple end-effectors. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2016, 47, 14–21 and 29.

92. Wang, Z. Review of smart robots for fruit and vegetable picking in agriculture. Int. J. Agric. Biol. Eng. 2021, 14, 33–54.

93. Yang, W.; Feng, H.; Han, Y.; Xu, Y. Development of Spherical Fruit Picking Robot End Effector Based on Pneumatic Actuation. J. Agric. Mech. Res. 2019, 41, 149–154.

| Preprocessing Method | Objective | Method | Application Scenario |
|---|---|---|---|
| Color Space Transformation | Reduce sensitivity to illumination; make color features more robust | RGB to HSV, RGB to grayscale | Images captured under varying illumination, where stable pear color features are required |
| Image Denoising | Remove image noise and preserve image quality | Median filtering, low-pass (smoothing) filtering, wavelet denoising, bilateral filtering | Pear fruit detection, where background noise must be suppressed and image clarity preserved |
| Image Enhancement | Increase fruit–background contrast and highlight key features | Contrast enhancement; brightness and contrast adjustment | Separating pear fruits from the background to improve visibility and recognition |
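The three preprocessing steps in the table above can be illustrated with a minimal Python sketch that uses only the standard library. It assumes images are small 2D lists of 8-bit values, and the function names are hypothetical; a production pipeline would normally call an image library such as OpenCV instead. The HSV conversion shows why a color space transformation reduces light sensitivity: a bright and a dim green pixel share the same hue and differ only in value (V).

```python
import colorsys


def rgb_to_hsv_image(img):
    """Convert a 2D list of 8-bit (R, G, B) tuples to (H, S, V) floats in [0, 1]."""
    return [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
            for row in img]


def median_filter(gray, k=3):
    """k x k median filter on a 2D list of intensities; borders clamp to the edge."""
    h, w = len(gray), len(gray[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = sorted(
                gray[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            )
            out[y][x] = window[len(window) // 2]  # middle value of the k*k samples
    return out


def contrast_stretch(gray, lo=0, hi=255):
    """Linear min-max stretch of a 2D list of intensities to the range [lo, hi]."""
    flat = [v for row in gray for v in row]
    vmin, vmax = min(flat), max(flat)
    if vmax == vmin:  # flat image: nothing to stretch
        return [row[:] for row in gray]
    scale = (hi - lo) / (vmax - vmin)
    return [[round(lo + (v - vmin) * scale) for v in row] for row in gray]


# A bright and a dim green pixel: same hue, different value.
hsv = rgb_to_hsv_image([[(0, 255, 0), (0, 128, 0)]])
print([round(h, 3) for h, s, v in hsv[0]])  # [0.333, 0.333]
```

A 3 × 3 median filter removes an isolated bright pixel completely instead of smearing it into its neighbors, which is why median filtering suits impulse (salt-and-pepper) noise better than plain averaging.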

| Dimension | Single-Stage Methods (e.g., YOLO, SSD) | Two-Stage Methods (e.g., Faster R-CNN, Mask R-CNN) |
|---|---|---|
| Detection Flow | Feature extraction → bounding box regression and classification in a single pass | Region proposal → classification and box refinement (two stages) |
| Speed | Higher frame rate | Lower frame rate |
| Accuracy | Moderate; weaker on small objects | Higher; better on small objects |
| Hardware Dependency | Low; can run on a CPU or lightweight hardware | High; typically requires GPU acceleration |
| Application Scenario | Real-time or resource-limited tasks | High-precision, non-time-critical tasks |
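Both detector families in the table produce many overlapping candidate boxes per fruit, which are usually pruned with non-maximum suppression (NMS) before localization. The sketch below shows greedy NMS based on intersection over union (IoU). It is an illustration only: boxes are assumed to be (x1, y1, x2, y2) tuples in pixels, the function names are hypothetical, and the 0.5 IoU threshold is a common default rather than a value reported in the cited pear studies.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy non-maximum suppression; returns indices of kept boxes, best first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)  # highest remaining score
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 overlaps box 0 (IoU ~0.68)
```

In dense pear clusters the threshold is a trade-off: lowering it removes more duplicate boxes but can also suppress a genuinely adjacent, touching fruit.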

| Version | Core Improvements | Pome Fruit Recognition Characteristics (Advantages) |
|---|---|---|
| YOLOv3 | Multi-scale prediction, Darknet-53 backbone | Balances speed and accuracy; suitable for medium-scale deployment |
| YOLOv4 | CSPDarknet backbone, SPP, Mish activation | Strong robustness to occlusion and challenging illumination |
| YOLOv5 | Lightweight design, adaptive anchor boxes | Strong real-time performance on edge devices |
| YOLOv7 | ELAN architecture, compound model scaling | Improves both accuracy and speed in complex scenes |
| YOLOv8 | Anchor-free decoupled head, C2f modules | Multi-task support; strong generalization in complex scenarios |
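The multi-scale prediction introduced with YOLOv3 gives each grid cell, at each of several output scales, a set of anchor (prior) boxes and predicts offsets relative to them. The sketch below follows the box parameterization described in the YOLOv3 paper: the center offset is squashed by a sigmoid so it stays inside its cell, and the width and height rescale the anchor exponentially. The function name and argument layout are hypothetical, and later versions in the table, notably the anchor-free YOLOv8, decode boxes differently.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_yolov3_box(t, cell, anchor, stride):
    """Decode raw YOLOv3-style offsets into a pixel-space box (center x, center y, w, h).

    t      -- raw outputs (tx, ty, tw, th) for one anchor in one grid cell
    cell   -- integer grid coordinates (cx, cy) of that cell
    anchor -- anchor (prior) width and height in pixels (pw, ph)
    stride -- pixels per grid cell at this output scale (e.g. 8, 16 or 32)
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = (sigmoid(tx) + cx) * stride  # center cannot leave its cell
    by = (sigmoid(ty) + cy) * stride
    bw = pw * math.exp(tw)            # size is a rescaled anchor
    bh = ph * math.exp(th)
    return bx, by, bw, bh


print(decode_yolov3_box((0.0, 0.0, 0.0, 0.0), (3, 5), (30, 40), 32))
# (112.0, 176.0, 30.0, 40.0): zero offsets give the cell center and the anchor size
```

Strides of 8, 16 and 32 pixels correspond to YOLOv3's three output scales, and the smallest anchors are assigned to the finest (stride 8) grid, which is the part of the head that handles small, distant pears.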

| Dimension | Faster R-CNN | Mask R-CNN |
|---|---|---|
| Core Task | Object detection (bounding box + category classification) | Object detection + instance segmentation (pixel-level mask output) |
| Network Structure | Classification branch, regression branch | Additional mask branch (FCN-based pixel-level segmentation) |
| ROI Processing | ROI Pooling (coordinate quantization introduces misalignment) | ROI Align (bilinear interpolation avoids quantization, improving segmentation precision) |
| Training Objective | Classification loss + regression loss | Multi-task learning: classification + regression + segmentation losses |
| Performance Characteristic | Faster inference | Slower inference, but supports pixel-level localization |
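The ROI Pooling versus ROI Align distinction in the table comes down to whether region coordinates are rounded to the feature-map grid. The simplified sketch below (pure Python, a single feature channel, hypothetical function names) rounds the region and max-pools for ROI Pooling, and averages bilinearly interpolated sample points without any rounding for ROI Align, in the spirit of the Mask R-CNN paper. The bin boundaries used for ROI Pooling are a simplification, and real implementations such as `torchvision.ops.roi_align` add batching, channels and a configurable sampling ratio; the ROI is assumed to lie inside the feature map.

```python
import math


def bilinear(fm, y, x):
    """Bilinearly interpolate a 2D feature map (list of lists) at real-valued (y, x)."""
    h, w = len(fm), len(fm[0])
    y = min(max(y, 0.0), h - 1.0)  # clamp to the map
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = fm[y0][x0] * (1 - dx) + fm[y0][x1] * dx
    bottom = fm[y1][x0] * (1 - dx) + fm[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(fm, roi, out_size=2, samples=2):
    """ROI Align: keep the real-valued ROI (y1, x1, y2, x2) in feature-map units,
    split it into out_size x out_size bins and average samples x samples
    bilinearly interpolated points per bin. No coordinate is rounded."""
    y1, x1, y2, x2 = roi
    bin_h, bin_w = (y2 - y1) / out_size, (x2 - x1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            acc = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    acc += bilinear(fm, y, x)
            row.append(acc / (samples * samples))
        out.append(row)
    return out


def roi_pool(fm, roi, out_size=2):
    """Simplified ROI Pooling: round the ROI to integer cells (the quantization
    step), split it into integer bins and take the maximum of each bin."""
    y1, x1, y2, x2 = (int(math.floor(v + 0.5)) for v in roi)
    out = []
    for i in range(out_size):
        ys = y1 + i * (y2 - y1) // out_size
        ye = max(y1 + (i + 1) * (y2 - y1) // out_size, ys + 1)  # at least one cell
        row = []
        for j in range(out_size):
            xs = x1 + j * (x2 - x1) // out_size
            xe = max(x1 + (j + 1) * (x2 - x1) // out_size, xs + 1)
            row.append(max(fm[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        out.append(row)
    return out


fm = [[float(x) for x in range(4)] for _ in range(4)]  # value equals the x coordinate
print(roi_align(fm, (0.4, 0.4, 2.4, 2.4), out_size=1, samples=1))
print(roi_pool(fm, (0.4, 0.4, 2.4, 2.4), out_size=1))  # [[1.0]]
```

With a feature map whose value equals the x coordinate, ROI Align samples the region (0.4, 0.4, 2.4, 2.4) at its true center (about 1.4), whereas ROI Pooling first snaps it to (0, 0, 2, 2). Multiplied by the backbone stride, that sub-cell shift becomes a pixel-level misalignment in the predicted mask.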

| Algorithm | Key Performance Metrics | Application Scenarios |
|---|---|---|
| Modified YOLOv3 [36] | mAP 89.54% | Complex illumination and occlusion |
| Modified YOLOv4 [37] | Recall 85.56%, mAP 90.18%, model size ↓44% | Similarly colored backgrounds, heavy occlusion and overlap |
| YOLOv5s-FP [38] | mAP 96.12% | High-density occlusion, small targets, dense overlap, illumination variations |
| YOLOv4-tiny + Deep SORT [39] | AP 94.09%, FPS ≥ 24, F1-score 87.85% | Orchard real-time counting |
| MobileNetv3-YOLOv7 [40] | Precision 94.36%, Recall 89.28% | Lightweight deployment |
| Optimized YOLOv8n [41] | GPU speed ↑34.0%, CPU speed ↑24.4%, F0.5 at 94.7%, mAP 88.3% | Resource-constrained edge devices |
| Lightweight YOLOv8-s [42] | Small target perception accuracy ↑ significantly | Long-distance small targets, cluttered backgrounds |
| Vmamba-SS3D-RPM-SFPN [32] | mAP@50 94.8%, dense scene precision ↑7.6% | Cluttered backgrounds, dense small target detection |
| Pruned SSD [43] | Precision 98.01%, Recall 85.03% | Multi-object recognition in complex environments |
| ROI Align–Faster R-CNN [44] | Recognition precision 95.16%, detection efficiency 0.2 s/item | Generic object detection |
| Mask R-CNN–ResNet [45] | Average segmentation precision 98.02% (95.28% under occlusion) | Mature pear segmentation (occlusion included) |
| Deformable Conv–Mask R-CNN [46] | mAP 91.3% | Small target feature preservation |
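The metrics in the table follow standard definitions, sketched below with hypothetical function names. The sketch assumes detections have already been matched to ground truth at a fixed IoU threshold (0.5 in the case of mAP@50) and uses all-point interpolation for average precision; mAP is then the mean of AP over classes. The cited studies may use different matching or interpolation rules, so their numbers are not strictly comparable. With β = 0.5, as in the F0.5 reported for the optimized YOLOv8n, the F-score weights precision more heavily than recall.

```python
def detection_metrics(tp, fp, fn, beta=1.0):
    """Precision, recall and F-beta from true positive, false positive and
    false negative counts (detections already matched to ground truth)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta * beta
    denom = b2 * precision + recall
    f_beta = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, f_beta


def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the precision-recall curve after
    making precision non-increasing. Points are ordered by descending
    detection confidence, so recall is non-decreasing."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    for i in range(len(p) - 2, -1, -1):  # precision envelope, right to left
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


print(detection_metrics(90, 10, 30))            # F1
print(detection_metrics(90, 10, 30, beta=0.5))  # F0.5 favors the higher precision
```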

| Method Category | Typical Algorithm | Localization Accuracy | Speed | Applicable Scenarios |
|---|---|---|---|---|
| Region-based Features | Centroid Method | Low | Fast | Simple scenes; circular pear fruits with uniform pixel distribution |
| Contour-based Features | Minimum Enclosing Circle | Medium | Medium | Simple scenes with regular pear fruits |
| Deep Learning | YOLOv8 | High | Slow | Complex shapes, multi-target scenes, occlusion scenarios |
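The two classical approaches in the table can be sketched as follows. The centroid is simply the mean coordinate of the segmented fruit pixels; the minimum enclosing circle is computed here with the randomized incremental (Welzl-style) algorithm, whereas OpenCV users would typically call `cv2.minEnclosingCircle` on the contour points. The function names are hypothetical, and the mask or contour is assumed to come from an earlier segmentation step.

```python
import math
import random


def mask_centroid(mask):
    """Centroid (x, y) of the nonzero pixels in a binary mask given as a 2D list."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("empty mask")
    n = len(pts)
    return (sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n)


def _circle_two(a, b):
    """Smallest circle through two points: centered on their midpoint."""
    cx, cy = (a[0] + b[0]) / 2, (a[1] + b[1]) / 2
    return (cx, cy, math.dist(a, (cx, cy)))


def _circle_three(a, b, c):
    """Circumcircle of three points, or None if they are (nearly) collinear."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    d = 2 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy, math.dist(a, (ux, uy)))


def _inside(circle, p, eps=1e-9):
    return circle is not None and math.dist(circle[:2], p) <= circle[2] + eps


def min_enclosing_circle(points, seed=0):
    """Minimum enclosing circle (cx, cy, r) via the randomized incremental
    (Welzl-style) algorithm; expected linear time after shuffling."""
    pts = [tuple(p) for p in points]
    random.Random(seed).shuffle(pts)
    c = None
    for i, p in enumerate(pts):
        if _inside(c, p):
            continue
        c = (p[0], p[1], 0.0)  # p must lie on the boundary
        for j in range(i):
            q = pts[j]
            if _inside(c, q):
                continue
            c = _circle_two(p, q)  # p and q both on the boundary
            for k in range(j):
                s = pts[k]
                if _inside(c, s):
                    continue
                c3 = _circle_three(p, q, s)
                # Collinear fallback: widest two-point circle that includes s.
                c = c3 if c3 is not None else max(
                    _circle_two(p, s), _circle_two(q, s), key=lambda t: t[2])
    return c


mask = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
print(mask_centroid(mask))  # (1.5, 0.5)
print(min_enclosing_circle([(0, 0), (2, 0), (0, 2), (2, 2), (1, 1)]))
```

For a partially occluded pear, the centroid of the visible pixels is pulled toward the visible part, which is consistent with the low localization accuracy the table assigns to this method in complex scenes.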

| Technology Type | Operation Mode | Principle | Core Advantage | Main Limitations | Representative Equipment |
|---|---|---|---|---|---|
| Binocular Camera | Passive Imaging | Stereo Vision (Disparity Calculation) | | | |
| Multi-Camera Array | Passive Imaging | Multi-View Stereo Matching | | | |
| ToF Depth Camera | Active Imaging | Time-of-Flight (Light Pulses) | | | |
| Structured-Light Camera | Active Imaging | Optical Encoding (Speckle/Stripes) | | | |
| LiDAR | Active Imaging | Laser Scanning (Pulse/Phase Modulation) | | | |
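The principles listed in the table reduce to short formulas. The sketch below (hypothetical function names, assumed example values) computes depth from stereo disparity for a rectified binocular pair, Z = fB/d; from a pulsed time-of-flight round trip, d = ct/2; and from a continuous-wave phase shift, d = cφ/(4πf). It also back-projects a pixel to 3D with the pinhole model and estimates how a one-pixel disparity error grows with depth. Lens distortion and calibration are ignored.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Rectified binocular stereo: Z = f * B / d (f and d in pixels, B in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(z_m, focal_px, baseline_m, disparity_err_px=1.0):
    """First-order depth uncertainty from a disparity error: dZ = Z^2 / (f * B) * dd."""
    return z_m * z_m / (focal_px * baseline_m) * disparity_err_px


def pulsed_tof_depth(round_trip_s):
    """Pulsed time-of-flight: light travels out and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def phase_tof_depth(phase_rad, mod_freq_hz):
    """Continuous-wave (phase) ToF: d = c * phi / (4 * pi * f_mod).
    Unambiguous only up to c / (2 * f_mod)."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def backproject(u, v, z_m, fx, fy, cx, cy):
    """Pinhole model: pixel (u, v) at depth z -> (X, Y, Z) in the camera frame."""
    return ((u - cx) * z_m / fx, (v - cy) * z_m / fy, z_m)


# Assumed parameters: f = 700 px, B = 0.06 m, measured disparity 35 px.
z = stereo_depth(35, 700, 0.06)
print(round(z, 3), round(stereo_depth_error(z, 700, 0.06), 4))  # 1.2 0.0343
```

For these assumed parameters, a pear at 1.2 m yields a 35 px disparity, and a one-pixel matching error already shifts the estimated depth by about 34 mm. Because this error grows with Z², binocular accuracy falls off quickly with distance unless the baseline or focal length is increased or sub-pixel matching is used.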

| Feature | Grasping and Twisting Type | Grasping and Shearing Type | Vacuum Adsorption Type |
|---|---|---|---|
| Operating Principle | Grasps the pear, then twists or pulls the stem to detach it | Grasps the pear, then rotates blades to sever the stem | Holds the pear by vacuum suction, then separates the stem by shearing or twisting |
| Primary Advantages | Accommodates varying fruit sizes; high positional tolerance | Minimizes flesh damage; preserves the stem intact | High harvesting speed; adapts to arbitrary fruit orientations |
| Primary Drawbacks | Waxy cuticle causes grip slippage; twisting tends to tear tissue next to the stem and damage the flesh | High damage risk for short-stemmed pears; requires high-precision stem recognition | Impact during placement causes damage; small fruits are prone to detachment failure (pressure loss through gaps); suction of leaves and small debris can cause blockages |
| Fruit Damage Risk | High (stem tearing + surface compression damage) | Low | Medium (impact injury; post-separation deterioration at torn stems) |
| Environmental Adaptation | Suitable in sparse foliage; fails in dense clusters | Stable under low light; reduced accuracy in rain or fog | Tolerant of fruit orientation; performance degrades in dusty or humid conditions (effect on the vacuum system) |
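The vacuum adsorption failure mode noted in the table, insufficient pressure when gaps form, follows from the ideal suction-cup relation F = ΔP · A. The sketch below checks whether a cup can hold a fruit's weight with a safety factor. The cup diameter, pressure differentials, fruit mass and safety factor are illustrative assumptions, the function names are hypothetical, and the sketch ignores the additional force needed to detach the stem.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def suction_force(delta_p_pa, cup_diameter_m):
    """Ideal suction-cup holding force F = dP * A, with A = pi * d^2 / 4 (newtons)."""
    return delta_p_pa * math.pi * cup_diameter_m ** 2 / 4.0


def can_hold(mass_kg, delta_p_pa, cup_diameter_m, safety_factor=2.0):
    """True if the ideal suction force exceeds the fruit weight times a safety factor.
    Stem detachment force, cup deformation and dynamic loads are ignored."""
    return suction_force(delta_p_pa, cup_diameter_m) >= safety_factor * mass_kg * G


# Illustrative values only: 40 mm cup, 0.3 kg pear.
print(round(suction_force(40_000, 0.04), 1))  # 50.3 (N, sealed cup at 40 kPa)
print(can_hold(0.3, 40_000, 0.04), can_hold(0.3, 3_000, 0.04))  # True False
```

With a 40 mm cup, a 40 kPa differential gives about 50 N, but if leakage through a gap on a small or irregular fruit drops the differential to 3 kPa, the ideal force falls to about 3.8 N, below twice the weight of a 0.3 kg pear.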

| Technology Module | Solution Type | Performance Parameters | Advantages | Limitations |
|---|---|---|---|---|
| Recognition | YOLOv5s-FP [38] | mAP 96.12%; High-density occlusion scenes | Multi-scale perception; Robust to lighting | Computational latency (>100 ms) |
| | Mask R-CNN-ResNet [45] | Segmentation accuracy 95.28% (occluded scenes) | Instance segmentation; Handles overlap well | Low frame rate (~5 FPS) |
| | Optimized YOLOv8n [41] | GPU speed ↑34.0%; CPU speed ↑24.4%; mAP 88.3% | Edge device compatibility | Degraded small target detection |
| Localization | Binocular Vision [63] | Error < 20 mm (400–1500 mm range) | Low cost; High resolution | Lighting-sensitive; Limited FOV (<180°) |
| | LiDAR–Camera Fusion [66] | Error 0.245–0.275 cm (0.5–1.8 m range) | Millimeter accuracy; Robust to lighting | High cost (>USD 2000) |
| | ToF Depth Camera [65] | Stable under sunlight interference | Suitable for dynamic environments | Short effective range (<3 m) |
| End Effector | Pneumatic Shearing [93] | Cycle time 2.4 s/fruit; Damage rate 0% | Adapts to irregular stems | High risk for short-stem varieties |
| | Vacuum Adsorption [81] | Harvesting speed 5 fruits/min | Omnidirectional adaptability | Adsorption failure due to waxy layer (gap > 2 mm) |
| | Grasping–Twisting [90] | Success rate 96% (multi-fruit clusters) | High dimensional tolerance | Flesh damage risk (>15 N grip force) |
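Cycle times and throughputs in the table can be put on a common footing with simple arithmetic. The sketch below converts a per-fruit cycle time into fruits per minute and estimates working hours for a block of fruit. The retry model (expected attempts per fruit = 1 / success rate), the number of arms and the fruit count are illustrative assumptions, the function names are hypothetical, and figures measured in different studies and orchards are not strictly comparable.

```python
def fruits_per_minute(cycle_time_s):
    """Throughput of one end effector from its per-fruit cycle time (seconds)."""
    if cycle_time_s <= 0:
        raise ValueError("cycle time must be positive")
    return 60.0 / cycle_time_s


def harvest_hours(n_fruits, cycle_time_s, n_arms=1, success_rate=1.0):
    """Working hours to pick n_fruits, assuming arms work in parallel and each
    failed pick is retried, so expected attempts per fruit = 1 / success_rate."""
    attempts = n_fruits / success_rate
    return attempts * cycle_time_s / n_arms / 3600.0


print(round(fruits_per_minute(2.4), 1))  # 25.0
print(round(harvest_hours(3600, 2.4, n_arms=2, success_rate=0.96), 2))
```

A 2.4 s cycle corresponds to 25 fruits/min, five times the 5 fruits/min listed for vacuum adsorption, provided both figures cover the same work cycle.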

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, H.; Wang, B.; Su, L.; Yu, Z.; Liu, X.; Meng, X.; Zhao, K.; He, X. Current Research Status and Development Trends of Key Technologies for Pear Harvesting Robots. Agronomy 2025, 15, 2163. https://doi.org/10.3390/agronomy15092163
