ESG-YOLO: An Efficient Object Detection Algorithm for Transplant Quality Assessment of Field-Grown Tomato Seedlings Based on YOLOv8n

Abstract

1. Introduction

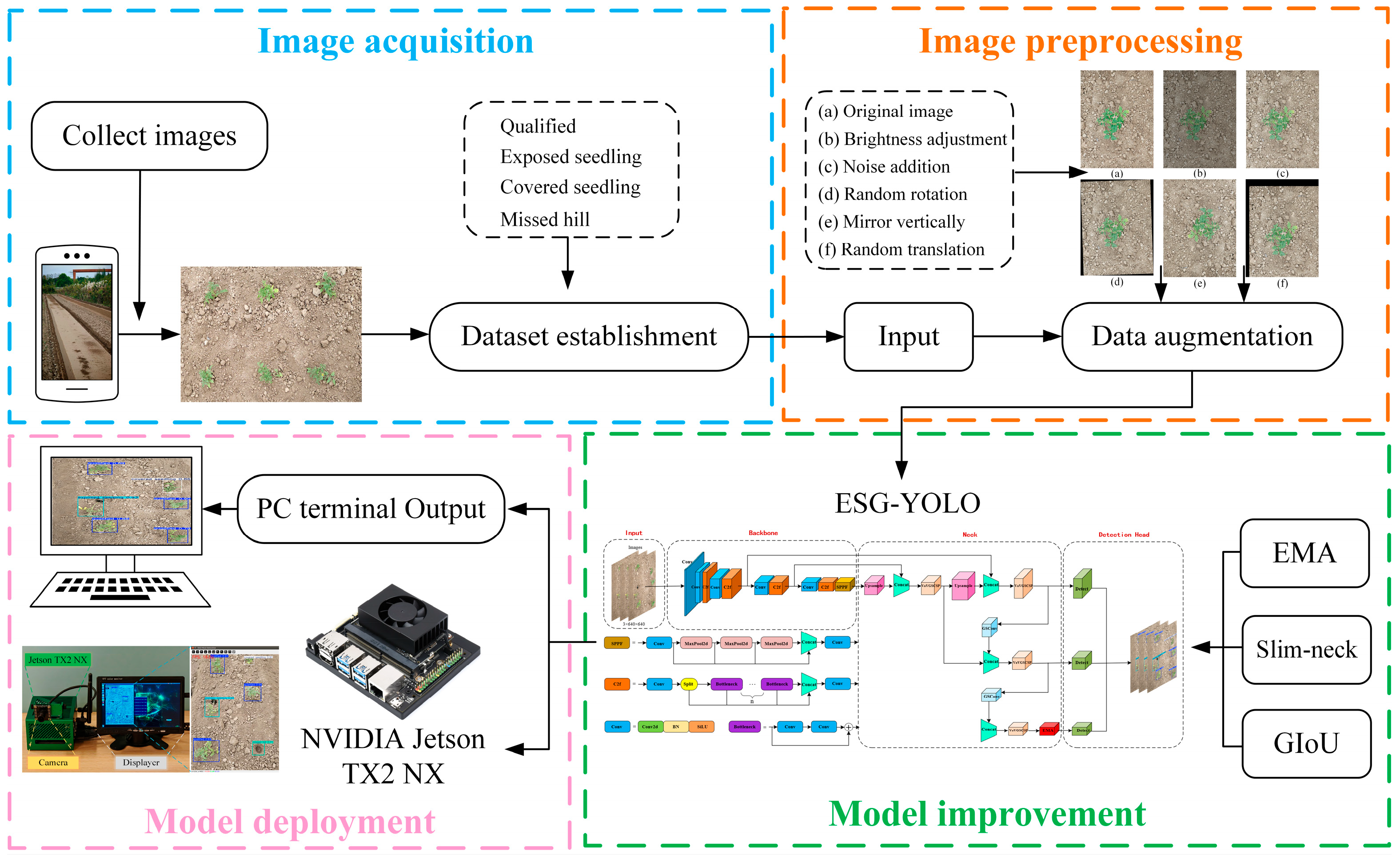

2. Materials and Methods

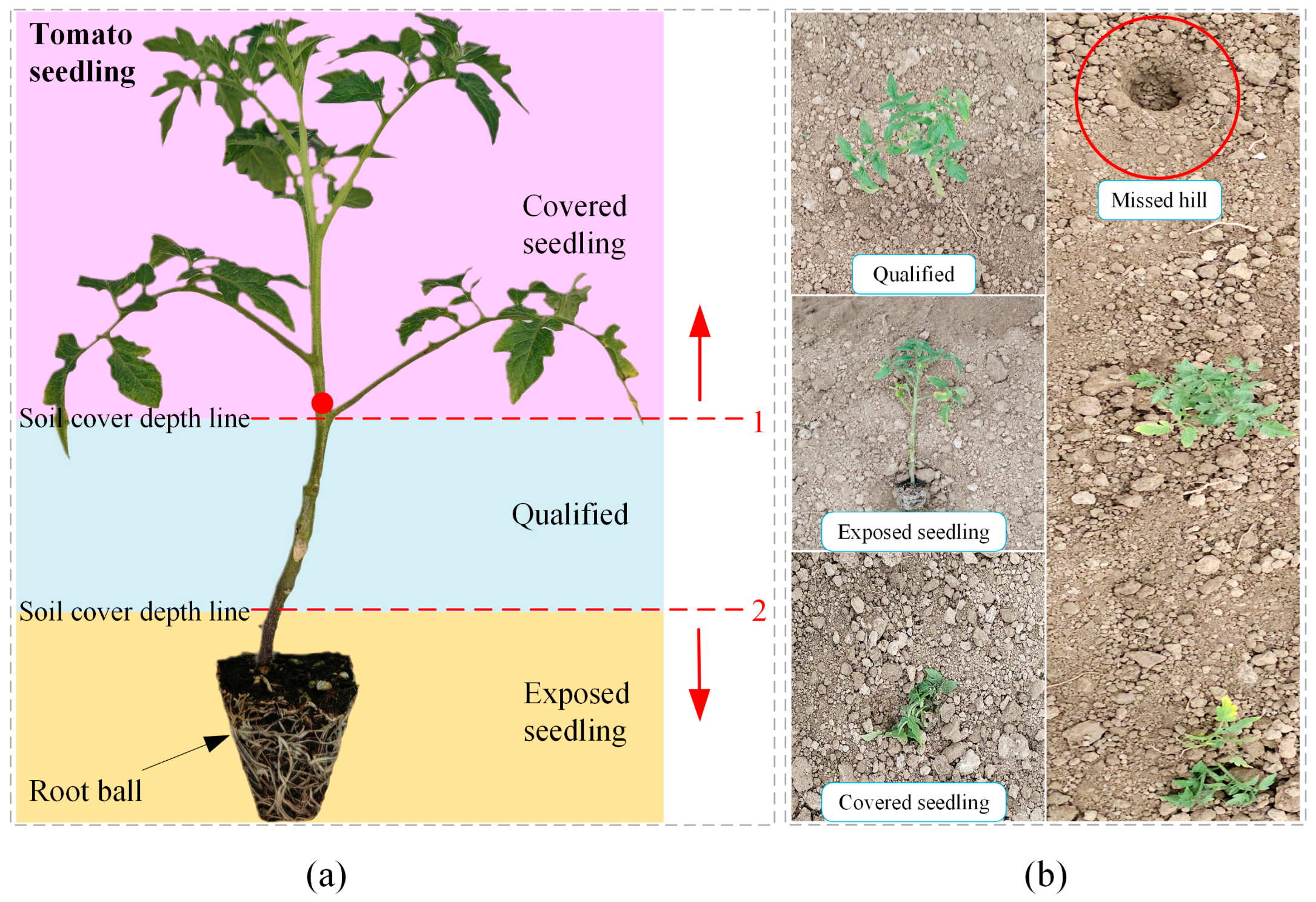

2.1. Grading of Tomato Seedling Conditions Post-Transplantation

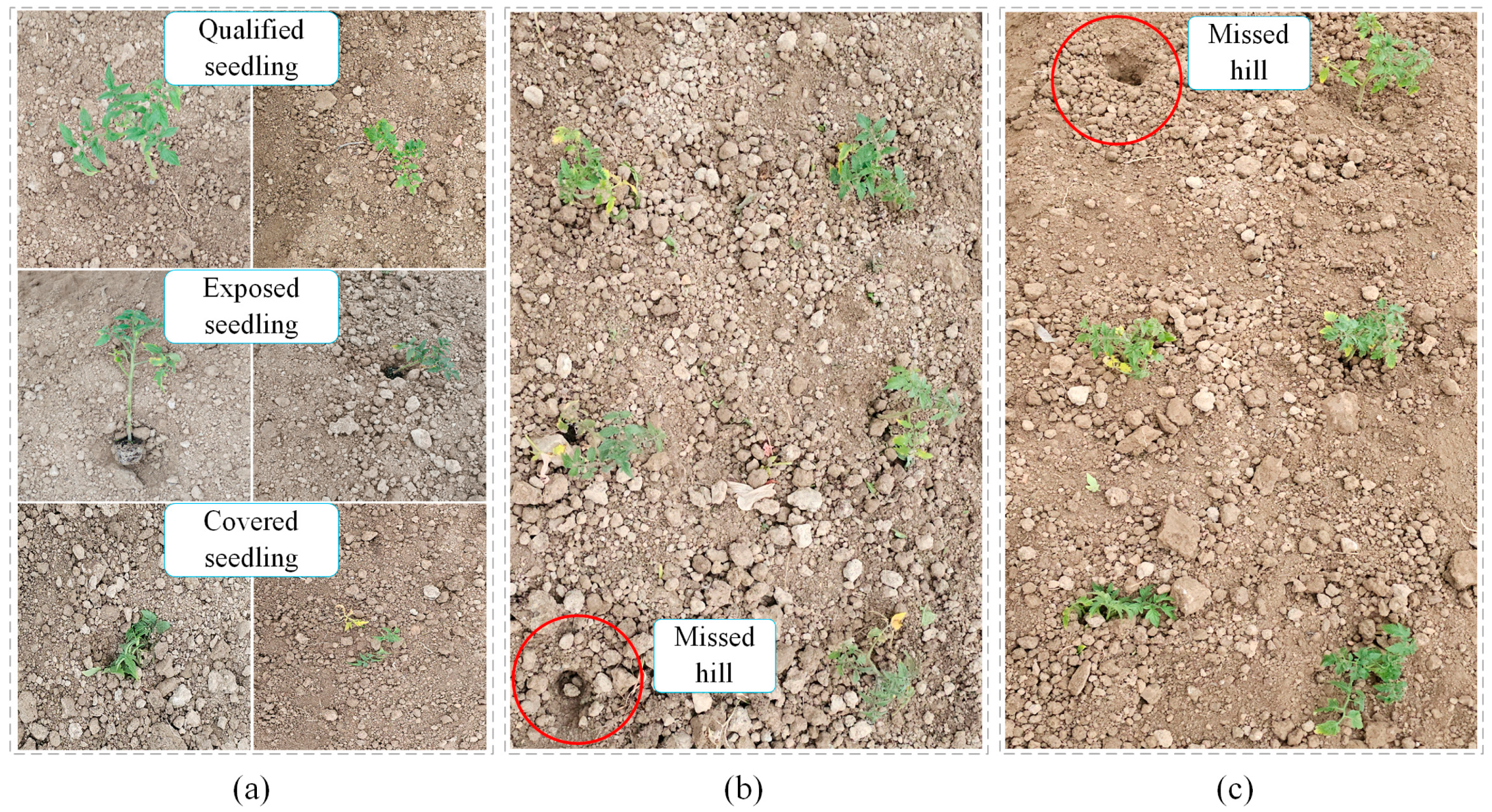

2.2. Image Acquisition and Dataset Construction

2.2.1. Image Acquisition

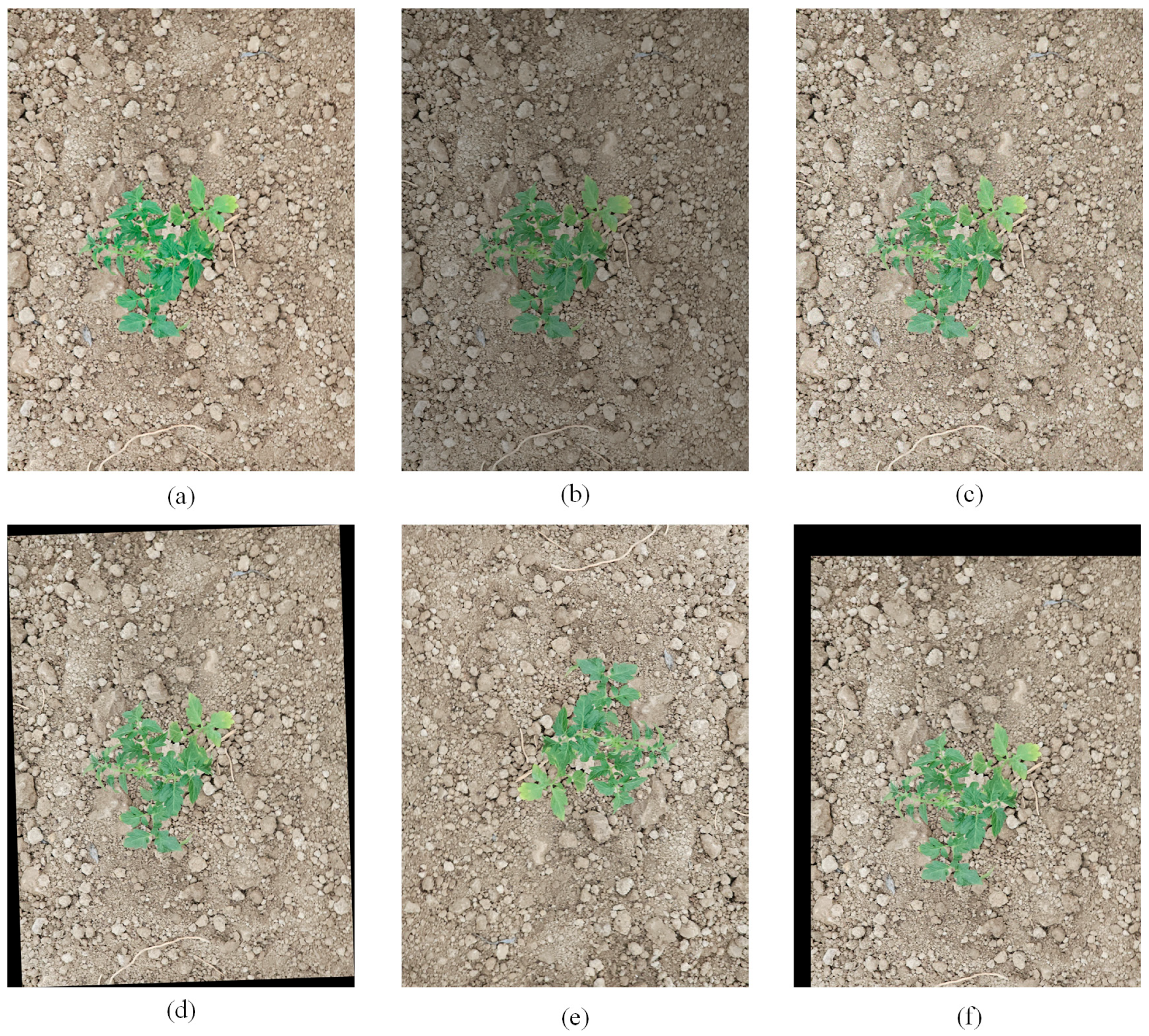

2.2.2. Data Preprocessing and Dataset Construction

2.3. Strategies for Improving the YOLOv8 Model

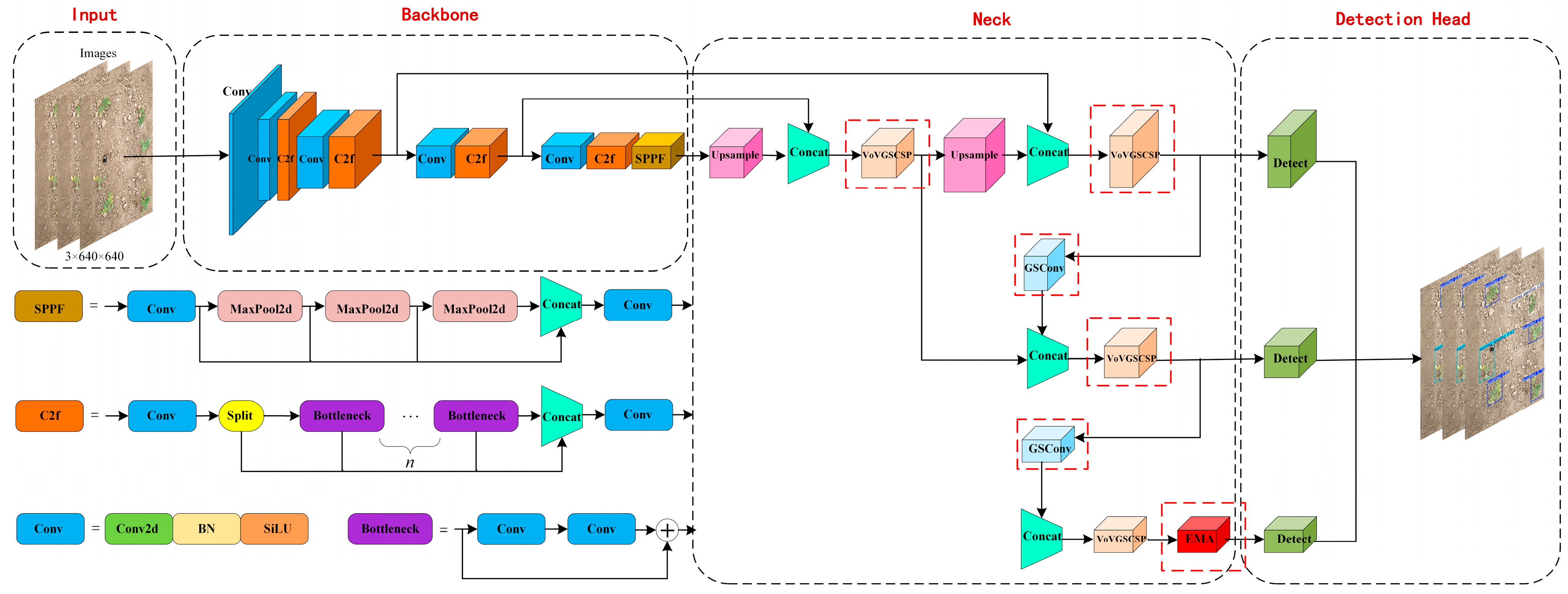

2.3.1. ESG-YOLO Object Detection Algorithm

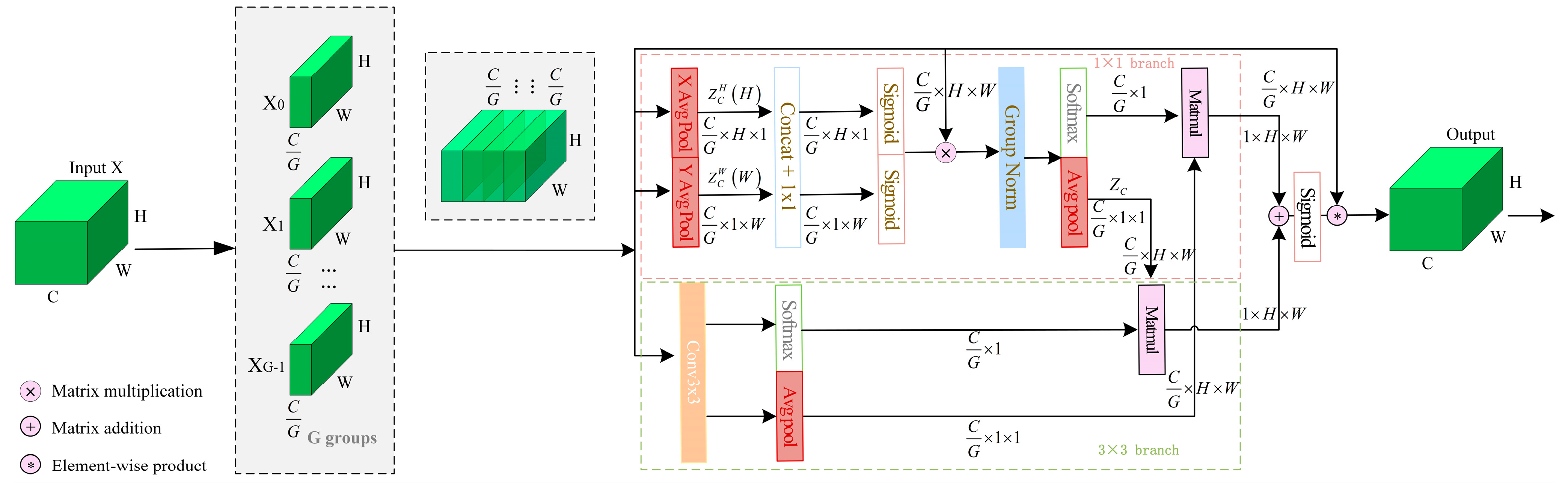

2.3.2. EMA Attention Module

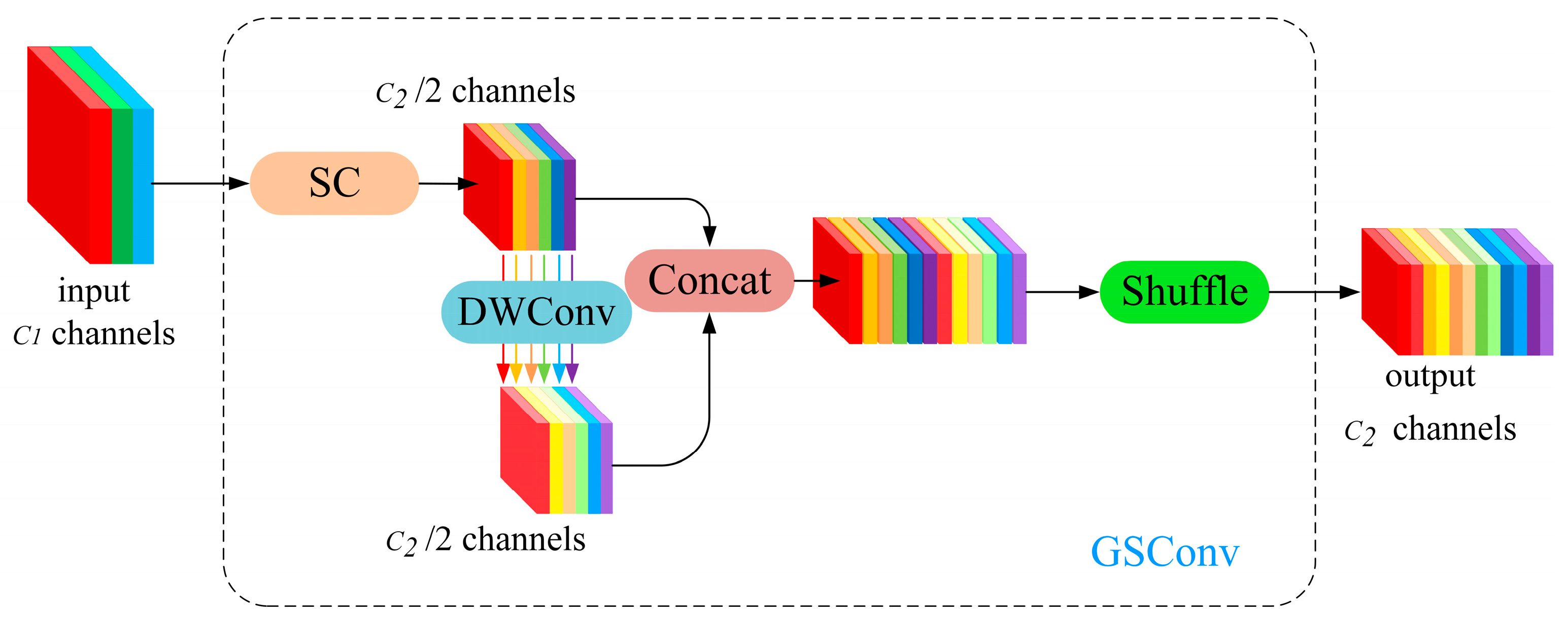

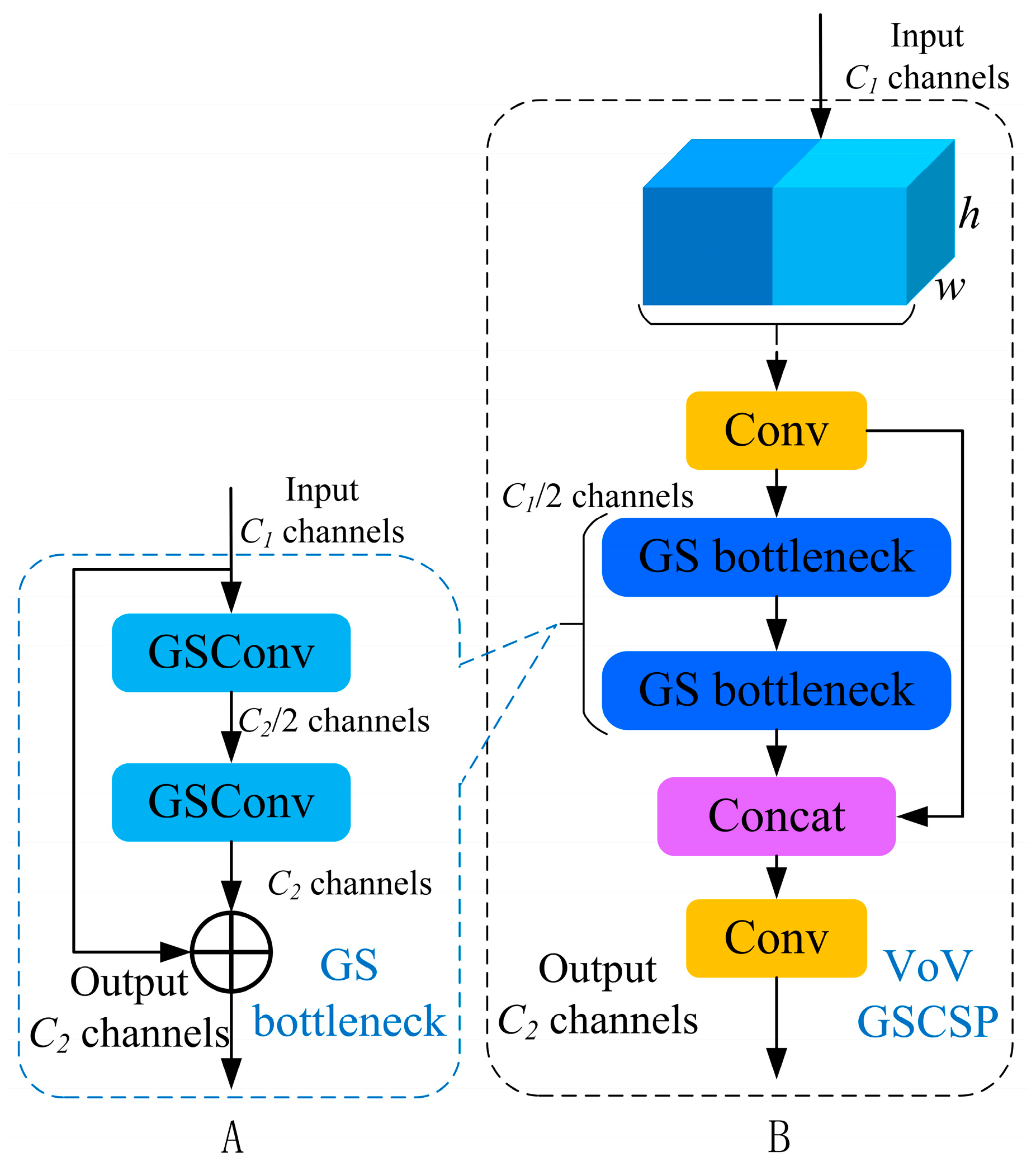

2.3.3. GSConv-Based Slim-Neck

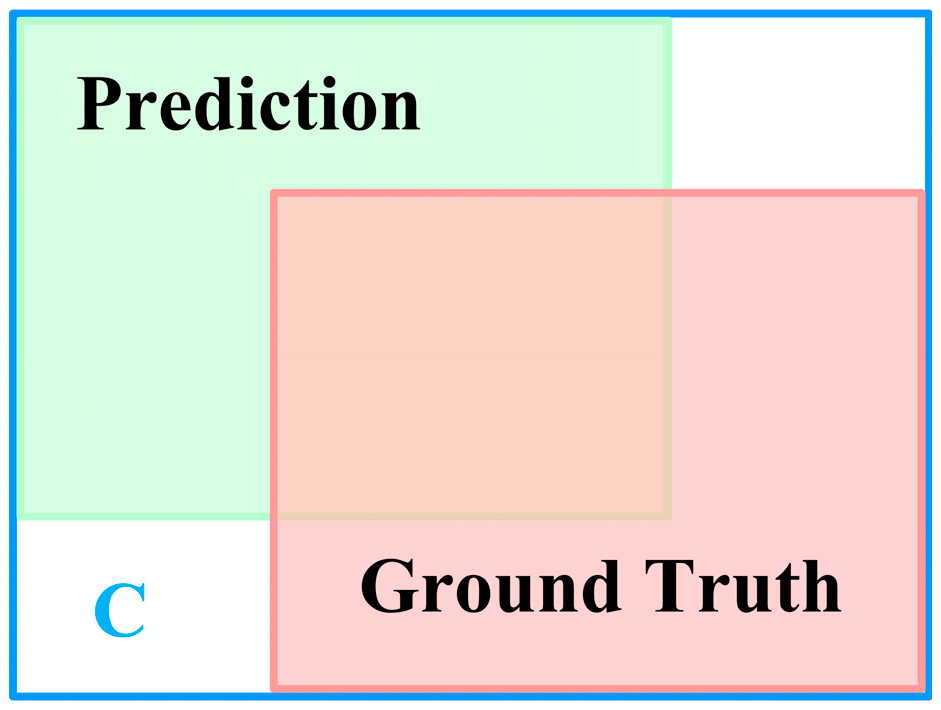

2.3.4. Improvement of IoU-Loss

2.4. Experimental Environment and Evaluation Metrics

2.4.1. Experimental Environment

- (1) AMP: Specifies whether Automatic Mixed Precision (AMP) is used for training. AMP is a deep learning training technique that uses half-precision floating-point numbers to accelerate training and reduce memory consumption.

- (2) Optimizer: The optimizer controls how the model weights are updated. It minimizes the loss function through gradient-based updates to improve model performance.
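The training settings reported in this paper can be collected into a single configuration. The following is a minimal sketch, assuming the Ultralytics Python API is used for training. The dataset file name `tomato_seedlings.yaml` is hypothetical, and the sketch loads the stock `yolov8n.pt` weights rather than the modified ESG-YOLO architecture, whose model definition is not given here.

```python
# Hyperparameters transcribed from the paper's training-parameter table.
# Key names follow Ultralytics training arguments (e.g. "batch", "cos_lr").
TRAIN_ARGS = {
    "epochs": 100,
    "batch": 32,
    "imgsz": 640,
    "amp": True,            # Automatic Mixed Precision
    "workers": 8,
    "optimizer": "Adam",
    "seed": 0,
    "cos_lr": False,
    "lr0": 0.001,           # initial learning rate
    "lrf": 0.01,            # final learning rate as a fraction of lr0
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "mosaic": 1.0,
}


def train(data_yaml: str = "tomato_seedlings.yaml", weights: str = "yolov8n.pt"):
    """Start a training run; requires the `ultralytics` package.

    `data_yaml` is a hypothetical dataset description file.
    """
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

Keeping the settings in one dictionary makes it straightforward to reuse the same values across the comparative and ablation runs.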

2.4.2. Evaluation Metrics

3. Results

3.1. Comparative Experiments on Attention Modules

3.2. Comparative Experiments on Loss Functions

3.3. Ablation Experiment

3.3.1. Slim-Neck Lightweight Network (Group 6)

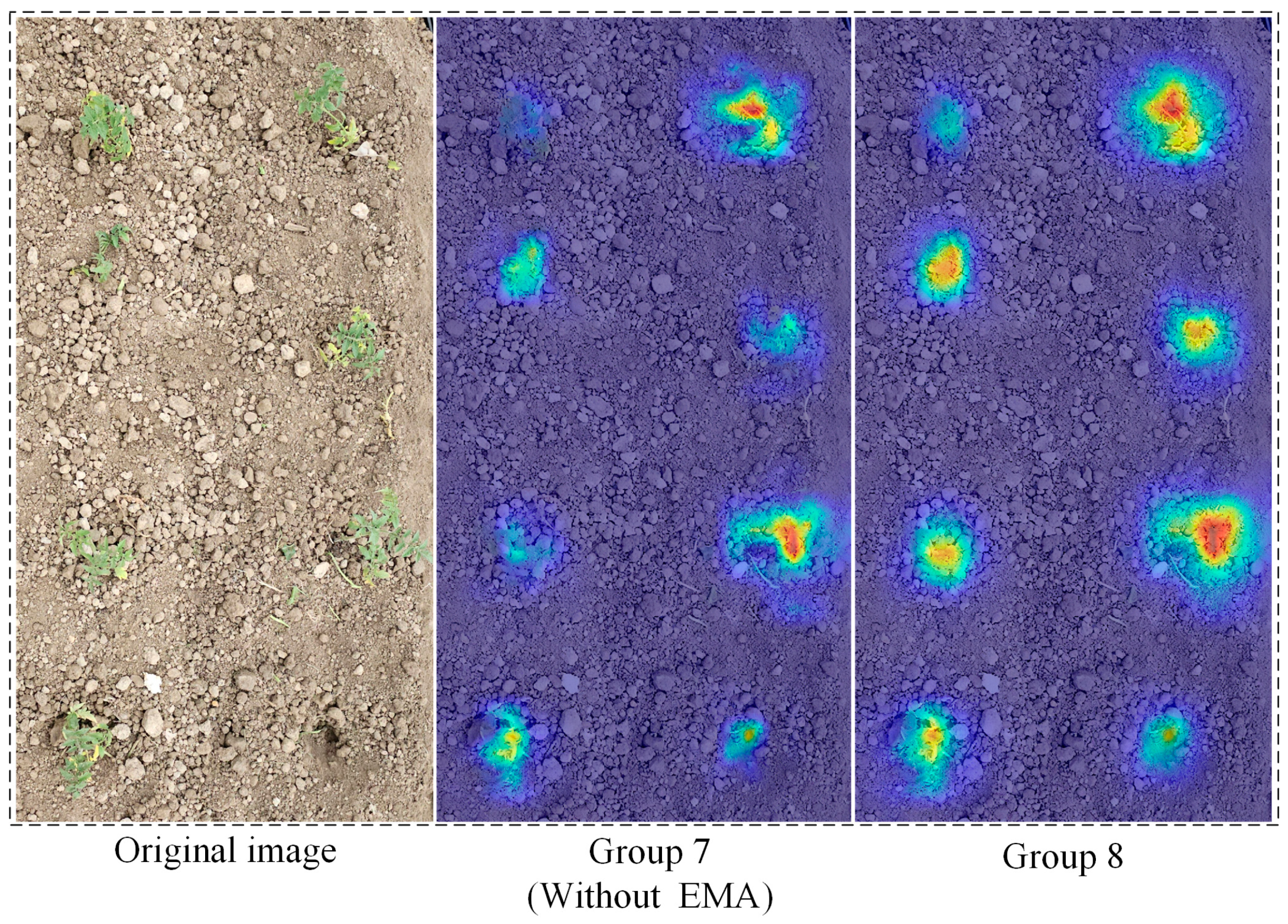

3.3.2. EMA Attention Module (Group 7)

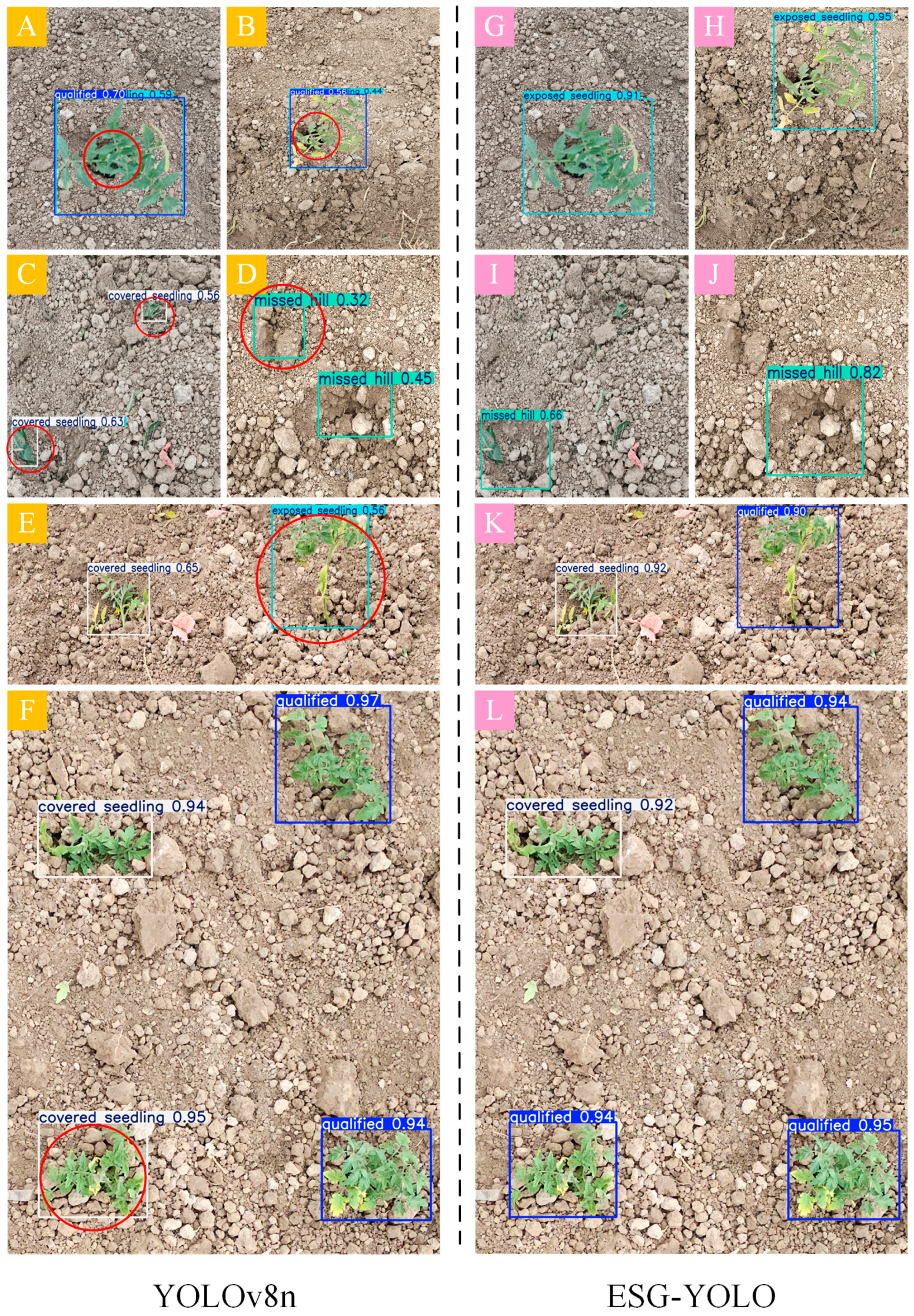

3.4. Comparative Experiments Among ESG-YOLO and Other Lightweight Models

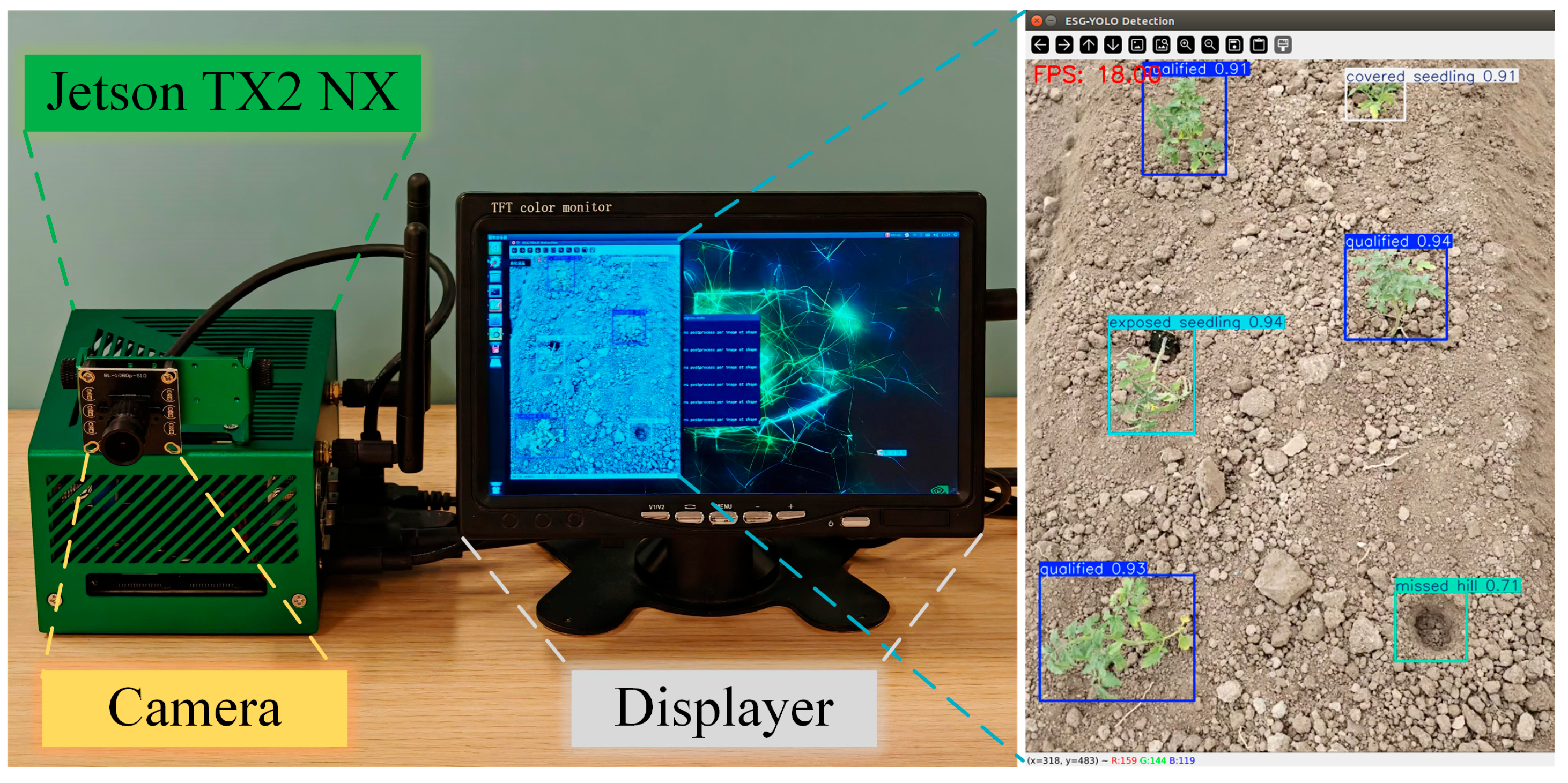

3.5. Deployment of ESG-YOLO on NVIDIA Jetson TX2 NX

3.5.1. Introduction to NVIDIA Jetson TX2 NX

3.5.2. Deploying the ESG-YOLO Model

4. Conclusions

- (1) To address the small size of tomato seedlings and the visual similarity between root balls and soil, an EMA attention module was embedded into the model's neck network. This allows the model to focus more precisely on the critical regions of seedlings and provides finer pixel-level attention for deep feature learning.

- (2) To obtain a lightweight, deployable model, the YOLOv8 neck was rebuilt with a Slim-neck architecture. This modification reduces computational complexity while maintaining high recognition accuracy for transplanting quality assessment.

- (3) The original CIoU loss function was replaced with the GIoU loss function, which improved localization accuracy for small targets and accelerated model convergence.

- (4) In the ablation experiments, ESG-YOLO reached an mAP@0.5 of 97.4%, which is 2.2 percentage points higher than the baseline YOLOv8n model. Recall improved by 4.2 percentage points, while the number of parameters decreased by 7%, the computational load by 10%, and the model size by 8%.

- (5) Field validation on the NVIDIA Jetson TX2 NX embedded platform achieved a stable detection rate above 18 FPS, which meets the real-time requirements of transplanting quality assessment.
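The gains and reductions summarized in conclusion (4) can be checked against the ablation results. The sketch below uses the baseline (YOLOv8n) and ESG-YOLO values reported in the ablation table. The function names are illustrative only.

```python
# Values taken from the ablation table: baseline YOLOv8n vs. ESG-YOLO.
baseline = {"mAP@0.5": 95.2, "recall": 92.3, "params_M": 3.01, "flops_G": 8.2, "size_MB": 6.2}
esg_yolo = {"mAP@0.5": 97.4, "recall": 96.5, "params_M": 2.81, "flops_G": 7.4, "size_MB": 5.7}


def point_gain(key: str) -> float:
    """Absolute gain in percentage points for a metric already given in %."""
    return round(esg_yolo[key] - baseline[key], 1)


def relative_reduction(key: str) -> float:
    """Relative reduction in % with respect to the baseline value."""
    return round(100.0 * (baseline[key] - esg_yolo[key]) / baseline[key], 1)


print(point_gain("mAP@0.5"))           # 2.2
print(point_gain("recall"))            # 4.2
print(relative_reduction("params_M"))  # 6.6
print(relative_reduction("flops_G"))   # 9.8
print(relative_reduction("size_MB"))   # 8.1
```

The accuracy figures are differences in percentage points, whereas the parameter, FLOPs, and size figures are relative reductions, which round to the 7%, 10%, and 8% quoted above.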

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Classification of tomato seedlings after planting, by dataset split:

| Dataset | Qualified Seedling | Exposed Seedling | Covered Seedling | Missed Hill | Total |
|---|---|---|---|---|---|
| Training set | 1860 | 1674 | 1716 | 1848 | 7098 |
| Validation set | 86 | 83 | 84 | 85 | 338 |
| Detection set | 45 | 39 | 42 | 44 | 170 |
| Total | 1991 | 1796 | 1842 | 1977 | 7606 |

| Hardware | Configuration | Tool | Version |
|---|---|---|---|
| System | Windows 11 | Python | 3.10.16 |
| CPU | Intel i5-12600KF | PyTorch | 2.5.1+cu124 |
| GPU | RTX 4070 SUPER (12 GB) | Torchvision | 0.20.1+cu124 |
| RAM | 32 GB | Torchaudio | 2.5.1+cu124 |

| Training Parameter | Value |
|---|---|
| weight | yolov8n.pt |
| batch-size | 32 |
| epochs | 100 |
| amp | True |
| workers | 8 |
| imgsz | 640 × 640 |
| optimizer | Adam |
| seed | 0 |
| cos-lr | False |
| lr0 | 0.001 |
| lrf | 0.01 |
| momentum | 0.937 |
| weight_decay | 0.0005 |
| mosaic | 1.0 |

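| Evaluation Metric | Full Name | Computational Formula |
|---|---|---|
| P | Precision rate | $P = \frac{TP}{TP + FP}$ |
| R | Recall rate | $R = \frac{TP}{TP + FN}$ |
| AP | Average Precision | $AP = \int_0^1 P(R)\,dR$ |

Here TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively (standard definitions).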
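The accuracy metrics in this table can be illustrated with a short sketch. It assumes the standard definitions of precision and recall and approximates AP as the area under the precision-recall curve using all-point interpolation. This is one common convention and not necessarily the exact evaluation code used by the authors.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls, precisions) -> float:
    """Area under the precision-recall curve (all-point interpolation).

    `recalls` must be sorted in increasing order, with `precisions` aligned.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Build the precision envelope: non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangular areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, `precision(90, 10)` gives 0.9 and `recall(90, 30)` gives 0.75. For this study, the mAP would be the mean of the AP values of the four seedling classes.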

| Evaluation Metric | Full Name | Computational Formula |
|---|---|---|
| Parameters (Conv) | Parameters in convolutional layers | |
| Parameters (FC) | Parameters in fully connected layers | |
| FLOPs (Conv) | Floating-point operations for convolution | |
| FLOPs (Pool) | Floating-point operations for pooling | |
| FLOPs (FC) | Floating-point operations for fully connected layers | |
| Size (MB) | Model storage size | |
| FPS | Frames processed per second | |
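The convolutional complexity metrics listed here can be sketched as follows. The sketch rests on several assumptions that are not stated in the source: one multiply-accumulate is counted as two FLOPs, the convolution has no bias term, and a following BatchNorm layer contributes a learnable scale and shift per output channel. The output feature-map sizes used in the example (40 × 40 and 20 × 20 for a 640 × 640 input) are also assumptions.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = False,
                batch_norm: bool = True, groups: int = 1) -> int:
    """Learnable parameters of a k x k convolution, optionally with BatchNorm."""
    weights = k * k * (c_in // groups) * c_out
    extra = (c_out if bias else 0) + (2 * c_out if batch_norm else 0)
    return weights + extra


def conv_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
               groups: int = 1) -> int:
    """FLOPs of a k x k convolution, counting one multiply-accumulate as 2 FLOPs."""
    macs = k * k * (c_in // groups) * c_out * h_out * w_out
    return 2 * macs


# Two 3 x 3 convolutions with assumed channel widths and output sizes.
print(conv_params(64, 64, 3))                         # 36992
print(conv_params(128, 128, 3))                       # 147712
print(round(conv_flops(64, 64, 3, 40, 40) / 1e9, 2))  # 0.12
```

Under these assumptions, the results coincide with the two `Conv` rows of the neck-network comparison table (36,992 and 147,712 parameters, 0.12 GFLOPs each), which suggests that a similar counting convention was used there.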

| Group | Precision (%) | Recall (%) | mAP@0.5 (%) | Parameters (M) | FLOPs (G) | Size (MB) | FPS (BS = 1) |
|---|---|---|---|---|---|---|---|
| X + EMA | 90.0 | 96.5 | 97.4 | 2.81 | 7.4 | 5.7 | 86 |
| X + SE | 88.4 | 95.1 | 97.2 | 2.81 | 7.4 | 5.7 | 87 |
| X + CBAM | 89.8 | 95.3 | 96.8 | 2.82 | 7.4 | 5.8 | 86 |
| X + CA | 87.7 | 95.8 | 97.2 | 2.94 | 7.5 | 5.9 | 85 |
| X + ECA | 87.9 | 94.9 | 97.1 | 2.80 | 7.4 | 5.6 | 88 |
| X + SimAM | 88.3 | 94.3 | 96.6 | 2.80 | 7.4 | 5.6 | 88 |

| Group | Precision (%) | Recall (%) | mAP@0.5 (%) | FPS (BS = 1) |
|---|---|---|---|---|
| CIoU | 90.0 | 88.8 | 95.1 | 81 |
| DIoU | 90.2 | 92.5 | 96.4 | 81 |
| EIoU | 89.2 | 93.0 | 96.5 | 63 |
| SIoU | 92.1 | 91.8 | 95.9 | 84 |
| GIoU | 90.0 | 96.5 | 97.4 | 86 |
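For reference, the GIoU term compared in this table can be sketched in plain Python. This follows the standard GIoU definition (IoU minus the fraction of the smallest enclosing box not covered by the union). The function names and the `(x1, y1, x2, y2)` box format are assumptions for illustration, not the authors' implementation.

```python
def giou(box_a, box_b) -> float:
    """Generalized IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero when the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union area.
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    iou = inter / union

    # Area of the smallest box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    return iou - (enclose - union) / enclose


def giou_loss(box_a, box_b) -> float:
    """Loss in [0, 2]: 0 for identical boxes, larger for distant boxes."""
    return 1.0 - giou(box_a, box_b)
```

Unlike plain IoU, the enclosing-box term keeps the loss informative when the predicted and ground-truth boxes do not overlap: for `(0, 0, 1, 1)` and `(2, 0, 3, 1)` the IoU is 0, while the GIoU is -1/3.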

| Group | EMA | Slim-neck | GIoU | Precision (%) | Recall (%) | mAP@0.5 (%) | Parameters (M) | FLOPs (G) | Size (MB) | FPS (BS = 1) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | × | × | × | 90.6 | 92.3 | 95.2 | 3.01 | 8.2 | 6.2 | 115 |
| 2 | √ | × | × | 92.3 | 91.6 | 95.9 | 3.02 | 8.3 | 6.3 | 106 |
| 3 | × | √ | × | 89.3 | 93.2 | 95.9 | 2.80 | 7.4 | 5.6 | 82 |
| 4 | × | × | √ | 88.9 | 90.0 | 96.4 | 3.01 | 8.2 | 6.2 | 118 |
| 5 | √ | √ | × | 90.0 | 88.8 | 95.1 | 2.81 | 7.4 | 5.7 | 81 |
| 6 | √ | × | √ | 92.8 | 90.7 | 97.0 | 3.02 | 8.3 | 6.3 | 110 |
| 7 | × | √ | √ | 88.1 | 91.5 | 94.8 | 2.80 | 7.4 | 5.6 | 84 |
| 8 | √ | √ | √ | 90.0 | 96.5 | 97.4 | 2.81 | 7.4 | 5.7 | 86 |

| Group 6 Neck Layer | Group 6 FLOPs (G) | Group 6 Parameters | Group 8 Neck Layer | Group 8 FLOPs (G) | Group 8 Parameters |
|---|---|---|---|---|---|
| Upsample | 0.00 | 0 | Upsample | 0.00 | 0 |
| Concat | 0.00 | 0 | Concat | 0.00 | 0 |
| C2f | 0.48 | 148,224 | VoVGSCSP | 0.30 | 129,600 |
| Upsample | 0.00 | 0 | Upsample | 0.00 | 0 |
| Concat | 0.00 | 0 | Concat | 0.00 | 0 |
| C2f | 0.48 | 37,248 | VoVGSCSP | 0.31 | 33,056 |
| Conv | 0.12 | 36,992 | GSConv | 0.06 | 19,360 |
| Concat | 0.00 | 0 | Concat | 0.00 | 0 |
| C2f | 0.40 | 123,648 | VoVGSCSP | 0.22 | 105,024 |
| Conv | 0.12 | 147,712 | GSConv | 0.06 | 75,584 |
| Concat | 0.00 | 0 | Concat | 0.00 | 0 |
| C2f | 0.40 | 493,056 | VoVGSCSP | 0.22 | 414,848 |
| EMA | 0.10 | 10,368 | EMA | 0.10 | 10,368 |
| Total | 2.10 | 997,248 | Total | 1.27 | 787,840 |

| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | Parameters (M) | FLOPs (G) | Size (MB) | FPS (BS = 1) |
|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 81.9 | 85.2 | 88.1 | 8.70 | 12.9 | 17.4 | 69 |
| YOLOv5n | 87.4 | 88.9 | 90.2 | 2.50 | 7.2 | 5.1 | 125 |
| YOLOv7-tiny | 88.8 | 89.6 | 91.7 | 6.05 | 13.2 | 12.4 | 75 |
| YOLOv8n | 90.6 | 92.3 | 95.2 | 3.01 | 8.2 | 6.2 | 115 |
| ESG-YOLO | 90.0 | 96.5 | 97.4 | 2.81 | 7.4 | 5.7 | 86 |

| Class | YOLOv8n P | YOLOv8n R | YOLOv8n AP | ESG-YOLO P | ESG-YOLO R | ESG-YOLO AP |
|---|---|---|---|---|---|---|
| Qualified seedling | 94.8% | 92.5% | 98.0% | 94.8% | 96.9% | 98.9% |
| Exposed seedling | 92.2% | 98.8% | 98.4% | 90.7% | 98.8% | 98.8% |
| Covered seedling | 82.6% | 97.7% | 96.0% | 81.2% | 98.8% | 97.7% |
| Missed hill | 92.9% | 80.2% | 88.3% | 93.3% | 91.5% | 94.0% |

| Hardware/Software Environment | Configuration/Version |
|---|---|
| Development board | NVIDIA Jetson TX2 NX |
| Operating system | Ubuntu 18.04 |
| Python | 3.8.20 |
| Torch | 1.11.0 |
| Torchvision | 0.12.0 |
| CUDA | 10.2.300 |
| cuDNN | 8.2.1.32 |
| TensorRT | 8.2.1.9 |
| Ultralytics | 8.2.50 |
| Timm | 1.0.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, X.; Dong, Z.; Wang, C.; Zhu, Z.; Guo, Y.; Zheng, S. ESG-YOLO: An Efficient Object Detection Algorithm for Transplant Quality Assessment of Field-Grown Tomato Seedlings Based on YOLOv8n. Agronomy 2025, 15, 2088. https://doi.org/10.3390/agronomy15092088
