A Non-Destructive System Using UVE Feature Selection and Lightweight Deep Learning to Assess Wheat Fusarium Head Blight Severity Levels

Abstract

1. Introduction

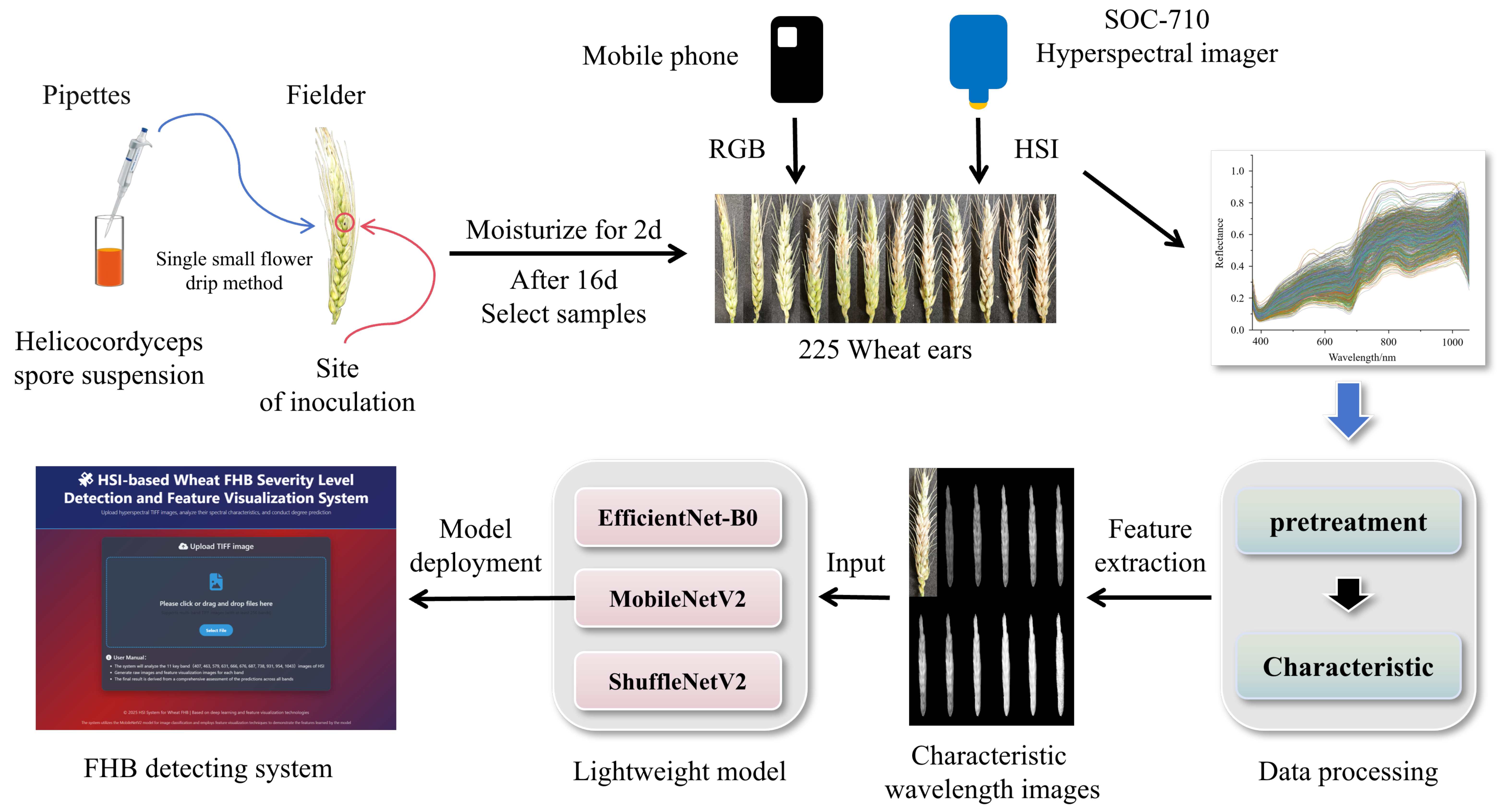

2. Materials and Methods

2.1. Experimental Materials and Sample Preparation

2.2. Data Acquisition

2.2.1. Phenotypic Image Data

2.2.2. Hyperspectral Image Data

2.3. Data Processing

2.3.1. HSI Pretreatment

2.3.2. Selection of HSI Characteristic Wavelengths

2.3.3. Lightweight Model

2.4. Experimental Hyperparameter Setting

2.5. Evaluation Indicators

2.6. System Development

3. Results

3.1. Results of Data Processing

3.1.1. HSI Reflectivity Characteristics

3.1.2. HSI Data Preprocessing

3.1.3. Selection of Characteristic Wavelengths

3.2. Comparison of Different Detection Models

3.3. Model Deployment

4. Discussion

5. Conclusions

- Comparison of preprocessing methods revealed marked differences in the ability of spectra in the 370–1100 nm range to discriminate between severity levels. Combining MSC preprocessing with UVE feature selection performed best, identifying 11 characteristic bands that improved discrimination. FHB spectral features are concentrated mainly in regions associated with chlorophyll degradation (590–680 nm), water stress (930–1043 nm), and cell wall breakdown (approximately 738 nm), reflecting chlorophyll loss, photosystem II damage, and structural changes. Using these features, a MobileNetV2 model achieved 99.93% mAP on the training set and 98.26% precision on the test set, balancing high accuracy with operational efficiency at a compact model size of 8.50 MB.

- Nevertheless, models developed in the laboratory show limited robustness in complex field environments with varying illumination, backgrounds, and cultivars. Further study of subtle early-stage FHB traits, together with more accurate selection of representative spectral bands, is needed to make the model useful for early precision management. To offset the high cost of HSI, data collection can be concentrated on key growth and stress stages. Alternatively, lower-cost multispectral systems with custom filters matched to the HSI-identified bands could be adopted, substantially cutting hardware cost while preserving the critical spectral information.
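The multispectral alternative amounts to keeping only a handful of HSI channels. As a minimal sketch, assuming a hyperspectral cube of shape (height, width, bands) and a known centre wavelength for every band, the helpers below pick the band closest to each target wavelength and slice those channels out. The 5 nm sampling grid, the placeholder cube, and the target list (boundaries of the regions named above plus about 738 nm) are illustrative assumptions, not the 11 bands the study actually selected.

```python
import numpy as np


def nearest_band_indices(wavelengths_nm, targets_nm):
    """Index of the band whose centre wavelength is closest to each target."""
    wavelengths_nm = np.asarray(wavelengths_nm, dtype=float)
    return [int(np.argmin(np.abs(wavelengths_nm - t))) for t in targets_nm]


def extract_bands(cube, band_indices):
    """Keep only the chosen spectral channels of an (H, W, bands) cube."""
    return cube[..., band_indices]


# Assumed 5 nm grid over the 370-1100 nm range; the real sensor grid may differ.
wavelengths = np.arange(370, 1101, 5)
targets = [590, 680, 738, 930, 1043]
idx = nearest_band_indices(wavelengths, targets)
cube = np.zeros((32, 32, wavelengths.size))  # placeholder cube, not real data
print(wavelengths[idx], extract_bands(cube, idx).shape)
```

On a real system each retained band would correspond to one physical filter, so the filter centres would follow the HSI-selected wavelengths rather than this example list.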

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement


| FHB Level | Infection Area | Sample Size (Before Expansion) | Sample Size (After Expansion) |
|---|---|---|---|
| Level 1 | <1/4 | 12 | 372 |
| Level 2 | 1/4–1/2 | 196 | 392 |
| Level 3 | 1/2–3/4 | 448 | 448 |
| Level 4 | >3/4 | 224 | 448 |
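The sample-size table shows that the smaller severity classes were expanded before training, most sharply Level 1 (12 to 372 samples, a 31-fold increase), while Level 3 was left unchanged. The expansion method is not described in this section. The sketch below assumes, purely for illustration, that classes were grown by appending randomly flipped copies of existing samples; `expand_to_target` is a hypothetical helper, and spatial flips were chosen because they leave each pixel's spectrum untouched.

```python
import numpy as np

# Per-level sample counts (before, after expansion) from the sample-size table.
COUNTS = {1: (12, 372), 2: (196, 392), 3: (448, 448), 4: (224, 448)}


def expand_to_target(samples, target, seed=0):
    """Hypothetical helper: grow one class to `target` samples with flipped copies.

    `samples` is assumed to have shape (N, H, W, bands). The originals are kept
    first; extra samples are random originals flipped along the spatial axes
    only, so each pixel keeps its spectrum.
    """
    samples = np.asarray(samples)
    if target < len(samples):
        raise ValueError("target must be >= the current number of samples")
    rng = np.random.default_rng(seed)
    expanded = list(samples)
    while len(expanded) < target:
        sample = samples[rng.integers(len(samples))]
        if rng.random() < 0.5:
            sample = np.flip(sample, axis=0)  # flip rows (H axis)
        if rng.random() < 0.5:
            sample = np.flip(sample, axis=1)  # flip columns (W axis)
        expanded.append(sample)
    return np.stack(expanded)
```

With only four flip variants per original, a 31-fold expansion of Level 1 would contain many exact duplicates, so a practical pipeline would need richer augmentation than this sketch attempts.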

| Configuration Item | Value |
|---|---|
| CPU | AMD Ryzen 9 7940H |
| Integrated GPU | AMD Radeon 780M Graphics |
| Dedicated GPU | NVIDIA GeForce RTX 4060 Laptop GPU |
| System Memory (RAM) | 16 GB |
| CUDA Toolkit Version | 12.4 |
| Operating System | Windows 10 |
| Deep Learning Framework | PyTorch 2.4.1 |

| Pretreatment | Train Precision (%) | Train F1-Score (%) | Test Precision (%) | Test F1-Score (%) | Precision Discrepancy (%) |
|---|---|---|---|---|---|
| Original | 95.54 | 95.53 | 90.16 | 90.09 | 5.38 |
| Normalized | 97.81 | 97.81 | 90.20 | 90.09 | 7.61 |
| SNV | 99.92 | 99.92 | 93.32 | 93.25 | 6.60 |
| MSC | 98.99 | 98.99 | 94.31 | 94.27 | 4.68 |
| SG | 95.12 | 95.12 | 91.16 | 91.06 | 3.96 |
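In the pretreatment table, the precision discrepancy equals training precision minus test precision (for example, 95.54 − 90.16 = 5.38 for the original spectra), so a smaller value means a smaller train-test gap. SNV and MSC are standard scatter corrections; the numpy sketch below shows their usual textbook form. It is not the authors' code, and details such as using the training-set mean spectrum as the MSC reference are assumptions. SG smoothing is commonly done with `scipy.signal.savgol_filter`, whose window length and polynomial order are not given in this section.

```python
import numpy as np


def snv(spectra):
    """Standard normal variate: centre and scale each spectrum (row) on its own."""
    spectra = np.asarray(spectra, dtype=float)
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, keepdims=True)
    return (spectra - mean) / std


def msc(spectra, reference=None):
    """Multiplicative scatter correction.

    Each spectrum x is regressed on a reference r as x ~ a + b * r and replaced
    by (x - a) / b. By default the reference is the mean of `spectra`; passing
    the training-set mean when correcting test spectra is an assumed convention.
    Returns the corrected spectra and the reference that was used.
    """
    spectra = np.asarray(spectra, dtype=float)
    ref = spectra.mean(axis=0) if reference is None else np.asarray(reference, dtype=float)
    corrected = np.empty_like(spectra)
    for i, x in enumerate(spectra):
        b, a = np.polyfit(ref, x, deg=1)  # polyfit returns slope first, then intercept
        corrected[i] = (x - a) / b
    return corrected, ref
```

Returning the reference makes it easy to fit MSC on the training spectra and reuse the same reference for test spectra, which avoids leaking test information into the correction.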

| Feature Selection | Train Precision (%) | Train F1-Score (%) | Test Precision (%) | Test F1-Score (%) | Precision Discrepancy (%) | Feature Count |
|---|---|---|---|---|---|---|
| UVE | 98.97 | 98.97 | 94.88 | 94.75 | 4.09 | 11 |
| RF | 95.88 | 95.88 | 90.06 | 89.74 | 5.82 | 20 |
| SPA | 98.09 | 98.09 | 93.10 | 92.78 | 4.99 | 16 |
| CARS | 97.79 | 97.79 | 93.93 | 93.75 | 3.86 | 14 |
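In the feature-selection table, UVE keeps the fewest bands (11) and reaches the highest test precision of the four selectors. The sketch below follows the classic UVE-PLS recipe: append near-zero random noise columns, fit leave-one-out PLS models, score every variable's stability as its mean coefficient divided by the coefficients' standard deviation, and keep real variables whose stability exceeds the largest noise stability. It is an illustrative numpy implementation with a hand-written PLS1 (NIPALS) step; the number of components, the noise scale, the cutoff rule, and regressing on the severity level are assumptions, not settings reported in this section.

```python
import numpy as np


def pls1_coefficients(X, y, n_components):
    """Regression coefficients of a PLS1 model fitted with NIPALS on centred data."""
    Xk = X - X.mean(axis=0)
    yk = y - y.mean()
    W, P, Q = [], [], []
    for _ in range(n_components):
        w = Xk.T @ yk
        w /= np.linalg.norm(w)
        t = Xk @ w
        tt = t @ t
        p = Xk.T @ t / tt
        q = yk @ t / tt
        Xk = Xk - np.outer(t, p)  # deflate X
        yk = yk - q * t  # deflate y
        W.append(w)
        P.append(p)
        Q.append(q)
    W, P, Q = np.array(W).T, np.array(P).T, np.array(Q)
    return W @ np.linalg.solve(P.T @ W, Q)  # B = W (P^T W)^-1 q


def uve_select(X, y, n_components=5, noise_scale=1e-10, seed=0):
    """Uninformative variable elimination (UVE-PLS), leave-one-out variant.

    Returns the indices of retained columns of X and the stability of every
    column (real columns first, then the artificial noise columns).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, n_vars = X.shape
    rng = np.random.default_rng(seed)
    noise = rng.uniform(size=(n, n_vars)) * noise_scale  # artificial uninformative variables
    Xa = np.hstack([X, noise])
    coefs = np.array([
        pls1_coefficients(np.delete(Xa, i, axis=0), np.delete(y, i), n_components)
        for i in range(n)
    ])
    stability = coefs.mean(axis=0) / coefs.std(axis=0)
    cutoff = np.abs(stability[n_vars:]).max()
    selected = np.flatnonzero(np.abs(stability[:n_vars]) > cutoff)
    return selected, stability
```

Because stability is a ratio, it does not depend on the tiny noise scale; the noise columns only set a data-driven threshold for what a chance-level coefficient looks like.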

| Methods | Train mAP (%) | Train F1-Score (%) | Train Recall (%) | Train Precision (%) | Validation Precision (%) | Test Precision (%) | Parameters (MB) | FPS | LEI |
|---|---|---|---|---|---|---|---|---|---|
| MobileNetV2 | 99.93 | 99.40 | 99.40 | 99.40 | 98.21 | 98.26 | 8.50 | 310 | 1.56 |
| EfficientNet-B0 | 99.99 | 99.97 | 99.97 | 99.97 | 99.55 | 99.47 | 15.30 | 131 | 0.93 |
| ShuffleNetV2 | 99.34 | 99.12 | 99.12 | 99.12 | 96.50 | 96.36 | 4.80 | 1178 | 2.39 |
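The LEI column is not defined in this section. As a hedged consistency check only, all three tabulated LEI values round to log10(mAP × FPS / size), with training mAP as a fraction and parameter size in MB; they would also round the same without the mAP factor, so this form is an assumption rather than the authors' stated definition. `lei_candidate` is a hypothetical name.

```python
import math

# (train mAP %, FPS, parameter size in MB, tabulated LEI) copied from the model comparison table.
MODELS = {
    "MobileNetV2": (99.93, 310, 8.50, 1.56),
    "EfficientNet-B0": (99.99, 131, 15.30, 0.93),
    "ShuffleNetV2": (99.34, 1178, 4.80, 2.39),
}


def lei_candidate(map_percent, fps, size_mb):
    """Hypothetical LEI form: log10 of accuracy-weighted frames per second per MB."""
    return math.log10((map_percent / 100) * fps / size_mb)


for name, (map_pct, fps, size, reported) in MODELS.items():
    print(f"{name}: candidate={lei_candidate(map_pct, fps, size):.2f}, reported={reported}")
```

Read this way, LEI rewards throughput per megabyte on a log scale, which is why ShuffleNetV2 scores highest despite its lower test precision.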

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liang, X.; Yang, S.; Mu, L.; Shi, H.; Yao, Z.; Chen, X. A Non-Destructive System Using UVE Feature Selection and Lightweight Deep Learning to Assess Wheat Fusarium Head Blight Severity Levels. Agronomy 2025, 15, 2051. https://doi.org/10.3390/agronomy15092051


Chicago/Turabian Style: Liang, Xiaoying, Shuo Yang, Lin Mu, Huanrui Shi, Zhifeng Yao, and Xu Chen. 2025. "A Non-Destructive System Using UVE Feature Selection and Lightweight Deep Learning to Assess Wheat Fusarium Head Blight Severity Levels" Agronomy 15, no. 9: 2051. https://doi.org/10.3390/agronomy15092051
