Improving Rice Nitrogen Nutrition Index Estimation Using UAV Images Combined with Meteorological and Fertilization Variables

Abstract

1. Introduction

2. Materials and Methods

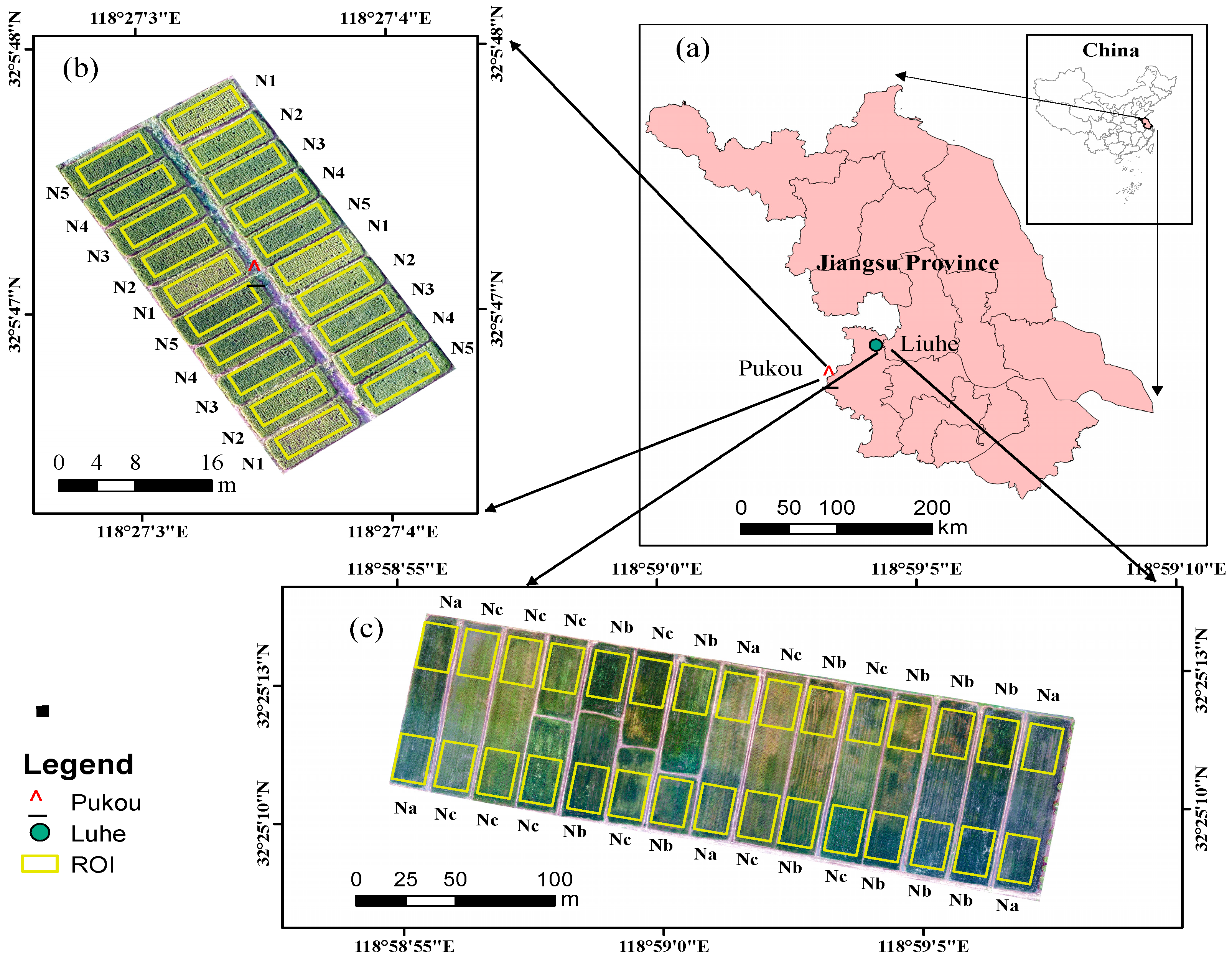

2.1. Experimental Design

2.2. Crop Data Acquisition

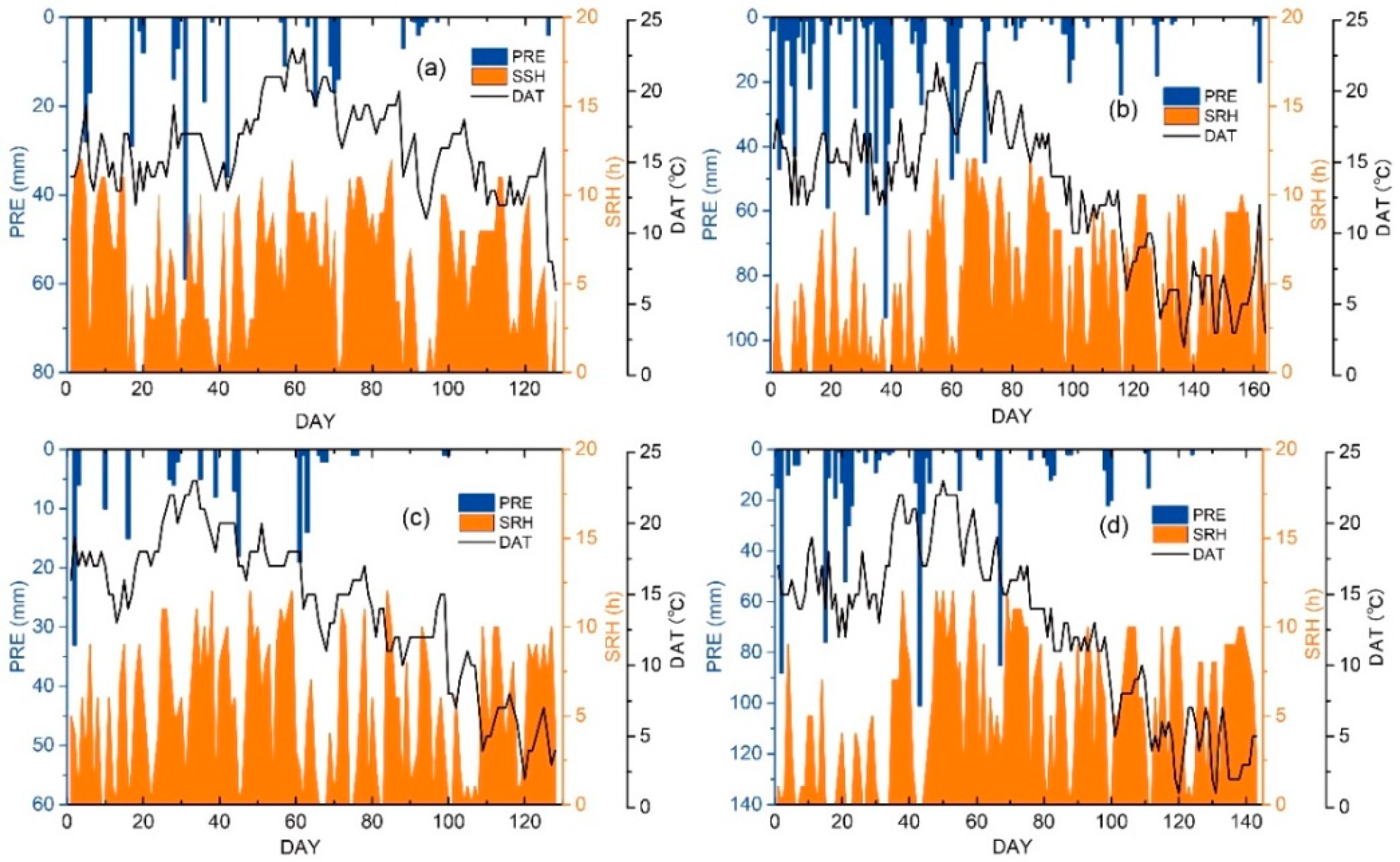

2.3. Meteorological Data Acquisition

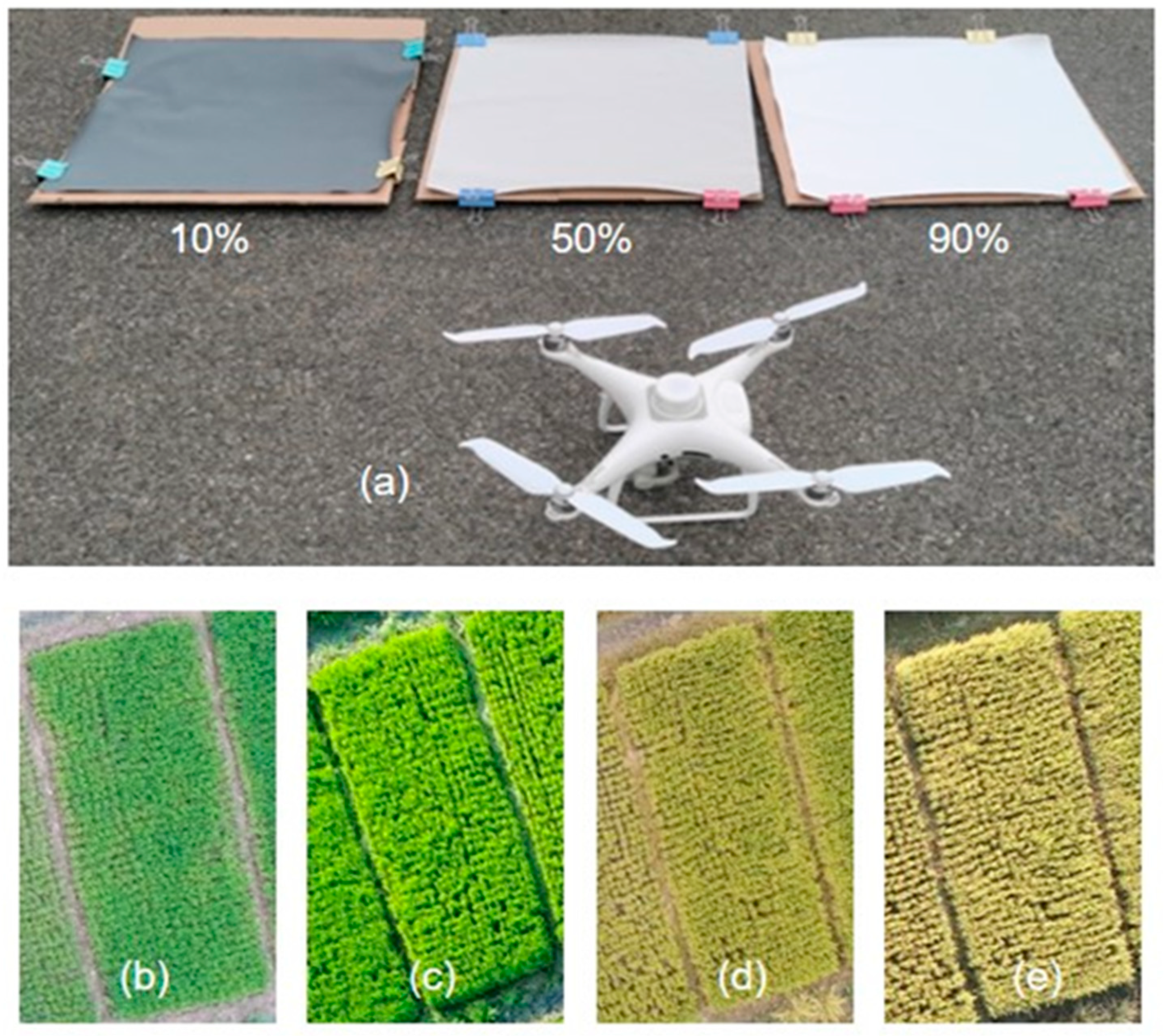

2.4. Acquisition of UAV Images

2.5. UAV Image Processing and Index Extraction

2.6. Rice NNI Estimation Modeling

2.7. Model Development and Validation

3. Results

3.1. Correlation Analysis of Variables for Rice NNI at Different Growth Periods

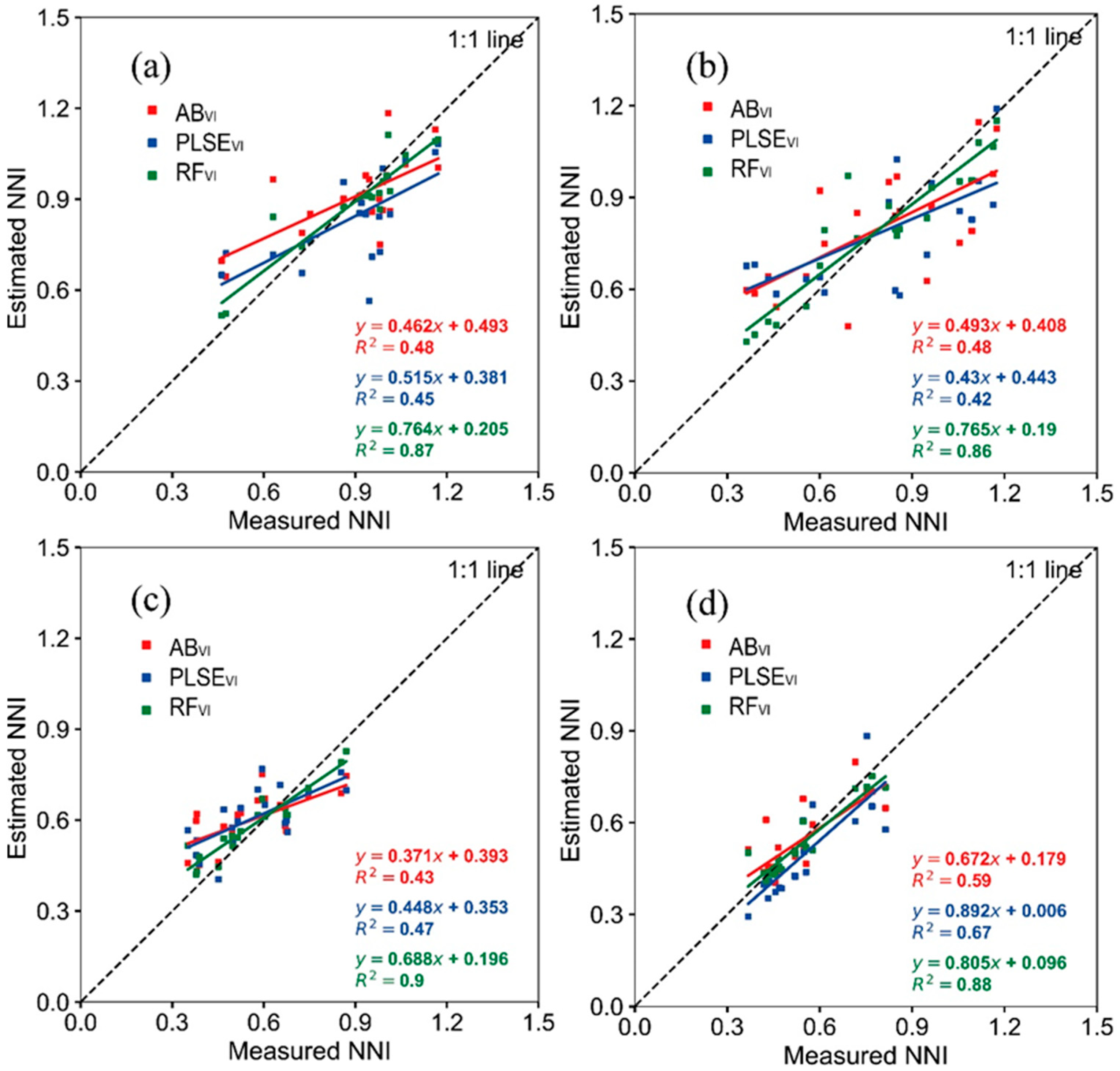

3.2. Rice NNI Estimation Based on UAV-VIs Using ML Algorithms

3.3. Estimating Rice NNI at Different Growth Periods by Combining UAV-VIs with Meteorological and Fertilization Factors

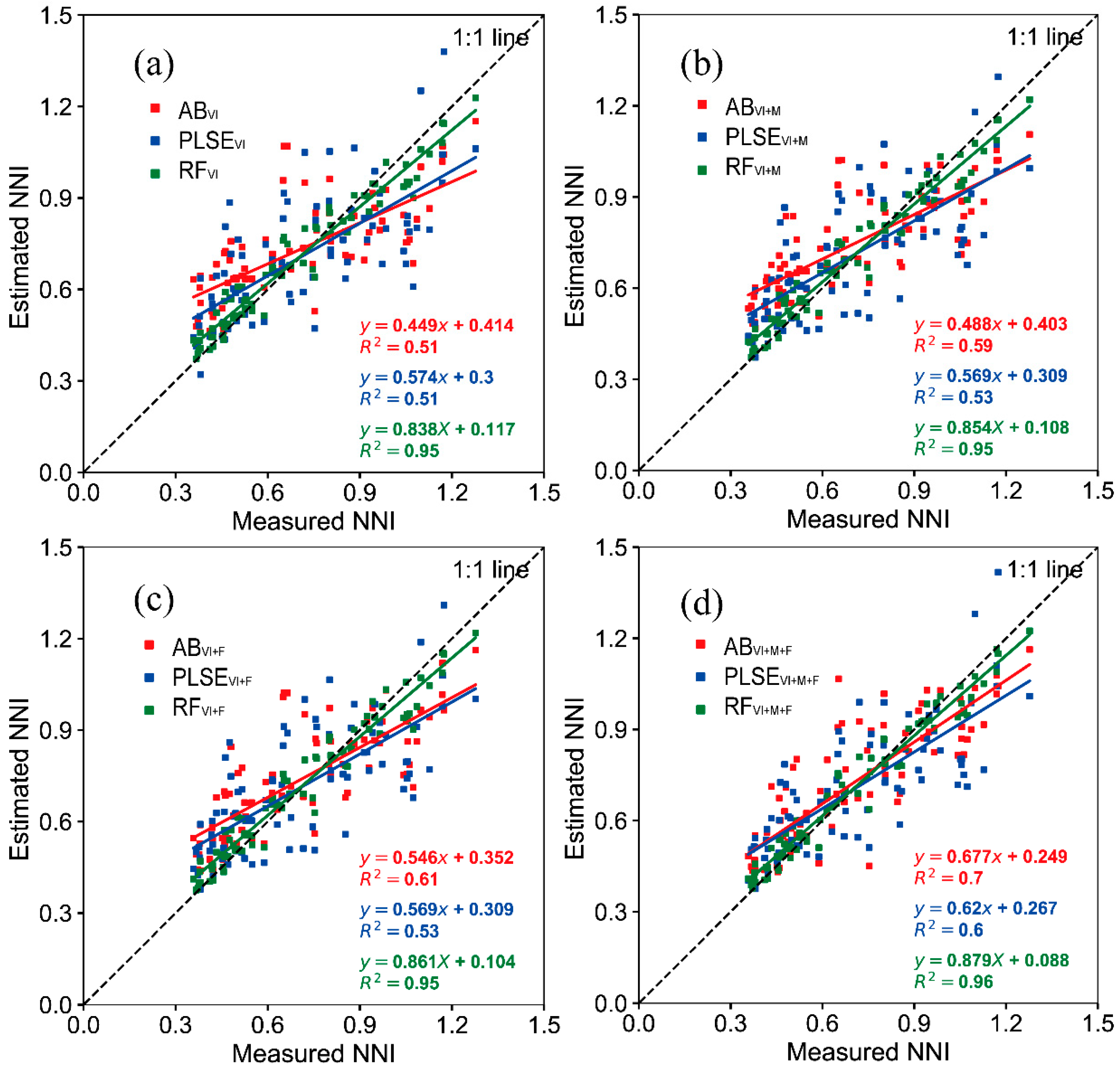

3.4. Estimating Rice NNI Across Growth Stages by Combining UAV-VIs with Meteorological and Fertilization Factors

3.5. Effect of Different Input Factors on the Model

4. Discussion

4.1. Potential of Rice NNI Estimation Using ML Algorithms Based on UAV-VIs

4.2. Effect of Meteorological and Fertilization Inputs on the Performance of Rice NNI Estimation Models

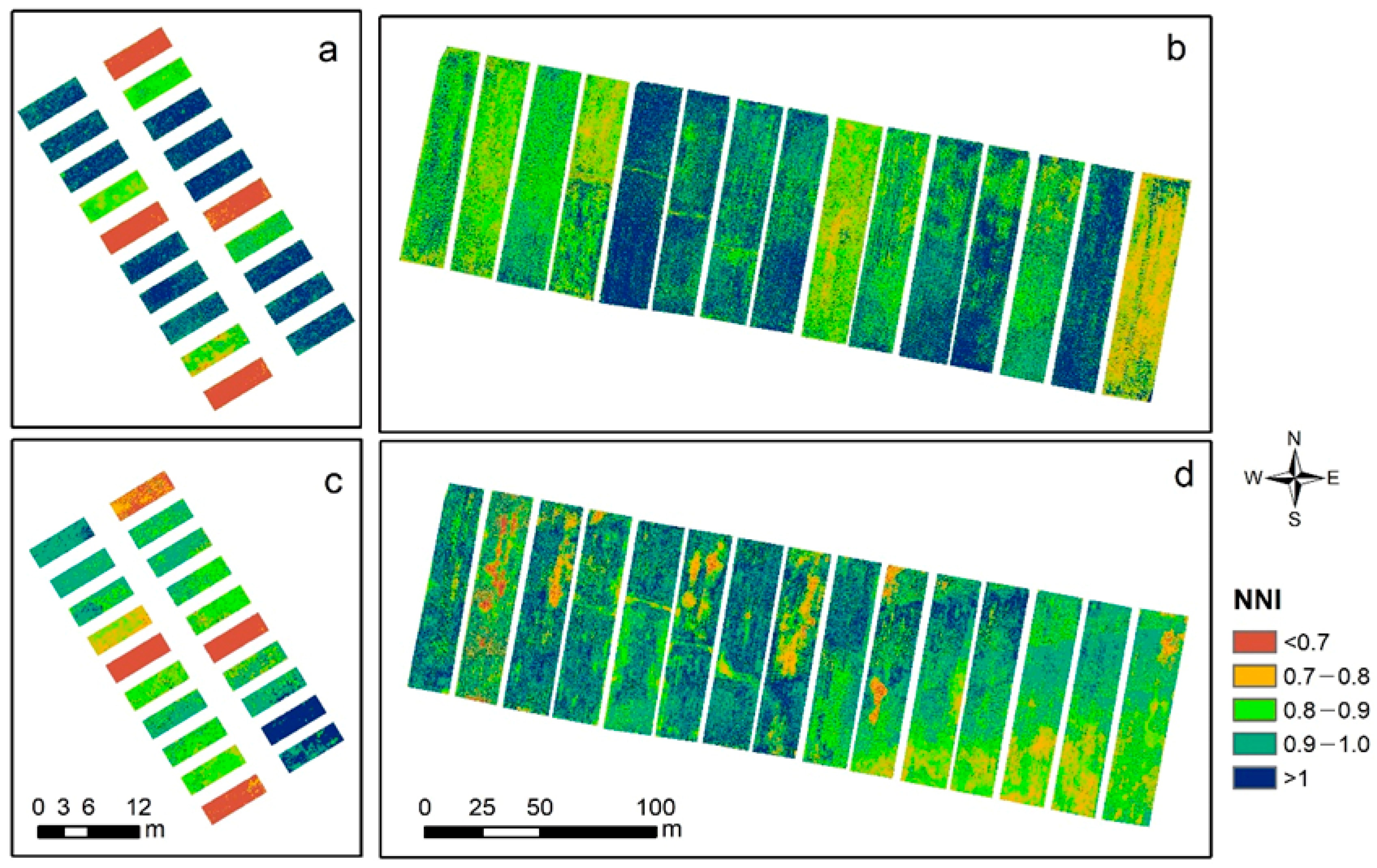

4.3. Applications and Challenges in Fertilization

4.4. Limitations and Future Directions

4.5. Key Methodological Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhou, Y.; Ji, Y.; Li, Z.; Tu, D.; Xi, M.; Xu, Y. Yield loss of a rice ratoon crop is affected by nitrogen supply in a mechanized ratooning system. Food Energy Secur. 2025, 14, e70041.

2. Gao, S.; Qian, H.; Li, W.; Wang, Y.; Zhang, J.; Tao, W. Efficient fertilization pattern for rice production within the rice-wheat systems. Field Crops Res. 2025, 328, 109925.

3. Mueller, N.D.; Gerber, J.S.; Johnston, M.; Ray, D.K.; Ramankutty, N.; Foley, J.A. Closing yield gaps through nutrient and water management. Nature 2012, 490, 254–257.

4. Zhang, X.; Davidson, E.A.; Mauzerall, D.L.; Searchinger, T.D.; Dumas, P.; Shen, Y. Managing nitrogen for sustainable development. Nature 2015, 528, 51–59.

5. Rodriguez, I.M.; Lacasa, J.; van Versendaal, E.; Lemaire, G.; Belanger, G.; Jégo, G.; Ciampitti, I.A. Revisiting the relationship between nitrogen nutrition index and yield across major species. Eur. J. Agron. 2024, 154, 127079.

6. Wu, Y.; Lu, J.; Liu, H.; Gou, T.; Chen, F.; Fang, W.; Guan, Z. Monitoring the nitrogen nutrition index using leaf-based hyperspectral reflectance in cut chrysanthemums. Remote Sens. 2024, 16, 3062.

7. Islam, M.; Bijjahalli, S.; Fahey, T.; Gardi, A.; Sabatini, R.; Lamb, D.W. Destructive and non-destructive measurement approaches and the application of AI models in precision agriculture: A review. Precis. Agric. 2024, 25, 1127–1180.

8. Laveglia, S.; Altieri, G.; Genovese, F.; Matera, A.; Di Renzo, G.C. Advances in sustainable crop management: Integrating precision agriculture and proximal sensing. AgriEngineering 2025, 6, 3084–3120.

9. Zhang, K.; Liu, X.; Ma, Y.; Zhang, R.; Cao, Q.; Zhu, Y.; Tian, Y. A comparative assessment of measures of leaf nitrogen in rice using two leaf-clip meters. Sensors 2019, 20, 175.

10. Apolo-Apolo, O.; Martínez-Guanter, J.; Egea, G.; Raj, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

11. Li, P.; Zhang, X.; Wang, W.; Zheng, H.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Chen, Q.; Cheng, T. Estimating aboveground and organ biomass of plant canopies across the entire season of rice growth with terrestrial laser scanning. Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102132.

12. Wu, B.; Zhang, M.; Zeng, H.; Tian, F.; Potgieter, A.B.; Qin, X.; Loupian, E. Challenges and opportunities in remote sensing-based crop monitoring: A review. Natl. Sci. Rev. 2023, 10, nwac290.

13. Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.

14. Sun, Y.; Qin, Q.; Ren, H.; Zhang, T.; Chen, S. Red-edge band vegetation indices for leaf area index estimation from Sentinel-2/MSI Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 826–840.

15. Wu, S.; Yang, P.; Ren, J.; Chen, Z.; Liu, C.; Li, H. Winter wheat LAI inversion considering morphological characteristics at different growth stages coupled with microwave scattering model and canopy simulation model. Remote Sens. Environ. 2020, 240, 111681.

16. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

17. Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2018, 20, 611–629.

18. Lu, N.; Wang, W.; Zhang, Q.; Li, D.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Baret, F.; Liu, S.; et al. Estimation of nitrogen nutrition status in winter wheat from unmanned aerial vehicle based multi-angular multispectral imagery. Front. Plant Sci. 2019, 10, 1601.

19. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2023, 24, 187–212.

20. Zheng, Y.; Shcherbakova, G.; Rusyn, B.; Sachenko, A.; Volkova, N.; Kliushnikov, I.; Antoshchuk, S. Wavelet Transform Cluster Analysis of UAV Images for Sustainable Development of Smart Regions Due to Inspecting Transport Infrastructure. Sustainability 2025, 17, 927.

21. Wang, L.; Ling, Q.; Liu, Z.; Dai, M.; Zhou, Y.; Shi, X.; Wang, J. Precision estimation of rice nitrogen fertilizer topdressing according to the nitrogen nutrition index using UAV multi-spectral remote sensing: A case study in southwest China. Plants 2025, 14, 1195.

22. Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.

23. Yu, X.; Keitel, C.; Zhang, Y.; Wangeci, A.N.; Dijkstra, F.A. Global meta-analysis of nitrogen fertilizer use efficiency in rice, wheat and maize. Agric. Ecosyst. Environ. 2022, 338, 108089.

24. Jiang, M.; Dong, C.; Bian, W.; Zhang, W.; Wang, Y. Effects of different fertilization practices on maize yield, soil nutrients, soil moisture, and water use efficiency in northern China based on a meta-analysis. Sci. Rep. 2024, 14, 6480.

25. Wang, J.; Li, L.; Lam, S.K.; Shi, X.; Pan, G. Changes in plant nutrient status following combined elevated [CO2] and canopy warming in winter wheat. Front. Plant Sci. 2023, 14, 1132414.

26. Zhang, J.; Guan, K.; Peng, B.; Pan, M.; Zhou, W.; Jiang, C.; Kimm, H.; Franz, T.E.; Grant, R.F.; Yang, Y.; et al. Sustainable irrigation based on co-regulation of soil water supply and atmospheric evaporative demand. Nat. Commun. 2021, 12, 5549.

27. Lv, P.; Sun, S.; Zhao, X.; Li, Y.; Zhao, S.; Zhang, J.; Zuo, X. Effects of altered precipitation patterns on soil nitrogen transformation in different landscape types during the growing season in northern China. Catena 2023, 222, 106813.

28. Du, X.; Wang, Z.; Xi, M.; Wu, W.; Wei, Z.; Xu, Y.; Zhou, Y.; Lei, W.; Kong, L. A novel planting pattern increases the grain yield of wheat after rice cultivation by improving radiation resource utilization. Agric. For. Meteorol. 2021, 310, 108625.

29. Nelson, D.W.; Sommers, L. Determination of total nitrogen in plant material. Agron. J. 1973, 65, 109–112.

30. Fabbri, C.; Mancini, M.; dalla Marta, A.; Orlandini, S.; Napoli, M. Integrating satellite data with a Nitrogen Nutrition Curve for precision top-dress fertilization of durum wheat. Eur. J. Agron. 2020, 120, 126148.

31. Wang, Y.; Shi, P.; Zhang, G.; Ran, J.; Shi, W.; Wang, D. A critical nitrogen dilution curve for japonica rice based on canopy images. Field Crop. Res. 2016, 198, 93–100.

32. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2008, 16, 65–70.

33. Sun, Y.; Wang, B.; Zhang, Z. Improving leaf area index estimation with chlorophyll insensitive multispectral red-edge vegetation indices. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3568–3582.

34. Evstatiev, B.; Mladenova, T.; Valov, N.; Zhelyazkova, T.; Gerdzhikova, M.; Todorova, M.; Stanchev, G. Fast pasture classification method using ground-based camera and the modified green red vegetation index (mgrvi). Int. J. Adv. Comput. Sci. Appl. 2023, 14, 45–51.

35. Yang, B.; Wang, M.; Sha, Z.; Wang, B.; Chen, J.; Yao, X.; Cheng, T.; Cao, W.; Zhu, Y. Evaluation of aboveground nitrogen content of winter wheat using digital imagery of unmanned aerial vehicles. Sensors 2019, 19, 4416.

36. Xu, X.; Liu, L.; Han, P.; Gong, X.; Zhang, Q. Accuracy of vegetation indices in assessing different grades of grassland desertification from UAV. Int. J. Environ. Res. Public Health 2022, 19, 16793.

37. Chen, C.; Yuan, X.; Gan, S.; Luo, W.; Bi, R.; Li, R.; Gao, S. A new vegetation index based on UAV for extracting plateau vegetation information. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103668.

38. Song, Z.; Lu, Y.; Ding, Z.; Sun, D.; Jia, Y.; Sun, W. A new remote sensing desert vegetation detection index. Remote Sens. 2023, 15, 5742.

39. Sewiko, R.; Sagala, H.A.M.U. The use of drone and visible atmospherically resistant index (VARI) algorithm implementation in mangrove ecosystem health’s monitoring. Asian J. Aquat. Sci. 2022, 5, 322–329.

40. Farooque, A.A.; Afzaal, H.; Benlamri, R.; Al-Naemi, S.; MacDonald, E.; Abbas, F.; Ali, H. Red-green-blue to normalized difference vegetation index translation: A robust and inexpensive approach for vegetation monitoring using machine vision and generative adversarial networks. Precis. Agric. 2023, 24, 1097–1115.

41. Biró, L.; Kozma-Bognár, V.; Berke, J. Comparison of RGB indices used for vegetation studies based on structured similarity index (SSIM). J. Plant Sci. Phytopathol. 2024, 8, 007–012.

42. Salman, H.A.; Kalakech, A.; Steiti, A. Random forest algorithm overview. Babylon. J. Mach. Learn. 2024, 2024, 69–79.

43. Ghazwani, M.; Begum, M.Y. Computational intelligence modeling of hyoscine drug solubility and solvent density in supercritical processing: Gradient boosting, extra trees, and random forest models. Sci. Rep. 2023, 13, 10046.

44. Reisi Gahrouei, O.; McNairn, H.; Hosseini, M.; Homayouni, S. Estimation of crop biomass and leaf area index from multitemporal and multispectral imagery using machine learning approaches. Can. J. Remote Sens. 2020, 46, 84–99.

45. Yang, Q.; Shi, L.; Han, J.; Yu, J.; Huang, K. A near real-time deep learning approach for detecting rice phenology based on UAV images. Agric. For. Meteorol. 2020, 287, 107938.

46. Adnan, M.R.; Wilujeng, E.D.; Aisyah, M.D.; Alif, T.; Galushasti, A. Preliminary agronomic characterization of japonica rice carrying a tiller number mutation under greenhouse conditions. DYSONA-Appl. Sci. 2025, 6, 291–299.

47. Wang, H.; Zhong, L.; Fu, X.; Huang, S.; Zhao, D.; He, H.; Chen, X. Physiological analysis reveals the mechanism of accelerated growth recovery for rice seedlings by nitrogen application after low temperature stress. Front. Plant Sci. 2023, 14, 1133592.

48. Liang, H.; Gao, S.; Hu, K. Global sensitivity and uncertainty analysis of the dynamic simulation of crop N uptake by using various N dilution curve approaches. Eur. J. Agron. 2020, 116, 126044.

| Experimental Site | Nitrogen Treatment | Total Nitrogen Rate (kg ha⁻¹) | Proportion of Controlled-Release Nitrogen (%) |
|---|---|---|---|
| Pukou | N1 | 0 | 0 |
| Pukou | N2 | 240 | 0 |
| Pukou | N3 | 240 | 30 |
| Pukou | N4 | 240 | 40 |
| Pukou | N5 | 240 | 50 |
| Luhe | Na | 196 | 0 |
| Luhe | Nb | 196 | 40 |
| Luhe | Nc | 196 | 50 |

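| Experimental Field | Sowing Date | Harvest Date | Sampling Date | Sampling Period | No. of Samples |
|---|---|---|---|---|---|
| Pukou | 12 June 2019 | 2 November 2019 | 26 July 2019 | Jointing | 20 |
| Pukou | 12 June 2019 | 2 November 2019 | 8 September 2019 | Flowering | 20 |
| Pukou | 12 June 2019 | 2 November 2019 | 27 September 2019 | Filling | 20 |
| Pukou | 12 June 2019 | 2 November 2019 | 19 October 2019 | Maturity | 20 |
| Pukou | 20 June 2020 | 10 November 2020 | 3 August 2020 | Jointing | 20 |
| Pukou | 20 June 2020 | 10 November 2020 | 27 August 2020 | Flowering | 20 |
| Pukou | 20 June 2020 | 10 November 2020 | 18 September 2020 | Filling | 20 |
| Pukou | 20 June 2020 | 10 November 2020 | 29 October 2020 | Maturity | 20 |
| Luhe | 18 June 2019 | 5 November 2019 | 26 July 2019 | Jointing | 30 |
| Luhe | 18 June 2019 | 5 November 2019 | 8 September 2019 | Flowering | 30 |
| Luhe | 18 June 2019 | 5 November 2019 | 27 September 2019 | Filling | 30 |
| Luhe | 18 June 2019 | 5 November 2019 | 31 October 2019 | Maturity | 30 |
| Luhe | 20 June 2020 | 16 November 2020 | 4 August 2020 | Jointing | 30 |
| Luhe | 20 June 2020 | 16 November 2020 | 2 September 2020 | Flowering | 30 |
| Luhe | 20 June 2020 | 16 November 2020 | 18 September 2020 | Filling | 30 |
| Luhe | 20 June 2020 | 16 November 2020 | 29 October 2020 | Maturity | 30 |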

| Variable | Growth Stage | Mean | SD | Variance | Kurtosis | Skewness | Min | Max | No. of Samples |
|---|---|---|---|---|---|---|---|---|---|
| NNI | Jointing | 0.90 | 0.22 | 0.05 | −0.06 | −0.50 | 0.37 | 1.34 | 100 |
| NNI | Flowering | 0.76 | 0.24 | 0.06 | −0.77 | 0.21 | 0.34 | 1.39 | 100 |
| NNI | Filling | 0.58 | 0.19 | 0.04 | −0.21 | 0.62 | 0.25 | 1.05 | 100 |
| NNI | Maturity | 0.51 | 0.13 | 0.02 | −0.39 | 0.69 | 0.26 | 0.81 | 100 |

| Name | Index | Formulation | References |
|---|---|---|---|
| Green leaf algorithm | GLA | (2 × g − r − b)/(2 × g + r + b) | [32] |
| Green leaf index | GLI | (2 × g − r + b)/(2 × g + r + b) | [32] |
| Green–red vegetation index | GRVI | (g − r)/(g + r) | [33] |
| Modified green–red vegetation index | MGRVI | (g² − r²)/(g² + r²) | [34] |
| Excess green minus excess red | ExGR | (2 × g − r − b) − (1.4 × r − g) | [35] |
| Excess red vegetation index | ExR | 1.4 × r − g | [36] |
| Excess blue vegetation index | ExB | 1.4 × b − g | [37] |
| Excess green vegetation index | ExG | 2 × g − r − b | [38] |
| Visible atmospherically resistant index | VARI | (g − r)/(g + r − b) | [39] |
| Red–green–blue vegetation index | RGBVI | (g² − b × r²)/(g² + b × r²) | [40] |
| Red–green ratio index | RGRI | r/g | [41] |
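
The formulations in the table above can be evaluated directly from the red, green and blue values of a pixel or plot. The following is a minimal sketch, not the authors' processing code. It assumes that r, g and b are channel values normalized by their sum (the table does not define them), reads the flattened exponents as squares (for example MGRVI as (g² − r²)/(g² + r²)), and otherwise transcribes each formula as printed. The function names `normalize_rgb` and `rgb_vegetation_indices` are hypothetical.

```python
def normalize_rgb(red, green, blue):
    """Scale raw channel values so that r + g + b = 1 (assumed convention)."""
    total = red + green + blue
    if total == 0:
        raise ValueError("cannot normalize a black pixel (R + G + B = 0)")
    return red / total, green / total, blue / total


def rgb_vegetation_indices(r, g, b):
    """Return the eleven RGB indices of the table for normalized r, g, b.

    No guard is included for zero denominators (e.g. g = 0 for RGRI).
    """
    exg = 2 * g - r - b   # excess green
    exr = 1.4 * r - g     # excess red
    return {
        "GLA": (2 * g - r - b) / (2 * g + r + b),
        "GLI": (2 * g - r + b) / (2 * g + r + b),  # numerator sign as printed
        "GRVI": (g - r) / (g + r),
        "MGRVI": (g**2 - r**2) / (g**2 + r**2),
        "ExGR": exg - exr,
        "ExR": exr,
        "ExB": 1.4 * b - g,
        "ExG": exg,
        "VARI": (g - r) / (g + r - b),
        "RGBVI": (g**2 - b * r**2) / (g**2 + b * r**2),  # exponent on r as printed
        "RGRI": r / g,
    }


if __name__ == "__main__":
    # Hypothetical plot-mean digital numbers, for illustration only.
    r, g, b = normalize_rgb(60, 120, 20)
    for name, value in rgb_vegetation_indices(r, g, b).items():
        print(f"{name}: {value:.3f}")
```

In practice the indices would be computed per plot from the orthomosaic, for instance on the mean channel values inside each plot boundary; that aggregation step is not shown here.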

| Input Factors | NNI (Jointing) | NNI (Flowering) | NNI (Filling) | NNI (Maturity) | NNI (Across-Stage) |
|---|---|---|---|---|---|
| UAV-VIs | | | | | |
| ExB | 0.01 | 0.45 *** | 0.20 * | 0.28 ** | 0.38 *** |
| ExGR | 0.17 * | 0.22 * | 0.32 ** | 0.37 *** | 0.40 *** |
| ExG | 0.16 | 0.13 | 0.03 | 0.20 * | 0.14 ** |
| ExR | 0.07 | 0.50 *** | 0.25 * | 0.31 ** | 0.41 *** |
| GLA | 0.07 | 0.04 | 0.18 * | 0.13 | 0.21 *** |
| GLI | 0.10 | 0.08 | 0.19 * | 0.12 | 0.23 *** |
| GRVI | 0.16 | 0.56 *** | 0.59 *** | 0.55 *** | 0.56 *** |
| MGRVI | 0.14 | 0.56 *** | 0.59 *** | 0.55 *** | 0.55 *** |
| RGBVI | 0.09 | 0.17 | 0.18 * | 0.39 *** | 0.16 ** |
| RGRI | 0.11 | 0.56 *** | 0.59 *** | 0.54 *** | 0.55 *** |
| VARI | 0.18 | 0.59 *** | 0.59 *** | 0.57 *** | 0.57 *** |
| Meteorology | | | | | |
| TEM | 0.25 * | 0.23 * | 0.22 * | 0.32 ** | 0.62 *** |
| PRE | 0.28 ** | 0.48 *** | 0.14 | 0.47 ** | 0.41 *** |
| SSH | 0.06 | 0.08 | 0.02 | −0.18 | 0.53 *** |
| Fertilization | | | | | |
| FER | 0.60 *** | 0.51 *** | 0.30 ** | 0.04 | 0.40 *** |
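
Coefficients such as those in the table above can be reproduced from paired observations of an input factor and NNI. The sketch below is an illustration under assumptions, not the authors' analysis: it assumes the reported values are Pearson correlation coefficients, and it computes the associated t statistic with n − 2 degrees of freedom, from which a significance level could be looked up in a t distribution. The function names and the sample data are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length with n >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    if abs(r) >= 1:
        raise ValueError("t statistic is undefined for |r| = 1")
    return r * math.sqrt((n - 2) / (1 - r * r))


if __name__ == "__main__":
    # Hypothetical nitrogen rates (kg/ha) and NNI values, for illustration only.
    n_rate = [0, 0, 196, 196, 240, 240, 240, 240]
    nni = [0.41, 0.47, 0.88, 0.95, 0.92, 1.05, 1.10, 0.99]
    r = pearson_r(n_rate, nni)
    print(f"r = {r:.2f}, t = {t_statistic(r, len(nni)):.2f}")
```

Converting the t statistic into a p-value requires the t distribution's cumulative function, which the standard library does not provide; a statistics package would normally be used for that step.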

| Growth Period | Variables | AB R² | AB RMSE | PLSR R² | PLSR RMSE | RF R² | RF RMSE |
|---|---|---|---|---|---|---|---|
| Jointing | VI | 0.48 | 0.13 | 0.49 | 0.15 | 0.87 | 0.06 |
| Jointing | VI + M | 0.54 | 0.13 | 0.60 | 0.13 | 0.92 | 0.06 |
| Jointing | VI + F | 0.57 | 0.13 | 0.61 | 0.12 | 0.91 | 0.06 |
| Jointing | VI + M + F | 0.76 | 0.10 | 0.66 | 0.12 | 0.95 | 0.05 |
| Flowering | VI | 0.48 | 0.17 | 0.42 | 0.18 | 0.86 | 0.07 |
| Flowering | VI + M | 0.58 | 0.17 | 0.52 | 0.18 | 0.91 | 0.07 |
| Flowering | VI + F | 0.58 | 0.17 | 0.53 | 0.18 | 0.91 | 0.07 |
| Flowering | VI + M + F | 0.58 | 0.17 | 0.60 | 0.18 | 0.95 | 0.07 |
| Filling | VI | 0.43 | 0.11 | 0.47 | 0.11 | 0.86 | 0.05 |
| Filling | VI + M | 0.46 | 0.11 | 0.55 | 0.10 | 0.91 | 0.05 |
| Filling | VI + F | 0.47 | 0.11 | 0.55 | 0.10 | 0.91 | 0.05 |
| Filling | VI + M + F | 0.54 | 0.11 | 0.63 | 0.10 | 0.94 | 0.04 |
| Maturity | VI | 0.59 | 0.07 | 0.67 | 0.09 | 0.88 | 0.03 |
| Maturity | VI + M | 0.69 | 0.07 | 0.73 | 0.08 | 0.93 | 0.03 |
| Maturity | VI + F | 0.77 | 0.06 | 0.73 | 0.08 | 0.93 | 0.03 |
| Maturity | VI + M + F | 0.85 | 0.05 | 0.77 | 0.08 | 0.95 | 0.03 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qiu, Z.; Ma, F.; Zhou, J.; Du, C. Improving Rice Nitrogen Nutrition Index Estimation Using UAV Images Combined with Meteorological and Fertilization Variables. Agronomy 2025, 15, 1946. https://doi.org/10.3390/agronomy15081946