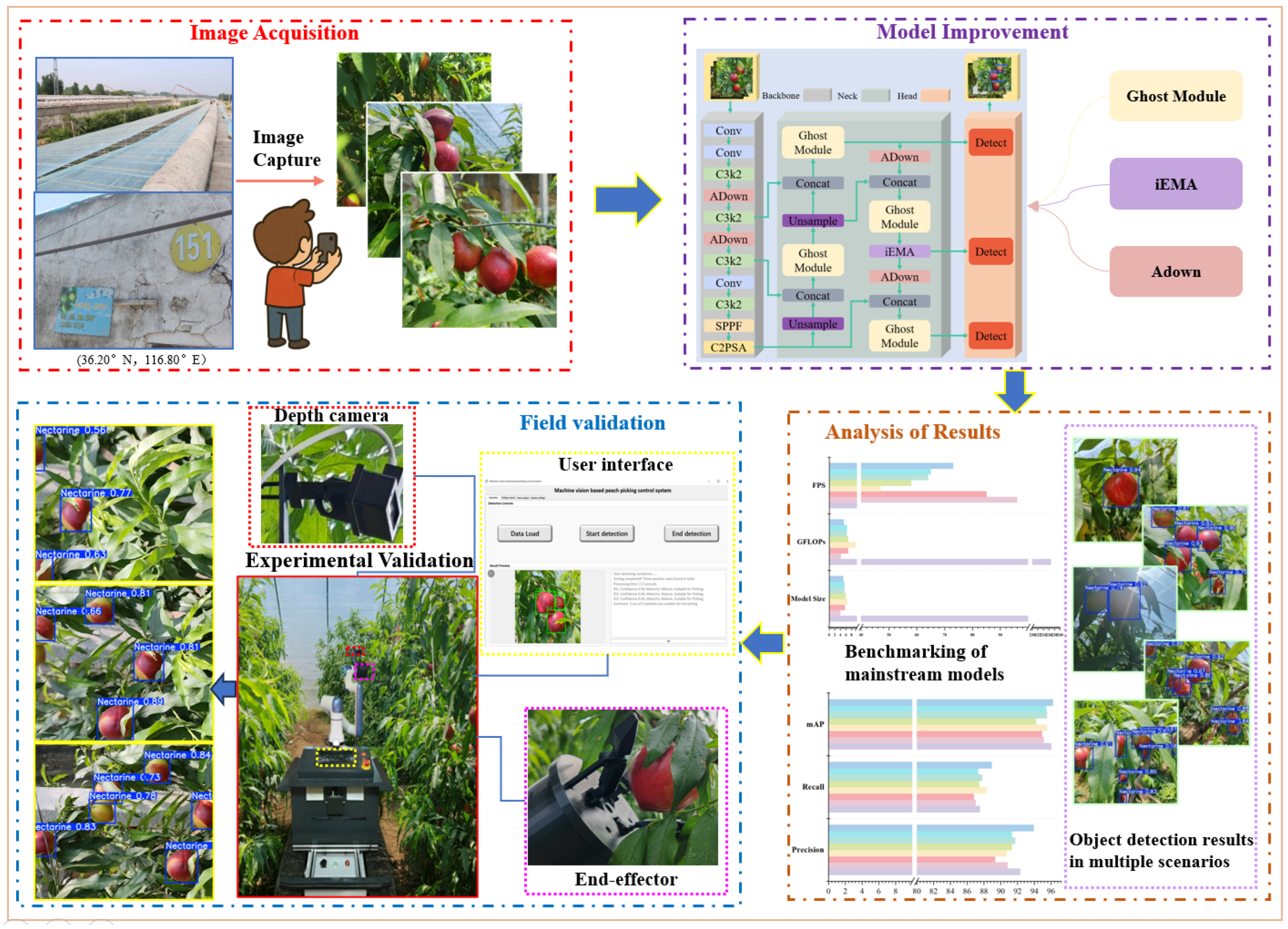

GIA-YOLO: A Target Detection Method for Nectarine Picking Robots in Facility Orchards

Abstract

1. Introduction

2. Materials and Methods

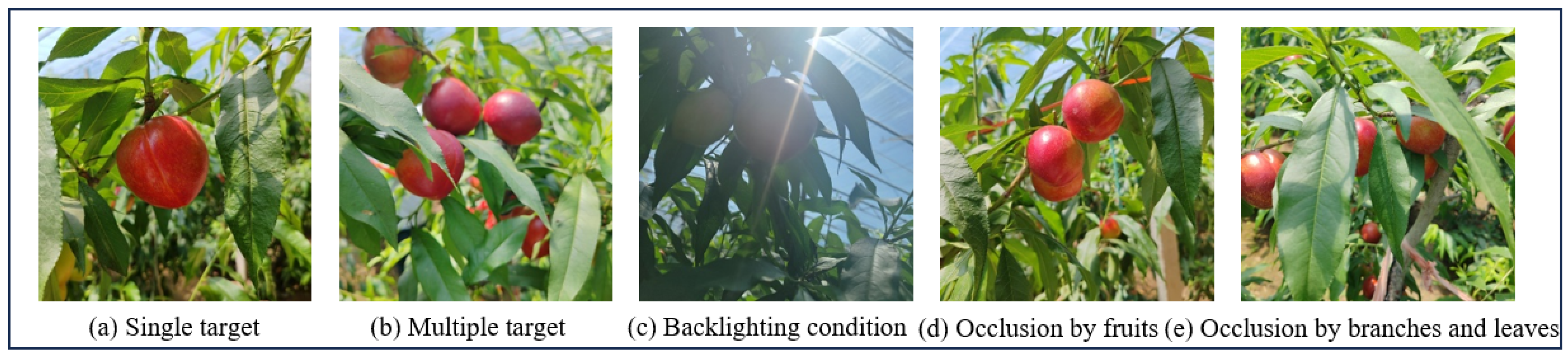

2.1. Image Acquisition

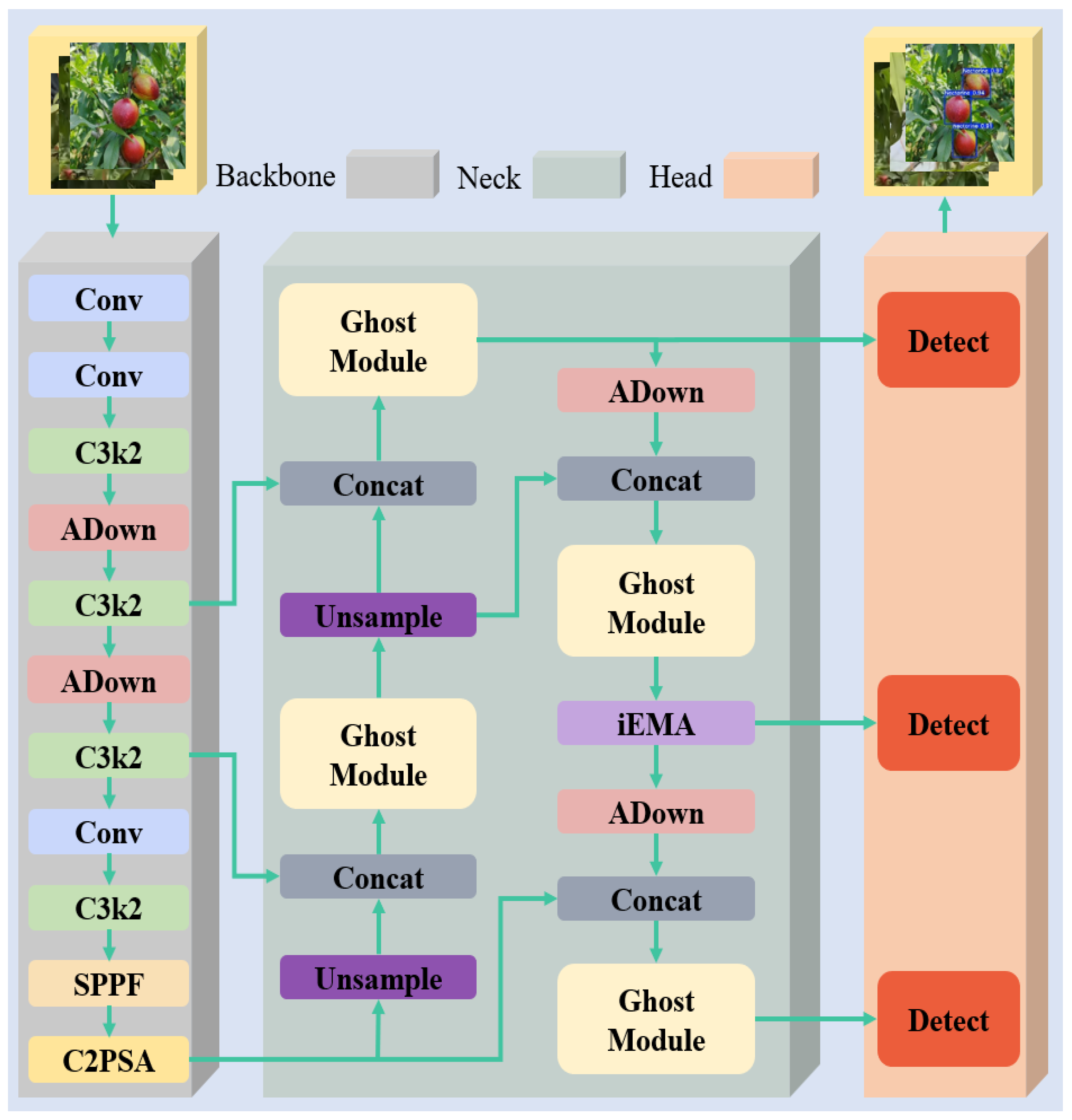

2.2. GIA-YOLO Target Detection Network

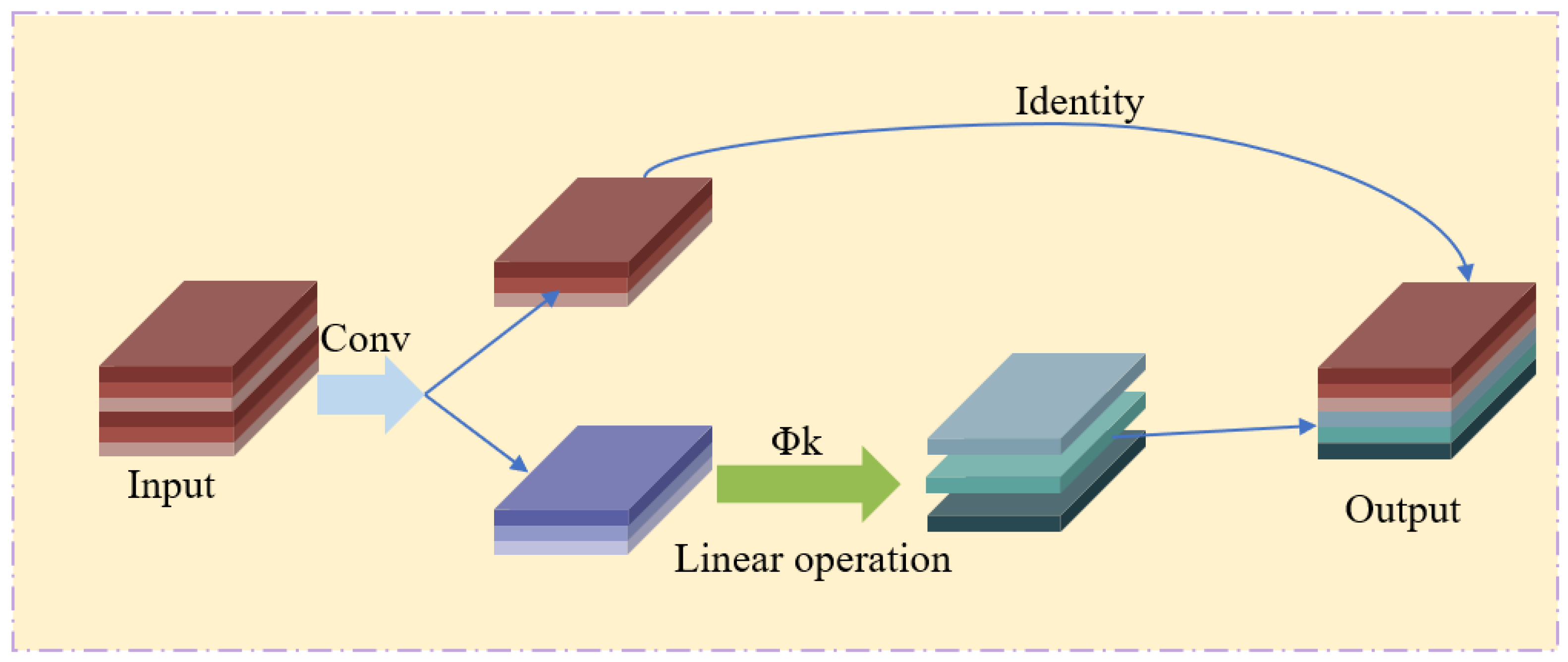

2.2.1. Convolutional Neural Network GhostModule

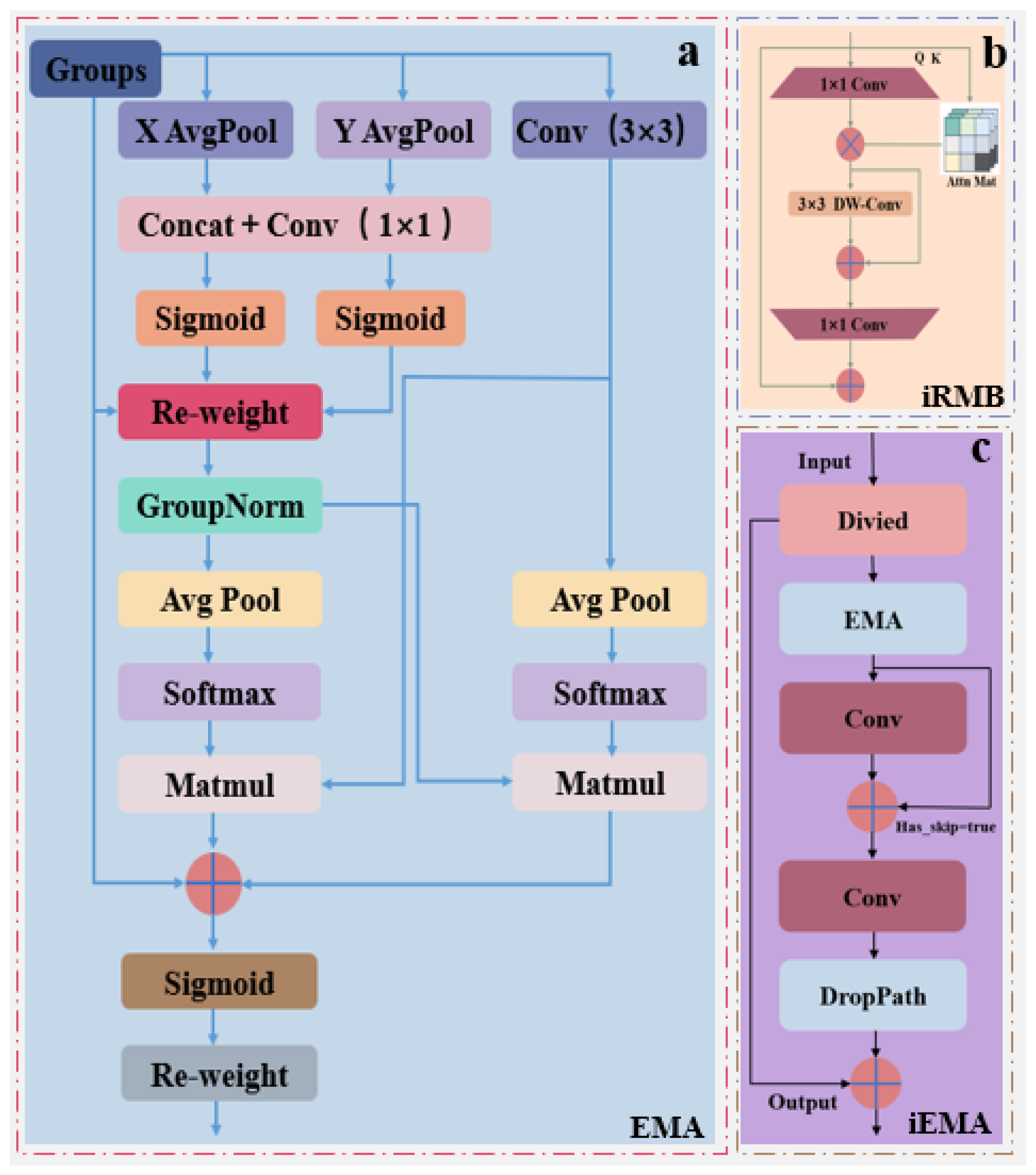
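
GhostNet (Han et al., 2020) lowers the cost of a convolution by computing only part of the output maps with a regular convolution and deriving the rest with cheap depthwise operations. The sketch below counts the weights of one such module against a standard convolution. The ratio s = 2 and cheap-operation kernel d = 3 are GhostNet's usual defaults and are assumptions here, since the exact GIA-YOLO configuration is not given in this section. `conv_params` and `ghost_params` are hypothetical helper names, and bias and batch-normalization weights are ignored.

```python
import math


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias and BN ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weight count of a GhostNet-style Ghost module (bias and BN ignored).

    A primary k x k convolution yields m = ceil(c_out / s) intrinsic maps;
    a depthwise d x d "cheap operation" then derives (s - 1) * m ghost maps
    from them, and both sets are concatenated (trimmed to c_out channels).
    """
    m = math.ceil(c_out / s)
    primary = c_in * m * k * k
    cheap = m * (s - 1) * d * d  # one d x d depthwise filter per ghost map
    return primary + cheap


std = conv_params(128, 256, k=3)
ghost = ghost_params(128, 256, k=3)
print(std, ghost, round(std / ghost, 3))
```

For 128 input and 256 output channels with 3 × 3 kernels, the sketch gives 294,912 versus 148,608 weights, a ratio of about 1.98. This matches GhostNet's theoretical compression ratio s·c / (s + c - 1) with c = 128 input channels.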

2.2.2. The iEMA Fusion Attention Mechanism

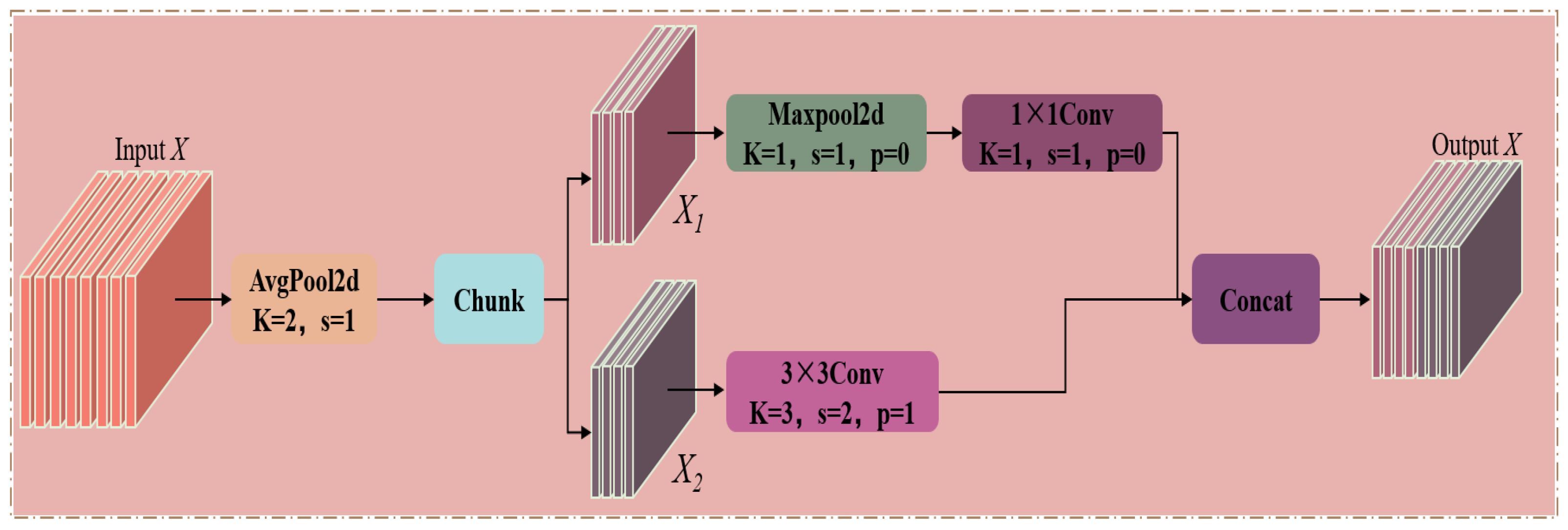

2.2.3. Downsampling Module ADown
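
ADown originates in YOLOv9 (Wang et al.). As we understand the public YOLOv9 code, it applies 2 × 2 average pooling with stride 1, splits the channels in half, passes one half through a 3 × 3 stride-2 convolution and the other through 3 × 3 stride-2 max pooling followed by a 1 × 1 convolution, and concatenates the two results. The sketch below assumes GIA-YOLO uses ADown in this form and compares its weight count and output size with a plain 3 × 3 stride-2 convolution. All helper names are hypothetical, and bias and batch-normalization weights are ignored.

```python
def conv_out(size: int, k: int, s: int, p: int) -> int:
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * p - k) // s + 1


def adown_out_size(size: int) -> int:
    """Output height/width of ADown (assumed YOLOv9-style layout)."""
    size = conv_out(size, k=2, s=1, p=0)  # 2x2 average pooling, stride 1
    return conv_out(size, k=3, s=2, p=1)  # 3x3 stride-2 conv; 3x3 max pool gives the same size


def strided_conv_params(c_in: int, c_out: int) -> int:
    """Weights of a plain 3x3 stride-2 downsampling convolution."""
    return c_in * c_out * 3 * 3


def adown_params(c_in: int, c_out: int) -> int:
    """Weights of ADown: channels are split in half after average pooling."""
    half_in, half_out = c_in // 2, c_out // 2
    conv_branch = half_in * half_out * 3 * 3  # 3x3 stride-2 conv on the first half
    pool_branch = half_in * half_out * 1 * 1  # max pool (no weights), then 1x1 conv
    return conv_branch + pool_branch


print(adown_out_size(640), strided_conv_params(128, 128), adown_params(128, 128))
```

For 128 input and output channels, ADown needs 40,960 weights against 147,456 for the plain strided convolution (a ratio of 5/18, about 28%), while a 640-pixel feature map is still halved to 320.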

2.3. Edge Device Deployment

2.4. Evaluation Indicators and Parameterization
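
The precision, recall and mAP columns in the result tables presumably follow the usual detection definitions, P = TP / (TP + FP) and R = TP / (TP + FN), with AP the area under the precision-recall curve. The IoU threshold and interpolation scheme behind the reported mAP are not stated in this section, so the sketch below is an assumption: it uses all-point (VOC-style) interpolation and treats nectarine as the only class, in which case mAP equals AP. Some toolkits instead sample the precision envelope at 101 recall points, which gives slightly different values. Matching detections to ground-truth boxes is assumed to have been done already.

```python
from typing import List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP), R = TP / (TP + FN); 0.0 for empty denominators."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at a fixed IoU threshold.

    scores: confidence of each detection; is_tp: whether that detection was
    matched to a ground-truth box (IoU >= threshold); num_gt: ground-truth count.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    rec, prec = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        rec.append(tp / num_gt)
        prec.append(tp / (tp + fp))
    # Add sentinels, then replace each precision by the maximum to its right
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the stepwise precision envelope
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


p, r = precision_recall(tp=3, fp=1, fn=1)
ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
print(p, r, ap)
```

In this toy example, three of four ground-truth fruits are found with one false positive, giving P = R = 0.75 and AP = 0.625.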

3. Results and Analysis

3.1. Analysis of Ablation Test Results

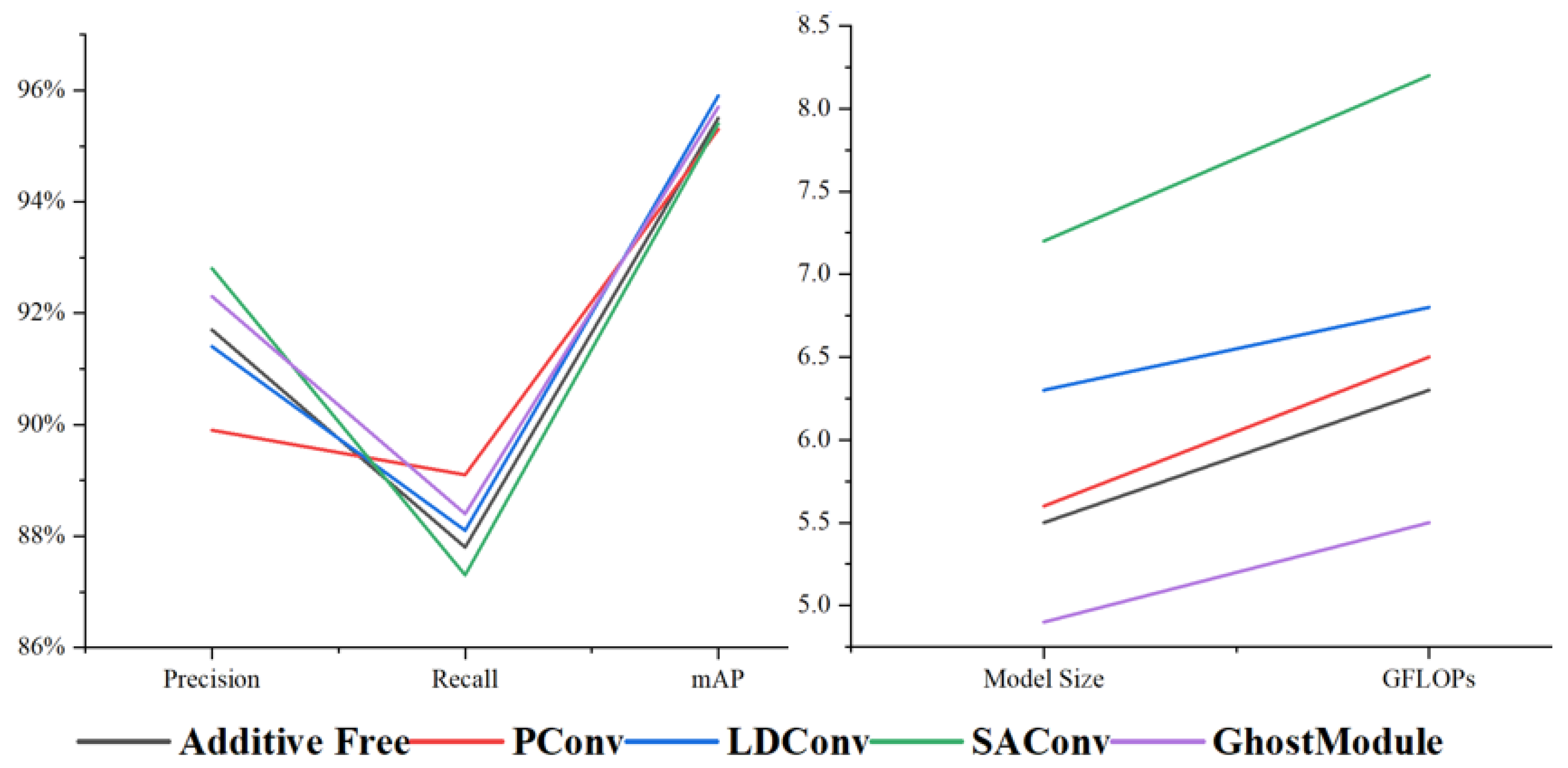

3.2. Analysis of the GhostModule Test Results

3.3. Analysis of the iEMA Attention Mechanism Test Results

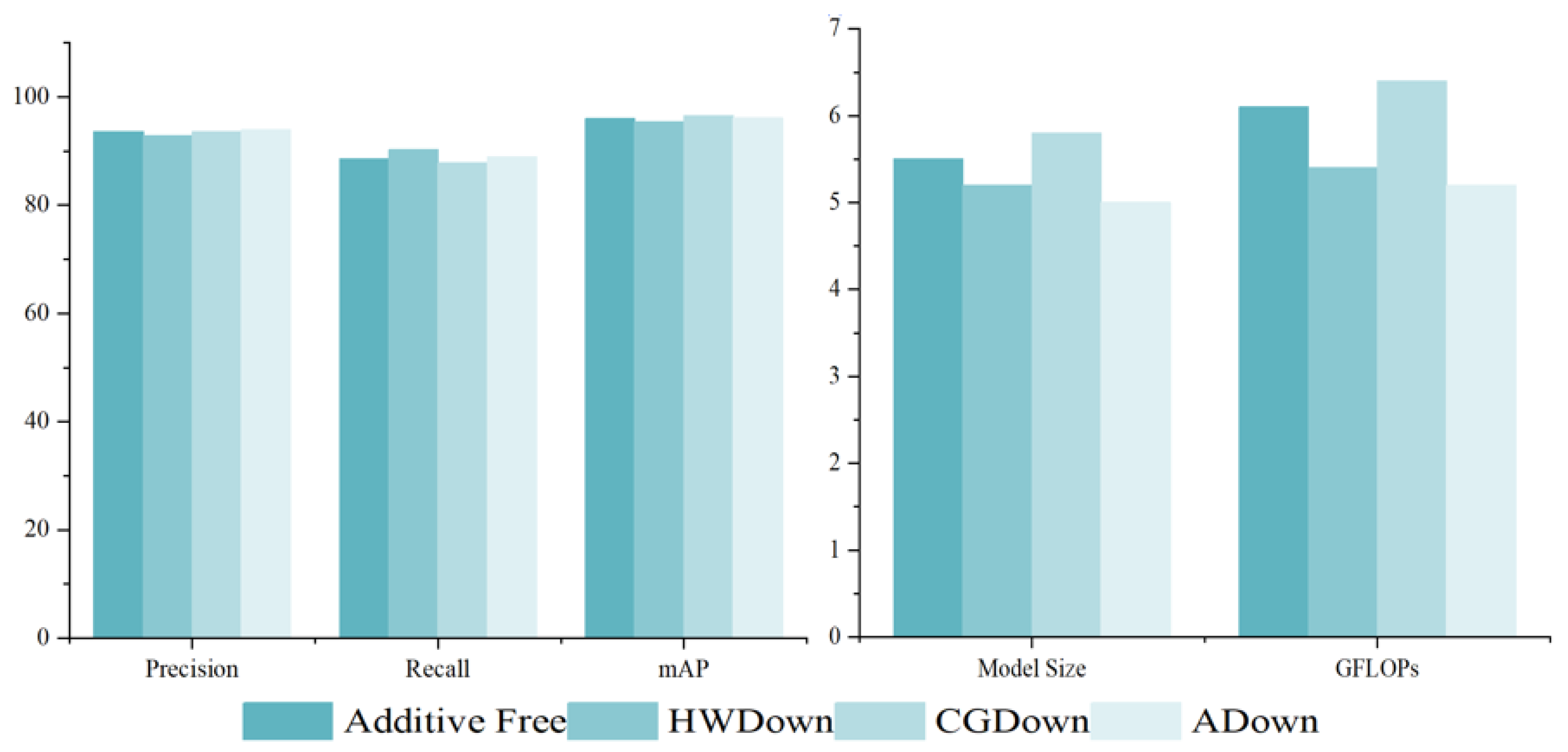

3.4. Analysis of the ADown Module Test Results

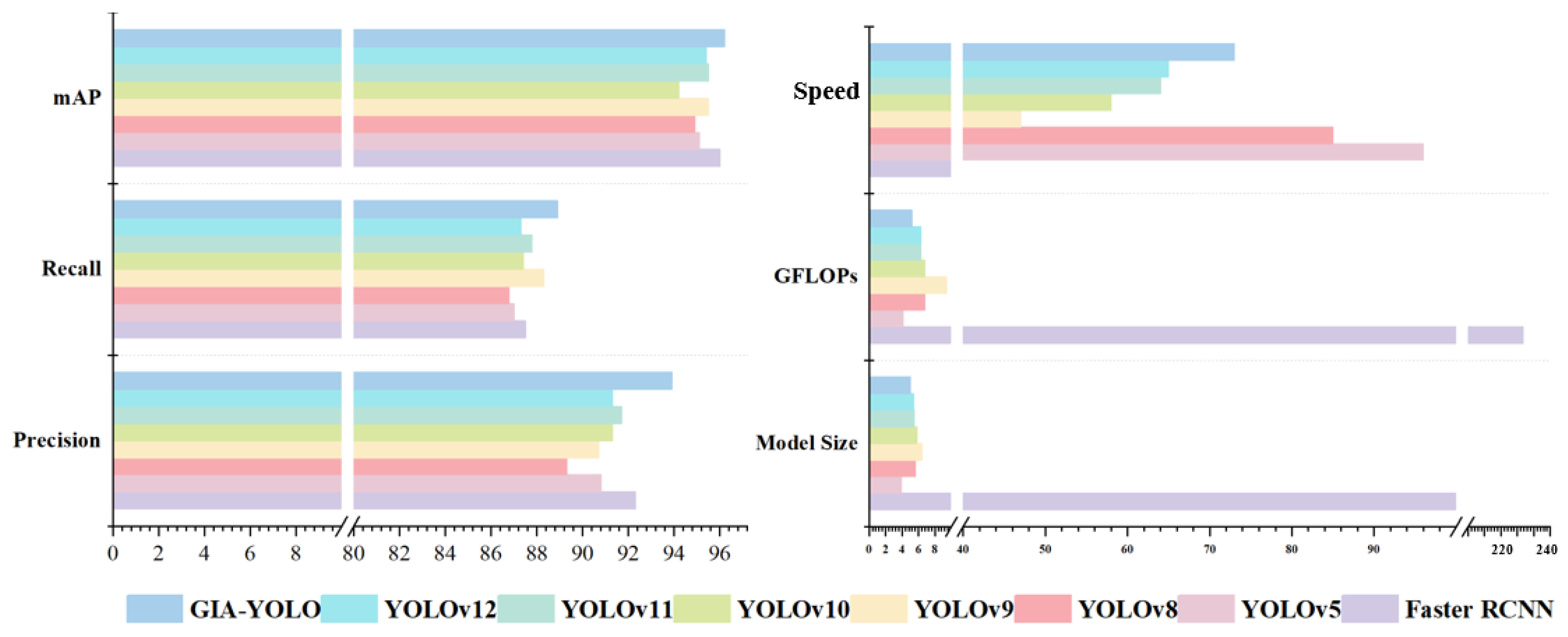

3.5. Mainstream Model Comparison Results

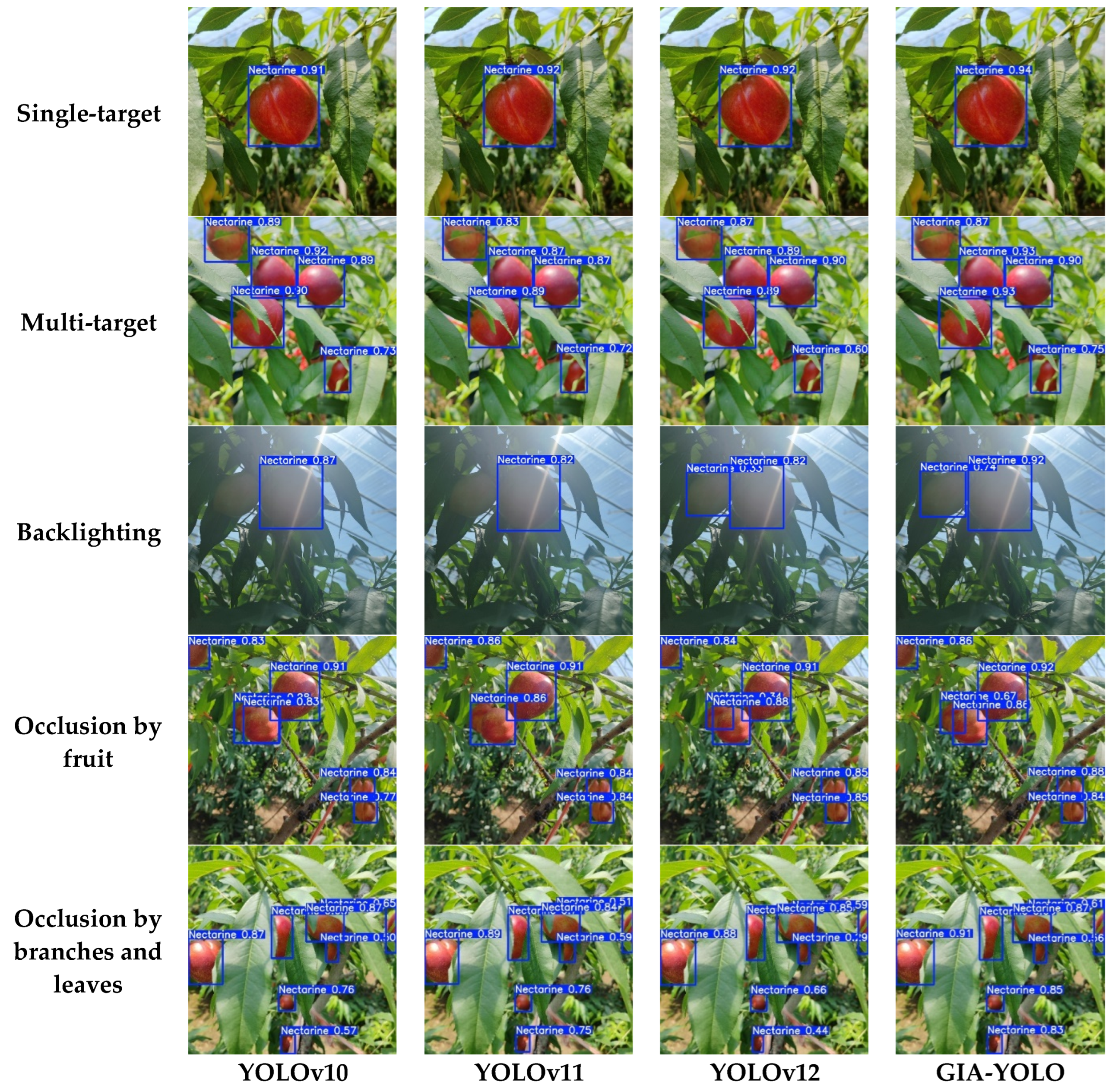

3.6. Visualization Analysis

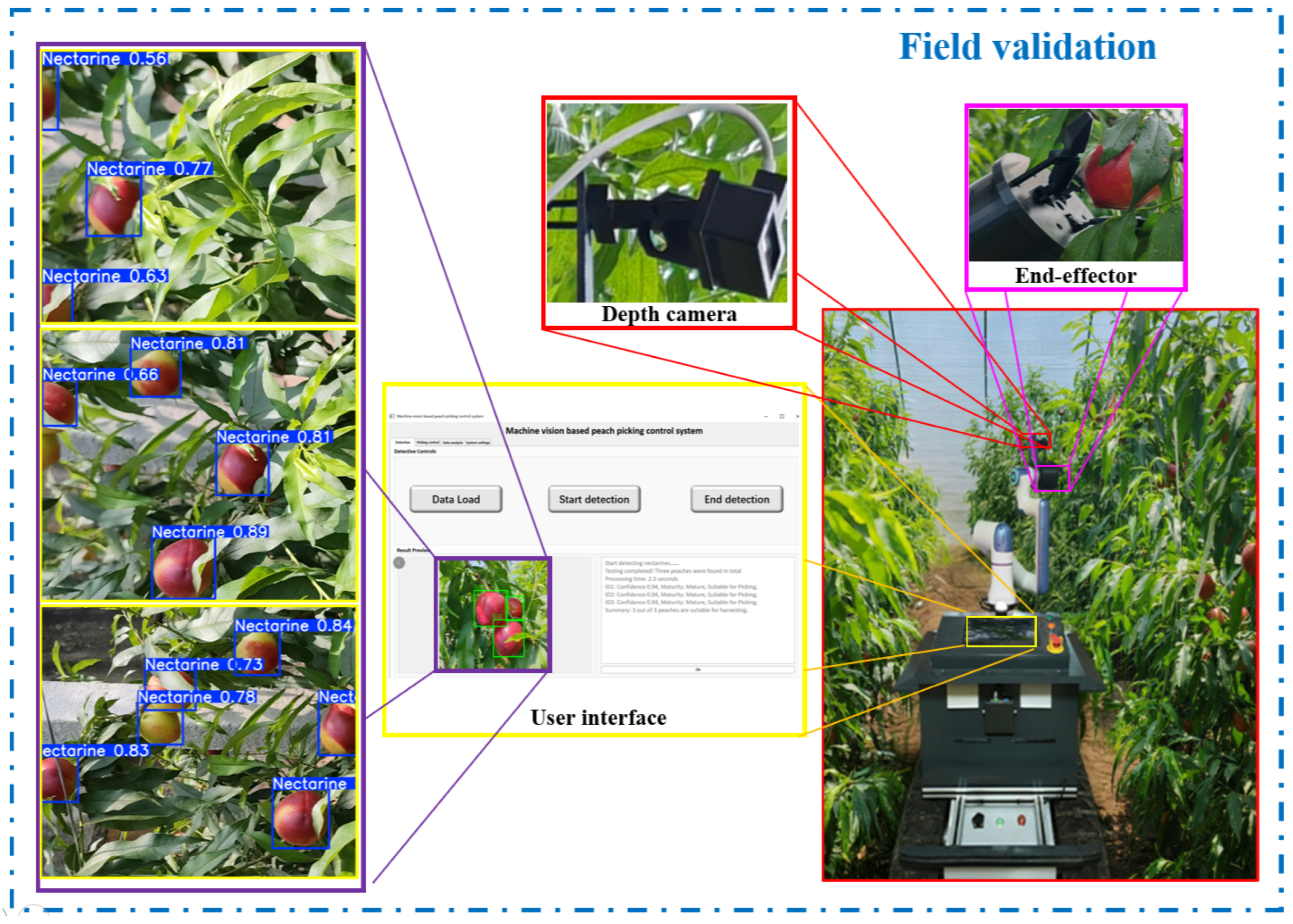

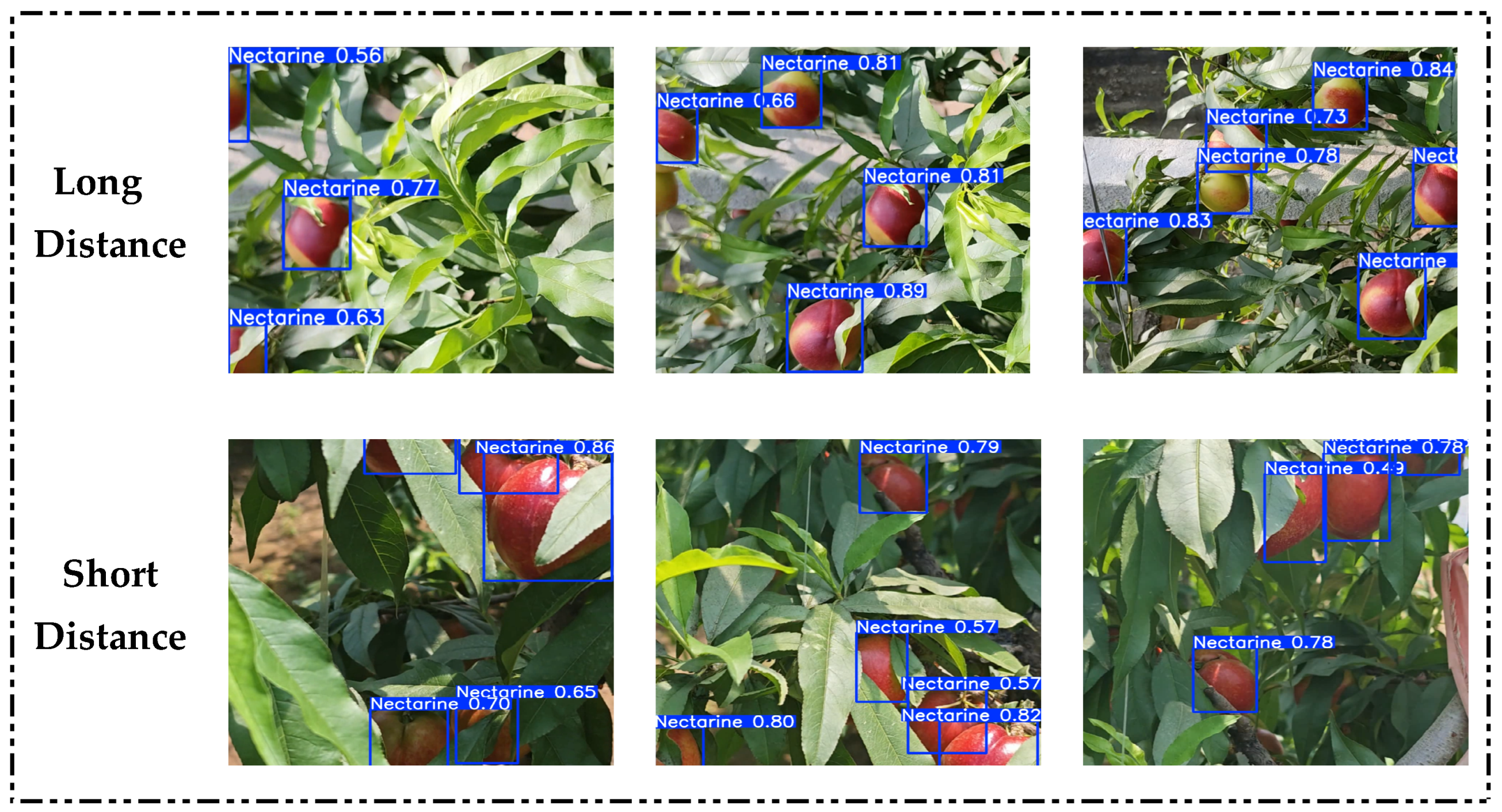

3.7. Experimental Validation in Nectarine Orchards

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sample Type | Number of Samples |
|---|---|
| Single-target | 419 |
| Multi-target | 771 |
| Backlighting | 435 |
| Occlusion by fruit | 754 |
| Occlusion by branches and leaves | 855 |

| Equipment | Model |
|---|---|
| Depth camera | Obi Zhongguang |
| Robotic arm | Nova series robotic arm |
| Industrial controller | Nuvo-8003 |
| CPU | Intel i7-8700 |
| GPU | GeForce RTX 2060 |

| GhostModule | iEMA | ADown | Precision (%) | Recall (%) | mAP (%) | Model Size (MB) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| × | × | × | 91.7 | 87.8 | 95.5 | 5.5 | 6.3 |
| √ | × | × | 92.3 | 88.4 | 95.7 | 4.9 | 5.5 |
| √ | √ | × | 93.6 | 88.5 | 96.0 | 5.5 | 6.1 |
| √ | √ | √ | 93.9 | 88.9 | 96.2 | 5.0 | 5.2 |
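
The ablation table reports absolute values only. A small sketch, with values copied from the table, turns any two rows into changes: percentage-point differences for precision, recall and mAP, and relative changes for model size and FLOPs. This split between point and relative changes is our presentation choice, and `compare` is a hypothetical helper.

```python
# Ablation rows: (GhostModule, iEMA, ADown) -> (P %, R %, mAP %, size MB, GFLOPs)
ABLATION = {
    (False, False, False): (91.7, 87.8, 95.5, 5.5, 6.3),
    (True, False, False): (92.3, 88.4, 95.7, 4.9, 5.5),
    (True, True, False): (93.6, 88.5, 96.0, 5.5, 6.1),
    (True, True, True): (93.9, 88.9, 96.2, 5.0, 5.2),
}


def compare(base, new):
    """Point changes for P/R/mAP and relative (%) changes for size and FLOPs."""
    names = ("precision", "recall", "mAP")
    out = {name: round(new[i] - base[i], 1) for i, name in enumerate(names)}
    out["size_pct"] = round(100 * (new[3] - base[3]) / base[3], 1)
    out["flops_pct"] = round(100 * (new[4] - base[4]) / base[4], 1)
    return out


print(compare(ABLATION[(False, False, False)], ABLATION[(True, True, True)]))
```

Relative to the first row (no modules added), the full model gains 2.2, 1.1 and 0.7 points in precision, recall and mAP, while model size falls by about 9.1% and FLOPs by about 17.5%. Differencing consecutive rows the same way shows iEMA adding about 10.9% FLOPs, which ADown then more than recovers.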

| Module | Precision (%) | Recall (%) | mAP (%) | Model Size (MB) | FLOPs (G) |
|---|---|---|---|---|---|
| None (baseline) | 91.7 | 87.8 | 95.5 | 5.5 | 6.3 |
| PConv | 89.9 | 89.1 | 95.3 | 5.2 | 5.9 |
| LDConv | 91.4 | 88.1 | 95.9 | 6.3 | 6.8 |
| SAConv | 92.8 | 87.3 | 95.4 | 7.2 | 8.2 |
| GhostModule | 92.3 | 88.4 | 95.7 | 4.9 | 5.5 |

| Module | Precision (%) | Recall (%) | mAP (%) | Model Size (MB) | FLOPs (G) |
|---|---|---|---|---|---|
| None (baseline) | 92.3 | 88.4 | 95.7 | 4.9 | 5.5 |
| SEAM | 93.7 | 86.6 | 95.9 | 5.6 | 6.0 |
| ACmix | 91.6 | 89.1 | 94.9 | 5.6 | 6.2 |
| ECA | 93.3 | 86.9 | 96.2 | 5.5 | 6.0 |
| iRMB | 92.1 | 88.4 | 95.3 | 5.6 | 8.0 |
| iEMA | 93.6 | 88.5 | 96.0 | 5.5 | 6.1 |

| Module | Precision (%) | Recall (%) | mAP (%) | Model Size (MB) | FLOPs (G) |
|---|---|---|---|---|---|
| None (baseline) | 93.6 | 88.5 | 96.0 | 5.5 | 6.1 |
| HWDown | 92.8 | 90.2 | 95.4 | 5.2 | 5.4 |
| CGDown | 93.6 | 87.8 | 96.5 | 5.8 | 6.4 |
| ADown | 93.9 | 88.9 | 96.2 | 5.0 | 5.2 |

| Model | Precision (%) | Recall (%) | mAP (%) | Model Size (MB) | FLOPs (G) | Speed (fps) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 92.3 | 87.5 | 96.0 | 112.4 | 236.7 | 19 |
| YOLOv5 | 90.8 | 87.0 | 95.1 | 3.9 | 4.1 | 96 |
| YOLOv8 | 89.3 | 86.8 | 94.9 | 5.6 | 6.8 | 85 |
| YOLOv9 | 90.7 | 88.3 | 95.5 | 6.4 | 9.4 | 47 |
| YOLOv10 | 91.3 | 87.4 | 94.2 | 5.8 | 6.8 | 58 |
| YOLOv11 | 91.7 | 87.8 | 95.5 | 5.5 | 6.3 | 64 |
| YOLOv12 | 91.3 | 87.3 | 95.4 | 5.4 | 6.3 | 65 |
| GIA-YOLO | 93.9 | 88.9 | 96.2 | 5.0 | 5.2 | 73 |
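
The model comparison trades accuracy against computational cost. One compact way to read it, not part of the original analysis, is a Pareto check: a model is kept if no other model has at least as high an mAP and at most as many FLOPs, with one of the two strictly better. The sketch below uses values copied from the table and treats mAP and FLOPs as the only objectives, ignoring speed and model size; `pareto_front` is a hypothetical helper.

```python
from typing import List, Tuple

# (model, mAP %, GFLOPs) copied from the comparison table
MODELS: List[Tuple[str, float, float]] = [
    ("Faster R-CNN", 96.0, 236.7),
    ("YOLOv5", 95.1, 4.1),
    ("YOLOv8", 94.9, 6.8),
    ("YOLOv9", 95.5, 9.4),
    ("YOLOv10", 94.2, 6.8),
    ("YOLOv11", 95.5, 6.3),
    ("YOLOv12", 95.4, 6.3),
    ("GIA-YOLO", 96.2, 5.2),
]


def pareto_front(models: List[Tuple[str, float, float]]) -> List[str]:
    """Names of models not dominated on (higher mAP, lower FLOPs)."""
    front = []
    for name, m, f in models:
        dominated = any(
            m2 >= m and f2 <= f and (m2 > m or f2 < f)
            for other, m2, f2 in models
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


print(pareto_front(MODELS))
```

With these values the front contains only YOLOv5 (the cheapest model) and GIA-YOLO (the highest mAP); every other model is matched or beaten by GIA-YOLO on both axes.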

| Category | Total (count) | Detected (count) | Missed (count) | False Positives (count) | Missed Detection Rate (%) | False Detection Rate (%) |
|---|---|---|---|---|---|---|
| Long Distance | 104 | 84 | 9 | 11 | 8.7 | 10.5 |
| Short Distance | 87 | 77 | 4 | 6 | 4.6 | 6.9 |
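
The missed and false detection rates in the field test are not defined in this section. Dividing the missed and false-positive counts by the total number reproduces three of the four tabulated rates after rounding to one decimal, so the sketch below assumes those definitions. Under this assumption the long-distance false detection rate comes to about 10.58%, listed as 10.5% in the table. In both rows the detected, missed and false-positive counts sum exactly to the total. `rates` is a hypothetical helper.

```python
# (category, total, detected, missed, false positives) from the field test table
FIELD = [
    ("Long Distance", 104, 84, 9, 11),
    ("Short Distance", 87, 77, 4, 6),
]


def rates(total: int, missed: int, false_pos: int):
    """Assumed definitions: miss rate = missed / total, false rate = FP / total (%)."""
    return 100 * missed / total, 100 * false_pos / total


for name, total, det, miss, fp in FIELD:
    miss_rate, false_rate = rates(total, miss, fp)
    print(f"{name}: counts sum to total: {det + miss + fp == total}, "
          f"miss {miss_rate:.2f}%, false {false_rate:.2f}%")
```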

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, L.; Li, Y.; Du, Y.; Gao, A.; Ma, W.; Song, Y.; Han, X. GIA-YOLO: A Target Detection Method for Nectarine Picking Robots in Facility Orchards. Agronomy 2025, 15, 1934. https://doi.org/10.3390/agronomy15081934
