Banana Fusarium Wilt Recognition Based on UAV Multi-Spectral Imagery and Automatically Constructed Enhanced Features

Abstract

1. Introduction

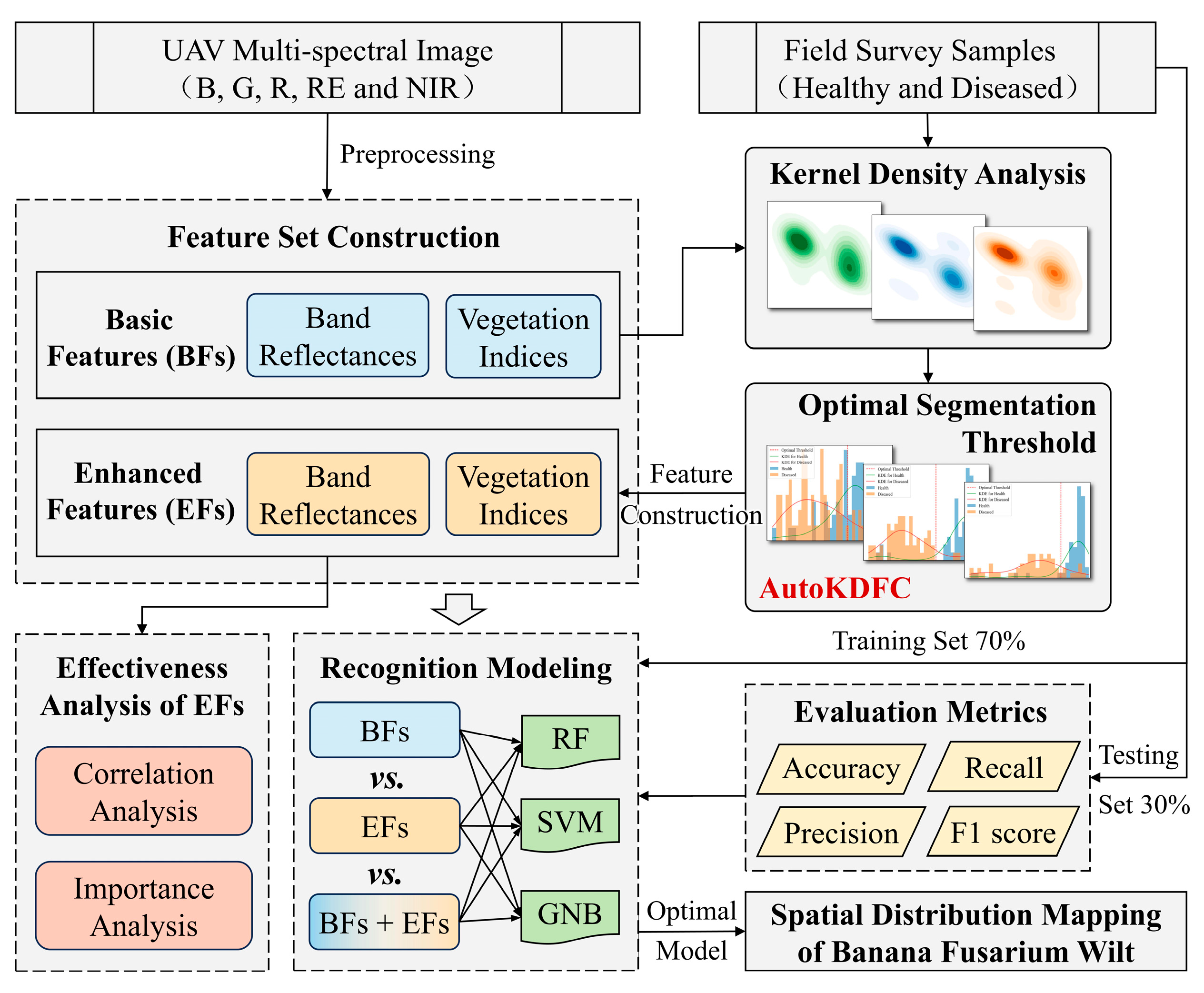

2. Materials and Methods

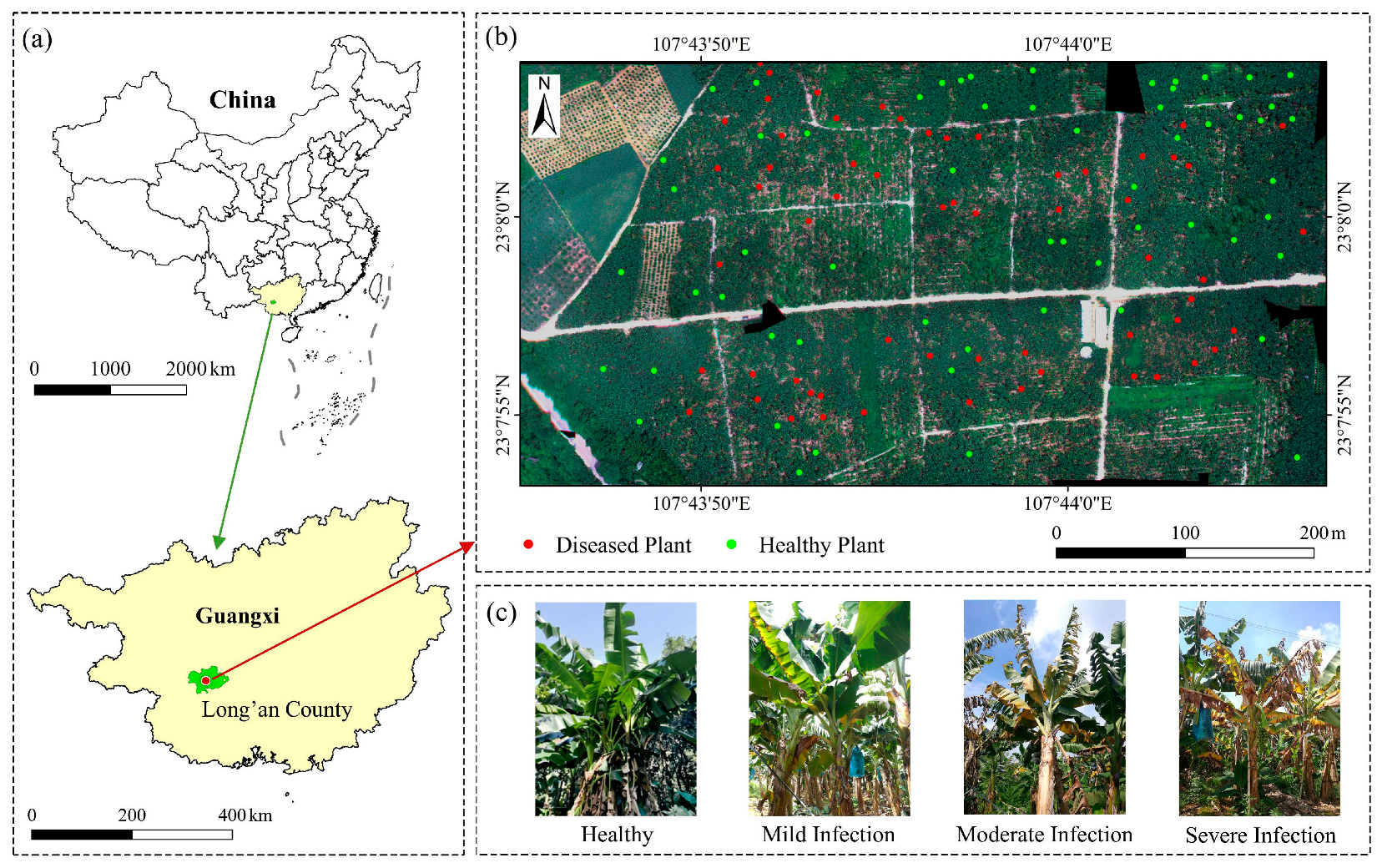

2.1. Study Area and Data

2.1.1. Study Area

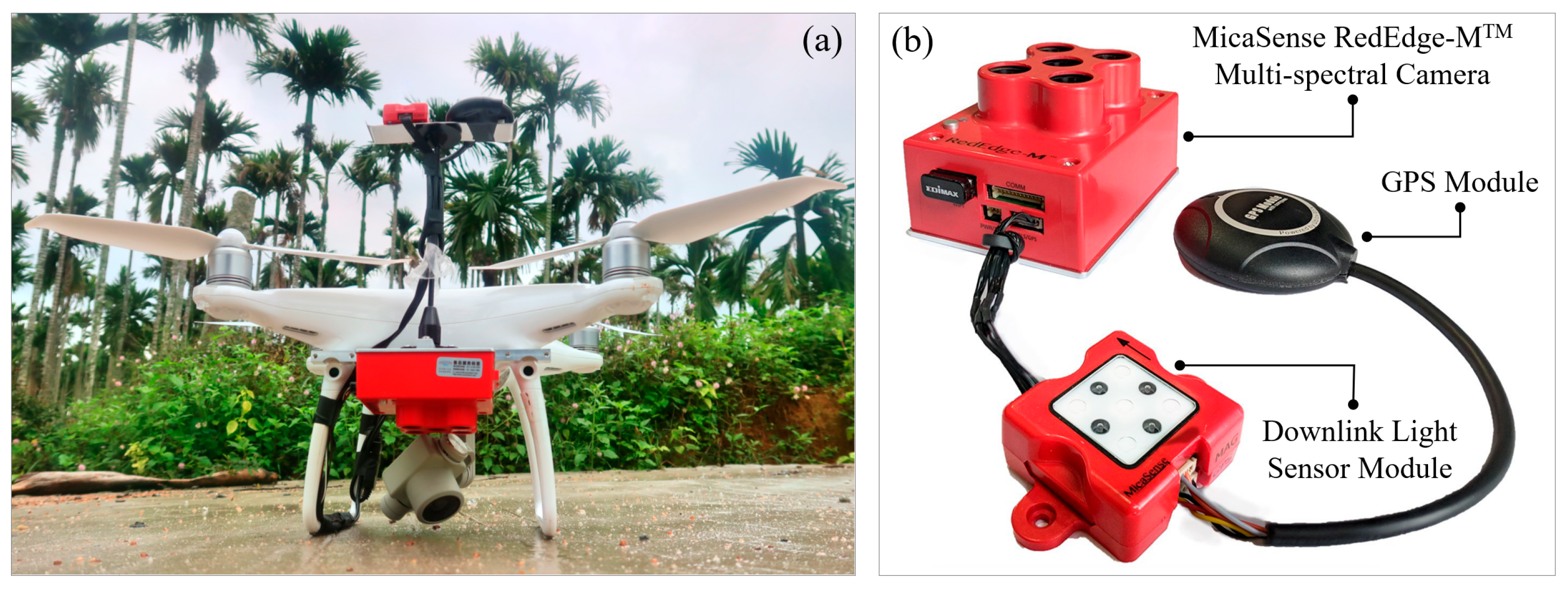

2.1.2. Data and Preprocessing

Field Survey of Banana Fusarium Wilt

UAV Multi-Spectral Data Acquisition and Preprocessing

2.2. Methods

2.2.1. Construction of Basic Features

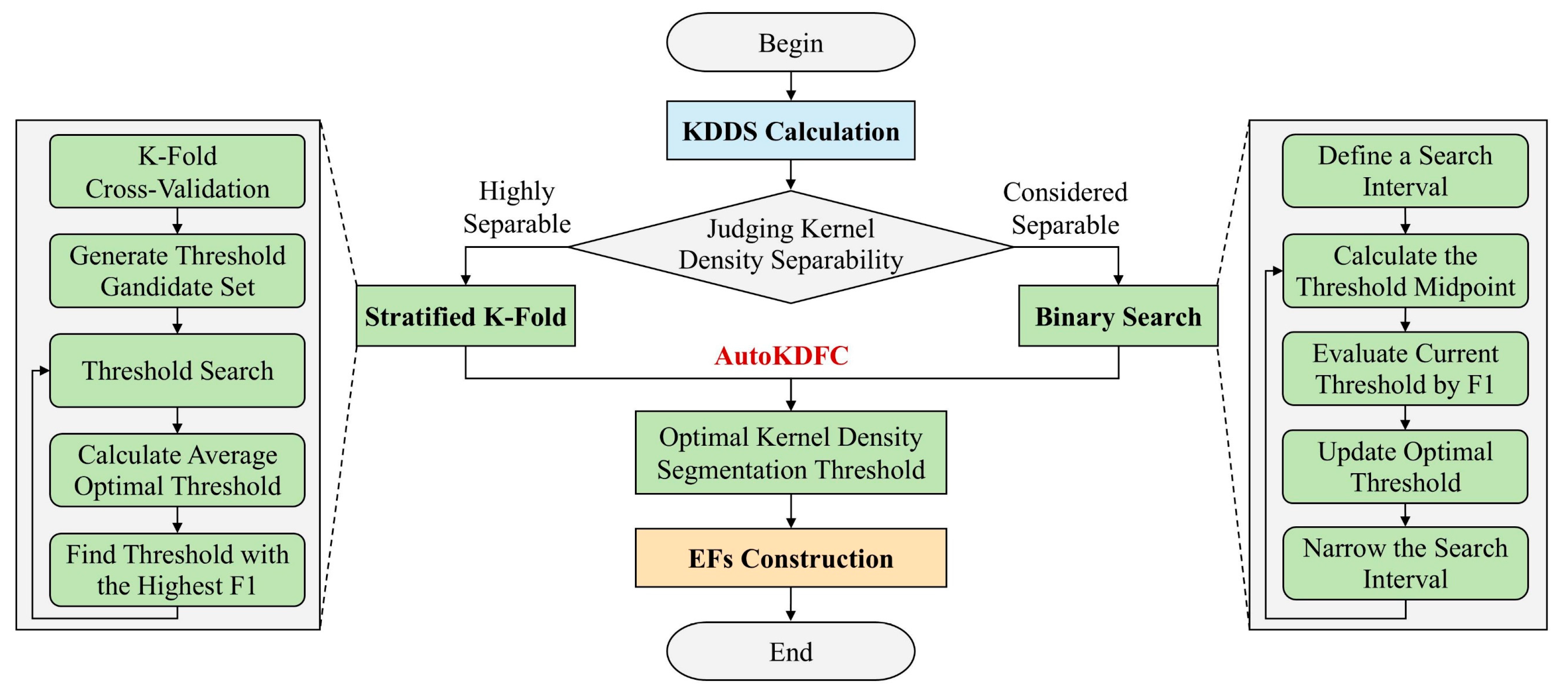

2.2.2. Automated Construction of Enhanced Features

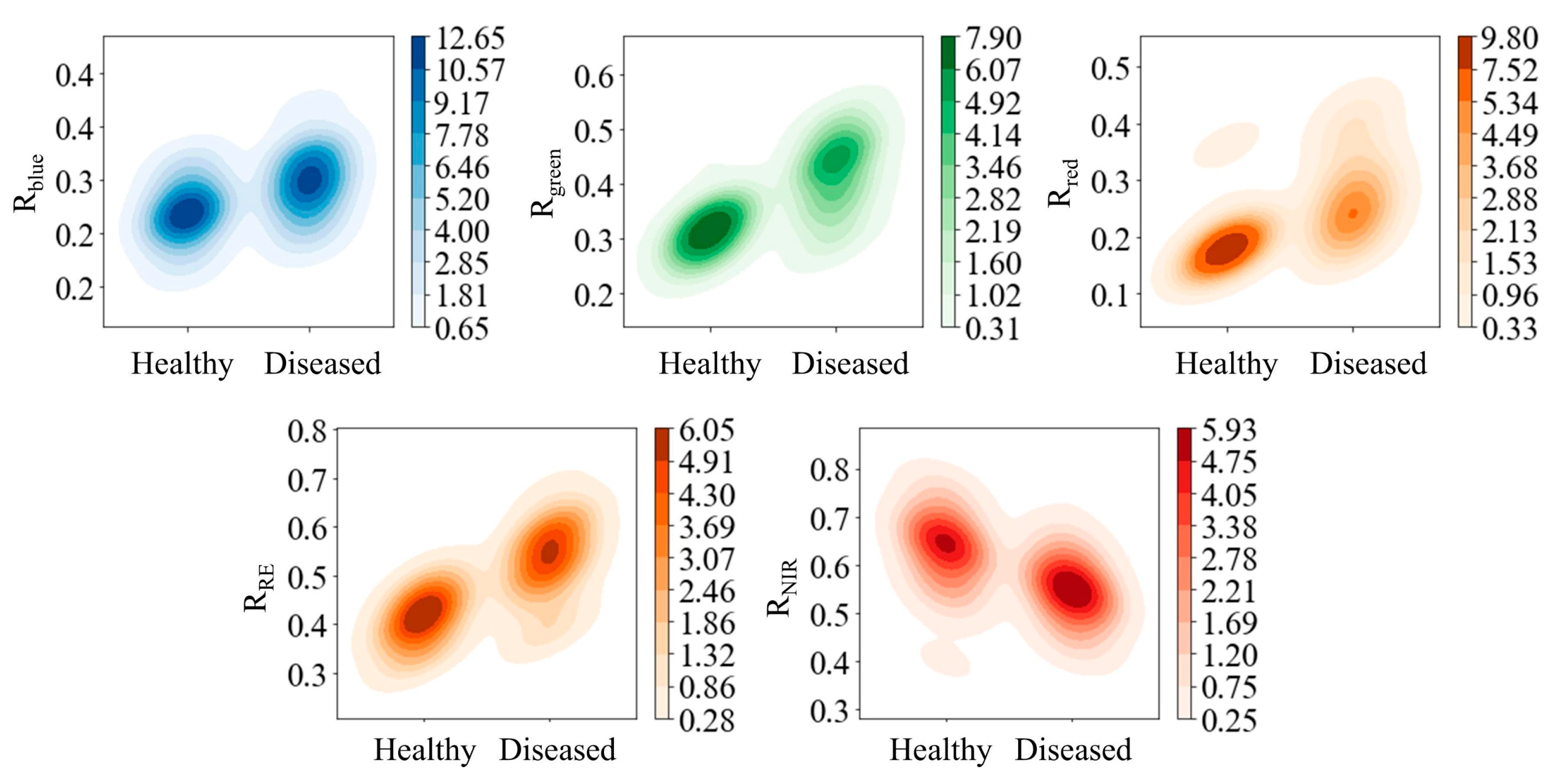

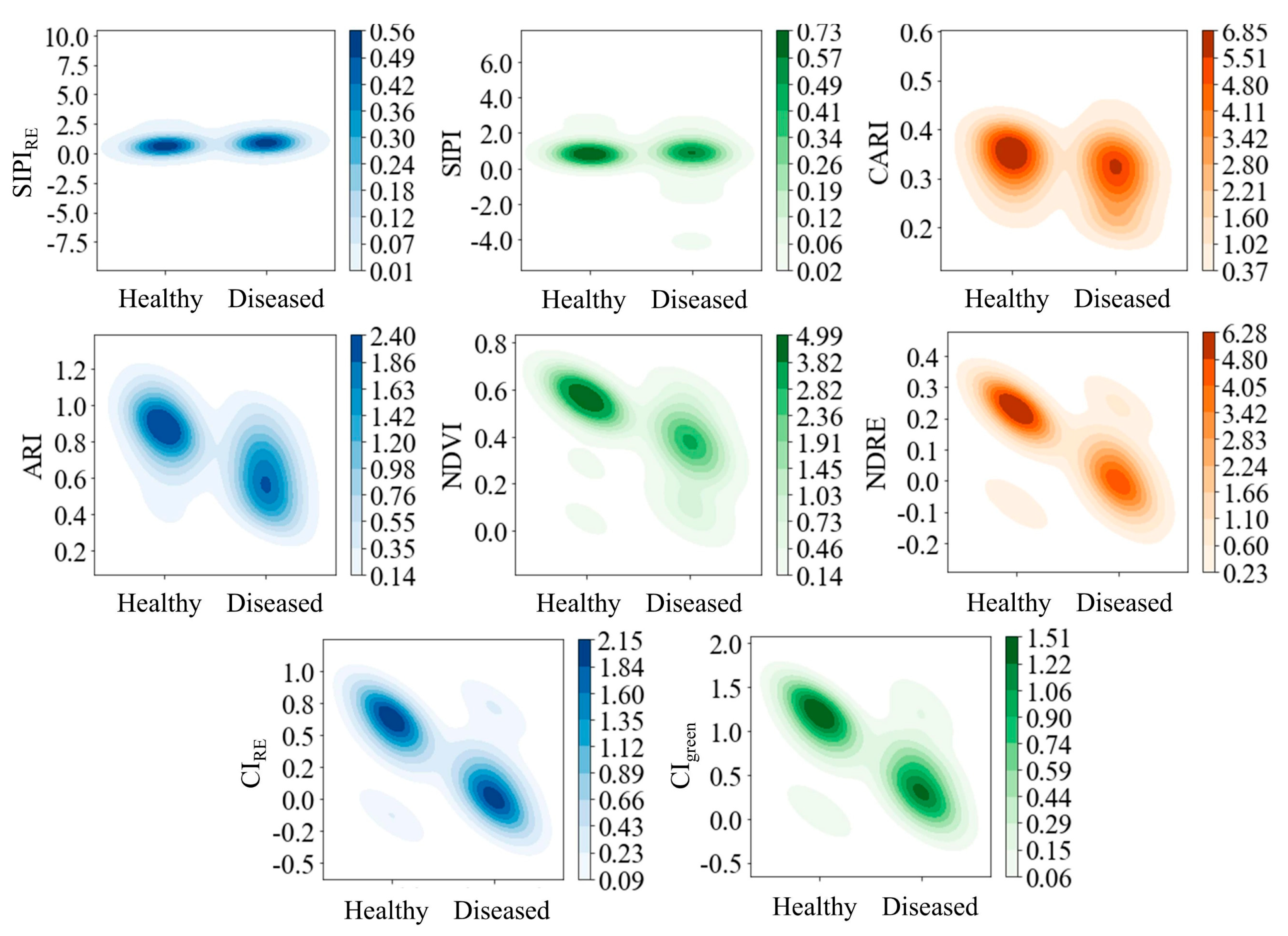

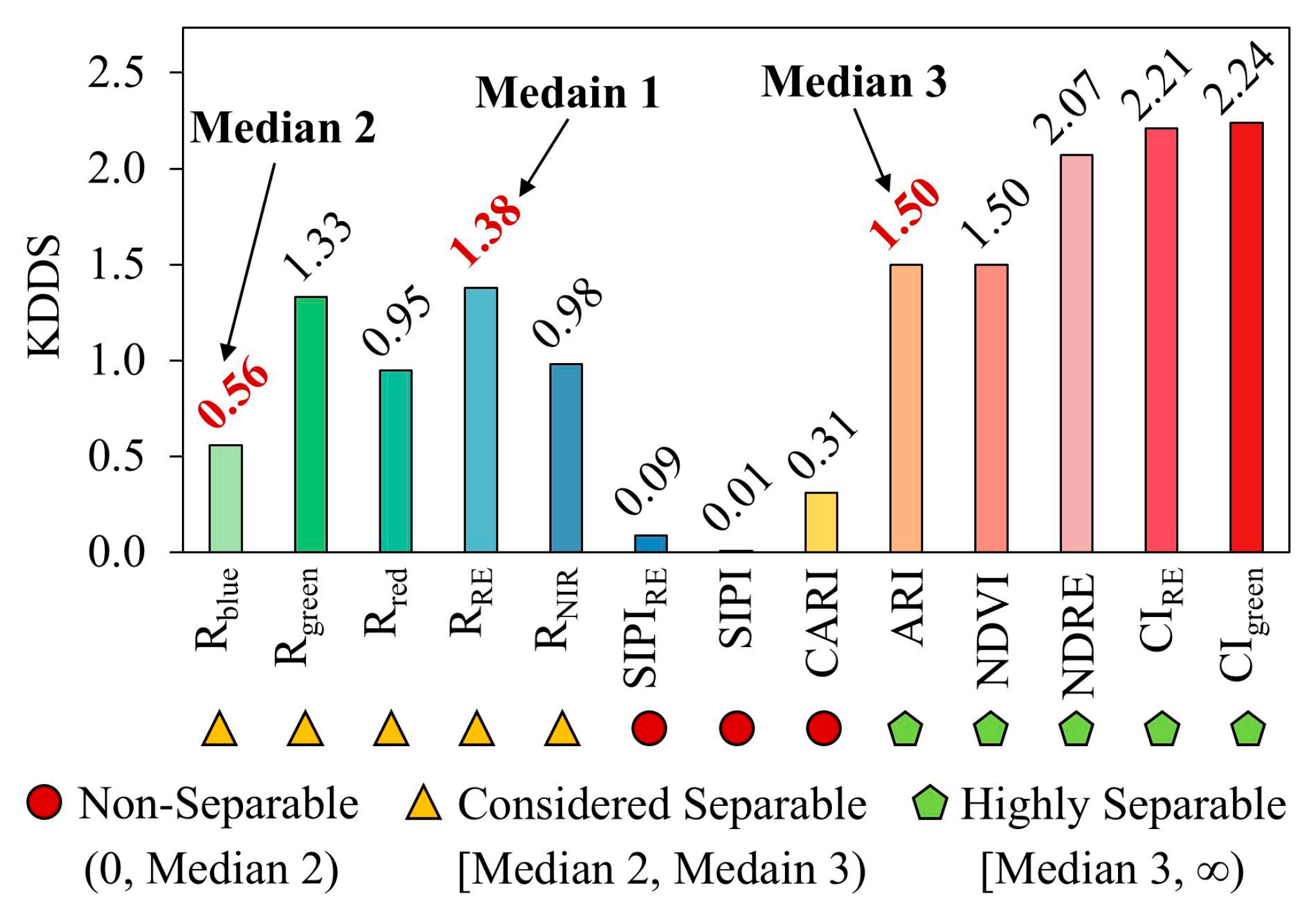

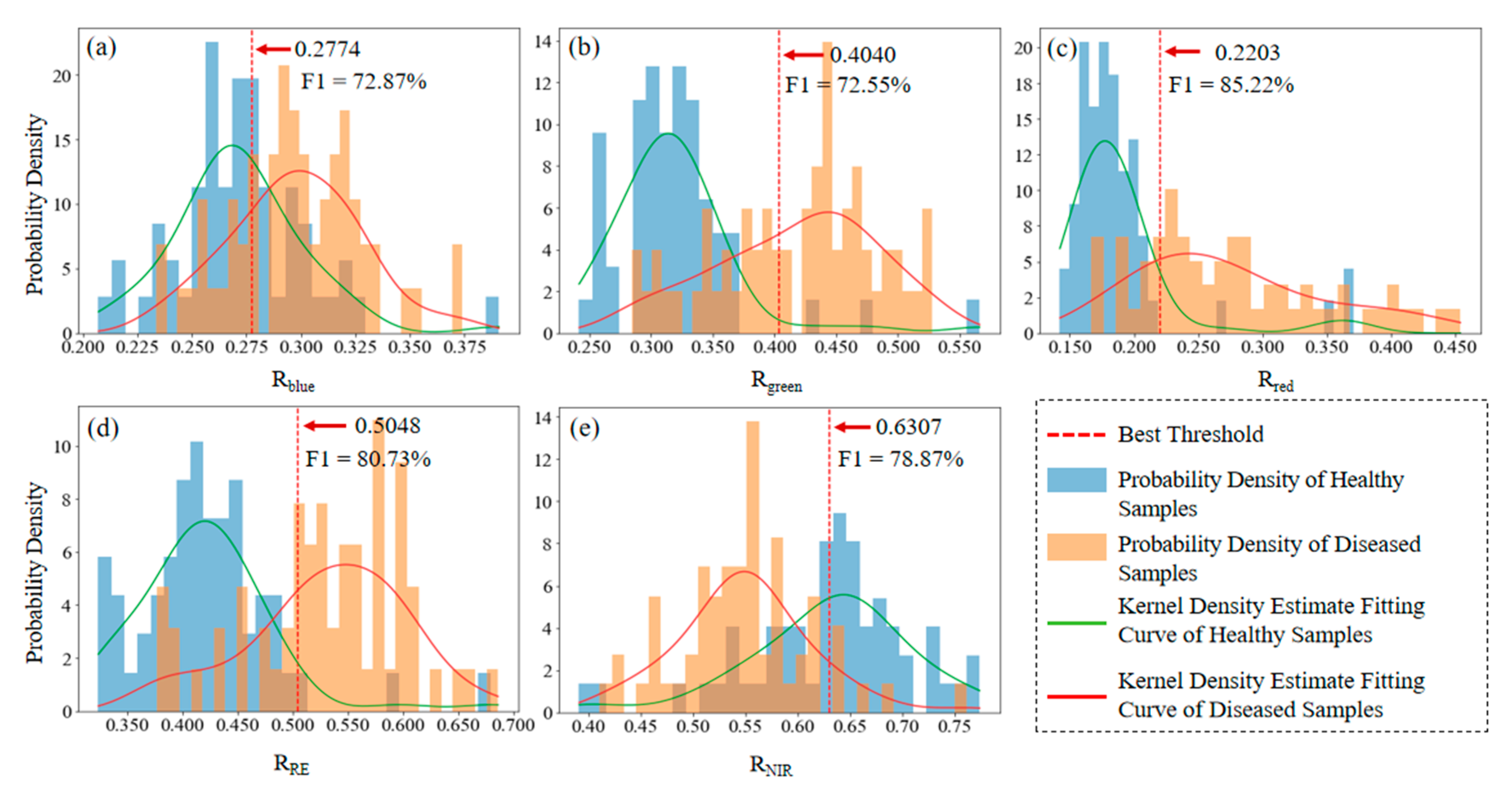

Evaluation of Basic Feature Separability Using Kernel Density

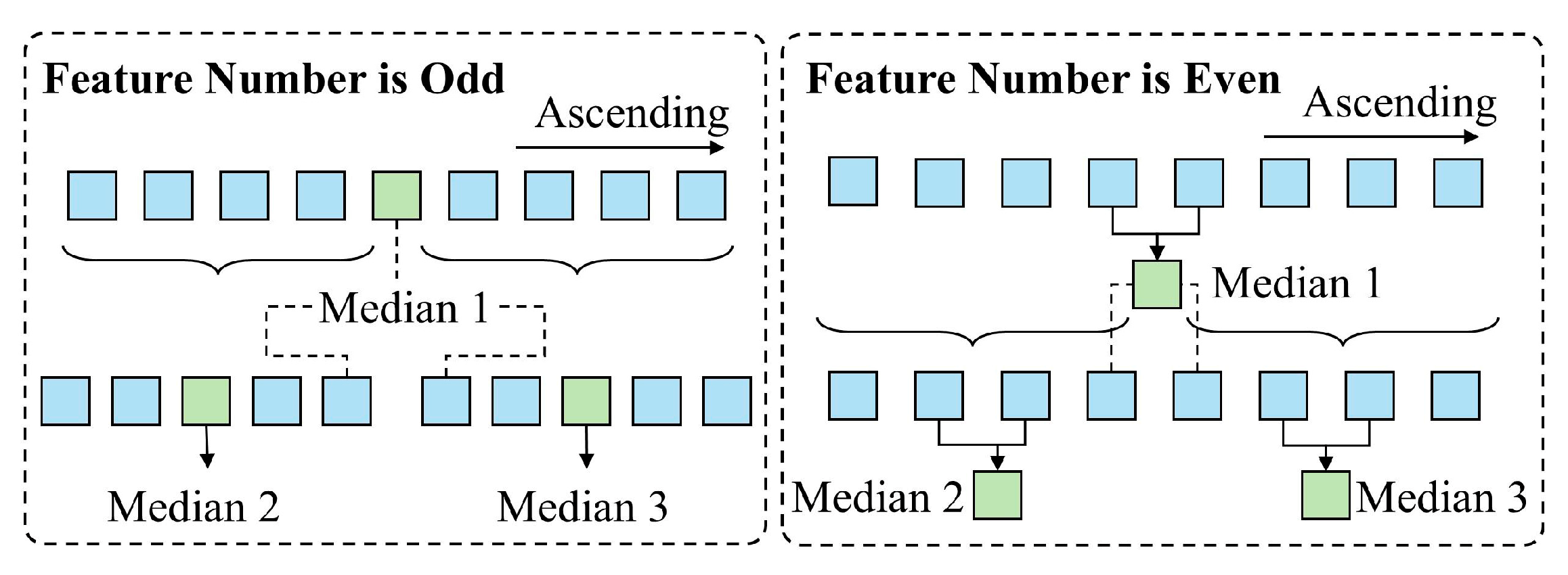

Automatic Determination of Optimal Segmentation Thresholds

(1) Highly Separable Features: Stratified K-Fold Cross-Validation

- Uniformly sample candidate thresholds within the range from the minimum to maximum values of the basic feature (e.g., NDVI) in the training set.

- For each threshold, label samples based on whether the NDVI value is below or above the threshold (label 0 or 1, respectively).

- Compute the F1 score on the validation set for each threshold.

- Identify the threshold with the highest F1 score and record both the threshold and the corresponding F1 score.

- Compute the mean F1 score and the mean optimal threshold across all folds.

(2) Considered Separable Features: Binary Search

- Set the minimum and maximum values of the feature (e.g., Rblue) as the initial search interval.

- In each iteration, compute the midpoint as a candidate threshold and evaluate its F1 score.

- If the current F1 score exceeds the previous best, update the optimal threshold.

- Narrow the search interval according to the F1 score: if the left half of the interval yields a better F1 score, keep the left half (update Rblue_max); otherwise, keep the right half (update Rblue_min).

- Terminate the search when the interval width falls below 1 × 10⁻⁵ or the maximum number of iterations (max_iter) is reached, and return the optimal threshold and its F1 score.
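The two threshold-search procedures can be sketched in plain Python as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code: the function names, k = 5 folds, 100 candidate thresholds, and max_iter = 100 are hypothetical choices; samples at or above the threshold are labelled 1, following the labelling rule in (1); and the binary-search step assumes that "the left interval yields a better F1 score" is judged by the F1 score at the centre of each half.

```python
import random
from statistics import mean


def f1_score(y_true, y_pred):
    """Binary F1 score, treating label 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def predict(values, threshold):
    """Label 0 below the threshold and 1 at or above it (assumed tie rule)."""
    return [1 if v >= threshold else 0 for v in values]


def stratified_folds(labels, k, seed=0):
    """Deal the shuffled indices of each class round-robin into k folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def kfold_threshold(values, labels, k=5, n_candidates=100, seed=0):
    """(1) Grid search per fold; return the mean best threshold and mean best F1."""
    best_ts, best_f1s = [], []
    for fold in stratified_folds(labels, k, seed):
        held_out = set(fold)
        train = [v for i, v in enumerate(values) if i not in held_out]
        lo, hi = min(train), max(train)  # candidate range comes from the training split
        step = (hi - lo) / (n_candidates - 1)
        x_val = [values[i] for i in fold]
        y_val = [labels[i] for i in fold]
        best_t, best_f1 = lo, -1.0
        for c in range(n_candidates):
            t = lo + c * step
            score = f1_score(y_val, predict(x_val, t))  # scored on the validation fold
            if score > best_f1:
                best_t, best_f1 = t, score
        best_ts.append(best_t)
        best_f1s.append(best_f1)
    return mean(best_ts), mean(best_f1s)


def bisection_threshold(values, labels, tol=1e-5, max_iter=100):
    """(2) Interval-halving search over [min, max] of the feature."""
    def score(t):
        return f1_score(labels, predict(values, t))

    lo, hi = min(values), max(values)
    best_t, best_f1 = lo, -1.0
    for _ in range(max_iter):
        if hi - lo < tol:  # stop once the interval is narrower than tol
            break
        mid = (lo + hi) / 2
        f_mid = score(mid)
        if f_mid > best_f1:
            best_t, best_f1 = mid, f_mid
        # Assumption: each half is judged by the F1 score at its centre.
        if score((lo + mid) / 2) > score((mid + hi) / 2):
            hi = mid  # keep the left half (lower the upper bound)
        else:
            lo = mid  # keep the right half (raise the lower bound)
    return best_t, best_f1


# Toy, perfectly separable data (not from the study)
toy_values = [0.1 + 0.03 * i for i in range(10)] + [0.6 + 0.03 * i for i in range(10)]
toy_labels = [0] * 10 + [1] * 10
cv_threshold, cv_f1 = kfold_threshold(toy_values, toy_labels)
bs_threshold, bs_f1 = bisection_threshold(toy_values, toy_labels)
print(f"k-fold: threshold={cv_threshold:.3f}, F1={cv_f1:.3f}")
print(f"bisection: threshold={bs_threshold:.3f}, F1={bs_f1:.3f}")
```

Because F1 as a function of the threshold is generally not unimodal, interval halving is a heuristic: it needs only a few evaluations but can settle on a local optimum, whereas the grid search in (1) scans the whole candidate range.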

Construction of Enhanced Features Based on Optimal Segmentation Thresholds (OST)

2.2.3. Machine Learning Modeling

2.2.4. Model Performance Evaluation

3. Results

3.1. Optimal Kernel Density Segmentation Thresholds

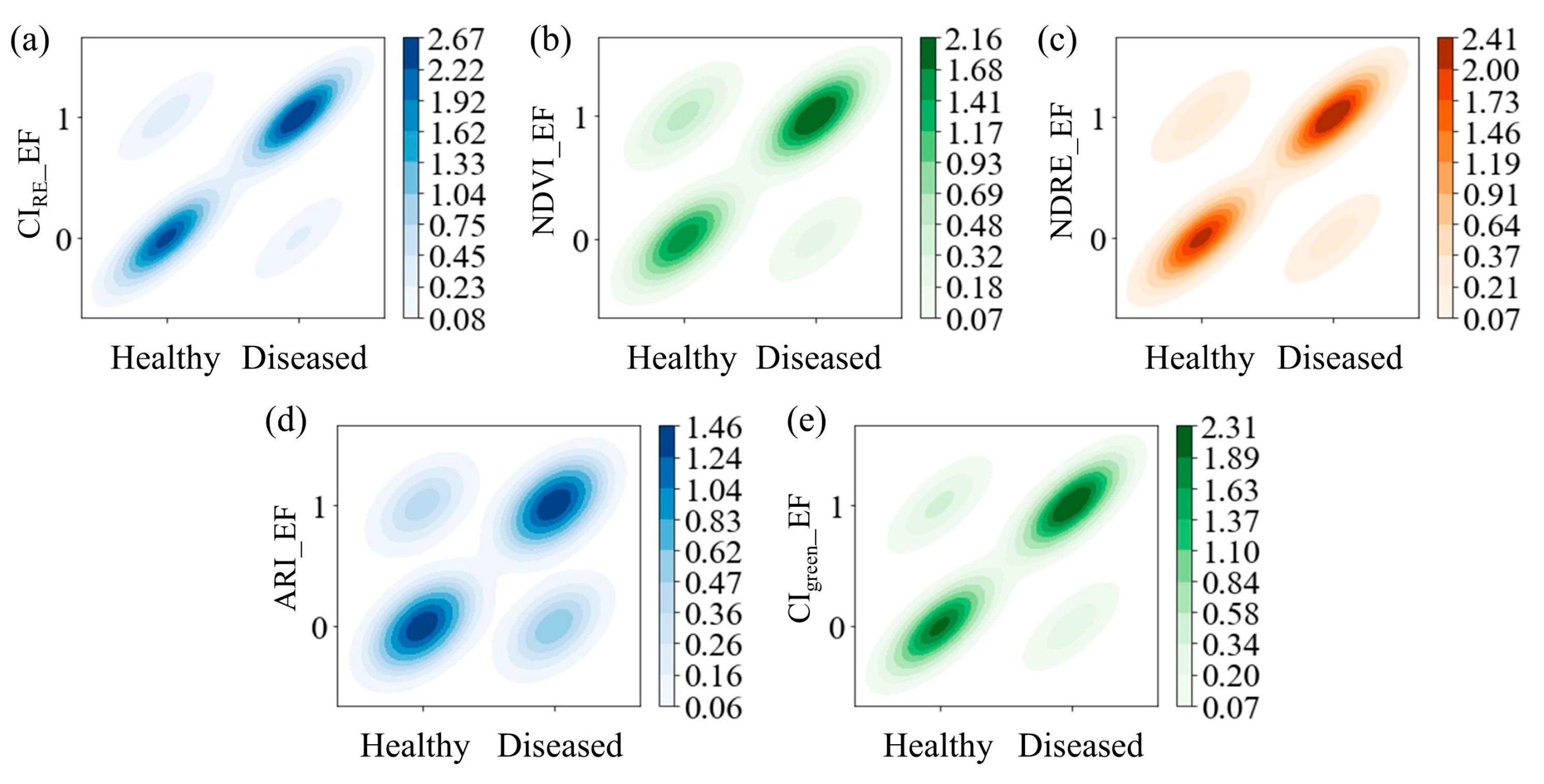

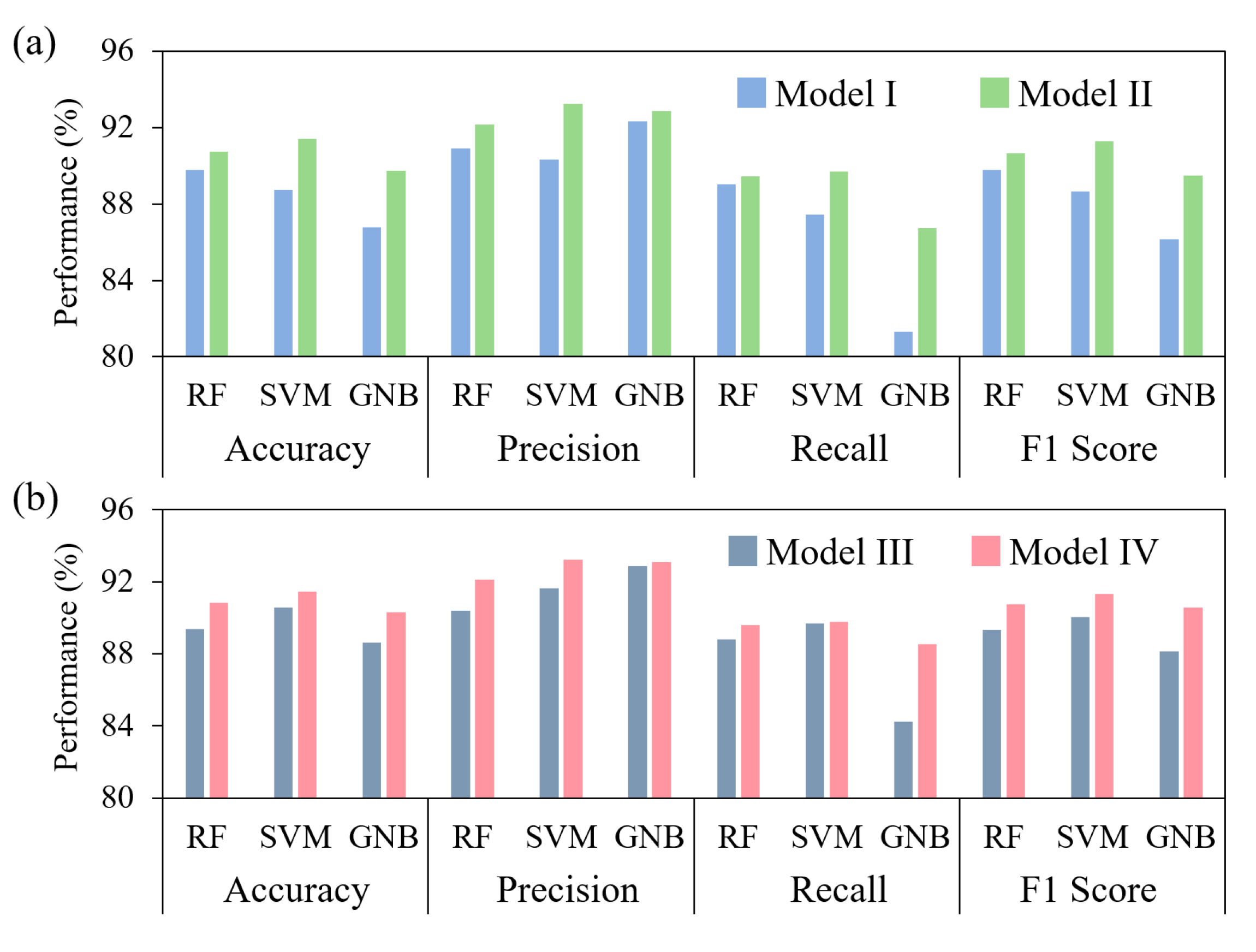

3.2. Effectiveness of Enhanced Features

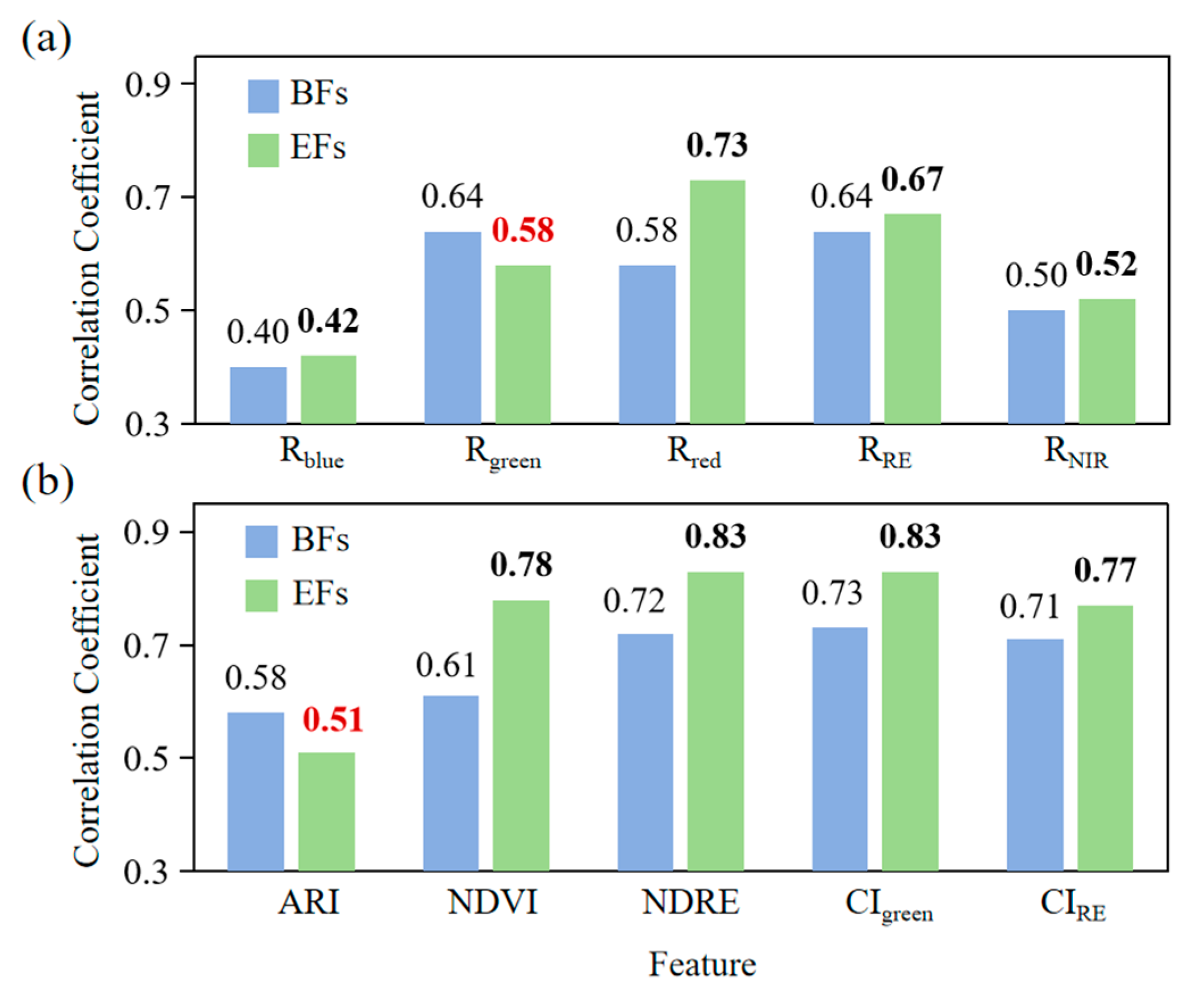

3.2.1. Correlation Analysis

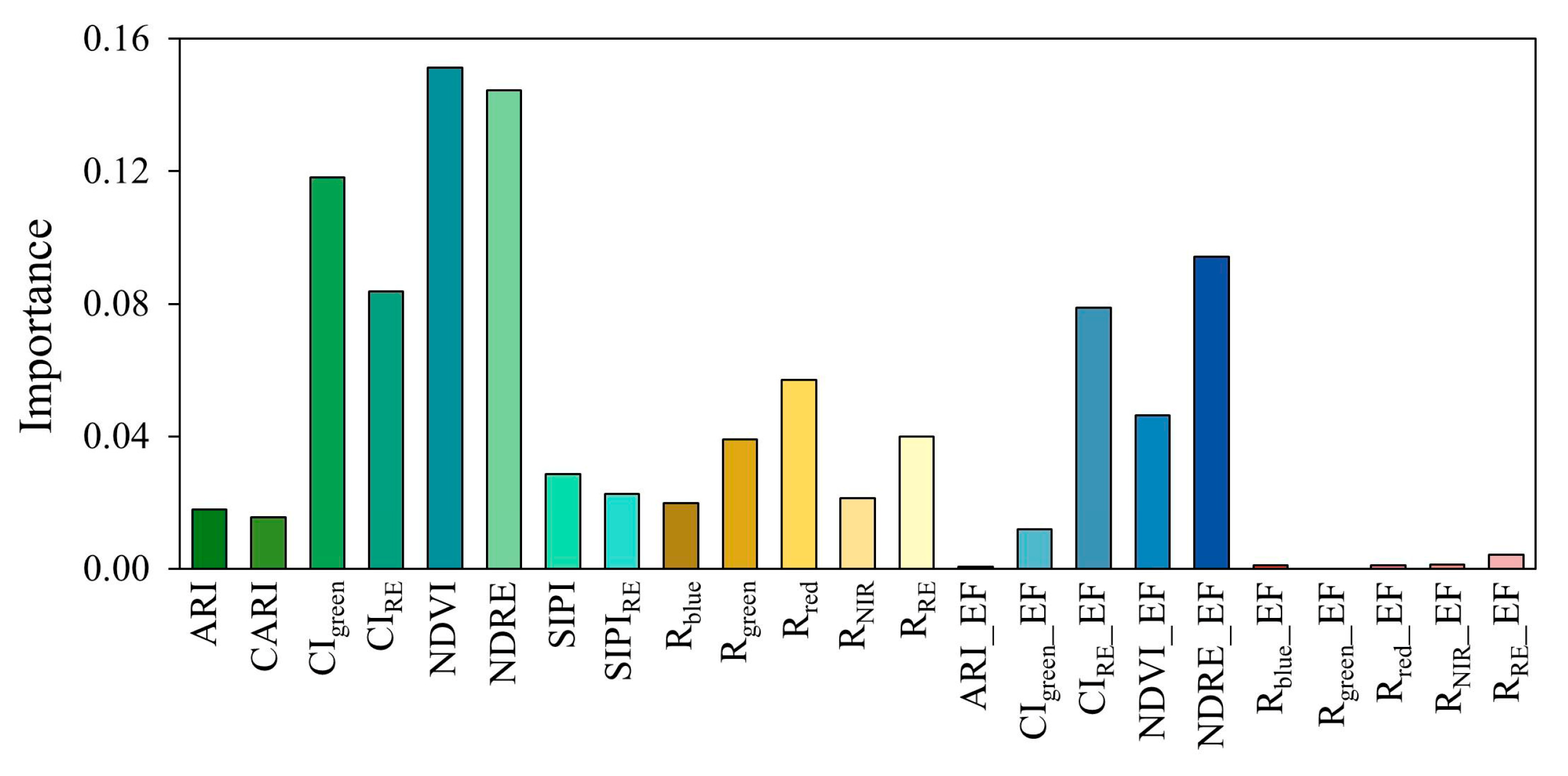

3.2.2. Feature Importance Analysis

3.3. Predictive Performance of BFW Recognition Models

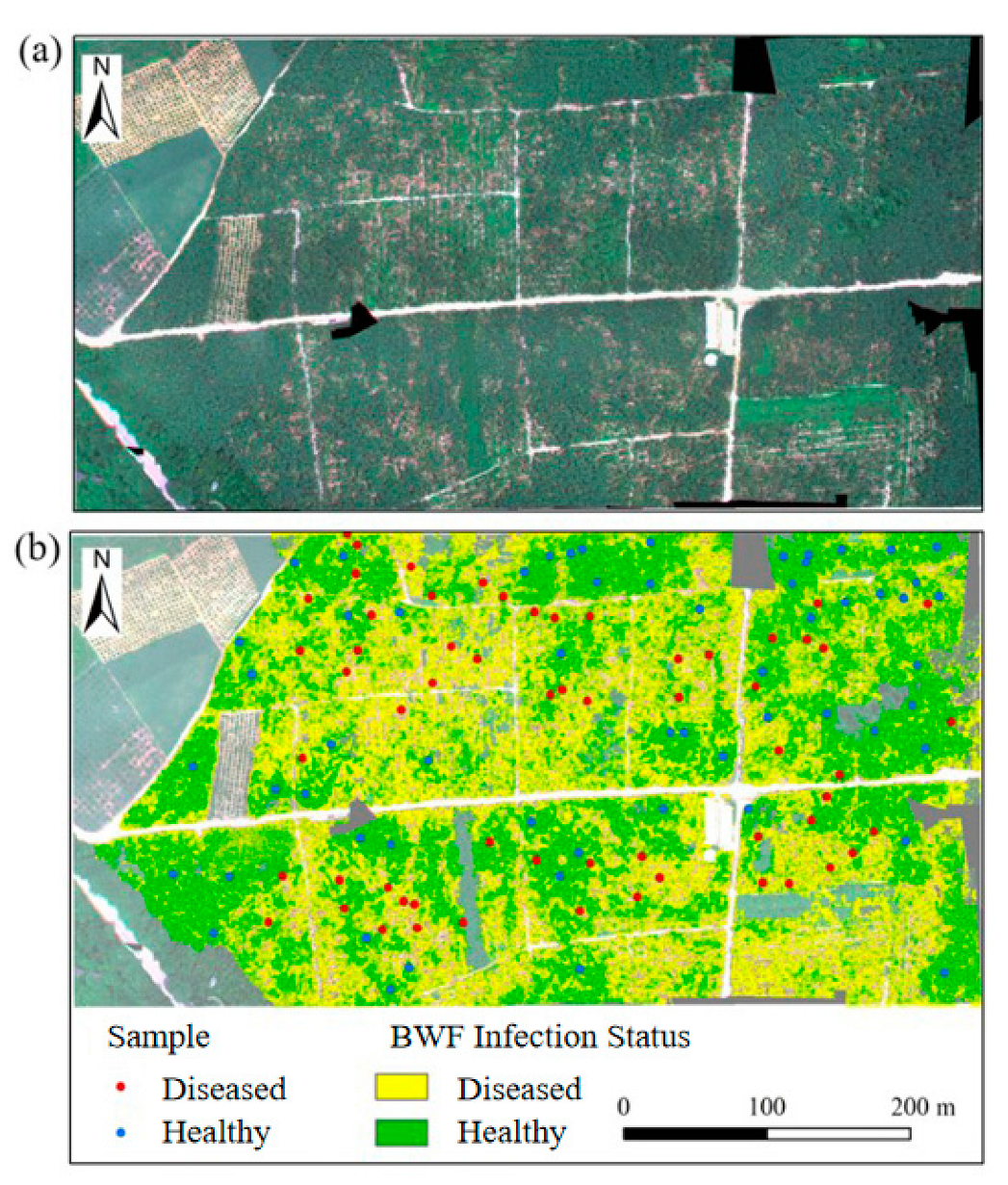

3.4. Spatial Distribution Mapping of Banana Fusarium Wilt

4. Discussion

4.1. Mechanistic Interpretation of Feature Separability and Threshold Rationality

4.2. Mechanistic Interpretation of the Importance of Enhanced Features

4.3. Methodological Benchmarking and Performance Reference to Similar Studies

4.4. Future Research Directions and Perspectives

(1) Disease severity monitoring: EFs were constructed to capture subtle spectral variations during the early stages of infection and showed particularly strong recognition of mildly infected samples. However, because of the limited sample size, this study did not analyze disease severity in depth. With more data, future research could further validate the role of EFs in identifying mildly infected samples and evaluate their applicability across different stages of disease progression.

(2) Feature optimization: This study did not perform a separate feature-selection step; BFs and EFs were simply combined. Because EFs are derived from BFs, the combined feature set may contain substantial redundant information. Future work could apply feature selection methods such as the Pearson correlation coefficient [42], mutual information [43], or LASSO [44] to reduce redundancy and improve model generalizability [45,46,47].

(3) Application extension: AutoKDFC demonstrates strong potential for disease recognition and could be extended to other crop disease monitoring tasks. Integrating this approach with multi-source remote sensing data and deep learning models may yield more efficient and accurate disease recognition systems, supporting the advancement of precision agriculture.
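The correlation-based option named under feature optimization can be illustrated with a simple greedy filter: keep a feature only if its absolute Pearson correlation with every feature already kept is at or below a cutoff. This is one common way of using the coefficient for redundancy reduction, not a procedure evaluated in this study; the cutoff of 0.9, the greedy order (dictionary insertion order), and the toy feature names and values are all hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # raises ZeroDivisionError for a constant feature


def drop_redundant(features, max_abs_r=0.9):
    """Greedily keep features whose |r| with every kept feature is <= max_abs_r."""
    kept = []
    for name, column in features.items():
        if all(abs(pearson(column, features[k])) <= max_abs_r for k in kept):
            kept.append(name)
    return kept


# Toy columns (not study data): x2 is a near-copy of x1, x3 is uncorrelated with x1
toy = {
    "x1": [0.2, 0.4, 0.6, 0.8],
    "x2": [0.21, 0.41, 0.59, 0.81],
    "x3": [0.05, 0.03, 0.06, 0.04],
}
print(drop_redundant(toy))  # ['x1', 'x3']
```

A greedy filter depends on feature order; mutual information or LASSO, also mentioned above, would rank or shrink features using the target labels instead.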

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shen, Z.; Xue, C.; Penton, C.R.; Thomashow, L.S.; Zhang, N.; Wang, B.; Ruan, Y.; Li, R.; Shen, Q. Suppression of banana Panama disease induced by soil microbiome reconstruction through an integrated agricultural strategy. Soil Biol. Biochem. 2019, 128, 164–174.

2. Ploetz, R.C. Fusarium Wilt of Banana. Phytopathology 2015, 105, 1512.

3. Ismaila, A.A.; Ahmad, K.; Siddique, Y.; Wahab, M.A.A.; Kutawa, A.B.; Abdullahi, A.; Zobir, S.A.M.; Abdu, A.; Abdullah, S.N.A. Fusarium wilt of banana: Current update and sustainable disease control using classical and essential oils approaches. Hortic. Plant J. 2023, 9, 1–28.

4. Pegg, K.G.; Coates, L.M.; O’Neill, W.T.; Turner, D.W. The Epidemiology of Fusarium Wilt of Banana. Front. Plant Sci. 2019, 10, 1395.

5. Ordonez, N.; Seidl, M.F.; Waalwijk, C.; Drenth, A.; Kilian, A.; Thomma, B.P.H.J.; Ploetz, R.C.; Kema, G.H.J. Worse Comes to Worst: Bananas and Panama Disease—When Plant and Pathogen Clones Meet. PLoS Pathog. 2015, 11, e1005197.

6. Segura-Mena, R.A.; Stoorvogel, J.J.; García-Bastidas, F.; Salacinas-Niez, M.; Kema, G.H.J.; Sandoval, J.A. Evaluating the potential of soil management to reduce the effect of Fusarium oxysporum f. sp. cubense in banana (Musa AAA). Eur. J. Plant Pathol. 2021, 160, 441–455.

7. Zhang, M.; Zhou, D.; Qi, D.; Wei, Y.; Chen, Y.; Feng, J.; Wang, W.; Xie, J. Research progress on the integrated control of Fusarium wilt disease in banana. Sci. Sin. Vitae 2024, 54, 1843–1852.

8. Dita, M.; Barquero, M.; Heck, D.; Mizubuti, E.S.G.; Staver, C.P. Fusarium Wilt of Banana: Current Knowledge on Epidemiology and Research Needs Toward Sustainable Disease Management. Front. Plant Sci. 2018, 9, 1468.

9. Wang, W.; Liu, Z.; Zheng, C.; Zhang, L. Research Progress on Banana Fusarium Wilt. China Port Sci. Technol. 2024, 6, 44–51.

10. Siamak, S.B.; Zheng, S. Banana Fusarium Wilt (Fusarium oxysporum f. sp. cubense) Control and Resistance, in the Context of Developing Wilt-resistant Bananas Within Sustainable Production Systems. Hortic. Plant J. 2018, 4, 208–218.

11. Lan, Y.; Zhu, Z.; Deng, X.; Lian, B.; Huang, Y.; Huang, Z.; Hu, J. Monitoring and classification of citrus Huanglongbing based on UAV hyperspectral remote sensing. Trans. Chin. Soc. Agric. Eng. 2019, 35, 92–100.

12. Yu, R.; Luo, Y.; Zhou, Q.; Zhang, X.; Wu, D.; Ren, L. Early detection of pine wilt disease using deep learning algorithms and UAV-based multispectral imagery. For. Ecol. Manag. 2021, 497, 119493.

13. Liao, J.; Tao, W.; Zang, Y.; Wang, P.; Luo, X. Research Progress and Prospect of Key Technologies in Crop Disease and Insect Pest Monitoring. Trans. Chin. Soc. Agric. Mach. 2023, 54, 1–19.

14. Duarte, A.; Borralho, N.; Cabral, P.; Caetano, M. Recent Advances in Forest Insect Pests and Diseases Monitoring Using UAV-Based Data: A Systematic Review. Forests 2022, 13, 911.

15. Kaivosoja, J.; Hautsalo, J.; Heikkinen, J.; Hiltunen, L.; Ruuttunen, P.; Näsi, R.; Niemeläinen, O.; Lemsalu, M.; Honkavaara, E.; Salonen, J. Reference Measurements in Developing UAV Systems for Detecting Pests, Weeds, and Diseases. Remote Sens. 2021, 13, 1238.

16. Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of Banana Fusarium Wilt Based on UAV Remote Sensing. Remote Sens. 2020, 12, 938.

17. Zhang, S.; Li, X.; Ba, Y.; Lyu, X.; Zhang, M.; Li, M. Banana Fusarium Wilt Disease Detection by Supervised and Unsupervised Methods from UAV-Based Multispectral Imagery. Remote Sens. 2022, 14, 1231.

18. Lin, S.; Ji, T.; Wang, J.; Li, K.; Lu, F.; Ma, C.; Gao, Z. BFWSD: A lightweight algorithm for banana fusarium wilt severity detection via UAV-Based Large-Scale Monitoring. Smart Agric. Technol. 2025, 11, 101047.

19. Yuan, L.; Pu, R.; Zhang, J.; Wang, J.; Yang, H. Using high spatial resolution satellite imagery for mapping powdery mildew at a regional scale. Precis. Agric. 2016, 17, 332–348.

20. Yang, G.; He, Y.; Feng, X.; Li, X.; Zhang, J.; Yu, Z. Methods and New Research Progress of Remote Sensing Monitoring of Crop Disease and Pest Stress Using Unmanned Aerial Vehicle. Smart Agric. 2022, 4, 1–16.

21. Wang, G.; Lan, Y.; Qi, H.; Chen, P.; Hewitt, A.; Han, Y. Field evaluation of an unmanned aerial vehicle (UAV) sprayer: Effect of spray volume on deposition and the control of pests and disease in wheat. Pest Manag. Sci. 2019, 75, 1546–1555.

22. Das, S.; Biswas, A.; VimalKumar, C.; Sinha, P. Deep Learning Analysis of Rice Blast Disease using Remote Sensing Images. IEEE Geosci. Remote Sens. 2023, 20, 1.

23. Zhang, N.; Chai, X.; Li, N.; Zhang, J.; Sun, T.; Sveriges, L. Applicability of UAV-based optical imagery and classification algorithms for detecting pine wilt disease at different infection stages. GIScience Remote Sens. 2023, 60, 2170479.

24. Deng, X.; Zhu, Z.; Yang, J.; Zheng, Z.; Huang, Z.; Yin, X.; Wei, S.; Lan, Y. Detection of Citrus Huanglongbing Based on Multi-Input Neural Network Model of UAV Hyperspectral Remote Sensing. Remote Sens. 2020, 12, 2678.

25. Tu, T.; Su, Y.; Tang, Y.; Guo, G.; Tan, W.; Ren, S. SHFW: Second-order hybrid fusion weight–median algorithm based on machining learning for advanced IoT data analytics. Wirel. Netw. 2023, 30, 6055–6067.

26. Tu, T.; Su, Y.; Ren, S. FC-MIDTR-WCCA: A Machine Learning Framework for PM2.5 Prediction. IAENG Int. J. Comput. Sci. 2024, 51, 544–552.

27. Su, Y.; Zhao, L.; Li, X.; Li, H.; Ge, Y.; Chen, J. FC-StackGNB: A novel machine learning modeling framework for forest fire risk prediction combining feature crosses and model fusion algorithm. Ecol. Indic. 2024, 166, 112577.

28. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the Third ERTS-1 Symposium NASA SP-351, Greenbelt, MD, USA, 10–14 December 1973.

29. Gitelson, A.; Merzlyak, M.N. Spectral reflectance changes associated with autumn senescence of aesculus–hippocastanum L and acer-platanoides L leaves—Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 1994, 143, 286–292.

30. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

31. Gitelson, A.A.; Vina, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

32. Peñuelas, J.; Inoue, Y. Reflectance indices indicative of changes in water and pigment contents of peanut and wheat leaves. Photosynthetica 1999, 36, 355–360.

33. Ramoelo, A.; Skidmore, A.K.; Cho, M.A.; Schlerf, M.; Mathieu, R.; Heitkonig, I.M.A. Regional estimation of savanna grass nitrogen using the red-edge band of the spaceborne RapidEye sensor. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 151–162.

34. Kim, M.S.; Daughtry, C.S.T.; Chappelle, E.W.; McMurtrey, J.E.; Walthall, C.L. The use of high spectral resolution bands for estimating absorbed photosynthetically active radiation (APAR). In Proceedings of the 6th International Symposium on Physical Measurements and Signatures in Remote Sensing, Val d’Isère, France, 17–21 January 1994; pp. 299–306.

35. Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45.

36. Kim, J.S.; Scott, C.D. Robust kernel density estimation. J. Mach. Learn. Res. 2012, 13, 2529–2565.

37. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

38. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

39. Xue, J.; Titterington, D.M. Comment on “On Discriminative vs. Generative Classifiers: A Comparison of Logistic Regression and Naive Bayes”. Neural Process. Lett. 2008, 28, 169–187.

40. Fang, C.; Wang, L.; Xu, H. A comparative study of different red edge indices for remote sensing recognition of urban grassland health status. J. Geo-Inf. Sci. 2017, 19, 1382–1392.

41. Yuan, X.; Zhou, G.; Wang, Q.; He, Q. Hyperspectral characteristics of chlorophyll content in summer maize under different water irrigation conditions and its inversion. Acta Ecol. Sin. 2021, 41, 543–552.

42. Benesty, J.; Chen, J.; Huang, Y.; Cohen, I. Pearson Correlation Coefficient. In Noise Reduction in Speech Processing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–4.

43. Duncan, T.E. On the calculation of mutual information. SIAM J. Appl. Math. 1970, 19, 215–220.

44. Roth, V. The generalized LASSO. IEEE Trans. Neural Netw. 2004, 15, 16–28.

45. Kumar, V.; Minz, S. Feature Selection: A literature Review. Smart Comput. Rev. 2014, 4, 211–229.

46. Hancer, E.; Xue, B.; Zhang, M. A survey on feature selection approaches for clustering. Artif. Intell. Rev. 2020, 53, 4519–4545.

47. Li, J.; Cheng, K.; Wang, S.; Morstatter, F.; Trevino, R.P.; Tang, J.; Liu, H. Feature Selection. ACM Comput. Surv. 2018, 50, 1–45.

| Vegetation Index | Formulation | Sensitive Parameter | Reference |
|---|---|---|---|
| NDVI | | Leaf area index, green biomass | [28] |
| NDRE | | Leaf area index, green biomass | [29] |
| CIgreen | | Chlorophyll content | [30] |
| CIRE | | Chlorophyll content | [31] |
| SIPI | | Pigment content | [32] |
| SIPIRE | | Pigment content | [33] |
| CARI | | Carotenoid content | [34] |
| ARI | | Anthocyanin content | [35] |
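The formulations were not preserved in this version of the table. For orientation, the sketch below uses the widely published standard forms of the five indices that also have enhanced-feature counterparts (NDVI, NDRE, CIgreen, CIRE, ARI). The mapping of the UAV bands onto these formulas, the function name, and its parameter names (reflectances on a 0 to 1 scale) are assumptions; the authors' exact formulations may differ. SIPI, SIPIRE, and CARI are omitted because their multispectral band adaptations vary between studies.

```python
def vegetation_indices(green, red, red_edge, nir):
    """Standard forms of five VIs from band reflectances (assumed band mapping)."""
    return {
        "NDVI": (nir - red) / (nir + red),            # normalized difference vegetation index
        "NDRE": (nir - red_edge) / (nir + red_edge),  # red-edge analogue of NDVI
        "CIgreen": nir / green - 1.0,                 # green chlorophyll index
        "CIRE": nir / red_edge - 1.0,                 # red-edge chlorophyll index
        "ARI": 1.0 / green - 1.0 / red_edge,          # anthocyanin reflectance index
    }


# Hypothetical canopy reflectances, not values from the study
example = vegetation_indices(green=0.08, red=0.05, red_edge=0.20, nir=0.45)
print(example)
```

Ratio indices such as CIgreen, CIRE, and ARI are sensitive to the reflectance scale, so inputs scaled to 0 to 10,000 (as some sensors deliver) would need rescaling first.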

| Feature Type | Feature Category | Specific Features |
|---|---|---|
| Basic Features (BFs) | BRBFs | (1) Rblue; (2) Rgreen; (3) Rred; (4) RRE; (5) RNIR |
| | VIBFs | (6) SIPIRE; (7) SIPI; (8) CARI; (9) ARI; (10) CIgreen; (11) NDVI; (12) NDRE; (13) CIRE |
| Enhanced Features (EFs) | BREFs | (14) Rblue_EF; (15) Rgreen_EF; (16) Rred_EF; (17) RRE_EF; (18) RNIR_EF |
| | VIEFs | (19) ARI_EF; (20) CIRE_EF; (21) NDVI_EF; (22) NDRE_EF; (23) CIgreen_EF |

| Model | Feature Set | Features Used for Modeling |
|---|---|---|
| I | BFs (13) | BRBFs (5) + VIBFs (8) |
| II | BFs (13) + EFs (10) | BRBFs (5) + VIBFs (8) + BREFs (5) + VIEFs (5) |
| III | BFs (10) | BRBFs (5) + VIBFs (5) |
| IV | EFs (10) | BREFs (5) + VIEFs (5) |

| Comparative Experiment | Algorithm | Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Average (%) |
|---|---|---|---|---|---|---|---|
| Comparative Experiment 1 | RF | Model I | 89.78 | 90.89 | 89.02 | 89.76 | 89.86 |
| | | Model II | 90.72 | 92.17 | 89.44 | 90.63 | 90.74 |
| | | Improvement | 0.94 | 1.28 | 0.42 | 0.87 | 0.88 |
| | SVM | Model I | 88.72 | 90.32 | 87.46 | 88.64 | 88.79 |
| | | Model II | 91.39 | 93.22 | 89.68 | 91.27 | 91.39 |
| | | Improvement | 2.67 | 2.90 | 2.22 | 2.63 | 2.61 |
| | GNB | Model I | 86.78 | 92.32 | 81.30 | 86.13 | 86.63 |
| | | Model II | 89.72 | 92.86 | 86.75 | 89.49 | 89.71 |
| | | Improvement | 2.94 | 0.54 | 5.45 | 3.36 | 3.07 |
| Comparative Experiment 2 | RF | Model III | 89.39 | 90.38 | 88.81 | 89.34 | 89.48 |
| | | Model IV | 90.83 | 92.31 | 89.58 | 90.76 | 90.87 |
| | | Improvement | 1.44 | 1.93 | 0.77 | 1.42 | 1.39 |
| | SVM | Model III | 90.56 | 91.63 | 89.69 | 90.50 | 90.60 |
| | | Model IV | 91.44 | 93.22 | 89.78 | 91.33 | 91.44 |
| | | Improvement | 0.85 | 1.59 | 0.09 | 0.83 | 0.84 |
| | GNB | Model III | 88.61 | 92.86 | 84.26 | 88.15 | 88.47 |
| | | Model IV | 90.78 | 93.08 | 88.55 | 90.58 | 90.75 |
| | | Improvement | 2.17 | 0.22 | 4.29 | 2.43 | 2.28 |
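Judging from the tabulated values, the Average column appears to be the unweighted mean of the four metrics, and each Improvement row the per-column difference between the two models being compared (in percentage points). The short check below assumes those two definitions and uses the RF rows of Comparative Experiment 1; the variable and function names are illustrative only.

```python
from statistics import mean

# RF, Comparative Experiment 1: accuracy, precision, recall, F1 score (%)
model_i = [89.78, 90.89, 89.02, 89.76]
model_ii = [90.72, 92.17, 89.44, 90.63]


def with_average(metrics):
    """Append the unweighted mean of the metrics, rounded as in the table."""
    return metrics + [round(mean(metrics), 2)]


row_i = with_average(model_i)
row_ii = with_average(model_ii)
# Improvement row: Model II minus Model I, column by column
improvement = [round(b - a, 2) for a, b in zip(row_i, row_ii)]
print(row_i)        # [89.78, 90.89, 89.02, 89.76, 89.86]
print(improvement)  # [0.94, 1.28, 0.42, 0.87, 0.88]
```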

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, Y.; Zhao, L.; Ye, H.; Huang, W.; Li, X.; Li, H.; Chen, J.; Kong, W.; Zhang, B. Banana Fusarium Wilt Recognition Based on UAV Multi-Spectral Imagery and Automatically Constructed Enhanced Features. Agronomy 2025, 15, 1837. https://doi.org/10.3390/agronomy15081837

Su Y, Zhao L, Ye H, Huang W, Li X, Li H, Chen J, Kong W, Zhang B. Banana Fusarium Wilt Recognition Based on UAV Multi-Spectral Imagery and Automatically Constructed Enhanced Features. Agronomy. 2025; 15(8):1837. https://doi.org/10.3390/agronomy15081837

Chicago/Turabian Style: Su, Ye, Longlong Zhao, Huichun Ye, Wenjiang Huang, Xiaoli Li, Hongzhong Li, Jinsong Chen, Weiping Kong, and Biyao Zhang. 2025. "Banana Fusarium Wilt Recognition Based on UAV Multi-Spectral Imagery and Automatically Constructed Enhanced Features" Agronomy 15, no. 8: 1837. https://doi.org/10.3390/agronomy15081837

APA Style: Su, Y., Zhao, L., Ye, H., Huang, W., Li, X., Li, H., Chen, J., Kong, W., & Zhang, B. (2025). Banana Fusarium Wilt Recognition Based on UAV Multi-Spectral Imagery and Automatically Constructed Enhanced Features. Agronomy, 15(8), 1837. https://doi.org/10.3390/agronomy15081837